Abstract

We consider a disjoint cover (partition) of an undirected weighted finite or infinite graph G by connected subgraphs (clusters) \(\{S_{j}\}_{j\in J}\) and select functions \(\psi _{j}\) on each of the clusters. For a given signal f on G, the set of its samples (weighted average values) is defined via the inner products \(\{\langle f, \psi _{j}\rangle \}_{j\in J}\). The main results of the paper are based on Poincaré-type inequalities that we introduce and prove. These inequalities provide an estimate of the norm of a signal f on the entire graph G from the set of samples of f and its local gradients on each of the subgraphs. This allows us to establish discrete Plancherel-Polya-type inequalities (or Marcinkiewicz-Zygmund-type or frame inequalities) for signals whose gradients satisfy a Bernstein-type inequality. These results enable the development of a sampling theory for signals on undirected weighted finite or infinite graphs. For the reconstruction of signals from their samples, an interpolation theory by weighted average variational splines is developed. Here by a weighted average variational spline we mean a minimizer of a discrete Sobolev norm which takes on the prescribed weighted average values on a set of clusters (in particular, just values on a subset of vertices). Although our approach is applicable to general graphs, it is especially well suited for finite and infinite graphs with multiple clusters. Such graphs are known as community graphs, and they find many important applications in materials science, engineering, computer science, economics, biology, and social studies.


1 Introduction

Signal processing on combinatorial weighted undirected graphs (without loops and multiple edges) has been developed over the past decade. Vertex-wise sampling of Paley-Wiener functions (signals) on finite and infinite graphs was initiated in [25] and was further expanded (mainly for finite graphs) in a number of papers (see for example [2, 19, 20, 35,36,37]). The goal of the present article is to go beyond the vertex-wise approach and explore sampling based on weighted averages over relatively small subgraphs. One immediate advantage of using averages is that it enables us to deal with random noise that is intrinsic to point-wise measurements. Our results hold true for general finite and infinite graphs, but they are most effective for community graphs, i.e. graphs whose set of vertices can be covered by a set of finite clusters with many heavily weighted edges inside and a few light edges outside. It is known that such structures are ubiquitous in chemistry, computer science, engineering, biology, economics, social sciences, etc. [9].

The structure of the paper is as follows. In Sect. 2 we present some general facts about analysis on combinatorial graphs. In Sect. 3 we start by establishing an analog of the Poincaré inequality (Theorem 3.1) for finite graphs. In Sect. 4 we consider a disjoint cover (a partition) of a general graph G (finite or infinite) by connected finite subgraphs \({\mathcal {S}}=\{S_{j}\}_{j\in J}\) and assume the existence of “measuring devices” \(\{\psi _{j}\}_{j\in J}\), where a “measurement” itself is the inner product of a signal with a function \(\psi _{j}\in \ell ^{2}(G)\) supported on \(S_{j}\). Our main result (and our tool) is an inequality which provides an estimate of the norm of a signal on G through its local measurements and its local gradients on each \(S_{j}\) (Theorem 4.1). This inequality enables us to establish some Plancherel-Polya-type (or Marcinkiewicz-Zygmund-type, or frame) inequalities (Theorem 4.5) for signals whose gradients satisfy a Bernstein-type inequality. This, in turn, allows us to develop a sampling theory for signals in spaces which we denote as \({\mathcal {X}}(\omega )\) and \(PW_{\omega }\) (see Definitions 2, 3 below). Interestingly, our approach also allows us to estimate the number of frequencies (counted with multiplicities) which can be recovered on a finite community graph (Remark 4.8). Namely, if there are \(|J|<\infty \) clusters which cover G and if the connections between the clusters are very weak compared to the connections inside the clusters, then exactly the first |J| frequencies can be recovered. In Sects. 5 and 6 an interpolation theory by average variational splines is developed. The interpolation concept is understood in a generalized sense: if \(\psi _{j}\in \ell ^{2}(S_{j})\) is a “measuring device” associated with a cluster \(S_{j}\), then for a given \(f\in \ell ^{2}(G)\) we say that \(s_{k}(f)\) is a variational spline interpolating f by its average weighted values if

(1) \(\langle \psi _{j}, f\rangle =\langle \psi _{j}, s_{k}(f)\rangle \) for all \(j\in J\),

(2) \(s_{k}(f)\) minimizes the functional \( u\rightarrow \Vert L^{k/2}u\Vert , \)

where L is the Laplace operator on G.

We show that such an interpolant exists for any function in \(\ell ^{2}(G)\) and, moreover, that there is a class of functions (functions of small bandwidth) which can be reconstructed from their sets of samples \(\left\{ \langle \psi _{j}, f\rangle \right\} _{j\in J}\) as limits of interpolating average weighted splines when k (the degree of smoothness) goes to infinity. This result is a graph analog of the classical results [32] and [4] (see also [26]). It is interesting to note that although the set of samples \(\left\{ \langle \psi _{j}, f\rangle \right\} _{j\in J}\) does not contain (in general) the values of f at the vertices of G, the limit of interpolating average weighted splines reconstructs those values of f (if f is a function of small bandwidth). This is reminiscent of function reconstruction in integral geometry, where one starts with information about a function given by its integrals over submanifolds and then reconstructs this function at every point of the manifold. In Sect. 7 we describe an algorithm for computing variational interpolating weighted average splines for finite graphs. The idea of using local information (other than point values) for the reconstruction of bandlimited functions on graphs was explored in [16, 38, 41, 42]. We discuss these results and compare them with ours in Sect. 8. Variational splines on graphs which interpolate functions by using their point values on a subset of vertices were introduced in [26] and then further developed and applied in [5, 6, 15, 21, 33, 43, 44]. The ideas and methods of sampling and interpolation are deeply rooted in many aspects of signal analysis on graphs. For example, they are inseparable from problems related to quadrature formulas on graphs [5, 6, 14, 28], spatially distributed networks [3], etc.

We note that the results of the present paper are similar to the results of our papers [23] and [24], in which sampling by weighted average values was developed in abstract Hilbert spaces and on Riemannian manifolds. One could also consider iterative methods (combined with spline interpolation) for the reconstruction of bandlimited functions, similar to those developed in [7, 8] for manifolds. The adaptation of these methods to graphs will be considered in a separate paper.

2 Analysis and Sampling of Graph Signals

2.1 Analysis on Combinatorial Graphs

Let G denote an undirected weighted graph, with a finite or countable number of vertices V(G) and weight function \(w: V(G) \times V(G) \rightarrow [0, \infty )\); \(w\) is symmetric, i.e., \(w(u,v) = w(v,u)\), and \(w(u,u)=0\) for all \(u,v \in V(G)\). The edges of the graph are the pairs (u, v) with \(w(u,v) \not = 0\). We assume that for every \(v\in V(G)\) the following finiteness condition holds:

$$\begin{aligned} w(v)=\sum _{u\in V(G)}w(u,v)<\infty . \end{aligned}$$

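Let \(\ell ^{2}(G)\) denote the space of all complex-valued functions on V(G) with finite norm, equipped with the inner product

$$\begin{aligned} \langle f,g\rangle =\sum _{v\in V(G)}f(v)\overline{g(v)} \end{aligned}$$

and the norm

$$\begin{aligned} \Vert f\Vert =\left( \sum _{v\in V(G)}|f(v)|^{2}\right) ^{1/2}. \end{aligned}$$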

For a set \(S\subset V(G)\) the notation \(\ell ^{2}(S)\) is used for all functions in \(\ell ^{2}(G)\) supported on S.

Definition 1

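The weighted gradient norm of a function f on V(G) is defined by

$$\begin{aligned} \Vert \nabla f\Vert =\left( \frac{1}{2}\sum _{u,v\in V(G)}|f(u)-f(v)|^{2}w(u,v)\right) ^{1/2}. \end{aligned}$$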

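We intend to prove Poincaré-type estimates involving the weighted gradient norm. In the case of a finite graph and the \(\ell ^{2}(G)\)-space, the weighted Laplace operator \(L: \ell ^{2}(G) \rightarrow \ell ^{2}(G)\) is introduced via

$$\begin{aligned} (Lf)(v)=\sum _{u\in V(G)}\big (f(v)-f(u)\big )w(v,u),\quad f\in \ell ^{2}(G). \end{aligned}\tag{2.3}$$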

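This graph Laplacian is a positive-semidefinite self-adjoint bounded operator. According to Theorem 8.1 and Corollary 8.2 in [11], if for an infinite graph there exists a \(C>0\) such that the degrees are uniformly bounded,

$$\begin{aligned} w(v)=\sum _{u\in V(G)}w(u,v)\le C\quad \text {for all } v\in V(G), \end{aligned}\tag{2.4}$$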

then the operator defined by (2.3) on functions with compact supports has a unique positive-semidefinite self-adjoint bounded extension L, which acts according to (2.3). We will always assume that (2.4) is satisfied. Note that due to condition (2.4) one has \(\Vert \nabla f\Vert <\infty \) for any \(f\in \ell ^{2}(G)\). We will also need the following equality, which holds true for all graphs satisfying (2.4) (see [10, 11, 13, 17]):

$$\begin{aligned} \Vert L^{1/2}f\Vert ^{2}=\langle Lf,f\rangle =\Vert \nabla f\Vert ^{2},\quad f\in \ell ^{2}(G). \end{aligned}\tag{2.5}$$
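The definitions above can be checked numerically on a small finite graph. The following minimal sketch (Python with NumPy; the helper names `laplacian` and `gradient_norm` are ours) assumes the normalizations \(\Vert \nabla f\Vert ^{2}=\frac{1}{2}\sum _{u,v}|f(u)-f(v)|^{2}w(u,v)\) and \((Lf)(v)=\sum _{u}(f(v)-f(u))w(v,u)\). It builds L from a random symmetric weight matrix and compares \(\Vert \nabla f\Vert ^{2}\), \(\langle Lf,f\rangle \) and \(\Vert L^{1/2}f\Vert ^{2}\) for a real-valued signal, which is the content of equality (2.5) in the finite case.

```python
import numpy as np

def laplacian(W):
    """Weighted Laplacian (L f)(v) = sum_u (f(v) - f(u)) w(v, u), i.e. D - W."""
    W = np.asarray(W, dtype=float)
    return np.diag(W.sum(axis=1)) - W

def gradient_norm(W, f):
    """||grad f|| = (1/2 sum_{u,v} |f(u) - f(v)|^2 w(u, v))^{1/2}."""
    diff = f[:, None] - f[None, :]
    return np.sqrt(0.5 * np.sum(np.abs(diff) ** 2 * W))

rng = np.random.default_rng(0)
n = 6
W = np.triu(rng.random((n, n)), 1)
W = W + W.T                      # symmetric weights with w(u, u) = 0
L = laplacian(W)
f = rng.standard_normal(n)       # a real-valued test signal

# L^{1/2} through the spectral theorem (tiny negative round-off clipped to 0)
lam, U = np.linalg.eigh(L)
sqrtL = U @ np.diag(np.sqrt(np.clip(lam, 0.0, None))) @ U.T

grad_sq = gradient_norm(W, f) ** 2
print(np.isclose(grad_sq, f @ L @ f), np.isclose(grad_sq, np.linalg.norm(sqrtL @ f) ** 2))
```

Both comparisons print `True`.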

We are using the spectral theorem for the operator L to introduce the associated Paley-Wiener spaces which are also known as the spaces of bandlimited functions.

Definition 2

The Paley-Wiener space \(PW_{\omega } (L)\subset \ell ^{2}(G)\) is the image space of the projection operator \(\mathbf{1}_{[0,\>\omega ]}(L)\) (to be understood in the sense of Borel functional calculus). For a given \(f\in PW_{\omega }(L)\), the smallest \(\omega \ge 0\) such that \(f\in PW_{\omega }(L)\) is called the bandwidth of f.

By using the spectral theorem one can show [25] that a function f belongs to the space \(PW_{\omega } (L)\) if and only if for every \(t>0\) the following Bernstein inequality holds:

$$\begin{aligned} \Vert L^{t}f\Vert \le \omega ^{t}\Vert f\Vert . \end{aligned}\tag{2.6}$$

If G is a finite connected graph then L has a discrete spectrum \(0=\lambda _{0}<\lambda _{1}\le \dots \le \lambda _{|G|-1}\) (in general, the multiplicity of the eigenvalue 0 equals the number of connected components of G, so it is simple here). A set of corresponding orthonormal eigenfunctions will be denoted as \(\{\varphi _{j}\}_{j=0}^{|G|-1}\). In this case the space \(PW_{\omega }(L)\) coincides with

$$\begin{aligned} PW_{\omega }(L)=\mathrm {span}\{\varphi _{j}:\ \lambda _{j}\le \omega \}. \end{aligned}$$
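For a finite graph, membership in \(PW_{\omega }(L)\) and the Bernstein inequality \(\Vert L^{t}f\Vert \le \omega ^{t}\Vert f\Vert \) can be illustrated directly with an eigendecomposition. The sketch below projects a random vector onto the span of the eigenvectors with \(\lambda _{j}\le \omega \) and checks the inequality for a few values of t. The path graph with unit weights, the value \(\omega =1\) and the helper names are arbitrary choices of ours.

```python
import numpy as np

def pw_projector(L, omega):
    """Orthogonal projector onto PW_omega(L) = span{phi_j : lambda_j <= omega}."""
    lam, Phi = np.linalg.eigh(L)
    B = Phi[:, lam <= omega]
    return B @ B.T

def spectral_power(L, t):
    """L^t for t > 0 via the spectral theorem (round-off below zero is clipped)."""
    lam, Phi = np.linalg.eigh(L)
    return Phi @ np.diag(np.clip(lam, 0.0, None) ** t) @ Phi.T

# Path graph on 8 vertices with unit weights (an arbitrary test graph).
n = 8
W = np.zeros((n, n))
i = np.arange(n - 1)
W[i, i + 1] = W[i + 1, i] = 1.0
L = np.diag(W.sum(axis=1)) - W

omega = 1.0
P = pw_projector(L, omega)
f = P @ np.random.default_rng(1).standard_normal(n)   # a signal in PW_omega(L)

for t in (0.5, 1.0, 2.5):
    lhs = np.linalg.norm(spectral_power(L, t) @ f)
    print(t, lhs <= omega ** t * np.linalg.norm(f))   # Bernstein inequality
```

On this path graph three eigenvalues (0, about 0.152 and about 0.586) lie in [0, 1], so the projector P has rank 3.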

For infinite graphs the spectrum of L is typically not discrete [18, 25]. We will also need the following definition.

Definition 3

For a given graph G and a given \(\tau \ge 0\) let \({\mathcal {X}}(\tau ) \subset \ell ^{2} (G)\) denote the subset of all \(f\in \ell ^{2}(G)\) fulfilling the inequality \(\Vert \nabla f \Vert \le \tau \Vert f \Vert \) .

Although the sets \({\mathcal {X}}(\tau )\) are closed under multiplication by scalars, they are not linear spaces (in contrast to \(PW_{\omega }(L)\)). Clearly, for every \(\omega \ge 0\) one has the inclusion \(PW_{\omega }(L)\subset {\mathcal {X}}(\sqrt{\omega })\) (by (2.5) and the Bernstein inequality (2.6) with \(t=1/2\)). However, the space \(PW_{\omega }(L)\) can be trivial, while the sets \({\mathcal {X}}(\tau )\) are never trivial (think of a function whose norm is much bigger than its variation). Note also that if f has a very large norm, its variation \(\Vert \nabla f\Vert \) can be large too even if \(\tau \) is small.
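A two-vertex example makes the remark on \({\mathcal {X}}(\tau )\) concrete. In the sketch below, f and g both satisfy \(\Vert \nabla f\Vert \le \tau \Vert f\Vert \) with \(\tau =0.5\), and so does any scalar multiple of f. Their sum f + g does not, so \({\mathcal {X}}(\tau )\) is not a linear space. The function `in_X` and the numbers are ours.

```python
import numpy as np

def in_X(W, f, tau, tol=1e-12):
    """Test f in X(tau), i.e. ||grad f|| <= tau ||f||, using ||grad f||^2 = <L f, f>."""
    L = np.diag(W.sum(axis=1)) - W
    grad = np.sqrt(max(float(f @ L @ f), 0.0))
    return grad <= tau * np.linalg.norm(f) + tol

W = np.array([[0.0, 1.0], [1.0, 0.0]])   # two vertices joined by one unit-weight edge
tau = 0.5
f = np.array([1.0, 0.7])
g = np.array([-0.7, -1.0])

print(in_X(W, f, tau), in_X(W, g, tau))   # True True
print(in_X(W, -3.0 * f, tau))             # True: closed under scalar multiples
print(in_X(W, f + g, tau))                # False: not closed under addition
```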

3 A Poincare-Type Inequality for Finite Graphs

For a finite connected graph G which contains more than one vertex, let \(\Psi \) be the functional on \(\ell ^{2}(G)\) defined by a function \(\psi \in \ell ^{2}(G)\), i.e.

$$\begin{aligned} \Psi (f)=\langle f,\psi \rangle ,\quad f\in \ell ^{2}(G). \end{aligned}$$

Note that the normalized eigenfunction \(\varphi _{0}\) which corresponds to the eigenvalue \(\lambda _{0}=0\) is given by the formula \(\varphi _{0}=\frac{\chi _{G}}{\sqrt{|G|}}\), where \(\chi _{G}(v)=1\) for all \(v\in V(G)\).

Theorem 3.1

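Let G be a finite connected graph which contains more than one vertex and assume that \(\Psi (\varphi _{0})=\langle \psi , \varphi _{0}\rangle \) is not zero. If \(f\in Ker(\Psi )\) then

$$\begin{aligned} \Vert f\Vert ^{2}\le \frac{\theta }{\lambda _{1}}\Vert \nabla f\Vert ^{2}, \end{aligned}$$

where \(\lambda _{1}\) is the first non-zero eigenvalue of the Laplacian (2.3) and

$$\begin{aligned} \theta =\frac{\Vert \psi \Vert ^{2}}{|\langle \psi ,\varphi _{0}\rangle |^{2}}. \end{aligned}$$

Note that \(\theta \ge 1\), since \(|\langle \psi ,\varphi _{0}\rangle |\le \Vert \psi \Vert \Vert \varphi _{0}\Vert =\Vert \psi \Vert \) by the Cauchy-Schwarz inequality. This theorem is a particular case of the following general fact.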

Lemma 3.2

Let T be a non-negative self-adjoint bounded operator in a Hilbert space H with a discrete spectrum (counted with multiplicities) \(0=\sigma _{0}<\sigma _{1}\le \dots \). Let \(\varphi _{0}, \varphi _{1},\dots \) be a corresponding set of orthonormal eigenfunctions which is a basis in H. For any \(\psi \in H\) with \(\langle \psi ,\varphi _{0}\rangle \ne 0\), let \(H_{\psi }^{\bot }\) be the subspace of all \(f\in H\) which are orthogonal to \(\psi \). If \(f\in H_{\psi }^{\bot }\) then

$$\begin{aligned} \Vert f\Vert ^{2}\le \frac{\Vert \psi \Vert ^{2}}{|\langle \psi ,\varphi _{0}\rangle |^{2}}\,\frac{\Vert Tf\Vert ^{2}}{\sigma _{1}^{2}}. \end{aligned}$$

Proof

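For the Fourier coefficients \(\{c_{k}(f)=\langle f, \varphi _{k}\rangle \}\) one has

$$\begin{aligned} f=\sum _{k\ge 0}c_{k}(f)\varphi _{k},\qquad \Vert f\Vert ^{2}=\sum _{k\ge 0}|c_{k}(f)|^{2}, \end{aligned}$$

and then for \(\Psi (f)={\langle }f, \psi {\rangle }\)

$$\begin{aligned} 0=\Psi (f)=\sum _{k\ge 0}c_{k}(f)\overline{c_{k}(\psi )},\qquad \text {i.e.}\qquad c_{0}(f)\overline{c_{0}(\psi )}=-\sum _{k\ge 1}c_{k}(f)\overline{c_{k}(\psi )}. \end{aligned}$$

Using the Parseval equality and the Cauchy-Schwarz inequality we obtain

$$\begin{aligned} |c_{0}(f)|^{2}\le \frac{1}{|c_{0}(\psi )|^{2}}\left( \sum _{k\ge 1}|c_{k}(\psi )|^{2}\right) \left( \sum _{k\ge 1}|c_{k}(f)|^{2}\right) . \end{aligned}\tag{3.4}$$

At the same time we have

$$\begin{aligned} \sum _{k\ge 1}|c_{k}(f)|^{2}\le \frac{1}{\sigma _{1}^{2}}\sum _{k\ge 1}\sigma _{k}^{2}|c_{k}(f)|^{2}=\frac{\Vert Tf\Vert ^{2}}{\sigma _{1}^{2}}, \end{aligned}$$

and from the Parseval formula

$$\begin{aligned} \sum _{k\ge 1}|c_{k}(\psi )|^{2}=\Vert \psi \Vert ^{2}-|\langle \psi ,\varphi _{0}\rangle |^{2}. \end{aligned}$$

We plug the right-hand side of this formula into (3.4), add \(\sum _{k\ge 1}|c_{k}(f)|^{2}\) to both sides and use the previous estimate to obtain the following inequality

$$\begin{aligned} \Vert f\Vert ^{2}\le \left( \frac{\Vert \psi \Vert ^{2}-|\langle \psi ,\varphi _{0}\rangle |^{2}}{|\langle \psi ,\varphi _{0}\rangle |^{2}}+1\right) \sum _{k\ge 1}|c_{k}(f)|^{2}\le \frac{\Vert \psi \Vert ^{2}}{|\langle \psi ,\varphi _{0}\rangle |^{2}}\,\frac{\Vert Tf\Vert ^{2}}{\sigma _{1}^{2}}. \end{aligned}$$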

\(\square \)

By applying this lemma to the operator \(L^{1/2}\), whose eigenvalues are \(\lambda _{k}^{1/2}\) (so that \(\sigma _{1}^{2}=\lambda _{1}\)), and using equality (2.5), we obtain Theorem 3.1 whenever \(\Psi (\varphi _{0})=\langle \psi , \varphi _{0}\rangle \) is not zero.
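Theorem 3.1 can be tested numerically. Following the proof of Lemma 3.2 with \(T=L^{1/2}\), the inequality checked below is \(\Vert f\Vert ^{2}\le \frac{\theta }{\lambda _{1}}\Vert \nabla f\Vert ^{2}\) for \(\langle f,\psi \rangle =0\), with \(\theta =\Vert \psi \Vert ^{2}/|\langle \psi ,\varphi _{0}\rangle |^{2}\). The random connected graph, the positive test function \(\psi \) and the helper `poincare_gap` are assumptions of this sketch, which only covers real-valued signals.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 7
W = np.triu(rng.random((n, n)), 1)
W = W + W.T                          # all weights positive, so G is connected
L = np.diag(W.sum(axis=1)) - W
lambda1 = np.linalg.eigvalsh(L)[1]   # first non-zero eigenvalue (ascending order)

phi0 = np.ones(n) / np.sqrt(n)       # normalized eigenfunction for lambda_0 = 0
psi = rng.random(n) + 0.1            # positive entries guarantee <psi, phi0> != 0
theta = (psi @ psi) / (psi @ phi0) ** 2

def poincare_gap(f):
    """(theta / lambda_1) ||grad f||^2 - ||f||^2 after projecting f onto Ker(Psi)."""
    f = f - (f @ psi) / (psi @ psi) * psi   # now <f, psi> = 0
    return theta / lambda1 * (f @ L @ f) - f @ f

gaps = np.array([poincare_gap(rng.standard_normal(n)) for _ in range(1000)])
print(theta >= 1.0 - 1e-12, gaps.min() >= -1e-10)
```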

Theorem 3.3

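If \(\Psi (\varphi _{0})=\langle \psi , \varphi _{0}\rangle \) is not zero, then the following inequality holds for every \(f\in \ell ^{2}(G)\) and every \(\epsilon >0\):

$$\begin{aligned} \Vert f\Vert ^{2}\le (1+\epsilon )\frac{\theta }{\lambda _{1}}\Vert \nabla f\Vert ^{2}+\left( 1+\frac{1}{\epsilon }\right) \frac{|\Psi (f)|^{2}}{|\Psi (\varphi _{0})|^{2}}. \end{aligned}$$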

Proof

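By using the inequality

$$\begin{aligned} \Vert g+h\Vert ^{2}\le (1+\epsilon )\Vert g\Vert ^{2}+\left( 1+\frac{1}{\epsilon }\right) \Vert h\Vert ^{2}, \end{aligned}$$

which holds for every \(\epsilon >0\), with \(g=f-\frac{\Psi (f)}{\Psi (\varphi _{0})}\varphi _{0}\) and \(h=\frac{\Psi (f)}{\Psi (\varphi _{0})}\varphi _{0}\), we obtain

$$\begin{aligned} \Vert f\Vert ^{2}\le (1+\epsilon )\left\| f-\frac{\Psi (f)}{\Psi (\varphi _{0})}\varphi _{0}\right\| ^{2}+\left( 1+\frac{1}{\epsilon }\right) \frac{|\Psi (f)|^{2}}{|\Psi (\varphi _{0})|^{2}}. \end{aligned}$$

Note that if \(\Psi (\varphi _{0})= {\langle }\psi , \>\varphi _{0}{\rangle }\ne 0\), then \(f-\frac{\Psi (f)}{\Psi (\varphi _{0})}\varphi _{0}\) belongs to \(H_{\psi }^{\bot }\). Since \(\varphi _{0}\) is constant, this function has the same gradient norm as f. This fact along with the previous theorem implies that

$$\begin{aligned} \left\| f-\frac{\Psi (f)}{\Psi (\varphi _{0})}\varphi _{0}\right\| ^{2}\le \frac{\theta }{\lambda _{1}}\Vert \nabla f\Vert ^{2}, \end{aligned}$$

which completes the proof.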

\(\square \)
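In the same spirit, the estimate of Theorem 3.3 can be checked for arbitrary signals (no orthogonality constraint) and several values of \(\epsilon \). This is the estimate obtained by combining the elementary inequality above with Theorem 3.3's proof:

\(\Vert f\Vert ^{2}\le (1+\epsilon )\frac{\theta }{\lambda _{1}}\Vert \nabla f\Vert ^{2}+(1+\frac{1}{\epsilon })\frac{|\Psi (f)|^{2}}{|\Psi (\varphi _{0})|^{2}}\).

The graph, \(\psi \) and the sample sizes below are our own illustrative choices, and signals are real-valued.

```python
import numpy as np

rng = np.random.default_rng(3)
n = 7
W = np.triu(rng.random((n, n)), 1)
W = W + W.T                                  # connected weighted graph
L = np.diag(W.sum(axis=1)) - W
lambda1 = np.linalg.eigvalsh(L)[1]
phi0 = np.ones(n) / np.sqrt(n)
psi = rng.random(n) + 0.1                    # Psi(phi0) != 0 since psi > 0

def Psi(f):
    """The functional Psi(f) = <f, psi> (real signals)."""
    return f @ psi

theta = (psi @ psi) / Psi(phi0) ** 2

def rhs(f, eps):
    """Right-hand side of the estimate in Theorem 3.3, with ||grad f||^2 = <L f, f>."""
    return ((1 + eps) * theta / lambda1 * (f @ L @ f)
            + (1 + 1 / eps) * Psi(f) ** 2 / Psi(phi0) ** 2)

ok = all(f @ f <= rhs(f, eps) + 1e-10
         for f in rng.standard_normal((500, n))
         for eps in (0.01, 0.1, 1.0, 10.0))
print(ok)
```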

4 Generalized Poincare-Type Inequalities for Finite and Infinite Graphs and Sampling Theorems

4.1 Generalized Poincare-Type Inequalities

For a finite or infinite G we consider the following assumption.

Assumption 1

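We assume that \({\mathcal {S}}=\{S_{j}\}_{j\in J}\) forms a disjoint cover of V(G):

$$\begin{aligned} V(G)=\bigcup _{j\in J}S_{j},\qquad S_{i}\cap S_{j}=\emptyset \quad \text {for } i\ne j. \end{aligned}$$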

Let \(L_{j}\) be the Laplacian of the induced subgraph \(S_{j}\). In order to ensure that \(L_{j}\) has at least one non-zero eigenvalue, we assume that every \(S_{j}\subset V(G),\ j\in J,\) is a finite and connected subset of vertices with more than one vertex. The spectrum of the operator \(L_{j}\) will be denoted as \(0=\lambda _{0,j}< \lambda _{1, j}\le \dots \le \lambda _{|S_{j}|-1, j} \) and the corresponding orthonormal basis of eigenfunctions as \(\{\varphi _{k,j}\}_{k=0}^{|S_{j}|-1}\). Thus the first non-zero eigenvalue for a subgraph \(S_{j}\) is \(\lambda _{1, j}\).

Let \(\Vert \nabla _{j}f_{j}\Vert \) be the weighted gradient norm for the induced subgraph \(S_{j}\). With every \(S_{j},\ j\in J,\) we associate a function \(\psi _{j}\in \ell ^{2}(G)\) whose support is in \(S_{j}\) and introduce the functionals \(\Psi _{j}\) on \(\ell ^{2}(G)\) defined by these functions:

$$\begin{aligned} \Psi _{j}(f)=\langle \psi _{j},f\rangle ,\quad f\in \ell ^{2}(G). \end{aligned}$$

Notation \(\chi _{j}\) will be used for the characteristic function of \(S_{j}\) and we use \(f_{j}\) for \(f\chi _{j},\>\>f\in \ell ^{2}(G)\).

As usual, the induced graph \(S_{j}\) has the same vertices as the set \(S_{j}\), but only those edges of G which have both ends in \(S_{j}\). The inequality (4.3) below is our next result. We call it a generalized Poincaré-type inequality since it contains an estimate of a function through its gradient.

Applying Theorem 3.3 to every \(L_{j}^{1/2}\) in the space \(\ell ^{2}(S_{j})\) we obtain the following result.

Theorem 4.1

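Let G be a connected finite or infinite countable graph and let \({\mathcal {S}}=\{S_{j}\}\) be its disjoint cover by finite sets. Let \(L_{j}\) be the Laplace operator of the induced subgraph \(S_{j}\), whose first non-zero eigenvalue is \(\lambda _{1, j}\), and let \(\varphi _{0, j}=\chi _{j}/\sqrt{|S_{j}|}\) be its normalized eigenfunction with eigenvalue zero. Assume that for every j the function \(\psi _{j}\in \ell ^{2}(G)\) has support in \(S_{j}\), \(\Psi _{j}(f)= \langle \psi _{j}, f \rangle ,\) and \(\Psi _{j}(\varphi _{0,j})=\langle \psi _{j}, \varphi _{0, j}\rangle \ne 0\). Then the following inequality holds true

$$\begin{aligned} \Vert f\Vert ^{2}\le (1+\epsilon )\sum _{j\in J}\frac{\theta _{j}}{\lambda _{1,j}}\Vert \nabla _{j}f_{j}\Vert ^{2}+\left( 1+\frac{1}{\epsilon }\right) \sum _{j\in J}\frac{|\Psi _{j}(f)|^{2}}{|\Psi _{j}(\varphi _{0,j})|^{2}},\qquad \theta _{j}=\frac{\Vert \psi _{j}\Vert ^{2}}{|\Psi _{j}(\varphi _{0,j})|^{2}}, \end{aligned}\tag{4.3}$$

for every \(f\in \ell ^{2}(G)\) and every \(\epsilon >0\).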

Remark 4.2

It is interesting to note that the inequality (4.3) is independent of the edges outside of the clusters \(S_{j}\). In other words, if one rearranges and mutually connects subgraphs \(\{S_{j}\}_{j\in J}\) in any other way in order to obtain a new graph \({\widetilde{G}}\), the inequality (4.3) would remain the same.

Remark 4.3

In this connection it is worth noting that in [10, 27] another family of inequalities of Poincare-type and Plancherel-Polya-type was established. They also rely on a certain disjoint cover of a graph by subgraphs (clusters), however those inequalities are independent of the edges inside of the clusters and depend solely on the edges between them.

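Let us introduce the notations

$$\begin{aligned} \Lambda _{{\mathcal {S}}}=\inf _{j\in J}\lambda _{1,j} \end{aligned}$$

and

$$\begin{aligned} \Theta _{\Xi }=\sup _{j\in J}\theta _{j}=\sup _{j\in J}\frac{\Vert \psi _{j}\Vert ^{2}}{|\Psi _{j}(\varphi _{0,j})|^{2}}. \end{aligned}$$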

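Since our consideration includes infinite graphs, we will always assume that

$$\begin{aligned} \Lambda _{{\mathcal {S}}}>0\qquad \text {and}\qquad \Theta _{\Xi }<\infty . \end{aligned}$$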

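We note the following obvious inequality (its left-hand side only involves the edges inside the clusters):

$$\begin{aligned} \sum _{j\in J}\Vert \nabla _{j}f_{j}\Vert ^{2}\le \Vert \nabla f\Vert ^{2},\quad f\in \ell ^{2}(G). \end{aligned}$$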

Combining it with our assumption that the equality \(\Vert \nabla f\Vert ^{2}=\Vert L^{1/2} f\Vert ^{2}\) holds (see (2.5)) we can formulate the following consequence of the previous theorem.

Theorem 4.4

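Assume that all the assumptions of Theorem 4.1 are satisfied. Then for every \(f\in \ell ^{2}(G)\) and every \(\epsilon >0\) the following inequalities hold true:

$$\begin{aligned} \Vert f\Vert ^{2}\le (1+\epsilon )\frac{\Theta _{\Xi }}{\Lambda _{{\mathcal {S}}}}\Vert \nabla f\Vert ^{2}+\left( 1+\frac{1}{\epsilon }\right) \sum _{j\in J}\frac{|\Psi _{j}(f)|^{2}}{|\Psi _{j}(\varphi _{0,j})|^{2}}, \end{aligned}$$

$$\begin{aligned} \Vert f\Vert ^{2}\le (1+\epsilon )\frac{\Theta _{\Xi }}{\Lambda _{{\mathcal {S}}}}\Vert L^{1/2}f\Vert ^{2}+\left( 1+\frac{1}{\epsilon }\right) \sum _{j\in J}\frac{|\Psi _{j}(f)|^{2}}{|\Psi _{j}(\varphi _{0,j})|^{2}}. \end{aligned}\tag{4.9}$$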
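The following sketch checks Theorem 4.4 on a small community graph. The inequality tested is the one obtained by summing Theorem 3.3 over the clusters:

\(\Vert f\Vert ^{2}\le (1+\epsilon )\frac{\Theta _{\Xi }}{\Lambda _{{\mathcal {S}}}}\Vert L^{1/2}f\Vert ^{2}+(1+\frac{1}{\epsilon })\sum _{j}\frac{|\Psi _{j}(f)|^{2}}{|\Psi _{j}(\varphi _{0,j})|^{2}}\).

The sketch assumes \(\psi _{j}=\chi _{S_{j}}\) (averages over clusters), \(\Lambda _{{\mathcal {S}}}=\min _{j}\lambda _{1,j}\) and \(\Theta _{\Xi }=\max _{j}\Vert \psi _{j}\Vert ^{2}/|\Psi _{j}(\varphi _{0,j})|^{2}\). The cluster sizes and random weights are arbitrary. It also checks that the sum of the local gradient energies does not exceed \(\Vert \nabla f\Vert ^{2}\).

```python
import numpy as np

rng = np.random.default_rng(4)
sizes = [3, 4, 2, 5]                               # illustrative cluster sizes
labels = np.repeat(np.arange(len(sizes)), sizes)   # cluster index of every vertex
n = labels.size
same = labels[:, None] == labels[None, :]
W = np.where(same, rng.uniform(0.5, 1.5, (n, n)), rng.uniform(0.0, 1e-3, (n, n)))
W = np.triu(W, 1)
W = W + W.T                                        # heavy edges inside, light edges between

def lap(A):
    """Weighted Laplacian D - A."""
    return np.diag(A.sum(axis=1)) - A

L = lap(W)
clusters = [np.flatnonzero(labels == j) for j in range(len(sizes))]
psis, psi_phi0, lam1, thetas = [], [], [], []
for idx in clusters:
    lam1.append(np.linalg.eigvalsh(lap(W[np.ix_(idx, idx)]))[1])  # lambda_{1,j}
    psi = np.zeros(n)
    psi[idx] = 1.0                                 # psi_j = chi_{S_j}
    c = psi @ (psi / np.sqrt(idx.size))            # Psi_j(phi_{0,j})
    psis.append(psi)
    psi_phi0.append(c)
    thetas.append((psi @ psi) / c ** 2)            # theta_j
Lambda_S, Theta_Xi = min(lam1), max(thetas)

def rhs_4_9(f, eps):
    """Right-hand side of the tested inequality, using ||L^{1/2} f||^2 = <L f, f>."""
    samples = sum((p @ f) ** 2 / c ** 2 for p, c in zip(psis, psi_phi0))
    return (1 + eps) * Theta_Xi / Lambda_S * (f @ L @ f) + (1 + 1 / eps) * samples

ok = all(f @ f <= rhs_4_9(f, eps) + 1e-10
         for f in rng.standard_normal((500, n)) for eps in (0.1, 1.0, 10.0))
g = rng.standard_normal(n)
local = sum(g[i] @ lap(W[np.ix_(i, i)]) @ g[i] for i in clusters)
print(Theta_Xi, ok, local <= g @ L @ g)
```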

4.2 Plancherel-Polya Inequalities and Sampling Theorems

Another consequence of (4.3) is the following statement about the Plancherel-Polya (or Marcinkiewicz-Zygmund, or frame) inequalities.

Theorem 4.5

If all assumptions of Theorem 4.1 hold then the following Plancherel-Polya inequalities hold

for every \(f\in \ell ^{2}(G)\) such that \(f|_{S_{j}}=f_{j}\in {\mathcal {X}}_{j}(\tau _{j})\) for all j and there exists a constant \(\sigma >0\) for which

for some \(\epsilon >0\).

Proof

To prove Theorem 4.5 we use its conditions to obtain for each j:

Along with (4.3) it gives

and then since \(\sum _{j}\Vert f_{j}\Vert ^{2}=\Vert f\Vert ^{2}\) and \(1/|\Psi _{j}(\varphi _{0,j})|^{2}\le a\) we obtain

On the other hand because \(\Vert \psi _{j}\Vert ^{2}\le c\) for all j we have

This proves the Plancherel-Polya inequality (4.10). \(\square \)

Remark 4.6

In connection with this statement it may be useful to recall the comments following Definition 3.

The Plancherel-Polya inequalities obviously imply the following Corollary.

Corollary 4.1

Assume that all assumptions of Theorem 4.1 hold true. If for \(f, g\in \ell ^{2}(G)\) and every j

(a) \(\Psi _{j}(f_{j})=\Psi _{j}(g_{j}),\ f_{j}=f|_{S_{j}},\ g_{j}=g|_{S_{j}}\),

(b) \(f_{j}-g_{j}\) belongs to \({\mathcal {X}}_{j}(\tau _{j})\) and (4.11) is satisfied,

then

$$\begin{aligned} f=g. \end{aligned}$$

In particular, if for every j one has that \(\Psi _{j}(f_{j})=0\), and every \(f_{j}\) belongs to \({\mathcal {X}}_{j}(\tau _{j})\) then \(f=0\).

At the same time the inequality (4.3) has the following implication.

Theorem 4.7

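Assume that all assumptions of Theorem 4.5 hold true. For every \(f\in PW_{\omega }(G)\) with \(\omega \) satisfying

$$\begin{aligned} 0\le \omega <\frac{\Lambda _{{\mathcal {S}}}}{\Theta _{\Xi }} \end{aligned}\tag{4.13}$$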

the Plancherel-Polya inequalities hold

for those \(\epsilon \) for which the inequality \(\mu =(1+\epsilon )\>\frac{\Theta _{\Xi }}{\Lambda _{{\mathcal {S}}}}\>\omega <1\) holds.

Proof

We are using the assumption \(0\le \omega <\frac{ \Lambda _{{\mathcal {S}}} }{ \Theta _{\Xi } } \) along with (4.9) and (2.6) to obtain for \(f\in PW_{\omega }(L)\)

and then if \(\mu =a\>\omega <1\) we have

for those \(\epsilon \) for which the inequality \(\mu =(1+\epsilon )\>\frac{\Theta _{\Xi }}{\Lambda _{{\mathcal {S}}}}\>\omega <1\) holds. This proves Theorem 4.7. \(\square \)

This implies the following uniqueness and reconstruction result.

Corollary 4.2

Assume that all assumptions of Theorem 4.5 hold. If for \(f, g\in PW_{\omega }(G)\) with \(\omega \) satisfying (4.13) one has

for all j, then \(f=g\). In particular, if for \(f\in PW_{\omega }(G)\) one has \(\Psi _{j}(f_{j})=0\) for all j, then \(f=0\). Moreover, every \(f\in PW_{\omega }(G)\) can be reconstructed from the set of its “samples” \( \Psi _{j}(f)\) in a stable way.
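Corollary 4.2 does not prescribe a reconstruction algorithm at this point (splines are introduced in Sects. 5 and 6). On a finite graph, one simple way to see uniqueness and stability is least squares in an eigenbasis of \(PW_{\omega }(L)\). This is our own choice, not the method of the paper. When \(\omega <\Lambda _{{\mathcal {S}}}/\Theta _{\Xi }\), the matrix \((\langle \psi _{j},\varphi _{i}\rangle )\) must have full column rank. The sketch assumes unit-weight complete clusters joined by light edges, \(\psi _{j}=\chi _{S_{j}}\) (so \(\Theta _{\Xi }=1\) and \(\Lambda _{{\mathcal {S}}}\) is the smallest cluster size), and real-valued signals. `community_graph` is a hypothetical helper.

```python
import numpy as np

def community_graph(sizes, cross_weight):
    """Unit-weight complete clusters K_m; consecutive clusters joined by one light edge."""
    n = sum(sizes)
    W = np.zeros((n, n))
    starts = np.cumsum([0] + sizes[:-1])
    for s, m in zip(starts, sizes):
        W[s:s + m, s:s + m] = 1.0 - np.eye(m)
    for s, m in zip(starts[:-1], sizes[:-1]):
        W[s + m - 1, s + m] = W[s + m, s + m - 1] = cross_weight
    return W, starts

sizes = [3, 4, 2, 5, 3]
W, starts = community_graph(sizes, 1e-3)
L = np.diag(W.sum(axis=1)) - W
lam, Phi = np.linalg.eigh(L)

Psi = np.zeros((len(sizes), W.shape[0]))          # row j is psi_j = chi_{S_j}
for j, (s, m) in enumerate(zip(starts, sizes)):
    Psi[j, s:s + m] = 1.0

omega = 0.5 * min(sizes)          # Lambda_S = min(sizes) and Theta_Xi = 1 here
Phi_w = Phi[:, lam <= omega]      # orthonormal basis of PW_omega(L)
f = Phi_w @ np.random.default_rng(5).standard_normal(Phi_w.shape[1])
samples = Psi @ f                 # the data Psi_j(f) = <psi_j, f>

# least-squares fit of the coefficients of f in the basis Phi_w
c, *_ = np.linalg.lstsq(Psi @ Phi_w, samples, rcond=None)
f_rec = Phi_w @ c
print(Phi_w.shape[1], np.allclose(f_rec, f))
```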

We will call the interval \([0,\>\>\frac{ \Lambda _{{\mathcal {S}}} }{ \Theta _{\Xi } })\) in (4.13) the admissible interval. The eigenvalues of L which belong to it will be called the admissible eigenvalues of L, and the corresponding eigenfunctions will be called the reconstructable eigenfunctions.

Remark 4.8

The following important question arises in connection with the last two statements: how many frequencies of L are contained in the admissible interval \( \left[ 0,\> \frac{ \Lambda _{{\mathcal {S}}} }{ \Theta _{\Xi } }\right) ? \) If the spectrum of L contains an interval of the form \((0,\>\nu ),\ \nu >0\) (this can happen only if G is infinite), then the admissible interval \( \left[ 0,\> \frac{ \Lambda _{{\mathcal {S}}} }{ \Theta _{\Xi } }\right) \) contains “many” frequencies of L.

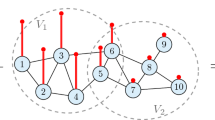

Now suppose that G is finite. If the set of clusters \({\mathcal {S}}=\{S_{j}\}\) and the functionals \(\{\Psi _{j}\}\) are fixed, one can see that \(\frac{\Theta _{\Xi }}{\Lambda _{{\mathcal {S}}}}\) is determined by \(\Lambda _{{\mathcal {S}}}=\min _{j}\{\lambda _{1,j}\}>0\). As has been mentioned, our inequality (4.3) is independent of the edges between the clusters \(S_{j}\). Let us consider the limiting case of a disconnected graph whose connected components are exactly our clusters. The Laplacian of such a disconnected graph is the direct sum of the Laplacians \(L_{j}\), and its spectrum is the union of the spectra of all \(L_{j}\). Thus, in this case (recall that \(\Theta _{\Xi }\ge 1\)) the interval \( \left[ 0,\> \frac{ \Lambda _{{\mathcal {S}}} }{ \Theta _{\Xi } }\right) \) contains only the eigenvalue zero, of multiplicity \(|{\mathcal {S}}|=|J|\), which is the number of all clusters. Clearly, by “slightly” perturbing this disconnected graph (i.e. by adding a “few light” edges between the clusters) one can construct many community-type graphs for which the admissible interval will contain exactly |J| eigenvalues counted with multiplicities. More substantial perturbations will reduce the number of reconstructable eigenvalues and eigenfunctions. This pattern is illustrated in Figs. 1 and 2.

Adjacency matrix A of a community graph with 45 vertices and 11 clusters. These plots demonstrate that when connections (weights) within clusters are substantially stronger than the ones outside of the clusters then the number of eigenvalues in the admissible interval is exactly the number of clusters. Left: the adjacency matrix A of the entire graph. It consists of clusters with random weights (of the order of magnitude \(\sim 1\)) and size (between 2 and 6). The dark-blue pixels have random weights of the order of magnitude \(\sim 1.0e-03\), the two (barely visible) diagonals parallel to the main one have weights \(\sim 1.0e-02\). Right: the admissible eigenvalues. The graph is covered by 11 clusters as shown on the left figure. The figure on the right illustrates that for graphs with |J| ’strong’ (in the sense described above) clusters there are exactly |J| admissible eigenvalues (see Remark 4.8); (the zero eigenvalue is not shown). For this example \(\Lambda _{{\mathcal {S}}} = 0.8786\) (not shown). Our method enables the exact recovery of functions from the span of the corresponding |J| first reconstructable eigenfunctions of G by using their average values over clusters (see Sect. 4.4 and Sect. 6)

The reconstructable eigenvalues for a graph with 801 nodes and 201 clusters. The inside weights are of magnitude \(\sim 1\) and the outside weights are \(\sim 1.0e-04\). The smallest non-zero eigenvalue over all cluster sub-matrices is \(\Lambda _{{\mathcal {S}}} = 0.4917\) (not shown). There are 201 reconstructable eigenfunctions. This is another illustration of the fact that when a graph G is covered by |J| clusters and the connections (weights) inside the clusters are substantially greater than the connections outside of them, there are exactly |J| reconstructable eigenvalues. Our method enables an efficient recovery of functions from the span of the reconstructable eigenfunctions of L by using interpolation based on weighted average splines (see Sect. 4.4).
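The count in Remark 4.8 is easy to reproduce. Adding edges between clusters adds a positive-semidefinite matrix to the direct sum of the \(L_{j}\), so by Weyl's monotonicity theorem no eigenvalue decreases. The \((|J|+1)\)-th smallest eigenvalue of the direct sum is \(\Lambda _{{\mathcal {S}}}\) and \(\Theta _{\Xi }\ge 1\). Hence there are never more than |J| admissible eigenvalues, and for light enough inter-cluster edges there are exactly |J|. The sketch below uses unit-weight complete clusters chained by single edges, a simplification of ours rather than the random graphs of Figs. 1 and 2. It takes \(\Theta _{\Xi }=1\), which corresponds to averages over whole clusters.

```python
import numpy as np

def community_graph(sizes, cross_weight):
    """Clusters are unit-weight complete graphs K_m; cluster i is joined to cluster i+1
    by a single edge of weight cross_weight (last vertex of i to first vertex of i+1)."""
    n = sum(sizes)
    W = np.zeros((n, n))
    starts = np.cumsum([0] + sizes[:-1])
    for s, m in zip(starts, sizes):
        W[s:s + m, s:s + m] = 1.0 - np.eye(m)
    for s, m in zip(starts[:-1], sizes[:-1]):
        W[s + m - 1, s + m] = W[s + m, s + m - 1] = cross_weight
    return W

def admissible_count(sizes, cross_weight, theta_xi=1.0):
    """Number of eigenvalues of L in [0, Lambda_S / Theta_Xi)."""
    W = community_graph(sizes, cross_weight)
    L = np.diag(W.sum(axis=1)) - W
    Lambda_S = min(sizes)                  # lambda_1(K_m) = m for unit weights
    bound = Lambda_S / theta_xi
    eigs = np.linalg.eigvalsh(L)
    # half-open interval; the relative tolerance keeps round-off at the endpoint out
    return int(np.sum(eigs < bound * (1 - 1e-9)))

sizes = [3, 4, 2, 5, 3]
for w in (1e-3, 1e-1, 10.0):
    print(w, admissible_count(sizes, w))
```

With the lightest inter-cluster weight the count equals |J| = 5; heavier weights can only lower it.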

4.3 Sampling by Averages

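As an illustration of our previous results, let us consider the sampling procedure based on average values of functions. By this we mean the particular situation when every \( \psi _{j}\) is the characteristic function \(\chi _{U_{j}}\) of a subset \(U_{j}\subseteq S_{j}\) (Fig. 3). In this case one has

$$\begin{aligned} \Psi _{j}(\varphi _{0,j})=\frac{|U_{j}|}{\sqrt{|S_{j}|}},\qquad \Vert \psi _{j}\Vert ^{2}=|U_{j}|, \end{aligned}$$

and then

$$\begin{aligned} \theta _{j}=\frac{|S_{j}|}{|U_{j}|},\qquad \Theta _{\Xi }=\sup _{j\in J}\frac{|S_{j}|}{|U_{j}|}. \end{aligned}$$

Thus Theorem 4.7 says that for

$$\begin{aligned} 0\le \omega <\Lambda _{{\mathcal {S}}}\Big /\sup _{j\in J}\frac{|S_{j}|}{|U_{j}|} \end{aligned}$$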

the Plancherel-Polya inequality holds

for

and all \(\epsilon >0\) for which

4.4 Averages Over Clusters

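Let us consider the limiting situation in which \(U_{j}=S_{j}\) for all j, so that the samples are averages over \(S_{j}\) (Fig. 4). In this case

$$\begin{aligned} \Psi _{j}(\varphi _{0,j})=\sqrt{|S_{j}|},\qquad \Vert \psi _{j}\Vert ^{2}=|S_{j}|,\qquad \theta _{j}=\Theta _{\Xi }=1. \end{aligned}$$

It means that for

$$\begin{aligned} 0\le \omega <\Lambda _{{\mathcal {S}}} \end{aligned}$$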

we obtain the following Plancherel-Polya inequality

for all \(\epsilon >0\) for which the inequality \(\mu =\frac{(1+\epsilon ) }{\Lambda _{{\mathcal {S}}}}\omega <1\) holds.

4.5 Point-Wise Sampling

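Another limiting case is point-wise sampling, when for every j the corresponding function \(\psi _{j}\) is given by \( \psi _{j}=\delta _{u_{j}}\), where \(\delta _{u_{j}}\) is the Dirac measure at an (arbitrary) vertex \(u_{j}\in S_{j}\). Thus for every j one has

$$\begin{aligned} \Psi _{j}(\varphi _{0,j})=\frac{1}{\sqrt{|S_{j}|}},\qquad \Vert \psi _{j}\Vert ^{2}=1, \end{aligned}$$

and

$$\begin{aligned} \theta _{j}=|S_{j}|,\qquad \Theta _{\Xi }=\sup _{j\in J}|S_{j}|. \end{aligned}$$

So for

$$\begin{aligned} 0\le \omega <\frac{\Lambda _{{\mathcal {S}}}}{\sup _{j}|S_{j}|} \end{aligned}$$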

one has the following Plancherel-Polya inequality

for all \(\epsilon >0\) for which the inequality \( \mu =\frac{ (1+\epsilon ) \sup _{j}|S_{j}| }{ \Lambda _{{\mathcal {S}}} }\omega <1 \) holds.
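The three sampling schemes differ only through \(\theta _{j}=\Vert \psi _{j}\Vert ^{2}/|\Psi _{j}(\varphi _{0,j})|^{2}\). This equals \(|S_{j}|/|U_{j}|\) for \(\chi _{U_{j}}\), 1 for \(\chi _{S_{j}}\) and \(|S_{j}|\) for \(\delta _{u_{j}}\), and the admissible bound \(\Lambda _{{\mathcal {S}}}/\Theta _{\Xi }\) shrinks accordingly. The short sketch below computes these values numerically on a single cluster of six vertices, chosen arbitrarily.

```python
import numpy as np

def theta(psi):
    """theta_j = ||psi_j||^2 / |<psi_j, phi_{0,j}>|^2 for psi_j on one cluster S_j."""
    phi0 = np.ones(psi.size) / np.sqrt(psi.size)   # phi_{0,j} = chi_{S_j} / sqrt(|S_j|)
    return (psi @ psi) / (psi @ phi0) ** 2

size_S = 6
chi_U = np.r_[np.ones(2), np.zeros(size_S - 2)]    # average over U_j with |U_j| = 2
chi_S = np.ones(size_S)                            # average over the whole cluster
delta = np.eye(size_S)[3]                          # point sample at one vertex

for name, psi in [("chi_U", chi_U), ("chi_S", chi_S), ("delta", delta)]:
    print(name, theta(psi))
```

With, say, \(\Lambda _{{\mathcal {S}}}=1\), point-wise sampling on clusters of size 6 thus allows bandwidths only below 1/6. Averages over whole clusters allow bandwidths up to 1.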

5 Weighted Average Variational Splines

5.1 Variational Interpolating Splines

As in the previous sections we assume that G is a connected finite or infinite graph, \({\mathcal {S}}=\{S_{j}\}_{j\in J}\), is a disjoint cover of V(G) by connected and finite subgraphs \(S_{j}\) and every \(\psi _{j}\in \ell ^{2}(S_{j}),\>\>\>j\in J,\) has support in \(S_{j}\).

For a given sequence \(\mathbf {\alpha }=\{\alpha _{j}\}\in l_{2}\), the set of all functions f in \(\ell ^{2}(G)\) such that \(\Psi _{j}(f)={\langle }f, \psi _{j}{\rangle }=\alpha _{j}\) for all j will be denoted by \(Z_{\mathbf {\alpha }}\). In particular,

$$\begin{aligned} Z_{\mathbf {0}}=\{f\in \ell ^{2}(G):\ \Psi _{j}(f)=0\ \text {for all } j\in J\} \end{aligned}$$

corresponds to the sequence of zeros. We consider the following optimization problem:

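For a given sequence \(\mathbf{\alpha }=\{\alpha _{j}\}\in l_{2}\), find a function f in the set \(Z_{\mathbf {\alpha }}\subset \ell ^{2}(G)\) which minimizes the functional

$$\begin{aligned} u\mapsto \Vert L^{k/2}u\Vert ,\quad u\in Z_{\mathbf {\alpha }}. \end{aligned}\tag{5.1}$$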

Definition 4

Every solution of the above variational problem is called a weighted average spline of order k.

The following lemmas were proved in [22, 23].

Lemma 5.1

If T is a self-adjoint operator in a Hilbert space and for some f from the domain of T

then for all \(m=2^{l}, l=0,1,2, ...\)

as long as f belongs to the domain of \(T^{m}\).

Lemma 5.2

The norms \(\left( \Vert L^{k/2}f\Vert ^{2}+\Vert f\Vert ^{2}\right) ^{1/2} \) and \( \left( \Vert L^{k/2}f\Vert ^{2}+\sum _{j}|\Psi _{j}(f)|^{2}\right) ^{1/2} \) are equivalent.

Proof

According to (4.9) there exists a constant \(C_{1}\) such that

By using Lemma 5.1 we obtain for every natural k existence of a constant \(C_{k}>0\) such that for every f

It gives that

The inverse inequality follows from the estimate

where \( c=\sup _{j}\Vert \psi _{j}\Vert ^{2}\). This completes the proof of the lemma. \(\square \)

Remark 5.3

Note that L is not always invertible!

We have the following characterization of variational splines.

Theorem 5.4

A function \(s_{k}\in \ell ^{2}(G)\) is a variational spline if and only if \(L^{k/2}s_{k}\) is orthogonal to \(L^{k/2}Z_{{\mathbf {0}}}\).

Corollary 5.1

Splines of the same order k form a linear space.

The last theorem implies the next one.

Theorem 5.5

Under the above assumptions the optimization problem has a unique solution for every k.

5.2 Solving the Variational Problem

Theorem 5.4 also justifies the following algorithm to find a variational interpolating spline.

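(1) Pick any function \(f\in Z_{\mathbf {\alpha }}\).

(2) Construct \({\mathcal {P}}_{0}f\), where \({\mathcal {P}}_{0}\) is the orthogonal projection onto \(Z_{{\mathbf {0}}}\) with respect to the inner product

$$\begin{aligned} {\langle }f,g{\rangle }_{k}= \langle L^{k/2}f, L^{k/2}g \rangle +\sum _{j\in J}\Psi _{j}(f)\overline{\Psi _{j}(g)}, \end{aligned}$$

whose norm is equivalent to the Sobolev norm by Lemma 5.2. On \(Z_{{\mathbf {0}}}\) this inner product reduces to \(\langle L^{k/2}f, L^{k/2}g\rangle \), so orthogonality to \(Z_{{\mathbf {0}}}\) is exactly the condition of Theorem 5.4.

(3) The function \(f-{\mathcal {P}}_{0}f\) is the unique solution to the given optimization problem.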
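A finite-graph sketch of steps (1)-(3) follows. It uses the inner product \(\langle u,v\rangle _{k}=\langle L^{k/2}u,L^{k/2}v\rangle +\sum _{j}\Psi _{j}(u)\overline{\Psi _{j}(v)}\), whose norm is equivalent to the Sobolev norm by Lemma 5.2. With this choice, orthogonality to \(Z_{{\mathbf {0}}}\) reduces to the condition of Theorem 5.4. As an independent cross-check, the sketch also solves the same constrained minimization through its Lagrange (KKT) system, a standard technique that is not part of the paper, and it tests the property stated in Lemma 5.7. The graph, k = 4, \(\psi _{j}=\chi _{S_{j}}\) and all variable names are assumptions; signals are real-valued.

```python
import numpy as np

rng = np.random.default_rng(6)
sizes = [3, 4, 2, 5]
labels = np.repeat(np.arange(len(sizes)), sizes)
n, J = labels.size, len(sizes)
same = labels[:, None] == labels[None, :]
W = np.triu(np.where(same, 1.0, 0.05) * rng.uniform(0.5, 1.5, (n, n)), 1)
W = W + W.T                                      # connected graph with weaker cross edges
L = np.diag(W.sum(axis=1)) - W
k = 4
Lk = np.linalg.matrix_power(L, k)

Psi = (labels[None, :] == np.arange(J)[:, None]).astype(float)   # rows psi_j = chi_{S_j}
alpha = rng.standard_normal(J)                                     # prescribed samples

# Step (1): some f in Z_alpha (the minimum-norm solution of Psi f = alpha).
f = np.linalg.lstsq(Psi, alpha, rcond=None)[0]
# Step (2): project onto Z_0 = ker(Psi) in <u, v>_k = u^T (L^k + Psi^T Psi) v.
M = Lk + Psi.T @ Psi
N = np.linalg.svd(Psi)[2][J:].T                  # orthonormal basis of ker(Psi)
P0f = N @ np.linalg.solve(N.T @ M @ N, N.T @ M @ f)
# Step (3): the spline.
s = f - P0f

# Cross-check: minimize s^T L^k s subject to Psi s = alpha via the KKT system.
K = np.block([[Lk, Psi.T], [Psi, np.zeros((J, J))]])
s_kkt = np.linalg.solve(K, np.r_[np.zeros(n), alpha])[:n]

# Lemma 5.7: L^k s should lie in span{psi_j}; report the relative residual.
r = Lk @ s
coef = np.linalg.lstsq(Psi.T, r, rcond=None)[0]
print(np.allclose(Psi @ s, alpha), np.allclose(s, s_kkt),
      np.linalg.norm(Psi.T @ coef - r) / np.linalg.norm(r))
```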

5.3 Representations of Splines

We keep the same notations as above.

Definition 5

For \(\nu \in J\) we say that \({\mathcal {L}}^{\nu }_{k}\) is a Lagrangian spline associated with \(S_{\nu }\) if it is a function in \(\ell ^{2}(G)\) such that

(1) \(\langle \psi _{j}, {\mathcal {L}}^{\nu }_{k}\rangle =\delta _{\nu ,j}\), where \(\delta _{\nu ,j}\) is the Kronecker delta,

(2) \({\mathcal {L}}^{\nu }_{k}\) is a minimizer of the functional (5.1).

The theorem below is a direct consequence of Corollary 5.1 and of the uniqueness of splines (Theorem 5.5).

Theorem 5.6

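If \(s_{k}\) is a spline of order k and \(\langle \psi _{\nu }, s_{k}\rangle =\alpha _{\nu }\) for all \(\nu \in J\), then

$$\begin{aligned} s_{k}=\sum _{\nu \in J}\alpha _{\nu }{\mathcal {L}}^{\nu }_{k}. \end{aligned}$$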

The next lemma provides another test for being a variational interpolating spline.

Lemma 5.7

A function \(s_{k}\) is a spline if and only if \(L^{k}s_{k}\) belongs to the span of \(\{\psi _{j}\}\). Moreover, the following equality holds

where

The proof is similar to the proof of the corresponding lemma in [26]. This lemma implies another representation of splines.

Theorem 5.8

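If \(F^{j}_{k}\) is a “fundamental solution of \(L^{k}\)” in the sense that it is a solution to the equation

$$\begin{aligned} L^{k}F^{j}_{k}=\psi _{j}, \end{aligned}\tag{5.5}$$

then for every spline \(s_{k} \) of order k there exist coefficients \(\mu _{j,k}\) such that the following representation holds:

$$\begin{aligned} s_{k}=\sum _{j\in J}\mu _{j,k}F^{j}_{k}. \end{aligned}\tag{5.6}$$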

Remark 5.9

Note that the last representation is not unique, at least in the case of a finite graph, since in this case the operator L has a non-trivial kernel and any two solutions \(F^{j,(1)}_{k},\>F^{j,(2)}_{k}\) of equation (5.5) differ by a constant function \(c\chi _{G}\).

6 Interpolation and Approximation by Splines. Reconstruction of Paley-Wiener Functions Using Splines

The goal of this section is to prove a theorem on the reconstruction of appropriate Paley-Wiener functions from their average samples by means of average variational interpolating splines.

6.1 Interpolation by Splines. Reconstruction of Paley-Wiener Functions Using Splines

The following lemma was proved in [26].

Lemma 6.1

If T is a self-adjoint non-negative operator in a Hilbert space H and for a \(\varphi \in H\) and a positive b the following inequality holds

then for the same \(\varphi \in H\), and all \( k=2^{l}, l=0,1,2,...\) the following inequality holds

Theorem 6.2

Let’s assume that G is a connected finite or infinite graph, \(\{S_{j}\}_{j\in J}\) is a disjoint cover of V(G) by connected and finite subgraphs \(S_j\) and every \(\psi _{j} \in \ell ^{2}(S_{j}),\> j \in J\), has support in \(S_j\). If

then any function f in \(PW_{\omega }(L),\ \omega >0,\) can be reconstructed from the set of values \(\{{\langle }f, \psi _{j}{\rangle }\}\) using the formula

$$\begin{aligned} f=\lim _{k\rightarrow \infty }s_{k}(f), \end{aligned}$$

and the error estimate is

where

Proof

For \(k=2^{l},\ l=0,1,2,\dots \), apply inequality (4.9) to the function \(f-s_{k}(f)\) to obtain

Since \(s_{k}(f)\) interpolates f, the last term here is zero. Because \(\epsilon >0\) here is arbitrary, letting \(\epsilon \rightarrow 0\) brings us to the next inequality

and an application of Lemma 6.1 gives

Using the minimization property of \(s_{k}(f)\) and the Bernstein inequality (2.6) for \(f\in PW_{\omega }(L)\), one obtains (6.4). \(\square \)
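Theorem 6.2 can be observed numerically on a finite community graph. The sketch below assumes unit-weight complete clusters chained by light edges, \(\psi _{j}=\chi _{S_{j}}\), and a signal f in the span of the first |J| eigenvectors of L, all of which are admissible here. Each spline \(s_{k}(f)\) is computed from the Lagrange (KKT) system of the constrained minimization, which is our choice of solver. For k = 4, the argument of the proof gives a relative error of at most \(2(\Theta _{\Xi }\omega /\Lambda _{{\mathcal {S}}})^{2}\). That argument combines the Poincaré inequality with a vanishing sample term, Lemma 6.1, the minimality of \(s_{k}(f)\) and the Bernstein inequality, and the resulting bound is tiny for this graph.

```python
import numpy as np

def community_graph(sizes, cross_weight):
    """Unit-weight complete clusters K_m; consecutive clusters joined by one light edge."""
    n = sum(sizes)
    W = np.zeros((n, n))
    starts = np.cumsum([0] + sizes[:-1])
    for s, m in zip(starts, sizes):
        W[s:s + m, s:s + m] = 1.0 - np.eye(m)
    for s, m in zip(starts[:-1], sizes[:-1]):
        W[s + m - 1, s + m] = W[s + m, s + m - 1] = cross_weight
    return W, starts

sizes = [3, 4, 2, 5, 3]
W, starts = community_graph(sizes, 1e-3)
n, J = W.shape[0], len(sizes)
L = np.diag(W.sum(axis=1)) - W
lam, Phi = np.linalg.eigh(L)

Psi = np.zeros((J, n))
for j, (s, m) in enumerate(zip(starts, sizes)):
    Psi[j, s:s + m] = 1.0                     # psi_j = chi_{S_j}

f = Phi[:, :J] @ np.random.default_rng(7).standard_normal(J)   # f in PW_omega, omega = lam[J-1]
alpha = Psi @ f                               # the samples <psi_j, f>

def spline(k):
    """Minimizer of ||L^{k/2} u|| subject to Psi u = alpha (KKT system)."""
    K = np.block([[np.linalg.matrix_power(L, k), Psi.T], [Psi, np.zeros((J, J))]])
    return np.linalg.solve(K, np.r_[np.zeros(n), alpha])[:n]

for k in (1, 2, 4, 8):
    print(k, np.linalg.norm(f - spline(k)) / np.linalg.norm(f))
```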

Interpolation based on averaged measurements. There are 801 nodes and 201 clusters in the graph. The number of reconstructable eigenfunctions is 201 (see Figs. 1 and 2). A linear combination f, with random coefficients, of 20 reconstructable eigenfunctions was generated, and its averages \(|S_{j}|^{-1}\sum _{v\in S_{j}}f(v)\) over each \(S_{j}\) were calculated. Using these values, the variational average weighted spline of order \(k=10\) was constructed by using the regularized Laplacian \((I+L)\) (see Remark 7.1). The values of the spline almost perfectly overlap with the values of the function f (only 250 nodes are plotted). The MAE over all vertices of the graph is 1.07e-08.

Mean absolute error (MAE) for spline approximation as a function of k and \(\beta \) (see Remark 7.1). The graph G and the signal f are the same as in the Fig. 4, but the interpolation procedure is point-wise. It means that a single point \(u_{j}\) from every cluster \(S_{j}\) was chosen and the values \(f(u_{j})\) were used in the interpolation process

7 Algorithm for Computing Variational Interpolating Weighted Average Splines

7.1 Computing Variational Interpolating Weighted Average Splines for Finite Graphs

The above results give a constructive way of computing variational splines. Given a cover \({\mathcal {S}}=\{S_{j}\}\), a set of functions \(\Psi =\{\psi _{j}\}\) with \(\operatorname{supp}\psi _{j}\subseteq S_{j}\), and a sequence \(\alpha =\{\alpha _{j}\}\), we construct a spline \(Y_{k}^{\alpha }\) which takes the prescribed values \(\langle Y_{k}^{\alpha }, \psi _{j} \rangle =\alpha _{j}\) (Figs. 5, 6).

(1) First, fix \(k\in {\mathbb {N}}\) and solve the following J linear systems of size \(|V(G)|\times |V(G)|\)

$$\begin{aligned} L^{k}F_{k}^{j}=\psi _{j},\>\>\>\> j\in J, \>\>\>k\in {\mathbb {N}}, \end{aligned}$$(7.1)

in order to determine the corresponding "fundamental solutions" \(F_{k}^{j}\), which are functions on V(G). Note that, since the operator L is not invertible, none of the systems (7.1) has a unique solution (see Remark 7.1).

(2) Next, find the representation (5.6) of the corresponding Lagrangian splines in the sense of Definition 5. To do this, solve J linear systems of size \(J\times J \) to determine the coefficients \(\mu _{j}^{\nu }\):

$$\begin{aligned} \sum _{j\in J}\mu _{j}^{\nu } \langle F_{k}^{j}, \psi _{\rho }\rangle =\delta _{\nu , \rho } , \>\>\>\> \nu , \rho \in J, \end{aligned}$$(7.2)

where \(\delta _{\nu , \rho } \) is the Kronecker delta.

(3) Every Lagrangian spline \({\mathcal {L}}^{\nu }_{k}\) of order \(k\in {\mathbb {N}}\) with the property \(\langle {\mathcal {L}}^{\nu }_{k}, \psi _{j}\rangle =\delta _{\nu , j}\) has the representation

$$\begin{aligned} {\mathcal {L}}^{\nu }_{k}=\sum _{j\in J}\mu _{j}^{\nu }F_{k}^{j},\>\>\>\> \nu \in J. \end{aligned}$$(7.3)

(4) Every spline \(Y_{k}^{\alpha }\) which takes the prescribed values \(\langle Y_{k}^{\alpha }, \psi _{j}\rangle =\alpha _{j}\) can be written explicitly as

$$\begin{aligned} Y_{k}^{\alpha }=\sum _{j\in J} \alpha _{j}{\mathcal {L}}^{j}_{k}. \end{aligned}$$
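The four steps above can be sketched in Python with NumPy for a small finite graph. This is a minimal illustration under explicit assumptions, not the authors' implementation: the graph is given by a dense symmetric weight matrix `W`, the Laplacian is taken as L = D − W, each \(\psi _{j}\) is assumed to be the normalized indicator \(|S_{j}|^{-1}\mathbf{1}_{S_{j}}\) (the averages used in the numerical experiments), and, following Remark 7.1, L is replaced by \(\beta I+L\) so that (7.1) is uniquely solvable. The function name `average_spline` and the default value of β are hypothetical.

```python
import numpy as np


def average_spline(W, clusters, alpha, k, beta=0.5):
    """Weighted average variational spline Y_k^alpha, following steps (1)-(4).

    Assumptions (illustrative only): W is a symmetric weight matrix, L = D - W,
    psi_j = |S_j|^{-1} * indicator(S_j), and L is regularized to beta*I + L.
    """
    n = W.shape[0]
    A = beta * np.eye(n) + np.diag(W.sum(axis=1)) - W   # regularized Laplacian

    # Columns of Psi are the measuring functions psi_j (cluster averages).
    Psi = np.zeros((n, len(clusters)))
    for j, S in enumerate(clusters):
        Psi[S, j] = 1.0 / len(S)

    # Step (1): fundamental solutions F_k^j = A^{-k} psi_j, computed by k
    # successive solves instead of forming the badly conditioned matrix A^k.
    F = Psi.copy()
    for _ in range(k):
        F = np.linalg.solve(A, F)

    # Step (2): Gram matrix G[rho, j] = <F_k^j, psi_rho>; system (7.2) reads
    # G @ M = I, where M[j, nu] = mu_j^nu.
    G = Psi.T @ F
    M = np.linalg.solve(G, np.eye(len(clusters)))

    # Step (3): Lagrangian splines (7.3); column nu is sum_j mu_j^nu F_k^j.
    lagrangian = F @ M

    # Step (4): Y_k^alpha = sum_j alpha_j * (Lagrangian spline number j).
    return lagrangian @ np.asarray(alpha, dtype=float)


# Usage: path graph on 6 vertices split into three clusters of two vertices.
W = np.zeros((6, 6))
for i in range(5):
    W[i, i + 1] = W[i + 1, i] = 1.0
clusters = [[0, 1], [2, 3], [4, 5]]
Y = average_spline(W, clusters, alpha=[1.0, -2.0, 0.5], k=2)
# The cluster averages of Y reproduce alpha up to rounding.
print(np.round([Y[S].mean() for S in clusters], 6))
```

Because \(\beta I+L\) is symmetric positive definite and the clusters are disjoint, the Gram matrix in step (2) is also positive definite, so the \(J\times J\) system always has a unique solution in this regularized setting.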

In particular, when every \(\psi _{j}\) is the Dirac measure \(\delta _{u_{j}}\) at a vertex \(u_{j}\in S_{j}\), the systems (7.1) and (7.2) take, respectively, the following forms:

(1)

$$\begin{aligned} L^{k}F_{k}^{j}=\delta _{u_{j}},\>\>\>\> j\in J, \>\>\>k\in {\mathbb {N}}, \end{aligned}$$(7.4)

(2)

$$\begin{aligned} \sum _{j\in J}\mu _{j}^{\nu } F_{k}^{j}(u_{\rho }) =\delta _{\nu , \rho } , \>\>\>\> \nu , \rho \in J. \end{aligned}$$(7.5)
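In the Dirac case the matrix of system (7.5) is just the fundamental solutions evaluated at the sampled vertices. The following NumPy sketch illustrates this under stated assumptions: a dense symmetric weight matrix `W`, L = D − W, and the regularization \(\beta I+L\) of Remark 7.1 in place of L. The function name `pointwise_spline` is hypothetical, not taken from the paper.

```python
import numpy as np


def pointwise_spline(W, nodes, values, k, beta=0.5):
    """Spline of order k with prescribed values f(u_j) at the vertices u_j = nodes[j].

    Solves (7.4) and (7.5) with L replaced by beta*I + L (an assumed regularization).
    """
    n, J = W.shape[0], len(nodes)
    A = beta * np.eye(n) + np.diag(W.sum(axis=1)) - W

    # Right-hand sides of (7.4): column j is the delta function at u_j.
    F = np.zeros((n, J))
    F[nodes, np.arange(J)] = 1.0
    for _ in range(k):                  # F_k^j = A^{-k} delta_{u_j}
        F = np.linalg.solve(A, F)

    # Matrix of (7.5): G[rho, j] = F_k^j(u_rho), i.e. the rows of F at the sample nodes.
    G = F[nodes, :]
    M = np.linalg.solve(G, np.eye(J))   # M[j, nu] = mu_j^nu
    return F @ M @ np.asarray(values, dtype=float)


# Usage: cycle graph on 8 vertices, one sampled vertex per (hypothetical) cluster.
W = np.zeros((8, 8))
for i in range(8):
    W[i, (i + 1) % 8] = W[(i + 1) % 8, i] = 1.0
Y = pointwise_spline(W, [0, 3, 6], [2.0, -1.0, 4.0], k=2)
```

Here G is a principal submatrix of the positive definite matrix \((\beta I+L)^{-k}\), so it is invertible and the spline interpolates the prescribed values exactly.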

Remark 7.1

The problem with equation (7.1) is that the operator L is not invertible, at least on finite graphs: there the constant functions always lie in its kernel. One way to overcome this obstacle is to use, say, the Moore-Penrose inverse of L. Another way is to replace L by the regularization \( \beta I + L\) with \(\beta > 0\). In all our calculations we adopted the latter approach with \(\beta < 1\).
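The obstruction described in Remark 7.1 is easy to observe numerically. In the sketch below (NumPy; the cycle graph and the helper names are hypothetical choices for illustration), L annihilates constants, so on a connected finite graph the system \(L^{k}F=\psi \) is solvable only when \(\psi \) sums to zero. For an averaging function \(\psi _{j}\ge 0\) this fails, and the Moore-Penrose route then returns only the minimum-norm least-squares solution, whereas \(\beta I+L\) has all eigenvalues at least \(\beta \) and is always invertible.

```python
import numpy as np


def cycle_laplacian(n):
    """Combinatorial Laplacian L = D - W of the unweighted cycle C_n."""
    W = np.zeros((n, n))
    for i in range(n):
        W[i, (i + 1) % n] = W[(i + 1) % n, i] = 1.0
    return np.diag(W.sum(axis=1)) - W


def fundamental_solution(L, psi, k, beta=None):
    """Approximately solve L^k F = psi.

    beta=None: Moore-Penrose inverse, pinv(L)^k psi (equal to pinv(L^k) psi for symmetric L).
    beta > 0:  regularized system (beta*I + L)^k F = psi, solved by k successive solves.
    """
    if beta is None:
        return np.linalg.matrix_power(np.linalg.pinv(L, hermitian=True), k) @ psi
    A = beta * np.eye(L.shape[0]) + L
    F = np.array(psi, dtype=float)
    for _ in range(k):
        F = np.linalg.solve(A, F)
    return F


L = cycle_laplacian(5)
print(np.allclose(L @ np.ones(5), 0.0))   # constants lie in the kernel of L
print(np.linalg.matrix_rank(L))           # 4 < 5, so L is singular
```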

8 Background and Additional Comments

8.1 Known Results About Sampling Based on Subgraphs

As it has been mentioned, the idea to use local information (other than point values) for representation, sampling and reconstruction of bandlimited functions on graphs was explored in [12, 16, 38, 41, 42].

In [12] the authors suggest a unified approach to point-wise sampling, aggregation sampling and local weighted sampling on finite graphs. For a given family \(\zeta _{i}\in \ell ^{2}(G), \>\>i=1,2, \dots , M,\) they consider the matrix \(\Phi =(\zeta _{1}, \dots ,\zeta _{M})^{t}\) and call it a uniqueness operator for a space \(PW_{\omega }(L)\) if for any two \(f, g\in PW_{\omega }(L)\) the equality \(\Phi f=\Phi g\) implies that f and g are identical. One of the main results of [12] states that \(\Phi \) is a uniqueness operator for \(PW_{\omega }(L)\) if and only if the orthogonal projections \({\mathcal {P}}_{\omega }(\zeta _{i}), \>\>i=1,2, \dots , M,\) onto \(PW_{\omega }(L)\) form a frame for \(PW_{\omega }(L)\). Moreover, they determine the exact constants in the corresponding frame inequality. Namely, consider a set of orthonormal eigenfunctions \(\varphi _{0}, \varphi _{1}, \dots , \varphi _{k}\) which form a basis of \(PW_{\omega }(L)\), and let \(U_{k}\) be the matrix whose columns are these vectors \(\varphi _{i},\>\>i=0, \dots ,k\). If \(\sigma _{\min }\) and \(\sigma _{\max }\) are the smallest and the largest singular values of the matrix \(\Phi U_{k}\), then the following frame inequality holds for all \(f\in PW_{\omega }(L)\)
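The role of the singular values can be seen directly: writing \(f=U_{k}c\), one has \(\Vert f\Vert =\Vert c\Vert \) and \(\Phi f=\Phi U_{k}c\), so elementary linear algebra gives \(\sigma _{\min }^{2}\Vert f\Vert ^{2}\le \Vert \Phi f\Vert ^{2}=\sum _{i}|\langle f, \zeta _{i}\rangle |^{2}\le \sigma _{\max }^{2}\Vert f\Vert ^{2}\). The NumPy sketch below computes these constants and checks the inequality on random band-limited signals. The path graph, the averaging rows of \(\Phi \), the value \(\omega =1.5\), and the helper `frame_bounds` are illustrative assumptions, not code from [12].

```python
import numpy as np


def frame_bounds(L, Phi, omega):
    """Smallest and largest singular values of Phi @ U, where the columns of U are
    orthonormal eigenvectors of the symmetric matrix L with eigenvalues <= omega."""
    lam, V = np.linalg.eigh(L)
    U = V[:, lam <= omega]                      # orthonormal basis of PW_omega(L)
    s = np.linalg.svd(Phi @ U, compute_uv=False)
    return s.min(), s.max(), U


# Path graph on 6 vertices; each row of Phi averages f over a cluster of two vertices.
W = np.diag(np.ones(5), 1) + np.diag(np.ones(5), -1)
L = np.diag(W.sum(axis=1)) - W
Phi = np.zeros((3, 6))
for i, S in enumerate([[0, 1], [2, 3], [4, 5]]):
    Phi[i, S] = 0.5
s_min, s_max, U = frame_bounds(L, Phi, omega=1.5)
```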

The so-called aggregation sampling, developed in [16], relies on samples of a signal of the form \(f(v_{0}),\> Lf(v_{0}),\> \dots ,\>L^{m}f(v_{0}),\) where L is the Laplacian and \(v_{0}\in V(G)\) is a fixed vertex. Since L is self-adjoint, each of the samples \(L^{k}f(v_{0}),\>\>k=1,\dots , m,\) is the inner product of the original signal f with the function \(\mu _{k}=L^{k}\delta _{v_{0}}\), where \(\delta _{v_{0}}\) is the delta function supported at the vertex \(v_{0}\in V(G)\). Namely,

Since, for every finitely supported function g, the support of Lg is contained in the support of g together with all vertices adjacent to it, one obtains an increasing ladder of subgraphs \(\{v_{0}\}\subset U_{1}\subset U_{2}\subset \dots \subset U_{m}\) and a set of functions \(\mu _{0}=\delta _{v_{0}}, \>\mu _{1},\> \dots , \>\mu _{m}\), where every \(\mu _{k}\) is supported on \(U_{k}\). In other words, the authors of [16] use the collection of "local measurements" \(\{\langle f, \mu _{k}\rangle \}, \>\>f \in \ell ^{2}(G), \>\>k=0,\dots , m,\) to develop a specific approach to sampling of bandlimited functions and to obtain sparse representations of signals in the graph-frequency domain.
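Both facts used in this description, \(L^{k}f(v_{0})=\langle f, L^{k}\delta _{v_{0}}\rangle \) and the growth of the supports by at most one neighborhood per application of L, can be checked numerically. The NumPy sketch below uses an unweighted path graph and hypothetical helper names; it only illustrates the measurements, not the reconstruction method of [16].

```python
import numpy as np


def path_laplacian(n):
    """Combinatorial Laplacian of the unweighted path graph on n vertices."""
    W = np.diag(np.ones(n - 1), 1) + np.diag(np.ones(n - 1), -1)
    return np.diag(W.sum(axis=1)) - W


def aggregation_functions(L, v0, m):
    """Columns mu_k = L^k delta_{v0}, k = 0, ..., m, built by repeated application of L."""
    mu = np.zeros((L.shape[0], m + 1))
    mu[v0, 0] = 1.0
    for k in range(1, m + 1):
        mu[:, k] = L @ mu[:, k - 1]
    return mu


L = path_laplacian(7)
mu = aggregation_functions(L, v0=3, m=2)
f = np.random.default_rng(1).standard_normal(7)
# Aggregated samples L^k f(v0) computed directly and as inner products <f, mu_k>.
direct = [(np.linalg.matrix_power(L, k) @ f)[3] for k in range(3)]
via_mu = mu.T @ f
```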

The objective of [38] was to develop a decomposition of signals on graphs for efficient compression and denoising. First, the authors partitioned a finite weighted graph G into connected subgraphs \({\mathcal {G}}_{k}\). Given a signal \(f\in \ell ^{2}(G)\), its restriction to each \({\mathcal {G}}_{k}\) was expanded in the Fourier basis of the Laplacian associated with \({\mathcal {G}}_{k}\). Using these decompositions, the authors constructed a sequence of approximations to the original signal f, which they treated as signals on a series of coarse versions of G obtained by using the subgraphs \({\mathcal {G}}_{k}\) as "super-nodes".

In [41] the authors considered a finite unweighted graph G and its disjoint cover by connected subgraphs \(\{{\mathcal {N}}_{i}\}_{i\in {\mathcal {I}}}\). Given a signal \(f\in PW_{\omega }(L)\), they evaluated f at a single randomly chosen point \(u_{i}\in {\mathcal {N}}_{i}\) of each subgraph and defined a piecewise constant function F by \(F(v)=f(u_{i})\) whenever v and \(u_{i}\) belong to the same \({\mathcal {N}}_{i}\). The orthogonal projection \(f_{0}\) of F onto \(PW_{\omega }(L)\) was used as the first approximation to f in an iterative procedure which converges to \(f\in PW_{\omega }(L)\) if \(\omega \) satisfies the inequality

where

with \( {\mathcal {T}}(u_{i})\) being the shortest-path tree of the subgraph \({\mathcal {N}}(u_{i})\) rooted at \(u_{i}\), and \({\mathcal {T}}_{u_{i}}(v)\) being the subtree to which v belongs when \(u_{i}\) and its associated edges are removed from \({\mathcal {T}}(u_{i})\).
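The starting point \(f_{0}\) of the procedure in [41] can be sketched in a few lines of NumPy. This shows only the piecewise constant function F and its orthogonal projection onto \(PW_{\omega }(L)\); the subsequent iterations of [41] are not reproduced. The helper name `first_approximation`, the dense eigendecomposition, and the small path graph are assumptions made for illustration.

```python
import numpy as np


def first_approximation(L, clusters, reps, f, omega):
    """Piecewise constant F built from one sample per cluster, projected onto PW_omega(L).

    clusters[i] lists the vertices of N_i (assumed to be a disjoint cover of all
    vertices) and reps[i] is the sampled vertex u_i in N_i.
    """
    F = np.empty(L.shape[0])
    for S, u in zip(clusters, reps):
        F[S] = f[u]                     # F(v) = f(u_i) for every v in N_i
    lam, V = np.linalg.eigh(L)
    U = V[:, lam <= omega]              # orthonormal basis of PW_omega(L)
    return U @ (U.T @ F)                # orthogonal projection f_0 of F


# Usage (illustrative): path graph on 6 vertices, two clusters, one sampled vertex each.
W = np.diag(np.ones(5), 1) + np.diag(np.ones(5), -1)
L = np.diag(W.sum(axis=1)) - W
f0 = first_approximation(L, [[0, 1, 2], [3, 4, 5]], [1, 4], np.arange(6.0), omega=1.5)
```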

The authors of [42] developed what can be called local weighted sampling. They also considered a finite unweighted graph G and its disjoint cover by connected subgraphs \(\{{\mathcal {N}}_{i}\}_{i\in {\mathcal {I}}}\). With every \({\mathcal {N}}_{i}\) they associated a non-negative function \(\varphi _{i}\) which is supported on \({\mathcal {N}}_{i}\) and satisfies \(\sum _{v\in {\mathcal {N}}_{i}}\varphi _{i}(v)=1\). Let \(D_{i}\) be the diameter of \({\mathcal {N}}_{i}\).

According to [42], if

then every \(f\in PW_{\omega }(L)\) can be uniquely reconstructed from the set of its "samples" \(\{\langle f, \varphi _{i}\rangle \}_{i\in {\mathcal {I}}}\). The reconstruction is given by an iterative procedure which requires knowledge of the eigenfunctions of the Laplacian L.

Remark 8.1

In fact, the correct estimate of the frequency interval is given not by the inequality (8.2) but by the following one

Indeed, the proof of Lemma 1 in [42], where condition (8.2) was obtained, relied on the incorrect formula

while the correct one is

(see our formulas (2.2) and (2.5)).

The results of [42] are the closest to our Theorem 4.7. Let’s compare our condition (4.13) with (8.3) in the case when every subgraph \({\mathcal {N}}_{i}\) is the unweighted complete graph \(K_{n}\) on n vertices. Note that the Laplacian of this graph has only two distinct eigenvalues: 0 (simple) and n (with multiplicity \(n-1\)). In this case (for averages over the subgraphs \({\mathcal {N}}_{i}\)) our interval (4.13) of recoverable frequencies is

while the interval which is given by (8.3) is only

since the diameter of \(K_{n}\) is 1. In the same situation, but for a signal sampled at randomly chosen vertices \(\{v_{i}\}\) with \(v_{i}\in {\mathcal {N}}_{i}\), our interval of recoverable frequencies \(\omega \) (see (4.19)) is \(0\le \omega <n/n=1,\) while (8.3) would give \(0\le \omega <1/2n\).
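The spectral fact about \(K_{n}\) used in this comparison can be verified directly: its Laplacian is \(L=nI-\mathbf{1}\mathbf{1}^{t}\), and \(\mathbf{1}\mathbf{1}^{t}\) has eigenvalue n on the constants and 0 on their orthogonal complement, so L has the simple eigenvalue 0 and the eigenvalue n with multiplicity \(n-1\). A short NumPy check follows; the helper name is hypothetical.

```python
import numpy as np


def complete_graph_laplacian(n):
    """Laplacian of the unweighted complete graph K_n, i.e. L = n*I - (all-ones matrix)."""
    W = np.ones((n, n)) - np.eye(n)     # every pair of distinct vertices is adjacent (diameter 1)
    return np.diag(W.sum(axis=1)) - W


# Eigenvalues in ascending order: approximately 0 once, then n repeated n - 1 times.
spectra = {n: np.linalg.eigvalsh(complete_graph_laplacian(n)) for n in (3, 5, 8)}
```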

8.2 Comparison with the Poincaré Inequality on Riemannian Manifolds

Here we use the same notation as in Sect. 3. Our Theorem 3.1 implies the following inequality

which looks essentially like the Poincaré inequality for compact Riemannian manifolds. Note that the Poincaré inequality on Riemannian manifolds is usually formulated for balls B(r) of a small radius r and has the form

However, our Poincaré-type inequality (8.4) is valid for any finite graph. The constants on the right-hand sides of (8.4) and (8.5) look very different. In this connection it should be mentioned that on domains in \({\mathbb {R}}^{n}\) (and on balls in Riemannian manifolds) the diameter of a domain is essentially reciprocal to the first eigenvalue (which is never zero) of the corresponding Dirichlet Laplacian L (here \(L u_{j}=\lambda _{j}u_{j}\), where \(u_{j}\) and \(\lambda _{j}\) are the eigenfunctions and eigenvalues, respectively). This shows that if a graph has the property that for every ball B(r) the following inequality holds

then our inequality (8.4) is analogous to the "regular" Poincaré inequality (8.5).

8.3 Other Poincaré-Type Inequalities

We keep the same notation as in Sects. 3 and 4. Theorems 4.1 and 4.4 readily yield the following Poincaré-type inequalities.

Corollary 8.1

For every function \(f\in \bigcap _{j\in J} \operatorname{Ker}\Psi _{j} \) one has

and

More generally, if \(J_{0}\subset J\), \(G_{0}=\bigcup _{j\in J_{0}} S_{j}\), and

then one has

and

where \(L_{G_{0}}\) is the Laplacian of the induced graph \(G_{0}\). Here

and

Note that when \(\{\Psi _{j}\}\) is a set of Dirac functions, similar inequalities played an important role in the sampling and interpolation theories on Riemannian manifolds in [22,23,24]. In the case of graphs they were recently explored in [43].

References

Anis, A., Gadde, A., Ortega, A.: Towards a sampling theorem for signals on arbitrary graphs. In: 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, pp. 3864–3868 (2014)

Chen, S., Varma, R., Sandryhaila, A., Kovacevic, J.: Discrete signal processing on graphs: sampling theory. IEEE Trans. Signal Process. 63(24), 6510–6523 (2015)

Cheng, C., Jiang, Y., Sun, Q.: Spatially distributed sampling and reconstruction. Appl. Comput. Harmon. Anal. 47(1), 109–148 (2019)

de Boor, C., Höllig, K., Riemenschneider, S.: Convergence of cardinal series. Proc. Am. Math. Soc. 98(3), 457–460 (1986)

Erb, W.: Graph signal interpolation with positive definite graph basis functions. arXiv preprint arXiv:1912.02069 (2019)

Erb, W.: Semi-supervised learning on graphs with feature-augmented graph basis functions. arXiv:2003.07646v1 [cs.LG] (2020)

Feichtinger, H., Pesenson, I.: Iterative recovery of band limited functions on manifolds. Contemp. Math. 137–153, (2004)

Feichtinger, H., Pesenson, I.: A reconstruction method for band-limited signals on the hyperbolic plane. Sampl. Theory Signal Image Process. 4(2), 107–119 (2005)

Fortunato, S.: Community detection in graphs. Phys. Rep. 486(3), 75–174 (2010)

Führ, H., Pesenson, I.: Poincaré and Plancherel-Polya inequalities in harmonic analysis on weighted combinatorial graphs. SIAM J. Discrete Math. 27(4), 2007–2028 (2013)

Haeseler, S., Keller, M., Lenz, D., Wojciechowski, R.: Laplacians on infinite graphs: Dirichlet and Neumann boundary conditions. J. Spectr. Theory 2(4), 397–432 (2012)

Huang, C., Zhang, Q., Huang, J., Yang, L.: Reconstruction of bandlimited graph signals from measurements. Digital Signal Process. 101, 102728 (2020)

Jorgensen, P.E.T., Pearse, E.P.J.: A discrete Gauss-Green identity for unbounded Laplace operators, and the transience of random walks. Israel J. Math. 196(1), 113–160 (2013)

Linderman, G.C., Steinerberger, S.: Numerical integration on graphs: where to sample and how to weigh. Math. Comp. 89(324), 1933–1952 (2020)

Kotzagiannidis, M.S., Dragotti, P.L.: Sampling and reconstruction of sparse signals on circulant graphs: an introduction to graph-FRI. Appl. Comput. Harmon. Anal. 47(3), 539–565 (2019)

Marques, A.G., Segarra, S., Leus, G., Ribeiro, A.: Sampling of graph signals with successive local aggregations. IEEE Trans. Signal Process. 64(7), 1832–1843 (2016)

Mohar, B.: Some applications of Laplace eigenvalues of graphs. In: Hahn, G., Sabidussi, G. (eds.) Graph Symmetry: Algebraic Methods and Applications (Proc. Montreal 1996). NATO Adv. Sci. Inst. Ser. C Math. Phys. Sci., vol. 497, pp. 225–275. Kluwer, Dordrecht (1997)

Mohar, B., Woess, W.: A survey on spectra of infinite graphs. Bull. London Math. Soc. 21(3), 209–234 (1989)

Narang, S.K., Gadde, A., Ortega, A.: Signal processing techniques for interpolation in graph structured data. In: 2013 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, pp. 5445–5449 (2013)

Ortega, A., Frossard, P., Kovacevic, J., Moura, J.M.F., Vandergheynst, P.: Graph Signal Processing: Overview, Challenges and Applications. In: Proceedings of the IEEE, pp. 808–828 (2018)

Perraudin, N., Paratte, J., Shuman, D.I., Kalofolias, V., Vandergheynst, P., Hammond, D.K.: GSPBOX: A toolbox for signal processing on graphs. https://lts2.epfl.ch/gsp/

Pesenson, I.: A sampling theorem on homogeneous manifolds. Trans. Am. Math. Soc. 352(9), 4257–4269 (2000)

Pesenson, I.: Sampling of band limited vectors. J. Fourier Anal. Appl. 7(1), 93–100 (2001)

Pesenson, I.: Poincaré-type inequalities and reconstruction of Paley-Wiener functions on manifolds. J. Geometric Anal. 4(1), 101–121 (2004)

Pesenson, I.: Sampling in Paley-Wiener spaces on combinatorial graphs. Trans. Am. Math. Soc. 360(10), 5603–5627 (2008)

Pesenson, I.Z.: Variational splines and Paley-Wiener spaces on combinatorial graphs. Constr. Approx. 29(1), 1–21 (2009)

Pesenson, I.Z., Pesenson, M.Z.: Sampling, filtering and sparse approximations on combinatorial graphs. J. Fourier Anal. Appl. 16(6), 921–942 (2010)

Pesenson, I.Z., Pesenson, M.Z., Führ, H.: Cubature formulas on combinatorial graphs. arXiv:1104.0963 (2011)

Pesenson, I.: Sampling solutions of Schrödinger equations on combinatorial graphs. arXiv:1502.07688v2 [math.SP] (2015)

Pesenson, I.Z.: Sampling by averages and average splines on Dirichlet spaces and on combinatorial graphs. arXiv:1901.08726v3 [math.FA] (2019)

Puy, G., Tremblay, N., Gribonval, R., Vandergheynst, P.: Random sampling of bandlimited signals on graphs. Appl. Comput. Harmon. Anal. 44(2), 446–475 (2018)

Schoenberg, I.J.: Notes on spline functions. III. On the convergence of the interpolating cardinal splines as their degree tends to infinity. Israel J. Math. 16, 87–93 (1973)

Shuman, D.I.: Localized Spectral Graph Filter Frames. arXiv:2006.11220v1 [eess.SP] (2020)

Shuman, D.I., Faraji, M.J., Vandergheynst, P.: A multiscale pyramid transform for graph signals. IEEE Trans. Signal Process. 64(8), 2119–2134 (2016)

Strichartz, R.S.: Half sampling on bipartite graphs. J. Fourier Anal. Appl. 22(5), 1157–1173 (2016)

Tanaka, Y., Eldar, Y.C., Ortega, A., Cheung, G.: Sampling Signals on Graphs. From Theory to Applications. arXiv:2003.03957v4 [eess.SP] (2020)

Tanaka, Y., Sakiyama, A.: M-channel oversampled graph filter banks. IEEE Trans. Signal Process. 62(14), 3578–3590 (2014)

Tremblay, N., Borgnat, P.: Subgraph-based filterbanks for graph signals. IEEE Trans. Signal Process. 64(15) (2016)

Tremblay, N., Amblard, P.O., Barthelme, S.: Graph sampling with determinantal processes. In: 2017 25th European Signal Processing Conference (EUSIPCO)

Tsitsvero, M., Barbarossa, S., Di Lorenzo, P.: Signals on graphs: uncertainty principle and sampling. IEEE Trans. Signal Process. 64(18), 4845–4860 (2016)

Wang, X., Liu, P., Gu, Y.: Local-set-based graph signal reconstruction. In: IEEE Transactions on Signal Processing (2015)

Wang, X., Chen, J., Gu, Y.: Local measurement and reconstruction for noisy bandlimited graph signals. Signal Process. 129, 119–129 (2016)

Ward, J.P., Narcowich, F.J., Ward, J.D.: Interpolating splines on graphs for data science applications. Appl. Comput. Harmon. Anal. 49(2), 540–557 (2020)

Yazaki, Y., Tanaka, Y., Chan, S.H.: Interpolation and denoising of graph signals using plug-and-play ADMM. In: ICASSP 2019, IEEE International Conference on Acoustics, Speech and Signal Processing (2019)

Acknowledgements

MZP was supported by the U.S. Department of Energy, Office of Science, Office of Basic Energy Sciences, under Award DE-SC0020383.


Pesenson, I.Z., Pesenson, M.Z.: Graph Signal Sampling and Interpolation Based on Clusters and Averages. J. Fourier Anal. Appl. 27, 39 (2021). https://doi.org/10.1007/s00041-021-09828-z


DOI: https://doi.org/10.1007/s00041-021-09828-z

Keywords

- Combinatorial graph

- combinatorial Laplace operator

- Poincaré-type inequality

- Paley-Wiener spaces

- Plancherel-Polya-type inequality

- splines

- interpolation