Abstract

We provide quantitative estimates for the supremum of the Hausdorff dimension of sets in the real line which avoid \(\varepsilon \)-approximations of arithmetic progressions. Some of these estimates are in terms of Szemerédi bounds. In particular, we answer a question of Fraser, Saito and Yu (IMRN 14:4419–4430, 2019) and considerably improve their bounds. We also show that Hausdorff dimension is equivalent to box or Assouad dimension for this problem, and obtain a lower bound for Fourier dimension.

1 Introduction

The relationship between the size of a set and the existence of arithmetic progressions contained in the set has long been a major problem. We write \(k-AP \) to mean an arithmetic progression of length k. In the discrete context, Szemerédi’s celebrated theorem [17] states that if \(A \subseteq \mathbb {N}\) has positive upper density then A contains arbitrarily long arithmetic progressions, that is, it contains a \(k-AP \) for arbitrarily large \(k \ge 3\). This can be restated as saying that if

$$\begin{aligned} r_k(N) := \max \{ \# A : A \subseteq \{1, \cdots , N\} \text { does not contain a } k-AP \}, \end{aligned}$$

then \(r_k(N)/N\rightarrow 0\) as \(N\rightarrow \infty \) for any \(k\ge 3\). Finding precise asymptotics for \(r_k\) remains a major open problem to this day. The best known upper bounds (valid for large N) are:

- \(r_3(N)/N \le (\log N)^{-1-c}\) for some \(c>0\) ([3], improving [2, 15]).

- \(r_4(N)/N \le (\log N)^{-c}\) for some absolute \(c>0\) ([9]).

- If \(k\ge 5\), then \(r_k(N)/N \le (\log \log N)^{-a_k}\), where \(a_k = 2^{-2^{k+9}}\) ([8]).

In the opposite direction, Behrend [1] showed that

$$\begin{aligned} r_3(N) \ge c N e^{-C\sqrt{\log N}}, \end{aligned}$$

where \(c,C>0\) are absolute constants. Note that, in particular, for all \(\varepsilon >0\), we have \(r_k(N)>N^{1-\varepsilon }\) if N is large enough. See [13] for a recent improvement to this lower bound for general values of \(k\ge 3\).
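For very small N the quantity \(r_k(N)\) can be computed by exhaustive search, which may help in getting a feel for the definition. The following is only an illustrative sketch: the function names `contains_k_ap` and `r_k` are ours, and the search is exponential in N, so it says nothing about the asymptotic bounds discussed above.

```python
from itertools import combinations


def contains_k_ap(points, k):
    """Return True if the integer set `points` contains a k-term AP (k >= 2, gap > 0)."""
    s = set(points)
    if not s:
        return False
    top = max(s)
    for a in s:
        # Starting from a, the gap of a k-AP inside s is at most (top - a) // (k - 1).
        for gap in range(1, (top - a) // (k - 1) + 1):
            if all(a + i * gap in s for i in range(k)):
                return True
    return False


def r_k(n, k):
    """Largest size of a subset of {1, ..., n} with no k-term AP (brute force)."""
    universe = range(1, n + 1)
    for size in range(n, 0, -1):
        for subset in combinations(universe, size):
            if not contains_k_ap(subset, k):
                return size
    return 0


if __name__ == "__main__":
    # First values of r_3(N) for N = 1, ..., 9.
    print([r_k(n, 3) for n in range(1, 10)])
```

For instance, the set \(\{1,2,4,5\}\) shows that \(r_3(5)\ge 4\), and the search confirms that no larger subset of \(\{1,\cdots ,5\}\) avoids \(3-APs \).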

In the continuous context, Keleti [10, 11] proved that there exists a compact set \(E\subset \mathbb {R}\) of Hausdorff dimension 1 that does not contain any \(3-AP \). Later, Yavicoli [19] obtained the stronger result that for any dimension function h(x) such that \(\frac{x}{h(x)}\rightarrow _{x \rightarrow 0^+} 0\), there exists a compact set of positive h-Hausdorff measure avoiding \(3-APs \). Hence, while in the discrete context the function \(r_3(N)\) distinguishes between sets that necessarily contain, or may fail to contain, \(3-APs \), no such function exists in the continuous context.

In [5], Fraser, Saito and Yu introduced a new related problem: how large can the Hausdorff dimension of a set avoiding approximate arithmetic progressions be? Given \(k \ge 3\) and \(\varepsilon \in (0,1)\), we say that a set \(E \subset \mathbb {R}\) \(\varepsilon \)-avoids \(k-APs \) if, for every \(k-AP \) P, one has

$$\begin{aligned} \sup _{p \in P} \inf _{x \in E} |x-p| \ge \varepsilon \lambda , \qquad \mathrm {(1.1)} \end{aligned}$$

where \(\lambda \) is the gap length of P. To be more precise, in [5] this is defined with strict inequality. In practice, this makes almost no difference, but the discussion in Sect. 2 below becomes simpler if we allow equality in (1.1).
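For a finite set E and one given progression, the avoidance condition can be tested directly. The sketch below reads the condition as: at least one point of P lies at distance at least \(\varepsilon \lambda \) from E (with equality allowed). The function names are hypothetical, and the code checks a single progression only, whereas the definition quantifies over all \(k-APs \).

```python
from fractions import Fraction


def approximation_error(E, start, gap, k):
    """Largest distance from a point of the k-AP {start + i*gap} to the finite set E."""
    progression = [start + i * gap for i in range(k)]
    return max(min(abs(x - p) for x in E) for p in progression)


def avoids_progression(E, start, gap, k, eps):
    """True if some point of the given k-AP is at distance >= eps * gap from E."""
    return approximation_error(E, start, gap, k) >= eps * gap


if __name__ == "__main__":
    E = [Fraction(0), Fraction(1), Fraction(5, 2)]
    # The 3-AP 0, 1, 2 is approximated by E up to error 1/2 (at the point 2).
    print(approximation_error(E, Fraction(0), Fraction(1), 3))
    print(avoids_progression(E, Fraction(0), Fraction(1), 3, Fraction(1, 4)))
```

Exact rational arithmetic is used so that the boundary case of equality is decided without rounding issues.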

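We define

$$\begin{aligned} d(k,\varepsilon ) := \sup \{ {{\,\mathrm{dim_H}\,}}(E) : E \subseteq \mathbb {R} \text { is bounded and } \varepsilon \text {-avoids } k-APs \}. \end{aligned}$$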

Because of \(\sigma \)-stability of Hausdorff dimension, it is equivalent to consider the supremum over (not necessarily bounded) sets that \(\varepsilon \)-avoid \(k-APs \). We state the definition in this way because we will at times consider the Assouad or box dimensions of E as well, which are usually defined only for bounded sets.

In [5], Fraser, Saito and Yu obtained the following upper and lower bounds for \(d(k,\varepsilon )\):

(In fact, they obtained the upper bound for Assouad dimension instead of Hausdorff dimension. While this is a priori stronger, we will later show that it is in fact equivalent.) In particular, in contrast to Keleti’s result, sets of full Hausdorff (or even Assouad) dimension necessarily contain arbitrarily good approximations to arithmetic progressions of any length, see [6]. Nevertheless, one might expect that, for each fixed k, \(d(k, \varepsilon )\rightarrow _{\varepsilon \rightarrow 0^+} 1\), but this does not follow from the above lower bound and was left as a question in [5]. In this paper we obtain new upper and lower bounds for \(d(k,\varepsilon )\) that considerably improve upon (1.2) and, in particular, show that indeed \(d(k, \varepsilon )\rightarrow _{\varepsilon \rightarrow 0^+} 1\).

Theorem 1.1

Fix \(k \in \mathbb {N}_{\ge 3}\).

- (a) For any \(\varepsilon \in (0,1/12)\),

$$\begin{aligned} d(k,\varepsilon ) \ge \frac{\log (r_k(\lfloor \frac{1}{12\varepsilon } \rfloor ))}{\log (12 \lfloor \frac{1}{12\varepsilon } \rfloor )}. \end{aligned}$$

- (b) For any \(\varepsilon \) such that \(1/\varepsilon > k\),

$$\begin{aligned} d(k,\varepsilon ) \le \frac{1}{2} \left( \frac{\log (r_k(\lfloor 1/\varepsilon +1\rfloor )+1)}{\log (\lfloor 1/\varepsilon +1\rfloor )}+1\right) . \end{aligned}$$

- (c) Let \(k \ge 3\) and \(\varepsilon \in (0,1/10)\). Then

$$\begin{aligned} d(k,\varepsilon ) \le \frac{\log ( \lceil 1/\varepsilon \rceil +1)}{\log ( \lceil 1/\varepsilon \rceil +1)-\log (1-1/k)} \le 1-\frac{c}{k |\log \varepsilon |}, \end{aligned}$$

where \(c>0\) is a universal constant.

We make some remarks on this statement.

- (1) A conceptual novelty of this work is that, even though there is no analog of Szemerédi’s Theorem for the presence of exact arithmetic progressions inside fractal sets, we show that Szemerédi bounds greatly influence the presence of approximate progressions in fractals. In order to construct large sets without progressions, the papers [10, 11, 19] rely on a type of construction in which patterns are “killed” at much later stages of the construction; i.e. they crucially exploit the existence of infinitely many scales in the real numbers. The property of uniformly avoiding progressions is scale-invariant in a sense that precludes such an approach (this is related to the discussion in Sect. 2) and may suggest why there is a connection to Szemerédi in this case.

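- (2) As we saw before, Behrend’s example shows that \(r_k(N) \ge r_3(N) \ge N^{1-\delta }\) for all \(\delta >0\) and all N large enough in terms of \(\delta \). Then (a) easily gives that \(\lim _{\varepsilon \rightarrow 0^+} d(k,\varepsilon )=1\): writing \(N=\lfloor \frac{1}{12\varepsilon } \rfloor \), the right-hand side of (a) is at least \((1-\delta )\log N/\log (12N)\), which tends to \(1-\delta \) as \(N\rightarrow \infty \).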

- (3) The two upper bounds we give are proved using completely different methods. The bound (b) is better asymptotically as \(\varepsilon \rightarrow 0^+\) (this follows from Szemerédi’s Theorem, i.e. \(r_k(N)/N\rightarrow 0\), and a short calculation). However, for moderate values of \(\varepsilon \) the bound (c) may be better, and in any case the bound (b) may be hard to estimate for specific values of \(\varepsilon \) (we note that the bounds on \(r_k\) discussed above are asymptotic) while (c) is completely explicit. This makes sense because as \(\varepsilon \rightarrow 0^+\) we are closer to the discrete setting while for “large” \(\varepsilon \) we are firmly in the “fractal” realm and avoiding arithmetic progressions can be seen as a sort of (multi)porosity. We note that the dependence in (c) on both \(\varepsilon \) and k is much better than that of the upper bound of (1.2).

- (4) We defined \(d(k,\varepsilon )\) using Hausdorff dimension. However, we show in Corollary 2.6 below that the value of \(d(k,\varepsilon )\) remains the same if Hausdorff dimension is replaced by box, packing or Assouad dimension; moreover, there is a compact set that attains the supremum in the definition of \(d(k,\varepsilon )\). Furthermore, for the lower bound (a), Hausdorff dimension can even be replaced by (the a priori smaller) Fourier dimension, see Proposition 3.2 below.
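Since the bound (c) is completely explicit, and (a) is explicit once a value of \(r_k\) is known, both are easy to evaluate numerically. The following sketch does this; the function names are ours, \(\varepsilon \) is passed as an exact fraction to avoid rounding in the floor and ceiling, and the value of \(r_k\) must be supplied by the caller (here we use the known small value \(r_3(9)=5\)).

```python
import math
from fractions import Fraction


def upper_bound_c(k, eps):
    """First expression in bound (c) of Theorem 1.1, for eps in (0, 1/10)."""
    m = math.ceil(1 / Fraction(eps)) + 1
    return math.log(m) / (math.log(m) - math.log(1 - 1 / k))


def lower_bound_a(eps, r_value):
    """Bound (a) of Theorem 1.1, given r_value = r_k(floor(1/(12*eps)))."""
    n = math.floor(1 / (12 * Fraction(eps)))
    return math.log(r_value) / math.log(12 * n)


if __name__ == "__main__":
    eps = Fraction(1, 108)  # so that floor(1/(12*eps)) = 9
    print("lower bound (a), k = 3:", lower_bound_a(eps, 5))
    print("upper bound (c), k = 3:", upper_bound_c(3, eps))
```

For such moderate values of \(\varepsilon \) the two bounds are far apart, which is consistent with the gap discussed in Sect. 4.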

After the first version of this paper appeared on the arXiv, we learned that Kota Saito independently and simultaneously established bounds very similar to those in (a), (b) from Theorem 1.1, using a related approach [14]. In fact, Saito proved versions of these bounds also in higher dimensions. He did not obtain bounds analogous to (c), nor any results about Fourier dimension or the behaviour described in Corollary 2.6.

2 Sets Avoiding Approximate Progressions and Galleries

Note that a set E \(\varepsilon \)-avoids \(k-APs \) if and only if \({\overline{E}}\) \(\varepsilon \)-avoids \(k-APs \). Since \({{\,\mathrm{dim_H}\,}}({\overline{E}})\ge {{\,\mathrm{dim_H}\,}}(E)\), we can therefore consider only closed sets in the definition of \(d(k,\varepsilon )\).

We write \(\mathcal {F}\) to denote the set consisting of the non-empty closed subsets of [0, 1]. Endowed with the Hausdorff metric D, the set \(\mathcal {F}\) is a complete metric space. We recall some concepts introduced by Furstenberg [7].

Definition 2.1

Let \(F \in \mathcal {F}\). A set \(F' \in \mathcal {F}\) is a mini-set of F, if for some \(r\ge 1\) and \(u \in \mathbb {R}\), we have \(F' \subset rF+u\).

Definition 2.2

A family \(\mathcal {G} \subset \mathcal {F}\) is called a gallery if it satisfies simultaneously:

- \(\mathcal {G}\) is closed in \((\mathcal {F}, D)\),

- for each \(E \in \mathcal {G}\), every mini-set of E is also in \(\mathcal {G}\).

In [7, Theorem 5.1], Furstenberg established the following dimensional homogeneity property of galleries.

Theorem 2.3

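Let \(\mathcal {G}\) be a gallery. Let

$$\begin{aligned} \Delta (\mathcal {G}) := \lim _{k \rightarrow \infty } \sup _{A \in \mathcal {G}} \frac{\log \# \{ I \in \mathcal {D}_k : I \cap A \ne \emptyset \}}{k}, \end{aligned}$$

where \(\mathcal {D}_k\) denotes the collection of half-open dyadic intervals of side length \(2^{-k}\) and \(\log \) is the base-2 logarithm. Then there exists a set \(A\in \mathcal {G}\) such that

$$\begin{aligned} {{\,\mathrm{dim_H}\,}}(A) = \Delta (\mathcal {G}). \end{aligned}$$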

We note that the set A in the previous theorem satisfies an ergodic-theoretic version of self-similarity.

To put this result into context, we recall the definition of Assouad dimension:

Definition 2.4

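Let \(E \subseteq \mathbb {R}\) be a bounded set. For \(r>0\), let \(N_r(E)\) denote the least number of open balls of radius less than or equal to r with which it is possible to cover the set E. We define the Assouad dimension of a (possibly unbounded) set \(E \subseteq \mathbb {R}\) as

$$\begin{aligned} {{\,\mathrm{dim_A}\,}}(E) := \inf \left\{ s \ge 0 : \exists \, C>0 \text { such that } N_r(B(x,R)\cap E) \le C \left( \frac{R}{r}\right) ^s \text { for all } x \in E \text { and } 0<r<R \right\} . \end{aligned}$$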

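It is easy to see that \({{\,\mathrm{dim_H}\,}}(X)\le {{\,\mathrm{dim_A}\,}}(X)\le \Delta (\mathcal {G})\) for any \(X\in \mathcal {G}\), and therefore Furstenberg’s Theorem implies that, for any gallery \(\mathcal {G}\), the suprema

$$\begin{aligned} \sup _{X \in \mathcal {G}} {{\,\mathrm{dim_H}\,}}(X) \quad \text {and} \quad \sup _{X \in \mathcal {G}} {{\,\mathrm{dim_A}\,}}(X) \end{aligned}$$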

coincide with each other and with \(\Delta (\mathcal {G})\) and, moreover, they are attained. This implies that the analogous suprema for lower box, upper box and packing dimensions also coincide with \(\Delta (\mathcal {G})\).

Lemma 2.5

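Let \(\varepsilon >0\) and \(k \in \mathbb {N}_{\ge 3}\). Then, the set

$$\begin{aligned} \mathcal {G} := \{ E \in \mathcal {F} : E \ \varepsilon \text {-avoids } k-APs \} \end{aligned}$$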

is a gallery.

Proof

If \(E \in \mathcal {G}\) and A is a mini-set of E, by invariance of the \(\varepsilon \)-avoidance of \(k-APs \) under homothetic functions, we have \(A \in \mathcal {G}\).

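Suppose now \(E_n \in \mathcal {G}\), \(\delta _n:=D(E_n,E) \rightarrow _{n \rightarrow \infty } 0\). We want to see that \(E\in \mathcal {G}\). Let P be a \(k-AP \) of gap \(\lambda \). Since for every \(x \in E\) there exists \(x_n \in E_n\) such that \(|x-x_n|\le \delta _n\), for each point p we have

$$\begin{aligned} \inf _{y \in E_n} |y-p| \le \inf _{x \in E} |x-p| + \delta _n. \end{aligned}$$

Hence

$$\begin{aligned} \sup _{p \in P} \inf _{y \in E_n} |y-p| \le \sup _{p \in P} \inf _{x \in E} |x-p| + \delta _n. \end{aligned}$$

So, since \(E_n \in \mathcal {G}\),

$$\begin{aligned} \varepsilon \lambda \le \sup _{p \in P} \inf _{x \in E} |x-p| + \delta _n. \end{aligned}$$

Since \(\delta _n\rightarrow 0\), we have

$$\begin{aligned} \sup _{p \in P} \inf _{x \in E} |x-p| \ge \varepsilon \lambda , \end{aligned}$$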

as desired. \(\square \)

Combining this fact with Theorem 2.3 and the remark afterward we get:

Corollary 2.6

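For any \(k\ge 3\) and \(\varepsilon >0\),

$$\begin{aligned} d(k,\varepsilon ) = \sup \{ {{\,\mathrm{dim_A}\,}}(E) : E \subseteq \mathbb {R} \text { is bounded and } \varepsilon \text {-avoids } k-APs \}, \end{aligned}$$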

and moreover the supremum is realized.

Here we are using that, since scaling and translation do not change the Hausdorff or Assouad dimensions or the property of \(\varepsilon \)-avoiding \(k-APs \), there is no loss of generality in restricting to subsets of the unit interval in the above corollary (so that Theorem 2.3 is indeed applicable).

3 Proof of Theorem 1.1

3.1 Proof of the Lower Bound (a)

We prove the lower bound in Theorem 1.1, which we repeat for the reader’s convenience:

Proposition 3.1

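Let \(k \in \mathbb {N}_{\ge 3}\) and \(\varepsilon \in (0,1/12]\). We have

$$\begin{aligned} d(k,\varepsilon ) \ge \frac{\log (r_k(\lfloor \frac{1}{12\varepsilon } \rfloor ))}{\log (12 \lfloor \frac{1}{12\varepsilon } \rfloor )}. \end{aligned}$$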

Proof

Given \(\varepsilon \in (0,\frac{1}{12}]\) there exists \(N \in \mathbb {N}\) such that \(\varepsilon _{N+1}<\varepsilon \le \varepsilon _{N}\) where we define \(\varepsilon _N:=\frac{1}{12N}\); i.e. \(N:=\lfloor \frac{1}{12\varepsilon } \rfloor \).

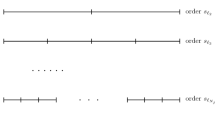

By definition of \(r_k(N)\), we can take \(A_N \subseteq \{1, \cdots , N\}\) which does not contain a \(k-AP \) and \(\# A_N= r_k(N)\). We will construct a set \(E_N\) \(\varepsilon _N\)-avoiding \(k-APs \) (in particular, \(\varepsilon \)-avoiding \(k-APs \)) with \({{\,\mathrm{dim_H}\,}}(E_N)=\frac{\log (r_k(N))}{\log (12N)}\).

The set \(E_N\) is defined as the self-similar attractor for the IFS \(\{f_j : \ j \in A_N\}\), where

$$\begin{aligned} f_j(x) := \frac{x+6j}{12N}. \end{aligned}$$

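In other words, since \(f_j([0,1])\subset [0,1]\) for all j, the set \(E_N\) is given by

$$\begin{aligned} E_N = \bigcap _{\ell =1}^{\infty } \ \bigcup _{i_1, \ldots , i_\ell \in A_N} f_{i_1}\cdots f_{i_\ell }([0,1]). \end{aligned}$$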

We call the intervals \(f_{i_1}\cdots f_{i_\ell }([0,1])\) construction intervals of level \(\ell \).

Clearly \({{\,\mathrm{dim_H}\,}}(E_N)=\frac{\log (\# A_N)}{\log (12N)}=\frac{\log (r_k(N))}{\log (12N)}\), see [4, Chapter 9]. To complete the proof, we will show that \(E_N\) \(\varepsilon _N\)-avoids \(k-APs \).
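The construction intervals can be generated explicitly for small parameters. The sketch below assumes that the maps are \(f_j(x)=(x+6j)/(12N)\); this specific form is an assumption on our part, suggested by the contraction ratio \(1/(12N)\) in the dimension formula and by the set \(6A_N\subset \mathbb {Z}/(12N\mathbb {Z})\) used in Sect. 3.2. The function names are hypothetical.

```python
import math
from fractions import Fraction


def construction_intervals(A, N, level):
    """Left endpoints and common length of the level-`level` construction intervals,
    for the ASSUMED maps f_j(x) = (x + 6j) / (12N), j in A."""
    ratio = Fraction(1, 12 * N)
    endpoints = [Fraction(0)]  # level 0: the single interval [0, 1]
    for _ in range(level):
        # Applying every f_j to every current interval yields the next level.
        endpoints = [ratio * (z + 6 * j) for z in endpoints for j in A]
    return sorted(endpoints), ratio ** level


def similarity_dimension(A, N):
    """log(#A) / log(12N), the dimension of the attractor under the open set condition."""
    return math.log(len(A)) / math.log(12 * N)


if __name__ == "__main__":
    A, N = [1, 2, 4, 5], 5  # a 3-AP-free subset of {1, ..., 5} of size r_3(5) = 4
    endpoints, length = construction_intervals(A, N, 2)
    print(len(endpoints), length, similarity_dimension(A, N))
```

In this example the level-2 intervals are pairwise disjoint and contained in [0, 1], so the similarity dimension \(\log 4/\log 60\) is the Hausdorff dimension of the attractor.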

We proceed by contradiction. Suppose there exist \({\tilde{x}}_1< \cdots <{\tilde{x}}_k\) in \(E_N\) and a \(k-AP \), say \(x_1< \cdots <x_k\), such that \(|x_i-{\tilde{x}}_i|< \varepsilon _N \lambda \) for all \(i \in \{1, \cdots , k\}\), where \(\lambda =\frac{x_{i+2}-x_i}{2}\) (for \(i = 1, \dots , k-2\)) is the gap length of the \(k-AP \).

There exists a minimal construction interval I containing \({\tilde{x}}_1\) and \({\tilde{x}}_k\) (so \({\tilde{x}}_i \in I\) for every i); let \(\ell \) be its level and \(z_I\) its left endpoint. The length of the interval I is \(|I|=(12N)^{-\ell }\). For each \(i \in \{1, \cdots , k\}\), we write

where \(\delta _i \in [0, \frac{1}{12N}]\), \(a_i\in A_N\) for every i, \(a_1 \le a_2 \le \cdots \le a_k\), and not all of the \(a_i\) are equal (because we have taken I minimal). Our goal is to show that the \(a_i\) form an arithmetic progression. We write

Since

we have that

On the other hand, for \(i=1,\ldots ,k-2\),

where we define \({\widetilde{\varepsilon }}_i:=\frac{\varepsilon _{x_i} +\varepsilon _{x_{i+2}}}{2}-\varepsilon _{x_{i+1}}\). We deduce that

Hence

Now the left-hand side belongs to \(\frac{1}{2}\mathbb {Z}\). But using that \(\varepsilon _{x_i} \in (-\varepsilon _N, \varepsilon _N)\), \(\delta _i \in [0, \frac{1}{12N}]\), the definition of \(\varepsilon _N\) and (3.1), we see that the right-hand side above lies in \((-\frac{1}{2}, \frac{1}{2})\), and therefore must vanish. Since we had already observed that the \(a_i\) are not all equal, we conclude that the \(a_i\) form an arithmetic progression. This contradicts the definition of \(A_N\), finishing the proof. \(\square \)

3.2 Lower Bound on the Fourier Dimension

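We now use the approach of [16] to adapt the previous construction to construct a set of large Fourier dimension that \(\varepsilon \)-avoids \(k-APs \). We begin by recalling the definition of Fourier dimension. Given a Borel set \(A\subset \mathbb {R}^d\), let \(\mathcal {P}_A\) denote the family of all Borel probability measures \(\mu \) on \(\mathbb {R}^d\) with \(\mu (A)=1\). The Fourier dimension is defined as

$$\begin{aligned} {{\,\mathrm{dim_F}\,}}(A) := \sup \left\{ s \in [0,d] : \exists \, \mu \in \mathcal {P}_A, \ C>0 \text { such that } |{\widehat{\mu }}(\xi )| \le C |\xi |^{-s/2} \text { for all } \xi \ne 0 \right\} , \end{aligned}$$

where \({\widehat{\mu }}\) denotes the Fourier transform of \(\mu \).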

It is well known that \({{\,\mathrm{dim_F}\,}}(A)\le {{\,\mathrm{dim_H}\,}}(A)\), with strict inequality possible (and frequent). Sets for which \({{\,\mathrm{dim_F}\,}}(A)={{\,\mathrm{dim_H}\,}}(A)\) are called Salem sets and while many random sets are known to be Salem, few deterministic examples exist. See [12, §12.17] for more details on Fourier dimension and Salem sets.

Proposition 3.2

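Let \(k \in \mathbb {N}_{\ge 3}\) and \(\varepsilon \in (0,1/12]\). Then there exists a compact Salem set E that \(\varepsilon \)-avoids \(k-APs \) with

$$\begin{aligned} {{\,\mathrm{dim_F}\,}}(E) = {{\,\mathrm{dim_H}\,}}(E) = \frac{\log (r_k(\lfloor \frac{1}{12\varepsilon } \rfloor ))}{\log (12 \lfloor \frac{1}{12\varepsilon } \rfloor )}. \end{aligned}$$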

Proof

The construction is similar to that in the previous section, but at each level and location in the construction we rotate the set \(6 A_N\) randomly on the cyclic group \(\mathbb {Z}/(12 N \mathbb {Z})\), with all the random choices independent of each other. To be more precise, let \(N=\lfloor \frac{1}{12\varepsilon } \rfloor \) and let \(A_N\subset \{1,\ldots ,N\}\) be a set of size \(r_k(N)\) avoiding \(k-APs \), just as above. Write \(\mathcal {I}_{12N}\) for the collection of (12N)-adic intervals in [0, 1], and let \(\{ X_I, I\in \mathcal {I}_{12N}\}\) be IID random variables, uniform in \(\{0,1,\ldots ,12N-1\}\). Set

$$\begin{aligned} B_{N,I} := (6A_N + X_I) \bmod 12N. \end{aligned}$$

Note that \((B_{N,I})_{I}\) are IID random subsets of \(\{0,1,\ldots ,12N-1\}\). It is critical for us that \(B_{N,I}\) does not contain any \(k-APs \), which holds since \(A_N\) avoids \(k-APs \) and, when \(B_{N,I}\) wraps around 12N, the gap in the middle prevents the existence of even \(3-APs \) in \(B_{N,I}\) that are not translations of corresponding progressions in \(6 A_N\).
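The claim that rotating \(6A_N\) on the cyclic group does not create progressions can be checked exhaustively for small examples. The sketch below takes \(k=3\) and assumes that the rotated set is \((6A_N + x) \bmod 12N\), viewed as a subset of \(\{0,\ldots ,12N-1\}\); the function names are ours.

```python
def has_three_term_ap(points):
    """True if the integer set contains x < y < z with z - y = y - x."""
    ordered = sorted(set(points))
    present = set(ordered)
    return any(2 * y - x in present
               for i, x in enumerate(ordered)
               for y in ordered[i + 1:])


def rotated_sets(A, N):
    """Yield all rotations (6A + shift) mod 12N, as subsets of {0, ..., 12N - 1}."""
    modulus = 12 * N
    for shift in range(modulus):
        yield shift, {(6 * a + shift) % modulus for a in A}


if __name__ == "__main__":
    A, N = {1, 2, 4, 5}, 5  # 3-AP-free subset of {1, ..., 5}
    bad = [shift for shift, B in rotated_sets(A, N) if has_three_term_ap(B)]
    print("rotations containing a 3-AP:", bad)
```

The reason no rotation fails is the one given in the text: \(6A_N\) occupies an arc of length less than 6N, so when the rotated set wraps around, its two pieces are separated by a gap longer than the diameter of either piece.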

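Now starting with \(I=[0,1]\), we inductively replace each interval \(I=[z_I,z_I+(12N)^{-\ell }]\in \mathcal {I}_{12N}\) by the union of the intervals

$$\begin{aligned} \left[ z_I + j (12N)^{-\ell -1}, \ z_I + (j+1) (12N)^{-\ell -1} \right] , \quad j \in B_{N,I}. \end{aligned}$$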

Let \(E_\ell \) be the union of all the intervals of length \((12N)^{-\ell }\) generated in this way, and define \(E=\cap _\ell E_\ell \). It is easy to check that \({{\,\mathrm{dim_H}\,}}(E)=\log |A_N|/\log (12 N)\); indeed, E is even Ahlfors-regular. On the other hand, the randomness of the construction (more precisely, the independence of the \(X_I\) together with the fact that each element of \(\{0,\ldots , 12N-1\}\) has the same probability of belonging to \(B_{N,I}\)) ensures that E is a Salem set, see [16, Theorem 2.1].

Finally, the same argument as in the proof of Proposition 3.1 shows that E \(\varepsilon \)-avoids \(k-APs \). \(\square \)

3.3 Proof of the Upper Bound (b)

We now prove the upper bound (b) from Theorem 1.1:

Proposition 3.3

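For any \(\varepsilon \) such that \(1/\varepsilon > k\),

$$\begin{aligned} d(k,\varepsilon ) \le \frac{1}{2} \left( \frac{\log (r_k(\lfloor 1/\varepsilon +1\rfloor )+1)}{\log (\lfloor 1/\varepsilon +1\rfloor )}+1\right) . \end{aligned}$$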

We start with a lemma in the discrete context, which is related to (but simpler than) Varnavides’ Theorem (see e.g. [18, Theorem 10.9]); it allows us to find arithmetic progressions with large gaps.

Lemma 3.4

Fix \(k,\lambda ,m \in \mathbb {N}\) such that \(k<m\). For every subset \(A \subseteq \{1, \cdots , \lambda m\}\) such that \(\# A\ge \lambda (r_k(m)+1)\), we have that A contains an arithmetic progression of length k and gap \(\ge \lambda \).

Proof

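We split \(\{1, \cdots , m\lambda \}\) into \(\lambda \) disjoint arithmetic progressions of length m:

$$\begin{aligned} P_j := \{ j, \ j+\lambda , \ j+2\lambda , \ \cdots , \ j+(m-1)\lambda \}, \quad j \in \{1, \cdots , \lambda \}. \end{aligned}$$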

Since by hypothesis \(\# (A\cap \{1, \cdots , m\lambda \}) \ge \lambda (r_k(m)+1)\), there exists j such that \(\# (A\cap P_j) \ge r_k(m)+1\). Then, by definition of \(r_k(m)\), the set \(A\cap P_j\) contains an arithmetic progression of length k. So, A contains an arithmetic progression of length k and gap \(\ge \lambda \). \(\square \)
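The pigeonhole argument in this proof is constructive and can be sketched in a few lines: split into residue classes modulo \(\lambda \) and search each class for a progression. The function name is ours and the inner search is brute force. In the example we use \(k=3\), \(m=9\), \(\lambda =2\) and the known value \(r_3(9)=5\), so any \(A\subseteq \{1,\cdots ,18\}\) with at least 12 elements qualifies.

```python
from itertools import combinations


def find_ap_with_large_gap(A, k, lam, m):
    """Search each class P_j = {j, j + lam, ..., j + (m-1)*lam} for a k-AP inside A.

    Any progression found has gap equal to a positive multiple of lam. Requires k >= 2.
    """
    A = set(A)
    for j in range(1, lam + 1):
        # Positions t in {0, ..., m-1} such that j + t*lam belongs to A.
        positions = [t for t in range(m) if j + t * lam in A]
        pos_set = set(positions)
        for t0, t1 in combinations(positions, 2):
            step = t1 - t0
            if all(t0 + i * step in pos_set for i in range(k)):
                return [j + (t0 + i * step) * lam for i in range(k)]
    return None


if __name__ == "__main__":
    A = set(range(1, 19)) - {3, 6, 9, 12, 15, 18}  # 12 elements of {1, ..., 18}
    print(find_ap_with_large_gap(A, k=3, lam=2, m=9))
```

Lemma 3.4 guarantees that the search succeeds whenever \(\# A\ge \lambda (r_k(m)+1)\); for smaller sets the function may return `None`.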

Proof of Proposition 3.3

Pick m such that \(1/m < \varepsilon \le 1/(m-1)\). Let \(E \subseteq \mathbb {R}\) be a bounded set that \(\varepsilon \)-avoids \(k-APs \). Since the claim is invariant under homotheties, we may assume \(E \subseteq [0,1]\). We will get an upper bound for the Minkowski dimension of E, and so also for the Hausdorff dimension. For this, we split the interval [0, 1] into N-adic intervals, where \(N=m^2\), and count the number of subintervals of the next level intersecting E.

Claim: For every j, and for each N-adic interval I of length \(N^{-j}\), the number of N-adic subintervals of I of length \(N^{-j-1}\) intersecting E is \(<m(r_k(m)+1)\).

Assuming the claim, a standard argument gives the desired upper bound for the Minkowski dimension of E.
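For the reader's convenience, the standard argument can be sketched as follows. Here \(M_j\) is our notation (not used elsewhere) for the number of N-adic intervals of length \(N^{-j}\) intersecting E. Iterating the claim gives \(M_j \le (m(r_k(m)+1))^j\), and since \(N=m^2\),

$$\begin{aligned} \limsup _{j \rightarrow \infty } \frac{\log M_j}{\log N^{j}} \le \frac{\log \left( m(r_k(m)+1)\right) }{\log (m^2)} = \frac{1}{2}\left( \frac{\log (r_k(m)+1)}{\log m}+1\right) . \end{aligned}$$

The left-hand side is the upper Minkowski dimension of E. Finally, \(1/m<\varepsilon \le 1/(m-1)\) means \(1/\varepsilon < m \le 1/\varepsilon +1\), that is, \(m=\lfloor 1/\varepsilon +1\rfloor \), which gives the bound in (b).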

We prove the claim by contradiction. Suppose I is an interval for which the claim fails. Let \(\mathcal {L}\) denote the set of left endpoints of the \(N^{-j-1}\)-sub-intervals of I intersecting E. Then \(\mathcal {L}\) can be naturally identified (up to homothety) with a subset \(A \subseteq \{1, \cdots , N\}\) with \(\# A \ge m(r_k(m)+1)\). Then, by Lemma 3.4 applied with \(\lambda =m\) (note that \(k<m\) because \(m>1/\varepsilon >k\)), the set A contains an arithmetic progression of length k and gap \(\ge m\). So, \(\mathcal {L}\) contains an arithmetic progression P of length k and gap length equal to \(\text {gap}(P)\ge m N^{-(j+1)}\).

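We conclude that

$$\begin{aligned} \sup _{p \in P} \inf _{x \in E} |x-p| \le N^{-(j+1)} \le \frac{\text {gap}(P)}{m} < \varepsilon \, \text {gap}(P), \end{aligned}$$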

which is a contradiction, because E \(\varepsilon \)-avoids \(k-APs \). \(\square \)

3.4 Proof of the Upper Bound (c)

Finally, we prove the upper bound (c) in Theorem 1.1, which again we repeat for convenience:

Proposition 3.5

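Let \(k \ge 3\) and \(\varepsilon \in (0,1/10)\). Then

$$\begin{aligned} d(k,\varepsilon ) \le \frac{\log ( \lceil 1/\varepsilon \rceil +1)}{\log ( \lceil 1/\varepsilon \rceil +1)-\log (1-1/k)} \le 1-\frac{c}{k |\log \varepsilon |}, \end{aligned}$$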

where \(c>0\) is a universal constant.

Proof

Fix \(k \ge 3\) and \(\varepsilon \in (0,\frac{1}{10})\) which we may assume for now to be the reciprocal of an integer, \(\varepsilon =1/m\). We find an upper bound for the Assouad dimension of a bounded set E which \(\varepsilon \)-avoids \(k-APs \). This requires estimating the cardinality of efficient r-covers of an R-ball centred in E for small scales \(0<r<R\). To this end, fix \(x \in E\) and \(0<r<R\), assuming without loss of generality that \(r \le \varepsilon R/k\). Consider the interval \(B(x,R):=[x-R,x+R)\) and express it as the union of \(\frac{k}{\varepsilon }\) intervals of common length \(\frac{2 \varepsilon R}{k}\) as follows:

$$\begin{aligned} B(x,R) = \bigcup _{i=0}^{\frac{k}{\varepsilon }-1} \left[ x-R+\frac{2\varepsilon R}{k}\, i, \ x-R+\frac{2\varepsilon R}{k}\, (i+1) \right) . \end{aligned}$$

We partition the set of indices \(\mathcal {I} = \{0, \dots , \frac{k}{\varepsilon }-1\}\) into sets \(\mathcal {I}_j = \{i \in \mathcal {I} : i \equiv j \pmod {1/\varepsilon }\}\) for \(j \in \{0, 1, \dots , \frac{1}{\varepsilon } - 1\}\). Note that each partition element \(\mathcal {I}_j\) contains k indices and the midpoints of the intervals with labels in the same partition element form a \(k-AP \) with gap length \(\frac{2R}{k}\): consecutive indices in \(\mathcal {I}_j\) differ by \(\frac{1}{\varepsilon }\) and each interval has length \(\frac{2\varepsilon R}{k}\).
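The grid and its partition into residue classes can be made concrete with exact arithmetic. This is only an illustrative sketch with a hypothetical function name; it takes \(\varepsilon =1/m\) and returns, for each class \(\mathcal {I}_j\), the midpoints of the corresponding intervals.

```python
from fractions import Fraction


def midpoint_progressions(x, R, k, m):
    """Midpoints of the k*m grid intervals of [x - R, x + R), grouped by index mod m.

    Here eps = 1/m, so each grid interval has length 2*eps*R/k = 2R/(k*m).
    """
    length = Fraction(2 * R, k * m)
    classes = []
    for j in range(m):
        indices = range(j, k * m, m)  # the class I_j, which has k elements
        classes.append([x - R + length * i + length / 2 for i in indices])
    return classes


if __name__ == "__main__":
    k, m = 3, 11  # eps = 1/11 < 1/10
    classes = midpoint_progressions(Fraction(0), Fraction(1), k, m)
    print(classes[0])  # a 3-AP with gap 2R/k = 2/3
```

Each midpoint is within half an interval length, \(\varepsilon R/k\), of every point of its interval, which is smaller than \(\varepsilon \) times the gap \(2R/k\). This is why E must miss at least one interval in every class.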

Since E \(\varepsilon \)-avoids \(k-APs \), for each j at least one of the intervals indexed by an element of \(\mathcal {I}_j\) must not intersect E. Consider the original interval B(x, R) with these non-intersecting intervals removed and express it as a finite union of pairwise disjoint half open intervals given by the connected components of B(x, R) once the non-intersecting intervals have been removed. Note the number of such intervals could be one if the non-intersecting intervals lie next to each other and the number of intervals is at most \(\frac{1}{\varepsilon }+1\).

We now proceed iteratively, repeating the above process within each of the pairwise disjoint intervals intersecting E formed at the previous stage of the construction. If an interval has length less than or equal to r, then we do not iterate the procedure inside that interval. This means the procedure terminates in finitely many steps (once all intervals under consideration have length less than or equal to r). The intervals which remain provide an r-cover of \(B(x,R) \cap E\) and therefore bounding the number of such intervals gives an upper bound for the Assouad (and thus Hausdorff) dimension of E. This number depends on the relative position of the non-intersecting intervals at each stage in the iterative procedure and we need to understand the ‘worst case’. Here it is convenient to consider a slightly more general problem where the nested intervals do not lie on a grid.

Given an interval J and a finite collection of (at most \(\frac{1}{\varepsilon }+1\)) pairwise disjoint subintervals \(J_i\), let \(s \in [0,1]\) be the unique solution of

$$\begin{aligned} \sum _i \left( \frac{|J_i|}{|J|}\right) ^s = 1, \end{aligned}$$

and let \(p_i\) be the weights \(p_i = \left( \frac{|J_i|}{|J|}\right) ^s\). The value s may be expressed as a continuous function with finitely many variables \(\left\{ \frac{|J_i|}{|J|}\right\} _i\) on a compact domain. We define \(s_{\max }\) as the maximum possible value of s given the constraint

$$\begin{aligned} \sum _i |J_i| \le \left( 1-\frac{1}{k}\right) |J|, \qquad \mathrm {(3.2)} \end{aligned}$$

which is well-defined, and attained, by the extreme value theorem.

Define a probability measure \(\mu \) on the collection of intervals we are trying to count by starting with measure 1 uniformly distributed on \(J = B(x,R)\) and then subdividing it across the intervals \(J_i\) formed in the iterative construction subject to the weights \(p_i\). Write

where the interval \(J_{i_{\ell }}\) is the interval containing I at the \(\ell \)-th stage in the iterative procedure, noting that

Writing \(p_{i_l}\) for the weight associated with \(J_{i_l}\),

Therefore, writing N for the total number of intervals I,

and

proving \(\dim _A E \le s_{\max }\). It remains to estimate \(s_{\max }\) in terms of k and \(\varepsilon \).

We claim that s is maximised subject to (3.2) by choosing the largest number of intervals possible (i.e.: \(\frac{1}{\varepsilon }+1\)) and, moreover, choosing them to have equal length

$$\begin{aligned} |J_i| = \frac{(1-1/k)\, |J|}{\frac{1}{\varepsilon }+1} \end{aligned}$$

for all i. This yields

$$\begin{aligned} s_{\max } = \frac{\log (\frac{1}{\varepsilon }+1)}{\log (\frac{1}{\varepsilon }+1)-\log (1-1/k)} \le 1-\frac{c}{k |\log \varepsilon |}, \end{aligned}$$

as required. Recall that the maximum exists by compactness. Observe that s depends only on the lengths of the intervals \(J_i\) and on the number of them. If we choose fewer than the maximal number of intervals, then s can always be increased by splitting an interval into two pieces, using the general inequality \((a+b)^s<a^s+b^s\) for \(a,b,s \in (0,1)\) and the fact that (3.2) forces \(s_{\max } < 1\). From there, a simple optimisation argument yields that s is maximised when all the intervals have the same length. Indeed, if there were two intervals with distinct lengths \(a<b\), then averaging them to form two intervals of length \((a+b)/2\) increases s, using the general inequality \(a^s+b^s < 2((a+b)/2)^s\) for all \( s \in (0,1)\).

We have proved the result in the case where \(\varepsilon \) is the reciprocal of an integer. If \(\varepsilon \) is not the reciprocal of an integer, then we replace it with \(\varepsilon ' = \frac{1}{\lceil \frac{1}{\varepsilon } \rceil }\le \varepsilon \), which is the reciprocal of an integer. Since E also \(\varepsilon '\)-avoids \(k-APs \), the general result follows by applying the integer case established above. \(\square \)
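The optimisation step can be illustrated numerically. The sketch below solves \(\sum _i \rho _i^s=1\) by bisection, where \(\rho _i\) stands for the relative lengths \(|J_i|/|J|\) (our notation), and compares the equal-length configuration with two competitors of the same or smaller total length. The function name and the sample parameters \(k=3\), \(\varepsilon =1/20\) are ours.

```python
import math


def similarity_exponent(ratios, tol=1e-12):
    """Solve sum(r**s for r in ratios) == 1 for s in [0, 1] by bisection.

    Assumes at least two ratios, each in (0, 1), with sum(ratios) <= 1.
    """
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if sum(r ** mid for r in ratios) > 1:
            lo = mid  # the sum is decreasing in s, so the root lies to the right
        else:
            hi = mid
    return (lo + hi) / 2


if __name__ == "__main__":
    k, m = 3, 20                  # eps = 1/20
    n, total = m + 1, 1 - 1 / k   # maximal number of intervals, total relative length
    r = total / n
    equal = [r] * n
    unequal = [r / 2, 3 * r / 2] + [r] * (n - 2)  # same total, two lengths perturbed
    fewer = [total / (n - 1)] * (n - 1)           # one interval fewer
    for name, cfg in [("equal", equal), ("unequal", unequal), ("fewer", fewer)]:
        print(name, similarity_exponent(cfg))
    print("closed form", math.log(n) / (math.log(n) - math.log(total)))
```

In this example the equal-length configuration with the maximal number of intervals gives the largest exponent, and it agrees with the closed form up to the bisection tolerance.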

4 Open Questions

There is still a gap between the lower and upper bounds provided by Theorem 1.1, even though both bounds (a) and (b) are closely connected to Szemerédi-type bounds in the discrete context.

Question 4.1

For a fixed \(k \ge 3\), is \(d(k,\varepsilon ) \sim \frac{\log (r_k(\lfloor \frac{1}{\varepsilon } \rfloor ))}{\log (\lfloor \frac{1}{\varepsilon } \rfloor )}\) as \(\varepsilon \rightarrow 0\)?

We have seen that

and that the value of \(d(k,\varepsilon )\) remains the same if Hausdorff dimension is replaced by box, packing or Assouad dimension. So it seems natural to ask:

Question 4.2

Is \(d(k,\varepsilon )=\sup \{ {{\,\mathrm{dim_F}\,}}(E): E \text { is Borel and } \varepsilon \text {-avoids } k-APs \}\)?

References

[1] Behrend, F.A.: On sets of integers which contain no three terms in arithmetical progression. Proc. Natl. Acad. Sci. USA 32, 331–332 (1946)

[2] Bloom, T.F.: A quantitative improvement for Roth’s theorem on arithmetic progressions. J. Lond. Math. Soc. 93(3), 643–663 (2016)

[3] Bloom, T.F., Sisask, O.: Breaking the logarithmic barrier in Roth’s theorem on arithmetic progressions. Preprint, arXiv:2007.03528 (2020)

[4] Falconer, K.J.: Fractal Geometry. Mathematical foundations and applications, 3rd edn. Wiley, Chichester (2014)

[5] Fraser, J.M., Saito, K., Yu, H.: Dimensions of sets which uniformly avoid arithmetic progressions. Int. Math. Res. Not. IMRN 14, 4419–4430 (2019)

[6] Fraser, J.M., Yu, H.: Arithmetic patches, weak tangents, and dimension. Bull. Lond. Math. Soc. 50(1), 85–95 (2018)

[7] Furstenberg, H.: Ergodic fractal measures and dimension conservation. Ergodic Theory Dynam. Syst. 28(2), 405–422 (2008)

[8] Gowers, W.T.: A new proof of Szemerédi’s theorem. Geom. Funct. Anal. 11(3), 465–588 (2001)

[9] Green, B., Tao, T.: New bounds for Szemerédi’s theorem, III: a polylogarithmic bound for \(r_4(N)\). Mathematika 63(3), 944–1040 (2017)

[10] Keleti, T.: A 1-dimensional subset of the reals that intersects each of its translates in at most a single point. Real Anal. Exchange 24(2), 843–844 (1998/1999)

[11] Keleti, T.: Construction of one-dimensional subsets of the reals not containing similar copies of given patterns. Anal. PDE 1(1), 29–33 (2008)

[12] Mattila, P.: Geometry of sets and measures in Euclidean spaces, volume 44 of Cambridge Studies in Advanced Mathematics. Fractals and rectifiability. Cambridge University Press, Cambridge (1995)

[13] O’Bryant, K.: Sets of integers that do not contain long arithmetic progressions. Electron. J. Combin. 18(1), 59 (2011)

[14] Saito, K.: New bounds for dimensions of a set uniformly avoiding multi-dimensional arithmetic progressions. arXiv:1910.13071 (2019)

[15] Sanders, T.: On Roth’s theorem on progressions. Ann. Math. 174(1), 619–636 (2011)

[16] Shmerkin, P.: Salem sets with no arithmetic progressions. Int. Math. Res. Not. IMRN 7, 1929–1941 (2017)

[17] Szemerédi, E.: On sets of integers containing no \(k\) elements in arithmetic progression. Acta Arith. 27, 199–245 (1975)

[18] Tao, T., Vu, V.H.: Additive combinatorics, volume 105 of Cambridge Studies in Advanced Mathematics. Cambridge University Press, Cambridge, 2010. Paperback edition [of MR2289012]

[19] Yavicoli, A.: Large sets avoiding linear patterns. Proc. Am. Math. Soc., to appear, 2017. arXiv:1706.08118

Additional information

Communicated by Alex Iosevich.

JMF is financially supported by an EPSRC Standard Grant (EP/R015104/1) and a Leverhulme Trust Research Project Grant (RPG-2019-034). PS is supported by a Royal Society International Exchange Grant and by Project PICT 2015-3675 (ANPCyT). AY is financially supported by the Swiss National Science Foundation, Grant No. P2SKP2_184047.

Cite this article

Fraser, J.M., Shmerkin, P. & Yavicoli, A. Improved Bounds on the Dimensions of Sets that Avoid Approximate Arithmetic Progressions. J Fourier Anal Appl 27, 4 (2021). https://doi.org/10.1007/s00041-020-09807-w

DOI: https://doi.org/10.1007/s00041-020-09807-w