Abstract

Given a distribution of earthquake-induced seafloor elevations, we present a method to compute the probability of the resulting tsunamis reaching a certain size on shore. Instead of sampling, the proposed method relies on optimization to compute the most likely fault slips that result in a seafloor deformation inducing a large tsunami wave. We model tsunamis induced by bathymetry change using the shallow water equations on an idealized slice through the sea. The earthquake slip model is based on a sum of multivariate log-normal distributions, and follows the Gutenberg-Richter law for seismic moment magnitudes ranging from 7 to 9. For a model problem inspired by the Tohoku-Oki 2011 earthquake and tsunami, we quantify annual probabilities of differently sized tsunami waves. Our method also identifies the most effective tsunami mechanisms. These mechanisms have smoothly varying fault slip patches that lead to an expansive but moderately large bathymetry change. The resulting tsunami waves are compressed as they approach shore and reach close-to-vertical leading wave edge close to shore.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Among all potential sources, megathrust earthquakes are likely to cause the most extreme tsunami hazards for coastal regions (Grezio et al., 2017; Behrens et al., 2021). Recent work has focused on defining megathrust tsunami source scenarios for simulations to evaluate a quantity of interest (QoI) such as the maximal wave height (Gao et al., 2018). Since earthquake fault slip patterns are unpredictable, a probabilistic study of these QoIs is needed. In LeVeque et al. (2016) and Williamson et al. (2020), the authors construct realistic tsunami source distributions and use simulations based on samples from that distribution to obtain hazard curves for maximum water depth, i.e., the annual probabilities of the water depth exceeding certain values. Typically, estimating small probabilities requires a large number of Monte Carlo simulations to find samples corresponding to probability tails. Importance sampling (IS) can be an improvement, but requires a properly chosen proposal density and, typically, still a large number of samples (Liu, 2001). In this paper, we present a sampling-free method for computing annual probabilities of tsunamis on shore with uncertainty from fault slips using ideas from optimization and applied probability. The approach uses a probabilistic model of earthquake fault slips, adapting ideas from LeVeque et al. (2016) and Gao et al. (2018), and simulates tsunami waves using the one-dimensional nonlinear shallow water equations. Our target is not an online tsunami warning system, but offline hazard estimation that is capable of accurately computing very small probabilities. The proposed method is most efficient for scalar QoIs, e.g., to compute tsunami size probabilities in a single location on shore.

This paper builds on the approach proposed in Dematteis et al. (2019) for extreme event probability estimation and extends our recent work (Tong et al., 2021) on tsunami prediction in multiple directions. First, it considers fault slip events that take into account the physics constraints such as slip orientation and magnitude and whose distribution fits the Gutenberg-Richter law for earthquake moment magnitudes between 7 and 9. To obtain a realistic fault slip model distribution, we use a sum of weighted multivariate log-normals. Additionally, we study the most effective fault mechanisms for obtaining a tsunami of a certain height on shore. These optimizers are a side products of the proposed method which uses a sequence of optimization problems for probability estimation.

The proposed approach for probability estimation is not specific to tsunamis. Rather, it is applicable to a wide class of problems involving complex systems (e.g., governed by partial differential equations) with high-dimensional parameters. The method assumes some regularity of the functions involved and that certain optimization problems have a unique solution. Additionally, it requires access to derivatives of the map from parameters to the QoI, which typically can be computed efficiently using adjoint methods. The tsunami issue discussed in this paper assumes that the main uncertainty stems from the fault slips, and slips are of the buried rupture type, but the method can be also applied to other types (splay-faulting, trench-breaching) by choosing different slip distribution means.

2 Sampling-Free Estimation of Small Probabilities

We briefly present our approach to estimate probabilities of outputs (QoIs) arising from complex systems that depend on potentially high-dimensional uncertainties. We summarize the method for multivariate Gaussian parameter distributions. For the tsunami problem discussed in the next section, the approach is adapted for a sum of log-normal distributions.

We assume a random variable vector \(\theta \in {\mathbb {R}}^n\) with \(\theta \sim {\mathcal{N}}(\mu ,C)\), i.e., \(\theta\) follows a multivariate Gaussian distribution with mean \(\mu \in {\mathbb {R}}^n\) and a symmetric, positive definite covariance matrix \(C\in {\mathbb {R}}^{n\times n}\). The negative log-probability density function for this distribution is \(I(\theta ):=\frac{1}{2}(\theta -\mu )^TC^{-1}(\theta -\mu )\). This quadratic is, at the same time, the so-called rate function in large deviation theory, which allows generalization of the approach to non-Gaussian distributions (Dematteis et al., 2019). However, for Gaussians, log-normals and their sums as considered here, it is sufficient to consider \(I(\cdot )\). The parameter vectors \(\theta\) are the inputs into the parameter-to-QoI map F discussed next.

We assume a sufficiently regular, possibly complicated map \(F:{\mathbb {R}}^n\mathbb \rightarrow {\mathbb {R}}\), which maps the parameter vector to a scalar QoI \(F(\theta )\in {\mathbb {R}}\). We are interested in approximating the probability

where z is a large value and thus \(P(z)\ll 1\). While such a probability can be estimated using Monte-Carlo sampling, the performance of these samplers typically degrades for large z and thus small probabilities P(z), since random samples are unlikely to lead to large QoIs. Using geometric arguments and results from probability, in particular large deviation theory (LDT), shows that the following optimization problem plays an important role for the estimation of (1):

The LDT-minimizer \(\theta ^\star =\theta ^\star (z)\) is the most likely point in the set \(\Omega (z)=\{\theta : F(\theta )\ge z\}\), i.e., the random parameters that correspond to a QoI of size z or larger. Under reasonable assumptions, in particular the uniqueness of the solution of (2) [for details see Tong et al. (2021), Dematteis et al. (2019)], one can show that for large z, the probability measure is concentrated around the optimizer \(\theta ^\star\). Moreover, the probability can be well approximated using local derivative information of F and I at \(\theta ^\star\). Namely, provided sufficient smoothness of F, we can approximate the nonlinear equation \(F(\theta )=z\) using a Taylor expansion about \(\theta ^\star\) truncated after the quadratic term:

Replacing the boundary of the extreme QoI set, i.e., \(\{\theta : F(\theta )=z\}\) with the quadratic approximation of the boundary, i.e., \(\{\theta : F^{SO}(\theta )=z\}\) allows to compute the integration (1) analytically, resulting in an estimate of the form \(P(z)\approx D_0(z) e^{-I(\theta ^\star )}\), where the prefactor \(D_0(z)\) can be computed using local curvature information of F at \(\theta ^\star\). Here, randomized linear algebra methods can be used to only compute the eigenvalues that are important for \(D_0(z)\). The number of such eigenvalues depends on the geometry of \(F^{SO}(\theta )\) and the underlying multivariate Gaussian, and is typically small.

Two-dimensional illustration of the second-order approximation of the set \(\Omega (z)\) for given z. These approximations exploit properties of the minimizer \(\theta ^\star\), the normal direction \(n^\star :=\nabla F(\theta ^\star )/ \Vert \nabla F(\theta ^\star )\Vert =\nabla I(\theta ^\star )/ \Vert \nabla I(\theta ^\star )\Vert\) and the curvature of \(\partial \Omega (z)\) at \(\theta ^\star\)

The most important step for probability estimation using the LDT approach is to solve the optimization problem (2); see also Dematteis et al. (2018, 2019). After obtaining the optimizer \(\theta ^\star (z)\), we can integrate the probability measure of the set bounded by the second-order Taylor expansion (3) to obtain an approximation of the probability (1), as shown in Fig. 1. For computing the prefactor \(D_0(z)\) in the estimate \(P(z)\approx D_0(z) e^{-I(\theta ^\star )}\), we use local derivative information of F and I at \(\theta ^\star (z)\). The resulting expression for \(D_0(z)\) is given by

Here, for brevity, we write \(\theta ^\star\) instead of \(\theta ^\star (z)\). The operator \(P_\text {Pr}\) is the projection onto the orthogonal space of the gradient \(\nabla F(\theta ^\star )\). Moreover, \(\lambda _i(\cdot )\) represents the ith eigenvalue of a matrix. This estimation is equivalent to the Second Order Reliability Method (SORM) in engineering (Rackwitz, 2001). However, we use an formulation lending itself to higher dimensions, since it only requires the application of the second derivative matrix \(\nabla ^2F(\theta ^*)\) to vectors, rather than building this matrix explicitly. We use finite differences of gradients to approximate these Hessian-vector products. Such gradients are computed using adjoints, as summarized in Sect. 4. The estimation then uses randomized linear algebra methods to compute the dominating eigenvalues efficiently (Halko et al., 2011); details can be found in Tong et al. (2021).

3 Earthquake-Induced Tsunamis

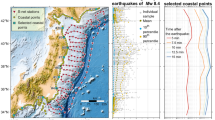

Next, we present the tsunami model. We describe how we model the distribution of uncertain fault slips corresponding to the random parameter \(\theta\) and the forward model F, which involves the solution of the shallow water equation. We use a model setup sketched in Fig. 2, which is inspired by the 2011 Tohoku-Oki earthquake and tsunami (Fujiwara et al., 2011; Dao and Tkalich, 2007). The geometry represents a two-dimensional slice with a bathymetry that models the continental shelf and the pacific ocean to the east of Japan. The fault location and dip angle are taken from Zhan et al. (2012). We generate random slips as discussed in the next section. In Fig. 3, we show snapshots of tsunami waves traveling towards shore, which is located on the left side of the domain.

Problem setup inspired by Tohoku-Oki 2011 earthquake/tsunami. Bathymetry changes (area in purple) are modeled as resulting from 20 randomly slipping patches in the fault region (in green, with end points \((120\,{{\text {km}}},-19.64\,{{\text {km}}})\) and \((180\,{{\text {km}}},-9.75\,{{\text {km}}})\)), using the Okada model. The tsunami event QoI is the average wave height in the interval [34 km,35 km] close to shore (shaded in red), where the water depth at rest is 20 m

Snapshots of tsunami waves generated by the optimizer with \(z=4\) (dashed line in Fig. 7B) at different times t. The seafloor deformation generates waves in both directions, but we focus on the waves traveling towards the shore on the left. The region where we measure average tsunami height at time \(t_{\max }\) is shaded in red. Note that the tsunami wave is compressed as it travels towards shore, its height increases and its leading wave edge steepens

3.1 Modeling Bathymetry Change as Random Field

We model earthquake-induced bathymetry change as a random variable to account for its uncertainty. More precisely, we model fault slips along patches in the subduction fault using a multivariate log-normal distribution. Random fault slips are propagated to the seafloor bathymetry change using the Okada model (Okada, 1985), which assumes a linear elastic crust. The use of a log-normal distribution ensures that fault slips have a uniform sign as is realistic when stress in the overriding plate is released through slip along the fault. We assume that slip at the ith patch has the form \(s_i = \exp (\theta _i)\), where \(\theta _i\) is a component of a multivariate Gaussian distribution for the random vector \(\theta\). The choice of a mean \({\hat{\mu }}\) and covariance \({\hat{C}}\) for the log-normal slip vector s corresponds to slip events leading by earthquakes around a certain Gutenberg-Richter moment magnitude \(M_w\). The slips \(s=\exp (\theta )\) follow log-normal distributions, where \(\theta\) is a multivariate Gaussian random parameter with mean \(\mu\) and covariance matrix C. The mean \(\mu\) and covariance matrix C for \(\theta\) are given in terms of \({{\hat{\mu }}}\) and \({{\hat{C}}}\) as follows:

We next show how we choose the mean \({\hat{\mu }}\) and covariance \({{\hat{C}}}\) such that samples of the distribution correspond to earthquaked with a certain magnitude. We use four pairs of \(({{\hat{\mu }}},{{\hat{C}}})\) and compute a weighted sum of multivariate log-normal distributions such that the corresponding earthquakes follow the Gutenberg-Richter scale. The mean \({\hat{\mu }}\) is defined as the multiple of the taper proposed in Gao et al. (2018),

where \(S_{M_w}\) is a constant multiple determined by the moment magnitude discussed later. The value \(x_i\) is the horizontal location of the center in the ith sub-fault, and \(x_{\text {t}}\) and \(x_{\text {b}}\) are the horizontal location of the top and the bottom of the fault, respectively. The function \(\tau\) is the taper from Gao et al. (2018):

We use the values \(b=0.25\), \(q=0.65\). The covariance is defined similarly as in LeVeque et al. (2016), namely as

The only thing left is to choose \(S_{M_w}\) such that \({\hat{\mu }}\) produce to an earthquake with moment magnitude \(M_w\), by solving the relation

where the seismic moment \(M_0 = A \times\) (average slip) \(\times\) (rigidity). Following Murotani et al. (2008), the area A is defined as

The average overall slip is computed as the average of (5). The rigidity is \(35\times 10^9 N/m^2\), as suggested in Hashima et al. (2016).

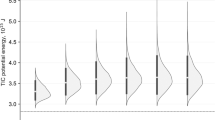

The framework summarized in Sect. 2 uses the multivariate Gaussian variable \(\theta\) underlying the log-normal variable s. We use four mean slips and covariances for earthquakes of moment magnitude \(M_w=7.5,8,8.5,9\). These mean slips, as well as random draws from the four log-normal distributions are shown in Fig. 4A. Note that this distribution assumes that the faults are of buried type, i.e., there is no slip close to the seafloor. By changing the means, other scenarios such as trench-breaking earthquakes can be modeled (Gao et al., 2018). The bathymetry changes induced by mean and random fault slips are shown in Fig. 4B.

Note that the proposed method for estimating the probability of the tsunami height on shore is not limited to the slip distributions used in this section (i.e., log-normal distributions for slips means and the exponential covariance function (8) as suggested in LeVeque et al. (2016)). The approach is generic and can be modified to other settings that have been proposed in the literature. For example, it is straightforward to change the exponential dependency of the covariance (8) from the distance \(|x_i-x_j|\) to the von Karman distribution (Mai and Beroza, 2002; Crempien et al., 2020). This will only impact the definitions for mean and covariance in (4), but all other steps remain unchanged. Also heavy-tailed distributions built by discrete Fourier transformations and power law dependency of the spectrum (Lavallée and Archuleta, 2003; Lavallée et al., 2006) could be used. This would require to incorporate the discrete Fourier functions into the map F, similar to the way we incorporate the exponential transformation into F to obtain log-normal distributions.

Shown in (A) are random slip patterns (solid and dashed lines) from the log-normal distributions whose means (faded solid lines) generate earthquake with moment magnitudes \(M_w = 7.5, 8, 8.5, 9\). Different colors indicate slips originating from distributions with different means. Shown in (B) are the vertical seafloor deformation induced by the slip samples and means shown in (A). These seafloor deformations are computed using the Okada model. Shown in (C) are the tsunami waves close to shore generated by the solid line samples and the mean seafloor deformations in (B). The tsunami waves are shown at the time when they maximize the objective (13), i.e., their average wave height is maximal in the measurement interval (red shaded area)

3.2 Governing Nonlinear Shallow Water Equations

We model tsunami waves using the one-dimensional shallow water equations, describing the conservation of mass and momentum for points x in space and times \(t\in [0,T]\), \(T>0\). These nonlinear hyperbolic equations, written in terms of the water height h and the momentum variable v are:

Here, \(v:=hu\) with u being the velocity, g is the gravitational constant, and B the earthquake-induced bathymetry change, which enters through its spatial derivative \(B_x\). The sea is assumed to be in rest at initial time \(t=0\) for the given bathymetry \(B_0\), i.e., \(v(x,0)=0\) and \(h(x,0)=-B_0(x)\) for all x. Together with these initial conditions, we assume suitable boundary conditions that are sufficiently far away from the observation interval and the source such they do not interact with the solution in the considered time interval. The shallow water model equations (11) do not take into account a horizontal bathymetry change. Any non-zero vertical bathymetry change resulting from a fault slip lifts the water column and thus leads to waves traveling in both directions, towards and away from shore; see Fig. 3. Next, we describe our measure of tsunami size on shore.

3.3 Parameter-to-QoI Map and Combining Gaussians

We use the average tsunami wave height in a small interval [c, d] close to shore to measure the tsunami size. This interval is indicated in red in Fig. 3 and in Fig. 2. Since we cannot exactly predict how long after the bathymetry change the largest tsunami wave reaches shore, we approximate the maximum average wave height using a smoothing parameter \(\gamma >0\), resulting in

Here, h is considered a function of B through the solution of the shallow water equations (11). Moreover, \(h+B_0\) is the water height above the resting state, and  denotes the average integral over [c, d]. It can be shown that

denotes the average integral over [c, d]. It can be shown that

i.e., for large \(\gamma\), (12) approximately measures the largest average wave height in [c, d] over all times in [0, T].

We apply the approach summarized in Sect. 2 individually to the multivariate Gaussians underlying the sum of log-normal distributions used to model the distribution of fault slips. The parameter-to-QoI map F includes the transformation from Gaussian to log-normal parameters, the map from slip patches to bathymetry change B governed by the Okada model, and the solution of the shallow water equations to map the bathymetry change to the average wave height close to shore (12), i.e., F is defined as:

Components of this map are illustrated in Fig. 4. Note that all samples from the bathymetry change distribution are negative on the left, i.e., closer to shore, and positive on the right. This is a consequence of only using positive slips reflecting the mechanisms behind plate subduction, i.e., the overriding plate releases stress during fault slip events. Due to the structure of these random slip-induced bathymetry changes, the trough of a tsunami wave reaches the shore first, followed by the wave crest as seen in Fig. 4C.

The distribution is the sum of four log normals with different means, which are combined such that the resulting distribution of earthquakes follows the Gutenberg-Richert (GR) law for earthquakes with moment magnitude ranging from 7 to 9. The previous sections detail the probability distribution for \(\theta\) with a fixed mean corresponding to moment magnitude \(M_w\). We denote this multivariate Gaussian distribution as \(\pi _{M_w}\). For this setup, we apply the methods discussed in Sect. 2 to estimate \({\mathbb {P}}_{\pi _{M_w}}(F(\theta )\ge z)\), for the probability of observing an average wave height of z or higher from random slips from the distribution with moment magnitude \(M_w\) mean. To obtain the annual assessment of this probability \(P_{\text {an}}(z)\), i.e., the annual probability of wave higher than z, we use a weighted sum similar to Williamson et al. (2020)

where the weight \(w_{M_w}=10^{6.456-M_w}\) is the annual probability of a moment magnitude \(M_w\) earthquake following the Gutenberg-Richter (GR) law. The Gutenberg-Richter (GR) law describes the return period of certain magnitude earthquakes. We choose 350 years as the return period for the Tohoku area from the study in Kagan & Jackson (2013), so the annual probability of occurring earthquakes with moment magnitude larger than \(M_w\) is \(10^{6.456-M_w}\). Using these weights and the distribution \(\pi _{M_w}\), we compute the annual probability for earthquakes with magnitude larger than \(M_w\) when samples are from \(\pi _{M_w}\). Their sum fits the annual probability curve \(10^{6.456-M_w}\) from the GR law, as shown in Fig. 5.

Annual probabilities of earthquakes magnitude larger than \(M_w\) caused by samples generated from \(\pi _{M_w}\) whose means correspond to moment magnitude \(M_w= 7.5, 8, 8.5, 9\). The sum of the four probability distributions provides the annual probabilities of observing an earthquake with magnitude from 7 to 9. We compare it with the probability distribution \(10^{6.456-M}\) from the Gutenberg-Richter law. It can be seen that our model fits the distribution of earthquakes for magnitude from 7 to 9

4 Numerical and Optimization Methods

Our approach for probability estimation requires to solve a sequence of optimization problems of the form (2) with the parameter-to-event map F specified above. Since F involves solution of the shallow water equations for given B (and thus \(\theta\)), this is a PDE-constrained optimization problem (Borzi & Schulz, 2011; Hinze et al., 2009; De Los Reyes, 2015). For solving the one-dimensional shallow water equations, we use the discontinuous Galerkin finite element method (DG-FEM) with linear interpolating polynomials and a global Lax-Friedrichs flux to discretize the equations in space, and the strong stability-preserving second-order Runge–Kutta (SSP-RK2) method to discretize the equations in time (Hesthaven & Warburton, 2007). We use adjoint methods to efficiently compute gradients for this optimization problem, and a descent algorithm that approximates second-order derivative information using the BFGS method (Nocedal & Wright, 2006). After finding the optimizer, we use finite differences of gradients to approximate the application of Hessians to vectors as required to find the dominating curvature directions; for details we refer to Tong et al. (2021). Numerical values used in the discretization the problem is summarized in Table 1.

As we have seen, solutions of nonlinear hyperbolic equations such as the shallow water equations can develop steep slopes or shocks, which play an important role for the dynamics of the system. These phenomena are challenging for numerical simulations. To prevent infinite slopes, in our simulations we use artificial viscosity, which decreases upon mesh refinement, thus retaining important aspects of the dynamics, details can be found in Tong et al. (2021).

5 Results

First, we compare the estimation of tsunami wave height probabilities from the optimization-based method with the probability estimation from Monte Carlo sampling. In Fig. 6 it can be seen that the sampling-based and the LDT-based probability estimates are visually indistinguishable. In this figure we also illustrate how the log-normal distributions around the four different slip means (corresponding to earthquakes of different magnitude) contribute to the overall probability. Note that for large tsunami waves, the distribution centered at magnitude \(M_w=9\) event dominates the probability. While typically Monte Carlo requires a large number of samples to estimate low probabilities, this effect is mitigated here by the fact that the small probabilities are dominated by the events in the distribution around the magnitude 9 earthquake mean.

Comparison of the annual probability of \(F(\theta )\ge z\), i.e., tsunami waves on shore of size z or larger between Monte Carlo sampling (dashed lines) and LDT approximation (solid lines). Shown in color are the contribution of the log-normal distributions centered at magnitude 7.5, 8, 8.5 and 9 to the overall probability as discussed in Sect. 3.3. The points for \(z=1,\ldots , 5\), labeled with (a)–(e), correspond to the optimized fault slips shown in Fig. 7A and represent the dominant contribution to the overall probability. Monte Carlo sampling uses \(10^4\) samples for each of the four earthquake magnitudes

Shown in (A) are the slips corresponding to the LDT-optimizers that contributes most to the overall probability. These probabilities are indicated by the solid colored spheres in Fig. 6. The dominant contributions for \(z=1\) is from the log-normal distribution with mean \(M_w=7.5\), for \(z=2\) from the distribution with mean \(M_w=8.5\) and for \(z=3,4,5\) from the distribution with mean \(M_w=9\). Shown in the middle are the corresponding vertical seafloor deformations. Shown in (C) are the tsunami waves close to shore generated by the seafloor deformations in (B). Snapshots of the waves are shown when (12), i.e., the average wave height is largest in the measurement interval (red shaded area)

The fault slips dominating the probabilities for \(z=1,\ldots , 5\) meters, which are computed as minimizers of (2), are labeled in Fig. 6. These correspond to the most likely slip mechanism that results in a tsunami on shore of size z. These slips, the corresponding seafloor deformation and the resulting tsunami waves on shore are shown in Fig. 7. In Fig. 7A it can be seen that the slips that dominate the probability vary smoothly. To explain this, note that the map from slip patches to seafloor deformation, given by the Okada model, is smoothing. That is, smooth slip patch patterns can lead to similar seafloor deformations as less smooth patterns, which are less likely in our slip patch model. Figure 7C shows the tsunami waves at shore induced by the seafloor elevation changes shown in Fig. 7B. The tsunami waves are shown at times \(t_{\max }\) when they have maximal average height in [c, d], i.e., they maximize the right hand side in (13). In particular the largest waves have a steep gradient or a shock at their leading edge.

Comparison of waves on shore modeled by the shallow water equations and their linearization about the rest state. The waves are initialized by the bathymetry change corresponding to the optimizers from Fig. 7. Snapshots show the waves when their average is largest in the observation interval. The gray waves, shown for reference, are the same as in Fig. 7C). The waves shown in color are obtained by solving the linearized equations using the same initializations

To further study this behavior, in Fig. 3 we show snapshots of the wave corresponding to the \(z=4\) QoI as it travels towards shore. The wave trough and crest initially stretches over about 80 km. As approaching shore, the wave is compressed to a few kilometers. The wave’s crest leading edge steepens towards a shock as it approaches the observation region close to shore. We postulate that reaching a shocked state further away from shore would be a less efficient mechanism and, hence, is not found by the LDT-optimization.

To study this further, in Fig. 8, we compare snapshots of waves computed by the shallow water equations and their linearization, both driven by the same bathymetry change profiles shown in Fig. 7B. First, it can be seen that the differences between the nonlinear and linearized equations are more substantial for larger initial conditions, i.e., larger z. That is, the nonlinearity is more important for larger bathymetry changes, i.e., when the linearization around the rest state is a poorer approximation to the nonlinear problem. This observation also coincides with results from other studies on earthquake-induced tsunamis (An et al., 2014). It can also be seen that waves from the linearized equations are generally higher and less steep, while the waves from the shallow water equations have steep leading edge crests, which are caused by the nonlinearity in the equations. Since shocks (or, correspondingly, the steep regions in our numerical simulations in combination with the artificial viscosity) introduce dissipation at the wave crests, in Fig. 8 the snapshots for the linearized system are slightly higher. While the linearized problem uses the same amount of artificial viscosity, it plays little role as the wave’s slopes are significantly smaller. This again illustrates the importance of the nonlinearity in the equations for the dynamics and thus the tsunami probabilities on shore.

6 Discussion

Using a tsunami model and a realistic distribution of random earthquake slips, we study the probability of large tsunamis occurring close to shore. We use an earthquake slip distribution that reflects a realistic distribution of seismic moment magnitude from 7 to 9 on the Gutenberg-Richter scale. We show that tsunami height probabilities on shore can be estimated accurately by solving a series of optimization problems and verify these probabilities by comparison with direct but more costly Monte Carlo sampling. These optimization problems identify the most likely and thus most effective mechanism that result in tsunamis of a certain size. We find that the most effective earthquake mechanisms have smoothly varying slip patches. These lead to waves with a steepening gradient as the crest travels towards shore, typically resulting in an approximate shock right around the region close to shore. This indicates that the nonlinearity in the shallow water equations, which are used to model tsunami waves, plays an important role for the generation of tsunami waves. We find that tsunamis with average wave height of \(z\ge 3\) correspond to slips from the log-normal distribution with mean corresponding to the \(M_w=9\) earthquake.

Note that in Fig. 6, the probability curve bends upwards. That is, tsunami size probabilities do not decay exponentially with earthquake moment magnitude, i.e., the Gutenberg-Richter law (see also Fig. 5). Thus, model predicts a higher rate of large tsunamis than of large earthquakes. The onset of a similar behavior can also be seen in Williamson et al. (2020).

A limitation of the presented results is that they are based on a one-dimensional tsunami model and use one-dimensional slip patches to model random bathymetry changes. From the methodology perspective, an identical approach applies to two-dimensional shallow water models and fault slips, but would require access to efficiently computed gradients of the parameter-to-QoI map (14). Further, while the proposed optimization-based technique provides an approximation to the true probability, it can be combined with importance sampling to find the exact probabilities (Tong et al., 2021). Finally, note that the optimization-based method employed here looses its computational advantage over Monte Carlo sampling if one aims at computing hazard probabilities at many different points and for many different probability thresholds z.

References

An, C., Sepúlveda, I., & Liu, P.L.-F. (2014). Tsunami source and its validation of the 2014 Iquique, Chile, earthquake. Geophysical Research Letters, 41(11), 3988–3994.

Behrens, J., Løvholt, F., Jalayer, F., Lorito, S., Salgado-Gálvez, M. A., Sørensen, M., Abadie, S., Aguirre-Ayerbe, I., Aniel-Quiroga, I., Babeyko, A., et al. (2021). Probabilistic tsunami hazard and risk analysis: A review of research gaps. Frontiers in Earth Science, 9, 628772.

Borzi, A., & Schulz, V. (2011). Computational optimization of systems governed by partial differential equations (Vol. 8). SIAM.

Crempien, J. G. F., Urrutia, A., Benavente, R., & Cienfuegos, R. (2020). Effects of earthquake spatial slip correlation on variability of tsunami potential energy and intensities. Scientific Reports, 10(1), 1–10.

Dao, M. H., & Tkalich, P. (2007). Tsunami propagation—A sensitivity study. Natural Hazards and Earth System Sciences, 7(6), 741–754.

De Los Reyes, J. C. (2015). Numerical PDE-constrained optimization. Springer.

Dematteis, G., Grafke, T., & Vanden-Eijnden, E. (2018). Rogue waves and large deviations in deep sea. Proceedings of the National Academy of Sciences, 115(5), 855–860.

Dematteis, G., Grafke, T., & Vanden-Eijnden, E. (2019). Extreme event quantification in dynamical systems with random components. SIAM/ASA Journal on Uncertainty Quantification, 7(3), 1029–1059.

Fujiwara, T., Kodaira, S., No, T., Kaiho, Y., Takahashi, N., & Kaneda, Y. (2011). The 2011 Tohoku-Oki earthquake: Displacement reaching the trench axis. Science, 334(6060), 1240–1240. https://doi.org/10.1126/science.1211554

Gao, D., Wang, K., Insua, T. L., Sypus, M., Riedel, M., & Sun, T. (2018). Defining megathrust tsunami source scenarios for northernmost Cascadia. Natural Hazards, 94(1), 445–469.

Grezio, A., Babeyko, A., Baptista, M. A., Behrens, J., Costa, A., Davies, G., Geist, E. L., Glimsdal, S., González, F. I., Griffin, J., et al. (2017). Probabilistic tsunami hazard analysis: Multiple sources and global applications. Reviews of Geophysics, 55(4), 1158–1198.

Halko, N., Martinsson, P. G., & Tropp, J. A. (2011). Finding structure with randomness: Probabilistic algorithms for constructing approximate matrix decompositions. SIAM Review, 53(2), 217–288.

Hashima, A., Becker, T. W., Freed, A. M., Sato, H., & Okaya, D. A. (2016). Coseismic deformation due to the 2011 Tohoku-oki earthquake: Influence of 3-D elastic structure around Japan. Earth, Planets and Space, 68(1), 1–15.

Hesthaven, J. S., & Warburton, T. (2007). Nodal discontinuous Galerkin methods: Algorithms, analysis, and applications. Springer.

Hinze, M., Pinnau, R., Ulbrich, M., & Ulbrich, S. (2009). Optimization with PDE constraints. Springer.

Kagan, Y. Y., & Jackson, D. D. (2013). Tohoku earthquake: A surprise? Bulletin of the Seismological Society of America, 103(2B), 1181–1194.

Lavallée, D., & Archuleta, R. J. (2003). Stochastic modeling of slip spatial complexities for the 1979 Imperial Valley, California, earthquake. Geophysical Research Letters, 30(5), 622–640.

Lavallée, D., Liu, P., & Archuleta, R. J. (2006). Stochastic model of heterogeneity in earthquake slip spatial distributions. Geophysical Journal International, 165(2), 622–640.

LeVeque, R. J., Waagan, K., González, F. I., Rim, D. & Lin, G. (2016) Generating random earthquake events for probabilistic tsunami hazard assessment. In Global Tsunami Science: Past and Future (vol. I, pp. 3671–3692). Cham: Birkhäuser.

Liu, J. S. (2001). Monte Carlo strategies in scientific computing (Vol. 10). Springer.

Mai, P. M., & Beroza, G. C. (2002). A spatial random field model to characterize complexity in earthquake slip. Journal of Geophysical Research: Solid Earth, 107(B11), 10.

Murotani, S., Miyake, H., & Koketsu, K. (2008). Scaling of characterized slip models for plate-boundary earthquakes. Earth, Planets and Space, 60(9), 987–991.

Nocedal, J., & Wright, S. J. (2006). Numerical optimization (2nd ed.). Springer.

Okada, Y. (1985). Surface deformation due to shear and tensile faults in a half-space. Bulletin of the Seismological Society of America, 75(4), 1135–1154.

Rackwitz, R. (2001). Reliability analysis—A review and some perspectives. Structural Safety, 23(4), 365–395.

Tong, S., Vanden-Eijnden, E., & Stadler, G. (2021). Extreme event probability estimation using PDE-constrained optimization and large deviation theory, with application to tsunamis. Communications in Applied Mathematics and Computational Science, 16(2), 181–225.

Williamson, A. L., Rim, D., Adams, L. M., LeVeque, R. J., Melgar, D., & González, F. I. (2020). A source clustering approach for efficient inundation modeling and regional scale probabilistic tsunami hazard assessment. Frontiers in Earth Science, 8, 591663.

Zhan, Z., Helmberger, D., Simons, M., Kanamori, H., Wu, W., Cubas, N., Duputel, Z., Chu, R., Tsai, V. C., Avouac, J.-P., et al. (2012). Anomalously steep dips of earthquakes in the 2011 Tohoku-Oki source region and possible explanations. Earth and Planetary Science Letters, 353, 121–133.

Funding

S. T. and G. S. were partially supported by the US National Science Foundation (NSF) through Grant DMS #1723211. E. V.-E. was supported in part by the NSF Materials Research Science and Engineering Center Program Grant DMR #1420073, by NSF Grant DMS #152276, by the Simons Collaboration on Wave Turbulence, Grant #617006, and by ONR Grant #N4551-NV-ONR.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of Interest

The authors have not disclosed any competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Tong, S., Vanden-Eijnden, E. & Stadler, G. Estimating Earthquake-Induced Tsunami Height Probabilities without Sampling. Pure Appl. Geophys. 180, 1587–1597 (2023). https://doi.org/10.1007/s00024-023-03281-3

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00024-023-03281-3