Abstract

We consider the Peierls model for closed polyacetylene chains with an even number of carbon atoms as well as infinite chains, in the presence of temperature. We prove the existence of a critical temperature below which the chain is dimerized and above which it is 1-periodic. The chain behaves like an insulator below the critical temperature and like a metal above it. We characterize the critical temperature in the thermodynamic limit model and prove that it is exponentially small in the rigidity of the chain. We study the phase transition around this critical temperature.

1 Introduction

It is a well-known fact that in closed polyacetylene molecular chains having an even number of carbon atoms (e.g. benzene), the valence electrons arrange themselves one link in two. This phenomenon is well understood in the Peierls model, introduced in 1930 (see [10, p.108] and [2]), which is a simple nonlinear functional describing, in particular, polyacetylene chains. This model is invariant under 1-translations, but there is a symmetry breaking: the minimizers are dimerized, in the sense that they are 2-periodic, but not 1-periodic. This is known as Peierls instability or Peierls distortion and is responsible for the high diamagnetism and low conductivity of certain materials such as bismuth [4].

In this paper, we study the Peierls model with temperature, and describe the corresponding phase diagram. We prove the existence of a critical temperature below which the chain is dimerized and above which the chain is 1-periodic. We characterize this critical temperature and study the transition around it. In order to state our main results, let us first recall what is known for the Peierls model without temperature.

1.1 The Peierls Model at Null Temperature

We focus on the case of even chains: we consider a periodic linear chain with \(L=2N\) classical atoms (for an integer \(N \ge 2\)), together with quantum non-interacting electrons. We denote by \(t_i\) the distance between the i-th and \((i + 1)\)-th atoms and set \(\{ {{\textbf {t}}}\}:=~\{ t_1, \ldots , t_L\}\). By periodicity, we mean that the atom indices are taken modulo L. The electrons are represented by a one-body density matrix \(\gamma \), which is a self-adjoint operator on \(\ell ^2({\mathbb {C}}^L)\), satisfying the Pauli principle \(0 \le \gamma \le 1\). In this simple model, the electrons can hop between nearest neighbor atoms and feel a Hamiltonian of the form

$$\begin{aligned} T = T(\{ {{\textbf {t}}}\}):= \begin{pmatrix} 0 & t_1 & 0 & \cdots & t_L \\ t_1 & 0 & t_2 & \cdots & 0 \\ 0 & t_2 & 0 & \ddots & \vdots \\ \vdots & \vdots & \ddots & \ddots & t_{L-1} \\ t_L & 0 & \cdots & t_{L-1} & 0 \end{pmatrix} . \end{aligned} \tag{1}$$

The Peierls energy of such a system reads [5, 8,9,10, 12]

The first term is the distortion energy of the atoms. Here, \(b > 0\) is the equilibrium distance between two atoms and \(g > 0\) is the rigidity of the chain. The second term models the electronic energy of the valence electrons (the 2 factor stands for the spin). By scaling, setting \(\tilde{t_i} = b t_i\) and \(\mu = gb\), we have \({\widetilde{{{\mathcal {E}}}}}_{\textrm{full}}^{(L)}(\{ {\tilde{t}} \}, \gamma ) = b {{\mathcal {E}}}_{\textrm{full}}^{(L)}(\{ t\}, \gamma )\), with the energy

$$\begin{aligned} {{\mathcal {E}}}_{\textrm{full}}^{(L)}(\{ {{\textbf {t}}}\}, \gamma ):= \frac{\mu }{2} \sum _{i=1}^L (t_i - 1)^2 + 2 \, \textrm{Tr} \, (T \gamma ). \end{aligned}$$

There is only one parameter in the model, which is the strength \(\mu > 0 \). In the so-called half-filled model, this energy is minimized over all \(t_i > 0\) and all one-body density matrices (there is no constraint on the number of electrons):

One can perform the minimization in \(\gamma \) first. We get

where we used here that T is unitarily equivalent to \(-T\), so that its spectrum is symmetric with respect to the origin. The optimal density matrix in this case is \(\gamma _* = {\mathbbm {1}}(T < 0)\), which has \(\textrm{Tr} \, (\gamma _* ) = N\) electrons (hence the denomination half-filled). The energy simplifies into

The energy \({{\mathcal {E}}}^{(L)}\) only depends on \(\{ {{\textbf {t}}}\}\), and is translationally invariant, in the sense that \({{\mathcal {E}}}^{(L)}(\{{{\textbf {t}}}\}) = {{\mathcal {E}}}^{(L)}(\{\tau _k {{\textbf {t}}}\})\) where \(\{\tau _k {{\textbf {t}}}\}:= \{ t_{k+1}, \ldots , t_{k+L} \}\). However, the minimizers of this energy are usually 2-periodic, as proved by Kennedy and Lieb [5] and Lieb and Nachtergaele [7]. More specifically, they proved the following:

\(\underline{\hbox {Case}~L \equiv 0 \mod 4.}\) There are two minimizing configurations for \(E^{(2N)}\), of the form

$$\begin{aligned} t_i = W + (-1)^i \delta \quad \text {or} \quad t_i = W - (-1)^i \delta , \quad \text {with} \quad W > 0 \ \text {and} \ \delta > 0. \end{aligned} \tag{4}$$

These two configurations are called dimerized configurations [6]: they are 2-periodic but not 1-periodic. In other words, it is energetically favorable for the chain to break the 1-periodicity of the model. We prove in Appendix A that the corresponding gain of energy is actually exponentially small in the limit \(\mu \rightarrow \infty \).

\(\underline{\hbox {Case}~L \equiv 2 \mod 4.}\) This case is similar, but we may have \(\delta = 0\) for small values of L, or large values of \(\mu \) (see also [6]). There is \(0< \mu _c(L) < \infty \) so that, for \(0< \mu < \mu _c(L)\), there are still two dimerized minimizers, as in (4), while for \(\mu > \mu _c(L)\), there is only one minimizer, which is 1-periodic, that is \(\delta = 0\).

In all cases (with L even), one can restrict the minimization problem to configurations \(\{ {{\textbf {t}}}\}\) of the form \(t_i = W \pm (-1)^i \delta \) and obtain a minimization problem with only two parameters.
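
To make the two-parameter reduction concrete, here is a minimal Python sketch of the zero-temperature energy per atom for configurations \(t_i = W \pm (-1)^i \delta \). It assumes the reduced form \(\frac{\mu }{2}[(W-1)^2 + \delta ^2] - \frac{1}{L}\sum _{k=1}^L \sqrt{4W^2\cos ^2(2k\pi /L) + 4\delta ^2\sin ^2(2k\pi /L)}\), obtained from the spectrum of \(T^2\) for a 2-periodic chain; the function name and the sample values of \(\mu \), W and \(\delta \) are illustrative only.

```python
import math

def zero_temp_energy_per_atom(W, delta, mu, L):
    """Energy per atom of the configuration t_i = W + (-1)^i * delta.

    Assumed reduced form: distortion energy minus the average of the
    absolute values of the eigenvalues of T (zero-temperature model).
    """
    distortion = 0.5 * mu * ((W - 1.0) ** 2 + delta ** 2)
    electronic = 0.0
    for k in range(1, L + 1):
        c = math.cos(2.0 * math.pi * k / L)
        s = math.sin(2.0 * math.pi * k / L)
        electronic += math.sqrt(4.0 * W * W * c * c + 4.0 * delta * delta * s * s)
    return distortion - electronic / L

# L = 8 (L = 0 mod 4): at fixed W, a small dimerization lowers the energy.
e_flat = zero_temp_energy_per_atom(1.5, 0.0, mu=1.0, L=8)
e_dimer = zero_temp_energy_per_atom(1.5, 0.01, mu=1.0, L=8)
print(e_dimer < e_flat)  # True
```

Under this assumed form, when \(L \equiv 0 \mod 4\) the modes \(k = L/4\) and \(k = 3L/4\) contribute a term linear in \(|\delta |\), which beats the quadratic distortion cost for small \(\delta \).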

Although L is always even in the present paper, let us mention that molecules with L odd and very large have been studied at zero temperature by Garcia Arroyo and Séré [3]. In that case one gets “kink solutions” in the limit \(L\rightarrow \infty \).

1.2 The Peierls Model with Temperature, Main Results

In the present article, we extend these results to the positive temperature case by modifying the Peierls model in order to take the entropy of the electrons into account. We denote by \(\theta \) the temperature (the letter T is reserved for the matrix in (1)). Following the general scheme described in [1, Section 4], the free energy is now given by (compare with (2))

with \(S(x):= x \log (x) + (1 - x) \log (1-x)\) the usual entropy function. We consider again the minimization over all one-body density matrices and study the minimization problem

There are now two parameters in the model, namely \(\mu \) and \(\theta \). The main goal of the paper is to study the phase diagram in the \((\mu , \theta )\) plane.

As in (3), one can perform the minimization in \(\gamma \) first (see Sect. 2.1 for the proof).

Lemma 1.1

We have

$$\begin{aligned} \min _{0 \le \gamma = \gamma ^* \le 1} 2 \left\{ \textrm{Tr} \, (T \gamma ) + \theta \, \textrm{Tr} \, (S(\gamma )) \right\} = - \textrm{Tr} \, h_\theta (T^2), \end{aligned} \tag{6}$$

with the function

$$\begin{aligned} h_\theta (x):= 2 \theta \ln \left( 2 \cosh \left( \frac{\sqrt{x}}{2 \theta } \right) \right) . \end{aligned}$$

The minimization problem in the l.h.s of (6) has the unique minimizer \(\gamma _* = (1+{\textrm{e}}^{ T/\theta })^{-1}\).
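
The scalar computation behind Lemma 1.1 can be checked numerically. The sketch below assumes the closed form \(h_\theta (x) = 2\theta \ln (2\cosh (\sqrt{x}/(2\theta )))\), which is consistent with the properties listed in Proposition 2.1. It verifies, for a single eigenvalue t of T, that the Fermi–Dirac occupation \(g_* = (1+{\textrm{e}}^{t/\theta })^{-1}\) minimizes \(2\{ t g + \theta S(g) \}\), with minimum value \(t - h_\theta (t^2)\). Function names and sample values are illustrative.

```python
import math

def h_theta(x, theta):
    """Assumed closed form: h_theta(x) = 2*theta*ln(2*cosh(sqrt(x)/(2*theta)))."""
    u = math.sqrt(x) / (2.0 * theta)
    # ln(2 cosh u) = u + ln(1 + exp(-2u)) for u >= 0; stable for large u
    return 2.0 * theta * (u + math.log1p(math.exp(-2.0 * u)))

def entropy(g):
    """S(g) = g ln g + (1 - g) ln(1 - g), with S(0) = S(1) = 0."""
    if g <= 0.0 or g >= 1.0:
        return 0.0
    return g * math.log(g) + (1.0 - g) * math.log(1.0 - g)

def one_level_free_energy(g, t, theta):
    """Contribution 2*(t*g + theta*S(g)) of one eigenvalue t with occupation g."""
    return 2.0 * (t * g + theta * entropy(g))

t, theta = 0.7, 0.3
g_star = 1.0 / (1.0 + math.exp(t / theta))  # Fermi-Dirac occupation
best = one_level_free_energy(g_star, t, theta)

# Brute-force minimum over a grid of occupations in (0, 1)
grid_min = min(one_level_free_energy(i / 10000.0, t, theta) for i in range(1, 10000))

print(abs(best - (t - h_theta(t * t, theta))) < 1e-12)  # True
print(best <= grid_min + 1e-12)                         # True
```

Summing \(t - h_\theta (t^2)\) over the eigenvalues of T and using \(\textrm{Tr} \, (T) = 0\) then gives \(-\textrm{Tr} \, h_\theta (T^2)\).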

The properties of the function \(h_\theta \) are given in Proposition 2.1. The free Peierls energy therefore simplifies into a minimization problem in \(\{ {{\textbf {t}}}\}\) only:

$$\begin{aligned} F_\theta ^{(L)} = \min \left\{ {{\mathcal {F}}}_\theta ^{(L)}(\{ {{\textbf {t}}}\}), \ {{\textbf {t}}}\in \mathbb {R}^L_+ \right\} , \quad \text {with} \quad {{\mathcal {F}}}_\theta ^{(L)}(\{ {{\textbf {t}}}\}):= \frac{\mu }{2} \sum _{i=1}^L (t_i - 1)^2 - \textrm{Tr} \, h_\theta (T^2). \end{aligned} \tag{7}$$

Our first theorem states that minimizers are always 2-periodic, and that they become 1-periodic when the temperature is large enough (phase transition).

Theorem 1.2

For any \(L=2N\), with N an integer and \(N \ge 2 \), there exists a critical temperature \(\theta _c^{(L)}:=\theta _c^{(L)}(\mu ) \ge 0\) such that:

- for \(\theta \ge \theta _c^{(L)}\), the minimizer of \({{\mathcal {F}}}_\theta ^{(L)}\) is unique and 1-periodic;

- for \(\theta \in (0, \theta _c^{(L)})\) (this set is empty if \(\theta _c^{(L)} = 0\)), there are exactly two minimizers, which are dimerized, of the form (4).

In addition,

- (i) If \(L\equiv 0\mod 4\), this critical temperature is positive (\(\theta _c^{(L)}(\mu ) > 0\) for all \(\mu > 0\)).

- (ii) If \(L\equiv 2\mod 4\), there is \(\mu _c:= \mu _c(L) > 0\) such that \(\theta _c^{(L)} > 0\) for \(\mu < \mu _c\), whereas \(\theta _c^{(L)} = 0\) for \(\mu \ge \mu _c\). Moreover, \(\mu _c(L) \sim \frac{2}{\pi }\ln (L)\) as \(L \rightarrow \infty \).

This theorem only deals with an even number L of atoms. One expects a similar behavior for L odd and large, but the arguments in the proof are not sufficient to guarantee this: they only imply that the minimizer is one-periodic when the temperature is large enough (see Remark 2.4). We do not know what exactly happens for a small positive temperature and an odd number L.

We postpone the proof of Theorem 1.2 until Sect. 2. The first part uses the concavity of the function \(h_\theta \) on \(\mathbb {R}_+\), while the proofs of (i) and (ii) are based on the Euler–Lagrange equations.

As in the null temperature case, minimizers are always 2-periodic; hence, the minimization problem is a minimization over the two variables W and \(\delta \). Actually, we have

with the energy per unit atom (the following expression is justified in Eq. (13))

$$\begin{aligned} g_\theta ^{(L)}(W, \delta ):= \frac{\mu }{2} \left[ (W - 1)^2 + \delta ^2 \right] - \frac{1}{L} \sum _{k=1}^L h_\theta \left( 4 W^2 \cos ^2 \left( \frac{2 k \pi }{L} \right) + 4 \delta ^2 \sin ^2 \left( \frac{2 k \pi }{L} \right) \right) . \end{aligned} \tag{8}$$

We recognize a Riemann sum in the last expression. This suggests that we can take the thermodynamic limit \(L \rightarrow \infty \). This limit is quite standard in the physics literature on long polymers: many theoretical papers present models of polymers at null temperature that are directly written for infinite chains (see, e.g., [12]).
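
The Riemann-sum structure can be illustrated numerically. The following sketch assumes the closed form \(h_\theta (x) = 2\theta \ln (2\cosh (\sqrt{x}/(2\theta )))\) and the per-atom energy \(\frac{\mu }{2}[(W-1)^2+\delta ^2] - \frac{1}{L}\sum _{k=1}^L h_\theta (4W^2\cos ^2(2k\pi /L) + 4\delta ^2\sin ^2(2k\pi /L))\). The limiting integral over \((0, 2\pi )\) is approximated by a fine midpoint rule. All names and parameter values are illustrative.

```python
import math

def h_theta(x, theta):
    """Assumed closed form h_theta(x) = 2*theta*ln(2*cosh(sqrt(x)/(2*theta)))."""
    u = math.sqrt(x) / (2.0 * theta)
    return 2.0 * theta * (u + math.log1p(math.exp(-2.0 * u)))

def mean_h(W, delta, theta, angles):
    """Average of h_theta(4 W^2 cos^2(s) + 4 delta^2 sin^2(s)) over the angles s."""
    total = 0.0
    for s in angles:
        x = 4.0 * (W * math.cos(s)) ** 2 + 4.0 * (delta * math.sin(s)) ** 2
        total += h_theta(x, theta)
    return total / len(angles)

def g_finite(W, delta, mu, theta, L):
    """Free energy per atom of a dimerized chain with L atoms (assumed form)."""
    angles = [2.0 * math.pi * k / L for k in range(1, L + 1)]
    return 0.5 * mu * ((W - 1.0) ** 2 + delta ** 2) - mean_h(W, delta, theta, angles)

def g_limit(W, delta, mu, theta, n_quad=20000):
    """Same expression with the sum replaced by a midpoint-rule integral."""
    angles = [2.0 * math.pi * (j + 0.5) / n_quad for j in range(n_quad)]
    return 0.5 * mu * ((W - 1.0) ** 2 + delta ** 2) - mean_h(W, delta, theta, angles)

W, delta, mu, theta = 1.2, 0.3, 2.0, 0.5
reference = g_limit(W, delta, mu, theta)
for L in (8, 32, 200):
    # gap between the finite-chain energy and its thermodynamic limit
    print(L, abs(g_finite(W, delta, mu, theta, L) - reference))
```

For \(\theta > 0\) the summand is a smooth periodic function of the angle, so the gap is expected to shrink quickly as L grows.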

We define the thermodynamic limit free energy (per unit atom) as

$$\begin{aligned} f_\theta := \liminf _{N \rightarrow \infty } \frac{1}{2N} F_\theta ^{(2N)}. \end{aligned} \tag{9}$$

As expected, we have the following (see Sect. 3.1 for the proof).

Lemma 1.3

We have \(f_\theta = \min \left\{ g_\theta (W, \delta ), \quad W \ge 0, \ \delta \ge 0 \right\} \) with

$$\begin{aligned} g_\theta (W, \delta ):= \frac{\mu }{2} \left[ (W - 1)^2 + \delta ^2 \right] - \frac{1}{2 \pi } \int _0^{2 \pi } h_\theta \left( 4 W^2 \cos ^2 (s) + 4 \delta ^2 \sin ^2 (s) \right) \textrm{d} s. \end{aligned}$$

The next theorem is similar to Theorem 1.2 and shows the existence of a critical temperature for the thermodynamic model. Its proof is postponed until Sect. 3.2 and is based on the study of the Euler–Lagrange equations.

Theorem 1.4

There is a critical (thermodynamic) temperature \(\theta _c = \theta _c(\mu ) > 0\) such that, for all \(\theta \ge \theta _c\), the minimizer of \(g_\theta \) satisfies \(\delta = 0\), whereas for all \(\theta < \theta _c\), it satisfies \(\delta > 0\).

In the large \(\mu \) limit, we have

This reflects the fact that for an infinite chain, there is a transition between the dimerized states (\(\delta > 0\)), which are insulating (actually, one can show that the gap of the T matrix is of order \(\delta \)), and the 1-periodic state (with \(\delta = 0\)), which is metallic, as the temperature increases. This can be interpreted as an insulating/metallic transition for polyacetylene. Such a phase transition has been observed experimentally in the blue bronze in [11]. We display in Fig. 1 (left) the map \(\mu \mapsto \theta _c(\mu )\) in the \((\mu , \theta )\) plane.

In (9), we only consider the limit \(L = 2N \rightarrow \infty \) to define the thermodynamic critical temperature \(\theta _c\). Note that the cases \(L\equiv 0\mod 4\) and \(L\equiv 2\mod 4\) merge when L tends to infinity: this is consistent with the fact that the critical stiffness \(\mu _c(L)\) tends to infinity as \(L\rightarrow \infty \) in Theorem 1.2. We also expect odd chains to behave like even chains, but the study of the odd case is more delicate since we do not have an analogue of (8) and we leave it for future work.

Finally, we study the nature of the transition. It is not difficult to see that \(\delta \rightarrow 0\) as \(\theta \rightarrow \theta _c\). Actually, there is a bifurcation around this critical temperature, see also Fig. 1 (right).

Theorem 1.5

There is \(C > 0,\) such that \(\delta (\theta ) = C\sqrt{(\theta _c - \theta )_+} + o\left( \sqrt{(\theta _c - \theta )_+}\right) .\)

We postpone the proof of Theorem 1.5 until Sect. 3.3. It mainly uses the implicit function theorem. The value of C is explicit and is given in the proof.

2 Proofs in the Finite Chain Peierls Model with Temperature

We now provide the proofs of our results. We gather in this section the proofs for the finite \(L = 2N\) model and postpone those for the thermodynamic model to the next section.

2.1 Proof of Lemma 1.1 and Properties of the h Functional

First, we justify the functional \({{\mathcal {F}}}^{(L)}_\theta \) appearing in (7) and provide the proof of Lemma 1.1.

Proof

We study the minimization problem

Any critical point \(\gamma ^*\) of the functional satisfies the Euler–Lagrange equation

There is therefore only one such critical point, given by

By convexity of the functional, this critical point is the minimizer. For this one-body density matrix, we obtain using (10)

Finally, since T is unitarily equivalent to \(-T\), we have \(\textrm{Tr} \, (T) = 0\). This gives, as wanted,

\(\square \)

Let us gather here some properties of the function \(h_\theta \) that we will use throughout the article.

Proposition 2.1

We have \(h_\theta (x) = \theta h \left( \frac{x}{4 \theta ^2} \right) \) and \(h'_\theta (x) = \frac{1}{4 \theta } h' \left( \frac{x}{4 \theta ^2} \right) \), with

$$\begin{aligned} h(y):= 2 \ln \left( 2 \cosh ( \sqrt{y} ) \right) , \quad \text {and} \quad h'(y) = \frac{\tanh ( \sqrt{y} )}{\sqrt{y}}. \end{aligned}$$

In particular, h (hence \(h_\theta \)) is positive, increasing and concave. We have \(\lim _{y \rightarrow 0} h'(y) = 1\), and the inequality \(h_\theta (x) \ge \sqrt{x}\), valid for all \(\theta > 0\) and all \(x \ge 0\). In addition, we have the pointwise convergence \(h_\theta (x) \rightarrow \sqrt{x}\) as \(\theta \rightarrow 0\).

The last part shows that we recover the model at zero temperature. The concavity of h comes from the fact that \(h'\) is positive and decreasing. Another way to see concavity is that \(h_\theta (t) = \min _{0 \le g \le 1} 2 \{ t g + \theta S(g) \}\) is the minimum of linear functions (in t), hence concave. The inequality \(h_\theta (x) \ge \sqrt{x}\) comes from \(2 \cosh (x) \ge {\textrm{e}}^x\).
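
These properties are easy to sanity-check numerically. The sketch below assumes the closed form \(h(y) = 2\ln (2\cosh (\sqrt{y}))\), so that \(h'(y) = \tanh (\sqrt{y})/\sqrt{y}\). On a few sample points it checks the bounds \(\sqrt{x} \le h_\theta (x) \le \sqrt{x} + 2\theta \ln 2\) and the monotonicity of \(h'\), and it evaluates \(h_\theta \) at a small temperature. Names and sample values are illustrative.

```python
import math

def h(y):
    """Assumed closed form h(y) = 2*ln(2*cosh(sqrt(y))), written stably."""
    r = math.sqrt(y)
    return 2.0 * (r + math.log1p(math.exp(-2.0 * r)))

def h_theta(x, theta):
    """Scaling relation h_theta(x) = theta * h(x / (4 theta^2))."""
    return theta * h(x / (4.0 * theta * theta))

def h_prime(y):
    """h'(y) = tanh(sqrt(y)) / sqrt(y), extended by h'(0) = 1."""
    if y == 0.0:
        return 1.0
    r = math.sqrt(y)
    return math.tanh(r) / r

samples = [0.0, 0.01, 0.5, 1.0, 4.0, 25.0]

# Two-sided bound sqrt(x) <= h_theta(x) <= sqrt(x) + 2 theta ln 2
bounds_ok = all(
    math.sqrt(x) - 1e-12 <= h_theta(x, theta) <= math.sqrt(x) + 2.0 * theta * math.log(2.0) + 1e-12
    for theta in (0.05, 0.5, 2.0)
    for x in samples
)

# h' is non-increasing along the samples, as expected for a concave h
slopes = [h_prime(y) for y in samples]
concave_ok = all(a >= b for a, b in zip(slopes, slopes[1:]))

print(bounds_ok, concave_ok)
print(h_theta(1.0, 1e-3), math.sqrt(1.0))  # close to each other at low temperature
```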

2.2 Proof of Theorem 1.2: Existence of a Critical Temperature

We now study the minimizers of \({{\mathcal {F}}}^{(L)}_\theta (\{ {{\textbf {t}}}\})\) in (7), which we recall is given by

First, we prove that the minimizers are always 2-periodic. We then study the existence of a critical temperature. For the first part, our strategy follows closely the argument of Kennedy and Lieb in [5] and relies on the concavity of \(h_\theta \).

\(\underline{\hbox {All minimizers are}\,\,2-\hbox {periodic}}\). Recall that if \(x \mapsto \varphi (x)\) is concave over \(\mathbb {R}_+\), then \(A \mapsto \textrm{Tr} \, (\varphi (A))\) is concave over the set of positive matrices. Applying this property to \(h_\theta \), which is concave on \(\mathbb {R}_+\), we have

where \(\langle T^2 \rangle \) is defined as in [5] as the average of \(T^2\) over all translations:

This implies the lower bound

where

In addition, we have equality in (11) iff the optimal \(\{{{\textbf {t}}}\}\) for \(G_\theta ^{(L)}\) satisfies \(T(\{ {{\textbf {t}}}\})^2 = \langle T(\{ {{\textbf {t}}}\})^2 \rangle \). Note that

So we have \(T(\{ {{\textbf {t}}}\})^2 = \langle T(\{ {{\textbf {t}}}\})^2 \rangle \) iff \(t_i^2 + t_{i+1}^2\) and \(t_i t_{i+1}\) are independent of i. This happens only if T is 2-periodic.

Introducing the variables (our notation differs slightly from that of [5]: we put \(z^2\) instead of z, so that all quantities (x, y, z) are homogeneous)

we obtain \(\langle T^2 \rangle = 2 y^2 \mathbb {I}_L + z^2 \Omega _L\) with \(\Omega _L:= \Theta _2 + \Theta _2^*\), and

The function \({\widetilde{{{\mathcal {G}}}}}^{(L)}_\theta \) is much easier to study, as it only depends on the three variables (x, y, z). Let us identify the triplets (x, y, z) coming from a 2-periodic or 1-periodic state.

Lemma 2.2

- For all \({{\textbf {t}}}\in \mathbb {R}^L_+\), the corresponding triplet (x, y, z) belongs to

$$\begin{aligned} X:= \left\{ (x, y, z) \in \mathbb {R}^3_+, \quad y^2 \ge x^2, \quad z^2 \ge \max \{ 0, 2 x^2 - y^2 \} \right\} . \end{aligned}$$

- If \(L = 2N\) is even, the configuration \({{\textbf {t}}}\) is 2-periodic of the form (4) iff the triplet (x, y, z) belongs to

$$\begin{aligned} X_2:= \left\{ (x, y, z) \in \mathbb {R}^3_+ \ \text {of the form} \ x = W,\, y^2= W^2 + \delta ^2, \ z^2 = W^2 - \delta ^2 \right\} . \end{aligned}$$

This happens iff \(z^2 = 2x^2 - y^2\).

- The configuration \({{\textbf {t}}}\) is 1-periodic, of the form \({{\textbf {t}}}= (W, \ldots , W)\) iff (x, y, z) belongs to

$$\begin{aligned} X_1:= \left\{ (x, y, z) \in \mathbb {R}^3_+ \ \text {of the form} \ x = y = z = W \right\} . \end{aligned}$$

This happens iff \(z^2 = 2x^2 - y^2\) and \(x = y\).

Proof

By Cauchy–Schwarz, we have

which is the first inequality. Next, we have

On the other hand, we have by Cauchy–Schwarz,

This proves that \(z^2 \ge 2 x^2 - y^2\). The other parts of the lemma can be easily checked. \(\square \)
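
The constraints of Lemma 2.2 can be tested on explicit configurations. The sketch below assumes the natural definitions \(x = \frac{1}{L}\sum _i t_i\), \(y^2 = \frac{1}{L}\sum _i t_i^2\) and \(z^2 = \frac{1}{L}\sum _i t_i t_{i+1}\) (indices modulo L), which are consistent with the description of \(X_2\) and \(X_1\) above. The helper names and sample chains are illustrative.

```python
def triplet(t):
    """Return (x, y^2, z^2) for a periodic configuration t (assumed definitions)."""
    L = len(t)
    x = sum(t) / L
    y2 = sum(v * v for v in t) / L
    z2 = sum(t[i] * t[(i + 1) % L] for i in range(L)) / L
    return x, y2, z2

def in_X(t, tol=1e-12):
    """Check y^2 >= x^2 and z^2 >= max(0, 2 x^2 - y^2)."""
    x, y2, z2 = triplet(t)
    return y2 >= x * x - tol and z2 >= max(0.0, 2.0 * x * x - y2) - tol

def saturates(t, tol=1e-12):
    """Check the equality z^2 = 2 x^2 - y^2 characterizing 2-periodic chains."""
    x, y2, z2 = triplet(t)
    return abs(z2 - (2.0 * x * x - y2)) <= tol

generic = [0.9, 1.3, 0.7, 1.1, 1.4, 0.8]   # not 2-periodic
dimerized = [1.3, 0.7] * 3                  # W = 1.0, delta = 0.3
uniform = [1.1] * 6                         # 1-periodic

print(in_X(generic), saturates(generic))      # True False
print(in_X(dimerized), saturates(dimerized))  # True True
print(in_X(uniform), saturates(uniform))      # True True
```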

Lemma 2.3

For any integer \(L > 2\) and all \(\theta \ge 0\), the minimizers of \({\widetilde{{{\mathcal {G}}}}}^{(L)}_\theta \) over X belong to \(X_2\).

Proof

Let us fix x and y, and look at the minimization over the variable z only. Setting \(Z:= z^2\), we see that

is concave. In addition, the derivative of \(\varphi \) at \(Z = 0\) equals

where we used that \(\Omega _L\) only has null elements on its diagonal. We deduce that \(\varphi \) is decreasing on \(\mathbb {R}_+\). So the minimizer of \({\widetilde{{{\mathcal {G}}}}}^{(L)}_\theta \) must saturate the lower bound constraint \(z^2 = \max \{ 0, 2x^2 - y^2\}\).

We now claim that the optimal triplet (x, y, z) satisfies \(2x^2 - y^2 \ge 0\). Assume otherwise that \(2x^2 - y^2 < 0\), hence \(z^2 = 0\). We have

This function is decreasing in x, so the optimal x saturates the constraint \(x^2 = y^2\). But in this case, we have \(2x^2 - y^2 = y^2 \ge 0 \), a contradiction. This proves that, for the optimizer, we have \(2 x^2 - y^2 \ge 0\), and \(z^2 = 2x^2 - y^2\). Finally, (x, y, z) belongs to \(X_2\). \(\square \)

Let \((x_*, y_*, z_*) \in X_2\) be the minimizer of \({\widetilde{{{\mathcal {G}}}}}_\theta ^{(L)}\), and let \(W \ge 0\) and \(\delta \ge 0\) be so that \(x_* = W\), \(y_*^2 = W^2 + \delta ^2\), and \(z_*^2 = W^2 - \delta ^2\). Let \({{\textbf {t}}}_*\) be one of the two 2-periodic states \(W \pm (-1)^i \delta \). We have \(T(\{ {{\textbf {t}}}_* \})^2 = \langle T(\{ {{\textbf {t}}}_* \})^2 \rangle \), which leads to the chain of inequalities

We therefore have equalities everywhere. Since only the 2-periodic states \(W \pm (-1)^i \delta \) give the optimal triplet \((x_*, y_*, z_*)\), they are the only minimizers. This proves that all minimizers of \({{\mathcal {F}}}_\theta ^{(L)}\) are 2-periodic. There are two dimerized minimizers if \(\delta > 0\), and a unique 1-periodic minimizer if \(\delta = 0\).

Remark 2.4

In the case of odd chains, we still have the inequality \(F_\theta ^{(L)} \ge G_\theta ^{(L)}\) in (11). However, the optimal triplet \((x_*, y_*, z_*)\) does not usually come from a state \(\{ {{\textbf {t}}}_* \}\): an odd chain cannot be dimerized. It can, however, come from such a state if \(\delta = 0\), that is, if \({{\textbf {t}}}_*\) is actually one-periodic. One can therefore prove that also for odd chains, minimizers become 1-periodic for large enough temperature.

\(\underline{\hbox {Existence of the critical temperature}.}\) Since all minimizers are 2-periodic, we can parametrize \({{\mathcal {G}}}_\theta ^{(L)}\) as a function of \((W, \delta )\) instead of \(\{ {{\textbf {t}}}\}\). So we write (in what follows, we normalize by L to get the energy per atom)

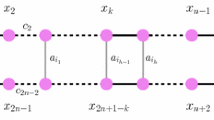

To compute the last trace, we compute the spectrum of \(\Omega _L\). We have, for all \(1 \le k \le L\),

So

This shows that

which is the expression given in (8). The function \(g_\theta \) appearing in Lemma 1.3 has a similar expression, but we replace the last Riemann sum by the corresponding integral.
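
The spectral computation above can be verified directly on a small chain. The sketch below assumes the convention \(T_{i,i+1} = T_{i+1,i} = t_i\) (indices modulo L) for the matrix in (1). It builds T for a dimerized configuration and checks that the Fourier vectors \(v_k = ({\textrm{e}}^{2 {\textrm{i}} \pi k m / L})_m\) are eigenvectors of \(T^2\) with eigenvalues \(4W^2\cos ^2(2k\pi /L) + 4\delta ^2\sin ^2(2k\pi /L)\). Helper names and sample values are illustrative.

```python
import cmath
import math

def hopping_matrix(t):
    """Periodic hopping matrix with T[i][i+1] = T[i+1][i] = t[i] (assumed convention)."""
    L = len(t)
    T = [[0.0] * L for _ in range(L)]
    for i in range(L):
        j = (i + 1) % L
        T[i][j] += t[i]
        T[j][i] += t[i]
    return T

def matvec(A, v):
    """Plain matrix-vector product."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def max_residual(W, delta, L):
    """Largest entry of T^2 v_k - lambda_k v_k over all Fourier modes k."""
    t = [W + (-1) ** i * delta for i in range(L)]
    T = hopping_matrix(t)
    worst = 0.0
    for k in range(1, L + 1):
        v = [cmath.exp(2j * math.pi * k * m / L) for m in range(L)]
        T2v = matvec(T, matvec(T, v))
        angle = 2.0 * math.pi * k / L
        lam = 4.0 * W * W * math.cos(angle) ** 2 + 4.0 * delta * delta * math.sin(angle) ** 2
        worst = max(worst, max(abs(a - lam * b) for a, b in zip(T2v, v)))
    return worst

print(max_residual(1.2, 0.3, 8) < 1e-12)  # True
```

The check works because, for a 2-periodic chain, \(T^2 = 2(W^2+\delta ^2) \mathbb {I}_L + (W^2 - \delta ^2) \Omega _L\) is a circulant matrix.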

First, we prove that for \(\theta \) large enough, the minimizer is 1-periodic (corresponding to \(\delta = 0\)).

Lemma 2.5

For all \(\theta \ge \frac{1}{\mu }\), the minimizer of \({{\mathcal {G}}}_\theta ^{(L)}\) satisfies \(\delta = 0\). The same holds for the function \(g_\theta \) (thermodynamic limit case).

Proof

We prove the result in the thermodynamic limit, but the proof works similarly at fixed L. Let \((W_1, 0)\) denote the minimizer of \(g_\theta \) among 1-periodic configurations (that is with the extra constraint that \(\delta = 0\)). Writing that \(\partial _W g_\theta (W_1, 0)= 0\), we obtain that

For any other configurations \((W, \delta )\), we write \(W = W_1 + \varepsilon \) and obtain that

Using that h is concave, we have \(h(a + b) - h(a) \le h'(a)b\), so, with \(a = W_1^2 \cos ^2(s) / \theta ^2\) and \(b = \left[ \delta ^2 \sin ^2(s) + (2W_1\varepsilon + \varepsilon ^2) \cos ^2( s) \right] /\theta ^2\), we get

Using (14), the term linear in \(\varepsilon \) vanishes. In addition, since \(h'' < 0\) on \(\mathbb {R}_+\), we have \(h'(x) \le ~h'(0)=~1\). This gives

The right-hand side is positive whenever \(\theta >\frac{1}{\mu }\), which proves the result. \(\square \)
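
The statement of Lemma 2.5 can be probed numerically on a finite chain. The sketch below assumes the closed form \(h_\theta (x) = 2\theta \ln (2\cosh (\sqrt{x}/(2\theta )))\) and the per-atom energy of (8). It checks on a grid that, at \(\theta = 1/\mu \), adding a dimerization \(\delta \) never lowers the energy at fixed W, and that at a much lower temperature a small \(\delta \) does lower it for \(L = 8\). Names, grids and parameter values are illustrative.

```python
import math

def h_theta(x, theta):
    """Assumed closed form h_theta(x) = 2*theta*ln(2*cosh(sqrt(x)/(2*theta)))."""
    u = math.sqrt(x) / (2.0 * theta)
    return 2.0 * theta * (u + math.log1p(math.exp(-2.0 * u)))

def g_finite(W, delta, mu, theta, L):
    """Free energy per atom of a dimerized chain with L atoms (assumed form)."""
    total = 0.0
    for k in range(1, L + 1):
        s = 2.0 * math.pi * k / L
        total += h_theta(4.0 * (W * math.cos(s)) ** 2 + 4.0 * (delta * math.sin(s)) ** 2, theta)
    return 0.5 * mu * ((W - 1.0) ** 2 + delta ** 2) - total / L

mu, L = 2.0, 10
theta_high = 1.0 / mu  # threshold temperature of Lemma 2.5

# At theta = 1/mu, delta = 0 is never beaten at fixed W (grid check only)
never_lower = all(
    g_finite(W, d, mu, theta_high, L) >= g_finite(W, 0.0, mu, theta_high, L) - 1e-12
    for W in [0.2 + 0.2 * i for i in range(15)]
    for d in [0.05 * j for j in range(1, 31)]
)
print(never_lower)  # True

# At low temperature and L = 8, a small dimerization lowers the energy
lowered = g_finite(1.2, 0.01, mu, 0.05, 8) < g_finite(1.2, 0.0, mu, 0.05, 8)
print(lowered)  # True
```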

In what follows, we define the critical temperature \(\theta _c = \theta _c(\mu )\) by

We define similarly \(\theta _c^{(L)} = \theta _c^{(L)}(\mu )\) for the case of finite chains.

\(\underline{\hbox {Study of the critical temperature in the case}\, L \in 2 \mathbb {N}.}\) We now study \(\theta _c^{(L)}\) with \(L = 2N\), \(N \ge 2\), and prove that it is strictly positive if \(L \equiv 0 \mod 4\), and that, if \(L \equiv 2 \mod 4\), there is \(\mu _c = \mu _c(L)\) so that \(\theta _c^{(L)}(\mu ) > 0\) iff \(\mu < \mu _c(L)\).

For fixed \(\theta \), any minimizing configuration \((W, \delta )\) satisfies the Euler–Lagrange equations

This gives the set of equations

Note that the second equation always admits the trivial solution \(\delta = 0\). This corresponds to the critical point among 1-periodic configurations. It is the unique solution if \(\theta \ge \theta _c^{(L)}\), but for \(\theta \in (0, \theta _c^{(L)})\), there are other critical points, corresponding to the dimerized configurations. Actually, as \(\theta \) varies, we expect two branches of solutions: the branch of 1-periodic configuration and the branch of dimerized configurations. These two branches cross only at \(\theta = \theta _c\) (see Fig. 1 (right)).

In order to focus on the branch of dimerized configurations, we factor out the \(\delta \) factor in the second equation. Now, \(\delta = 0\) is no longer a solution, unless we are exactly at the critical temperature \(\theta _c^{(L)}\). So, in order to find this critical temperature, we seek the solution, in \((W, \theta )\), of (we multiply the second equation by W for clarity)

Lemma 2.6

For all \(\mu > 0\), there is a unique solution \((W, \theta )\) of (16) in the case \(L \equiv 0\mod 4\), whereas if \(L \equiv 2\mod 4\), there is some value \(\mu _c:=\mu _c(L)\) such that (16) has a unique solution if \(\mu < \mu _c\) and no solution if \(\mu \ge \mu _c\). Moreover, in the latter case, \(\mu _c(L)\sim \frac{2}{\pi }\ln (L)\) as \(L \rightarrow \infty \).

Proof

We write \(L = 2N\) and note that the terms k and \(k + N\) give the same contribution. Taking the difference of the second and first equations of (16), we obtain

Recall that \(h'(t) = \frac{\tanh (\sqrt{t})}{\sqrt{t}}\) for \(t\ne 0\) and \(h'(0) = 1\). The point \(t = 0\) therefore plays a special role. The argument of \(h'\) equals 0 for \(k =\frac{N}{2}\), which happens only if \(N \equiv 0 \mod 2\) (that is \(L \equiv 0 \mod 4\)). In this case, the equation becomes, with \(x:= \frac{W}{\theta }\) (we write \(L = 2N = 4n\))

The function \({{\mathcal {J}}}_{2n}\) is smooth. The first sum is uniformly bounded for \(x \in \mathbb {R}_+\) while the second diverges, so \({{\mathcal {J}}}_{2n}(0) = 0\) and \({{\mathcal {J}}}_{2n}(+ \infty ) = + \infty \). We claim that \({{\mathcal {J}}}_{2n}\) is increasing. The intermediate value theorem then gives the existence and uniqueness of the solution of \({{\mathcal {J}}}_{2n}(x) = \mu \) on \(\mathbb {R}_+\). This gives \(\frac{W}{\theta } = {{\mathcal {J}}}_{2n}^{-1}(\mu )\). We then deduce, respectively, \(\theta \) and W from the first and second equations of (16). This proves that (16) has a unique solution. The corresponding temperature is the critical temperature \(\theta _c^{(L)}\).

It remains to prove that \({{\mathcal {J}}}_{2n}\) is increasing. Splitting the sum in (17) into 2 sums of size \((n-1)\), we get

Its derivative is given by

For all \(s \in [0, 1]\), the function

is positive (both terms are positive if \(s \in [0, 1/2]\), and both are negative if \(s \in [1/2, 1]\)). This shows that \({{\mathcal {J}}}_{2n}\) is increasing as wanted.

In the case \(N \equiv 1 \mod 2\) (that is \(L \equiv 2 \mod 4\)), the argument of \(h'\) is never null, and we simply have (we write \(L = 2N = 4n + 2\))

We claim again that \({{\mathcal {J}}}_{2n+1}\) is increasing (see below). However, we now have

If \(\mu \in (0, \mu _c(L))\), we can apply again the intermediate value theorem and deduce that the equation \({{\mathcal {J}}}_{2n+1}(x) = \mu \) has the unique solution \(x = {{\mathcal {J}}}_{2n+1}^{-1}(\mu )\). We deduce as before that there is a unique solution of system (16) in this case. If instead \(\mu > \mu _c(L)\), then the system (16) has no solution.

Let us prove that \({{\mathcal {J}}}_{2n+1}\) is increasing (this will eventually prove that \(\mu _c(L) > 0\)). Its derivative is given by

In the last equality, we isolated the \(k = 2n+1\) term, and used the change of variable \(k' = 2n+1 - k\) for \(n+1 \le k \le 2n\). When \(1 \le k \le n/2\), we have \(\cos \left( \frac{2k\pi }{2n+1}\right) \ge 0\), while \(\frac{1}{\sqrt{2}} \le \cos (\tfrac{k \pi }{2n+1}) \le 1\). On the other hand, if \(n/2 \le k \le n\), we have \(\cos \left( \frac{2k\pi }{2n+1}\right) \le 0\), and \(0 \le \cos (\tfrac{k \pi }{2n+1}) \le \frac{1}{\sqrt{2}}\). In both cases, we deduce that

Summing over k, and using that

we obtain the lower bound

which proves that \({{\mathcal {J}}}_{2n+1}\) is increasing.

Finally, we estimate \(\mu _c(L)\), defined in (18). We rewrite \(\mu _c(L)\) as

We recognize a Riemann sum in the first term. Since the function f is integrable on [0, 1] (there is no singularity at \(s = \frac{1}{2}\)), this term converges to the integral of f. For the second term, we recognize a harmonic sum. More specifically, we have

This proves that \(\mu _c(L) \sim \frac{2}{\pi }\log (L)\) at \(+\infty \) and completes the proof. \(\square \)
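
The dichotomy between the two parities of N can be illustrated numerically. The sketch below uses a reconstruction of the function obtained by subtracting the two equations of (16), namely \({{\mathcal {J}}}_N(x) = -\frac{2x}{N}\sum _{k=1}^N h'(x^2\cos ^2(k\pi /N)) \cos (2k\pi /N)\) with \(h'(y) = \tanh (\sqrt{y})/\sqrt{y}\). This form is an assumption derived from the per-atom energy (8), and its normalization may differ from the one used in (17) and (18). Function names are illustrative.

```python
import math

def h_prime(y):
    """h'(y) = tanh(sqrt(y)) / sqrt(y), extended by h'(0) = 1."""
    if y == 0.0:
        return 1.0
    r = math.sqrt(y)
    return math.tanh(r) / r

def J(x, N):
    """Assumed form of the function whose level set J_N(x) = mu gives x = W / theta."""
    total = 0.0
    for k in range(1, N + 1):
        c = math.cos(k * math.pi / N)
        total += h_prime(x * x * c * c) * math.cos(2.0 * k * math.pi / N)
    return -2.0 * x * total / N

def mu_c(N):
    """Limit of J_N at infinity for odd N (finite because cos(k pi / N) never vanishes)."""
    total = 0.0
    for k in range(1, N + 1):
        total += math.cos(2.0 * k * math.pi / N) / abs(math.cos(k * math.pi / N))
    return -2.0 * total / N

# N = 3 (L = 6): J_3 is bounded, with limit mu_c = 2/3 under this normalization
print(mu_c(3), J(60.0, 3))

# N = 2 (L = 4): J_2(x) = x - tanh(x) is unbounded
print(J(3.0, 2), 3.0 - math.tanh(3.0))
```

With this normalization, \({{\mathcal {J}}}_N(x) = \mu \) has a solution for every \(\mu > 0\) when N is even, and only for \(\mu \) below the limit value when N is odd.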

3 Proofs in the Thermodynamic Model

We now focus on the thermodynamic model.

3.1 Proof of Lemma 1.3: Justification of the Thermodynamic Model

First, we show that this model is indeed the limit of the finite chain model as \(L \rightarrow \infty \). We denote by \(f_\theta ^{(2N)}\) the minimum of \(g_\theta ^{(2N)}\) (so \(f_\theta ^{(2N)} = \frac{1}{2N} F_\theta ^{(2N)}\)) and by \({\widetilde{f}}_\theta \) the minimum of \(g_\theta \). Our goal is to prove that \({\widetilde{f}}_\theta = f_\theta \), where we recall that \(f_\theta := \liminf _N f_\theta ^{(2N)}\).

We denote by \((W_{2N}, \delta _{2N})\) the optimizer of \(g_\theta ^{(2N)}\), and by \((W_*, \delta _*)\) the one of \(g_\theta \). First, from the pointwise convergence \(g_\theta ^{(2N)}(W, \delta ) \rightarrow g_\theta (W, \delta )\), we obtain

For the other inequality, we use that \(\cosh (x) \le {\textrm{e}}^{x}\) for \(x \ge 0\), so \(h_\theta (x) \le \sqrt{x} + 2\theta \ln (2)\).

In particular, \(g_\theta ^{(2N)}\) is lower bounded and coercive, uniformly in N. So if \((W_{2N}, \delta _{2N})\) denotes the optimizer of \(g_\theta ^{(2N)}\), the sequence \((W_{2N}, \delta _{2N})\) is bounded in \(\mathbb {R}^2_+\). Up to extracting a subsequence (not relabeled), we may assume that

We then have

The first limit converges to \(g_\theta (W_\infty , \delta _\infty )\), by continuity of the \(g_\theta \) functional. For the second limit, we use that \(g_\theta ^{(2N)} - g_\theta \) is the difference between an integral and a corresponding Riemann sum. If \({{\mathcal {I}}}_N(s)\) denotes the integrand, this difference is controlled by \(\frac{c}{2N} \sup _{s} \Vert {{\mathcal {I}}}_N'(s) \Vert \). In our case, \({{\mathcal {I}}}_N(s) = h_\theta (4 W_{2N}^2 \cos ^2(\pi s) + 4 \delta _{2N}^2 \sin ^2(\pi s))\), whose derivative is uniformly bounded in N, since \((W_{2N}, \delta _{2N})\) is bounded. This proves that the last limit goes to zero, hence

We conclude that \(f_\theta = {\widetilde{f}}_\theta \). In particular, by uniqueness of the minimizer of \(g_\theta \), we must have \((W_\infty , \delta _\infty ) = (W_*, \delta _*)\), and the whole sequence \((W_{2N}, \delta _{2N})\) converges to \((W_*, \delta _*)\).

3.2 Proof of Theorem 1.4: Estimation of the Critical Temperature

We now study the properties of \(\theta _c\), the critical temperature in the thermodynamic limit. Reasoning as in the finite L case, the critical temperature \(\theta _c\) can be found by solving the equations in \((W, \theta )\) (compare with (16))

Using again the expression \(h'(t):= \frac{\tanh (\sqrt{t})}{\sqrt{t}}\), and splitting the integrals between \((0, 2 \pi )\) into four of size \(\pi /2\), this is also

Let us prove that this system always admits a unique solution. The proof is similar to the previous \(L \equiv 0 \mod 4\) case. Taking the difference of the two equations gives, with \(x:= \frac{W}{\theta }\),

The function \({{\mathcal {J}}}\) is differentiable on \(\mathbb {R}_+\), with derivative given by

The integrand is positive for all \(s \in [0, \pi /4]\), so \({{\mathcal {J}}}\) is a strictly increasing function on \(\mathbb {R}_+\), and since \({{\mathcal {J}}}([0, +\infty )) = [0, +\infty )\), we get \(x = \frac{W}{\theta } = {{\mathcal {J}}}^{-1}(\mu )\). The first equation of (19) gives

This proves that \(\theta _c\) is well defined and depends only on \(\mu \).
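
The construction of \(\theta _c(\mu )\) can be followed numerically. The sketch below assumes that the system (19) takes the form \(\mu (W - 1) = \frac{4}{\pi }\int _0^{\pi /2} \tanh (x\cos s)\cos s \, \textrm{d}s\) and \(\mu W = \frac{4}{\pi }\int _0^{\pi /2} \tanh (x \cos s)\frac{\sin ^2 s}{\cos s} \, \textrm{d}s\) with \(x = W/\theta \), as derived from the Euler–Lagrange equations of the assumed \(g_\theta \). It solves \({{\mathcal {J}}}(x) = \mu \) by bisection and then recovers W and \(\theta \). The integrals use a midpoint rule; all names are illustrative.

```python
import math

def midpoint(f, a, b, n=2000):
    """Midpoint quadrature; the nodes avoid the endpoint s = pi/2 where cos(s) = 0."""
    step = (b - a) / n
    return step * sum(f(a + (i + 0.5) * step) for i in range(n))

def S(x):
    """Right-hand side of the (assumed) second equation, equal to mu * W."""
    return (4.0 / math.pi) * midpoint(
        lambda s: math.tanh(x * math.cos(s)) * math.sin(s) ** 2 / math.cos(s),
        0.0, 0.5 * math.pi)

def K(x):
    """Right-hand side of the (assumed) first equation, equal to mu * (W - 1)."""
    return (4.0 / math.pi) * midpoint(
        lambda s: math.tanh(x * math.cos(s)) * math.cos(s),
        0.0, 0.5 * math.pi)

def J(x):
    """Difference of the two equations: J(x) = mu determines x = W / theta."""
    return S(x) - K(x)

def critical_temperature(mu):
    """Return (theta_c, W) by solving J(x) = mu with bisection."""
    lo, hi = 0.0, 1.0
    while J(hi) < mu:          # bracket the root; J is increasing and unbounded
        hi *= 2.0
    for _ in range(50):
        mid = 0.5 * (lo + hi)
        if J(mid) < mu:
            lo = mid
        else:
            hi = mid
    x = 0.5 * (lo + hi)
    W = S(x) / mu
    return W / x, W

for mu in (1.0, 2.0, 4.0):
    theta_c, W = critical_temperature(mu)
    print(mu, theta_c, W)
```

In this sketch the computed \(\theta _c(\mu )\) stays below \(1/\mu \), in agreement with Lemma 2.5, and decreases as \(\mu \) grows.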

We now estimate this critical temperature. We are interested in the large \(\mu \) limit. First, since \(\mathbb {R}\ni u\mapsto \tanh (u)\) is a bounded function, the first equation shows that \(\mu (W - 1)\) is uniformly bounded in \(\mu \), so \(W = 1 + O(\mu ^{-1})\) as \(\mu \rightarrow \infty \). Then, we must have \(\theta \rightarrow 0\) as \(\mu \rightarrow \infty \) in order to satisfy the second equation. Using the dominated convergence in the first integral gives

so the first equation gives

We now evaluate the integral of the right-hand side in the second equation, in the limit \(\theta \rightarrow 0\). It is convenient to make the change of variable \(s \mapsto \pi /2 - s\), so we compute

In order to evaluate \(I(\theta )\) as \(\theta \rightarrow 0\), we write \(I = I_1 + I_2\) with

For the first integral, we make the change of variable \(u = \frac{W}{\theta } \sin (s)\) and get

The value of \(c_1\) is computed numerically to be \(c_1 \approx 0.8188\). For the second integral \(I_2\), we remark that the integrand is uniformly bounded in \(\theta \) and s, so \(I_2 = O(1)\). Actually, since \(\theta \rightarrow 0\), we have by the dominated convergence theorem that

Altogether, we obtain that

Together with the second equation of (19), we obtain

which gives, as wanted, in the limit \(\mu \rightarrow \infty \)

3.3 Proof of Theorem 1.5: Study of the Phase Transition

In the previous section, we found the critical temperature. We now study the bifurcation of \(\delta \) around this temperature. The critical points of \(g_\theta \) are given by the Euler–Lagrange equations

Recall that one can remove the 1-periodic minimizers by factoring out \(\delta \) in the second equation. This gives a set of equation involving \(\delta \) through the variable \(\Delta := \delta ^2\) only. In what follows, we fix \(\mu \), and set (we multiply the equations by \(\theta /W\) in order to have simpler computations afterward)

Recall that \({{\mathcal {F}}}\left( \theta _c; (W_*, 0) \right) = (0, 0)\), where \(W_*\) is the optimal W at the critical temperature. If \({{\mathcal {F}}}\left( \theta ; (W, \Delta ) \right) = (0, 0)\) with \(\Delta > 0\), the configurations \((W, \pm \sqrt{\Delta })\) are minimizers of \(g_\theta \). If \({{\mathcal {F}}}\left( \theta ; (W, \Delta ) \right) = (0, 0)\) with \(\Delta < 0\), it does not correspond to a physical solution.

We want to apply the implicit function theorem for \({{\mathcal {F}}}\) at the point \((\theta _c; (W_*, 0))\). In order to do so, we first record all derivatives. We denote by \({{\mathcal {F}}}= ({{\mathcal {F}}}_1, {{\mathcal {F}}}_2)\) the components of \({{\mathcal {F}}}\). The derivatives of \({{\mathcal {F}}}\), evaluated at \(\Delta = 0\), \(\theta = \theta _c\) and \(W = W_*\) are given by

where we set (we split the integral in four parts of size \(\pi /2\))

Since h is concave, A, B and C are negative. In addition, by Cauchy–Schwarz, we have

The Jacobian \(J:= \left( \partial _{(W, \Delta )} {{\mathcal {F}}}\right) (\theta _c; (W_*, 0))\) is of the form

Since \(C < 0\) and \(B^2 - AC < 0\), we have \(\det J > 0\), so J is invertible. We can therefore apply the implicit function theorem for \({{\mathcal {F}}}\) at \((\theta _c, (W_*, 0))\). There is a function \(\theta \mapsto (W(\theta ), \Delta (\theta )) \) so that, locally around \((\theta _c, (W_*, 0))\), we have

The derivatives \((W'(\theta ), \Delta '(\theta ))\) are given by
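In generic form, these derivatives follow from differentiating the identity \({{\mathcal {F}}}(\theta ; (W(\theta ), \Delta (\theta ))) = (0, 0)\) with respect to \(\theta \). The block below is only the standard implicit-function relation, written with the Jacobian \(J\) defined above; it does not assume any explicit expression for the entries of \(J\) or of \(\partial _\theta {{\mathcal {F}}}\) in terms of A, B and C.

```latex
% Chain rule applied to F(theta; (W(theta), Delta(theta))) = (0, 0) at theta = theta_c.
(\partial_\theta \mathcal{F})(\theta_c; (W_*, 0))
  + J \begin{pmatrix} W'(\theta_c) \\ \Delta'(\theta_c) \end{pmatrix}
  = \begin{pmatrix} 0 \\ 0 \end{pmatrix},
\qquad \text{hence} \qquad
\begin{pmatrix} W'(\theta_c) \\ \Delta'(\theta_c) \end{pmatrix}
  = - J^{-1} \, (\partial_\theta \mathcal{F})(\theta_c; (W_*, 0)).
```

The invertibility of \(J\) established above is exactly what makes the right-hand side well defined.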

This gives

We claim that \(B \ge A\) (for the proof see below). This shows that \(\Delta '(\theta _c) < 0\). So, restoring the variable \(\delta ^2\), we have

It remains to prove that \(B \ge A\). This comes from the fact that \(h''\) is increasing and negative. First, we notice that \(|A|\) and \(|C|\) are of the form

with \(f(s):= \left| h''(W_*^2 \cos ^2(s)/\theta _c^2) \right| \) and \(g(s):= \cos ^4(s)\). The functions f and g are both decreasing on \([0, \frac{\pi }{2}]\). By re-arrangement, we deduce that \(| A | > | C |\). Actually, we have

Together with Cauchy–Schwarz in (21), this gives \(| B |^2 \le | A | \cdot | C | < | A |^2\). Since A and B are negative, we get \(B > A\), as wanted. This concludes the proof of Theorem 1.5.
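For reference, the rearrangement step used in this argument can be written out as follows. This is a sketch under an assumption: it reads \(|A|\) and \(|C|\) as proportional, with the same positive constant, to \(\int _0^{\pi /2} f(s) g(s) \, {\textrm{d}}s\) and \(\int _0^{\pi /2} f(s) g(\frac{\pi }{2} - s) \, {\textrm{d}}s\) respectively, which the text does not state explicitly. The identity itself holds for any integrable f and g.

```latex
% Split [0, pi/2] at pi/4 and substitute s -> pi/2 - s on the second half.
\int_0^{\pi/2} f(s) \Bigl[ g(s) - g\bigl(\tfrac{\pi}{2} - s\bigr) \Bigr] \, \mathrm{d}s
= \int_0^{\pi/4} \Bigl[ f(s) - f\bigl(\tfrac{\pi}{2} - s\bigr) \Bigr]
                 \Bigl[ g(s) - g\bigl(\tfrac{\pi}{2} - s\bigr) \Bigr] \, \mathrm{d}s
\;\ge\; 0 .
```

When f and g are both decreasing on \([0, \frac{\pi }{2}]\), the two brackets on the right-hand side are nonnegative on \([0, \frac{\pi }{4}]\), and the inequality is strict as soon as both are strictly decreasing.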

References

Bach, V., Lieb, E.H., Solovej, J.P.: Generalized Hartree-Fock theory and the Hubbard model. J. Stat. Phys. 76(1), 3–89 (1994)

Fröhlich, H.: On the theory of superconductivity: the one-dimensional case. Proc. R. Soc. Lond. Ser. A. Math. Phys. Sci. 223(1154), 296–305 (1954)

Garcia Arroyo, M., Séré, E.: Existence of kink solutions in a discrete model of the polyacetylene molecule. Preprint hal-00769075 (2012)

Jones, H.: Applications of the Bloch theory to the study of alloys and of the properties of bismuth. Proc. R. Soc. Lond. Ser. A-Math. Phys. Sci. 147(861), 396–417 (1934)

Kennedy, T., Lieb, E.H.: Proof of the Peierls instability in one dimension. In: Condensed Matter Physics and Exactly Soluble Models, pp. 85–88. Springer (2004)

Kivelson, S., Heim, D.: Hubbard versus Peierls and the Su-Schrieffer-Heeger model of polyacetylene. Phys. Rev. B 26(8), 4278 (1982)

Lieb, E.H., Nachtergaele, B.: Stability of the Peierls instability for ring-shaped molecules. Phys. Rev. B 51(8), 4777 (1995)

Lieb, E.H., Schultz, T., Mattis, D.: Two soluble models of an antiferromagnetic chain. Ann. Phys 16(3), 407–466 (1961)

Macris, N., Nachtergaele, B.: On the flux phase conjecture at half-filling: an improved proof. J. Stat. Phys. 85(5–6), 745–761 (1996)

Peierls, R.E.: Quantum Theory of Solids. Clarendon Press (1996)

Pouget, J.P., Kagoshima, S., Schlenker, C., Marcus, J.: Evidence for a Peierls transition in the blue bronzes K0.30MoO3 and Rb0.30MoO3. J. de Phys. Lettres 44(3), 113–120 (1983)

Su, W.P., Schrieffer, J.R., Heeger, A.: Solitons in polyacetylene. Phys. Rev. Lett. 42(25), 1698 (1979)

Communicated by Vieri Mastropietro.

Appendix A: Gain of Energy in the Thermodynamic Limit

In this section, we prove that the gain of energy due to Peierls dimerization is exponentially small in \(\mu \). We focus on the thermodynamic limit case (although the proof is similar in the \(L \in 2 \mathbb {N}\) case). We also focus only on the null temperature case \(\theta = 0\). In this case, the thermodynamic energy reads

We introduce

In other words, \(f_0\) is the minimum of \(g_0\) over 2-periodic (and all) configurations, and \(f_{0, \textrm{per}}\) is the minimum over 1-periodic configurations. We prove the following

Theorem A.1

There is \(C > 0\) such that, for all \(\mu \) large enough,

In other words, the energy gained by the Peierls distortion is exponentially small in the parameter \(\mu \). The first inequality states that in the thermodynamic limit at null temperature, the minimizers are always dimerized, as first proved by Kennedy and Lieb [5].

Proof

Let us first compute \(W_1\), the optimizer of \(g_0(W, 0)\). This is simply the minimum of

The minimizer satisfies \(\mu (W_1 - 1) = \frac{4}{\pi }\), hence \(W_1 = 1 + \frac{4}{\pi \mu }\). In particular,
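As a worked check of this optimality condition, assume that the 1-periodic energy has the form \(g_0(W, 0) = \frac{\mu }{2}(W - 1)^2 - \frac{4}{\pi } W\). This normalization is an assumption: it is the simplest function whose derivative reproduces the stated condition \(\mu (W_1 - 1) = \frac{4}{\pi }\), and the true energy could differ from it by an additive constant.

```latex
% Assumed form: g_0(W, 0) = (mu/2) (W - 1)^2 - (4/pi) W.
\partial_W g_0(W, 0) = \mu (W - 1) - \frac{4}{\pi} = 0
\;\Longleftrightarrow\;
W_1 = 1 + \frac{4}{\pi \mu},
\qquad
g_0(W_1, 0) = \frac{\mu}{2} \Bigl( \frac{4}{\pi \mu} \Bigr)^2
             - \frac{4}{\pi} \Bigl( 1 + \frac{4}{\pi \mu} \Bigr)
           = - \frac{4}{\pi} - \frac{8}{\pi^2 \mu}.
```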

We now compute the energy gain from the breaking of periodicity. For \((W, \delta )\) a trial pair, we write \(W = W_1 + \varepsilon \). We assume that \(g_0(W,\delta )< g_0(W_1,0)\). Then

To compute the integral, we make the change of variable \(u=\cos (s)\), and get that the integral equals

Using that

where E is a complete elliptic integral of the second kind, we get

We now minimize the right-hand side. For large \(\mu \), we have \(W_1 \approx 1\) and the minimization in \(\varepsilon \) gives \(\varepsilon = 0\). So

We optimize the right-hand side by taking \(\delta = {\textrm{e}}^{-(\frac{\pi }{4}\mu + \frac{1}{2})} \), and this completes the proof. \(\square \)
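The choice of \(\delta \) in this last step can be checked on a model profile. The sketch below assumes that the right-hand side being optimized has the form \(\varphi (\delta ) = \delta ^2 \left( \frac{\mu }{2} + \frac{2}{\pi } \ln \delta \right) \). This form is a hypothesis, chosen because its stationary point is exactly \(\delta = {\textrm{e}}^{-(\frac{\pi }{4}\mu + \frac{1}{2})}\); the names `phi`, `delta_star` and `phi_min` are illustrative.

```python
import math


def phi(delta, mu):
    """Assumed profile: delta^2 * (mu/2 + (2/pi) * ln(delta))."""
    return delta**2 * (0.5 * mu + (2.0 / math.pi) * math.log(delta))


def delta_star(mu):
    """Stationary point of phi; coincides with the delta chosen in the proof."""
    return math.exp(-(math.pi * mu / 4.0 + 0.5))


def phi_min(mu):
    """Closed-form value phi(delta_star) = -(1/pi) * exp(-(pi*mu/2 + 1))."""
    return -math.exp(-(math.pi * mu / 2.0 + 1.0)) / math.pi


mu = 4.0
d = delta_star(mu)
print(f"delta* = {d:.6e}, phi(delta*) = {phi(d, mu):.6e}, closed form = {phi_min(mu):.6e}")
```

For this assumed profile, the minimal value is negative and of order \({\textrm{e}}^{-\frac{\pi }{2} \mu }\), which is consistent with a gain of energy that is exponentially small in \(\mu \).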

Cite this article

Gontier, D., Kouande, A.E.K. & Séré, É. Phase Transition in the Peierls Model for Polyacetylene. Ann. Henri Poincaré 24, 3945–3966 (2023). https://doi.org/10.1007/s00023-023-01299-w
