Abstract

Background

Implementation of the new competency-based post-graduate medical education curriculum has renewed the push by medical regulatory bodies in Canada to strongly advocate and/or mandate continuous quality improvement (cQI) for all physicians. Electronic anesthesia information management systems contain vast amounts of information, yet it is unclear how this information could be used to promote cQI among practicing anesthesiologists. The aim of this study was to create a refined list of meaningful anesthesia quality indicators to assist anesthesiologists in the continuous self-assessment of, and feedback on, their practice.

Methods

An initial list of quality indicators was created through a literature search. A modified Delphi (mDelphi) method was used to rank these indicators and to reach consensus on those considered most relevant. Fourteen anesthesiologists representing different regions across Canada participated in the panel.

Results

The initial list contained 132 items, and through 3 rounds of mDelphi the panelists selected 56 items from the list that they believed to be top priority. In the fourth round, a subset of 20 of these indicators was ranked as highest priority. The list included items related to process, structure, and outcome.

Conclusion

The ranked list of anesthesia quality indicators from this modified Delphi study could aid clinicians in the individual practice assessments required for the continuous quality improvement mandated by Canadian medical regulatory bodies. The feasibility and usability of these quality indicators, and the significance of process versus outcome measures in assessment, are areas for future research.

Background

Continuing professional development (CPD) refers to the ongoing process of developing the new knowledge, skills, and competencies necessary to maintain and improve professional practice. Continuing quality improvement (cQI) is a systematic approach by which professionals assess and improve the quality of care: data are collected on indicators of quality and used to identify areas for improvement and to develop strategies to address them [1]. Anesthesia quality indicators are specific measures used to assess clinical care in anesthesia. Ongoing learning and professional development, in which practice changes are informed by regular feedback on quality indicators that are transparent, reliable, evidence-based, measurable, and improvable, are critical to ensuring that anesthesiologists continue to provide high-quality, relevant care that meets their patients’ needs.

Recent changes in post-graduate medical education (PGME) training in Canada have necessitated changes in CPD requirements for practicing clinicians. While competency-based education has been fully adopted by anesthesia PGME training programs in Canada, its implementation in continuing education beyond PGME is at a much earlier stage. The current Royal College of Physicians and Surgeons of Canada (RCPSC) Maintenance of Certification (MOC) program, the national CPD program for specialists, states that “All licensed physicians in Canada must participate in a recognized revalidation process in which they demonstrate their commitment to continued competent performance in a framework that is fair, relevant, inclusive, transferable, and formative” [1]. This mandate for cQI applies not only to physicians but also to the national specialty societies that provide CPD resources to their physician members.

The Federation of Medical Regulatory Authorities of Canada (FMRAC) published a document titled “Physician Practice Improvement” in 2016, with the goal of supporting physicians in their continuous commitment to improving their practice [2]. Its suggested five-step iterative process involves (1) understanding your practice, (2) assessing your practice, (3) creating a learning plan, (4) implementing the learning plan, and (5) evaluating the outcomes.

In 2018, the CPD report of the Future of Medical Education in Canada (FMEC) project was published [3]. Its principle #2 states that “The new continuing professional development (CPD) system must be informed by scientific evidence and practice-based data” and should “…encourage practitioners to look outward, harness the value of external data, and focus on how these data should be received and used”, stressing the importance of the data coming from physicians’ own practices.

Although these reports make clear the link between competency-based CPD for physicians in practice and the importance of gathering and analyzing physician-specific data, they provide guidance neither on which data are relevant to anesthesia nor on how to gather them. While many national organizations, including the Canadian Anesthesiologists’ Society, publish Guidelines for the Practice of Anesthesia [4], these guidelines are distinct from practice quality indicators. Internationally, national anesthesia specialty societies and safety groups have published lists of anesthesia quality indicators, but the evidence for many of these indicators is weak and not broad-based. Haller et al. published a systematic review of quality indicators in anesthesia in 2009; however, its focus was neither physician CPD nor cQI [5]. The goal of this study is also distinct from that of the recent Standardized Endpoints in Perioperative Medicine and Core Outcome Measures in Perioperative and Anesthetic Care (StEP-COMPAC) initiative, which focused on establishing clear outcome definitions for clinical trials [6,7,8,9,10] rather than for physician performance improvement.

Therefore, there is currently a need for a list of quality indicators relevant to physicians’ goals of continuing quality improvement and ongoing professional development. Furthermore, as electronic anesthesia information management systems (AIMS) become ubiquitous, it is essential that a list of indicators relevant to individuals and the anesthesia community be developed to advance the goal of competency-based CPD. Ideally, these indicators would be readily extractable from an AIMS. The purpose of this study was to create a list of anesthesia quality indicators to help anesthesiologists guide self-assessment and continuing quality improvement.

Methods

This study received Johns Hopkins Institutional Review Board application acknowledgement (HIRB00008519) on May 27, 2019.

The original Delphi method, first described by Dalkey and Helmer in 1962 [11], was developed to elicit specific information for United States national defense from a panel of selected experts, starting with an open questionnaire. The modified Delphi technique was used in this study to streamline the time and effort required of participants; the modification consisted of starting with a pre-selected set of items identified by a literature search rather than with an open questionnaire.

The literature search was performed with the help of a medical health informationist and covered literature published between 2009 and 2019 in PubMed, including Ovid MEDLINE and Cochrane content, using the search protocol outlined in Supplementary Table S1.

Retrieved articles were reviewed by the principal author to determine their relevance to the topic. Inclusion criteria included items deemed to be anesthesia quality indicators in systematic reviews completed within 10 years of the study start date, anesthesia quality indicators currently in use in Canadian academic institutions, anesthesia quality and safety indicators in published articles in peer-reviewed journals, anesthesia quality indicators identified in the Anesthesia Quality Institute National Anesthesia Clinical Outcomes Registry, and any additional items generated by the panel. The list of anesthesia quality indicators was reviewed by the second author prior to distribution. The focus on the last ten years of published data helped ensure that the indicators were the most up to date available.

Selection of the Delphi panel was based on a stratified random sampling technique [11]. Anesthesiologists representing the different regions across Canada were identified and approached based on their active involvement in the Canadian Anesthesiologists’ Society Continuing Education & Professional Development Committee, Quality & Patient Safety Committee, Standards Committee, Association of Canadian University Departments of Anesthesia Education Committee, the Royal College of Physicians and Surgeons of Canada Specialty Committee in Anesthesiology, or academic involvement. A minimum of 12 participants was sought to ensure validity of the responses [12]. Written informed consent was obtained from all participants.

The survey was created using a matrix table question type with a 2-point binary scale (agree/disagree) and a single answer option. The survey was optimized for mobile devices, and each item had an adjacent textbox for comments. Additional items and general comments were solicited at the end of each survey round. A reiteration of the study purpose, research questions, and instructions was emailed to participants along with an anonymous link to the survey. The surveys required 10 to 15 min to complete. Panelists were given a window of 2 weeks to complete each survey round, with 4 weeks between rounds.

All responses were gathered anonymously and tallied by Qualtrics survey collection and analysis software (Johns Hopkins University access). Consensus was a priori defined as agreement of greater than 70% of the group. With 14 panelists, a consensus was equivalent to 10 or more points on any given item. Subsequent Delphi rounds were planned to continue until stability with less than 15% change in responses from the previous round was achieved. Items that reached consensus would be removed and not recirculated. New items generated by the panelists, items that did not reach consensus, and panelist comments were shared anonymously in subsequent rounds.
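The a priori consensus rule can be made concrete with a short calculation. The Python sketch below is illustrative only: the function names are hypothetical, it assumes the denominator is the number of panelists who answered a given item (the text states the threshold only for the full panel of 14), and `is_stable` is one possible reading of the 15% stopping rule rather than the study's documented procedure. Because consensus requires strictly more than 70% agreement, the smallest qualifying count is ⌊0.7n⌋ + 1 votes, which gives 10 of 14 as stated above.

```python
"""Hypothetical sketch of the a priori Delphi consensus rule (> 70%)."""

CONSENSUS_NUM, CONSENSUS_DEN = 7, 10  # 70% threshold as an exact fraction
STABILITY_THRESHOLD = 0.15            # assumed reading of the "< 15% change" rule


def min_votes_for_consensus(respondents: int) -> int:
    """Smallest vote count strictly greater than 70% of respondents."""
    return (CONSENSUS_NUM * respondents) // CONSENSUS_DEN + 1


def classify_item(agree: int, disagree: int) -> str:
    """Return 'accept', 'reject', or 'no consensus' for one item.

    Assumes the denominator is the number of panelists who answered the item.
    Integer comparison avoids floating-point error at the 70% boundary.
    """
    respondents = agree + disagree
    if respondents == 0:
        return "no consensus"
    if CONSENSUS_DEN * agree > CONSENSUS_NUM * respondents:
        return "accept"
    if CONSENSUS_DEN * disagree > CONSENSUS_NUM * respondents:
        return "reject"
    return "no consensus"


def is_stable(prev_agree_share: float, curr_agree_share: float) -> bool:
    """Assumed stopping rule: the item's agreement share moved < 15 points."""
    return abs(curr_agree_share - prev_agree_share) < STABILITY_THRESHOLD


if __name__ == "__main__":
    print(min_votes_for_consensus(14))          # 10, matching the text
    print(classify_item(agree=10, disagree=3))  # accept (10 of 13 is about 77%)
    print(classify_item(agree=7, disagree=3))   # no consensus (exactly 70%)
```

Working in integer arithmetic keeps an item at exactly 70% from being misclassified by floating-point rounding.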

Results

A total of 28 articles published on anesthesia quality indicators from 2009 to 2019 were identified. A subset of these articles was useful for item generation [5,6,7,8,9,10, 13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30], including several systematic reviews [5, 6, 8,9,10, 21, 25, 30]. Review of the American Society of Anesthesiologists Anesthesia Quality Institute and Wake Up Safe websites, as well as communication with anesthesia quality experts (separate from the study panel) from two academic centers, provided additional information. A total of 132 anesthesia quality indicators were identified for the initial round of the study. These indicators are presented in Supplementary Table S2.

Twenty-one Canadian anesthesiologists were approached, and fourteen consented to participate. A consent form was emailed to those who expressed interest in participating, and those who returned a signed consent form were included in the study.

An expert is a person who has a high degree of skill and knowledge in a particular field or subject, acquired through training, education, and experience. Experts are considered authoritative and capable of providing valuable advice and guidance in their area of expertise. The members of the expert panel for this study were identified based on their ongoing involvement with the Canadian Anesthesiologists’ Society (CAS) Continuing Education and Professional Development Committee, the CAS Quality & Patient Safety Committee, the CAS Standards Committee, the Canadian Journal of Anesthesia editorial board, the Royal College of Physicians & Surgeons of Canada Specialty Committee in Anesthesiology, the Association of Canadian University Departments of Anesthesia Education Committee, and University of Toronto Department of Anesthesiology & Pain Medicine faculty.

This expert panel was representative of the different regions across Canada (British Columbia 1; Alberta 2; Manitoba 3; Ontario 4; Quebec 1; Nova Scotia 1; Newfoundland 2). The group spanned all levels of practice, with 2 members in practice for < 5 years, 2 members in practice for 5 to 10 years, and the remaining 10 members in practice for > 10 years. There were 7 self-identified females and 7 self-identified males on the panel (Table 1, Panelist Demographics).

Iterations

For Rounds 1 through 3, expert panelists were given the following instructions: “The following items are elements of quality in anesthesia care. Please evaluate each item or event to determine if you think it is reasonable and appropriate for use as a measure of an individual anesthesiologist’s practice by ticking ‘agree’ or ‘disagree’.”

Round 1

One hundred thirty-two indicators were circulated to the panel in the initial round. Thirteen out of 14 participants (93%) responded to the survey. Item response rate variability: 121 items had 13 respondents, 10 items had 12 respondents, and 1 item had 11 respondents. Consensus (> 70%) was achieved for 85 items (83 accept; 2 reject). The item with only 11 responses reached consensus to reject. The 85 items that reached consensus were removed from the list, leaving 47 items. Combining the 47 remaining items with 9 new items generated by the panel gave a total of 56 items for circulation in the next round.

Round 2

Fifty-six items were circulated. Twelve out of 14 participants (86%) responded. Item response rate variability: 50 items had 12 respondents; 6 items had 11 respondents. Consensus (> 70%) was achieved for 13 items (11 accept; 2 reject). The 13 items that reached consensus were removed from the list, leaving 43 items. Because the process produced items that were conceptually duplicated but differently worded, both authors reviewed and curated the list to combine duplicates without adding or removing any concepts, condensing it to 37 items. For example, failed spinal block, incomplete spinal block, and postdural puncture headache, originally 3 separate items, were combined into a single item, “complications of neuraxial block”.

Round 3

Thirty-seven items were circulated. Thirteen out of 14 participants (93%) responded. Item response rate variability: 35 items had 13 respondents; 2 items had 12 respondents. Consensus (> 70%) was achieved for 7 items (5 accept; 2 reject). The 30 items that did not reach consensus were eliminated.

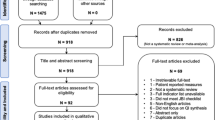

After 3 rounds, a total of 132 items were evaluated. Ninety-nine items were accepted with greater than 70% consensus. Six items out of 132 were rejected with greater than 70% consensus. Nine new items were generated from the panel. Items that reached consensus were not recirculated to panelists. Significant redundancy in the 99 items that reached consensus was eliminated by combining items, reducing the list to 56 items (Fig. 1).
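The round-by-round counts above can be cross-checked with a few lines of bookkeeping. This Python sketch uses only the numbers reported for Rounds 1 to 3; the dictionary layout and the `carried_forward` helper are illustrative, and the 6 items removed by merging duplicates in Round 2 are inferred from the reduction from 43 to 37 items. The later consolidation of the 99 accepted items into 56 was done by manual review and is not modeled.

```python
"""Bookkeeping check of item flow through Delphi Rounds 1-3 (counts from the text)."""

rounds = {
    1: {"circulated": 132, "accepted": 83, "rejected": 2, "new": 9, "merged_away": 0},
    # In Round 2, 43 non-consensus items were condensed to 37 (6 merged away).
    2: {"circulated": 56, "accepted": 11, "rejected": 2, "new": 0, "merged_away": 6},
    3: {"circulated": 37, "accepted": 5, "rejected": 2, "new": 0, "merged_away": 0},
}


def carried_forward(r: dict) -> int:
    """Items left after removing consensus items, adding panel-generated
    items, and merging conceptual duplicates."""
    return r["circulated"] - r["accepted"] - r["rejected"] + r["new"] - r["merged_away"]


# Items carried out of each round should match the next round's circulation.
for n in (1, 2):
    assert carried_forward(rounds[n]) == rounds[n + 1]["circulated"]

total_accepted = sum(r["accepted"] for r in rounds.values())
total_rejected = sum(r["rejected"] for r in rounds.values())
eliminated_after_round3 = carried_forward(rounds[3])  # no consensus, dropped

print(total_accepted, total_rejected, eliminated_after_round3)  # 99 6 30
```

The totals reproduce the 99 accepted and 6 rejected items reported above, and the 30 items eliminated after Round 3.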

There was a 10-month pause between Rounds 3 and 4 due to disruptions related to the COVID-19 pandemic. In the 4th round, the 56-item list was sent to the study panel with specific instructions to “select 20 anesthesia quality indicators from the list below that you believe to be of top priority in the continuous self-assessment and feedback of an anesthesiologists’ practice”. The electronic survey tool required exactly 20 responses; ranking of these 20 responses was not required. All 14 study panelists responded in Round 4. Table 2 ranks the 56 indicators according to the number of votes received from the panel in Round 4.
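Because Round 4 asked for exactly 20 unranked selections, ordering the indicators amounts to a simple vote count. The Python sketch below shows one way to perform that tally; the `tally_round4` function, the indicator labels, and the ballots are hypothetical placeholders rather than study data, and the alphabetical tie-break is an assumption made only to give a stable display order.

```python
"""Illustrative Round 4 tally: count selections per indicator and rank them."""
from collections import Counter

REQUIRED_SELECTIONS = 20  # the survey tool required exactly 20 picks


def tally_round4(ballots: list[set[str]], indicators: list[str]) -> list[tuple[str, int]]:
    """Return (indicator, votes) pairs sorted by descending vote count.

    Indicators that received no votes are kept with a count of 0; ties are
    broken alphabetically (an assumption, for a stable display order).
    """
    known = set(indicators)
    votes = Counter({name: 0 for name in indicators})
    for ballot in ballots:
        if len(ballot) != REQUIRED_SELECTIONS:
            raise ValueError(f"each ballot must select exactly {REQUIRED_SELECTIONS} items")
        if not ballot <= known:
            raise ValueError(f"unknown indicators: {sorted(ballot - known)}")
        votes.update(ballot)
    return sorted(votes.items(), key=lambda kv: (-kv[1], kv[0]))


# Synthetic example: 56 placeholder indicators and 14 overlapping ballots.
indicators = [f"QI-{i:02d}" for i in range(1, 57)]
ballots = [set(indicators[k:k + REQUIRED_SELECTIONS]) for k in range(14)]
ranking = tally_round4(ballots, indicators)
print(ranking[:3])  # [('QI-14', 14), ('QI-15', 14), ('QI-16', 14)]
```

Keeping zero-vote indicators in the output mirrors a table that lists all 56 items, including those no panelist selected.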

Discussion

The overall goal of this initiative was to determine whether, in the current era of competency-based medical education and increasing use of electronic medical records and AIMS, a consensus list of indicators could be identified to help clinicians and departments promote practice and performance improvement by measuring, analyzing, and using data to improve the quality of anesthetic care. Establishing a list of anesthesia quality indicators is an essential first step in this process. Our panel identified airway complications, incidence and duration of perioperative adverse events, number of medical errors, patient satisfaction, perioperative residual neuromuscular blockade requiring intervention by an anesthesiologist, patient temperature below 35.5 °C on arrival to the PACU, complications of or failed neuraxial block, and incidence of severe PONV as the most important anesthesia-specific quality indicators for continuous self-assessment and feedback of an anesthesiologist’s practice.

It is useful to consider the categories into which these quality indicators can be grouped. In a seminal manuscript, Donabedian categorized quality indicators into 3 groups: structure (supportive and administrative), process (provision of care), and outcomes (measurable and patient-related) [31]. In their 2009 systematic review, Haller et al. identified 108 quality indicators (only 40% of which had been validated beyond face validity) and found that 57% were outcome metrics, 42% process-of-care metrics, and 1% structure-related metrics [5]. Hamilton et al. (2021) reviewed regional anesthesia quality indicators and found that, of 68 identified items, 76% were outcome measures, 18% process-of-care measures, and 6% structure-related [25]. Of the 56 consensus quality indicators identified in our study, 52% were outcome-related, 35% process-related, and 12% structure-related. This distribution agrees with other studies: outcome indicators predominate, followed by process and then structure indicators. Process indicators in anesthesia can be difficult to measure because variability in practice between providers and healthcare settings makes it difficult to develop standardized processes. Anesthesia care is a complex process with multiple steps, so measuring and tracking it is time-consuming and resource-intensive. Smaller healthcare settings and outpatient procedures may offer limited opportunity to collect data on anesthesia processes. There is also a lack of consensus among healthcare providers regarding the most important processes to measure and track in anesthesia care. For all these reasons, there are relatively fewer process indicators than outcome indicators in anesthesia.

Demographic indicators were included at the outset of this study because items such as surgical service, surgical priority, ASA status, caseload, number of GA cases, and number of spinals provide a snapshot of an individual anesthesiologist’s practice and help clinicians understand and assess their practice, following the first 2 steps of FMRAC’s 5-step iterative process for practice improvement: (1) understanding your practice and (2) assessing your practice.

Perioperative mortality is notably absent from the quality indicators in this study. Benn et al. noted that, as the anesthesia specialty has been at the forefront of improving safety in healthcare, morbidity and mortality attributable to anesthesia have decreased significantly over the last half century. Mortality is a poor anesthesia quality indicator because it is rare and usually related to factors outside the anesthesiologist’s control. Data from the UK reveal that less than 1% of all patients undergoing surgery die during the same hospital admission, and that perioperative mortality for a healthy elective patient undergoing surgery is a mere 0.2% [32].

The modified Delphi technique, which relies on expert opinion and consensus, was intentionally chosen for this study because strong evidence is lacking for most anesthesia quality indicators. Expert opinion therefore provides a level of face validity. Advantages of the modification include improved initial-round response rates, solid grounding in previously developed work, reduced bias from group interaction, and assured anonymity while providing controlled feedback to participants [33]. Variable item response rates across Delphi rounds are a common challenge with this method, despite measures to prevent panel attrition including (1) ensuring that each round required less than 15 min to complete, (2) not recirculating items that reached consensus, and (3) using a binary agree/disagree option rather than a rating scale (e.g., Likert). Large datasets containing many items are a recognized challenge. However, previous attempts to reduce fatigue by creating competency subsets, sub-panels, or rotational modifications were largely unsuccessful: they increased the number of rounds and introduced bias, while remaining subject to the same factors that threaten the validity of any Delphi study (lack of experts on the panel, lack of clear content definition, poorly developed initial dataset) [34]. Round response rates of the 14-member panel ranged from 86% to 100%, indicating a consistent level of interest among panel members in participating in this study.

A modified Delphi study begins with a list of pre-selected items but also gives panel members the opportunity to generate new items. Elements of quality can be used to define what is considered good quality, and specific quality indicators can be selected to measure and track each element. The term ‘element of quality’ was used in the instructions to panel members to keep the process open, inclusive, and as broad-based as possible, because there may be newly emerging elements of anesthesia care, or elements that have yet to be fully defined or properly studied, that could be added to the list for consideration.

Using quality indicators to provide effective feedback that improves quality requires that indicators be transparent, reliable, evidence-based, measurable, and improvable. Feedback processes should be regular, continuously updated, comparative to peers, non-judgmental, confidential, and from a credible source [32]. EMR/AIMS is an excellent source of data with these qualities, yet it requires time, technological skills, and institutional financial investment to initiate and maintain. The intent of this study was to focus on quality indicators extractable from an EMR/AIMS, and participants were informed of this goal in the introduction to the study. Nonetheless, many of the indicators proposed by the participants are broad and may not be easily extractable from an electronic system. Use of EMR/AIMS in Canada at the time of the study was highly variable, in both availability and the specific software used, and this may have contributed to the generated list not consisting exclusively of extractable items. This discrepancy between the intent and the outcomes of the study reflects the challenges of identifying, gathering, and distilling the massive quantity of data extractable from an EMR/AIMS.

There were several limitations to this study. The final list of generated items in Table 2 has been reviewed, and items marked with an asterisk have been deemed most likely to be extractable from an EMR based on the quantitative nature of the item, recognizing that the data-mining capabilities of electronic records are heterogeneous and that the quality of data extraction depends directly on the quality and detail of the input data. For example, multi-dimensional aspects of care, such as patient satisfaction, would be more difficult to extract from most EMRs than a concise, focused element, such as a measured temperature below 35.5 °C on arrival in the post-anesthetic care unit. Some institutions might consider an automated indicator dashboard, which would require effort to set up but, once in place, could provide ongoing, on-demand clinician feedback [35]. Quality indicators can be used in a balanced scorecard or a quality clinical dashboard for the purposes of continuing quality improvement. The balanced scorecard approach links clinical indicators to an organization’s mission and strategy in a multi-dimensional framework. A quality clinical dashboard provides clinicians with relevant and timely information that informs decisions and helps monitor and improve patient care [26]. Regardless of the feedback method, effecting lasting change in clinician practice and patient outcomes can be challenging.

Although efforts were made to obtain broad national geographic representation and participants were chosen for their background in education and quality improvement, some valuable data may have been overlooked by not including allied health workers and patients in this study. However, continuous performance improvement, a form of Lean Improvement [36], emphasizes the tenets that ideas for improvement originate from the people who do the work and that it is essential to understand a work process before trying to fix it. Additionally, a recent study by Bamber et al. [27] reported significant attrition of both allied health and patient participants through its Delphi process. A face-to-face consensus meeting was not included in our study, both to reduce the risk of nuances being lost in virtual meetings during the pandemic and, because the panel included anesthesiologists at different career stages, to mitigate the potential influence of senior panelists on junior panelists voicing differing opinions and to reduce the risk of ‘groupthink’.

Our study was paused after the third round to avoid attrition, as participants were dealing with the clinical challenges at the onset of the COVID-19 pandemic. The fourth and final round is a slight deviation from the original study methods and was added after the authors recognized the need to prioritize the list of indicators. Twenty items were chosen to aid the reader in prioritizing these indicators.

While questions remain regarding how these indicators can best be used, as well as hurdles related to cost of implementation and end-user buy-in, it is recognized that comprehensive practice assessment must be based on more than data collected from an electronic record. The next step in this project would be to further refine the indicators that are both feasible to collect and most desirable to end users.

Conclusion

This study has identified and prioritized a list of 56 anesthesia quality indicators deemed to be both relevant to an anesthesiologist’s practice and obtainable from an electronic record. This is an essential step in the goal of aiding clinicians and departments in meeting ongoing cQI requirements recommended by professional societies and medical regulatory bodies.

Data Availability

The datasets generated and/or analyzed during the current study are not publicly available because institutional rules strictly prohibit releasing the native data on the web, but they are available from the corresponding author on reasonable request.

Abbreviations

- CPD: continuing professional development
- MOC: maintenance of certification
- RCPSC: Royal College of Physicians and Surgeons of Canada
- AQI: anesthesia quality indicators
- EMR: electronic medical records
- AIMS: anesthesia information management system
- mDelphi: modified Delphi
- ASA: American Society of Anesthesiologists
- cQI: continuing quality improvement
- FMRAC: Federation of Medical Regulatory Authorities of Canada
- GA: general anesthesia
- PGME: post-graduate medical education

References

Sergeant J et al. Royal College of Physicians and Surgeons of Canada Competency-based CPD white paper series no. 3: Assessment and feedback for continuing competence and enhanced expertise in practice. http://www.royalcollege.ca/rcsite/cbd/cpd/competency-cpd-white-paper-e (2018).

Federation of Medical Regulatory Authorities of Canada. Physician practice improvement. https://fmrac.ca/wp-content/uploads/2016/04/PPI-System_ENG.pdf (2016).

Campbell C, Sisler J. Supporting learning and continuous practice improvement for physicians in Canada: a new way forward. Summary report of the Future of Medical Education in Canada Continuing Professional Development (FMEC CPD) project. Physician Learning and Practice Improvement. (2019).

Dobson G, et al. Guidelines to the practice of anesthesia - revised Edition 2022. Can J Anaesth. 2022;69:24–61.

Haller G, Stoelwinder J, Myles PS, McNeil J. Quality and safety indicators in anesthesia: a systematic review. Anesthesiology. 2009;110:1158–75.

Bampoe S, et al. Clinical indicators for reporting the effectiveness of patient quality and safety-related interventions: a protocol of a systematic review and Delphi consensus process as part of the international Standardised Endpoints for Perioperative Medicine initiative. BMJ Open. 2018;8:e023427.

Boney O, Moonesinghe SR, Myles PS, Grocott MPW. Standardizing endpoints in perioperative research. Can J Anaesth. 2016;63:159–68.

Moonesinghe SR, et al. Systematic review and consensus definitions for the standardised Endpoints in Perioperative Medicine initiative: patient-centred outcomes. Br J Anaesth. 2019;123:664–70.

Abbott TEF, et al. A systematic review and consensus definitions for standardised end-points in perioperative medicine: pulmonary complications. Br J Anaesth. 2018;120:1066–79.

Haller G, et al. Systematic review and consensus definitions for the standardised Endpoints in Perioperative Medicine initiative: clinical indicators. Br J Anaesth. 2019;123:228–37.

Dalkey N, Helmer O. An experimental application of the Delphi method to the use of experts. United States Air Force Project RAND. https://www.rand.org/content/dam/rand/pubs/research_memoranda/2009/RM727.1.pdf (1962).

Burns KEA, et al. A guide for the design and conduct of self-administered surveys of clinicians. CMAJ. 2008;179:245–52.

Grant MC, et al. The impact of anesthesia-influenced process measure compliance on length of Stay: results from an enhanced recovery after surgery for colorectal surgery cohort. Anesth Analg. 2019;128:68–74.

Hensley N, Stierer TL, Koch CG. Defining quality markers for Cardiac Anesthesia: what, why, how, where to, and who’s on Board? J Cardiothorac Vasc Anesth. 2016;30:1656–60.

Murphy PJ. Measuring and recording outcome. Br J Anaesth. 2012;109:92–8.

Pediatric Anesthesia Quality Improvement Initiative. Wake Up Safe. http://wakeupsafe.org/.

Varughese AM, et al. Quality and safety in pediatric anesthesia. Anesth Analg. 2013;117:1408–18.

Shapiro FE, et al. Office-based anesthesia: safety and outcomes. Anesth Analg. 2014;119:276–85.

Sites B, Barrington M, Davis M. Using an international clinical registry of regional anesthesia to identify targets for quality improvement. Reg Anesth Pain Med. 2014;39:487–95.

Walker EMK, Bell M, Cook TM, Grocott MPW, Moonesinghe SR. Patient reported outcome of adult perioperative anaesthesia in the United Kingdom: a cross-sectional observational study. Br J Anaesth. 2016;117:758–66.

Chazapis M, et al. Perioperative structure and process quality and safety indicators: a systematic review. Br J Anaesth. 2018;120:51–66.

Choi CK, Saberito D, Tyagaraj C, Tyagaraj K. Organizational performance and regulatory compliance as measured by clinical pertinence indicators before and after implementation of Anesthesia Information Management System (AIMS). J Med Syst. 2014;38:1–6.

Dobson G, et al. Procedural sedation: a position paper of the Canadian Anesthesiologists’ Society. Can J Anaesth. 2018;65:1372–84.

Glance LG, et al. Feasibility of Report Cards for Measuring Anesthesiologist Quality for Cardiac surgery. Anesth Analg. 2016;122:1603–13.

Hamilton GM, MacMillan Y, Benson P, Memtsoudis S, McCartney CJL. Regional anaesthesia quality indicators for adult patients undergoing non-cardiac surgery: a systematic review. Anaesthesia. 2021;76(Suppl 1):89–99.

Motamed C, Bourgain JL. An anaesthesia information management system as a tool for a quality assurance program: 10 years of experience. Anaesth Crit Care Pain Med. 2016;35:191–5.

Bamber JH, et al. The identification of key indicators to drive quality improvement in obstetric anaesthesia: results of the Obstetric Anaesthetists’ Association/National Perinatal Epidemiology Unit collaborative Delphi project. Anaesthesia. 2020;75:617–25.

Zhu B, Gao H, Zhou X, Huang J. Anesthesia Quality and Patient Safety in China: a Survey. Am J Med Qual. 2018;33:93–9.

Anesthesia Quality Institute National Anesthesia Clinical Outcomes Registry. 2022 QCDR measure specifications, version 1.0. 2022.

van der Veer SN, de Keizer NF, Ravelli ACJ, Tenkink S, Jager KJ. Improving quality of care. A systematic review on how medical registries provide information feedback to health care providers. Int J Med Inform. 2010;79:305–23.

Donabedian A. The quality of care: how can it be assessed? JAMA. 1988;260:1743–8.

Benn J, Arnold G, Wei I, Riley C, Aleva F. Using quality indicators in anaesthesia: feeding back data to improve care. Br J Anaesth. 2012;109:80–91.

Custer RL, Scarcella JA, Stewart BR. The modified Delphi technique: a rotational modification. J Career Tech Educ. 1999;15.

Eubank BH, et al. Using the modified Delphi method to establish clinical consensus for the diagnosis and treatment of patients with rotator cuff pathology. BMC Med Res Methodol. 2016;16:56.

Laurent G, et al. Development, implementation and preliminary evaluation of clinical dashboards in a department of anesthesia. J Clin Monit Comput. 2021;35:617–26.

Womack JP, Jones DT. Lean thinking: banish waste and create wealth in your corporation. J Oper Res Soc. 1997;48:1148. https://doi.org/10.1057/palgrave.jors.2600967.

Acknowledgements

Special thanks to Dr. G. Bryson, Dr. L. Filteau, Dr. H. Pellerin, Dr. M. Sullivan, Dr. H. Grocott, Dr. J. Loiselle, Dr. P. Collins, Dr. K. Sparrow, Dr. S. Rashiq, Dr. M. Cohen, Dr. M.J. Nadeau, Dr. F. Manji, Dr. R. Merchant, Dr. S. Microys, Dr. M. Thorleifson, and Dr. V. Sweet for their time and input on this study.

Funding

The authors declare that they received no funding for this study.

Author information

Contributions

MSY and JT collected and analyzed the data, wrote the main manuscript, prepared the figures and tables, reanalyzed the data, and revised the manuscript. Both authors reviewed and approved the final manuscript for submission.

Ethics declarations

Ethics approval and consent to participate

This study received Johns Hopkins Institutional Review Board application acknowledgement (HIRB00008519) on May 27, 2019. Written informed consent was obtained from all participants. All methods were performed in accordance with the relevant guidelines and regulations.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Yee MS, Tarshis J. Anesthesia quality indicators to measure and improve your practice: a modified Delphi study. BMC Anesthesiol. 2023;23:256. https://doi.org/10.1186/s12871-023-02195-w

DOI: https://doi.org/10.1186/s12871-023-02195-w