Abstract

On-the-go soil sensors have emerged as promising tools for real-time, high-resolution soil nutrient monitoring in precision agriculture. This review provides a comprehensive overview of the current state of the art in on-the-go soil sensor technology, discussing the potential benefits, limitations, and applications of various sensor types, including optical sensors (Vis-NIR, MIR, and ATR spectroscopy) and electrochemical sensors (ISEs and ISFETs). Integrating these sensors with positioning systems (GPS) enables the generation of detailed soil nutrient maps, which can guide site-specific management practices and optimize fertilizer application rates. However, factors such as soil moisture, texture, and heterogeneity can affect sensor performance, necessitating robust calibration models and standardized protocols. Future perspectives highlight the need for multi-sensor systems, incorporation into IoT networks for smart farming, and more affordable, easier-to-adopt on-the-go sensor technologies to promote their widespread use in precision agriculture.

INTRODUCTION

Soil nutrient management plays a critical role in optimizing crop productivity, minimizing environmental impacts, and ensuring the sustainability of agricultural systems [55]. Precision agriculture, which aims to manage spatial and temporal variability within fields, heavily relies on accurate and timely information about soil nutrient status [60]. Traditional soil testing methods involve collecting soil samples from the field, sending them to the laboratory for analysis, and waiting for the results [6]. This process is time-consuming, labor-intensive, and often fails to capture the high spatial variability of soil nutrients within fields. Moreover, the low sampling density and the delay between sampling and receiving results limit the applicability of traditional methods for real-time nutrient management decisions.

In recent years, there has been a growing interest in developing on-the-go soil sensors that can measure soil nutrient concentrations in real-time, directly in the field [14, 54, 67]. These sensors have the potential to revolutionize soil nutrient management by providing high-resolution, site-specific information that can be used to optimize fertilizer application rates [33], reduce nutrient losses [53], and improve overall nutrient use efficiency [45]. On-the-go soil sensors can be integrated with other precision agriculture technologies, such as global positioning systems (GPS) and variable rate application equipment, to enable real-time, site-specific nutrient management [49].

Several types of on-the-go soil sensors have been developed, each with its own advantages and limitations. Optical sensors, such as visible and near-infrared (Vis-NIR) spectroscopy [43], mid-infrared (MIR) spectroscopy [24], attenuated total reflectance (ATR) spectroscopy [59], and Raman spectroscopy [18], use the interaction of electromagnetic radiation with soil constituents to estimate soil nutrient concentrations. These sensors are non-destructive, require minimal sample preparation, and can provide rapid measurements. However, their performance can be affected by soil moisture, texture, and the presence of interfering substances. Electrochemical sensors, such as ion-selective electrodes (ISEs) [3] and ion-selective field effect transistors (ISFETs) [65], use the electrical properties of soil solutions to measure the concentration of specific ions, such as nitrate, potassium, and phosphate [66]. These sensors are sensitive, selective, and can provide real-time measurements, but their performance can be influenced by factors such as soil pH, temperature, and the presence of interfering ions.

The integration of on-the-go soil sensors with positioning systems, such as GPS, allows for the creation of high-resolution soil nutrient maps that can be used to delineate management zones within fields [49]. These maps can help farmers identify areas with nutrient deficiencies or excesses, and adjust fertilizer application rates accordingly. By applying fertilizers only where and when they are needed, farmers can optimize crop yields, reduce input costs, and minimize the risk of nutrient losses to the environment. Moreover, the use of on-the-go soil sensors can facilitate the implementation of variable rate application technologies, which can further improve the precision and efficiency of nutrient management [62].

Despite the potential benefits of on-the-go soil sensors, there are still several challenges that need to be addressed to ensure their reliable and effective use in precision agriculture. One of the main challenges is the influence of soil heterogeneity on sensor performance. Soil properties, such as moisture content, texture, organic matter, and pH, can vary significantly within fields, and these variations can affect the accuracy and reproducibility of sensor measurements [63]. Therefore, calibration models that account for the specific soil conditions of each field may be necessary to ensure the reliability of sensor readings [2]. Additionally, the quality of soil-to-sensor contact and the sampling depth can also impact the performance of on-the-go sensors, particularly for sensors that require direct contact with the soil, such as ISEs and ISFETs [52]. Another challenge is the need for efficient data management and interpretation tools to process the large amounts of data generated by on-the-go soil sensors [40]. The integration of sensor data with other sources of information, such as yield maps, soil surveys, and weather data, can provide a more comprehensive understanding of the factors influencing soil nutrient dynamics and crop performance. However, this integration requires the development of advanced data analytics and decision support tools that can help farmers translate sensor data into actionable management decisions.

The motivation for writing this review stems from the need to provide a comprehensive overview of the current state of the art in on-the-go soil sensor technology and its applications in precision agriculture. The rapid advancements in sensor technologies, data analytics, and precision agriculture practices have created new opportunities for real-time soil nutrient monitoring and management. However, the adoption of these technologies in real-world agricultural settings is still limited by various technical, economic, and practical challenges. This review aims to synthesize the existing knowledge on on-the-go soil sensors, discuss their potential benefits and limitations, and identify the key research gaps and future directions in this field. By doing so, we hope to stimulate further research and development efforts to overcome the current challenges and promote the widespread adoption of on-the-go soil sensors in precision agriculture. Ultimately, the goal is to provide farmers and agricultural professionals with the tools and knowledge needed to optimize soil nutrient management, improve crop productivity, and ensure the sustainability of agricultural systems in the face of growing global food demands and environmental pressures.

TYPES OF ON-THE-GO SOIL SENSORS

Optical sensors. Optical sensors have emerged as promising tools for on-the-go soil nutrient monitoring due to their non-destructive nature, rapid measurement capabilities, and potential for high-resolution soil mapping. These sensors rely on the interaction of electromagnetic radiation with soil constituents to estimate soil nutrient concentrations. The most common types of optical sensors used for on-the-go soil sensing include Vis-NIR spectroscopy, MIR spectroscopy, ATR spectroscopy, and Raman spectroscopy.

Vis-NIR spectroscopy operates in the wavelength range of 400–2500 nm and has been widely used for estimating various soil properties, including soil organic matter, clay content, and nutrient concentrations. Vis-NIR spectroscopy measures the reflectance or absorbance of light by soil samples [47], which is influenced by the presence of specific chemical bonds and functional groups. The resulting spectra can be analyzed using multivariate calibration techniques, such as partial least squares regression (PLSR) or principal component regression (PCR), to develop predictive models for soil nutrient concentrations [10, 30, 57]. For example, Reyes and Ließ [43] explored the effectiveness of on-the-go Vis-NIR spectroscopy for assessing soil organic carbon (SOC) at field scale. Employing a two-step modeling process, they first used PLSR to relate the spectral data to SOC content and then applied ordinary kriging to spatially interpolate the PLSR predictions. They tested various spectral preprocessing techniques and semivariogram models to optimize the SOC predictions. The combination of Savitzky–Golay preprocessing with a Gaussian semivariogram model was the most accurate, achieving a root mean square error (RMSE) of 1.24 g kg⁻¹ (Figs. 1a–1c). Similarly, Gap-Segment derivative preprocessing paired with the Gaussian model yielded an RMSE of 1.26 g kg⁻¹. The study also addressed potential issues such as the striping effect caused by transect-based data collection, suggesting that increasing spatial separation, data aggregation, and block kriging could effectively mitigate these effects. In another work, Rodionov et al. [46] developed a tractor-mounted measuring chamber for on-the-go visible and near-infrared spectroscopy (Vis-NIRS) to assess SOC in arable fields. The chamber was designed to standardize field spectra acquisition by ensuring consistent, optimized illumination conditions, which is crucial for accurate SOC prediction.
During field tests, the chamber, attached to a tractor, could collect spectra both in stop-and-go mode and while moving at 3 km/h (Fig. 1d). The system’s performance was evaluated by comparing its SOC predictions with standard laboratory elemental analyses. The results were promising: the on-the-go Vis-NIRS system predicted SOC with a root mean squared error of cross-validation (RMSECV) below 0.73 g SOC kg⁻¹ soil and a ratio of performance to deviation (RPD) greater than 2.0. These metrics suggest that the system could reliably estimate SOC concentrations across varying field conditions.
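
The accuracy metrics quoted in this section (RMSE, RMSECV, RPD, and R²) are simple functions of paired reference and predicted values. The sketch below computes them in plain Python. The SOC values are hypothetical. RPD is taken here as the sample standard deviation of the reference values divided by the RMSE, which is the usual definition but may differ in detail from the one used in [46]. RMSECV is the same RMSE formula applied to cross-validated predictions.

```python
import math
from statistics import mean, stdev


def rmse(observed, predicted):
    """Root mean square error between reference and predicted values."""
    if len(observed) != len(predicted) or not observed:
        raise ValueError("inputs must be non-empty and of equal length")
    return math.sqrt(mean((o - p) ** 2 for o, p in zip(observed, predicted)))


def rpd(observed, predicted):
    """Ratio of performance to deviation: SD of reference values / RMSE."""
    return stdev(observed) / rmse(observed, predicted)


def r_squared(observed, predicted):
    """Coefficient of determination, 1 - SS_res / SS_tot."""
    m = mean(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - m) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


# Hypothetical laboratory SOC values and sensor predictions (g/kg).
lab_soc = [8.2, 10.5, 12.1, 9.7, 14.3, 11.0]
pred_soc = [8.9, 10.1, 12.8, 9.2, 13.6, 11.4]

print(f"RMSE = {rmse(lab_soc, pred_soc):.2f} g/kg")
print(f"RPD  = {rpd(lab_soc, pred_soc):.2f}")
print(f"R2   = {r_squared(lab_soc, pred_soc):.2f}")
```

An RPD above 2.0, as reported for the chamber system, is commonly read as indicating a model usable for quantitative prediction. That threshold is a convention rather than a hard rule.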

MIR spectroscopy operates in the wavelength range of 2500–25 000 nm and is sensitive to the fundamental vibrations of chemical bonds in soil constituents. MIR spectroscopy has been shown to provide more accurate predictions of soil nutrient concentrations than Vis-NIR spectroscopy, particularly for soil organic carbon and total nitrogen [8]. Hutengs and co-workers [24] investigated the effectiveness of handheld MIR spectroscopy for in-field estimation of SOC. They analyzed 90 agricultural loess soil samples using both MIR and Vis-NIR spectroscopy, performing measurements directly in the field and on laboratory-prepared samples. In situ MIR spectroscopy yielded more precise SOC estimates than Vis-NIR, with an RMSE of prediction approximately 5 g kg⁻¹ lower. However, compared with laboratory measurements on finely ground samples, the accuracy of in-field MIR decreased notably, with the RMSE increasing by up to 2 g kg⁻¹. This reduction in performance was attributed to factors such as soil moisture variability and surface heterogeneity, which affected spectral data quality. To overcome these limitations, some researchers have combined MIR spectroscopy with other techniques, such as ATR spectroscopy or diffuse reflectance spectroscopy, to improve the robustness and accuracy of soil nutrient predictions. For instance, Hong et al. [22] explored the efficacy of combining Vis-NIR and MIR spectroscopy for predicting SOC. They employed two data fusion strategies: straightforward concatenation of the full absorbance spectra, and a more refined approach using selected predictors derived from an optimal band combination (OBC) algorithm. To enhance the spectral data, a continuous wavelet transform (CWT) was applied both before and after fusion (Fig. 2). The study, set in Belgium, gathered soil samples from various farms and used PLSR to develop SOC prediction models.
Models using the CWT-optimized, fused data outperformed those based on raw spectral data, demonstrating the value of data transformation and fusion techniques for SOC estimation. Specifically, the concatenated full-spectrum model achieved an R² of 0.82 and an RMSE of 3.45 g kg⁻¹ for SOC prediction, while the OBC-based model further improved prediction accuracy, yielding an R² of 0.85 and an RMSE of 3.12 g kg⁻¹.
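
The fusion-then-PLSR workflow can be sketched in a few lines. The block below is a minimal, pure-Python NIPALS PLS1 (single response) applied to spectra fused by simple concatenation. The absorbance values, the synthetic SOC rule, and the names `fuse` and `PLS1` are hypothetical. Neither the CWT preprocessing nor the OBC band selection of Hong et al. [22] is reproduced. In practice, a tested library implementation (for example, scikit-learn's `PLSRegression`) would be preferable.

```python
from statistics import mean


def fuse(vis_nir, mir):
    """Low-level fusion: concatenate each sample's Vis-NIR and MIR spectra."""
    return [list(a) + list(b) for a, b in zip(vis_nir, mir)]


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


class PLS1:
    """Minimal NIPALS partial least squares regression for a single response."""

    def __init__(self, n_components):
        self.n_components = n_components

    def fit(self, X, y):
        n, k = len(X), len(X[0])
        # Centre each spectral variable and the response; keep means for prediction.
        self.x_mean = [mean(row[j] for row in X) for j in range(k)]
        self.y_mean = mean(y)
        Xr = [[v - m for v, m in zip(row, self.x_mean)] for row in X]
        yr = [v - self.y_mean for v in y]
        self.W, self.P, self.q = [], [], []
        for _ in range(self.n_components):
            # Weights follow the covariance between X and y (X^T y), scaled to unit length.
            w = [sum(Xr[i][j] * yr[i] for i in range(n)) for j in range(k)]
            norm = _dot(w, w) ** 0.5
            if norm < 1e-12:
                break  # y is already fully explained
            w = [v / norm for v in w]
            t = [_dot(row, w) for row in Xr]  # latent scores
            tt = _dot(t, t)
            p = [sum(Xr[i][j] * t[i] for i in range(n)) / tt for j in range(k)]  # X loadings
            q = _dot(yr, t) / tt  # y loading
            # Deflate: remove what this component explains from X and y.
            Xr = [[Xr[i][j] - t[i] * p[j] for j in range(k)] for i in range(n)]
            yr = [yr[i] - q * t[i] for i in range(n)]
            self.W.append(w)
            self.P.append(p)
            self.q.append(q)
        return self

    def predict(self, X):
        preds = []
        for x in X:
            xr = [v - m for v, m in zip(x, self.x_mean)]
            y_hat = self.y_mean
            # Repeat the score extraction and deflation sequence used in fit().
            for w, p, q in zip(self.W, self.P, self.q):
                t = _dot(xr, w)
                xr = [xr[j] - t * p[j] for j in range(len(xr))]
                y_hat += q * t
            preds.append(y_hat)
        return preds


# Hypothetical absorbances: two Vis-NIR bands and one MIR band per sample.
vis_nir = [[0.10, 0.30], [0.20, 0.25], [0.15, 0.40], [0.30, 0.35], [0.25, 0.20], [0.05, 0.10]]
mir = [[0.50], [0.45], [0.60], [0.55], [0.70], [0.40]]
X = fuse(vis_nir, mir)
soc = [12.0 + 30 * r[0] + 15 * r[1] + 8 * r[2] for r in X]  # synthetic SOC, g/kg

model = PLS1(n_components=2).fit(X, soc)
print([round(v, 2) for v in model.predict(X)])
```

The number of components is the main tuning parameter and is normally chosen by cross-validation. Using as many components as there are independent spectral variables reduces PLSR to ordinary least squares.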

Fig. 2. Model construction based on Vis-NIR and MIR spectroscopy for SOC measurement [22].

ATR spectroscopy is a variant of infrared spectroscopy that allows direct measurement of soil samples without extensive sample preparation. It uses a crystal with a high refractive index (e.g., diamond, germanium, or zinc selenide): the infrared beam is totally internally reflected at the crystal surface, and the evanescent wave that extends a short distance beyond that surface is attenuated by the soil sample in contact with it. The resulting spectrum is similar to a transmission spectrum but probes only a shallow layer of the sample. ATR spectroscopy has been used for field-scale precision fertilizer management. Rogovska et al. [48] evaluated a handheld ATR-FTIR spectrometer as a soil nitrate sensor. The spectrometer’s performance was assessed using two datasets, one from field samples and another from laboratory-prepared samples. The field dataset comprised 720 spectra from 124 average raw spectra, while the laboratory dataset involved 844 spectra from 135 average raw spectra. The results showed that the ATR-FTIR spectrometer could predict soil nitrate concentrations with a standard error that would be acceptable for real-time adjustment of fertilizer application.
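
The shallow sampling depth of ATR can be quantified with the standard expression for the penetration depth of the evanescent wave, \(d_p = \lambda / \left(2\pi n_1 \sqrt{\sin^2\theta - (n_2/n_1)^2}\right)\). Here \(n_1\) and \(n_2\) are the refractive indices of the crystal and sample, and \(\theta\) is the angle of incidence. The sketch below evaluates this expression. The sample index of 1.5, the 45° angle, and the 10 µm wavelength are assumed illustrative values, and the crystal indices are approximate mid-infrared values.

```python
import math


def critical_angle_deg(n_crystal, n_sample):
    """Angle of incidence above which total internal reflection occurs."""
    return math.degrees(math.asin(n_sample / n_crystal))


def penetration_depth_um(wavelength_um, n_crystal, n_sample, angle_deg):
    """Depth at which the evanescent field decays to 1/e of its value at the surface."""
    theta = math.radians(angle_deg)
    arg = math.sin(theta) ** 2 - (n_sample / n_crystal) ** 2
    if arg <= 0:
        raise ValueError("angle is below the critical angle; no total internal reflection")
    return wavelength_um / (2 * math.pi * n_crystal * math.sqrt(arg))


# Approximate mid-IR crystal indices; n_sample = 1.5 is an assumed soil value.
crystals = {"diamond": 2.4, "ZnSe": 2.4, "Ge": 4.0}
for name, n1 in crystals.items():
    d = penetration_depth_um(10.0, n1, 1.5, 45.0)
    print(f"{name:8s} critical angle {critical_angle_deg(n1, 1.5):5.1f} deg, "
          f"d_p at 10 um = {d:.2f} um")
```

Under these assumptions, germanium probes to roughly 0.66 µm and diamond to roughly 2 µm. The choice of crystal therefore changes how much of the soil's surface layer contributes to the spectrum.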

In summary, optical sensors, particularly Vis-NIR, MIR, and ATR, have shown great potential for on-the-go soil nutrient monitoring. These sensors provide rapid, non-destructive, and high-resolution measurements of soil nutrient concentrations, which can be used to generate detailed soil maps and guide precision nutrient management decisions. However, the performance of these sensors is influenced by various soil factors, such as moisture content, texture, and organic matter, which can affect the accuracy and reproducibility of the predictions. Therefore, robust calibration models and standardized protocols are needed to ensure the reliability and transferability of the sensor measurements across different soil types and environmental conditions. Furthermore, the integration of optical sensors with other sensing technologies, such as electrochemical sensors or soil penetration resistance sensors, can provide a more comprehensive assessment of soil nutrient status and improve the overall performance of on-the-go soil sensing systems.

Electrochemical sensors. Electrochemical sensors have emerged as another promising technology for on-the-go soil nutrient monitoring. These sensors measure the electrical properties of soil solutions to determine the concentration of specific ions, such as nitrate, potassium, and phosphate. The two main types of electrochemical sensors used for on-the-go soil sensing are ISEs and ISFETs.

ISEs are electrochemical sensors that measure the activity of specific ions in a solution. An ISE system consists of a sensing (working) electrode, which carries an ion-selective membrane that responds preferentially to the ion of interest, and a reference electrode [16]. The potential difference between the sensing and reference electrodes varies linearly with the logarithm of the ion activity in the solution, as described by the Nernst equation. ISEs have been widely used for on-the-go soil nutrient monitoring because of their simplicity, low cost, and ability to provide real-time measurements [3]. For example, Chen et al. [12] developed an all-solid-state ISE for the direct measurement of soil nitrate-nitrogen (\({\text{NO}}_{3}^{ - }\)-N). The electrode used a nanohybrid composite film combining gold nanoparticles (AuNPs) with electrochemically reduced graphene oxide (ERGO), which enhanced its sensitivity and stability. Laboratory tests were conducted using a 3-stage column to simulate in-situ conditions, with soil texture, moisture, and \({\text{NO}}_{3}^{ - }\)-N content varied to assess the electrode’s performance. The ISE had a detection range from \(10^{-5}\) to \(10^{-1}\) M and a theoretical detection limit of \(10^{-(5.2 \pm 0.1)}\) M. The ERGO/AuNPs composite film significantly outperformed its ERGO-only counterpart in sensitivity, accuracy, and response time, the last being approximately 10 s. The hydrophobic nature of the film also contributed to the electrode’s lifetime of 65 days, which is longer than that of many existing nitrate ISEs. Recovery rates ranged from 91.2 to 109.7%, indicating acceptable accuracy for soil nutrient detection.
In field tests of in-situ monitoring, \({\text{NO}}_{3}^{ - }\)-N measured in soil percolate closely matched values from laboratory-prepared extract solutions, suggesting that the ISE could reliably track \({\text{NO}}_{3}^{ - }\)-N variations in actual soil environments.
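
In equation form, the ideal electrode response is \(E = E^0 + \frac{2.303RT}{zF}\log_{10} a\), where \(a\) is the ion activity and \(z\) its charge (−1 for nitrate). The slope is about 59.16 mV per decade at 25 °C for a monovalent ion and scales with absolute temperature, which is one reason temperature matters in the field. The sketch below uses a hypothetical \(E^0\) and ignores activity coefficients, interfering ions, and drift.

```python
import math

R = 8.314462618   # molar gas constant, J mol^-1 K^-1
F = 96485.33212   # Faraday constant, C mol^-1


def nernst_slope_mv(temp_c, z):
    """Theoretical slope in mV per decade of activity: 1000 * ln(10) * R * T / (z * F)."""
    return 1000.0 * math.log(10) * R * (temp_c + 273.15) / (z * F)


def potential_mv(activity, e0_mv, temp_c, z):
    """Ideal electrode potential E = E0 + S * log10(a)."""
    return e0_mv + nernst_slope_mv(temp_c, z) * math.log10(activity)


def activity_from_potential(e_mv, e0_mv, temp_c, z):
    """Invert the Nernst relation to recover the ion activity."""
    return 10 ** ((e_mv - e0_mv) / nernst_slope_mv(temp_c, z))


E0 = 100.0  # hypothetical standard potential of a nitrate ISE, mV
for t in (10.0, 25.0):
    print(f"{t:4.1f} C: slope {nernst_slope_mv(t, -1):6.2f} mV/decade")

# A reading taken at 10 C but interpreted with the 25 C slope is biased.
e_field = potential_mv(1e-3, E0, 10.0, -1)
print(f"{activity_from_potential(e_field, E0, 25.0, -1):.2e} M instead of 1.00e-03 M")
```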

One of the main challenges in using ISEs for on-the-go soil sensing is the need for frequent calibration because of electrode drift and sensitivity to environmental factors such as temperature and humidity. In a representative procedure for mapping soil pH, potassium, and nitrate content [56], calibration was carried out meticulously to ensure accurate soil property maps. The pH ISE was calibrated with standard buffer solutions, and the potassium and nitrate ISEs with an integrated calibration solution containing KNO3, with Na2SO4 as the ionic strength adjuster (ISA) to keep ionic strength consistent across measurements. The calibration equation was based on the Nernst equation, relating the electrode potential to the ion activity of the sample. Calibration was repeated before each experiment and after every set of soil samples to account for drift in the electrode readings. The pH electrode required separate calibration because the pH buffering compounds interfered with the nitrate PVC membranes. Developing an automatic system that can calibrate ISEs in situ during the measurement process remains a challenge.
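
The calibration procedure above reduces to fitting a straight line of electrode potential against the logarithm of the standard concentration and inverting it for unknown samples, with periodic check standards to detect drift. This is a minimal sketch. It assumes that concentration can stand in for activity because the ISA keeps ionic strength, and hence the activity coefficient, roughly constant. The standard values, readings, and the 2 mV drift tolerance are hypothetical and are not taken from [56].

```python
import math


def fit_calibration(concentrations_m, potentials_mv):
    """Least-squares fit of E = E0 + S*log10(c); returns (E0, S)."""
    xs = [math.log10(c) for c in concentrations_m]
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(potentials_mv) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, potentials_mv))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


def concentration_from_potential(e_mv, e0_mv, slope_mv):
    """Invert the fitted calibration line for an unknown sample."""
    return 10 ** ((e_mv - e0_mv) / slope_mv)


def needs_recalibration(check_mv, expected_mv, tolerance_mv=2.0):
    """Flag drift when a check standard deviates beyond a tolerance (assumed 2 mV)."""
    return abs(check_mv - expected_mv) > tolerance_mv


# Hypothetical nitrate standards (mol/L) in a constant-ionic-strength background.
standards = [1e-4, 1e-3, 1e-2]
readings = [250.1, 193.0, 135.9]  # mV
e0, s = fit_calibration(standards, readings)
print(f"E0 = {e0:.1f} mV, slope = {s:.1f} mV/decade")
print(f"sample at 210 mV -> {concentration_from_potential(210.0, e0, s):.2e} mol/L")
print("recalibrate:", needs_recalibration(196.0, 193.0))
```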

Ion-selective field effect transistors (ISFETs) are another type of electrochemical sensor that has been used for on-the-go soil nutrient monitoring [11]. Like ISEs, ISFETs respond to the activity of specific ions in a solution, but instead of a conventional electrode they use a semiconductor device (a field effect transistor) to convert ion activity into an electrical signal. The gate of the ISFET is coated with a selective membrane that allows only the ion of interest to interact with the gate insulator, modulating the current flow through the transistor. ISFETs have several advantages over ISEs, including higher sensitivity, faster response, and the ability to be miniaturized and integrated into multi-sensor arrays [58]. However, ISFETs are also more susceptible to interference from other ions and require more complex instrumentation and data processing than ISEs.

Archbold et al. [7] reviewed the operational principles of ISFETs, their non-idealities, and practical aspects of their simulation. The review also covered the necessary electronic instrumentation and gave examples of ISFET applications in agricultural settings. It indicated that ISFETs could deliver real-time soil analysis, which is critical for the high-resolution data collection that precision agriculture demands. However, the review also identified several challenges that hinder widespread adoption of ISFET technology in agriculture, including environmental influences on sensor stability, the complexity of soil matrix interactions, and the need for robust calibration methods to ensure accuracy. It highlighted a significant gap in the adoption of ISFET sensors in agriculture compared with other fields such as the biomedical sciences, and suggested that more research and case studies on ISFET instrumentation for soil analysis could foster their use in precision agriculture.

Several researchers have developed ISFET-based systems for on-the-go soil nutrient monitoring. For example, Joly et al. [25] designed a silicon chip equipped with ISFET microsensors to monitor soil nutrients in wheat crops, focusing on the nitrogen cycle. The chip’s pH-ISFET sensors measured soil pH accurately over a six-month period, agreeing with standard methods to within 0.5 pH units regardless of soil moisture. By adapting the pH-ISFET to detect nitrate and ammonium ions, the authors tracked natural, microbially driven fluctuations in soil nitrogen in situ for the first time. The microsensors showed quasi-Nernstian sensitivities of 59.0 mV per decade of ammonium activity and 56.2 mV per decade of nitrate activity, with detection limits of 3.2 µM for ammonium and 17 µM for nitrate, consistent with typical mineral nitrogen levels in agricultural fields. More recently, the same group used a pH-sensitive chemical field effect transistor (pH-ChemFET) platform to fabricate ISFETs capable of detecting ammonium and nitrate ions in soil with high sensitivity and selectivity [26]. These sensors, pNH4-ISFET and pNO3-ISFET, incorporated fluoropolysiloxane polymer matrices that adhered well to silicon-based films, extending the lifetime of the ion-sensitive layers (Fig. 3a). The pNH4-ISFET showed a sensitivity of 53–56 mV/pNH4 over the concentration range \(10^{-4.5}\) to \(10^{-1.5}\) M, and the pNO3-ISFET showed a similar sensitivity for nitrate ions. The pNO3-ISFET exhibited quasi-Nernstian behavior with a measurement accuracy of ±1 mV and a response time of approximately two minutes, which is advantageous for in-situ soil analysis where rapid measurements are needed. These performance metrics were validated in various soil matrices with different pH levels, supporting the sensors’ applicability in real-world agricultural settings.
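
Two figures of merit quoted above can be made concrete: how close a measured slope is to the ideal Nernstian value, and a detection limit. The sketch below computes the slope ratio, assuming 25 °C, which may not match the test conditions in [25]. It computes the detection limit using a common convention for potentiometric sensors: the activity at which the extrapolated linear segment intersects the low-activity baseline. The calibration numbers for that second calculation are hypothetical, and [25] may have determined its limits differently.

```python
import math

R, F = 8.314462618, 96485.33212  # J mol^-1 K^-1, C mol^-1


def fraction_of_nernstian(slope_mv_per_decade, temp_c=25.0, z=1):
    """Measured |slope| relative to the ideal slope for an ion of charge z."""
    ideal = 1000.0 * math.log(10) * R * (temp_c + 273.15) / (abs(z) * F)
    return abs(slope_mv_per_decade) / ideal


def detection_limit_m(e0_mv, slope_mv, baseline_mv):
    """Activity where the extrapolated linear response E = e0 + slope*log10(a)
    crosses the flat low-activity baseline E = baseline_mv."""
    return 10 ** ((baseline_mv - e0_mv) / slope_mv)


# Slopes reported above for the first ISFET chip [25].
for ion, s in (("ammonium", 59.0), ("nitrate", 56.2)):
    print(f"{ion}: {100 * fraction_of_nernstian(s):.1f}% of the ideal 25 C slope")

# Hypothetical calibration: linear segment and baseline read off a calibration curve.
print(f"LOD ~ {detection_limit_m(e0_mv=320.0, slope_mv=56.0, baseline_mv=20.0):.1e} M")
```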

Another example of an ISFET-based system for on-the-go soil sensing was developed by Hong et al. [23], who built a portable soil pH sensor around an ISFET electrode. The sensor consisted of an electrode unit, a portable console, and a USB connector, and was designed to measure relative pH values to accommodate variability across crop growth stages. Its performance was evaluated on artificial soil samples with different soil water contents (SWC) and electrical conductivities (EC). Stable pH measurements were achieved at SWCs greater than 20 mL (16.3%). The electric potential difference (EPD) remained constant at 2.5 g of NaCl, indicating that although SWC strongly influenced the electrical resistance of the soil, EC did not affect the soil pH reading at SWCs below 10 mL. The study concluded that the ISFET-based sensor could measure pH reliably at SWCs of about 16.3% and above.

In summary, electrochemical sensors, particularly ISEs and ISFETs, have shown great potential for on-the-go soil nutrient monitoring. These sensors provide real-time, in-situ measurements of soil nutrient concentrations, which can be used to generate high-resolution maps of soil fertility and guide precision nutrient management decisions. However, the performance of these sensors is influenced by various factors, such as temperature, humidity, and interference from other ions, which can affect their accuracy and reliability. Therefore, frequent calibration and maintenance of the sensors are necessary to ensure their long-term stability and performance. Furthermore, the integration of electrochemical sensors with other sensing technologies, such as optical sensors or soil moisture sensors, can provide a more comprehensive assessment of soil nutrient status and improve the overall efficiency of on-the-go soil sensing systems. As research continues to advance in this area, it is expected that electrochemical sensors will play an increasingly important role in precision agriculture and sustainable soil management.

INTEGRATION OF ON-THE-GO SOIL SENSORS WITH POSITIONING SYSTEMS

The integration of on-the-go soil sensors with positioning systems, such as GPS, is a critical step in realizing the full potential of precision agriculture. By combining real-time soil sensing with accurate spatial information, farmers can generate high-resolution maps of soil nutrient variability within their fields and use this information to optimize fertilizer application rates and improve nutrient use efficiency [32, 50]. This section discusses the integration of on-the-go soil sensors with GPS, the generation of high-resolution soil nutrient maps, and the application of these maps for variable rate fertilizer application.

One example of the integration of on-the-go soil sensors with GPS is the soil pH and nutrient mapping system developed by Scudiero et al. [54]. A mobile platform was used to collect concurrent apparent electrical conductivity (ECa) and gamma-ray spectrometry data. The platform, equipped with a Global Navigation Satellite System (GNSS)/GPS receiver (Fig. 3b), was designed to conduct ECa surveys close to the irrigation driplines, where soil moisture content is higher, and gamma-ray sensing in the drier alleyways between the orchard rows. GNSS/GPS ensured precise geolocation of the sensor data, which is vital for accurate mapping of soil properties, and the system successfully accounted for the sensor’s offset from the GNSS antenna, ensuring accurate positioning of the ECa readings. The platform was tested in a 0.4 ha navel orange orchard on Monserate sandy loam. Traveling at speeds below 5 mph (about 8 km/h), the system collected ECa data for the 0–1.5 m soil profile and gamma-ray total counts (TC) over the 0.4–2.81 MeV energy window. Combining the ECa and gamma-ray data produced a more detailed soil texture map of the citrus orchard. A leave-one-out cross-validation of the gamma-ray interpolation yielded an R² of 0.75, indicating a strong correlation between observed and predicted values. Furthermore, 20 sampling locations were selected using a response surface sampling design that maximized representativeness and minimized spatial autocorrelation. This approach demonstrated the potential of GNSS/GPS-enabled mobile platforms to improve the spatial characterization of soil properties in precision agriculture.
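
Accounting for the offset between the GNSS antenna and the sensor amounts to a lever-arm correction. The following is a minimal 2-D sketch with several assumptions: positions are already projected to local east/north metres (e.g., UTM), the field is flat, and heading is estimated from consecutive fixes. The function names and offsets are hypothetical and are not those used in [54].

```python
import math


def heading_from_fixes(e1, n1, e2, n2):
    """Course over ground between two consecutive fixes, degrees clockwise from north."""
    return math.degrees(math.atan2(e2 - e1, n2 - n1)) % 360.0


def sensor_position(antenna_e, antenna_n, heading_deg, forward_offset_m, right_offset_m):
    """Shift an antenna fix (local east/north metres) to the sensor location.

    Offsets are in the vehicle frame: positive forward of, and to the right of,
    the antenna. heading_deg is measured clockwise from north.
    """
    h = math.radians(heading_deg)
    # Vehicle-frame unit vectors expressed as east/north components.
    fwd_e, fwd_n = math.sin(h), math.cos(h)
    right_e, right_n = math.cos(h), -math.sin(h)
    east = antenna_e + forward_offset_m * fwd_e + right_offset_m * right_e
    north = antenna_n + forward_offset_m * fwd_n + right_offset_m * right_n
    return east, north


# Hypothetical fixes while driving north; sensor 3 m behind, 0.5 m right of antenna.
prev_fix, fix = (0.0, 0.0), (0.0, 10.0)
heading = heading_from_fixes(*prev_fix, *fix)
print(sensor_position(*fix, heading, forward_offset_m=-3.0, right_offset_m=0.5))
# → (0.5, 7.0)
```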

Another example is the gamma-ray spectrometer platform developed by Kassim et al. [27] for improving soil potassium management. The objective was to accurately determine the spatial distribution of plant-available potassium (Ka) across agricultural fields. A measurement system was developed to collect gamma-ray spectra while moving across a field, with DGPS providing precise location data. The gamma-ray spectra were preprocessed and analyzed using PLSR to predict Ka. A comparison of univariate and multivariate methods showed that the PLSR model, which used multiple spectral lines, outperformed univariate models that relied on single spectral peaks, because it could account for the complex interactions between spectral features. Specifically, the PLSR model predicted Ka with an R² of 0.82 and an RMSEP of 12 mg kg⁻¹. DGPS was pivotal in ensuring that the spectrometry data could be accurately georeferenced, enabling detailed soil potassium maps that could inform targeted fertilization strategies (Fig. 4a).
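
For contrast with the multivariate PLSR model, the univariate baseline can be sketched as a linear regression of laboratory Ka on the counts in a single potassium window. The 1.37–1.57 MeV window around the 1.46 MeV K-40 photopeak follows a convention commonly used in gamma-ray spectrometry and is an assumption here. The coarse five-bin spectra and all numeric values are also hypothetical. This is not the model of [27].

```python
def window_counts(energies_mev, counts, lo_mev=1.37, hi_mev=1.57):
    """Total counts in the potassium (K-40, 1.46 MeV) energy window."""
    return sum(c for e, c in zip(energies_mev, counts) if lo_mev <= e <= hi_mev)


def fit_line(xs, ys):
    """Ordinary least-squares line y = a + b*x; returns (a, b)."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    b = (sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
         / sum((x - x_bar) ** 2 for x in xs))
    return y_bar - b * x_bar, b


# Hypothetical coarse spectra (bin centres in MeV) and lab Ka values (mg/kg).
energies = [1.30, 1.40, 1.46, 1.50, 1.60]
spectra = [
    [50, 30, 80, 25, 40],
    [52, 34, 95, 29, 41],
    [49, 38, 110, 33, 39],
    [51, 41, 124, 36, 42],
]
ka_lab = [95.0, 120.0, 150.0, 170.0]

k_counts = [window_counts(energies, s) for s in spectra]
a, b = fit_line(k_counts, ka_lab)
new_spectrum = [50, 36, 102, 31, 40]
print(f"predicted Ka = {a + b * window_counts(energies, new_spectrum):.0f} mg/kg")
```

A univariate model of this kind discards the rest of the spectrum. A multivariate model such as PLSR can draw on all of the bins at once, which is consistent with its better performance in [27].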

Fig. 4. (a) Recommendation maps of K2O fertilization developed from on-the-go available potassium (Ka) predicted by gamma-ray spectral analysis with PLSR [27]. (b) ECa maps for the four signals with raw and corrected coordinates; the inset for the P2 signal shows how the sawtooth pattern disappears with the corrected coordinates [20].

The integration of on-the-go soil sensors with GPS and variable rate fertilizer application has the potential to significantly improve the efficiency and sustainability of nutrient management in precision agriculture. However, several challenges must still be addressed to fully realize the benefits of this technology. One of the main challenges is the need for accurate and reliable soil nutrient maps, which require high-quality soil sensing data and robust calibration models. In a recent study, Jiménez et al. [20] developed an approach to correct coordinate mismatches in field measurements collected on the move. The core of the method is the optimization of the time lag between sensor readings and GPS positions, which significantly improved the accuracy of the spatial data. The team demonstrated the method using ECa data gathered with electromagnetic induction (EMI) sensors paired with GPS receivers, and coordinate mismatches were notably reduced when the optimized time lag was applied. The optimized time lags (Δt) and the corresponding average spatial offsets (〈Δs〉) varied with signal configuration and search strategy: with a 1 m coil spacing, 〈Δs〉 ranged from 2.0 to 3.3 m, while a 2 m coil spacing gave 〈Δs〉 from 2.6 to 4.0 m. These offsets depended on the driving speed during data collection. The optimal Δt values were consistent across overlapping measurements, with the S1 and S2 search strategies yielding similar time lags when such overlaps existed; without overlapping data, only S2 provided reliable Δt values. The effect of the correction was also evident in the spatial correlation structure of the ECa data (Fig. 4b). The average ECa and its coefficient of variation (CV) depended on depth, with ECa increasing and CV decreasing as depth increased.
Notably, the average ECa was higher for data set C, which suggested that the northwest part of the field, where ECa values were lower, was not included owing to technical issues. This research offers a robust way to improve the quality of spatial data from on-the-go field measurements. By minimizing positional errors, the method paves the way for more accurate soil mapping and better-informed farm management decisions.
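
Once a time lag Δt has been chosen, applying it amounts to re-reading the GPS track at the shifted time, and the resulting spatial offset is roughly the driving speed times Δt. The sketch below applies a given lag by linear interpolation between GPS fixes. It does not reproduce the S1/S2 search for the optimal Δt in [20]. The function names, the data, and the sign convention are all assumptions; under that convention, a positive lag assigns each reading to the position occupied lag_s seconds earlier.

```python
from bisect import bisect_left


def position_at(times, east, north, t):
    """Linearly interpolate a time-sorted GPS track at time t (clamped to its ends)."""
    if t <= times[0]:
        return east[0], north[0]
    if t >= times[-1]:
        return east[-1], north[-1]
    k = bisect_left(times, t)  # first fix with time >= t, so times[k-1] < t <= times[k]
    f = (t - times[k - 1]) / (times[k] - times[k - 1])
    return (east[k - 1] + f * (east[k] - east[k - 1]),
            north[k - 1] + f * (north[k] - north[k - 1]))


def apply_time_lag(readings, times, east, north, lag_s):
    """Re-georeference (time, value) sensor readings using the position at time - lag_s."""
    return [(*position_at(times, east, north, t - lag_s), value) for t, value in readings]


# Hypothetical track: driving east at 2 m/s with one fix per second.
gps_t = [0.0, 1.0, 2.0, 3.0, 4.0]
gps_e = [0.0, 2.0, 4.0, 6.0, 8.0]
gps_n = [0.0, 0.0, 0.0, 0.0, 0.0]
eca = [(3.0, 25.0), (3.5, 27.5)]  # (time in s, ECa in mS/m)

print(apply_time_lag(eca, gps_t, gps_e, gps_n, lag_s=1.5))
# → [(3.0, 0.0, 25.0), (4.0, 0.0, 27.5)]
```

With a lag of 1.5 s at 2 m/s, readings move 3 m along the track, the same order as the average offsets of 2.0–4.0 m reported above.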

Despite these challenges, the integration of on-the-go soil sensors with GPS and variable rate fertilizer application is an active area of research and development in precision agriculture. Researchers are developing new sensor technologies and data analysis methods to improve the accuracy and reliability of soil nutrient mapping, such as multi-sensor data fusion and machine learning algorithms [4, 9, 28, 31]. Industry stakeholders are also working to develop standards and protocols for data exchange and communication between different components of precision agriculture systems, such as the ISOBUS standard for agricultural equipment [5, 35]. As these technologies continue to advance and become more accessible, it is expected that they will play an increasingly important role in optimizing nutrient management and improving the sustainability of agricultural production.

FACTORS AFFECTING PERFORMANCE OF ON-THE-GO SOIL SENSORS

While on-the-go soil sensors have shown great promise for real-time, high-resolution soil nutrient mapping, several factors can affect their performance and reliability under field conditions. One of the most significant is soil moisture content, which influences the electrical conductivity, dielectric properties, and reflectance spectra of soils, the quantities on which many on-the-go sensing technologies are based. For example, Zhou et al. [68] addressed the prediction of soil total nitrogen (TN) concentration by NIR spectroscopy, which had been hindered by interference from soil moisture and particle size. They developed a coupled elimination method (Fig. 5a) that combines a moisture absorption correction index (MACI) and a particle size correction index (PSCI) to mitigate these interferences in real time. The coupled method improved the prediction accuracy of TN concentration: applying MACI and PSCI reduced the RMSEP from 0.1% to 0.07%, a substantial gain in the accuracy of NIR spectroscopy for soil analysis.
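For readers less familiar with the metric, RMSEP is the root mean square of the differences between predicted and reference values on independent validation samples, RMSEP = sqrt(mean((ŷ − y)²)). The helpers below are a generic sketch (the function names are illustrative, not from Zhou et al. [68]); they also show that the quoted drop from 0.1% to 0.07% TN corresponds to a relative reduction of about 30%.

```python
import numpy as np

def rmsep(observed, predicted):
    """Root mean square error of prediction on an independent validation set."""
    obs = np.asarray(observed, dtype=float)
    pred = np.asarray(predicted, dtype=float)
    return float(np.sqrt(np.mean((pred - obs) ** 2)))

def relative_reduction(before, after):
    """Percentage by which an error metric decreased."""
    return 100.0 * (before - after) / before

# RMSEP values for TN (%) quoted in the text: 0.10 before, 0.07 after correction
print(f"{relative_reduction(0.10, 0.07):.0f}% lower RMSEP")  # 30% lower RMSEP
```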

Fig. 5. (a) Flowchart of the coupled elimination method [68]. (b) Maps showing spatial variability in field 98 compared with carbon values from soil sampling: wetness index map (top left); aerial photograph, blue band (top right); soil carbon (SC) prediction using the aerial photograph together with topographical features (bottom) [37]. (c) Flow diagram of the smart soil property prediction system [1].

Soil texture and heterogeneity can also affect the performance of on-the-go soil sensors. Soil texture refers to the relative proportions of sand, silt, and clay particles in a soil, which can influence the soil’s physical and chemical properties. Soil heterogeneity refers to the spatial variability of soil properties within a field, which can be caused by factors such as topography, parent material, and management practices. Reyes and Ließ [44] recently reported that soil heterogeneity can significantly affect the results of spectral data processing for field-scale SOC monitoring. Heterogeneous soils have variable compositions, which can lead to differences in the spectral signatures that are used to estimate SOC content. This variability can result from differences in soil types, mineralogy, moisture, and other soil properties. The models used for spectral data processing often require calibration and validation against reference SOC measurements. Heterogeneous soils can make it difficult to obtain representative samples for these purposes, potentially reducing the accuracy of the models. Microsite conditions such as shading, vegetation cover, and soil surface roughness can influence the spectral reflectance. In heterogeneous fields, these effects can vary widely across the area, complicating the interpretation of spectral data. Accounting for soil heterogeneity often requires more complex models that can handle the variability in the data. This can increase the computational demand and the need for more sophisticated algorithms. An appropriate sampling strategy is crucial when dealing with heterogeneous soils. A stratified sampling approach might be necessary to ensure that all variations are adequately represented.
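A stratified sampling design of this kind can be sketched as follows, assuming a dense auxiliary layer (here a hypothetical ECa survey) is available to define strata. Quantile classes of the covariate serve as strata, and reference samples are allocated to each stratum in proportion to its size so that every part of the variability is represented. The function name, the quantile stratification and the proportional allocation are illustrative choices, not a procedure from Reyes and Ließ [44].

```python
import numpy as np

def stratified_sample(covariate, n_strata, n_samples, seed=0):
    """Choose sampling locations so that every covariate stratum is represented.

    Strata are quantile classes of the covariate; samples are allocated in
    proportion to stratum size (at least one per stratum) and drawn at random.
    """
    values = np.asarray(covariate, dtype=float)
    edges = np.quantile(values, np.linspace(0.0, 1.0, n_strata + 1))
    strata = np.clip(np.searchsorted(edges, values, side="right") - 1, 0, n_strata - 1)
    rng = np.random.default_rng(seed)
    chosen = []
    for s in range(n_strata):
        members = np.flatnonzero(strata == s)
        if members.size == 0:
            continue
        k = max(1, round(n_samples * members.size / values.size))
        chosen.extend(rng.choice(members, size=min(k, members.size), replace=False))
    return np.sort(np.array(chosen)), strata

# Hypothetical ECa survey with 1000 readings, right-skewed as field data often are
eca = np.random.default_rng(42).lognormal(mean=3.0, sigma=0.5, size=1000)
idx, strata = stratified_sample(eca, n_strata=4, n_samples=20)
print(len(idx), sorted(set(strata[idx].tolist())))  # 20 [0, 1, 2, 3]
```

Proportional allocation keeps the sample representative of the field as a whole; a simple random sample of the same size could, by chance, miss a small but distinct stratum altogether.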

Another important factor affecting the performance of on-the-go soil sensors is the quality of soil-to-sensor contact and the sampling depth. Many on-the-go soil sensors, such as ISEs and NIR spectrometers, require direct contact with the soil to obtain accurate measurements; poor contact can produce inaccurate or noisy data and reduce the reliability of the resulting soil maps. In addition, the sampling depth affects a sensor's ability to capture the spatial variability of soil properties, particularly in fields with variable soil profiles or layering. For example, Masch et al. [34] evaluated the effect of sampling depth on mapping soil mechanical resistance. Their sensor, equipped with three embedded load cells, measured soil mechanical resistance at three depths (0–80, 80–160, and 160–240 mm). The correlation between the soil horizontal resistance index (HRI) and the cone index (CI) improved with depth, with correlation coefficients of 0.42, 0.68, and 0.81 for the respective depth intervals. This trend suggests that deeper soil layers yield more consistent resistance measurements, likely because of reduced variability in soil properties and compaction effects at these depths. A critical depth of about 100 mm was identified, below which the soil failure mode shifted to a bearing-capacity type failure characterized by lateral soil displacement. This resulted in resistance increasing with depth and in lower data variability, which improved the sensor's performance below the critical depth.

Franceschini et al. [19] examined the impact of external factors on soil reflectance measured directly in the field and assessed several spectral correction methods for improving the prediction of soil properties relevant to liming. They found that external parameter orthogonalization (EPO), direct standardization (DS), and orthogonal signal correction (OSC) significantly improved the accuracy of on-the-go soil property predictions. EPO performed particularly well for soil pH, with R2 increasing from 0.55 to 0.82 after correction, and OSC improved the prediction of organic carbon content, with R2 rising from 0.45 to 0.78. The study highlights the effectiveness of these spectral correction techniques in mitigating the interference of varying external conditions, such as soil texture and moisture content, during spectral data acquisition.
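EPO can be summarized in a few lines. In this minimal sketch it is assumed that spectra of the same samples are available under the disturbing condition (e.g., moist, in situ) and a reference condition (e.g., air-dried, laboratory). The dominant directions of their difference matrix D are estimated by singular value decomposition, and all spectra are projected onto the orthogonal complement, Q = I − P P^T, before calibration. The number of components, the synthetic moisture band and the variable names are assumptions for illustration; this is not Franceschini et al.'s implementation.

```python
import numpy as np

def epo_projection(x_disturbed, x_reference, n_components):
    """External parameter orthogonalisation: return Q = I - P P^T.

    x_disturbed, x_reference: spectra of the SAME samples under the external
    condition and the reference condition, shape (n_samples, n_wavelengths).
    """
    d = np.asarray(x_disturbed, float) - np.asarray(x_reference, float)
    # Leading right singular vectors of D span the directions the external
    # factor (e.g. moisture) adds to the spectra.
    _, _, vt = np.linalg.svd(d, full_matrices=False)
    p = vt[:n_components].T                      # (n_wavelengths, g)
    return np.eye(d.shape[1]) - p @ p.T

# Synthetic check: moisture adds a scaled water band to otherwise identical spectra
rng = np.random.default_rng(1)
wavelength = np.linspace(0.0, 1.0, 50)
x_ref = rng.normal(size=(20, 50))
water_band = np.exp(-(((wavelength - 0.6) / 0.05) ** 2))   # hypothetical band shape
moisture = rng.uniform(0.05, 0.35, size=20)
x_moist = x_ref + moisture[:, None] * water_band

q = epo_projection(x_moist, x_ref, n_components=1)
# After projection the moisture effect is gone; calibrate on x @ q.
print(np.allclose(x_moist @ q, x_ref @ q))  # True
```

In practice the moisture effect is not exactly one spectral shape, so the number of components is usually chosen by cross-validation of the downstream calibration model.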

APPLICATIONS OF ON-THE-GO SOIL SENSORS IN PRECISION AGRICULTURE

On-the-go soil sensors have numerous applications in precision agriculture, enabling farmers and researchers to collect high-resolution, real-time data on soil properties and nutrient status. This information can be used to optimize nutrient management practices, improve crop yields, and reduce the environmental impacts of agriculture. This section discusses the applications of on-the-go soil sensors for real-time soil nutrient monitoring, soil nutrient mapping and management zone delineation, integration with decision support systems for optimized fertilization, and the economic and environmental benefits of these technologies.

One of the primary applications of on-the-go soil sensors is real-time soil nutrient monitoring. By continuously measuring soil nutrient concentrations as the sensor moves through the field, farmers can quickly identify areas of nutrient deficiency or excess and take corrective action. For example, Smolka et al. [61] developed a portable lab-on-a-chip device for soil nutrient analysis directly in the field. The device used capillary electrophoresis to quantify key soil nutrients in liquid soil extracts. In testing, it accurately measured multi-ion solutions, and its performance on real soil samples indicated strong potential for agricultural and environmental monitoring. Its quantification range suited agricultural needs: it detected nitrate and ammonium at concentrations as low as 1 ppm, and potassium and phosphate at about 10 ppm. In field tests, its nutrient readings typically agreed with those of standard laboratory equipment to within 5–10%. By providing on-site results, this mobile sensor is a significant step toward simpler soil nutrient analysis and more timely, informed soil management decisions. However, the authors noted that further improvements to both the sensor's performance and the nutrient extraction process were needed.

Another important application of on-the-go soil sensors is soil nutrient mapping and management zone delineation. By collecting high-resolution soil data across a field, farmers can create detailed maps of soil nutrient variability and use these maps to delineate management zones with similar soil properties and nutrient requirements. These management zones can then be used to guide site-specific management practices, such as variable-rate fertilization, irrigation, or seeding. For example, Ezrin et al. [17] developed a system capable of mapping soil nutrients in real time within paddy fields. The integration of this sensor with geographic information system (GIS) technology enabled the generation of accurate soil nutrient maps, facilitating better site-specific fertilizer management by farmers. Conducted in Malaysia’s Tanjung Karang paddy fields, the system was tested across 118 lots, with results indicating a strong correlation between the sensor’s readings and actual soil nutrient content. The system’s reliability was further underscored by the consistency of its nutrient maps with those produced using traditional kriging methods in ArcGIS software. With a total of 21 642 data points collected, the study demonstrated the system’s potential to enhance fertilizer application efficiency, thereby optimizing crop yields while minimizing environmental impact and production costs.
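Management zones are often delineated by clustering co-located sensor layers. The sketch below uses plain k-means (Lloyd's algorithm) on standardized features with a deterministic farthest-first initialization. It is one common option, assumed here for illustration, and not the procedure used by Ezrin et al. [17], whose maps were compared with kriging in ArcGIS. The feature layers (ECa and pH) and their values are hypothetical.

```python
import numpy as np

def kmeans_zones(features, k, n_iter=100):
    """Cluster grid cells into k management zones with Lloyd's k-means.

    features: (n_cells, n_layers) array, e.g. ECa and pH interpolated to a grid.
    Layers are standardised so each carries equal weight (constant layers
    must be removed first to avoid division by zero).
    """
    x = np.asarray(features, dtype=float)
    z = (x - x.mean(axis=0)) / x.std(axis=0)

    def sq_dist(points, centres):
        return ((points[:, None, :] - centres[None, :, :]) ** 2).sum(axis=-1)

    # Farthest-first initialisation: start at the first cell, then repeatedly
    # add the cell farthest from all centres chosen so far.
    centres = z[:1]
    for _ in range(1, k):
        far = np.argmax(sq_dist(z, centres).min(axis=1))
        centres = np.vstack([centres, z[far]])

    for _ in range(n_iter):
        labels = np.argmin(sq_dist(z, centres), axis=1)
        new = np.array([z[labels == j].mean(axis=0) if np.any(labels == j) else centres[j]
                        for j in range(k)])
        if np.allclose(new, centres):
            break
        centres = new
    return labels

# Hypothetical cells: a low-ECa/low-pH area and a high-ECa/high-pH area
rng = np.random.default_rng(0)
low = np.column_stack([rng.normal(10, 1, 50), rng.normal(5.5, 0.1, 50)])
high = np.column_stack([rng.normal(40, 1, 50), rng.normal(7.0, 0.1, 50)])
zones = kmeans_zones(np.vstack([low, high]), k=2)
print(len(set(zones[:50])), len(set(zones[50:])), zones[0] != zones[50])  # 1 1 True
```

Standardization matters here: without it, the layer with the largest numeric range (ECa in this example) would dominate the distance and effectively decide the zones alone.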

The integration of on-the-go sensors with decision support systems (DSS) is another important application in precision agriculture. DSS are computer-based tools that integrate soil, crop, and weather data to provide farmers with recommendations for management practices. By incorporating real-time sensor data, a DSS can provide more accurate, site-specific recommendations for fertilization, irrigation, and other practices. For example, Thompson and Puntel [64] developed a DSS that integrated sensors carried by unmanned aerial vehicles (UAVs) to improve nitrogen management in corn. The system used high-resolution multispectral imagery to provide dynamic, in-season nitrogen application recommendations. In field trials, the sensor-based approach increased nitrogen use efficiency (NUE) by 18.3% on average compared with the farmer's traditional nitrogen practice and reduced nitrogen rates by an average of 31 kg N/ha without compromising yield, indicating a more precise and environmentally friendly approach to nitrogen application. In another study, Dammer et al. [15] evaluated an on-the-go camera vision sensor paired with a DSS for real-time phenotyping in potato fields. The system measured green coverage to estimate biomass, providing data for crop management decisions. The tractor-mounted camera distinguished green plant tissue from soil and senescent material using specific wavelengths of light. In trials across three fields, the system scanned a 600-m strip three times and reliably captured data at speeds of up to 15 km/h.
The sensor's green coverage measurements were strongly correlated with leaf area index (LAI) and tops fresh mass during two key growth phases: before canopy closure and during senescence. Before canopy closure, for instance, a linear regression of the sensor values gave an R2 of 0.78 and an RMSE of 13.17, indicating good agreement. After canopy closure, the sensor recorded near-constant coverage of 90 to 100% despite further increases in LAI and biomass, because it views the canopy in only two dimensions.
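Green-coverage sensing of this kind can be approximated with ordinary RGB images. The sketch below is a stand-in under stated assumptions, not Dammer et al.'s sensor (which used specific wavelengths): it classifies a pixel as green vegetation when the excess-green index ExG = 2g − r − b, computed from chromatic coordinates, exceeds a threshold, and reports the vegetated fraction. The threshold value is hypothetical and would need calibration. The same measure also illustrates the saturation noted above: once every pixel is green, coverage stays at 100% however much LAI increases.

```python
import numpy as np

def green_coverage(rgb, threshold=0.05):
    """Fraction of pixels classified as green vegetation by the excess-green index.

    rgb: array of shape (height, width, 3); threshold is a hypothetical value.
    """
    img = np.asarray(rgb, dtype=float)
    total = img.sum(axis=-1, keepdims=True)
    total[total == 0] = 1.0                       # avoid 0/0 on black pixels
    r, g, b = np.moveaxis(img / total, -1, 0)     # chromatic coordinates
    exg = 2.0 * g - r - b
    return float(np.mean(exg > threshold))

# Synthetic 10 x 10 image: left half green foliage, right half brown soil
image = np.zeros((10, 10, 3))
image[:, :5] = (40, 160, 40)
image[:, 5:] = (120, 90, 60)
print(green_coverage(image))  # 0.5
```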

One approach to designing IoT sensor networks in agricultural fields is to place sensors at carefully chosen locations and use spatial interpolation to estimate soil properties across the rest of the field. Savin and Blokhin [51] proposed using updated high-resolution soil maps, derived from traditional soil maps and remote sensing data, to identify sensor locations that represent the range of soil conditions. In their study, sensors were placed in replicates on the most and least fertile areas of the field, as identified from detailed soil fertility maps. Interpolation methods were then applied to estimate soil properties between the sensor points, giving a more complete picture of the spatial variability of soil characteristics. This strategy yields sensor networks that sample the field representatively while keeping costs manageable, and the resulting high-resolution soil data can guide precision management decisions. Combining such spatial analysis techniques with the on-the-go sensing technologies covered in this review can lead to more comprehensive and cost-effective soil and crop monitoring systems that account for the inherent variability of agricultural fields.
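As an illustration of interpolating between fixed sensor points, the sketch below uses inverse distance weighting (IDW), one of the simplest options. Savin and Blokhin [51] do not necessarily use it, and geostatistical methods such as kriging are common alternatives. The power parameter of 2 and the sensor coordinates are assumptions.

```python
import numpy as np

def idw(xy_sensors, values, xy_query, power=2.0):
    """Inverse-distance-weighted estimates of a soil property at query points."""
    xy_sensors = np.asarray(xy_sensors, dtype=float)
    xy_query = np.asarray(xy_query, dtype=float)
    values = np.asarray(values, dtype=float)
    dist = np.linalg.norm(xy_query[:, None, :] - xy_sensors[None, :, :], axis=-1)
    estimates = np.empty(len(xy_query))
    for i, row in enumerate(dist):
        at_sensor = row == 0.0
        if at_sensor.any():               # query point coincides with a sensor
            estimates[i] = values[at_sensor][0]
        else:
            w = row ** -power             # closer sensors get larger weights
            estimates[i] = np.sum(w * values) / np.sum(w)
    return estimates

# Two hypothetical sensors (e.g. low- and high-fertility spots) 10 m apart
sensors = [(0.0, 0.0), (10.0, 0.0)]
readings = [10.0, 20.0]
estimates = idw(sensors, readings, [(0.0, 0.0), (2.5, 0.0), (5.0, 0.0)])
print(estimates)
```

The estimates are 10 at the first sensor, 15 at the midpoint, and 11 a quarter of the way along, where the inverse-square weights are in the ratio 9:1. IDW never predicts outside the range of the sensor readings, which is one reason placing replicates on both the most and least fertile areas matters.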

Several field case studies have also been reported. For example, Morimoto et al. [36] developed an on-the-go soil sensor system for rice transplanters that measures TD and ECa in real time. The system used ultrasonic sensors to measure TD and wheel-type electrodes to measure ECa. The authors introduced a new soil parameter, the soil fertility value (SFV), defined as ECa per unit TD, to evaluate soil conditions independently of TD variations. Field tests on eight research fields on Ishigaki Island showed that the ultrasonic sensors measured TD accurately (R2 = 0.999). The average TD was 23.5 cm, ranging from 12.2 to 39.9 cm. SFV correlated strongly with EC measured on soil samples (R2 = 0.9397); its average was 0.61 mS/cm, with a range of 0.02 to 1.11 mS/cm. Higher SFV values were observed mainly in fields that had received manure two months before the study. The system thus showed potential for assessing spatial variability in paddy fields during rice transplanting, which could support future variable rate fertilizer application.

Cosby et al. [13] developed maps to predict the density of redheaded cockchafer (RHC) larvae in pastures. Combining k-means clustering with on-the-go plant and soil sensing, they mapped RHC infestations by integrating data on soil properties, pasture biomass, and topography. Electromagnetic surveys were used to characterize soil conditions and active optical sensors to estimate pasture biomass. Combined with topographic information, these data allowed the creation of risk maps indicating areas likely to be affected by RHC. The resulting risk map classified areas by RHC larval density with 88% accuracy, a considerable improvement in the ability to predict and manage pest infestations in agricultural settings.
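Classification accuracy figures such as the 88% reported above are usually derived from a confusion matrix of observed versus mapped classes. The sketch shows overall accuracy and, as a commonly reported complement (an addition here, not a statistic quoted from Cosby et al. [13]), Cohen's kappa, which discounts the agreement expected by chance. The matrix is hypothetical.

```python
import numpy as np

def overall_accuracy(confusion):
    """Share of locations whose mapped class matches the observed class."""
    c = np.asarray(confusion, dtype=float)
    return float(np.trace(c) / c.sum())

def cohens_kappa(confusion):
    """Agreement beyond chance: (p_o - p_e) / (1 - p_e)."""
    c = np.asarray(confusion, dtype=float)
    n = c.sum()
    p_o = np.trace(c) / n
    p_e = float(np.sum(c.sum(axis=0) * c.sum(axis=1))) / n**2
    return float((p_o - p_e) / (1.0 - p_e))

# Hypothetical matrix: rows = observed larval density class (low, high),
# columns = mapped risk class (low, high)
cm = [[8, 1],
      [1, 10]]
print(overall_accuracy(cm), round(cohens_kappa(cm), 3))  # 0.9 0.798
```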

CURRENT CHALLENGES AND FUTURE PERSPECTIVES

While on-the-go soil sensors have demonstrated great potential for improving nutrient management practices in precision agriculture, there are still several challenges that need to be addressed to ensure their widespread adoption and effectiveness. This section will discuss the current challenges in improving the accuracy and reliability of on-the-go soil sensors, developing multi-sensor systems for simultaneous measurement of multiple soil properties, incorporating sensors into Internet of Things (IoT) networks for smart farming, and enhancing the affordability and adoptability of on-the-go sensor technologies.

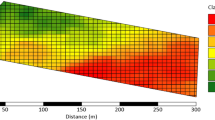

One of the main challenges in the development of on-the-go soil sensors is the need for multi-sensor systems that can measure multiple soil properties simultaneously. Individual sensors have been developed for specific soil properties such as pH, nutrient concentrations, and organic matter content, but integrating them into a single system can provide a more comprehensive assessment of soil health and fertility. For example, Muñoz and Kravchenko [37] investigated a multi-sensor approach combining on-the-go near infrared spectroscopy, topography, and aerial photography to map soil carbon in Midwest Alfisols, with the primary goal of improving the accuracy of soil carbon predictions. After comparing several calibration approaches and pre-processing techniques, they found that PLSR combined with a novel leaving-one-outlier-out cross-validation method gave the most accurate results. This approach achieved an adjusted R2 of 0.93 and an RMSE of 0.3 g kg–1 in the calibration phase, and an adjusted R2 of 0.66 and an RMSE of 0.5 g kg–1 in the validation phase. Models combining aerial photographs with topographical information were particularly effective, outperforming models based solely on near infrared data. The multi-sensor approach thus improved predictive performance and gave a more complete picture of the spatial distribution of soil carbon (Fig. 5b). Similar multi-sensor approaches were reported by Knadel et al. [29], Naderi-Boldaji et al. [38], and Hemmat et al. [21].
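The core calibration step can be sketched as follows: a minimal PLS1 (NIPALS) regression with ordinary leave-one-out cross-validation and the adjusted R² formula, R²_adj = 1 − (1 − R²)(n − 1)/(n − p − 1). The leaving-one-outlier-out scheme of Muñoz and Kravchenko [37] is not reproduced, and treating p as the number of latent variables is an assumption. The synthetic data are noise-free so the result can be checked: with as many components as predictors, PLS reduces to least squares and the cross-validated predictions are essentially exact.

```python
import numpy as np

def pls1_fit(x, y, n_comp):
    """NIPALS PLS1. Returns (x_mean, y_mean, coef): y_hat = (x - x_mean) @ coef + y_mean."""
    x_mean, y_mean = x.mean(axis=0), y.mean()
    xk, yk = x - x_mean, y - y_mean
    ws, ps, qs = [], [], []
    for _ in range(n_comp):
        w = xk.T @ yk
        w = w / np.linalg.norm(w)          # weight vector
        t = xk @ w                         # scores
        tt = t @ t
        p = xk.T @ t / tt                  # x loadings
        q = (yk @ t) / tt                  # y loading
        xk = xk - np.outer(t, p)           # deflate x and y
        yk = yk - q * t
        ws.append(w); ps.append(p); qs.append(q)
    w_mat, p_mat = np.array(ws).T, np.array(ps).T
    coef = w_mat @ np.linalg.solve(p_mat.T @ w_mat, np.array(qs))
    return x_mean, y_mean, coef

def loo_predictions(x, y, n_comp):
    """Leave-one-out cross-validated predictions."""
    pred = np.empty(len(y))
    for i in range(len(y)):
        keep = np.arange(len(y)) != i
        x_mean, y_mean, coef = pls1_fit(x[keep], y[keep], n_comp)
        pred[i] = (x[i] - x_mean) @ coef + y_mean
    return pred

def adjusted_r2(r2, n, p):
    return 1.0 - (1.0 - r2) * (n - 1) / (n - p - 1)

# Synthetic, noise-free data with 4 hypothetical predictors (NIR scores, elevation, ...)
rng = np.random.default_rng(3)
x = rng.normal(size=(30, 4))
y = x @ np.array([1.0, -2.0, 0.5, 3.0]) + 5.0
pred = loo_predictions(x, y, n_comp=4)
rmsecv = np.sqrt(np.mean((pred - y) ** 2))
r2 = 1 - np.sum((y - pred) ** 2) / np.sum((y - y.mean()) ** 2)
print(f"RMSECV={rmsecv:.1e}, adjusted R2={adjusted_r2(r2, n=30, p=4):.3f}")
```

With real spectra, the number of components would be chosen by cross-validation; the gap between calibration and validation statistics reported above (0.93 versus 0.66) is exactly what such validation is meant to expose.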

The incorporation of on-the-go soil sensors into IoT networks for smart farming is another important challenge and opportunity for the future. Othaman et al. [39] developed an IoT-based mobile device for real-time monitoring of soil nutrients in paddy fields, consisting of ECa and temperature sensors integrated with a TTGO T-Beam microcontroller with IoT connectivity. EC readings in calibration solutions of 12.88 and 150 mS/cm were within 2% of the stated values. ECa increased linearly with temperature and was linked to nutrient availability and soil depth. At 5 cm depth, soil ECa was 1.04375 mS/cm without fertilizer and 3.86 mS/cm with fertilizer; at 10 cm depth, it was 0.65625 mS/cm without fertilizer and 4.20 mS/cm with fertilizer. Thus, without fertilizer ECa decreased with depth, whereas fertilization raised ECa substantially at both depths.

Postolache et al. [41] developed and assessed an information system for soil nutrient monitoring in horticulture based on IoT technologies and mobile applications. The system used sensors for soil moisture, pH, electrical conductivity, temperature, and nitrogen, phosphorus, and potassium concentrations. Laboratory tests confirmed the sensors' sensitivity, with precise measurements from 5% soil moisture content upward. Field tests were conducted at the Lisbon Botanical Garden, where sensor nodes were installed near 12 tree species, and data were collected under varying conditions, particularly before and after rainfall, to determine the effect of soil wetness on nutrient levels.
The system effectively detected changes in soil parameters, in particular a clear response to variations in soil moisture, which is critical for nutrient availability to plants.

Similarly, Aarthi et al. [1] developed an IoT-based prototype for monitoring soil fertility and crop growth in backyard gardening. The prototype comprised sensors for soil pH, ECa, temperature, and moisture, connected to an Arduino microcontroller. Sensor data were transmitted to a Raspberry Pi gateway and then to a CloudMQTT server for storage and analysis (Fig. 5c). The prototype was tested in a backyard garden by monitoring spinach from sowing to harvest, and its measurements were validated against laboratory analyses. The sensor read a soil pH of 6 versus 6.7 in the laboratory, a difference of 0.7 pH units (about 10%), and a soil EC of 0.48 versus 0.64, a difference of 0.16 (25% of the laboratory value). These differences were judged acceptable for on-field monitoring in gardening applications. The study also tracked soil temperature and humidity over 25 days, providing data for scheduling irrigation and fertilization of the spinach crop.

The state of the open soil surface can significantly influence spectral reflectance and, consequently, the accuracy of soil property predictions from on-the-go sensors and remote sensing data. As discussed by Prudnikova et al. [42], the open surface of arable soils is constantly altered by agricultural machinery and by natural factors such as precipitation, which change soil moisture, roughness, and surface material composition.
These changes can result in differences between the surface layer and the arable horizon, affecting the reliability of soil property prediction models. Therefore, when using on-the-go soil sensors and remote sensing data for precision agriculture, it is crucial to consider the state of the open soil surface during the survey and to develop models that account for these variations. This can be achieved by incorporating information on soil surface state, such as moisture content, surface roughness, and the presence of crop residues, into the prediction models. By doing so, the accuracy and reproducibility of soil property assessments can be improved, enabling more effective precision agriculture practices.
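Because EC readings rise with temperature, as Othaman et al. [39] observed, IoT EC nodes commonly report values normalized to a 25 °C reference. Below is a minimal sketch of the widely used linear compensation, EC25 = EC_T / (1 + α(T − 25)). The coefficient α = 0.02 per °C is an assumed typical value for aqueous solutions; soil-specific coefficients or nonlinear corrections may be needed in practice, and the function name is illustrative.

```python
def ec_at_25c(ec_measured, temp_c, alpha=0.02):
    """Normalise an EC reading to 25 °C with linear temperature compensation.

    alpha is the fractional change in EC per °C; 0.02 is an assumed typical
    value and should be calibrated for the sensor and medium in use.
    Works on plain floats or NumPy arrays.
    """
    return ec_measured / (1.0 + alpha * (temp_c - 25.0))

# A reading of 1.20 mS/cm taken at 35 °C corresponds to about 1.00 mS/cm at 25 °C
print(round(ec_at_25c(1.20, 35.0), 2))  # 1.0
```

Normalizing before comparing readings across days or depths prevents soil temperature differences from being mistaken for differences in nutrient status.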

CONCLUSIONS

On-the-go soil sensors have emerged as promising tools for real-time, high-resolution soil nutrient monitoring in precision agriculture. Optical sensors, such as Vis-NIR, MIR, and ATR spectroscopy, and electrochemical sensors, including ISEs and ISFETs, have demonstrated their potential for accurate and rapid assessment of soil nutrient concentrations directly in the field. The integration of these sensors with positioning systems, such as GPS, enables the generation of detailed soil nutrient maps, which can be used to guide site-specific management practices and optimize fertilizer application rates. However, several challenges need to be addressed to ensure the widespread adoption and effectiveness of on-the-go soil sensors, including the influence of soil heterogeneity, moisture content, and sampling depth on sensor performance, the need for multi-sensor systems for simultaneous measurement of multiple soil properties, and the incorporation of sensors into IoT networks for smart farming. As research continues to advance in this field, it is expected that on-the-go soil sensors will play an increasingly important role in precision agriculture, contributing to improved nutrient use efficiency, reduced environmental impacts, and enhanced sustainability of agricultural systems.

REFERENCES

R. Aarthi, D. Sivakumar, and V. Mariappan, “Smart soil property analysis using IoT: a case study implementation in backyard gardening,” Procedia Comput. Sci. 218, 2842–2851 (2023). https://doi.org/10.1016/j.procs.2023.01.255

M. R. Abisha and J. P. A. Jose, “Experimental investigation on soil stabilization technique by adding nano-aluminium oxide additive in clay soil,” Matéria (Rio J.) 28, e20220272 (2023). https://doi.org/10.1590/1517-7076-RMAT-2022-0272

V. I. Adamchuk, E. D. Lund, B. Sethuramasamyraja, M. T. Morgan, A. Dobermann, and D. B. Marx, “Direct measurement of soil chemical properties on-the-go using ion-selective electrodes,” Comput. Electron. Agric. 48 (3), 272–294 (2005). https://doi.org/10.1016/j.compag.2005.05.001

K. Adhikari, D. R. Smith, H. Collins, C. Hajda, B. S. Acharya, and P. R. Owens, “Mapping within-field soil health variations using apparent electrical conductivity, topography, and machine learning,” Agronomy 12 (5), 1019 (2022). https://doi.org/10.3390/agronomy12051019

M. Ammoniaci, S.-P. Kartsiotis, R. Perria, and P. Storchi, “State of the art of monitoring technologies and data processing for precision viticulture,” Agriculture 11 (3), 201 (2021). https://doi.org/10.3390/agriculture11030201

T. Angelopoulou, A. Balafoutis, G. Zalidis, and D. Bochtis, “From laboratory to proximal sensing spectroscopy for soil organic carbon estimation—a review,” Sustainability 12 (2), 443 (2020). https://doi.org/10.3390/su12020443

G. Archbold, C. Parra, H. Carrillo, and A. M. Mouazen, “Towards the implementation of ISFET sensors for in-situ and real-time chemical analyses in soils: a practical review,” Comput. Electron. Agric. 209, 107828 (2023). https://doi.org/10.1016/j.compag.2023.107828

C. Augusto Euphrosino, A. E. P. G. de A. Jacintho, L. Lorena Pimentel, G. Camarini, and P. S. P. Fontanini, “Tijolos de solo-cimento usados para Habitação de Interesse social (HIS) em mutirão: estudo de caso em olaria comunitária,” Matéria (Rio J.) 27, e202147087 (2022). https://doi.org/10.1590/1517-7076-RMAT-2021-47087

B. Boiarskii, I. Vaitekhovich, S. Tanaka, D. Güneş, T. Sato, and H. Hasegawa, “Comparative analysis of remote sensing via drone and on-the-go soil sensing via Veris U3: a dynamic approach,” Environ. Sci. Proc. 29 (1), 11 (2023). https://doi.org/10.3390/ECRS2023-15846

R. S. Bricklemyer and D. J. Brown, “On-the-go VisNIR: potential and limitations for mapping soil clay and organic carbon,” Comput. Electron. Agric. 70 (1), 209–216 (2010). https://doi.org/10.1016/j.compag.2009.10.006

S. Cao, P. Sun, G. Xiao, Q. Tang, X. Sun, H. Zhao, S. Zhao, H. Lu, and Z. Yue, “ISFET-based sensors for (bio)chemical applications: a review,” Electrochem. Sci. Adv. 3 (4), e2100207 (2023). https://doi.org/10.1002/elsa.202100207

M. Chen, M. Zhang, X. Wang, Q. Yang, M. Wang, G. Liu, and L. Yao, “An all-solid-state nitrate ion-selective electrode with nanohybrids composite films for in-situ soil nutrient monitoring,” Sensors 20 (8), 2270 (2020). https://doi.org/10.3390/s20082270

A. M. Cosby, G. A. Falzon, M. G. Trotter, J. N. Stanley, K. S. Powell, and D. W. Lamb, “Risk mapping of redheaded cockchafer (Adoryphorus couloni) (Burmeister) infestations using a combination of novel k-means clustering and on-the-go plant and soil sensing technologies,” Precis. Agric. 17 (1), 1–17 (2016). https://doi.org/10.1007/s11119-015-9403-z

V. Cviklovič, M. Mojžiš, R. Majdan, K. Kollárová, Z. Tkáč, R. Abrahám, and S. Masarovičová, “Data acquisition system for on-the-go soil resistance force sensor using soil cutting blades,” Sensors 22 (14), 5301 (2022). https://doi.org/10.3390/s22145301

K.-H. Dammer, V. Dworak, and J. Selbeck, “On-the-go phenotyping in field potatoes using camera vision,” Potato Res. 59 (2), 113–127 (2016). https://doi.org/10.1007/s11540-016-9315-y

G. Dimeski, T. Badrick, and A. S. John, “Ion selective electrodes (ISEs) and interferences—a review,” Clin. Chim. Acta 411 (5), 309–317 (2010). https://doi.org/10.1016/j.cca.2009.12.005

M. Ezrin, W. Aimrun, M. Amin, and S. Bejo, “Development of real time soil nutrient mapping system in paddy field,” J. Teknol. 78 (1–2), 125–131 (2016).

D. A. Fagerman, Exploration of Time-Resolved Raman Analysis for On-the-Go Nitrate Sensing (Purdue University, 2010).

M. H. D. Franceschini, J. A. M. Demattê, L. Kooistra, H. Bartholomeus, R. Rizzo, C. T. Fongaro, and J. P. Molin, “Effects of external factors on soil reflectance measured on-the-go and assessment of potential spectral correction through orthogonalisation and standardisation procedures,” Soil Tillage Res. 177, 19–36 (2018). https://doi.org/10.1016/j.still.2017.10.004

A. González Jiménez, Y. Pachepsky, J. L. Gómez Flores, M. Ramos Rodríguez, and K. Vanderlinden, “Correcting on-the-go field measurement–coordinate mismatch by minimizing nearest neighbor difference,” Sensors 22 (4), 1496 (2022). https://doi.org/10.3390/s22041496

A. Hemmat, T. Rahnama, and Z. Vahabi, “A horizontal multiple-tip penetrometer for on-the-go soil mechanical resistance and acoustic failure mode detection,” Soil Tillage Res. 138, 17–25 (2014). https://doi.org/10.1016/j.still.2013.12.003

Y. Hong, M. A. Munnaf, A. Guerrero, S. Chen, Y. Liu, Z. Shi, and A. M. Mouazen, “Fusion of visible-to-near-infrared and mid-infrared spectroscopy to estimate soil organic carbon,” Soil Tillage Res. 217, 105284 (2022). https://doi.org/10.1016/j.still.2021.105284

Y. Hong, S.-O. Chung, J. Park, and Y. Hong, “Portable soil pH sensor using ISFET electrode,” J. Inf. Commun. Convergence Eng. 20 (1), 49–57 (2022). https://doi.org/10.6109/jicce.2022.20.1.49

C. Hutengs, M. Seidel, F. Oertel, B. Ludwig, and M. Vohland, “In situ and laboratory soil spectroscopy with portable visible-to-near-infrared and mid-infrared instruments for the assessment of organic carbon in soils,” Geoderma 355, 113900 (2019). https://doi.org/10.1016/j.geoderma.2019.113900

M. Joly, L. Mazenq, M. Marlet, P. Temple-Boyer, C. Durieu, and J. Launay, “Multimodal probe based on ISFET electrochemical microsensors for in-situ monitoring of soil nutrients in agriculture,” Proceedings 1 (4), 420 (2017). https://doi.org/10.3390/proceedings1040420

M. Joly, M. Marlet, C. Durieu, C. Bene, J. Launay, and P. Temple-Boyer, “Study of chemical field effect transistors for the detection of ammonium and nitrate ions in liquid and soil phases,” Sens. Actuators, B 351, 130949 (2022). https://doi.org/10.1016/j.snb.2021.130949

A. M. Kassim, S. Nawar, and A. M. Mouazen, “Potential of on-the-go gamma-ray spectrometry for estimation and management of soil potassium site specifically,” Sustainability 13 (2), 661 (2021). https://doi.org/10.3390/su13020661

R. Kinoshita, M. Tani, S. Sherpa, A. Ghahramani, and H. M. van Es, “Soil sensing and machine learning reveal factors affecting maize yield in the mid-Atlantic United States,” Agron. J. 115 (1), 181–196 (2023). https://doi.org/10.1002/agj2.21223

M. Knadel, A. Thomsen, and M. H. Greve, “Multisensor on-the-go mapping of soil organic carbon content,” Soil Sci. Soc. Am. J. 75 (5), 1799–1806 (2011). https://doi.org/10.2136/sssaj2010.0452

G. Kweon and C. Maxton, “Soil organic matter sensing with an on-the-go optical sensor,” Biosyst. Eng. 115 (1), 66–81 (2013). https://doi.org/10.1016/j.biosystemseng.2013.02.004

A. Lachgar, D. J. Mulla, and V. Adamchuk, “Implementation of proximal and remote soil sensing, data fusion and machine learning to improve phosphorus spatial prediction for farms in Ontario, Canada,” Agronomy 14 (4), 693 (2024). https://doi.org/10.3390/agronomy14040693

Z. Liu, S. Dhamankar, J. T. Evans, C. M. Allen, C. Jiang, G. M. Shaver, A. Etienne, T. J. Vyn, C. M. Puryk, and B. M. McDonald, “Development and experimental validation of a system for agricultural grain unloading-on-the-go,” Comput. Electron. Agric. 198, 107005 (2022). https://doi.org/10.1016/j.compag.2022.107005

M. R. Maleki, A. M. Mouazen, B. De Ketelaere, H. Ramon, and J. De Baerdemaeker, “On-the-go variable-rate phosphorus fertilisation based on a visible and near-infrared soil sensor,” Biosyst. Eng. 99 (1), 35–46 (2008). https://doi.org/10.1016/j.biosystemseng.2007.09.007

F. R. Masch, R. L. Hecker, G. M. Flores, P. Remirez, and R. Fernandez, “On-the-go sensor with embedded load cells for measuring soil mechanical resistance,” Cienc. Suelo 38 (1), 21–28 (2020).

A. Monteiro, S. Santos, and P. Gonçalves, “Precision agriculture for crop and livestock farming—brief review,” Animals 11 (8), 2345 (2021). https://doi.org/10.3390/ani11082345

E. Morimoto, S. Hirako, H. Yamasaki, and M. Izumi, “Development of on-the-go soil sensor for rice transplanter,” Eng. Agric., Environ. Food 6 (3), 141–146 (2013). https://doi.org/10.11165/eaef.6.141

J. D. Muñoz and A. Kravchenko, “Soil carbon mapping using on-the-go near infrared spectroscopy, topography and aerial photographs,” Geoderma 166 (1), 102–110 (2011). https://doi.org/10.1016/j.geoderma.2011.07.017

M. Naderi-Boldaji, A. Sharifi, R. Alimardani, A. Hemmat, A. Keyhani, E. H. Loonstra, P. Weisskopf, M. Stettler, and T. Keller, “Use of a triple-sensor fusion system for on-the-go measurement of soil compaction,” Soil Tillage Res. 128, 44–53 (2013). https://doi.org/10.1016/j.still.2012.10.002

N. N. C. Othaman, M. N. Isa, and R. Hussin, “IoT based soil nutrient sensing system for agriculture application,” Int. J. Nanoelectron. Mater. 14, 279–288 (2021).

S. Peets, A. M. Mouazen, K. Blackburn, B. Kuang, and J. Wiebensohn, “Methods and procedures for automatic collection and management of data acquired from on-the-go sensors with application to on-the-go soil sensors,” Comput. Electron. Agric. 81, 104–112 (2012). https://doi.org/10.1016/j.compag.2011.11.011

S. Postolache, P. Sebastião, V. Viegas, O. Postolache, and F. Cercas, “IoT-based systems for soil nutrients assessment in horticulture,” Sensors 23 (1), 403 (2023). https://doi.org/10.3390/s23010403

E. Y. Prudnikova, I. Y. Savin, and P. G. Grubina, “Satellite based assessment of agronomically important properties of agricultural soils with consideration of their surface state,” Dokuchaev Soil Bull. 115, 129–159 (2023). https://doi.org/10.19047/0136-1694-2023-115-129-159

J. Reyes and M. Ließ, “On-the-go vis-NIR spectroscopy for field-scale spatial-temporal monitoring of soil organic carbon,” Agriculture 13 (8), 1611 (2023). https://doi.org/10.3390/agriculture13081611

J. Reyes and M. Ließ, “Spectral data processing for field-scale soil organic carbon monitoring,” Sensors 24 (3), 849 (2024). https://doi.org/10.3390/s24030849

T. Roberts, “Improving nutrient use efficiency,” Turk. J. Agric. For. 32 (3), 177–182 (2008).

A. Rodionov, G. Welp, L. Damerow, T. Berg, W. Amelung, and S. Pätzold, “Towards on-the-go field assessment of soil organic carbon using Vis–NIR diffuse reflectance spectroscopy: developing and testing a novel tractor-driven measuring chamber,” Soil Tillage Res. 145, 93–102 (2015). https://doi.org/10.1016/j.still.2014.08.007

P. M. B. Rodrigues, S. A. Dantas Neto, and L. F. de A. L. Babadopulos, “Avaliação do comportamento cisalhante de misturas solo-emulsão com teores de emulsão variando de 16% a 28% em massa” [Evaluation of the shear behavior of soil-emulsion mixtures with emulsion contents ranging from 16% to 28% by mass], Matéria (Rio J.) 28, e20230062 (2023). https://doi.org/10.1590/1517-7076-RMAT-2023-0062

N. Rogovska, D. A. Laird, C.-P. Chiou, and L. J. Bond, “Development of field mobile soil nitrate sensor technology to facilitate precision fertilizer management,” Precis. Agric. 20 (1), 40–55 (2019). https://doi.org/10.1007/s11119-018-9579-0

R. K. Sahni, D. Kumar, P. S. Tiwari, V. Kumar, S. P. Kumar, and N. S. Chandel, “A DGPS based on-the-go soil nutrient mapping system: a review,” The Andhra Agric. J. 65, 1–6 (2018).

S. Saldanha, S. L. Cox, T. Militão, and J. González-Solís, “Animal behavior on the move: the use of auxiliary information and semi-supervision to improve behavioural inferences from Hidden Markov Models applied to GPS tracking datasets,” Mov. Ecol. 11 (1), 41 (2023). https://doi.org/10.1186/s40462-023-00401-5

I. Y. Savin and Y. I. Blokhin, “On optimizing the deployment of an internet of things sensor network for soil and crop monitoring on arable plots,” Dokuchaev Soil Bull. 110, 22–50 (2022). https://doi.org/10.19047/0136-1694-2022-110-22-50

M. Schirrmann, R. Gebbers, E. Kramer, and J. Seidel, “Soil pH mapping with an on-the-go sensor,” Sensors 11 (1), 573–598 (2011). https://doi.org/10.3390/s110100573

M. Schirrmann and H. Domsch, “Sampling procedure simulating on-the-go sensing for soil nutrients,” J. Plant Nutr. Soil Sci. 174 (2), 333–343 (2011). https://doi.org/10.1002/jpln.200900367

E. Scudiero, D. L. Corwin, P. T. Markley, A. Pourreza, T. Rounsaville, T. Bughici, and T. H. Skaggs, “A system for concurrent on-the-go soil apparent electrical conductivity and gamma-ray sensing in micro-irrigated orchards,” Soil Tillage Res. 235, 105899 (2024). https://doi.org/10.1016/j.still.2023.105899

M. M. Selim, “Introduction to the integrated nutrient management strategies and their contribution to yield and soil properties,” Int. J. Agron. 2020, e2821678 (2020). https://doi.org/10.1155/2020/2821678

B. Sethuramasamyraja, V. I. Adamchuk, A. Dobermann, D. B. Marx, D. D. Jones, and G. E. Meyer, “Agitated soil measurement method for integrated on-the-go mapping of soil pH, potassium and nitrate contents,” Comput. Electron. Agric. 60 (2), 212–225 (2008). https://doi.org/10.1016/j.compag.2007.08.003

Z.-Q. Shen, Y.-J. Shan, L. Peng, and Y.-G. Jiang, “Mapping of total carbon and clay contents in glacial till soil using on-the-go near-infrared reflectance spectroscopy and partial least squares regression,” Pedosphere 23 (3), 305–311 (2013). https://doi.org/10.1016/S1002-0160(13)60020-X

M. Shojaei Baghini, A. Vilouras, M. Douthwaite, P. Georgiou, and R. Dahiya, “Ultra-thin ISFET-based sensing systems,” Electrochem. Sci. Adv. 2 (6), e2100202 (2022). https://doi.org/10.1002/elsa.202100202

J. V. Sinfield, D. Fagerman, and O. Colic, “Evaluation of sensing technologies for on-the-go detection of macro-nutrients in cultivated soils,” Comput. Electron. Agric. 70 (1), 1–18 (2010). https://doi.org/10.1016/j.compag.2009.09.017

R. P. Sishodia, R. L. Ray, and S. K. Singh, “Applications of remote sensing in precision agriculture: a review,” Remote Sens. 12 (19), 3136 (2020). https://doi.org/10.3390/rs12193136

M. Smolka, D. Puchberger-Enengl, M. Bipoun, A. Klasa, M. Kiczkajlo, W. Śmiechowski, P. Sowiński, C. Krutzler, F. Keplinger, and M. J. Vellekoop, “A mobile lab-on-a-chip device for on-site soil nutrient analysis,” Precis. Agric. 18 (2), 152–168 (2017). https://doi.org/10.1007/s11119-016-9452-y

M. Sozzi, E. Bernardi, A. Kayad, F. Marinello, D. Boscaro, A. Cogato, F. Gasparini, and D. Tomasi, “On-the-go variable rate fertilizer application on vineyard using a proximal spectral sensor,” in Proceedings of the 2020 IEEE International Workshop on Metrology for Agriculture and Forestry (MetroAgriFor) (2020).

Y. Sun, D. Ma, P. Schulze Lammers, O. Schmittmann, and M. Rose, “On-the-go measurement of soil water content and mechanical resistance by a combined horizontal penetrometer,” Soil Tillage Res. 86 (2), 209–217 (2006). https://doi.org/10.1016/j.still.2005.02.022

L. J. Thompson and L. A. Puntel, “Transforming unmanned aerial vehicle (UAV) and multispectral sensor into a practical decision support system for precision nitrogen management in corn,” Remote Sens. 12 (10), 1597 (2020). https://doi.org/10.3390/rs12101597

V. Tsukor, S. Hinck, W. Nietfeld, F. Lorenz, E. Najdenko, A. Möller, D. Mentrup, T. Mosler, and A. Ruckelshausen, “Automated mobile field laboratory for on-the-go soil-nutrient analysis with the ISFET multi-sensor module,” in Proceedings of the 77th International Conference on Agricultural Engineering (AgEng 2019) (2019).

V. Tsukor, S. Hinck, W. Nietfeld, F. Lorenz, E. Najdenko, A. Möller, D. Mentrup, T. Mosler, and A. Ruckelshausen, “Concept and first results of a field-robot-based on-the-go assessment of soil nutrients with ion-sensitive field effect transistors,” in Proceedings of the 6th International Conference on Machine Control & Guidance (2018).

S. Vogel, E. Bönecke, C. Kling, E. Kramer, K. Lück, G. Philipp, J. Rühlmann, I. Schröter, and R. Gebbers, “Direct prediction of site-specific lime requirement of arable fields using the base neutralizing capacity and a multi-sensor platform for on-the-go soil mapping,” Precis. Agric. 23 (1), 127–149 (2022). https://doi.org/10.1007/s11119-021-09830-x

P. Zhou, W. Yang, M. Li, and W. Wang, “A new coupled elimination method of soil moisture and particle size interferences on predicting soil total nitrogen concentration through discrete NIR spectral band data,” Remote Sens. 13 (4), 762 (2021). https://doi.org/10.3390/rs13040762

Funding

This work was supported by the National Natural Science Foundation of China, project no. 42277017, and by the “Pioneer” and “Leading Goose” R&D Program of Zhejiang, project no. 2022C02022.

Ethics declarations

ETHICS APPROVAL AND CONSENT TO PARTICIPATE

This work does not contain any studies involving human or animal subjects.

CONFLICT OF INTEREST

The authors of this work declare that they have no conflicts of interest.

Additional information

Publisher’s Note.

Pleiades Publishing remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Xie, A., Zhou, Q., Fu, L. et al. From Lab to Field: Advancements and Applications of On-The-Go Soil Sensors for Real-Time Monitoring. Eurasian Soil Sc. (2024). https://doi.org/10.1134/S1064229324601124

DOI: https://doi.org/10.1134/S1064229324601124