Abstract

In this paper, we consider statistical inference for a class of partially linear models with high dimensional endogenous covariates when high dimensional instrumental variables are also available. A regularized estimation procedure is proposed for identifying the optimal instrumental variables and for estimating the covariate effects in the parametric and nonparametric components. Under some regularity conditions, we establish theoretical properties of the procedure, including the consistency of the optimal instrumental variable identification and of the significant covariate selection. Furthermore, simulation studies and a real data analysis are carried out to examine the finite sample performance of the proposed method.

1 Introduction

Let \(Y_{i}\) be the response variable, and let \(X_{i}\) and \(U_{i}\) be the corresponding covariates. The partially linear model has the following structure:

\[
Y_{i}=X_{i}^{T}\beta +g(U_{i})+\varepsilon _{i},\qquad i=1,\ldots ,n, \qquad (1)
\]

where \(\beta =(\beta _{1},\ldots ,\beta _{p_{n}})^{T}\) is a \(p_{n}\)-dimensional vector of unknown parameters, \(g(\cdot )\) is an unknown nonparametric function, and \(\varepsilon _{i}\) is the model error with \(E(\varepsilon _{i}|X_{i},U_{i})=0\). In this paper, we allow the dimension \(p_{n}\) to diverge with the sample size n. Model (1) increases the flexibility of linear models by allowing the intercept to be a nonparametric function, and it is one of the most popular semiparametric regression models in the literature. Owing to this flexibility, model (1) has recently attracted extensive attention; see, for example, Fan and Li (2004), Xue and Zhu (2007), Xie and Huang (2009), Huang and Zhao (2017) and Liu et al. (2018), among others.

However, in practice some covariates in a regression model may be endogenous (see Newhouse and McClellan 1998; Greenland 2000; Hernan and Robins 2006; Fan and Liao 2014). In this case, the estimation methods for model (1) listed above suffer from endogeneity bias and no longer yield consistent estimators. For models with endogenous covariates, instrumental variable adjustment provides a way to construct consistent estimators. Therefore, semiparametric instrumental variable models with endogenous covariates have recently received a great deal of attention; see, for example, Cai and Xiong (2012), Zhao and Li (2013), Yuan et al. (2016), Yang et al. (2017) and Huang and Zhao (2018), among others. An essential assumption in these studies is that both the covariates and the instrumental variables are low dimensional with fixed dimension. However, high dimensional data frequently occur in practice.

For the partially linear model (1) with high dimensional endogenous covariates, Chen et al. (2016) proposed a penalized GMM estimation procedure to select covariates. However, their regularized estimation method does not exploit the sparsity of the instrumental variables, and therefore still suffers from the curse of dimensionality when the instrumental variables are high dimensional. Taking this issue into account, in this paper we consider statistical inference for model (1) when some covariates are high dimensional and endogenous and a high dimensional set of instrumental variables is available. More specifically, we assume that the covariate X in model (1) is endogenous and satisfies the following structure:

\[
X=\varGamma Z+e, \qquad (2)
\]

where \(Z=(Z_{1},\ldots ,Z_{q_{n}})^{T}\) is the corresponding \(q_{n}\)-dimensional vector of instrumental variables, \(\varGamma\) is a \(p_{n}\times q_{n}\) matrix of unknown parameters, and e is the model error with \(E\{e|Z, U\}=0\). Furthermore, \(\varepsilon\) and e are assumed to be independent of each other, and the dimension \(q_{n}\) is also allowed to diverge with the sample size n.

In what follows, we are interested in making inference when both the covariate X and the instrumental variable Z are high dimensional. As is typical in high dimensional sparse modeling, we assume that models (1) and (2) are both sparse, in the sense that only a small subset of the parameters in \(\beta\) and \(\varGamma\) are nonzero. Our goal is to identify the optimal instrumental variables and to propose a regularized estimation procedure for model (1) based on the selected optimal instrumental variables.

Recently, penalized methods have attracted considerable attention and have proved effective for performing variable selection and parameter estimation simultaneously. Examples include the bridge penalty (Frank and Friedman 1993), the Lasso penalty (Tibshirani 1996), the SCAD penalty (Fan and Li 2001) and the MCP penalty (Zhang 2010), among others. In addition, Lee et al. (2019) present a systematic review of variable selection for high dimensional regression models. In most of this literature, however, the data are assumed to be exogenous. For high dimensional models with endogenous covariates, these variable selection procedures suffer from endogeneity bias and no longer yield consistent variable selection. Compared with existing estimation methods, our method has the following advantages. Firstly, the proposed method can identify the optimal instrumental variables and the important covariates simultaneously, which is an essential improvement over the regularized estimation procedure of Chen et al. (2016). Secondly, our regularized estimation method is based on penalized least absolute deviation estimation, and is therefore more robust than the optimal instrumental variable identification method of Lin et al. (2015). Lastly, the proposed regularized procedure for identifying optimal instrumental variables is constructed from an auxiliary regression model, which is quite different from existing identification methods for optimal instrumental variables, such as those of Lin et al. (2015) and Windmeijer et al. (2019).

The rest of this paper is organized as follows. In Sect. 2, we propose a method for identifying optimal instrumental variables based on penalized least absolute deviation estimation and an artificially constructed auxiliary regression model, and establish its theoretical properties. In Sect. 3, we propose a method for selecting significant covariates in model (1) using the selected optimal instrumental variables, and derive estimators of the parametric and nonparametric components. In Sect. 4, we propose an iterative algorithm for the regularized estimation method based on the local linear approximation. In Sect. 5, simulation studies and a real data analysis are conducted to assess the performance of the proposed method. The technical proofs of all asymptotic results are presented in the Appendix.

2 Optimal instrumental variable identification

For the identification of models (1) and (2), similar to Cai and Xiong (2012), we first impose some regularity conditions. More specifically, let \(\tilde{Z}\) be the vector of truly valid instrumental variables, which is a subset of \(Z=(Z_{1},\ldots ,Z_{q_{n}})^{T}\). We assume that the dimension of \(\tilde{Z}\) is at least the dimension of X, and that the matrix \(\varGamma\) has full row rank. These conditions ensure that models (1) and (2) are identifiable and that every endogenous variable \(X_{j}\), \(1\le j \le p_{n}\), has at least one valid instrumental variable. Because X is endogenous and Z is the corresponding instrumental variable, we have \(E(\varepsilon |X, U)\ne 0\) and \(E(\varepsilon |Z, U)=0\). In addition, since U is an exogenous covariate, we assume that the instrumental variable Z is independent of U. Let \(Z_{k}\) be the kth component of \(Z=(Z_{1},\ldots ,Z_{q_{n}})^{T}\). Then, invoking model (1), some calculations yield
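The calculation can be sketched as follows. This is our reading of (3), reconstructed only from model (1), the independence of Z and U, and \(E(\varepsilon |Z,U)=0\):

```latex
\begin{aligned}
Cov(Y,Z_{k}) &= Cov\Big(\sum_{j=1}^{p_{n}}\beta_{j}X_{j}+g(U)+\varepsilon ,\;Z_{k}\Big)\\
&= \sum_{j=1}^{p_{n}}\beta_{j}\,Cov(X_{j},Z_{k}) + Cov\big(g(U),Z_{k}\big) + Cov(\varepsilon ,Z_{k})\\
&= \sum_{j=1}^{p_{n}}\beta_{j}\,Cov(X_{j},Z_{k}).
\end{aligned}
```

Here \(Cov(g(U),Z_{k})=0\) because Z is independent of U, and \(Cov(\varepsilon ,Z_{k})=E\{Z_{k}E(\varepsilon |Z,U)\}-E(\varepsilon )E(Z_{k})=0\). Hence \(Cov(Y,Z_{k})\ne 0\) is possible only if \(Cov(X_{j},Z_{k})\ne 0\) for at least one j with \(\beta _{j}\ne 0\).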

Didelez et al. (2010) point out that if \(Z_{k}\) is an optimal instrumental variable, then \(Z_{k}\) should be significantly correlated with the endogenous covariate X. That is, if \(Cov(X_{j},Z_{k})=0\) for all \(j\in \{1,\ldots ,p_{n}\}\), then \(Z_{k}\) is an invalid instrumental variable. Hence, (3) implies that if \(Cov(Y,Z_{k})\) is significantly nonzero, then \(Z_{k}\) is an optimal instrumental variable. Based on this result, we can identify the optimal instrumental variables through the following auxiliary regression model

More specifically, if \(Z_{k}\) is an optimal instrumental variable, then the corresponding coefficient \(\theta _{k}\) should be significantly nonzero. Hence, to recover the optimal instrumental variables, we define the following penalized objective function

where \(\theta =(\theta _{1},\ldots ,\theta _{q_{n}})^{T}\) is a \(q_{n}\)-dimensional parameter vector, \(p_{\lambda _{1n}}(\cdot )\) is a prespecified penalty function, and \(\lambda _{1n}\) is a tuning parameter. In practice, many penalty functions can be used, such as the Lasso penalty (Tibshirani 1996), the SCAD penalty (Fan and Li 2001) and the MCP penalty (Zhang 2010), among others.
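The SCAD and MCP penalties named above have simple closed forms, and the local linear approximation used in Sect. 4 needs only their first derivatives. The following is a minimal NumPy sketch; the defaults a = 3.7 for SCAD (the value recommended by Fan and Li 2001) and γ = 3 for MCP are our assumptions, not settings stated in this paper.

```python
import numpy as np

def scad_penalty(theta, lam, a=3.7):
    """SCAD penalty p_lambda(|theta|) of Fan and Li (2001), elementwise."""
    t = np.abs(np.asarray(theta, dtype=float))
    linear = lam * t                                          # |theta| <= lam
    quadratic = (2 * a * lam * t - t**2 - lam**2) / (2 * (a - 1))  # lam < |theta| <= a*lam
    constant = lam**2 * (a + 1) / 2                           # |theta| > a*lam
    return np.where(t <= lam, linear, np.where(t <= a * lam, quadratic, constant))

def scad_derivative(theta, lam, a=3.7):
    """First derivative p'_lambda(|theta|) of the SCAD penalty."""
    t = np.abs(np.asarray(theta, dtype=float))
    return np.where(t <= lam, lam, np.maximum(a * lam - t, 0.0) / (a - 1))

def mcp_penalty(theta, lam, gamma=3.0):
    """MCP penalty of Zhang (2010), elementwise."""
    t = np.abs(np.asarray(theta, dtype=float))
    return np.where(t <= gamma * lam, lam * t - t**2 / (2 * gamma), gamma * lam**2 / 2)

def mcp_derivative(theta, lam, gamma=3.0):
    """First derivative of the MCP penalty: (lam - |theta| / gamma)_+."""
    t = np.abs(np.asarray(theta, dtype=float))
    return np.maximum(lam - t / gamma, 0.0)
```

For the Lasso, \(p_{\lambda }(|\theta |)=\lambda |\theta |\) and the derivative is the constant λ. All three penalties satisfy \(\lambda ^{-1}p'_{\lambda }(0+)=1\), which is the kind of behaviour required by condition (C7) below.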

Let \(\widehat{\theta }\) be the minimizer of (5). We next study the asymptotic properties of the regularized estimator \(\widehat{\theta }\). By the sparsity assumption on the instrumental variables, only a small subset of the components of \(\theta\) is nonzero. For convenience, let \(\theta _{0}\) be the true value of \(\theta\), \(\mathscr {A}_{1}=\{1\le k\le q_{n}: \theta _{0k}\ne 0 \}\) and \(\mathscr {A}_{2}=\{1\le k\le q_{n}: \theta _{0k}=0 \}\). The corresponding optimal instrumental variables and coefficient matrix are denoted by \(Z_{\mathscr {A}_{1}}\) and \(\varGamma _{\mathscr {A}_{1}}\), respectively. Then model (2) can be rewritten as

\[
X=\varGamma _{\mathscr {A}_{1}}Z_{\mathscr {A}_{1}}+e. \qquad (6)
\]

Next, we establish the asymptotic properties of the resulting estimator \(\widehat{\theta }\) under the following regularity conditions:

- (C1) The nonparametric function g(u) is r times continuously differentiable on (0, 1) with \(r \ge 2\).

- (C2) The error \(\varepsilon\) has a continuous and symmetric density \(f(\cdot )\). Moreover, \(f(\cdot )\) has finite derivatives in any neighborhood of zero.

- (C3) Let \(c_{1},\ldots ,c_{K}\) be the interior knots of [0, 1], and let \(c_{0}=0\), \(c_{K+1}=1\) and \(h_{i}=c_{i}-c_{i-1}\). Then there exists a constant c such that \(\max \{h_{i}\}/\min \{h_{i}\}\le c\) and \(\max \{|h_{i+1}-h_{i}|\}=o(\kappa _{n}^{-1})\), where \(\kappa _{n}\) is the number of interior knots.

- (C4) There exists a positive constant c such that \(\max _{i,j}|X_{ij}|<c\) and \(\max _{i,k}|Z_{ik}|<c\) in probability, where \(i=1,\ldots ,n\), \(j=1,\ldots ,p_{n}\) and \(k=1,\ldots ,q_{n}\).

- (C5) The dimensions of the covariate X and the instrumental variable Z satisfy \(p_{n}^{3}/n\rightarrow 0\) and \(q_{n}^{3}/n\rightarrow 0\) as \(n\rightarrow \infty\).

- (C6) The matrix \(\varGamma _{\mathscr {A}_{1}}\), defined in (6), has full row rank. In addition, let \(\tau _{n1}\) and \(\tau _{n2}\) be the smallest and largest eigenvalues of the matrix \(E(ZZ^{T})\), and let \(\rho _{n1}\) and \(\rho _{n2}\) be the smallest and largest eigenvalues of the matrix \(\varGamma _{\mathscr {A}_{1}} \varGamma _{\mathscr {A}_{1}}^{T}\), respectively. Then there exist constants \(0<\rho _{1}<\rho _{2}<\infty\) and \(0<\tau _{1}<\tau _{2}<\infty\) such that \(\rho _{1}<\rho _{n1}<\rho _{n2}<\rho _{2}\) and \(\tau _{1}<\tau _{n1}<\tau _{n2}<\tau _{2}\).

- (C7) The tuning parameter \(\lambda _{1n}\) satisfies \(\lambda _{1n}\rightarrow 0\) and \(\sqrt{n/q_{n}}\lambda _{1n}\rightarrow \infty\). In addition, we assume \(\liminf _{n\rightarrow \infty }\liminf _{\theta _{k}\rightarrow 0^{+}} \lambda _{1n}^{-1}p'_{\lambda _{1n}} (|\theta _{k}|)>0.\)

- (C8) Let \(a_{n}=\max \limits _{k}\left\{ |p_{\lambda _{1n}}'(|\theta _{0k}|)| :\theta _{0k}\ne 0\right\}\) and \(b_{n}=\max \limits _{k}\left\{ |p_{\lambda _{1n}}''(|\theta _{0k}|)|:\theta _{0k}\ne 0\right\}\). We assume that \(n^{1/2}a_{n}\rightarrow 0\) and \(b_{n}\rightarrow 0\) as \(n\rightarrow \infty\).

Conditions C1 and C2 are common assumptions in nonparametric and semiparametric estimation. Condition C3 implies that \(c_{0},\ldots ,c_{K+1}\) is a \(C_{0}\)-quasi-uniform sequence of partitions of [0, 1]. Conditions C4–C6 are standard assumptions for high dimensional semiparametric regression models in the literature; they ensure that models (1) and (2) are identifiable and that the proposed regularized estimation procedure is consistent. Conditions C7 and C8 are assumptions on the penalty function that ensure the consistency of the proposed variable selection method, and they are widely used in the variable selection literature (see Fan and Li 2001; Li and Liang 2008; Wang et al. 2008). Under these regularity conditions, the following Theorem 1 shows that the regularized estimator \(\widehat{\theta }\) is consistent and gives its convergence rate.

Theorem 1

Suppose the regularity conditions (C1)–(C8) hold. Then we have that

Furthermore, we show that such consistent estimators must possess the sparsity property, which is stated in the following Theorem 2.

Theorem 2

Suppose the regularity conditions (C1)–(C8) hold. Then, with probability tending to 1, \(\widehat{\theta }_{k}=0\) for all \(k\in \mathscr {A}_{2}\).

3 Regularized estimation for model parameters

Let \(Z^{*}\) denote the vector of optimal instrumental variables selected in Sect. 2. Since Theorem 2 implies that the selection of optimal instrumental variables is consistent, with probability tending to one we have \(Z_{\mathscr {A}_{1}}=Z^{*}\) when n is large enough. Then, invoking model (6), the moment estimator of \(\varGamma _{\mathscr {A}_{1}}\) is defined by

\[
\widehat{\varGamma }=\Big (\sum _{i=1}^{n}X_{i}Z_{i}^{*T}\Big )\Big (\sum _{i=1}^{n}Z_{i}^{*}Z_{i}^{*T}\Big )^{-1}. \qquad (7)
\]
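We take the moment estimator in (7) to be the sample analogue of the population identity \(E(XZ^{T})=\varGamma E(ZZ^{T})\), which follows from \(E\{e|Z,U\}=0\). A minimal NumPy sketch, assuming observations are stacked row-wise (`X` is n × p_n and `Zstar` is n × s for s selected instruments; these names and the synthetic data are ours):

```python
import numpy as np

def moment_estimator_gamma(X, Zstar):
    """Sample analogue of Gamma = E(X Z^T) {E(Z Z^T)}^{-1}.

    X: (n, p) endogenous covariates, one row per observation.
    Zstar: (n, s) selected optimal instruments.
    Returns Gamma_hat with shape (p, s).
    """
    Sxz = X.T @ Zstar        # sum_i X_i Z_i^{*T}, shape (p, s)
    Szz = Zstar.T @ Zstar    # sum_i Z_i^* Z_i^{*T}, shape (s, s), symmetric
    # Gamma_hat Szz = Sxz  <=>  Szz Gamma_hat^T = Sxz^T, solved without an explicit inverse.
    return np.linalg.solve(Szz, Sxz.T).T

def adjusted_covariates(Gamma_hat, Zstar):
    """Row i is X_i^* = Gamma_hat Z_i^*, i.e. the matrix Zstar @ Gamma_hat^T."""
    return Zstar @ Gamma_hat.T

# Usage on noise-free synthetic data (hypothetical dimensions p = 3, s = 4).
rng = np.random.default_rng(0)
Zstar = rng.normal(size=(500, 4))
Gamma_true = rng.uniform(0.75, 1.0, size=(3, 4))
X = Zstar @ Gamma_true.T
Gamma_hat = moment_estimator_gamma(X, Zstar)
X_star = adjusted_covariates(Gamma_hat, Zstar)
```

In the two-stage algorithm, `Zstar` would hold the columns of Z indexed by the set selected in Stage 1.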

The optimal instrumental variable adjusted covariate is therefore defined by \(X^{*}=\widehat{\varGamma }Z^{*}\). Next, using the adjusted covariate \(X^{*}\), we proceed to identify and estimate the nonzero covariate effects in model (1). The regularized estimation objective function is defined by

Because \(g(\cdot )\) is an unknown nonparametric function, \(M_{n}(\beta ,g(\cdot ))\) cannot be optimized directly. Using the B-spline approximation technique (see Schumaker 1981), we therefore replace \(g(\cdot )\) in \(M_{n}(\beta ,g(\cdot ))\) by its basis function approximation. More specifically, let \(B(u)=(B_{1}(u),\ldots ,B_{L_{n}}(u))^{T}\) be the B-spline basis functions of order M, where \(L_{n}=\kappa _{n}+M\) and \(\kappa _{n}\) is the number of interior knots. Then g(u) can be approximated by \(g(u)\approx B(u)^{T}\gamma\), where \(\gamma =(\gamma _{1},\ldots ,\gamma _{L_{n}})^{T}\) is a vector of basis function coefficients. Substituting this approximation into \(M_{n}(\beta ,g(\cdot ))\), we obtain

where \(W_{i}=B(U_{i})\). Let \(\widehat{\beta }\) and \(\widehat{\gamma }\) be the minimizers of (8). Then \(\widehat{\beta }\) is the optimal instrumental variable based estimator of \(\beta\), and the estimator of g(u) is given by \(\widehat{g}(u)=B(u)^{T}\widehat{\gamma }\).

Remark 1

Although \(M_{n}(\beta ,g(\cdot ))\) contains the nonparametric function \(g(\cdot )\) and cannot be minimized directly, replacing \(g(\cdot )\) by its B-spline basis approximation allows us to estimate the parametric component \(\beta\) and the nonparametric component \(g(\cdot )\) simultaneously. B-spline estimation is an effective nonparametric estimation method that is widely used in the nonparametric and semiparametric regression literature. In addition, since \(M_{n}(\beta ,g(\cdot ))\) involves both the unknown parametric component \(\beta\) and the nonparametric function \(g(\cdot )\), the proposed procedure can be regarded as a semiparametric regularized estimation procedure.
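The basis matrix with rows \(W_{i}^{T}=B(U_{i})^{T}\) can be built with the Cox–de Boor recursion. The sketch below rests on our own assumptions: equally spaced interior knots on [0, 1] (which satisfy condition (C3) with c = 1), clamped boundary knots, and order M = 4, i.e. cubic splines. It returns an n × L_n matrix with L_n = κ_n + M, matching the dimension used above.

```python
import numpy as np

def bspline_basis(u, n_interior, order=4):
    """Evaluate B(u) = (B_1(u), ..., B_{L_n}(u))^T for each u in [0, 1].

    Equally spaced interior knots and clamped boundary knots give
    L_n = n_interior + order columns; row i is B(u_i)^T = W_i^T.
    """
    u = np.atleast_1d(np.asarray(u, dtype=float))
    interior = np.linspace(0.0, 1.0, n_interior + 2)[1:-1]
    knots = np.concatenate([np.zeros(order), interior, np.ones(order)])
    # Order-1 (piecewise constant) basis: indicator of [t_j, t_{j+1}).
    B = np.zeros((u.size, knots.size - 1))
    for j in range(knots.size - 1):
        B[:, j] = (knots[j] <= u) & (u < knots[j + 1])
    # Assign u = 1 to the last non-empty span so the basis is defined there.
    last = np.flatnonzero(knots[:-1] < knots[1:])[-1]
    B[u == 1.0, last] = 1.0
    # Cox-de Boor recursion: raise the order from 2 up to `order`.
    for k in range(2, order + 1):
        B_next = np.zeros((u.size, knots.size - k))
        for j in range(knots.size - k):
            left = knots[j + k - 1] - knots[j]
            right = knots[j + k] - knots[j + 1]
            if left > 0:
                B_next[:, j] += (u - knots[j]) / left * B[:, j]
            if right > 0:
                B_next[:, j] += (knots[j + k] - u) / right * B[:, j + 1]
        B = B_next
    return B

U = np.linspace(0.0, 1.0, 101)
W = bspline_basis(U, n_interior=5, order=4)  # shape (101, 9) = (n, kappa_n + M)
```

The rows of W sum to one, and \(\widehat{g}(u)=B(u)^{T}\widehat{\gamma }\) is then `bspline_basis(u, n_interior, order) @ gamma_hat` for a fitted coefficient vector `gamma_hat` (a hypothetical name here).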

Next, we study the asymptotic properties of the regularized estimators \(\widehat{\beta }\) and \(\widehat{g}(u)\). Analogous to conditions (C7) and (C8), we impose the following conditions on the penalty function used in (8).

- (C9) The tuning parameter \(\lambda _{2n}\) satisfies \(\lambda _{2n}\rightarrow 0\) and \(\sqrt{n/p_{n}}\lambda _{2n}\rightarrow \infty\). Furthermore, we assume \(\liminf _{n\rightarrow \infty }\liminf _{\beta _{j} \rightarrow 0^{+}}\lambda _{2n}^{-1}p'_{\lambda _{2n}} (|\beta _{j}|)>0.\)

- (C10) Let \(a_{n}^{*}=\max \limits _{j}\left\{ |p_{\lambda _{2n}}'(|\beta _{0j}|)| :\beta _{0j}\ne 0\right\}\) and \(b_{n}^{*}=\max \limits _{j}\left\{ |p_{\lambda _{2n}}''(|\beta _{0j}|)|:\beta _{0j}\ne 0\right\}\). We assume that \(n^{1/2}a_{n}^{*}\rightarrow 0\) and \(b_{n}^{*}\rightarrow 0\) as \(n\rightarrow \infty\).

In addition, for convenience, let \(\beta _{0}\) be the true value of \(\beta\), \(\mathscr {B}_{1}=\{1\le j\le p_{n}: \beta _{0j}\ne 0 \}\) and \(\mathscr {B}_{2}=\{1\le j\le p_{n}: \beta _{0j}=0 \}\), and let \(\gamma _{0}\) be the true value of \(\gamma\). The following Theorem 3 shows that the resulting estimator \(\widehat{\beta }\) is consistent and sparse.

Theorem 3

Suppose the regularity conditions (C1)–(C10) hold, and the number of knots \(\kappa _{n}\) satisfies \(\kappa _{n} =O(n^{1/(2r+1)})\) and \(\lambda _{2n}/\sqrt{\kappa _{n}/n}\rightarrow \infty\). Then we have that

- (i) \(\Vert \widehat{\beta }-\beta _{0}\Vert =O_{p}(\sqrt{(p_{n}+\kappa _{n})/n})\).

- (ii) \(\widehat{\beta }_{j}=0\), \(j\in \mathscr {B}_{2}\), with probability tending to 1.

Theorem 3 shows that, under some regularity conditions, the resulting estimator \(\widehat{\beta }\) is consistent and sparse. Hence the proposed regularized estimation method for \(\beta\) can be used to select the important covariates in model (1). Furthermore, the following Theorem 4 shows that the estimator of the nonparametric function g(u) is also consistent and achieves the optimal nonparametric convergence rate.

Theorem 4

Suppose the regularity conditions (C1)–(C10) hold, and the number of knots \(\kappa _{n}\) satisfies \(\kappa _{n} =O(n^{1/(2r+1)})\) and \(\lambda _{2n}/\sqrt{\kappa _{n}/n}\rightarrow \infty\). Then we have that

where r is defined in condition (C1).

4 Iterative algorithms

In this section, we give an iterative algorithm for the estimation method proposed in Sects. 2 and 3. Similar to Zou and Li (2008), we apply the local linear approximation to the penalty function \(p_{\lambda _{1n}}(\cdot )\). Then (5) can be rewritten as

where \(p_{\lambda _{1n}}'(\cdot )\) is the first-order derivative of \(p_{\lambda _{1n}}(\cdot )\), and \(\theta ^{(0)}=(\theta _{1}^{(0)},\ldots ,\theta _{q_{n}}^{(0)})^{T}\) is an initial estimator of \(\theta\), computed as the unpenalized least absolute deviation estimator. Furthermore, we construct an augmented data set \((\widetilde{Y}_{i},\widetilde{Z}_{i})\), \(i=1,\ldots ,n+q_{n}\), as follows

where \(\xi _{k}\) is the unit vector whose kth element is 1. Then (9) can be rewritten as

Hence, the penalized least absolute deviation estimator of \(\theta\) can be easily computed with the R package “quantreg” for quantile regression (see Koenker 2005). Similarly, we can obtain the penalized least absolute deviation estimators of \(\beta\) and \(\gamma\) based on (8). Our regularized algorithm therefore has two stages: in the first stage, we identify the optimal instrumental variables; in the second stage, we identify the important covariates and estimate the model parameters. As discussed above, both stages are easy to compute. More specifically, the two-stage regularized algorithm is as follows:

Stage 1. We identify the optimal instrumental variables based on model (4). Let \(\widehat{\theta }=(\widehat{\theta }_{1},\ldots ,\widehat{\theta }_{q_{n}})^{T}\) be the minimizer of the objective function (5), and let \(\mathscr {A}_{*}=\{1\le k\le q_{n}: \widehat{\theta }_{k}\ne 0\}\). We denote the instrumental variables in model (4) indexed by \(\mathscr {A}_{*}\) as \(Z^{*}\). Based on the argument in Sect. 2, \(Z^{*}\) is the vector of identified optimal instrumental variables.

Stage 2. We define the instrumental variable adjusted covariate as \(X^{*}=\widehat{\varGamma }Z^{*}\), where \(\widehat{\varGamma }\) is defined by (7). Then we identify the important covariates and estimate the model parameters based on the objective function (8). Let \(\widehat{\beta }\) and \(\widehat{\gamma }\) be the minimizers of (8); then \(\widehat{\beta }\) is the regularized estimator of \(\beta\), and the estimator of g(u) is given by \(\widehat{g}(u)=B(u)^{T}\widehat{\gamma }\).
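The augmented-data device turns the LLA-weighted problem into an unpenalized LAD fit, because a pseudo-observation \((0, w_{k}\xi _{k})\) with \(w_{k}\ge 0\) contributes \(|0-w_{k}\xi _{k}^{T}\theta |=w_{k}|\theta _{k}|\) to the LAD loss. The paper computes such fits with the R package quantreg; the Python sketch below instead solves the LAD problem as a linear program with `scipy.optimize.linprog`, a swapped-in solver. For illustration we also assume that (5) has the form \(\sum _{i}|Y_{i}-Z_{i}^{T}\theta |+n\sum _{k}p_{\lambda _{1n}}(|\theta _{k}|)\) with the SCAD penalty, so the LLA weights are \(w_{k}=np'_{\lambda _{1n}}(|\theta _{k}^{(0)}|)\); the exact scaling in (5) may differ.

```python
import numpy as np
from scipy.optimize import linprog

def scad_derivative(t, lam, a=3.7):
    """SCAD derivative p'_lambda(|t|) (Fan and Li 2001)."""
    t = np.abs(t)
    return np.where(t <= lam, lam, np.maximum(a * lam - t, 0.0) / (a - 1))

def weighted_l1_lad(Y, Z, weights):
    """Minimize sum_i |Y_i - Z_i^T theta| + sum_k weights_k |theta_k|.

    The penalty is absorbed by appending q pseudo-observations
    (0, weights_k * xi_k); the resulting plain LAD problem is solved as an LP.
    """
    n, q = Z.shape
    Y_aug = np.concatenate([Y, np.zeros(q)])
    Z_aug = np.vstack([Z, np.diag(weights)])
    m = n + q
    # Variables: theta (free), u >= 0, v >= 0 with Z_aug theta + u - v = Y_aug.
    c = np.concatenate([np.zeros(q), np.ones(2 * m)])
    A_eq = np.hstack([Z_aug, np.eye(m), -np.eye(m)])
    bounds = [(None, None)] * q + [(0, None)] * (2 * m)
    res = linprog(c, A_eq=A_eq, b_eq=Y_aug, bounds=bounds, method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return res.x[:q]

def lla_step(Y, Z, theta_init, lam):
    """One local linear approximation step (Zou and Li 2008) under the
    assumed objective sum_i |Y_i - Z_i^T theta| + n * sum_k p_lam(|theta_k|)."""
    n = Z.shape[0]
    weights = n * scad_derivative(theta_init, lam)
    return weighted_l1_lad(Y, Z, weights)

# Usage on synthetic data (hypothetical sizes and coefficients).
rng = np.random.default_rng(1)
n, q = 200, 5
Z = rng.normal(size=(n, q))
theta_true = np.array([2.0, 0.0, 0.0, 1.5, 0.0])
Y = Z @ theta_true + rng.laplace(scale=0.5, size=n)
theta0 = weighted_l1_lad(Y, Z, np.zeros(q))   # unpenalized LAD initial estimator
theta1 = lla_step(Y, Z, theta0, lam=0.3)      # one LLA step
selected = np.flatnonzero(np.abs(theta1) > 1e-6)  # analogue of the set A_*
```

Stage 2 could reuse `weighted_l1_lad` with the design matrix formed by stacking \(X^{*}\) and the spline rows \(W_{i}^{T}\), putting zero weights on γ, under the assumption (suggested by conditions (C9) and (C10)) that only β is penalized in (8).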

In addition, the proposed algorithm requires choosing the penalty parameters \(\lambda _{1n}\) and \(\lambda _{2n}\) and the number of interior knots \(\kappa _{n}\). Similar to Gao and Huang (2010) and Wang et al. (2015), we suggest choosing these parameters by the Bayesian information criterion (BIC). More specifically, we choose \(\lambda _{1n}\) by minimizing the following BIC function

where \(d_{\lambda _{1n}}\) is the number of nonzero coefficients in the estimator \(\widehat{\theta }\) obtained from (5). Furthermore, \(\lambda _{2n}\) and \(\kappa _{n}\) are chosen by minimizing the following BIC function

where \(d_{\kappa _{n}}\) is the effective number of parameters in the estimators \(\widehat{\beta }\) and \(\widehat{\gamma }\) obtained from (8). Since \(\lambda _{1n}\), \(\lambda _{2n}\) and \(\kappa _{n}\) are all one-dimensional parameters, the BIC criteria can be minimized by grid search. More specifically, for \(\lambda _{1n}\), we first take 100 uniformly spaced grid points in the interval [0.01, 0.99]. Secondly, we calculate the BIC value at each grid point. Then we choose as the optimal \(\lambda _{1n}\) the grid point with the smallest of the 100 BIC values. The optimal \(\lambda _{2n}\) and \(\kappa _{n}\) are chosen by the same grid search method.
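The grid search just described can be sketched as follows. Only the grid (100 uniform points on [0.01, 0.99]) and the argmin rule come from the text; the criterion log(Σ|residual|/n) + d·log(n)/n is a hypothetical LAD-type stand-in for the BIC functions above, and `fit` is a hypothetical user-supplied callable.

```python
import numpy as np

def select_by_bic(fit, n, grid=None):
    """Return (best_value, bic_values) by grid search.

    fit(lam) must return (sum_abs_residuals, d), where d is the number of
    nonzero (or effective) parameters of the fit at tuning value lam.
    """
    if grid is None:
        grid = np.linspace(0.01, 0.99, 100)  # 100 uniform grid points
    bic = np.empty(len(grid))
    for idx, lam in enumerate(grid):
        sar, d = fit(lam)
        # Hypothetical LAD-type BIC; substitute the paper's criterion here.
        bic[idx] = np.log(sar / n) + d * np.log(n) / n
    best = int(np.argmin(bic))
    return grid[best], bic

# Toy usage: a fake fit whose absolute residuals are smallest near lam = 0.5.
def toy_fit(lam):
    return 100.0 * (1.0 + (lam - 0.5) ** 2), 3

lam_best, bic_values = select_by_bic(toy_fit, n=200)
```

The number of knots \(\kappa _{n}\) can be handled the same way by passing an integer grid, for example `grid=np.arange(1, 11)`, with `fit` refitting (8) for each candidate.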

5 Numerical results

In this section, we conduct several simulation experiments to illustrate the finite sample performance of the proposed method, and analyze a real data set for further illustration.

5.1 Simulation studies

In this subsection, we conduct Monte Carlo simulations to evaluate the finite sample performance of the proposed method. The main objectives are to evaluate the performance of the instrumental variable identification and the effectiveness of the instrumental variable based covariate adjustment. The data are generated from the following model

where \(g(u)=\sin (2\pi u)\) and \(\beta =(2.5,2,1.5,1,0,\ldots ,0)^{T}\) is a \(p_{n}\)-dimensional parameter vector. By the definition of \(\beta\), \(X_{ij}\), \(j=1,\ldots ,4\), are important covariates, and the others are unimportant. Furthermore, we generate the nonzero entries of the first four columns of \(\varGamma\) from the uniform distribution U(0.75, 1) and set all other columns to zero, and the instrumental variables are generated by \(Z_{ik}\sim N(1,1.5)\), \(k=1,\ldots ,q_{n}\). By this generating mechanism, \(Z_{ik}\), \(k=1,\ldots ,4\), are optimal instrumental variables, and the others are invalid. The exogenous covariate \(U_{i}\) is generated from U(0, 1), and the endogenous covariates \(X_{i}\) and the response \(Y_{i}\) are generated according to the model with \(\varepsilon \sim N(0,0.5)\), where \(\alpha =0.2\) and \(\alpha =0.8\) represent two different levels of endogeneity. This setup makes the covariate \(X_{i}\) endogenous, because \(E(X_{i}\varepsilon _{i})\ne 0\).

In the following simulations, the penalty function is taken as the SCAD, Lasso and MCP penalties, respectively. The sample size is taken as n = 200, 400 and 600, the dimensions of the covariate and the instrumental variable are set to \((p_{n},q_{n})=(5\lfloor n^{1/5}\rfloor , 5\lfloor n^{1/4}\rfloor )\) for each sample size, and 1000 simulation runs are performed for each case.
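For reference, the dimension rule can be evaluated directly; a short Python check (the helper name `simulation_dims` is ours):

```python
import math

def simulation_dims(n):
    """(p_n, q_n) = (5 * floor(n^(1/5)), 5 * floor(n^(1/4)))."""
    return 5 * math.floor(n ** (1 / 5)), 5 * math.floor(n ** (1 / 4))

for n in (200, 400, 600):
    print(n, simulation_dims(n))
```

This gives \((p_{n},q_{n})=(10,15)\) for n = 200 and (15, 20) for both n = 400 and n = 600, so with four nonzero components of β and four optimal instruments the designs are sparse in both X and Z.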

We first evaluate the performance of the proposed optimal instrumental variable selection procedure. We report the number of true positives (TP), the number of false positives (FP) and the false selection rate (FSR) as measures of the effectiveness of the variable selection procedure. Here TP is the number of true optimal instrumental variables correctly selected as optimal, FP is the number of invalid instrumental variables incorrectly selected as optimal, and FSR is defined as \(\hbox {FSR}=\hbox {IN/TN}\), where “IN” is the number of invalid instrumental variables incorrectly selected as optimal and “TN” is the total number of variables selected as optimal. That is, FSR is the proportion of falsely selected invalid instrumental variables among all selected variables. All these performance indicators are averaged over the 1000 simulation runs, and the results are reported in Table 1. From Table 1, we can make the following observations:
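The three indicators can be computed per run from the index set of selected instruments and then averaged over runs. A minimal sketch (the function names are ours, and returning FSR = 0 when nothing is selected is our convention, since the text does not cover that case):

```python
import numpy as np

def selection_metrics(selected, true_set):
    """Return (TP, FP, FSR) for one simulation run."""
    selected, true_set = set(selected), set(true_set)
    tp = len(selected & true_set)   # true optimal IVs that were selected
    fp = len(selected - true_set)   # invalid IVs selected ("IN")
    total = len(selected)           # total number selected ("TN")
    fsr = fp / total if total > 0 else 0.0
    return tp, fp, fsr

def average_metrics(runs, true_set):
    """Average (TP, FP, FSR) over a list of selected index sets."""
    return tuple(np.mean([selection_metrics(s, true_set) for s in runs], axis=0))

truth = {0, 1, 2, 3}   # Z_1, ..., Z_4 with zero-based indices
print(selection_metrics({0, 1, 2, 5, 7}, truth))
```

In this example three of the four optimal instruments and two invalid ones are selected, so TP = 3, FP = 2 and FSR = 2/5 = 0.4.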

- (i) For a given level of endogeneity, the FP and FSR decrease as the sample size n increases, and the TP tends to the true number 4. This suggests that the proposed identification method of optimal instrumental variables is consistent.

- (ii) For a given n, the proposed identification method performs similarly in terms of TP, FP and FSR for both levels of endogeneity. This indicates that the proposed identification method can also select the optimal instrumental variables when the level of endogeneity is low.

- (iii) For a given sample size and level of endogeneity, the simulation results are similar for the different penalties, which means that the proposed method is not sensitive to the choice of penalty function.

Next, we evaluate the performance of the proposed significant covariate selection procedure, again reporting TP, FP and FSR as measures of the effectiveness of variable selection. In addition, to evaluate the consistency of the estimator of the parametric component \(\beta\), we define the generalized mean square error (GMSE) as follows:

Based on 1000 simulation runs, we obtain 1000 GMSE values and report their median. The simulation results are reported in Table 2. From Table 2, we can see that the FP and FSR decrease as the sample size n increases, and the TP tends to the true number 4. In addition, for a given n, the results are similar in terms of TP, FP and FSR under the different penalties, which implies that the proposed covariate selection method is not sensitive to the choice of penalty function.

Lastly, we evaluate the efficiency of the proposed instrumental variable adjustment. Two methods are compared: the instrumental variable adjustment based estimation (IVE) method proposed in this paper and the naive estimation (NE) method. The latter ignores the endogeneity of the covariates and obtains the estimators of \(\beta\) and \(\gamma\) by minimizing the following objective function

where \(\gamma\) is a vector of basis function coefficients, which satisfies \(g(u)\approx B(u)^{T}\gamma\), and \(W_{i}=B(U_{i})\).

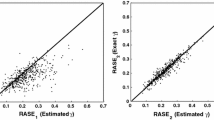

For the parametric component \(\beta\), we only present the simulation results for the nonzero component \(\beta _{1}\) with the SCAD penalty; the results for the other nonzero components are similar and are not shown. Based on 1000 simulation runs, box-plots of the 1000 absolute biases \(|\widehat{\beta }_{1}-\beta _{1}|\) are presented in Figs. 1 and 2, where Fig. 1 presents the results under the endogeneity level \(\alpha =0.2\) and Fig. 2 those under \(\alpha =0.8\). From Figs. 1 and 2, we can make the following observations:

- (i) The absolute biases of the proposed IVE method decrease as the sample size increases, whereas those of the NE method remain large even as the sample size increases. This implies that the IVE estimator is consistent, while the NE estimator suffers from endogeneity bias.

- (ii) For a given n, the IVE procedure performs similarly for the different levels of endogeneity. This indicates that the proposed IVE procedure can attenuate the effect of the endogeneity of covariates.

In addition, the simulation results for the nonparametric component g(u) when \(n=400\) are shown in Fig. 3, where Fig. 3a presents the results under the endogeneity level \(\alpha =0.2\) and Fig. 3b those under \(\alpha =0.8\). In Fig. 3, the dashed curve is the estimator based on the proposed IVE method, the dotted curve is the estimator based on the NE method, and the solid curve is the true curve of g(u).

From Fig. 3, we can see that the NE estimator is biased, whereas the proposed IVE estimator attenuates the endogeneity bias. In addition, the IVE estimators are similar for the different levels of endogeneity. This indicates that endogeneity in the parametric component still affects the estimation of the nonparametric component, and that the proposed IVE method also works for statistical inference on the nonparametric component g(u).

5.2 Real data analysis

We analyze a data set from the National Longitudinal Survey of Young Men (NLSYM) to illustrate the proposed estimation procedure. This data set contains 3010 observations from the 1976 NLSYM and has been studied by many authors (see Card 1995; Zhao and Xue 2013; Huang and Zhao 2018). The objective is to evaluate the effects of an individual’s education and work experience on the individual’s wage. More details on the data can be found in Card (1995).

Similar to Zhao and Xue (2013), we consider the following partially linear regression model

where logwage is the log of the individual’s hourly wage in cents, educ is the individual’s years of schooling, \(educ^{2}\) and \(educ^{3}\) represent the quadratic and cubic effects of educ, respectively, exper is the individual’s work experience, constructed as \(age-educ-6\), and black, south and smsa (Standard Metropolitan Statistical Area) are dummy variables whose detailed description can be found in Card (1995). In practice, an individual’s years of schooling may be correlated with factors in the model error, such as the individual’s intelligence quotient. Hence, similar to Card (1995), we treat educ as an endogenous variable and use proximity to a 4-year college as its instrumental variable.

In addition, to demonstrate the performance of the proposed selection method for optimal instrumental variables, we add 99 invalid instrumental variables, giving \(q_{n}=100\) instrumental variables in total. More specifically, we set \(Z=(Z_{1},\ldots ,Z_{100})^{T}\), where \(Z_{1}\) is the valid instrumental variable “the proximity to a 4-year college” and \(Z_{2},\ldots ,Z_{100}\) are invalid instrumental variables independently sampled from the standard normal distribution N(0, 1). The penalty function is taken as the SCAD, Lasso and MCP penalties, respectively.

Since the invalid instrumental variables are randomly generated, we repeat the optimal instrumental variable selection procedure 1000 times. The selection results are shown in Table 3, where “avg. num.” denotes the average number of selected instrumental variables over the 1000 runs, and “selected times” denotes the number of runs in which the true optimal instrumental variable is selected in the final model. From Table 3, we can see that the average number of selected instrumental variables is very close to the true number, 1, for all penalties, and that the true optimal instrumental variable is selected in every run. In addition, the results under the different penalties are similar in terms of identifying the optimal instrumental variable, which suggests that the proposed method is not sensitive to the choice of penalty function.
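The replication summary in Table 3 can be mimicked on synthetic data. The sketch below assumes that the first-stage criterion combines an absolute-deviation loss with a penalty, as the use of Knight’s (1998) identity and of \(I(\varepsilon _{i}>0)-I(\varepsilon _{i}<0)\) in the proof of Theorem 1 suggests, and uses only the Lasso penalty, because the problem is then a linear program that `scipy.optimize.linprog` can solve. The sample size n = 300, the tuning parameter λ = 0.3, the unpenalized intercept and the model for the endogenous covariate are hypothetical choices, not the NLSYM data, and the helper names are illustrative.

```python
import numpy as np
from scipy.optimize import linprog


def lad_lasso(x, Z, lam):
    """Minimise sum_i |x_i - c - Z_i^T theta| + n * lam * sum_j |theta_j| as a linear program.

    LP variables: [c, theta_plus (q), theta_minus (q), u (n), v (n)];
    c is free (unpenalised intercept), all others are non-negative.
    """
    n, q = Z.shape
    cost = np.concatenate([[0.0], np.full(2 * q, n * lam), np.ones(2 * n)])
    # Constraint c + Z theta_plus - Z theta_minus + u - v = x, i.e. residual = u - v.
    A_eq = np.hstack([np.ones((n, 1)), Z, -Z, np.eye(n), -np.eye(n)])
    bounds = [(None, None)] + [(0, None)] * (2 * q + 2 * n)
    res = linprog(cost, A_eq=A_eq, b_eq=x, bounds=bounds, method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return res.x[1:1 + q] - res.x[1 + q:1 + 2 * q]


def one_replication(rng, n=300, q=100, lam=0.3, tol=1e-6):
    """Simulate one hypothetical data set and return the indices of selected instruments."""
    z1 = rng.binomial(1, 0.5, size=n).astype(float)        # valid binary instrument
    Z = np.column_stack([z1, rng.normal(size=(n, q - 1))])  # plus q - 1 N(0, 1) noise instruments
    x = 1.0 + 2.0 * z1 + rng.normal(scale=0.5, size=n)      # endogenous covariate
    theta = lad_lasso(x, Z, lam)
    return np.flatnonzero(np.abs(theta) > tol).tolist()


def summarize(selected_sets, true_index=0):
    """Return ('avg. num.', 'selected times') as defined for Table 3."""
    avg_num = float(np.mean([len(s) for s in selected_sets]))
    selected_times = sum(true_index in s for s in selected_sets)
    return avg_num, selected_times
```

For example, `summarize([one_replication(rng) for _ in range(10)])` with `rng = np.random.default_rng(0)` gives both summaries over 10 synthetic runs; 1000 runs would simply repeat the loop. SCAD and MCP make the criterion nonconvex and would need a different solver, for instance the local linear approximation of Zou and Li (2008), which turns each step into a weighted version of this linear program.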

The regularized estimators of the parametric component \(\beta\) are shown in Table 4. From Table 4, we can see that the estimates of \(\beta _{2}\) and \(\beta _{3}\) are zero, which indicates that the quadratic and cubic effects of educ have no significant impact on the individual’s wage. In addition, the estimated curves of g(u) are shown in Fig. 4; these curves are also similar across the different penalties.

References

Cai, Z., & Xiong, H. (2012). Partially varying coefficient instrumental variables models. Statistica Neerlandica, 66, 85–110.

Card, D. (1995). Using geographic variation in college proximity to estimate the return to schooling. In L. Christofides, E. Grant, & R. Swidinsky (Eds.), Aspects of Labor Market Behaviour: Essays in Honour of John Vanderkamp (pp. 201–222). Toronto: University of Toronto Press.

Chen, B. C., Liang, H., & Zhou, Y. (2016). GMM estimation in partial linear models with endogenous covariates causing an over-identified problem. Communications in Statistics - Theory and Methods, 45, 3168–3184.

Didelez, V., Meng, S., & Sheehan, N. A. (2010). Assumptions of IV methods for observational epidemiology. Statistical Science, 25, 22–40.

Fan, J. Q., & Li, R. (2001). Variable selection via nonconcave penalized likelihood and its oracle properties. Journal of the American Statistical Association, 96, 1348–1360.

Fan, J. Q., & Li, R. Z. (2004). New estimation and model selection procedures for semiparametric modeling in longitudinal data analysis. Journal of the American Statistical Association, 99, 710–723.

Fan, J. Q., & Liao, Y. (2014). Endogeneity in dimensions. The Annals of Statistics, 42, 872–917.

Frank, I. E., & Friedman, J. H. (1993). A statistical view of some chemometrics regression tools. Technometrics, 35, 109–135.

Gao, X., & Huang, J. (2010). Asymptotic analysis of high-dimensional LAD regression with Lasso. Statistica Sinica, 20, 1485–1506.

Greenland, S. (2000). An introduction to instrumental variables for epidemiologists. International Journal of Epidemiology, 29, 722–729.

Hernan, M. A., & Robins, J. M. (2006). Instruments for causal inference: An epidemiologist’s dream? Epidemiology, 17, 360–372.

Huang, J. T., & Zhao, P. X. (2017). QR decomposition based orthogonality estimation for partially linear models with longitudinal data. Journal of Computational and Applied Mathematics, 321, 406–415.

Huang, J. T., & Zhao, P. X. (2018). Orthogonal weighted empirical likelihood based variable selection for semiparametric instrumental variable models. Communications in Statistics - Theory and Methods, 47, 4375–4388.

Knight, K. (1998). Limiting distributions for \(L_{1}\) regression estimators under general conditions. The Annals of Statistics, 26, 755–770.

Koenker, R. (2005). Quantile Regression. Cambridge: Cambridge University Press.

Lee, E. R., Cho, J., & Yu, K. (2019). A systematic review on model selection in high-dimensional regression. Journal of the Korean Statistical Society, 48, 1–12.

Lin, W., Feng, R., & Li, H. Z. (2015). Regularization methods for high-dimensional instrumental variables regression with an application to genetical genomics. Journal of the American Statistical Association, 110, 270–288.

Liu, J. Y., Lou, L. J., & Li, R. Z. (2018). Variable selection for partially linear models via partial correlation. Journal of Multivariate Analysis, 167, 418–434.

Newhouse, J. P., & McClellan, M. (1998). Econometrics in outcomes research: the use of instrumental variables. Annual Review of Public Health, 19, 17–24.

Schumaker, L. L. (1981). Spline Functions: Basic Theory. New York: Wiley.

Tibshirani, R. (1996). Regression shrinkage and selection via the Lasso. Journal of the Royal Statistical Society Series B, 58, 267–288.

Wang, H., Li, G., & Jiang, G. (2007). Robust regression shrinkage and consistent variable selection through the LAD-Lasso. Journal of Business & Economic Statistics, 25, 347–355.

Wang, M. Q., Song, L. X., & Tian, G. L. (2015). SCAD-penalized least absolute deviation regression in high-dimensional models. Communications in Statistics - Theory and Methods, 44, 2452–2472.

Windmeijer, F., Farbmacher, H., Davies, N., & Smith, G. D. (2019). On the use of the Lasso for instrumental variables estimation with some invalid instruments. Journal of the American Statistical Association, 114, 1339–1350.

Xue, L. G., & Zhu, L. X. (2007). Empirical likelihood semiparametric regression analysis for longitudinal data. Biometrika, 94, 921–937.

Xie, H., & Huang, J. (2009). SCAD-penalized regression in high-dimensional partially linear models. The Annals of Statistics, 37, 673–696.

Yang, Y. P., Chen, L. F., & Zhao, P. X. (2017). Empirical likelihood inference in partially linear single index models with endogenous covariates. Communications in Statistics - Theory and Methods, 46, 3297–3307.

Yuan, J. Y., Zhao, P. X., & Zhang, W. G. (2016). Semiparametric variable selection for partially varying coefficient models with endogenous variables. Computational Statistics, 31, 693–707.

Zhang, C. H. (2010). Nearly unbiased variable selection under minimax concave penalty. The Annals of Statistics, 38, 894–942.

Zhao, P. X., & Li, G. R. (2013). Modified SEE variable selection for varying coefficient instrumental variable models. Statistical Methodology, 12, 60–70.

Zhao, P. X., & Xue, L. G. (2013). Empirical likelihood inferences for semiparametric instrumental variable models. Journal of Applied Mathematics and Computing, 43, 75–90.

Zou, H., & Li, R. (2008). One-step sparse estimates in nonconcave penalized likelihood models. The Annals of Statistics, 36, 1509–1533.

Acknowledgements

This research is supported by the National Social Science Foundation of China (No. 18BTJ035).

Ethics declarations

Conflicts of interest

The authors declare that there are no conflicts of interest regarding the publication of this paper.

Appendix. Proof of theorems

In this Appendix, we provide the proof details of Theorems 1–4 in this paper.

Proof of Theorem 1

Let \(\delta _{n}=\sqrt{q_{n}/n}\) and \(\theta =\theta _{0}+\delta _{n} M\). We first show that, for any given \(\varepsilon >0\), there exists a large constant C such that

Let \(\varDelta _{n}(\theta )=Q_{n}(\theta )-Q_{n}(\theta _{0})\), then, invoking \(\theta _{0k}=0\) with \(k\in \mathscr {A}_{2}\), \(p_{\lambda _{1n}}(0)=0\) and model (4), some simple calculations yield

We first consider \(I_{n1}\). From Knight (1998), we have the following identity:

Hence, we have

From condition (C3), we have \(E[I(\varepsilon _{i}>0)-I(\varepsilon _{i}<0)]=0\). Hence, invoking condition (C6), we can prove

Hence by the Markov inequality, we obtain

This implies that

Next we consider \(I_{n4}\). We denote

Then

Note that

Then we obtain

This implies \(I_{n5}=o_{p}(\delta _{n}^{2})\). In addition, by the dominated convergence theorem, we can obtain

Next we consider the term \(I_{n2}\). Invoking condition (C8), some calculations yield

Then, by choosing a sufficiently large C, all terms \(I_{n2}\), \(I_{n3}\) and \(I_{n5}\) are dominated by \(I_{n6}\) with \(\Vert M\Vert =C\). Since \(I_{n6}\) is positive, invoking (13–18), we obtain that (12) holds. Furthermore, by the convexity of \(Q_{n}(\cdot )\), we have

This implies, with probability at least \(1-\varepsilon\), that there exists a local minimizer \(\widehat{\theta }\) such that \(\widehat{\theta }-\theta _{0}=O_{p}(\delta _{n})\), which completes the proof of Theorem 1. \(\square\)
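Knight’s (1998) identity, in the form in which it is usually quoted, states that for \(r\ne 0\), \(|r-s|-|r|=-s[I(r>0)-I(r<0)]+2\int _{0}^{s}[I(r\le t)-I(r\le 0)]dt\). Because the expansion of \(I_{n1}\) rests on it, a quick numerical check is reassuring. The sketch below evaluates the right-hand side with a midpoint rule (the function names and the step count m are illustrative) and compares it with the left-hand side; it also shows that the identity fails at r = 0, which is harmless when the errors have a continuous distribution.

```python
def knight_rhs(r: float, s: float, m: int = 100_000) -> float:
    """Right-hand side of Knight's identity; the integral uses an m-point midpoint rule."""
    sign_term = -s * ((r > 0) - (r < 0))
    h = s / m  # signed step, so the sum approximates the integral from 0 to s
    integral = 0.0
    for k in range(m):
        t = (k + 0.5) * h
        integral += (float(r <= t) - float(r <= 0)) * h
    return sign_term + 2.0 * integral


def knight_lhs(r: float, s: float) -> float:
    """Left-hand side |r - s| - |r|."""
    return abs(r - s) - abs(r)
```

For instance, `knight_lhs(0.3, 0.7)` and `knight_rhs(0.3, 0.7)` both equal 0.1 up to rounding and discretization error, whereas at r = 0 and s = 1 the two sides are 1 and 0.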

Proof of Theorem 2

For convenience and simplicity, let \(\theta _{0}=(\theta _{\mathscr {A}_{1}}^{T},\theta _{\mathscr {A}_{2}}^{T})^{T}\) with \(\theta _{\mathscr {A}_{1}}=\{\theta _{0k}:k\in \mathscr {A}_{1}\}\) and \(\theta _{\mathscr {A}_{2}}=\{\theta _{0k}:k\in \mathscr {A}_{2}\}\). The corresponding covariate is denoted by \(Z_{i}=(Z_{i}^{(1)T},Z_{i}^{(2)T})^{T}\). From the proof of Theorem 1, for a sufficiently large C, \(\widehat{\theta }\) lies in the ball \(\{\theta _{0}+\delta _{n}M:\Vert M\Vert \le C\}\) with probability converging to 1, where \(\delta _{n}=\sqrt{q_{n}/n}\). We denote \(\theta _{1}=\theta _{\mathscr {A}_{1}}+\delta _{n} M_{1}\) and \(\theta _{2}=\theta _{\mathscr {A}_{2}}+\delta _{n} M_{2}\) with \(\Vert M_{1}\Vert ^{2}+\Vert M_{2}\Vert ^{2}\le C^{2}\), and \(V_{n}(M_{1},M_{2})=Q_{n}(\theta _{1},\theta _{2})-Q_{n}(\theta _{\mathscr {A}_{1}},0)\), then the estimator \(\widehat{\theta }=(\widehat{\theta }_{1}^{T},\widehat{\theta }_{2}^{T})^{T}\) can also be obtained by minimizing \(V_{n}(M_{1},M_{2})\), except on an event with probability tending to zero. Hence, to prove this theorem, we only need to prove that, for any \(M_{1}\) and \(M_{2}\) satisfying \(\Vert M_{1}\Vert ^{2} +\Vert M_{2}\Vert ^{2}\le C^{2}\), if \(\Vert M_{2}\Vert >0\), then with probability tending to 1, we have

Note that

Similar to the proof of Theorem 1, we can obtain

In addition, for \(k\in \mathscr {A}_{2}\), we have \(\theta _{0k}=0\). Then invoking \(p_{\lambda _{1n}}(0)=0\), we can derive

By conditions (C7) and (C8), we have \(\sqrt{q_{n}/n}/\lambda _{1n}\rightarrow 0\) and \(p'_{\lambda _{1n}}(0)/\lambda _{1n}>0\). Hence, (23) implies that (19) holds with probability tending to 1. This completes the proof of Theorem 2. \(\square\)

Proof of Theorem 3

Since Theorem 2 implies that the selection of optimal instrumental variables is consistent, model (6) implies that, with probability tending to 1, \(X_{i}=\varGamma _{\mathscr {A}_{1}} Z_{i}^{*}+e_{i}\), \(i=1,\ldots ,n\). In addition, because \(\widehat{\varGamma }\) is the moment estimator of \(\varGamma _{\mathscr {A}_{1}}\), we can prove that \(\widehat{\varGamma }=\varGamma _{\mathscr {A}_{1}}+O_{p}(\sqrt{p_{n}/n})\). Hence, invoking \(E(e_{i})=0\), a simple calculation yields

Furthermore, we let \(\beta _{0}\) and \(\gamma _{0}\) be the true values of \(\beta\) and \(\gamma\), respectively, and denote \(R(U_{i})=g(U_{i})-W_{i}^{T}\gamma _{0}\). Then from Schumaker (1981), we have \(\Vert R(U_{i})\Vert =O_{p}(\kappa _{n}^{-r})=O_{p}(\sqrt{\kappa _{n}/n})\). Hence, invoking (24), some calculations yield

where \(\delta _{n}=\sqrt{(p_{n}+\kappa _{n})/n}\). Furthermore, we denote \(\alpha _{0}=(\beta _{0}^{T},\gamma _{0}^{T})^{T}\) and \(\alpha =(\beta ^{T},\gamma ^{T})^{T}\) with \(\alpha =\alpha _{0}+\delta _{n} M\), where M is a \((p_{n}+L_{n})\) dimensional vector. Then (25) implies that

and

where \(\xi _{i}=(X_{i}^{T},W_{i}^{T})^{T}\). Furthermore, we let \(\varDelta _{n}(\beta ,\gamma )= M_{n}(\beta ,\gamma )-M_{n}(\beta _{0},\gamma _{0})\), then from (26) and (27), we have

Hence, invoking (28) and using arguments similar to those in the proof of (13), we have that, for any given \(\varepsilon >0\), there exists a large constant C such that

This implies, with probability at least \(1-\varepsilon\), that there exist local minimizers \(\widehat{\beta }\) and \(\widehat{\gamma }\) satisfying \(\widehat{\beta }-\beta _{0}=O_{p}(\sqrt{(p_{n}+\kappa _{n})/n})\) and \(\widehat{\gamma }-\gamma _{0}=O_{p}(\sqrt{(p_{n}+\kappa _{n})/n})\). This completes the proof of part (i) of Theorem 3. \(\square\)
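The rate \(\widehat{\varGamma }-\varGamma _{\mathscr {A}_{1}}=O_{p}(\sqrt{p_{n}/n})\) used at the start of this proof can be checked informally by simulation. The sketch below assumes that the moment estimator takes the usual least-squares form \(\widehat{\varGamma }=(\sum _{i}X_{i}Z_{i}^{*T})(\sum _{i}Z_{i}^{*}Z_{i}^{*T})^{-1}\) and uses a hypothetical design with 5 endogenous covariates, 3 selected instruments and standard normal \(Z_{i}^{*}\) and \(e_{i}\). Multiplying n by 100 should shrink the estimation error by roughly a factor of 10.

```python
import numpy as np


def moment_estimator(X, Zs):
    """Gamma_hat = (sum_i X_i Z_i^T)(sum_i Z_i Z_i^T)^{-1}; rows of X and Zs are observations."""
    Sxz = X.T @ Zs                        # p x s
    Szz = Zs.T @ Zs                       # s x s, symmetric
    return np.linalg.solve(Szz, Sxz.T).T  # equals Sxz @ inv(Szz) because Szz is symmetric


def estimation_error(n, Gamma, rng):
    """Frobenius-norm error of the moment estimator for one simulated sample of size n."""
    p, s = Gamma.shape
    Zs = rng.normal(size=(n, s))          # selected instruments Z_i^*
    E = rng.normal(size=(n, p))           # first-stage errors e_i with E(e_i) = 0
    X = Zs @ Gamma.T + E                  # row i is (Gamma Z_i^* + e_i)^T
    return float(np.linalg.norm(moment_estimator(X, Zs) - Gamma))
```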

Part (ii) of Theorem 3 follows from part (i) by the same arguments as in the proof of Theorem 2, so we omit the details.
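The spline approximation bound \(R(u)=O(\kappa _{n}^{-r})\) from Schumaker (1981), used in the proofs of Theorems 3 and 4, can also be illustrated numerically. In the sketch below, the test function \(g(u)=\sin (2\pi u)\), the cubic B-spline basis with equally spaced interior knots, and the least-squares fit (standing in for the best approximating coefficient vector \(\gamma _{0}\)) are all assumptions for illustration. For a smooth g and cubic splines one expects the sup-norm error to fall roughly like \(\kappa _{n}^{-4}\), that is, by a factor of about 16 each time the knot spacing is halved.

```python
import numpy as np
from scipy.interpolate import make_lsq_spline


def spline_sup_error(g, n_interior, degree=3, n_grid=2001):
    """Sup-norm error on [0, 1] of the least-squares B-spline fit to g."""
    u = np.linspace(0.0, 1.0, n_grid)
    interior = np.linspace(0.0, 1.0, n_interior + 2)[1:-1]  # equally spaced interior knots
    # Full knot vector: boundary knots repeated degree + 1 times.
    t = np.concatenate([np.zeros(degree + 1), interior, np.ones(degree + 1)])
    spline = make_lsq_spline(u, g(u), t, k=degree)
    return float(np.max(np.abs(g(u) - spline(u))))


def g(u):
    """Hypothetical smooth test function on [0, 1]."""
    return np.sin(2.0 * np.pi * u)


# 3, 7 and 15 interior knots give 4, 8 and 16 equal subintervals.
errors = {kn: spline_sup_error(g, kn) for kn in (3, 7, 15)}
```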

Proof of Theorem 4

A simple calculation yields

where \(R(u)=g(u)-B^{T}(u)\gamma _{0}\) and \(H=\int _{0}^{1}B(u)B^{T}(u)du\). From the proof of Theorem 3, we have \(\Vert \widehat{\gamma }-\gamma _{0}\Vert =O_{p}(\sqrt{(p_{n}+\kappa _{n})/n})\). Then, from condition (C7) and \(\kappa _{n}=O(n^{1/(2r+1)})\), we can prove that \(O_{p}(\sqrt{(p_{n}+\kappa _{n})/n})=O_{p}(\sqrt{\kappa _{n}/n})=O_{p}(n^{-r/(2r+1)})\). Then, invoking \(\Vert H\Vert =O(1)\), a simple calculation yields

In addition, from conditions (C1) and (C4) and Corollary 6.21 in Schumaker (1981), we obtain \(R(u)=O(\kappa _{n}^{-r})=O(n^{-r/(2r+1)})\). Then it is easy to show that

Invoking (29–31), we complete the proof of Theorem 4. \(\square\)
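The exponent bookkeeping behind the choice of \(\kappa _{n}\) can be made explicit. Assuming that \(\kappa _{n}\) grows at the exact rate \(n^{1/(2r+1)}\), and that condition (C7) provides \(p_{n}=O(\kappa _{n})\) (which is what the reduction \(\sqrt{(p_{n}+\kappa _{n})/n}=O(\sqrt{\kappa _{n}/n})\) in the proof requires), the spline bias \(\kappa _{n}^{-r}\) and the estimation error \(\sqrt{\kappa _{n}/n}\) are of the same order:

```latex
\begin{aligned}
\kappa_n \asymp n^{1/(2r+1)}
  &\;\Longrightarrow\;
  \frac{\kappa_n}{n} \asymp n^{\frac{1}{2r+1}-1} = n^{-\frac{2r}{2r+1}},\\
\sqrt{\frac{\kappa_n}{n}} &\asymp n^{-\frac{r}{2r+1}} \asymp \kappa_n^{-r},\\
p_n = O(\kappa_n)
  &\;\Longrightarrow\;
  \sqrt{\frac{p_n+\kappa_n}{n}} = O\!\left(\sqrt{\frac{\kappa_n}{n}}\right) = O\!\left(n^{-\frac{r}{2r+1}}\right).
\end{aligned}
```

More knots would lower the bias term \(\kappa _{n}^{-r}\) but inflate the estimation term \(\sqrt{\kappa _{n}/n}\), and fewer knots would do the opposite; the rate \(n^{1/(2r+1)}\) balances the two.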

Cite this article

Liu, C., Zhao, P. & Yang, Y. Regularization statistical inferences for partially linear models with high dimensional endogenous covariates. J. Korean Stat. Soc. 50, 163–184 (2021). https://doi.org/10.1007/s42952-020-00067-4

Keywords

- Partially linear model

- High dimensional endogenous covariates

- High dimensional instrumental variables

- Regularized estimation