Abstract

By using instrumental variable technology and the partial group smoothly clipped absolute deviation penalty method, we propose a variable selection procedure for a class of partially varying coefficient models with endogenous variables. The proposed variable selection method can eliminate the influence of the endogenous variables. With appropriate selection of the tuning parameters, we establish the oracle property of this variable selection procedure. A simulation study is undertaken to assess the finite sample performance of the proposed variable selection procedure.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In many disciplines, some covariates may be endogenous in regression modeling. In this situation, the estimator based on the classical method, such as the ordinary least squares method, is not consistent any more (see Newhouse and McClellan 1998; Greenland 2000; Hernan and Robins 2006). The instrumental variable method provides a way to correct the possible endogeneity between covariates and structural errors, and can obtain consistent parameter estimators. Recently, this method has been widely used in applied statistics, econometrics, and more generally related disciplines. Because the linear instrumental variable model, which assumes that the coefficients of all covariates are constant, is sometimes too restrictive for real economic models (see Schultz 1997; Card 2001), many papers have considered the statistical inferences for semiparametric models. For example, Yao (2012) considered the efficient estimation for partially linear instrumental variable models, and proposed a semiparametric instrumental variable estimation procedure. Zhao and Xue (2013) considered the confidence region construction for regression coefficients in partially linear instrumental variable models based on the empirical likelihood method. Zhao and Li (2013) considered the variable selection for varying coefficient instrumental variable models by using the smooth-threshold estimating equations method. The varying coefficient instrumental variable model allows the effect of endogenous covariates to be varying with a covariate, and is commonly used for analysis of data measured repeatedly over time, such as time series analysis, longitudinal data analysis and functional data analysis. In practice, however, only some of the coefficients vary with certain covariate, hence one useful extension of the varying coefficient instrumental variable model is the partially varying coefficient model with endogenous variables.

where \(\theta (u)=(\theta _{1}(u),\ldots ,\theta _{p}(u))^{T}\) is a \(p\times 1\) vector of unknown functions, \(\beta =(\beta _{1},\ldots ,\beta _{q})^{T}\) is a \(q\times 1\) vector of unknown parameters, \(\varGamma \) is a \(q\times k\) matrix of unknown parameters. \(Y_{i}\) is the response variable, and \(\varepsilon _{i}\) and \(e_{i}\) are zero-mean model errors. Furthermore, we assume that \(X_{i}\) and \(U_{i}\) are exogenous covariates, \(Z_{i}\) is the endogenous covariate, and \(\xi _{i}\) is the corresponding instrumental variable. This implies that the covariate \(Z_{i}\) is correlated with the model error \(\varepsilon _{i}\), but \(X_{i}\), \(U_{i}\) and \(\xi _{i}\) are uncorrelated with \(\varepsilon _{i}\). Then we have

Model (1) is more flexible, and the linear instrumental variable model, the partially linear instrumental variable model and the varying coefficient instrumental variable model are all special cases of model (1). For model (1), Cai and Xiong (2012) considered the efficient estimation problem, and proposed a three-step estimation procedure to estimate the parametric components and the nonparametric components. However, when the number of covariates in model (1) is the large, an important problem is to select the important variables in such model.

Variable selection is a very important topic in modern statistical inference. Recently, based on some penalty methods, many variable selection procedures have been proposed. For example, Frank and Friedman (1993) proposed a variable selection procedure based on the bridge regression technology. Tibshirani (1996) proposed a variable selection procedure based on the least absolute shrinkage and selection operator (LASSO) technology. Fan and Li (2001) proposed a variable selection procedure based on smoothly clipped absolute deviation penalty (SCAD), which include bridge regression and LASSO penalty. Wang et al. (2008) extended the SCAD variable selection method to the varying coefficient model, and proposed a group SCAD (gSCAD) variable selection procedure. Zhao and Xue (2009) proposed a partial gSCAD variable selection method for the varying coefficient partially linear model. Recently, many papers considered the variable selection for varying coefficient models with high dimensional data. For example, Lin and Yuan (2012) considered the variable selection for generalized varying coefficient partially linear models with diverging number of parameters. Lian (2012) considered the variable selection for high-dimensional generalized varying coefficient models. Wang et al. (2013) considered the polynomial spline estimation for generalized varying coefficient partially linear models with a diverging number of components. However, for the case that some covariates are endogenous, these variable selection methods are not consistent, and can not be directly used any more.

To overcome this problem, in this paper, we extend the partial gSCAD variable selection method, used by Zhao and Xue (2009), to the varying coefficient partially linear regression model with endogenous covariates. We propose an instrumental variable based partial gSCAD variable selection procedure which can select significant variables in the parametric components and nonparametric components simultaneously. With the proper choice of regularization parameters, we show that the variable selection procedure is consistent, and the penalized estimators have the oracle property in the sense of Fan and Li (2001). In addition, it is noteworthy that the proposed method can attenuate the effect of the endogeneity of covariates, which is an improvement of the variable selection method used in Zhao and Xue (2009).

The rest of this paper is organized as follows. In Sect. 2, we propose the instrumental variable based partial gSCAD variable selection procedure, and establish some asymptotic properties, including the consistency and the oracle property. In Sect. 3, based on the local quadratic approximation technology, we propose an iterative algorithm for finding the penalized estimators. In Sect. 4, some simulations are carried out to assess the performance of the proposed methods. Finally, the technical proofs of all asymptotic results are provided in “Appendix”.

2 Methodology and main results

We let \(B(u)=(B_{1}(u), \ldots , B_{L}(u))^{T}\) denote B-spline basis functions with the order of M, where \(L=K+M+1\), and K is the number of interior knots. Then, \(\theta _{k}(u)\) can be approximated by

Substituting this into model (1), we can get

where \(W_{i}=I_{p}\otimes B(U_{i})\cdot X_{i} \) and \(\gamma =(\gamma _{1}^{T},\ldots ,\gamma _{p}^{T})^{T}\). Model (2) is a standard linear regression model. Note that each function \(\theta _{k}(u)\) in (1) is characterized by \(\gamma _{k}\) in (2). Then, motivated by the idea of Zhao and Xue (2009), we propose the following partial gSCAD regularized estimation

where \(\Vert \gamma _{k}\Vert _{H}=(\gamma ^{T}H\gamma )^{1/2}\), \(H=(h_{ij})_{L\times L}\) is a matrix with \(h_{ij}=\int B_{i}(u)B_{j}(u)du\), and \(p_{\lambda }(\cdot )\) is the SCAD penalty function with \(\lambda \) as a tuning parameter (see Fan and Li 2001), defined as

with \(a>2, w>0\) and \(p_{\lambda }(0)=0\).

If \(Z_{i}, i=1,\ldots ,n\) in model (1) are exogenous as well, then by Zhao and Xue (2009), it can be shown that we can get a consistent sparse solution by minimizing (3). However, \(Z_{i}\), \(i=1,\ldots ,n\) in model (1) are endogenous covariates, and then \(E(\varepsilon _{i}|Z_{i})\ne 0\). In this case, one can show that the resulting estimator, based on (3), is biased. Hence, (3) cannot be used directly to select the important variables and estimate regression coefficients any more.

Next, we propose an adjustment for (3) based on instrumental variables \(\xi _{i}, i=1,\ldots ,n\). From model (1), we have \(E(Z\xi ^{T})=\varGamma E(\xi \xi ^{T})\). Hence, the moment estimator of \(\varGamma \) can be given by

where

By the proof in the “Appendix”, we have \(\hat{\varGamma }=\varGamma +o_{p}(1)\). Note that \(E(Z_{i}|\xi _{i})=\varGamma \xi _{i}\), then an unbiased adjustment of \(Z_{i}\) can be given by \(\hat{Z}_{i}=\hat{\varGamma }\xi _{i}\). Hence, an instrumental variable based partial gSCAD regularized estimation function can be given by

Remark 1

Because the endogeneity of the covariate \(Z_{i}\) will result in the inconsistent estimation and variable selection, we replace \(Z_{i}\) in \(Q(\gamma ,\beta )\) by the adjustment \(\hat{Z}_{i}\). Note that \(\hat{\varGamma }=\varGamma +o_{p}(1)\), we have \(\hat{Z}_{i}=\varGamma \xi _{i}+o_{p}(1)\). Hence, invoking that the instrumental variable \(\xi _{i}\) is an exogenous covariate, the following asymptotic results show that such an adjustment can attenuate the effect of endogenous covariates, and give a consistent regularity estimation procedure.

Let \(\hat{\beta }\) and \(\hat{\gamma }=(\hat{\gamma }_{1}^{T},\ldots ,\hat{\gamma }_{p}^{T})^{T}\) be the solution by minimizing (4). Then, \(\hat{\beta }\) is the penalized least squares estimator of \(\beta \), and the estimator of \(\theta _{k}(u)\) can be obtained by \(\hat{\theta }_{k}(u)=B^{T}(u)\hat{\gamma }_{k}\).

Next, we study the asymptotic properties of the resulting penalized least squares estimators. Similar to Zhao and Xue (2009), we let \(\theta _{0}(\cdot )\) and \(\beta _{0}\) be the true value of \(\theta (\cdot )\) and \(\beta \) respectively. Without loss of generality, we assume that \(\beta _{l0}=0,~ l=s+1,\ldots ,q\), and \(\beta _{l0},~ l=1,\ldots ,s\) are all nonzero components of \(\beta _{0}\). Furthermore, we assume that \(\theta _{k0}(\cdot )=0,~ k=d+1,\ldots ,p\), and \(\theta _{k0}(\cdot ),~ k=1,\ldots ,d\) are all nonzero components of \(\theta _{0}(\cdot )\). Let

and

Furthermore, we let \(a_{n}=\max \{a_{1n},a_{2n}\}\) and \(b_{n}=\max \{b_{1n},b_{2n}\}\). Then, the following theorem gives the consistency of the penalized least squares estimators.

Theorem 1

Suppose that the regularity conditions C1-C5 in “Appendix” hold and the number of knots \(K=O_{p}(n^{1/(2r+1)})\), where r is defined in condition C1 in “Appendix”. If \(a_{n}\rightarrow 0\) and \(b_{n}\rightarrow 0\), as \(n\rightarrow \infty \), then,

-

(i)

\(\Vert \hat{\beta }-\beta _{0}\Vert =O_{p}(n^{\frac{-r}{2r+1}}+a_{n})\).

-

(ii)

\(\Vert \hat{\theta }_{k}(u)-\theta _{k0}(u)\Vert = O_{p}(n^{\frac{-r}{2r+1}}+a_{n}),~~k=1,\ldots ,p\).

Remark 2

For the SCAD penalty function that used in this paper, it is clear that \(a_{n}=0\) if \(\lambda \rightarrow 0\) when n is large enough. Hence, under the regularity conditions defined in the “Appendix”, the consistent penalized estimator indeed exists with probability tending to one.

Furthermore, under some conditions, we show that such consistent estimators must possess the sparsity property, which is stated as follows

Theorem 2

Suppose that the regularity conditions in Theorem 1 hold, and

If \(n^{r/(2r+1)}\lambda \rightarrow \infty \) and \(\lambda \rightarrow 0\), as \(n\rightarrow \infty \). Then, with probability tending to 1, \(\hat{\beta }\) and \(\hat{\theta }(u)\) must satisfy

-

(i)

\(\hat{\beta }_{l}=0,\quad l=s+1,\ldots ,q.\)

-

(ii)

\(\hat{\theta }_{k}(u)=0,\quad k=d+1,\ldots ,p.\)

Remark 3

From remark 1 in Fan and Li (2001), we have that, if \(\lambda \rightarrow 0\) as \(n\rightarrow \infty \), then \(a_{n}=0\). Then from Theorems 1 and 2, it is clear that, by choosing a proper \(\lambda \), the proposed variable selection method is consistent and the estimators achieve the convergence rate as if the subset of true zero coefficients is already known. This implies that the penalized estimators have the oracle property.

3 Algorithm

Note that the penalty function \(p_{\lambda }(\cdot )\) in \(\hat{Q}(\gamma ,\beta )\) is irregular at the origin, then the classical gradient method can not be used to solve \(\hat{Q}(\gamma ,\beta )\). In this section, we give an iterative algorithm based on local quadratic approximation technology that used in Fan and Li (2001) and Zhao and Xue (2009). More specifically, for any given non-zero \(w_{0}\), in a neighborhood of \(w_{0}\), we have the following approximation

Hence, for the given initial value \(\beta _{l}^\mathrm{ini}\) with \(|\beta _{l}^\mathrm{ini}|>0, l=1,\ldots ,q\), and \(\gamma _{k}^\mathrm{ini}\) with \(\Vert \gamma _{k}^\mathrm{ini}\Vert _{H}>0, k=1,\ldots ,p\), we can obtain that

Let \(\tilde{Z}_{i}=(\hat{Z}_{i}^{T},W_{i}^{T})^{T}\) and \(\alpha =(\beta ^{T},\gamma ^{T})^{T}\) be \(pL+q\)-dimensional vectors. Furthermore, we let

where \(\alpha ^\mathrm{ini}=(\beta ^{\mathrm{ini} T},\gamma ^{\mathrm{ini} T})^{T}\). Then, except for a constant term, \(\hat{Q}(\gamma ,\beta )\) that defined in (4) can be written as

It is clear that \(\hat{Q}(\alpha )\) is a quadratic form, and it can be solved by

Hence, we can give an iterative algorithm as follows

- S1.:

-

Initialize \(\alpha ^{(0)}=\alpha ^\mathrm{ini}\).

- S2.:

-

Set \(\alpha ^{(0)}=\alpha ^{(k)}\), solve \(\alpha ^{(k+1)}\) by Eq. (5).

- S3.:

-

Iterate the step S2 until convergence, and denote the final estimator of \(\alpha \) as \(\hat{\alpha }\).

Then \(\hat{\beta }= (I_{q\times q},0_{q\times pL})\hat{\alpha }\), and \(\hat{\gamma }= (0_{pL\times q},I_{pL\times pL})\hat{\alpha }\). In the initialization step, we obtain an initial estimator \(\alpha ^\mathrm{ini}=(\beta ^{\mathrm{ini} T},\gamma ^{\mathrm{ini} T})^{T}\) by using ordinary least squares method based on the following objective function

Furthermore, to implement this method, the number of interior knots K, and the tuning parameters a and \(\lambda \) in the penalty function should be chosen. Fan and Li (2001) showed that the choice of \(a=3.7\) performs well in a variety of situations. Hence, we use this suggestion throughout this paper. In addition, we estimate \(\lambda \) and K by minimizing the following cross-validation score function

where \(\hat{\theta }_{[i]}(\cdot )\) and \(\hat{\beta }_{[i]}\) are estimators of \(\theta (\cdot )\) and \(\beta \) respectively based on (4) after deleting the ith subject.

Although maybe some nonzero parameters will be incorrectly set to zeros in this algorithm, from the following simulation studies, we can see that the number of the nonzeros incorrectly set to zero is very small, and it decreases rapidly when the sample size n increases. This implies that the proposed iterative algorithm is workable.

4 Simulation studies

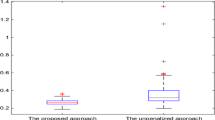

In this section, we conduct some Monte Carlo simulations to evaluate the finite sample performance of the proposed variable selection method. And as in Zhao and Xue (2009), the performance of estimator \(\hat{\beta }\) will be assessed by using the generalized mean square error (GMSE), defined as

The performance of estimator \(\hat{\theta }(\cdot )\) will be assessed by using the square root of average square errors (RASE)

where \(u_{s}, s=1,\ldots ,M\) are the grid points at which the function \(\hat{\theta }(u)\) are evaluated. In our simulation, \(M=200\) is used.

We simulate data from model (1), where \(\beta =(\beta _{1},\ldots ,\beta _{10})^{T}\) with \(\beta _{1}=3\), \(\beta _{2}=2, \beta _{3}=1\) and \(\beta _{4}=0.5\), and \(\theta (u)=(\theta _{1}(u),\ldots ,\theta _{10}(u))^{T}\) with \(\theta _{1}(u)=2.5+0.5\exp (2u-1), \theta _{2}(u)=2-\sin (\pi u)\) and \(\theta _{3}(u)=0.5+0.8u(1-u)\). While the remaining coefficients, corresponding to the irrelevant variables, are given by zeros. To perform this simulation, we take the covariates \(U\sim U(0,1)\), \(X_{k}\sim N(1, 1.5)\), and the instrumental variables \(\xi _{k}\sim N(1, 1), k=1,\ldots ,10\). The covariate \(Z_{k}=\xi _{k}+\alpha \varepsilon \), where \(\varepsilon \sim N(0, 0.5)\) and \(\alpha =0.2, 0.4\) and 0.6 to represent different levels of endogeneity of covariates. This setting up makes sure \(E(Z_{k}\varepsilon )\ne 0\), which implies that the covariate \(Z_{k}\) is endogenous. In the following simulations, we use the quadratic B-splines, and the interior knots are taken equidistantly. Furthermore, the sample size is taken as \(n=100, 200\) and 300 respectively, and for each case, we take 1000 simulation runs.

To evaluate the performance of the proposed variable selection method, two methods are compared: the instrumental variable based partial gSCAD variable selection method (IV-gSCAD) based on Theorem 1, and the naive partial gSCAD variable selection method (Naive-gSCAD). The latter is neglecting the endogeneity of covariate \(Z_{i}\), and using the partial gSCAD penalty method based on (3) directly. Based on the 1000 simulation runs, the average number of zero coefficients for parametric components is reported in Table 1, and the average number of zero coefficients for nonparametric components is reported in Table 2. In Tables 1 and 2, the column labeled “C” presents the average number of coefficients of the true zeros correctly set to zero, and the column labeled “I” presents the average number of the true nonzeros incorrectly set to zero. Tables 1 and 2 also present the average false selection rate (FSR), which is defined as \(\hbox {FSR}=\hbox {IN/TN}\), where “IN” is the average number of the true zeros incorrectly set to nonzero, and “TN” is the average total number set to nonzero. In fact, FSR represents the proportion of falsely selected unimportant variables among the total variables selected in the variable selection procedure. From Tables 1 and 2, we can make the following observations:

-

(i)

The performances of IV-gSCAD method for parametric components and nonparametric components are both better than those of Naive-gSCAD method, and this is especially true when the level of endogeneity of covariates is large. Because the Naive-gSCAD variable selection method cannot eliminate some unimportant variables in the parametric and nonparametric components, and gives significantly larger model errors. This implies that the Naive-gSCAD variable selection procedure is biased.

-

(ii)

For the given level of endogeneity of covariates, the GMSE, RASE and FSR, obtained by the IV-gSCAD variable selection method, all decrease as the sample size n increases. This implies that the proposed IV-gSCAD variable selection procedure is consistent.

-

(iii)

For given n, the IV-gSCAD variable selection method performs similar in terms of model error and model complexity for all levels of endogeneity of covariates. This indicates that the proposed instrumental variable based variable selection can attenuate the effect of the endogeneity of covariates. In general, the proposed variable selection method works well in terms of model error and the model complexity.

References

Cai Z, Xiong H (2012) Partially varying coefficient instrumental variables models. Stat Neerl 66:85–110

Card D (2001) Estimating the return to schooling: progress on some persistent econometric problems. Econometrica 69:1127–1160

Fan JQ, Li R (2001) Variable selection via nonconcave penalized likelihood and its oracle properties. J Am Stat. Assoc 96:1348–1360

Frank IE, Friedman JH (1993) A statistical view of some chemometrics regression tool (with discussion). Technometrics 35:109–148

Greenland S (2000) An introduction to instrumental variables for epidemiologists. Int J Epidemiol 29:722–729

Hernan MA, Robins JM (2006) Instruments for causal inference—an epidemiologists dream? Epidemiology 17:360–372

Lian H (2012) Variable selection for high-dimensional generalized varying-coefficient models. Stat Sinica 22:1563–1588

Lin ZY, Yuan YZ (2012) Variable selection for generalized varying coefficient partially linear models with diverging number of parameters. Acta Math Appl Sinica Eng Ser 28(2):237–246

Newhouse JP, McClellan M (1998) Econometrics in outcomes research: the use of instrumental variables. Annu Rev Public Health 19:17–24

Schultz TP (1997) Human capital, schooling and health. IUSSP, XXIII, General Population Conference. Yale University

Schumaker LL (1981) Spline functions. Wiley, New York

Tibshirani R (1996) Regression shrinkage and selection via the Lasso. J R Stat Soc Ser B 58:267–288

Wang L, Li H, Huang JZ (2008) Variable selection in nonparametric varying-coefficient models for analysis of repeated measurements. J Am Stat Assoc 103:1556–1569

Wang LC, Lai P, Lian H (2013) Polynomial spline estimation for generalized varying coefficient partially linear models with a diverging number of components. Metrika 76:1083–1103

Yao F (2012) Efficient semiparametric instrumental variable estimation under conditional heteroskedasticity. J Quant Econ 10:32–55

Zhao PX, Li GR (2013) Modified SEE variable selection for varying coefficient instrumental variable models. Stat Methodol 12:60–70

Zhao PX, Xue LG (2009) Variable selection for semiparametric varying coefficient partially linear models. Stat Probab Lett 79:2148–2157

Zhao PX, Xue LG (2013) Empirical likelihood inferences for semiparametric instrumental variable models. J Appl Math Comput 43:75–90

Acknowledgments

This paper is supported by the National Natural Science Foundation of China (11301569), the Higher-education Reform Project of Guangxi (2014JGA209), and the Project of Outstanding Young Teachers Training in Higher Education Institutions of Guangxi.

Author information

Authors and Affiliations

Corresponding author

Appendix: Proof of theorems

Appendix: Proof of theorems

For convenience and simplicity, let c denote a positive constant which may be different value at each appearance throughout this paper. Before we prove our main theorems, we list some regularity conditions which are used in this paper.

- C1.:

-

\(\theta (u)\) is rth continuously differentiable on (0, 1), where \(r>1/2\).

- C2.:

-

Let \(c_{1},\ldots ,c_{K}\) be the interior knots of [0, 1]. Furthermore, we let \(c_{0}=0, c_{K+1}=1\), \(h_{i}=c_{i}-c_{i-1}\) and \(h=\max \{h_{i}\}\). Then, there exists a constant \(C_{0}\) such that

$$\begin{aligned} \frac{h}{\min \{h_{i}\}}\le C_{0},\quad \max \{|h_{i+1}-h_{i}|\}=o\left( K^{-1}\right) . \end{aligned}$$ - C3.:

-

The density function of U, says f(u), is bounded away from zero and infinity on [0, 1], and is continuously differentiable on (0, 1).

- C4.:

-

Let \(G_{1}(u)=E\{ZZ^{T}|U=u\}, G_{2}(u)=E\{XX^{T}|U=u\}\) and \(\sigma ^{2}(u)=E\{\varepsilon ^{2}|U=u\}\). Then, \(G_{1}(u), G_{2}(u)\) and \(\sigma ^{2}(u)\) are continuous with respect to u. Furthermore, for given u, \(G_{1}(u)\) and \(G_{2}(u)\) are positive definite matrix, and the eigenvalues of \(G_{1}(u)\) and \(G_{2}(u)\) are bounded.

- C5.:

-

The penalty function \(p_{\lambda }(\cdot )\) satisfies that

- (i):

-

\(\displaystyle \lim _{n\rightarrow \infty }\lambda =0\), and \( \displaystyle \lim _{n\rightarrow \infty }\sqrt{n}\lambda =\infty \).

- (ii):

-

For any given non-zero \(w, \displaystyle \lim _{n\rightarrow \infty }\sqrt{n}p'_{\lambda }(|w|)=0\), and \( \displaystyle \lim _{n\rightarrow \infty }p''_{\lambda }(|w|)=0\).

- (iii):

-

\(\displaystyle \lim _{n\rightarrow \infty }\sup _{|w|\le cn^{-1/2}}p''_{\lambda }(|w|)=0\), and \(\displaystyle \lim _{n\rightarrow \infty }\lambda ^{-1}\inf _{|w|\le cn^{-1/2}}p'_{\lambda }(|w|)>0\), where c is a positive constant.

These conditions are commonly adopted in the nonparametric literature and variable selection methodology. Conditions C1 is the continuity condition of nonparametric components which is common in the nonparametric literature. Condition C2 indicates that \(c_{0},\ldots ,c_{K+1}\) is a \(C_{0}\)-quasi-uniform sequence of partitions of [0, 1] (see Schumaker 1981, p. 216), and this assumption is used in Zhao and Li (2013), Zhao and Xue (2009), Wang et al. (2013). Conditions C3 and C4 are some regularity conditions for covariates, which are similar to those used in Zhao and Xue (2013), Cai and Xiong (2012), Wang et al. (2008). Condition C5 contains some assumptions for the penalty function. These conditions on the penalty function are similar to those used in Fan and Li (2001), Wang et al. (2008), Zhao and Xue (2009), and it is easy to show that the SCAD, Lasso penalty functions satisfy these conditions.

Proof of Theorem 1

Let \(\delta =n^{-r/(2r+1)}+a_{n}, \beta =\beta _{0}+\delta M_{1}\), \(\gamma =\gamma _{0}+\delta M_{2}\) and \(M=(M_{1}^{T}, M_{2}^{T})^{T}\). For part (i), we show that, for any given \(\epsilon >0\), there exists a large constant c such that

Let \(R_{k0}(u)=\theta _{k0}(u)-B(u)^{T}\gamma _{k0}\), then note that \(W_{i}=I_{p}\otimes B(U_{i})\cdot X_{i}\), we have

where \(R_{0}(U_{i})=(R_{10}(U_{i}),\ldots ,R_{p0}(U_{i}))^{T}\). Hence, we have

Then, invoking \(\beta =\beta _{0}+\delta M_{1}\), \(\gamma =\gamma _{0}+\delta M_{2}\) and (9), we can obtain that

By (9) and (10), and based on the formula \(a^{2}-b^{2}=(a+b)(a-b)\), we have that

Let \(\Delta (\gamma ,\beta )=K^{-1}\{\hat{Q}(\gamma ,\beta )-\hat{Q}(\gamma _{0},\beta _{0})\}\), then from (11), we have that

From conditions C1, C2 and Corollary 6.21 in Schumaker (1981), we get that \(\Vert R(u)\Vert =O(K^{-r})\). Then, invoking condition C4, a simple calculation yields

Invoking \(E\{\varepsilon _{i}|\xi _{i},X_{i}\}=0\) and \(\hat{Z}_{i}=\varGamma \xi _{i}+O_{p}(n^{-1/2})\), we can prove that

In addition, note that \(Z_{i}-\hat{Z}_{i}=(\varGamma -\hat{\varGamma })\xi _{i}+e_{i}\), then invoking \(\hat{\varGamma }=\varGamma +O_{p}(n^{-1/2})\) and \(E\{e_{i}|\xi _{i},X_{i}\}=0\), we can prove that

Hence, from (12) to (14), it is easy to show that

Similarly, we can prove that

By the condition C5(ii), we have that \(\lim _{n\rightarrow \infty }\sqrt{n}p'_{\lambda }(|w|)=0\), for any given nonzero w. Then invoking the definition of \(a_{n}\), we can obtain \(\sqrt{n}a_{n}\rightarrow 0\) when n is large enough. Hence, we obtain that

Hence we have \(I_{2}/I_{1}=O_{p}(1)\Vert M\Vert \). Then, by choosing a sufficiently large \(c, I_{2}\) can dominate \(I_{1}\) uniformly in \(\Vert M\Vert =c\). Furthermore, invoking \(p_{\lambda }(0)=0\), and by the standard argument of the Taylor expansion, we get that

Note that \(n^{\frac{r}{2r+1}}a_{n}\rightarrow 0\) and \(b_{n}\rightarrow 0\), we obtain that \(I_{3}=o_{p}(1)\Vert M\Vert ^{2}\). Hence, we have that \(I_{3}\) is dominated by \(I_{2}\) uniformly in \(\Vert M\Vert =c\). With the same argument, we can prove that \(I_{4}\) is also dominated by \(I_{2}\) uniformly in \(\Vert M\Vert =c\). In addition, note that \(I_{2}\) is positive, then by choosing a sufficiently large c, (7) holds.

By the continuity of \(\hat{Q}(\cdot ,\cdot )\), the inequality (7) implies that \(\hat{Q}(\cdot ,\cdot )\) should have a local minimum on \(\{\Vert M\Vert \le c\}\) with probability greater than \(1-\epsilon \). Hence, there exists a local minimizer \(\hat{\beta }\) such that \(\Vert \hat{\beta }-\beta _{0}\Vert =O_{p}(\delta )\), which completes the proof of part (i).

Next, we prove part (ii). Note that

With the same arguments as the proof of part (i), we can get that \(\Vert \hat{\gamma }-\gamma \Vert =O_{p}(n^{-r/(2r+1)}+a_{n})\). Then, a simple calculation yields

In addition, it is easy to show that

Invoking (15) and (16), we complete the proof of part (ii). \(\square \)

Proof of Theorem 2

We first prove part (i). Invoking the condition \(\lambda \rightarrow 0\), it is easy to show that \(a_{n}=0\) for large n. Then by Theorem 1, it is sufficient to show that, for any given \(\beta _{l}, l=1,\ldots ,s\), which satisfy \(\Vert \beta _{l}-\beta _{l0}\Vert =O_{p}(n^{-r/(2r+1)})\), and a small \(\epsilon \) which satisfies \(\epsilon =cn^{-r/(2r+1)}\), with probability tending to 1, we have

and

Thus, (17) and (18) imply that the minimizer attains at \(\beta _{l}=0, l=s+1,\ldots ,q\).

By a similar the proof of Theorem 1, we have that

Since \(\lim _{n\rightarrow \infty }\liminf _{\beta _{l}\rightarrow 0}\lambda ^{-1}p' _{\lambda }(|\beta _{l}|)>0\) and \(\lambda n^{\frac{r}{2r+1}}\rightarrow \infty \), the sign of the derivative is completely determined by the sign of \(\beta _{l}\), then (17) and (18) hold. This completes the proof of part (i).

Applying the similar techniques as in the analysis of part (i) in this theorem, we have, with probability tending to 1, that \(\hat{\gamma }_{k}=0, k=d+1,\ldots ,p\). Then, the result of this theorem is immediately achieved form \(\hat{\theta }_{k}(u)=B^{T}(u)\hat{\gamma }_{k}\). \(\square \)

Rights and permissions

About this article

Cite this article

Yuan, J., Zhao, P. & Zhang, W. Semiparametric variable selection for partially varying coefficient models with endogenous variables. Comput Stat 31, 693–707 (2016). https://doi.org/10.1007/s00180-015-0601-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00180-015-0601-y