Abstract

The Conway–Maxwell Poisson (CMP) distribution is a popular model for analyzing data that exhibit under or over dispersion. In this article, we construct bivariate CMP distributions with given marginal CMP distributions and range of correlation coefficient over (− 1, 1) based on the Sarmanov family of bivariate distributions. One of the constructions is based on a general method for weighted distributions. The dependence property is examined. Parameter estimation, tests of independence and adequacy of model and a Monte Carlo power study are discussed. A real data set is used to exemplify its usefulness with comparison to other bivariate models.


1 Introduction

The construction of a bivariate distribution with specified marginals and correlation has been a challenging problem since the early twentieth century. There is much interest in this problem because of its wide ranging applications. For instance, Ong [22] considered computer sampling of some bivariate discrete distributions with given marginals and correlation. Recently, Lin et al. [21] gave a good survey on this topic and this complements previous surveys. A comprehensive overview is found in the monograph by Balakrishnan and Lai [2].

A simple method to formulate a bivariate distribution with fixed marginals and varying correlation is the well-known Farlie–Gumbel–Morgenstern (FGM) family of distributions defined by

$$H\left( {x,y} \right) = F\left( x \right)G\left( y \right)\left[ {1 + \beta \bar{F}\left( x \right)\bar{G}\left( y \right)} \right], \tag{1.1}$$

where \(F\left( x \right)\) and \(G\left( y \right)\) are the marginal cumulative distribution functions, \(\bar{F} = 1 - F\), \(\bar{G} = 1 - G\), and \(\beta \in \left[ { - 1, 1} \right]\) is the parameter that controls the correlation. Schucany et al. [25] showed that the FGM family (1.1) has correlation coefficient restricted to the interval \(\left( { - 1/3, 1/3} \right)\). Various researchers ([14, 15, 16, 20]; see also Balakrishnan and Lai [2]) have advocated methods to overcome this drawback of the FGM family.

Sarmanov [23] introduced a family of distributions with better flexibility, and this family includes the FGM family as a particular case. The Sarmanov family is defined by

$$h\left( {x,y} \right) = f\left( x \right)g\left( y \right)\left[ {1 + \omega \phi_{1} \left( x \right)\phi_{2} \left( y \right)} \right], \tag{1.2}$$

where \(f\left( x \right)\) and \(g\left( y \right)\) are the probability density functions (pdf) of \(F\left( x \right)\) and \(G\left( y \right)\), respectively, and \(\omega\) is the dependence parameter. \(\phi_{1} \left( x \right)\) and \(\phi_{2} \left( y \right)\) are measurable functions [21] (also known as mixing functions) satisfying the conditions

$$\int {\phi_{1} \left( x \right)f\left( x \right)dx} = 0,\quad \int {\phi_{2} \left( y \right)g\left( y \right)dy} = 0,\quad 1 + \omega \phi_{1} \left( x \right)\phi_{2} \left( y \right) \ge 0\;{\text{for all}}\;x\;{\text{and}}\;y.$$

If \(\phi_{1} \left( x \right) = 1 - 2F\left( x \right)\) and \(\phi_{2} \left( y \right) = 1 - 2G\left( y \right)\), then (1.2) reduces to the joint density of (1.1) with \(\omega = \beta\). Lee [19] and Shubina and Lee [28] have made a detailed study of the Sarmanov family. In the literature, the family (1.2) is also referred to as the Sarmanov–Lee family. The range of correlation is considerably wider than for the FGM family [21]. For example, the maximum correlation for the bivariate distribution with uniform marginals is 3/4 as opposed to 1/3 for the FGM distribution (1.1). Different bivariate distributions with given marginals are constructed by choosing different mixing functions \(\phi_{1} \left( x \right)\) and \(\phi_{2} \left( y \right)\). Lee [19] has given some examples of the choice of \(\phi_{1} \left( x \right)\) and \(\phi_{2} \left( y \right)\). In this paper, we give a general method of constructing bivariate generalizations of weighted discrete distributions by considering a particular simple choice of \(\phi_{1} \left( x \right)\) and \(\phi_{2} \left( y \right)\).

The objective of this paper is to propose bivariate extensions of the univariate CMP distribution whose marginals are CMP. Recently, Sellers et al. [24] considered a bivariate CMP (BCMP) distribution whose marginal distributions are not CMP.

We construct BCMP distributions with CMP marginals and range of correlation over \(\left[ { - 1, 1} \right]\) by using two instances of (1.2). The first is a general method for weighted distributions. The second bivariate distribution is constructed by using \(\phi_{1} \left( x \right)\) and \(\phi_{2} \left( y \right)\) based on the probability generating function. The proposed BCMP distributions include as a special case a bivariate Poisson distribution which has correlation in \(\left[ { - 1, 1} \right]\). By contrast, Holgate’s [12] bivariate Poisson distribution, constructed by the method of random elements in common, attains positive correlation only.

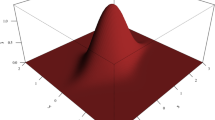

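The CMP distribution generalizes the Poisson distribution by allowing for over-dispersion \(\left( {\nu < 1} \right)\) or under-dispersion \(\left( {\nu > 1} \right).\) Its probability mass function (pmf) is given by

$$P\left( {X = x} \right) = \frac{{\lambda^{x} }}{{\left( {x!} \right)^{\nu } Z\left( {\lambda ,\nu } \right)}},\quad x = 0,1,2, \ldots , \tag{1.3}$$

where

$$Z\left( {\lambda ,\nu } \right) = \sum\limits_{j = 0}^{\infty } {\frac{{\lambda^{j} }}{{\left( {j!} \right)^{\nu } }}} \tag{1.4}$$

is the normalizing constant. The mean and variance of the CMP distribution have the following approximations [27]

$$E\left[ X \right] \approx \lambda^{1/\nu } - \frac{\nu - 1}{{2\nu }},\quad {\text{Var}}\left[ X \right] \approx \frac{1}{\nu }\lambda^{1/\nu } . \tag{1.5}$$

The CMP distribution may be regarded as a weighted Poisson distribution with pmf

$$P\left( {X = x} \right) = \frac{{\left( {x!} \right)^{1 - \nu } p\left( x \right)}}{{W\left( {\lambda ,\nu } \right)}},\quad p\left( x \right) = \frac{{e^{ - \lambda } \lambda^{x} }}{x!},$$

where \(p\left( x \right)\) is the Poisson pmf, \(\left( {x!} \right)^{1 - \nu }\) is the weight and \(W(\lambda ,\,\nu ) = e^{ - \lambda } Z(\lambda ,\,\nu )\) is the normalizing constant.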
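As a rough numerical illustration of the CMP pmf, its normalizing constant and the moment approximations cited from [27], the following sketch evaluates the series by plain truncation. The function names and the fixed truncation point `n_terms=200` are assumptions made for this example, not part of the article; the truncation is only adequate for moderate \(\lambda\) and \(\nu\) not too close to zero.

```python
import math

def cmp_pmf_table(lam, nu, n_terms=200):
    """CMP probabilities P(X = x), x = 0..n_terms-1 (series truncated: an assumption)."""
    # Unnormalized log-weights x*log(lam) - nu*log(x!), handled in log space for stability.
    logw = [x * math.log(lam) - nu * math.lgamma(x + 1) for x in range(n_terms)]
    m = max(logw)
    w = [math.exp(t - m) for t in logw]
    total = sum(w)  # proportional to the normalizing constant Z(lam, nu)
    return [v / total for v in w]

def cmp_moments(lam, nu, n_terms=200):
    """Mean and variance obtained by direct summation over the truncated support."""
    p = cmp_pmf_table(lam, nu, n_terms)
    mean = sum(x * px for x, px in enumerate(p))
    var = sum((x - mean) ** 2 * px for x, px in enumerate(p))
    return mean, var

def cmp_moments_approx(lam, nu):
    """Approximations: mean ~ lam^(1/nu) - (nu - 1)/(2 nu), variance ~ lam^(1/nu)/nu."""
    root = lam ** (1.0 / nu)
    return root - (nu - 1.0) / (2.0 * nu), root / nu

# Compare summed and approximate moments for a few illustrative parameter values.
for lam, nu in [(3.0, 1.0), (4.0, 0.7), (30.0, 1.4)]:
    print((lam, nu), cmp_moments(lam, nu), cmp_moments_approx(lam, nu))
```

For \(\nu = 1\) both routes return the Poisson values \(\lambda\) and \(\lambda\); for other parameter values the gap between the two gives a feel for when the approximation can be trusted.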

The CMP distribution is also appealing from a theoretical point of view because it belongs to the class of two-parameter power series distributions [27]. As a consequence, sufficient statistics and other elegant properties may be derived. Some of these have recently been investigated by Gupta et al. [10]. Kadane et al. [17] investigated the number of solutions which give rise to the same sufficient statistics.

The paper is organized as follows. Section 2 defines the proposed BCMP distributions and Sect. 3 discusses some dependence properties. The statistical analyses concerning parameter estimation, tests of hypotheses of independence and adequacy are presented in Sect. 4. Section 4 also contains simulation studies to study the power of Rao score and likelihood ratio tests. Section 5 illustrates an application to a real data set. Some concluding remarks are given in Sect. 6.

2 BCMP Distribution and Properties

2.1 Bivariate Discrete Distributions Based on Sarmanov–Lee Family

In this section, we present two bivariate distributions by choosing different mixing functions \(\phi_{1} \left( x \right)\) and \(\phi_{2} \left( y \right)\).

2.1.1 Bivariate Weighted Discrete Distributions

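We propose a general method for constructing Sarmanov-type bivariate distributions for weighted distributions. Consider a discrete distribution with pmf \(p\left( x \right)\) and mean \(\mu\). The weighted distribution for \(p\left( x \right)\) has pmf

$$P\left( {X = x} \right) = \frac{{w\left( x \right)p\left( x \right)}}{W},$$

where \(w\left( x \right)\) is the weight and \(W = \sum\nolimits_{x} {w\left( x \right)p\left( x \right)}\) is the normalizing constant. We make use of the simpler \(p\left( x \right)\) to construct the mixing functions. Let \(\alpha\) be a positive real number,

$$\phi_{1} \left( x \right) = p^{\alpha } \left( x \right) - E\left[ {p^{\alpha } \left( X \right)} \right]$$

and

$$\phi_{2} \left( y \right) = p^{\alpha } \left( y \right) - E\left[ {p^{\alpha } \left( Y \right)} \right].$$

The expectation \(E\left[ {p^{\alpha } \left( X \right)} \right]\) is taken with respect to the weighted pmf \(P(X = x)\). A bivariate distribution with joint pmf based on (1.2) is given by

$$P\left( {X = x,Y = y} \right) = P\left( {X = x} \right)P\left( {Y = y} \right)\left\{ {1 + \omega \left[ {p^{\alpha } \left( x \right) - E\left[ {p^{\alpha } \left( X \right)} \right]} \right]\left[ {p^{\alpha } \left( y \right) - E\left[ {p^{\alpha } \left( Y \right)} \right]} \right]} \right\}. \tag{2.1}$$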

2.1.2 Bivariate Discrete Distributions Based on Probability Generating Functions

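Let \(\phi_{1} \left( x \right) = \theta^{x} - G_{1} \left( \theta \right)\) and \(\phi_{2} \left( y \right) = \theta^{y} - G_{2} \left( \theta \right)\), where \(0 < \theta < 1\) and \(G_{1} \left( \theta \right)\), \(G_{2} \left( \theta \right)\) are the probability generating functions of the marginal distributions of X and Y, respectively. A bivariate distribution is defined as follows with joint pmf

$$P\left( {X = x,Y = y} \right) = P\left( {X = x} \right)P\left( {Y = y} \right)\left\{ {1 + \omega \left[ {\theta^{x} - G_{1} \left( \theta \right)} \right]\left[ {\theta^{y} - G_{2} \left( \theta \right)} \right]} \right\}. \tag{2.2}$$

Note that the functions \(\phi_{i} \left( t \right),i = 1,2\) are bounded and \(\sum\nolimits_{t} {\phi_{i} \left( t \right)P\left( t \right)} = 0\), where the sum is over \(t = 0,1,2, \ldots\).

Let \(\xi_{i} = \sum\nolimits_{t} {t\phi_{i} \left( t \right)P\left( t \right)}\). From Theorem 2 [19], the correlation coefficient \(\rho\) of (2.1) and (2.2) is given by

$$\rho = \frac{{\omega \xi_{1} \xi_{2} }}{{\sigma_{1} \sigma_{2} }}, \tag{2.3}$$

where \(\sigma_{1}^{2} ,\sigma_{2}^{2}\) are the variances of the marginal distributions.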

For model (2.1) the function \(\xi_{i}\) is given by

where \(\delta = E\left[ {Xp\left( X \right)} \right]\), \(\mu\) is the mean for \(P\left( x \right)\) and \(\gamma = E\left[ {p\left( X \right)} \right]\). Thus,

2.2 BCMP Distributions

Based on (2.1) and (2.2) we have the following BCMP distributions.

The bivariate distribution with joint pmf based on (2.1) is given by

where the CMP marginals \(P\left( {X = x} \right)\) and \(P\left( {Y = y} \right)\) are given by (1.3) with parameters \(\left( {\lambda_{1} , \nu_{1} } \right)\) and \(\left( {\lambda_{2} , \nu_{2} } \right)\), respectively. We consider computation of \(E\left[ {p^{\alpha } \left( Y \right)} \right]\) by writing it in terms of a CMP pmf. For simplicity, we suppress the subscripts and write the parameters as \(\left( {\lambda ,\nu } \right)\).

Since \(Z(\lambda ,\,\nu ) = \sum\limits_{j = 0}^{\infty } {\frac{{\lambda^{j} }}{{(j!)^{\nu } }}}\), we express

That is,

where \(P\left( {R = x} \right) = \frac{{\lambda^{{x\left( {\alpha + 1} \right)}} }}{{\left( {x!} \right)^{\nu + \alpha } Z\left( {\lambda^{\alpha + 1} ,\nu + \alpha } \right)}}\) is a CMP pmf with parameters \(\left( {\lambda^{\alpha + 1} ,\nu + \alpha } \right)\), which reduce to \(\left( {\lambda^{2} ,\nu + 1} \right)\) when \(\alpha = 1\). Note that \(E\left[ {p^{\alpha}\left( X \right)} \right]\) is an infinite sum of products of Poisson and CMP probabilities, and it is easy to see that \(E\left[ {p^{\alpha}\left( X \right)} \right] \le 1\). Hence, \(\phi_{1} \left( x \right)\) and \(\phi_{2} \left( y \right)\) are bounded.
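The computation of \(E\left[ {p^{\alpha } \left( X \right)} \right]\) can be checked numerically. The sketch below assumes \(p\left( x \right)\) is the Poisson pmf with parameter \(\lambda\) and X is CMP with parameters \(\left( {\lambda ,\nu } \right)\); it compares the direct sum \(\sum\nolimits_{x} {p^{\alpha } \left( x \right)P\left( {X = x} \right)}\) with the ratio \(e^{ - \alpha \lambda } Z\left( {\lambda^{\alpha + 1} ,\nu + \alpha } \right)/Z\left( {\lambda ,\nu } \right)\) that follows from collecting the powers of \(\lambda\) and \(x!\). Function names and the truncation point are assumptions of this example.

```python
import math

def cmp_log_z(lam, nu, n_terms=200):
    """log Z(lam, nu) from a truncated series (the truncation point is an assumption)."""
    logw = [j * math.log(lam) - nu * math.lgamma(j + 1) for j in range(n_terms)]
    m = max(logw)
    return m + math.log(sum(math.exp(t - m) for t in logw))

def expected_p_alpha_direct(lam, nu, alpha, n_terms=200):
    """E[p(X)^alpha]: sum over x of Poisson(x)^alpha times the CMP probability of x."""
    log_z = cmp_log_z(lam, nu, n_terms)
    total = 0.0
    for x in range(n_terms):
        log_poisson = -lam + x * math.log(lam) - math.lgamma(x + 1)
        log_cmp = x * math.log(lam) - nu * math.lgamma(x + 1) - log_z
        total += math.exp(alpha * log_poisson + log_cmp)
    return total

def expected_p_alpha_ratio(lam, nu, alpha, n_terms=200):
    """Same quantity written as exp(-alpha*lam) * Z(lam^(alpha+1), nu+alpha) / Z(lam, nu)."""
    log_num = cmp_log_z(lam ** (alpha + 1.0), nu + alpha, n_terms)
    return math.exp(-alpha * lam + log_num - cmp_log_z(lam, nu, n_terms))

print(expected_p_alpha_direct(1.5, 0.8, 1.0), expected_p_alpha_ratio(1.5, 0.8, 1.0))
```

Both routes should agree to rounding error, and the value lies between 0 and 1, in line with the boundedness of the mixing functions.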

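Let \(\phi_{1} \left( x \right) = \theta^{x} - g\left( {\theta ;\lambda_{1} , \nu_{1} } \right)\) and \(\phi_{2} \left( y \right) = \theta^{y} - g\left( {\theta ;\lambda_{2} , \nu_{2} } \right)\), where \(0 < \theta < 1\) and \(g\left( {\theta ;\lambda ,\nu } \right)\) is the probability generating function of the CMP distribution given by

$$g\left( {\theta ;\lambda ,\nu } \right) = E\left[ {\theta^{X} } \right] = \frac{{Z\left( {\lambda \theta ,\nu } \right)}}{{Z\left( {\lambda ,\nu } \right)}}.$$

The BCMP distribution corresponding to (2.2) has joint pmf defined as follows:

$$P\left( {X = x,Y = y} \right) = P\left( {X = x} \right)P\left( {Y = y} \right)\left\{ {1 + \omega \left[ {\theta^{x} - g\left( {\theta ;\lambda_{1} ,\nu_{1} } \right)} \right]\left[ {\theta^{y} - g\left( {\theta ;\lambda_{2} ,\nu_{2} } \right)} \right]} \right\}, \tag{2.5}$$

where the CMP marginals \(P\left( {X = x} \right)\) and \(P\left( {Y = y} \right)\) are given by (1.3) with parameters \(\left( {\lambda_{1} , \nu_{1} } \right)\) and \(\left( {\lambda_{2} , \nu_{2} } \right)\), respectively.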

If \(\nu_{1} = \nu_{2} = 1\), then (2.5) is the joint pmf of the bivariate Poisson distribution given in Section 6.3 of Lee [19] with \(\theta = e^{ - 1}\).

2.3 Correlation Coefficient

Let \(\mu\) and \(\sigma^{2}\) denote the mean and variance of the CMP distribution. For BCMP distributions (2.4) and (2.5), the variances \(\sigma_{1}^{2} ,\sigma_{2}^{2}\) may be approximated by (1.5).

For BCMP distribution (2.4),

Thus (2.4) has a very simple expression for the correlation coefficient.

For (2.5),

where \(g_{i} \left( \theta \right) = g\left( {\theta ;\lambda_{i} , \nu_{i} } \right), i = 1, 2\). The correlation coefficient is given by

For the computation of \(Z\left( {\theta , \nu } \right)\) given by (1.4) and related quantities such as derivatives see Section 4 of Gupta et al. [10].
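The correlation formula from Theorem 2 of [19] can be checked numerically for the BCMP distribution built from probability generating functions. The sketch below assumes mixing functions \(\phi_{i} \left( t \right) = \theta^{t} - g_{i} \left( \theta \right)\), for which the definition \(\xi_{i} = \sum t\phi_{i} \left( t \right)P\left( t \right)\) gives \(\xi_{i} = E\left[ {X\theta^{X} } \right] - \mu_{i} g_{i} \left( \theta \right)\). It builds the joint table, confirms that the marginals are recovered, and compares the correlation computed from the table with \(\omega \xi_{1} \xi_{2} /\left( {\sigma_{1} \sigma_{2} } \right)\). The parameter values, the symbol `omega` for the dependence parameter and the truncation at 120 terms are illustrative assumptions.

```python
import math

def cmp_pmf_table(lam, nu, n_terms=120):
    """Truncated CMP pmf (truncation point is an assumption of this sketch)."""
    logw = [x * math.log(lam) - nu * math.lgamma(x + 1) for x in range(n_terms)]
    m = max(logw)
    w = [math.exp(t - m) for t in logw]
    total = sum(w)
    return [v / total for v in w]

def summaries(p, theta):
    """Mean, standard deviation, pgf value g(theta) and xi = E[X theta^X] - mean * g(theta)."""
    mean = sum(x * px for x, px in enumerate(p))
    var = sum((x - mean) ** 2 * px for x, px in enumerate(p))
    g = sum(theta ** x * px for x, px in enumerate(p))
    xi = sum(x * theta ** x * px for x, px in enumerate(p)) - mean * g
    return mean, math.sqrt(var), g, xi

def bcmp_joint(p1, p2, theta, omega):
    """Joint table P(x) P(y) {1 + omega (theta^x - g1)(theta^y - g2)}."""
    g1, g2 = summaries(p1, theta)[2], summaries(p2, theta)[2]
    return [[px * py * (1.0 + omega * (theta ** x - g1) * (theta ** y - g2))
             for y, py in enumerate(p2)] for x, px in enumerate(p1)]

def correlation_from_table(joint):
    """Pearson correlation computed directly from a joint probability table."""
    ex = sum(x * pxy for x, row in enumerate(joint) for pxy in row)
    ey = sum(y * pxy for row in joint for y, pxy in enumerate(row))
    exy = sum(x * y * pxy for x, row in enumerate(joint) for y, pxy in enumerate(row))
    vx = sum((x - ex) ** 2 * pxy for x, row in enumerate(joint) for pxy in row)
    vy = sum((y - ey) ** 2 * pxy for row in joint for y, pxy in enumerate(row))
    return (exy - ex * ey) / math.sqrt(vx * vy)

theta, omega = math.exp(-1.0), 0.8
p1, p2 = cmp_pmf_table(1.5, 0.9), cmp_pmf_table(2.0, 1.2)
joint = bcmp_joint(p1, p2, theta, omega)
_, sd1, _, xi1 = summaries(p1, theta)
_, sd2, _, xi2 = summaries(p2, theta)
print(correlation_from_table(joint), omega * xi1 * xi2 / (sd1 * sd2))
```

The two printed numbers should coincide up to rounding, which also illustrates that the sign of the correlation is the sign of `omega` here, since both \(\xi_{i}\) have the same sign.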

3 Dependence Properties of the BCMP Distribution

The most common measures for determining the relationship between two variables are the Pearson correlation coefficient, Kendall’s tau and Spearman’s rho. As a generalization of Pearson’s correlation coefficient, Bjerve and Doksum [3], Doksum et al. [7] and Blyth [4,5,6] introduced and discussed the correlation curve. The correlation curve is a local measure of the strength of association between the two variables X and Y. The correlation curve \(\rho \left( x \right)\), a function of x, describes how the amount of variance explained by a regression curve varies locally. However, \(\rho \left( x \right)\) does not treat X and Y on an equal footing, but needs Y to be a response and X a predictor variable. The correlation curve is thus a regression concept.

A local dependence function, a function of x and y, should measure the strength and direction of association locally treating both variables symmetrically. For a bivariate distribution, it is defined as follows:

An \(r \times c\) contingency table with cell probabilities \(p_{i,j}\) specifies the joint distribution for two discrete random variables X and Y as

$$p_{i,j} = P\left( {X = i,Y = j} \right),\quad i = 1, \ldots ,r,\;j = 1, \ldots ,c.$$

The two marginal distributions for X and Y are

$$p_{i + } = \sum\limits_{j = 1}^{c} {p_{i,j} } \quad {\text{and}}\quad p_{ + j} = \sum\limits_{i = 1}^{r} {p_{i,j} } ,$$

respectively. Yule and Kendall [30] and Goodman [9] suggested the following set of local cross product ratios

$$\alpha_{i,j} = \frac{{p_{i,j} \,p_{i + 1,j + 1} }}{{p_{i,j + 1} \,p_{i + 1,j} }},\quad 1 \le i \le r - 1,\;1 \le j \le c - 1. \tag{3.2}$$

Equation (3.2) defines the local dependence function. Also, let \(\gamma_{i,j} = \ln \alpha_{i,j}\). Both \(\alpha_{i,j}\) and \(\gamma_{i,j}\) measure the association of the \(2 \times 2\) tables formed by adjacent rows and adjacent columns. It is known that the set \(\left\{ {\alpha_{i,j} } \right\}\), or equivalently \(\left\{ {\gamma_{i,j} } \right\}\), together with the marginal probability distributions uniquely determines the bivariate distribution. For more explanation, see Wang [29] and Holland and Wang [13].

We now present a very important property of the local dependence function. It is in terms of the totally positive of order 2, TP2 (reverse regular of order 2, RR2), property defined below.

Definition

A discrete bivariate distribution \(P\left( {X = i,Y = j} \right)\) is said to be TP2 (RR2) if, for \(a_{1} < b_{1}\) and \(a_{2} < b_{2}\),

$$p\left( {a_{1} ,a_{2} } \right)p\left( {b_{1} ,b_{2} } \right) \ge \left( \le \right)p\left( {a_{1} ,b_{2} } \right)p\left( {b_{1} ,a_{2} } \right),$$

where \(p\left( {i,j} \right) = P\left( {X = i,Y = j} \right)\). It can be easily verified that the TP2 (RR2) property is equivalent to \(\alpha_{i,j} \ge \left( \le \right)1\), where \(\alpha_{i,j}\) is the local dependence function. This is also equivalent to \(\gamma_{i,j} \ge \left( \le \right)0\).

We now obtain the local dependence function for the Sarmanov family of discrete distributions.

3.1 Local Dependence Function for Sarmanov Family of Discrete Distributions

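For this family

$$\alpha_{i,j} = \frac{{\left[ {1 + \omega \phi_{1} \left( i \right)\phi_{2} \left( j \right)} \right]\left[ {1 + \omega \phi_{1} \left( {i + 1} \right)\phi_{2} \left( {j + 1} \right)} \right]}}{{\left[ {1 + \omega \phi_{1} \left( i \right)\phi_{2} \left( {j + 1} \right)} \right]\left[ {1 + \omega \phi_{1} \left( {i + 1} \right)\phi_{2} \left( j \right)} \right]}},$$

since the marginal probabilities cancel. So \(\alpha_{i,j} \ge ( \le )1\) is equivalent to

$$\omega \left[ {\phi_{1} \left( {i + 1} \right) - \phi_{1} \left( i \right)} \right]\left[ {\phi_{2} \left( {j + 1} \right) - \phi_{2} \left( j \right)} \right] \ge \left( \le \right)0.$$

Thus, the Sarmanov family is TP2 if

(a) \(\phi_{1} \left( x \right)\) and \(\phi_{2} \left( y \right)\) are both increasing or both decreasing and

(b) \(\omega > 0\).

Similarly, the Sarmanov family is RR2 if

(a) \(\phi_{1} \left( x \right)\) and \(\phi_{2} \left( y \right)\) are both increasing or both decreasing and

(b) \(\omega < 0\).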

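Let us now obtain such conditions for the BCMP distribution (2.5).

We have

$$\phi_{1} \left( x \right) = \theta^{x} - g\left( {\theta ;\lambda_{1} ,\nu_{1} } \right),\quad 0 < \theta < 1.$$

This gives

$$\phi_{1} \left( {x + 1} \right) - \phi_{1} \left( x \right) = \theta^{x} \left( {\theta - 1} \right) < 0.$$

Hence, \(\phi_{1} \left( x \right)\) is decreasing. Similarly, \(\phi_{2} \left( y \right)\) is decreasing.

Hence, BCMP is TP2 if and only if \(\omega > 0\).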
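The TP2 statement can be illustrated numerically. For a Sarmanov-type pmf the marginal probabilities cancel in the local cross product ratio, so only the factor \(1 + \omega \phi_{1} \phi_{2}\) is needed. The sketch below assumes the pgf-based mixing functions \(\phi_{i} \left( t \right) = \theta^{t} - g\left( {\theta ;\lambda_{i} ,\nu_{i} } \right)\) and checks the ratios on a small grid; the parameter values, grid size and function names are assumptions of this example.

```python
import math

def cmp_pgf(theta, lam, nu, n_terms=200):
    """g(theta; lam, nu) = Z(lam*theta, nu) / Z(lam, nu), via truncated series (assumption)."""
    num = sum(math.exp(j * math.log(lam * theta) - nu * math.lgamma(j + 1))
              for j in range(n_terms))
    den = sum(math.exp(j * math.log(lam) - nu * math.lgamma(j + 1))
              for j in range(n_terms))
    return num / den

def local_dependence(i, j, omega, phi1, phi2):
    """Cross product ratio of the 2 x 2 block with corners (i, j) and (i+1, j+1)."""
    def kernel(x, y):
        return 1.0 + omega * phi1(x) * phi2(y)
    return kernel(i, j) * kernel(i + 1, j + 1) / (kernel(i, j + 1) * kernel(i + 1, j))

theta = math.exp(-1.0)
g1 = cmp_pgf(theta, 1.5, 0.9)
g2 = cmp_pgf(theta, 2.0, 1.2)

def phi1(x):
    return theta ** x - g1

def phi2(y):
    return theta ** y - g2

def all_ratios(omega, size=8):
    return [local_dependence(i, j, omega, phi1, phi2)
            for i in range(size) for j in range(size)]

print(min(all_ratios(0.7)), max(all_ratios(-0.7)))
```

With a positive `omega` every ratio on the grid is at least 1 (TP2), and with a negative `omega` every ratio is at most 1 (RR2), as expected when both mixing functions are decreasing.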

Note that the TP2 condition is the same as the condition for positive dependence notion that Lehmann [18] called positive likelihood ratio dependence. This notion leads naturally to the order described below.

3.2 Positively Likelihood Ratio Dependent Ordering

Let (X1,X2) and (Y1,Y2) be two bivariate random vectors having the same marginals. Then, we say that (X1, X2) is smaller than (Y1, Y2) in the positively likelihood ratio dependent (PLRD) order denoted by

if

where F and G have (continuous or discrete) densities f and g; see [26].

It can be easily seen that

is equivalent to

where \(\alpha_{{\left( {X_{1} ,X_{2} } \right)}}\) and \(\alpha_{{\left( {Y_{1} ,Y_{2} } \right)}}\) are the local dependence functions for (X1, X2) and (Y1,Y2), respectively.

We shall now compare two Sarmanov families with parameters \(\omega_{1}\) and \(\omega_{2}\):

After tedious algebra, it can be verified that

Now assume

(a) \(\phi_{1} \left( x \right)\) and \(\phi_{2} \left( y \right)\) are both increasing or both decreasing and

(b) $$\omega_{1} \omega_{2} \phi_{1} \left( x \right)\phi_{2} \left( y \right)\phi_{1} \left( {x + 1} \right)\phi_{2} \left( {y + 1} \right) \le 1$$

Then, \(\alpha _{{\left( {X_{1} ,X_{2} } \right)}} \le \alpha_{{\left( {Y_{1} ,Y_{2} } \right)}}\) if \(\omega_{1} < \omega_{2}\).

The alternative conditions are

(c) \(\phi_{1} \left( x \right)\) and \(\phi_{2} \left( y \right)\) are both increasing or both decreasing and

(d) $$\omega_{1} \omega_{2} \phi_{1} \left( x \right)\phi_{2} \left( y \right)\phi_{1} \left( {x + 1} \right)\phi_{2} \left( {y + 1} \right) \ge 1$$

Then, \(\alpha _{{\left( {X_{1} ,X_{2} } \right)}} \le \alpha_{{\left( {Y_{1} ,Y_{2} } \right)}}\) if \(\omega_{1} > \omega_{2}\).

We now investigate PLRD ordering for BCMP distribution. In this case,

This does not imply that \(\omega_{1} \omega_{2} \phi_{1} \left( x \right)\phi_{2} \left( y \right)\phi_{1} \left( {x + 1} \right)\phi_{2} \left( {y + 1} \right) \ge 1\). Hence, we cannot compare the two vectors of the Sarmanov family according to the PLRD ordering.

Remark. The PLRD ordering is difficult to check in most cases. By contrast finding families of distributions that are not ordered by this relation is relatively easy. For more explanation and comments, see [8].

4 Statistical Analysis

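In this section, we examine statistical inference for the BCMP distribution given by (2.5), that is, the BCMP distribution based on probability generating functions. The dependence parameter, denoted by \(\omega\) in Sect. 3, is written as \(\beta\) in this section.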

4.1 Parameter Estimation

The method of moments estimation for estimating the parameters is conducted as follows:

The marginal parameters \(\left( {\lambda ,\nu } \right)\) are estimated by equating the first and second marginal sample moments by using the approximations in (1.5). The estimate for \(\beta\) is obtained from (2.3) by equating with the sample correlation coefficient.

For maximum likelihood estimation (MLE), the simulated annealing (SA) algorithm is used to determine the estimates corresponding to the global optimum. It is a popular algorithm for searching for the global extremum of non-smooth functions with a large number of local extrema. Henderson et al. [11] have discussed the convergence of SA and presented practical guidelines for the implementation of the SA algorithm, especially its control parameters, to ensure good performance.

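The log-likelihood function of the model, for a sample \(\left( {x_{i} ,y_{i} } \right), i = 1, \ldots ,n\), is given by

$$\ln L = \sum\limits_{i = 1}^{n} {\left\{ {x_{i} \ln \lambda_{1} - \nu_{1} \ln \left( {x_{i} !} \right) - \ln Z_{1} + y_{i} \ln \lambda_{2} - \nu_{2} \ln \left( {y_{i} !} \right) - \ln Z_{2} + \ln \left[ {1 + \beta \left( {\theta^{{x_{i} }} - g\left( {\theta ;\lambda_{1} ,\nu_{1} } \right)} \right)\left( {\theta^{{y_{i} }} - g\left( {\theta ;\lambda_{2} ,\nu_{2} } \right)} \right)} \right]} \right\}} , \tag{4.1}$$

where \(Z_{1} = Z(\lambda_{1} ,\,\nu_{1} )\), \(Z_{2} = Z(\lambda_{2} ,\,\nu_{2} )\) and \(g\left( {\theta ;\lambda ,\nu } \right)\) is the probability generating function of the CMP distribution. For the following sections, we consider \(\theta = e^{ - 1}\).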
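A minimal sketch of how such a log-likelihood could be evaluated is given below. It assumes the pgf-based BCMP pmf, that is, the product of the two CMP marginals times \(1 + \beta \left( {\theta^{x} - g_{1} } \right)\left( {\theta^{y} - g_{2} } \right)\) with \(g_{i} = Z(\theta \lambda_{i} ,\,\nu_{i} )/Z(\lambda_{i} ,\,\nu_{i} )\). The function names, the truncation point and the toy data are hypothetical; the data are not the shunter data analysed later. An objective of this form could be passed to any global optimizer, including a simulated annealing routine.

```python
import math

def cmp_log_z(lam, nu, n_terms=200):
    """log Z(lam, nu) from a truncated series (truncation point is an assumption)."""
    logw = [j * math.log(lam) - nu * math.lgamma(j + 1) for j in range(n_terms)]
    m = max(logw)
    return m + math.log(sum(math.exp(t - m) for t in logw))

def bcmp_loglik(data, lam1, nu1, lam2, nu2, beta, theta=math.exp(-1.0)):
    """Log-likelihood of (x, y) pairs under the pgf-based BCMP model (sketch)."""
    log_z1, log_z2 = cmp_log_z(lam1, nu1), cmp_log_z(lam2, nu2)
    # CMP probability generating functions evaluated at theta.
    g1 = math.exp(cmp_log_z(theta * lam1, nu1) - log_z1)
    g2 = math.exp(cmp_log_z(theta * lam2, nu2) - log_z2)
    total = 0.0
    for x, y in data:
        kernel = 1.0 + beta * (theta ** x - g1) * (theta ** y - g2)
        if kernel <= 0.0:
            return float("-inf")  # parameter values outside the admissible region
        total += (x * math.log(lam1) - nu1 * math.lgamma(x + 1) - log_z1
                  + y * math.log(lam2) - nu2 * math.lgamma(y + 1) - log_z2
                  + math.log(kernel))
    return total

# Hypothetical toy counts, for illustration only.
toy_data = [(0, 1), (2, 0), (1, 1), (3, 2), (0, 0)]
print(bcmp_loglik(toy_data, 1.2, 1.0, 0.9, 1.0, 0.0))
print(bcmp_loglik(toy_data, 1.2, 0.8, 0.9, 1.3, 0.5))
```

With \(\beta = 0\) and \(\nu_{1} = \nu_{2} = 1\) the value reduces to the log-likelihood of two independent Poisson samples, which is a convenient sanity check.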

4.2 Test of Hypotheses

In this subsection, the tests for the two hypotheses of interest, that is, test of independence and test of adequacy of the proposed BCMP distribution are discussed. To compare the null model (restricted model) against the alternative model (unrestricted model), the score and the likelihood ratio (LR) tests are chosen and their test statistics are summarized as follows.

Let \(H_{0}\): \(\theta = \theta^{*}\) versus \(H_{1}\): \(\theta \ne \theta^{*}\). The score test statistic is \(T = S^{'} V^{ - 1} S\) where

is the score function and

is the information matrix.

The test statistic based on the LR test is defined as \(\text{LR} = - 2\log \left[ {L\left( {\hat{\theta }^{*} } \right)/L(\hat{\theta })} \right]\), where \(\hat{\theta }^{*}\) and \(\hat{\theta }\) are the restricted and unrestricted maximum likelihood estimates, respectively.

4.2.1 Test of Independence

The random variables X and Y are independent if \(\beta = 0\). The hypotheses to be tested are \(H_{0 }: \beta= 0\) against \(H_{1} :\beta \ne 0\).

The score functions evaluated under the null hypothesis are needed and they can be easily obtained from the log-likelihood equation in (4.1).

The elements of the information matrix corresponding to \(\beta = 0\) are

Both the score and LR tests have an approximate Chi-square distribution with 1 degree of freedom.

4.2.2 Test of BCMP

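To test the bivariate Poisson distribution against a BCMP distribution, that is, to test whether the bivariate Poisson is adequate, the proposed hypotheses are

$$H_{0} :\nu_{1} = \nu_{2} = 1\quad {\text{against}}\quad H_{1} :\nu_{1} \ne 1\;{\text{or}}\;\nu_{2} \ne 1.$$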

From Eqs. (2.5) and (4.1), the score functions are found to be

where \(a_{1} = \theta^{{y_{i} }} - e^{{ - \lambda_{2} + \lambda_{2} \theta }}\), \(a_{2} = \theta^{{x_{i} }} - e^{{ - \lambda_{1} + \lambda_{1} \theta }}\), \(f = 1 + \beta a_{1} a_{2}\), \(Z_{1}^{*} = Z(\theta \lambda_{1} ,\,\nu_{1} )\) and \(Z_{2}^{*} = Z(\theta \lambda_{2} ,\,\nu_{2} )\).

The elements of information matrix evaluated under the null hypothesis are

where \(b_{1} = e^{{\lambda_{2} }} \theta^{{y_{i} }} - e^{{\lambda_{2} \theta }}\), \(b_{2} = e^{{\lambda_{1} }} \theta^{{x_{i} }} - e^{{\lambda_{1} \theta }}\) and \(g = \beta e^{{\left( {\lambda_{1} + \lambda_{2} } \right)\theta }} - \beta e^{{\lambda_{1} + \lambda_{2} \theta }} \theta^{{x_{i} }} - \beta e^{{\lambda_{2} + \lambda_{1} \theta }} \theta^{{y_{i} }} + e^{{\lambda_{1} + \lambda_{2} }} \left( {1 + \beta \theta^{{x_{i} + y_{i} }} } \right)\).

Both the score and LR tests have an approximate Chi-square distribution with 2 degrees of freedom.
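Because the reference distributions are Chi-square with 1 and 2 degrees of freedom, the p-values have closed forms: \(P\left( {\chi_{1}^{2} > t} \right) = {\text{erfc}}\left( {\sqrt {t/2} } \right)\) and \(P\left( {\chi_{2}^{2} > t} \right) = e^{ - t/2}\). The sketch below turns a pair of log-likelihood values into an LR statistic and a p-value; the function names and the log-likelihood values are hypothetical and serve only to show the arithmetic.

```python
import math

def lr_statistic(loglik_restricted, loglik_unrestricted):
    """LR = -2 log[L(restricted) / L(unrestricted)] computed from log-likelihood values."""
    return -2.0 * (loglik_restricted - loglik_unrestricted)

def chi2_upper_tail(stat, df):
    """Upper-tail Chi-square probability for df = 1 or df = 2 (closed forms only)."""
    if stat < 0.0:
        raise ValueError("test statistic must be non-negative")
    if df == 1:
        return math.erfc(math.sqrt(stat / 2.0))
    if df == 2:
        return math.exp(-stat / 2.0)
    raise ValueError("only df = 1 (independence) and df = 2 (adequacy) are handled here")

# Hypothetical log-likelihood values, not taken from the article.
lr = lr_statistic(-350.0, -347.5)
print(lr, chi2_upper_tail(lr, 1), chi2_upper_tail(lr, 2))
```

The same `chi2_upper_tail` helper applies to the score statistic, since both statistics share the same approximate null distribution.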

4.3 Monte Carlo Simulation Study

The performance of the proposed score and LR tests is compared in order to check the efficiencies of the tests. A simulation study of 1000 replications has been carried out by considering small (n = 100), medium (n = 500) and large (n = 1000) sample sizes for the bivariate discrete distributions based on probability generating functions. The nominal significance level \(\alpha\) is taken as 5% and 10%. For the test of independence, different values of \(\beta\), ranging from − 1 to 1, are considered with a few combinations of \(\nu_{1}\) and \(\nu_{2}\). Tables 1 and 2 display, respectively, the simulated power of the tests under the test of independence and the test of adequacy of the BCMP distribution.

From Table 1, the score test maintains the nominal significance level of 5% (\(\beta\) = 0) better than the LR test for small sample sizes, but the reverse holds for the nominal level of 10%. Detection is weak when \(\beta\) is 0.5 away from zero and the sample size is small. The power of detection is greatly improved when the sample size increases. When \(\beta\) is 0.8 or 1.0 away from zero, the score test outperforms the LR test when the sample size is small, but the values are very close to each other when the sample size increases.

The powers of the proposed tests increase when \(\beta\) diverges further from the value 0 and when the sample size increases. Besides that the powers of both of the tests are very close to each other when the sample size increases to 500 and 1000 regardless of the value of \(\beta\) and the nominal levels.

As shown in Table 2, the score test outperforms the LR test in maintaining the nominal levels of 5% and 10% regardless of the sample size. The LR test overestimates the nominal significance level for small sample sizes. In addition, when the parameters \(\nu_{1}\) and \(\nu_{2}\) are smaller than 1.0, the score and LR tests are comparable to each other. However, if both of the parameters are higher than 1.0, LR test is able to achieve a higher detection compared to the score test. If \(\nu_{1}\) is fixed as 1.0, the LR test outperforms the score test when \(\nu_{2}\) is larger than 1.0 but vice versa when \(\nu_{2}\) is smaller than 1.0. The same result applies when \(\nu_{2}\) is fixed as 1.0 and \(\nu_{1}\) is set as larger or smaller than 1.0.

Overall, both the score and LR tests are powerful when the sample size is at least 500, as almost 96% detection can be achieved.
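The article does not describe how the simulated samples were generated. One possible approach, sketched below under that caveat, is sequential sampling from the pgf-based BCMP distribution: draw X from its CMP marginal, then draw Y from the conditional pmf proportional to \(P\left( {Y = y} \right)\left[ {1 + \beta \phi_{1} \left( x \right)\phi_{2} \left( y \right)} \right]\). The function names, truncation point, seed and parameter values are assumptions of this example.

```python
import math
import random

def cmp_pmf_table(lam, nu, n_terms=120):
    """Truncated CMP pmf (truncation point is an assumption of this sketch)."""
    logw = [x * math.log(lam) - nu * math.lgamma(x + 1) for x in range(n_terms)]
    m = max(logw)
    w = [math.exp(t - m) for t in logw]
    total = sum(w)
    return [v / total for v in w]

def sample_bcmp(n, lam1, nu1, lam2, nu2, beta, theta=math.exp(-1.0), rng=None):
    """Draw n pairs (x, y): X from its marginal, then Y given X = x."""
    rng = rng or random.Random()
    p1, p2 = cmp_pmf_table(lam1, nu1), cmp_pmf_table(lam2, nu2)
    g1 = sum(theta ** x * px for x, px in enumerate(p1))
    g2 = sum(theta ** y * py for y, py in enumerate(p2))
    support = range(len(p2))
    conditional = {}  # cache of conditional weights, keyed by the observed x
    pairs = []
    for x in rng.choices(range(len(p1)), weights=p1, k=n):
        if x not in conditional:
            phi1 = theta ** x - g1
            conditional[x] = [py * (1.0 + beta * phi1 * (theta ** y - g2))
                              for y, py in enumerate(p2)]
        y = rng.choices(support, weights=conditional[x], k=1)[0]
        pairs.append((x, y))
    return pairs

sample = sample_bcmp(3000, 1.5, 0.9, 2.0, 1.2, 0.8, rng=random.Random(2021))
mean_x = sum(x for x, _ in sample) / len(sample)
mean_y = sum(y for _, y in sample) / len(sample)
print(mean_x, mean_y)
```

For \(\left| \beta \right| \le 1\) the conditional weights are non-negative, because each mixing function takes values between \(- g_{i}\) and \(1 - g_{i}\). Repeating such draws and applying a test to each replicate gives a simulated rejection rate.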

5 Example of Application to Real Data

As an illustration of application we consider the data set of the number of accidents sustained by 122 experienced shunters over 2 successive periods of time [1] which was also used by Sellers et al. [24].

In this section, all the summation series involved are computed by recursion with double-precision accuracy, and a truncation approach is applied to the normalizing constant \(Z\left( {\lambda ,\nu } \right)\), with the bound set as \(Z\left( {\lambda ,\nu } \right) \le 1 \times 10^{200}\). To compute the correlation coefficient \(\rho = \frac{{\omega \xi_{1} \xi_{2} }}{{\sigma_{1} \sigma_{2} }}\), the means \(\mu_{1}\), \(\mu_{2}\) and variances \(\sigma_{1}^{2} ,\sigma_{2}^{2}\) of the marginals \(P\left( {X = x} \right)\) and \(P\left( {Y = y} \right)\) are required. They are computed by using the following equations, because the approximations in (1.5) are accurate only when \(\lambda > 10^{\nu }\). The approximations fail when the value of the parameter \(\nu\) is close to zero. For example, when \(\nu = 0.01\), they would require \(\lambda > 10^{\nu } = 1.0233\).

For the data set on the number of accidents sustained by 122 experienced shunters over 2 successive periods of time [1], the sample moments are:

This data set has also been fitted by the bivariate negative binomial (BNB) distribution as a comparison. See Table 3.

To confirm that the MLEs for the data in Table 3 really give a maximum, some likelihood function values are computed for points around the ML estimates. Based on the likelihood function values, the ML estimates do correspond to the maximum (Table 4).

It is observed that in the summary statistics presented in Table 5, the fits by both proposed BCMP are significantly better than the BNB based upon the \(\chi^{2}\) values and the log-likelihood values. For this data set, Sellers et al. [24] gave a log-likelihood value of − 341.704 for their BCMP distribution which is about the same as BNB. Thus, based on the log-likelihood values, the proposed BCMP distributions fit better than the BCMP distribution of Sellers et al. [24].

6 Concluding Remarks

BCMP distributions whose marginal distributions are CMP distributions and whose correlation coefficient ranges over (− 1, 1) have been proposed. The construction is based on the Sarmanov family of bivariate distributions, which is a simple and elegant approach to constructing bivariate distributions. A method is proposed for constructing bivariate generalizations of weighted distributions. The univariate CMP distribution is a very popular distribution for applications since it has the flexibility to analyze data that exhibit under- or over-dispersion. The BCMP distribution proposed by Sellers et al. [24] does not have CMP marginal distributions. It is shown in this article that, for the data set considered, the proposed BCMP distributions fit much better than the BCMP of Sellers et al. [24]. Thus, the proposed BCMP distributions should be of great utility to data analysts.

References

Arbous AG, Kerrich JE (1951) Accident statistics and the concept of accident proneness. Biometrics 7:340–342

Balakrishnan N, Lai CD (2009) Continuous bivariate distributions, 2nd edn. Springer, New York

Bjerve S, Doksum K (1993) Correlation curves: measures of association as functions of covariate values. Ann Stat 21:890–902

Blyth S (1993) A note on correlation curves and Chernoff’s inequality. Scand J Stat 20(4):375–377

Blyth S (1994) Karl Pearson and correlation curve. Int Stat Rev 62(3):393–403

Blyth S (1994) Measuring local association: an introduction to the correlation curve. Sociol Methods 24:171–197

Doksum K, Blyth S, Bradlow E, Meng XL, Zhao H (1994) Correlation curves as local measures of variance explained by regression. J Am Stat Assoc 89(426):571–582

Genest C, Verret F (2002) The TP2 ordering of Kimeldorf and Sampson has the normal-agreeing property. Stat Probab Lett 57:387–391

Goodman LA (1969) How to ransack social mobility tables and other kinds of cross-classification tables. Am J Sociol 75:1–39

Gupta RC, Sim SZ, Ong SH (2014) Analysis of discrete data by Conway–Maxwell Poisson distribution. AStA Adv Stat Anal 98:327–343

Henderson D, Jacobson SH, Johnson AW (2003) The theory and practice of simulated annealing. In: Handbook of metaheuristics, vol 57, pp 287–319

Holgate P (1964) Estimation for the bivariate Poisson distribution. Biometrika 51:241–245

Holland PW, Wang YJ (1987) Dependence function for continuous bivariate densities. Commun Stat Theory Methods 16:863–876

Huang JS, Kotz S (1984) Correlation structure in iterated Farlie–Gumbel–Morgenstern distributions. Biometrika 71:633–636

Huang JS, Kotz S (1999) Modifications of the Farlie–Gumbel–Morgenstern distributions: a tough hill to climb. Metrika 49:307–323

Johnson NL, Kotz S (1975) On some generalized Farlie–Gumbel– Morgenstern distributions. Commun Stat Theory Methods 4:415–427

Kadane JB, Krishnan R, Shmueli G (2006) A data disclosure policy for count data based on the COM-Poisson distribution. Manag Sci 52(10):1610–1617

Lehmann EL (1966) Some concepts of dependence. Ann Math Stat 37:1137–1153

Lee M-LT (1996) Properties and applications of the Sarmanov family of bivariate distributions. Commun Stat Theory Methods 25:1207–1222

Lin GD (1987) Relationships between two extensions of Farlie–Gumbel–Morgenstern distribution. Ann Inst Stat Math 39:129–140

Lin GD, Dou X, Kuriki S, Huang JS (2014) Recent developments on the construction of bivariate distributions with fixed marginals. J Stat Distrib Appl 1:14

Ong SH (1992) The computer generation of bivariate binomial variables with given marginals and correlation. Commun Stat Simul Comput 21:285–299

Sarmanov IO (1966) Generalized normal correlation and two dimensional Frechet classes. Soviet Math Dokl 7:596–599 [English translation; Russian original in Dokl. Akad. Nauk. SSSR 168, 32–35 (1966)]

Sellers KF, Morris DS, Balakrishnan N (2016) Bivariate Conway–Maxwell–Poisson distribution: formulation, properties, and inference. J Multivar Anal 150:152–168

Schucany WR, Parr WC, Boyer JE (1978) Correlation structure in Farlie-Gumbel-Morgenstern distributions. Biometrika 65(3):650–653

Shaked M, Shanthikumar JG (2007) Stochastic orders. Springer, New York

Shmueli G, Minka TP, Kadane JB, Borle S, Boatwright S (2005) A useful distribution for fitting discrete data-revival of the Conway–Maxwell–Poisson distribution. J R Stat Soc Ser C (Appl Stat) 54(1):127–142

Shubina M, Lee M-LT (2004) On maximum attainable correlation and other measures of dependence for the Sarmanov family of bivariate distributions. Commun Stat Theory Methods 33:1031–1052

Wang YJ (1993) Construction of continuous bivariate density functions. Stat Sin 3:173–187

Yule GU, Kendall MG (1950) An introduction to the theory of statistics, 14th edn. Charles Griffin & Company Limited, London

Acknowledgements

The authors wish to thank the referees for their constructive comments which have vastly improved the paper.


Ethics declarations

Conflict of interest

There is no conflict of interest concerning this manuscript


Appendix


Derivatives of the BCMP normalizing constant, \(Z\left( {\lambda \theta , \nu } \right)\) are shown as follows


Ong, S.H., Gupta, R.C., Ma, T. et al. Bivariate Conway–Maxwell Poisson Distributions with Given Marginals and Correlation. J Stat Theory Pract 15, 10 (2021). https://doi.org/10.1007/s42519-020-00141-4
