Abstract

The most crucial mechanical property of concrete is compression strength (CS). Insufficient compressive strength can therefore result in severe failure, which can be beyond repair. Therefore, predicting concrete strength accurately and early is a key challenge for researchers and concrete designers. High-strength concrete (HSC) is an extremely complicated material, making it challenging to simulate its behavior. The CS of HSC was predicted in this research using an adaptive neuro-fuzzy inference system (ANFIS), backpropagation neural networks (BPNN), Gaussian process regression (GPR), and NARX neural network (NARX) in the initial case. In the second case, an ensemble model of k-nearest neighbor (k-NN) was proposed due to the poor performance of model combination M1 & M2 in ANFIS, BPNN, NARX, and M1 in GPR. The output variable is the 28-day CS (MPa), and the input variables are cement (Ce) Kg/m3, water (W) Kg/m3, superplasticizer (S) Kg/m3, coarse aggregate (CA) Kg/m3, and fine aggregate (FA) Kg/m3. The outcomes depict that the suggested approach is predictively consistent for forecasting the CS of HSC, to sum up. The MATLAB 2019a toolkit was employed to generate the ML learning models (ANFIS, BPNN, GPR, and NARX), whereas E-Views 11.0 was used for pre- and post-processing of the data, respectively. The BPNN and NARX algorithm was trained and validated using MATLAB ML toolbox. The outcome shows that the combination M3 partakes in the preeminent performance evaluation criterion when associated with the other models, where ANFIS-M3 prediction outperforms all other models with NSE, R2, R = 1, and MAPE = 0.261 & 0.006 in both the calibration and verification phases, correspondingly, in the first case. In contrast, the ensemble of BPNN and GPR surpasses all other models in the second scenario, with NSE, R2, R = 1, and MAPE = 0.000, in both calibration and verification phases. Comparisons of total performance showed that the proposed models can be a valuable tool for predicting the CS of HSC.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

One of the most often employed building materials is concrete, with applications ranging from buildings and bridges to roads and dams. For high-stress applications, in the field of cementitious materials, high-strength concrete (HSC) is a type of concrete that was created in the late 1950s and early 1960s (Gjørv, 2019), which is intended to have a compressive strength (CS) greater than 6000 psi, or 40 Mpa (Al-Shamiri et al., 2019). High-rise structures, bridges, and other infrastructure projects are just a few examples of places where it is frequently employed since they require both high strength and longevity (Farooq et al., 2020). HSC can be produced with the right proportions of cement, water, fine and coarse aggregates, and occasionally natural or fibrous additions (Baykasoǧlu et al., 2009). Depending on the exact application and desired strength, the mix design may change, but it normally entails decreasing the water-to-cement ratio and increasing the cement and aggregate content (Öztaş et al., 2006). High-strength concrete has high strength and additional advantages like greater durability (Hooton & Bickley, 2014; Khaloo et al., 2016), decreased permeability (Hameed et al., 2022), and resilience to extreme climatic conditions (Mbessa & Péra, 2001). In contrast with regular concrete, it can be more expensive and difficult to work with, and it may call for specific tools and knowledge for proper mixing, placement, and curing (Tayfur et al., 2014).

Studying the mechanical characteristics of concrete is crucial for improving design processes and concrete construction performance under external loads. The CS of concrete is the utmost crucial of its many different properties since it directly affects the structure’s safety and is required to evaluate how well it will operate over the course of its whole life. But the mixture of cement, sand, gravel, other raw materials, and admixtures that makes up concrete is not homogeneous. The proportion of these components in the concrete mix is distributed at random. The composition of the waste, the extent of the particles, the proportion of the water–cement ratio, and the ratio of aggregate ratio all impact strength of concrete. To accomplish the appropriate CS, the mix proportions of the elements in HSC are often calculated (Mars et al., 2018). HSC CS is often determined via trial and error, where the proportions of the mix are changed till the anticipated strength is reached (Farooq et al., 2020). It is significant to remember that additional elements, like curing conditions, temperature, and the characteristics of various concrete ingredients, might affect the CS of HSC (Öztaş et al., 2006). Therefore, appropriate quality control procedures should be put in place to guarantee the concrete's consistency in strength and performance. Using standard compression tests, the true CS of HSC can be determined. Finding the CS of HSC requires a laborious, lengthy, and expensive. Numerous regression indices are frequently incorporated into the experimental formula to indicate the effects of numerous additions. Due to the nonlinear nature of the relationship associated with the CS of HSC and its mixed structures, the empirical approach is a poor choice for prognostication (Erdal et al., 2013). Constructing a prediction model using machine learning based on learning to quickly, precisely, and accurately estimate CS, there has been a lot of interest in strength (Ayubi Rad & Ayubi Rad, 2017). Due to their potential use in addressing difficulties in engineering, machine learning (ML) and artificial intelligence (AI) are gaining more attention in both academic and non-academic (industrial) sectors. Over the past two decades, several machine learning methods have been utilized for estimating the 28-day CS of concrete, including artificial neural network (ANN) (Al-Shamiri et al., 2019; Bui et al., 2018), support vector machine (SVM) (Erdal et al., 2013), Elman neural network (ENN) (Jibril et al., 2023), exlearningraning machine (ELM) (Al-Shamiri et al., 2019), adaptive neuro-fuzzy inference system (ANFIS) (Golafshani et al., 2020), regression tree (RT) (Gholampour et al., 2020), and random forest (RF) (Singh et al., 2019). The simplest method for figuring out the CS of concrete often involves laboratory tests. Additionally, it is currently extensively accepted to apply ML techniques to assess concrete CS because of the years-long development of AI. Similar to other conventional regression techniques, ML employs specific algorithms that may learn dependent data to produce incredibly accurate results for the independent data. This technology can be applied in more sophisticated ways in the domains of civil engineering, such as design, construction management, quality control, and risk management.

Using traditional techniques, Nguyen et al. (2021) evaluate several ultra-high-strength concrete (UHSC) mix designs and recommend adjustments to increase strength. Based on experimental data, a proposed empirical equation for forecasting elastic modulus is made, which demonstrates that the proposed equation is straightforward and offers the most accurate predictions of any current equations. Demir (2008) looks into how ANNs might be used to forecast the elastic modulus (E) of both regular and HSC. The E of concrete is successfully forecasted by the ANN model, which was developed, trained, and evaluated using data from previous research. The findings reveal that ANNs have significant promise as a means for forecasting the E of both normal and HSC. The expected outcomes are contrasted with those attained using experimental building code results and different models. Numerous studies that used ANN to estimate the CS of concrete after 28 days proved its precision Abu Yaman et al., (2017); Behnood and Golafshani (2018); Moradi et al. (2021). Four different algorithms were used to evaluate the carbonation depth (CD) of concrete based on experimental data. According to Malami et al. (2021), the researcher discovered that the ANFIS, ELM, and SVM outperform the multilinear regression (MLR) owing to higher Nash–Sutcliffe coefficient efficiency (NSE) in both the calibration and verification stages. Two ML-trained algorithms, SVM and Hammerstein–Wiener model (HWM), were employed in a study by Adamu et al. (2021) to anticipate concrete CS in which jujube seeds were largely substituted for the coarse aggregate (CA). The models require five inputs: slump (D), the amount of CA, the amount of jujube seed (S), the percentage replacement of aggregate (CAR), curing age (T), and CS as an output variable. The fitness of the anticipated model to meet the performance criterion and the projected models' robustness were assessed using the four evaluation metrics. The highest correlation coefficient (R2) values across the calibration and verification phases show that the HWM-M4 model outperforms the other models taken into consideration. Kaveh and Khalegi, (2009) utilized and rain ANN in other to predict the 7-day and 28-day strength of concrete specimens, encompassing both plain and admixture concrete. The backpropagation algorithm is employed to train neural networks with varying numbers of hidden layers (one, two, and three), which are subsequently compared. The most effective networks are chosen based on their performance, and these networks are then used to predict the strength of concrete mixtures with minimal errors. Recently, Kaveh and Khalegi (2009) optimize the parameters of feed-forward backpropagation and radial basis function artificial neural networks (ANNs) using a combination of meta-heuristic algorithms. Particle swarm optimization (PSO), genetic algorithm (GA), colliding bodies optimization (CBO), and enhanced colliding bodies optimization (ECBO) algorithms are utilized with ANNs. The research utilizes 223 test data on carbon FRP (CFRP) to generate training and test data sets. Various validation criteria, including mean square error, root mean square error, and correlation coefficient (R), are employed to validate the models. The models consider the impact of concrete compressive strength, concrete sample diameter, concrete sample length, fiber elastic modulus, fiber thickness, and fiber strength on the ultimate strength of FRP concrete. Similarly, Younis and Pilakoutas (2013) developed a model for the estimate of the CS of recycle aggregate concrete (RAC) using multilinear and nonlinear regression techniques. Utilizing ANN technology, Deshpande et al.,(2014) employed nonlinear regression, M5Tree, and ANN techniques to evaluate the CS of RAC. For the prediction of the E of RAC, (Behnood et al., 2015) employed M5Tree and ANN methods, respectively. Using genetic programming (GEP) and multivariable regression techniques, González-Taboada et al (2016) predicted the CS, E, and splitting tensile strength (STS) of RAC. Recently, utilizing NLR and GEP techniques, Gholampour et al (2017) forecast the CS, E, flexural strength (FS), and STS of RACs. The majority of these methods, however, were either computationally challenging, unable to manage numerous records, or incapable of precisely apprehending the effects of the crucial dependent variables for resolving nonlinear issues. As a result, simpler and more reliable AI algorithms should be used to forecast the characteristics of RACs. For the purpose of forecasting shear strength in FRP-reinforced concrete elements with and without stirrups, the group method of data handling networks (GMDH) is adopted and put to the test (Kaveh et al., 2018). Twelve significant geometrical and mechanical parameters are taken into account by the GMDH models. Models are created for instances with and without shear reinforcement using two large datasets of 112 and 175 data samples, respectively. Artificial neural network (ANN) and ANFIS models are also created for additional evaluation, and the GMDH models are compared with codes of practice. The models’ accuracy is assessed using statistical error parameters. The findings show that GMDH outperforms other models, predicting shear strength for members with and without stirrups with excellent accuracy (R2 = 0.94 and 0.95, respectively). Parametric and sensitivity analysis is also carried out.

For HSC structures to be safe and reliable, it is essential to precisely anticipate their CS. Recent years have seen the emergence of strong technologies for estimating the CS of HSC, including the evolutionary computational intelligence approach and ML algorithms. To accurately estimate the CS of HSC, this study suggests a self-turning predictive model, namely adaptive neuro-fuzzy inference system (ANFIS), backpropagation neural networks (BPNN) which many studies have utilized the used of it, for example (Kaveh & Iranmanesh, 1998; Kaveh et al., 2008), Gaussian process regression (GPR) and NARX neural network (NARX) in the first scenario. In other to enhance the performance of the model, an ensembled machine learning strategy that combines an evolutionary computational intelligence algorithm with a self-turning predictive model of K-nearest neighbor (KNN) is proposed in the second scenario (KNN-ANFIS, KNN-BPNN, KNN-GPR, and KNN-NARX). The proposed method is anticipated to increase HSC CS prediction efficiency and accuracy, improving the safety and dependability of structures made of this material. The google scholar website listed 17,100 research publications that utilized the viability of machine learning models, for concrete CS prediction, from 2000 to the present, as per the cited literature. Below is a scan of 130 documents; 219 terms that repeat among the experiments are present in Fig. 1, indicating a lack of widespread collaboration in learning the prediction of concrete CS.

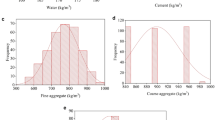

Experimental data

The research utilized an experimental dataset comprising 326 concrete samples, which served as the foundation for constructing AI-based models (ANFIS, BPNN, GPR, and NARX) designed to forecast the CS of HSC. 5 dependent variables were used as the input to train the model, which include water (W) kg/m3, fine aggregate (FA) kg/m3, coarse aggregate (CA) kg/m3, superplasticizer (S) kg/m3), and 28 CS (MPa) as the independent variable (output) (Al-Shamiri et al., 2019).

Methods and methodology

ANFIS model

The ANFIS model, developed by Jang (Jang, 1993), combines neural networks with a fuzzy inference system (FIS) (Armaghani & Asteris, 2021; Malami et al., 2021) to solve interactions that are complicated in nature. FIS is generally utilized due to its ability to convert pre-existing knowledge into specific constraint sets. (Fig. 2). Using ANFIS, which is a multilayer feedforward neural network (MFFNN) also known as a backpropagation neural network (BPNN), mapping can be achieved by integrating fuzzy logic (FL) algorithms and neural networks. For detail of ANFIS models with references and the equation, refer to Appendix A.

BPNN model

Backpropagation neural networks, sometimes referred to as backpropagation, are a form of ANN that are proficient in using supervised learning techniques. It is based on the idea of “gradient descent,” which is used to decrease the discrepancy between ong expected and actual results (Asteris & Mokos, 2020). The procedure used in BPNN is depicted in Fig. 3a, and the structure of the best model combination is depicted in Fig. 3b. For BPNN detail theory, refer to Appendix A.

Gaussian process regression (GPR) model

A technique for estimating functions is called GPR. Based on the function space, the gaissian process (GP) may efficiently do Bayesian inference and describe the dispersal of the function (Liu et al., 2021). The GPR and the potent Bayesian optimization approach, which are both frequently employed in machine learning, are nonparametric models that can contest a black-box function and give reverence to the fitted outcome. The accuracy of the estimates made by the GPR model is strongly influenced by the kernel function (Cai et al., 2020) and could certainly handle nonlinear data. For GPR detail theory, refer to Appendix A.

NARX model

NARX network is a form of NN that can be created as a feedforward or recurrent network (RN). Layers make form a feedforward network (FFN), with the hidden layers enclosed among the dependents and independents layers. Due to the absence of closed loops in this network topology, there are no response and data only flows in one direction. On the other hand, closed loop provides a response in the form of output data to the neural network’s input repeatedly in a recurrent neural network (RNN) (Sheikh et al., 2021) (Fig. 4). For NARX detail theory, refer to Appendix A.

k-NN(k-Nearest Neighbors) model

Machine learning algorithms for classification and regression analysis include k-NN. It is a nonparametric approach; therefore, it does not rely on any knowledge of how the data are distributed. Finding the k-NN to a certain test data point from the training dataset is how k-NN operates (Shahabi et al., 2020). A distance metric, such as the euclidean or Manhattan distance, is used to determine the separation between each training and test data points. The next step is to choose the k closest neighbors based on their proximity to the test data point (Adeniyi et al., 2016). For k-NN detail theory, refer to Appendix A.

Ensemble learning technique (ELT)

In several domains, including civil engineering, the ability to integrate ensemble models to advance the frontier of ML predictions has been effective (Nourani et al., 2018). ELT is a branch of machine learning that combines the process of generating numerous predictors using a single model to improve the performance of the final estimate. The main objective of using an ensemble is to achieve more precise and reliable estimates compared to what a single model can provide. Some researchers have categorized ensemble learning techniques into two groups: homogeneous ensembles and heterogeneous ensembles. Homogeneous ELT is defined as having an identical learning algorithm (for example, a neural network (NN)), while heterogeneous ELT is defined as having different learning algorithms. For overcoming model variety and achieving prediction accuracy, the heterogeneous ensemble is advised (Elkiran et al., 2019). Therefore, an ensemble of the k-NN model was employed in other toenhanced the prediction precision of model combinations M1 and M2 due to their low performance. In ANFIS, BPNN, and NARX-NN models, an ensemble of M1 and M2 combination was taken (k-NN-ANFIS, k-NN-BPNN, and k-NN-NARX), whereas in the GPR model, an ensemble of M2 combination was taken (k-NN-GPR).

Proposed modeling schema

The use of data intelligence algorithms in process engineering, particularly the prediction of concrete CS, has advanced significantly during the last few decades. The data source is the primary advantage of the AI technique, which can be achieved through open databases, field research, and laboratory testing. Four AI-based models, ANFIS, BPNN, GPR, and NARX-NN, were utilized in this work to forecast the CS of HSC in the first scenario comparing Ce (kg/m3), W (kg/m3), FA (Kg/m3), CA (kg/m3), and S (kg/m3), and 28 Days CS MPa, see Fig. 5; in the second scenario, one method of ET was employed to increase the prediction's accuracy of the model combination with low accuracy and high error value. Figure 6 displays the primarily suggested flow chart for the techniques applied in this study. The recommended modeling approach mimics CS based on the CC values among the performance parameters by using the traditional feature extraction approach. To achieve this, Al-Shamiri et al., 2019 provided the experimental setup for the mix proportioning of high-strength concrete. Equation (1) gives the input ccombination adopted in the training of ANFIS, BPNN, GPR, and NARX models based on sensitivity analysis.

The initials M1, M2, and M3 stand for the many input combinations used to train the models. The initials CS stand for the 28-day CS along with the weights of the cement (Ce kg/m3), water (W kg/m3), fine aggregate (FA kg/m3), coarse aggregate (CA kg/m3), and superplasticizer content (SP kg/m3). Using an external validation methodology, 326 datasets were collected for this investigation and split into calibration 228 (70%) and verification 98 (30%) sets.

To enhance the integrity and prediction accuracy of the dataset, Eq. 2 is employed to reduce data redundancy, and the dataset was then normalized to a range of zero to one.

In the dataset, “Xi” denotes the normalized quantity, “Xu” represents the unnormalized quantity, “Xmin” corresponds to the minimum quantity, and “Xmax” signifies the maximum quantity.

Performance evaluation criteria

A set of predetermined standards and measures are used to assess the effectiveness, efficiency, and quality of an individual, predictive performance. They provide a basis for evaluating model's performance and making informed decisions related to rewards, promotions, or improvements in performance. The accuracy of the models was assessed in this research using six statistical metrics: Nash–Sutcliffe coefficient efficiency (NSE), coefficient of determinacy (R2), correlation coefficient (R), mean square error (MSE), mean absolute error (MAE), and mean absolute percentage error (MAPE). The formal ranges of the performance criteria, which are used by much research to assess how well the anticipated model performs, are presented in Table 1.where CS(p) & \({\widehat{{CS}_{P}}}_{.}\) indicate the predicted CS, \({\widetilde{CS}}_{P}\), predicted mean CS, CS(o) observed CS, \({CS}^{i}{ }_{(o)}\) & \(\overline{{CS}_{om}}\) indicate the observed mean CS, N indicates the number of the data samples.

Result and discussion

Result for scenario I

Preprocessing, model construction, and computational conclusions from the data source are discussed in this section. The study made use of the ANFIS, popular neural network (BPNN), GPR, and NARX neural network (NARX-NN) models to evaluate the CS performance in comparison with other constituents. The MATLAB R2019a toolkit was used to train the MLs learning algorithms (ANFIS, BPNN, GPR, and NARX), while E-Views 11.0 was used for pre- and post-processing the data, respectively. The algorithms for BPNN and NARX modeling was trained and validated using MATLAB ML toolbox. Effective generalization requires selecting the right model structure. As a result, hypersensitivity techniques like the maximum number of iterations (1000), learning rate (0.01), and MSE (0.0001) were used for the BPNN. Because these layers were acknowledged by using the expression (2n1/2 + m) to (2n + 1), where n is the number of input neurons and m is the number of output nodes, the most crucial component of building a BPNN is constructing an acceptable number of hidden nodes (Fletcher & Goss, 1993).

While some research suggests that the expression (n + 1) to (n + 2) can be useful in preventing the overuse of trial-and-error uncertainty, others have suggested that the concealed nodes would take the form of an oval-shaped structure (Hadi et al., 2019). In this work, the range of 2–10 hidden nodes, 20–80 calibration epochs, 1–20 emotional hormones, and activation functions were used to find the best structure in BPNN. To prevent overfitting, the dataset was divided into folds, with the accuracy of each fold being evaluated, and the GPR was used with 10-k-folds cross -validation prediction speed of 120 observation/sec and a training length of 15.14 s. Due to the unpredictable conditions brought on by several factors and the properties of the source water that needs to be treated, the associations among the CS restrictions in a complex process may not be linear. The relationship (correlation) between the dependent and independent variables is shown in Fig. 7. To summarize and communicate a dataset's key features, compare variables or groups, find outliers, ensure data integrity, and assist decision-making, descriptive statisical analysis was performed on datasets as shown inTable 2 to reveal crucial information required in model development. The analysis streamlines data exploration and empowers researchers as well as analysts to extract insightful conclusions from the data.The calibration and verification results predicted by ANFIS, BPNN, GPR, and NARX are presented in Table 3. Table 3 presents information that reveals the performance metrics utilized in this study, including MAPE, MAE, and MSE, to be unitless. Conversely, NSE, R2, and R are dimensionless, due to the normalization phase incorporated in the modeling process. The ways in which the pragmatic AI-based and linear models responded to different combination models CS (Mpa) are displayed in Table 3. During the calibration phase, the predictive power for CS (MPa) was 20–60%, 60–70%, 70–80%, 80–1000%, and 0.001–0.088 for R and MAE, respectively.

Figure 8 shows the graphical comparison between the performances of the proposed models based on their individual MAPE values. It is worth to note that the models’ performances decline when error numbers rise and vice versa. The graphical performance in Fig. 8 makes it obvious that ANFIS and GPR demonstrated more performance accuracy than BPNN and NARX-NN. Further details are provided on MAPE measurements (Costache et al., 2020; Malik et al., 2021).

Result of the ELT

The exactness of standalone models is improved in the second scenario of this work by employing an ensemble or providing more accurate predictions by combining the strengths of many models, the KNN (M1 and M2) for ANFIS, BPNN, and NARX and the KNN (M1) for GPR associate the strengths of the KNN model. Table 4 shows that the highest level of accuracy (100%) was attained for both KNN-BPNN and KNN-GPR. This is not unexpected given that KNN is more adaptable to changes in input data or environmental changes and can handle a larger range of input data kinds and formats. Figures 9 and 10 associate the performance of the best-developed models with the observed data using scatter plots and a response plot, while scatter plots can identify trends, linkages, and patterns in data and are frequently used in data analysis and scientific research. The response curve shows a robust covenant among the experimental and predicted value (R = 0.61 − 1), as shown in Fig. 10. Response plot can show the contemporaneous agreement between two variables (measured and projected CS), as demonstrated by the MAPE values. To sum up, ensemble KNN is flexible, enabling more adaptable and flexible solutions to challenging issues like compressive strength. The popularity of KNN in concrete prediction, notably for CS, is due to its dominance over ANFIS, BPNN, GPR, and NARX, as shown in Table 4. The initial scenario's illustration of the performance capabilities of the individual paradigms in numerical and visual presentations demonstrates that the models were ineffective at simulating CS in model combination 3 (M3). By combining the linear and nonlinear behavior of the individual paradigms, distinct ensembled paradigms were then developed to capture and replicate the complicated behavior of CS in the first and second model combinations (M1 & M2).

During the modeling phase, the NSE, R2, R performance evaluation criteria were utilized to compare all of the models using the radar diagram. Figure 11 displays (a) the ANFIS-M3, BPNN-M3, GPR-M3, and NARX-M3, and (b) for the ensemble model. These models' predictions are more precise than others when compared to other models. This article explains how AI-based modeling is suitable for engineering and scientific study.

Conclusion

The compressive strength (CS) stands as the most pivotal mechanical attribute of concrete, reflecting its ability to withstand or resist compression forces. Inadequate compressive strength can potentially lead to significant structural failure, which is notably challenging to rectify. Hence, accurately forecasting concrete strength at an early stage presents a crucial challenge for both researchers and designers in the concrete industry. High-strength concrete (HSC) is an extremely complicated material, making it challenging to simulate its behavior. In order to improve the performance of the model combination M1 & M2 for ANFIS, BPNN, NARX, and M1 for GPR, an ensemble machine algorithm was used, with NSE, R2, R, MSE, MAE, and MAPE as the study’s assessment measures. This research evaluated the possibility of predicting the CS of HSC using ANFIS, BPNN, GPR, and NARX models in the first scenario. ANFIS-M3 suggested a reliable and remarkable performance of NSE, R2, R = 1, and MAPE = 0.261 & 0.006 in both the calibration and verification phases, respectively, when compared with the other models. Combination M3 has the highest performance of all the models used for the first scenario. The ensemble of BPNN and GPR, on the other hand, outperforms all other models with NSE, R2, R = 1, and MAPE = 0.000 in the second scenario, where the ensemble model of model combination M1 and M2 was chosen because of their poor performance. General performance evaluations revealed that the suggested models can be used as a useful tool for anticipating the CS of HSC.

Availability of data and material

On-demand access to all information and resources is available.

Code availability

Accessible upon demand.

References

Abu Yaman, M., Abd Elaty, M., & Taman, M. (2017). Predicting the ingredients of self compacting concrete using artificial neural network. Alexandria Engineering Journal, 56(4), 523–532. https://doi.org/10.1016/j.aej.2017.04.007

Adamu, M., Haruna, S. I., Malami, S. I., Ibrahim, M. N., Abba, S. I., & Ibrahim, Y. E. (2021). Prediction of compressive strength of concrete incorporated with jujube seed as partial replacement of coarse aggregate: a feasibility of Hammerstein-Wiener model versus support vector machine. Modeling Earth Systems and Environment. https://doi.org/10.1007/s40808-021-01301-6

Adeniyi, D. A., Wei, Z., & Yongquan, Y. (2016). Automated web usage data mining and recommendation system using K-Nearest Neighbor (KNN) classification method. Applied Computing and Informatics, 12(1), 90–108. https://doi.org/10.1016/j.aci.2014.10.001

Al-Shamiri, A. K., Kim, J. H., Yuan, T. F., & Yoon, Y. S. (2019). Modeling the compressive strength of high-strength concrete: An extreme learning approach. Construction and Building Materials, 208, 204–219. https://doi.org/10.1016/j.conbuildmat.2019.02.165

Armaghani, D. J., & Asteris, P. G. (2021). A comparative study of ANN and ANFIS models for the prediction of cement-based mortar materials compressive strength. Neural Computing and Applications. https://doi.org/10.1007/s00521-020-05244-4

Asteris, P. G., & Mokos, V. G. (2020). Concrete compressive strength using artificial neural networks. Neural Computing and Applications, 32(15), 11807–11826. https://doi.org/10.1007/s00521-019-04663-2

Ayubi Rad, M. A., & Ayubi Rad, M. S. (2017). Comparison of artificial neural network and coupled simulated annealing based least square support vector regression models for prediction of compressive strength of high-performance concrete. Scientia Iranica, 24(2), 487–496. https://doi.org/10.24200/sci.2017.2412

Baykasoǧlu, A., Öztaş, A., & Özbay, E. (2009). Prediction and multi-objective optimization of high-strength concrete parameters via soft computing approaches. Expert Systems with Applications, 36(3), 6145–6155. https://doi.org/10.1016/j.eswa.2008.07.017

Behnood, A., & Golafshani, E. M. (2018). Predicting the compressive strength of silica fume concrete using hybrid artificial neural network with multi-objective grey wolves. Journal of Cleaner Production, 202, 54–64. https://doi.org/10.1016/j.jclepro.2018.08.065

Behnood, A., Olek, J., & Glinicki, M. A. (2015). Predicting modulus elasticity of recycled aggregate concrete using M5′ model tree algorithm. Construction and Building Materials, 94, 137–147. https://doi.org/10.1016/j.conbuildmat.2015.06.055

Bui, D. K., Nguyen, T., Chou, J. S., Nguyen-Xuan, H., & Ngo, T. D. (2018). A modified firefly algorithm-artificial neural network expert system for predicting compressive and tensile strength of high-performance concrete. Construction and Building Materials, 180, 320–333. https://doi.org/10.1016/j.conbuildmat.2018.05.201

Cai, R., Han, T., Liao, W., Huang, J., Li, D., Kumar, A., & Ma, H. (2020). Prediction of surface chloride concentration of marine concrete using ensemble machine learning. Cement and Concrete Research. https://doi.org/10.1016/j.cemconres.2020.106164

Costache, R., Pham, Q. B., Sharifi, E., Linh, N. T. T., Abba, S. I., Vojtek, M., Vojteková, J., Nhi, P. T. T., & Khoi, D. N. (2020). Flash-flood susceptibility assessment using multi-criteria decision making and machine learning supported by remote sensing and GIS techniques. Remote Sensing. https://doi.org/10.3390/RS12010106

Demir, F. (2008). Prediction of elastic modulus of normal and high strength concrete by artificial neural networks. Construction and Building Materials, 22(7), 1428–1435. https://doi.org/10.1016/j.conbuildmat.2007.04.004

Deshpande, N., Londhe, S., & Kulkarni, S. (2014). Modeling compressive strength of recycled aggregate concrete by Artificial Neural Network, Model Tree and Non-linear Regression. International Journal of Sustainable Built Environment, 3(2), 187–198. https://doi.org/10.1016/j.ijsbe.2014.12.002

Elkiran, G., Nourani, V., & Abba, S. I. (2019). Multi-step ahead modelling of river water quality parameters using ensemble artificial intelligence-based approach. Journal of Hydrology, 577, 123962. https://doi.org/10.1016/j.jhydrol.2019.123962

Erdal, H. I., Karakurt, O., & Namli, E. (2013). High performance concrete compressive strength forecasting using ensemble models based on discrete wavelet transform. Engineering Applications of Artificial Intelligence, 26(4), 1246–1254. https://doi.org/10.1016/j.engappai.2012.10.014

Farooq, F., Amin, M. N., Khan, K., Sadiq, M. R., Javed, M. F., Aslam, F., & Alyousef, R. (2020). A comparative study of random forest and genetic engineering programming for the prediction of compressive strength of high strength concrete (HSC). Applied Sciences (Switzerland), 10(20), 1–18. https://doi.org/10.3390/app10207330

Fletcher, D., & Goss, E. (1993). Forecasting with neural networks. An application using bankruptcy data. Information and Management, 24(3), 159–167. https://doi.org/10.1016/0378-7206(93)90064-Z

Gholampour, A., Gandomi, A. H., & Ozbakkaloglu, T. (2017). New formulations for mechanical properties of recycled aggregate concrete using gene expression programming. Construction and Building Materials, 130, 122–145. https://doi.org/10.1016/j.conbuildmat.2016.10.114

Gholampour, A., Mansouri, I., Kisi, O., & Ozbakkaloglu, T. (2020). Evaluation of mechanical properties of concretes containing coarse recycled concrete aggregates using multivariate adaptive regression splines (MARS), M5 model tree (M5Tree), and least squares support vector regression (LSSVR) models. Neural Computing and Applications, 32(1), 295–308. https://doi.org/10.1007/s00521-018-3630-y

Gjørv, O. E. (2019). High-strength concrete. In Developments in the Formulation and Reinforcement of Concrete. Elsevier LTD. https://doi.org/10.1016/B978-0-08-102616-8.00007-1

Golafshani, E. M., Behnood, A., & Arashpour, M. (2020). Predicting the compressive strength of normal and high-performance concretes using ANN and ANFIS hybridized with grey wolf optimizer. Construction and Building Materials, 232, 117266. https://doi.org/10.1016/j.conbuildmat.2019.117266

González-Taboada, I., González-Fonteboa, B., Martínez-Abella, F., & Pérez-Ordóñez, J. L. (2016). Prediction of the mechanical properties of structural recycled concrete using multivariable regression and genetic programming. Construction and Building Materials, 106, 480–499. https://doi.org/10.1016/j.conbuildmat.2015.12.136

Hadi, S. J., Abba, S. I., Sammen, S. S. H., Salih, S. Q., Al-Ansari, N., & Mundher Yaseen, Z. (2019). Non-linear input variable selection approach integrated with non-tuned data intelligence model for streamflow pattern simulation. IEEE Access, 7, 141533–141548. https://doi.org/10.1109/ACCESS.2019.2943515

Hameed, M. M., AlOmar, M. K., Baniya, W. J., & AlSaadi, M. A. (2022). Prediction of high-strength concrete: High-order response surface methodology modeling approach. Engineering with Computers, 38(0123456789), 1655–1668. https://doi.org/10.1007/s00366-021-01284-z

Hooton, R. D., & Bickley, J. A. (2014). Design for durability: The key to improving concrete sustainability. Construction and Building Materials, 67, 422–430. https://doi.org/10.1016/j.conbuildmat.2013.12.016

Jang, J. R. (1993). ANFIS: adaptive-network-based fuzzy inference system. IEEE Transactions on Systems, Man, and Cybernetics, 23(3), 665–685.

Jibril, M. M., Zayyan, M. A., Idris, S., Usman, A. G., Salami, B. A., Rotimi, A., & Abba, S. I. (2023). Implementation of nonlinear computing models and classical regression for predicting compressive strength of high-performance concrete. Applications in Engineering Science, 15, 100133. https://doi.org/10.1016/j.apples.2023.100133

Kaveh, A., Bakhshpoori, T., & Hamze-Ziabari, S. M. (2018). GMDH-based prediction of shear strength of FRP-RC beams with and without stirrups. Computers and Concrete, 22(2), 197–207. https://doi.org/10.12989/cac.2018.22.2.197

Kaveh, A., Gholipour, Y., & Rahami, H. (2008). Optimal design of transmission towers using genetic algorithm and neural networks. International Journal of Space Structures, 23(1), 1–19. https://doi.org/10.1260/026635108785342073

Kaveh, A., & Iranmanesh, A. (1998). Comparative study of backpropagation and improved counterpropagation neural nets in structural analysis and optimization. International Journal of Space Structures, 13(4), 177–185. https://doi.org/10.1177/026635119801300401

Kaveh, A., & Khalegi, A. (2009). Prediction of strength for concrete specimens using artificial neural networks. Advances in Engineering Computational Technology, 53, 165–171. https://doi.org/10.4203/ccp.53.4.3

Khaloo, A., Mobini, M. H., & Hosseini, P. (2016). Influence of different types of nano-SiO2 particles on properties of high-performance concrete. Construction and Building Materials, 113, 188–201. https://doi.org/10.1016/j.conbuildmat.2016.03.041

Liu, K., Alam, M. S., Zhu, J., Zheng, J., & Chi, L. (2021). Prediction of carbonation depth for recycled aggregate concrete using ANN hybridized with swarm intelligence algorithms. Construction and Building Materials, 301, 124382. https://doi.org/10.1016/j.conbuildmat.2021.124382

Malami, S. I., Anwar, F. H., Abdulrahman, S., Haruna, S. I., Ali, S. I. A., & Abba, S. I. (2021). Implementation of hybrid neuro-fuzzy and self-turning predictive model for the prediction of concrete carbonation depth: A soft computing technique. Results in Engineering, 10, 100228. https://doi.org/10.1016/j.rineng.2021.100228

Malik, A., Tikhamarine, Y., Sammen, S. S., Abba, S. I., & Shahid, S. (2021). Prediction of meteorological drought by using hybrid support vector regression optimized with HHO versus PSO algorithms. Environmental Science and Pollution Research, 28(29), 39139–39158. https://doi.org/10.1007/s11356-021-13445-0

Mars, P., Chen, J. R., & Nambiar, R. (2018). Learning algorithms. Learning Algorithms. https://doi.org/10.1201/9781351073974

Mbessa, M., & Péra, J. (2001). Durability of high-strength concrete in ammonium sulfate solution. Cement and Concrete Research, 31(8), 1227–1231. https://doi.org/10.1016/S0008-8846(01)00553-1

Moradi, M. J., Khaleghi, M., Salimi, J., Farhangi, V., & Ramezanianpour, A. M. (2021). Predicting the compressive strength of concrete containing metakaolin with different properties using ANN. Measurement, 183, 109790. https://doi.org/10.1016/j.measurement.2021.109790

Nguyen, T. T., Thai, H. T., & Ngo, T. (2021). Optimised mix design and elastic modulus prediction of ultra-high strength concrete. Construction and Building Materials, 302, 124150. https://doi.org/10.1016/j.conbuildmat.2021.124150

Nourani, V., Elkiran, G., & Abba, S. I. (2018). Wastewater treatment plant performance analysis using artificial intelligence—an ensemble approach. Water Science and Technology, 78(10), 2064–2076. https://doi.org/10.2166/wst.2018.477

Öztaş, A., Pala, M., Özbay, E., Kanca, E., Çaǧlar, N., & Bhatti, M. A. (2006). Predicting the compressive strength and slump of high strength concrete using neural network. Construction and Building Materials, 20(9), 769–775. https://doi.org/10.1016/j.conbuildmat.2005.01.054

Shahabi, H., Shirzadi, A., Ghaderi, K., Omidvar, E., Al-Ansari, N., Clague, J. J., Geertsema, M., Khosravi, K., Amini, A., Bahrami, S., Rahmati, O., Habibi, K., Mohammadi, A., Nguyen, H., Melesse, A. M., Ahmad, B. B., & Ahmad, A. (2020). Flood detection and susceptibility mapping using Sentinel-1 remote sensing data and a machine learning approach: Hybrid intelligence of bagging ensemble based on K-Nearest Neighbor classifier. Remote Sensing. https://doi.org/10.3390/rs12020266

Sheikh, I. A., Khandel, O., Soliman, M., Haase, J. S., & Jaiswal, P. (2021). Seismic fragility analysis using nonlinear autoregressive neural networks with exogenous input. Structure and Infrastructure Engineering. https://doi.org/10.1080/15732479.2021.1894184

Singh, B., Singh, B., Sihag, P., Tomar, A., & Sehgal, A. (2019). Estimation of compressive strength of high-strength concrete by random forest and M5P model tree approaches. Journal of Materials and Engineering Structures «JMES», 6(4), 583–592.

Tayfur, G., Erdem, T. K., & Kırca, Ö. (2014). Strength prediction of high-strength concrete by fuzzy logic and artificial neural networks. Journal of Materials in Civil Engineering, 26(11), 1–7. https://doi.org/10.1061/(asce)mt.1943-5533.0000985

Younis, K. H., & Pilakoutas, K. (2013). Strength prediction model and methods for improving recycled aggregate concrete. Construction and Building Materials, 49, 688–701. https://doi.org/10.1016/j.conbuildmat.2013.09.003

Acknowledgements

The authors give credit for this research to Civil Lake Ltd. and Humans and Machines Ltd.

Funding

Not offered.

Author information

Authors and Affiliations

Contributions

Equal contributions were made by each author to this work.

Corresponding authors

Ethics declarations

Conflict of interest

There is no conflict of interest as declared by the authors.

Ethical approval

Not applicable.

Consent to participate

Certainly.

Consent for publication

Certainly.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Jibril, M.M., Malami, S.I., Muhammad, U.J. et al. High strength concrete compressive strength prediction using an evolutionary computational intelligence algorithm. Asian J Civ Eng 24, 3727–3741 (2023). https://doi.org/10.1007/s42107-023-00746-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s42107-023-00746-7