Abstract

In the concrete industry, compressive strength is the most essential mechanical property. Therefore, insufficient compressive strength may lead to dangerous failure and, thus, becomes very difficult to repair. Consequently, early, and precise prediction of concrete strength is a major issue facing researchers and concrete designers. In this study, high-order response surface methodology (HORSM) is used to develop a prediction model to accurately predict the compressive strength of high-strength concrete (HSC). Different polynomial degrees order ranging from 2 to 5 is used in this model. The HORSM, with five-order polynomial degree, model outperforms several artificial intelligence (AI) modeling approaches which are carried out widely in the prediction of HSC compression strength. Besides, support vector machine (SVM) model was developed in this study and compared with the HORSM. The HORSM models outperformed the SVM models according to different statistical measures. Additionally, HORSM models managed to perfectly predict the HSC compressive strength in less than one second to accomplish the learning processes. While, other AI models including SVM much longer time. Lastly, the use of HORSM for the first time in the concrete technology field provided much accurate prediction results and it has great potential in the field of concrete technology.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

High-strength concrete (HSC) is a special kind of concrete with advanced mechanical properties, i.e., compressive strength greater than 40 MPa. HSC was invented and manufactured in cementitious labs during the sixth decade of the last century [1]. As indicated by Henry Russell [2], the American Concrete Institute (ACI) defines HSC as ‘‘concrete that meets special performance and uniformity requirements that cannot always be achieved routinely by using only conventional materials and normal mixing, placing, and curing procedures”. HSC has unique characteristics such as high durability, very low impermeability and its voids ratio is small that can increase uniform density [3]. HSC is usually used in many construction projects like piers, long spans of bridges as well as high-rise buildings such as skyscrapers.

The performance of HSC is extremely superior and more efficient than ordinary concrete in terms of resisting applied pressure. Therefore, the HSC design process is complicated in terms of selecting the required materials with special mechanical and chemical characteristics. Additionally, it usually requires several trial batches to attain this type of concrete which meets up constructors and engineers. Moreover, ordinary concrete consists of four materials including water, cement, coarse aggregate, silica fume, and fine aggregate. Whereas, HSC requires additional cementitious material such as fly ash and superplasticizer. These additives have a significant and positive impact on the HSC compressive strength since it reduces water-–cement ratio and makes the concrete mixture more homogeneous compared to the classical one.

In the material science and engineering area, the accurate prediction of mechanical characteristics of construction materials is considered as a significant research problem [4]. Recently, the demand for using HSC in projects has significantly increased [5]. To enhance and increase the durability and compressive strength of concrete, several cement materials are frequently used as additives like blast furnace slag, silica fume, fly ash and metakaolin [5,6,7]. The compressive strength of concrete is commonly considered the most vital quality of HSC especially in designing concrete mix and quality control sectors. Moreover, developing an accurate and reliable predictive model can efficiently predict the CCS, which leads to diminishing costs, efforts, and time by providing vital data to structural engineers and designers.

Generally, the (CCS) is predicted utilizing linear and non-linear approaches [5, 8]. The general formula of the regression method can be expressed as \(y = f\left( {b_{i} ,x_{i} } \right)\), where y is the compressive strength test, \(b_{i} \;{\text{and}}\, x_{i}\) are the coefficients and concrete parameters, respectively. Nevertheless, obtaining a reliable and accurate equation using these empirical-based models is a tough task. In addtion, many parameters affect the CCS varies from those that influence on the compressive strength of ordinary concrete. Therefore, the prediction of CCS using regression method may not be the appropriate choice [9, 10]. On the other hand, artificial intelligence approaches (i.e., neural network, support vector machine, extreme learning machine, and regression tree) have been designed to replace the conventional models which were used to predict CCS. These evolutionary applications have efficient performances and can provide more accurate predictions. Consequently, the use of these techniques is generally employed to resolve the major shortcomings of traditional models that suffer from fluctuations and lack of accuracy in predicting CCS [11].

As machine learning approaches are sort of artificial intelligence (AI), they can be applied broadly in many engineering disciplines because they have effective abilities in predicting, optimization, periodization, and planning. Machine learning (ML) techniques have been widely used in concrete industries for modeling numerously hardened and fresh characteristic of different sorts of concrete like eco-concrete compressive strength [12], elasticity modulus of replaced aggregate concrete [12,13,14], tensile and flexural strength of recycled aggregate concrete [15], etc. There are many applications of ML used to predict the CCS of concrete such as artificial neural network [16], support vector machine [17], genetic programming [18], extreme learning machine [19], adaptive neuro-fuzzy inference system [20], classification and regression trees [21], random forest [22], and whale optimization algorithm [23].

Although these types of AI models have achieved relatively adequate computed results in estimating the CCS of HSC, there were some limitations and significant challenges faced by researchers during developing reliable predictive modeling techniques. One of the main issues is the need to establish a proper pre-processing method for CCS data by AI models. The second issue is the necessity of preparing a suitable algorithm to re-adjust weights and biases associated with the structure of the model and calculate the optimal values of hyperparameters for the selected model. Moreover, AI modeling approaches generally need trial and error methods to be accurately tuned [type of transfer function, number of hidden layer(s) and number of hidden nodes within the hidden layer(s)] [24,25,26,27]. On top of that, regression models developed using AI techniques generally encounter significant issues related to black-box characteristics; thus, the trained model might not be understood [28]. However, in the recent decade, several researchers from different scientific fields developed the traditional AI models using several algorithms inspired by nature for enhancing the performance of classical models [29,30,31,32]. The process of hybridizing AI models with bio-inspired algorithms has achieved significant successes by capturing the non-linear relationship between input variables and their corresponding targets through efficiently tuning the essential parameters which have a great influence on the model accuracy [33,34,35,36]. Despite the great developments in the performance of predictive models by incorporating AI approaches with sophisticated algorithms, the structure of these models became very complex, thereby the interpretation of their outcomes and behaviors sometimes becomes extremely difficult and incomprehensible. To tackle these issues, this study suggests employing a new high-order response surface method (HORSM) model to predict the CCS of HSC. In recent years, several scholars conducted predictive models using classical response surface methodology (RSM) and achieved efficient successes in different civil engineering and other sectors such as water resources [37], environmental [38,39,40,41], hydrology [42, 43], construction and material [44] and other fields of science [45, 46]. Although RSM modeling achieved promising outcomes in several areas, in the past four years, HORSM has been presented as an advanced version of RSM for solving challenging issues in water resources and environmental fields [47, 48]. This study attempts to use the HORSM modeling approach in the concrete and construction field for the prediction of the HSC compression strength, and comparing the accuracy of the employed model with robust AI predictive models and other modeling approaches carried out in the previous studies. For a fair quantitative assessment and to examine the capability of the HORSM model in predicting CCS, the proposed model was validated against the support vector machine (SVM) model which was extensively used for the prediction of CCS by many researchers [49,50,51,52].

This study aims to establish a new prediction modeling approach based on HORSM for accurately predicting HSC compressive strength to be an alternative and reliable competitor to AI models, which are nowadays widely used in concrete and construction material fields. Subsequently, The HORSM model leads to overcome the limitations of the AI models, which were explained previously, and minimizing errors in calculating the HSC compressive strength. Moreover, SVM model is developed in this study to predict the HSC compressive strength. Later, a critical comparison of the performance of SVM and HORSM to predict the CCS of HSC is established.

2 Methodology

2.1 High-order response surface

Response surface method (RSM) is a beneficial tool that can be utilized for modeling and simulating the physical, chemical, engineering, and environmental issues. Furthermore, it is useful for the complicated process calibration by applying several sets of polynomial functions on the set of experimental samples. RSM function describes a certain process based on a specific mathematical model. Second-order polynomial form with cross terms is commonly performed for RSM. The RSM modeling approach might produce an adequate prediction of HSC compressive strength. Nevertheless, RSM models might face some limitations in terms of reliability and accuracy due to the high nonlinear relationship between predictors and CCS. Therefore, it might be essential to apply a mathematical form with a highly polynomial nonlinear degree to attain reliable and precise prediction [47]. Prediction of CCS using ordinary RSM based on a quadratic polynomial form could produce imprecise estimates with less reliability. Consequently, a new version of RSM is suggested based on a high-order degree of polynomial function combined with highly nonlinear cross terms. This modeling technique is called a high-order response surface method (HORSM) which is employed in this study to predict the CCS of HSC. The HORSM function can be expressed by Eq. (1), depending on several input variables V \(\{ v_{1,} v_{2} ,v_{3,} \ldots\)}

where \(\hat{C}\left( v \right)\) refers to high-order calculated RSM for CCS, n is the number of input predictors, \(\beta_{0} ,\,\beta_{i}\), \(\beta_{ij}\), \(a_{ij}\), \(d_{ij}\) and \(g_{ij}\) are unknown coefficients.

The number of coefficients can be determined using the Eq. (2) [47]

The coefficients are calculated according to the calibration of several experimental samples, where Q is the order of RSM that is set in this study from 2 to 5 orders. The unknown coefficients of RSM in Eq. (1) are generally estimated based on least square error method. These coefficients are determined by minimizing the error between actual (C (v)) and calculated (\(\hat{C}\left( v \right) = p(v)^{\mathrm{T}}\)) values of CCS. The error can be expressed by Eq. (3):

where \(C\left( v \right)\) = [\(C_{1} ,C_{2} ,C_{3} , \ldots C_{{{\text{NI}}}} ]^{\mathrm{T}}\) is actual and \(P(v)^{\mathrm{T}} = \left[ {P\left( {C_{1} } \right),P\left( {C_{2} } \right),P\left( {C_{3} } \right), \ldots .P\left( {C_{{{\text{NI}}}} } \right)} \right]\) is the predicted target based on the higher-order polynomial form for NI number of input data observations. Equation (4) describes the predicted value of HSC concrete compressive strength using 5- order degree RSM based on several variables (suppose 3 variables).

Minimizing error function in Eq. (4) according to unknown coefficients \(a\) can lead to a linear form system. Consequently, the coefficients \(a\) are optimized by minimizing Eq. (4) and the predicted values of CCS can be obtained using the Eq. (5):

The adopted model of high-order polynomial forms described in Eq. (2) performs much better than ordinary (quadratic RSM) and provides more accurate results when it comes to simulating complex phenomena. The major phases for predicting CCS using HORSM approach can be summarized as below:

-

Set the input data comprising CCS (C(v)) and predictors \(v_{1} ,v_{2} ,v_{3} , \ldots\)

-

Choose the order of RSM and calculate the predicted values using Eqs. (2) and (4) for training the model (P (\(c))\) based on given data.

-

Determine the predicted vector for all data samples comprising training and testing data (\(P\left( {v_{{{\text{all}}}} } \right)).\)

-

Predicting CCS (\(\hat{C}\left( v \right)\)) by applying Eq. (6).

$$\widehat{{C}}\left( v \right) = P(v_{{{\text{all}}}} )^{\mathrm{T}} \left[ {P(v)^{\mathrm{T}} P\left( v \right)} \right]^{ - 1} \left[ {P(v)^{\mathrm{T}} P\left( v \right)} \right].$$(6)

The previous steps illustrate the process of constituting HORSM for estimating the CCS. In this study, the program code for the HORSM algorithm based on four previous phases was developed using MATLAB language. Figure 1 explains the framework of the suggested HORSM model.

Classical RSM is considered computationally effective in the process of calibrating polynomial functions. However, when the endeavor is to develop a flexible model used for predicting the compressive strength of concrete through a limited number of predictors that have non-linear correlation coefficients, the HORSM model is a reliable and robust option in this case.

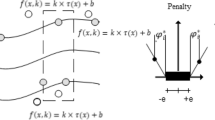

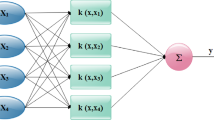

2.2 Support vector machine

Support vector machine (SVM) approach is a sort of AI technique that was developed in 1995 by Vapnik [53]. It is commonly used to address and deal with issues related to classification, regression, and prediction. SVM has been successfully used for modeling different civil engineering issues and achieving high-accuracy results [54,55,56,57]. In this supervised learning machine, SVM is created from the input–output mapping functions of labeled training dataset values. SVM can be employed to address issues related to classification purposes which have only specific values (i.e., 0, 1, and 2) and regression purpose, which has continuous real magnitudes. Typically, the regression model of SVM is very different in solving complicated and nonlinear regression problems by constructing and mapping input–output [58] and extracting a certain relationship between predictors and target values. With respect to the regression task using the SVM model, the first stage is converting the input values to the n-dimensional feature space by applying a specific mapping method. This can be done by applying a nonlinear kernel function then fit into the high-dimensional space. Thus, the input dataset converted and looked more discrete compared to the original data-space [59]. The mechanism of SVM is well described by [41]. In this context, SVM has been utilized as a powerful AI modeling technique to predict the CCS of HSC and compare its targets with the proposed HORSM model to check the accuracy of the adopted predictive model.

For precisely estimating the quality of input parameters, the variabilities of each input variable are compared with each other to obtain a better assessment of input parameters. The normalization approach conducted for all input variables to get a better perspective, since all these variables have a different range of values. Thus, data, before being introduced to SVM, were normalized and set between − 1 and 1 based on Eq. (7). The normalization step is very significant in terms of enhancing and speeding up the process of learning and getting better generalization.

where \(\ddot{X}\) is the ith normalized value of variable (x).

For analyzing and examining the input factor variabilities, the interquartile range (IQR) is calculated by utilizing valued quartiles (Q75%–Q25%) as clearly exhibited in Fig. 2.

Figure 2 illustrates the IQR of scaled input parameters which are in range of 0.639 and 2. Moreover, water (IQR = 2) and fine aggregate (IQR = 0.639) have the highest and lowest values of variability compared to the rest of input factors.

Additionally, radial basis kernel transferee function was used to transfer input data to higher-dimensional space. The code of SVM has been written using MATLAB 2018b.

2.3 Data collection

The concrete mixture used in the study for obtaining HSC consists of water, coarse aggregate, fine aggregate, polycarboxylate superplasticizer (SP) admixture and Type 1 Portland cement. The fine aggregate source was the crushed aggregate. In all samples of concrete mixture, superplasticizer was utilized with a density of 1.06 g/cm3, which led to obtaining efficient fluidity. The cement weights ratio used to conduct the experimental samples varied from 0 to 2% with increment 0.25%. Three cylindrical samples for each mixture were utilized in CCS and each one of 100 mm diameter and 200 mm height was cast for CCS in accordance with ASTMC39 code. The three samples of each mixture were cast and cured in a controlled environment with steady humidity (\(60\overline{ + }5{\text{\% }}\)) and temperature (\(20\overline{ + }\) 1 °C). Additionally, the CCS with the age of 25 days was carried out utilizing a universal testing machine with a capacity of 2500 KN. Table 1 shows the statistical description of each predictor and CCS (target). It is worth noting that the samples used in this study were 324 samples, 75% of which were used to train predictive models; whereas the rest of the samples were used to test the efficacy and validity of those models. All collected data obtained from reliable laboratory tests and published in the literature [19].

2.4 Performance evaluation

The proper conditions of the selection of an efficient and robust nonlinear predictive model can provide accurate predictions of complicated and nonlinear engineering issues. In accordance with the American Society for Civil Engineering area (Hydrology, 2000) for evaluating prediction models, this society recommends using two different types of performance measures, namely descriptive, and visual statistics. The predicted and actual values were utilized for checking the mean, standard deviation, variance, skewness, and kurtosis; while the more standardized statistical matrices are applied to predict and validate the actual values in the test dataset. To examine whether the proposed models in this study, (HORSM) and SVM model, qualify to predict the CCS of HSC, statistical errors were applied such as correlation coefficient (r), root mean square error (RMSE), mean absolute error (MAE), relative error percentage (RE), Willmott’s index (d), relative (%) error data (MAE and RMSE) and nash efficiency (NE). The mathematical expressions of these statistical parameters can be shown as follows:

-

I.

The coefficient of correlation (r) is mathematically expressed in Eq. (8)

$$r = \frac{{\mathop \sum \nolimits_{i = 1}^{n} \left( {{\text{CCSobs}}_{i} - \overline{{{\text{CCSobs}}}} } \right)\left( { {\text{CCSprd}}_{i} - \overline{{{\text{CCSprd}}}} } \right)}}{{\sqrt {\mathop \sum \nolimits_{i = 1}^{n} ({\text{CCSobs}}_{i} - \overline{{{\text{CCSobs}}}} )^{2} } \sqrt {\mathop \sum \nolimits_{i = 1}^{n} ({\text{CCSprd}}_{i} - \overline{{{\text{CCSprd}}}} )^{2} } }} .$$(8) -

II.

Mean absolute error (MAE) is mathematically expressed in Eq. (9)

$${\text{MAE}} = \frac{1}{N}\mathop \sum \limits_{i = 1}^{n} \left| {\left( {{\text{CCSobs}}_{i} - {\text{CCSprd}}_{i} } \right)} \right| .$$(9) -

III.

Root mean square error (RMSE) is mathematically expressed in Eq. (10)

$${\text{RMSE}} = \sqrt {\frac{1}{N}\mathop \sum \limits_{i = 1}^{n} ({\text{CCSobs}}_{i} - {\text{CCSprd}}_{i} )^{2} } .$$(10) -

IV.

Mean absolute percentage error (MAPE %) is mathematically expressed in Eq. (11)

$${\text{MAPE\% }} = \frac{1}{N}\mathop \sum \limits_{i = 1}^{n} \left| {\frac{{\left( {{\text{CCSobs}}_{i} - {\text{CCSprd}}_{i} } \right)}}{{{\text{CCSobs}}_{i} }}} \right| \times 100.$$(11) -

V.

Relative root mean square percentage error (RRMSE) is mathematically expressed in Eq. (12)

$${\text{RRMSE}} = \frac{{\sqrt {\frac{1}{N}\mathop \sum \nolimits_{i = 1}^{n} ({\text{CCSobs}}_{i} - {\text{CCSprd}}_{i} )^{2} } }}{{\frac{1}{n}\mathop \sum \nolimits_{i = 1}^{n} \left( {{\text{CCSobs}}_{i} } \right)}} .$$(12) -

VI.

Willmott’s index (d) is mathematically expressed in Eq. (13):

$$d = 1 - \left[ {\frac{{\frac{1}{N}\mathop \sum \nolimits_{i = 1}^{n} \left({\text{CCSobs}}_{i} - {\text{CCSprd}}_{i} \right)^{2} }}{{\mathop \sum \nolimits_{i = 1}^{n} \left( \left| {{\text{CCSprd}}_{i} - \overline{{{\text{CCSobs}}}} } \right| + \left| {{\text{CCSobs}}_{i} - \overline{{{\text{CCSobs}}}} } \right| \right)^{2} }}} \right] .$$(13) -

VII.

Nash efficiency (NE) is mathematically expressed in Eq. (14)

$${\text{NE}} = 1 - \left[ {\frac{{\mathop \sum \nolimits_{i = 1}^{n} ({\text{CCSobs}}_{i} - {\text{CCSprd}}_{i} )^{2} }}{{\mathop \sum \nolimits_{i = 1}^{n} ({\text{CCSobs}}_{i} - \overline{{{\text{CCSobs}}}} )^{2} }}} \right] .$$(14) -

VIII.

Relative error percentage is mathematically expressed in Eq. (15)

$${\text{RE}}\% = 100 \times \frac{{{\text{CCSobs}}_{i} - {\text{CCSprd}}_{i} }}{{{\text{CCSobs}}_{i} }}.$$(15)

Here, \({\text{CCSobs}}_{i}\) and \({\text{CCSprd}}_{i}\) are the observed and predicted ith values of CCS; \(\overline{{{\text{CCSobs}}}}\) and \(\overline{{{\text{CCSprd}}}}\) represent the average CCS of observed and predicted in a trained and tested sample set; n refers to the total number of data points.

3 Result and discussion

The motivation of this study is to apply a new, alternative, and reliable predicting model called high-order response surface method (HORSM) for construction, and industrial material engineering fields (i.e., compressive strength of high-strength concrete). The new HORSM modeling approaches were validated against robust AI predictive models that used widely in industrial material and manufacture for the development of concrete mixtures sectors such as SVM models. All mentioned models were developed based on five input variables namely, cement, coarse aggregate, fine aggregate, water, and superplasticizer.

In the quantitative assessment Table 2 shows the training and testing performance indicators as well as the optimal parameters for all developed SVM models. In accordance with the same table, the HORSM models with different order degrees ranging from 2 to 5 degrees were evaluated against SVM modeling approaches. The SVM models enerally performed very well during the training phase and achieved higher values of performance measures like r, N, and d ranging from 0.998 to 0.970, 0.997 to 0.941, and 0.999 to 0.984, respectively. However, HORSM models provided varying values of other statistical parameters like MAE, RMSE, MAPE, RRMSE and maximum absolute relative error ranging from 1.924 to 0.419, 2.299 to 0.550, and 3.816% to 0.852%, 4.451% to 1.064%, and 11.945% to 11.002%, respectively. Based on these results, it can be concluded that the most unfavored model was HORSM 2. Additionally, the predictive results improved by the raising HORSM order, Thus, HORSM with 5 polynomial orders generated the highest accuracy results in comparison with all HORSM modeling strategies during the training phase. For fair evaluation, SVM models in the training set were evaluated according to the same statistical matrices that utilized before and these models yielded higher values of r, N, and d ranging from 0.999 to 0.997, 0.995 to 0.998, and 0.999 to 0.999, respectively. Nevertheless, SVM models provided varying values of other statistical parameters such as MAE, RMSE, MAPE, RRMSE and maximum absolute relative error ranging from 0.394 to 0.194, 0.683 to 0.466, 0.409% to 0.813%, 1.323% to 0.902%, and 7.371% to 4.071%, respectively.

Although SVM models provided slightly better accuracy in the prediction of CCS than HORSM models, the training stage is not considered the decisive phase for determining the best predicting modeling method. Thus, the testing phase is the most important stage in the selection process of the best and reliable predictive models because, in this stage, the model’s accuracy will be examined by introducing unseen data and monitoring its performance in generalization capabilities. Returning to Table 2, the HORSM models generally produced excellent responses during the testing stage in terms of r, N and d which ranging from 0.995 to 0.967, 0.989 to 0.903, and 0.997 to 0.980, respectively. With respect to other statistical measures such as MAE, RMSE, MAPE, RRMSE, and maximum absolute relative error, their values were ranging from 2.084 to 0.734, 2.483 to 0.989, 3.957% to 1.437%, 4.704% to 1.874%, and 11.608% to 5.144%, respectively. The most impressive observation could attract attention when the HORSM models showed almost similar behavior in both testing set, and training set and the accuracy was gradually improved with the increase in the nonlinear order. For instance, the HORSM (5) model produced the most accurate predicted results compared to other HORSM modeling approaches during both training and testing phases. Moreover, increasing polynomial nonlinear degrees to five generated a robust predictive model that managed to recognize and capture effectively the complicated and nonlinear relationship between compressive strength and its input variables compared to other HORSM models. With respect to SVM models, it is useful to check their performances during the testing phase and compare it to the training stage. Generally, the accuracies of these models are reduced in comparison to the training set according to statistical indices. However, there was relatively a good agreement between actual and predicted values of CCS and had achieved with r, N, d, MAE, RMSE, MAPE, RRMSE and Maximum Absolute Relative Error ranging from 0.994 to 0.992, 0.986 to 0.983, 0.996 to 0.997, 0.930 to 0.875,1.222 to 1.123, 1.878% to 1.761%, 2.316% to 2.127%, and 10.435% to 5.022%, respectively.

The scatter plot generated between predicted and observed values of CCS over the testing stage for all HORSM and SVM modeling approaches (see Figs. 3, 4). Consequently, the efficiency of the proposed modeling approach can be easily observed by identifying visually the diversion of each single data point. In accordance with the coefficient of determination magnitude (\(R^{2}\)), and the coefficient of correlation (r) which exhibited an exquisite and subtle correlation to the ideal line of 45° for the HORSM (5) model. By the evidence above, it can be concluded that the HORSM with 5-order degrees provided more accurate results in comparison with other HORSM models; therefore, it is vital to carry out more comprehensive assessments with comparable SVM models. Moreover, most of the SVM models generally provided satisfactory predictions in terms of relative error percentage (see Fig. 5). However, these models generated relatively higher values of mean absolute relative error in comparison with HORSM (5) over the testing stage (see Fig. 6). Besides, there was a good match that can be observed from Fig. 7 between actual and predicted values where HORSM (5) provided more accurate predictions among all SVM modeling approaches. As mentioned above, the HORSM (5) model exhibited excellent results compared to other HORSM models, it is crucial to conduct further and detailed comparisons with SVM modeling approaches. Based on the known fact that the reliable models should perform very well in both training and testing set, therefore, HORSM (5) and SVM models were evaluated according to the ratio of statistical parameters like (MAE, RMSE, MAPE%, and RRMSE%) during training phase over their values in the testing phase. Thus, the highest values of statistical indicators, close to one, recorded reference to the most reliable modeling technique. Among the five predictive models, HORSM (5) exhibited the best and more accurate model (see Fig. 8). The adopted modeling approach performed very well in both training and testing phases, thereby reducing the predicting errors to the lowest level compared to other AI models. Moreover, the statistical characteristics, such as mean, standard deviation, skewness, and so on, of the employed model were very close to the actual values of CCS over the testing of data proving smaller magnitudes of absolute differences than SVM models (see Table 3). Additionally, Table 3 demonstrates that the process learning time of proposed models was extremely fast in comparison with SVM models. The adopted model trained efficiently within less than one second. Wherein, the SVM models needed a long time to completely train the model which ranged between 97.8111 and 503.198 s. Thus, it was clear to state that the HORSM model was considered less computationally cost and less time-consuming as well as more efficiently accurate in predicting than the SVM modeling strategies.

The proficiency of the adopted model was intensively evaluated against another AI modeling approach that was used in a previous study for the same dataset. Al-Shamiri et al. [19] conducted a study to predict the CCS of HSC using two different AI modeling methods, including feed-forward backpropagation artificial neural network (FFBANN) and extreme learning machine (ELM) approach. The study recommended using the ELM in the prediction of CCS since it is very fast and has robust capabilities in predicting as well as better generalization performance. ELM models achieved the best prediction results during training and testing stages with minimum predicted errors. For instance, the ELM model produced MAPE value during the testing phase equal to 1.8178%, while the HORSM produced a smaller value of MAPE (1.43%), which means there was a satisfactory enhancement achieved using HORSM (5), thereby improving the predicted result within 20.95%. Figure 9 illustrated more detailed analyses regarding the improvement in accuracy of predictions to using the HORSM (5) in comparison with outcomes achieved by Al-Shamiri et al. for both training and testing phases according to several statistical measures.

Improvement in accuracy of predictions due to using HORSM with five polynomial order degrees compared to ELM modeling approach developed by a previous study [19] over the training and testing phases

3.1 Sensitivity analysis

An accurate Investigation of identifying the most significant parameters that have a major impact on compressive strength of high-strength concrete is crucial in material and structural engineering. To carry out the analysis, cosine amplitude [60, 61] method can be employed for that aim. The mathematical expression of the adopted method can be illustrated as below Eq. (16):

In the equation previously stated, the parameters \(X_{i }\) and \(X_{{j }}\), respectively, represent the input and output values, while n denotes the number of datasets in testing phase. Lastly, \(R_{ij}\) with range between 0 and 1, giving more information on the strength between each variable and target. Based on the given formula, if the magnitude of \(R_{ij}\) (for a certain parameter) is 0, that means there is no relationship between that parameter and the target. However, when the value of \(R_{ij}\) is close to 1 for specific variable(s), then it can be said that the parameter(s) can strongly affect the capacity of compressive strength of concrete. Figure 10 showed the assessment results regarding the influence of each applied parameters to the target. As exhibited from the figure, the cement, coarse aggregate, and water variable are, respectively, having the strongest effects on the model output values.

4 Conclusion

This study attempted to develop a reliable and modern model to accurately predict compressive strength of HSC. Establishing a robust modeling approach is a tough mission in material sectors because the presence of several parameters affects the properties of HSC in comparison to ordinary concrete. Thus, achieving an accurate predicting model is considered an extremely difficult task. However, precise and early forecasting can save time, effort, and cost by reducing trial mixtures. In this context, HORSM was used with different order polynomial degrees ranging from 2 to 5 for developing predictive models. The adopted models have been developed to compete with AI modeling approaches which carried out widely in the prediction of CCS. SVM approach used broadly in the prediction of CCS, therefore, it was developed as a comparable model to examine the performance of HORSM modeling strategies in this study. The obtained result of this study revealed that the accuracies of HORSM modeling improved as order polynomial degrees increased and reached 5 which produced the most accurate outcomes. Yet, the HORSM models outperform the SVM models and generate fewer predicted errors. Additionally, HORSM models managed to perfectly predict the CCS and its algorithm needed in general, less than one second to accomplish the learning processes. In contrast, the algorithm of SVM needed a long time to train the model varying from 97.8111 to 503.198 s. For further assessment, the obtained results of the HORSM also compared with other robust AI modeling techniques such as extreme learning machine using the same data set. The prediction accuracies of the HORSM were more efficient than the extreme learning machine models for both the training and testing set. In accordance with the mentioned results, it can be concluded that the excellent performances of HORSM modeling technique provided very promising results that could compete with AI models in the future.

References

Carrasquillo RL, Nilson AH (1981) Slate FO properties of high strength concrete subject to short-term loads. J Proc 3:171–178

Russell HG (1999) ACI defines high-performance concrete. Concr Int 21(2):56–57

Mbessa M, Péra J (2001) Durability of high-strength concrete in ammonium sulfate solution. Cement Concr Res 31(8):1227–1231

Sobhani J, Najimi M, Pourkhorshidi AR, Parhizkar T (2010) Prediction of the compressive strength of no-slump concrete: a comparative study of regression, neural network and ANFIS models. Constr Build Mater 24(5):709–718

Bharatkumar B, Narayanan R, Raghuprasad B, Ramachandramurthy D (2001) Mix proportioning of high performance concrete. Cement Concr Compos 23(1):71–80

Papadakis V, Tsimas S (2002) Supplementary cementing materials in concrete: Part I: efficiency and design. Cement Concr Res 32(10):1525–1532

Prasad BR, Eskandari H, Reddy BV (2009) Prediction of compressive strength of SCC and HPC with high volume fly ash using ANN. Constr Build Mater 23(1):117–128

Bhanja S, Sengupta B (2002) Investigations on the compressive strength of silica fume concrete using statistical methods. Cement Concr Res 32(9):1391–1394

Yeh I-C, Lien L-C (2009) Knowledge discovery of concrete material using genetic operation trees. Expert Syst Appl 36(3):5807–5812

Hameed MM, AlOmar MK (2020) Prediction of compressive strength of high-performance concrete: hybrid artificial intelligence technique. In: Applied computing to support industry: innovation and technology. Springer International Publishing, Cham, pp 323–335

Topcu IB, Sarıdemir M (2008) Prediction of compressive strength of concrete containing fly ash using artificial neural networks and fuzzy logic. Comput Mater Sci 41(3):305–311

Velay-Lizancos M, Perez-Ordoñez JL, Martinez-Lage I, Vazquez-Burgo P (2017) Analytical and genetic programming model of compressive strength of eco concretes by NDT according to curing temperature. Constr Build Mater 144:195–206

Behnood A, Olek J, Glinicki MA (2015) Predicting modulus elasticity of recycled aggregate concrete using M5′ model tree algorithm. Constr Build Mater 94:137–147

Golafshani EM, Behnood A (2018) Automatic regression methods for formulation of elastic modulus of recycled aggregate concrete. Appl Soft Comput 64:377–400

Behnood A, Verian KP, Gharehveran MM (2015) Evaluation of the splitting tensile strength in plain and steel fiber-reinforced concrete based on the compressive strength. Constr Build Mater 98:519–529

Dao DV, Adeli H, Ly H-B, Le LM, Le VM, Le T-T, Pham BT (2020) A sensitivity and robustness analysis of GPR and ANN for high-performance concrete compressive strength prediction using a Monte Carlo simulation. Sustainability 12(3):830

Ling H, Qian C, Kang W, Liang C, Chen H (2019) Combination of support vector machine and K-Fold cross validation to predict compressive strength of concrete in marine environment. Constr Build Mater 206:355–363

Tsai H-C, Liao M-C (2019) Knowledge-based learning for modeling concrete compressive strength using genetic programming. Comput Concr 23(4):255–265

Al-Shamiri AK, Kim JH, Yuan T-F, Yoon YS (2019) Modeling the compressive strength of high-strength concrete: an extreme learning approach. Constr Build Mater 208:204–219

Golafshani EM, Behnood A, Arashpour M (2020) Predicting the compressive strength of normal and high-performance concretes using ANN and ANFIS hybridized with grey wolf optimizer. Constr Build Mater 232:117266

Gholampour A, Mansouri I, Kisi O, Ozbakkaloglu T (2020) Evaluation of mechanical properties of concretes containing coarse recycled concrete aggregates using multivariate adaptive regression splines (MARS), M5 model tree (M5Tree), and least squares support vector regression (LSSVR) models. Neural Comput Appl 32(1):295–308. https://doi.org/10.1007/s00521-018-3630-y

Singh B, Sihag P, Tomar A, Sehgal A (2019) Estimation of compressive strength of high-strength concrete by random forest and M5P model tree approaches. J Mater Eng Struct JMES 6(4):583–592

Tien Bui D, MaM A, Ghareh S, Moayedi H, Nguyen H (2019) Fine-tuning of neural computing using whale optimization algorithm for predicting compressive strength of concrete. Eng Comput. https://doi.org/10.1007/s00366-019-00850-w

Afan HA, El-Shafie A, Yaseen ZM, Hameed MM, Wan Mohtar WHM, Hussain A (2015) ANN based sediment prediction model utilizing different input scenarios. Water Resour Manag 29(4):1231–1245. https://doi.org/10.1007/s11269-014-0870-1

Hameed M, Sharqi SS, Yaseen ZM, Afan HA, Hussain A, Elshafie A (2017) Application of artificial intelligence (AI) techniques in water quality index prediction: a case study in tropical region. Malays Neural Comput Appl 28(1):893–905. https://doi.org/10.1007/s00521-016-2404-7

Yaseen ZM, El-Shafie A, Afan HA, Hameed M, Mohtar WHMW, Hussain A (2016) RBFNN versus FFNN for daily river flow forecasting at Johor River. Malays Neural Comput Appl 27(6):1533–1542. https://doi.org/10.1007/s00521-015-1952-6

AlOmar MK, Hameed MM, Al-Ansari N, AlSaadi MA (2020) Data-driven model for the prediction of total dissolved gas: robust artificial intelligence approach. Adv Civ Eng 2020:6618842. https://doi.org/10.1155/2020/6618842

Chen S, Gu C, Lin C, Wang Y, Hariri-Ardebili MA (2020) Prediction, monitoring, and interpretation of dam leakage flow via adaptative kernel extreme learning machine. Measurement 166:108161. https://doi.org/10.1016/j.measurement.2020.108161

Zhang G, Ali ZH, Aldlemy MS, Mussa MH, Salih SQ, Hameed MM, Al-Khafaji ZS, Yaseen ZM (2020) Reinforced concrete deep beam shear strength capacity modelling using an integrative bio-inspired algorithm with an artificial intelligence model. Eng Comput. https://doi.org/10.1007/s00366-020-01137-1

Alnaqi AA, Moayedi H, Shahsavar A, Nguyen TK (2019) Prediction of energetic performance of a building integrated photovoltaic/thermal system thorough artificial neural network and hybrid particle swarm optimization models. Energy Convers Manag 183:137–148. https://doi.org/10.1016/j.enconman.2019.01.005

Moayedi H, Raftari M, Sharifi A, Jusoh WAW, Rashid ASA (2020) Optimization of ANFIS with GA and PSO estimating α ratio in driven piles. Eng Comput 36(1):227–238. https://doi.org/10.1007/s00366-018-00694-w

Nguyen H, Mehrabi M, Kalantar B, Moayedi H, MaM A (2019) Potential of hybrid evolutionary approaches for assessment of geo-hazard landslide susceptibility mapping. Geomat Nat Hazards Risk 10(1):1667–1693. https://doi.org/10.1080/19475705.2019.1607782

Zhou G, Moayedi H, Bahiraei M, Lyu Z (2020) Employing artificial bee colony and particle swarm techniques for optimizing a neural network in prediction of heating and cooling loads of residential buildings. J Clean Prod 254:120082. https://doi.org/10.1016/j.jclepro.2020.120082

Moayedi H, Tien Bui D, Gör M, Pradhan B, Jaafari A (2019) The feasibility of three prediction techniques of the artificial neural network, adaptive neuro-fuzzy inference system, and hybrid particle swarm optimization for assessing the safety factor of cohesive slopes. ISPRS Int J Geo-Inf 8(9):391

Shariati M, Mafipour MS, Ghahremani B, Azarhomayun F, Ahmadi M, Trung NT, Shariati A (2020) A novel hybrid extreme learning machine–grey wolf optimizer (ELM-GWO) model to predict compressive strength of concrete with partial replacements for cement. Eng Comput. https://doi.org/10.1007/s00366-020-01081-0

Xu C, Nait Amar M, Ghriga MA, Ouaer H, Zhang X, Hasanipanah M (2020) Evolving support vector regression using Grey Wolf optimization; forecasting the geomechanical properties of rock. Eng Comput. https://doi.org/10.1007/s00366-020-01131-7

Keshtegar B, MeAB S (2018) Modified response surface method basis harmony search to predict the burst pressure of corroded pipelines. Eng Fail Anal 89:177–199

Heddam S, Keshtegar B, Kisi O (2019) Predicting total dissolved gas concentration on a daily scale using kriging interpolation, response surface method and artificial neural network: case study of Columbia River Basin Dams, USA. Nat Resour Res 1–18

Keshtegar B, Heddam S (2018) Modeling daily dissolved oxygen concentration using modified response surface method and artificial neural network: a comparative study. Neural Comput Appl 30(10):2995–3006. https://doi.org/10.1007/s00521-017-2917-8

Fiyadh SS, AlSaadi MA, AlOmar MK, Fayaed SS, Mjalli FS, El-Shafie A (2018) BTPC-based DES-functionalized CNTs for As3+ removal from water: NARX neural network approach. J Environ Eng 144(8):04018070. https://doi.org/10.1061/(ASCE)EE.1943-7870.0001412

AlOmar MK, Hameed MM, AlSaadi MA (2020) Multi hours ahead prediction of surface ozone gas concentration: robust artificial intelligence approach. Atmos Pollut Res 11(9):1572–1587. https://doi.org/10.1016/j.apr.2020.06.024

Keshtegar B, Kisi O (2017) Modified response-surface method: new approach for modeling pan evaporation. J Hydrol Eng 22(10):04017045

Keshtegar B, Kisi O, Zounemat-Kermani M (2019) Polynomial chaos expansion and response surface method for nonlinear modelling of reference evapotranspiration. Hydrol Sci J 64(6):720–730

Hammoudi A, Moussaceb K, Belebchouche C, Dahmoune F (2019) Comparison of artificial neural network (ANN) and response surface methodology (RSM) prediction in compressive strength of recycled concrete aggregates. Constr Build Mater 209:425–436

Keshtegar B, Mert C, Kisi O (2018) Comparison of four heuristic regression techniques in solar radiation modeling: kriging method vs RSM, MARS and M5 model tree. Renew Sustain Energy Rev 81:330–341

Samuel OD, Okwu MO (2019) Comparison of response surface methodology (RSM) and artificial neural network (ANN) in modelling of waste coconut oil ethyl esters production. Energy Sources Part A Recov Utiliz Environ Effects 41(9):1049–1061. https://doi.org/10.1080/15567036.2018.1539138

Keshtegar B, Allawi MF, Afan HA, El-Shafie A (2016) Optimized river stream-flow forecasting model utilizing high-order response surface method. Water Resour Manag 30(11):3899–3914. https://doi.org/10.1007/s11269-016-1397-4

Keshtegar B, Heddam S, Kisi O, Zhu S-P (2019) Modeling total dissolved gas (TDG) concentration at Columbia river basin dams: high-order response surface method (H-RSM) vs. M5Tree, LSSVM, and MARS. Arab J Geosci 12(17):544

Azimi-Pour M, Eskandari-Naddaf H, Pakzad A (2020) Linear and non-linear SVM prediction for fresh properties and compressive strength of high volume fly ash self-compacting concrete. Constr Build Mater 230:117021

Çelik SB (2019) Prediction of uniaxial compressive strength of carbonate rocks from nondestructive tests using multivariate regression and LS-SVM methods. Arab J Geosci 12(6):193. https://doi.org/10.1007/s12517-019-4307-2

Ghanizadeh AR, Abbaslou H, Amlashi AT, Alidoust P (2019) Modeling of bentonite/sepiolite plastic concrete compressive strength using artificial neural network and support vector machine. Front Struct Civ Eng 13(1):215–239. https://doi.org/10.1007/s11709-018-0489-z

Tanyildizi H (2018) Prediction of the strength properties of carbon fiber-reinforced lightweight concrete exposed to the high temperature using artificial neural network and support vector machine. Adv Civ Eng

Vn V (1995) The nature of statistical learning theory. Springer, New York

Park JY, Yoon YG, Oh TK (2019) Prediction of concrete strength with P-, S-, R-Wave velocities by support vector machine (SVM) and artificial neural network (ANN). Appl Sci 9(19):4053

Cw L, Huang Xh, Jj M, Gz Ba (2019) Modification of finite element models based on support vector machines for reinforced concrete beam vibrational analyses at elevated temperatures. Struct Control Health Monit 26(6):e2350

Masino J, Pinay J, Reischl M, Gauterin F (2017) Road surface prediction from acoustical measurements in the tire cavity using support vector machine. Appl Acoust 125:41–48

Li L, Zheng W, Wang Y (2019) Prediction of moment redistribution in statically indeterminate reinforced concrete structures using artificial neural network and support vector regression. Appl Sci 9(1):28

Smola AJ, Schölkopf B (2004) A tutorial on support vector regression. Stat Comput 14(3):199–222

Chou J-S, Tsai C-F, Pham A-D, Lu Y-H (2014) Machine learning in concrete strength simulations: Multi-nation data analytics. Constr Build Mater 73:771–780

Momeni E, Nazir R, Jahed Armaghani D, Maizir H (2014) Prediction of pile bearing capacity using a hybrid genetic algorithm-based ANN. Measurement 57:122–131. https://doi.org/10.1016/j.measurement.2014.08.007

Ji X, Liang SY (2017) Model-based sensitivity analysis of machining-induced residual stress under minimum quantity lubrication. Proc Inst Mech Eng Part B J Eng Manuf 231(9):1528–1541. https://doi.org/10.1177/0954405415601802

Acknowledgements

The authors would like to express their thanks to ALMaarif University College (AUC) for funding this research.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Hameed, M.M., AlOmar, M.K., Baniya, W.J. et al. Prediction of high-strength concrete: high-order response surface methodology modeling approach. Engineering with Computers 38 (Suppl 2), 1655–1668 (2022). https://doi.org/10.1007/s00366-021-01284-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00366-021-01284-z