Abstract

Two improved nonlinear conjugate gradient methods are proposed by using the second inequality of the strong Wolfe line search. Under usual assumptions, we proved that the improved methods possess the sufficient descent property and global convergence. By testing the unconstrained optimization problems which taken from the CUTE library and other usual test collections, some large-scale numerical experiments for the presented methods and their comparisons are executed. The detailed results are listed in tables and their corresponding performance profiles are reported in figures, which show that our improved methods are superior to their comparisons.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The conjugate gradient method (CGM) is an ideal method for solving the large-scale unconstrained optimization problems due to its simple structure and low storage capacity, and therefore, it is usually extended to obtain the solution of optimization arising in many fields of science and engineering. For example, sparse recovery [6], heat conduction problem [21], inverse blackbody radiation problem [15], power system [16, 22, 23] and so on.

In this paper, we consider the following unconstrained optimization problem:

where \(f:R^n\rightarrow R\) is a continuously differentiable function. Denote \(g_{k}=g(x_{k})=\nabla f(x_{k})\) for \(x_{k}\in R^{n}\). For CGM, the classic formula for generating the new iterate \(x_{k+1}\) is

where \(d_{k}\) is the search direction, and \(\alpha _k\) is a step-length computed by a suitable inexact line search. Usually, the popular inexact line searches include the weak Wolfe line search

and the strong Wolfe line search

where the parameters \(\delta \) and \(\sigma \) in (1.3) and (1.4) are required to satisfy \(0<\delta<\sigma <1\). The scalar \(\beta _k\) in (1.2) is the so-called conjugate parameter, and different choices for \(\beta _k\) lead to different CGMs. The classical CGMs include the Hestenes–Stiefel (HS,1952) CGM [11], the Fletcher–Reeves (FR, 1964) CGM [7], the Polak–Ribière–Polyak (PRP,1969) CGM [18, 19] and the Dai–Yuan (DY,1999) CGM [3], and their parameters \(\beta _k\) are specified as follows:

Here \(y_{k-1}=g_k-g_{k-1}\) and \(\Vert \cdot \Vert \) stands for the Euclidean norm.

In the past few decades, based on the methods above, many CGMs with excellent theoretical properties and numerical results are proposed, see [9, 10, 12,13,14, 20, 24,25,26] for details.

Based on the PRP CGM, Huang et al. [12] proposed a new CGM, where \(\beta _k\) is written as

Zhou and Lu [25] modified HS formula as follows:

Recently, Jiang and Jian [13] improved the FR and DY CGMs, and proposed three CGMs with good convergence and high efficiency, in which the conjugate parameters \(\beta _k^{\mathrm{IFR}}\), \(\beta _k^{\mathrm{IDY}}\) and \(\beta _k^{\mathrm{IFD}}\) are computed by

where \(\tau _k=\frac{|g_{k}^{\mathrm {T}} d_{k-1}|}{-g_{k-1}^{\mathrm {T}} d_{k-1}}\) is the disturbance parameter.

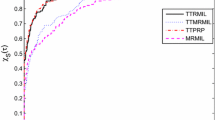

Based on the the numerical results in Ref. [13], we further test the \(\tau _k \cdot \mathrm{FR}, \ (1- \tau _k) \cdot \mathrm{FR}, \ \tau _k \cdot \mathrm{PRP}, \ (1- \tau _k) \cdot \mathrm{PRP}, \ \tau _k \cdot \mathrm{HS}, \ (1- \tau _k) \cdot \mathrm{HS}, \ \tau _k \cdot \mathrm{DY},\) and \((1- \tau _k) \cdot \mathrm{DY}\) CGMs, where the test problems randomly are taken from Refs. [1, 2, 17]. And all the steplengthes are yielded by the strong Wolfe line search with \(\sigma =0.1, \ \delta =0.01\). The numerical results are demonstrated by the following performance charts, and see Figs. 1 and 2:

We notice that there is an interesting phenomenon, that is the methods perform well when the disturbance parameter is yielded by \({\tau _k}\) if the \(\beta _k\) with the denominator \(d_{k-1}^{\mathrm{T}}(g_k-g_{k-1})\) and the disturbance parameter is obtained from \(1-{\tau _k}\) while the denominator of \(\beta _k\) is \(\Vert g_{k-1}\Vert ^{2}\). Along these ideas and inspired by [12, 13, 25], we propose two improved conjugate parameters as follows:

In particular, by the second inequality of the strong Wolfe line search (1.4), we know that \(0 \le {\tau _k= \frac{|g_k^{\mathrm{T}}d_{k-1}|}{-g_{k-1}^{\mathrm{T}}d_{k-1}}} \le \sigma \) if \(g_{k-1}^{\mathrm{T}} d_{k-1}<0\).

This paper is organized as follows. In Sect. 2, the algorithms and relevant properties are demonstrated. The preliminary numerical results are reported in Sect. 3. The conclusion is presented in the final section.

2 Algorithms and Relevant Properties

In this section, based on the conjugate parameters (1.7) and (1.8), we first introduce corresponding algorithms with the strong Wolfe line search (1.4) as follows:

Step 0. Choose constants \(\epsilon >0\), \(0<\delta<\sigma <1\), and initial point \({ x_0}\in R^n\). Let \({ d_0=-g_0}\), \({ k:=0}\).

Step 1. If \(\Vert g_k\Vert \le \epsilon \), then stop. Otherwise, go to Step 2.

Step 2. Determine a step-length \(\alpha _k\) by the strong Wolfe line search (1.4).

Step 3. Let \(x_{k+1}:=x_k+\alpha _kd_k\), compute \(g_{k+1}=g(x_{k+1})\) and parameter \(\beta _{k+1}\) by (1.7) or (1.8), i.e., \(\beta _{k+1}=\beta _{k+1}^{\mathrm {IMPRP}}\) or \(\beta _{k+1}^{\mathrm {IMHS}}\).

Step 4. Compute \(d_{k+1}=-g_{k+1}+\beta _{k+1}d_k\). Let \(k:=k+1\), and go back to Step 1.

For the sake of convenience, we call these iteration methods which are decided by the algorithms with \(\beta _{k+1}^{\mathrm {IMPRP}}\) and \(\beta _{k+1}^{\mathrm {IMHS}}\) the IMPRP CGM and the IMHS CGM, respectively.

The following lemmas state that the search directions yielded by the above two CGMs are all sufficient descent, and possess some properties.

Lemma 2.1

Let \(d_{k}\) be yielded by the IMPRP CGM. If \(0<\sigma <\frac{1}{2}\), then the relation

holds. In other words, the direction \(d_k\) satisfies the sufficient descent condition, that is

Moreover, the relation \(0 \le \beta _k^{\mathrm {IMPRP}} \le \beta _k^{\mathrm {FR}}\) holds.

Proof

Observe that \(g_0^{\mathrm {T}}d_0=-\Vert g_0\Vert ^2\) and the relation (2.1) holds for \(k={0}\). Now, assume that (2.1) holds for \(k-1\) (\(k>{1}\)), and we will prove that (2.1) holds for k. Firstly, it follows from \(g_{k-1}^{\mathrm {T}}d_{k-1}<0\) and the second inequality of (1.4) that

and we further have \(0 \le {\tau _k} \le \sigma .\) This together with (1.7) implies that

where \(\cos \xi _k = \frac{g_k^Tg_{k-1}}{\Vert g_k\Vert \Vert g_{k-1}\Vert }\).

To proceed, based on the inequality (2.3), and with the help of [8, Lemma 3.1], the relations (2.1) and (2.2) hold, and the proof is completed. \(\square \)

Lemma 2.2

Let \(d_{k}\) be yielded by the IMHS CGM. If \(0<\sigma <1\), then the direction \(d_{k}\) satisfies the sufficient descent condition

and the relation

holds.

Proof

Using mathematical induction, firstly, it is easy to see that (2.4) holds for \(k={0}\) since \({d_{0}}={-g_{0}}.\) And we assume that (2.4) holds for \(k-1\) (\(k>{1}\)) which implies that \(g_{k-1}^{\mathrm {T}}d_{k-1}<0\). This together with the second inequality of (1.4) indicates that \(0 \le {\tau _k} \le \sigma \) and

which further gives

where \(\cos \phi _k = \frac{g_k^{\mathrm{T}}g_{k-1}}{\Vert g_k\Vert \Vert g_{k-1}\Vert }\). Multiplying the both sides (1.2) by \(g_{k}^{\mathrm {T}}\), and then from (2.7), we have

Finally, it follows from (2.7), (2.8) and \(g_{k-1}^{\mathrm {T}}d_{k-1}<0\) that relations (2.4), (2.5) hold, which ends the proof. \(\square \)

By [8, Lemma 3.1] for the IMPRP CGM and [4, Theorem 2.3] for the IMHS CGM, we obtain the global convergence results for the IMPRP and IMHS CGMs obviously, respectively, and omit their for brevity.

3 Numerical Results

In this section, we report some preliminary numerical performance of our methods to illustrate their effectiveness and potentiality. The numerical experiments are shown in the following two subsections.

3.1 Experimental Set-up

To demonstrate more clearly the effectiveness of our methods, we have run two groups preliminary numerical experiments for the IMPRP CGM and the IMHS CGM, respectively.

-

In Group A, we compare the IMPRP CGM with the MPRP CGM [12] and the KD CGM [14]. There are 102 problems are tested, in which problems 1–52 (from bard to vardim) come from CUTE library [2], and others come from [1, 17].

-

In Group B, the IMHS CGM is compared with the MHS CGM [25] and the NHS CGM [24]. Specifically, the test problems 1–62 (from bard to woods) are taken from the CUTE library [2], and the others (63–107) are taken from [1, 17].

In the testing process, the dimensions of the test problems range from 2 to 1,000,000. The initial iterate points for all test problems are same as that given in [1, 2, 17]. All codes were written in Matlab R2014a, and run on a DELL laptop (Inter(R) Core(TM) i5-5200U CPU 2.20 GHz) with 4 GB RAM memory and Windows 10 operating system. For all methods, we adopt the strong Wolfe line search rule with \(\sigma =0.1, \ \delta =0.01\). We stop the iteration when the gradient values \(\Vert g_k\Vert \le 10^{-6}\). Also, when the number of iteration \(\mathrm{Itr}>2000\), we stop the iteration to indicate that the test problem is not valid, and denote it as “NaN”.

The comparison results are shown in Tables 1, 2, 3 and 4. Where “name” is the abbreviation of the test problem, and “n” is the dimension of the test problem. Moreover, denote the number of iteration, function evaluations, gradient evaluations, the computing time of CPU and gradient values the “\( \mathrm{Itr},\ \mathrm{NF},\ \mathrm{NG},\ \mathrm{Tcpu}\) and \(\Vert g_{*}\Vert \)”, respectively.

To show the test results clearly, we adopt the performance profiles introduced by Dolan and Moré [5] to illustrate and compare the performances on Tcpu and Itr, respectively. About the performance profile, see [5] for details. Generally speaking, the higher the curve \(\rho (\tau )\), the better the numerical profile of the corresponding method.

3.2 Numerical Testing Reports

We observe from Tables 1, 2 and Figs. 3, 4 that the IMPRP CGM solves about \(97\%\) of test problems in Group A, while the MPRP CGM and the KD CGM solve about \(85\%\) and \(89\%\) of this group, respectively. Furthermore, the IMPRP CGM is fastest since it solves about 40% of the test problems with the least Itr and Tcpu, respectively.

Tables 3, 4 and Figs. 5, 6 show that the IMHS CGM exhibits the best performance since it can solve almost \(42\%\) of test problems with the smallest Itr and Tcpu in Group B. Meanwhile, the MHS CGM and the NHS CGM are superior for solving \(25\%\) and \(30\%\) of test problems, respectively. Moreover, the IMHS CGM solves about 97% of the test problems successfully, while the MHS CGM and the NHS CGM solve about 86% and 90% of this group, respectively.

In summary, from the performance profiles in Figs. 3, 4, 5 and 6, the curves corresponding to the IMPRP CGM and the IMHS CGM are all at the top, respectively, which indicates that the proposed methods perform well, at least for these collections of experiments.

4 Conclusion

In this work, according to the interesting phenomenon in experiments, we have constructed two new conjugate parameters with the second inequality in the strong Wolfe line search. Based on general assumptions, all relevant methods satisfy the sufficient descent and global convergence. Finally, numerical experiments were done and reported, which show that the improved CGMs have good performance and application prospect.

References

Andrei, N.: An unconstrained optimization test functions collection. Adv. Model. Optim. 10(1), 147–161 (2008)

Bongartz, I., Conn, A.R., Gould, N., Toint, P.L.: CUTE: constrained and unconstrained testing environment. ACM Trans. Math. Softw. 21(1), 123–160 (1995)

Dai, Y.H., Yuan, Y.X.: A nonlinear conjugate gradient method with a strong global convergence property. SIAM J. Optim. 10(1), 177–182 (1999)

Dai, Y.H., Yuan, Y.X.: An efficient hybrid conjugate gradient method for unconstrained optimization. Ann. Oper. Res. 103, 33–47 (2001)

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91(2), 201–213 (2002)

Esmaeili, H., Shabani, S., Kimiaei, M.: A new generalized shrinkage conjugate gradient method for sparse recovery. Calcolo 56(1), 1–38 (2019)

Fletcher, R., Reeves, C.M.: Function minimization by conjugate gradients. Comput. J. 7(2), 149–154 (1964)

Glibert, J.C., Nocedal, J.: Global covergence properties of conjugate gradient method for optimization. SIAM J. Optim. 2(1), 21–42 (1992)

Hager, W.W., Zhang, H.C.: A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM J. Optim. 16(1), 170–192 (2005)

Hager, W.W., Zhang, H.C.: A survey of nonlinear conjugate gradient methods. Pac. J. Optim. 2(1), 35–58 (2006)

Hestenes, M.R., Stiefel, E.: Methods of conjugate gradients for solving linear systems. J. Res. Natl. Bur. Stand. 49(6), 409 (1952)

Huang, H., Li, Y., Wei, Z.: Global convergence of a modified PRP conjugate gradient method. J. Math. Res. Expos. 30(1), 141–148 (2010)

Jiang, X., Jian, J.: Improved Fletcher–Reeves and Dai–Yuan conjugate gradient methods with the strong Wolfe line search. J. Comput. Appl. Math. 348, 525–534 (2019)

Kou, C., Dai, Y.: A modified self-scaling memoryless Broyden–Fletcher–Goldfarb–Shanno method for unconstrained optimization. J. Optim. Theory Appl. 165(1), 209–224 (2015)

Li, H.: Solution of inverse blackbody radiation problem with conjugate gradient method. IEEE Trans. Antennas Propag. 53(5), 1840–1842 (2005)

Liu, Z., Zhu, S., Ge, Y., Shan, F., Zeng, L., Liu, W.: Geometry optimization of two-stage thermoelectric generators using simplified conjugate-gradient method. Appl. Energy 190, 540–552 (2017)

Moré, J.J., Garbow, B.S., Hillstrom, K.E.: Testing unconstrained optimization software. ACM Trans. Math. Softw. 7(1), 17–41 (1981)

Polak, E., Ribière, G.: Note sur la convergence de méthodes de directions conjuées. Rev. Fr. Inform. Rech. Oper. 3(16), 35–43 (1969)

Polyak, B...T.: The conjugate gradient method in extremal problems. USSR Comput. Math. Math. Phys. 9(4), 94–112 (1969)

Tang, C., Li, S., Cui, Z.: Least-squares-based three-term conjugate gradient methods. J. Inequal. Appl. (2020). https://doi.org/10.1186/s13660-020-2301-6

Yang, K., Jiang, G., Qu, Q., Peng, H., Gao, X.: A new modified conjugate gradient method to identify thermal conductivity of transient non-homogeneous problems based on radial integration boundary element method. Int. J. Heat Mass Transf. 133, 669–676 (2019)

Yang, L.F., Luo, J.Y., Xu, Y., Zhang, Z.R., Dong, Z.Y.: A distributed dual consensus ADMM based on partition for DC-DOPF with carbon emission trading. IEEE Trans. Ind. Inform. 16(3), 1858–1872 (2020)

Yang, L.F., Zhang, C., Jian, J.B., Meng, K., Xu, Y., Dong, Z.Y.: A novel projected two-binary-variable formulation for unit commitment in power systems. Appl. Energy 187, 732–745 (2017)

Zhang, L.: An improved Wei–Yao–Liu nonlinear conjugate gradient method for optimization computation. J. Comput. Appl. Math. 215(6), 2269–2274 (2009)

Zhou, X., Lu, L.: The global convergence of modified DY conjugate gradient methods under the Wolfe line search. J. Chongqing Normal Univ. (Nat. Sci. Ed.) 33(3), 6–10 (2016)

Zhu, Z., Zhang, D., Wang, S.: Two modified DY conjugate gradient methods for unconstrained optimization problems. Appl. Math. Comput. 373(15), 125004 (2020)

Acknowledgements

This work was supported by the National Natural Science Foundation of China (11771383), the Natural Science Foundation of Guangxi Province (2020GXNSFDA238017, 2018GXNSFFA281007) and Research Project of Guangxi University for Nationalities (2018KJQ-D02).

Author information

Authors and Affiliations

Contributions

All authors read and approved the final manuscript. JJ mainly contributed to the algorithm design; PL and XJ mainly contributed to the convergence analysis, numerical results and drafted the manuscript; BH mainly contributed to the convergence analysis.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Communicated by Rohollah Yousefpour.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Jian, J., Liu, P., Jiang, X. et al. Two Improved Nonlinear Conjugate Gradient Methods with the Strong Wolfe Line Search. Bull. Iran. Math. Soc. 48, 2297–2319 (2022). https://doi.org/10.1007/s41980-021-00647-y

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s41980-021-00647-y

Keywords

- Unconstrained optimization

- Conjugate gradient method

- Strong Wolfe line search

- Sufficient descent property

- Global convergence