Abstract

The inverse eigenvalue problem of quasi-tridiagonal matrices involves reconstruction of quasi-tridiagonal matrices with the given eigenvalues satisfying some properties. In particular, we first analyze the eigenvalue properties from two aspects. On this basis, we investigate the inverse eigenvalue problem of quasi-tridiagonal matrices from the theoretic issue on solvability and the practical issue on computability. Sufficient conditions of existence of solutions of the inverse eigenvalue problem of quasi-tridiagonal matrices concerning solvability are found, and algorithms concerning computability are given with the unitary matrix tool from which we construct matrices. Finally, examples are presented to illustrate the algorithms.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The real number field and the complex number field are denoted by \(\mathbb {R}\) and \(\mathbb {C}\), respectively. Let \(E^{(k)}_{i,j}\) be the \(k\times k\) matrix with 1 at its entry (i, j) and zeros elsewhere, \(i=1, 2, \ldots , k\) and \(j=1, 2, \ldots , k\). In this paper, we study the inverse eigenvalue problem of quasi-tridiagonal matrices, a class of matrices of this form

where \(a_k\in \mathbb {R}\), \(b_k\in \mathbb {C}-\mathbb {R}\), \(k=1, 2, \ldots , n\), \(\overline{b}_{k}\) is the complex conjugation of \(b_{k}\), and \(\overline{b}_{n}\) lie in any given entry (1, m) (the first row and mth column) of matrix J for \(3\le m\le n\). Especially, when \(m=n\), we have \(J=H\), where

The goal of this paper is to find the numbers \(a_{1},\dots ,a_n\) and \(b_{1},\dots ,b_{n}\) so that the wanted matrix J has exactly the given eigenvalues. Thus, there are inputs to the problem and the output is the matrix J. The characteristic polynomial of the \(n\times n\) matrix J is \(\chi _{n}\left( \lambda \right) =\mathrm{det}\left( \lambda {I_{n}}-J\right) \), where \(I_n\) is the \(n\times n\) identity matrix. Let \(\sigma \left( J\right) =\{\lambda _{1}, \ldots , \lambda _{n}\}\) be the spectrum of J. It is clear that J is Hermitian, so the eigenvalues \({\lambda _{1}}, \ldots , {\lambda _{n}}\) of J are real.

The inverse eigenvalue problems for different classes of matrices have attracted much attention [10, 13, 14, 16, 19]. Inverse eigenvalue problems arise in a remarkable variety of applications, including system and control theory, geophysics, molecular spectroscopy, particle physics, and structure analysis. Also today their place in mathematical physics is determined rather by the unexpected connection between inverse problems and nonlinear evolution equations which was discovered in 1967. If \(b_{k} \ne 0\) is real for \(k=1, \dots , n\) and \(b_{n}\) and \(\overline{b}_{n}\) are in (1, n) (the first row and the nth column) and (n, 1) entries of the above matrices, the class of matrices H of the form (1.2) is called periodic Jacobi matrices. The inverse eigenvalue problems arise in many areas such as science and engineering [8]. Due to its wide application, these problems deserved much attention of researchers [1, 6, 7, 15, 18]. Algorithms have been found to reconstruct matrices with described eigenvalues [1, 6, 15, 18]. In [17], four inverse eigenvalue problems for pseudo-Jacobi matrices have been considered. The necessary and sufficient conditions for the solvability of these problems have been shown.

If \(b_{n}=0\) in period Jacobi matrices, then the matrices are reduced to tridiagonal matrices named Jacobi matrices. The inverse eigenvalue problems for the family of this form have also been solved. Furthermore, a variety of algorithms of constructions of Jacobi matrices have been presented [2, 5, 9, 11, 12]. In addition, it is shown in [12] by Moerbeke that the eigenvalues of real Jacobi matrices are distinct real numbers.

Study on Jacobi matrix and periodic Jacobi matrix has been relatively mature; in recent years, the extended matrices based on the two classes of matrices have been studied. In 2001, the properties of complex Jacobi matrix were investigated in [13], and the Jacobi matrix was extended to the complex domain. In 2013, inverse spectral problems for pseudo-symmetric matrix whose form is similar to periodic Jacobi matrix are discussed in [18], and the periodic Jacobi matrix was extended to non-symmetric form. In 2013, Bebiano investigated a class of non-self-adjoint periodic of tridiagonal matrices some of whose elements are in the plural [15].

In this article, the inverse eigenvalue problem of the matrix J with the non-diagonal elements is solved. The following are relevant to quasi-tridiagonal matrices.

Principle sub-matrices are the following form of matrices obtained by deleting the first row and the first column of J, denoting as

In the following, the characteristic polynomial of G is denoted by \(\psi _{n-1}\left( \lambda \right) =\mathrm{det}\left( \lambda {I_{n-1}}-G\right) \), and the spectrum of G is \(\sigma \left( G\right) =\{{\mu _{1}}, \ldots , {\mu _{n-1}}\}\). In addition, we can also use the product of non-diagonal elements of J. \(\mathrm{Re}(\beta )\) is the real part of complex number \(\beta =(-1)^n\prod _{k=1}^nb_k\). The other forms of matrices will be involved, respectively, defining them as

This paper is organized as follows. The eigenvalue properties, as well as the location of J and G, are discussed in Sect. 2. We get the conclusion that the eigenvalues of J and G satisfy interlacing property. In Sect. 3, the inverse eigenvalue problems of a family of quasi-tridiagonal matrices are explored. In this part, the construction of bordered diagonal matrices with given eigenvalues is solved first, and the sufficient conditions of solvability to the inverse eigenvalue problem of quasi-tridiagonal matrices are presented. The reconstruction of J is analyzed. Algorithms to describe the construction of J are given in Sect. 4. In Sect. 5, numerical examples are given to illustrate the algorithm and the results demonstrate that the algorithms are practical.

2 Eigenvalue Problem of Quasi-tridiagonal Matrices

Lemma 2.1

[18] The eigenvalues of G are strictly distinct real numbers, that is, \({\mu _{1}}, \ldots , {\mu _{n-1}}\) are real and simple.

The following lemma presents the sufficient and necessary conditions for J and G having common eigenvalues.

Lemma 2.2

Let \({\mu _{1}}, \ldots , {\mu _{n-1}}\) be distinct eigenvalues of G, and \(u_{k}^\mathrm{T}=[{u_{k1}}, \ldots ,\)\( {u_{k,n-1}}]^\mathrm{T} \in \mathbb {C}^{n-1}\) is the unit eigenvector of G corresponding to \(\mu _{k},\) where \(u_{k1}\) and \(u_{k,m-1}\) are the first and \((m-1)\)th entries of \(u_{k},\) respectively. \(\mu _{k}\) is one eigenvalue of J if and only if \(|b_{1}{u_{k,1}}+\overline{b}_{n}{u_{k,m-1}}| = 0\).

Proof

As a bridge, we first define a bordered diagonal matrix as

where the kth row of U is taken as \({u_{k}^*}\) (\(^*\) represents conjugate transpose), it follows that \(U^*=\left[ u_{1}, \ldots , u_{n-1}\right] \). It is clear that U is unitary matrix, that is, \(U^*U=I_{n-1}\) (the \((n-1)\times (n-1)\) identity matrix).

Let \(e_k\) be the \((n-1)\)-dimensional row vector with 1 at its kth element and zeros elsewhere for \(k=1, \dots , n-1\). The matrix J can be expressed as

where \(h=b_1e_{1}+ \overline{b}_{n}e_{m-1}\). In addition, let

where \(M=\mathrm{diag}\left( \mu _{1}, \ldots , \mu _{n-1}\right) \). Clearly, M is unitarily similar to tridiagonal matrix G, that is, the following formula holds:

It is easy to get

where \(\alpha _k={\left| b_{1}{u_{k,1}}+\overline{b}_{n}{u_{k,m-1}}\right| }^2\). It is equivalent to

Then

Substituting \(\mu _{k}\) into (2.5), the following can be obtained

Differentiating both sides of the polynomial \(\psi _{n-1}\left( \lambda \right) \), we see

As the eigenvalues of G are simple, \(\psi '_{n-1}\left( \lambda \right) \) must not be 0.

It can easily be seen that

According to (2.6), we obtain

Therefore, \(\mu _{k}\) is an eigenvalue of J, that is, \(\chi _{n}\left( \mu _{k}\right) =0\). Considering (2.8), we infer that \(\chi _{n}\left( \mu _{k}\right) =0\) if and only if \(\alpha _{k}=0\). This completes the proof. \(\square \)

Suppose there are no common eigenvalue between them, then the next lemma presents the condition which the eigenvalues of quasi-tridiagonal matrices satisfy.

Lemma 2.3

If the formula \(|b_{1}{u_{k,1}}+\overline{b}_{n}{u_{k,m-1}}| \ne 0\) holds for \(k=1, \ldots , n-1,\) then the eigenvalues of J are the zeros of the following function :

Proof

The lemma follows immediately from Lemma 2.2 and formula (2.5). \(\square \)

Based on Lemmas 2.2 and 2.3, the position of the eigenvalues between J and G in the condition that there are no common eigenvalue is presented in the following theorem.

Theorem 2.4

Let \(\lambda _{1}< \cdots <\lambda _{n}\) and \(\mu _{1}<\cdots <\mu _{n-1}\) be the eigenvalues of J and G, respectively. If each \(\lambda _{j}\) is not the eigenvalue of G, then the eigenvalues of J are strictly distinct real numbers, and the eigenvalues \(\lambda _{j}\) and \(\mu _{k}\) satisfy the inequality as follows :

Proof

By Lemma 2.3, we know that the eigenvalues of J are the roots of the equation

Let \(g(\lambda )=\lambda -a_{1}\) and \(q(\lambda )=\sum _{k=1}^{n-1}{\frac{{\alpha _{k}}}{{\lambda -\mu _{k}}}}\). It is obvious to prove that \(g(\lambda )\) is monotonically increasing in the entire interval.

Since \({q'(\lambda )}<0\) with \(\lambda \in {\left( \mu _{k}, \mu _{k+1}\right) \cup \left( -\infty ,\mu _{1}\right) \cup \left( \mu _{n-1}, +\infty \right) }\) for \(k=1, \ldots , n-2\), then \(q(\lambda )\) is strictly monotonically decreasing in each interval and \(\lim _{\lambda \rightarrow +\infty }h(\lambda )=\lim _{\lambda \rightarrow -\infty }h(\lambda )=0\).

Computing \({q''(\lambda )}\), we also know the sign of which changes only once in each interval. It follows that there exist odd roots in each interval. From what have been discussed above, there exists one and only one real root in each interval, that is, the inequality holds. \(\square \)

The condition that the eigenvalues of J satisfy is given in the following if some of the eigenvalues of G are also the eigenvalues of J.

Lemma 2.5

Let S be a subset containing s elements of \(\{1, \ldots , n-1\},\) such that \(|b_{1}{u_{k,1}}+\overline{b}_{n}{u_{k,m-1}}|=0\) holds for \(k \in S,\) and \(|b_{1}{u_{k,1}}+\overline{b}_{n}{u_{k,m-1}}| \ne 0\) holds for \(k \notin S\). Then \(\mu _{k}\) is an eigenvalue of J for \(k \in S \) and the rest eigenvalues of J are the \(n-s\) roots of \(f(\lambda )\).

Proof

It is known that \(\chi _{n}\left( \lambda \right) \) has n roots. Based on Lemma 2.2, it is obvious that \(\chi _{n}\left( \mu _{k}\right) =0\) for \(k \in S\) and the rest eigenvalues of J satisfy \(\prod _{j=1}^{n-1}\left( \lambda -\mu _{j}\right) \ne 0\). Therefore, the rest \(n-s\) eigenvalues of J are the zeros of the polynomial \(f\left( \lambda \right) \), obtained from the structure of \(\chi _{n}\left( \lambda \right) \). The proof of this lemma is now complete. \(\square \)

Based on the previous theory, we can obtain the general properties of eigenvalues of quasi-tridiagonal matrices.

Theorem 2.6

Assume that \(\lambda _{1}\le \cdots \le \lambda _{n}\) and \(\mu _{1}<\cdots <\mu _{n-1}\) are the eigenvalues of J and G. Let S be a subset containing s elements of the set \(\{1, \ldots , n-1\},\) such that \(\mu _{k}\) is an eigenvalue of J for \(k \in S \). Then the multiplicity of the eigenvalues of J is at most 2, the multiple roots are also eigenvalues of G, and the eigenvalues of J and G satisfy the following inequality :

Proof

Let \(S=\{s_{1}, \ldots , s_{s}\}\)\((s_{1}<\cdots <s_{s})\), \(N-S=\{k_{1}, \ldots , k_{n-s}\}\)\((k_{1}<\cdots <k_{n-s})\), where \(N=\{1, \ldots , n-1\}\). Without loss of generality, we may assume \(\mu _{s_{i}}=\lambda _{s_{i}}\), then by Lemma 2.5, the rest \(n-s\) eigenvalues of J satisfy the equation \(f(\lambda )=0\).

We can also have a conclusion that there exists one and only one eigenvalue of J in each interval \((\mu _{k_{i}}, \mu _{k_{i+1}}) \cup (-\infty , \mu _{k_{1}}) \cup (\mu _{k_{n-s}}, +\infty )\) for \(k_{i} \in {N-S}\) from Theorem 2.4.

Assume one eigenvalue \(\lambda _{k_{j}}\) satisfies \(\lambda _{k_{j}}=\lambda _{s_{i}}=\mu _{s_{i}}\), since \(\mu _{i}\) is distinct and simple, then we can come to a conclusion that the multiplicity of the eigenvalues of J is at most 2, and the multiple eigenvalues are also the eigenvalues of G. \(\square \)

To sum up the above discussion, we can characterize the eigenvalue properties of J and the location of J and G.

3 The Inverse Eigenvalue Problems of J

In this section, we first define two bordered diagonal matrices A and \(A^{-}\) as a bridge to proceed in the proof of the next theorem in a similar manner of Lemma 2.2 in Sect. 2, constructed as follows:

Assume

where \( M=\mathrm{diag}(\mu _{1}, \ldots , \mu _{n-1})=U^*{G}U \). Denote vectors c and \(c^-\) as \(c=[c_{1}, \ldots , c_{n-1}]^\mathrm{T}\) and \(c^-=[c^-_{1}, \ldots , c^-_{n-1}]^\mathrm{T}\), respectively.

From the construction above, the diagonal matrix M and the tridiagonal matrix G have the same eigenvalues, the matrices A and J also have the same eigenvalues, so do the matrices \(A^{-}\) and \(J^{-}\). It is easy to get \(a_{1}=\mathrm{tr}\left( A\right) -\mathrm{tr}\left( M\right) =\sum _{m=1}^{n}{\lambda _{m}}-\sum _{k=1}^{n-1}{\mu _{k}}\).

We can also know the matrices A and \(A^{-}\) are both bordered diagonal matrices.

In the above process, if the eigenvalues of J are given, and the eigenvalues of G are selected, then \(a_{1}\) and M can be computed. Therefore, if we want to construct the bordered diagonal matrices A and \(A^{-}\), it is sufficient to compute the boundary elements of them, that is, to compute the vector c and vector \(c^{-}\), respectively.

The following theorem provides formulas to compute the boundary elements of the bordered diagonal matrices A and \(A^{-}\) in the case of \(J=H\).

Theorem 3.1

For H, assume that \(\lambda _{i}\) and \(\mu _{k}\) are distinct real numbers and satisfy the inequality \(\lambda _{1} \le \mu _{1} \le \lambda _{2} \le \mu _{2} \le \cdots \le \mu _{n-1} \le \lambda _{n}\). Then the entries \(c_{k}\) and \(c^-_{k},\)\((k=1, \ldots , n-1)\) can be represented with \(\lambda _{i},\)\(\mu _{k}\) and \(\mathrm{Re}{\beta },\) where the formulas are as follows :

Proof

Computing the characteristic polynomial of A, we have

Substituting \(\lambda =\mu _{1}, \ldots , \mu _{n-1}\) into formula (3.3), we get \(n-1\) equations of \(\left| {c_{k}}\right| \). Solving the \(n-1\) equations, we have

Let \(p(\lambda )=\mathrm{det}(\lambda {I_{n}}-{\hat{H}})\) and \(r(\lambda )=\mathrm{det}(\lambda {I_{n-2}}-L)\), then we get

Doing subtraction with the above two equations, we have

Therefore,

In addition,

substituting \(\mu _{k}\) into formula (3.5), we have

Since

combining formula (3.6) with formula (3.5), we have

This completes the proof of Theorem 3.1. \(\square \)

Based on Theorem 3.1, the existence of solutions is shown in the following.

Theorem 3.2

Let \(\left\{ \lambda _{k}\right\} ,\left( k=1, \ldots , n\right) \) and \(\left\{ \mu _{k}\right\} , \left( k=1, \ldots , n-1\right) \) be distinct real numbers, and satisfy the inequality \(\lambda _{k} \le \mu _{k} \le \lambda _{k+1}, k=1, \ldots , n-1.\) If the real part \(\mathrm{Re}\left( \beta \right) \) of \(\beta \) satisfies the inequality \(| \mathrm{Re}{\beta }| \le r,\) denoting \(r=\mathrm{max}_{1 \le k \le n-1} (r_{k}),\) where \(r_{k}=\left( -1\right) ^{n-k-1}\frac{{1}}{{4}}\prod _{j=1}^{n}\left( \mu _{k}-\lambda _{j}\right) \). Then the solutions of formulas (3.4) and (3.7) exist. It is equivalent to the fact that the solutions exist.

Proof

On the one hand, due to the condition \(\lambda _{k} \le \mu _{k} \le \lambda _{k+1}\), for \(k=1, \ldots , n-1\), yields the signs of \(\prod _{j=1}^{n}(\mu _{k}-\lambda _{j})\) and \( \prod _{\begin{array}{c} j=1\\ j\ne k \end{array}}^{n-1}(\mu _{k}-\mu _{j})\) are opposite. Therefore,

The above inequality ensures the existence of solutions of formula (3.4). On the other hand, if we want to get the formula \({\left| {c_{i}}^{-}\right| }^2 \ge 0\), then for \(i=1, \ldots , n-1\), the following \(n-1\) formulas must be guaranteed

Since

the above formula is equivalent to

that is,

It is equivalent to

Solving the inequality, we have

for \(i=1, \ldots , n-1\).

Simplifying formula (3.8) gives a simple inequality

To sum up, if formula (3.9) holds, then the following formula holds:

The above ensures the existence of solutions of formula (3.7). The proof is completed. \(\square \)

The theorems above provide the sufficient conditions of existence of solutions.

Theorem 3.3

Defining vectors c and \(c^{-}\) as above, assume that \(\overline{b}_{n}\) is in the entry (1, m) of matrix J, the formulas \({\overline{b}_{1}}u_{1}+b_nu_{m-1}=c\)\((3\le m\le n),\)\(2{b_{n}}u_{n-1}=c-c^{-}(m=n)\) and \({\overline{b}_{1}}u_{1}=c+c^{-}(m=n)\) hold.

Proof

Noting that \(\Vert u_1\Vert =1\) and \(\Vert u_{m-1}\Vert =1\). From \(h^*U^*=c\), we have

so

When \(m=n\), from \(h=c^*U^*\) and \(h^-={c^-}^*{U}^*\), we have

that is,

Therefore,

The proof is completed. \(\square \)

4 Algorithm for the Inverse Eigenvalue Problem of Quasi-tridiagonal Matrices

4.1 Algorithm for H

The algorithm combining the previous theoretic part with Lanczos algorithm for the construction of quasi-tridiagonal matrices is presented as follows:

-

(1)

Giving \(\lambda _k\), \(k=1,\dots ,n\), take \(\mu _k=\frac{1}{2}(\lambda _k+\lambda _{k+1})\), \(k=1,\dots ,n-1\). According to formulas (3.4) and (3.7), we can compute \(\left| c_{k}\right| \) and \(\left| {c_{k}}^{-}\right| \). Let \(c_{k}=\left| c_{k}\right| \mathrm{e}^{{\mathrm{i}}\frac{{\pi }}{{4}}}\), \({c_{k}}^{-}=\left| {c_{k}}^{-}\right| \mathrm{e}^{{\mathrm{i}}\frac{{\pi }}{{4}}}\), then we get the complex vectors c and \(c^{-}\).

-

(2)

From formula (3.11), we can compute \(\left| \overline{b_{1}}\right| \), noting \(\left| u_{1}\right| =1\). Let \(b_{1}=\left| \overline{b_{1}}\right| \mathrm{e}^{\mathrm{i}\frac{\pi }{4}}\), then we get the complex number \(\overline{b_{1}}\), then we can compute the eigenvector \(u_{1}\). Similarly, we can also compute \(\left| b_{n}\right| \). Taking \(b_{n}=\left| b_{n}\right| \mathrm{e}^{\mathrm{i}\frac{\pi }{4}}\), we can have the element \(b_{n}\), then we can compute the vector \(u_{n-1}\).

-

(3)

It is well known that \(G=UMU^{*}\) is equal to \({U^{*}G=MU^{*}}\). For simplicity, we denote the sub-matrix G as the following form:

$$\begin{aligned} G= \sum _{k = 1}^{n-1}t_kE^{(n-1)}_{k,k}+\sum _{k = 1}^{n-2}s_kE^{(n-1)}_{k,k+1}+\sum _{k = 1}^{n-2} \overline{s}_kE^{(n-1)}_{k+1,k}. \end{aligned}$$We can compute the vectors \(u_{2}, \ldots , u_{n-1}\) and the tridiagonal matrix G via Lanczos algorithm with the vector \(u_{1}\) and the diagonal matrix \(M=\mathrm{diag}\left( \mu _{1}, \ldots , \mu _{n-1}\right) \), proceeding as follows:

-

Step 0.

\(k=1\).

-

Step 1.

\(t_{k}={u^*_{k}}Mu_{k} \in {\mathbb R}.\)

-

Step 2.

\(z_{k+1}=Mu_{k}-u_{k}t_{k}\) for \(k=1\),

\(z_{k+1}=Mu_{k}-u_{k}t_{k}-u_{k-1}s_{k-1}\) for \(k=2, \ldots , n-2\).

-

Step 3.

\({\overline{s}_{k}}=\left\| z_{k+1}\right\| \mathrm{e}^{\mathrm{i}\frac{{\pi }}{{4}}}\).

-

Step 4.

\(u_{k+1}=z_{k+1}\left( {\overline{s}_{k}}\right) ^{-1}\).

Let \(k=k+1\) return to step 1.

Calculating the above steps, we get \(a_{2}, \ldots , a_{n-1}\)\(b_{1}, \ldots , b_{n-2}, b_{n}\).

-

Step 0.

-

(4)

Select \({\left( -1\right) ^n}(b_{1}\cdots b_{n-2}b_{n})^{-1}\mathrm{Re}\left( \beta \right) \) as \( b_{n-1}\).

-

(5)

Obviously, \(a_{1}=\mathrm{tr}\left( A\right) -\mathrm{tr}\left( M\right) =\sum _{k=1}^{n}\lambda _{k}-\sum _{k=1}^{n-1}\mu _{k}\).

4.2 Algorithm for J

Give m and \(\lambda _k\), \(k=1,\dots ,n\), and take \(\mu _k=\frac{1}{2}(\lambda _k+\lambda _{k+1})\), \(k=1,\dots ,n-1\). According to formula (3.4), we can compute \(\left| c_{k}\right| \). Let \(c_{k}=\left| c_{k}\right| \mathrm{e}^{{\mathrm{i}}\frac{{\pi }}{{4}}}\), then we get the complex vector c.

Take any \((n-1)\)-dimensional complex column vector \(\tilde{u}_1\). For \(j=1\), let \(u_1^{(j)}=\tilde{u}_1\Vert \tilde{u}_1\Vert ^{-1}\).

-

Step 0.

\({b_{1}^{(j)}}=\overline{(u_1^{(j)})^*c}\), \(k=1\);

-

Step 1.

\(t_{k}^{(j)}={(u^{(j)}_{k})^*}Mu_{k}^{(j)} \in {\mathbb R}\);

-

Step 2.

\(z_{k+1}^{(j)}=Mu_{k}^{(j)}-u_{k}^{(j)}t_{k}^{(j)}\) for \(k=1\),

\(z_{k+1}^{(j)}=Mu_{k}^{(j)}-u_{k}^{(j)}t_{k}^{(j)}-u_{k-1}^{(j)}s_{k-1}^{(j)}\) for \(k=2, \ldots , m-2\);

-

Step 3.

\(\overline{s_{k}^{(j)}}=\Vert z_{k+1}^{(j)}\Vert \mathrm{e}^{\mathrm{i}\frac{{\pi }}{{4}}}\);

-

Step 4.

\(u_{k+1}^{(j)}=z_{k+1}^{(j)}\left( {\overline{s_{k}^{(j)}}}\right) ^{-1}\);

-

Step 5.

\(b_n^{(j)}=(u_{m-1}^{(j)})^*c\);

-

Step 6.

\(|b_1^{(j+1)}|=\Vert c-b_n^{(j)}u_{m-1}^{(j)}\Vert \);

-

Step 7.

\(b_1^{(j+1)}=|b_1^{(j+1)}|\mathrm {e}^{\mathrm {i}\frac{\pi }{4}}\);

-

Step 8.

\(u_1^{(j+1)}=(c-b_n^{(j)}u_{m-1}^{(j)})\left( \overline{b_1^{(j+1)}}\right) ^{-1}\);

-

Step 9.

\(b_1^{(j+1)}=\overline{(u_1^{(j+1)})^*c}\) for regulating \(b_1^{(j+1)}\) above;

-

Step 10.

\(u_{m-1}^{(j)}=(c-\overline{b_1^{(j+1)}}u_{1}^{(j+1)})({b_n^{(j)}})^{-1}\) for regulating \(u_{m-1}^{(j)}\) above.

Let \(j=j+1\), return to step 0. When \(j=K\) makes \(\Vert u_{m-1}^{(K)}-u_{m-1}^{(K+1)}\Vert <\varepsilon \) given before start, we take \(b_1=b_1^{(K+1)}\), \(b_n=b_n^{(K)}\) and \(u_1=u_1^{(K+1)}\).

For \(k=2,\dots ,n-2\),

Let \(k=k+1\) return. Calculating the above steps, we get \(u_{1}, \dots , u_{n-1}\). Let \(J=(J_{i,j})=O_{n \times n}\). Take

We get J wanted. To avoid errors, we may take \(\frac{1}{2}(J+J^*)\) as J.

5 Numerical Experiments

Numerical experiments are conducted with Matlab to test the algorithms for illustrating our method.

Example 5.1

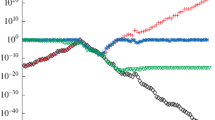

Giving a set of geometric sequence whose first item is 2, and common ratio is 2, \(\lambda _{1}=2\), \(\lambda _{2}=8\), \(\lambda _{3}=32\) and \(\lambda _{4}=128\), we reconstruct a \(4\times 4\) quasi-tridiagonal matrix H of the form (1.2) with the eigenvalues \(\lambda _1,\lambda _2,\lambda _3,\lambda _4\) by the algorithm for H.

Taking \(\mu _{1}=4\), \(\mu _{2}=16\), \(\mu _{3}=64\), first we have the boundary elements of the bordered diagonal matrix

The matrix H is reconstructed as follows:

Substituting the given eigenvalues into the characteristic polynomial of the constructed matrix H, we have Table 1.

Example 5.2

Giving a set of Fibonacci sequence from the second item, \(\lambda _{1}=1\), \(\lambda _{2}=2\), \(\lambda _{3}=\lambda _{1}+\lambda _{2}=3\), \(\lambda _{4}=\lambda _{2}+\lambda _{3}=5\) and \(\lambda _{5}=\lambda _{3}+\lambda _{4}=8\), we reconstruct three \(5\times 5\)J of the form (1.1) for \(m=3,4,5\) with the eigenvalues \(\lambda _1,\lambda _2,\lambda _3,\lambda _4,\lambda _5\) by the algorithm for J.

Taking \(\mu _{1}=\frac{1}{2}(\lambda _{1}+\lambda _{2})=1.5\), \(\mu _{2}=\frac{1}{2}(\lambda _{2}+\lambda _{3})=2.5\), \(\mu _{3}=\frac{1}{2}(\lambda _{3}+\lambda _{4})=4\) and \(\mu _{4}=\frac{1}{2}(\lambda _{4}+\lambda _{5})=6.5\) and choosing \(\tilde{u}_1=[1,2,3,4]^\mathrm{T}+{\mathrm{i}}[5,6,7,8]^\mathrm{T}\), when \(m=3\), we reconstruct J as

Substituting the given eigenvalues into the characteristic polynomial of the constructed matrix J, we have Table 2.

When \(m=4\), we reconstruct J as

Substituting the given eigenvalues into the characteristic polynomial of the constructed matrix J, we have Table 3.

When \(m=5\), we reconstruct J as

Substituting the given eigenvalues into the characteristic polynomial of the constructed matrix J, we have Table 4.

6 Conclusion

The spectral properties and the inverse eigenvalue properties for the class of quasi-tridiagonal matrices are given. The first conclusion is that the multiplicities of the eigenvalues of J are at most two, as well as satisfying the interlacing properties. Second, the sufficient conditions of the solution to the inverse eigenvalue problem of quasi-tridiagonal matrices are solved. Two algorithms are given. The advantage of the second algorithm is that we can give any initial vector. Computational results are shown in numerical examples, illustrating the feasibility of the algorithm.

References

Andrea, S.A., Berry, T.G.: Continued fractions and periodic Jacobi matrices. Linear Algebra Appl. 161, 117–134 (1992)

Arlinskii, Y., Tsekhanovskii, E.I.: Non-self-adjoint Jacobi matrices with a rank-one imaginary part. J. Funct. Anal. 241, 383–438 (2006)

Bebiano, N., Joao, D.P.: Inverse spectral problems for structured pseudo-symmetric matrices. Linear Algebra Appl. 438, 4062–4074 (2013)

Bebiano, N., Tyaglov, M.: Direct and inverse spectral problems for a class of non-self-adjoint periodic tridiagonal matrices. Linear Algebra Appl. 439, 3490–3504 (2013)

Beckerman, B.: Complex Jacobi matrices. J. Comput. Appl. Math. 127, 17–65 (2001)

Boley, D., Golub, G.H.: A modified method for reconstructing periodic Jacobi matrices. Math. Comput. 165, 143–150 (1984)

Ferguson, W.E.: The construction of Jacobi and periodic Jacobi matrices with prescribed spectra. Math. Comput. 35, 1203–1220 (1980)

Gladwell, G.M.L.: Inverse problems in vibration. Appl. Mech. Rev. 39, 1013–1018 (1986)

Hald, O.: Inverse eigenvalue problems for Jacobi matrices. Linear Algebra Appl. 14, 63–85 (1976)

Higgins, V., Johnson, C.: Inverse spectral problems for collections of leading principal submatrices of tridiagonal matrices. Linear Algebra Appl. 489, 104–122 (2015)

Hochstadt, H.: On the construction of a Jacobi matrix from spectral data. Linear Algebra Appl. 8, 435–446 (1974)

Moerbeke, P.V.: The spectrum of Jacobi matrices. Inventiones Mathematicae 37, 45–81 (1976)

Monfared, K.H., Shader, B.L.: The \(\lambda -\tau \) structured inverse eigenvalue problem. Linear Multilinear Algebra 63, 2275–2300 (2015)

Mourad, B., Abbas, H., Moslehian, M.S.: A note on the inverse spectral problem for symmetric doubly stochastic matrices. Linear Multilinear Algebra 63, 2537–2545 (2015)

Natalia, B., da Fonseca, C.M., da Providencia, J.: An inverse eigenvalue problem for periodic Jacobi matrices in Minkowski spaces. Linear Algebra Appl. 435, 2033–2045 (2011)

Soto, R.L., Julio, A.I., Salas, M.: Nonnegative persymmetric matrices with prescribed elementary divisors. Linear Algebra Appl. 483, 139–157 (2015)

Su, Q.F.: Inverse spectral problem for pseudo-Jacobi matrices with partial spectral data. J. Comput. Appl. Math. 297, 1–12 (2016)

Xu, Y.H., Jiang, E.X.: An inverse eigenvalue problem for periodic Jacobi matrices. Inverse Probl. 23, 165–181 (2007)

Zhang, Q.H., Xu, C.Q., Yang, S.J.: Symmetric stochastic inverse eigenvalue problem. J. Inequal. Appl. 180, 1–17 (2015)

Acknowledgements

The research was supported partially by National Natural Science Foundation of China (Grant nos. 10871056 and 10971150).

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Touraj Nikazad.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Wang, X.T., Jin, M.L. Inverse Eigenvalue Problem for Quasi-tridiagonal Matrices. Bull. Iran. Math. Soc. 45, 1697–1712 (2019). https://doi.org/10.1007/s41980-019-00223-5

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s41980-019-00223-5