Abstract

Pile settlement is the downward displacement of a foundational pile in construction, used to support structures by transferring loads to stable soil or rock layers beneath the surface. Accurately predicting pile settlement is critical for successful foundation design in civil engineering. In recent years, machine learning techniques have been utilized to predict pile settlement with improved accuracy compared to traditional analytical methods. This study has considered three different optimization algorithms combined with Support vector regression (SVR) to enhance its predictive capabilities further. These algorithms include Archimedes Optimization (AO), Marine Predators Algorithm (MPA), and Augmented Grey Wolf Optimizer (AGWO). The proposed SVR-based models are trained and tested using field data from several projects with varying soil conditions. The input parameters include pile diameter, length, soil properties, and load characteristics. In addition, various statistical measures are used to evaluate each model’s performance. In conclusion, the proposed SVR models, including optimization algorithms, particularly AGWO, MPA, and AO, provide a robust and accurate prediction of pile settlement. In comparing the models with each other, SVAO was able to obtain the most appropriate values compared to the other two models, with \({R}^{2}=0.997\) and \({\text{RMSE}}=0.201\). In general, these models can be used as a reliable tool in foundation design for predicting pile settlement and ensuring the safety and stability of structures.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In civil engineering, pile foundations are commonly employed to transmit the load of buildings to the underlying ground or rock. When piles are embedded in rock, they transfer loads to the earth either through end bearing, shaft resistance, or a mixture of the two. In scenarios where the soil overlay on top of the rock is weak and not deep, employing piles into the bedrock is perceived as one of the most fitting remedies (Carrubba 1997). The rock’s horizontal resistance can create substantial support despite minimal pile displacement in such situations. According to the results, it can be inferred that the current techniques for designing socketed piles in rock are comparably efficient (Carvalho et al. 2023; Lu et al. 2023).

Nonetheless, owing to the intricacy of the pile’s behavior, these techniques may not furnish precise forecasts (Ng et al. 2001). Consequently, it is essential to create a novel paradigm of soft computing able to accurately predict and precisely anticipate settlement of piles (SP), an essential aspect of pile design (Gutiérrez-Ch et al. 2021). Since SP can significantly affect the durability and security of structures, it should be considered a critical element in this process (Fleming 1992). Despite the various design techniques available, geotechnical engineers encounter difficulty in developing an innovative and feasible forecasting model that produces satisfactory results for SP prediction (Armaghani et al. 2020). Recent studies have demonstrated that pile performance prediction precision heavily relies on the choice of input variables. Therefore, the subsequent sentences will examine pertinent research studies to ascertain the appropriate input variables to anticipate SP (Masoumi et al. 2020).

As stated in reference (Randolph and Wroth 1978), multiple factors impact the SP. These factors include the magnitude of the load on the pile, the diameter and length of the pile, as well as the shear modulus (Le Tirant 1992). Another factor is the radial gap, where the stress from shear decreases (Nejad et al. 2009). Conversely, earlier research has suggested that the pile capacity and, consequently, the SP can be significantly influenced by the rocks’ unconfined compressive strength (UCS) in the surrounding area (Rowe and Armitage 1987). Utilizing common penetrating test data, a prediction algorithm on the basis of data mining was developed for predicting foundation settling. For training the model, about 1000 data points were employed at first from different sources. Later on, field measurements were included to enhance the model’s effectiveness further. Integrating fundamental geotechnical engineering principles is essential to underscore the applied value of models. Comprehending soil behavior, including stress–strain dynamics and shear strength, is paramount. Likewise, critical soil properties like permeability and compaction influence interactions with structures. By intertwining these principles within model elucidations, it becomes evident how models replicate authentic soil behavior and its responses to diverse forces. The incorporation of pertinent analytical methods, such as finite element analysis and analytical solutions, underscores model accuracy and relevance in predicting phenomena like pile settlement. This approach offers a holistic perspective on model alignment with established theories, augmenting their practical efficacy in geotechnical engineering (Akbarzadeh et al. 2023; Sedaghat et al. 2023).

Geotechnical engineering has become increasingly common in using artificial intelligence (AI) and machine learning (ML) in recent years. As described, an ML algorithm can generate an anticipated result once provided with experimental data (Alam et al. 2021). ML comprises several learning methods: supervised, unsupervised, semi-supervised, and reinforcement (Vapnik 1999a). Recently, researchers have integrated machine learning techniques into real-world geotechnical engineering problems. Some of the methods utilized consist of gene expression programming (GEP), artificial neural network (ANN), support vector machine (SVM), multilayer perceptron neural network (MLP), as well as the multigroup approach for data management to predict the desired output data (Vapnik et al. 1996; Smola and Schölkopf 2004).

Ge et al. (2023) used SVR coupled with two optimizer algorithms containing the Arithmetic Optimization Algorithm (AOA) and Grasshopper Optimization Algorithm (GOA). They found that the RMSE values for SVR-AOA and SVR-GOA were obtained as 0.550 and 0.592, respectively, and the MAE presented values of 0.525 and 0.561, respectively. The R-value of SVR-AOA shows a desired intensity of 0.994, which is 0.10% higher than that of SVR-GOA. Kumar and Robinson (2023) introduced the SVR combined with Henry’s Gas Solubility Optimization (HGSO) and Particle Swarm Optimization (PSO) to predict the settlement of the pile. The R2 of the model was obtained similarly at 0.99. In comparison, the RMSE of SVR-PSO appears more than double that of SVR-HGSO, 0.46 and 0.29 mm, respectively. Cesaro et al. (2023) proposed a new, simple analytical method to predict the load–deflection response at the pile tip. The reliability of the proposed method is verified against a database consisting of 50 in situ pile loading tests performed worldwide. Kumar and Samui (2020) proposed a least squares support vector machine (LSSVM), a group data processing method (GMDH), and a reliability analysis based on Gaussian process regression (GPR) of a group of piles resting on cohesive soil. The results showed that all models can be applied to analyze the settlement of a group of piles reliably.

In addition, other investigations have been studied on the effect of machine learning in geotechnical applications (Onyelowe et al. 2022; Gnananandarao et al. 2023a, b, c). Gnananandarao et al. (2020) presented the application of artificial neural networks (ANN) and multivariate regression analysis (MRA) to predict the bearing capacity and settlement of multi-sided foundations in sand. The R for multi-sided foundations ranged from 0.940 to 0.977 for the ANN model and from 0.827 to 0.934 for the regression analysis. Similarly, R for SRF prediction can be 0.913–0.985 for the ANN model and 0.739–0.932 for regression analysis. Onyelowe et al. (2021) predict erosion potential and generate a model equation using ANN learning techniques. The performance shows the model has an R2 more significant than 0.95 during training and testing between the predicted and measured values. Furthermore, the error metrics show significantly low values, indicating good performance.

This study aims to employ a supervised learning method for regression analysis to forecast SP. A regression analysis technique is implemented to ascertain the association between the independent variables or features and the dependent variable or output. Because of the capacity of AI methods to handle complex systems and process a large number of parameters, the SVR method is used to predict SP. Moreover, metaheuristic approaches are employed to enhance the accuracy, reduce errors in the model, and produce outputs similar to laboratory results. Various novel methods are going to be covered in the following part, namely the Archimedes Optimization algorithm (AOA), marine predators’ algorithm (MPA), and Augmented grey wolf optimizer (AGWO), which are among various optimizers.

The effectiveness of optimizing methods in enhancing the precision of the SVR estimating framework is highlighted through various statistical measures. The SVR model’s performance is evaluated using or not using the mentioned techniques, and the outcomes show the framework with optimizing methods outperforms the individual one. The paper generally suggests a unique strategy to forecast pile settlement by incorporating SVR and three unique optimization algorithms. The results suggest that SVR can accurately predict pile settlement, and implementing optimization algorithms improves the model’s performance. Therefore, geotechnical scientists are able to anticipate SP and optimize pile foundation planning with the help of this technology.

2 Dataset and methodology

2.1 Data gathering

The Klang Valley Mass Rapid Transit (KVMRT) is a recent endeavor that strives to ease traffic congestion in Kuala Lumpur, Malaysia’s vibrant capital. Site analysis revealed that to prevent station failure for the KVMRT project, several bored piles would be required to be installed. Figure 1 illustrates that the project site in Malaysia includes diverse rock foundations, such as granite, sandstone, limestone, and phyllite, thus necessitating the construction of several heaps (Hatheway 2009).

This research tends to look into a certain topic comprising 96 piles founded on granite rock. It was discovered that the San Trias formation is where the granite rock in the region originated. The study examined the geological characteristics of the subsurface materials at the pile locations. The study’s findings revealed that the subsoil profiles were primarily constituted of residual rocks. As per the collected data, the bedrock depth varied between 70 cm and more than 1400 m below the ground level. Further information regarding the field sampling and bore log details is discussed in the following sentences—

-

The observed rock masses ranged from moderately to extensively weathered.

-

According to the ISRM, the UCS values ranged from 25 to 68 (MPa), with the minimum and maximum values being observed.

-

The bore log data indicate that the soil is highly weathered up to 16.5 (MPa) depth, with the predominant soil composition being hard sandy mud. The minimum and the maximum N_SPT values observed were 4 and 167 blows per 300 (mm), respectively.

-

From depths of 7.5–27.0 (m), most of the subsoil materials have N_SPT values that exceed 50 blows per 300 (mm).

The initial step in developing a prediction model is to collect a dataset with robust dependent variables. Identifying and delineating the critical factors that substantially influence the model’s output is essential. Table 1 shows the statistical properties of model input and target values and the total data (96 samples) presented in Appendix 1.

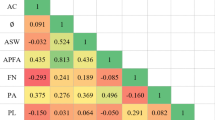

Figure 2 shows the correlation of input and output (Khatti and Grover 2023b, c, e). The correlation matrix observes correlations between input variables (Lp/D, Ls/Lr, N_SPT, UCS, Qu) and the output variable (SP). Lp/D and Ls/Lr exhibit strong positive correlations with SP (0.742 and 0.714, respectively), indicating increased static penetration as these ratios grow. Conversely, N_SPT and UCS demonstrate strong negative correlations with SP (− 0.727 and − 0.753, respectively), revealing reduced static penetration with higher standard penetration test blow count and unconfined compressive strength. Qu displays a moderate positive correlation with SP (0.662), suggesting elevated ultimate bearing capacity increases static penetration. Understanding these correlations aids in optimizing the given problem.

2.2 Support vector regression (SVR)

SVR stands out from other models because it can improve generalization performance and achieve an optimal global solution within a shorter timeframe (Vapnik et al. 1996; Gunn 1998).

2.2.1 Linear support vector regression

Assuming a training dataset of \(\{{y}_{i}, {x}_{i}, i=1, 2, 3,\dots n\}\), where \({y}_{i}\) represents the output vector, \({x}_{i}\) represents the input vector, and n represents the dataset size. The general linear regression form of SVR can be expressed as follows:

In the above equation, the dot product is represented by \(\left(x,k\right)\), where k is the weight vector, x represents the normalized test pattern, and b is the bias. As shown in Eq. (2), the empirical risk is calculated using an \(\varepsilon\)-insensitive loss function denoted by \({L}_{\varepsilon }({y}_{i},f\left({x}_{i},k\right))\):

The \(\varepsilon\)-insensitive loss function, denoted by \({L}_{\varepsilon }\left({y}_{i},f\left({x}_{i},k\right)\right)\), represents the tolerance error between the target output \({y}_{i}\) and the estimated output values \(f\left({x}_{i},k\right)\) during the optimization process. The training pattern, \({x}_{i}\), is also defined in this context. Minimizing the squared norm of the weight vector, \({\Vert k\Vert }^{2}\), can simplify the complexity of the SVR model when using the \(\varepsilon\)-insensitive loss function for linear regression problems. The deviation of the training data outside the ε-insensitive zone can be estimated using a non-negative slack variable (\({\varphi }_{i}^{*}{\varphi }_{i})\).

One must find the Lagrange function’s saddle point to solve the problem.

The KKT conditions can be applied to minimize the Lagrange function by performing partial differentiation of Eq. (5) concerning k, b, \({\varphi }_{i}^{*}\), and \({\varphi }_{i}\).

The parameters \(k\) in Eqs. (1) and (6) are connected. The dual optimization function can be expressed as follows by substituting Eq. (6) into the Lagrange function:

The Lagrange multipliers \({\alpha }_{i}^{*}\) and \({\alpha }_{i}\) are used to define the optimization problem (Luenberger and Ye 1984). Once Eq. (10) is solved under the constraints in Eq. (11), the ultimate linear regression function can be stated as:

2.2.2 Nonlinear support vector regression

Linear SVR may not be appropriate for complex real-world problems. By mapping the input data into a high-dimensional feature space where linear regression is applicable, nonlinear SVR can be obtained. A nonlinear function is used to transform the input training pattern, \({x}_{i}\), into the feature space \(\tau ({x}_{i})\). Consequently, the formulation of the nonlinear support vector regression takes shape, as shown below.

The parameter vector is represented by \(k\) and \(b\), and the mapping function \(\tau (x)\) transforms input features into a feature space with higher dimensionality.

Figure 3 illustrates the diagram of nonlinear SVR with \(\varepsilon\)-insensitive loss function. The bold points have the maximum distance from the decision boundary, representing the support vectors (Saha et al. 2020).

The \(\varepsilon\)-insensitive loss function that has an error tolerance \(\varepsilon\), shown on the right side of Fig. 3, and upper and lower bounds computed by the slack variable (\({\varphi }_{i}^{*},{\varphi }_{i})\). Last, nonlinear support vector regression can be communicated as:

The kernel function \(\tau \left({x}_{i}\right)\cdot \tau \left({x}_{j}\right)=H({x}_{i}\cdot {x}_{j})\) can be given instead of the inner product (Vapnik 1999b) because of the complexity of the inner product \(\tau \left({x}_{i}\right)\cdot \tau \left({x}_{j}\right)\)

2.3 Archimedes optimization algorithm (AOA)

The suggested approach uses the AOA algorithm, in which the immersed objects represent the individuals of the population. Like other metaheuristic algorithms according to the population (Hashim et al. 2021), AOA begins the search process with an initial population of candidate solutions represented by objects with randomly assigned densities, accelerations, and volumes (Zhang et al. 2021). Once the initial population’s fitness is assessed, the AOA works through iterations until the termination condition is met, during which each object is initialized with a random position within the fluid (Houssein et al. 2021). During each iteration of the process, each object’s acceleration has additionally recalculated according to the condition of its collision with neighboring objects. Additionally, the AOA algorithm updates each object’s volume and density. The new position of an object is determined based on the updated values of its volume, acceleration, and density (Desuky et al. 2021). The steps involved in the AOA algorithm are detailed in the following mathematical expressions.

2.3.1 Algorithmic step

The mathematical formulation of the AOA algorithm is presented in this section. AOA can be regarded as a global optimization algorithm as it involves theoretical exploitation and exploration procedures. The proposed AOA algorithm’s steps are outlined mathematically in the following sections:

-

(a)

step one

The positions of all objects are initialized using Eq. (15):

The variables \({ub}_{i}\) and \({lb}_{i}\) correspond to the upper and lower boundaries of the search space, respectively, and the variable \({R}_{i}\) denotes the ith object in a population of n objects. Using Eq. (16), set the values of volume \(({vl}_{i})\) and density \(({dn}_{i})\) for every ith object during the initialization process.

Generate a D-dimensional vector called rand that randomly generates a number between 0 and 1. Next, utilize Eq. (17) to initialize the acceleration (ac) for the \(i{\text{th}}\) object.

During this stage, evaluate the original population and select the most outstanding fitness value object. Set \({dn}_{best}\), \({x}_{best}\), \({vl}_{best}\), and \({ac}_{best}\) to the values of the selected object.

-

(b)

Step two

To update the densities and volumes, apply Eq. (18) to object \(i\) for iteration t + 1.

The variables \({dn}_{best}\) and \({vl}_{best}\) represent the density and the volume of the best object found thus far, while the rand corresponds to a random number with uniform distribution.

-

(c)

Step three

To start, objects will collide and eventually strive to attain a state of equilibrium. AOA utilizes the transfer operator TF to convert the search process from exploration to exploitation, as described in Eq. (19):

The TF slowly increases in value over time until it reaches 1. The variables, \({t}_{{\text{max}}}\) and t represent the maximum allowable number of iterations and the current iteration number. The density decreasing factor, d, also aids AOA’s global to-local search. It gradually decreases over time, as shown in Eq. (20):

The variable \({d}^{t+1}\) gradually decreases over time, allowing the algorithm to focus on exploring the already identified promising region and converge toward it. It is important to handle this variable properly to achieve a balance between exploitation and exploration.

-

(d)

Step four

When the value of TF is less than or equal to 0.5, it displays a collision among objects. In such a scenario, the object’s acceleration for the next iteration (t + 1) is updated, and a material (mr) is chosen Eq. (21) randomly:

In the equation, \({vl}_{i}\), \({ac}_{i}\), and \({den}_{i}\) refer to the volume, the acceleration, and the density of object i. On the other hand, \({vl}_{mr}\), \({ac}_{mr}\), and \({dn}_{mr}\) represent the volume, the acceleration, and the density of the random material chosen. Mentioning that TF ≤ 0.5 is significant as it ensures exploration in 33% of the iterations, altering the value to anything other than 0.5 will modify the balance between exploration and exploitation.

Assuming TF is more significant than 0.5, it indicates no occurrence of object collision; thus, the object’s acceleration should be updated for iteration \(t + 1\) utilizing Eq. (22):

Normalize the acceleration employing Eq. (23) to calculate the percentage of change:

\(u\) and \(l\) define the normalization range and are set to 0.9 and 0.1. The value of \({ac}_{i,norm}^{t+1}\) is used to calculate the percentage of each agent’s steps. The acceleration value will be higher when object i is located at a considerable distance from the global optimum, denoting that the object is exploring the environment. Conversely, if object i is relatively closer to the global optimum. The acceleration value will be lower, meaning the object is in the exploitation section. This shows the transformation of the search from the exploration section to the exploitation section. It is important to note that some search agents may need additional time in the exploration section compared to the average. Therefore, AOA ensures a balance between exploration as well as exploitation. However, the acceleration factor initially has a high value and gradually decreases over time. This aids search agents in approaching the optimal global solution while moving away from local solutions.

-

(e)

Step five

When in the exploration section where TF is less than or equal to 0.5, the position of the ith object for the subsequent iteration t + 1 can be determined using Eq. (24):

The constant \({e}_{1}\) is assigned a value of 2. Alternatively, during the exploitation phase where TF is higher than 0.5, the objects adjust their positions using Eq. (25):

The constant \({e}_{2}\) has a value of 6. The variable T increases as time passes, and its value is directly linked to the transfer operator. Precisely, T is calculated as \(T = {e}_{3} x {\text{TF}}\cdot T\), where \({e}_{3}\) is another constant. T value increases over time within the range of \({e}_{3}\)x0.3–1, and from the ideal position, takes a certain percentage. The disparity between the best and current positions will be significant when the percentage is initially low. Consequently, the magnitude of the steps taken during the random walk will be enormous. During the search, the percentage gradually narrows the gap between the top and the present positions. This outcome results in a satisfactory equilibrium between exploitation and exploration.

Using Eq. (26), F serves as a marker for altering the direction of movement.

-

(f)

Step six

Use objective function f to assess every object and keep track of the most optimal solution found thus far. Set \({dn}_{best}\), \({ac}_{best}\), \({x}_{best}\), and \({vl}_{best}\) accordingly.

In addition, the flowchart of AOA is presented in Fig. 4.

2.4 Marine predators’ algorithm (MPA)

The following section presents the marine predator’s algorithm formulation (Faramarzi et al. 2020). Like other metaheuristic techniques, it involves assigning random values to a group of solutions based on the search space (Soliman et al. 2020). This can be expressed as:

Equation (27) defines \(UB\) as the upper boundary and \(LB\) as the lower boundary of the search space. Additionally, \({g}_{1}\) is a random number between 0 and 1. This algorithm employs a strategy in which predator and prey act as search agents. This is because as the prey searches for its food, the predator also actively searches for its prey (Abdel-Basset et al. 2021). The elite will be updated at each generation’s end (i.e., the matrix containing the most exceptional predators). The details regarding the formulation of the prey and elite (O) can be found in (Faramarzi et al. 2020).

The position of prey O gets updated through a three-stage process, which will be explained in detail in the following subsections. The process considers the velocity variant ratio and replicates the entire relationship between the predator and prey (Abd Elminaam et al. 2021).

2.4.1 Stage one: high-velocity ratio

During the exploration phase, which happens in the first third of the total generations (i.e., \(\frac{1}{3}{t}_{{\text{max}}}\)), the predator moves faster than O. At this stage, the following equations update the prey \({S}_{i}\).

The vector \({R}_{B}\) describes the Brownian motion while \(\otimes\) signifies the process of multiplying each element in the vector R within the range of 0–1 with a constant value of 0.5.

2.4.2 Stage two: the ratio of the unit velocity

In this stage, the predator and prey occupy the same territory, mimicking the food search. This action also denotes the exploration to exploitation transition of the MPA’s status. Both events hold an equal probability of occurrence during this phase. As referred to (Faramarzi et al. 2020), while the prey performs exploitation, the predator’s movement is utilized during exploration. The predator is represented by Brownian motion, while the prey’s motion is represented using a Lévy flight. This is explicitly for the time range where \(\frac{1}{3}{t}_{{\text{max}}}<t<\frac{2}{3}{t}_{{\text{max}}}\) defined in Eqs. (31) and (32):

The variable represents the Lévy distribution \({R}_{{\text{L}}}\) which is a set of random numbers. The first half of the population is used to execute Eqs. (31) and (32) to indicate the exploitation stage. The latter half of the population undergoes the following modifications:

\({t}_{{\text{max}}}\) indicates the maximum number of generations, while CF regulates the magnitude of the predator’s displacement per step.

2.4.3 Stage three: low-velocity ratio

Once the predator’s movement surpasses its prey’s, the final step in the optimization process commences. This stage is known as the exploitation phase and is identified \(by t> \frac{2}{3} {t}_{{\text{max}}}\). It signifies the culmination of the process, as represented by the following formulation:

2.4.4 Eddy formation and FAD effect

Environmental conditions may impact the behavior of marine predators, like those attracted to fish aggregating devices (FADs). The influence of FADs on predator behavior can be described as:

Equation (37) utilizes FAD = 0.2 and a binary solution represented by U. The binary solution is generated randomly and converted using a threshold of 0.2. The indices identify the prey \({r}_{1}\) and \({r}_{2}\), while the random number \({r}_{2}\) is on a scale of 0–1.

The MPA’s flowchart is mentioned in Fig. 5.

2.5 Augmented grey wolf optimizer (AGWO)

The nonlinear nature of specific large power system applications, such as grid-connected wind power plants, makes it challenging to determine the transfer function leading to optimal performance. As a result, online optimization is a more feasible alternative to consider while optimizing power system performance. When it comes to online optimization of power system applications using the Grey Wolf Optimizer (GWO) algorithm, the number of search agents available is a limiting factor, unlike offline optimization of benchmark functions or transfer functions optimization.

The GWO algorithm has been introduced in its simplest form to achieve global optimization and be applicable in a wide range of scenarios. This means that, like other algorithms that have been suggested (like PSO), the GWO algorithm could be enhanced and adjusted to improve both exploitation and exploration performance in various fields. M. H. Qais et al. (2018) suggested a new modification to the GWO algorithm to improve its exploration capabilities. It has been designed not to compromise the original algorithm’s global optimization, flexibility, and simplicity abilities. In the GWO algorithm, the parameter a that primarily determines exploitation and exploration is contingent on parameter a. The parameter variation shapes the algorithm’s exploitation and exploration behavior, linearly ranging from 2 to 0 in the standard GWO. The augmentation proposed in the AGWO algorithm introduces a nonlinear and random variation of parameter a, ranging from 2 to 1, as shown in Eq. (38). Accordingly, the algorithm leans toward the exploration rather than the exploitation state.

In the GWO algorithm, the process of decision-making and hunting is reliant on the updates made to betas (β), deltas (δ), and alphas (α). However, the AGWO algorithm, an adaptation of GWO, simplifies this process by only considering the updates made to betas and alphas (β and α) as described in Eqs. (41)–(43). This modification greatly streamlines decision-making and improves efficiency (Long et al. 2017).

Figure 6 shows the flowchart of AGWO.

2.6 Performance evaluator

This section contains indicators that enable the assessment of hybrid models by revealing their error levels and correlation. The indicators featured in this section are symmetric mean absolute percentage error (sMAPE), mean absolute percentage error (MAPE), root mean square error (RMSE), coefficient correlation (R2), and T statistic taste (Tstate). The mathematical equations for each of these indicators are provided below:

Equations (44–48) utilize the following variables: \(n\) represents the number of samples, \({b}_{i}\) signifies the predicted value, \(\overline{b }\) and \(\overline{m }\) represent the average predicted and measured values, respectively. On the other hand, \({m}_{i}\) represents the measured value.

3 Results and discussion

To predict the pile settlement, multiple hybrid models have been implemented, including the SVR-archimedes optimization algorithm (SVRAO), SVR-augmented grey wolf optimizer (SVRAW), and SVR-marine predators’ algorithm (SVRMP). During this study’s training and testing phases, the measurements obtained from experimental trials were compared to the predictions produced by three models: SVRAO, SVRMP, and SVRAW. Table 2 displays that 70% of the experimental outcomes were employed in the training stage, while the remaining 30% was utilized in the testing phase. Five statistical measures (R2, RMSE, MAPE, sMAPE, and Tstate) were utilized to thoroughly assess and contrast the algorithms’ effectiveness.

A model that has an R2 value of nearly 1 suggests excellent performance during the training and testing stages. Meanwhile, parameters like RMSE, MAPE, s MAPE, and Tstate illustrate the error present in the model, where a lower value signifies a more satisfactory error level. The effectiveness of the employed algorithms was comprehensively evaluated and compared using these metrics, whose results are compiled in Table 2.

While the statistical performance criteria values of the developed models were reasonably similar in the testing and training phases, the SVRAO hybrid model exhibited the highest level of accuracy, with an R2 value of 0.989 during the training phase and 0.997 in the testing phase. SVRAO showed the highest degree of agreement between the predicted and observed values, as evidenced by its RMSE, MAPE, and sMAPE being the lowest among all the other models. On the other hand, the SVRAW models exhibit the weakest performance with an R2 value of 0.976 and 0.968 during the training and testing phases, respectively, and the highest values of RMSE, MAPE, and sMAPE. This suggests that the SVRAW models have poor performance. However, the SVRMP has an intermediate performance among the other two models with an R2 value of 0.981.

Table 3 indicates the comparison between the best present model, as indicated in Table 2, and the published articles’ models.

Figure 7 depicts a scatter plot that compares the performance of the hybrid models based on two parameters: R2, which indicates the level of agreement, and RMSE, which indicates the degree of dispersion. The centerline of the plot is positioned at X = Y coordinates, and the distance between the points and the centerline indicates the level of accuracy in the model’s performance. The SVRAO model exhibited a narrow range of dispersion, with the data points closely grouped around the centerline. In contrast, the SVRMP and SVRAW models indicated relatively similar levels of performance where their data points were more broadly scattered.

The observed variance was impacted by the deviation between the anticipated and actual values, notably diminished during the test phase, as illustrated in Fig. 8. During the training phase, the SVRAW model displayed minimal dispersion, and the difference in the line angles between the measured bold points and train triangles was more perceptible compared to the testing phase. Despite identifying disparities between the predicted and measured values for some samples during the training phase, leading to noteworthy divergences, enhancements in performance and favorable learning outcomes have somewhat mitigated this weakness.

One additional analysis that should be carried out involves observing the percentage error for each pile, indicating the degree of difference between the predicted settlement rate and the actual rate. Figures 9 and 10 showcased how well each model type predicted pile settlement and compared their effectiveness. As per the error distribution chart, the precision of predicting pile settlement varied across SVRAW, SVRMP, and SVRAO models. SVRAO demonstrated the lowest degree of error, with most predicted values being close to the actual values. SVRMP exhibited a moderate error, with a broader range of predicted values. At the same time, SVRAW had the highest error level, with several predicted values deviating significantly from the actual values. The SVRAO model generally exhibited the most trustworthy results, while SVRAW exhibited the weakest performance, and SVRMP performance was in the middle. The distribution chart of errors offered significant insights into the relative pros and cons of each model’s predictive accuracy, aiding researchers in identifying the most efficient model for forecasting pile settlement in real-world scenarios.

3.1 Sensitivity analyses

3.1.1 Cosine amplitude method (CAM)

Table 4 displays the outcomes of sensitivity analyses focusing on different input parameters. Sensitivity analysis serves as a method for gaging how responsive the results of a model or study are to changes in input variables (Ardakani and Kordnaeij 2019; Khatti and Grover 2023a, d). In this context, Table 4 examines the degree of sensitivity of results to alterations in specific input parameters. Five input parameters have been selected for sensitivity evaluation: Lp/D, Ls/Lr, N_SPT, UCS, and Qu.

The sensitivity measure (ST) signifies the extent to which the output changes in response to variations in the input parameter. A higher ST value implies that the output is more responsive to that specific parameter. ST_conf potentially signifies the confidence intervals associated with the sensitivity measures. Confidence intervals aid in assessing the degree of uncertainty within the results of the sensitivity analysis.

The ST fluctuates among the input parameters, indicating that the results of the model or study exhibit varying levels of sensitivity to different parameters. For instance, the parameter “N_SPT” possesses a relatively high sensitivity value of 3.7E−08, signifying that alterations in “N_SPT” exert a substantial influence on the study’s results. The “ST_conf” values, which represent confidence intervals, offer a range within which the sensitivity measures are likely to lie with a certain level of confidence.

4 Conclusion

This investigation’s principal aim is to assess the performance of 3 hybrid SVRs in estimating the rock-socketed piles settling. Three optimization algorithms, namely Archimedes Optimization algorithm (AOA), marine predators’ algorithm (MPO), and Augmented grey wolf optimizer (AGWO), are employed in constructing the SVR models. In order to achieve this goal, the investigators examined the outcomes of experiments using pile-driving analyzers as well as the features of the heaps and the soil. The investigation produced the subsequent significant findings:

-

1.

The study shows the promising predictive ability of SVR models for SP, with training R2 at 0.976 and testing at 0.968. SVRAO outperformed SVRMP and SVRAW, especially for small SP values. AOA optimization demonstrated superior performance for all SP ranges.

-

2.

Despite displaying weaker performance than the other SVR models across all statistical indices, SVRAW still produced acceptable results, achieving respective R2, RMSE, MAPE, sMAPE, and Tstate values of 0.968, 0.780, 5.220, 0.0018, and 1.156. On the other hand, the SVRAO model demonstrates the most optimal performance, with the highest R2, RMSE, MAPE, sMAPE, and Tstate values observed during the phases of testing and training, excluding MAPE in the training stage.

-

3.

The advantages of the present study include improving predicting accuracy, robust performance, real-world data utilization, and informing decision-making.

-

4.

The present study also has limitations, including limited input parameters and data variability.

-

5.

The future scope for expansion can encompass diverse parameters, hybrid model integration, validation through long-term monitoring, and generalizability and scalability.

Abbreviations

- SP:

-

Pile settlement

- Ls:

-

Length of soil layer

- D:

-

Diameter

- SVR:

-

Support vector regression

- MPA:

-

Marine predators algorithm

- RMSE:

-

Root mean square error

- T state :

-

T Statistic taste

- R 2 :

-

Coefficient correlation

- Qu:

-

Ultimate pile bearing capacity

- Lr:

-

Socket length

- UCS:

-

Uniaxial compressive strength

- AO:

-

Archimedes optimization algorithm

- AGWO:

-

Augmented grey wolf optimizer

- MAPE:

-

Mean absolute percentage error

- SMAPE:

-

Symmetric mean absolute percentage error

References

Abd Elminaam DS et al (2021) An efficient marine predators algorithm for feature selection. IEEE Access 9:60136–60153

Abdel-Basset M et al (2021) Parameter estimation of photovoltaic models using an improved marine predators algorithm. Energy Convers Manag 227:113491

Akbarzadeh MR et al (2023) Estimating compressive strength of concrete using neural electromagnetic field optimization. Materials 16(11):4200

Alam MS, Sultana N, Hossain SMZ (2021) Bayesian optimization algorithm based support vector regression analysis for estimation of shear capacity of FRP reinforced concrete members. Appl Soft Comput 105:107281

Ardakani A, Kordnaeij A (2019) Soil compaction parameters prediction using GMDH-type neural network and genetic algorithm. Eur J Environ Civ Eng 23(4):449–462

Armaghani DJ et al (2020) On the use of neuro-swarm system to forecast the pile settlement. Appl Sci 10(6):1904

Carrubba P (1997) Skin friction on large-diameter piles socketed into rock. Can Geotech J 34(2):230–240

Carvalho SL, Sales MM, Cavalcante ALB (2023) Systematic literature review and mapping of the prediction of pile capacities. Soils Rocks 46:e2023011922

Cesaro R, Di Laora R, Mandolini A (2023) A novel method for assessing pile base resistance in sand. In: National conference of the researchers of geotechnical engineering. Springer, pp 638–645

Desuky AS et al (2021) EAOA: an enhanced Archimedes optimization algorithm for feature selection in classification. IEEE Access 9:120795–120814

Faramarzi A et al (2020) Marine predators algorithm: a nature-inspired metaheuristic. Expert Syst Appl 152:113377

Fleming WGK (1992) A new method for signle pile settlement prediction and analysis. Geotechnique 42(3):411–425

Ge Q, Li C, Yang F (2023) Support vector machine to predict the pile settlement using novel optimization algorithm. Geotech Geol Eng 41:3861–3875

Gnananandarao T, Khatri VN, Dutta RK (2020) Bearing capacity and settlement prediction of multi-edge skirted footings resting on sand. Ing Investig 40(3):9–21

Gnananandarao T, Khatri VN et al (2023a) Implementing an ANN model and relative importance for predicting the under drained shear strength of fine-grained soil. Artificial intelligence and machine learning in smart city planning. Elsevier, Amsterdam, pp 267–277

Gnananandarao T, Onyelowe KC et al (2023b) Sensitivity analysis and estimation of improved unsaturated soil plasticity index using SVM, M5P, and random forest regression. Artificial intelligence and machine learning in smart city planning. Elsevier, Amsterdam, pp 243–255

Gnananandarao T, Onyelowe KC, Murthy KSR (2023c) Experience in using sensitivity analysis and ANN for predicting the reinforced stone columns’ bearing capacity sited in soft clays. Artificial intelligence and machine learning in smart city planning. Elsevier, Amsterdam, pp 231–241

Gunn SR (1998) Support vector machines for classification and regression. ISIS Tech Rep 14(1):5–16

Gutiérrez-Ch JG et al (2021) A DEM-based factor to design rock-socketed piles considering socket roughness. Rock Mech Rock Eng 54:3409–3421

Hashim FA et al (2021) Archimedes optimization algorithm: a new metaheuristic algorithm for solving optimization problems. Appl Intell 51(3):1531–1551

Hatheway AW (2009) The complete ISRM suggested methods for rock characterization, testing and monitoring; 1974–2006. Environ Eng Geosci 15:7–48

Houssein EH et al (2021) An enhanced Archimedes optimization algorithm based on Local escaping operator and orthogonal learning for PEM fuel cell parameter identification. Eng Appl Artif Intell 103:104309

Khatti J, Grover KS (2023a) Assessment of fine-grained soil compaction parameters using advanced soft computing techniques. Arab J Geosci 16(3):208

Khatti J, Grover KS (2023b) CBR prediction of pavement materials in unsoaked condition using LSSVM, LSTM-RNN, and ANN approaches. Int J Pavement Res Technol. https://doi.org/10.1007/s42947-022-00268-6

Khatti J, Grover KS (2023c) Prediction of compaction parameters for fine-grained soil: critical comparison of the deep learning and standalone models. J Rock Mech Geotech Eng 15:3010–3038

Khatti J, Grover KS (2023d) Prediction of compaction parameters of compacted soil using LSSVM, LSTM, LSBoostRF, and ANN. Innova Infrastruct Solut 8(2):76

Khatti J, Grover KS (2023e) Prediction of UCS of fine-grained soil based on machine learning part 1: multivariable regression analysis, gaussian process regression, and gene expression programming. Multiscale Multidiscip Model Exp Des. https://doi.org/10.1007/s41939-022-00137-6

Khatti J, Samadi H, Grover KS (2023) Estimation of settlement of pile group in clay using soft computing techniques. Geotech Geol Eng. https://doi.org/10.1007/s10706-023-02643-x

Kumar S, Robinson S (2023) Estimating the pile settlement using a machine learning technique optimized by Henry’s gas solubility optimization and particle swarm optimization. Adv Eng Intell Syst. https://doi.org/10.22034/aeis.2022.368689.1051

Kumar M, Samui P (2020) ’Reliability analysis of settlement of pile group in clay using LSSVM, GMDH, GPR. Geotech Geol Eng 38:6717–6730

Kumar M et al (2021) Reliability analysis of settlement of pile group. Innov Infrastruct Solut 6:1–17

Le Tirant P (1992) Design guides for offshore structures: offshore pile design

Long W et al (2017) A modified augmented Lagrangian with improved grey wolf optimization to constrained optimization problems. Neural Comput Appl 28:421–438

Lu T et al (2023) Semi-analytical approach for the load-settlement response of a pile considering excavation effects. Acta Geotech 18(3):1179–1197

Luenberger DG, Ye Y (1984) Linear and nonlinear programming. Springer, Cham

Masoumi F et al (2020) Automatic calibration of the groundwater simulation model with high parameter dimensionality using sequential uncertainty fitting approach. Water Suppl 20(8):3487–3501. https://doi.org/10.2166/ws.2020.241

Nejad FP et al (2009) Prediction of pile settlement using artificial neural networks based on standard penetration test data. Comput Geotech 36(7):1125–1133

Ng CWW et al (2001) Side resistance of large diameter bored piles socketed into decomposed rocks. J Geotech Geoenviron Eng 127(8):642–657

Onyelowe KC, Gnananandarao T, Nwa-David C (2021) Sensitivity analysis and prediction of erodibility of treated unsaturated soil modified with nanostructured fines of quarry dust using novel artificial neural network. Nanotechnol Environ Eng 6(2):37. https://doi.org/10.1007/s41204-021-00131-2

Onyelowe KC, Gnananandarao T, Ebid AM (2022) Estimation of the erodibility of treated unsaturated lateritic soil using support vector machine-polynomial and-radial basis function and random forest regression techniques. Clean Mater 3:100039

Qais MH, Hasanien HM, Alghuwainem S (2018) Augmented grey wolf optimizer for grid-connected PMSG-based wind energy conversion systems. Appl Soft Comput 69:504–515

Randolph MF, Wroth CP (1978) Analysis of deformation of vertically loaded piles. J Geotech Eng Div 104(12):1465–1488

Rowe RK, Armitage HH (1987) A design method for drilled piers in soft rock. Can Geotech J 24(1):126–142

Saha P, Debnath P, Thomas P (2020) Prediction of fresh and hardened properties of self-compacting concrete using support vector regression approach. Neural Comput Appl 32(12):7995–8010

Sedaghat B, Tejani GG, Kumar S (2023) Predict the maximum dry density of soil based on individual and hybrid methods of machine learning. Adv Eng Intell Syst. https://doi.org/10.22034/aeis.2023.414188.1129

Smola AJ, Schölkopf B (2004) A tutorial on support vector regression. Stat Comput 14(3):199–222

Soliman MA, Hasanien HM, Alkuhayli A (2020) Marine predators algorithm for parameters identification of triple-diode photovoltaic models. IEEE Access 8:155832–155842

Vapnik V (1999a) The nature of statistical learning theory. Springer Science & Business Media, New York

Vapnik VN (1999b) An overview of statistical learning theory. IEEE Trans Neural Netw 10(5):988–999

Vapnik V, Golowich S, Smola A (1996) Support vector method for function approximation, regression estimation and signal processing. Adv Neural Inf Process Syst 9

Zhang L et al (2021) Ensemble wind speed forecasting with multi-objective Archimedes optimization algorithm and sub-model selection. Appl Energy 301:117449

Author information

Authors and Affiliations

Contributions

YL: Methodology, software, validation, formal analysis. TL: Writing-original draft preparation, conceptualization, supervision, project administration.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no competing of interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix 1

Appendix 1

Lp/D | Ls/Lr | N_SPT | UCS | Qu | SP |

|---|---|---|---|---|---|

8.47 | 1.71 | 132.85 | 67.34 | 13,868.61 | 6.04 |

7.89 | 1.14 | 144.53 | 64.46 | 20,072.99 | 4.97 |

11.69 | 4.29 | 132.85 | 48.35 | 13,868.61 | 8.85 |

13.15 | 4.29 | 154.74 | 58.71 | 14,598.54 | 6.05 |

14.03 | 3.14 | 124.09 | 35.11 | 24,452.55 | 9.94 |

14.61 | 5.71 | 119.71 | 34.53 | 32,481.75 | 9.94 |

20.16 | 6.57 | 125.55 | 48.92 | 33,941.61 | 12.10 |

26.01 | 8.57 | 20.44 | 33.38 | 27,007.30 | 12.10 |

28.05 | 24.00 | 23.36 | 25.32 | 34,671.53 | 14.91 |

18.13 | 8.86 | 13.14 | 42.59 | 32,116.79 | 11.24 |

31.56 | 19.43 | 21.90 | 29.93 | 41,605.84 | 20.09 |

28.06 | 14.57 | 46.72 | 32.23 | 18,978.10 | 12.97 |

20.18 | 10.86 | 5.84 | 26.47 | 27,007.30 | 14.06 |

19.89 | 12.29 | 13.14 | 43.17 | 21,897.81 | 10.17 |

18.14 | 12.57 | 14.60 | 30.50 | 20,437.96 | 12.98 |

11.43 | 3.71 | 4.38 | 43.74 | 22,262.77 | 11.04 |

21.65 | 10.86 | 27.74 | 28.78 | 22,992.70 | 14.06 |

20.20 | 7.71 | 27.74 | 29.35 | 17,153.28 | 14.07 |

12.03 | 4.00 | 127.01 | 58.71 | 19,343.07 | 8.89 |

16.41 | 7.71 | 20.44 | 46.04 | 18,978.10 | 10.83 |

21.67 | 11.14 | 29.20 | 60.43 | 16,788.32 | 9.97 |

19.63 | 8.00 | 11.68 | 47.77 | 26,277.37 | 11.27 |

21.96 | 14.57 | 27.74 | 35.68 | 18,248.18 | 12.14 |

9.71 | 2.29 | 115.33 | 49.50 | 18,248.18 | 9.12 |

11.17 | 2.57 | 112.41 | 47.77 | 33,576.64 | 8.90 |

26.94 | 15.71 | 20.44 | 28.78 | 36,131.39 | 16.89 |

16.14 | 5.71 | 18.98 | 27.63 | 18,978.10 | 14.95 |

8.26 | 2.86 | 5.84 | 35.68 | 15,693.43 | 10.64 |

15.56 | 6.86 | 159.12 | 58.71 | 19,708.03 | 8.26 |

9.72 | 5.43 | 5.84 | 34.53 | 33,576.64 | 9.78 |

11.19 | 3.14 | 166.42 | 68.49 | 24,452.55 | 10.86 |

13.82 | 4.00 | 62.77 | 41.44 | 24,452.55 | 12.37 |

12.36 | 2.57 | 103.65 | 52.95 | 31,751.82 | 14.10 |

6.82 | 1.43 | 160.58 | 65.04 | 19,343.07 | 4.61 |

15.58 | 8.86 | 13.14 | 46.62 | 17,518.25 | 11.95 |

17.33 | 3.43 | 102.19 | 35.68 | 16,058.39 | 9.14 |

10.91 | 3.43 | 153.28 | 47.77 | 20,437.96 | 10.87 |

17.05 | 0.57 | 2.92 | 46.04 | 24,817.52 | 10.87 |

20.26 | 17.14 | 7.30 | 28.20 | 36,496.35 | 16.06 |

20.26 | 6.00 | 5.84 | 28.78 | 38,686.13 | 16.06 |

13.84 | 3.43 | 113.87 | 42.59 | 21,897.81 | 12.39 |

12.97 | 2.57 | 113.87 | 42.59 | 39,416.06 | 13.26 |

19.11 | 15.14 | 116.79 | 32.23 | 34,306.57 | 15.20 |

21.74 | 22.29 | 7.30 | 29.93 | 33,941.61 | 16.93 |

27.58 | 9.43 | 109.49 | 29.35 | 30,656.93 | 12.40 |

23.49 | 14.29 | 7.30 | 25.32 | 38,686.13 | 15.86 |

12.99 | 13.43 | 2.92 | 25.90 | 34,671.53 | 15.21 |

25.54 | 15.43 | 11.68 | 29.35 | 30,291.97 | 17.80 |

20.58 | 6.00 | 13.14 | 31.65 | 36,496.35 | 15.22 |

8.62 | 6.29 | 140.15 | 65.61 | 15,328.47 | 5.72 |

26.43 | 31.71 | 11.68 | 28.20 | 40,875.91 | 18.67 |

7.45 | 2.86 | 8.76 | 48.92 | 21,167.88 | 13.06 |

6.58 | 1.43 | 140.15 | 42.59 | 32,846.72 | 8.10 |

10.96 | 4.57 | 140.15 | 57.55 | 32,116.79 | 8.97 |

5.42 | 1.71 | 140.15 | 62.73 | 15,693.43 | 5.52 |

9.51 | 2.57 | 140.15 | 62.73 | 14,233.58 | 6.17 |

13.89 | 2.57 | 8.76 | 52.95 | 15,328.47 | 9.62 |

14.77 | 7.43 | 157.66 | 59.86 | 17,518.25 | 6.39 |

15.07 | 7.71 | 157.66 | 31.65 | 14,598.54 | 7.25 |

22.08 | 11.43 | 52.55 | 45.47 | 16,788.32 | 12.43 |

20.91 | 25.43 | 52.55 | 28.78 | 35,036.50 | 17.83 |

14.78 | 10.86 | 32.12 | 43.74 | 20,072.99 | 10.28 |

13.04 | 4.86 | 148.91 | 28.78 | 24,817.52 | 8.99 |

6.03 | 2.00 | 119.71 | 29.93 | 31,021.90 | 10.93 |

6.03 | 1.71 | 119.71 | 48.92 | 31,021.90 | 11.15 |

6.04 | 1.43 | 148.91 | 54.68 | 24,817.52 | 5.11 |

14.51 | 6.00 | 140.15 | 59.28 | 16,788.32 | 5.98 |

11.88 | 2.00 | 141.61 | 59.28 | 17,518.25 | 5.98 |

7.21 | 1.71 | 103.65 | 40.29 | 21,532.85 | 12.02 |

7.51 | 1.14 | 128.47 | 48.92 | 25,182.48 | 7.28 |

16.56 | 8.57 | 138.69 | 48.92 | 20,802.92 | 8.14 |

13.94 | 3.43 | 11.68 | 36.26 | 23,722.63 | 10.09 |

10.15 | 2.86 | 121.17 | 37.41 | 20,072.99 | 12.25 |

12.78 | 4.00 | 151.82 | 54.10 | 18,248.18 | 6.42 |

13.95 | 2.00 | 113.87 | 50.07 | 24,817.52 | 10.96 |

9.57 | 2.00 | 148.91 | 65.04 | 28,467.15 | 9.88 |

9.87 | 3.14 | 90.51 | 40.86 | 19,343.07 | 10.10 |

7.24 | 1.14 | 141.61 | 56.40 | 16,788.32 | 5.14 |

4.62 | 0.86 | 154.74 | 50.07 | 17,883.21 | 6.00 |

6.08 | 1.71 | 160.58 | 66.19 | 19,708.03 | 4.49 |

4.33 | 0.29 | 160.58 | 62.73 | 25,547.45 | 6.22 |

8.13 | 2.29 | 115.33 | 60.43 | 19,708.03 | 6.23 |

22.15 | 2.00 | 5.84 | 33.38 | 19,708.03 | 14.21 |

24.19 | 9.43 | 37.96 | 30.50 | 24,817.52 | 15.08 |

9.89 | 0.86 | 108.03 | 49.50 | 12,408.76 | 8.61 |

20.40 | 10.29 | 11.68 | 42.59 | 35,036.50 | 12.71 |

28.87 | 22.57 | 10.22 | 41.44 | 39,781.02 | 18.97 |

28.29 | 27.43 | 10.22 | 28.20 | 34,306.57 | 18.97 |

17.20 | 8.86 | 30.66 | 33.96 | 42,700.73 | 13.58 |

22.17 | 10.57 | 27.74 | 45.47 | 14,598.54 | 11.21 |

20.42 | 8.00 | 118.25 | 34.53 | 15,693.43 | 9.27 |

13.71 | 2.00 | 119.71 | 35.68 | 13,868.61 | 9.27 |

15.17 | 1.14 | 100.73 | 37.99 | 19,343.07 | 10.13 |

18.97 | 2.00 | 134.31 | 34.53 | 20,802.92 | 9.92 |

13.72 | 0.86 | 8.76 | 26.47 | 35,401.46 | 14.02 |

8.17 | 2.57 | 134.31 | 59.86 | 18,613.14 | 7.34 |

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Li, Y., Li, T. Prediction of pile settlement using hybrid support vector regressor. Multiscale and Multidiscip. Model. Exp. and Des. 7, 2103–2120 (2024). https://doi.org/10.1007/s41939-023-00318-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s41939-023-00318-x