Abstract

This paper proposes a single projection method with the Bregman distance technique for solving pseudomonotone variational inequalities in real reflexive Banach space. The step-sizes, which varies from step to step, are found over each iteration by cheap computation without any linesearch. We prove strong convergence result under suitable conditions on the cost operator. We further provide an application of our main result and also report some numerical experiments to illustrate the performance and efficiency of our proposed method.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Numerous problems in science and engineering, optimization theory, nonlinear analysis, equilibrium theory and differential equations, lead to the study of variational inequality problem (VIP) in the sense of Stampacchia et al. in [19, 25]. This problem has been intensively investigated and developed after appearing in the mono graphic book [15, 26]. Let E be a real Banach space with norm \(\Vert \cdot \Vert\) and represent the dual of E by \(E^*\) with \(\langle x^*,x\rangle\) signifying the value of \(x^*\in E^*\) at \(x\in E.\) Suppose C is nonempty, closed with \(C\subset E\) and \({\mathcal {A}} : C\rightarrow E^*\) is a nonlinear mapping. The VIP is defined as finding \(u\in C\) such that

We shall denote by \(Sol(C,{\mathcal {A}}),\) the solution set of VIP (1.1).

The projected gradient method [18] is known to solve VIP (1.1) when \({\mathcal {A}}\) is strongly monotone and Lipschitz continuous. This method fails to converge to any solution of VIP (1.1) when \({\mathcal {A}}\) is monotone. The Extragradient Method (EGM) [27] was introduced to solve VIP (1.1) when \({\mathcal {A}}\) is continuous and Lipschitz continuous:

where \(P_C\) is the projection onto C. The weak convergence of EGM (1.2) is achieved when \(\rho \in (0,1/L),\) with L being the Lipschitz constant of \({\mathcal {A}}\) (see, [5,6,7,8, 10,11,12, 14, 21, 24, 33, 36, 40,41,42,43]). The major weakness in EGM (1.2) is that it involves two projection onto C per iteration and this requires solving a minimization problem twice per iteration during implementation, which can slow down the iterations. This motivated Tseng [37] to propose the following method;

which requires only one computation of \(P_C\) per iteration. The weak convergence of (1.3) was obtained in Hilbert spaces when either the step size \(\rho \in (0,1/L)\) or generated by a line search procedure. The requirement that the step size \(\rho\) in EGM (1.2) and (1.3) dependent on Lipschitz constant of the cost function is inefficient since the Lipschitz constant are difficult to estimate in most of the applications, and when they do, they are often too small and this in turn slows down the convergence of EGM (1.2) and (1.3).

It is very interesting to also study VIP in Banach spaces because several physical models and applications can be formulated as VIPs in real Banach spaces which are not Hilbert.

Recently, Jolaoso et al. [22] presented modified Bregman subgradient algorithms with line search technique for solving VIP with a pseudo-monotone operator without necessarily satisfying the Lipschitz condition. They introduced the following two algorithms:

Under suitable conditions of the given parameters, Jolaoso et al. [22] established the weak and strong convergence of Algorithm 1.1 and 1.2 respectively in reflexive Banach spaces. See also the recent paper by Reich et al. [31].

Following this direction, motivated and inspired by the presented results, we introduce a single projection method with self adaptive step size for solving the VIP (1.1) in a reflexive Banach space. The proposed algorithm uses variable step size from step to step which are updated over each iteration by a cheap computation. This step size allows the algorithm to work more easily without knowing previously the Lipschitz constant of operator \({\mathcal {A}}.\) Unlike [22], we do not use any linesearch in our algorithm (a linesearch means that at each outer-iteration we use an inner-loop until some finite stopping criterion is satisfied, and this can be time consuming). We provide an application and some numerical experiment to illustrate the performance and efficiency of our proposed method.

2 Preliminaries

In this section, we give some definitions and preliminary results which will be used in our convergence analysis. Let C be a nonempty, closed and convex subset of a real Banach space E with the norm \(\Vert \cdot \Vert\) and dual space \(E^*.\) We denote the weak and strong convergence of a sequence \(\{x_n\}\subset E\) to a point \(x^*\in E\) by \(x_n\rightharpoonup x^*\) and \(x_n \rightarrow x^*\) respectively.

Let \(f:E\rightarrow (-\infty , +\infty ]\) be a function satisfying the following:

-

(i)

\(\text{ int } (\text{dom }f) \subset E\) is a uniformly convex set;

-

(ii)

f is continuously differentiable on \(\text{ int }(\text{dom }f);\)

-

(iii)

f is strongly convex with strongly convexty constant \(\varrho >0,\) i.e.

$$\begin{aligned} f(x)\ge f(y) - \langle \nabla f(y), x -y\rangle + \frac{\varrho }{2}\Vert x - y\Vert ^2, \ \ \ \forall x\in \text{dom } \ \ \text{ and } \ \ y\in \text{ int }(\text{dom }f). \end{aligned}$$

The subdifferential set of f at a point x denoted by \(\partial f\) is defined by

Every element \(x^* \in \partial f(x)\) is called a subgradient of f at x. Since f is continuously differentiable, then \(\partial f(x) = \{\nabla f(x)\},\) which is the gradient of f at x. The Fénchel conjugate of f is the convex functional \(f^*: E^* \rightarrow (-\infty , +\infty ]\) defined by \(f^*(x^*) = \sup \{\langle x^*,x\rangle - f(x): x \in E\}.\) Let E be a reflexive Banach space, the function f is said to be Legendre if and only if f satisfy the following conditions:

-

(R1)

\(\text{ int }(\text{dom }f) \ne \emptyset\) and \(\partial f\) is single-valued on its domain;

-

(R2)

\(\text{ int }(\text{dom } f) \ne \emptyset\) and \(\partial f^*\) is single-valued on its domain.

It is worth mentioning that the Bregman distance is not a metric in the usual sense because it does not satisfy the symmetric and triangular inequality properties. However, It posses the following interesting property called the three point identity: for \(x\in \text{dom } f\) and \(y,z \in \text{ int }(\text{dom }f),\) we have Let \(f: E \rightarrow {\mathbb {R}}\) be strictly convex and Gâteaux differentiable function. The Bregman distance \(\phi _f : \text{dom }f \times \text{ int }(\text{dom }f) \rightarrow {\mathbb {R}}\) with respect to f is defined by

The Bregman function has been widely used by many authors for solving many optimization problems in the literature (see [30] and the references therein).

Remark 2.1

Practical important examples of the Bregman distance function can be found in [3]. If \(f(x) =\frac{1}{2}\Vert x\Vert ,\) we have \(\phi _f(x,y) = \frac{1}{2}\Vert x -y\Vert ^2\) which is the Euclidean norm distance. Also, if \(f(x) = - \sum _{j=1}^{m}x_j\log (x_j)\) which is Shannon’s entropy for non-negative orthant \({\mathbb {R}}_{++}^m:=\{x\in {\mathbb {R}}^m:x_j>0\},\) we obtain the Kullback-Leibler cross entropy defined by

Also, if f is \(\varrho\)-strongly convex, then

Definition 2.2

The Bregman projection (see e.g. [32]) with respect to f of \(x\in \text{ int }(\text{dom }f)\) onto a nonempty closed convex set \(C \subset \text{ int }(\text{dom }f)\) is the unique vector \(Proj_C^f(x) \in C\) satisfying

The Bregman projection is characterized by the inequality

Also

Following [1, 13], we define the function \(V_f: E\times E \rightarrow [0,\infty )\) associated with f by

\(V_f\) is non-negative and \(V_f(x,y) = \phi _f(x,\nabla f(y))\) for all \(x,y\in E.\) Moreover, by the subdifferential inequality, it is easy to see that

for all \(x,y,z\in E.\) In addition, If \(f: E\rightarrow {\mathbb {R}} \cup \{+\infty \}\) is proper lower semicontinous, then \(f^*: E\rightarrow {\mathbb {R}} \cup \{+\infty \}\) is proper weak lower lower semicontinuous and convex. Hence, \(V_f\) is convex in second variable, i.e.,

where \(\{x_i\} \subset E\) and \(\{t_i\} \subset (0,1)\) with \(\sum _{i=1}^{N}t_i = 1.\)

Definition 2.3

Let \(f : E \rightarrow {\mathbb {R}} \cup \{+\infty \}\) be a G\({\hat{a}}\)teaux differentiable function. The function \(\phi _f : \text{dom }f \times \text{ int }(\text{dom }f) \rightarrow [0, +\infty )\) defined by

is the Bregman distance with respect to f. The Bregman distance does not satisfy the well-known properties of a metric, but it has the following important properties (see [28]): For any \(w,x,y,z \in E,\)

-

(i)

three point identity:

$$\begin{aligned} \phi _f(y,z) + \phi _f(z,x) - \phi _f(y,x) = \langle \nabla f(z) - \nabla f(x), z - y\rangle ; \end{aligned}$$(2.10) -

(ii)

four point identity: for

$$\begin{aligned} \phi _f(z,w) + \phi _f(y,x) - \phi _f(z,x) - \phi _f(y,w) = \langle \nabla f(x) - \nabla f(w), z -y\rangle . \end{aligned}$$(2.11)

Definition 2.4

[28, 29] The Minty Variational Inequality Problem (MVIP) is defined as finding a point \({\bar{x}} \in C\) such that

We denote by \(M(C,{\mathcal {A}}),\) the set of solution of (2). Some existence results for the MVIP has been presented in [28]. Also, the assumption that \(M(C,{\mathcal {A}}) \ne \emptyset\) has already been used for solving \(VI(C,{\mathcal {A}})\) in finite dimensional spaces (see e.g [36]). It is not difficult to prove that pseudomonotonicity implies property \(M(C,{\mathcal {A}}) \ne \emptyset ,\) but the converse is not true. Indeed, let \({\mathcal {A}}: {\mathbb {R}} \rightarrow {\mathbb {R}}\) be defined by \({\mathcal {A}}(x) = \cos x\) with \(C=[0,\frac{\pi }{2}].\) We have that \(VI(C,{\mathcal {A}}) = \{0,\frac{\pi }{2}\}\) and \(M(C,{\mathcal {A}}) = \{0\}.\) But if we take \(x=0\) and \(y=\frac{\pi }{2}\) in the definition of pseudomonotone (Assumption 3.1, (A1)), we see that \({\mathcal {A}}\) is not pseudomonotone.

Lemma 2.5

[29] Consider the VIP (1.1). If the mapping \(h:[0,1] \rightarrow E^*\) defined as \(h(t) = {\mathcal {A}}(tx + (1-t)y)\) is continuous for all \(x,y\in C\) (i.e. h is hemicontinuous), then \(M(C,{\mathcal {A}}) \subset VI(C,{\mathcal {A}}).\) Moreover, if \({\mathcal {A}}\) is pseudomonotone then \(VI(C,{\mathcal {A}})\) is closed, convex and \(VI(C,{\mathcal {A}}) = M(C,{\mathcal {A}}).\)

Lemma 2.6

[39] Let \(\{a_n\}\) be a sequence of nonnegative real numbers satisfying the following relation:

where (i) \(\{\alpha _n\} \subset [0,1],\) \(\sum \alpha _n = \infty ;\) (ii) \(\limsup \sigma _n \le 0;\) (iii) \(\gamma _n \ge 0;\) \((n\ge 1)\) \(\sum \gamma _n < \infty .\)

Then \(a_n \rightarrow 0,\) as \(n\rightarrow \infty .\)

3 Main result

In this section, we give concise statement of our algorithm, discuss some of its elementary properties and its convergence analysis. In the sequel, we assume that the following hold.

Assumption 3.1

Let C be a nonempty closed convex subset of a real reflexive Banach space E. The operator \({\mathcal {A}} : C \rightarrow E^*\) satisfies the following:

-

(A1)

\({\mathcal {A}}\) is pseudomonotone on C, i.e, for all \(x,y \in E,\) \(\langle {\mathcal {A}}x, y-z \rangle \ge 0\) implies \(\langle {\mathcal {A}}y, y - x\rangle \ge 0;\)

-

(A2)

\({\mathcal {A}}\) is L-Lipschitz continuous, i.e. there exists \(L>0\) such that \(\Vert {\mathcal {A}}x - {\mathcal {A}}y\Vert \le L\Vert x -y\Vert ,\) for all \(x,y\in C.\) However, the information of L need not to be known;

-

(A3)

\({\mathcal {A}}\) is weakly sequentially continuous, i.e. for any \(\{x_n\} \subset E,\) we have \(x_n \rightharpoonup x\) implies \({\mathcal {A}}x_n \rightharpoonup {\mathcal {A}}x;\)

-

(A4)

\(Sol(C,{\mathcal {A}}) \ne \emptyset ;\)

-

(A5)

In addition, the function \(f: E \rightarrow [-\infty ,+\infty ]\) is proper, uniformly Fréchet differentiable on bounded subsets of E, strongly convex with constant \(\varrho >0,\) strongly coercive and Legendre.

We now present our algorithm as follows.

Remark 3.3

-

(1)

The stepsize \(\rho _n\) in Algorithm 3.2 varies from step to step. This stepsize is updated at each iteration by a cheap computation. This stepsize rule allows Algorithm 3.2 to be implemented more easily where firstly the Lipschitz constant of \({\mathcal {A}}\) must not be the input parameter of the algorithm, i.e., this constant is not necessary to be known, and secondly the stepsize is found without any line-search which can be time-consuming.

-

(2)

The VIP is studied in a reflexive real Banach space which is more general than 2-uniformly convex real Banach space and real Hilbert space. This extends all the results in [9, 17, 33,34,35, 38] to mention but a few.

Lemma 3.4

Assume that \({\mathcal {A}}\) is Lipschitz continuous on E. Then the sequence generated by (3.3) is nonincreasing and

Proof

It is easy to prove this Lemma, hence we omit it. \(\square\)

If at some iteration we have \(x_n = y_n\) or \({\mathcal {A}}y_n = 0\) then Algorithm 3.2 terminates and \(y_n \in Sol(C,{\mathcal {A}}).\) From now on, we assume that \(x_n\ne y_n\) and \({\mathcal {A}}y_n \ne 0\) for all n so that the algorithm does not terminate finitely.

Lemma 3.5

Let \(\{x_n\},\) \(\{y_n\}\) and \(\{z_n\}\) be defined Algorithm 3.2, then for every \(x^*\in Sol(C,{\mathcal {A}})\) the following inequalities holds for all \(n\ge 1.\)

Proof

Let \(x^*\in Sol(C,{\mathcal {A}}),\) then

Note that from (2.11), we get

Hence from (3.5) and (3.6), we have

Also from (2.10), we have

Substituting (3.8) into (3.7), we obtain

Since \(y_n = Proj_C^f(\nabla f^*(\nabla f(x_n) - \rho _n{\mathcal {A}}x_n)),\) it follows from (2.4) that

Also, since \(x^*\in Sol(C,{\mathcal {A}})\) then \(\langle {\mathcal {A}}x^*, y_n - x^*\rangle \ge 0.\) From the pseudomonotononicity of \({\mathcal {A}},\) we obtain

Combining (3.10) and (3.11), we get

Hence from (3.9), we obtain

Now using (3.3) and Cauchy-Schwatz inequality, we have

Then from (2.3), we obtain

\(\square\)

Lemma 3.6

The sequence \(\{x_n\}\) generated by Algorithm 3.2, is bounded.

Proof

Note that since \(\delta \in (0,\varrho )\) and \(\varrho >0,\) we have

Then, there exists \(N>0\) such that

Thus, it from (3.14) that

Furthermore, from (3.4) and (3.15), we have

This implies that \(\{\phi _f(x^*,x_n)\}\) is bounded. Hence, \(\{x_n\}\) is bounded. Consequently, we see that \(\{\nabla f(x_n)\},\) \(\{y_n\}\) and \(\{z_n\}\) are bounded. \(\square\)

Lemma 3.7

The sequence \(\{x_n\}\) generated by Algorithm 3.2 satisfies the following estimates:

-

(i)

\({\mathcal {S}}_{n+1} \le (1-\alpha _n(1-\delta _n)){\mathcal {S}}_n + \alpha _n(1-\delta _n) d_n,\)

-

(ii)

\(-1 \le \limsup _{n\rightarrow \infty }d_n < +\infty ,\)

where \({\mathcal {S}}_n = \phi _f(x^*,x_n)\) and \(d_n = \langle v_n - x^*, \nabla f(u) - \nabla f(x^*)\rangle\) for any \(x^*\in Sol(C,{\mathcal {A}}).\)

Proof

Now, let \(v_n = \nabla f^*\left[ \alpha _n(1-\delta _n)\nabla f(u) + (1-\alpha _n(1-\delta _n))\nabla f(u_n)\right] ,\) \(n\ge 1\) where

This established (i).

Next we show (ii). Since \(\{v_n\}\) is bounded, then we have

This implies that \(\limsup _{n \rightarrow \infty }d_n < \infty\). Next, we show that \(\limsup _{n \rightarrow \infty }d_n \ge -1.\) Assume the contrary, i.e. \(\limsup _{n \rightarrow \infty }d_n < -1\). Then there exists \(n_0 \in {\mathbb {N}}\) such that \(d_n < -1\), for all \(n \ge n_0\). Then for all \(n \ge n_0\), we get from (i) that

Taking \(\limsup\) of the last inequality, we have

This contradicts the fact that \(\{{\mathcal {S}}_n\}\) is a non-negative real sequence. Therefore \(\limsup _{n \rightarrow \infty }d_n \ge -1\). \(\square\)

We now present our main convergence result.

Theorem 3.8

Assume Conditions \((A1) - (A5)\) and suppose \(\{\alpha _n\},\) and \(\{\delta _n\}\) are sequences in (0, 1) and satisfy the following conditions:

-

(i)

\(\lim _{n\rightarrow \infty }\alpha _n = 0,\) and \(\sum _{n=1}^{\infty }\alpha _n = \infty ;\)

-

(ii)

\(0<\liminf _{n\rightarrow \infty } \delta _n \le \limsup _{n\rightarrow \infty } \delta _n <1.\)

Then the sequence \(\{x_n\}\) generated by Algorithm 3.2 converges strongly to an element in \(Sol(C,{\mathcal {A}}).\)

Proof

Let \(x^* \in Sol(C,{\mathcal {A}})\) and \({\mathcal {S}}_n = \phi _f(x^*,x_n).\) we divide the proof into two cases.

Case 1: Suppose that there exists \(n_0 \in {\mathbb {N}}\) such that \(\{{\mathcal {S}}_n\}_{n=n_0}^\infty\) is non-increasing. Then \(\{{\mathcal {S}}_n\}_{n=1}^\infty\) converges and

Thus, from Lemma 3.5 we have.

Also, from (3.16), we get

From (3.19) and (3.20), we get

Passing limit as \(n\rightarrow \infty\) in (3.21), since \(\underset{n\rightarrow \infty }{\lim }\phi _f(x^*,x_n)\) exists and \(\frac{\rho _n}{\rho _{n+1}} \rightarrow 1,\) we have

Also, since \(\delta \in (0,\varrho ),\) then

Thus

Similarly from, (3.21), we obtain

Then

Combining (3.22) and (3.23), we have

Since \(\{x_n\}\) is bounded, there exists a subsequence \(\{x_{n_k}\}\) of \(\{x_n\}\) such that \(x_{n_k} \rightharpoonup z.\) We now show that \(z\in Sol(C,{\mathcal {A}}).\)

Since \(y_{n_k} = Proj_C^f(\nabla f^*(\nabla f(x_{n_k}) - \rho _{n_k}{\mathcal {A}}x_{n_k})),\) from (2.4), we have

Hence

This implies that

Since \(\Vert x_{n_k} - y_{n_k}\Vert \rightarrow 0\) and f is strongly coercive, then

Next, fix \(x\in C,\) it follows from (3.25) and (3.26) and the fact that \(\liminf _{k\rightarrow \infty }\rho _{n_k} >0,\) then

Let \(\{\epsilon _k\}\) be a sequence of decreasing non-negative numbers such that \(\epsilon _k \rightarrow 0\) as \(k\rightarrow \infty .\) For each \(\epsilon _k,\) we denote by \(N_k\) the smallest positive integer such that

where the existence of \(N_k\) follows from (3.27). Since \(\{\epsilon _k\}\) is decreasing, then \(\{N_k\}\) is increasing. Also, for some \(t_{N_k} \in C\) assume \(\langle {\mathcal {A}}x_{N_k},t_{N_k}\rangle = 1,\) for each k. Therefore,

Since \({\mathcal {A}}\) is pseudomonotone, then we have from (3.27) that

Since \(\{x_{n_k}\}\) converges weakly to z as \(k\rightarrow \infty\) and \({\mathcal {A}}\) is weakly sequentially continuous, we have that \(\{{\mathcal {A}}\}\) converges weakly to \({\mathcal {A}}z.\) Suppose \({\mathcal {A}}z \ne 0\) (otherwise, \(z\in Sol(C,{\mathcal {A}}\)). Then by the sequentially weakly lower semicontinuous of the norm, we get

Since \(\{x_{N_k}\} \subset \{x_{n_k}\}\) and \(\epsilon _k \rightarrow 0,\) we get

and this means \(\lim _{k\rightarrow \infty }\Vert \epsilon _kt_{N_k}\Vert = 0.\) Passing the limit \(k\rightarrow \infty\) in (3.28), we get

Therefore, from Lemma 2.5, we have \(z\in Sol(C,{\mathcal {A}}).\) Furthermore, from the definition of \(u_n\) and \(v_n,\) we get

and

Thus,

Hence, we obtain

Since \(x_{n_k}\rightharpoonup z\) and \(\lim _{n\rightarrow \infty }\Vert v_n - x_n\Vert =0\) we have that \(v_{n_k} \rightharpoonup z.\) Hence from (2.4), we obtain that

Hence

Using Lemma 2.6 and Lemma 3.7(i), we obtain

This implies that \(\Vert x_n - x^*\Vert \rightarrow 0\) as \(n\rightarrow \infty .\) Therefore \(\{x_n\}\) converges strongly to \(x^*.\)

Case 2. Assume that \(\{{\mathcal {S}}_n\}_{n=1}^\infty\) is not a monotonically decreasing sequences. Set \(\Gamma _n := {\mathcal {S}}_n, \ \forall n\ge 1\) and let \(\tau : {\mathbb {N}} \rightarrow {\mathbb {N}}\) be a mapping for all \(n\ge n_0\) (for some \(n_0\) large enough) by

Clearly, \(\tau\) is a nondecreasing sequence such that \(\tau (n) \rightarrow \infty\) as \(n\rightarrow \infty\) and

Following a similar argument as in Case 1 we immediately conclude that

Also from (3.29), we get that

From (3.17) we have that

which implies

and

Therefore, for \(n\ge n_0,\) it is easy to see that \(\Gamma _{\tau (n)} \le \Gamma _{\tau (n)+1}\) if \(n \ne \tau (n)\) (that is, \(\tau (n) < n\)), because \(\Gamma _j \ge \Gamma _{j+1}\) for \(\tau (n)+1 \le j \le n.\) As a consequence, we obtain for all \(n\ge n_0\)

Hence, \(\lim _{n\rightarrow \infty } \Gamma _{n} = 0.\) This implies that \(\lim _{n\rightarrow \infty } \phi _f(x^*, x_n) = 0,\) thus \(\Vert x_n - x^*\Vert \rightarrow 0\) as \(n \rightarrow \infty .\) Therefore, \(\{x_n\}\) converges strongly to \(x^*.\) This completes the proof. \(\square\)

Remark 3.9

If the operator is monotone and Lipschitz continuous, then we do not need it to be sequentially weakly continuous. This is because the sequential weakly continuity assumption was only used after (3.25). From the definition of \(y_n,\) we have

Thus from the monotonicity of \({\mathcal {A}}\),

Since \(\nabla f\) is weakly - weakly continuous, passing to the limit in the last inequality as \(k\rightarrow \infty\) and using relation (3.23), we obtain \(\langle {\mathcal {A}} x, x - z\rangle \ge 0 \ \ \forall z\in C.\) Thus, from Lemma 2.5 we get that \(z\in Sol(C,{\mathcal {A}}).\) Hence the conclusion of Theorem 3.8 still hold.

4 Application

4.1 Application to computing dynamic user equilibria

In this section, we apply Algorithm 3.2 to compute dynamic user equilibria (see [16]). Let \({\mathcal {P}}\) be set of paths in the network. \({\mathbb {W}}\) be set of O-D pairs in the network, \(Q_{ij}\) be fixed O-D demand between \((i,j)\in {\mathbb {W}},\) \({\mathcal {P}}_{ij}\) be subset of paths that connect O-D (i, j), t be continuous time parameter in a fixed time horizon \([t_0,t_1],\) \(h_p(t)\) be depature rate along path p at time t, h(t) be complete vector of departure rates \(h(t) = (h_p(t) : p \in {\mathcal {P}}),\) \(\Psi _p(t,h)\) be travel cost along path p with departure time t, under departure profile h, \(v_{ij}(h)\) be minimum travel cost between O-D pair (i, j) for all paths and departure times.

Assume that \(h_p(\cdot ) \in L^2_{+}[t_0,t_1]\) and \(h(\cdot ) \in (L^2_{+}[t_0,t_1])^{|{\mathcal {P}}|}.\) Define the effective delay operator \(\Psi : (L^2_{+}[t_0,t_1])^{|{\mathcal {P}}|} \rightarrow (L^2_{+}[t_0,t_1])^{|{\mathcal {P}}|}\) as follows:

The travel demand satisfaction constraint satisfies

Then, the set of feasible path departures vector can be expressed as

Recall that a vector of departures \(h^*\in \Lambda\) is a dynamic user equilibrium with simultaneous route and departure time choice if

Note that (4.1) is equivalent to the following variational inequality ([16]):

Based on Algorithm 3.2, we have the following algorithm.

If the delay operator \(\Psi\) is Lipschitz continuous and pseudomonotone, then we can apply Algorithm 4.1 to compute dynamic user equilibria.

5 Numerical examples

In this section, we consider some examples to illustrate the convergence of the proposed algorithm and compare it with other algorithms. We also compare the convergence of Algorithm 3.2 for various examples of the Bregman distance.

Example 5.1

Let \(E= \ell _2({\mathbb {R}})\) where \(\ell _2({\mathbb {R}}) = \{x=(x_1,x_2,x_3,...,), \ x_i \in {\mathbb {R}}: \sum _{i=1}^{\infty }\}\) with norm \(\Vert x\Vert _{\ell _2} = \left( \sum _{i=1}^{\infty }|x_i|^2\right) ^{\frac{1}{2}}\) and inner product \(\langle x,y\rangle = \sum _{i=1}^{\infty }x_iy_i,\) for all \(x =(x_1,x_2,x_3,...),\) \(y=(y_1,y_2,y_3,...) \in E.\) Let \(C = \{x= (x_1,x_2,x_3,...) \in \ell _2 : \Vert x\Vert \le 1\}\) and \({\mathcal {A}} : \ell _2 \rightarrow \ell _2\) be defined by

It was shown in Example 2.1 of [4] that \({\mathcal {A}}\) is pseudomonotone, Lipschitz continuous and sequentially weakly continuous but not monotone in \(\ell _2({\mathbb {R}}).\)

We now list some known Bregman distances. These distances are listed in the following forms:

-

(i)

The function \(f^{IS}(x) = -\sum _{i=1}^{m}\log x_i\) and the Itakura-Saito distance

$$\begin{aligned} \phi _f^{IS}(x,y) = \sum _{i=1}^{m}\left( \frac{x_i}{y_i} - \log \left( \frac{x_i}{y_i}\right) - 1\right) . \end{aligned}$$ -

(ii)

The function \(f^{KL}(x) = \sum _{i=1}^{m}x_i\log x_i\) and the Kullback-Leibler distance

$$\begin{aligned} \phi _f^{KL}(x,y) = \sum _{i=1}^{m}\left( x_i\log \left( \frac{x_i}{y_i}\right) + y_i - x_i\right) . \end{aligned}$$ -

(iii)

The function \(f^{SE}(x) = \frac{1}{2}\Vert x\Vert ^2\) and the squared Euclidean distance

$$\begin{aligned} \phi _f^{SE}(x,y) = \frac{1}{2}\Vert x -y\Vert ^2. \end{aligned}$$

The values \(\nabla f(x)\) and \(\nabla f^*(x) = (\nabla f)^{-1}(x)\) are computed explicitly. More precisely,

-

(i)

\(\nabla f^{IS}(x) = -(1/x_1,...,1/x_m)^T\) and \((\nabla f^{IS})^{-1}(x) = -(1/x_1,...,1/x_m)^T.\)

-

(ii)

\(\nabla f^{KL}(x) = (1+\log (x_1),...,1+\log (x_m))^T\) and

$$\begin{aligned} (\nabla f^{KL})^{-1}(x) = (\exp (x_1-1),...,\exp (x_m - 1))^T. \end{aligned}$$ -

(iii)

\(\nabla f^{SE}(x) = x\) and \((\nabla f^{SE})^{-1}(x) = x.\)

The feasibility set of our problem VIP is of the form,

where \(a<1/\sqrt{m}\) (which ensures that \(C\ne \emptyset\)). For the experiment in Algorithm 3.2, we choose \(\alpha _n = \frac{1}{n+1},\) \(\delta _n = \frac{2n}{5n+4},\) \(u=\mu =0.5\) and \(\rho =3.5.\)

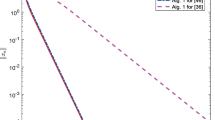

Let \(E_n = \Vert x_{n+1} - x_n\Vert ^2 < 10^{-4},\) we consider this example for various types of Bregman distance with \(m=10,20,50,80.\) The results of this experiment are reported in Fig. 1.

Example 5.1. Top left: \(m=10,\) Top right: \(m=20\), Bottom left: \(m=50,\) Bottom right: \(m=80\)

Example 5.2

The following example was first considered in [20],

where

Since P is symmetric and positive definite, g is pseudoconvex on X. We minimize g on \(K=\{x \in {\mathbb {R}}^4: 1 \le x_i \le 3 \} \subset X.\)

It is easy to see that

The following choices of parameters are made: \(\alpha _{n}=\frac{1}{n+1},\) \(\delta _n=\frac{2n}{5n+7},\) \(\rho =3.5\) and \(u =\mu =0.5.\)

We terminate the iterations at \(E_n=\Vert x_{n+1}-x_n\Vert ^2 \le \epsilon\) with \(\epsilon = 10^{-4}.\) The results are presented in Fig. 2 for various initial values of \(x_1.\)

- Case 1::

-

\(x_1=(-10, -10, -10, -10)';\)

- Case 2::

-

\(x_1=(1, 2, 3, 4)';\)

- Case 3::

-

\(x_1=(4, 4, 4, 4)';\)

- Case 4:

-

\(x_1=(5, 0, 0, 10)'.\)

We compare the performance of our Algorithm 3.2 with Algorithm 1.2. For algorithm 1.2, we let \(l=0.001\) and \(\gamma =0.002.\)

References

Alber, Y.I. 1996. Metric and generalized projection operators in Banach spaces: properties and applications. In Theory and applications of nonlinear operator of accretive and monotone type, ed. A.G. Kartsatos, 15–50. New York: Marcel Dekker.

Alber, Y., and I. Ryazantseva. 2006. Nonlinear ill-posed problems of monotone type. Dordrecht: Springer.

Beck, A. 2017. First -order methods in optimization. Philadephia (PA): Society for Industrial and Applied Mathematics.

Bot, R.I., E.R. Csetnek, and P.T. Voung. 2020. The forward-backward-forward method from continuous and discrete perspective for pseudomonotone variational inequality inequalities in Hilbert spaces. European Journal of Operational Research 287: 49–60.

Ceng, L.C., N. Hadjisavas, and N.C. Weng. 2010. Strong convergence theorems by a hybrid extragradient-like approximation method for variational inequalities and fixed point problems. Journal of Global Optimization 46: 635–646.

Ceng, C.L., M. Teboulle, and J.C. Yao. 2010. Weak convergence of an iterative method for pseudomonotone variational inequalities and fixed-point problems. Journal of Optimization Theory and Applications 146: 19–31.

Ceng, C.L., A. Petrusel, and J.C. Yao. 2018. Hybrid viscosity extragradient method for systems of variational inequalities, fixed points of nonexpansive mappings, zero points of accretive operators in Banach spaces. Fixed Point Theory 19: 487–502.

Ceng, C.L., A. Petrusel, and J.C. Yao. 2019. Systems of variational inequalities with hierarchical variational inequality constraints for Lipschitzian pseudocontractions. Fixed Point Theory 20: 113–133.

Ceng, C.L., A. Petrusel, X. Qin, and J.C. Yao. 2021. Pseudomonotone variational inequalities and fixed points. Fixed Point Theory 22 (2): 543–558.

Censor, Y., A. Gibali, and S. Reich. 2012. Extensions of Korpelevich’s extragradient method for variational inequality problems in Euclidean space. Optimization 61: 1119–1132.

Censor, Y., A. Gibali, and S. Reich. 2012. The subgradient extragradient method for solving variational inequalities in Hilbert spaces. Journal of Optimization Theory and Applications 148: 318–335.

Censor, Y., A. Gibali, and S. Reich. 2011. Strong convergence of subgradient extragradient methods for the variational inequality problem in Hilbert space. Optimization Methods and Software 26: 827–845.

Censor, Y., and A. Lent. 1981. An iterative row-action method for interval convex programming. Journal of Optimization Theory and Applications 34: 321–353.

Denisov, S.V., V.V. Semenov, and L.M. Chabak. 2015. Convergence of the modified extragradient method for variational inequalities with non-Lipschitz operators. Cybernetics and Systems Analysis 51: 757–765.

Facchinei, F., and J.S. Pang. 2003. Finite-dimensional variational inequalities and complementarity problems. Berlin: Springer.

Friesz, T.L., and K. Han. 2019. The mathematical foundations of dynamic user equilibrium. Transportation Research Part B: Methodological 126: 309–328.

Gibali, A., and Y. Shehu. 2019. An efficient iterative method for finding common fixed point and variational inequalities in Hilbert spaces. Optimization 68 (1): 13–32.

Goldstein, A.A. 1964. Convex programming in Hilbert spaces. Bulletin of the American Mathematical Society 70: 709–710.

Hartman, P., and G. Stampacchia. 1966. On some non-linear elliptic differential-functional equations. Acta Mathematica 115: 271–310.

Hu, X., and J. Wang. 2006. Solving pseudo-monotone variational inequalities and pseudo-convex optimization problems using the projection neural network. IEEE Transactions on Neural Networks and Learning Systems 17: 1487–1499.

Jolaoso, L.O., A. Taiwo, and O.T. Alakoye. 2019. A unified algorithm for solving variational inequality and fixed point problems with application to the split equality problem. Computational and Applied Mathematics 39: 38.

Jolaoso, L.O., and M. Aphane. 2020. Weak and strong convergence Bregman extragradient scheme for solving pseudo-monotone and non-Lipschitz variational inequalities. Journal of Inequalities and Applications 2020: 195.

Jolaoso, L.O., and Y. Shehu. 2021. Single Bregman projection method for solving variational inequalities in reflexive Banach spaces. Applicable Analysis. https://doi.org/10.1080/0036811.2020.1869947.

Kanzow, C., and Y. Shehu. 2018. Strong convergence of a double projection-type method for monotone variational inequalities in Hilbert spaces. Journal of Fixed Point Theory and Applications. https://doi.org/10.1007/s11784-018-0531-8.

Kinderlehrer, D., and G. Stampacchia. 1980. An introduction to variational inequalities and their applications. New York: Academic Press.

Konnov, I.V. 2007. Equilibrium models and variational inequalities. Amsterdam: Elsevier.

Korpelevich, G.M. 1976. The extragradient method for finding saddle points and other problems. Ekon Mat Metody 12: 747–756.

Lin, L.J., M.F. Yang, and Q.H. Ansari. 2005. Existence results for stampacchia and Minty type implicit variational inequality in multivalued maps. Nonlinear Analysis: Theory, Methods and Applications 61: 1–19.

Mashreghi, J., and M. Nasri. 2010. Forcing strong convergence of Korpelevich’s method in Banach spaces with its application in game theory. Nonlinear Analysis 72: 2086–2099.

Reem, D., S. Reich, and A. De Pierro. 2019. Re-examination of Bregman functions and new properties of their divergences. Optimization 68: 279–348.

Reich, S., D.V. Thong, P. Cholamjiak, and L.V. Long. 2021. Inertial projection-type methods for solving pseudomonotone variational inequality problems in Hilbert space. Numerical Algorithms 88: 813–835.

Reich, S., and S. Sabach. 2009. A strong convergence theorem for proximal-type algorithm in reflexive Banach space. Journal of Nonlinear and Convex Analysis 10: 471–485.

Shehu, Y., Q.L. Dong, and D. Jiang. 2019. Single projection method for pseudomonotone variational inequality in Hilbert space. Optimization 68: 385–409.

Shehu, Y., O.S. Iyiola, and S. Reich. 2021. A modified inertial subgradient extragradient method for solving variational inequalities. Optimization and Engineering. https://doi.org/10.1007/s11081-020-09593-w.

Shehu, Y., X.-H. Li, and Q.-L. Dong. 2020. An efficient projection-type method for monotone variational inequalities in Hilbert spaces. Numerical Algorithms 84: 365–388.

Solodov, M.V., and B.F. Svaiter. 1999. A new projection method for variational inequality problems. SIAM Journal on Control and Optimization 37: 765–776.

Tseng, P.A. 2009. Modified forward-backward splitting method for maximal monotone mappings. SIAM Journal on Control and Optimization 38: 431–446.

Wairojjana, N., N. Pakkaranang, and N. Nuttapol. 2021. Pholasa, Strong convergence inertial projection algorithm with self-adaptive step size rule for pseudomonotone variational inequalities in Hilbert spaces. Demonstration Mathematica 54 (1): 110–128.

Xu, H.K. 1991. Inequalities in Banach spaces with applications. Nonlinear Analysis 16 (2): 1127–1138.

Yao, Y., M. Postolache, and J.C. Yao. 2019. Iterative algorithms for the generalized variational inequalities. UPB Scientific Bulletin Series A 81: 3–16.

Yao, Y., M. Postolache, and J.C. Yao. 2019. Iterative algorithms for pseudomonotone variational inequalities and fixed point problems of pseudocontrative operators. Mathematics 7: 1189.

Yao, Y., M. Postolache, and J.C. Yao. 2020. Strong convergence of an extragradient algorithm for variational inequality and fixed point problems. UPB Scientific Bulletin Series A 82 (3): 3–12.

Zhao, X., J.C. Yao, and Y. Yao. 2020. A proximal algorithm for solving split monotone inclusion. University Politehnica of Bucharest Series A 80 (3): 43–52.

Acknowledgements

The third author acknowledges with thanks the bursary and financial support from Department of Science and Innovation and National Research Foundation, Republic of South Africa Center of Excellence in Mathematical and Statistical Sciences (DSI-NRF COE-MaSS) Doctoral Bursary. Opinions expressed and conclusions arrived are those of the authors and are not necessarily to be attributed to the CoE-MaSS.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Samy Ponnusamy.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Okeke, C.C., Bello, A.U. & Oyewole, O.K. A strong convergence algorithm for solving pseudomonotone variational inequalities with a single projection. J Anal 30, 965–987 (2022). https://doi.org/10.1007/s41478-022-00384-3

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s41478-022-00384-3