Abstract

In this paper, we propose a new extragradient method consisting of the hybrid steepest descent method, a single projection method and an Armijo line searching the technique for approximating a solution of variational inequality problem and finding the fixed point of demicontractive mapping in a real Hilbert space. The essence of this algorithm is that a single projection is required in each iteration and the step size for the next iterate is determined in such a way that there is no need for a prior estimate of the Lipschitz constant of the underlying operator. We state and prove a strong convergence theorem for approximating common solutions of variational inequality and fixed points problem under some mild conditions on the control sequences. By casting the problem into an equivalent problem in a suitable product space, we are able to present a simultaneous algorithm for solving the split equality problem without prior knowledge of the operator norm. Finally, we give some numerical examples to show the efficiency of our algorithm over some other algorithms in the literature.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Throughout this paper, let C be a nonempty closed convex subset of a real Hilbert space H with norm \(||\cdot ||\) and inner product \(\langle \cdot , \cdot \rangle .\) The variational inequality problem (VIP) is defined as:

where \(A: C \rightarrow H\) is a nonlinear operator. We denote the set of solutions of VIP (1.1) by VI(C, A). The VIP is an important tool in economics, decision making, engineering mechanics, mathematical programming, transportation, operation research, etc. (see, for example Aubin 1998; Glowinski et al. 1981; Khobotov 1987; Kinderlehrer and Stampachia 2000; Marcotte 1991).

It is well known that \(x^\dagger \) solves the VIP (1.1) if and only if \(x^\dagger \) solves the fixed point equation

or equivalently, \(x^\dagger \) solves the residual equation

for an arbitrary positive constant \(\lambda \); see Glowinski et al. (1981) for details. Obviously, (1.3) is obtained from (1.2).

Several iterative methods have been introduced for solving the VIP and its related optimization problems; see (Jolaoso et al. 2019; Taiwo et al. 2019a, b, c). One of the earliest methods for solving VIP is the extragradient method introduced by Korpelevich (1976). The extragradient method was stated as follows:

where \(\lambda \in \left( 0, \frac{1}{L}\right) \), \(A:C \rightarrow {\mathbb {R}}^n\) is monotone and Lipschitz continuous with Lipschitz constant L. This extragradient method has further been extended to infinite-dimensional spaces by many authors; see for example (Apostol et al. 2012; Ceng et al. 2010; Censor et al. 2012; Denisov et al. 2015).

As an improvement of the extragradient algorithm, (1.4), Censor et al. (2011b) introduced the following subgradient extragradient algorithm for solving the VIP in a real Hilbert space H:

In (1.5), the second projection \(P_C\) of the extragradient algorithm (1.4) was replaced with a projection onto a half-space \(D_k\) which is easier to evaluate. Under some mild assumptions, Censor et al. (2011b) obtained a weak convergence result for solving VIP using (1.5).

The second problem which we involve in this paper is finding the fixed point of an operator \(T:H \rightarrow H.\) A point \(x \in H\) is called a fixed point of T if \(x = Tx.\) The set of fixed points of T is denoted by F(T). Motivated by the result of Yamada et al. (2001), Tian (2010) considered the following general viscosity type iterative method for approximating the fixed points of a nonexpansive mapping:

where \(f: H \rightarrow H\) is a \(\rho \)-Lipschitz mapping with \(\rho >0\) and \(B:H \rightarrow H\) is a \(\kappa \)-Lipschitz and \(\eta \)-strongly monotone mapping with \(\kappa > 0\) and \(\eta >0.\) Under some certain conditions, Tian (2010) proved that the sequence \(\{x_n\}\) generated by (1.6) converges strongly to a fixed point of T which also solves the variational inequality

In Nadezhkina and Takahashi (2006), Nadezhkina and Takahashi proposed the following algorithm for finding a common solution of VIP (1.1) and F(T), where T is nonexpansive and A is monotone and L-Lipschitz continuous:

where \(\{\lambda _k\}\subset [a,b]\) for some \(a,b \in \left( 0, \frac{1}{L}\right) \) and \(\{\alpha _k\} \subset (0,1)\). The sequence \(\{x_k\}\) generated by (1.7) converges weakly to a solution \(x \in \Gamma := VI(C,A)\bigcap F(T).\)

Also, Censor et al. (2011b) studied the approximation of common solution of the VIP and fixed point problem for a nonexpansive mapping T in a real Hilbert space. They proposed the following subgradient extragradient algorithm and proved its weak convergence to a solution \(u^* \in F(T)\cap VI(C,A)\):

To obtain strong convergence, Censor et al. (2011a) combined the subgradient extragradient method and the hybrid method to obtain the following effective scheme for solving the VIP (1.1) and finding the fixed point of a nonexpansive mapping T.

As an improvement on (1.9), Maingé (2008) proposed the following hybrid extragradient viscosity method which does not involve computing the projection onto the intersection \(C_k \cap Q_k\):

where \(\lambda _k >0\), \(\alpha _k >0\) and \(w \in [0,1]\) are suitable parameters, \(T: H \rightarrow H\) is \(\beta \)-demicontractive mapping, \(A: C \rightarrow H\) is a monotone and L-Lipschitz continuous mapping and \(B:H \rightarrow H\) is \(\eta \)-strongly monotone and \(\kappa \)-Lipschitz continuous mapping. Maingé (2008) proved that the sequence \(\{x_k\}\) generated by (1.10) converges strongly to the unique solution \(x^* \in VI(C,A)\cap F(T).\)

Recently, Hieu et al. (2018) modified algorithm (1.10) and proposed the following two-step extragradient viscosity method for solving similar problem in a Hilbert space:

where \(\rho _k >0\), \(0 \le \lambda _k \le \rho _k,\) \(\beta _k \in [0,1],\) A, T and B are as defined for (1.10). We observe that, although algorithm (1.11) does not contain (1.4), the algorithm (1.11) requires more computation of projections onto the feasible set. This can be costly if the feasible set has a complex structure which may affect the usage of the algorithm.

Motivated by the above results, in this paper, we present a unified algorithm which consists of the combination of hybrid steepest descent method (also called general viscosity method Tian 2010) and a projection method with an Armijo line searching rule for finding a common solution of VIP (1.1) and fixed point of \(\beta \)-demicontractive mapping in a Hilbert space. Our contributions in this paper can be highlighted as follows:

Our proposed algorithm requires only one projection onto the feasible set and no other projection along each iteration process. This is in contrast to the above-mentioned methods and many other recent results (such as Dong et al. 2016; Kanzow and Shehu 2018; Thong and Hieu 2018a, b; Vuong 2018) which require more than one projection onto the feasible set in each iteration process.

The underlying operator A of the VIP considered in our result is pseudo-monotone. This extends the above results where the operator is assumed to be monotone. Note that every monotone operator is pseudo-monotone, but the converse is not always true (as seen in Example 2.2).

In our result, the step size \(\lambda _k\) is determined via an Armijo line search rule. This is very important because it helps us to avoid finding a prior estimate of the Lipschitz constant L of the operator A used in the above-mentioned results. In practice, it is very difficult to approximate this Lipschitz constant.

The strong convergence guaranteed by our algorithm makes it a good candidate method for approximating a common solution of VIP (1.1) and fixed point problem.

Finally, we present an application of our result for solving the split equality problem in Hilbert spaces.

2 Preliminaries

In this section, we present some basic notions and results that are needed in the sequel. We denote the strong and weak convergence of a sequence \(\{x_n\} \subseteq H\) to a point \(p \in H\) by \(x_n \rightarrow p\) and \(x_n \rightharpoonup p,\) respectively.

Definition 2.1

A mapping \(A:C \rightarrow H\) is called

- (a)

\(\eta \)-strongly monotone on C if there exists a constant \(\eta >0\) such that \(\langle Ax -Ay, x-y \rangle \ge \eta ||x-y||^2,\) for all \(x,y \in C;\)

- (b)

\(\alpha \)-inverse strongly monotone on C if there exists a constant \(\alpha >0\) such that \(\langle Ax -Ay, x - y\rangle \ge \alpha ||Ax - Ay||^2\) for all \(x,y \in C;\)

- (c)

monotone on C if \(\langle Ax - Ay, x- y\rangle \ge 0\) for all \(x,y \in C;\)

- (d)

pseudo-monotone on C if for all \(x,y \in C\), \(\langle Ax, y -x \rangle \ge 0 \Rightarrow \langle Ay, y -x \rangle \ge 0;\)

- (e)

L-Lipschitz continuous on C if there exists a constant \(L >0\) such that \(||Ax- Ay|| \le L||x-y||\) for all \(x,y \in C.\)

If A is \(\eta \)-strongly monotone and L-Lipschitz continuous, then, A is \(\frac{\eta }{L^2}\)-inverse strongly monotone. Also, we note that every monotone operator is pseudo-monotone, but the converse is not true (see the Example 2.2 below).

Example 2.2

Khanh and Vuong (2014) Let \(E = \ell _2\), the real Hilbert space whose elements are the square summable sequences of real scalars, i.e.,

The inner product and norm on E are given by

where \(x = (x_1, x_2,\dots ,x_k,\ldots ),\) and \(y = (y_1, y_2, \ldots , y_k, \ldots ).\)

Let \(\alpha ,\beta \in {\mathbb {R}}\) such that \(\beta> \alpha> \frac{\beta }{2}>0\) and

It is easy to verify that \(VI(C,A) = \{0\}\). Now, let \(x,y \in C\) such that \(\langle Ax, y-x\rangle \ge 0\), i.e.,

Since \(\beta> \alpha> \frac{\beta }{2} > 0,\) the last inequality implies that \(\langle x, y-x\rangle \ge 0.\) Hence,

This means that A is pseudo-monotone on C. To show that A is not monotone on C, let us consider \(x = \Big (\dfrac{\beta }{2}, 0,\ldots ,0,\ldots \Big ),~~ y= (\alpha ,0,\ldots ,0, \ldots ) \in C.\) Then, we have

Definition 2.3

A mapping \(P_C:H \rightarrow C\) is called a metric projection if for any point \(w \in H,\) there exists a unique point \(P_Cw \in C\) such that

We know that \(P_C\) is a nonexpansive mapping and satisfies the following characterization.

- (i)

\(\langle x-y, P_C x - P_C y \rangle \ge ||P_C x-P_C y||^2,\) for every \(x,y \in H;\)

- (ii)

for \(x \in H\) and \(z\in C\), \(z = P_C x \Leftrightarrow \)

$$\begin{aligned} \langle x-z, z-y \rangle \ge 0, ~~\forall y \in C; \end{aligned}$$(2.1) - (iii)

for \(x \in H\) and \(y \in C\),

$$\begin{aligned} ||y-P_C(x)||^2 + ||x- P_C(x)||^2 \le ||x-y||^2. \end{aligned}$$(2.2)

The normal cone of a nonempty closed convex subset C of H at a point \(x \in C\), denoted by \(N_C(x),\) is defined as

Next, we recall some basic concepts of nonexpansive mapping and its generalization.

Definition 2.4

Let \(T:C \rightarrow C\) be a nonlinear operator. Then T is called (see for example, Maingé 2008)

- (i)

nonexpansive if

$$\begin{aligned} ||Tx - Ty|| \le ||x-y||,\quad \forall ~ x,y \in C; \end{aligned}$$ - (ii)

quasi-nonexpansive mapping if \(F(T) \ne \emptyset \) and

$$\begin{aligned} ||Tx - p|| \le ||x -p||,\quad \forall ~x \in C, p \in F(T); \end{aligned}$$ - (iii)

k-strictly pseudocontractive if there exists a constant \(k \in [0,1)\) such that

$$\begin{aligned} ||Tx - Ty||^2 \le ||x-y||^2 + k||(I-T)x - (I-T)y||^2 \quad \forall ~x,y \in C; \end{aligned}$$ - (iv)

\(\beta \)-demicontractive mapping if there exists \(\beta \in [0,1)\) such that

$$\begin{aligned} ||Tx - p||^2 \le ||x - p||^2 +\beta ||x- Tx||^2, \quad \forall ~x \in C, p \in F(T). \end{aligned}$$(2.3)

The following results will be used in the sequel.

Lemma 2.5

(Marino and Xu 2007; Zegeye and Shahzad 2011) In a real Hilbert space H, the following inequalities hold:

- (i)

\(||x-y||^2 = ||x||^2 - 2\langle x, y \rangle + ||y||^2 , ~~~\forall x,y \in H;\)

- (ii)

\(||x+y||^2 \le ||x||^2 + 2\langle y, x+y \rangle , ~~\forall x,y \in H;\)

- (iii)

\(||\alpha x + (1-\alpha )y ||^2 = \alpha ||x||^2 + (1-\alpha )||y||^2 - \alpha (1-\alpha )||x-y||^2, ~~\forall x,y \in H\) and \(\alpha \in [0,1].\)

It is well known that the demicontractive mappings have the following property.

Lemma 2.6

(Maingé 2008, Remark 4.2, pp 1506) Let T be a \(\beta \)-demicontractive self-mapping on H with \(F(T) \ne \emptyset \) and set \(T_w :=(1-w)I+wT\) for \(w \in (0,1].\) Then

- (i)

\(T_w\) is a quasi-nonexpansive mapping if \(w \in [0, 1 - \beta ]\);

- (ii)

F(T) is closed and convex.

Definition 2.7

(See Lin et al. 2005; Mashreghi and Nasri 2010) The Minty Variational Inequality Problem (MVIP) is defined as finding a point \({\bar{x}} \in C\) such that

We denote by M(C, A) the set of solution of (1.1). Some existence results for the MVIP have been presented in Lin et al. (2005). Also, the assumption that \(M(C,A) \ne \emptyset \) has already been used for solving VI(C, A) in finite dimensional spaces (see e.g., Solodov and Svaiter 1999). It is not difficult to prove that pseudo-monotonicity implies property \(M(C,A) \ne \emptyset \), but the converse is not true. Indeed, let \(A:{\mathbb {R}} \rightarrow {\mathbb {R}}\) be defined by \(A(x) = \cos (x)\) with \(C = [0, \frac{\pi }{2}]\). We have that \(VI(C,A) = \{0, \frac{\pi }{2}\}\) and \(M(C,A) = \{0\}\). But if we take \(x = 0\) and \(y = \frac{\pi }{2}\) in Definition 2.1(d), we see that A is not pseudo-monotone.

Lemma 2.8

(See Mashreghi and Nasri 2010) Consider the VIP (1.1). If the mapping \(h:[0,1] \rightarrow E^{*}\) defined as \(h(t) = A(tx + (1-t)y)\) is continuous for all \( x,y \in C\) (i.e., h is hemicontinuous), then \(M(C,A) \subset VI(C,A)\). Moreover, if A is pseudo-monotone, then VI(C, A) is closed, convex and \(VI(C,A) = M(C,A).\)

The following lemma was proved in \({\mathbb {R}}^n\) in Fang and Chen (2015) and can easily be extended to a real Hilbert space.

Lemma 2.9

Let H be a real Hilbert space and C be a nonempty closed and convex subset of H. For any \(x \in H\) and \(\lambda >0,\) we denote

then

Lemma 2.10

(Lemma 2.2 of Witthayarat et al. 2012) Let B be a k-Lipschitzian and \(\eta \)-strongly monotone operator on a Hilbert space H with \(k>0,\) \(\eta >0,\) \(0<\mu <\frac{2\eta }{k^2}\) and \(0<\alpha <1.\) Then \(S:=(I-\alpha \mu B):H \rightarrow H\) is a contraction with a contractive coefficient \(1-\alpha \tau \) and \(\tau = \frac{1}{2}\mu (2\eta - \mu k^2).\)

Lemma 2.11

(Xu 2002) Let \(\{a_n\}\) be a sequence of nonnegative real numbers satisfying the following relation:

where

- (i)

\(\{\alpha _n\}\subset [0,1],\) \(\sum \nolimits _{n=1}^{\infty }\alpha _n = \infty ,\)

- (ii)

\(\limsup \nolimits _{n\rightarrow \infty }\sigma _n \le 0,\)

- (iii)

\( \gamma _n \ge 0,\) (\(n \ge 1)\) and \(\sum \nolimits _{n=1}^\infty \gamma _n < \infty .\)

Then, \(a_n \rightarrow 0\) as \(n \rightarrow \infty \).

Lemma 2.12

(Maingé 2008) Let \(\{a_n\}\) be a sequence of real numbers such that there exists a subsequence \(\{n_i\}\) of \(\{n\}\) with \(a_{n_i} < a_{n_i+1}\) for all \(i \in {\mathbb {N}}\). Consider the integer \(\{m_k\}\) defined by

Then \(\{m_k\}\) is a nondecreasing sequence verifying \(\lim _{n \rightarrow \infty }m_n = \infty ,\) and for all \(k \in {\mathbb {N}},\) the following estimates hold:

3 Main results

In this section, we give a precise statement of our algorithm and discuss its strong convergence.

Let C be a nonempty closed and convex subset of a real Hilbert space H. Let \(A:H \rightarrow H\) be a pseudo-monotone and L-Lipschitz continuous operator and \(T:H \rightarrow H\) be a \(\beta \)-demicontractive mapping with constant \(\beta \in [0,1)\) and demiclosed at zero. Suppose Sol \(:= VI(C,A)\bigcap F(T) \ne \emptyset ,\) let \(B:H \rightarrow H\) be a k-Lipschitzian and \(\eta \)-strongly monotone mapping with \(k>0\) and \(\eta >0\) and \(f:H \rightarrow H\) be a \(\rho \)-Lipschitz mapping with \(\rho >0\). Let \(0< \mu < \frac{2\eta }{k^2}\) and \(0< \xi \rho < \tau ,\) where \(\tau = \frac{1}{2}\mu (2\eta - \mu k^2).\) Let \(\{\alpha _k\}\) and \(\{v_k\}\) be sequences in (0, 1) and \(\{x_k\}\) be generated by the following algorithm:

To establish the convergence of Algorithm 3.1, we make the following assumption:

- (C1)

\(\lim \nolimits _{k\rightarrow \infty }\alpha _k = 0\) and \(\sum \limits _{k=0}^{\infty } \alpha _k = \infty ;\)

- (C2)

\(\liminf \nolimits _{k \rightarrow \infty }\lambda _{k}>0;\)

- (C3)

\(\liminf \nolimits _{k \rightarrow \infty }(v_{k}-\beta )v_{k}>0\).

Remark 3.2

Observe that if \(x_k = y_k\) and \(x_k - Tx_k = 0\), then we are at a common solution of the variational inequality (1.1) and fixed point of the demicontractive mapping T. In our convergence analysis, we will implicitly assume that this does not occur after finitely many iterations so that our Algorithm 3.1 generates infinite sequences. We will see in the following result that the Algorithm 3.1 is well defined. To do this, it suffices to show that the Armijo line searching rule defined by (3.2) is well defined and \(\delta _k \ne 0.\)

Lemma 3.3

There exists a nonnegative integer \(l_k\) satisfying (3.2). In addition,

Proof

Let \(r_{\lambda _k}(x_k) = x_k - P_C(x_k - \lambda _k Ax_k)\) and suppose \(r_{\gamma ^{k_0}}(x_k) = 0\) for some \(k_0 \ge 1.\) Take \(l_k = k_0\) which satisfies (3.2). Suppose \(r_{\gamma ^{k_1}}(x_k) \ne 0\) for some \(k_1 \ge 1\) and assume the contrary, that is,

Then it follows from Lemma 2.9 and the fact that \(\gamma \in (0,1)\) that

Since \(P_C\) is continuous, we have that

We now consider two cases, namely when \(x_k \in C\) and \(x_k \notin C.\)

- (i)

If \(x_k \in C,\) then \(x_k = P_Cx_k\). Now since \(r_{\gamma ^{k_1}}(x_k) \ne 0\) and \(\gamma ^{k_1} \le 1\), it follows from Lemma 2.9 that

$$\begin{aligned} 0< & {} ||r_{\gamma ^{k_1}}(x_k)|| \le \max \{1, \gamma ^{k_1}\}||r_1(x_k)|| \\= & {} ||r_1(x_k)||. \end{aligned}$$Letting \(l \rightarrow \infty \) in (3.7), we have that

$$\begin{aligned} 0 = ||Ax_k - Ax_k|| \ge \theta ||r_1(x_k)|| > 0. \end{aligned}$$This is a contradiction and so (3.2) is valid.

- (ii)

\(x_k \notin C,\) then

$$\begin{aligned} \gamma ^{l}||Ax_k - Ay_k|| \rightarrow 0,\quad l \rightarrow \infty , \end{aligned}$$(3.9)while

$$\begin{aligned} \lim _{l\rightarrow \infty }\theta ||r_{\gamma ^{l}}(x_k)|| = \lim _{l\rightarrow \infty }\theta ||x_k - P_C(x_k - \gamma ^{l}Ax_k)|| = \theta ||x_k - P_Cx_k|| > 0. \end{aligned}$$This is a contradiction. Therefore, the Armijo line searching rule in (3.2) is well defined.

On the other hand, since A is Lipschitz continuous, then, we have from (3.2) and (3.3):

Also,

Therefore from (3.5), (3.10) and (3.11), we get

\(\square \)

Now, we prove that the sequences \(\{x_k\},\{y_k\}\) and \(\{w_k\}\) generated by Algorithm 3.1 are bounded.

Lemma 3.4

The sequence \(\{x_k\}\) generated by Algorithm 3.1 is bounded. In addition, the following inequality is satisfied:

where \(x^* \in Sol\).

Proof

Let \(x^* \in \) Sol, then by Lemma 2.5 (i), we obtain

Observe that

Since \(y_k = P_C(x_k - \lambda _k Ax_k)\) and \(x^* \in \) Sol, then by the variational characterization of \(P_C\), we have

and from the pseudo-monotonicity of A, we have

Hence, combining (3.15) and (3.16), with the fact that \(\lambda _k > 0\), we get

Thus from (3.17) and (3.14) , we get

Therefore, (3.13) yields

From the definition of \(\delta _k\) and \(w_k\), we have

Substituting (3.20) into (3.19), we have

Hence,

Now, let \(T_v = vT + (1 - v)I\), then by Lemma 2.6, \(T_v\) is quasi-nonexpansive. Using Lemma 2.10, we have

This implies that \(\{||x_k - x^*||\}\) is bounded and so \(\{x_k\}\) is bounded in H. Consequently, from (3.21), \(\{w_k\}\) is bounded and since A is continuous, then \(\{Ax_k\}\) is bounded and therefore \(\{y_k\}\) is bounded too. \(\square \)

Lemma 3.5

The sequence \(\{x_n\}\) generated by Algorithm 3.1 satisfies the following estimates:

- (i)

\(s_{k+1} \le (1-a_k)s_k + a_kb_k ,\)

- (ii)

\(-1 \le \limsup \nolimits _{k \rightarrow \infty }b_k < + \infty \),

where \(s_k = ||x_k -x^*||^2, \quad a_k = \frac{2\alpha _k(\tau - \xi \rho )}{1 - \alpha _k \xi \rho },\) \(b_k = \frac{\alpha _k\tau ^2 M_1}{2(\tau - \xi \rho )} + \frac{1}{\tau - \xi \rho }\langle \xi f(x^*) - \mu B(x^*), x_{k+1} - x^* \rangle ,\) for some \(M_1 >0,\) \(x^* \in Sol.\)

Proof

Let \(x^* \in \mathrm{Sol},\) then from Lemma 2.5FPar52.5 (ii) and (3.6), we have

This implies that

where the exisence of \(M_1\) follows from the boundedness of \(\{x_k\}\). This established (i).

Next, we prove (ii). Since \(\{x_k\}\) is bounded and \(\alpha _k \in (0,1)\), we have that

We next show that \(\limsup _{k \rightarrow \infty }b_k \ge -1.\) Assume the contrary that \(\limsup _{k \rightarrow \infty }b_k < -1\), which implies that there exists \(k_0 \in {\mathbb {N}}\) such that \(b_k \le -1\) for all \(k \ge k_0\). Hence, it follows from (i) that

By induction, we get that

Taking \(\limsup \) of both sides in the last inequality, we have that

This contradicts the fact that \(\{s_k\}\) is a nonnegative real sequence. Therefore, \(\limsup _{k \rightarrow \infty }b_k \ge -1.\) \(\square \)

Lemma 3.6

Let \(\{x_{k_j}\}\) be a subsequence of the sequence \(\{x_k\}\) generated by Algorithm 3.1 such that \(x_{k_j} \rightharpoonup p \in C.\) Suppose \(||x_k - y_k|| \rightarrow 0\) as \(k \rightarrow \infty \) and \(\liminf _{j\rightarrow \infty }\lambda _{k_j} >0.\) Then,

- (i)

\(0 \le \liminf _{j\rightarrow \infty }\langle Ax_{k_j}, x - x_{k_j} \rangle \), for all \(x \in C;\)

- (ii)

\(p \in VI(C,A).\)

Proof

-

(i)

Since \(y_{k_j} = P_C(x_{k_j} - \lambda _{k_j} Ax_{k_j}),\) from the variational characterization of \(P_C\) (i.e., (2.1)), we have

$$\begin{aligned} \langle x_{k_j} - \lambda _{k_j} Ax_{k_j} - y_{k_j}, x- y_{k_j} \rangle \le 0, \quad ~ \forall ~x \in C. \end{aligned}$$Hence,

$$\begin{aligned} \langle x_{k_j} - y_{k_j},x- y_{k_j} \rangle\le & {} \lambda _{k_j} \langle Ax_{k_j}, x - y_{k_k} \rangle \\= & {} \lambda _{k_j} \langle Ax_{k_j}, x_{k_j} - y_{k_j} \rangle + \lambda _{k_j} \langle Ax_{k_j}, x - x_{k_k} \rangle . \end{aligned}$$This implies that

$$\begin{aligned} \langle x_{k_j} - y_{k_j},x- y_{k_j} \rangle +\lambda _{k_j} \langle Ax_{k_j}, y_{k_j} - x_{k_j} \rangle \le \lambda _{k_j} \langle Ax_{k_j}, x - x_{k_k} \rangle . \end{aligned}$$(3.23)Fix \(x \in C\) and let \(j \rightarrow \infty \) in (3.23), since \(||x_{k_j} - y_{k_j}|| \rightarrow 0\) and by condition (C2), \(\liminf _{j\rightarrow \infty }\lambda _{k_j} >0\), we have

$$\begin{aligned} 0 \le \liminf _{j\rightarrow \infty }\langle Ax_{k_j}, x - x_{k_j} \rangle , \quad \forall ~x \in C. \end{aligned}$$(3.24) -

(ii)

Let \(\{\epsilon _j\}\) be a sequence of decreasing non-negative numbers such that \(\epsilon _j \rightarrow 0\) as \(j \rightarrow \infty .\) For each \(\epsilon _j,\) we denote by N the smallest positive integer such that

$$\begin{aligned} \langle Ax_{k_j}, x - x_{k_j} \rangle + \epsilon _j \ge 0, \quad \forall ~ j \ge N, \end{aligned}$$where the existence of N follows from (i). This implies that

$$\begin{aligned} \langle Ax_{k_j}, x + \epsilon _j t_{k_j} - x_{k_j} \rangle \ge 0, \quad \forall j \ge N, \end{aligned}$$for some \(t_{k_j} \in H\) satisfying \(1 = \langle Ax_{k_j}, t_{k_j} \rangle \) (since \(Ax_{k_j} \ne 0\)). Since A is pseudo-monotone, then we have from (i) that

$$\begin{aligned} \langle A(x+\epsilon _j t_{k_j}), x + \epsilon _j t_{k_j} - x_{k_j} \rangle \ge 0, \quad \forall j \ge N, \end{aligned}$$which implies that

$$\begin{aligned} \langle Ax, x - x_{k_j} \rangle \ge \langle Ax - A(x + \epsilon _jt_{k_j}), x + \epsilon _jt_{k_j} - x_{k_j} \rangle - \epsilon _j\langle Ax, t_{k_j} \rangle \quad \forall ~j \ge N. \end{aligned}$$(3.25)Since \(\epsilon _j \rightarrow 0\) and A is continuous, the right hand side of (3.25) tends to zero. Thus, we obtain that

$$\begin{aligned} \liminf _{j\rightarrow \infty }\langle Ax, x - x_{k_j} \rangle \ge 0, \quad \forall ~x \in C. \end{aligned}$$Hence,

$$\begin{aligned} \langle Ax, x - p \rangle = \lim _{j\rightarrow \infty }\langle Ax, x - x_{k_j} \rangle \ge 0, \quad \forall ~x \in C. \end{aligned}$$Therefore from Lemma 2.8FPar82.8, we obtain that \(p \in VI(C,A).\)

\(\square \)

We are now in a position to prove the convergence of our Algorithm.

Theorem 3.7

Let C be a nonempty closed and convex subset of a real Hilbert space H. Let \(A:H \rightarrow H\) be a pseudo-monotone and L-Lipschitz continuous operator and \(T:H \rightarrow H\) be a \(\beta \)-demicontractive mapping with constant \(\beta \in [0,1)\) and demiclosed at zero. Suppose \(Sol:= VI(C,A)\bigcap F(T),\) let \(B:H \rightarrow H\) be a k-Lipschitz and \(\eta \)-strongly monotone mapping with \(k>0\) and \(\eta >0\) and \(f:H \rightarrow H\) be a \(\rho \)-Lipschitz mapping with \(\rho >0\). Let \(0< \mu < \frac{2\eta }{k^2}\) and \(0< \xi \rho < \tau ,\) where \(\tau = \frac{1}{2}\mu (2\eta - \mu k^2).\) Let \(\{\alpha _k\}\) and \(\{v_k\}\) be sequences in (0, 1), \(\{x_k\}\) such that Assumptions (C1)–(C3) are satisfied. Then, sequence \(\{x_k\}\) generated by Algorithm 3.1 converges strongly to a point \(x^\dagger \), where \(x^\dagger = P_{Sol}(I - \mu B + \xi f)(x^\dagger )\) is a unique solution of the variational inequality

Proof

Let \(x^* \in \) Sol and put \(\Gamma _k : = ||x_k -x^*||^2.\) We divide the proof into two cases.

Case I: Suppose that there exists \(k_0 \in {\mathbb {N}}\) such that \(\{\Gamma _k\}\) is monotonically non-increasing for \(k\ge k_0.\) Since \(\{\Gamma _k\}\) is bounded (from Lemma 3.4), then \(\{\Gamma _k\}\) converges and therefore

Let \(z_k = (1-v_k)w_k + v_k Tw_k,\) then using Lemma 2.5 (iii), we have

Then, from Lemma ( 2.5) (ii) and (3.12), we have

Hence,

for some \(M>0\). Since \(\alpha _k \rightarrow 0\) and from (3.27), we have

Therefore,

From 3.20)3.20, we have

Using (3.10), we have

From (3.30) and (3.32), we have

Therefore,

Also from (3.29), we have

for some \(M>0\). Since \(\alpha _k \rightarrow 0\) and from (3.27), we have

Therefore from condition (C3), we have

Furthermore, from (3.34),

and

Therefore from (3.30), (3.36) and (3.37), we have

Since \(\{x_k\}\) is bounded, there exists \(\{x_{k_l}\}\) of \(\{x_k\}\) such that \(x_{k_l} \rightharpoonup p\) as \(l \rightarrow \infty .\) From (3.35) and the demiclosedness of \(I-T\) at zero, we have that \(p \in F(T).\) Also, since \(||x_k - y_k|| \rightarrow 0,\) we have from Lemma that \(p \in VI(C,A)\). Therefore, \(p \in \mathrm{Sol}:= VI(C,A) \cap F(T).\)

Next we show that \(\limsup _{k \rightarrow \infty }\langle (\mu B - \xi f)x^*, x^* - x_k \rangle \le 0,\) where \(x^* = P_\mathrm{Sol}(I -\mu B + \xi f)x^*\) is the unique solution of the variational inequality

We obtain from (2.1) and (3.38) that

Finally, we show that \(\{x_k\}\) converges strongly to \(x^*\). By Lemma 3.5 (i) we obtain

where \(a_k = \frac{2\alpha _k(\tau - \xi \rho )}{1 - \alpha _k \xi \rho },\) \(b_k = \frac{\alpha _k\tau ^2 M_1}{2(\tau - \xi \rho )} + \frac{1}{\tau - \xi \rho }\langle \xi f(x^*) - \mu B(x^*), x_{k+1} - x^* \rangle ,\) for some \(M_1 >0.\) It is easy to see that \(a_k \rightarrow 0\) and \(\sum _{k=1}^\infty a_k = \infty .\) Also by (3.39), \(\limsup _{k \rightarrow \infty }b_k \le 0.\) Therefore, using Lemma 2.11 in (3.40), we obtain

and hence \(\{x_k\}\) converges strongly to \(x^*\) as \(k \rightarrow \infty .\)

Case II: Assume that \(\{\Gamma _{k}\}\) is not monotonically decreasing. Let \(\tau : {\mathbb {N}} \rightarrow {\mathbb {N}}\) be a mapping for all \(k \ge k_0\) (for some \(k_0\) large enough) defined by

Clearly, \(\tau \) is a non-decreasing sequence, \(\tau (k) \rightarrow 0\) as \(k \rightarrow \infty \) and

Following similar process as in Case I, we have

and

Since \(\{x_{\tau (k)}\}\) is bounded, there exists a subsequence of \(\{x_{\tau (k)}\}\) still denoted by \(\{x_{\tau (k)}\}\) which converges weakly to \(z \in C.\) By similar argument as in Case I, we conclude that \(z \in \mathrm{Sol}:= VI(C,A)\cap F(T).\) From Lemma 3.5 (i), we have

Also, \(a_{\tau (k)} \rightarrow 0\) as \(k \rightarrow \infty \) and \(\limsup _{k \rightarrow \infty }b_{\tau (k)} \le 0\).

Since \(\Gamma _{\tau (k)} \le \Gamma _{\tau (k)+1}\) and \(a_{\tau (k)} >0\), we have

This implies that

and thus

Also from (3.42), we obtain

Therefore,

Furthermore, for \(k \ge k_0\), it is easy to see that \(\Gamma _{\tau (k)} \le \Gamma _{\tau (k)+1}\) if \( k \ge \tau (k)\) (that is \(\tau (k) < k\)), because \(\Gamma _j \ge \Gamma _{j+1}\) for \(\tau (k)+1 \le j \le k\). As a consequence, we obtain that for all \(k \ge k_0,\)

Hence, \(\Gamma _k \rightarrow 0\) as \(k \rightarrow \infty \). That is, \(\{x_k\}\) converges strongly to \(x^*\). This completes the proof. \(\square \)

4 Application to split equality problem

Let \(H_1,H_2\) and \(H_3\) be real Hilbert spaces, let \(C \subset H_1\) and \(Q \subset H_2\) be nonempty closed convex sets, let \(A:H_1 \rightarrow H_3\) and \(B:H_2 \rightarrow H_3\) be bounded linear operators. The Split Equality Problem (shortly, SEP) is to find (see Moudafi 2013, 2014)

The SEP allows asymmetric and partial relations between the variables x and y. If \(H_2 =H_3\) and \(B = I\) (the identity mapping), then the SEP reduces to the Split Feasibility Problem (SFP) which was introduced by Censor and Elfving (1994) and defined as

The SEP (4.1) covers many situations, such as for instance in domain decomposition for PDE’s, game theory and intensity-modulated radiation therapy (IMRT) (Attouch et al. 2008; Censor et al. 2006; Moudafi 2014). A great numbers of articles have been published on iterative methods (most of which are projection methods) for solving the SEP (4.1) in literature; see, for instance (Jolaoso et al. 2018; Ogbuisi and Mewomo 2016, 2018; Okeke and Mewomo 2017).

In this section, we adapt our Algorithm 3.1 to solve the SEP (4.1). Before that, let us first prove some lemmas which will be of help.

Lemma 4.1

(Dong and Jiang 2018) Let \(S = C \times Q \subset H:= H_1 \times H_2.\) Define \(K:= [A, -B]: H_1 \times H_2 \rightarrow H_1 \times H_2\) and let \(K^*\) be the adjoint operator of K, then the SEP (4.1) can be modified as

where \(w = \left[ \begin{array}{l} x \\ y \end{array} \right] \) is the vector associated with z.

Lemma 4.2

Let \(H = H_1 \times H_2\), define \(M: H \rightarrow H\) by \(M(w) = M(u,v) := (\phi _1(u),\phi _2(v)),\) \(w=(u,v) \in H\), where \(\phi _i:H \rightarrow H\) are \(k_i\)-Lipschitz and \(\eta _i\)-strongly monotone mapping with \(k_i >0\) and \(\eta _i >0,\) \(i =1,2.\) Then, M is k-Lipschitz and \(\eta \)-strongly monotone where \(k = \max \{k_1,k_2\}\) and \(\eta = \min \{\eta _1,\eta _2\}.\)

Proof

Let \(x = (x_1,y_1),\) \(y = (x_2,y_2) \in H,\) then we have

Hence, M is \(\eta \)-strongly monotone , where \(\eta = \min \{\eta _1,\eta _2\}.\) Also,

Hence M is k-Lipschitz with \(k = \max \{k_1,k_2\}.\) \(\square \)

In a similar process as in Lemma 4.2, we can prove the following results.

Lemma 4.3

Let \(H:= H_1 \times H_2\), let \(f:H \rightarrow H\) be defined by \(f(u,v) = (f_1(u), f_2(v)),\) \(w = (u,v) \in H\), \(f_i:H_i \rightarrow H_i\) is \(\rho _i\)-Lipschitz mapping with \(\rho _i >0\), \(i =1,2.\) Then f is \(\rho \)-Lipschitz mapping with \(\rho = \sqrt{\max \{\rho _1,\rho _2\}}.\)

Lemma 4.4

Let \(H:= H_1 \times H_2\), let \(T:H \rightarrow H\) be defined by \(T(u,v) = (T_1(u), T_2(v)),\) \(w = (u,v) \in H\), \(T_i:H_i \rightarrow H_i\) is \(\beta _i\)-demicontractive mapping with \(\beta _i \in [0,1)\), \(i =1,2.\) Then T is \(\beta \)-demicontractive mapping with \(\beta = {\max \{\beta _1,\beta _2\}}.\)

We now adapt our algorithm to solve the SEP.

Let H, S, and K be as defined in Lemma 4.1. Let T be as defined in Lemma 4.4 such that

Let M and f be as defined in Lemmas 4.2 and 4.3, respectively, such that \( 0< \mu < \frac{2\eta }{k^2}\) and \(0< \xi \rho < \tau \), where \(\tau = \frac{1}{2}\mu (2\eta - \mu k^2).\) Let \(\{\alpha _k\}\) and \(\{v_k\}\) be sequences in (0, 1) and \(\{z_k\} = \{(x_k,y_k)\}\) be generated by the following Algorithm.

Remark 4.6

Let \(z = (x,y)\), we know that

Also, since

then

Define the function \(F: H_1 \times H_2 \rightarrow H_1\) by

and \(G: H_1 \times H_2 \rightarrow H_2\) by

Now, by setting \(z_k = (x_k,y_k),\) \(y_k = (u_k,v_k)\) and \(w_k = (s_k,t_k)\) in Algorithm 4.5, Algorithm 4.5 can be rewritten in the following simultaneous form:

We now prove the convergence of Algorithm 4.7 using Algorithm 3.1.

Observe that

But,

and

Hence,

Therefore from (4.15) and (4.16), we have

From the definition of \(\delta _k\) and (4.12), we have

Hence from (4.17) and (4.18), we get

Following similar approach as in (3.22), we get

Hence \(\{||x_{k+1} -x^*||+||y_{k+1} -y^*||\}\) is bounded and, consequently, \(\{||x_{k}-x^*||\},\) \(\{||y_{k}- y^*||\}\) are bounded. Thus, \(\{x_k\}\) and \(\{y_k\}\) are bounded.

Lemma 4.8

Suppose \(\Gamma := \{(x,y)\in C\times Q:Ax = By\}\ne \emptyset .\) Let \(\lambda _n\) be a sequence in \((0, \frac{2}{||A||^2+||B||^2}),\) such that (4.11) holds and suppose \(\liminf _{n\rightarrow \infty }\lambda _n(2 - \lambda _n(||A||^2+||B||^2))>0,\) \(||x_k - u_k||\rightarrow 0, ||y_k -v_k|| \rightarrow 0\) as \(k \rightarrow \infty \). Then, there exist \(({\bar{x}},{\bar{y}})\in \Omega \) such that \(x_{k_j} \rightharpoonup {\bar{x}}\) and \(y_{k_j} \rightharpoonup {\bar{y}},\) where \(\{x_{k_j}\}\) and \(\{y_{k_j}\}\) are subsequences of \(\{x_k\}\) and \(\{y_k\}\) generated by Algorithm 4.7.

Proof

Let \((x^*,y^*) \in \Omega ,\) then from (4.10), we have

Similarly, we have

Adding (4.21 and (4.22) while noting that \(Ax^* = By^*\), we have

Also, note that

Then from (4.23) and (4.24), we have

Without loss of generality, we may assume that \(x_{k_j} \rightharpoonup {\bar{x}}\) and \(y_{k_j} \rightharpoonup {\bar{y}}\) for some \({\bar{x}} \in H_1\) and \({\bar{y}} \in H_2\). Since \(\{x_k\}\) is a sequence in C, we know that \({\bar{x}} \in C\). Similarly, \({\bar{y}} \in Q.\) Since \(x_{k_j} \rightharpoonup {\bar{x}}\) and \(y_{k_j} \rightharpoonup {\bar{y}},\) it follows that \(Ax_{k_j} \rightharpoonup A{\bar{x}}\) and \(By_{k_j} \rightharpoonup B{\bar{y}}.\) Hence, \(Ax_{k_j} - By_{k_j} \rightharpoonup A{\bar{x}} - B{\bar{y}}.\) By the lower semicontinuity of the squared norm, we have

Hence, \(A{\bar{x}} = B{\bar{y}}.\) Therefore, \(({\bar{x}},{\bar{y}}) \in \Omega .\) \(\square \)

Now using Lemma 4.8 and following the line of argument in Theorem 3.7, we can prove the following result.

Theorem 4.9

Let H, S, and K be as defined in Lemma 4.1. Let T be as defined in Lemma 4.4 such that \(\Gamma := \{(x,y)\in F(T_1)\times F(T_2):Ax = By\}\ne \emptyset .\) Let M and f be as defined in Lemmas 4.2 and 4.3, respectively, such that \( 0< \mu < \frac{2\mu }{k^2}\) and \(0< \xi \rho < \tau \), where \(\tau = \frac{1}{2}\mu (2\eta - \mu k^2).\) Let \(\{\alpha _k\}\) and \(\{v_k\}\) be sequences in (0, 1) satisfying condition (C1) and (C3) and let \(\lambda _n\) be a sequence in \((0, \frac{2}{||A||^2+||B||^2}),\) such that (4.11) holds and \(\liminf _{n\rightarrow \infty }\lambda _n(2 - \lambda _n(||A||^2+||B||^2))>0.\) Then the sequence \(\{(x_k,y_k)\}\) generated by Algorithm 4.7 converges strongly to a solution \((u,v) \in \Gamma .\)

5 Numerical examples

In this section, we present three numerical examples which demonstrate the performance of our Algorithm 3.1. Let \(T: H \rightarrow H\) be defined by

It easy to see that T is demicontractive mapping with \(\beta = \frac{77}{121},\) and \(F(T) = \{0\}\). We let \(f =I,\) \(B = \frac{1}{2}I,\) then \(\rho =1\) and \(\eta = 1 = k.\) Hence \(0< \mu < \frac{2\eta }{k^2} = 2.\) Let us choose \(\mu = 1\) so that \(\tau = \frac{1}{2}\mu (2\eta - \mu k^2) = 1.\) As \( 0< \xi \rho < \tau ,\) we have \(\xi \in (0,2).\) Without loss of generality, we choose \(\xi = 1.\)

In each example, we fix the stopping criterion as \({||x_{k+1}-x_{k}||}=\epsilon <10^{-5}\), \(\sigma = 0.7\), \(\gamma = 0.54\), \(\lambda _k = 0.15\) and let \(\alpha _k = \frac{1}{k+1}\) and \(v_k = \frac{2k+3}{4k + 12}\). The projection onto the feasible set C is carried out by using the MATLAB solver ‘ fmincon ’ and the projection onto an hyperplane \(Q = \{x \in H: \langle a,x\rangle = 0\}\) is defined by

Example 5.1

First, we consider the Hp-Hard problem. Let \(A: {\mathbb {R}}^m \rightarrow {\mathbb {R}}^m\) define by \(Ax = Mx+q\) where

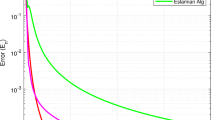

N is an \(m\times m\) matrix, S is an \(m \times m\) skew-symmetric matrix, D is an \(m \times m\) diagonal matrix, whose diagonal entries are nonnegative so that M is positive definite and q is a vector in \({\mathbb {R}}^m\). The feasible set \(C\subset {\mathbb {R}}^m\) is the closed and convex polyhedron which is defined as \(C = \{x = (x_1,x_2,\dots , x_m) \in {\mathbb {R}}^m: Qx \le b\}\), where Q is a \(l \times m\) matrix and b is a nonnegative vector. It is clear that A is monotone (hence, pseudo-monotone) and L-Lipschitz continuous with \(L = ||M||\). For experimental purpose, all the entries of N, S, D and b are generated randomly as well as the starting point \(x_1 \in [0,1]^m\) and q is equal to the zero vector. In this case, the solution to the corresponding variational inequality is \(\{0\}\) and also, \(\mathrm{Sol} : = VI(C,A) \cap F(T) =\{0\}\). We take \(m = 10, 50, 100\) and compare the output of Algorithm 3.1 with Algorithm (1.11) and Algorithm (1.8). The numerical results are reported in Table 1 and Fig. 1.

Example 5.2

Let \(H = L^2([0,2\pi ])\) with norm \(||x|| = (\int _{0}^{2\pi }|x(t)|^2\mathrm{d}t)^{\frac{1}{2}}\) and inner product \(\langle x,y \rangle = \int _{0}^{2\pi }x(t)y(t)\mathrm{d}t,\) \(x,y \in H.\) The operator \(A:H \rightarrow H\) is defined by \(Ax(t) = \frac{1}{2}\max \{0,x(t)\},\) \(t \in [0,2\pi ]\) for all \(x \in H\). It can easily be verified that A is Lipschitz continuous and monotone. The feasible set \(C = \{x \in H: \int _{0}^{2\pi }(t^2+1)x(t)\mathrm{d}t \le 1\}\). Observe that \(\mathrm{Sol} = \{0\}.\) We choose the following starting points and compare the result of Algorithm 3.1 with Algorithms (1.11) and (1.9).

The numerical results are shown in Table 2 and Fig. 2.

Example 5.3

Finally, we consider the Kojima–Shindo nonlinear complementarity problem (NCP) which was considered in Malitsky (2015), where \(n = 4\) and the mapping A is defined by

The feasible set \(C = \{x \in {\mathbb {R}}_{+}^4: x_1 + x_2 + x_3 +x_4 = 4\}\). We choose the following starting points and test our Algorithm 3.1 with Algorithm (1.11).

The results are summarized in Table 3 and Fig. 3.

Remark 5.4

In conclusion, one can see from the above examples that

there is no significant difference in terms of number of iterations between Algorithms 3.1 and (1.11), for Example 5.1. However, Algorithm 3.1 performs better than Algorithm (1.11) in terms of time of execution. This can be due to the greater number of projections in Algorithm 1.11 .

Algorithm 3.1 converges faster than Algorithms (1.8) and (1.9) in terms of number of iteration and cpu time taken for execution.

In addition, when the feasible set is complex, Algorithm 3.1 is more preferable than Algorithm (1.9) or (1.11).

References

Apostol RY, Grynenko AA, Semenov VV (2012) Iterative algorithms for monotone bilevel variational inequalities. J Comput Appl Math 107:3–14

Attouch H, Bolte J, Redont P, Soubeyran A (2008) Alternating proximal algorithms for weakly coupled minimization problems. Applications to dynamical games and PDEs. J Convex Anal 15:485–506

Aubin JP (1998) Optima and equilibria. Springer, New York

Ceng LC, Hadjisavas N, Weng NC (2010) Strong convergence theorems by a hybrid extragradient-like approximation method for variational inequalities and fixed point problems. J Glob Optim 46:635–646

Censor Y, Elfving T (1994) A multiprojection algorithm using Bregman projection in a product space. Numer Algorithms 8(2–4):221–239

Censor Y, Bortfeld T, Martin B, Trofimov A (2006) A unified approach for inversion problems in intensity-modulated radiation therapy. Phys Med Biol 51:2353–2365

Censor Y, Gibali A, Reich S (2011) Strong convergence of subgradient extragradient methods for the variational inequality problem in Hilbert space. Optim Methods Softw 26:827–845

Censor Y, Gibali A, Reich S (2011) The subgradient extragradient method for solving variational inequalities in Hilbert spaces. J Optim Theory Appl 148:318–335

Censor Y, Gibali A, Reich S (2012) Extensions of Korpelevich’s extragradient method for variational inequality problems in Euclidean space. Optimization 61:119–1132

Denisov S, Semenov V, Chabak L (2015) Convergence of the modified extragradient method for variational inequalities with non-Lipschitz operators. Cybern Syst Anal 51:757–765

Dong Q-L, Jiang D (2018) Simultaneous and semi-alternating projection algorithms for solving split equality problems. J Inequal Appl 2018:4. https://doi.org/10.1186/s13660-017-1595-5

Dong Q-L, Lu YY, Yang J (2016) The extragradient algorithm with inertial effects for solving the variational inequality. Optimization 65(12):2217–2226

Fang C, Chen S (2015) Some extragradient algorithms for variational inequalities. In: Advances in variational and hemivariational inequalities, Advances in Applied Mathematics and Mechanics, vol 33. Springer, Cham, pp 145–171

Glowinski R, Lions JL, Trémoliéres R (1981) Numerical analysis of variational inequalities. North-Holland, Amsterdam

Hieu DV, Son DX, Anh PK, Muu LD (2018) A two-step extragradient-viscosity method for variational inequalities and fixed point problems. Acta Math Vietnam. https://doi.org/10.1007/s40306-018-0290-z

Jolaoso LO, Oyewole KO, Okeke CC, Mewomo OT (2018) A unified algorithm for solving split generalized mixed equilibrium problem and fixed point of nonspreading mapping in Hilbert space. Demonstr Math 51:211–232

Jolaoso LO, Alakoya T, Taiwo A, Mewomo OT (2019) A parallel combination extragradient method with Armijo line searching for finding common solution of finite families of equilibrium and fixed point problems. Rend Circ Mat Palermo II. https://doi.org/10.1007/s12215-019-00431-2

Kanzow C, Shehu Y (2018) Strong convergence of a double projection-type method for monotone variational inequalities in Hilbert spaces. J Fixed Point Theory Appl. https://doi.org/10.1007/s11784-018-0531-8

Khanh PD, Vuong PT (2014) Modified projection method for strongly pseudo-monotone variational inequalities. J Glob Optim 58:341–350

Khobotov (1987) Modification of the extragradient method for solving variational inequalities and cerain optimization problems. USSR Comput Math Math Phys 27:120–127

Kinderlehrer D, Stampachia G (2000) An introduction to variational inequalities and their applications. Society for Industrial and Applied Mathematics, Philadelphia

Korpelevich GM (1976) An extragradient method for finding saddle points and for other problems. Ekon Mat Metody 12:747–756

Lin LJ, Yang MF, Ansari QH, Kassay G (2005) Existence results for Stampacchia and Minty type implicit variational inequalities with multivalued maps. Nonlinear Anal Theory Methods Appl 61:1–19

Maingé PE (2008) A hybrid extragradient viscosity method for monotone operators and fixed point problems. SIAM J Control Optim 47(3):1499–1515

Maingé PE (2008) Strong convergence of projected subgradient methods for nonsmooth and nonstrictly convex minimization. Set-Valued Anal 16:899–912

Malitsky YuV (2015) Projected reflected gradient methods for variational inequalities. SIAM J Optim 25(1):502–520

Marcotte P (1991) Applications of Khobotov’s algorithm to variational and network equilibrium problems. INFOR Inf Syst Oper Res 29:255–270

Marino G, Xu HK (2007) Weak and strong convergence theorems for strict pseudo-contraction in Hilbert spaces. J Math Anal Appl 329:336–346

Mashreghi J, Nasri M (2010) Forcing strong convergence of Korpelevich’s method in Banach spaces with its applications in game theory. Nonlinear Anal 72:2086–2099

Moudafi A (2013) A relaxed alternating CQ-algorithm for convex feasibility problems. Nonlinear Anal 79:117–121

Moudafi A (2014) Alternating CQ-algorithms for convex feasibility and split fixed-point problems. J Nonlinear Convex Anal 15:809–818

Nadezhkina N, Takahashi W (2006) Weak convergence theorem by an extragradient method for nonexpansive mappings and monotone mappings. J Optim Theory Appl 128:191–201

Ogbuisi FU, Mewomo OT (2016) On split generalized mixed equilibrium problems and fixed point problems with no prior knowledge of operator norm. J Fixed Point Theory Appl 19(3):2109–2128

Ogbuisi FU, Mewomo OT (2018) Convergence analysis of common solution of certain nonlinear problems. Fixed Point Theory 19(1):335–358

Okeke CC, Mewomo OT (2017) On split equilibrim problem, variational inequality problem and fixed point problem for multi-valued mappings. Ann Acad Rom Sci Ser Math Appl 9(2):255–280

Solodov MV, Svaiter BF (1999) A new projection method for variational inequality problems. SIAM J Control Optim 37:765–776

Taiwo A, Jolaoso LO, Mewomo OT (2019a) A modified Halpern algorithm for approximating a common solution of split equality convex minimization problem and fixed point problem in uniformly convex Banach spaces. Comput Appl Math 38(2):77

Taiwo A, Jolaoso LO, Mewomo OT (2019b) Parallel hybrid algorithm for solving pseudomonotone equilibrium and split common fixed point problems. Bull Malays Math Sci Soc. https://doi.org/10.1007/s40840-019-00781-1

Taiwo A, Jolaoso LO, Mewomo OT (2019c) General alternative regularization method for solving split equality common fixed point problem for quasi-pseudocontractive mappings in Hilbert spaces. Ric Mat. https://doi.org/10.1007/s11587-019-00460-0

Thong DV, Hieu DV (2018) Modified subgradient extragradient method for variational inequality problems. Numer Algorithms 79:597–601

Thong DV, Hieu DV (2018) Modified subgradient extragdradient algorithms for variational inequalities problems and fixed point algorithms. Optimization 67(1):83–102

Tian M (2010) A general iterative algorithm for nonexpansive mappings in Hilbert spaces. Nonlinear Anal 73:689–694

Vuong PT (2018) On the weak convergence of the extragradient method for solving pseudo-monotone variational inequalities. J Optim Theory Appl 176:399–409

Witthayarat U, Kim JK, Kumam P (2012) A viscosity hybrid steepest-descent method for a system of equilibrium and fixed point problems for an infinite family of strictly pseudo-contractive mappings. J Inequal Appl 2012:224

Xu HK (2002) Iterative algorithms for nonlinear operators. J Lond Math Soc 66:240–256

Yamada I, Butnariu D, Censor Y, Reich S (2001) The hybrid steepest descent method for the variational inequality problems over the intersection of fixed points sets of nonexpansive mappings. Inherently parallel algorithms in feasibility and optimization and their application. North-Holland, Amsterdam

Zegeye H, Shahzad N (2011) Convergence theorems of Mann’s type iteration method for generalized asymptotically nonexpansive mappings. Comput Math Appl 62:4007–4014

Acknowledgements

The authors thank the referees of this paper whose valuable comments and suggestions have improved the presentation of the paper. The first author acknowledges with thanks the bursary and financial support from the Department of Science and Technology and National Research Foundation, Republic of South Africa Center of Excellence in Mathematical and Statistical Sciences (DST-NRF COE-MaSS) Doctoral Bursary (Grant no. BA-2019-067). The second author acknowledges with thanks the International Mathematical Union Breakout Graduate Fellowship (IMU-BGF) (Grant no. IMU-BGF-2019-10) Award for his doctoral study. The fourth author is supported by the National Research Foundation (NRF) of South Africa Incentive Funding for Rated Researchers (Grant Number 119903). Opinions expressed and conclusions arrived are those of the authors and are not necessarily to be attributed to the NRF and CoE-MaSS.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Pablo Pedregal.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Jolaoso, L.O., Taiwo, A., Alakoya, T.O. et al. A unified algorithm for solving variational inequality and fixed point problems with application to the split equality problem. Comp. Appl. Math. 39, 38 (2020). https://doi.org/10.1007/s40314-019-1014-2

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s40314-019-1014-2

Keywords

- Variational inequality

- Extragradient method

- Split equality problem

- Hyrbid-steepest descent

- Armijo line search