Abstract

Over the past several decades, empirical and theoretical work has focused on the question of whether it is possible to purposefully improve cognitive functioning through behavioral interventions. Accordingly, a field is emerging around cognitive training, be it through executive function training, video game play, music training, aerobic exercise, or mindfulness meditation. One concern that has been raised regarding the results of this field centers on the potential impact of participants’ expectations. It has been suggested that participants may, at least in some cases, show improvements in performance because they expect to improve, rather than because of any mechanism inherent in the behavioral interventions per se. The present paper discusses the latest views on expectations and the new methodological challenges they raise when evaluating the effectiveness of behavioral interventions on human behavior, and on cognition in particular.



There is currently a great deal of interest in the possibility that human cognitive function can be purposefully improved via dedicated long-term behavioral training (Strobach and Karbach 2016). Indeed, the past few decades have seen an explosion of work examining the potential for behavioral training to positively impact a host of cognitive functions, including fluid intelligence (Au et al. 2015), working memory (Nutley and Söderqvist 2017), spatial thinking (Uttal et al. 2013a), executive function (Titz and Karbach 2014), and selective attention (Parsons et al. 2016).

As in all nascent scientific domains, much of the research to date has focused on basic science questions. Such investigations include, for instance, examinations of the core mechanisms underlying cognitive change and how these could be deliberately manipulated to produce intended outcomes (Bavelier et al. 2010; Deveau et al. 2015). Yet, the obvious real-world significance of successful cognitive enhancement has also prompted a substantial amount of work probing possible avenues for translation. Many distinct populations of individuals could potentially realize significant benefits from effective means of cognitive enhancement, especially those showing deficits in cognitive functioning for reasons related to various disorders, damage, disease, and/or age-related degradation (Biagianti and Vinogradov 2013; Mahncke et al. 2006; Ross et al. 2017). Even among individuals within the normal range of cognitive functioning, cognitive enhancement could be advantageous in a host of real-world situations, from law enforcement, to piloting, to athletics, to academic pursuits (McKinley et al. 2011; Rosser et al. 2007; Uttal et al. 2013a; Uttal et al. 2013b).

While the results of numerous individual studies and meta-analyses have provided reason for optimism that cognitive functions can be enhanced via some forms of behavioral training, significant debate persists in the field (Green et al. 2019; Morrison and Chein 2011). In some cases, the debate has focused on whether the existing set of empirical results should in fact be interpreted in a positive direction. This includes discussions of whether given forms of training do in fact induce improvements in cognitive functions, what the size of any positive effects may be, and/or whether any observed changes are “broad” or “narrow” in scope (e.g., for discussions related to the overall impact of action video games, see Bediou et al. 2018 and Hilgard et al. 2019; for discussions related to the overall impact of N-back training, see Au et al. 2015 and Melby-Lervåg et al. 2016; for discussions related to CogMed, see Brehmer et al. 2012 and Shipstead et al. 2012). In other cases, the debate has focused on the methodology employed in generating the existing data (e.g., for a discussion of differences in working memory training techniques, see Pergher et al. 2020; for a discussion of the validity of transfer effects, see Noack et al. 2014). In particular, a number of recent critiques and commentaries have argued that the methodology employed in the field is insufficient to draw firm conclusions regarding the efficacy (Footnote 1) of behavioral training interventions (Boot et al. 2011; Boot et al. 2013; Roque and Boot 2018; Simons et al. 2016). Specifically, it has been argued that the standard methodological approaches are inadequate with respect to blinding (i.e., preventing both experimenters and participants from knowing information about the study conditions that could potentially influence outcomes). Inadequate blinding leaves open the possibility that participant expectations, rather than the behavioral training itself, might be a causal agent driving any observed changes in cognition.

Here, we first discuss the design features of cognitive training interventions that have been highlighted as potentially vulnerable to expectation effects and review the existing evidence for expectation-driven effects in cognitive enhancement. We then discuss the core issues surrounding how to control for participant expectations in the context of behavioral interventions. In doing so, we identify how the challenges in the cognitive training domain correspond to important concepts and empirical results in other research domains that have more fully considered expectation effects, in particular the study of pain analgesia. Finally, we suggest that, rather than treating expectation-based effects solely as methodological confounds to be avoided or controlled for, there may be considerable value in studying expectation effects in cognitive interventions for their own sake, in the same way that placebo and nocebo effects have been probed in the study of other physiological systems and conditions (Price et al. 2008; see Footnote 2).

Expectations in Behavioral Interventions for Cognitive Enhancement: The Impact of Research Design

The space of individual approaches that fall under the category label of “behavioral interventions for cognitive enhancement” is incredibly broad. Such approaches share the broad goal of producing lasting improvement in one or many cognitive functions, but otherwise may diverge substantially from one another. Indeed, individual interventions in this field differ in everything from their basic theoretical underpinnings (e.g., being inspired by the biological science of neuroplasticity – Nahum et al. 2013 – or Eastern meditation practices – Tang et al. 2007) to their look and feel (e.g., employing reasonably unaltered psychology lab tests – Jaeggi et al. 2008 – or graphically complex and immersive commercial video games – Green and Bavelier 2003). Yet, despite these sometimes massive differences in the particulars of the individual interventions themselves, because they have a shared goal (i.e., to demonstrate that some behavioral interventions induce some positive changes in cognitive function), most studies use a reasonably common set of core methods to assess the possible effectiveness of those interventions. In particular, in the case of cognitive training, experimental designs are tailored to ask whether a given behavioral intervention produces cognitive benefits.

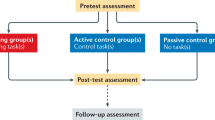

The research designs used (see Green et al. 2019) share many features of randomized controlled trials (RCTs), as employed in other applied domains such as clinical studies. Participants first complete one or more baseline assessments of cognitive functioning. They are then randomly assigned to complete either an experimental training paradigm (i.e., the behavioral training that is hypothesized to improve cognitive function) or a control training paradigm (i.e., a form of training that is hypothesized to not improve cognitive function). Finally, after the end of their respective training, all participants complete a post-test, which once again assesses the same cognitive functions as at baseline. The critical to-be-answered question is thus whether the experimental treatment group improved more between baseline and post-test than did the active control group. If such an outcome is observed, it suggests that the intervention is effective.
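
As a minimal illustration of the comparison described above, the Python sketch below computes each group’s mean gain (post-test minus baseline) and the difference in gains between the experimental and active control groups. The scores are invented for illustration and the function names are hypothetical; published studies typically pair such gain scores with inferential tests (e.g., a group × time interaction) rather than relying on a raw difference.

```python
from statistics import mean


def mean_gain(pre, post):
    """Average improvement (post-test minus baseline) across participants."""
    if len(pre) != len(post):
        raise ValueError("pre and post must have one score per participant")
    return mean(after - before for before, after in zip(pre, post))


def training_effect(exp_pre, exp_post, ctl_pre, ctl_post):
    """Mean gain of the experimental group minus mean gain of the active control.

    A positive value means the experimental group improved more between
    baseline and post-test than the active control group did.
    """
    return mean_gain(exp_pre, exp_post) - mean_gain(ctl_pre, ctl_post)


# Hypothetical scores for illustration only (not data from any study).
exp_pre, exp_post = [10, 12, 11, 13], [14, 15, 14, 16]
ctl_pre, ctl_post = [11, 12, 10, 13], [12, 13, 11, 14]
print(training_effect(exp_pre, exp_post, ctl_pre, ctl_post))  # -> 2.25
```

Here the experimental group gains 3.25 points on average and the control group 1 point, so the between-group difference in gains is 2.25.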

In drug trials, the two arms of such a study might correspond to one group that receives an experimental drug (e.g., via a pill) that is hypothesized to have a positive effect and a control group that receives an inert sugar pill that is identical in appearance to the experimental drug. Because the pills received by the two groups are outwardly identical, participants cannot use their appearance to infer whether they are in the experimental or control group. In other words, participants are “blinded” to the intervention. Given this, any beliefs that participants have about expected efficacy should be equivalent in the two groups and therefore cannot explain any differences in outcome between the groups. Note that this methodology does not “prevent” participants from developing expectations. Instead, its goal is to ensure that participants’ expectations do not systematically differ between the treatment and control groups. Unfortunately, perfect blinding is rarely achieved (Haahr and Hróbjartsson 2006). Even if the outward appearance of the experimental drug and inert pill is matched, participants may nonetheless be able to intuit which type of pill they are taking in other ways. For instance, active drugs typically produce some side effects, while a truly inert (sugar) control pill will not. Participants could therefore use the presence or absence of side effects as a cue to the condition to which they have been assigned (Hrobjartsson et al. 2007; Moscucci et al. 1987).

In the case of behavioral interventions, of which cognitive interventions are one sub-type, the issues are even more substantial as the very nature of behavioral interventions inescapably prevents a direct match in the outward look and feel of the two arms of a study. There is simply no way to create two outwardly identical behavioral interventions, where one is likely to positively impact cognitive function and the other one is not. After all, for any behavioral intervention, the content of the training, including its appearance, and the tasks participants are asked to perform are integrally related to the expected cognitive outcomes. Two training conditions that are perfectly matched in terms of outward appearance and participant interactions would not just be “outwardly” identical – they would be literally identical. If two training conditions are to have different cognitive outcomes (i.e., one producing positive changes and one being inert), they must necessarily differ in content, challenges, and/or tasks. And because it is clearly not possible to hide the outward appearance or participant interactions from the participants themselves, behavioral interventions for cognitive enhancement necessarily entail that participants are aware of the content and feel of their training. These necessary differences across conditions may also induce knock-on effects that effectively unblind participants in the same way as side effects may unblind participants in the medical domain. For example, most forms of behavioral training for cognitive enhancement involve putting some set of cognitive systems under sustained load, which may produce fatigue that is identifiable to participants.

Given that participants cannot be blinded to the training condition per se, it has been argued that the next best option is to attempt to blind participants to the intended outcome of their training condition. Indeed, as noted above, the general goal of blinding in intervention studies is not to eliminate expectations per se but to ensure that the expectations induced by the experimental training condition and by the control training condition are matched. In the cognitive training literature to date, many studies have attempted to meet this goal by describing both the experimental and control training paradigms to participants as being active (as in Green and Bavelier 2003; Jaeggi et al. 2014; Smith et al. 2009; Tsai et al. 2018). While introducing each arm as potentially active remains a commendable practice, it does not entirely settle the issue of expectations. It will always remain true that what participants see and do differs in important ways depending on whether the training was designed to improve cognitive function or was chosen to produce no change or to improve other domains of behavior. Thus, actually assessing whether expectations were in fact equivalent remains a crucially important quality measure of any intervention study. Yet, as reviewed below, how best to assess such expectations remains an area of active debate and research. Before turning to this issue, though, we first consider the extent to which there is evidence for expectation-based effects in cognitive task performance more generally, as well as in the context of behavioral interventions for cognitive enhancement more specifically.

Expectations in Behavioral Interventions for Cognitive Enhancement: Mixed Evidence from Highly Divergent Methods

As of today, only a handful of studies have investigated the effect of expectations on cognitive performance, with even fewer doing so in the context of interventions for cognitive enhancement. Yet, there is certainly evidence to suggest that some mechanisms that could work in concert with, or be triggered by, expectations can in turn change performance on many lab-based cognitive tasks. For example, one’s sense of self-efficacy is known to be linked with increases in task performance, in particular by increasing intrinsic motivation and, as a result, the degree of effort individuals put into a task and how long they persist (Bandura 1977; Bandura 1980; Bandura et al. 1982; Greene and Miller 1996; Honicke and Broadbent 2016; Pajares 1996; Richardson et al. 2012; Sadri and Robertson 1993; Schunk 1991; Walker et al. 2006). Other work has found an influence of self-efficacy on performance through goal setting, with students with higher self-efficacy ratings setting higher goals and obtaining better grades (Zimmerman and Bandura 1994). This latter phenomenon dovetails nicely with another mechanism known to affect cognitive performance: growth mindset. Growth mindset refers to the belief that one’s capabilities can be developed; individuals who hold this belief tend to seek more challenging goals and engage more in their tasks (Dweck et al. 2011).

Critically, all of these mechanisms are potentially modifiable through context and as such could serve as the basis of expectation-induced effects. For example, in a group of individuals who had previously experienced head injuries, Suhr and Gunstad (2002) observed decreased performance on an immediate and a delayed recall memory task in participants who received the verbal suggestion that they might have attentional problems as a result of their injury. Participants’ self-reports of the effort they put into the task, their confidence in their performance, and their perceived self-efficacy suggested that the observed performance reduction might be mediated by those factors. In a similar vein, Oken et al. (2008) gave healthy seniors placebo pills for 2 weeks, describing them as a cognitive enhancer. They found a positive effect of the pill on immediate memory tasks and on choice reaction time, but not on other cognitive outcome measures such as delayed memory, letter fluency, and simple reaction time. The authors identified perceived stress and self-efficacy as potential predictors of this expectation effect.

Among the handful of studies available, expectations have been documented to drive improvements in various aspects of cognitive function including perception (Langer et al. 2010; Magalhães De Saldanha da Gama et al. 2013), problem-solving ability (Madzharov et al. 2018), memory (Oken et al. 2008), general knowledge (Weger and Loughnan 2013), creativity (Rozenkrantz et al. 2017), working memory (Rabipour et al. 2018b), and implicit learning (Colagiuri et al. 2011). Yet, at the same time, there have also been a host of null effects on specific cognitive outcome measures such as verbal fluency (Oken et al. 2008), psychomotor speed (Suhr and Gunstad 2002), attention (Oken et al. 2008; Suhr and Gunstad 2002), and the figural component of the Torrance creativity test (Rozenkrantz et al. 2017).

Thus, the evidence remains mixed as to the impact of expectations on cognitive performance. One possible explanation for the mixture of outcomes observed in the literature to date is that studies have targeted a wide variety of expectation sources and have utilized a host of different methods for inducing expectations. For example, Rozenkrantz et al. (2017) used explicit verbal suggestions to induce expectations. In their study, participants were first exposed to a particular odor. One group of participants was told that this odor was supposed to enhance creativity, while the other received no information. This explicit verbal route of driving expectations accounts for the majority of methodological approaches covered in the literature review above (73% of studies). However, there are other potential ways to manipulate expectations. For instance, Madzharov et al. (2018) provided no explicit information. Instead, in their study, they took advantage of the fact that participants had likely come to associate coffee with arousal and enhanced cognitive abilities. They thus simply exposed participants to a coffee-like aroma (or not) with the goal of manipulating expectations and, through this, task performance. They found that when a coffee odor was released, subjects not only expected to have better performance; they in fact made fewer errors in solving math problems.

Another source of variability in this nascent literature is the outcome measures. The few existing studies have assessed a host of abilities, some of which may be more or less susceptible to change via expectations. For example, a number of studies have seen positive effects of expectations only on participants’ subjective perceptions of their performance, but not on objective measures of task performance. This includes work by Schwarz et al. (2016), in which participants in the group receiving a placebo suggestion indicated the belief that they had performed better on a flanker task, but in fact did not improve their performance. Similarly, Looby and Earleywine (2011) observed that participants who took an inert placebo pill that they were told was Ritalin provided self-reports indicating higher arousal, but did not improve in any of a number of different cognitive tests.

While the results above certainly speak to some of the key theoretical issues that come into play in behavioral interventions for cognitive enhancement, none of these studies was explicitly performed in a cognitive training context. Work performed in the context of interventions meant to durably enhance cognitive performance represents a considerably smaller body of work. Among these studies, it appears important to distinguish studies that have employed techniques to bias participant sampling from those that manipulated expectations in a randomly selected sample. Concerning the former, Foroughi et al. (2016) recruited participants for a working memory training study via two different posters: one poster was designed to induce belief in the efficacy of the training (“Numerous studies have shown working memory training can increase fluid intelligence”), and the second was meant to induce neutral expectations about the training and/or its purpose (“Looking for SONA credits? Sign up for a study today”). Upon arriving at the lab, participants first completed a pre-test assessment of fluid intelligence. They then completed one session of working memory training using the common dual N-back paradigm. On the next day, participants completed a post-test assessment of fluid intelligence. No changes in fluid intelligence scores were noted in the group recruited via the neutral-expectation poster. However, large and significant improvements in fluid intelligence scores were observed in the group recruited via the expectation-inducing poster. Katz et al. (2018), meanwhile, used a similar biased-sampling approach but found no such positive results. They recruited participants either with flyers that offered the opportunity to participate without compensation in a cognitive training study to improve fluid intelligence (intrinsic motivation, positive expectation) or with flyers that did not provide any information about potential gains in fluid intelligence but offered a considerable amount of monetary compensation (extrinsic motivation, neutral expectation). Both training groups improved in fluid reasoning over the course of 20 sessions over and above an active control group, but they did not differ from one another. This pattern of results is in line with the larger literature indicating that participant sampling and selection can bias outcome performance, but it indicates no clear impact of expectations per se.

Behavioral intervention studies that have more directly manipulated participant expectations as part of the experimental design are, at the moment, quite sparse. To date, we have identified only three that actually manipulated expectations in the context of a cognitive training intervention. Rabipour et al. (2019) manipulated expectations in a cognitive training program (25 sessions of training with either the Activate commercial program or Sudoku and N-back exercises). Before training, participants were told either that they would receive a cognitive training known to improve performance or that the training was not expected to have any benefits. The results showed no effects of expectations on cognitive outcomes. The only expectation effect was that participants who received the high-expectation script tended to engage more in their training program than those in the low-expectation condition. Tsai et al. (2018) used an expectation induction protocol in a training study (7 sessions of training in either an active N-back training condition or a control knowledge training condition). In this study, prior to training, participants were shown a pre-recorded narrated presentation outlining the expected outcomes of the study. These expectations could be either positive (e.g., that visual N-back training would result in significant improvement not just on the visual N-back task but on other tasks as well) or negative (e.g., that visual N-back training would result in significant improvement on the visual N-back task only, and not on any other task). The authors found no influence of the initial expectation condition on the final results. On the other hand, a more recent study of potential expectation effects on visual attention performance concluded that video game training might be susceptible to expectation effects (Tiraboschi et al. 2019). Participants’ attention was evaluated with an Attentional Blink task and a Useful Field of View (UFOV) task at pre- and post-test. In between, participants underwent a single training session on a video game hypothesized to have no effect on visual performance (Sudoku). However, the placebo group was told that the training would help them perform better, while the control group was told that it was just meant to give them a break between the two testing sessions. Results showed better UFOV performance for the placebo group at post-test.

Other studies did not manipulate expectations per se but measured them, with the aim of controlling for any differences in expectations between groups. Two studies found no differences in the expectations triggered by active versus control interventions despite performance differences in aspects of attention (Baniqued et al. 2014; Ziegler et al. 2019). Among the studies that did find differences (Ballesteros et al. 2017; Baniqued et al. 2015; Haddad et al. 2020; Bavelier, personal communication; Green, personal communication), participants in the experimental group (playing certain video games or undergoing a mindfulness program) had higher expectations of improvement, but those expectations were not predictive of performance at the post-training assessment.

In all, there is a growing body of work showing that factors such as self-efficacy, stress, or arousal may change cognitive performance via a transient change in participants’ internal state. Furthermore, there is reason to suspect that these factors could be promoted by participant-level expectations. Yet, whether manipulating participants’ expectations induces durable changes in cognitive performance is an important question that remains largely unanswered. This is unfortunate, as a key feature of behavioral interventions for cognitive enhancement is that they seek to induce long-lasting cognitive benefits that are still visible days to months after the end of training, not just state-dependent effects. To date, in the specific context of behavioral interventions, the results suggest that although expectations can be manipulated, the claim that they impact cognitive performance in a durable manner remains largely unsubstantiated. Despite this mixed evidence, expectations nonetheless remain an important consideration for future work. In particular, it is possible that the varied outcomes are a direct result of the range of approaches and theoretical underpinnings across the few existing studies. We therefore next consider different conceptualizations of what expectations entail before turning to the question of how expectations may best be leveraged in the field of behavioral interventions.

What Are Expectations, Where Do They Come From, and How Might Different Expectations Be Combined?

A number of different terms such as “placebo effect,” “belief,” and “expectation” have been frequently utilized throughout areas of the psychological and medical domains. In some cases, authors appear to use these terms interchangeably to indicate essentially the same construct or phenomenon and thus alternate between the different terms even within a single document (Foroughi et al. 2016; Madzharov et al. 2018; Magalhães De Saldanha da Gama et al. 2013; Tsai et al. 2018). In other cases, it has been argued that these terms refer to distinct phenomena and that the field would be better served to utilize terminology in a more precise manner (Anguera et al. 2013; de Lange et al. 2018; Schwarz et al. 2016). Thus, within the scope of this manuscript, it is important to specify how the term “expectations” will be utilized. To complement the current consensus on expectation theory employed by the medical literature (Bowling et al. 2012; Kern et al. 2020), we will use a working definition that frames expectations as predictions of intervention-related cognitive performance. An expectation effect is therefore a change in measured cognitive performance that occurs after an intervention as a result of such expectations.

Critically, predictions regarding the possible effects of a given intervention can stem from a variety of sources (Crow et al. 1999; de Lange et al. 2018; Schwarz et al. 2016). Some of these sources may be part of the explicit experimental context. For instance, as noted above, one obvious source of the information used to generate expectations is simple verbal information. In the field of pain, for example, an inert pill could be described as an analgesic that will reduce the experienced level of pain (). A second possible source of expectations is observational learning, wherein an individual learns vicariously by witnessing another person’s response to treatment (Colloca and Benedetti 2009). This could occur, for instance, if one observes another individual being administered a pill and afterward showing behaviors consistent with a reduced level of pain (e.g., less intense facial expressions of pain). A final possible source of expectations is prior personal experience (Benedetti et al. 2003; Colloca et al. 2008; Voudouris et al. 1990; Zunhammer et al. 2017). For example, an individual may have consistently experienced pain relief from taking one particular type of pill in the past.

Obviously, these modes of expectation induction can also be combined. As an example, in one study, participants were first given a painful stimulus (iontophoretic pain or ischemic pain) and were asked to rate the level of pain they experienced (Voudouris et al. 1989). They were then given an inert substance that was verbally described as an analgesic. The reduction in pain that participants reported when the painful stimulus was given again (as compared to when it was first given) was taken to reflect the impact of verbally induced expectations about the analgesic effect of the applied substance. A separate group of participants had the same initial experience: an initial painful stimulus whose pain they rated, followed by the application of an inert substance described as an analgesic. Then, although they were told that they were being given a shock of the same intensity as before, they were in fact given a shock of lower intensity. In essence, this was meant to create an associative pairing between the inert substance and lower experienced pain. Both groups were then given a third painful stimulus of the same intensity as the initial one and were asked to rate the pain. Any reduction of pain in the second group beyond what was seen in the first group was taken to reflect the impact of associative learning–based expectations on top of the verbal information that both groups received.
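
The logic of this two-group design reduces to a difference of differences. The sketch below assumes hypothetical group-mean pain ratings on a 0 to 10 scale for the first and third (equal-intensity) stimuli in each group; the numbers and function names are illustrative only and are not taken from Voudouris et al. (1989).

```python
def pain_reduction(first_rating: float, later_rating: float) -> float:
    """Drop in rated pain between the first and a later equal-intensity stimulus."""
    return first_rating - later_rating


def associative_component(verbal_ratings, conditioned_ratings):
    """Extra pain reduction in the conditioned group beyond the verbal-only group.

    Each argument is a (first_rating, third_rating) pair of group means, where the
    third stimulus matches the first in intensity. Because both groups received
    the same verbal suggestion, the difference isolates the associative part.
    """
    verbal_only = pain_reduction(*verbal_ratings)
    verbal_plus_conditioning = pain_reduction(*conditioned_ratings)
    return verbal_plus_conditioning - verbal_only


# Hypothetical group means (0-10 scale), for illustration only.
print(associative_component((7.0, 6.0), (7.0, 4.5)))  # -> 1.5
```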

Importantly, these three forms of inducing expectations (verbal instruction, observational learning, and associative learning) involve different mechanisms and thus manifest in different ways. Indeed, there are some indications in the literature that verbally induced expectations may not be as long-lasting and/or as reliable as associatively learned expectations (Colloca and Benedetti 2006). For example, the pain literature has demonstrated that the placebo effect is enhanced when explicitly given information is followed by a conditioning protocol in which the inert substance is purposefully paired with a reduction in experienced pain to induce a conditioned, learned response. Such associative learning produces particularly powerful expectation effects in controlled laboratory settings (e.g., Colloca et al. 2008; Price et al. 1999; Voudouris et al. 1990). To our knowledge, though, whether different types of expectancy manipulations produce meaningfully different outcomes has not been systematically tested in the field of cognitive function.

The variety of sources that can give rise to expectations raises the question of how these may be combined into a final expectation. A process very much like (or identical to) Bayesian cue combination seems highly plausible (Deneve and Pouget 2004; Körding and Wolpert 2006; Smid et al. 2020). In the Bayesian framework, the expectation induced by each source (verbal instruction, observation of others, previous experience) would be modeled as a distribution over possible values. The final expectation would thus be an average of the expectations induced by the individual sources, with each source weighted by its reliability (i.e., inversely to its uncertainty).
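
The weighted averaging described above has a simple closed form under the common simplifying assumption that each source yields an independent Gaussian expectation: each source is weighted by its precision (inverse variance), and the combined expectation is more certain than any single source. The sketch below is a hypothetical illustration of that textbook computation, not a model fitted in any of the cited studies; the source labels and numeric values are assumptions.

```python
from dataclasses import dataclass


@dataclass
class ExpectationSource:
    """One hypothetical source of expectation, modeled as a Gaussian belief."""
    name: str
    mean: float      # predicted change in performance (arbitrary units)
    variance: float  # uncertainty about that prediction


def combine_sources(sources):
    """Inverse-variance (precision-weighted) combination of independent Gaussians.

    Returns (combined_mean, combined_variance). Each source's weight is its
    precision 1/variance divided by the summed precision of all sources.
    """
    if not sources:
        raise ValueError("need at least one source")
    if any(s.variance <= 0 for s in sources):
        raise ValueError("variances must be positive")
    precisions = [1.0 / s.variance for s in sources]
    total_precision = sum(precisions)
    combined_mean = sum(p * s.mean for p, s in zip(precisions, sources)) / total_precision
    return combined_mean, 1.0 / total_precision


# Illustrative values only: a large but uncertain expectation from verbal
# instruction and a modest but confident one from prior personal experience.
sources = [
    ExpectationSource("verbal instruction", mean=5.0, variance=4.0),
    ExpectationSource("prior personal experience", mean=1.0, variance=1.0),
]
combined_mean, combined_variance = combine_sources(sources)
print(f"combined expectation: {combined_mean:.2f} (variance {combined_variance:.2f})")
```

With these values the combined expectation is 1.80 with variance 0.80: the more certain source pulls the result much closer to its own value, and the combined variance is smaller than that of either source alone.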

Given such a framework, when deriving hypotheses about the outcome expected from inducing certain types of expectations, it will be critical to consider factors that influence both the magnitude of the respective expectations and the uncertainty associated with those estimated magnitudes. For example, instructions coming from a widely respected principal investigator at a prestigious institution might result in more certainty about the estimated magnitude than the exact same instructions delivered by an undergraduate research assistant (Haslam et al. 2014). Such a Bayesian perspective naturally aligns with reports that direct experience (in the form of associative learning) tends to produce larger effect sizes than verbal instruction (Amanzio and Benedetti 1999; Colloca and Benedetti 2006; Colloca et al. 2008; Voudouris et al. 1990), as direct experience should be associated with less uncertainty regarding the magnitude of the expectation.

This line of reasoning has been elegantly described by Büchel and colleagues in the case of placebo analgesia (Büchel et al. 2014). The estimate to be retrieved by the nervous system in this case corresponds to the subjective pain level experienced after a thermal probe is applied to the skin. Büchel and colleagues highlighted how different priors over the most likely to-be-experienced pain affect the final subjectively reported level of pain (Fig. 1). Specifically, Grahl et al. (2018) manipulated the variance of the prior through two different conditioning phases in an electrodermal heat stimulation protocol. During conditioning, they repeatedly applied painful stimuli of lower intensity than the baseline stimulus (i.e., as in the Voudouris et al. experiment described above). The key manipulation was that these stimuli were drawn from a distribution that was either high variance (i.e., included many different pain intensities) or low variance (i.e., was generally the same pain intensity). A high-variance distribution was meant to suggest an uncertain impact of the analgesic, while a low-variance distribution was meant to suggest a certain, consistent impact. Consistent with a Bayesian view, the authors observed a shift toward lower pain perception in the group conditioned with low-variance stimulation. Their analysis of the neural underpinnings of this effect suggested a role for the periaqueductal gray.
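The core computation can be sketched with Gaussian distributions, under the simplifying assumption (ours, not necessarily that of Grahl et al. 2018) that both the prior over expected pain and the sensory likelihood are Gaussian on a hypothetical 0 to 100 pain scale. With the same prior mean, a narrow (low-variance) prior pulls perceived pain further toward the expected, lower value than a broad (high-variance) prior does. All numbers are illustrative.

```python
def gaussian_posterior(prior_mean, prior_sd, obs, obs_sd):
    """Combine a Gaussian prior with a Gaussian likelihood centered on obs.

    Returns the posterior mean and standard deviation.
    """
    prior_precision = 1.0 / prior_sd ** 2
    obs_precision = 1.0 / obs_sd ** 2
    weight_prior = prior_precision / (prior_precision + obs_precision)
    post_mean = weight_prior * prior_mean + (1.0 - weight_prior) * obs
    post_sd = (prior_precision + obs_precision) ** -0.5
    return post_mean, post_sd

DELIVERED_PAIN = 60.0  # hypothetical stimulus intensity on a 0-100 scale
SENSORY_SD = 10.0      # hypothetical noise in the sensory signal
EXPECTED_PAIN = 40.0   # hypothetical prior mean after conditioning with weaker stimuli

for label, prior_sd in [("low-variance prior", 5.0), ("high-variance prior", 20.0)]:
    perceived, spread = gaussian_posterior(EXPECTED_PAIN, prior_sd, DELIVERED_PAIN, SENSORY_SD)
    print(f"{label}: perceived pain = {perceived:.1f} (sd {spread:.1f})")
```

With these values, the low-variance prior yields a perceived pain of 44.0, versus 56.0 for the high-variance prior, even though the delivered stimulus (60) and the prior mean (40) are identical in both cases.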

Fig. 1 Adapted from Grahl et al. (2018). (a) In the case of pain analgesia, the solid gray vertical line represents the actual pain delivered (strictly, the units would more accurately be described as temperature, but for intuitiveness the full x-axis is treated as pain). The red (or light gray) curve is the pain signaled by the skin receptors (i.e., an unbiased, noisy estimate centered on the true pain stimulus). The blue (or light gray) curve represents the prior belief, derived here from experience/expectations about how much pain will be experienced. When the blue curve is combined with the red curve, the final subjectively reported level of pain is given by the green dashed curve. (b) Panel B shows the same basic phenomenon as Panel A, except that the prior belief arises via different processes. In the top panel, the prior belief comes entirely from explicit verbal instructions that less pain will be experienced. In the bottom panel, the prior belief comes from personal experience that less pain will be experienced. In both cases, this produces a final pain experience (green dashed curve) that is lower than the actual applied pain (red curve). The key difference is that the prior distribution is broader (more uncertain) in the case of verbal instructions (top panel) than in the case of personal experience (bottom panel). As a result, the final estimate shifts further toward the prior (i.e., toward less experienced pain) when the prior comes from personal experience than when it comes from verbal instruction. In both cases, though, the final distribution is biased, or in other words inaccurate, indicating a lesser sensitivity of participants to the true painful stimulation. While this is the desired result in the context of pain analgesia research, we note that in the case of cognitive training, there are other pathways through which expectations may act.

One limitation in attempting to extrapolate from placebo analgesia to cognitive training is that, in placebo analgesia, a shift in bias is the desired result. In other words, the goal in this domain is for participants to indicate that a particular stimulus produced less pain than it did when they experienced the same stimulus previously, not for participants to detect the level of pain “more accurately.” In cognitive training, by contrast, inducing a bias would be of little interest, as it would not be considered a true improvement in cognitive processing. For instance, a shift in bias toward responding sooner (i.e., a more lenient response criterion) in a reaction time task will produce faster response times. But because this increase in speed will necessarily come at the cost of reduced accuracy, it would not be understood as cognitive enhancement. Similarly, a shift in bias that produces a higher hit rate in an old/new recognition memory task would necessarily also produce more false alarms. This again would not be considered to represent enhanced cognitive abilities.
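The distinction between a bias shift and a genuine change in sensitivity can be made concrete with equal-variance signal detection theory, a standard framework used here for illustration. In the sketch below, a more liberal criterion c raises both hits and false alarms while leaving sensitivity d' unchanged, whereas a larger d' raises hits and lowers false alarms. The parameter values are hypothetical.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal: mean 0, sd 1

def rates_from_sdt(d_prime, criterion):
    """Hit and false-alarm rates under the equal-variance Gaussian SDT model."""
    hit = STD_NORMAL.cdf(d_prime / 2 - criterion)
    false_alarm = STD_NORMAL.cdf(-d_prime / 2 - criterion)
    return hit, false_alarm

def sdt_from_rates(hit, false_alarm):
    """Recover sensitivity (d') and criterion (c) from observed rates."""
    z_hit = STD_NORMAL.inv_cdf(hit)
    z_fa = STD_NORMAL.inv_cdf(false_alarm)
    return z_hit - z_fa, -0.5 * (z_hit + z_fa)

scenarios = {
    "pre-test": (1.5, 0.5),
    "bias shift only": (1.5, -0.5),   # more liberal criterion, same d'
    "sensitivity gain": (2.0, 0.5),   # better discrimination, same c
}
for label, (d, c) in scenarios.items():
    hit, fa = rates_from_sdt(d, c)
    d_hat, c_hat = sdt_from_rates(hit, fa)
    print(f"{label:18s} hits={hit:.2f} FAs={fa:.2f} d'={d_hat:.2f} c={c_hat:.2f}")
```

Looking at the hit rate alone, both the bias shift and the sensitivity gain would appear as "improvement"; only the false-alarm rate, and hence d', separates them.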

In the context of cognitive training, it is certainly possible that expectations could create bias shifts like those observed in pain analgesia. It is therefore critical to employ methodology that can differentiate shifts in bias from shifts in sensitivity. For example, behavioral training with action video games has been consistently associated with faster reaction times (Green et al. 2010). Faster reaction times could arise from either shifts in bias or shifts in sensitivity. However, these mechanisms can be dissociated when other measures (e.g., accuracy) are also taken. For example, Green, Pouget, and Bavelier (2010) documented that when participants performed a perceptual task requiring judgments of dot motion direction, individuals trained on action video games displayed faster RTs but equivalent levels of accuracy compared with individuals trained on a control video game. This pattern of faster RTs with equivalent accuracy cannot be accounted for by shifts in bias alone. Instead, using drift diffusion modeling, the authors found that the full pattern of results was best captured by an increase in sensitivity combined with a decrease in bias in the action-trained group.
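The logic of this dissociation can be illustrated with the closed-form expressions for accuracy and mean decision time of a simple unbiased drift diffusion process (the expressions used in the EZ-diffusion approach). Here, drift rate stands in for sensitivity and boundary separation for the speed-accuracy criterion that the text calls bias; this mapping, the parameter values, and the function name are our assumptions, not the fitted values from Green et al. (2010). Because accuracy depends only on the product of drift and boundary, raising drift while lowering the boundary by the same factor leaves accuracy unchanged but shortens decision time, whereas lowering the boundary alone speeds responses at a cost in accuracy.

```python
import math

def ddm_accuracy_and_decision_time(drift, boundary, noise=0.1):
    """Accuracy and mean decision time of an unbiased drift diffusion process.

    Assumes the starting point lies midway between the two boundaries, drift > 0,
    and noise = 0.1 (a common scaling convention). Non-decision time is ignored.
    """
    x = drift * boundary / noise ** 2
    accuracy = 1.0 / (1.0 + math.exp(-x))
    mean_decision_time = (boundary / (2.0 * drift)) * math.tanh(x / 2.0)
    return accuracy, mean_decision_time

# Hypothetical (drift, boundary) pairs for each condition.
conditions = {
    "control": (0.2, 0.12),
    "lower boundary only": (0.2, 0.08),
    "higher drift only": (0.3, 0.12),
    "higher drift + lower boundary": (0.3, 0.08),
}
for label, (drift, boundary) in conditions.items():
    acc, mdt = ddm_accuracy_and_decision_time(drift, boundary)
    print(f"{label:30s} accuracy = {acc:.3f}  decision time = {mdt:.3f} s")
```

With these values, the control and combined conditions share the same accuracy (about 0.917), while mean decision time drops from about 0.250 s to about 0.111 s; lowering the boundary alone also speeds responses (about 0.133 s) but reduces accuracy to about 0.832.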

Unfortunately, many cognitive effects are still documented primarily through reaction time differences across conditions rather than through mechanistic modeling. Such subtraction techniques, although pervasive throughout cognitive psychology, are frequently ill-suited to distinguishing changes in sensitivity from changes in bias (which could easily be driven by expectations). This is particularly true because such tasks are often designed to produce near-ceiling accuracy, at which point it becomes very difficult to determine whether accuracy differences exist. Modeling behavior in terms of sensitivity and bias requires more sophisticated approaches than are typically employed in the cognitive training literature. Such approaches do exist, however, for instance in the case of attention or working memory (Dowd et al. 2015; Van Den Berg et al. 2012; Yu et al. 2009). These experimental designs and modeling approaches should be more widely adopted in cognitive psychology, and in cognitive training in particular (O’Reilly et al. 2012).

While it is reasonably clear how expectations could act on bias, there are also possible mechanisms by which expectations could act on the sensory estimate derived from the stimulus (i.e., on sensitivity). In a typical visual training task, for example, the blue curve in Fig. 2 could correspond to the estimated orientation of a Gabor patch, given that a 25-degree Gabor patch was physically presented. The question then is how expectations could improve the sensory estimate of the Gabor patch orientation, in other words, narrow the width of the blue curve around the true value. Here, there are a number of potential routes. For instance, if participants expect to improve on the task, they might “try harder,” attend more closely, maintain more stable fixation, or become more physiologically aroused when tested again after training. Any of these mechanisms could potentially produce greater orientation sensitivity (Denison et al. 2018). If participants try harder, attend more closely, or are more aroused during the post-test, their likelihood over orientation becomes narrower; in other words, their sensitivity/accuracy improves (Fig. 2). Unfortunately, the same basic outcome, a narrowing of the likelihood, is also the expected result of cognitive training itself. This leads to a conundrum, as either mechanism (expectations or cognitive training) would manifest in much the same way.

Fig. 2 Illustrations of how expectations may affect performance in cognitive training studies. Performance may change from pre-test to post-test following cognitive training because of either the direct effect of cognitive training in sharpening the likelihood, an effect that is expected to be long-lasting, or the indirect effect of expectations acting on attentional or motivational mechanisms such as arousal or trying harder. Importantly, the latter is expected to be transient rather than as long-lasting as the effect of cognitive training. Note that the prior is represented here as a nearly flat distribution, as is often the case in cognitive tasks where there is no preferred response.

This state of affairs raises the issue of whether expectations and cognitive training can be teased apart when both target sensitivity. Doing so may be possible through careful methodological choices. First, in many cognitive training designs, experimenters do not expect all aspects of cognition to be equally enhanced by the training. Thus, including tasks in the pre- and post-test battery that are not expected to be affected by training could provide a control for expectation effects. The basic logic is that if improvements are seen on tasks that should not be affected by the training itself, but that could be affected by changes in attention/effort/motivation, then expectations may be at play on all tasks. Second, while cognitive training aims to change the likelihood in a durable manner, expectations are likely to do so only in a fleeting, context-dependent manner. Testing for enhanced skills outside of the initial training study setup (e.g., in a different laboratory space, with new staff and different tasks, at an additional follow-up session) would allow one to evaluate which effects are indeed durable, and thus unlikely to be expectation-dependent.

Finally, it is worth considering that, to the extent that expectations may act, like cognitive training, to increase the sensitivity of the system of interest, expectations may for all practical purposes be thought of as an integral part of the training rather than a mere confound. We will return to this issue below, but first we consider the delicate issue of how exactly to probe participants’ expectations.

Methodological Challenges Associated with Measuring Expectations

Given that expectations are inherently internal quantities, there are major methodological challenges in assessing and quantifying expectations and their effects. In particular, there are substantial challenges in determining when to assess expectations (e.g., before, during, or after training), how to assess them (e.g., via free report, structured questionnaires, or forced-choice procedures), and what exactly to assess (e.g., specific expectations about a particular intervention or more general beliefs).

When to Measure Expectations

As expectations are, by definition, predictions about the future, it would seem sensible to measure them before an intervention begins. However, encouraging participants to think actively about the potential outcomes of an intervention runs the risk of inducing expectations where previously there would have been none. On the other hand, waiting until after the intervention to measure expectations may leave the measurement highly susceptible to distortion due to the very nature of memory, which is notoriously unreliable (Price et al. 1999). Indeed, market research has demonstrated that participants’ “forecast expectations” (i.e., those accessed before exposure to an experience) are functionally different from those recalled after exposure (Higgs et al. 2005). Furthermore, measures of expectations taken after training may inappropriately reflect actual outcomes rather than initial expectations. After all, expectations are by nature “fluid,” or subject to continual revision as new information is acquired (Stone et al. 2005). For example, if participants initially expected their training to have no impact, but during the post-test felt that their performance had increased substantially relative to baseline, their report of their initial expectations may be biased in the positive direction by their post-test performance (the opposite situation could also easily arise). Overall, the fact that expectations can fluctuate over time presents a challenge for any methodology that takes a single “snapshot” of expectations (e.g., participants might report very different beliefs after a training session in which they were particularly successful than after one in which they performed particularly poorly).

At the moment, there is no widely agreed-upon best practice regarding when expectations should be assessed. In many cases, expectations have been assessed before the start of the intervention, but in others, these measures have come afterward. Many of the latter studies asked participants to recall their general expectations, beliefs, or perceptions of change in their performance (Ballesteros et al. 2017; Baniqued et al. 2015; Langer et al. 2010; Redick et al. 2013; Suhr and Gunstad 2002; Tsai et al. 2018). Based on best practices in the field of placebo analgesia, it may be most appropriate to measure expectations after participants have been enrolled in the study and briefed about the training regimen they will be asked to follow, but before they are pre-tested and begin training.

To limit the risk that participants form expectations about their training, some authors have taken another approach, assessing expectations in a different sample of participants than the one included in the training study (Ziegler et al. 2019). While elegant and potentially robust (as it can involve much larger sample sizes), this approach does not address interindividual variability in expectations. How large a sample must be for group-mean expectation assessments of each treatment, rather than individual assessments, to be informative is an interesting methodological question for future studies.

How to Measure Expectations/What Expectations to Measure

While physiological or neural markers may be of use in the future, at present, the only way to gain access to what are, by definition, internal states is via some form of self-report (e.g., Madzharov et al. 2018; Oken et al. 2008; Schwarz and Büchel 2015; Suhr and Gunstad 2002; Tsai et al. 2018). In the literature to date, there are various individual approaches to this issue without a clear “best practice solution.” For example, in terms of the types of expectations probed, some questionnaires have clearly targeted expectations about the planned training and specific tasks (e.g., “Do you think specific training procedure will affect your performance on memory tasks?”) (Tsai et al. 2018), while others have assessed more general a priori beliefs about training and cognition (e.g., “Do you think computerized training will improve your cognitive function?”) (Rabipour and Davidson 2015). The differences across approaches extend all the way down to the format of the questions, with questionnaires using Likert scales (e.g., Rabipour and Davidson 2015; Schwarz and Büchel 2015; Suhr and Gunstad 2002), visual analog scales (Oken et al. 2008), and/or simple yes/no answers (Rabipour and Davidson 2015; Stothart et al. 2014; Tsai et al. 2018).

Making it more difficult still for researchers in the field to move forward is the fact that many studies measuring expectations about cognitive training do not report the exact wording of each item included in their surveys (e.g., Ballesteros et al. 2017). Just as importantly, none has reported psychometric properties such as internal consistency, criterion validity, or general validity, which severely limits the reliability of these surveys as measures of expectation and expectation effects (Barth et al. 2019).
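Internal consistency, one of the properties noted above as unreported, is commonly indexed with Cronbach's alpha: for k items, alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores). The sketch below computes it on hypothetical Likert ratings (not data from any cited study); values near 1 indicate that the items vary together across respondents.

```python
from statistics import variance

def cronbach_alpha(responses):
    """Cronbach's alpha for a respondents-by-items matrix of numeric scores."""
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha requires at least two items")
    items = list(zip(*responses))  # transpose rows (respondents) into item columns
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_var_sum / total_var)

# Hypothetical 1-7 Likert ratings: 6 participants x 4 expectation items.
ratings = [
    [6, 5, 6, 7],
    [2, 3, 2, 2],
    [4, 4, 5, 4],
    [7, 6, 6, 6],
    [3, 2, 3, 4],
    [5, 5, 4, 5],
]
print(f"alpha = {cronbach_alpha(ratings):.2f}")  # prints alpha = 0.96
```

Reporting such a coefficient, alongside the exact item wording, would let other researchers judge whether an expectation questionnaire measures a coherent construct.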

Researchers in the field of medicine have come to recognize the need for valid, reliable expectation assessment tools that can be shared across studies with a health-related goal (Barth et al. 2019; Bowling et al. 2012). Various attempts have been made to develop appropriate expectation questionnaires, such as the Credibility/Expectancy Questionnaire (Devilly and Borkovec 2000), the Acupuncture Expectancy Scale (Mao et al. 2007), the EXPECT questionnaire (Jones et al. 2016), and, most recently, the Expectation for Treatment Scale (Barth et al. 2019) and the Treatment Expectation Questionnaire (Shedden-Mora et al. 2019). While the majority of assessment tools developed thus far have been situated in clinical domains, some emerging scales have been proposed for use in the cognitive domain (e.g., Rabipour et al. 2018a). Yet, Barth et al. (2019) in particular identified the difficulty of discerning exactly which types of expectation are accessed during the measurement procedure: while surveys and questionnaires access explicit expectations, the extent to which they reach implicit expectations is as yet unresolved. Furthermore, ceiling effects (Mao et al. 2007) and participant reliability (Barth et al. 2019) have been noted to limit the usefulness of these tools. Similar limitations are likely to apply to expectation questionnaires developed for cognitive training.

Lessons from Outside Domains

While the role of expectations in behavioral interventions for cognitive enhancement has only recently become a topic of study, there is a rich history of research on expectations in the clinical domain. One particularly fruitful tactic has been to explore whether and when expectation-based effects can be deliberately induced. The reasoning here is at least twofold. First, if researchers find it impossible to purposefully induce expectation-based changes in some outcome measure despite using the strongest possible manipulations, then previous work on that outcome measure is unlikely to be severely contaminated by expectation effects. Second, if changes are found, such studies potentially provide a window into mechanisms of action that could be harnessed for real-world ends.

Indeed, to this latter point, one clear lesson from the clinical field is that placebo/nocebo effects are not a mere nuisance to be controlled for and otherwise ignored. Instead, placebo effects have their own neurobiology and, to the extent that they enhance the desired outcome, should be exploited as such (Enck et al. 2013). In the case of behavioral interventions, as has recently been argued in the case of pain, it may be difficult to separate an “actual” effect of the intervention from an expectation effect. Endogenously mediated opioid analgesia has now been well documented through both functional brain imaging and direct neural recording (Benedetti et al. 2011; de la Fuente-Fernández et al. 2001; Scott et al. 2008; Zubieta et al. 2005). In other words, once participants have learned to associate rubbing an ointment on their skin with decreased pain, even an inert ointment may lead to the central release of endogenous opioids and dopamine, which in turn dampen the perceived pain. This state of affairs clearly calls into question the very notion of an “inert” substance or intervention. It may be an illusion to assume that fully inert treatments can even be administered. Rather, the emerging view is that expectations trigger their own endogenous pharmacological pathways and, as such, act as another source of treatment.

The strength of these effects in the medical realm, and the body of knowledge underlying them, are such that they have recently been put to the test in open-label placebo (OLP) studies. In OLP studies, patients are administered an inert pill while being explicitly told that the pill is inert. The rationale is that, at least in Western societies, individuals have repeatedly experienced relief after taking a pill, be it for a headache, back pain, or a stomachache. Because pills provided by medical professionals generally produce some positive outcome, such as pain relief, we have formed associations between the conditioned stimulus of “taking a pill” (or “taking a pill prescribed by a doctor”) and relief. Kaptchuk et al. (2010) conducted an open-label placebo study with eighty patients suffering from irritable bowel syndrome (IBS). Participants were given a pill that they were explicitly told contained no active substance and that no improvement should be expected from the pill in isolation. Yet, the experimenters also gave positive suggestions about the placebo response. They explained to participants that “the placebo effect can be powerful, [that] the body automatically can respond to taking placebo pills, [that] a positive attitude can be helpful but is not necessary and taking the pills faithfully…is critical” (for the full instructions, see Kaptchuk et al. 2010). The authors measured change in self-reported scores on an IBS symptom scale and observed a benefit relative to a no-treatment group.

Other work has gone so far as to assess how placebo effects and the true effects of active drugs interact. For example, Kam-Hansen et al. (2014) used a 2 × 3 design in which patients with episodic migraine received either a placebo pill or real medication (Maxalt), labeled either “placebo,” “placebo or Maxalt,” or “Maxalt.” They found that open-label placebo was superior to no treatment (i.e., participants who took a pill that truly was a placebo and was labeled as a placebo showed greater improvements than would have been expected from no treatment at all). Moreover, the authors observed a graded effect, with increasingly positive labeling boosting the effect of both the placebo and the medication.

It is not yet clear whether the effects seen in open-label placebo studies are driven by expectations, as only a few of these studies have measured expectations and their results are inconsistent. For example, Meeuwis et al. (2018) applied histamine to the skin to provoke itch. They informed the experimental group that the application would cause little or no itch and that this suggestion alone would lead to a reduced itch sensation. A control group received only the information that little itch would follow the application. Expected itch was measured in both groups before histamine application, and itch ratings were collected after application. The authors found that subjects with lower itch expectations before histamine application reported lower mean itch afterward, but only in the experimental group. No such effect was found in the control group, suggesting that making participants aware of possible expectation effects may trigger an important calibration of their expectations. On the other hand, in a study assessing the effect of OLP treatment on chronic back pain, Kleine-Borgmann et al. (2019) found no correlation between subjects’ expectations and the observed OLP treatment effect, despite participants having been given positive suggestions about the placebo response. Another study assessing the roles of dose, expectancy, and adherence in OLP effects on different well-being measures (El Brihi et al. 2019) found that, for subjects receiving OLP treatment (and thus open guidance about the positive effect of placebo responses), expectation predicted reductions in physical symptoms (i.e., headache or dizziness), depression, anxiety, and stress scores, but did not predict sleep quality. Thus, different outcome measures may be differentially affected by explicitly mentioning suggestions and their impact.

Open-label placebo research is still in its very early days, and many questions certainly remain. Yet, existing work on placebo and nocebo effects in the medical field already highlights the urgency of evaluating the extent to which expectations may be leveraged for cognitive benefits. Expectations, be they verbally induced, observational, or associative, may be better understood as potential extensions of behavioral training than as nuisance factors. As is currently argued in the medical literature, expectation manipulations could be paired with active treatment to maximize intervention efficacy (Colloca et al. 2004; Enck et al. 2013; Schenk et al. 2014) or used to reduce treatment doses while maintaining the same efficacy (Albring et al. 2014; Benedetti et al. 2007; Colloca et al. 2016). In this view, expectation effects provide an additional, not-to-be-neglected source of brain and behavioral change that could produce valuable cognitive enhancement in its own right. In other words, to the extent that such effects can be induced, behavioral interventions able to tap into these mechanisms should not be avoided; rather, their mechanisms should be understood and sought after. This seems all the more appropriate given our current understanding that placebo and nocebo effects involve top-down prefrontal control over key circuits, such as the amygdala, nucleus accumbens (NAc), and ventral striatum (VS), at least in the case of analgesia (Atlas and Wager 2014; de La Fuente-Fernández et al. 2002; Scott et al. 2008; Yu et al. 2014). Thus, cognitive control, a current target of many cognitive intervention studies, is likely to be tightly linked to the expression of expectation effects.

A key issue then becomes the design needed to evaluate behavioral interventions and their impact. As thoroughly discussed by Green et al. (2019), the research question of interest determines the appropriate methodological design choices. Efficacy and effectiveness studies (i.e., studies meant to ask whether an intervention provides a benefit above and beyond the status quo) may benefit from leveraging expectation effects to enhance the desired cognitive outcome (to the extent that this is possible in the cognitive domain). By contrast, mechanistic studies require careful individual assessment of all mechanisms that can affect cognitive performance; as one such mechanism, researchers may want to isolate expectation effects, above and beyond the cognitive training proper, by manipulating expectations in either a positive or a negative direction (e.g., Sinke et al. 2016).

Using these two methodologies side by side, although certainly not in a sufficiently systematic manner, the field of medicine has already established that the magnitude of clinical responses following expectation manipulations can at times be as large as that induced by true drug administration, but that expectation-induced effects tend to last for shorter durations than true drug effects (Benedetti et al. 2018); that clinical responses tend to be more variable when induced by expectations than by drug treatments; and, finally and perhaps crucially in relation to cognitive training, that some people respond to expectations whereas others do not. In particular, personality traits such as optimism (Kern et al. 2020), empathy (Colloca and Benedetti 2009), and suggestibility (de Pascalis et al. 2002) have been associated with higher placebo responding, whereas pessimism, anxiety, and pain catastrophizing have been linked to higher nocebo responding (Corsi and Colloca 2017; Kern et al. 2020). Interindividual differences involve not only personality traits but also genetic variations recently identified as potential contributors to the placebo response (Hall et al. 2015). Understanding of these differences is still in its infancy, but it is highly relevant for interventions based on cognitive training and enhancement. As such, the extent to which individual differences are associated with certain types of expectations, or with reactions to expectation-induction manipulations, should be a focus of future studies (Molenaar and Campbell 2009).

Conclusions and Future Directions

At the moment, the extent to which expectations play a role in the outcome of cognitive training interventions is largely unknown. However, an ever-growing body of literature in several outside domains (e.g., pain analgesia) has demonstrated the potential power of expectations to shift human behavior. Furthermore, there are plausible mechanisms through which such outcomes could be realized in the cognitive domain, such as attention, arousal, or persistence, to cite a few. There is thus an urgent need for work that more thoroughly examines the routes through which expectations can be manipulated in the cognitive domain and the impact expectations have on the types of objective performance measures (e.g., accuracy, reaction time) that are common in the field. Here, there is certainly value in correlational research that assesses expectations in the context of typical behavioral intervention methodology, in particular for addressing various open basic science questions (e.g., when and how to make these assessments so as to maximize information gain without creating expectations, whether participants’ prior knowledge of various paradigms influences their expectations, and whether participants’ beliefs in their own susceptibility to placebo effects moderate outcomes). Yet, moving forward will also require careful experimental work. Indeed, moving forward in this latter way calls for a paradigm shift whereby, instead of being exclusively measured through explicit questionnaires, expectations are manipulated experimentally, preferably through direct experience via forms of conditioning or associative learning, which are known to result in more durable placebo/nocebo effects than verbal suggestions.
For example, work conceptually analogous to the pain studies described above might combine verbally provided expectations with deceptively altered tests of cognitive function that provide “evidence” to participants of “enhanced” or “diminished” cognitive performance. Indeed, there are potentially many ways to make cognitive tasks subjectively easier or more difficult without participants being explicitly aware that the tests have been manipulated. For example, difficulty in mental rotation increases monotonically with the magnitude of the rotation angle; thus, an “easy” session could involve many small rotations, while a “hard” session could involve many large rotations. This would produce systematic shifts in behavior that would likely be difficult for participants to detect. Such manipulations are likely to be most fruitful in experimental designs that allow careful modeling of the relative contributions of response bias and sensitivity, as well as of external and internal sources of uncertainty where possible.
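As a sketch of how such “easy” and “hard” sessions might be assembled, the code below builds balanced, shuffled trial lists from either small or large rotation angles. The angle sets, trial counts, and the same/mirror-image structure of each trial are hypothetical design choices for illustration, not a validated protocol; whether participants actually notice the manipulation would need to be checked empirically.

```python
import random

# Hypothetical angle sets (degrees); the exact ranges are illustrative choices.
EASY_ANGLES = [0, 20, 40, 60]
HARD_ANGLES = [120, 140, 160, 180]

def make_rotation_session(angles, n_trials, seed=None):
    """Return a shuffled list of (rotation_angle, is_mirror) trials.

    Each angle appears equally often, and half of the trials use a mirror-image
    comparison stimulus so that "same" and "different" answers stay balanced.
    """
    cell_count = 2 * len(angles)
    if n_trials % cell_count != 0:
        raise ValueError("n_trials must be a multiple of 2 * len(angles)")
    trials = [
        (angle, is_mirror)
        for _ in range(n_trials // cell_count)
        for angle in angles
        for is_mirror in (False, True)
    ]
    random.Random(seed).shuffle(trials)
    return trials

def mean_angle(session):
    """Average rotation angle of a session, a simple index of its difficulty."""
    return sum(angle for angle, _ in session) / len(session)

easy_session = make_rotation_session(EASY_ANGLES, 48, seed=1)
hard_session = make_rotation_session(HARD_ANGLES, 48, seed=1)
print(mean_angle(easy_session), mean_angle(hard_session))  # prints 30.0 150.0
```

Keeping the trial count, stimulus set, and response balance identical across sessions, and varying only the angle distribution, is one way to make the difficulty change hard to notice while still producing systematically different performance.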

In all, given the large number of open questions remaining (as well as the considerable variability in goals and approaches across the field), it is not clear that any specific “gold standard” can be recommended going forward. Instead, perhaps the strongest suggestion is that these questions are worth pursuing; researchers examining the impact of behavioral interventions for cognitive enhancement should therefore consider manipulating participant expectations, as well as measuring individual differences that could moderate the formation or impact of expectations. Given the cost associated with designs that directly manipulate expectations, this will include continuing to assess participant expectations in standard behavioral training studies for cognitive enhancement, as well as participant knowledge (e.g., of general trends in the field or of specific programs or paradigms). In taking such measurements, a certain degree of heterogeneity in approach (e.g., regarding when and how best to assess expectations) would be welcome. Yet, while such studies are valuable in informing future designs, they are not sufficient on their own. Only studies designed to directly contrast different methods of manipulating expectations in the context of behavioral interventions for cognitive training can fully answer the key question of whether cognitive functions can be altered in a long-lasting way through expectations.
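Where expectations or related individual differences are measured rather than manipulated, one standard way (not specified in this article) to ask whether they moderate a training effect is a regression with a group × expectation interaction term. The following is a minimal sketch with NumPy, assuming a 0/1 group code, a continuous expectation score, and a pre/post gain score as the outcome; the function name moderation_fit and the simulated data are hypothetical.

```python
import numpy as np


def moderation_fit(gain, group, expectation):
    """Fit gain = b0 + b1*group + b2*exp_c + b3*(group*exp_c) by ordinary
    least squares, where exp_c is the mean-centered expectation score.

    Returns the four coefficients by name. b3 ("group_x_expectation") is the
    moderation term: the change in the training effect per unit of expectation."""
    group = np.asarray(group, dtype=float)
    exp_c = np.asarray(expectation, dtype=float)
    exp_c = exp_c - exp_c.mean()  # centering makes b1 the effect at mean expectation
    X = np.column_stack([np.ones_like(group), group, exp_c, group * exp_c])
    coefs, *_ = np.linalg.lstsq(X, np.asarray(gain, dtype=float), rcond=None)
    names = ("intercept", "group", "expectation", "group_x_expectation")
    return {name: float(b) for name, b in zip(names, coefs)}


# Usage with simulated (not real) data:
rng = np.random.default_rng(0)
n = 200
group = rng.integers(0, 2, n)          # 0 = control, 1 = training
expectation = rng.normal(0.0, 1.0, n)  # e.g., a questionnaire score
gain = 0.4 * group + 0.3 * group * expectation + rng.normal(0.0, 1.0, n)
print(moderation_fit(gain, group, expectation))
```

In practice the interaction coefficient would be reported with a standard error or confidence interval, which this sketch omits. Because expectation is measured rather than assigned here, a non-zero interaction remains correlational evidence only, which is one reason studies that directly manipulate expectations are still needed.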

Data Availability

Not applicable.

Code Availability

Not applicable.

Notes

In the scope of this article, we use the terms efficacy and effectiveness as defined in A Dictionary of Epidemiology (Last et al. 2001): Efficacy refers to the extent to which a specific intervention is beneficial under ideal conditions. Effectiveness is a measure of the extent to which a specific intervention, when deployed in the field in routine care, does what it is intended to do for a specific population.

Note that our goal in doing so is not to compare the potential real-world significance of effects in different domains. Rather, by considering cognitive training interventions alongside medical trials such as those for pain, our aim is to highlight potential lessons from these medical domains that the cognitive training literature can build on.

References

Albring, A., Wendt, L., Benson, S., Nissen, S., Yavuz, Z., Engler, H., et al. (2014). Preserving learned immunosuppressive placebo response: perspectives for clinical application. Clinical Pharmacology and Therapeutics, 96(2), 247–255. https://doi.org/10.1038/clpt.2014.75.

Amanzio, M., & Benedetti, F. (1999). Neuropharmacological dissection of placebo analgesia: expectation-activated opioid systems versus conditioning-activated specific subsystems. Journal of Neuroscience, 19(1), 484–494. https://doi.org/10.1523/jneurosci.19-01-00484.1999.

Anguera, J. A., Boccanfuso, J., Rintoul, J. L., Al-Hashimi, O., Faraji, F., Janowich, J., et al. (2013). Video game training enhances cognitive control in older adults. Nature, 501(7465), 97–101. https://doi.org/10.1038/nature12486.

Atlas, L. Y., & Wager, T. D. (2014). A meta-analysis of brain mechanisms of placebo analgesia: consistent findings and unanswered questions. Handbook of Experimental Pharmacology, 225, 37–69. https://doi.org/10.1007/978-3-662-44519-8_3.

Au, J., Sheehan, E., Tsai, N., Duncan, G. J., Buschkuehl, M., & Jaeggi, S. M. (2015). Improving fluid intelligence with training on working memory: a meta-analysis. Psychonomic Bulletin & Review, 22(2), 366–377. https://doi.org/10.3758/s13423-014-0699-x.

Ballesteros, S., Mayas, J., Prieto, A., Ruiz-Marquez, E., Toril, P., & Reales, J. M. (2017). Effects of video game training on measures of selective attention and working memory in older adults: results from a randomized controlled trial. Frontiers in Aging Neuroscience, 9, 354. https://doi.org/10.3389/fnagi.2017.00354.

Bandura, A. (1977). Self-efficacy: toward a unifying theory of behavioral change. Psychological Review, 84(2), 191–215.

Bandura, A. (1980). Gauging the relationship between self-efficacy judgment and action. Cognitive Therapy and Research, 4(2), 263–268. https://doi.org/10.1007/BF01173659.

Bandura, A., Reese, L., & Adams, N. E. (1982). Microanalysis of action and fear arousal as a function of differential levels of perceived self-efficacy. Journal of Personality and Social Psychology, 43(1), 5–21. https://doi.org/10.1037/0022-3514.43.1.5.

Baniqued, P. L., Kranz, M. B., Voss, M. W., Lee, H., Cosman, J. D., Severson, J., & Kramer, A. F. (2014). Cognitive training with casual video games: points to consider. Frontiers in Psychology, 4, 1010. https://doi.org/10.3389/fpsyg.2013.01010.

Baniqued, P. L., Allen, C. M., Kranz, M. B., Johnson, K., Sipolins, A., Dickens, C., et al. (2015). Working memory, reasoning, and task switching training: transfer effects, limitations, and great expectations? PLoS One, 10(11), e0142169. https://doi.org/10.1371/journal.pone.0142169.

Barth, J., Kern, A., Lüthi, S., & Witt, C. M. (2019). Assessment of patients’ expectations: development and validation of the Expectation for Treatment Scale (ETS). BMJ Open, 9(6), 1–10. https://doi.org/10.1136/bmjopen-2018-026712.

Bavelier, D., Levi, D. M., Li, R. W., Dan, Y., & Hensch, T. K. (2010). Removing brakes on adult brain plasticity: from molecular to behavioral interventions. Journal of Neuroscience, 30(45), 14964–14971. https://doi.org/10.1523/JNEUROSCI.4812-10.2010.

Bediou, B., Adams, D. M., Mayer, R. E., Tipton, E., Green, C. S., & Bavelier, D. (2018). Meta-analysis of action video game impact on perceptual, attentional, and cognitive skills. Psychological Bulletin, 144(1), 77–110. https://doi.org/10.1037/bul0000130.

Benedetti, F., Pollo, A., Lopiano, L., Lanotte, M., Vighetti, S., & Rainero, I. (2003). Conscious expectation and unconscious conditioning in analgesic, motor, and hormonal placebo/nocebo responses. Journal of Neuroscience, 23(10), 4315–4323. https://doi.org/10.1523/JNEUROSCI.23-10-04315.2003.

Benedetti, F., Pollo, A., & Colloca, L. (2007). Opioid-mediated placebo responses boost pain endurance and physical performance: is it doping in sport competitions? Journal of Neuroscience, 27(44), 11934–11939. https://doi.org/10.1523/JNEUROSCI.3330-07.2007.

Benedetti, F., Amanzio, M., Rosato, R., & Blanchard, C. (2011). Nonopioid placebo analgesia is mediated by CB1 cannabinoid receptors. Nature Medicine, 17(10), 1228–1230. https://doi.org/10.1038/nm.2435.

Benedetti, F., Piedimonte, A., & Frisaldi, E. (2018). How do placebos work? European Journal of Psychotraumatology, 9(sup3), 1533370. https://doi.org/10.1080/20008198.2018.1533370.

Biagianti, B., & Vinogradov, S. (2013). Computerized cognitive training targeting brain plasticity in schizophrenia. In Progress in Brain Research (Vol. 207, pp. 301–326). https://doi.org/10.1016/B978-0-444-63327-9.00011-4.

Boot, W. R., Blakely, D. P., & Simons, D. J. (2011). Do action video games improve perception and cognition? Frontiers in Psychology, 2, 226. https://doi.org/10.3389/fpsyg.2011.00226.

Boot, W. R., Simons, D. J., Stothart, C., & Stutts, C. (2013). The pervasive problem with placebos in psychology: why active control groups are not sufficient to rule out placebo effects. Perspectives on Psychological Science, 8(4), 445–454. https://doi.org/10.1177/1745691613491271.

Bowling, A., Rowe, G., Lambert, N., Waddington, M., Mahtani, K., Kenten, C., & Howe, A. (2012). The measurement of patients’ expectations for health care: a review and psychometric testing of a measure of patients’ expectations. Health Technology Assessment, 16(30).

Brehmer, Y., Westerberg, H., & Bäckman, L. (2012). Working-memory training in younger and older adults: training gains, transfer, and maintenance. Frontiers in Human Neuroscience, 6, 63. https://doi.org/10.3389/fnhum.2012.00063.

Büchel, C., Geuter, S., Sprenger, C., & Eippert, F. (2014). Placebo analgesia: a predictive coding perspective. Neuron, 81(6), 1223–1239. https://doi.org/10.1016/j.neuron.2014.02.042.

Colagiuri, B., Livesey, E. J., & Harris, J. A. (2011). Can expectancies produce placebo effects for implicit learning? Psychonomic Bulletin & Review, 18(2), 399–405. https://doi.org/10.3758/s13423-010-0041-1.

Colloca, L., & Benedetti, F. (2006). How prior experience shapes placebo analgesia. Pain, 124(1–2), 126–133. https://doi.org/10.1016/J.PAIN.2006.04.005.

Colloca, L., & Benedetti, F. (2009). Placebo analgesia induced by social observational learning. Pain, 144(1–2), 28–34. https://doi.org/10.1016/J.PAIN.2009.01.033.

Colloca, L., Lopiano, L., Lanotte, M., & Benedetti, F. (2004). Overt versus covert treatment for pain, anxiety, and Parkinson’s disease. Lancet Neurology, 3(11), 679–684. https://doi.org/10.1016/S1474-4422(04)00908-1.

Colloca, L., Sigaudo, M., & Benedetti, F. (2008). The role of learning in nocebo and placebo effects. Pain, 136(1–2), 211–218. https://doi.org/10.1016/j.pain.2008.02.006.

Colloca, L., Enck, P., & DeGrazia, D. (2016). Relieving pain using dose-extending placebos: a scoping review. Pain, 157(8), 1590–1598.

Corsi, N., & Colloca, L. (2017). Placebo and nocebo effects: the advantage of measuring expectations and psychological factors. Frontiers in Psychology, 8, 308. https://doi.org/10.3389/fpsyg.2017.00308.

Crow, R., Gage, H., Hampson, S., Hart, J., Kimber, A., & Thomas, H. (1999). The role of expectancies in the placebo effect and their use in the delivery of health care: a systematic review. Health Technology Assessment, 3(3).

de la Fuente-Fernández, R., Ruth, T. J., Sossi, V., Schulzer, M., Calne, D. B., & Stoessl, A. J. (2001). Expectation and dopamine release: mechanism of the placebo effect in Parkinson’s disease. Science, 293(5532), 1164–1166. https://doi.org/10.1126/science.1060937.

de la Fuente-Fernández, R., Schulzer, M., & Stoessl, A. J. (2002). The placebo effect in neurological disorders. Lancet Neurology, 1(2), 85–91. https://doi.org/10.1016/S1474-4422(02)00038-8.

de Lange, F. P., Heilbron, M., & Kok, P. (2018). How do expectations shape perception? Trends in Cognitive Sciences, 22(9), 764–779. https://doi.org/10.1016/j.tics.2018.06.002.

de Pascalis, V., Chiaradia, C., & Carotenuto, E. (2002). The contribution of suggestibility and expectation to placebo analgesia phenomenon in an experimental setting. Pain, 96(3), 393–402. https://doi.org/10.1016/S0304-3959(01)00485-7.

Deneve, S., & Pouget, A. (2004). Bayesian multisensory integration and cross-modal spatial links. Journal of Physiology Paris, 98(1–3), 249–258. https://doi.org/10.1016/j.jphysparis.2004.03.011.

Denison, R. N., Adler, W. T., Carrasco, M., & Ma, W. J. (2018). Humans incorporate attention-dependent uncertainty into perceptual decisions and confidence. Proceedings of the National Academy of Sciences of the United States of America, 115(43), 11090–11095. https://doi.org/10.1073/pnas.1717720115.

Deveau, J., Jaeggi, S. M., Zordan, V., Phung, C., & Seitz, A. R. (2015). How to build better memory training games. Frontiers in Systems Neuroscience, 8(243). https://doi.org/10.3389/fnsys.2014.00243.

Devilly, G. J., & Borkovec, T. D. (2000). Psychometric properties of the credibility/expectancy questionnaire. Journal of Behavior Therapy and Experimental Psychiatry, 31(2), 73–86. https://doi.org/10.1016/S0005-7916(00)00012-4.

Dowd, E. W., Kiyonaga, A., Beck, J. M., & Egner, T. (2015). Quality and accessibility of visual working memory during cognitive control of attentional guidance: a Bayesian model comparison approach. Visual Cognition, 23(3), 337–356. https://doi.org/10.1080/13506285.2014.1003631.

Dweck, C., Walton, G. M., & Cohen, G. L. (2011). Academic tenacity: mindset and skills that promote long-term learning. Bill & Melinda Gates Foundation. Retrieved from https://files.eric.ed.gov/fulltext/ED576649.pdf.

El Brihi, J., Horne, R., & Faasse, K. (2019). Prescribing placebos: an experimental examination of the role of dose, expectancies, and adherence in open-label placebo effects. Annals of Behavioral Medicine, 53(1), 16–28. https://doi.org/10.1093/abm/kay011.

Enck, P., Bingel, U., Schedlowski, M., & Rief, W. (2013). The placebo response in medicine: minimize, maximize or personalize? Nature Reviews Drug Discovery, 12(3), 191–204. https://doi.org/10.1038/nrd3923.

Foroughi, C. K., Monfort, S. S., Paczynski, M., McKnight, P. E., & Greenwood, P. M. (2016). Placebo effects in cognitive training. Proceedings of the National Academy of Sciences, 113(27), 7470–7474. https://doi.org/10.1073/pnas.1601243113.

Grahl, A., Onat, S., & Büchel, C. (2018). The periaqueductal gray and Bayesian integration in placebo analgesia. eLife, 7, e32930. https://doi.org/10.7554/eLife.32930.

Green, C. S., & Bavelier, D. (2003). Action video game modifies visual selective attention. Nature, 423(6939), 534–537. https://doi.org/10.1038/nature01647.

Green, C. S., Pouget, A., & Bavelier, D. (2010). Improved probabilistic inference as a general learning mechanism with action video games. Current Biology, 20(17), 1573–1579. https://doi.org/10.1016/j.cub.2010.07.040.

Green, C. S., Bavelier, D., Kramer, A. F., Vinogradov, S., Ansorge, U., Ball, K. K., et al. (2019). Improving methodological standards in behavioral interventions for cognitive enhancement. Journal of Cognitive Enhancement, 3(1), 2–29. https://doi.org/10.1007/s41465-018-0115-y.

Greene, B. A., & Miller, R. B. (1996). Influences on achievement: goals, perceived ability, and cognitive engagement. Contemporary Educational Psychology, 21(2), 181–192. https://doi.org/10.1006/ceps.1996.0015.

Haahr, M. T., & Hróbjartsson, A. (2006). Who is blinded in randomized clinical trials? A study of 200 trials and a survey of authors. Clinical Trials, 3(4), 360–365. https://doi.org/10.1177/1740774506069153.

Haddad, R., Lenze, E. J., Nicol, G., Miller, J. P., Yingling, M., & Wetherell, J. L. (2020). Does patient expectancy account for the cognitive and clinical benefits of mindfulness training in older adults? International Journal of Geriatric Psychiatry, 35(6), 626–632. https://doi.org/10.1002/gps.5279.

Hall, K. T., Loscalzo, J., & Kaptchuk, T. J. (2015). Genetics and the placebo effect: the placebome. Trends in Molecular Medicine, 21(5), 285–294. https://doi.org/10.1016/j.molmed.2015.02.009.

Haslam, N., Loughnan, S., & Perry, G. (2014). Meta-Milgram: an empirical synthesis of the obedience experiments. PLoS One, 9(4), e93927. https://doi.org/10.1371/journal.pone.0093927.