Abstract

There is substantial interest in the possibility that cognitive skills can be improved by dedicated behavioral training. Yet despite the large amount of work being conducted in this domain, there is not an explicit and widely agreed upon consensus around the best methodological practices. This document seeks to fill this gap. We start from the perspective that there are many types of studies that are important in this domain—e.g., feasibility, mechanistic, efficacy, and effectiveness. These studies have fundamentally different goals, and, as such, the best-practice methods to meet those goals will also differ. We thus make suggestions in topics ranging from the design and implementation of control groups, to reporting of results, to dissemination and communication, taking the perspective that the best practices are not necessarily uniform across all study types. We also explicitly recognize and discuss the fact that there are methodological issues around which we currently lack the theoretical and/or empirical foundation to determine best practices (e.g., as pertains to assessing participant expectations). For these, we suggest important routes forward, including greater interdisciplinary collaboration with individuals from domains that face related concerns. Our hope is that these recommendations will greatly increase the rate at which science in this domain advances.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

The past two decades have brought a great deal of attention to the possibility that certain core cognitive abilities, including those related to processing speed, working memory, perceptual abilities, attention, and general intelligence, can be improved by dedicated behavioral training (Au et al. 2015; Ball et al. 2002; Bediou et al. 2018; Deveau et al. 2014a; Karbach and Unger 2014; Kramer et al. 1995; Schmiedek et al. 2010; Strobach and Karbach 2016; Valdes et al. 2017). Such a prospect has clear theoretical scientific relevance, as related to our understanding of those cognitive sub-systems and their malleability (Merzenich et al. 2013). It also has obvious practical relevance. Many populations, such as children diagnosed with specific clinical disorders or learning disabilities (Franceschini et al. 2013; Klingberg et al. 2005), individuals with schizophrenia (Biagianti and Vinogradov 2013), traumatic brain injury (Hallock et al. 2016), and older adults (Anguera et al. 2013; Nahum et al. 2013; Whitlock et al. 2012), may show deficits in these core cognitive abilities, and thus could reap significant benefits from effective interventions.

There are also a host of other circumstances outside of rehabilitation where individuals could potentially benefit from enhancements in cognitive skills. These include, for instance, improving job-related performance in individuals whose occupations place heavy demands on cognitive abilities, such as military and law enforcement personnel, pilots, high-level athletes, and surgeons (Deveau et al. 2014b; Schlickum et al. 2009). Finally, achievement in a variety of academic domains, including performance in science, technology, engineering, and mathematics (STEM) fields, in scientific reasoning, and in reading ability, have also been repeatedly linked to certain core cognitive capacities. These correlational relations have in turn then sparked interest in the potential for cognitive training to produce enhanced performance in the various academic areas (Rohde and Thompson 2007; Stieff and Uttal 2015; Wright et al. 2008).

However, while there are numerous published empirical results suggesting that there is reason for optimism that some or all of these goals are within our reach, the field has also been subject to significant controversy, concerns, and criticisms recommending that such enthusiasm be appreciably dampened (Boot et al. 2013; Melby-Lervag and Hulme 2013; Shipstead et al. 2012; Simons et al. 2016). Our goal here is not to adjudicate between these various positions or to rehash prior debates. Instead, the current paper is forward looking. We argue that many of the disagreements that have arisen in our field to-date can be avoided in the future by a more coherent and widely agreed-upon set of methodological standards in the field. Indeed, despite the substantial amount of research that has been conducted in this domain, as well as the many published critiques, there is not currently an explicitly delineated scientific consensus outlining the best methodological practices to be utilized when studying behavioral interventions meant to improve cognitive skills.

The lack of consensus has been a significant barrier to progress at every stage of the scientific process, from basic research to translation. For example, on the basic research side, the absence of clear methodological standards has made it difficult-to-impossible to easily and directly compare results across studies (either via side-by-side contrasts or in broader meta-analyses). This limits the field’s ability to determine what techniques or approaches have shown positive outcomes, as well as to delineate the exact nature of any positive effects—e.g., training effects, transfer effects, retention of learning, etc. On the translational side, without such standards, it is unclear what constitutes scientifically acceptable evidence of efficacy or effectiveness. This is a serious problem both for researchers attempting to demonstrate efficacy and for policy makers attempting to determine whether efficacy has, in fact, been demonstrated.

Below, we lay out a set of broad methodological standards that we feel should be adopted within the domain focused on behavioral interventions for cognitive enhancement. As will become clear, we strongly maintain that a “gold standard methodology,” as exists in clinical or pharmaceutical trials, is not only a goal that our field can strive toward, but is indeed one that can be fully met. We also appreciate though that not every study in our domain will require such methodology. Indeed, our domain is one in which there are many types of research questions—and with those different questions come different best-practice methodologies that may not include constraints related to, for example, blinding or placebo controls. Finally, while we recognize that many issues in our field have clear best practices solutions, there are a number of areas wherein we currently lack the theoretical and empirical foundations from which to determine best practices. This paper thus differs from previous critiques in that rather than simply noting those issues, here we lay out the steps that we believe should be taken to move the field forward.

We end by noting that although this piece is written from the specific perspective of cognitive training, the vast majority of the issues that are covered are more broadly relevant to any research domain that employs behavioral interventions to change human behavior. Some of these interventions do not fall neatly within the domain of “cognitive training,” but they are nonetheless conducted with the explicit goal of improving cognitive function. These include interventions involving physical exercise and/or aerobic activity (Hillman et al. 2008; Voss et al. 2010), mindfulness meditation (Prakash et al. 2014; Tang et al. 2007), video games (Colzato et al. 2014; Green and Bavelier 2012; Strobach et al. 2012), or musical interventions (Schellenberg 2004). The ideas are further clearly applicable in domains that, while perhaps not falling neatly under the banner of “cognitive enhancement,” clearly share a number of touch points. These include, for instance, many types of interventions deployed in educational contexts (Hawes et al. 2017; Mayer 2014). Finally, the issues and ideas also apply in many domains that lie well outside of cognition. These range from behavioral interventions designed to treat various clinical disorders such as post-traumatic stress disorder (PTSD) or major depressive disorder (Rothbaum et al. 2014), to those designed to decrease anti-social behaviors or increase pro-social behaviors (Greitemeyer et al. 2010). The core arguments and approaches that are developed here, as well as the description of areas in need of additional work, are thus similarly shared across these domains. Our hope is thus that this document will accelerate the rate of knowledge acquisition in all domains that study the impact of behavioral interventions. And as the science grows, so will our knowledge of how to deploy such paradigms for practical good.

Behavioral Interventions for Cognitive Enhancement Can Differ Substantially in Content and Target(s) and Thus A Common Moniker like “Brain Training” Can Be Misleading

As the literature exploring behavioral interventions for cognitive enhancement has grown, so too has the number of unique approaches adopted in this endeavor. For example, some research groups have used reasonably unaltered standard psychology tasks as training paradigms (Schmiedek et al. 2010; Willis et al. 2006), while others have employed “gamified” versions of such tasks (Baniqued et al. 2015; Jaeggi et al. 2011; Owen et al. 2010). Some groups have used off-the-shelf commercial video games that were designed with only entertainment-based goals in mind (Basak et al. 2008; Green et al. 2010), while others have utilized video games designed to mimic the look and feel of such commercial games, but with the explicit intent of placing load on certain cognitive systems (Anguera et al. 2013). Some groups have used a single task for the duration of training (Jaeggi et al. 2008), while others have utilized training consisting of many individual tasks practiced either sequentially or concurrently (Baniqued et al. 2015; Smith et al. 2009). Some groups have used tasks that were formulated based upon principles derived from neuroscience (Nahum et al. 2013), while others have used tasks inspired by Eastern meditation practices (Tang et al. 2007). In all, the range of approaches is now simply enormous, both in terms of the number of unique dimensions of variation, as well as the huge variability within those dimensions.

Unfortunately, despite vast differences in approach, there continues to exist the tendency of lumping all such interventions together under the moniker of “brain training,” not only in the popular media (Howard 2016), but also in the scientific community (Bavelier and Davidson 2013; Owen et al. 2010; Simons et al. 2016). We argue that such a superordinate category label is not a useful level of description or analysis. Each individual type of behavioral intervention for cognitive enhancement (by definition) differs from all others in some way, and thus will generate different patterns of effects on various cognitive outcome measures. There is certainly room for debate about whether it is necessary to only consider the impact of each unique type of intervention, or whether there exist categories into which unique groups of interventions can be combined. However, we urge caution here, as even seemingly reasonable sub-categories, such as “working memory training,” may still be problematic (Au et al. 2015; Melby-Lervag and Hulme 2013). For instance, the term “working memory training” can easily promote confusion regarding whether working memory was a targeted outcome or a means of training. Regardless, it is clear that “brain training” is simply too broad a category to have descriptive value.

Furthermore, it is notable that in those cases where the term “brain training” is used, it is often in the context of the question “Does brain training work?” (Howard 2016; Walton 2016). However, in the same way that the term “brain training” implies a common mechanism of action that is inconsistent with the wide number of paradigms in the field, the term “work” suggests a singular target that is inconsistent with the wide number of training targets in the field. The cognitive processes targeted by a paradigm intended to improve functioning in individuals diagnosed with schizophrenia may be quite different from those meant to improve functioning in a healthy older adult or a child diagnosed with ADHD. Similarly, whether a training paradigm serves to recover lost function (e.g., improving the cognitive skills of a 65-year old who has experienced age-related decline), ameliorate abnormal function (e.g., enhancing cognitive skills in an individual with developmental cognitive deficits), or improve normal function (e.g., improving speed of processing in a healthy 21-year old) might all fall under the description of whether cognitive training “works”—but are absolutely not identical and in many cases may have very little in common.

In many ways then, the question “Does brain training work?” is akin to the question “Do drugs work?” As is true of the term “brain training,” the term “drugs” is a superordinate category label that encompasses an incredible variety of chemicals—from those that were custom-designed for a particular purpose to those that arose “in the wild,” but now are being put to practical ends. They can be delivered in many different ways, at different doses, on different schedules, and in endless combinations. The question of whether drugs “work” is inherently defined with respect to the stated target condition(s). And finally, drugs with the same real-world target (e.g., depression), may act on completely different systems (e.g., serotonin versus dopamine versus norepinephrine).

It is undoubtedly the case, at least in the scientific community, that such broad and imprecise terms are used as a matter of expository convenience (e.g., as is needed in publication titles), rather than to actually reflect the belief that all behavioral interventions intended to improve cognition are alike in mechanisms, design, and goals (Redick et al. 2015; Simons et al. 2016). Nonetheless, imprecise terminology can easily lead to imprecise understanding and open the possibility for criticism of the field. Thus, our first recommendation is for the field to use well-defined and precise terminology, both to describe interventions and to describe an intervention’s goals and outcomes. In the case of titles, abstracts, and other text length-limited parts of manuscripts, this would include using the most precise term that these limitations allow for (e.g., “working memory training” would be more precise than “brain training,” and “dual-N-back training” would be even more precise). However, if the text length limitations do not allow for the necessary level of precision, more neutral terminology (e.g., as in our title—“behavioral interventions for cognitive enhancement”) is preferable with a transition to more precise terminology in the manuscript body.

Different Types of Cognitive Enhancement Studies Have Fundamentally Different Goals

One clear benefit to the use of more precise and better-defined terms is that research studies can be appropriately and clearly delineated given their design and goals. Given the potential real-world benefits that behavioral interventions for cognitive enhancement could offer, a great deal of focus in the domain to date has been placed on studies that could potentially demonstrate real-world impact. However, as is also true in medical research, demonstration of real-world impact is not the goal of every study.

For the purposes of this document, we differentiate between four broad, but distinct, types of research study:

-

(i)

Feasibility studies

-

(ii)

Mechanistic studies

-

(iii)

Efficacy studies

-

(iv)

Effectiveness studies

Each type of study is defined by fundamentally different research questions. They will thus differ in their overall methodological approach and, because of these differences, in the conclusions one may draw from the study results. Critically though, if properly executed, each study type provides valuable information for the field going forward. Here, we note that this document focuses exclusively on intervention studies. There are many other study types that can and do provide important information to the field (e.g., the huge range of types of basic science studies—correlational, cross-sectional, longitudinal, etc.). However, these other study types are outside the scope of the current paper and they are not specific for the case of training studies (see the “Discussion” section for a brief overview of other study types).

Below, we examine the goals of each type of study listed above—feasibility, mechanistic, efficacy, and effectiveness studies—and discuss the best methodological practices to achieve those goals. We recommend that researchers state clearly at the beginning of proposals or manuscripts the type of study that is under consideration, so that reviewers can assess the methodology relative to the research goals. And although we make a number of suggestions regarding broadly defined best methodological practices within a study type, it will always be the case that a host of individual-level design choices will need to be made and justified on the basis of specific well-articulated theoretical models.

Feasibility, Mechanistic, Efficacy, and Effectiveness Studies—Definitions and Broad Goals

Feasibility Studies

The goal of a feasibility study is to test the viability of a given paradigm or project—almost always as a precursor to one of the study designs to follow. Specific goals may include identifying potential practical or economic problems that might occur if a mechanistic, efficacy, or effectiveness study is pursued (Eldridge et al. 2016; Tickle-Degnen 2013). For instance, it may be important to know if participants can successfully complete the training task(s) as designed (particularly in the case of populations with deficits). Is the training task too difficult or too easy? Are there side effects that might induce attrition (e.g., eye strain, motion sickness, etc.)? Is training compliance sufficient? Do the dependent variables capture performance with the appropriate characteristics (e.g., as related to reliability, inter-participant variability, data distribution, performance not being at ceiling or floor, etc.)? What is the approximate effect size of a given intervention and what sample size would be appropriate to draw clear conclusions?

Many labs might consider such data collection to be simple “piloting” that is never meant to be published. However, there may be value in re-conceptualizing many “pilot studies” as feasibility studies where dissemination of results is explicitly planned (although note that other groups have drawn different distinctions between feasibility and pilot studies, see for instance, Eldridge et al. 2016; Whitehead et al. 2014). This is especially true in circumstances in which aspects of feasibility are broadly applicable, rather than being specific to a single paradigm. For instance, a feasibility study assessing whether children diagnosed with ADHD show sufficient levels of compliance in completing an at-home multiple day behavioral training paradigm unmonitored by their parents could provide valuable data to other groups planning on working with similar populations.

Implicit in this recommendation then is the notion that the value of feasibility studies depends on the extent to which aspects of feasibility are in doubt (e.g., a feasibility study showing that college-aged individuals can complete ten 1-h in-lab behavioral training sessions would be of limited value as there are scores of existing studies showing this is true). We thus suggest that researchers planning feasibility studies (or pilot studies that can be re-conceptualized as feasibility studies) consider whether reasonably minor methodological tweaks could not only demonstrate feasibility of their own particular paradigm, but also speak toward broader issues of feasibility in the field. While this might not be possible in all cases or for all questions (as some issues of feasibility are inherently and inseparably linked to a very specific aspect of a given intervention), we argue that there are, in fact, a number of areas wherein the field currently lacks basic feasibility information. Published data in these areas could provide a great deal of value to the design of mechanistic, efficacy, or effectiveness studies across many different types of behavioral interventions for cognitive enhancement. These include issues ranging from compliance with instructions (e.g., in online training studies), to motivation and attrition, to task-performance limitations (e.g., use of keyboard, mouse, joysticks, touch screens, etc.). As such, we believe there is value in researchers considering whether, with a slight shift in approach and emphasis, the piloting process that is often thought of happening “before the science has really begun” could contribute more directly to the base of knowledge in the field.

Finally, it is worth noting that a last question that can potentially be addressed by a study of this type is whether there is enough evidence in favor of a hypothesis to make a full-fledged study of mechanism, efficacy, or effectiveness potentially feasible and worth undertaking. For instance, showing the potential for efficacy in underserved or difficult-to-study populations could provide inspiration to other groups to examine related approaches in that population.

The critical to-be-gained knowledge here includes an estimate of the expected effect size, and in turn, a power estimate of the sample size that would be required to demonstrate statistically significant intervention effects (or convincing null effects). It would also provide information about whether an effect is likely to be clinically significant (which could require a higher effect size than what is necessary to reach statistical significance). While feasibility studies will not be conclusive (and all scientific discourse of such studies should emphasize this fact), they can provide both information and encouragement that can add to scientific discourse and lead to innovation. By recognizing that feasibility studies have value, for instance, in indicating whether larger-scale efficacy trials are worth pursuing, it will further serve to reduce the tendency for authors to overinterpret results (i.e., if researchers know that their study will be accepted as valuable for providing information that efficacy studies could be warranted, authors may be less prone to directly and inappropriately suggesting that their study speaks to efficacy questions).

Mechanistic Studies

The goal of a mechanistic study is to identify the mechanism(s) of action of a behavioral intervention for cognitive enhancement. In other words, the question is not whether, but how. More specifically, mechanistic studies test an explicit hypothesis, generated by a clear theoretical framework, about a mechanism of action of a particular cognitive enhancement approach. As such, mechanistic studies are more varied in their methodological approach than the other study types. They are within the scope of fundamental or basic research, but they do often provide the inspiration for applied efficacy and effectiveness studies. Thus, given their pivotal role as hypothesis testing grounds for applied studies, it may be helpful for authors to distinguish when the results of mechanistic studies indicate that the hypothesis is sufficiently mature for practical translation (i.e., experimental tests of the hypothesis are reproducible and are likely to produce practically relevant outcomes) or is instead in need of further confirmation. Importantly, we note that the greater the level of pressure to translate research from the lab to the real world, the more likely it will be that paradigms and/or hypotheses will make this transition prematurely or that the degree of real-world applicability will be overstated (of which there are many examples). We thus recommend appropriate nuance if authors of mechanistic studies choose to discuss potential real-world implications of the work. In particular, the discussion should be used to explicitly comment on whether the data indicate readiness for translation to efficacy or effectiveness studies, rather than giving the typical full-fledged nods to possible direct real-world applications (which are not among the goals of a mechanistic study).

Efficacy Studies

The goal of efficacy studies is to validate a given intervention as the cause of cognitive improvements above and beyond any placebo or expectation-related effects (Fritz and Cleland 2003; Marchand et al. 2011; Singal et al. 2014). The focus is not on establishing the underlying mechanism of action of an intervention, but on establishing that the intervention (when delivered in its planned “dose”) produces the intended outcome when compared to a placebo control or to another intervention previously proven to be efficacious. Although efficacy studies are often presented as asking “Does the paradigm produce the intended outcome?” they would be more accurately described as asking, “Does the paradigm produce the anticipated outcome in the exact and carefully controlled population of interest when the paradigm is used precisely as intended by the researchers?” Indeed, given that the goal is to establish whether a given intervention, as designed and intended, is efficacious, reducing unexplained variability or unintended behavior is key (e.g., as related to poor compliance, trainees failing to understand what is required of them, etc.).

Effectiveness Studies

As with efficacy studies, the goal of effectiveness studies is to assess whether a given intervention produces positive impact of the type desired and predicted, most commonly involving real-world impact. However, unlike efficacy studies—which focus on results obtained under a set of carefully controlled circumstances—effectiveness studies examine whether significant real-world impact is observed when the intervention is used in less than ideally controlled settings (e.g., in the “real-world”; Fritz and Cleland 2003; Marchand et al. 2011; Singal et al. 2014). For example, in the pharmaceutical industry, an efficacy study may require that participants take a given drug every day at an exact time of day for 30 straight days (i.e., the exact schedule is clearly defined and closely monitored). An effectiveness study, in contrast, would examine whether the drug produces benefits when it is used within real-world clinical settings, which might very well include poor compliance with instructions (e.g., taking the drug at different times, missing doses, taking multiple doses to “catch up,” etc.). Similarly, an efficacy study in the pharmaceutical domain might narrowly select participants (e.g., in a study of a drug for chronic pain, participants with other comorbid conditions, such as major depressive disorder, might be excluded), whereas an effectiveness trial would consider all individuals likely to be prescribed the drug, including those with comorbidities.

Effectiveness studies of behavioral interventions have historically been quite rare as compared to efficacy studies, which is a major concern for real-world practitioners (although there are some fields within the broader domain of psychology where such studies have been more common—e.g., human factors, engineering psychology, industrial organization, education, etc.). Indeed, researchers seeking to use behavioral interventions for cognitive enhancement in the real-world (e.g., to augment learning in a school setting) are unlikely to encounter the homogenous and fully compliant individuals who comprise the participant pool in efficacy studies. This in turn may result in effectiveness study outcomes that are not consistent with the precursor efficacy studies, a point we return to when considering future directions.

Critically, although we describe four well-delineated categories in the text above, in practice, studies will tend to vary along the broad and multidimensional space of study types. This is unlikely to change, as variability in approach is the source of much knowledge. However, we nonetheless recommend that investigators should be as clear as possible about the type of studies they undertake starting with an explicit description of the study goals (which in turn constrains the space of acceptable methods).

Methodological Considerations as a Function of Study Type

Below, we review major design decisions including participant sampling, control group selection, assignment to groups, and participant and researcher blinding, and discuss how they may be influenced by study type.

Participant Sampling across Study Types

One major set of differences across study types lies in the participant sampling procedures—including the population(s) from which participants are drawn and the appropriate sample size. Below, we consider how the goals of the different study types can, in turn, induce differences in the sampling procedures.

Feasibility Studies

In the case of feasibility studies, the targeted population will depend largely on the subsequent planned study or studies (typically either a mechanistic study or an efficacy study). More specifically, the participant sample for a feasibility study will ideally be drawn from a population that will be maximally informative for subsequent planned studies. Note that this will most often be the exact same population as will be utilized in the subsequent planned studies. For example, consider a set of researchers who are planning an efficacy study in older adults who live in assisted living communities. In this hypothetical example, before embarking on the efficacy study, the researchers first want to assess feasibility of the protocol in terms of: (1) long-term compliance and (2) participants’ ability to use a computer-controller to make responses. In this case, they might want to recruit participants for the feasibility study from the same basic population as they will recruit from in the efficacy study.

This does not necessarily have to be the case though. For instance, if the eventual population of interest is a small (and difficult to recruit) population of individuals with specific severe deficits, one may first want to show feasibility in a larger and easier to recruit population (at least before testing feasibility in the true population of interest). Finally, the sample size in feasibility studies will often be relatively small as compared to the other study types, as the outcome data simply need to demonstrate feasibility.

Mechanistic and Efficacy Studies

At the broadest level, the participant sampling for mechanistic and efficacy studies will be relatively similar. Both types of studies will tend to sample participants from populations intended to reduce unmeasured, difficult-to-model, or otherwise potentially confounding variability. Notably, this does not necessarily mean the populations will be homogenous (especially given that individual differences can be important in such studies). It simply means that the populations will be chosen to reduce unmeasured differences. This approach may require excluding individuals with various types of previous experience. For example, a mindfulness-based intervention might want to exclude individuals who have had any previous meditation experience, as such familiarity could reduce the extent to which the experimental paradigm would produce changes in behavior. This might also require excluding individuals with various other individual difference factors. For example, a study designed to test the efficacy of an intervention paradigm meant to improve attention in typically developing individuals might exclude individuals diagnosed with ADHD.

The sample size of efficacy studies must be based upon the results of a power analysis and ideally will draw upon anticipated effect sizes observed from previous feasibility and/or mechanistic studies. However, efficacy studies are often associated with greater variability as compared with mechanistic and feasibility studies. Hence, one consideration is whether the overall sample in efficacy studies should be even larger still. Both mechanistic and efficacy studies could certainly benefit from substantially larger samples than previously used in the literature and from considering power issues to a much greater extent.

Effectiveness Studies

In effectiveness studies, the population of interest is the population that will engage with the intervention as deployed in the real-world and thus will be recruited via similar means as would be the case in the real-world. Because recruitment of an unconstrained participant sample will introduce substantial inter-individual variability in a number of potential confounding variables, sample sizes will have to be correspondingly considerably larger for effectiveness studies as compared to efficacy studies. In fact, multiple efficacy studies using different populations may be necessary to identify potential sources of variation and thus expected power in the full population.

Control Group Selection across Study Types

A second substantial difference in methodology across study types is related to the selection of control groups. Below, we consider how the goals of the different study types can, in turn, induce differences in control group selection.

Feasibility Studies

In the case of feasibility studies, a control group is not necessarily required (although one might perform a feasibility study to assess the potential informativeness of a given control or placebo intervention). The goal of a feasibility study is not to demonstrate mechanism, efficacy, or effectiveness, but is instead only to demonstrate viability, tolerability, or safety. As such, a control group is less relevant because the objective is not to account for confounding variables. If a feasibility study is being used to estimate power, a control group (even a passive control group) could be useful, particularly if gains unrelated to the intervention of interest are expected (e.g., if the tasks of interest induce test-retest effects, if there is some natural recovery of function unattributable to the training task, etc.).

Mechanistic Studies

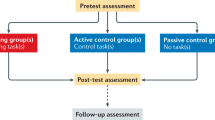

To discuss the value and selection of various types of control groups for mechanistic studies (as well as for efficacy, and effectiveness studies), it is worth briefly describing the most common design for such studies: the pre/post design (Green et al. 2014). In this design, participants first undergo a set of pre-test (baseline) assessments that measure performance along the dimensions of interest. The participants are then either randomly or pseudo-randomly assigned to a treatment group. For instance, in the most basic design, the two treatment groups would be an active intervention and a control intervention. The participants then complete the training associated with their assigned group. In the case of behavioral interventions for cognitive enhancement, this will often involve performing either a single task or set of tasks for several hours spaced over many days or weeks. Finally, after the intervention is completed, participants perform the same tasks they completed at pre-test as part of a post-test (ideally using parallel-test versions rather than truly identical versions). The critical measures are usually comparisons of pre-test to post-test changes in the treatment group as compared to a control group. For example, did participants in the intervention group show a greater improvement in performance from pre-test to post-test as compared to the participants in the control group? The purpose of the control group is thus clear—to subtract out any confounding effects from the intervention group data (including simple test-retest effects), leaving only the changes of interest. This follows from the assumption that everything is, in fact, the same in the two groups with the exception of the experimental manipulation of interest.

In a mechanistic study, the proper control group may appear to be theoretically simple to determine—given some theory or model of the mechanism through which a given intervention acts, the ideal control intervention is one that isolates the posited mechanism(s). In other words, if the goal is to test a particular mechanism of action, then the proper control will contain all of the same “ingredients” as the experimental intervention other than the proposed mechanism(s) of action. Unfortunately, while this is simple in principle, in practice it is often quite difficult because it is not possible to know with certainty all of the “ingredients” inherent to either the experimental intervention or a given control.

For example, in early studies examining the impact of what have come to be known as “action video games” (one genre of video games), the effect of training on action video games was contrasted with training on the video game Tetris as the control (Green and Bavelier 2003). Tetris was chosen to control for a host of mechanisms inherent in video games (including producing sustained arousal, task engagement, etc.), while not containing what were felt to be the critical components inherent to action video games specifically (e.g., certain types of load placed on the perceptual, cognitive, and motor systems). However, subsequent research has suggested that Tetris may indeed place load on some of these processes (Terlecki et al. 2008). Had the early studies produced null results—i.e., if the action video game trained group showed no benefits as compared to the Tetris trained group—it would have been easy to incorrectly infer that the mechanistic model was incorrect, as opposed to correctly inferring that both tasks in fact contained the mechanism of interest.

Because of this possibility, we suggest that there is significant value for mechanistic studies to consider adding a second control group—what we would call a “business as usual” control—to aid in the interpretation of null results. Such a control group (sometimes also referred to as a “test-retest” control group or passive control group) undergoes no intervention whatsoever, but completes the pre- and post-testing within the same timeframe as the trained groups. If neither the intervention group nor the active control group shows benefits relative to this second control group, this is strong evidence against either the mechanistic account itself, against the ability of the intervention to activate the proposed mechanism, or against the ability of the outcome measures to detect effects of the intervention (Roberts et al. 2016). Conversely, if both the intervention and the active control show a benefit relative to the business-as-usual control group, a range of other possibilities are suggested. For instance, it could be the case that both the intervention and active control group have properties that stimulate the proposed mechanism. It could also be the case that there is a different mechanism of action inherent in the intervention training, control training, or both, that produces the same behavioral outcome. Finally, it could be that the dependent measures are not sensitive enough to detect real differences between the outcomes. Such properties might include differential expectancies that lead to the same outcome including the simple adage that sometimes doing almost anything is better than nothing, that the act of being observed tends to induce enhancements, or any of a host of other possibilities.

Efficacy Studies

For efficacy studies, the goal of a control group is to subtract out the influence of a handful of mechanisms of “no interest”—including natural progression and participant expectations. In the case of behavioral interventions for cognitive enhancement, natural progression will include, for instance, mechanisms: (1) related to time and/or development, such as children showing a natural increase in attentional skills as they mature independent of any interventions; and (2) those related to testing, such as the fact that individuals undergoing a task for a second time will often have improved performance relative to the first time they underwent the task. Participant expectations, meanwhile, would encompass mechanisms classified as “placebo effects.” Within the medical world, these effects are typically controlled via a combination of an inert placebo control condition (e.g., sugar pill or saline drip) and participant and experimenter blinding (i.e., neither the participant nor the experimenter being informed as to whether the participant is in the active intervention condition or the placebo control condition). In the case of behavioral interventions for cognitive enhancement, it is worth noting, just as was true of mechanistic studies, that there is not always a straightforward link between a particular placebo control intervention and the mechanisms that placebo is meant to control for. It is always possible that a given placebo control intervention, that is meant to be “inert,” could nonetheless inadvertently involve mechanisms that are of theoretical interest.

Given this, in addition to a placebo control (which we discuss in its own section further below), we suggest here that efficacy studies also include a business-as-usual control group. This will help in cases where the supposed “inert placebo” control turns out to be not inert with respect to the outcomes of interest. For instance, as we will see below, researchers may wish to design an “inert” control that retains some plausibility as an active intervention for participants, so as to control for participant expectations. However, in doing so, they may inadvertently include “active” ingredients. Notably, careful and properly powered individual difference studies examining the control condition conducted prior to the efficacy study will reduce this possibility.

More critically perhaps, in the case of an efficacy study, such business-as-usual controls have additional value in demonstrating that there is no harm produced by the intervention. Indeed, it is always theoretically possible that both the active and the control intervention may inhibit improvements that would occur due to either natural progression, development, maturation, or in comparison with how individuals would otherwise spend their time. This is particularly crucial in the case of any intervention that replaces activities known to have benefits. This would be the case, for instance, of a study examining potential for STEM benefits where classroom time is replaced by an intervention, or where a physically active behavior is replaced by a completely sedentary behavior.

Effectiveness Studies

For effectiveness studies, because the question of interest is related to benefits that arise when the intervention is used in real-world settings, the proper standard against which the intervention should be judged is business-as-usual—or in cases where there is an existing proven treatment or intervention, the contrast may be against normal standard of care (this latter option is currently extremely rare in our domain, if it exists at all). In other words, the question becomes: “Is this use of time and effort in the real world better for cognitive outcomes than how the individual would otherwise be spending that time?” Or, if being compared to a current standard of care, considerations might also include differential financial costs, side effects, accessibility concerns, etc.

We conclude by noting that the recommendation that many mechanistic and all efficacy studies include a business-as-usual control has an additional benefit beyond aiding in the interpretation of the single study at hand. Namely, such a broadly adopted convention will produce a common control group against which all interventions are contrasted (although the outcome measures will likely still differ). This in turn will greatly aid in the ability to determine effect sizes and compare outcomes across interventions. Indeed, in cases where the critical measure is a difference of differences (e.g., (post-performanceintervention − pre-performanceintervention) − (post-performancecontrol − pre-performancecontrol), there is no coherent way to contrast the size of the overall effects when there are different controls across studies. Having a standard business-as-usual control group allows researchers to observe which interventions tend to produce bigger or smaller effects and take that information into account when designing new interventions. There are of course caveats, as business-as-usual and standard-of-care can differ across groups. For example, high SES children may spend their time in different ways than low-SES children, rendering it necessary to confirm that apples-to-apples comparisons are being made.

Assignment to Groups

While the section above focused on the problem of choosing appropriate control interventions, it is also important to consider how individuals are assigned to groups. Here, we will consider all types of studies together (although this is only a concern for feasibility studies in cases where the feasibility study includes multiple groups). Given a sufficiently large number of participants, true random assignment can be utilized. However, it has long been recognized that truly random assignment procedures can create highly imbalanced group membership, a problem that becomes increasingly relevant as group sizes become smaller. For instance, if group sizes are small, it would not be impossible (or potentially even unlikely) for random assignment to produce groups that are made up of almost all males or almost all females or include almost all younger individuals or almost all older individuals (depending on the population from which the sample is drawn). This in turn can create sizeable difficulties for data interpretation (e.g., it would be difficult to examine sex as a biological variable if sex was confounded with condition).

Beyond imbalance in demographic characteristics (e.g., age, sex, SES, etc.), true random assignment can also create imbalance in initial measured performance; in other words—pre-test (or baseline) differences. Pre-test differences in turn create severe difficulties in interpreting changes in the typical pre-test → training → post-test design. As just one example, consider a situation where the experimental group’s performance is worse at pre-test than the control group’s performance. If, at post-test, a significant improvement is seen in the experimental group, but not in the control group, a host of interpretations are possible. Such a result could be due to: (1) a positive effect of the intervention, (2) it could be regression to the mean due to unreliable measurements, or (3) it could be that people who start poorly have more room to show simple test-retest effects, etc. Similar issues with interpretation arise when the opposite pattern occurs (i.e., when the control group starts worse than the intervention group).

Given the potential severity of these issues, there has long been interest in the development of methods for group assignment that retain many of the aspects and benefits of true randomization while allowing for some degree of control over group balance (in particular in clinical and educational domains; Chen and Lee 2011; Saghaei 2011; Taves 1974; Zhao et al. 2012). A detailed examination of this literature is outside of the scope of the current paper. However, such promising methods have begun to be considered and/or used in the realm of cognitive training (Green et al. 2014; Jaeggi et al. 2011; Redick et al. 2013). As such, we urge authors to consider various alternative group assignment approaches that have been developed (e.g., creating matched pairs, creating homogeneous sub-groups or blocks, attempting to minimize group differences on the fly, etc.) as the best approach will depend on the study’s sample characteristics, the goals of the study, and various practical concerns (e.g., whether the study enrolls participants on the fly, in batches, all at once, etc.). For instance, in studies employing extremely large task batteries, it may not be feasible to create groups that are matched for pre-test performance on all measures. The researchers would then need to decide which variables are most critical to match (or if the study was designed to assess a smaller set of latent constructs that underlie performance on the larger set of various measures, it may be possible to match based upon the constructs). In all, our goal here is simply to indicate that not only can alternative methods of group assignment be consistent with the goal of rigorous and reproducible science, but in many cases, such methods will produce more valid and interpretable data than fully random group assignment.

Can Behavioral Interventions Achieve the Double-Blind Standard?

One issue that has been raised in the domain of behavioral interventions is whether it is possible to truly blind participants to condition in the same “gold standard” manner as in the pharmaceutical field. After all, whereas it is possible to produce two pills that look identical, one an active treatment and one an inert placebo, it is not possible to produce two behavioral interventions, one active and one inert, that are outwardly perfectly identical (although under some circumstances, it may be possible to create two interventions where the manipulation is subtle enough to be perceptually indistinguishable to a naive participant). Indeed, the extent to which a behavioral intervention is “active” depends entirely on what the stimuli are and what the participant is asked to do with those stimuli. Thus, because it is impossible to produce a mechanistically active behavioral intervention and an inert control condition that look and feel identical to participants, participants may often be able to infer their group assignment.

To this concern, we first note that even in pharmaceutical studies, participants can develop beliefs about the condition to which they have been assigned. For instance, active interventions often produce some side effects, while truly inert placebos (like sugar pills or a saline drip) do not. Interestingly, there is evidence to suggest: (1) that even in “double-blind” experiments, participant blinding may sometimes be broken (i.e., via the presence or absence of side effects; Fergusson et al. 2004; Kolahi et al. 2009; Schulz et al. 2002) and (2) the ability to infer group membership (active versus placebo) may impact the magnitude of placebo effects (Rutherford et al. 2009, although see Fassler et al. 2015).

Thus, we would argue that—at least until we know more about how to reliably measure participant expectations and how such expectations impact on our dependent variables—efficacy studies should make every attempt to adopt the same standard as the medical domain. Namely, researchers should employ an active control condition that has some degree of face validity as an “active” intervention from the participants’ perspective, combined with additional attempts to induce participant blinding (noting further that attempts to assess the success of such attempts is perhaps surprisingly rare in the medical domain—Fergusson et al. 2004; Hrobjartsson et al. 2007).

Critically, this will often start with participant recruitment—in particular using recruitment methods that either minimize the extent to which expectations are generated or serve to produce equivalent expectations in participants, regardless of whether they are assigned to the active or control intervention (Schubert and Strobach 2012). For instance, this may be best achieved by introducing the overarching study goals as examining which of two active interventions is most effective, rather than contrasting an experimental intervention with a control condition. This process will likely also benefit retention as participants are more likely to stay in studies that they believe might be beneficial.

Ideally, study designs should also, as much as is possible, include experimenter blinding, even though it is once again more difficult in the case of a behavioral intervention than in the case of a pill. In the case of two identical pills, it is completely possible to blind the experimental team to condition in the same manner as the participant (i.e., if the active drug and placebo pill are perceptually indistinguishable, the experimenter will not be able to ascertain condition from the pill alone—although there are perhaps other ways that experimenters can nonetheless become unblinded; Kolahi et al. 2009). In the case of a behavioral intervention, those experimenter(s) who engage with the participants during training will, in many cases, be able to infer the condition (particularly given that those experimenters are nearly always lab personnel who, even if not aware of the exact tasks or hypotheses, are reasonably well versed in the broader literature). However, while blinding those experimenters who interact with participants during training is potentially difficult, it is quite possible and indeed desirable to ensure that the experimenter(s) who run the pre- and post-testing sessions are blind to condition and perhaps more broadly to the hypotheses of the research (but see the “Suggestions for Funding Agencies” section below, as such practices involve substantial extra costs).

Outcome Assessments across Study Types

Feasibility Studies

The assessments used in behavioral interventions for cognitive enhancement arise naturally from the goals. For feasibility studies, the outcome variables of interest are those that will speak to the potential success or failure of a subsequent mechanistic, efficacy, or effectiveness studies. These may include the actual measures of interest in those subsequent studies, particularly if one purpose of the feasibility study is to estimate possible effect sizes and necessary power for those subsequent studies. They may also include a host of measures that would not be primary outcome variables in subsequent studies. For instance, compliance may be a primary outcome variable in a feasibility study, but not in a subsequent efficacy study (where compliance may only be measured in order to exclude participants with poor compliance).

Mechanistic Studies

For mechanistic studies, the outcomes that are assessed should be guided entirely by the theory or model under study. These will typically make use of in-lab tasks that are either thought or known to measure clearly defined mechanisms or constructs. Critically, for mechanistic studies focused on true learning effects (i.e., enduring behavioral changes), the assessments should always take place after potential transient effects associated with the training itself have dissipated. For instance, some video games are known to be physiologically arousing. Because physiological arousal is itself linked with increased performance on some cognitive tasks, it is desireable that testing takes place after a delay (e.g., 24 h or longer depending on the goal), thus ensuring that short-lived effects are no longer in play (the same holds true for efficacy and effectiveness studies).

Furthermore, there is currently a strong emphasis in the field toward examining mechanisms that will produce what is commonly referred to as far transfer as compared to just producing near transfer. First, it is important to note that the distinction between what is “far” and what is “near” is commonly a qualitative, rather than quantitative one (Barnett and Ceci 2002). Near transfer is typically used to describe cases where training on one task produces benefits on tasks meant to tap the same core construct as the trained task using slightly different stimuli or setups. For example, those in the field would likely consider transfer from one “complex working memory task” (e.g., the O-Span) to another “complex working memory task” (e.g., Spatial Span) to be an example of near transfer. Far transfer is then used to describe situations where the training and transfer tasks are not believed to tap the exact same core construct. In most cases, this means partial, but not complete, overlap between the training and transfer tasks (e.g., working memory is believed to be one of many processes that predict performance on fluid intelligence measures, so training on a working memory task that improves performance on a fluid intelligence task would be an instance of far transfer).

Second, and perhaps more critically, the inclusion of measures to assess such “far transfer” in a mechanistic study are only important to the extent that such outcomes are indeed a key prediction of the mechanistic model. To some extent, there has been a tendency in the field to treat a finding of “only near transfer” as a pejorative description of experimental results. However, there are a range of mechanistic models where only near transfer to tasks with similar processing demands would be expected. As such, finding near transfer can be both theoretically and practically important. Indeed, some translational applications of training may only require near transfer (although true real-world application will essentially always require some degree of transfer across content).

Thus, in general, we would encourage authors to describe the similarities and differences between trained tasks and outcome measures in concrete, quantifiable terms whenever possible (whether these descriptions are in terms of task characteristics—e.g., similarities of stimuli, stimulus modality, task rules, etc.—or in terms of cognitive constructs or latent variables).

We further suggest that assessment methods in mechanistic studies would be greatly strengthened by including, and clearly specifying, tasks that are not assumed to be susceptible to changes in the proposed mechanism under study. If an experimenter demonstrates that training on Task A, which is thought to tap a specific mechanism of action, produces predictable improvements in some new Task B, which is also thought to tap that same specific mechanism, then this supports the underlying model or hypothesis. Notably, however, the case would be greatly strengthened if the same training did not also change performance on some other Task C, which does not tap the underlying specific mechanism of action. In other words, only showing that Task A produces improvements on Task B leaves a host of other possible mechanisms alive (many of which may not be of interest to those in cognitive psychology). Showing that Task A produces improvements on Task B, but not on Task C, may rule out other possible contributing mechanisms. A demonstration of a double dissociation between training protocols and pre-post assessment measures would be better still, although this may not always be possible with all control tasks. If this suggested convention of including tasks not expected to be altered by training is widely adopted, it will be critical for those conducting future meta-analyses to avoid improperly aggregating across outcome measures (i.e., it would be a mistake, in the example above, for a meta-analysis to directly combine Task B and Task C to assess the impact of training on Task A).

Efficacy Studies

The assessments that should be employed in efficacy studies lie somewhere between the highly controlled, titrated, and precisely defined lab-based tasks that will be used most commonly in mechanistic studies, and the functionally meaningful real-world outcome measurements that are employed in effectiveness studies. The broadest goal of efficacy studies is, of course, to examine the potential for real-world impact. Yet, the important sub-goal of maintaining experimental control means that researchers will often use lab-based tasks that are thought (or better yet, known) to be associated with real-world outcomes. We recognize that this link is often tenuous in the peer-reviewed literature and in need of further well-considered study. There are some limited areas in the literature where real-world outcome measures have been examined in the context of cognitive training interventions. Examples include the study of retention of driving skills (in older adults; Ross et al. 2016) or academic achievement (in children; Wexler et al. 2016) that have been measured in both experimental and control groups. Another example is psychiatric disorders (e.g., schizophrenia; Subramaniam et al. 2014), where real-world functional outcomes are often the key dependent variable.

In many cases though, the links are purely correlational. Here, we caution that such an association does not ensure that a given intervention with a known effect on lab-based measures will improve real-world outcomes. For instance, two measures of cardiac health—lower heart-rate and lower blood pressure—are both correlated with reductions in the probability of cardiac-related deaths. However, it is possible for drugs to produce reductions in heart-rate and/or blood pressure without necessarily producing a corresponding decrease in the probability of death (Diao et al. 2012). Therefore, the closer that controlled lab-based efficacy studies can get to the measurement of real-world outcomes, the better. We note that the emergence of high-fidelity simulations (e.g., as implemented in virtual reality) may help bridge the gap between well-controlled laboratory studies and a desire to observe real-world behaviors (as well as enable us to examine real-world tasks that are associated with safety concerns - such as driving). However, caution is warranted as this domain remains quite new and the extent to which virtual reality accurately models or predicts various real-world behaviors of interest is at present unknown.

Effectiveness Studies

In effectiveness studies, the assessments also spring directly from the goals. Because impact in the real-world is key, the assessments should predominantly reflect real-world functional changes. We note that “efficiency,” which involves a consideration of both the size of the effect promoted by the intervention and the cost of the intervention is sometimes utilized as a critical metric in assessing both efficacy and effectiveness studies (larger effects and/or smaller costs mean greater efficiency; Andrews 1999; Stierlin et al. 2014). By contrast, we are focusing here primarily on methodology associated with accurately describing the size of the effect promoted by the intervention in question (although we do point out places where this methodology can be costly). In medical research, the outcomes of interest are often described as patient relevant outcomes (PROs): outcome variables of particular importance to the target population. This presents a challenge for the field, though, as there are currently a limited number of patient-relevant “real-world measures” available to researchers, and these are not always applicable to all populations.

One issue that is often neglected in this domain is the fact that improvements in real-world behaviors will not always occur immediately after a cognitive intervention. Instead, benefits may only emerge during longer follow-up periods, as individuals consolidate enhanced cognitive skills into more adaptive real-world behaviors. As an analogy from the visual domain, a person with nystagmus (constant, repetitive, and uncontrolled eye-movements) may find it difficult to learn to read because the visual input is so severely disrupted. Fixing the nystagmus would provide the system with a stronger opportunity to learn to read, yet would not give rise to reading in and of itself. The benefits to these outcomes would instead only be observable many months or years after the correction.

The same basic idea is true of what have been called “sleeper” or “protective” effects. Such effects also describe situations where an effect is observed at some point in the future, regardless of whether or not an immediate effect was observed. Specifically, sleeper or protective benefits manifest in the form of a reduction in the magnitude of a natural decline in cognitive function (Jones et al. 2013; Rebok et al. 2014). These may be particularly prevalent in populations that are at risk for a severe decline in cognitive performance. Furthermore, there would be great value in multiple long-term follow-up assessments even in the absence of sleeper effects to assess the long-term stability or persistence of any findings. Again, like many of our other recommendations, the presence of multiple assessments increases the costs of a study (particularly as attrition rates will likely rise through time).

Replication—Value and Pitfalls

There have been an increasing number of calls over the past few years for more replication in psychology (Open Science 2012; Pashler and Harris 2012; Zwaan et al. 2017). This issue has been written about extensively, so here we focus on several specific aspects as they relate to behavioral interventions for cognitive enhancement. First, questions have been raised as to how extensive a change can be made from the original and still be called a “replication.” We maintain that if changes are made from the original study design (e.g., if outcome measures are added or subtracted; if different control training tasks are used; if different populations are sampled; if a different training schedule is used), then this ceases to be a replication and becomes a test of a new hypothesis. As such, only studies that make no such changes could be considered replications. Here, we emphasize that because there are a host of cultural and/or other individual difference factors that can differ substantially across geographic locations (e.g., educational and/or socioeconomic backgrounds, religious practices, etc.) that could potentially affect intervention outcomes, “true replication” is actually quite difficult. We also note that when changes are made to a previous study’s design, it is often because the researchers are making the explicit supposition that such changes yield a better test of the broadest level experimental hypothesis. Authors in these situations should thus be careful to indicate this fact, without making the claim that they are conducting a replication of the initial study. Instead, they can indicate that a positive result, if found using different methods, serves to demonstrate the validity of the intervention across those forms of variation. A negative result meanwhile may suggest that the conditions necessary to generate the original result might be narrow. In general, the suggestions above mirror the long-suggested differentiation between “direct” replication (i.e., performing the identical experiment again in new participants) and “systematic” replication (i.e., where changes are made so as to examine the generality of the finding—sometimes also called “conceptual” replication; O’Leary et al. 1978; Sidman 1966; Stroebe and Strack 2014).

We are also aware that there is a balance, especially in a world with ever smaller pools of funding, between replicating existing studies and attempting to develop new ideas. Thus, we argue that the value of replication will depend strongly on the type of study considered. For instance, within the class of mechanistic studies, it is rarely (and perhaps never) the case that a single design is the only way to test a given mechanism.

As a pertinent example from a different domain, consider the “facial feedback” hypothesis. In brief, this hypothesis holds that individuals use their own facial expressions as a cue to their current emotional state. One classic investigation of this hypothesis involved asking participants to hold a pen either in their teeth (forcing many facial muscles into positions consistent with a smile) or their lips (prohibiting many facial muscles from taking positions consistent with a smile). An initial study using this approach produced results consistent with the facial feedback hypothesis (greater positive affect when the pen was held in the teeth; Strack et al. 1988). Yet, multiple attempted replications largely failed to find the same results (Acosta et al. 2016).

First, this is an interesting exemplar given the distinction between “direct” and “conceptual” replication above. While one could argue that the attempted replications were framed as being direct replications, changes were made to the original procedures that may have substantively altered the results (e.g., the presence of video recording equipment; Noah et al. 2018). Yet, even if the failed replications were truly direct, would null results falsify the “facial feedback” hypothesis? We would suggest that such results would not falsify the broader hypothesis. Indeed, the pen procedure is just one of many possible ways to test a particular mechanism of action. For example, recent work in the field has strongly indicated that facial expressions should be treated as trajectories rather than end points (i.e., it is not just the final facial expression that matters, the full set of movements that gave rise to the final expression matter). If pen procedure does not effectively mimic the full muscle trajectory of a smile, it might not be the best possible test of the broader theory.

Therefore, such a large-scale replication of the pen procedure—one of many possible intervention designs—provides limited evidence for or against the posited mechanism. It instead largely provides information about the given intervention (which can easily steer the field in the wrong direction; Rotello et al. 2015). It is unequivocally the case that our understanding of the links between tasks and mechanisms is often weaker than we would like. Given this, we suggest that, in the case of mechanistic studies, there will often be more value in studies that are “extensions,” which can provide converging or diverging evidence regarding the mechanism of action, rather than in direct replications.

Conversely, the value of replication in the case of efficacy and effectiveness studies is high. In these types of studies, the critical questions are strongly linked to a single well-defined intervention. There is thus considerable value in garnering additional evidence about that very intervention.

Best Practices when Publishing

In many cases, the best practices for publishing in the domain of behavioral interventions for cognitive enhancement mirror those that have been the focus of myriad recent commentaries within the broader field of psychology (e.g., a better demarcation between analyses that are planned and those that were exploratory). Here, we primarily speak to issues that are either unique to our domain or where best practices may differ by study type.

In general, there are two mechanisms for bias in publishing that must be discussed. The first is publication bias (also known as the “file drawer problem”; Coburn and Vevea 2015). This encompasses, among other things, the tendency for authors to be more likely to submit for publication studies with positive results (most often positive results that confirm their hypotheses, but also including positive, but unexpected results), while failing to submit studies with negative or more equivocal results. It also includes the related tendency for reviewers and/or journal editors to be less likely to accept studies that show non-significant or null outcomes. The other bias is p-hacking (Head et al. 2015). This is when a study collects many outcomes and only the statistically significant outcomes are reported. Obviously, if only positive outcomes are published, it will result in a severely biased picture of the state of the field.

Importantly, the increasing recognition of the problems associated with publication bias has apparently increased the receptiveness of journals, editors, and reviewers toward accepting properly powered and methodologically sound null results. One solution to these publication bias and p-hacking problems is to rely less on p values when reporting findings in publications (Barry et al. 2016; Sullivan and Feinn 2012). Effect size measures provide information on the size of the effect in standardized form that can be compared across studies. In randomized experiments with continuous outcomes, Hedges’ g is typically reported (a version of Cohen’s d that is unbiased even with small samples); this focuses on changes in standard deviation units. This focus is particularly important in the case of feasibility studies and often also mechanistic studies, which often lack statistical power (see Pek and Flora 2017 for more discussion related to reporting effect sizes). Best practice in these studies is to report the effect sizes and p values for all comparisons made, not just those that are significant or that make the strongest argument. We also note that this practice of full reporting applies also to alternative methods to quantify statistical evidence, such as the recently proposed Bayes factors (Morey et al. 2016; Rouder et al. 2009). It would further be of value in cases where the dependent variables of interest were aggregates (e.g., via dimensionality reduction) to provide at least descriptive statistics for all variables and not just the aggregates.

An additional suggestion to combat the negative impact of selective reporting is preregistration of studies (Nosek et al. 2017). Here, researchers disclose, prior to the study’s start, the full study design that will be conducted. Critically, this includes pre-specifying the confirmatory and exploratory outcomes and/or analyses. The authors are then obligated, at the study’s conclusion, to report the full set of results (be those results positive, negative, or null). We believe there is strong value for preregistration both of study design and analyses in the case of efficacy and effectiveness studies where claims of real-world impact would be made. This includes full reporting of all outcome variables (as such studies often include sizable task batteries resulting in elevated concerns regarding the potential for Type I errors). In this, there would also potentially be value in having a third party curate the findings for different interventions and populations and provide overviews of important issues (e.g., as is the case for the Cochrane reviews of medical findings).

The final suggestion is an echo of our previous recommendations to use more precise language when describing interventions and results. In particular, here, we note the need to avoid making overstatements regarding real-world outcomes (particularly in the case of feasibility and mechanistic studies). We also note the need to take responsibility for dissuading hyperbole when speaking to journalists or funders about research results. Although obviously scientists cannot perfectly control how research is presented in the popular media, it is possible to encourage better practices. Describing the intent and results of research, as well as the scope of interpretation, with clarity, precision, and restraint will serve to inspire greater confidence in the field.

Need for Future Research

While the best practices with regard to many methodological issues seem clear, there remain a host of areas where there is simply insufficient knowledge to render recommendations.

The Many Uncertainties Surrounding Expectation Effects

We believe our field should strive to meet the standard currently set by the medical community with regard to blinding and placebo control (particularly as there is no evidence indicating that expectation effects are larger in cognitive training than in the study of any other intervention). Even if it is impossible to create interventions and control conditions that are perceptually identical (as can be accomplished in the case of an active pill and an inert placebo pill), it is possible to create control conditions that participants find plausible as an intervention. However, we also believe that we, as a field, have the potential to go beyond standards set by the medical community. This may arise, for instance, via additional research on potential benefits from placebo effects. Indeed, the practice of entirely avoiding expectation-based effects may remove an incredibly powerful intervention component from our arsenal (Kaptchuk and Miller 2015).

At present, empirical data addressing whether purely expectation-based effects are capable of driving gains within behavioral interventions for cognitive enhancement is extremely limited and what data does exist is mixed. While one short-term study indicated that expectation effects may have an impact on measured cognitive performance (Foroughi et al. 2016), other studies have failed to find an impact of either expectations or deliberately induced motivational factors (Katz et al. 2018; Tsai et al. 2018).

Although a number of critiques have indicated the need for the field to better measure and/or control for expectation effects, these critiques have not always recognized the difficulties and uncertainties associated with doing so. More importantly, indirect evidence suggests that such effects could serve as important potential mechanisms for inducing cognitive enhancement if they were purposefully harnessed. For instance, there is work suggesting a link between a variety of psychological states that could be susceptible to influence via expectation (e.g., beliefs about self-efficacy) and positive cognitive outcomes (Dweck 2006). Furthermore, there is a long literature in psychology delineating and describing various “participant reactivity effects” or “demand characteristics,” which are changes in participant behavior that occur due to the participants’ beliefs about or awareness of the experimental conditions (Nichols and Maner 2008; Orne 1962). Critically, many sub-types of participant reactivity result in enhanced performance (e.g., the Pygmalion effect, wherein participants increase performance so as to match high expectations; Rosenthal and Jacobson 1968).

There is thus great need for experimental work examining the key questions of how to manipulate expectations about cognitive abilities effectively and whether such manipulations produce significant and sustainable changes in these abilities (e.g., if effects of expectations are found, it will be critical to dissociate expectation effects that lead to better test-taking from those that lead to brain plasticity). In this endeavor, we can take lessons from other domains where placebo effects have not only been explored, but have begun to be purposefully harnessed, as in the literature on pain (see also the literature on psychotherapy; Kirsch 2005). Critically, studies in this vein have drawn an important distinction between two mechanisms that underlie expectation and/or placebo effects. One mechanism is through direct, verbal information given to participants; the other one is learned by participants, via conditioning, and appears even more powerful in its impact on behavior (Colloca and Benedetti 2006; Colloca et al. 2013).