Abstract

Partial least squares path modeling (PLSPM) and generalized structural component analysis (GSCA) constitute composite-based structural equation modeling (SEM) methods, which have attracted considerable interest among methodological and applied researchers alike. Methodological extensions of PLSPM and GSCA have appeared at rapid pace, producing different research streams with different foci and understandings of the methods and their merits. Based on a theoretical comparison of PLSPM and GSCA in terms of model specification, parameter estimation, and results evaluation, we apply a text analytics technique to identify links between dominant topics in methodological research on both methods. We find that researchers have put effort on clearly distinguishing factor and composite models and their implications for the methods’ performance. We also identify an increasing interest in more complex model specifications such as mediating effects and higher-order models. The evidence of converging and diverging PLSPM and GSCA streams of research points out opportunities for advancing the evolution of composite-based SEM.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Structural equation modeling (SEM) has become a quasi-standard tool for analyzing complex inter-relationships between observed and latent variables (Kaplan 2002). Two conceptually different approaches to SEM have been proposed: factor- and composite-based SEM (Jöreskog and Wold 1982; Rigdon et al. 2017). In factor-based SEM, as strongly influenced by the psychometric or psychological measurement tradition, unobservable conceptual variables are approximated by common factors under the assumption that each latent variable exists as an entity independent of observed variables, but serves as the sole source of the associations among the observed variables. On the contrary, composite-based SEM—influenced by traditional multivariate statistical techniques such as principal component analysis and canonical correlation analysis (e.g., Horst 1936, 1961; Hotelling 1933, 1936; Pearson 1901; Spearman 1913)—represents a latent variable by a weighted composite (or component) of observed variables, assuming that it is a deterministic aggregation of observed variables.Footnote 1

Partial least squares path modeling (PLSPM; Wold 1966, 1973, 1982; Lohmöller 1989) and generalized structured component analysis (GSCA; Hwang and Takane 2004, 2014) are full-fledged approaches to composite-based SEM and are comparable in scope and capability, although many multivariate methods can also be considered to fall into the domain of composite-based SEM (Hwang and Takane 2014, see Chapter 2). Particularly PLSPM has gained massive dissemination during the last decade, especially in the social sciences (e.g., Ali et al. 2018; Hair et al. 2012; Ringle et al. 2019), but also in other fields of scientific inquiry such as agricultural science, engineering, environmental science, and medicine (e.g., Avkiran 2018; Sarstedt 2019; Willaby et al. 2015).

Reflecting on the increasing prominence of the method, Khan et al. (2019) recently presented a social network analysis of methodological PLSPM research. Specifically, using 84 methodological studies published in 39 journals by 145 authors from 106 universities as input, their results show that the PLSPM knowledge network is rather fragmented, with authors working in partly isolated silos. An additional burst detection analysis indicates that method comparisons and extensions, for example, to estimate common factor model data (e.g., Dijkstra and Henseler 2015) or to leverage PLSPM’s predictive capabilities (e.g., Shmueli et al. 2016), feature prominently in recent research. While Khan et al. (2019) outline the PLSPM’s domain knowledge infrastructure and identify prominent topics via simple word counts in the studies’ titles and abstracts, their study offers no insights into the semantic relationships among key topics covered in prior research. However, recognizing these relationships is important for understanding the domain’s current state of research and identifying future research opportunities. Furthermore, Khan et al.’s (2019) analysis focuses on PLSPM and did not investigate methodological research on GSCA, which has attracted considerable research attention among users of composite-based SEM and methodologists alike (e.g., Hwang et al. 2017; Jung et al. 2018; Suk and Hwang 2016).

Addressing these concerns, this study sets out to identify dominant topics that characterize the joint PLSPM and GSCA research domain. For this purpose, we apply a two-stage approach to uncover the structure in text corpora by identifying links between dominant topics via the co-occurrence of words within their textual contexts (Smith and Humphreys 2006). This approach differs from co-citation analysis, which investigates the subject similarity among central articles in a research stream by counting the number of joint citations (White and Griffith 1981). It also extends the social network and burst detection analyses employed by Khan et al. (2019), which focus on analyzing relationships among authors, institutions, and countries in the form of co-authorships and simple word pairs to identify salient topics in the field. Instead, our analysis identifies semantic patterns from lexical co-occurrence information extracted from methodological publications on PLSPM and GSCA.

In what follows, we first discuss similarities and differences between PLSPM and GSCA in model specification, parameter estimation, and results evaluation. Describing and contrasting the foundations of PLSPM and GSCA allows establishing the grounds for a unified view on composite-based modeling, which is important to path the way for future method developments and application practices in studies. We then introduce the method and the data used in our concept analysis of methodological research on PLSPM and GSCA. The next section depicts the dominant topics derived from the analysis and puts these into relationship with recent research on related topics. In doing so, we differentiate between two periods, 1979–2013 and 2014–2017, to disclose trending and fading topics.

2 PLSPM and GSCA: similarities and differences

While PLSPM and GSCA share the same objective of analyzing complex inter-relationships between observed and latent variables, both methods differ in the way they achieve this aim. In the following, we highlight several similarities and differences, focusing on aspects related to model specification, estimation, and results evaluation.

2.1 Model specification

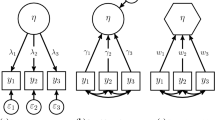

Model specification in PLSPM and GSCA involves two sub-models—the measurement (or outer) models and the structural (or inner) model. The measurement model is used to specify the relationships between indicators and latent variables, whereas the structural model expresses the relationships between latent variables.

Let z and γ denote vectors of all indicators and latent variables, respectively. PLSPM and GSCA contemplate the following measurement model:

where C is a matrix of loadings relating the indicators (z) and the latent variables (γ), and ε is the disturbance term of the indicators. The loading is the zero-order correlation between a latent variable and an indicator.

PLSPM additionally considers another measurement model, where a latent variable (γ) is modeled as a linear function of its associated indicators (z):

where H is a matrix of indicator weights derived from a regression of each latent variable on the indicators of its measurement model, and θ is the disturbance term of the latent variables.

The measurement model specification in Eq. (1) is typically associated with the term “reflective measurement model”, where the indicators are viewed as imperfect reflections of the underlying construct (MacKenzie et al. 2011). However, this can be misleading in composite-based SEM, because it is typically used in the context of common factor models to indicate that a latent variable “causes” the indicators to covary. In other words, when controlling for the impact of the latent variable, the indicator correlations are zero, also known as the axiom of local independence (Lazarsfeld 1959). Similarly, in PLSPM, researchers typically associate the measurement model specification in Eq. (2) with the term “formative measurement model”, where the indicators combine to form the construct (MacKenzie et al. 2011). However, the terms “reflective” and “formative” refer to the theoretical specification of a construct, which is different from how PLSPM and GSCA statistically estimate the models. No matter if one specifies the measurement model according to Eqs. (1) or (2), composite-based SEM methods such as PLSPM and GSCA always compute weighted composites of indicators to represent the latent variables in the statistical model (Rigdon et al. 2017; Sarstedt et al. 2016).

In PLSPM and GSCA, the structural model of the relationships between the latent variables (γ) can be generally expressed as:

where B is a matrix of path coefficients and ζ is the disturbance term of the dependent latent variables. In addition to the measurement and structural models, GSCA has another sub-model, called the weighted relation model, which explicitly defines latent variables (γ) as weighted composites of indicators (z), as follows:

where W is a matrix of (component) weights assigned to indicators. A key difference in model specification is whether the aforementioned sub-models are combined into a single formulation for specifying an entire structural equation model. GSCA integrates its sub-models into a unified formulation (i.e., a single equation), as follows:

where I is an identity matrix, V = \(\left[ {\begin{array}{*{20}c} {\mathbf{I}} \\ {\mathbf{W}} \\ \end{array} } \right]\), and A = \(\left[ {\begin{array}{*{20}c} {\mathbf{C}} \\ {\mathbf{B}} \\ \end{array} } \right]\). This is called the GSCA model. Note that this model can also be expressed as:

where u = \(\left[ {\begin{array}{*{20}c} {\mathbf{I}} \\ {\mathbf{W}} \\ \end{array} } \right]{\mathbf{z}}\) and T = \(\left[ {\begin{array}{*{20}c} {\mathbf{0}} & {\mathbf{C}} \\ {\mathbf{0}} & {\mathbf{B}} \\ \end{array} } \right]\). This model is essentially of the same form as the reticular action model (RAM; McArdle and McDonald 1984), which is mathematically the most compact one amongst several formulations for factor-based SEM, including the LISREL (Jöreskog 1970; Jöreskog 1973) and the Bentler–Weeks (Bentler and Weeks 1980) models. The difference between GSCA and RAM is that GSCA defines latent variables as composites; that is, γ = Wz in Eq. (4), whereas the RAM defines it as a (common) factor. On the other hand, PLSPM does not combine its sub-models into a single equation as GSCA does based on Eq. (5). This dissimilarity in the number of equations needed for the entire model specification in turn leads to differences in the set-up of the parameter estimation algorithms used by the two approaches.

2.2 Model estimation

Model estimation in PLSPM—as implemented in most software programs—draws on Lohmöller’s (1989) extension of Wold’s (1982) original PLSPM algorithm, which belongs to the family of (alternating) least squares algorithms (Mateos-Aparicio 2011). PLSPM estimates model parameters such that the model’s residual variances are minimized (Jöreskog and Wold 1982). To achieve this aim, PLSPM carries out two computational stages sequentially. The first stage returns the latent variable scores as weighted sums of their associated indicators by either using correlation weights (Mode A) or regression weights (Mode B) per measurement model. Correlation weights are technically equivalent to zero-order correlations between a latent variable and each of its assigned indicators. Regression weights result from regressing a latent variable on its associated indicators. In this first stage, an iterative four-step algorithm is used to estimate the weights per measurement model. After convergence (i.e., between iterations, the sum of weights changes becomes very small; e.g., < 0.0000001), the weights are used to compute the latent variable scores as linear combinations of their indicators. The second stage uses the latent variable scores as input in a series of ordinary least squares regressions to estimate the final outer loadings (Eq. 1), outer weights (Eq. 2), the structural model path coefficients (Eq. 3), and the R2 values of the dependent latent variables. The second stage is, thus, non-iterative and simply based on the latent variable scores obtained from the first stage. Consequently, the first stage is the most crucial in PLSPM (Hanafi 2007).Footnote 2

GSCA has the goal to minimize a single optimization criterion of the model. Let zi denote a vector of indicators measured on a single observation of a sample of N observations (i = 1, …, N). To estimate weights (W) and path coefficients and loadings (A) in Eq. (5), GSCA seeks to minimize the sum of all squared residuals (ei) over N observations. This is equivalent to minimizing the following least squares criterion:

with respect to W and A.

For this purpose, GSCA estimates all parameters in one stage by using an iterative algorithm with two steps named alternating least squares (ALS; De Leeuw et al. 1976). The ALS algorithm divides the entire set of parameters into two subsets—W and A. The algorithm begins by assigning arbitrary initial values to W and A, and subsequently carries out two steps per iteration. The first step obtains the least squares estimates of W by minimizing Eq. (7) only with respect to W, considering A fixed temporarily. The second step obtains the least squares estimates of A by minimizing the same criterion only with respect to A, while considering W constant. The two steps are repeated until convergence; for example, the change of the criterion value between iterations becomes smaller than a pre-determined threshold value (e.g., 0.0001). We refer to Hwang and Takane (2014, Appendix 2.1) for a detailed description of the ALS algorithm.

A main difference in parameter estimation is that GSCA optimizes a single criterion to estimate all parameters concurrently and utilizes all information available from the entire system of equations. On the contrary, PLSPM does not involve a single criterion, but rather splits its parameters into two sets and estimates each set iteratively by using a subset of equations at a time, which uses the results of the other subset as input. For these reasons, GSCA is a full-information method, whereas PLSPM is a limited-information method (Tenenhaus 2008). In general, full-information methods are known to be more efficient under correct model specification (Antonakis et al. 2010; Fomby et al. 2012, see Chapter 22), whereas limited-information methods tend to be robust to model misspecification (Bollen et al. 2007; Gerbing and Hamilton 1994). In addition, the estimation procedure of GSCA via ALS appears technically more straightforward and easier to understand than the procedure of PLSPM, which has been criticized for its complexity (e.g., McDonald 1996; Tenenhaus 2008). When using the PLSPM algorithm, for instance, researchers must choose to either use correlations weights (Mode A) or regression weights (Mode B) per measurement model. However, which choice best supports certain model estimation objectives is subject of further research (Dijkstra 2017). Primary results in this direction by Becker et al. (2013a) substantiate that correlation weights (Mode A) produce a higher out-of-sample predictive power under a broad range of conditions and better parameter accuracy when sample sizes are small and the model’s effect sizes are moderate to strong. But most importantly, the absence of a single optimization criterion in PLSPM makes it difficult to impose certain constraints (e.g., equality constraints) on parameters or to fix specific parameters (Tenenhaus 2008). On the contrary, GSCA allows to impose parameter constraints in its estimation procedure (e.g., Hwang and Takane 2014, Chapter 3).

PLSPM and GSCA have recently been extended to address the most common criticism of composite-based SEM that it has no formal way of modeling errors in indicators (e.g., Bentler and Huang 2014; Takane and Hwang 2018), although extracting a weighted composite from a set of indicators contributes to accounting for measurement error (Gleason and Staelin 1973; Henseler et al. 2014; Rigdon 2012). For example, recent research has brought forward consistent partial least squares (PLSc; Dijkstra 2010; Dijkstra and Henseler 2015). The method follows a composite modeling logic but mimics a common factor model (Sarstedt et al. 2016). To do so, the method first computes the model parameters using the standard PLSPM algorithm and correlation weights to obtain the results for the outer loadings, outer weights, path coefficients, and R2 values. Then, PLSc corrects these estimates for attenuation by using the constructs’ reliability coefficients ρA (Dijkstra and Henseler 2015):

whereby \(h\) represents the estimated outer weights vector of the latent variable and S is the empirical covariance matrix of the latent variable’s indicators. PLSc also employs ρA to compute adjusted outer loadings \(\hat{c}\) as follows:

In the structural model, PLSc adjusts the PLSPM correlations \({\text{corr}}\left( {\gamma_{i} ,\gamma_{j} } \right)\) between all pairs of latent variables \(\gamma_{i}\) and \(\gamma_{j}\) as follows:

Then, PLSc utilizes the matrix of the changed correlations \({\text{corr}}\left( {\tilde{\gamma }_{i} ,\tilde{\gamma }_{j} } \right)\) to estimate the adjusted path coefficients for each dependent latent variable and its R2 values by means of ordinary least squares regressions.

To mimic common factor model results in a GSCA context, Hwang et al. (2017) proposed GSCAM that includes both common and unique parts of each indicator, under the assumption that the unique parts are equivalent to measurement errors, as postulated in common factor analysis or factor-based SEM. Like GSCA, GSCAM estimates all parameters simultaneously, taking into account measurement errors.

Both PLSc and GSCAM provide parameter estimates comparable to those of factor-based SEM. Thus, researchers may use these extensions when factor-based SEM does not converge or converges to improper solutions and small sample sizes or complex model specifications permit addressing these issues. Nonetheless, a main difference between PLSc and GSCAM is that GSCAM does not require the basic design in model specification and parameter estimation. The basic design can often be restrictive in practice, leading to the exclusion of multidimensional latent variables that have been well studied in the literature (Asparouhov and Muthén 2009). For instance, multitrait–multimethod models (Campbell and Fiske 1959) and latent growth curve models (Meredith and Tisak 1990; Duncan et al. 2013) include multidimensional latent variables. In this regard, GSCAM seems to be more flexible than PLSc. Irrespective of this, rather than mimicking factor-based SEM results, researchers should generally revert to the much more widely recognized and validated factor-based SEM method when estimating factor models (Hair et al. 2017a).

2.3 Results evaluation

Differences in model estimation entail distinct model evaluation criteria to be used in PLSPM and GSCA, which are well documented in the extant literature (e.g., Hair et al. 2017b; Hwang and Takane 2014; see Table 1).

For PLSPM, the first step in results evaluation involves examining the measurement models. For reflective measurement models, researchers need to assess the indicator and construct reliabilities, convergent validity, and discriminant validity. Formative measurement models need to be assessed with regard to convergent validity, multicollinearity, and the significance and relevance of the indicator weights (Sarstedt et al. 2017). The second step in PLSPM-based results evaluation considers the structural model. This step focuses on the significance and relevance of the path coefficients and the model’s explanatory power (i.e., the R2) as well as its predictive power (e.g., using PLSpredict; Shmueli et al. 2016, 2019). Researchers have also proposed various criteria for assessing a PLS path model’s goodness-of-fit (e.g., GFI and SRMR; Lohmöller 1989; Henseler et al. 2014). However, recent research calls the appropriateness of these metrics and their proposed thresholds into question as the metrics don’t align with the functional principles of the PLSPM algorithm (Hair et al. 2019b). Instead, researchers should focus on predictive model evaluation, which conforms to PLSPM’s causal-predictive nature (Jöreskog and Wold 1982). Researchers should also consider different model configurations and engage in (predictive) model comparisons (Sharma et al. 2019a, b).

The assessment of GSCA results (see Hwang and Takane 2014) can be carried out based on global fit criteria such as FIT and AFIT. These criteria represent a form of average R2 value of both the indicators in the measurement model and the dependent latent variables in the structural model. Moreover, the GFI and SRMR are global fit measures that consider the difference between the sample covariance matrix and the model-implied covariance matrix. Similarly to the FIT and AFIT criteria, the indices FITM and FITS separately indicate how much the variance of indicators (and latent variables) is on average accounted for by a measurement (and the structural) model. GSCA also supports the assessment of each measurement model’s composite reliability (Ryoo and Hwang 2017), as well as predictability of the entire model or sub-models via cross-validation (Cho et al. 2019). Furthermore, various aforementioned local fit criteria that PLSPM adopts can also be used for GSCA (Hwang and Takane 2014, Chapter 2).

Since PLSPM and GSCA are non-parametric, both methods rely on resampling methods, such as bootstrapping (Efron 1979, 1982), to obtain the parameters’ standard errors (e.g., Chin 2001; Hwang and Takane 2004). These allow for computing test statistics or confidence intervals, which facilitate testing the significance of path coefficients and other model parameters of interest (most notably indicator weights).

Even though the criteria differ, results evaluation in PLSPM and GSCA put strong emphasis on the explained variance of the model’s dependent constructs. In addition, while GSCA emphasizes goodness-of-fit testing, researchers using PLSPM have recently put greater emphasis on prediction-oriented model assessment (Shmueli et al. 2019). We expect that these views will converge in order to exploit the composite-based SEM’s causal-predictive capabilities in the future.

3 Concept analysis

3.1 Methodology

To identify dominant topics that characterize the joint PLSPM and GSCA research domain, we apply a combination of semantic and relational extraction from text, referred to as Leximancer (Smith and Humphreys 2006). The approach has been used in various fields including communication studies (Chevalier et al. 2018), marketing (e.g., Babin and Sarstedt 2019; Fritze et al. 2018; Wilden et al. 2017), and different areas of life sciences (e.g., Day et al. 2018; Kilgour et al. 2019; Rigo et al. 2018) to identify dominant themes in research streams.

In its first stage (semantic extraction), the method uses word frequencies and co-occurrences to produce a ranked list of lexical terms. This list seeds a bootstrapping algorithm, which extracts a set of classifiers from the text by iteratively extending the seed word definitions. The resulting weighted term classifiers are referred to as concepts and represent words that carry related meanings (Smith and Humphreys 2006). In its second stage (relational extraction), the method uses the concepts identified in the previous stage to classify text segments (typically every two or three sentences; Leximancer 2018). Specifically, using the relative concept co-occurrence frequency as input, the method generates a two-dimensional concept map based on a variant of the spring-force model for the many-body problem (Chalmers and Chitson 1992). The connectedness of each concept in the underlying network is used to group the concepts into higher-level themes, which aid interpretation of the network’s structure. Themes typically consist of several highly connected concepts, which can be used to characterize the corresponding region of the network—as visualized in the concept map. When themes comprise only a single concept, they usually appear as isolates in the border region of the concept map.

3.2 Data

For the analysis, we first included all 84 articles used in Khan et al.’s (2019) network analysis of the PLSPM research domain. In the next step, we used the Web of Science (WoS) to retrieve additional articles that deal with GSCA and other composite-based SEM methods. Specifically, we entered the following search query into the WoS search engine to find publications across all the databases: “composite-based SEM” OR “composite-based structural equation modeling” OR “GSCA” OR “GESCA” OR “generalized structure component analysis”. To remain consistent with Khan et al.’s (2019) list, we considered all publications from 1965 to early 2017. This second search retrieved an initial number of 24 articles, which three professors proficient in SEM then independently classified. Relevant articles identified in the second search primarily deal with generalized canonical correlation analysis (e.g., Tenenhaus and Tenenhaus 2014; Tenenhaus et al. 2015), GSCA (Hwang and Takane 2004) and its various extensions (e.g., Hwang and Takane 2004; Hwang et al. 2007, 2010).

As a result, our analysis considers 108 papers published between 1979 and 2017. Most of these articles were published in Journal of Business Research (10 articles, 9.26%), Long Range Planning (8 articles, 7.41%), Psychometrika (7 articles, 6.48%), Computational Statistics & Data Analysis, and Ind Manag Data Syst (both 6 articles, 5.56%), showing that composite-based SEM methods have a strong standing in both, prominent applied and renowned statistics journals. A detailed breakdown of the publication years shows that the field experienced a sharp increase in publications in 2014 and later. Specifically, 64 of the 108 articles (59.26%) stem from this time period. Therefore, we performed (1) an analysis of all papers, and (2) separate analyses of the time periods 1979–2013 and 2014–2017. We first performed several training runs on the overall data separated by time periods to identify generic concepts that do not offer any insights into the research domain. As a result of these training runs, we excluded concepts such as “article”, “number”, “paper”, “table”, and “use” from the subsequent analyses. In addition, we removed all name-like concepts such as “Chin” and “Wold”.

4 Results

Table 2 shows the extracted themes (i.e., groups of concepts) from the analysis of all papers and by time periods, including each theme’s number of hits per analysis. The two most dominant themes are latent and analysis. Also, from 1979 to 2013, the theme model played a particularly important role, while from 2014 to 2017 effects and value represent became relevant themes.

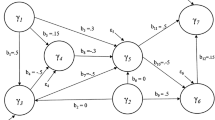

Table 3 presents the breakdown of the themes and corresponding concepts, showing all themes with more than one concept per theme (i.e., other than the theme itself) in any of the analyses, sorted by overall hits. Figure 1 shows the concept map resulting from the analyses showing all the derived concepts and their groupings into themes. Finally, Fig. 2 displays the concept map from 1979 to 2013 while Fig. 3 shows the concept map from 2014 to 2017. The dots in each of the maps represent the concepts, while the circles represent the themes. The size of each circle indicates the number of concepts belonging to each theme, thereby also defining the boundaries to neighboring themes. The themes are heat-mapped to indicate importance. That is, a theme comprising many concepts that are mentioned frequently within the textual data is considered important and appears in red in the map. The second most important theme appears in orange, and so on according to the color wheel. Similarly, the size of a concept’s dot reflects its connectivity in the concept map. The larger the dot, the more often the concept is coded in the text along with the other concepts in the map. In addition, distances between the dots indicate how closely the concepts are related. For example, concepts that appear together often in the same text element tend to settle near one another in the map space (e.g., empirical and theory in the effects theme) .

The analysis yields the dominant theme latent, which comprises a multitude of concepts related to measurement models (Table 3; Fig. 1). Contrasting the two time periods shows that earlier research has put greater emphasis on the distinction between reflective and formative measurement models (Table 3; Figs. 2, 3). In fact, recent research in psychometrics has witnessed considerable debates regarding the nature and applicability of formative measurement (e.g., Aguirre-Urreta et al. 2016; Bentler 2016; Howell and Breivik 2016), which have also impacted the way methodological research on composite-based SEM uses these concepts and related terminologies. For example, Rigdon (2016, p. 601) notes that “the terms ‘formative’ and ‘reflective’ only obscure the statistical reality” and that researchers should rather distinguish between common factor proxies and composite proxies, and between regression weighted composites and correlation weighted composites (Ryoo and Hwang 2017). Similarly, Henseler et al. (2016a, p. 6) avoid the distinction between reflective and formative measurement, instead noting that “the specification of the measurement model entails decisions for composite or factor models”. Sarstedt et al. (2016) however argue that the distinction is still relevant in the context of measurement conceptualization, which needs to be distinguished from how SEM methods treat the measures statistically.

Another prominent theme is analysis, which strongly relates to method comparisons, spanning across both time periods (Table 3; Fig. 1). Research in the field has a long-standing tradition of comparing composite-based with factor-based SEM methods on the grounds of simulated data. The vast majority of these studies used factor model populations as the benchmark against which the parameter estimates from composite-based SEM methods were evaluated (e.g., Goodhue et al. 2012; Rönkkö and Evermann 2013). Researchers have long warned that such comparisons are akin to “comparing apples with oranges” (Marcoulides et al. 2012, p. 725) and only more recent studies have evaluated composite-based SEM methods on the grounds of correctly specified population (i.e., composite) models (Hair et al. 2017c; Sarstedt et al. 2016). This strand of research is also reflected in the emergence of the theme performance in more recent studies.

The third most prominent theme labeled effects shows a divergent development over time. Earlier research related to this theme had a stronger focus on the analysis of interaction effects as evidenced in several prominent publications on this topic (e.g., Chin et al. 2003; Henseler and Chin 2010; Henseler et al. 2012). More recent research, however, focuses on other model specification types such as mediating effects (e.g., Nitzl et al. 2016) and hierarchical component models (e.g., Cheah et al. 2019; Ciavolino et al. 2015; Sarstedt et al. 2019a).

The analysis with regard to the theme model shows that the assessment of unobserved heterogeneity was a dominant concept in earlier research (Table 3; Fig. 2). In this time period, research has brought forward several latent class approaches for capturing unobserved heterogeneity, including FIMIX-PLS (e.g., Hair et al. 2016; Sarstedt et al. 2019b), fuzzy clusterwise GSCA (Hwang et al. 2007), and PLS-POS (Becker et al. 2013b). More recently proposed latent class procedures in PLSPM—PLS-GAS (Ringle et al. 2014) and PLS-IRRS (Schlittgen et al. 2016)—have not reinforced the heterogeneity concept in current research (Table 3; Fig. 3), despite ongoing research in related fields such a multigroup comparisons (Henseler et al. 2016b). A potential reason for this surprising finding could be that new guidelines for PLSPM analyses (Henseler et al. 2016a; Henseler 2017) neglect the concept of unobserved heterogeneity, despite its obvious importance to ensure the validity of results (Becker et al. 2013b; Hair et al. 2019b; Jedidi et al. 1997).

The analysis also produced the theme value, which, in recent research, relates to the concepts of customer and satisfaction. A more detailed analysis shows that many of the studies in the field use customer or job satisfaction data to illustrate methodological extensions such as quantile composite-based SEM (Davino and Vinzi 2016), GSCA with uniqueness terms (Hwang et al. 2017), or cross-validation in PLSPM (Reguera-Alvarado et al. 2016).

Several of the themes identified in the analysis only appear as isolates in either of the time periods (Table 3). For example, the theme bootstrapping relates to the studies by Kock (2016), Rönkkö et al. (2015), and Streukens and Leroi-Werelds (2016) on statistical inference in PLSPM. The theme test refers goodness-of-fit testing in PLSPM, which has experienced renewed interest in recent research. For example, while Henseler et al. (2016a) and Henseler (2017) call for the routine use of model fit measures such as SRMR, Sarstedt et al. (2016) comment critically on the applicability of measures grounded in a comparison of empirical and model-implied correlation matrices in a PLSPM context (also see Hair et al. 2019a). Finally, the theme validity appears in the overall analysis, pointing to researchers’ ongoing interest in related concepts such as discriminant validity (Henseler et al. 2015), which extends to very recent research (Franke and Sarstedt 2019).

5 Discussion

Fostered by recent methodological advances and the availability of easy-to-use software programs, composite-based SEM methods—particularly PLSPM and GSCA—have gained massive traction in recent years (Hair et al. 2019a, Ringle 2019). Methodological research has constantly advanced PLSPM and GSCA to accommodate a broad range of data and model constellations. Recent examples include methods for assessing a model’s predictive power (Shmueli et al. 2016, 2019), addressing endogeneity (Hult et al. 2018; Sarstedt et al. 2019a), and model comparisons (Sharma et al. 2019a, b). At the same time, conceptual considerations have given composite-based SEM methods significant tailwind. Specifically, researchers have started questioning the reflex-like applicability of factor-based methods, calling for a broader scope, which also considers composites as an integral element of measurement. For example, Bentler and Huang (2014, p. 138) note that “composite variable models are probably far more prevalent overall than latent variable models”. Similarly, Grace and Bollen (2008, p. 210) note that “composites have, we believe, great potential to facilitate our ability to create models that are empirically meaningful and also of theoretical relevance”. Rhemtulla et al. (2019) have recently echoed these observations, noting that latent variable models have been over-applied in psychiatry, clinical psychology, and various other fields of life sciences (also see Henseler et al. 2014). These observations certainly pave the way for composite-based SEM methods, which we expect to play an increasingly important role in all fields of science.

Composite-based SEM methods’ prominence is fostered by increasing doubts on long-held beliefs regarding the factors that differentiate composite- from factor-based SEM methods. For example, researchers have relativized PLSPM’s small sample size capabilities by identifying concrete situations in which the method performs well vis-á-vis other SEM methods when limited data are available (e.g., Goodhue et al. 2012; Hair et al. 2017c, 2019b). More importantly, many researchers have criticized composite-based SEM methods for not being able to reduce measurement error (Rönkkö and Evermann 2013), which has been debunked as wrong (Henseler et al. 2014). More importantly, however, Rigdon et al. (2019) show that factor-based SEM methods induce a significant degree of measurement uncertainty, which is a “parameter, associated with the result of a measurement, that characterizes the dispersion of the values that could reasonably be attributed to the measurand” (JCGM/WG1 2008, Sect. 2.2.3). Uncertainty quantifies a researcher’s lack of knowledge about the value of the measurand and directly blurs the relationship between latent variables and the concepts that they seek to represent. By acknowledging uncertainty as an integral part of any measurement, “researchers would derive substantial benefit from a full accounting, and a fresh debate, relating to the compromises involved in using either common factors or weighted composites as stand-ins or proxies for conceptual variables” (Rigdon et al. 2019, p. 440). We expect that this perspective will change the nature of the debate regarding the relative merits of factor- vs. composite-based SEM methods in the long-run (also see Rigdon et al. 2017).

Our concept mapping of composite-based SEM research illustrates the field’s maturation. The results suggest that researchers have become aware of the conceptual differences between composite and factor models and their implications for the methods’ performance. Specifically, researchers have started evaluating composite-based methods under (composite) models that are consistent with what the methods assume, finding support for their consistency (Hair et al. 2017c). Sarstedt et al. (2016) investigated the robustness of covariance structure analysis and PLSPM when incorrectly applied to the composite-based and factor-based models, respectively. These authors found that in this situation, PLSPM yields more accurate parameter estimates on average than covariance structure analysis, indicating that PLSPM is more robust against being used for models with its incomparable latent variables (i.e., factors). We expect that future studies will build on this research and further examine the methods’ performance under (in)consistent model specifications.

Furthermore, our analysis documents an increased interest in model evaluation metrics such as for the assessment of discriminant validity testing (Henseler et al. 2015) and internal consistency reliability (Dijkstra and Henseler 2015). Researchers have also developed methods for assessing a model’s out-of-sample predictive power (Shmueli et al. 2016, 2019; Cho et al. 2019) or measures for comparing different models in this respect (Sharma et al. 2019b). We expect this development to prevail as composite-based methods do not follow a strict confirmatory perspective, like factor-based SEM, but adhere to a causal-predictive paradigm. As Hair et al. (2019a, p. 3) note in the context of business research applications, PLSPM “overcomes the apparent dichotomy between explanation—as typically emphasized in academic research—and prediction, which is the basis for developing managerial implications”.

Finally, we would like to envision what we believe are the most pressing challenges in composite-based SEM research. With regard to PLSPM, future research should extend the method’s modeling capabilities to permit relating a manifest variable to multiple constructs simultaneously. GSCA already supports this modeling option, which paves the way for running an explorative composite analysis. In the structural model, modeling advances in PLSPM may support non-recursive models (i.e., circular path relationships), bidirectional relationships, and constraining paths. Also, the PLSPM method developments should follow calls to facilitate longitudinal and panel data analyses (Richter et al. 2016). Current treatments of longitudinal data (Roemer 2016) are rather ad hoc and do not truly take time variant effects into account. Similarly, multilevel modeling in PLSPM is a concern for future methodological developments. Often, the assessment of common methods variance represents an issue that researchers must address in their PLSPM analysis. Even though prior research tackled this question (Chin et al. 2013), PLSPM lacks methodological support and a straightforward procedure on how to assess and treat common methods variance. The same call holds for GSCA. Finally, in the social sciences, researchers usually only focus on confirmatory tests and results evaluations but often neglect the relevance of their models’ predictive power (Shmueli and Koppius 2011). Future research also need to complement recent efforts to establish predictive model assessment criteria (Shmueli et al. 2016; Sharma et al. 2019b), for example, by developing a test for predictive model comparisons. Similar needs hold for GSCA.

In GSCA, it would be fruitful to also extend the modeling capabilities and to consider alternative objective criteria of the algorithm. For example, GSCA should follow recent developments in PLSPM (Hult et al. 2018) and identify means for dealing with endogeneity that generally refers to situations where independent variables are correlated with residual terms in either the measurement or structural model. One may consider replacing the current ordinary least squares estimator with the instrumental variable estimator in each step of the ALS algorithm. In practice, auxiliary covariates (e.g., gender, age, ethnicity, etc.) often lead to heterogeneous subgroups of observations. GSCA can be extended to consider such covariate-dependent heterogeneity to examine whether the relationships among indicators and latent variables vary across subgroups differentiated by covariates. It may be combined with recursive partitioning (e.g., Strobl et al. 2009) to capture this heterogeneity. In addition, it would be desirable to develop an integrated approach to GSCA and GSCAM in order to simultaneously accommodate two statistical representations of latent variables—common factors and composites. Such an integrated framework can contribute to bridging the two SEM approaches. The same holds for PLSPM. Lastly, future endeavors are needed to provide a combined view of PLSPM and GSCA, for example, by developing a unified model formulation and/or estimation procedure for both composite-based SEM approaches.

Notes

Throughout the manuscript, we use the terms composites and components interchangeably.

Note that the original presentation of the PLSPM algorithm also considers a third stage, which deals with the estimation of location parameters of the indicators and latent variables. We refer to Lohmöller et al. (1989) and Tenenhaus et al. (2005) for a detailed description of the PLSPM algorithm (also see Hair et al. 2017b; Hwang et al. 2015; Wold 1982).

References

Aguirre-Urreta MI, Rönkkö M, Marakas GM (2016) Omission of causal indicators: consequences and implications for measurement. Meas: Interdiscip Res Perspect 14(3):75–97

Ali F, Rasoolimanesh SM, Sarstedt M, Ringle CM, Ryu K (2018) An assessment of the use of partial least squares structural equation modeling (PLS-SEM) in hospitality research. Int J Contemp Hosp Manag 30(1):514–538

Antonakis J, Bendahan S, Jacquart P, Lalive R (2010) On making causal claims: a review and recommendations. Leadership Quart 21(6):1086–1120

Asparouhov T, Muthén B (2009) Exploratory structural equation modeling. Struct Equ Model: A Multidiscip J 16(3):397–438

Avkiran NK (2018) An in-depth discussion and illustration of partial least squares structural equation modeling in health care. Health Care Manag Sci 21(3):401–408

Babin BJ, Sarstedt M (2019) The great facilitator. In Babin BJ, Sarstedt M (eds) The great facilitator. Reflections on the contributions of Joseph F. Hair, Jr. to marketing and business research. Springer Nature, Cham, pp 1–7

Becker J-M, Rai A, Rigdon E (2013a) Predictive validity and formative measurement in structural equation modeling: embracing practical relevance. In: Proceedings of the 34th International Conference on Information Systems, Milan, Italy

Becker J-M, Rai A, Ringle CM, Völckner F (2013b) Discovering unobserved heterogeneity in structural equation models to avert validity threats. MIS Quart 37(3):665–694

Bentler PM (2016) Causal indicators can help to interpret factors. Meas Interdiscip Res Perspect 14(3):98–100

Bentler PM, Huang W (2014) On components, latent variables, PLS and simple methods: reactions to Rigdon’s rethinking of PLS. Long Range Plan 47(3):138–145

Bentler PM, Weeks DG (1980) Linear structural equations with latent variables. Psychometrika 45(3):289–308

Bollen KA, Kirby JB, Curran PJ, Paxton PM, Chen F (2007) Latent variable models under misspecification: two-stage least squares (2SLS) and maximum likelihood (ML) estimators. Sociol Methods Res 36(1):48–86

Campbell DT, Fiske DW (1959) Convergent and discriminant validation by the multitrait-multimethod matrix. Psychol Bull 56(2):81

Chalmers M, Chitson P (1992). Bead: Explorations in information visualisation. In: Belkin NJ, Ingwersen P, Pejtersen AM (eds) Proceedings of the 15th Annual ACM SIGIR conference on research and development in information retrieval. ACM Press, New York, pp 330–337

Cheah J-H, Ting H, Ramayah T, Memon MA, Cham T-H, Ciavolino E (2019) A comparison of five reflective–formative estimation approaches: reconsideration and recommendations for tourism research. Qual Quant 53(3):1421–1458

Chevalier BA, Watson BM, Barras MA, Cottrell WN, Angus DJ (2018) Using discursis to enhance the qualitative analysis of hospital pharmacist-patient interactions. PLoS One 13(5):e0197288

Chin WW (2001) PLS-Graph user’s guide version 3.0

Chin WW, Marcolin BL, Newsted PR (2003) A partial least squares latent variable modeling approach for measuring interaction effects: results from a Monte Carlo simulation study and an electronic-mail emotion/adoption study. Inf Syst Res 14(2):189–217

Chin WW, Thatcher JB, Wright RT, Steel D (2013) Controlling for common method variance in PLS analysis: the measured latent marker variable approach. In: Herve A, Chin WW, Esposito Vinzi V, Russolillo G, Trinchera L (eds) New perspectives in partial least squares and related methods. Springer, Berlin, pp 231–239

Cho G, Jung K, Hwang H (2019) Out-of-bag prediction error: a cross validation index for generalized structured component analyis. Multivariate Behavioral Research (forthcoming)

Ciavolino E, Carpita M, Nitti M (2015) High-order pls path model with qualitative external information. Qual Quant 49(4):1609–1620

Davino C, Vinzi VE (2016) Quantile composite-based path modeling. Adv Data Anal Classif 10(4):491–520

Day NJ, Hunt A, Cortis-Jones L, Grenyer BF (2018) Clinician attitudes towards borderline personality disorder: a 15-year comparison. Personal Mental Health 12(4):309–320

De Leeuw J, Young FW, Takane Y (1976) Additive structure in qualitative data: an alternating least squares method with optimal scaling features. Psychometrika 41(4):471–503

Dijkstra TK (2010) Latent variables and indices: Herman Wold’s basic design and partial least squares. In: Esposito Vinzi V, Chin WW, Henseler J, Wang H (eds) Handbook of partial least squares. Springer, Berlin, pp 23–46

Dijkstra TK (2017) A perfect match between a model and a mode. In: Latan H, Noonan R (eds) Partial least squares path modeling: basic concepts, methodological issues and applications. Springer, Berlin, pp 55–80

Dijkstra TK, Henseler J (2015) Consistent partial least squares path modeling. MIS Quart 39(2):297–316

Duncan TE, Duncan SC, Strycker LA (2013) An introduction to latent variable growth curve modeling: concepts, issues, and applications. Lawrence Erlbaum Associates, Mahwah

Efron B (1979) Bootstrap methods: another look at the jackknife. Ann Stat 7:1–26

Efron B (1982) The jackknife, the bootstrap, and other resampling plans. Society for Industrial and Applied Mathematics, Philadelphia

Fomby TB, Hill RC, Johnson SR (2012) Advanced econometric methods. Springer, Berlin

Franke GR, Sarstedt M (2019) Heuristics versus statistics in discriminant validity testing: a comparison of four procedures. Internet Research (forthcoming)

Fritze MP, Urmetzer F, Khan GF, Sarstedt M, Neely A, Schäfers T (2018) From goods to services consumption: a social network analysis on sharing economy and servitization research. J Serv Manag Res 2(3):3–16

Gerbing DW, Hamilton JG (1994) The surprising viability of a simple alternate estimation procedure for construction of large-scale structural equation measurement models. Struct Equ Model: Multidiscip J 1(2):103–115

Gleason TC, Staelin R (1973) Improving the metric quality of questionnaire data. Psychometrika 38(3):393–410

Goodhue DL, Lewis W, Thompson R (2012) Does PLS have advantages for small sample size or non-normal data? MIS Quart 36(3):981–1001

Grace JB, Bollen KA (2008) Representing general theoretical concepts in structural equation models: the role of composite variables. Environ Ecol Stat 15(2):191–213

Hair JF, Sarstedt M, Ringle CM, Mena JA (2012) An assessment of the use of partial least squares structural equation modeling in marketing research. J Acad Mark Sci 40(3):414–433

Hair JF, Sarstedt M, Matthews L, Ringle CM (2016) Identifying and treating unobserved heterogeneity with FIMIX-PLS: part I—method. Eur Bus Rev 28(1):63–76

Hair JF, Hollingsworth CL, Randolph AB, Chong AYL (2017a) An updated and expanded assessment of PLS-SEM in information systems research. Ind Manag Data Syst 117(3):442–458

Hair JF, Hult GTM, Ringle CM, Sarstedt M (2017b) A primer on partial least squares structural equation modeling (PLS-SEM), 2nd edn. Sage, Thousand Oaks

Hair JF, Hult GTM, Ringle CM, Sarstedt M, Thiele KO (2017c) Mirror, mirror on the wall: a comparative evaluation of composite-based structural equation modeling methods. J Acad Market Sci 45(5):616–632

Hair JF, Risher JJ, Sarstedt M, Ringle CM (2019a) When to use and how to report the results of PLS-SEM. Eur Bus Rev 31(1):2–24

Hair JF, Sarstedt M, Ringle CM (2019b) Rethinking some of the rethinking of partial least squares. Eur J Market 53(4):566–584

Hanafi M (2007) PLS path modelling: computation of latent variables with the estimation mode B. Comput Stat 22(2):275–292

Henseler J (2017) Bridging design and behavioral research with variance-based structural equation modeling. J Advert 46(1):178–192

Henseler J, Chin WW (2010) A comparison of approaches for the analysis of interaction effects between latent variables using partial least squares path modeling. Struct Equ Model Multidiscip J 17(1):82–109

Henseler J, Fassott G, Dijkstra TK, Wilson B (2012) Analysing quadratic effects of formative constructs by means of variance-based structural equation modelling. Eur J Inf Syst 21(1):99–112

Henseler J, Dijkstra TK, Sarstedt M, Ringle CM, Diamantopoulos A, Straub DW, Ketchen DJ Jr, Hair JF, Hult GTM, Calantone RJ (2014) Common beliefs and reality about PLS: comments on Rönkkö and Evermann (2013). Organ Res Methods 17(2):182–209

Henseler J, Ringle CM, Sarstedt M (2015) A new criterion for assessing discriminant validity in variance-based structural equation modeling. J Acad Mark Sci 43(1):115–135

Henseler J, Hubona G, Ray PA (2016a) Using PLS path modeling in new technology research: updated guidelines. Ind Manag Data Syst 116(1):2–20

Henseler J, Ringle CM, Sarstedt M (2016b) Testing measurement invariance of composites using partial least squares. Int Market Rev 33(3):405–431

Horst P (1936) Obtaining a composite measure from a number of different measures of the same attribute. Psychometrika 1(1):53–60

Horst P (1961) Relations among m sets of measures. Psychometrika 26(2):129–149

Hotelling H (1933) Analysis of a complex of statistical variables into principal components. J Educ Psychol 24(6):498–520

Hotelling H (1936) Relations between two sets of variates. Biometrika 28(3/4):321–377

Howell RD, Breivik E (2016) Causal indicator models have nothing to do with measurement. Meas Interdiscip Res Perspect 14(4):167–169

Hult GTM, Hair JF, Proksch D, Sarstedt M, Pinkwart A, Ringle CM (2018) Addressing endogeneity in international marketing applications of partial least squares structural equation modeling. J Int Market 26(3):1–21

Hwang H, Takane Y (2004) Generalized structured component analysis. Psychometrika 69(1):81–99

Hwang H, Takane Y (2014) Generalized structured component analysis: A component-based approach to structural equation modeling. Chapman and Hall/CRC, Boca Raton

Hwang H, Desarbo WS, Takane Y (2007) Fuzzy clusterwise generalized structured component analysis. Psychometrika 72(2):181–198

Hwang H, Ho M-HR, Lee J (2010) Generalized structured component analysis with latent interactions. Psychometrika 75(2):228–242

Hwang H, Takane Y, Tenenhaus A (2015) An alternative estimation procedure for partial least squares path modeling. Behaviormetrika 42(1):63–78

Hwang H, Takane Y, Jung K (2017) Generalized structured component analysis with uniqueness terms for accommodating measurement error. Front Psychol 8:2137

JCGM/WG1 (2008) Evaluation of measurement data—guide to the expression of uncertainty in measurement. Technical Report. https://www.bipm.org/utils/common/documents/jcgm/JCGM_100_2008_E.pdf

Jedidi K, Jagpal HS, DeSarbo WS (1997) Finite-mixture structural equation models for response-based segmentation and unobserved heterogeneity. Market Sci 16(1):39–59

Jöreskog K (1970) A general method for analysis of covariance structures. Biometrika 57(2):409–426

Jöreskog KG (1973) Analysis of covariance structures. In: Krishnaiah PR (ed) Multivariate analysis–III Proceedings of the third international symposium on multivariate analysis held at Wright State University, Dayton, Ohio, June 19–24, 1972. Academic Press, Cambridge, pp 263–285

Jöreskog KG, Wold HOA (1982) The ML and PLS techniques for modeling with latent variables: historical and comparative aspects. In: Jöreskog KG, Wold HOA (eds) Systems under indirect observation, part I. North-Holland, Amsterdam, pp 263–270

Jung K, Panko P, Lee J, Hwang H (2018) A comparative study on the performance of GSCA and CSA in parameter recovery for structural equation models with ordinal observed variables. Front Psychol 9:2461

Kaplan D (2002) Structural equation modeling. International Encyclopedia of the Social & Behavioral Sciences, Pergamon

Khan GF, Sarstedt M, Shiau W-L, Hair JF, Ringle CM, Fritze M (2019) Methodological research on partial least squares structural equation modeling (PLS-SEM): An analysis based on social network approaches. Internet Research (forthcoming)

Kilgour C, Bogossian FE, Callaway L, Gallois C (2019) Postnatal gestational diabetes mellitus follow-up: perspectives of Australian hospital clinicians and general practitioners. Women Birth 32(1):24–33

Kock N (2016) Hypothesis testing with confidence intervals and p-values in PLS-SEM. Int J e-Collab 12(3):1–6

Lazarsfeld PF (1959) Latent structure analysis. In: Hoch S (ed) Psychology: a study of a science 3. McGraw-Hill, New York, pp 476–543

Leximancer (2018) Leximancer user guide release 4.5. Leximancer Pty Ltd

Lohmöller J-B (1989) Latent variable path modeling with partial least squares. Springer, Berlin

MacKenzie SB, Podsakoff PM, Podsakoff NP (2011) Construct measurement and validation procedures in MIS and behavioral research: integrating new and existing techniques. MIS Quart 35(2):293–334

Marcoulides GA, Chin WW, Saunders C (2012) When imprecise statistical statements become problematic: a response to Goodhue, Lewis, and Thompson. MIS Quart 36(3):717–728

Mateos-Aparicio G (2011) Partial least squares (PLS) methods: origins, evolution, and application to social sciences. Commun Stat-Theory Methods 40(13):2305–2317

McArdle JJ, McDonald RP (1984) Some algebraic properties of the reticular action model for moment structures. Br J Math Stat Psychol 37(2):234–251

McDonald RP (1996) Path analysis with composite variables. Multivar Behav Res 31(2):239–270

Meredith W, Tisak J (1990) Latent curve analysis. Psychometrika 55(1):107–122

Nitzl C, Roldán JL, Cepeda Carrión G (2016) Mediation analysis in partial least squares path modeling: helping researchers discuss more sophisticated models. Ind Manag Data Syst 119(9):1849–1864

Pearson K (1901) On lines and planes of closest fit to systems of points in space. Lond Edinburgh, Dublin Philos Mag J Sci 2(11):559–572

Reguera-Alvarado N, Blanco-Oliver A, Martín-Ruiz D (2016) Testing the predictive power of PLS through cross-validation in banking. J Bus Res 69(10):4685–4693

Rhemtulla M, van Bork R, Borsboom D (2019) Worse than measurement error: Consequences of inappropriate latent variable measurement models. Working Paper

Richter NF, Sinkovics RR, Ringle CM, Schlaegel C (2016) A critical look at the use of SEM in international business research. Int Market Rev 33(3):376–404

Rigdon EE (2012) Rethinking partial least squares path modeling: in praise of simple methods. Long Range Plan 45(5–6):341–358

Rigdon EE (2016) Choosing PLS path modeling as analytical method in European management research: a realist perspective. Eur Manag J 34(6):598–605

Rigdon EE, Sarstedt M, Ringle CM (2017) On comparing results from CB-SEM and PLS-SEM. Five perspectives and five recommendations. Marketing ZFP 39(3):4–16

Rigdon EE, Becker J-M, Sarstedt M (2019) Factor indeterminacy as metrological uncertainty: implications for advancing psychological measurement. Multivar Behav Res 54(3):429–443

Rigo M, Willcox J, Spence A, Worsley A (2018) Mothers’ perceptions of toddler beverages. Nutrients 10(3):374

Ringle CM (2019) What Makes a Great Textbook? Lessons Learned from Joe Hair. In Babin BJ, Sarstedt M (eds) The great facilitator. Reflections on the contributions of Joseph F. Hair, Jr. to marketing and business research. Springer Nature Switzerland, Cham, pp 131–150

Ringle CM, Sarstedt M, Schlittgen R (2014) Genetic algorithm segmentation in partial least squares structural equation modeling. OR Spectrum 36(1):251–276

Ringle CM, Sarstedt M, Mitchell R, Gudergan SP (2019) Partial least squares structural equation modeling in HRM research. The International Journal of Human Resource Management (forthcoming)

Roemer E (2016) A tutorial on the use of PLS path modeling in longitudinal studies. Ind Manag Data Syst 116(9):1901–1921

Rönkkö M, Evermann J (2013) A critical examination of common beliefs about partial least squares path modeling. Org Res Methods 16(3):425–448

Rönkkö M, McIntosh CN, Antonakis J (2015) On the adoption of partial least squares in psychological research: caveat emptor. Pers Individ Differ 87:76–84

Ryoo JH, Hwang H (2017) Model evaluation in generalized structured component analysis using confirmatory tetrad analysis. Front Psychol 8:916

Sarstedt M (2019) Der Knacks and a silver bullet. In Babin BJ, Sarstedt M (eds) The great facilitator. Reflections on the contributions of Joseph F. Hair, Jr. to marketing and business research. Springer Nature Switzerland, Cham, pp 155–164

Sarstedt M, Hair JF, Ringle CM, Thiele KO, Gudergan SP (2016) Estimation issues with PLS and CBSEM: where the bias lies! J Bus Res 69(10):3998–4010

Sarstedt M, Hair JF, Ringle CM (2017) Partial least squares structural equation modeling. In: Klarmann M, Vomberg A (eds) Homburg C. handbook of market research springer, Berlin

Sarstedt M, Hair JF, Cheah, J-H, Becker JM, Ringle CM (2019a) How to specify, estimate, and validate higher-order constructs in PLS-SEM. Aus Mark J (forthcoming)

Sarstedt M, Ringle CM, Cheah J-H, Ting H, Moisescu OI, Radomir L (2019b) Structural model robustness checks in PLS-SEM. Tourism Econ (forthcoming)

Schlittgen R, Ringle CM, Sarstedt M, Becker J-M (2016) Segmentation of PLS path models by iterative reweighted regressions. J Bus Res 69(10):4583–4592

Sharma PN, Sarstedt M, Shmueli G, Kim KH, Thiele KO (2019a) PLS-based model selection: The role of alternative explanations in IS research. Journal of the Association for Information Systems (forthcoming)

Sharma PN, Shmueli G, Sarstedt M, Danks N, Ray S (2019b) Prediction-oriented model selection in partial least squares path modeling. Decision Sciences (forthcoming)

Shmueli G, Koppius OR (2011) Predictive analytics in information systems research. MIS Quart 35(3):553–572

Shmueli G, Ray S, Velasquez Estrada JM, Chatla SB (2016) The elephant in the room: evaluating the predictive performance of PLS models. J Bus Res 69(10):4552–4564

Shmueli G, Sarstedt M, Hair JF, Cheah J-H, Ting H, Vaithilingam S, Ringle CM (2019) Predictive model assessment in PLS-SEM: guidelines for using PLSpredict. Eur J Market (forthcoming)

Smith AE, Humphreys MS (2006) Evaluation of unsupervised semantic mapping of natural language with Leximancer concept mapping. Behav Res Methods 38(2):262–279

Spearman C (1913) Correlations of sums or differences. Br J Psychol 5(4):417–426

Streukens S, Leroi-Werelds S (2016) Bootstrapping and PLS-SEM: a step-by-step guide to get more out of your bootstrap results. Eur Manag J 34(6):618–632

Strobl C, Malley J, Tutz G (2009) An introduction to recursive partitioning: rationale, application, and characteristics of classification and regression trees, bagging, and random forests. Psychol Methods 14(4):323–348

Suk HW, Hwang H (2016) Functional generalized structured component analysis. Psychometrika 81(4):940–968

Takane Y, Hwang H (2018) Comparisons among several consistent estimators of structural equation models. Behaviormetrika 45(1):157–188

Tenenhaus M (2008) Component-based structural equation modelling. Total Qual Manag 19(7–8):871–886

Tenenhaus A, Tenenhaus M (2014) Regularized generalized canonical correlation analysis for multiblock or multigroup data analysis. Eur J Oper Res 238(2):391–403

Tenenhaus M, Vinzi VE, Chatelin Y-M, Lauro C (2005) PLS path modeling. Comput Stat Data Anal 48(1):159–205

Tenenhaus A, Philippe C, Frouin V (2015) Kernel generalized canonical correlation analysis. Comput Stat Data Anal 90:114–131

White HD, Griffith BC (1981) Author cocitation: a literature measure of intellectual structure. J Am Soc Inf Sci 32(3):163–171

Wilden R, Akaka MA, Karpen IO, Hohberger J (2017) The evolution and prospects of service-dominant logic: an investigation of past, present, and future research. J Serv Res 20(4):345–361

Willaby HW, Costa DSJ, Burns BD, MacCann C, Roberts RD (2015) Testing complex models with small sample sizes: a historical overview and empirical demonstration of what partial least squares (PLS) can offer differential psychology. Pers Individ Differ 84:73–78

Wold HOA (1966) Estimation of principal components and related models by iterative least squares. In: Krishnaiah PR (ed) Multivariate analysis–III Proceedings of the third international symposium on multivariate analysis held at Wright State University, Dayton, Ohio, June 19–24, 1972. Academic Press, Cambridge, pp 391–420

Wold HOA (1973) Nonlinear iterative partial least squares (NIPALS) modelling: Some current developments. In: Krishnaiah PR (ed) Multivariate analysis–III Proceedings of the third international symposium on multivariate analysis held at Wright State University, Dayton, Ohio, June 19–24, 1972. Academic Press, Cambridge, pp 383–407

Wold HOA (1982) Soft modeling: the basic design and some extensions. In: Jöreskog KG, Wold HOA (eds) Systems under indirect observation: Causality, structure, prediction, part II, vol 2. North Holland, Amsterdam pp 1–54

Acknowledgements

Even though this research does not explicitly refer to the use of the statistical software SmartPLS (http://www.smartpls.com), Ringle acknowledges a financial interest in SmartPLS.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Maomi Ueno.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Hwang, H., Sarstedt, M., Cheah, J.H. et al. A concept analysis of methodological research on composite-based structural equation modeling: bridging PLSPM and GSCA. Behaviormetrika 47, 219–241 (2020). https://doi.org/10.1007/s41237-019-00085-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s41237-019-00085-5