Abstract

In this paper, following very recent results of Baliarsingh (Alex Eng J 55(2):1811–1816, 2016), we first introduce the concepts of statistically \(\Omega ^{\Delta }\)-summability and \(\Omega ^{\Delta }\)-statistical convergence by means of the fractional-order difference operator \(\Delta ^{\alpha ,\beta ,\gamma }_{h}\). We also present some important inclusion relations between the newly proposed methods. Our investigation deals essentially with various summability techniques and shows how these methods lead to a number of approximation results for positive linear operators. As an application, we prove a Korovkin type approximation theorem and present an illustrative example using the generating function type Meyer-König and Zeller operator. Furthermore, we estimate the rate of convergence of approximating linear operators by means of the modulus of continuity, and we derive some Voronovskaja type results. Finally, we present some computational and geometrical interpretations to illustrate some of our approximation results.


1 Introduction and Preliminaries

This work combines two areas of mathematical research, namely statistical summability and approximation processes based on positive linear operators. To place the contributions of this article in context, we first recall some recent developments and historical comments on summability theory and its applications to approximation theorems.

The theory of summability arises from the process of summation of series and has fruitful applications in various contexts, for example, approximation theory, probability theory, quantum mechanics, analytic continuation, Fourier analysis, dynamical systems, the theory of orthogonal series, and fixed point theory. Owing to the rapid development of sequence spaces, some researchers have focused on the notion of statistical convergence, which brings a new approach to the concept of ordinary convergence. In 1935, statistical convergence was introduced by Zygmund (1959) under the name of almost convergence. In 1951, Steinhaus (1951) and Fast (1951) independently introduced the notion of statistical convergence. In the last quarter of the twentieth century, statistical convergence and statistical summability played a significant role in the development of functional analysis. Many other generalizations of these concepts have been investigated (Kadak et al. 2017; Edely and Mursaleen 2009; Fridy 1993; Kadak 2016; Mohiuddine 2016; Mursaleen et al. 2012; Connor 1989; Başarır and Konca 2017; Yeşilkayagil and Başar 2016; Nuray et al. 2016; Duman and Orhan 2008).

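Let A be a subset of the set \(\mathbb {N}\) of natural numbers and \(A_{n}=\left\{ j\le n: j\in A \right\} \). The natural density of A is defined by

$$\begin{aligned} \delta (A)=\lim _{n\rightarrow \infty }\frac{|A_{n}|}{n}, \end{aligned}$$

provided that the limit exists, where \(|A_{n}|\) denotes the cardinality of the set \(A_{n}\). A sequence \(x=(x_{j})\) is said to be statistically convergent (st-convergent) to the number L, denoted by \(st-\lim x=L\), if, for each \(\varepsilon >0,\) the set

$$\begin{aligned} \left\{ j\in \mathbb {N}: |x_{j}-L|\ge \varepsilon \right\} \end{aligned}$$

has natural density zero, or equivalently,

$$\begin{aligned} \lim _{n\rightarrow \infty }\frac{1}{n}\left| \left\{ j\le n: |x_{j}-L|\ge \varepsilon \right\} \right| =0. \end{aligned}$$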
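The definition of statistical convergence can be explored numerically. The following is a minimal sketch, not part of the original paper: the function names and the test sequence (the indicator of the perfect squares) are illustrative assumptions. The sequence is not convergent in the ordinary sense, yet the proportion of indices \(j\le n\) with \(|x_j-0|\ge \varepsilon \) shrinks as n grows, which is what \(st-\lim x=0\) requires.

```python
import math


def density_of_exceptions(x, limit, eps, n):
    """Fraction of indices j in {1, ..., n} with |x(j) - limit| >= eps."""
    count = sum(1 for j in range(1, n + 1) if abs(x(j) - limit) >= eps)
    return count / n


def indicator_of_squares(j):
    """Hypothetical test sequence: x_j = 1 if j is a perfect square, else 0."""
    r = math.isqrt(j)
    return 1.0 if r * r == j else 0.0


if __name__ == "__main__":
    # The printed fractions are finite-n estimates of the natural density
    # of the exceptional set {j : |x_j - 0| >= 1/2}.
    for n in (100, 10_000, 100_000):
        print(n, density_of_exceptions(indicator_of_squares, 0.0, 0.5, n))
```

A finite computation of this kind only suggests the limiting behaviour; it does not prove that the density is zero.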
By \(\omega \), we denote the family of all real valued sequences and any subspace of \(\omega \) is called a sequence space. We write \(\ell _\infty , c\) and \(c_0\) for the classical sequence spaces of all bounded, convergent and null sequences, respectively. With respect to the supremum norm \(\Vert x\Vert _\infty =\sup _{k}|x_k|\), it is not hard to show that these are Banach spaces. The theory of difference sequence spaces was initially introduced by Kızmaz (1981). As a generalization of difference sequence spaces, the idea of difference operators with natural order m was introduced by Et and Çolak (1995) by defining:

where

and

Moreover, these well-known difference operators were extended and used year after year in many directions (see Aydin and Başar 2004; Kadak 2017a, b). In 2013, Baliarsingh (2013), Baliarsingh and Dutta (2015) (also see Kadak and Baliarsingh 2015) introduced a new kind of difference sequence spaces with respect to the fractional-order difference operator involving Gamma function, as:

In 2016, some new classes of difference sequence spaces of fractional order were introduced by Baliarsingh (2016) (see also Baliarsingh and Nayak 2017).

Given a positive constant h and real numbers \(\alpha \), \(\beta \) and \(\gamma ~(\gamma \notin \mathbb N)\), we define the generalized difference sequence associated with the fractional order difference operator \(\Delta ^{\alpha ,\beta ,\gamma }_h\) as:

where

We assume, without loss of generality, that the summation given in (1) is convergent for all \(\gamma \notin \mathbb N\) and \( \alpha +\beta > \gamma \).
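To make the operator concrete, the sketch below computes its coefficients under an explicit assumption: that (1) has the Pochhammer-symbol form used by Baliarsingh (2016),

$$\begin{aligned} (\Delta ^{\alpha ,\beta ,\gamma }_{h}x)_k=\sum _{i=0}^{\infty }\frac{(-\alpha )_i(-\beta )_i}{i!\,(-\gamma )_i\,h^{\alpha +\beta -\gamma }}\,x_{k-i}, \end{aligned}$$

where \((a)_i\) denotes the rising factorial. This form is an assumption here, and the function names are hypothetical. The coefficients are generated by the ratio recurrence \(c_{i+1}=c_i\,\frac{(-\alpha +i)(-\beta +i)}{(i+1)(-\gamma +i)}\).

```python
def frac_diff_coeffs(alpha, beta, gamma, h, n_terms):
    """First n_terms coefficients c_i of the ASSUMED series form
    c_i = (-alpha)_i (-beta)_i / (i! (-gamma)_i h^(alpha+beta-gamma))."""
    if h <= 0:
        raise ValueError("h must be a positive constant")
    if gamma >= 0 and float(gamma).is_integer():
        raise ValueError("gamma must not be a non-negative integer")
    scale = h ** (alpha + beta - gamma)
    coeffs = []
    c = 1.0  # value of the Pochhammer ratio for i = 0
    for i in range(n_terms):
        coeffs.append(c / scale)
        # Ratio of consecutive terms; (-gamma + i) is non-zero by the check above.
        c *= (-alpha + i) * (-beta + i) / ((i + 1) * (-gamma + i))
    return coeffs


def frac_diff(x, k, alpha, beta, gamma, h, n_terms):
    """Truncated (Delta x)_k = sum_i c_i x[k - i], with x_j = 0 for j < 0."""
    coeffs = frac_diff_coeffs(alpha, beta, gamma, h, n_terms)
    return sum(c * x[k - i] for i, c in enumerate(coeffs) if k - i >= 0)


if __name__ == "__main__":
    # With alpha = 2, beta = gamma and h = 1 the series terminates after
    # three terms, giving the second-order backward difference.
    print(frac_diff_coeffs(2, 0.5, 0.5, 1, 5))
```

For non-integer \(\alpha \) the series does not terminate, so `n_terms` acts as a truncation parameter whose adequacy has to be checked case by case.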

The main focus of the present study is to generalize the concept of statistical summability using a fractional order linear difference operator \(\Delta ^{\alpha ,\beta ,\gamma }_{h}\). In fact, our investigation shows how the newly proposed summability methods lead to a number of approximation processes. We also establish some important approximation results associated with statistically \(\Omega ^{\Delta }\)-summability and investigate Korovkin and Voronovskaja type approximation results with the help of the generalized Meyer-König and Zeller operator. We also present computational and geometrical approaches to illustrate some of our results.

2 Some New Definitions and Inclusion Relations

In this section, we first give the definitions concerning statistically \(\Omega ^{\Delta }\)-summability and \(\Omega ^{\Delta }\)-statistical convergence by means of the fractional order difference operator \(\Delta ^{\alpha ,\beta ,\gamma }_{h}\). Secondly, we state and prove two theorems and an illustrative example to determine some inclusion relations between proposed methods.

Let \((\lambda _n)_{n=0}^{\infty }\) be a strictly increasing sequence of positive numbers i.e.

Also, let \(x=(x_n)\) be a sequence of real or complex numbers. We then define the following sum involving the difference operator \(\Delta _{h}^{\alpha ,\beta ,\gamma }(\lambda x)\):

where \(\alpha ,\beta \) and \(\gamma \notin \mathbb N\) are real numbers, h is a positive constant such that \(|\Delta _{h}^{\alpha ,\beta ,\gamma }(\lambda _n)|\ge 0\) and \(\lambda _{-n}=0\) for all \(n \in \mathbb N\). That is,

Definition 1

Let \(\alpha ,\beta \) and \(\gamma \notin \mathbb N\) be real numbers and h be any positive constant. A sequence \(x=(x_n)\) is said to be \(\Omega ^{\Delta }\)-summable to the number L, if

We also say that the sequence \(x=(x_n)\) is strongly \(\Omega _q^{\Delta }\)-summable to L, if

Definition 2

A sequence \(x=(x_n)\) is said to be \(\Omega ^{\Delta }\)-statistical convergent to a number L, if, for every \(\epsilon >0,\)

We denote it by \(S_{\Omega ^{\Delta }}-\lim x_n = L.\) We also say that the sequence \(x=(x_n)\) is statistically \(\Omega ^{\Delta }\)-summable to L, if

Equivalently, we may write

We write it as \(\overline{N}_{\Omega ^{\Delta }}-\lim x_n =L\).

Now, we give the following special cases to show the effectiveness of the above definitions.

(1) Let us take \(\alpha =2\), \(\beta =\gamma \), \(h=1\), i.e.

$$\begin{aligned} (\Delta _{1}^{2,\beta ,\beta }(\lambda x))_k=\lambda _kx_k-2\lambda _{k-1}x_{k-1}+\lambda _{k-2}x_{k-2}. \end{aligned}$$

Then statistically \(\Omega ^{\Delta }\)-summability given in Definition 2 is reduced to the \(\Lambda ^2\)-statistically summability introduced in Braha et al. (2015) (see also Kadak 2016; Alotaibi and Mursaleen 2013).

(2) Let \(\alpha =2\), \(\beta =\gamma \), \(h=1\). Then \(\Omega ^{\Delta }\)-statistical convergence given in Definition 2 reduces to weighted \(\Lambda ^2\)-statistically convergence. In addition, the notion of strongly \(\Omega _q^{\Delta }\)-summability can be interpreted as strongly \(\Lambda ^2\)-summability (see Kadak 2016; Braha et al. 2015; Mursaleen 2000).
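The special case \(\alpha =2\), \(\beta =\gamma \), \(h=1\) is easy to evaluate directly. The following is a minimal sketch with hypothetical function names; it uses the convention \(\lambda _{-n}=0\) stated before Definition 1 and treats every term with a negative index as zero, which is an assumption about how the boundary terms are handled.

```python
def weighted_second_difference(lam, x, k):
    """(Delta_1^{2,beta,beta}(lambda x))_k
    = lam(k) x(k) - 2 lam(k-1) x(k-1) + lam(k-2) x(k-2)."""

    def term(j):
        # Terms with a negative index are taken to be zero (assumed convention).
        return lam(j) * x(j) if j >= 0 else 0.0

    return term(k) - 2.0 * term(k - 1) + term(k - 2)


if __name__ == "__main__":
    lam = lambda n: float(n * n)   # lambda_n = n^2
    x = lambda n: 1.0              # constant sequence, chosen for illustration
    print([weighted_second_difference(lam, x, k) for k in range(6)])
```

For \(\lambda _n=n^2\) and the constant sequence \(x_n=1\), the identity \(k^2-2(k-1)^2+(k-2)^2=2\) shows that the transformed sequence is constant for \(k\ge 2\).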

We now present the following theorem which gives the relation between \(\Omega ^{\Delta }\)-statistical convergence and statistically \(\Omega ^{\Delta }\)-summability.

Theorem 1

Let \(|(\Delta _{h}^{\alpha ,\beta ,\gamma }(\lambda x))_k-L|\le M\) for all \(k \in \mathbb N.\) If a sequence \(x=(x_n)\) is \(\Omega ^{\Delta }\)-statistical convergent to the number L, then it is statistically \(\Omega ^{\Delta }\)-summable to the same limit, but not conversely.

Proof

Let \(h> 0\) be any constant, \(\alpha ,\beta \) and \(\gamma \notin \mathbb N\) be real numbers. Let \(|(\Delta _{h}^{\alpha ,\beta ,\gamma }(\lambda x))_k-L|\le M\) for all \(k \in \mathbb N\). Since \(S_{\Omega ^{\Delta }}-\lim x_n = L\), we have

We thus find that

where

and

That is to say that \(x=(x_n)\) is \(\Omega ^{\Delta }\)-summable to L and hence statistically \(\Omega ^{\Delta }\)-summable to the same limit. On the other hand the converse is not true, as can be seen by considering the following example.

Example 1

Define the sequence \(x=(x_n)\) by

for all \(m \in \mathbb N\). Let \(\alpha \in (0,1), \beta =\gamma \), \(h=1\) and \(\lambda _n=n^2\) for all \(n\in \mathbb N\). We thus find that

Letting \(n \rightarrow \infty \) in (2), we find that \(\Omega ^{\Delta }_n(\lambda x) \rightarrow 0\) which yields \(st-\lim \Omega ^{\Delta }_n(\lambda x) =0\). On the other hand, for a fixed \(\alpha \in (0, 1)\), since

and

then the sequence \(x=(x_n)\) is not \(\Omega ^{\Delta }\)-statistical convergent.

Theorem 2

(a) Let us suppose that \(x=(x_n)\) is strongly \(\Omega _q^{\Delta }\)-summable \((0<q<\infty )\) to the number L. If one of the following conditions holds, then the sequence x is \(\Omega ^{\Delta }\)-statistical convergent to L:

(i) \(q\in (0, 1)\) and \(0\leqq |(\Delta _{h}^{\alpha ,\beta ,\gamma }(\lambda x))_k-L|<1\), or

(ii) \(q\in [1, \infty )\) and \(1\leqq |(\Delta _{h}^{\alpha ,\beta ,\gamma }(\lambda x))_k-L|<\infty \).

(b) Assume that \(x=(x_n)\) is \(\Omega ^{\Delta }\)-statistical convergent to L and \(|(\Delta _{h}^{\alpha ,\beta ,\gamma }(\lambda x))_k-L|\le M\) for all \(k\in \mathbb N.\) If one of the following conditions holds, then the sequence x is strongly \(\Omega _q^{\Delta }\)-summable to L:

(i) \(q\in (0, 1]\) and \(M\in [1, \infty )\), or

(ii) \(q\in [1, \infty )\) and \(M\in [0, 1)\).

Proof

(a) Let \(x=(x_n)\) be strongly \(\Omega _q^{\Delta }\)-summable (\(0<q<\infty \)) to L. Under the above conditions, we get

$$\begin{aligned} \frac{1}{\epsilon ~\Delta \lambda _n} \sum _{k=\lambda _{n-1}}^{\lambda _n}\big |(\Delta _{h}^{\alpha ,\beta ,\gamma }(\lambda x))_k-L\big |^q\ge & {} \frac{1}{\epsilon \Delta \lambda _n} \sum _{k=\lambda _{n-1}}^{\lambda _n}\big |(\Delta _{h}^{\alpha ,\beta ,\gamma }(\lambda x))_k-L\big |\\ \ge & {} \frac{1}{\epsilon \Delta \lambda _n} \sum _{\begin{array}{c} k=\lambda _{n-1} \\ (k\in K_{\lambda _{n}}(\epsilon )) \end{array}}^{\lambda _{n}}\big |(\Delta _{h}^{\alpha ,\beta ,\gamma }(\lambda x))_k-L\big |\\ \ge & {} \frac{1}{\epsilon \Delta \lambda _n} \sum _{\begin{array}{c} k=\lambda _{n-1}\\ (k\in K_{\lambda _{n}}(\epsilon )) \end{array}}^{\lambda _{n}}\epsilon \\ = & {} \, \frac{1}{\lambda _n-\lambda _{n-1}}|K_{\lambda _{n}}(\epsilon )| \quad (\epsilon >0), \end{aligned}$$

which leads us, by passing to the limit as \(n \rightarrow \infty \), to

$$\begin{aligned} \frac{1}{\lambda _n-\lambda _{n-1}}\left| \left\{ k\le \lambda _n-\lambda _{n-1}:\big |(\Delta _{h}^{\alpha ,\beta ,\gamma }(\lambda x))_k-L\big |\ge \epsilon \right\} \right| \rightarrow 0. \end{aligned}$$

Hence, \(x=(x_n)\) is \(\Omega ^{\Delta }\)-statistical convergent to L.

(b) Let \(x=(x_n)\) be \(\Omega ^{\Delta }\)-statistical convergent to L and

$$\begin{aligned} |(\Delta _{h}^{\alpha ,\beta ,\gamma }(\lambda x))_k-L|\le M \quad \text {for all}~~k\in \mathbb N. \end{aligned}$$

Thus, clearly, we have

$$\begin{aligned}&\frac{1}{\Delta \lambda _n} \sum _{k=\lambda _{n-1}}^{\lambda _n}\big |(\Delta _{h}^{\alpha ,\beta ,\gamma }(\lambda x))_k-L\big |^q\\&\quad =\frac{1}{\Delta \lambda _n} \sum _{\begin{array}{c} k=\lambda _{n-1} \\ (k\in K^C_{\lambda _{n}}(\epsilon )) \end{array}}^{\lambda _n}\big |(\Delta _{h}^{\alpha ,\beta ,\gamma }(\lambda x))_k-L\big |^q+\frac{1}{\Delta \lambda _n} \sum _{\begin{array}{c} k=\lambda _{n-1}\\ (k\in K_{\lambda _{n}}(\epsilon )) \end{array}}^{\lambda _n}\big |(\Delta _{h}^{\alpha ,\beta ,\gamma }(\lambda x))_k-L\big |^q\\&\quad \le \frac{1}{\Delta \lambda _n} \sum _{\begin{array}{c} k=\lambda _{n-1} \\ (k\in K^C_{\lambda _{n}}(\epsilon )) \end{array}}^{\lambda _n}\big |(\Delta _{h}^{\alpha ,\beta ,\gamma }(\lambda x))_k-L\big |+\frac{1}{\Delta \lambda _n} \sum _{\begin{array}{c} k=\lambda _{n-1}\\ (k\in K_{\lambda _{n}}(\epsilon )) \end{array}}^{\lambda _n}\big |(\Delta _{h}^{\alpha ,\beta ,\gamma }(\lambda x))_k-L\big |\\&\quad \le \frac{\epsilon ~|K^C_{\lambda _{n}}(\epsilon )|}{\lambda _n-\lambda _{n-1}}+\frac{M|K_{\lambda _{n}}(\epsilon )|}{\lambda _n-\lambda _{n-1}}\rightarrow \epsilon \quad (n \rightarrow \infty ). \end{aligned}$$

Since \(\epsilon >0\) is arbitrary, the left-hand side tends to zero. Hence, for \(0<q<\infty \), the sequence \(x=(x_n)\) is strongly \(\Omega _q^{\Delta }\)-summable to L. \(\square \)

3 Applications to Korovkin Type Approximation Theorem

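Let C[a, b] be the space of all continuous real valued functions on [a, b]. It is well known that C[a, b] is a Banach space with the norm defined by

$$\begin{aligned} \Vert f\Vert _\infty =\sup _{x\in [a, b]}|f(x)|, \quad f\in C[a, b]. \end{aligned}$$

Let \(L:C[a, b] \rightarrow C[a, b]\) be a linear operator. Then, L is said to be positive provided that \(f \ge 0\) implies \(Lf \ge 0\). We also use the notation L(f; x) for the value of Lf at a point x.

The classical Korovkin type approximation theorem (see Korovkin 1953; Bohman 1952) reads as follows:

Let \(T_n: C[a, b] \rightarrow C[a, b]\) be a sequence of positive linear operators. Then

$$\begin{aligned} \lim _{n\rightarrow \infty }\Vert T_n(f; x)-f(x)\Vert _\infty =0 \quad \text {for all}~~f\in C[a, b] \end{aligned}$$

if and only if

$$\begin{aligned} \lim _{n\rightarrow \infty }\Vert T_n(f_i; x)-f_i(x)\Vert _\infty =0 \quad (i=0, 1, 2), \end{aligned}$$

where the test functions are \(f_i(x)=x^i\).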

The statistical version of the Korovkin theorem was given by Gadjiev and Orhan (2002). With the development of summability methods, this type of approximation has been widely used and extended by many authors; the reader may refer to Kadak (2016, 2017a, b), Orhan and Demirci (2014), Srivastava et al. (2012) and Edely et al. (2010).

In this section, using the test functions \(f_i(x)=(\frac{x}{1-x})^i\), \(i=0, 1, 2\), for \(x\in [0, A]\), where \(A \leqq \frac{1}{2}\), we obtain a Korovkin type approximation theorem which is stronger than both the classical and the statistical versions of the Korovkin theorem.

Theorem 3

Let \(\alpha \), \(\beta \) and \(\gamma \notin \mathbb N\) be real numbers and h be a positive constant. Also let \((T_k)_{k\ge 1}\) be a sequence of positive linear operators from C[0, A] into itself. Then, for all \(f\in C[0, A],\)

if and only if

Proof

Since each of the functions \(1, \frac{x}{1-x}, (\frac{x}{1-x})^2\) belongs to C[0, A], the assertions (4), (5) and (6) follow immediately from the assertion (3). To prove the converse, suppose that (4), (5) and (6) hold. Let \(f \in C[0, A]\) and let \(x \in [0, A]\) be fixed. Since f is continuous on [0, A], there exists a constant \(M>0\) such that \(|f(x)|\le M\) for all \(x \in [0, A]\). Thus, we have

By continuity of f at x, for given \(\varepsilon >0\), there exists a number \(\delta =\delta (\varepsilon )>0\) such that for all \(s, x\in [0, A]\) satisfying

we have

Set \(\varphi (s,x)=(\frac{s}{1-s}-\frac{x}{1-x})\). If \(|\frac{s}{1-s}-\frac{x}{1-x}|\ge \delta \), then \(\varphi ^2(s,x)\ge \delta ^2\). Hence, we deduce from (7) and (8) that

It follows from the linearity and positivity of \(T_{k}\) that

Taking the supremum over \(x \in [0, A]\), we obtain

where

We now replace \(T_k(\cdot ; x)\) by

in (9). For a given \(\varepsilon ' >0\), we choose a number \(\varepsilon >0\) such that \(\varepsilon <\varepsilon '\). Then, upon setting

it is clear that \(\mathcal {B}\subset \mathcal {B}_{0}\cup \mathcal {B}_{1}\cup \mathcal {B}_{2}\) and hence, using the conditions (4)–(6), we obtain

which completes the proof.

We now give an example for Theorem 3. Before doing so, we briefly recall the generating function type Meyer-König and Zeller operators (see Altın et al. 2005).

For a function f on [0, 1), the operators

are known as the Meyer-König and Zeller operators (Meyer-König and Zeller 1960), where

These operators were also generalized in Altın et al. (2005) using linear generating functions

where \(0<\frac{a_{k,n}}{a_{k,n}+b_{n}}\le \tilde{A}\), \(\tilde{A} \in (0, 1)\), and \(h_n(x,s)\) is the generating function for the sequence of \(\{\Gamma _{k, n}(s)\}~(s \in I)\) with the form

We also suppose that the following conditions hold true:

(i) \(h_n(x,s)=(1-x)h_{n+1}(x,s)\);

(ii) \(b_n \Gamma _{k, n+1}(s)=a_{k+1,n}\Gamma _{k+1, n}(s)\) and \(\Gamma _{k, n}(s)\ge 0\) for all \(s \in I \subset \mathbb R\);

(iii) \(b_n \rightarrow \infty , \frac{b_{n+1}}{b_n}\rightarrow 1\) and \(b_n\ne 0\) for all \(n\in \mathbb N\);

(iv) \(a_{k+1, n}-a_{k, n+1}=\varphi _n\) where \(|\varphi _n|\le m <\infty \), and \({a_{0n}}=0.\)

It is easy to see that \(L_n\) defined by (10) is positive and linear. We also observe that

Example 2

Let \(\{T_k\}\) be a sequence of positive linear operators from \(C[0, \tilde{A}]\) into itself defined by

where \(x=(x_k)\) is defined as in Example 1. Since \(\overline{N}_{\Omega ^{\Delta }}-\lim x_n=0\), it is easy to see that

In view of (iii), we have

which yields that

Therefore, we obtain

By taking Theorem 3 into account, and hence by letting \(n \rightarrow \infty \), we are led to the fact that

In view of the above example, our proposed method works successfully, whereas the classical and statistical forms of the Korovkin theorem do not work for this sequence \(\{T_k\}\) of positive linear operators.

4 Rates of \(\Omega ^{\Delta }\)-Statistical Convergence

In this section, we estimate the rate of \(\Omega ^{\Delta }\)-statistical convergence of positive linear operators defined from C[0, A] into itself.

We first present the following definition.

Definition 3

Let \(\alpha \), \(\beta \) and \(\gamma \notin \mathbb N\) be real numbers and h be a positive constant. Also let \((\theta _n)\) be any non-increasing sequence of positive real numbers. We say that a sequence \(x=(x_n)\) is \(\Omega ^{\Delta }\)-statistical convergent to the number L with the rate \(o(\theta _n)\) if, for every \(\epsilon >0\),

In this case, we can write \(x_k-L=\Omega ^{\Delta }-o(\theta _n)\).

Lemma 1

Let \((a_n)\) and \((b_n)\) be positive non-increasing sequences. Assume that \(x=(x_k)\) and \(y=(y_k)\) are two sequences such that

Then

(1) \((x_k-L_1)\pm (y_k-L_2)=\Omega ^{\Delta }-o(c_n)\),

(2) \((x_k-L_1)(y_k-L_2)=\Omega ^{\Delta }-o(a_n b_n)\),

(3) \(\mu (x_k-L_1)=\Omega ^{\Delta }-o(a_n)\), for any scalar \(\mu \in \mathbb R\),

where \(c_n=\max \{a_n, b_n\}\).

Proof

Assume that \(x_k-L_1=\Omega ^{\Delta }-o(a_n)~~\text {and}~~y_k-L_2=\Omega ^{\Delta }-o(b_n)\). In addition, for \(\epsilon >0\), let us set

and

We then observe that \(\mathcal {D} \subset \mathcal {D}_{0} \cup \mathcal {D}_{1}\). Thus, we obtain

where \(c_n=\max \{a_n, b_n\}\). Now, letting \(n \rightarrow \infty \) in (13) and using the hypothesis, we have

as asserted by Lemma 1 (1). Since the other assertions can be proved similarly, we choose to omit the details involved.

We now recall the following basic definition and notation on the modulus of continuity to get the rates of \(\Omega ^{\Delta }\)-statistical convergence using Definition 3.

The modulus of continuity for a function \(f \in C[0, A]\) is defined as follows:

It is well-known that for any \(\delta >0\) and each \(s\in [0, A]\),

Theorem 4

Let \(\alpha \), \(\beta \) and \(\gamma ~( \notin \mathbb N)\) be real numbers and h be a positive constant. Let \(\{T_{k}\}\) be a sequence of positive linear operators from C[0, A] into itself. Assume further that \((a_n)\) and \((b_n)\) are positive non-increasing sequences. Suppose that the following conditions hold true:

(i) \(\Vert T_{k}(1; x)-1\Vert _\infty =\Omega ^{\Delta }-o(a_n)\) on [0, A],

(ii) \(\omega (f, \xi _k)=\Omega ^{\Delta }-o(b_n)\) on [0, A], where \(\xi _k:=\sqrt{\Vert T_{k}(\varphi _x(s); x)\Vert _\infty }\) with

$$\begin{aligned} \varphi _x(s)=\bigg (\frac{s}{1-s}-\frac{x}{1-x}\bigg )^2. \end{aligned}$$

Then we have, for all \(f\in C[0, A],\)

where \(c_n=\max \{a_n, b_n\}\).

Proof

Let \(f \in C[0, A]\) and \(x \in [0, A]\) be fixed. Since \(\{T_k\}\) is linear and monotone, we see (for any \(\delta >0\)) that

where \(N=\Vert f\Vert _\infty \). Taking the supremum over \(x \in [0, A]\) on both sides, we get

Now, if we take

in the last relation, we deduce that

Now, we replace \(T_{k}(\cdot ; x)\) by

Using Lemma 1, for a given \(\epsilon >0\), we obtain that

Letting \(n \rightarrow \infty \), we are led to the fact that

as desired.

5 A Voronovskaja-Type Theorem

In this section, using the notion of statistically \(\Omega ^{\Delta }\)-summability, we obtain a Voronovskaja-type approximation theorem with the help of the family \(T_k\) of linear operators defined by (11) for \(h_n(x,s)=(1-x)^{-n-1}\), \(a_{k,n}=k\), \(\Gamma _{k,n}(s)=\left( {\begin{array}{c}n+k\\ k\end{array}}\right) \) and \(b_n=n+1\) for all \(n \in \mathbb N\).

Lemma 2

Let \(\alpha \), \(\beta \) and \(\gamma ~(\notin \mathbb N)\) be real numbers and h be a positive constant. Suppose also that \(\eta _x(s)=\left( \frac{s}{1-s}-\frac{x}{1-x}\right) \) where \(x, s \in [0, A]\). Then, we get

Proof

Let h be a positive constant, \(x \in [0, A]\) and, \(\alpha \), \(\beta \) and \(\gamma ~(\notin \mathbb N)\) be real numbers. Since

we deduce that

In addition, since

we find that

which yields, for all \(x \in [0, A]~(A\le 1/2)\), that

Since

the desired result follows, that is,

Corollary 1

Assume that h is a positive constant and \(x \in [0, A]\). Let \(\eta _x(s)\) be given as in Lemma 2. Then there is a positive constant \(M_0(x)\) depending only on x, such that

Theorem 5

Let \(\alpha \), \(\beta \) and \(\gamma ~(\notin \mathbb N)\) be real numbers and h be a positive constant. Then, for every \(f\in C[0, A]\) such that \(f', f'' \in C[0, A],\)

Proof

Let \(x \in [0, A]\) and \(f, f', f'' \in C[0, A]\). Now we consider the following function defined by

where \(\theta _x(\frac{x}{1-x})=0\) and \(\theta _x \in C[0, A]\). Using the Taylor formula for \(f \in C[0, A] \), we can write

We then observe that the operator \(T_k\) is linear and that

In view of Lemma 2, one obtains

Upon multiplying both sides by \(k+1\), we have

and hence

where \(M_1=\big \Vert f(\frac{x}{1-x})\big \Vert _\infty \) and \(M_2=\big \Vert f''(\frac{x}{1-x})\big \Vert _\infty \). Applying the Cauchy-Schwarz inequality in (16), we obtain

From Theorem 3, we observe that

Using Lemma 2 and Corollary 1, it is not hard to see that

Thus, by taking \(n \rightarrow \infty \) in (16), we get

which leads us to the desired assertion of Theorem 5.\(\square \)

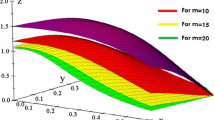

6 Computational and Geometrical Approaches

In this section, we provide computational and geometrical illustrations of Theorem 3 for the linear operator \(L_n(f;x)\) given in (10) under different choices of the parameters. For convenience, we investigate our series only through finite sums. With more computing power, the more complicated infinite series can be handled in a similar manner.

Here, in our computations, we take

- \(h_n(x,s)=(1-x)^{-n-1}\) and \(\Gamma _{k,n}(s)=\left( {\begin{array}{c}n+k\\ k\end{array}}\right) \);

- \(a_{k,n}=k\) and \(b_n=n+1\);

- \(\alpha =2, \beta =\gamma \) and \(h=1\);

- \(\lambda _n=n^2\) for all \(n \in \mathbb N\).
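The finite-sum computations described in this section can be sketched as follows. The code is a minimal illustration under a stated assumption: that (10) has the generating-function form of Altın et al. (2005), which for the choices above becomes \(L_n(f;x)=(1-x)^{n+1}\sum _{k}f\big (\frac{k}{k+n+1}\big )\left( {\begin{array}{c}n+k\\ k\end{array}}\right) x^k\). The function name `mkz` and the truncation parameter `terms` are hypothetical, and the summability transform \(\Omega _m^{\Delta }\) is not applied here.

```python
from math import comb


def mkz(f, n, x, terms=200):
    """Truncated L_n(f; x) = (1-x)^(n+1) * sum_{k<terms} f(k/(k+n+1)) C(n+k,k) x^k.

    ASSUMED form of the operator for h_n(x,s) = (1-x)^(-n-1),
    Gamma_{k,n}(s) = C(n+k, k), a_{k,n} = k and b_n = n + 1.
    """
    total = 0.0
    for k in range(terms):
        node = k / (k + n + 1)          # a_{k,n} / (a_{k,n} + b_n)
        total += f(node) * comb(n + k, k) * x ** k
    return (1.0 - x) ** (n + 1) * total


def f0(t):
    return 1.0


def f1(t):
    return t / (1.0 - t)


def f2(t):
    return (t / (1.0 - t)) ** 2


if __name__ == "__main__":
    x = 0.3
    for n in (5, 10, 15):
        # Compare each value with f_i(x) = (x / (1 - x))^i.
        print(n, mkz(f0, n, x), mkz(f1, n, x), mkz(f2, n, x))
    print("targets:", 1.0, x / (1 - x), (x / (1 - x)) ** 2)
```

On \([0, A]\) with \(A\le 1/2\) the neglected tail of the series decays geometrically, so a few hundred terms are ample for plotting purposes; for x close to 1 the truncation would need to be revisited.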

Based upon the above choices, we may define the following operator \(\Omega _m^{\Delta }(\lambda Lf)\) by

where

Under above conditions, we obtain

In fact, in Fig. 1, the value of k runs from \(k = 0\) to 25 for \(m = 5\), \(m = 10\) and \(m=15\), respectively. As the value of m increases, the sequence

converges to the function \(f_0(x)=1\).

In addition, from Fig. 2, it can be observed that, as the value of m increases, the sequence

converges to the function \(f_1(x)=\frac{x}{1-x}.\)

Similarly, from Fig. 3, it can be easily seen that, as the value of m increases, the sequence

converges to the function \(f_2(x)\) given by \(f_2(x)=\big (\frac{x}{1-x}\big )^2.\)

Figures 1, 2 and 3 clearly show that the conditions (4), (5) and (6) of Theorem 3 are satisfied.

We also observe from Fig. 4 that, as the value of m increases, the operators given by (17) converge to the function being approximated. Indeed, Fig. 4 shows that the condition (3) holds true for the function

in C[0, A] where \(A\leqq 1/2\).

References

Alotaibi A, Mursaleen M (2013) Generalized statistical convergence of difference sequences. Adv Differ Equ 2013:212

Altın A, Doğru O, Taşdelen F (2005) The generalization of Meyer-Konig and Zeller operators by generating functions. J Math Anal Appl 312:181–194

Aydin C, Başar F (2004) Some new difference sequence spaces. Appl Math Comput 157(3):677–693

Baliarsingh P (2013) Some new difference sequence spaces of fractional order and their dual spaces. Appl Math Comput 219(18):9737–9742

Baliarsingh P (2016) On a fractional difference operator. Alex Eng J 55(2):1811–1816

Baliarsingh P, Dutta S (2015) On the classes of fractional order difference sequence spaces and their matrix transformations. Appl Math Comput 250:665–674

Baliarsingh P, Nayak L (2017) A note on fractional difference operators. Alex Eng J. https://doi.org/10.1016/j.aej.2017.02.022

Başarır M, Konca S (2017) Weighted lacunary statistical convergence. Iran J Sci Technol Trans A Sci 41(1):185–190

Bohman H (1952) On approximation of continuous and of analytic functions. Arkiv Math 2:43–56

Braha NL, Loku V, Srivastava HM (2015) \(\Lambda ^2\)-Weighted statistical convergence and Korovkin and Voronovskaya type theorems. Appl Math Comput 266(1):675–686

Connor J (1989) On strong matrix summability with respect to a modulus and statistical convergence. Can Math Bull 32:194–198

Duman O, Orhan C (2008) Rates of A-statistical convergence of operators in the space of locally integrable functions. Appl Math Lett 21:431–435

Edely OHH, Mursaleen M (2009) On statistical \(A\)-summability. Math Comput Model 49:672–680

Edely OHH, Mohiuddine SA, Noman AK (2010) Korovkin type approximation theorems obtained through generalized statistical convergence. Appl Math Lett 23(11):1382–1387

Et M, Çolak R (1995) On some generalized difference sequence spaces. Soochow J Math 21(4):377–386

Fast H (1951) Sur la convergence statistique. Colloq Math 2:241–244

Fridy JA (1993) Lacunary statistical summability. J Math Anal Appl 173:497–504

Gadjiev AD, Orhan C (2002) Some approximation theorems via statistical convergence. Rocky Mt J Math 32:129–138

Kadak U (2016) On weighted statistical convergence based on \((p, q)\)-integers and related approximation theorems for functions of two variables. J Math Anal Appl 443:752–764

Kadak U (2017a) Weighted statistical convergence based on generalized difference operator involving \((p, q)\)-gamma function and its applications to approximation theorems. J Math Anal Appl 448:1633–1650

Kadak U (2017b) Generalized weighted invariant mean based on fractional difference operator with applications to approximation theorems for functions of two variables. Results Math 72(3):1181–1202

Kadak U, Baliarsingh P (2015) On certain Euler difference sequence spaces of fractional order and related dual properties. J Nonlinear Sci Appl 8:997–1004

Kadak U, Braha NL, Srivastava HM (2017) Statistical weighted B-summability and its applications to approximation theorems. Appl Math Comput 302:80–96

Kızmaz H (1981) On certain sequence spaces. Can Math Bull 24(2):169–176

Korovkin PP (1953) On convergence of linear positive operators in the spaces of continuous functions (Russian), Doklady Akad. Nauk SSSR 90:961–964

Meyer-König W, Zeller K (1960) Bernsteinsche Potenzreihen. Stud Math 19:89–94

Mohiuddine SA (2016) Statistical weighted \(A\)-summability with application to Korovkin’s type approximation theorem. J Inequal Appl (Article ID 101)

Mursaleen M (2000) \(\lambda \)-Statistical convergence. Math. Slovaca 50:111–115

Mursaleen M, Karakaya V, Ertürk M, Gürsoy F (2012) Weighted statistical convergence and its application to Korovkin type approximation theorem. Appl Math Comput 218:9132–9137

Nuray F, Ulusu U, Dündar E (2016) Lacunary statistical convergence of double sequences of sets. Soft Comput 20(7):2883–2888. https://doi.org/10.1007/s00500-015-1691-8

Orhan S, Demirci K (2014) Statistical \(A\)-summation process and Korovkin type approximation theorem on modular spaces. Positivity 18:669–686

Srivastava HM, Mursaleen M, Khan A (2012) Generalized equi-statistical convergence of positive linear operators and associated approximation theorems. Math Comput Model 55:2040–2051

Steinhaus H (1951) Sur la convergence ordinaire et la convergence asymptotique. Colloq Math 2:73–74

Yeşilkayagil M, Başar F (2016) A note on Riesz summability of double series. Proc Natl Acad Sci India Sect A Phys Sci 86(3):333–337

Zygmund A (1959) Trigonometric Series, 1st edn. Cambridge University Press, New York, NY, USA

Cite this article

Kadak, U. Generalized Statistical Convergence Based on Fractional Order Difference Operator and Its Applications to Approximation Theorems. Iran J Sci Technol Trans Sci 43, 225–237 (2019). https://doi.org/10.1007/s40995-017-0400-0

DOI: https://doi.org/10.1007/s40995-017-0400-0

Keywords

- Statistical convergence and statistical summability

- Fractional order difference operator

- Korovkin and Voronovskaja type approximation theorems

- Modulus of continuity and rate of convergence

- Meyer-König and Zeller polynomials