Abstract

In the present paper, we introduce the notion of relatively uniform weighted summability and its statistical version based upon fractional-order difference operators of functions. The concept of relatively uniform weighted \(\alpha \beta \)-statistical convergence is also introduced, and some inclusion relations concerning the newly proposed methods are derived. As an application, we prove a general Korovkin-type approximation theorem for functions of two variables and construct an illustrative example with the help of generating function type non-tensor Meyer-König and Zeller operators. Moreover, it is shown that the proposed methods are non-trivial generalizations of relatively uniform convergence involving a scale function. We estimate the rate of convergence of approximating positive linear operators by means of the modulus of continuity and give a Voronovskaja-type approximation theorem. Finally, we present some computational results and geometrical interpretations to illustrate some of our approximation results.


1 Introduction and Preliminaries

The theory of summability arises from the process of summation of series and is an extremely wide and fruitful field with applications in several branches of functional analysis, in particular, operator theory, analytic continuation, the rate of convergence, quantum mechanics, approximation theory, probability theory, the theory of orthogonal series, and fixed point theory. With the rapid development of sequence spaces, many researchers have focused on the notion of statistical convergence, which was introduced independently by Fast [1] and Steinhaus [2] in 1951. Recently, the concepts of statistical convergence and statistical summability have become an active area of research, and these techniques have also been studied extensively in the approximation of functions by positive linear operators. For various other approaches to statistical convergence and statistical summability, we refer to [3,4,5,6,7,8].

Let \(S \subseteq {\mathbb {N}}\) and

$$\begin{aligned} S_m=\{k \le m : k\in S\}. \end{aligned}$$

The natural density (see [9, 10]) of S is defined by

$$\begin{aligned} \delta (S)=\lim _{m\rightarrow \infty }\frac{1}{m}|S_m|, \end{aligned}$$

provided that the limit exists, where \(|S_m|\) denotes the cardinality of the set \(S_m\). A number sequence \(u=(u_{n})\) is called statistically convergent (\(\delta \)-convergent) to the number L, denoted by st-\(\lim _n u_n=L\), if, for every \(\epsilon >0,\) the set

$$\begin{aligned} H_{\epsilon }=\{k \in {\mathbb {N}} : |u_k-L|\ge \epsilon \} \end{aligned}$$

has natural density zero, or equivalently \(\delta (H_{\epsilon })=0\). The idea of weighted statistical convergence of single sequences was first given by Karakaya and Chishti [11]. More recently, this notion was modified by Mursaleen et al. [12] (see also the related work by Srivastava et al. [13]) and further extended by Kadak et al. [14].
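The two densities above can be explored numerically through their finite-m ratios. The Python sketch below is only an illustration: the helper names and the test sequence u (equal to 1 on perfect squares and 1/k elsewhere) are hypothetical choices made here, not taken from the source. This u does not converge, but it is statistically convergent to 0, because \(H_\epsilon \) is contained in the set of squares together with finitely many indices, and the squares have natural density zero.

```python
import math


def density_ratio(in_set, m):
    """Return |S_m| / m with S_m = {k <= m : k in S}; S is given by a predicate."""
    return sum(1 for k in range(1, m + 1) if in_set(k)) / m


def is_square(k):
    r = math.isqrt(k)
    return r * r == k


def u(k):
    """Hypothetical sequence: 1 on perfect squares, 1/k elsewhere."""
    return 1.0 if is_square(k) else 1.0 / k


def exceptional_ratio(eps, m, limit=0.0):
    """Finite-m proxy for delta(H_eps): |{k <= m : |u_k - L| >= eps}| / m."""
    return density_ratio(lambda k: abs(u(k) - limit) >= eps, m)


for m in (10**2, 10**3, 10**4, 10**5):
    # Both ratios shrink as m grows, consistent with density zero.
    print(m, density_ratio(is_square, m), exceptional_ratio(0.01, m))
```

Only finite ratios are computed, so the printout suggests, but of course does not prove, that the limits are zero.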

Let \(s=(s_{k})\) be a sequence of non-negative numbers such that \(s_{0}>0\) and \(S_m=\sum _{k=0}^m s_k \rightarrow \infty \) as \(m \rightarrow \infty \). We say that a sequence \(u=(u_{n})\) is weighted statistically convergent (or \(S_N\)-convergent) to the number L if, for every \(\epsilon >0\),

Quite recently, Aktuğlu [15] introduced the notion of \((\alpha ,\beta )\)-statistical convergence for single sequences with the help of two sequences \(\{\alpha (n)\}_{n\in {\mathbb {N}}}\) and \(\{\beta (n)\}_{n\in {\mathbb {N}}}\) of positive numbers satisfying the following conditions: \(\alpha \) and \(\beta \) are both non-decreasing, \(\beta (n)\geqq \alpha (n)\) for all \(n\in {\mathbb {N}}\), and \(\beta (n)-\alpha (n)\rightarrow \infty \) as \(n\rightarrow \infty .\) The set of all pairs \((\alpha ,\beta )\) satisfying the above conditions will be denoted by \(\Lambda \). A sequence \(u=(u_{k})\) is said to be \((\alpha ,\beta )\)-statistically convergent to L if, for each \(\varepsilon >0\),

$$\begin{aligned} \lim _{n\rightarrow \infty }\frac{\big |\{k\in S^{(\alpha ,\beta )}_{n} : |u_k-L|\ge \varepsilon \}\big |}{\beta (n)-\alpha (n)+1}=0, \end{aligned}$$

where \((\alpha ,\beta ) \in \Lambda \) and \(S^{(\alpha ,\beta )}_{n}=[\alpha (n), \beta (n)]\).
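A small Python sketch of the \((\alpha ,\beta )\)-density ratio follows, again as an illustration only. The set of perfect squares and the lacunary-type pair built from \(k_n=2^n-1\) are assumptions made here for the example. The pair \(\alpha (n)=1\), \(\beta (n)=n\) recovers the finite-n natural density, while \(\alpha (n)=k_{n-1}+1\), \(\beta (n)=k_n\) has the shape of the lacunary special case considered in Section 2.

```python
import math


def alpha_beta_ratio(in_set, alpha, beta, n):
    """|{k in [alpha(n), beta(n)] : k in K}| / (beta(n) - alpha(n) + 1)."""
    lo, hi = alpha(n), beta(n)
    return sum(1 for k in range(lo, hi + 1) if in_set(k)) / (hi - lo + 1)


def is_square(k):
    r = math.isqrt(k)
    return r * r == k


# alpha(n) = 1, beta(n) = n: the ratio is the usual finite-n natural density.
cesaro = (lambda n: 1, lambda n: n)

# Hypothetical lacunary-type pair from k_n = 2**n - 1 (k_0 = 0, k_n - k_{n-1} = 2**(n-1)):
# alpha(n) = k_{n-1} + 1 = 2**(n-1), beta(n) = k_n = 2**n - 1.
lacunary = (lambda n: 2 ** (n - 1), lambda n: 2 ** n - 1)

for n in (5, 10, 15):
    print(n,
          alpha_beta_ratio(is_square, *cesaro, 2 ** n - 1),
          alpha_beta_ratio(is_square, *lacunary, n))
```

Both pairs satisfy the conditions defining \(\Lambda \): they are non-decreasing and \(\beta (n)-\alpha (n)\rightarrow \infty \).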

The concept of relatively uniform convergence of a sequence of functions was introduced by Moore [16]. In a slight modification by Chittenden [17], relatively uniform convergence was defined on a closed interval \(I \subset {\mathbb {R}}\) as follows:

A sequence \(\{f_n(x)\}\) of functions, defined on an interval \(I\equiv (a \leqq x \leqq b)\), converges relatively uniformly to a limit function f(x) if there exists a function \(\sigma (x)\) defined on I, called a scale function, such that for every \(\varepsilon > 0\) there is an integer \(n_\varepsilon \) such that for every n greater than \(n_\varepsilon \) the inequality

$$\begin{aligned} |f_n(x)-f(x)|<\varepsilon |\sigma (x)| \end{aligned}$$

holds uniformly in x on the interval I. For example (see [18]), consider the sequence \((f_n)\) of functions defined on [0, 1] given by

It is clear that \((f_n)\) does not converge uniformly, but it converges to \(f(x)=0\) uniformly relative to the scale function defined as

Note that uniform convergence is the special case of relatively uniform convergence in which the scale function is a non-zero constant. Very recently, based on the natural density of a set, the notion of relative statistical uniform convergence has been introduced by Demirci and Orhan [18] (see also [19]). In the same year, they gave the definitions of relative modular convergence and statistical relative modular convergence for double sequences of measurable real-valued functions [20].

Let \((f_n)\) be a sequence of functions defined on a compact subset E of real numbers. The sequence \((f_n)\) is said to be statistically relatively uniformly convergent to the limit function f defined on E if there exists a scale function \(\sigma (x)\), \(|\sigma (x)|>0\), on E such that, for every \(\epsilon >0\),

$$\begin{aligned} \lim _{n\rightarrow \infty }\frac{1}{n}\Big |\Big \{k\le n : \sup _{x\in E}\Big |\frac{f_k(x)-f(x)}{\sigma (x)}\Big |\ge \epsilon \Big \}\Big |=0. \end{aligned}$$

In this case we write \((st)-f_n \rightrightarrows f (E;\sigma )\).
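To see how a scale function changes what "uniform" means, here is a Python sketch built on a hypothetical example (it is not the example from [18]): \(f_n(x)=1/(nx)\) on (0, 1] with \(f_n(0)=0\), limit \(f=0\), and scale function \(\sigma (x)=1/x\) on (0, 1] with \(\sigma (0)=1\). Suprema are only estimated on a finite grid, which is an approximation. The unscaled grid supremum grows like 1000/n (and the true supremum is infinite), whereas the scaled one equals 1/n. The perturbed sequence \(g_n\), set equal to \(\sigma \) on perfect-square indices, is no longer relatively uniformly convergent, but its bad indices have density zero, so it is still statistically relatively uniformly convergent.

```python
import math

GRID = [j / 1000 for j in range(1001)]  # finite grid on [0, 1] (approximation of sup)


def f(n, x):
    """Hypothetical f_n: 1/(n x) on (0, 1], 0 at x = 0."""
    return 0.0 if x == 0 else 1.0 / (n * x)


def sigma(x):
    """Hypothetical scale function: 1/x on (0, 1], 1 at x = 0."""
    return 1.0 if x == 0 else 1.0 / x


def g(n, x):
    """Perturbed sequence: equal to sigma on perfect-square indices, f_n elsewhere."""
    r = math.isqrt(n)
    return sigma(x) if r * r == n else f(n, x)


def sup_abs(seq, n):
    """Grid estimate of sup_x |seq_n(x) - 0|."""
    return max(abs(seq(n, x)) for x in GRID)


def sup_scaled(seq, n):
    """Grid estimate of sup_x |(seq_n(x) - 0) / sigma(x)|."""
    return max(abs(seq(n, x) / sigma(x)) for x in GRID)


def bad_index_ratio(seq, eps, m):
    """(1/m) |{k <= m : sup_x |seq_k(x) / sigma(x)| >= eps}|."""
    return sum(1 for k in range(1, m + 1) if sup_scaled(seq, k) >= eps) / m


for m in (100, 1000):
    print(m, sup_abs(f, m), sup_scaled(f, m), bad_index_ratio(g, 0.03, m))
```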

The idea of a fractional-order difference operator was first used by Chapman [21] and has since been studied by many researchers [22,23,24,25]. In 2016, some new classes of fractional-order difference sequence spaces were introduced by Baliarsingh [26]. Later on, Kadak [27] generalized the concept of weighted statistical convergence via (p, q)-integers. In 2017, Kadak [28] extended weighted statistical convergence based on a generalized difference operator involving the (p, q)-gamma function.

For our purpose, we will need the following definition involving a fractional-order backward difference operator of functions (see [26]).

Let a, b and c be real numbers and h be any positive constant. Let f(x) be a real-valued function which is fractional-order differentiable. Based on this function f(x), for the sequence \(f_h(\cdot )=(f(x-ih))\), the fractional-order backward difference operator of f corresponding to the decrement ih is defined as

$$\begin{aligned} \Delta ^{a,b,c}_{h}f(x)=\sum _{i=0}^{\infty }\frac{(-a)_i\,(-b)_i}{i!\,(-c)_i\,h^{a+b-c}}\,f(x-ih), \end{aligned}$$ (1.2)

where \((-c)_i\ne 0\) for all \(i \in {\mathbb {N}}\) and \((r)_k\) denotes the Pochhammer symbol (or shifted factorial) of a real number r, defined as

$$\begin{aligned} (r)_k={\left\{ \begin{array}{ll} 1, & k=0,\\ r(r+1)(r+2)\cdots (r+k-1), & k\in {\mathbb {N}}. \end{array}\right. } \end{aligned}$$ (1.3)

From now on, without loss of generality, we assume that the summation given in (1.2) converges for all \(c <a+b\), where \((-c)_i\ne 0\) for all \(i \in {\mathbb {N}}\). It is clear that the fractional-order difference operator defined in (1.2) is linear, and that integral and fractional-order differential operators can be obtained from the difference operator \(\Delta ^{a,b,c}_{h}\) by letting \(h\rightarrow 0\). For example, by choosing \(a=2\), \(b=c\) in (1.2), we can write

$$\begin{aligned} \lim _{h\rightarrow 0}\Delta ^{2,b,b}_{h}f(x)=\lim _{h\rightarrow 0}\frac{f(x)-2f(x-h)+f(x-2h)}{h^2}=f''(x), \end{aligned}$$

if it exists. Clearly, for \(a=r \in {\mathbb {R}}, b=c\), the fractional derivative operator \((\frac{d}{dx})^r\) and the fractional integral operator \((\frac{d}{dx})^{-r}\,(r \notin {\mathbb {N}})\) are immediately obtained via \(\Delta ^{a,b,c}_{h}\). For more details see [26].
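The series (1.2) can be evaluated directly once it is truncated. The Python sketch below is illustrative only. The truncation level `terms`, the choice \(b=c=1/2\) (so that \((-c)_i\) never vanishes), the standard convention \((r)_0=1\), \((r)_k=r(r+1)\cdots (r+k-1)\), and all helper names are our own assumptions. The weights \((-a)_i(-b)_i/(i!(-c)_i)\) are computed by the recurrence \(w_{i+1}=w_i\,(i-a)(i-b)/((i+1)(i-c))\), which avoids overflowing factorials. Three cases are checked: \(a=0\) returns f(x) itself, \(a=2\) gives the second backward difference (close to \(f''\)), and \(a=1/2\) applied to the ramp \(t\mapsto \max (t,0)\) approximates its half-derivative \(2\sqrt{x/\pi }\) (a Grünwald-Letnikov-type approximation).

```python
import math


def pochhammer(r, k):
    """Rising factorial (r)_k = r (r+1) ... (r+k-1), with (r)_0 = 1."""
    out = 1.0
    for j in range(k):
        out *= r + j
    return out


def weights(a, b, c, terms):
    """w_i = (-a)_i (-b)_i / (i! (-c)_i) for i < terms, by recurrence.

    Assumes c is not an integer in 0..terms-2, so that (-c)_i != 0.
    """
    w = [1.0]
    for i in range(terms - 1):
        w.append(w[-1] * (i - a) * (i - b) / ((i + 1) * (i - c)))
    return w


def frac_backward_diff(f, x, h, a, b, c, terms=200):
    """Truncated (1.2): h^-(a+b-c) * sum_{i < terms} w_i f(x - i h)."""
    total = sum(w * f(x - i * h) for i, w in enumerate(weights(a, b, c, terms)))
    return total / h ** (a + b - c)


b = c = 0.5  # b = c, so h^(a+b-c) = h^a and the (-b)_i/(-c)_i factors cancel
print(frac_backward_diff(math.exp, 0.3, 0.1, 0, b, c))   # a = 0: equals exp(0.3)
print(frac_backward_diff(math.sin, 1.0, 1e-3, 2, b, c))  # a = 2: close to -sin(1)
ramp = lambda t: max(t, 0.0)
print(frac_backward_diff(ramp, 1.0, 1e-3, 0.5, b, c, terms=1100))  # close to 2/sqrt(pi)
```

For integer a the weights vanish from \(i=a+1\) on, so the truncation is exact in that case. For non-integer a the truncation is harmless here only because the ramp vanishes for \(t\le 0\).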

The main purpose of the present study is to generalize the uniform convergence of sequences of positive linear operators based on the fractional-order linear difference operator \(\Delta ^{a,b,c}_{h}\). Our investigation deals essentially with various summability methods for sequences of functions and shows how these methods lead to a number of approximation results. Furthermore, we apply our new type of summability method to prove Korovkin- and Voronovskaja-type results for functions of two variables with the help of non-tensor Meyer-König and Zeller operators involving generating functions. Several illustrative examples and geometrical interpretations are also given to illustrate some of the approximation results in this paper.

2 Some New Definitions and Related Inclusion Relations

In this section, we first give the definition of relatively uniform weighted \(\alpha \beta \)-statistical convergence through the weighted \(\alpha \beta \)-density. We also introduce the notion of relatively uniform statistical \(\Phi \)-summability for function sequences. Secondly, we give some inclusion relations between the proposed methods and present an illustrative example showing that our method is a non-trivial generalization of the classical and statistical cases of relatively uniform convergence introduced in [18, 19].

Let \(p=(p_{k})_{k=0}^\infty \) be a sequence of non-negative real numbers such that

The lower and upper weighted \(\alpha \beta \)-densities of the set \(K \subset {\mathbb {N}}\) are defined by

and

respectively. We say that K has a weighted \(\alpha \beta \)-density if

in which case \(\delta ^{(\alpha ,\beta )}_{{\bar{N}}}(K)\) is equal to this common value. The weighted \(\alpha \beta \)-density can be restated in the following way:

if the limit exists.

Definition 1

Let h be any positive constant, \((\alpha ,\beta ) \in \Lambda \) and \(a, b, c \in {\mathbb {R}}\). A sequence \((f_n)\) of functions, defined on a compact subset E of real numbers, is said to be relatively uniform weighted \(\alpha \beta \)-statistical convergent to the function f on E, if there exists a scale function \(\sigma (x)~(|\sigma (x)|>0)\) on E such that, for each \(\epsilon >0\),

In this case we denote it by \(S_{\Delta }^{(\alpha ,\beta )}(p_n, \sigma )-f_n \rightrightarrows f \).

Definition 2

Let h be any positive constant, \((\alpha ,\beta ) \in \Lambda \) and \(a, b, c \in {\mathbb {R}}\). A sequence \((f_n)\) of functions defined on a compact subset \(E \subset {\mathbb {R}}\) is said to be uniformly \(\Phi \)-summable to f on E if

as \(n \rightarrow \infty \) uniformly in \(x\in E\), where \(|\Delta ^{a,b,c}_{h}f_k(x)|>0\) for all \(k \in {\mathbb {N}}\). We also say that \((f_n)\) is uniformly statistically \(\Phi \)-summable to f if \((\Phi (f_n))\) is statistically uniformly convergent to the same limit function f, that is, for every \(\epsilon >0\),

In this case we write \({\overline{N}}_\Phi ~(stat)-f_n \rightrightarrows f \) on E.

Definition 3

A sequence \((f_n)\) of functions, defined on a compact subset \(E\subset {\mathbb {R}}\), is said to be relatively uniform statistical \(\Phi \)-summable to f if \((\Phi (f_n))\) is statistically relatively uniformly convergent to f on E. Equivalently, we may write

We denote it by \({\overline{N}}_\Phi ~-f_n \rightrightarrows f (\sigma , E)\).

Based on the above definitions, we give the following special cases and some inclusion relations to show the effectiveness of the newly proposed methods.

-

If we take \(a=0\), \(b=c\), \(\alpha (n)=1\), \(\beta (n)=n\) and \(p_n=1\) for all \(n \in {\mathbb {N}}\), then \(\Delta ^{0,b,b}_{h}\) is the identity operator, and the relatively uniform weighted \(\alpha \beta \)-statistical convergence in Definition 1 reduces to the relatively uniform statistical convergence introduced in [18]. When \(\sigma (x)\) is a non-zero constant, we obtain its uniform statistical convergence version (cf. [19]).

-

Let \((\lambda _n)\) be a strictly increasing sequence of positive numbers tending to \(\infty \) as \(n \rightarrow \infty \) such that \(\lambda _{n+1}\le \lambda _n +1\) and \(\lambda _1=1\). If we take \(a=0\), \(b=c\), \(\sigma (x)=1\), \(\alpha (n)=n-\lambda _n+1\) and \(\beta (n)=n\), then the relatively uniform statistical \(\Phi \)-summability given in Definition 3 reduces to the weighted \(\lambda \)-statistical summability (cf. [29, 30]). Again, if we take \(p_n=1\) for all \(n \in {\mathbb {N}}\) as an extra condition, we have an analog of \(\lambda \)-statistical summability for function sequences (cf. [31]).

-

Let \(\theta =(k_n)\) be an increasing sequence of non-negative integers such that \(k_0=0\) and \(h_n=k_n-k_{n-1}\rightarrow \infty \) as \(n\rightarrow \infty \); such a sequence \(\theta \) is called a lacunary sequence. For \(a=0\), \(b=c\), \(\sigma (x)=1\), \(\alpha (n)=k_{n-1}+1\), \(\beta (n)=k_n\) and \(p_n=1\) for all \(n \in {\mathbb {N}}\), relatively uniform statistical \(\Phi \)-summability reduces to lacunary statistical summability for function sequences (cf. [28, 32, 33]).

-

Taking \(a=2\), \(b=c\), \(\sigma (x)=1\), \(\alpha (n)=\lambda _{n-1}+1\) and \(\beta (n)=\lambda _n\), the relatively uniform weighted \(\alpha \beta \)-statistical convergence reduces to weighted \(\Lambda ^{2}\)-statistical convergence for function sequences (cf. [27, 34]). In a similar manner, relatively uniform statistical \(\Phi \)-summability reduces to \(\Lambda ^{2}\)-statistical summability (cf. [27, 34]).

As a direct consequence of the above special cases, we state the following inclusion relations without proof.

Lemma 1

-

(a)

\(f_n \rightrightarrows f \) on E (in the ordinary sense) implies \((st)-f_n \rightrightarrows f (E;\sigma )\), which also implies \(S_{\Delta }^{(\alpha ,\beta )}(p_n, \sigma )-f_n \rightrightarrows f \) on E.

-

(b)

\(f_n \rightrightarrows f \) on E (ordinary uniform summable) implies \({\overline{N}}_\Phi ~(stat)-f_n \rightrightarrows f \) on E, which also implies \({\overline{N}}_\Phi ~-f_n \rightrightarrows f(\sigma , E)\).

Theorem 1

Let h be any positive constant, \((\alpha ,\beta ) \in \Lambda \), \(a, b, c \in {\mathbb {R}}\), and let \(\sigma (x)\) be a bounded scale function defined on \(E \subset {\mathbb {R}}\). Assume that

If a sequence \((f_n)\) of functions on \(E \subset {\mathbb {R}}\) is relatively uniform weighted \(\alpha \beta \)-statistical convergent to the bounded function f on E, then it is relatively uniform statistical \(\Phi \)-summable to the same limit function f on E, but not conversely.

Proof

Suppose that \(\sup _{x \in E} p_k~\big |\frac{\Delta ^{a,b,c}_{h} f_k(x)-f(x)}{\sigma (x)}\big |\le M~~\text {for all }~k\in {\mathbb {N}}\). From the hypotheses, we have

Let us set

and

We then obtain that

By using the fact that \(\frac{1}{P_n^{(\alpha , \beta )}}\sum _{k\in I_n^{(\alpha ,\beta )}} p_k=1\), \(\sup _{x\in E}|f(x)/\sigma (x)|<\infty \), and taking supremum over \(x \in E\) in the last equation, we get

where \(I_n^{(\alpha ,\beta )}=[\alpha (n), \beta (n)]\). Therefore, the sequence \((f_n)\) of functions defined on E is relatively uniform statistical \(\Phi \)-summable to the same limit f on E. \(\square \)

For the converse, we present the following example:

Example 1

Define \(f_n:[0, 4] \rightarrow {\mathbb {R}}\) and \(\sigma (x)\) by

and

In the special case, when \(a=2\), \(b=c\), \(h=2\), \(\alpha (n)=1\), \(\beta (n)=n\) and \(p_n=1\) for all \(n \in {\mathbb {N}}\), we have

It is obvious that neither \((\Delta ^{2,b,b}_{h} f_n)\) nor \((f_n)\) converges uniformly on [0, 4]. On the other hand, since

we obtain

and hence \((f_n)\) is relatively uniform statistical \(\Phi \)-summable to \(f(x)=0\). However, since

and

it follows that \((f_n)\) is not relatively uniform weighted \(\alpha \beta \)-statistical convergent to \(f(x)=0\).

Based on the above example, we conclude that the proposed method is stronger than the classical and statistical versions of relatively uniform convergence introduced in [17, 18].

3 A Korovkin-Type Approximation Theorem

At the beginning of the 1950s, the study of particular approximation processes by means of positive linear operators was extended to general approximating sequences of such operators. The foundations of approximation theory via positive linear operators or functionals were laid by Korovkin [35]. This result, nowadays called the Korovkin-type approximation theorem, has been extended in several directions. Gadjiev and Orhan [36] were the first to establish the classical Korovkin theorem via statistical convergence. In recent years, various approximation results have been proved with the help of extended summability methods [14, 23, 30, 34]. For more details on the use of summability methods in Korovkin-type approximation theorems, we refer to [37, 38].

In this section, we prove a Korovkin-type approximation theorem related to the notion of relatively uniform statistical \(\Phi \)-summability for sequences of functions of two variables. First, we give the definitions of fractional-order partial difference operators of f(x, y) defined on \(E^2\subset {\mathbb {R}}^2\). Secondly, using the generating function type non-tensor Meyer-König and Zeller operators [39, 40], we show that our proposed method works successfully and is more powerful than the existing Korovkin-type approximation theorem based on (relatively) uniform convergence.

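Let a, b and c be real numbers and h be any positive constant. Let \(f:E^2 \rightarrow {\mathbb {R}}\) be any real-valued function which has fractional-order partial derivatives. Then, the fractional-order partial difference operators of f(x, y) with respect to x and y are defined by

$$\begin{aligned} \Delta ^{a,b,c}_{h,x}f(x,y)=\sum _{i=0}^{\infty }\frac{(-a)_i\,(-b)_i}{i!\,(-c)_i\,h^{a+b-c}}\,f(x-ih,y) \end{aligned}$$ (3.1)

and

$$\begin{aligned} \Delta ^{a,b,c}_{h,y}f(x,y)=\sum _{i=0}^{\infty }\frac{(-a)_i\,(-b)_i}{i!\,(-c)_i\,h^{a+b-c}}\,f(x,y-ih), \end{aligned}$$ (3.2)

respectively, where \((-c)_i\ne 0\) for all \(i \in {\mathbb {N}}\) and \((r)_k\) denotes the Pochhammer symbol in (1.3). Without loss of generality, we assume that the summations given in (3.1) and (3.2) converge for all \(a+b>c\) with \((-c)_i\ne 0\) for all \(i \in {\mathbb {N}}\). For instance, taking \(a=1\), \(b=c\) in (3.1), we obtain the first-order partial derivative of f(x, y) with respect to x, that is,

$$\begin{aligned} \lim _{h\rightarrow 0}\Delta ^{1,b,b}_{h,x}f(x,y)=\lim _{h\rightarrow 0}\frac{f(x,y)-f(x-h,y)}{h}=\frac{\partial f}{\partial x}(x,y), \end{aligned}$$

provided that the limit exists (as a finite number).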
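The partial operators (3.1) and (3.2) act on one variable at a time, which the following Python sketch makes explicit. It is a truncated illustration only: the helper name, the truncation level, the test function \(f(x,y)=x^2y+\sin y\) and the choice \(b=c=1/2\) are our own assumptions. With \(a=1\) the weights are 1, -1, 0, 0, ..., so the operator is the first-order backward difference quotient, which approximates \(f_x=2xy\) and \(f_y=x^2+\cos y\) for small h.

```python
import math


def partial_frac_diff(f, x, y, h, a, b, c, wrt="x", terms=200):
    """Truncated (3.1) (wrt="x") or (3.2) (wrt="y").

    Assumes c is not an integer in 0..terms-1, so no (i - c) factor vanishes.
    """
    total, w = 0.0, 1.0  # w holds (-a)_i (-b)_i / (i! (-c)_i)
    for i in range(terms):
        point = (x - i * h, y) if wrt == "x" else (x, y - i * h)
        total += w * f(*point)
        w *= (i - a) * (i - b) / ((i + 1) * (i - c))  # advance to index i + 1
    return total / h ** (a + b - c)


def f(x, y):
    return x**2 * y + math.sin(y)


b = c = 0.5
print(partial_frac_diff(f, 0.3, 0.2, 1e-5, 1, b, c, "x"))  # close to f_x = 2xy = 0.12
print(partial_frac_diff(f, 0.3, 0.2, 1e-5, 1, b, c, "y"))  # close to f_y = x^2 + cos(y)
```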

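By \(C(D_A)\), we denote the space of all continuous real-valued functions on a fixed compact subset \(D_A\) of \({\mathbb {R}}^2\) defined by

$$\begin{aligned} D_A=\{(x,y)\in {\mathbb {R}}^2 : x\ge 0,~ y\ge 0,~ x+y\le A\},\qquad A\in (0,1), \end{aligned}$$

and equipped with the norm

$$\begin{aligned} \Vert f\Vert _{C(D_A)}=\sup _{(x,y)\in D_A}|f(x,y)|. \end{aligned}$$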
Suppose that J is a linear operator from \(C(D_A)\) into itself. Then, as usual, we say that J is a positive linear operator provided that \(f \ge 0\) implies \(Jf \ge 0\). We use the notation J(f(u, v); x, y) for the value of Jf at a point \((x, y)\in D_A\).

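Throughout the paper, we consider the following family of two-dimensional test functions on \(D_A\):

$$\begin{aligned} e_0(x,y)=1,\quad e_1(x,y)=\frac{x}{1-x-y},\quad e_2(x,y)=\frac{y}{1-x-y},\quad e_3(x,y)=\Big (\frac{x}{1-x-y}\Big )^2+\Big (\frac{y}{1-x-y}\Big )^2. \end{aligned}$$ (3.3)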
Theorem 2

Let h be any positive constant, \((\alpha ,\beta ) \in \Lambda \) and \(a, b, c \in {\mathbb {R}}\). Assume that \(\{T_{n}\}\) is a sequence of positive linear operators acting from \(C(D_A)\) into itself satisfying \(|\Delta _{h,x}^{a,b,c} T_n(\cdot ;x,y)|>0\). Assume further that \(\sigma _i(x, y)\) (\(i=0,1,2,3\)) are unbounded scale functions on \(D_A\) such that \(|\sigma _i(x, y)|>0\). Then, for all \(f\in C(D_A)\),

if and only if

where \(\sigma (x, y)=\max \big \{|\sigma _i(x, y)|; i=0,1,2,3\big \}\) and \(e_{i}(\cdot ,\cdot )\) is defined as in (3.3).

Proof

Since each \(e_i\) (\(i=0,1,2,3\)) belongs to \(C(D_A)\), the implication (3.4) \(\Rightarrow \) (3.5) is clear. Let \(f \in C(D_A)\) and \((x, y) \in D_A\) be fixed. Since f is continuous on \(D_A\), given \(\epsilon >0\), there exists a number \(\delta =\delta (\epsilon )>0\) such that

for all \((x, y), (u, v) \in D_A\) satisfying

Also, we obtain, for all \((x, y), (u, v) \in D_A\) satisfying

that

where

and \(M:=\sup _{(x, y) \in D_A}|f(x, y)|\). Combining (3.6) and (3.7), we get for all \((x, y), (u, v) \in D_A\) and \(f \in C(D_A)\) that

It follows from the linearity and positivity of \(T_{k}\) that

Now, multiplying both sides of the above inequality by \(\frac{1}{|\sigma (x, y)|}\) and taking the supremum over \((x,y) \in D_A\), we deduce that

where \(\sigma (x, y)=\max \{|\sigma _i(x, y)|; i=0,1,2,3\}\) and \(N:=\varepsilon +M+\frac{4M}{\delta ^2}.\) We now replace \(T_k(\cdot ; x, y)\) by \(\Phi (T_mf):C(D_A) \rightarrow C(D_A)\) defined by

in (3.9). For a given \(r >0\), we choose a number \(\epsilon >0\) such that \( \sup \limits _{(x,y) \in D_A}\frac{\epsilon }{|\sigma (x, y)|}<r\), and set

where \(i=0,1,2,3\). Then, it is clear that \({\mathcal {A}}\subset \bigcup \limits _{i=0}^3{\mathcal {A}}_{i} \) and hence using the hypothesis (3.5), we have

which completes the proof of Theorem 2. \(\square \)

Remark 1

In Theorem 2, the condition \(|\Delta _{h,x}^{a,b,c} T_n(\cdot ; x,y)|>0\) cannot be removed. For example, if \(a \in {\mathbb {N}}\), \(b=c\) and \(h=1\), then \(\Delta _{h,x}^{a,b,c} T_n(e_0;x,y)=0\), and hence

Therefore, the condition (3.5) does not always hold true for \(i=0\).

We now present an illustrative example for Theorem 2. Before giving this example, we briefly recall the non-tensor Meyer-König and Zeller operators of two variables (see [40, 41]).

Let us consider the following bivariate non-tensor operators:

where \(P^n_{k,l}(u,v)>0\) for all \((u,v) \in D_{{\mathcal {A}}}\), \({\mathcal {A}}\in (0, 1)\) and

For the double indexed function sequence \(\{P^n_{k,l}(u,v)\}_{k,l\in {\mathbb {N}}}\), the generating function \(\Omega _n(u,v;x,y)\) is defined by

Since the nodes are given by

the denominators of

are both independent of k and l, respectively. Throughout the paper, we also suppose that the following conditions hold true (see, for details, [40]):

-

(i)

\(\Omega _n(u,v;x,y)=(1-x-y)~\Omega _{n+1}(u,v;x,y)\);

-

(ii)

\(a_{k+1,l,n} P^n_{k+1,l}(u,v)=b_{n+1}P^{n+1}_{k,l}(u,v)\) and \(c_{k,l+1,n} P^n_{k,l+1}(u,v)=b_{n+1}P^{n+1}_{k,l}(u,v)\);

-

(iii)

\(b_n \rightarrow \infty , \frac{b_{n+1}}{b_n}\rightarrow 1\) and \(b_n\ne 0\) for all \(n\in {\mathbb {N}}\);

-

(iv)

\(a_{k+1,l, n}-a_{k,l, n+1}=\varphi _n\) and \(c_{k,l+1, n}-c_{k,l, n+1}=\xi _n\), where \(|\varphi _n|\le M_0 <\infty \), \(|\xi _n|\le M_1 <\infty \), and \({a_{0,l,n}}=0\), \({c_{k,0,n}}=0\) for all \(n\in {\mathbb {N}}\).
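Conditions (i)-(iv) can be checked concretely for the classical choice \(\Omega _n=(1-x-y)^{-n-1}\), \(P^n_{k,l}=\frac{(n+k+l)!}{n!\,k!\,l!}\), \(a_{k,l,n}=k\), \(c_{k,l,n}=l\) and \(b_n=n\), that is, the non-tensor bivariate MKZ case that is also used in Section 6. The Python sketch below is an illustration under a stated assumption: since the display (3.10) is not reproduced in this text, we assume the nodes \(\big (\frac{k}{n+k+l},\frac{l}{n+k+l}\big )\). This is consistent with the identity \(L_n(e_1)=\frac{b_{n+1}}{b_n}e_1\) used in the proof of Lemma 3. The double series is truncated at total degree `max_deg`, and all names are hypothetical.

```python
import math


def P(n, k, l):
    """P^n_{k,l} = (n+k+l)! / (n! k! l!) as an exact integer."""
    return math.comb(n + k + l, n) * math.comb(k + l, k)


def mkz(f, x, y, n, max_deg=300):
    """Truncated non-tensor MKZ operator with assumed nodes (k, l) / (n + k + l):

    (1-x-y)^(n+1) * sum_{k+l <= max_deg} P^n_{k,l} x^k y^l f(k/(n+k+l), l/(n+k+l)).
    """
    total = 0.0
    for s in range(max_deg + 1):  # s = k + l
        for k in range(s + 1):
            l = s - k
            total += P(n, k, l) * x**k * y**l * f(k / (n + s), l / (n + s))
    return (1 - x - y) ** (n + 1) * total


e0 = lambda u, v: 1.0
e1 = lambda u, v: u / (1 - u - v)

# Condition (ii) with a_{k,l,n} = k, c_{k,l,n} = l, b_n = n, checked exactly.
assert all((k + 1) * P(n, k + 1, l) == (n + 1) * P(n + 1, k, l)
           and (l + 1) * P(n, k, l + 1) == (n + 1) * P(n + 1, k, l)
           for n in range(1, 6) for k in range(6) for l in range(6))

n, x, y = 10, 0.2, 0.3
print(mkz(e0, x, y, n))                          # close to 1
print(mkz(e1, x, y, n), (n + 1) / n * e1(x, y))  # L_n(e1) vs (b_{n+1}/b_n) e1
```

Condition (iv) is immediate for this choice, since \(a_{k+1,l,n}-a_{k,l,n+1}=1\) and \(c_{k,l+1,n}-c_{k,l,n+1}=1\).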

Note that, choosing \(\Omega _n(u,v;x,y)=\frac{1}{(1-x-y)^{n+1}}\), \(a_{k,l, n}=k, c_{k,l, n}=l\) and \(b_n=n\) in (3.10), we get the non-tensor bivariate MKZ operators (see [42]). Using the positivity and linearity of \(L_n\), it can be observed that (see [40])

and

Example 2

Let \(\alpha (n)=1\), \(\beta (n)=n\) and \(p_n=n\) for all \(n \in {\mathbb {N}}\). Define \(f_n:[0, 1] \rightarrow {\mathbb {R}}\) and \(\sigma (x,y)\) by

and

For the special case \(a= 0\) and \(b = c\), one obtains

which implies that

Now, let us suppose that \(\{T_n\}\) is the same as taken in Theorem 2 such that

Then, observe that

and

Taking into account our assumptions (i)–(iv), we have

We will now show that

Now let

By (3.11), (3.14) and the assumption (iv), one obtains

Passing to the limit as \(n \rightarrow \infty \) in the last inequality and using (iii), we see that (3.15) holds. From (3.13) and (3.15), the sequence \(T_n(f; x,y)\) defined by (3.12) satisfies all the assumptions of Theorem 2. Therefore

In view of the above example, our proposed method works successfully, whereas the classical and statistical versions of relatively uniform convergence do not work for this sequence \(\{T_n\}\) of positive linear operators on \(D_A\).

4 Rate of Relatively Uniform Weighted \(\alpha \beta \)-Statistical Convergence

In this section, we estimate the rates of relatively uniform weighted \(\alpha \beta \)-statistical convergence of positive linear operators from \(C(D_A)\) into itself by means of the modulus of continuity.

We now present the following definition.

Definition 4

Let a, b, c be real numbers, h be any positive constant and \((\alpha ,\beta )\in \Lambda \). Let \((\theta _n)\) be a positive non-increasing sequence of real numbers and \(\sigma (x,y)\) be a scale function defined on a compact subset \(E^2\subset {\mathbb {R}}^2\) satisfying \(|\sigma (x,y)|>0\). A sequence \((f_k(x,y))\) of real-valued functions defined on \(E^2\) is said to be relatively uniform weighted statistically convergent to f on \(E^2\) with the rate \(o(\theta _n)\) if, for every \(\epsilon >0\),

In this case, we write \(f_k-f=S_{\Delta }^{(\alpha ,\beta )}(p_k, \sigma )-o(\theta _n)\) on \(E^2\). Since being little "o" of a sequence (function) is a stronger condition than being big "\({\mathcal {O}}\)" of a sequence (function), analogous versions of all the results in this section can be given when little "o" is replaced by big "\({\mathcal {O}}\)".

Lemma 2

Let a, b,c be real numbers, h be any positive constant and \((\alpha ,\beta )\in \Lambda \). Let \((f_k)\) and \((g_k)\) be two function sequences belonging to \(C(E^2)\). Suppose that \((\eta _{n})\) and \((\zeta _{n})\) are positive non-increasing sequences of real numbers such that

where \(|\sigma _i(x,y)|>0\), \(i=0,1\). Let \(\gamma _{n}=\max \{\eta _{n}, \zeta _{n}\}\). Then, the following statements hold:

-

(1)

\((f_{k}-f)\pm (g_{k}-g)=S_{\Delta }^{(\alpha ,\beta )}(p_k, \max \{|\sigma _i(x,y)|:i=0,1\})-o(\gamma _n)~~\text {on}~~E^2\),

-

(2)

\((f_{k}-f)(g_{k}-g)=S_{\Delta }^{(\alpha ,\beta )}(p_k, \sigma _0\sigma _1)-o(\eta _{n} \zeta _{n})~~\text {on}~~E^2\),

-

(3)

\((\lambda (f_{k}-f))=S_{\Delta }^{(\alpha ,\beta )}(p_k, \sigma _0)-o(\eta _n)~~\text {on}~~E^2\), for any scalar \(\lambda \).

Proof

Assume that

Also, for \(\epsilon >0\), define

It is seen that \({\mathcal {D}} \subset {\mathcal {D}}_{0} \cup {\mathcal {D}}_{1}\), which yields, for \(n \in {\mathbb {N}}\), that

where \(|{\mathcal {D}}|\) denotes the cardinality of the set \({\mathcal {D}}\). Now letting \(n \rightarrow \infty \) in the last inequality and using hypothesis, we get

where \(\gamma _{n}=\max \{\eta _{n}, \zeta _{n}\}\). Since the other assertions can be proved similarly, we omit the details. \(\square \)

We now recall the modulus of continuity and some auxiliary facts needed to obtain the rates of weighted statistically relatively uniform convergence in the sense of Definition 4.

Let \(H_{\omega }(D_A)\) denote the space of all real-valued functions f on \(D_A\) such that

where \(\varphi _u(x)\), \(\varphi _v(y)\) are as defined in (3.8), and \(\omega (f; \delta )\) is the modulus of continuity defined by

We then observe that any function in \(H_{\omega }(D_A)\) is continuous and bounded on \(D_A\), and a necessary and sufficient condition for \(f \in H_{\omega }(D_A)\) is that \(\lim _{\delta \rightarrow 0}\omega (f; \delta )=0\).
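The condition \(\lim _{\delta \rightarrow 0}\omega (f;\delta )=0\) can be visualized with a grid estimate of the modulus of continuity. The Python sketch below rests on assumptions we state plainly: the quantities in (3.8) are not reproduced in this text, so we take the distance to be \(\sqrt{\psi }\), with \(\psi \) as in Theorem 3. We also fix \(A=0.6\), assume the triangular shape of \(D_A\), and use a finite grid, so the output is only an estimate. For \(e_1\) the estimate can never exceed \(\delta \), because \(|e_1(u,v)-e_1(x,y)|\le \sqrt{\psi (u,v)}\).

```python
import itertools
import math

A = 0.6  # assumed value of A in (0, 1)


def grid(m):
    """Grid points of the triangle x, y >= 0, x + y <= A (assumed shape of D_A)."""
    step = A / m
    return [(i * step, j * step) for i in range(m + 1) for j in range(m + 1 - i)]


def dist(p, q):
    """Assumed distance sqrt(psi): Euclidean distance of the (x, y)/(1-x-y) images."""
    (x, y), (u, v) = p, q
    return math.hypot(u / (1 - u - v) - x / (1 - x - y),
                      v / (1 - u - v) - y / (1 - x - y))


def modulus(f, delta, m=30):
    """Grid estimate of omega(f; delta) = sup{|f(p) - f(q)| : dist(p, q) <= delta}."""
    pts = grid(m)
    vals = [f(*p) for p in pts]
    best = 0.0
    for (p, fp), (q, fq) in itertools.combinations(zip(pts, vals), 2):
        if dist(p, q) <= delta:
            best = max(best, abs(fp - fq))
    return best


def g(x, y):
    return math.sin(3 * x) * math.cos(2 * y)


for delta in (0.4, 0.2, 0.1, 0.05):
    print(delta, modulus(g, delta))  # non-increasing as delta decreases
```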

Theorem 3

Let a, b, c be real numbers, h be any positive constant and \((\alpha ,\beta )\in \Lambda \). Also, let \(\{B_{k}\}\) be a sequence of positive linear operators acting from \(H_{\omega }(D_A)\) into \(C(D_A)\). Assume that the following conditions hold:

-

(i)

\(B_{k}(e_{0})-e_{0} =S_{\Delta }^{(\alpha ,\beta )}(p_k, \sigma _0)-o(\eta _n)~~\text {on}~~D_A\), where \(e_{0}(u,v)=1\),

-

(ii)

\(\omega (f; \lambda _{k}) =S_{\Delta }^{(\alpha ,\beta )}(p_k, \sigma _1)-o(\zeta _n)~~\text {on}~~D_A\), where \(\lambda _{k}:=\sqrt{|B_{k}(\psi ; x, y)|}\) with

$$\begin{aligned}\psi (u, v) =\left( \frac{u}{1-u-v}-\frac{x}{1-x-y}\right) ^2+\left( \frac{v}{1-u-v}-\frac{y}{1-x-y}\right) ^2.\end{aligned}$$

Then, we have, for all \(f \in H_{\omega }(D_A)\),

where \(\gamma _{n}=\max \{\eta _{n}, \zeta _{n}\}\) and \(\sigma (x,y)=\max \{|\sigma _0(x,y)|, |\sigma _1(x,y)|, |\sigma _0(x,y)\sigma _1(x,y)|\}\).

Proof

Let \(f \in H_{\omega }(D_A)\) and \((x, y) \in D_A\) be fixed. Then, since \(e_{0}(u,v)=1\), by (4.1) and the monotonicity of \(\{B_{k}\}\), we see (for any \(\delta >0\) and \(k\in {\mathbb {N}})\) that

Now, multiplying both sides of the above inequality by \(\frac{1}{|\sigma (x,y)|}\) and taking the supremum over \((x,y) \in D_A\), we obtain

where \(N:=\sup \limits _{(x,y) \in D_A}|f(x,y)|\). Put \(\delta =\lambda _{k}=\sqrt{|B_{k}(\psi ; x, y)|}\) and replace \(B_{k}(\cdot ;x,y)\) by \(\widetilde{B}_{k}(\cdot ;x,y)=|\Delta ^{a,b,c}_{h,x} B_k(\cdot ;x,y)|\), so we get

For a given \(\epsilon >0\), we consider the following sets:

Then it follows from (4.2) that \({\mathcal {J}}\subset {\mathcal {J}}_{0} \cup {\mathcal {J}}_{1}\cup {\mathcal {J}}_{2}\). Now, since \(\gamma _{n}=\max \{\eta _{n}, \zeta _{n}\}\), we get, for every \(n \in {\mathbb {N}}\), that

Taking Lemma 2 into account and passing to the limit as \(n \rightarrow \infty \) in the last inequality, we see that

whence the result. \(\square \)

5 A Voronovskaja-Type Approximation Theorem

For the pointwise convergence of a sequence of positive linear operators, Voronovskaja's theorem (see [43]) concerning the asymptotic behavior of Bernstein polynomials plays a crucial role. In this section, based on relatively uniform statistical \(\Phi \)-summability, we prove a Voronovskaja-type approximation theorem for the family \(T_{n}\) of linear operators defined in Example 2.

Lemma 3

Let h be a positive constant, \((\alpha ,\beta ) \in \Lambda \) and \(a, b, c\in {\mathbb {R}}\). Then

and

where

Proof

Since the proof of (5.2) is similar, we prove only (5.1). Suppose that a, b, c are real numbers, \(h>0\) and \((x, y) \in D_A\). Since \(L_{n}(e_{1})=\frac{b_{n+1}}{b_n}e_{1}\), we can write

Also, since

we find that

Using assumption (iv), multiplying both sides of the above equality by \(\frac{1}{|\sigma (x,y)|}\), and taking the supremum over \((x, y) \in D_A\), we get

Replacing \(\{b_nT_n(\eta _u^2)\}\) by

and passing to the limit as \(n \rightarrow \infty \) in the last inequality we have

\(\square \)

Corollary

Let \((x, y) \in D_A\), and let \(\eta _u(x)\) and \(\eta _v(y)\) be given as in Lemma 3. Then there are two positive constants \(R_0(x)\) and \(R_0(y)\) depending only on x and y, respectively, such that

and

In order to describe the asymptotic behavior of \(T_n\), we present a Voronovskaja-type approximation theorem [43]. For simplicity, we use the following notation in the next theorem:

Theorem 4

Let h be a positive constant, \((\alpha ,\beta ) \in \Lambda \), \((x^*,y^*) \in D_A\) and \(a, b, c\in {\mathbb {R}}\). Then, for every \(f\in C_B(D_A)\) such that \(f_x, f_y, f_{xx}, f_{xy}, f_{yy} \in C_B(D_A),\)

where \(C_B(D_A)\) denotes the space of all continuous and bounded real-valued functions on the compact subset \(D_A\) of \({\mathbb {R}}^2\).

Proof

Let \((x^*,y^*) \in D_A\) and \(f_x, f_y, f_{xx}, f_{xy}, f_{yy} \in C_B(D_A)\). By the Taylor formula for \(f\in C_B(D_A)\), we have

where the function \(\theta _{(x^*,y^*)}\) is the remainder,

We thus observe that the operator \(T_{n}\) is linear and that

Now, we recall that, if \(g\in C_B(D_A)\) and if \(g(s, t)=g_1(s)g_2(t)\) for all \((s, t) \in D_A\), then

Upon multiplying both sides by \(b_n\), \(n \in {\mathbb {N}}\), in (5.4), and using (5.3) and (5.5), we get

Taking the supremum over \((x^*,y^*) \in D_A\) in (5.4), one obtains

We will now show that

Applying the Cauchy–Schwarz inequality and using (5.5), we obtain

Let us consider \(\theta ^2_{(x^*,y^*)}(u^*,v^*)=\gamma _{(x^*,y^*)}(u^*,v^*).\) In this case, we see that

From Theorem 2, we observe that

Using the above Corollary, the inclusion (5.7) holds true. Now we replace \(\big \{b_n(T_{n}f-f)\big \}\) by

Letting \(n \rightarrow \infty \) in (5.6) and considering the assumption (iv), we have

where

Since \({\overline{N}}_\Phi -f_n\rightrightarrows 0~(\sigma , D_A)\), then

Using (5.8), (5.9) and Lemma 3, we obtain

which completes the proof. \(\square \)

6 Computational and Geometrical Interpretations

In this section, using the positive linear operator \(L_n(f;x,y)\) given in (3.10), we provide computational and geometrical interpretations of Theorem 2 for different choices of the parameters. More complicated infinite series can be computed in a similar manner on faster hardware.

Here, in our computations, we take

- \(\Omega _n(u,v;x,y)=(1-x-y)^{-n-1}\) and \(P^n_{k,l}(u,v)=\frac{(n+k+l)!}{n!~k!~l!}\);
- \(a_{k,l,n}=k\), \(c_{k,l,n}=l\) and \(b_n=n\);
- \(a=5/2\), \(b=c\) and \(h=\frac{\sqrt{3}}{4}\);
- \(\alpha (n)=1\), \(\beta (n)=n\) and \(p_n=1\) for all \(n \in {\mathbb {N}}\);
- \(f_n(x,y)\) is given as in Example 2, and \(\sigma (x,y)=1\) for all \((x,y) \in D_A\).
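The choice \(\sigma (x,y)=1\) means that relatively uniform convergence \(\rightrightarrows (\sigma , D_A)\) reduces here to ordinary uniform convergence. Recall that \(f_n\rightrightarrows f~(\sigma , D)\) if for every \(\varepsilon >0\) there is \(n_\varepsilon \) such that \(|f_n-f|\le \varepsilon |\sigma |\) on \(D\) for all \(n\ge n_\varepsilon \). The Python sketch below estimates \(\sup |f_n-f|/|\sigma |\) over sample points; the helper name `relative_sup_error` and the one-variable example \(f_n(x)=1/(nx)\) on \((0,1]\) are illustrative assumptions, not taken from the paper. The example shows how a scale function can turn a non-uniform convergence into a relatively uniform one.

```python
import math

def relative_sup_error(f_n, f, sigma, points):
    """Sample estimate of sup |f_n(x) - f(x)| / |sigma(x)| over `points`.

    Where sigma vanishes, relative uniform convergence forces f_n(x) == f(x),
    so any mismatch there is reported as an infinite error.
    """
    worst = 0.0
    for x in points:
        diff = abs(f_n(x) - f(x))
        scale = abs(sigma(x))
        if scale == 0.0:
            if diff != 0.0:
                return math.inf
            continue
        worst = max(worst, diff / scale)
    return worst

# Sample points in (0, 1] accumulating at 0.
points = [10.0 ** (-j) for j in range(7)]
zero = lambda x: 0.0
for n in (1, 10, 100):
    f_n = lambda x, n=n: 1.0 / (n * x)   # hypothetical example: f_n -> 0 pointwise on (0, 1]
    uniform = relative_sup_error(f_n, zero, lambda x: 1.0, points)       # sigma = 1
    relative = relative_sup_error(f_n, zero, lambda x: 1.0 / x, points)  # sigma(x) = 1/x
    print(n, uniform, relative)
```

A finite sample can only suggest this behaviour: with \(\sigma =1\) the estimate grows without bound as sample points approach 0, whereas with \(\sigma (x)=1/x\) it equals \(1/n\) at every point.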

Using the above choices, we may define the operator \(\Phi (L_m(f;x,y))\) by

where

By means of (3.1), for \(a=5/2,b=c\) and \(h=\frac{\sqrt{3}}{4}\), one gets

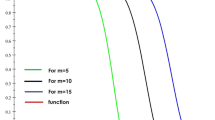
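The role of the choice \(b=c\) can be seen at the level of coefficients. Assuming that the fractional-order difference operator in (3.1) has coefficients of the form \(\frac{(-a)_i\,(b)_i}{i!\,(c)_i}\), with \((x)_i\) the Pochhammer symbol (the form used for generalized fractional difference operators), the factor \((b)_i/(c)_i\) equals 1 when \(b=c\), and the coefficients reduce to \((-1)^i\binom{a}{i}\), those of the fractional difference of order \(a\). How the step \(h\) enters (3.1) is not reproduced in this section, so \(h\) is omitted. The helper names and the value \(b=c=3\) below are hypothetical; the sketch only illustrates this cancellation for \(a=5/2\) using exact rational arithmetic.

```python
from fractions import Fraction

def pochhammer(x, i):
    """Rising factorial (x)_i = x (x+1) ... (x+i-1), with (x)_0 = 1."""
    result = Fraction(1)
    for j in range(i):
        result *= x + j
    return result

def difference_coefficients(a, b, c, count):
    """First `count` coefficients (-a)_i (b)_i / (i! (c)_i) of a generalized
    fractional difference operator (assumed form; c must avoid 0, -1, -2, ...)."""
    coeffs = []
    factorial = Fraction(1)
    for i in range(count):
        if i > 0:
            factorial *= i
        coeffs.append(pochhammer(-a, i) * pochhammer(b, i) / (factorial * pochhammer(c, i)))
    return coeffs

a = Fraction(5, 2)
b = c = Fraction(3)   # hypothetical value; any admissible b = c gives the same result
print(difference_coefficients(a, b, c, 5))
# [Fraction(1, 1), Fraction(-5, 2), Fraction(15, 8), Fraction(-5, 16), Fraction(-5, 128)]
```

For non-integer \(a\) such as \(5/2\) the coefficients never vanish, so the operator involves infinitely many terms; for a positive integer \(a\) they terminate after \(i=a\).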

The convergence of \(\Phi (L_m(e_0;x,y))\) to the function \(e_0(x, y)=1\) is illustrated in Fig. 1 for \(m = 5\), \(m = 10\), \(m = 15\) and \(m=20\), where the summation indices k and l each run from 0 to 20.
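The truncation \(k,l\le 20\) is not innocuous. With \(\Omega _n=(1-x-y)^{-n-1}\) and \(P^n_{k,l}=\frac{(n+k+l)!}{n!\,k!\,l!}\), the multinomial identity \(\sum _{k,l\ge 0}P^n_{k,l}\,x^k y^l=(1-x-y)^{-n-1}\) holds for \(x,y\ge 0\), \(x+y<1\), so the fully normalized sum equals 1. Assuming that (3.10) normalizes the kernel by \(\Omega _n\) in this way, the Python sketch below (hypothetical helper `truncated_e0`) evaluates the truncated normalized sum. It stays close to 1 for small \(x+y\) but drops noticeably as \(n\) and \(x+y\) grow, because the weight mass moves to larger \(k\) and \(l\).

```python
from math import comb

def truncated_e0(n, x, y, K=20):
    """(1 - x - y)^(n+1) * sum_{k,l=0}^{K} P^n_{k,l} x^k y^l, where
    P^n_{k,l} = (n+k+l)! / (n! k! l!) = C(n+k+l, n) * C(k+l, k)."""
    total = 0.0
    for k in range(K + 1):
        for l in range(K + 1):
            total += comb(n + k + l, n) * comb(k + l, k) * x**k * y**l
    return (1.0 - x - y) ** (n + 1) * total

for m in (5, 10, 15, 20):
    # near the origin the truncation error is tiny; near x + y = 0.8 it is large
    print(m, truncated_e0(m, 0.1, 0.1), truncated_e0(m, 0.4, 0.4))
```

Raising `K` restores values close to 1 at the cost of more terms, which is the computational burden alluded to at the start of this section.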

Also, from Fig. 2, it can be observed that, as the value of m increases, the sequence \(\Phi (L_m(e_{1};x,y))\) converges to the function \(e_{1}(x,y)=\frac{x}{1-x-y}.\)
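A closed form makes the limit \(x/(1-x-y)\) plausible. Differentiating the identity \(\sum _{k,l}P^n_{k,l}x^k y^l=(1-x-y)^{-n-1}\) with respect to \(x\) gives \(\sum _{k,l}k\,P^n_{k,l}x^k y^l=(n+1)\,x\,(1-x-y)^{-n-2}\). As a plausibility check only, suppose hypothetically that the operator samples the first coordinate at the nodes \(a_{k,l,n}/b_n=k/n\); the normalized first moment is then exactly \(\frac{n+1}{n}\cdot \frac{x}{1-x-y}\), which tends to \(x/(1-x-y)\) as \(n\rightarrow \infty \). The sampling nodes of (3.10) are not reproduced in this section, so this is not a statement about the paper's operator; the helper names below are hypothetical.

```python
from math import comb

def truncated_first_moment(n, x, y, K=100):
    """(1 - x - y)^(n+1) * sum_{k,l<=K} (k / n) * P^n_{k,l} x^k y^l,
    i.e. the normalized kernel applied to the first coordinate at the
    hypothetical nodes k / n."""
    total = 0.0
    for k in range(K + 1):
        for l in range(K + 1):
            total += (k / n) * comb(n + k + l, n) * comb(k + l, k) * x**k * y**l
    return (1.0 - x - y) ** (n + 1) * total

def closed_form(n, x, y):
    """Exact value of the untruncated sum: (n + 1) / n * x / (1 - x - y)."""
    return (n + 1) / n * x / (1.0 - x - y)

for n in (5, 10, 20):
    print(n, truncated_first_moment(n, 0.1, 0.1), closed_form(n, 0.1, 0.1))
```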

Similarly, for the test function given by

and different values of m, it is also observed that \(\Phi (L_m(e_{3};x,y))\) converges to the function \(e_{3}(x,y)\).

Figures 1, 2 and 3 clearly show that the conditions (3.5) of Theorem 2 are satisfied for \(i=0,1,2,3\).

We observe from Fig. 4 that, as the value of m increases, the operators given by (6.1) converge toward the function. Furthermore, Fig. 4 shows that the condition (3.4) holds true for the function

References

Fast, H.: Sur la convergence statistique. Colloq. Math. 2, 241–244 (1951)

Steinhaus, H.: Sur la convergence ordinaire et la convergence asymptotique. Colloq. Math. 2, 73–74 (1951)

Schoenberg, I.J.: The integrability of certain functions and related summability methods. Am. Math. Mon. 66, 361–375 (1959)

Balcerzak, M., Dems, K., Komisarski, A.: Statistical convergence and ideal convergence for sequences of functions. J. Math. Anal. Appl. 328, 715–729 (2007)

Kolk, E.: Matrix summability of statistically convergent sequences. Analysis 13, 77–83 (1993)

Edely, O.H.H., Mursaleen, M.: On statistical \(A\)-summability. Math. Comput. Model. 49, 672–680 (2009)

Šalát, T.: On statistically convergent sequences of real numbers. Math. Slovaca 30(2), 139–150 (1980)

Mursaleen, M., Edely, O.H.H.: Generalized statistical convergence. Inform. Sci. 162, 287–294 (2004)

Buck, R.C.: Generalized asymptotic density. Am. J. Math. 75, 335–346 (1953)

Buck, R.C.: The measure theoretic approach to density. Am. J. Math. 68, 560–580 (1946)

Karakaya, V., Chishti, T.A.: Weighted statistical convergence. Iran. J. Sci. Technol. Trans. A. Sci. 33, 219–223 (2009)

Mursaleen, M., Karakaya, V., Ertürk, M., Gürsoy, F.: Weighted statistical convergence and its application to Korovkin type approximation theorem. Appl. Math. Comput. 218, 9132–9137 (2012)

Srivastava, H.M., Mursaleen, M., Khan, A.: Generalized equi-statistical convergence of positive linear operators and associated approximation theorems. Math. Comput. Model. 55, 2041–2051 (2012)

Kadak, U., Braha, N.L., Srivastava, H.M.: Statistical weighted \(B\)-summability and its applications to approximation theorems. Appl. Math. Comput. 302, 80–96 (2017)

Aktuğlu, H.: Korovkin type approximation theorems proved via \(\alpha \beta \)-statistical convergence. J. Comput. Appl. Math. 259, 174–181 (2014)

Moore, E.H.: An Introduction to a Form of General Analysis. The New Haven Mathematical Colloquium. Yale University Press, New Haven (1910)

Chittenden, E.W.: On the limit functions of sequences of continuous functions converging relatively uniformly. Trans. Am. Math. Soc. 20, 179–184 (1919)

Demirci, K., Orhan, S.: Statistically relatively uniform convergence of positive linear operators. Results Math. 69, 359–367 (2016)

Duman, O., Orhan, C.: \(\mu \)-statistically convergent function sequences. Czechoslovak Math. J. 54, 413–422 (2004)

Demirci, K., Orhan, S.: Statistical relative approximation on modular spaces. Results Math. 71(3–4), 1167–1184 (2017)

Chapman, S.: On non-integral orders of summability of series and integrals. Proc. Lond. Math. Soc. 2(9), 369–409 (1911)

Kuttner, B.: A limitation theorem for differences of fractional order. J. Lond. Math. Soc. 43, 758–762 (1968)

Kadak, U.: Generalized weighted invariant mean based on fractional difference operator with applications to approximation theorems for functions of two variables. Results Math. 72(3), 1181–1202 (2017)

Kadak, U., Baliarsingh, P.: On certain Euler difference sequence spaces of fractional order and related dual properties. J. Nonlinear Sci. Appl. 8, 997–1004 (2015)

Baliarsingh, P.: Some new difference sequence spaces of fractional order and their dual spaces. Appl. Math. Comput. 219, 9737–9742 (2013)

Baliarsingh, P.: On a fractional difference operator. Alex. Eng. J. 55(2), 1811–1816 (2016)

Kadak, U.: On weighted statistical convergence based on \((p, q)\)-integers and related approximation theorems for functions of two variables. J. Math. Anal. Appl. 443, 752–764 (2016)

Kadak, U.: Weighted statistical convergence based on generalized difference operator involving \((p, q)\)-gamma function and its applications to approximation theorems. J. Math. Anal. Appl. 448, 1633–1650 (2017)

Belen, C., Mohiuddine, S.A.: Generalized weighted statistical convergence and application. Appl. Math. Comput. 219, 9821–9826 (2013)

Braha, N.L., Srivastava, H.M., Mohiuddine, S.A.: A Korovkin’s type approximation theorem for periodic functions via the statistical summability of the generalized de la Vallée Poussin mean. Appl. Math. Comput. 228, 162–169 (2014)

Mursaleen, M.: \(\lambda \)-statistical convergence. Math. Slovaca 50, 111–115 (2000)

Fridy, J.A., Orhan, C.: Lacunary statistical convergence. Pac. J. Math. 160, 43–51 (1993)

Et, M., Şengül, H.: Some Cesàro-type summability spaces of order \(\alpha \) and lacunary statistical convergence of order \(\alpha \). Filomat 28, 1593–1602 (2014)

Braha, N.L., Loku, V., Srivastava, H.M.: \(\Lambda ^2\)-Weighted statistical convergence and Korovkin and Voronovskaya type theorems. Appl. Math. Comput. 266, 675–686 (2015)

Korovkin, P.P.: Linear Operators and Approximation Theory. Hindustan Publishing Corporation, Delhi (1960)

Gadjiev, A.D., Orhan, C.: Some approximation theorems via statistical convergence. Rocky Mt. J. Math. 32(1), 129–138 (2002)

Atlıhan, Ö.G., Ünver, M., Duman, O.: Korovkin theorems on weighted spaces: revisited. Period. Math. Hung. 75(2), 201–209 (2017)

Duman, O., Khan, M.K., Orhan, C.: \(A\)-statistical convergence of approximating operators. Math. Inequal. Appl. 6, 689–699 (2003)

Meyer-König, W., Zeller, K.: Bernsteinsche Potenzreihen. Studia Math. 19, 89–94 (1960)

Özarslan, M.A.: New Korovkin type theorem for non-tensor Meyer-König and Zeller operators. Results Math. 69(3–4), 327–343 (2016)

Taşdelen, F., Erençin, A.: The generalization of bivariate MKZ operators by multiple generating functions. J. Math. Anal. Appl. 331, 727–735 (2007)

López-Moreno, A.-J., Muñoz-Delgado, F.-J.: Asymptotic expansion of multivariate conservative linear operators. J. Comput. Appl. Math. 150(2), 219–251 (2003)

Voronovskaja, E.V.: Détermination de la forme asymptotique de l’approximation des fonctions par les polynômes de M. Bernstein. Dokl. Akad. Nauk SSSR 4, 79–85 (1932)


Ethics declarations

Conflict of interest

The authors declare that they have no competing interests.

Additional information

Communicated by Rosihan M. Ali.


Cite this article

Kadak, U., Srivastava, H.M. & Mursaleen, M. Relatively Uniform Weighted Summability Based on Fractional-Order Difference Operator. Bull. Malays. Math. Sci. Soc. 42, 2453–2480 (2019). https://doi.org/10.1007/s40840-018-0612-2


DOI: https://doi.org/10.1007/s40840-018-0612-2

Keywords

- Weighted statistical convergence and weighted statistical summability

- Fractional-order difference operators of functions

- Relatively uniform convergence

- The rates of convergence

- Korovkin- and Voronovskaja-type approximation theorems