Abstract

In this article, numerical approximations based on generalized fractional Mittag–Leffler and generalized fractional Laguerre functions are developed. A new formula for the Laguerre function and its fractional versions is stated, and its fractional-order derivative is evaluated. A new formula for the fractional Mittag–Leffler function is also stated. Differential and integral Galerkin methods based on the generalized fractional Mittag–Leffler and generalized fractional Laguerre functions are constructed and applied to linear and nonlinear differential equations of fractional order. Several examples are included to demonstrate the applicability and efficiency of the proposed methods.

Introduction

Since the last century, fractional differential equations have played an important role in solving many physical problems, because they can model a wide range of natural phenomena, engineering systems, and economic and commercial models [1,2,3,4]. Some of these phenomena are complex and very difficult to understand and solve; however, they can be analysed and solved more easily when they are described by ordinary fractional differential equations.

Because most such problems have no exact solution, the numerical treatment of fractional differential equations has become widespread over the last two decades. Pedas and Tamme [5] investigated the numerical solution of fractional differential equations with initial values by piecewise polynomial collocation methods; they studied the order of convergence and established a superconvergence effect for a special choice of collocation points. Yan et al. [6] introduced an accurate numerical technique for solving differential equations of fractional order.

In this work we present two approaches, called differentiation Galerkin methods and integration Galerkin methods, and apply them to linear and nonlinear equations using two families of approximating functions. The first is based on a generalized fractional form of the Laguerre function, and the second on the generalized fractional Mittag–Leffler function. The Galerkin collocation method is an approximation technique for obtaining numerical solutions of differential problems and has several advantages for this class of problems: the unknown coefficients can be computed readily with standard numerical software, which makes the method efficient and fast in practice [7, 8].

The outline of the paper is as follows. In Sect. 2, we recall the definitions of fractional calculus. The properties of the generalized fractional Mittag–Leffler function are explained in Sect. 3.1. The Laguerre function and the generalized fractional Laguerre function are reformulated for approximation in Sects. 3.2 and 3.3. In Sect. 4, we explain how to apply the Galerkin method to linear and nonlinear equations and present four numerical methods for the given problem, namely the Mittag differential Galerkin method (MDGM), the Mittag integral Galerkin method (MIGM), the Laguerre differential Galerkin method (LDGM), and the Laguerre integral Galerkin method (LIGM). In Sect. 5, we state the theorems used for error estimation. In Sect. 6, we include several examples to illustrate the simplicity and capability of the proposed methods. Finally, conclusions are presented in Sect. 7.

Preliminaries

In this section, we recall some basic properties of fractional derivatives and integrals, using the widely adopted Caputo definition of the fractional derivative.

Definition 1

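[9] The Caputo fractional derivative \( D_C^\mu \) of order \(\mu >0\), where \(n-1<\mu <n\) and \(n \in \mathbb {N}\), is given by:

\[ D_C^\mu y(x)=\frac{1}{\Gamma (n-\mu )}\int _0^x \frac{y^{(n)}(t)}{(x-t)^{\mu -n+1}}\,dt. \]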

The Caputo fractional derivative operator satisfies the following properties [10]: For constants \( \zeta _k,k=1,2,\ldots ,n, \) we have:

then

In fact, if \(\mu \) is an integer, the Caputo differential operator coincides with the usual differential operator:

\[ D_C^\mu y(x)=\frac{d^{\mu } y(x)}{dx^{\mu }},\quad \mu \in \mathbb {N}. \]

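For the power function \(x^{n}\), the Caputo fractional derivative is:

\[ D_C^\mu x^{n}=\begin{cases} 0, & n\in \mathbb {N}_0,\ n<\lceil \mu \rceil ,\\ \dfrac{\Gamma (n+1)}{\Gamma (n+1-\mu )}\,x^{n-\mu }, & n\in \mathbb {N}_0,\ n\ge \lceil \mu \rceil . \end{cases} \]

The fractional integral of \(x^{n}\) is given by

\[ I^\mu x^{n}=\frac{\Gamma (n+1)}{\Gamma (n+1+\mu )}\,x^{n+\mu }. \]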

To illustrate these definitions, we use MATLAB with \(n = 2\) and vary \(\mu \) from 0 to 3, as shown in Fig. 1.
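The curves of Fig. 1 follow from two power rules: \(D_C^\mu x^{n}=\frac{\Gamma (n+1)}{\Gamma (n+1-\mu )}x^{n-\mu }\) for \(n\ge \lceil \mu \rceil \) (and 0 otherwise), and \(I^\mu x^{n}=\frac{\Gamma (n+1)}{\Gamma (n+1+\mu )}x^{n+\mu }\). The following Python sketch is a stand-in for the MATLAB computation, not the authors' script; it assumes n is a non-negative integer and that the fractional integral is of Riemann–Liouville type. The function names are illustrative.

```python
import math


def caputo_power(n: int, mu: float, x: float) -> float:
    """Caputo derivative D_C^mu of x**n for an integer n >= 0 and order mu >= 0."""
    # Powers below ceil(mu) are annihilated: the Caputo operator first
    # differentiates ceil(mu) times, which sends x**n to zero.
    if n < math.ceil(mu):
        return 0.0
    return math.gamma(n + 1) / math.gamma(n + 1 - mu) * x ** (n - mu)


def frac_integral_power(n: int, mu: float, x: float) -> float:
    """Fractional (Riemann-Liouville) integral I^mu of x**n, for mu >= 0."""
    return math.gamma(n + 1) / math.gamma(n + 1 + mu) * x ** (n + mu)


# Mirror the Fig. 1 setting: n = 2 and mu from 0 to 3, evaluated at x = 0.5.
for mu in [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0]:
    d = caputo_power(2, mu, 0.5)
    i = frac_integral_power(2, mu, 0.5)
    print(f"mu={mu:.1f}  D^mu x^2={d:.6f}  I^mu x^2={i:.6f}")
```

For \(\mu \ge 3\) the derivative of \(x^{2}\) vanishes, while the integral keeps raising the power, which is the qualitative behaviour one expects in such a plot.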

Some Properties of Approximating Polynomials

Generalized Fractional Mittag–Leffler Function

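The one-parameter Mittag–Leffler function is defined as [11]:

\[ E^{\beta }(x)=\sum _{k=0}^{\infty }\frac{x^{k}}{\Gamma (\beta k+1)},\quad \beta >0. \]

The two-parameter Mittag–Leffler function is given by [11]:

\[ E^{\beta ,\gamma }(x)=\sum _{k=0}^{\infty }\frac{x^{k}}{\Gamma (\beta k+\gamma )},\quad \beta ,\gamma >0. \]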

As special cases, we have \(E^{\beta ,1} (x)=E^\beta (x)\) and \(E^{1,1} (x)=E^1(x)=e^x\). The finite (truncated) version of the two-parameter Mittag–Leffler function for any integer n is given by [12]:

that is

so, we can write
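Numerically, these functions are evaluated by truncating the series \(E^{\beta ,\gamma }(x)=\sum _{k}x^{k}/\Gamma (\beta k+\gamma )\). The Python sketch below sums the first n+1 terms; whether this partial sum coincides exactly with the finite version of [12] is an assumption. It works with `math.lgamma` so that large Gamma values do not overflow, and it can be checked against the special case \(E^{1,1}(x)=e^x\) and the classical identities \(E^{2}(-x^{2})=\cos x\), \(E^{1,2}(x)=(e^{x}-1)/x\) and \(E^{1/2}(x)=e^{x^{2}}\operatorname {erfc}(-x)\). The name `mittag_leffler` and the default n = 80 are illustrative choices.

```python
import math


def mittag_leffler(x: float, beta: float, gamma: float = 1.0, n: int = 80) -> float:
    """Partial sum of E^{beta,gamma}(x) = sum_{k=0}^{n} x**k / Gamma(beta*k + gamma).

    Requires beta > 0 and gamma > 0; gamma = 1 gives the one-parameter E^beta.
    """
    total = 0.0
    for k in range(n + 1):
        # x**k / Gamma(beta*k + gamma), with the Gamma factor taken in log form
        total += x ** k * math.exp(-math.lgamma(beta * k + gamma))
    return total


# Special case from the text: E^{1,1}(1) = e
print(mittag_leffler(1.0, 1.0), math.e)
# One-parameter function with a fractional parameter, E^{0.5}(0.3)
print(mittag_leffler(0.3, 0.5))
```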

The generalized Mittag–Leffler function is defined as:

and its modified form is:

From Eq. (13), the first modification of the generalized Mittag–Leffler function (GMLF) is:

The fractional two-parameter Mittag–Leffler function in Eq. (15) generalizes the usual Mittag–Leffler function in Eq. (9): when \(\alpha =n\), Eqs. (9) and (15) are identical.

The fractional-order derivative of Eq. (15) is given by:

The fractional-order integral of Eq. (15) is given by:

Laguerre Function

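The generalized Laguerre polynomials \(L_{\mu , n} (x), n=0, 1, 2, \ldots \) and \(\mu >-1\) can be defined on the interval \([0, \infty )\) [13] by the recurrence:

\[ L_{\mu ,n+1}(x)=\frac{(2n+\mu +1-x)\,L_{\mu ,n}(x)-(n+\mu )\,L_{\mu ,n-1}(x)}{n+1},\quad n=1,2,\ldots , \]

where \(L_{\mu , 0} (x)=1\) and \(L_{\mu , 1} (x)=1+\mu -x.\) The explicit formula for the generalized Laguerre polynomials is given by:

\[ L_{\mu ,n}(x)=\sum _{k=0}^{n}(-1)^{k}\frac{\Gamma (n+\mu +1)}{\Gamma (n-k+1)\,\Gamma (\mu +k+1)}\,\frac{x^{k}}{k!}. \]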
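Both characterizations can be cross-checked numerically. The Python sketch below evaluates \(L_{\mu ,n}(x)\) with the standard three-term recurrence \((k+1)L_{\mu ,k+1}=(2k+\mu +1-x)L_{\mu ,k}-(k+\mu )L_{\mu ,k-1}\), started from \(L_{\mu ,0}=1\) and \(L_{\mu ,1}=1+\mu -x\), and compares it with the standard explicit sum. The recurrence is usually the better choice in practice, since it avoids large Gamma ratios and alternating cancellation. The function names are illustrative.

```python
import math


def laguerre_recurrence(n: int, mu: float, x: float) -> float:
    """Generalized Laguerre L_{mu,n}(x) from L_{mu,0} = 1, L_{mu,1} = 1 + mu - x."""
    if n == 0:
        return 1.0
    prev, curr = 1.0, 1.0 + mu - x
    for k in range(1, n):
        # (k+1) L_{k+1} = (2k + mu + 1 - x) L_k - (k + mu) L_{k-1}
        prev, curr = curr, ((2 * k + mu + 1 - x) * curr - (k + mu) * prev) / (k + 1)
    return curr


def laguerre_explicit(n: int, mu: float, x: float) -> float:
    """Explicit sum over k of (-1)^k Gamma(n+mu+1) / (Gamma(n-k+1) Gamma(mu+k+1)) x^k / k!."""
    return sum(
        (-1) ** k
        * math.gamma(n + mu + 1)
        / (math.gamma(n - k + 1) * math.gamma(mu + k + 1))
        * x ** k
        / math.factorial(k)
        for k in range(n + 1)
    )


for n in range(5):
    print(n, laguerre_recurrence(n, 0.5, 1.2), laguerre_explicit(n, 0.5, 1.2))
```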

Generalized Fractional Laguerre Functions

Here, we give a representation of the fractional-order Laguerre-type functions \(L_{n}^{\gamma ,\beta }(x)\). The Rodrigues formula for the Laguerre polynomials [14] is:

The fractional-order version of the Laguerre polynomial (20) is:

The Leibniz rule for fractional derivatives [15] states:

Applying Eq. (22) to Eq. (21), we obtain:

Using Eqs. (4) and (23), we get:

Lemma 1

Let \(L_{\alpha }^{\beta ,\gamma }(x)\) be a generalized fractional Laguerre polynomial. Then its fractional-order derivative is given by:

where \(x\in \mathbb {R},~~ \mu >0\) and \(\alpha ,~ \beta ,~ \gamma >0.\)

Lemma 2

Let \(L_{\alpha }^{\beta ,\gamma }(x)\) be a generalized fractional Laguerre polynomial. Then its fractional-order integral is given by:

where \(x\in \mathbb {R},~~ \mu >0\) and \(\alpha ,~ \beta ,~ \gamma >0.\)

Methods of Numerical Solution

Galerkin Method

To introduce Galerkin's technique, we begin with an abstract problem in weak form on the Hilbert space \(L^2~ [a,b]\), equipped with the inner product

\[ \langle u,v\rangle =\int _a^b u(x)\,v(x)\,dx. \]

Now consider the differential problem \(F(u)=0\), and let

be an approximate solution; the Galerkin method then determines the unknowns by solving the system:

This is known as the weak Galerkin formulation [16].

Differential Galerkin Method

Linear Equation

Consider the linear multi-order fractional differential equation with initial conditions:

where \(0<\mu _{1}<\cdots<\mu _{r-1}<\mu _{r}, ~~ A_{k}(x),~~k=1,2,\ldots ,r,~~f(x)\) are known continuous functions on [0, 1] and \(d_{i},~~i=0,1,2,\ldots ,\lceil \mu _r \rceil -1,\) are given constants.

Let

where \(M^{\alpha _{i}}(x) \in \{E_{\alpha _{i}}^{\beta ,\gamma }(x), L_{\alpha _{i}}^{\beta ,\gamma }(x)\}\) and \(a_{i},~~ i=0,1,2,\ldots ,n,\) are unknown constants.

Substituting Eq. (32) into Eqs. (30) and (31), we obtain:

Then

where \(j=1,2,\ldots ,n,\) and

Equations (35) and (36) in view of the Galerkin Eq. (29) become:

where \(j=1,2,\ldots ,n,\) and

with \(w_0=w_n=1/2, w_k=1, k=1,2,\ldots ,n-1\). We can therefore construct an unconstrained optimization problem with the objective function:

The solution of Eq. (39) determines the unknown coefficients \(a_{i},~~ i=1,2,\ldots ,n,\) and hence the numerical solution y(x) given by Eq. (32).
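The steps above (expand, form the residual, project with trapezoidal weights, solve for the coefficients) can be sketched on a small model problem. The Python sketch below is not the authors' implementation. It assumes the hypothetical problem \(D_C^\mu y+y=f\) on [0, 1] with \(y(0)=0\) and \(0<\mu <1\), replaces the generalized Mittag–Leffler/Laguerre functions by the monomials \(x^{i}\), \(i=1,\ldots ,4\), and uses the weights \(w_0=w_n=1/2\), \(w_k=1\) scaled by the step h (the trapezoidal rule). Because the problem is linear and the Galerkin system is square, it solves that system directly; if the objective in Eq. (39) is the sum of squared Galerkin equations (an assumption), its minimizer is the same solution whenever the system is nonsingular. The right-hand side is manufactured so that the exact solution is \(x^{3}\).

```python
import math


def caputo_monomial(i: int, mu: float, x: float) -> float:
    """D_C^mu x**i by the power rule; zero when i < ceil(mu)."""
    if i < math.ceil(mu):
        return 0.0
    return math.gamma(i + 1) / math.gamma(i + 1 - mu) * x ** (i - mu)


def solve_linear(A, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    m = len(b)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for c in range(m):
        p = max(range(c, m), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, m):
            factor = M[r][c] / M[c][c]
            for k in range(c, m + 1):
                M[r][k] -= factor * M[c][k]
    sol = [0.0] * m
    for r in range(m - 1, -1, -1):
        sol[r] = (M[r][m] - sum(M[r][k] * sol[k] for k in range(r + 1, m))) / M[r][r]
    return sol


def galerkin_differential(mu, f, n_basis=4, n_nodes=40):
    """Hypothetical model problem D_C^mu y + y = f on [0, 1], y(0) = 0, 0 < mu < 1.

    Basis phi_i(x) = x**i, i = 1..n_basis, so y(0) = 0 holds automatically.
    The Galerkin equations <R, phi_j> = 0 use trapezoidal weights h*(1/2, 1, ..., 1, 1/2).
    """
    h = 1.0 / n_nodes
    nodes = [k * h for k in range(n_nodes + 1)]
    weights = [h * (0.5 if k in (0, n_nodes) else 1.0) for k in range(n_nodes + 1)]
    basis = list(range(1, n_basis + 1))

    def inner(g, j):
        # Discrete inner product <g, x**j> by the trapezoidal rule
        return sum(w * g(x) * x ** j for w, x in zip(weights, nodes))

    # A[j][i] = <D^mu phi_i + phi_i, phi_j>,  b[j] = <f, phi_j>
    A = [[inner(lambda x, i=i: caputo_monomial(i, mu, x) + x ** i, j) for i in basis]
         for j in basis]
    b = [inner(f, j) for j in basis]
    coeffs = solve_linear(A, b)
    return lambda x: sum(c * x ** i for c, i in zip(coeffs, basis))


mu = 0.9
# Manufactured right-hand side for the exact solution y(x) = x**3
f = lambda x: math.gamma(4) / math.gamma(4 - mu) * x ** (3 - mu) + x ** 3
y_n = galerkin_differential(mu, f)
print(max(abs(y_n(k / 20) - (k / 20) ** 3) for k in range(21)))
```

Since \(x^{3}\) lies in the span of the basis, the printed maximum error should be close to round-off level; with the paper's basis functions the error would instead depend on \(\alpha \), \(\beta \), \(\gamma \) and n.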

Nonlinear Equation

Consider the nonlinear multi-order fractional differential equation with initial conditions:

where \(0<\mu _{1}<\cdots<\mu _{r-1}<\mu _{r},~~ A_{k}(x),~~k=1,2,\ldots ,r,~~f(x)\) are known continuous functions on [0, 1] and \(d_{i},~~i=0,1,2,\ldots ,\lceil \mu _{r} \rceil -1,\) are given constants.

Let

where \(M^{\alpha _{i}}(x) \in \{E_{\alpha _{i}}^{\beta ,\gamma }(x), L_{\alpha _{i}}^{\beta ,\gamma }(x)\}\) and \(a_{i},~~ i=0,1,2,\ldots ,n,\) are unknown constants.

Substituting Eq. (42) into Eqs. (40) and (41), we obtain:

Then

where \(j=1,2,\ldots ,n,\) and

Equations (45) and (46) in view of the Galerkin Eq. (29) become:

where \(j=1,2,\ldots ,n,\) and

with \(w_0=w_n=1/2, w_k=1, k=1,2,\ldots ,n-1\). We can therefore construct an unconstrained optimization problem with the objective function:

The solution of Eq. (49) determines the unknown coefficients \(a_{i},~~ i=1,2,\ldots ,n,\) and hence the numerical solution y(x) given by Eq. (42).
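For a nonlinear problem the Galerkin equations are nonlinear in the coefficients. The Python sketch below assumes the hypothetical model problem \(D_C^\mu y+y^{2}=f\) on [0, 1] with \(y(0)=0\), again uses the monomial basis \(x^{i}\), \(i=1,\ldots ,4\), in place of the paper's functions, and applies the same trapezoidal weights. Instead of a general-purpose optimizer for the objective in Eq. (49), it solves the square system \(G_j(a)=\sum _k w_k R(x_k;a)\,x_k^{j}=0\) by Newton's method, a substitution that drives such an objective to zero when it converges. The right-hand side is manufactured so that the exact solution is \(x^{2}\).

```python
import math


def caputo_monomial(i: int, mu: float, x: float) -> float:
    """D_C^mu x**i by the power rule; zero when i < ceil(mu)."""
    if i < math.ceil(mu):
        return 0.0
    return math.gamma(i + 1) / math.gamma(i + 1 - mu) * x ** (i - mu)


def solve_linear(A, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    m = len(b)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for c in range(m):
        p = max(range(c, m), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, m):
            factor = M[r][c] / M[c][c]
            for k in range(c, m + 1):
                M[r][k] -= factor * M[c][k]
    sol = [0.0] * m
    for r in range(m - 1, -1, -1):
        sol[r] = (M[r][m] - sum(M[r][k] * sol[k] for k in range(r + 1, m))) / M[r][r]
    return sol


def galerkin_nonlinear(mu, f, n_basis=4, n_nodes=40, tol=1e-12, max_iter=50):
    """Hypothetical model problem D_C^mu y + y**2 = f on [0, 1], y(0) = 0."""
    h = 1.0 / n_nodes
    nodes = [k * h for k in range(n_nodes + 1)]
    weights = [h * (0.5 if k in (0, n_nodes) else 1.0) for k in range(n_nodes + 1)]
    basis = list(range(1, n_basis + 1))
    nk = len(nodes)
    P = [[x ** i for i in basis] for x in nodes]                    # phi_i(x_k)
    D = [[caputo_monomial(i, mu, x) for i in basis] for x in nodes]  # D^mu phi_i(x_k)
    fx = [f(x) for x in nodes]
    a = [0.0] * n_basis
    for _ in range(max_iter):
        y = [sum(c * p for c, p in zip(a, P[k])) for k in range(nk)]
        R = [sum(c * d for c, d in zip(a, D[k])) + y[k] ** 2 - fx[k] for k in range(nk)]
        G = [sum(weights[k] * R[k] * P[k][j] for k in range(nk)) for j in range(n_basis)]
        # Jacobian dG_j/da_i = sum_k w_k (D^mu phi_i + 2 y phi_i)(x_k) phi_j(x_k)
        J = [[sum(weights[k] * (D[k][i] + 2.0 * y[k] * P[k][i]) * P[k][j] for k in range(nk))
              for i in range(n_basis)]
             for j in range(n_basis)]
        delta = solve_linear(J, [-g for g in G])
        a = [c + d for c, d in zip(a, delta)]
        if max(abs(d) for d in delta) < tol:
            break
    return lambda x: sum(c * x ** i for c, i in zip(a, basis))


mu = 0.5
# Manufactured right-hand side for the exact solution y(x) = x**2
f = lambda x: math.gamma(3) / math.gamma(3 - mu) * x ** (2 - mu) + x ** 4
y_n = galerkin_nonlinear(mu, f)
print(max(abs(y_n(k / 20) - (k / 20) ** 2) for k in range(21)))
```

The first Newton step from zero solves the linear Galerkin problem \(D_C^\mu y=f\), which already gives a reasonable starting point for this mild nonlinearity; stronger nonlinearities may need damping or a better initial guess.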

Integral Galerkin Method

Linear Equation

Consider the linear multi-order fractional differential equation with initial conditions:

where \(0<\mu _{1}<\cdots<\mu _{r-1}<\mu _{r}, ~~ A_{k}(x),~~k=1,2,\ldots ,r,~~f(x)\) are known continuous functions on [0, 1] and \(d_{i},~~i=0,1,2,\ldots ,\lceil \mu _r \rceil -1,\) are given constants.

First, we apply the integral operator \(I^{\mu _r}\) to Eq. (50), which gives:

Assume that the solution of the fractional linear differential Eq. (50) can be written as:

where \(M^{\alpha _{i}}(x) \in \{E_{\alpha _{i}}^{\beta ,\gamma }(x), L_{\alpha _{i}}^{\beta ,\gamma }(x)\}\) and \(b_{i},~~ i=0,1,2,\ldots ,n\) are unknown constants.

Substituting Eq. (53) into Eq. (52), we have:

Then

Equations (55) and (56) in view of the Galerkin Eq. (29) become:

where \(j=1,2,\ldots ,n,\) and

with \(w_0=w_n=1/2, w_k=1, k=1,2,\ldots ,n-1\).

We can therefore construct an unconstrained optimization problem with the objective function:

The solution of Eq. (59) determines the unknown coefficients \(b_{i},~~ i=1,2,\ldots ,n,\) and hence the numerical solution y(x) given by Eq. (53).
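The integral formulation can be sketched in the same spirit. The Python sketch below assumes the hypothetical model problem \(D_C^\mu y+y=f\) with \(0<\mu \le 1\) and \(y(0)=d_0\); since \(I^\mu D_C^\mu y=y-y(0)\) for such \(\mu \), applying \(I^{\mu }\) gives \(y(x)-d_0+I^\mu y(x)=I^\mu f(x)\), so the initial condition is built into the equation. The basis is \(x^{i}\), \(i=0,\ldots ,4\) (mirroring \(b_i\), \(i=0,\ldots ,n\)) rather than the paper's functions, and f is passed as a dictionary of powers so that \(I^\mu f\) is exact through \(I^\mu x^{p}=\frac{\Gamma (p+1)}{\Gamma (p+1+\mu )}x^{p+\mu }\), \(p>-1\). All names are illustrative.

```python
import math


def rl_integral_power(p: float, mu: float, x: float) -> float:
    """I^mu x**p = Gamma(p+1) / Gamma(p+1+mu) * x**(p+mu), valid for p > -1."""
    return math.gamma(p + 1) / math.gamma(p + 1 + mu) * x ** (p + mu)


def solve_linear(A, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    m = len(b)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for c in range(m):
        p = max(range(c, m), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, m):
            factor = M[r][c] / M[c][c]
            for k in range(c, m + 1):
                M[r][k] -= factor * M[c][k]
    sol = [0.0] * m
    for r in range(m - 1, -1, -1):
        sol[r] = (M[r][m] - sum(M[r][k] * sol[k] for k in range(r + 1, m))) / M[r][r]
    return sol


def galerkin_integral(mu, f_powers, d0, n_basis=4, n_nodes=40):
    """Hypothetical model problem D_C^mu y + y = f, y(0) = d0, 0 < mu <= 1.

    Imposes y - d0 + I^mu y = I^mu f in Galerkin form with basis x**i, i = 0..n_basis.
    f_powers maps exponent -> coefficient, i.e. f(x) = sum c * x**p.
    """
    h = 1.0 / n_nodes
    nodes = [k * h for k in range(n_nodes + 1)]
    weights = [h * (0.5 if k in (0, n_nodes) else 1.0) for k in range(n_nodes + 1)]
    basis = list(range(n_basis + 1))

    def inner(g, j):
        # Discrete inner product <g, x**j> by the trapezoidal rule
        return sum(w * g(x) * x ** j for w, x in zip(weights, nodes))

    def rhs(x):
        # d0 + I^mu f(x), evaluated term by term
        return d0 + sum(c * rl_integral_power(p, mu, x) for p, c in f_powers.items())

    # A[j][i] = <phi_i + I^mu phi_i, phi_j>,  b[j] = <d0 + I^mu f, phi_j>
    A = [[inner(lambda x, i=i: x ** i + rl_integral_power(i, mu, x), j) for i in basis]
         for j in basis]
    b = [inner(rhs, j) for j in basis]
    coeffs = solve_linear(A, b)
    return lambda x: sum(c * x ** i for c, i in zip(coeffs, basis))


mu = 0.5
# f for the exact solution y(x) = 1 + x**2, using D^mu (1 + x**2) = Gamma(3)/Gamma(3 - mu) x**(2 - mu)
f_powers = {2 - mu: math.gamma(3) / math.gamma(3 - mu), 0: 1.0, 2: 1.0}
y_n = galerkin_integral(mu, f_powers, d0=1.0)
print(max(abs(y_n(k / 20) - (1 + (k / 20) ** 2)) for k in range(21)))
```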

Nonlinear Equation

Consider the nonlinear multi-order fractional differential equation with initial conditions:

where \(0<\mu _{1}<\cdots<\mu _{r-1}<\mu _{r}, ~~ A_{k}(x),~~k=1,2,\ldots ,r,~~f(x)\) are known continuous functions on [0, 1] and \(d_{i},~~i=0,1,2,\ldots ,\lceil \mu _r \rceil -1,\) are given constants.

First, we apply the integral operator \(I^{\mu _r}\) to Eq. (60), which gives:

Assume that the solution of Eq. (60) can be written as:

where \(M^{\alpha _{i}}(x) \in \{E_{\alpha _{i}}^{\beta ,\gamma }(x), L_{\alpha _{i}}^{\beta ,\gamma }(x)\}\) and \(b_{i},~~ i=0,1,2,\ldots ,n\) are unknown constants.

Substituting Eq. (63) into Eq. (62) we get:

Then

Equations (65) and (66) in view of the Galerkin Eq. (29) become:

where \(j=1,2,\ldots ,n,\) and

with \(w_0=w_n=1/2, w_k=1, k=1,2,\ldots ,n-1\). We can therefore construct an unconstrained optimization problem with the objective function:

The solution of Eq. (69) determines the unknown coefficients \(b_{i},~~ i=1,2,\ldots ,n,\) and hence the numerical solution y(x) given by Eq. (63).

The Proposed Methods

We present four methods of numerical solution for the given problem, namely the Mittag differential Galerkin method (MDGM), the Mittag integral Galerkin method (MIGM), the Laguerre differential Galerkin method (LDGM), and the Laguerre integral Galerkin method (LIGM).

Error Analysis

Theorem 1

[12]: Let \(y(x), y_n (x) \in C^\infty [0,1]\), where \(y_n\) is the approximation of y given by Eq. (32). Then for every \(x\in [0,1]\) there exists \(\varpi \in [0,1]\) such that:

and the estimated error is:

Theorem 2

[12]: Let \(y(x)\in C^\infty [0,1]\) satisfy Eq. (30) and be approximated by Eq. (32). Then for every \(x\in [0,1]\) there exists \(\varpi \in [0,1]\) such that the residual is estimated by:

Theorem 3

[17]: Suppose y(x) and its derivatives up to order \((\mu -1)\) are absolutely continuous on \([0,\infty )\) and satisfy:

Then, for the Mittag–Leffler expansion in Eq. (32), we have:

Theorem 4

[8]: Assume that y(x) satisfies the hypothesis of Theorem 3. If y(x) and \(y_n(x)\) are expressed by Eq. (32), then \(y_n(x)\) converges to y(x) as \(n \rightarrow \infty \).

Theorem 5

[8]: Under the hypotheses of Theorems 3 and 4, the approximate fractional-order derivative \(D_C^{\mu }~ y_n(x)\) converges to \(D_C^{\mu } ~ y(x)\) as \(n \rightarrow \infty \).

Theorem 6

Under the hypotheses of the previous theorems, the approximate fractional-order integral \(I^{\mu }~ y_n(x)\) converges to \(I^{\mu } ~ y(x)\) as \(n \rightarrow \infty \).

Proof

As in [8]. \(\square \)

Numerical Experiments

Problem 1

We consider the problem [12]:

with \(y(0) = 0\). The exact solution of this problem is \(y(x) = x^{\rho }\).

Figure 2 presents a comparison between MIGM and LIGM with \(\mu =0.9, \rho =3\).

Figure 3 compares numerical solutions obtained by MIGM with fractional and integer orders.

Table 1 presents the numerical solution of this problem obtained by the integration Galerkin method with \(\alpha =3.5\) and different fractional orders \(\mu \).

Table 2 compares numerical solutions obtained by LIGM with fractional and integer orders.

Comparison of a numerical solution of problem 1 between MIGM and LIGM, with \(\alpha =0.8, \rho =3\)

Comparison between fractional and integer orders of a numerical solution of Problem 1 by using MIGM

Problem 2

Consider the equation [18]:

with the following initial conditions \(y(0)=0,~~ y^{'}(0)=0\) and exact solution \(y(x)=x^{2}\).

Figure 4 presents the numerical solution of this problem obtained by MDGM and LDGM with \(\alpha =10\) and fractional order \(\mu =1.5\), and compares our results with those of [18], which used the Bernstein polynomials (BPs) method.

Table 3 compares numerical solutions of this problem obtained by LDGM with fractional and integer orders, for \(\alpha =1.5\) and \(\rho =2.0\).

Problem 3

Consider the problem [12]:

with \(y(0) = 0\). The exact solution of this problem is \(y(x) = x^\rho \). A simple case of this problem is solved in [19].

Table 4 presents the numerical solution of this problem obtained by the differentiation Galerkin method with \(\alpha =2.5\) and different fractional orders \(\mu \).

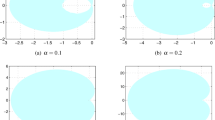

Figure 5 compares the numerical solutions of this problem obtained by MDGM and LDGM using fractional and integer orders.

Comparison of numerical solution of Problem 3 between LDGM in (a, b) and MDGM in (c, d), by using fractional and integer orders

Problem 4

We consider the following fractional differential equation with initial condition [20]:

We take \(f(x)=2\rho x^{2\rho -1}+ \rho x^{\rho -1}+ \frac{\varGamma (\rho +1)}{\varGamma (\rho -\mu +1)} x^{\rho -\mu } \). The exact solution of this problem is \(y(x)=x^\rho \).

Table 5 presents the numerical solution of Problem 4 obtained by the integration Galerkin method with \(\alpha =2.8\) and different fractional orders \(\mu \).

Table 6 compares numerical solutions of this problem obtained by MIGM with fractional and integer orders, for \(\mu =0.9,~ \beta =0.5 \) and \(\gamma =0.5\).

Table 7 compares numerical solutions of this problem obtained by LIGM with fractional and integer orders, for \(\mu =0.9,~\beta =0.5 \) and \(\gamma =0.5\).

Conclusion

In this paper, four numerical methods (MDGM, LDGM, MIGM, and LIGM), which combine the generalized fractional Mittag–Leffler and Laguerre functions with the differential and integral Galerkin methods, are developed and applied to linear and nonlinear differential equations of fractional order. We compared the two new families of approximating functions, based on the generalized fractional Mittag–Leffler function and the generalized fractional Laguerre function, within both the differential and the integral Galerkin formulations.

-

The methods give accurate results (see Figs. 2, 4, Tables 1, 4, and 5).

-

Numerical solutions obtained with fractional orders are better than those obtained with integer orders (see Figs. 3, 5, and Table 6).

-

With the generalized fractional Laguerre Galerkin methods, the difference between the results obtained with fractional and integer orders is small, because the powers in the generalized fractional Laguerre function do not depend on \(\alpha \) (see Tables 2, 3, 7 and Fig. 5c, d). In contrast, with the generalized fractional Mittag–Leffler Galerkin methods the difference is large, because the function depends on \(\alpha \) (see Figs. 3 and 5a, b).

Data Availability

Not applicable.

References

[1] Liu, S., Wang, S., Wang, L.: Global dynamics of delay epidemic models with nonlinear incidence rate and relapse. Nonlinear Anal. Real World Appl. 12(1), 119–127 (2011)

[2] Hilfer, R.: Applications of fractional calculus in physics, vol. 35(12), pp. 87–130. World Scientific, Singapore (2000)

[3] Ali, H.M., Rida, S.Z., Gouda, Y.G., Farag, M.M.: Evaluation of generalized Mittag–Leffler function method on endemic disease model. J. Abstr. Comput. Math. 3(3), 1–7 (2018)

[4] Heymans, N., Podlubny, I.: Physical interpretation of initial conditions for fractional differential equations with Riemann–Liouville fractional derivatives. Rheologica Acta 45(5), 765–771 (2006)

[5] Pedas, A., Tamme, E.: Numerical solution of nonlinear fractional differential equations by spline collocation methods. J. Comput. Appl. Math. 255, 216–230 (2014)

[6] Yan, Y., Pal, K., Ford, N.J.: Higher order numerical methods for solving fractional differential equations. BIT Numer. Math. 54(2), 555–584 (2014)

[7] Atta, A.G., Moatimid, G.M., Youssri, Y.H.: Generalized Fibonacci operational collocation approach for fractional initial value problems. Int. J. Appl. Comput. Math. 5(1), 1–9 (2019)

[8] Hussien, H.S.: Efficient collocation operational matrix method for delay differential equations of fractional order. Iran. J. Sci. Technol. Trans. A Sci. 43(4), 1841–1850 (2019)

[9] Diethelm, K.: The Analysis of Fractional Differential Equations: An Application-Oriented Exposition Using Differential Operators of Caputo Type. Springer, Berlin (2010)

[10] Kazem, S., Abbasbandy, S., Kumar, S.: Fractional-order Legendre functions for solving fractional-order differential equations. Appl. Math. Model. 37(7), 5498–5510 (2013)

[11] Mathai, A.M., Haubold, H.J.: Special Functions for Applied Scientists. Springer, New York (2008)

[12] Rida, S.Z., Hussien, H.S.: Efficient Mittag–Leffler collocation method for solving linear and nonlinear fractional differential equations. Mediterr. J. Math. 15(3), 1–15 (2018)

[13] Baleanu, D., Bhrawy, A.H., Taha, T.M.: Two efficient generalized Laguerre spectral algorithms for fractional initial value problems. Abstr. Appl. Anal. 2013, Article ID 546502, 1–10 (2013)

[14] Bhrawy, A.H., Alghamdi, M.M., Taha, T.M.: A new modified generalized Laguerre operational matrix of fractional integration for solving fractional differential equations on the half line. Adv. Differ. Equ. 2012(1), 1–12 (2012)

[15] Osler, T.J.: Leibniz rule for fractional derivatives generalized and an application to infinite series. SIAM J. Appl. Math. 18(3), 658–674 (1970)

[16] Stamm, B., Wihler, T.P.: A total variation discontinuous Galerkin approach for image restoration. Int. J. Numer. Anal. Model. 12(1), 81–93 (2015)

[17] Xiang, S.: Asymptotics on Laguerre or Hermite polynomial expansions and their applications in Gauss quadrature. J. Math. Anal. Appl. 393(2), 434–444 (2012)

[18] Rostamy, D., Alipour, M., Jafari, H., Baleanu, D.: Solving multi-term orders fractional differential equations by operational matrices of BPs with convergence analysis. Roman. Rep. Phys. 65(2), 334–349 (2013)

[19] Sakar, M.G., Akgül, A., Baleanu, D.: On solutions of fractional Riccati differential equations. Adv. Differ. Equ. 2017(1), 1–10 (2017)

[20] Çerdik Yaslan, H., Mutlu, F.: Numerical solution of the conformable differential equations via shifted Legendre polynomials. Int. J. Comput. Math. 97(5), 1016–1028 (2020)

Acknowledgements

The authors would like to express their gratitude to the referees for their critical reading of the article as well as their insightful remarks, which significantly improved the manuscript.

Funding

No funding for this research.

Author information


Contributions

All authors contributed equally to the work.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.


About this article

Cite this article

Rida, S.Z., Hussien, H.S., Noreldeen, A.H. et al. Effective Fractional Technical for Some Fractional Initial Value Problems. Int. J. Appl. Comput. Math 8, 149 (2022). https://doi.org/10.1007/s40819-022-01346-w


DOI: https://doi.org/10.1007/s40819-022-01346-w

Keywords

- Generalized fractional Mittag–Leffler function

- Generalized fractional Laguerre function

- Galerkin method

- Fractional differential equations

- Error estimation