Abstract

This work considers numerical solutions of variable fractional order, multi-term differential equations. A second order numerical approach is used to approximate the fractional order derivative; it is similar to the approach used for the single-term, variable fractional order case (see Cao and Qiu (Appl. Math. Lett. 61, 88–94, 2016)). To construct a multi-term, variable fractional order differential equation, we first add a y(t) term to the single-term equation and then test the convergence of the method. Secondly, we add a second order ordinary derivative term to the equation and approximate it by central finite differences, while the variable order Riemann–Liouville derivative is approximated by the shifted Grünwald technique. The resulting numerical scheme is still convergent.



11.1 Introduction

Over the last two or three decades, fractional calculus has become a valuable tool in many branches of science and engineering, although its history goes back to the eighteenth century. Many scientists, including famous mathematicians such as Fourier (1822), Abel (1823–1826), Liouville (1822–1837) and Riemann (1847), have made significant contributions to the development of fractional calculus. There are many possible generalizations of \(\frac {d^{n}f(x)}{dx^{n}} \) to non-integer n, but the most important are the Riemann–Liouville and Caputo derivatives. The Riemann–Liouville derivative appeared first and was developed in the works of Abel, Riemann and Liouville in the first half of the nineteenth century. Its mathematical theory is well established, but it causes difficulties in initial and boundary value problems: it requires initial and boundary conditions expressed through fractional derivatives, whereas in real-world problems such conditions are usually not given in that form. The Caputo derivative was introduced by Caputo to remove this difficulty in specifying initial and boundary conditions. Both derivatives are well known in the theory of fractional differential equations, and their definitions are given in the following section.

It has been shown that many physical processes can be described and modelled well by fractional order differential equations. Fractional analysis offers many benefits for identifying and modelling physical systems, as suggested by many researchers, and for such systems fractional order derivatives are often more suitable than ordinary derivatives (see [1,2,3,4]). For instance, anomalous diffusion is not easily explained by integer order differential equations, since it behaves anomalously with respect to the time and space variables and therefore requires fractional models.

These mathematical models are used in many application areas, including physics, chemistry, biology, economics, control theory, signal and image processing, blood flow phenomena, aerodynamics and the fitting of experimental data. Because these models are usually complex, analytical solutions can be obtained only for certain classes of equations, and many numerical and approximate methods have been developed to solve them. Some of these methods are: finite difference approximation methods [5,6,7,8,9,10], fractional linear multistep methods [11,12,13], quadrature methods [14,15,16,17,18,19], the Adomian decomposition method [20,21,22], the variational iteration method [22, 23], the differential transform method [24], the Laplace perturbation method [25, 26] and the homotopy analysis method [27]. However, most existing purely numerical techniques are only first order convergent, and it is well known that raising the order of convergence increases the efficiency of a method [28].

Recently, there have been further developments in the analysis of fractional order differential equations, and some studies deal with variable order fractional derivatives [29]. Developing more reliable methods in parallel with these developments is therefore necessary.

The aim of this work is to apply a second order convergent method to variable fractional order, multi-term differential equations, similar to the work in [30], and to obtain reliable results. Section 11.2 presents the second order convergent method and its theory. Sections 11.3 and 11.4 apply the method to the equations obtained by adding a y(t) term and a y″(t) term, respectively, to the single-term equation. Section 11.5 concludes.

11.2 Problem Definition and Integration Method for Variable Order Fractional Differential Equations

In this section, we first consider the following single-term initial value problem with a fractional derivative whose order α(t) is a function of time:

where f(t) is a continuous function of t on the given interval. If y(0) = μ, the transformation v(t) = y(t) − μ gives v(0) = 0, and since the Caputo derivative of a constant vanishes, v satisfies the same equation; hence we may assume y(0) = 0. In Eq. (11.1), α(t) denotes the order of the variable fractional Caputo derivative, \({}_{C}D\), which is defined as

We also recall the variable fractional order Riemann–Liouville derivative, \({}_{RL}D\), defined as
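For reference, a commonly used form of these definitions for 0 < α(t) < 1 is sketched below, together with the single-term problem written in the form assumed here for Eq. (11.1) after the shift to y(0) = 0. These forms are assumptions rather than the chapter's own displays; in the Riemann–Liouville case the order is taken as fixed at the evaluation time t when the outer derivative is applied, which is the reading under which the constant order relation of Lemma 11.1 carries over exactly.

```latex
\begin{aligned}
&{}_{C}D_{0,t}^{\alpha(t)} y(t) = f(t), \quad 0 < t \le T, \qquad y(0) = 0,\\
&{}_{C}D_{0,t}^{\alpha(t)} y(t) = \frac{1}{\Gamma(1-\alpha(t))}\int_{0}^{t} (t-s)^{-\alpha(t)}\, y'(s)\,ds,\\
&{}_{RL}D_{0,t}^{\alpha(t)} y(t) = \frac{1}{\Gamma(1-\alpha(t))}\,\frac{d}{dt}\int_{0}^{t} (t-s)^{-\alpha(t)}\, y(s)\,ds.
\end{aligned}
```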

The following lemma gives the relation between the Riemann–Liouville and Caputo derivatives.

Lemma 11.1

If y(t) ∈ C[0, ∞), then, as for constant order fractional operators, the variable order Caputo and Riemann–Liouville fractional derivatives are related by

In Eq. (11.1), since the initial condition is y(0) = 0, it follows that
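Assuming the constant order identity carries over with the order frozen at the evaluation time t, the relation of Lemma 11.1 and its consequence for Eq. (11.1) can be sketched as follows; the boundary term vanishes because y(0) = 0.

```latex
{}_{C}D_{0,t}^{\alpha(t)} y(t) = {}_{RL}D_{0,t}^{\alpha(t)} y(t) - \frac{y(0)\,t^{-\alpha(t)}}{\Gamma(1-\alpha(t))}
\quad\Longrightarrow\quad
{}_{C}D_{0,t}^{\alpha(t)} y(t) = {}_{RL}D_{0,t}^{\alpha(t)} y(t) \quad \text{when } y(0) = 0.
```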

Consequently, for convenience, the Caputo derivative in Eq. (11.1) is replaced by the Riemann–Liouville derivative. To obtain a second order convergent approximation of the variable order Riemann–Liouville derivative, we first recall the shifted Grünwald approximation of a function y(t)

where, for k ≥ 0 ,
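Written out in the standard form (assumed here to correspond to Eq. (11.6)), the shifted Grünwald operator with integer shift p and its coefficients are given below. The function y is extended by zero for t ≤ 0, so on a grid only finitely many terms are nonzero; the recurrence in the second line is an equivalent and numerically convenient way to generate the coefficients.

```latex
\begin{aligned}
&\mathcal{A}_{\tau,p}^{\alpha(t)} y(t) = \frac{1}{\tau^{\alpha(t)}}\sum_{k=0}^{\infty} g_{k}^{\alpha(t)}\, y\big(t-(k-p)\tau\big),\\
&g_{k}^{\alpha(t)} = (-1)^{k}\binom{\alpha(t)}{k}, \qquad g_{0}^{\alpha(t)} = 1, \quad g_{k}^{\alpha(t)} = \Big(1-\frac{\alpha(t)+1}{k}\Big)\, g_{k-1}^{\alpha(t)} \ \ (k \ge 1).
\end{aligned}
```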

The second order convergent method for the variable order Riemann–Liouville derivative is given by the following theorem (see [30]).

Theorem 11.1

Let \(y(t) \in L^{1}(\mathbb{R})\) and let its Riemann–Liouville derivative be \(_{RL}D_{-\infty ,t}^{\alpha (t) +2} y(t)\). For all \(t_k \in \mathbb{R}\), the Fourier transform of this derivative in \(L^{1}(\mathbb{R})\) is [10]

Therefore,

where p and q are integers and p≠q.
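The conclusion of Theorem 11.1 is usually stated as the weighted combination of two shifted Grünwald operators below (the standard weighted shifted Grünwald form, assumed here as the content of the result). For (p, q) = (0, −1) the two weights become (2 + α)/2 and −α/2, the factors that appear in the coefficients of Eq. (11.12). Equivalently, the generating function of those coefficients is \((1-\zeta)^{\alpha}\big(1+\tfrac{\alpha}{2}(1-\zeta)\big)\), and substituting ζ = e^{−z} gives z^α(1 + O(z²)), which is consistent with second order accuracy.

```latex
{}_{RL}D_{-\infty,t}^{\alpha(t)} y(t)
= \frac{\alpha(t)-2q}{2(p-q)}\,\mathcal{A}_{\tau,p}^{\alpha(t)} y(t)
+ \frac{2p-\alpha(t)}{2(p-q)}\,\mathcal{A}_{\tau,q}^{\alpha(t)} y(t)
+ O(\tau^{2}).
```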

Proof

From the definition of \(\mathcal {A}_{\tau ,p}^{\alpha (t)} y(t) \) as in Eq. (11.6), we write

Applying the Fourier transform to both sides of Eq. (11.9), we obtain

where \(\mathcal {F}(w)\) is the Fourier transform of y(t), and we write

denoting,

and, using Eqs. (11.10)–(11.11), we have

This completes the proof [30].

11.2.1 Numerical Method

To solve Eq. (11.1) numerically, we discretize the time domain t ∈ [0, T] with step size \(\tau =\frac {T}{N}\), where N is a positive integer, and set \(t_k = k\tau\), k = 0, 1, …, N, with \(\alpha_k = \alpha(t_k)\) denoting the variable order at \(t_k\). Choosing (p, q) = (0, −1) and using Eq. (11.9), we have

and

Now, the second order convergent method can be given as follows [30]:

where \(w_{0}^{\alpha _k}=\frac {2+\alpha _k}{2}\, g_{0}^{\alpha _k}\), \(w_{j}^{\alpha _k}=\frac {2+\alpha _k}{2}\, g_{j}^{\alpha _k}-\frac {\alpha _k}{2}\, g_{j-1}^{\alpha _k}\) for j ≥ 1, and \(g_{j}^{\alpha _k}=(-1)^{j}\binom{\alpha _k}{j}\).
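The coefficients above can be generated in a few lines. The sketch below is plain Python, and the function names are illustrative rather than taken from the chapter: it builds g_j with the standard recurrence, forms w_j from their definition, and applies the resulting operator to the test function y(t) = t², extended by zero for t ≤ 0, whose Riemann–Liouville derivative of order 1/2 is 2t^{3/2}/Γ(5/2).

```python
import math


def grunwald_coeffs(alpha, n):
    """Return g_0..g_n with g_j = (-1)^j * binom(alpha, j).

    Uses the recurrence g_j = (1 - (alpha + 1) / j) * g_{j-1}, g_0 = 1.
    """
    g = [1.0]
    for j in range(1, n + 1):
        g.append((1.0 - (alpha + 1.0) / j) * g[-1])
    return g


def wsgd_weights(alpha, n):
    """Return w_0..w_n for the (p, q) = (0, -1) combination of Eq. (11.12)."""
    g = grunwald_coeffs(alpha, n)
    c0, c1 = (2.0 + alpha) / 2.0, alpha / 2.0
    return [c0 * g[0]] + [c0 * g[j] - c1 * g[j - 1] for j in range(1, n + 1)]


def rl_derivative_approx(y, alpha, t, n):
    """Approximate the RL derivative of order alpha of y at t using n steps.

    Assumes y(s) = 0 for s <= 0, so only the terms j = 0..n contribute.
    """
    tau = t / n
    w = wsgd_weights(alpha, n)
    return tau ** (-alpha) * sum(w[j] * y((n - j) * tau) for j in range(n + 1))


if __name__ == "__main__":
    exact = 2.0 / math.gamma(2.5)  # RL derivative of order 1/2 of t^2 at t = 1
    for n in (16, 32, 64, 128):
        approx = rl_derivative_approx(lambda s: s * s, 0.5, 1.0, n)
        print(n, abs(approx - exact))
```

The printed errors should shrink by roughly a factor of four each time n doubles, in line with the second order accuracy stated in Theorem 11.1 for this test function.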

11.2.2 Stability Criteria of the Method

This subsection deals with the stability of the method; the following lemma holds.

Lemma 11.2

For \(\alpha_k \in (0, 1)\), the coefficients \(w_{j}^{\alpha _k} \) in Eq. (11.12) satisfy the following properties:

Theorem 11.2

Let y(t) ∈ C[0, ∞) denote the exact solution and \(\{y_k \mid k = 0, 1, \dots, N\}\) the numerical solution of Eq. (11.1). Then the following inequality holds:

Proof

By Lemma 11.2, we know that

Hence, rearranging Eq. (11.12), we have

For k = 1, we can write

Now assume that Eq. (11.14) holds for j = 1, 2, …, k − 1. Taking the absolute value of Eq. (11.15) and substituting Eq. (11.14) into this inequality, we obtain

Therefore, we get

Hence, by mathematical induction, Eq. (11.14) holds for all 1 ≤ k ≤ N (see [30]).

Theorem 11.3

Let y(t) ∈ C[0, ∞) denote the exact solution, let \(\{y(t_k) \mid k = 0, 1, \dots, N\}\) be its values at the grid points \(t_k\), and let \(\{y_k \mid k = 0, 1, \dots, N\}\) denote the numerical solution of Eq. (11.1) at the same points. The error at each step is \(e_k = y(t_k) - y_k\), k = 0, 1, …, N. Then the following relation holds:

where c is a positive constant independent of τ.

Proof

The proof follows [30]. The truncation error of Eq. (11.12) is

where \(|R_k| \le c\tau^{2}\). Then, using Theorems 11.1 and 11.2, we write

This completes the proof.

11.2.3 Numerical Example

So far, a second order convergent method has been considered for approximating the Riemann–Liouville derivative. The maximum error and the order of convergence are computed from the following formulas:
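In the usual notation, assumed here, with E(τ) the maximum nodal error for step size τ and the order estimated by halving the step, these read:

```latex
E(\tau) = \max_{0 \le k \le N} \lvert y(t_k) - y_k \rvert,
\qquad
\text{Order} = \log_{2}\frac{E(\tau)}{E(\tau/2)}.
```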

To illustrate the efficiency of the method, the following example is considered. All numerical calculations were carried out in MATLAB (R2015b).

Example 11.1

Assume that 0 < α(t) < 1 and T = 1. We solve the following initial value problem [30]:

The exact solution of the problem is \(y(t) = 3t + t^{2}\), and two different choices of α(t) are considered:

- Case 1: \(\alpha (t)=\frac {1}{2}t\);
- Case 2: \(\alpha (t)=\sin (t)\).

By using the following numerical scheme

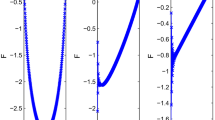

and taking \(y_0 = 0\), we obtain the numerical results, which are reported in tables. Tables 11.1 and 11.3 show the difference between the exact and numerical solutions for \(\tau =\frac {1}{16}\) and \(\tau =\frac {1}{32}\): Table 11.1 uses the first case, \(\alpha (t)=\frac {1}{2}t \), and Table 11.3 uses the second case, α(t) = sin(t). Tables 11.2 and 11.4 give the maximum error and the order of convergence for \(\alpha (t)=\frac {1}{2}t\) and α(t) = sin(t), respectively. Figure 11.1 shows the numerical and exact solutions in the same plot; the two curves overlap.

Figure 11.1: Comparison of the numerical and exact solutions y(t) of Example 11.1 for T = 1 and \( \alpha (t)= \frac {1}{2}t\)

11.3 Multi-term Variable Order Fractional Equations

In this section, we apply the second order convergent method to a new class of variable fractional order differential equations: adding terms to Eq. (11.1) yields multi-term variable fractional order differential equations. First, we add a y(t) term to Eq. (11.1) and consider the following initial value problem, Eq. (11.20):

For convenience, we take a = 1; at each grid point \(t_k\) of the discretized time domain, the following numerical scheme holds:
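Assuming Eq. (11.20) has the form \({}_{RL}D^{\alpha(t)} y + a\,y = f\) with y(0) = 0 (the form consistent with the data of Example 11.2), combining Eq. (11.12) with the extra term gives one linear equation per step, which can be solved explicitly for \(y_k\). This is a sketch under that assumption.

```latex
\begin{aligned}
&\tau^{-\alpha_k}\sum_{j=0}^{k} w_{j}^{\alpha_k}\, y_{k-j} + a\,y_k = f(t_k), \qquad k = 1, \dots, N, \quad y_0 = 0,\\
&y_k = \frac{f(t_k) - \tau^{-\alpha_k}\sum_{j=1}^{k} w_{j}^{\alpha_k}\, y_{k-j}}{\tau^{-\alpha_k}\, w_{0}^{\alpha_k} + a}.
\end{aligned}
```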

Therefore, the following theorem is valid.

Theorem 11.4

Let y(t) ∈ C[0, ∞) denote the exact solution, let \(\{y(t_k) \mid k = 0, 1, \dots, N\}\) be its values at the grid points \(t_k\), and let \(\{y_k \mid k = 0, 1, \dots, N\}\) denote the numerical solution of Eq. (11.20) at the same points. The error at each step is \(e_k = y(t_k) - y_k\), k = 0, 1, …, N. Then the following relation holds:

where c is a positive constant independent of τ.

Proof

Using Eq. (11.12), the proof of this theorem proceeds in the same way as the proof of Theorem 11.3.

The following example illustrates that the second order convergent method remains valid for multi-term variable fractional order differential equations.

Example 11.2

In Eq. (11.20), assume that \(f(t)=t^2 + \frac {2}{\varGamma ( \frac {5}{2} )} t^{\frac {3}{2}}\) and T = 1. Then the exact solution of the problem is \(y(t) = t^{2}\); however, this is the exact solution only for \(\alpha (t)=\frac {1}{2}\), so only the case \(\alpha =\frac {1}{2}\) is considered in order to compare numerical and exact results. Numerical calculations were performed for different values of τ with a code written in MATLAB (R2015b). Table 11.5 lists the maximum error and the order of convergence for different values of τ, and Table 11.6 compares the numerical and exact solutions for \(\tau =\frac {1}{16}\) and \(\tau =\frac {1}{32}\).
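Example 11.2 can be reproduced in outline with the sketch below, written in Python rather than the chapter's MATLAB code. It assumes Eq. (11.20) has the form \({}_{RL}D^{\alpha(t)} y + a\,y = f\) with y(0) = 0 and a = 1, which is the form consistent with the given f(t) and exact solution t²; the function names are hypothetical. It prints the maximum nodal error and the observed order for successive halvings of τ.

```python
import math


def wsgd_weights(alpha, n):
    """Weights w_0..w_n of Eq. (11.12) for order alpha ((p, q) = (0, -1))."""
    g = [1.0]
    for j in range(1, n + 1):
        g.append((1.0 - (alpha + 1.0) / j) * g[-1])  # g_j = (-1)^j binom(alpha, j)
    c0, c1 = (2.0 + alpha) / 2.0, alpha / 2.0
    return [c0 * g[0]] + [c0 * g[j] - c1 * g[j - 1] for j in range(1, n + 1)]


def solve_multi_term(alpha, f, a, T, N):
    """Solve D^{alpha(t)} y + a*y = f on (0, T] with y(0) = 0.

    Assumed form of Eq. (11.20). The weights are rebuilt at every step
    because the order alpha_k = alpha(t_k) may change with k.
    """
    tau = T / N
    y = [0.0] * (N + 1)  # y_0 = 0; values for t <= 0 are taken as zero
    for k in range(1, N + 1):
        t = k * tau
        ak = alpha(t)
        w = wsgd_weights(ak, k)
        scale = tau ** (-ak)
        history = sum(w[j] * y[k - j] for j in range(1, k + 1))
        y[k] = (f(t) - scale * history) / (scale * w[0] + a)
    return y


def max_error(N):
    """Max nodal error for Example 11.2 (alpha = 1/2, a = 1, exact y = t^2)."""
    f = lambda t: t ** 2 + 2.0 / math.gamma(2.5) * t ** 1.5
    y = solve_multi_term(lambda t: 0.5, f, 1.0, 1.0, N)
    return max(abs(y[k] - (k / N) ** 2) for k in range(N + 1))


if __name__ == "__main__":
    prev = None
    for N in (16, 32, 64, 128):
        err = max_error(N)
        order = "" if prev is None else f"  order ~ {math.log2(prev / err):.2f}"
        print(f"N = {N:4d}  max error = {err:.3e}{order}")
        prev = err
```

Rebuilding the weights at every step costs O(k) work per step but keeps the sketch valid for a genuinely variable α(t); for constant α they could be computed once and reused.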

11.4 Addition of a y″(t) Term to Variable Order Fractional Differential Equations

In this section, using the second order convergent method given by Eq. (11.12), we develop a hybrid method for a wider class of differential equations. Adding y(t) and y″(t) terms to Eq. (11.1) gives a multi-term fractional differential equation. To solve it, we approximate the second order derivative by central differences and evaluate the fractional derivative term as in Eq. (11.12). We therefore consider the following initial value problem:

At the grid points \(t_k\), Eq. (*) is written as

Here a and b are constants; for simplicity, we take a = 1 and b = −1. We now recall the central finite difference approximation of the second order derivative:

Substituting this into Eq. (11.22) gives the final form of the numerical scheme. The method is applied to the following example (see [19]).
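As an illustration, assume Eq. (*) has the hypothetical form \(y'' + a\,{}_{RL}D^{\alpha(t)} y + b\,y = f\) with y(0) = 0. Using the standard central difference formula and evaluating the fractional term with the weights of Eq. (11.12) at \(t_k\), the scheme can be solved explicitly for \(y_{k+1}\). This is one possible arrangement under that assumption, not necessarily the form used in Eq. (11.27).

```latex
\begin{aligned}
&y''(t_k) = \frac{y_{k+1} - 2y_k + y_{k-1}}{h^{2}} + O(h^{2}),\\
&y_{k+1} = 2y_k - y_{k-1} + h^{2}\Big(f(t_k) - a\,h^{-\alpha_k}\sum_{j=0}^{k} w_{j}^{\alpha_k}\, y_{k-j} - b\,y_k\Big), \qquad k = 1, \dots, N-1.
\end{aligned}
```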

Example 11.3

Consider the differential equation in Eq. (*) in the following form:

Since the exact solution is known only for \(\alpha =\frac {1}{2}\), we also use \(\alpha (t)=\frac {1}{2}\) in the calculations so that the numerical and exact results can be compared. Substituting the finite difference approximation of the second order ordinary derivative, we have

The exact solution of the problem is \(y(t) = t^{2}\) when

Hence,

where h = τ. Rearranging Eq. (11.27), we obtain

Table 11.7 shows the exact and numerical values of y(t) at particular points t for different step sizes, with the initial conditions taken as in Eq. (*). Figure 11.2 shows that the exact and numerical results are in good agreement.

Figure 11.2: Comparison of numerical and exact values of y(t) in Example 11.3 for \( \alpha (t)= \frac {1}{2}\) and N = 100
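A rough analogue of Example 11.3 can be sketched in Python under explicit assumptions: Eq. (*) is taken to be the hypothetical form y″ + a D^{α(t)}y + b y = f with a = 1, b = −1, y(0) = 0 and y′(0) = 0, and f is manufactured so that the exact solution is t² (f(t) = 2 + 2t^{3/2}/Γ(5/2) − t²). The central difference is combined with the weights of Eq. (11.12) and solved explicitly for the next value; names such as solve_hybrid are illustrative only.

```python
import math


def wsgd_weights(alpha, n):
    """Weights w_0..w_n of the (p, q) = (0, -1) shifted Grunwald scheme."""
    g = [1.0]
    for j in range(1, n + 1):
        g.append((1.0 - (alpha + 1.0) / j) * g[-1])  # g_j = (-1)^j binom(alpha, j)
    c0, c1 = (2.0 + alpha) / 2.0, alpha / 2.0
    return [c0 * g[0]] + [c0 * g[j] - c1 * g[j - 1] for j in range(1, n + 1)]


def solve_hybrid(alpha, f, a, b, T, N, v0=0.0):
    """Explicit sketch for y'' + a*D^alpha y + b*y = f, y(0) = 0, y'(0) = v0.

    Hypothetical form of Eq. (*): central differences for y'' and the
    shifted Grunwald weights for the fractional term, both at t_k.
    """
    h = T / N
    y = [0.0] * (N + 1)  # y_0 = 0; values for t <= 0 are taken as zero
    # Taylor start: with y(0) = 0 the fractional term vanishes at t = 0,
    # so y''(0) = f(0) and y_1 = h*v0 + h^2/2 * f(0).
    y[1] = h * v0 + 0.5 * h * h * f(0.0)
    for k in range(1, N):
        t = k * h
        ak = alpha(t)
        w = wsgd_weights(ak, k)
        frac = h ** (-ak) * sum(w[j] * y[k - j] for j in range(k + 1))
        y[k + 1] = 2.0 * y[k] - y[k - 1] + h * h * (f(t) - a * frac - b * y[k])
    return y


def max_error(N):
    """Max nodal error for alpha = 1/2, a = 1, b = -1 and exact y = t^2."""
    f = lambda t: 2.0 + 2.0 / math.gamma(2.5) * t ** 1.5 - t * t
    y = solve_hybrid(lambda t: 0.5, f, 1.0, -1.0, 1.0, N)
    return max(abs(y[k] - (k / N) ** 2) for k in range(N + 1))


if __name__ == "__main__":
    for N in (25, 50, 100):
        print(N, max_error(N))
```

Because the central difference is exact for t², the remaining error in this sketch comes from the fractional term, so it mainly tests the shifted Grünwald part of the hybrid scheme.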

11.5 Conclusion

We have solved variable fractional order differential equations and multi-term fractional order differential equations using the second order convergent method of [30]. The numerical results agree well with the analytical solutions, and finer meshes give more accurate results. The method can therefore be applied to wider classes of fractional equations, including variable order fractional differential equations.

References

Kilbas, A.A., Srivastava, H.M., Trujillo, J.J.: Theory and Applications of Fractional Differential Equations, vol. 204. North-Holland Mathematics Studies, Amsterdam (2006)

Ross, B.: Fractional Calculus and Its Applications, Lecture Notes in Mathematics, vol. 457. Springer (1975)

Oldham, K.B., Spanier, J.: The Fractional Calculus: Theory and Applications of Differentiation and Integration to Arbitrary Order. Dover, New York (2006)

Cansiz, M.: Kesirli Diferensiyel Denklemler ve Uygulaması [Fractional Differential Equations and Their Application], MSc Thesis, Ege University Graduate School of Natural and Applied Sciences, İzmir, pp. 16–33 (2010)

Podlubny, I.: Fractional Differential Equations. Academic Press, New York (1999)

Ciesielski, M., Leszczynski, J.: Numerical Simulations of Anomalous Diffusion. Computer Methods in Mechanics Conference, Gliwice–Wisła, Poland (2003)

Yuste, S.B.: Weighted average finite difference methods for fractional diffusion equations. J. Comput. Phys. 1, 264–274 (2006)

Odibat, Z.M.: Approximations of fractional integrals and Caputo derivatives. Appl. Math. Comput., 527–533 (2006)

Odibat, Z.M.: Computational algorithms for computing the fractional derivatives of functions. Math. Comput. Simul. 79(7), 2013–2020 (2009)

Wang, Z., Vong, S.: Compact difference schemes for the modified anomalous fractional sub-diffusion equation and the fractional diffusion-wave equation. J. Comput. Phys. 277, 1–15 (2014)

Ford, N.J., Connolly, J.A.: Systems-based decomposition schemes for the approximate solution of multi-term fractional differential equations. J. Comput. Appl. Math. 229, 382–391 (2009)

Ford, N.J., Simpson, A.C.: The numerical solution of fractional differential equations: speed versus accuracy. Numer. Alg. 26, 333–346 (2001)

Sweilam, N.H., Khader, M.M., Al-Bar, R.F.: Numerical studies for a multi-order fractional differential equations. Phys. Lett. A 371, 26–33 (2007)

Diethelm, K.: An algorithm for the numerical solution of differential equations of fractional order. Electron. Trans. Numer. Anal. 5, 1–6 (1997)

Diethelm, K., Walz, G.: Numerical solution of fractional order differential equations by extrapolation. Numer. Algorithms 16, 231–253 (1997)

Diethelm, K., Ford, N.: Analysis of fractional differential equations. J. Math. Anal. Appl. 265, 229–248 (2002)

Diethelm, K.: Generalized compound quadrature formulae for finite-part integrals. IMA J. Numer. Anal., 479–493 (1997)

Diethelm, K.: The Analysis of Fractional Differential Equations, pp. 85–185. Springer Pub., Germany (2004)

Görgülü, O.: Kesirli Mertebeden Diferensiyel Denklemler için Sayısal ve Yaklaşık Yöntemler [Numerical and Approximate Methods for Fractional Order Differential Equations], MSc Thesis, Gazi University Graduate School of Natural and Applied Sciences, Ankara, pp. 13–43 (2017)

Momani, S., Odibat, Z.: Analytical solution of a time-fractional Navier-Stokes equation by Adomian decomposition method. Appl. Math. Comput. 177, 488–494 (2006)

Odibat, Z., Momani, S.: Numerical approach to differential equations of fractional order. J. Comput. Appl. Math. 207(1), 96–110 (2007)

Odibat, Z., Momani, S.: Numerical methods for nonlinear partial differential equations of fractional order. Appl. Math. Model. 32, 28–39 (2008)

Odibat, Z., Momani, S.: Application of variational iteration method to equation of fractional order. Int. J. Nonlinear Sci. Numer. Simul. 7, 271–279 (2006)

Erturk, V.S., Momani, S., Odibat, Z.: Application of generalized differential transform method to multi-order fractional differential equations. Commun. Nonlinear Sci. Numer. Simul. 13(8), 1642–1654 (2008)

Yavuz, M., Ozdemir, N.: New Numerical Techniques for Solving Fractional Partial Differential Equations in Conformable Sense, Non-Integer Order Calculus and its Applications. Book Series: Lecture Notes in Electrical Engineering, vol. 496, pp. 49–62 (2019)

Yavuz, M., Ozdemir, N., Baskonus, M.H.: Solutions of partial differential equations using the fractional operator involving Mittag-Leffler kernel. Eur. Phys. J. Plus 133(6), 215 (2018)

Yavuz, M., Ozdemir, N.: European vanilla option pricing model of fractional order without singular kernel. Fractal Fractional 2(1), 3 (2018)

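Er, F.N.: Kısmi Türevli Kesirli Mertebeden Lineer Schrodinger Denklemlerinin Sayısal Çözümleri [Numerical Solutions of Fractional Order Linear Schrödinger Partial Differential Equations], PhD Thesis, İstanbul Kültür University Graduate School of Natural and Applied Sciences, İstanbul, pp. 14–63 (2015)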

Weilbeer, M.: Efficient Numerical Methods for Fractional Differential Equations and Their Analytical Background, PhD Thesis, Technische Universität Braunschweig, Germany, pp. 35–63 (2005)

Cao, J., Qiu, Y.: High order numerical scheme for variable order fractional ordinary differential equations. Appl. Math. Lett. 61, 88–94 (2016)


Copyright information

© 2020 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Ayaz, F., Güner, İ.B. (2020). A Numerical Approach for Variable Order Fractional Equations. In: Machado, J., Özdemir, N., Baleanu, D. (eds) Numerical Solutions of Realistic Nonlinear Phenomena. Nonlinear Systems and Complexity, vol 31. Springer, Cham. https://doi.org/10.1007/978-3-030-37141-8_11


DOI: https://doi.org/10.1007/978-3-030-37141-8_11


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-37140-1

Online ISBN: 978-3-030-37141-8

eBook Packages: Mathematics and Statistics (R0)