Abstract

Role of intuitionistic fuzzy sets (IFSs) in solving multiple attribute decision making (MADM) problem has been established by many researchers. Depending upon the situation, we need different types of entropies based on IFSs. In this paper, we have proposed a new two parametric intuitionistic fuzzy entropy in the settings of IFSs theory. Besides proving some major properties, the proposed entropy is genuinely compared with some other existing entropies in literature. Based on the proposed entropy, a new method for solving MADM problems is introduced. Attribute weights play an eminent role in solving MADM problems. In this communication, two methods of determining the attribute weights are discussed. First is when information about attributes is completely unknown and second is when we have partial information regarding attribute weights. The two methods are effectively explained with the help of three examples. The attribute weights identification based on the proposed intuitionistic fuzzy entropy is offered in context of IFSs.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Literature Review

Since the time Zadeh [1] introduced the concept of fuzzy set, many theories and approaches concerning imprecision and vagueness came into existence. Intuitionistic fuzzy sets (IFSs) proposed by Atanassov [2] are one of the primary generalizations of conventional fuzzy set theory. He pointed out the drawbacks of Zadeh’s fuzzy set theory and proved to be extremely helpful in dealing with uncertainty and vagueness. Entropy is an important concepts in the study of fuzzy set theory and its extensions to IFSs. For the first time, the idea of fuzzy entropy was introduced by Zadeh [1]. Then Yager [3], Szmidt and Kacprzyk [4], Kaufman [5] proposed the various entropies on fuzzy sets. Recently Joshi and Kumar [50] introduced a new (R, S)-norm fuzzy information measure corresponding to (R, S)-norm entropy proposed by Joshi and Kumar [49] The notion of intuitionistic fuzzy entropy was firstly presented by Bustince and Burillo [6] and Szmidt and Kacprzyk [4] introduced a non-probabilistic type intuitionistic fuzzy entropy. Later, Zhang et al. [7] and Hung [8] suggested the intuitionistic fuzzy entropy based on the distance measure between IFSs. Vlachos and Sergiadis [9] generalized the De Luca and Termini’s [10] concept of non-probabilistic entropy to IFSs. Zeng et al. [11] proposed the intuitionistic fuzzy entropy based on similarity between IFSs. Chen and Li [12] proposed different kinds of entropies on IFSs.

Pedrycz [13], Yager [14] and Zadeh [15] proved the usefulness of fuzzy sets in tackling the problems with uncertain information in many fields such as pattern recognition, decision making and logical reasoning [16]. Also, IFSs have been proved to be useful in handling the fuzzy MADM problems [16]. In most of the fuzzy MADM problems, the information provided by experts may not be sufficient to choose the best alternative because the facts may be fuzzy or uncertain in nature. This may be due to the subjectivity of experts, lack of knowledge, time or data about problem domain and over all may be due lack of expertise in relevant field. Therefore, the alternatives with uncertainty are represented by IFSs. However, a large amount of literature is available in solving fuzzy MADM problems using IFSs, but a very few literature is available on solving fuzzy MADM problems with unknown attribute weights and partially known attribute weights, in particular. In MADM problems, the experts must evaluate the various alternatives for different attributes and choose the most desirable alternative. Attribute weights play an important role in decision making process as the improper assignment of attribute weights may cause the change in ranking of alternatives. Chen and Li [12] categorized the attribute weights into two parts: subjective weights and objective weights. Subjective weights are determined only according to the preference decision makers. The AHP method [17], weighted least squares method [18] and Delphi method [19] belong to this category. The objective methods determine weights by solving the mathematical models without considering the decision maker’s preferences. Entropy method, multi objective programming [20, 21], principle element analysis [20] etc. belong to this category. Since in many practical problems, decision maker’s expertise and experience matters but when it is difficult to obtain such reliable subjective weights, the use of objective weights is useful. In general, the attribute weights cannot be represented by crisp numbers. Entropy method is one of the most representative approaches to solve MADM problems with unknown or partially known weight information. Chen and Li [12] suggested several methods to solve MADM problems with unknown attribute weights information. The traditional entropy method focuses on using the discrimination of data to determine the weights of attributes. If the attribute can discriminate the data more significantly, we give a higher weight to the attribute. Dissimilarly, we focus on using the credibility of data to determine the attribute weights through IF entropy measures. This concept is totally different with the traditional entropy method, but our method can combine with traditional method. Besides Szmidt and Kacprzyk [4] proposed a different concept for assessing the IF entropy. However, in our research, we use Szmidt and Kacprzyk’s concept to measure the IF entropy, because this concept could measure the whole missing information which might be required to certainly have. Therefore the traditional entropy is based on the concept of probability and it could measure the discrimination of attributes while we apply it in MADM. Nevertheless, the meaning of IF entropy is different from the traditional entropy, because the IF entropy represents the credibility of the data while we apply it in MADM.

From the above discussion, role of intuitionistic fuzzy sets in solving MADM problems can be easily estimated. Depending upon the situation, there is a need to develop such measures which not only satisfy the requirement but are also the generalized forms of the existing measures. Apart from this, they should be quite efficient and have consistent performance also. The role of parameters in any information measure is very important. For example, in any problem related with environment, different parameters may represent different environmental factors like humidity, temerature, pressure etc. Thus, the presence of parameters make an information measure more suitable from application view point. Inspired by this, our main emphasis will be on to develop the new information measures and MADM methods based on them to solve the problems containing multiple attributes. The present communication is a sequel in this direction.

This paper is managed as follows. After the introductory section, basic concepts and definitions of the theory of fuzzy sets and intuitionistic fuzzy sets are discussed in “Preliminaries” section. “A New Parametric Intuitionistic Fuzzy Entropy” section is devoted to the introduction of a new intuitionistic fuzzy entropy, establishing its validity and discussing some of its mathematical properties. In “A Comparison with Other Existing Measures” section, the performance of proposed measure is compared some existing measures in literature. A new multiple attribute decision making (MADM) method is proposed by using the concept of TOPSIS in “The New MADM Method Using Proposed IF Entropy” section. In “Numerical Examples” section, the proposed MADM method is explained with the help of numerical examples. Finally, the paper is concluded in “Concluding Remarks” section.

Preliminaries

Now, we introduce some basic definitions and concepts regarding fuzzy sets and IFSs.

Definition 2.1

(See [1]) Let \(X= (z_1, z_2, \ldots , z_n)\) be a finite universe of discourse. A fuzzy set G is given by

where \(\mu _G:X\rightarrow [0,1]\) is the membership function of G. The number \(\mu _G (z_i)\) defines the belongingness degree of \(z_i\in X\) in G.

Definition 2.2

A fuzzy set \(\tilde{G}\) is called a sharpened version of fuzzy set G if it satisfies the following conditions:

and

De Luca and Termini [10] axiomatized the fuzzy entropy and the axioms proposed by him are widely acclaimed as a criterion to define any fuzzy entropy. In fuzzy set theory, the fuzzy entropy is a measure of fuzziness which represent the average amount of difficulty or ambiguity in guessing that a particular element belongs to the set or not.

Definition 2.3

(See [10]). A measure of fuzziness in a fuzzy set should satisfy atleast the following axioms:

-

P1

(Sharpness) H(G) is minimum if and only if G is a crisp set, i.e., \(\mu _G (z_i)=0\) or 1 for all \(z_i\in X\).

-

P2

(Maximality) H(G) is maximum if and only if G is most fuzzy set, i.e., \(\mu _G (z_i)=.5\); for all \(z_i \in X\).

-

P3

(Resolution) \(H (G)\ge H(\tilde{G})\), where \(\tilde{G}\) is the sharpened version of G.

-

P4

(Symmetry) \(H (G)=H(G^c)\), where \( G^c\) is the complement of G, i.e., \(\mu _{G^c} (z_i)=1-\mu _G (z_i)\) for all \(z_i\in X\).

Since \(\mu _G (z_i)\) and \((1-\mu _G (z_i))\) represent the same degree of fuzziness, then, De Luca and Termini [10] defined fuzzy entropy for a fuzzy set G as:

Later on, Bhandari and Pal [22] made a survey of information measures on fuzzy sets. Corresponding to Renyi’s entropy [23], they introduced a new measure of fuzzy entropy as:

Zadeh’s [1] idea of fuzzy sets was extended to intuitionistic fuzzy sets by Atanassov [2] as:

Definition 2.4

(See [2]) An intuitionistic fuzzy set G in a finite universe of discourse \(X= (z_1, z_2, \ldots , z_n)\) is given by

where \(\mu _G: X\rightarrow [0,1]\), \(\nu _G: X\rightarrow [0,1]\) satisfying \(0\le \mu _G (z_i)+\nu _G (z_i)\le 1\), \(\forall z_i\in X\). Here \(\mu _G (z_i)\) and \(\nu _G (z_i)\), respectively, denotes the degree of membership and degree of non-membership of \(z_i\in X\) to the set G. For each IFS G in X, \(\pi _G (z_i)=1-\mu _G (z_i)-\nu _G (z_i), z_i\in X\) represents the hesitancy degree of \(z_i\in X\) and is also called intuitionistic index. Obviously, if \(\pi _G (z_i)=0\) then IFS becomes fuzzy set. Thus, the fuzzy sets are particular cases of IFSs.

Definition 2.5

(See [24]). Let IFS(X) denote the family of all IFSs in the universe X and let \(G, H\in IFS (X)\) be given by

Then usual set operations and relations are defined as follows:

- (i):

-

\(G\subseteq H\) if and only if \(\mu _G (z_i)\le \mu _H (z_i)\) and \(\nu _G (z_i)\ge \nu _H (z_i)\) for all \(z_i\in X\);

- (ii):

-

\(G=H\) if and only if \(G\subseteq H\) and \(H\subseteq G\);

- (iii):

-

\(G^c=\{\langle z_i, \nu _G (z_i), \mu _G (z_i)\rangle /z_i\in X\}\);

- (iv):

-

\(G\cap H=\{\langle \mu _G (z_i)\wedge \mu _H (z_i) \,\mathrm {and}\, \nu _G (z_i)\vee \nu _H (z_i)\rangle /z_i\in X\}\);

- (v):

-

\(G\cup H=\{\langle \mu _G (z_i)\vee \mu _H (z_i)\, \mathrm {and}\,\nu _G (z_i)\wedge \nu _H (z_i)\rangle /z_i\in X\}\).

Szmidt and Kacprzyk [25] first formulated the axioms for intuitionistic fuzzy entropy measure as an extension of De Luca and Termini [10] for fuzzy sets. The set of axioms of intuitionistic fuzzy entropy measure is:

Definition 2.6

(See [25]). An entropy on IFS(X) is a real-valued function \(E:IFS (X)\rightarrow [0,1]\), which satisfies the following axioms:

- (IFS1):

-

\(E(G)=0\) if and only if G is a crisp set, i.e., \(\mu _G (z_i)=0\), \(\nu _G (z_i)=1\) or \(\mu _G (z_i)=1\), \(\nu _G (z_i)=0\) for all \(z_i\in X\).

- (IFS2):

-

\(E(G)=1\) if and only if \(\mu _G (z_i)=\nu _G (z_i)\) for all \(z_i\in X\).

- (IFS3):

-

\(E(G)\le E(H)\) if and only if \(G\subseteq H\), i.e., if \(\mu _G (z_i)\le \mu _H (z_i)\) and \(\nu _G (z_i)\ge \nu _H (z_i)\) for \(\mu _H (z_i)\le \nu _H (z_i)\), or if \(\mu _G (z_i)\ge \mu _H (z_i)\) and \(\nu _G (z_i)\le \nu _H (z_i)\), for \(\mu _H (z_i)\ge \nu _H (z_i)\) for any \(z_i\in X\).

- (IFS4):

-

\(E(G)=E(G^c)\).

Definition 2.7

(See [26]). Let \( G=\{\langle z_i, \mu _G (z_i), \nu _G (z_i)\rangle / z_i\in X\}\) and \(H=\{\langle z_i, \mu _H (z_i), \nu _H (z_i)\rangle / z_i\in X\}\) be two IFSs with the weight of \(z_i\) is \(u_i\). Then the weighted Hamming Distance measure of G and H is defined as follows:

Throughout this paper, IFS(X) and FS(X) will represent the set of all intuitionistic fuzzy sets and set of all fuzzy sets respectively.

With these ideas in mind, we now introduce a new parametric intuitionistic fuzzy entropy on IFSs with \(\alpha \) and \(\beta \) as parameters.

A New Parametric Intuitionistic Fuzzy Entropy

In the following, we will borrow an entropy \(H_\alpha ^\beta (A)\) to a probability distribution \(A=\{p_1, p_2, \ldots , p_n\}\) with \(\sum _{i=1}^n p_i=1\) for which

where \(\alpha >1\) and \(0<\beta <1\) or \(0<\alpha <1\) and \(\beta >1\), which is studied by Sharma and Taneja [27].

Corresponding to (7), we then propose families of fuzzy entropy of an IFS G with

Particular Cases

-

1.

If \(\beta =1\), then (8) becomes

$$\begin{aligned} E_\alpha ^1 (G)&= \frac{1}{n\left( 2^{1-\alpha }-1\right) }\sum _{i=1}^n\Big [\left( \mu _G (z_i)^\alpha +\nu _G (z_i)^\alpha )\times (\mu _G (z_i)\right. \nonumber \\&\quad \left. +\,\nu _G (z_i))^{1-\alpha }+2^{1-\alpha }\pi _G (z_i)\right) -1\Big ], \end{aligned}$$(9)which is an intuitionistic fuzzy entropy of order-\(\alpha \) studied by Joshi and Kumar [28].

-

2.

If \(\alpha =1, \beta \rightarrow 1\) or \(\beta =1, \alpha \rightarrow 1\), then (8) becomes

$$\begin{aligned} E_\alpha ^\beta (G)= & {} -\frac{1}{n}\sum _{i=1}^n\Big [\mu _G (z_i) \log (\mu _G (z_i))+\nu _G (z_i) \log (\nu _G (z_i))\nonumber \\&-\, (1-\pi _G (z_i))\log (1-\pi _G (z_i))-\pi _G (z_i)\Big ]. \end{aligned}$$(10)which is studied by Vlachos and Sergiadis [9].

-

3.

If \(\pi _G (z_i)=0\), (8) becomes an ordinary fuzzy entropy as:

$$\begin{aligned} E_\alpha ^\beta (G)&=\frac{1}{n\left( 2^{1-\alpha }-2^{1-\beta }\right) }\sum _{i=1}^n\Big \{\Big (\mu _G (z_i)^\alpha +(1-\mu _G (z_i))^\alpha \Big )\nonumber \\&\quad \left( \mu _G (z_i)^\beta +(1-\mu _G (z_i))^\beta \right) \Big \}. \end{aligned}$$(11) -

4.

If \(\pi _G (z_i)=0\) and \(\beta =1\) then (8) becomes a parametric fuzzy entropy with \(\alpha \) as a parameter:

$$\begin{aligned} E_\alpha ^1 (G)=\frac{1}{n \left( 2^{1-\alpha }-1\right) }\sum _{i=1}^n\left\{ \left( \mu _G (z_i)^\alpha + (1-\mu _G (z_i))^\alpha \right) -1\right\} {,} \end{aligned}$$(12)which is an entropy slightly different from Hooda [29].

Now, a very natural question that arises in mind “Is the entropy measure proposed, reasonable?”. We answer this question in the following theorem by showing that the proposed entropy measure obey the axioms (IFS1–IFS4).

Theorem 3.1

The measure \(E_\alpha ^\beta (G)\) is a valid entropy measure for IFSs; i.e., it satisfies all the axioms given in definition (2.6).

Proof

(IFS1) Let G be the crisp set having membership values either 0 or 1 for all \(z_i\in X\). Then from (8), we have \(E_\alpha ^\beta (G)=0\).

Conversely, if \(E_\alpha ^\beta (G)=0\), then

Since \(\alpha \ne \beta \), this implies

Therefore (14) will hold only if \(\mu _G (z_i)=0, \nu _G (z_i)=1\) or \(\mu _G (z_i)=1, \nu _G (z_i)=0\) for all \(z_i\in X\).

Hence \(E_\alpha ^\beta (G)=0\) if and only if G is a crisp set. This proves (IFS1).

(IFS2) \(\displaystyle E_\alpha ^\beta (G)=1\) if and only if \(\mu _G (z_i)=\nu _G (z_i)\quad \forall z_i\in X.\)

First let \(\mu _G (z_i)=\nu _G (z_i)\) for all \(z_i\in X\) in (8),

Conversely, let \(E_\alpha ^\beta (G)=1\),

which implies

and

From (16), we get

Therefore (18) will hold only if either

or

Now consider the following function:

On differentiating with respect to t, (21) gives

Since \(f''(t)>0\) for \(z>1\) and \(f''(t)<0\) for \(z<1\). Therefore, f(t) is convex for \(z>1\) and concave for \(z<1\). Therefore, for any two points \(t_1\) and \(t_2\) in [0, 1], the following inequalities hold:

with equality only for \(t_1=t_2\). Therefore, from (21), (22), (23), (24), we conclude that (20) will hold only if \(\mu _G (z_i)=\nu _G (z_i)\) for all \(z_i\in X\). Similarly, we may prove it for (17).

(IFS3). \(E_\alpha ^\beta (G)\le E_\alpha ^\beta (H)\) if and only if \(G\subseteq H\), i.e., if \(\mu _G (z_i)\le \mu _H (z_i)\) and \(\nu _G (z_i)\ge \nu _H (z_i)\) for \(\mu _H (z_i)\le \nu _H (z_i)\), or if \(\mu _G (z_i)\ge \mu _H (z_i)\) and \(\nu _G (z_i)\le \nu _H (z_i)\), for \(\mu _H (z_i)\ge \nu _H (z_i)\) for any \(z_i\in X\).

To prove (8) satisfies (IFS3), it suffices to prove that the function

where \(x,y\in [0,1]\), is an increasing function with respect to x and decreasing function with respect to y. Taking partial derivatives of f with respect to x and y, respectively, we get

and

For critical points of f, we put \(\partial f(x,y)/\partial x=0\) and \(\partial f(x,y)/\partial y=0\). This gives

From (26), (27) and (28), we get

for all \(x,y\in [0, 1]\). Thus f(x, y) is an increasing function of x and decreasing function of y.

Now, let us consider the two sets \(G, H\in IFS (X)\) such that \(G\subseteq H\). Let the finite universe of discourse \(X=\{z_1, z_2, \ldots , z_n\}\) be partitioned into two disjoint sets \(X_1\) and \(X_2\) with \(X=X_1\cup X_2\).

Further, let us suppose that all \(z_i\in X_1\) be dominated by the condition

and for all \(z_i\in X_2\),

Thus, from the monotonicity of the function f and (8), we obtain that \(E_\alpha ^\beta (G)\le E_\alpha ^\beta (H)\) when \(G\subseteq H\).

(IFS4) \(E_\alpha ^\beta (G)=E_\alpha ^\beta (G^c)\).

We know that \(G^c=\{\langle z_i, \nu _G (z_i), \mu _G (z_i)\rangle /z_i\in X\}\) for \(z_i\in X\) and

Thus from (8), we have

Therefore, \(E_\alpha ^\beta (G)\) is a valid intuitionistic fuzzy entropy measure. \(\square \)

The proposed measure (8) also satisfies the following additional properties.

Theorem 3.2

Let G and H be two intuitionistic fuzzy sets defined in \(X=\{z_1, z_2,\ldots , z_n\}\), where \(G=\{\langle z_i, \mu _G (z_i), \nu _G (z_i)\rangle /z_i\in X\}\), \(H=\{\langle z_i, \mu _H (z_i), \nu _H (z_i)\rangle /z_i\in X\}\), such that for all \(z_i\in X\) either \(G\subseteq H\) or \(H\subseteq G\); then

Proof

Let us separate X into two parts \(X_1\) and \(X_2\), such that

This implies that for each \(z_i\in X_1\),

and for each \(z_i\in X_2\),

From (8), we have,

Similarly,

This proves the theorem.

Corollary For any \(G\in IFS (X)\) and its complement \(G^c\),

\(\square \)

Theorem 3.3

The measure \(E_\alpha ^\beta (G)\) attains maximum value when the set is most intuitionistic fuzzy set and minimum value when the set is crisp set. Also, these values do not contain \(\alpha \) and \(\beta \).

Proof

It has already been proved that in properties IFS3 and IFS4 in Theorem (3.1) that \(E_\alpha ^\beta (G)\) attains maximum value if and only if G is most intuitionistic fuzzy set, i.e., \(\mu _G (z_i)=\nu _G (z_i)\), for all \(z_i\in X\) and minimum value when G is a crisp set, i.e., \(\mu _G (z_i)=1; \nu _G (z_i)=0\) or \(\mu _G (z_i)=0; \nu _G (z_i)=1\) . Therefore, it is sufficient to prove that the minimum and maximum values are free of \(\alpha \) and \(\beta \).

Suppose G be the most intuitionistic fuzzy set; i.e., \(\mu _G (z_i)=\nu _G (z_i)\), for all \(z_i\in X\). Then from (8),

which does not contain \(\alpha \) and \(\beta \).

On the other hand, if G is a crisp set, i.e., \(\mu _G (z_i)=1\) and \(\nu _G (z_i)=0\) or \(\mu _G (z_i)=0\) and \(\nu _G (z_i)=1\), for all, \(z_i\in X\) then \(E_\alpha ^\beta (G)=0\) for all values of \(\alpha \) and \(\beta \). This proves the theorem. \(\square \)

Now, we demonstrate the performance of proposed \((\alpha , \beta )\)-norm intuitionistic fuzzy entropy by comparing with other existing measures of intuitionistic fuzzy entropy in literature.

A Comparison with Other Existing Measures

Let \(G=\{\langle z_i,\mu _G (z_i), \nu _G (z_i)\rangle /z_i\in X\}\) be an intuitionistic fuzzy set in \(X=\{z_1, z_2, \ldots , z_n\}\). For any positive real number n, [30] defined an intuitionistic fuzzy set \(G^n\) as follows:

Let us assume an intuitionistic fuzzy set G in \(X=\{6, 7, 8, 9, 10\}\) defined by [30] as:

Based on the characterization of linguistic variables, suggested by [30], we compare the performance of proposed measure with some existing measures of intuitionistic fuzzy entropy suggested by various researchers as:

-

1.

Burillo and Bustince’s entropy \((E_{BB})\)[6];

-

2.

Zeng and Li’s entropy \((E_{ZL})\) [31];

-

3.

Szmidt and Kacprzyk’s entropy \((E_{SK})\)[25];

-

4.

Vlachos and Sergiadis entropy \((E_{SV})\) [9]:

-

5.

Zhang and Jiang’s IF entropy \((E_{ZJ})\) [32];

-

6.

Hung and Yang’s entropy \((E_{hc}^2\) and \(E_r^{1/2})\) [33];

-

7.

Ye’s IF entropy measure \((E_Y)\)[34];

-

8.

Wei et al. entropy measure \((E_{Wei})\) [35];

-

9.

Verma and Sharma’s exponential intuitionistic fuzzy entropy measure \((E_{VS})\) [36];

-

10.

Wei et al. \((E_{W})\) [35];

-

11.

Wang and Wang entropy \((E_{WW})\) [37];

-

12.

Liu and Ren intuitionistic fuzzy entropy \((E_{LR})\) [38];

Hung and Yang [33] and Hwang and Yang [39] established that the entropy measures of IFSs are supposed to satisfy the following requirement for good performance:

Computed numerical values of different entropy measures are tabulated in Table 1.

On analyzing the Table 1, we get the following results:

Thus, from the above analysis, we find that \(E_{ZL}\), \(E_{SK}\), \(E_{SV}\), \(E_{Wei}\), \(E_W\), \(E_{VS}\), \(E_{WW}\), \(E_{LR}\) and \(E_\alpha ^\beta \) follows the sequence (47) whereas \(E_{BB}\), \(E_{ZJ}\), \(E_{hc}^2\), \(E_r^{1/2}\) and \(E_W\) do not follow the sequence. This means, that performance of \(E_{ZL}\), \(E_{SK}\), \(E_{SV}\), \(E_{Wei}\), \(E_Y\), \(E_{VS}\), \(E_{WW}\), \(E_{LR}\) and \(E_\alpha ^\beta \) is better than that of \(E_{BB}\), \(E_{ZJ}\), \(E_{hc}^2\), \(E_r^{1/2}\) and \(E_W\).

Let us take one more example from Hung and Yang [33] for further comparison.

To analyze how different IFSs “LARGE” in X affect the above entropy measures, we reduce the hesitancy degree of “8” which is the middle point of X. First, suppose that

To compare the different entropy measures, we use IFSs \(G_1^\frac{1}{2}\), \(G_1\), \(G_1^2\), \(G_1^3\) and \(G_1^4\). The comparison results are presented in the Table 2.

Analysis of above table gives,

From the above analysis, we observe that \(E_{ZL}\), \(E_{SK}\), \(E_{ZJ}\), \(E_{WW}\) and \(E_{LR}\) do not follow the pattern (47) but \(E_{SV}\), \(E_{Wei}\), \(E_{VS}\) and \(E_\alpha ^\beta \) follow the pattern (47). Thus the performance of \(E_{SV}\), \(E_{Wei}\), \(E_{VS}\) and \(E_\alpha ^\beta \) is better than \(E_{ZL}\), \(E_{SK}\), \(E_{ZJ}\), \(E_{WW}\) and \(E_{LR}\).

Now, we consider an another IFS “LARGE” in X from Hung and Yang [33] defined as:

In \(G_2\), the hesitancy degree of “8” is reduced to zero. Based on this, we calculate the following Table 3:

On analyzing above table, we observe that

Again \(E_{ZL}\), \(E_{SK}\), \(E_{ZJ}\), \(E_{WW}\) and \(E_{LR}\) fail to meet the requirement whereas \(E_{SV}\), \(E_{Wei}\), \(E_{VS}\) and \(E_\alpha ^\beta \) follow the pattern.

Finally, we take one more example from Liu and Ren [38].

Suppose that there are five IF sets denoted as IFNs as: \(G_3=(0.4, 0.1)\), \(G_4=(0.6, 0.3)\), \(G_5=(0.2, 0.6)\), and \(G_6= (0.13, 0.565)\). It can be observed that \(G_4\) is less fuzzy than \(G_3\) and \(G_5\) is less fuzzy than \(G_6\). Numerical values of the five entropies are displayed in Table 4.

From the Table 4, we can observe that entropies \(E_{ZJ}\), \(E_{Wei}\) and \(E_{VS}\) cannot differentiate between the alternatives \(G_3\) and \(G_4\) and entropies \(E_W\) and \(E_{WW}\) is unable to differentiate between \(G_5\) and \(G_6\) while \(E_{SV}\) and proposed entropy \(E_\alpha ^\beta \) distinguishes all the above alternatives. Thus on the basis of above examples we can say that \(E_{SV}\) and \(E_\alpha ^\beta \) has a better performance over \(E_{ZJ}\), \(E_{Wei}\), \(E_W\), \(E_{WW}\), \(E_{VS}\). But the proposed entropy \(E_\alpha ^\beta \) contains the parameters which makes it more flexible from application point of view whereas \(E_{SV}\) does not. Therefore, the proposed entropy measure \(E_\alpha ^\beta \) is not only flexible in nature but also has consistent performance. Thus, the proposed entropy formula is considerably good.

The New MADM Method Using Proposed IF Entropy

For a MADM problem, suppose there be set \(Z=(Z_1, Z_2, \ldots , Z_m)\) of m equally probable alternatives and \(O=(e_1, e_2, \ldots , e_n)\) be a set of n attributes. Out of the given set of m alternatives, we have to select most suitable one. The degrees to which the alternative \(Z_i (i=1, 2, \ldots , m)\) satisfies the attribute \(e_j (j=1, 2, \ldots , n)\) is represented by intuitionistic fuzzy number (IFN) \(\tilde{x}_{ij}= (p_{ij}, q_{ij})\), where \(p_{ij}\) is the membership degree and \(q_{ij}\) denote the non-membership degree of the alternative \(Z_i (i=1, 2, \ldots , m)\) satisfying: \(0\le p_{ij}\le 1\), \(0\le q_{ij}\le 1\) and \(0\le p_{ij}+q_{ij}\le 1\) with \(i=1, 2, \ldots m\) and \(j=1, 2, \ldots , n\). In MADM problems, intuitionistic fuzzy values are calculated using statistical method suggested by Liu and Wang [40].

To obtain the degrees to which the alternatives \(Z_i\)’s \((i=1, 2, \ldots , m)\) satisfy or do not satisfy the attributes \(e_j\)’s \((j=1, 2, \ldots , n)\), we use the statistical tool proposed by Liu and Wang [40]. Suppose we invite a team of N-decision makers to deliver the judgment. The team members are expected to answer “yes” or “no” or “I don’t know” to the questions whether the alternatives \(Z_i (i=1, 2, \ldots , m)\) satisfy the attributes \(e_j (j=1, 2, \ldots n)\). Let \(n_{yes} (i, j)\) denote the number of experts who answer affirmatively and \(n_{no} (i, j)\) denote the number of experts who answer negatively. Then, the degrees to which alternatives \(Z_i\)’s \((i=1, 2, \ldots , m)\) satisfy and/or do not satisfy attributes \(e_j\)’s \((j=1, 2, \ldots , n)\) may be computed as:

Thus, MADM problem can be represented by using a fuzzy decision matrix \(X=(\tilde{x}_{ij})_{m\times n}\) as:

Considering that the attributes have different importance degrees, the weight vector of all attributes, given by the decision makers, is defined as \(u=(u_1, u_2, \ldots , u_n)^T\) such that \(0\le u_j\le 1 (j=1, 2, \ldots , n)\) satisfying \(\sum \nolimits _{j=1}^nu_j=1\), and \(u_j\) is the importance degree of the jth attribute. Sometimes, the information about attribute weights is completely unknown or incompletely known or partially known because of decision maker’s limited expertise about the problem domain, lack of knowledge or time pressure etc. To get the optimal alternatives, we should use methods or optimal models to determine the weight vector of the attributes. In the present communication, two methods are discussed to determine the weights of attributes using proposed entropy.

When Weights are Unknown

Based on the work done by Chen et al. [12], we use the Eq. (8) to determine the weights of the attributes when they are completely unknown as:

where \(e_j=\frac{1}{m}\sum _{i=1}^mE_\alpha ^\beta (\tilde{x}_{ij})\) and

is an IF entropy measure of \(\tilde{x}_{ij}=(p_{ij}, q_{ij})\).

According to the entropy theory, smaller value of entropy for each criterion across all alternatives provide decision makers with the useful information. So, the criterion should be assigned a bigger weight; otherwise such a criterion will be judged unimportant by most decision makers. In other words, such a criterion should be assigned a very small weight.

When Weights are Partially Known

In general, there are more constraints for the weight vector \(u=(u_1, u_2, \ldots , u_n)\). Sometimes, the information about attribute weights is partially known due to lack of expertise, time limit or lack of knowledge about the problem domain. To get the optimal alternative, we should use the optimal methods to determine the weight vector of attributes. Let the set of known weight information is denoted as H. Under intuitionistic fuzzy environment, to obtain the weights of attributes for a multiple attribute decision making problem when we have partial information about them, we use the minimum entropy principle introduced by Wang and Wang [37] to determine the weight vector of attributes by constructing the following programming model as:

Now, the overall entropy of the alternative \(Z_i\) is

Since each alternative is made in a fairly competitive environment, the weight coefficients corresponding to same attributes should also be equal; to determine the optimal weight the following model can be constructed:

On solving the model (53) by using MATLAB software, we get the optimal solution \(\mathrm {arg}\, \min E= (u_1, u_2, \ldots , u_n)^T\).

In summary, the procedural steps of decision making method are listed as follows:

-

1.

Determine the weights of the attributes by solving models equations (50) and (53).

-

2.

Define the Best Solution \(Z^+\) and Worst Solution \(Z^-\) as:

$$\begin{aligned} Z^+=\Big (\Big (\alpha _1^+,\beta _1^+\Big ), \Big (\alpha _2^+, \beta _2^+\Big ), \ldots , \Big (\alpha _n^+, \beta _n^+\Big )\Big ), \end{aligned}$$(54)where \((\alpha _j^+, \beta _j^+)=(\sup (\mu _G (z_i)), \inf (\nu _G (z_i)))=(1, 0), j=1, 2, \ldots , n\) and \(z_i\in X\).

$$\begin{aligned} \mathrm {and}\quad Z^-=\Big (\Big (\alpha _1^-,\beta _1^-\Big ), \Big (\alpha _2^-, \beta _2^-\Big ), \ldots , \Big (\alpha _n^-, \beta _n^-\Big )\Big ), \end{aligned}$$(55)where \((\alpha _j^-, \beta _j^-)=(\inf (\mu _G (z_i)), \sup (\nu _G (z_i)))=(0, 1), j=1, 2, \ldots , n\) and \(z_i\in X\).

-

3.

By using the definition (2.3), the distance measures of \(Z_i\)’s from \(Z^+\) and \(Z^-\) are given as follows:

$$\begin{aligned} s (Z_i, Z^+)= & {} \frac{1}{2}\sum _{j=1}^n u_j (|p_{ij}-\alpha _j^+|+|q_{ij}-\beta _j^+|+|r_{ij}-\pi _j^+|),\nonumber \\= & {} \frac{1}{2}\sum _{j=1}^n u_j (|1-p_{ij}|+|q_{ij}|+|1-p_{ij}-q_{ij}|). \end{aligned}$$(56)$$\begin{aligned} s (Z_i, Z^-)= & {} \frac{1}{2}\sum _{j=1}^n u_j (|p_{ij}-\alpha _j^-|+|q_{ij}-\beta _j^-|+|r_{ij}-\pi _j^-|),\nonumber \\= & {} \frac{1}{2}\sum _{j=1}^n u_j (|p_{ij}|+|1-q_{ij}|+|1-p_{ij}-q_{ij}|). \end{aligned}$$(57)where \(r_{ij}=1-p_{ij}-q_{ij}\) and \(\pi _j=1-\alpha _j-\beta _j\).

-

4.

Determine the relative degrees of closeness \(D_i\)’s as:

$$\begin{aligned} D_i=\frac{s (Z_i, Z^-)}{s (Z_i, Z^-)+s (Z_i, Z^+)}. \end{aligned}$$(58) -

5.

Rank the alternatives as per the values of \(D_i\)’s in descending order. The alternative nearest to the \(Z^+\) and farthest from the \(Z^-\) will be the best alternative.

Numerical Examples

Now we illustrate the application of MADM method with the help of examples as follows:

Case 1 When the weights of the attributes are unknown.

Example 6.1 Consider a supplier selection problem with four possible alternatives \(Z_i (i=1, 2, 3, 4)\) and three attributes \(e_j (j=1, 2, 3)\). The ratings of the alternatives are displayed in the intuitionistic fuzzy decision matrix represented by Table 6. (This example is taken from Herrera and Herrera-Viedma [41]; Ye [42]). The membership degrees (satisfactory degrees) \(p_{ij}\) and non-membership degrees (non-satisfactory degrees) \(q_{ij}\) for the alternatives \(Z_i\)’s \((i=1, 2, \ldots , m)\) satisfying the attributes \(e_j\)’s \((j=1, 2, \ldots , n)\) respectively, may be obtained using statistical method proposed by Liu and Wang [40]. [Taking no. of experts=\(N=100\) in (48)]. Suppose that the ‘yes’ or ‘no’ answers of the expert are distributed as shown in Table 5.

Using the formula

we obtain the degrees to which alternatives \(Z_i\)’s \((i=1, 2, 3, 4)\) satisfy or do not satisfy attributes \(e_j\)’s \((j=1, \ldots , 3)\) as follows:

The IF decision matrix corresponding to Table 5 is given in Table 6.

The specific calculations are as under:

-

1.

Using Eq. (50), (Taking \(\alpha =50\) and \(\beta =.7\)) the calculated attribute weight vector is:

$$\begin{aligned} u=(u_1, u_2, u_3)^T=(0.2981, 0.3047, 0.3973)^T. \end{aligned}$$ -

2.

The Best Solution \((Z^+)\) and Worst Solution \((Z^-)\) are respectively given as:

$$\begin{aligned} Z^+= & {} ((\alpha _1^+, \beta _1^+), (\alpha _2^+, \beta _2^+), (\alpha _3^+, \beta _3^+))=((1, 0), (1, 0), (1, 0));\\ Z^-= & {} ((\alpha _1^-, \beta _1^-), (\alpha _2^-, \beta _2^-), (\alpha _3^-, \beta _3^-))=((0, 1), (0, 1), (0, 1)). \end{aligned}$$ -

3.

The distance measures of \(Z_i\)’s from \(Z^+\) and \(Z^-\) are:

$$\begin{aligned} s (Z_1, Z^+)= & {} 0.6256, s (Z_2, Z^+)=0.3859, s (Z_3, Z^+)=0.4857, s (Z_4, Z^+)=0.4221;\\ s (Z_1, Z^-)= & {} 0.5923, s (Z_2, Z^-)=0.7859, s (Z_3, Z^-)=0.7039, s (Z_4, Z^-)=0.8358. \end{aligned}$$ -

4.

The calculated values of \(D_i\)’s, the relative degrees of closeness, are:

$$\begin{aligned} D_1=0.4863, D_2=0.6707, D_3=0.5917, D_4=0.6644. \end{aligned}$$

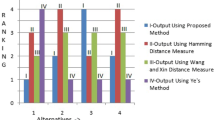

Thus, the ranking order of all alternatives is \(Z_2\succ Z_4\succ Z_3\succ Z_1\) and \(Z_2\) is the desirable alternative.

Let us take one more example for more clarity.

Example 6.2 This example is adapted from [43]. Consider a supplier selection problem with five possible alternatives \(Z_i (i=1, 2, 3, 4, 5)\) and six attributes \(e_j (j=1, 2, \ldots , 6)\). The ratings of the alternatives are displayed in the following intuitionistic fuzzy decision matrix D.

The specific calculation steps are as follows:

-

1.

Taking \(\alpha =50\) and \(\beta =0.7\) in (50), the computed attribute weight vector is:

$$\begin{aligned} u=(u_1, u_2, u_3, u_4, u_5, u_6)^T= (0.0382, 0.5762, 0.1367, 0.1077, 0.0896, 0.0516) \end{aligned}$$(61) -

2.

The Best Solution \((Z^+)\) and Worst Solution \((Z^-)\) are:

$$\begin{aligned} Z^+&=((\alpha _1^+, \beta _1^+), (\alpha _2^+, \beta _2^+), (\alpha _3^+, \beta _3^+), (\alpha _4^+, \beta _4^+). (\alpha _5^+, \beta _5^+), (\alpha _6^+, \beta _6^+))\nonumber \\&=(1, 0), (1, 0), (1, 0), (1, 0), (1, 0), (1, 0)\nonumber \\ Z^-&=((\alpha _1^-, \beta _1^-), (\alpha _2^-, \beta _2^-), (\alpha _3^-, \beta _3^-), (\alpha _4^-, \beta _4^-), (\alpha _5^-, \beta _5^-), (\alpha _6^-, \beta _6^-))\nonumber \\&=(0, 1), (0, 1), (0, 1), (0, 1), (0, 1), (0, 1) \end{aligned}$$(62) -

3.

The computed distance measures of \(Z_i\)’s from \(Z^+\) and \(Z^-\) are:

$$\begin{aligned} s (Z_1, Z^+)&=0.6491, s (Z_2, Z^+)=0.3993, s (Z_3, Z^+)=0.3213, s (Z_4, Z^+)\nonumber \\&\quad =0.1477, s (Z_5, Z^+)=0.4475; \nonumber \\ s (Z_1, Z^-)&=0.5918, s (Z_2, Z^-)=0.7887, s (Z_3, Z^-)=0.8701, s (Z_4, Z^-)\nonumber \\&\quad =0.9538, s (Z_5, Z^-)=0.8496. \end{aligned}$$(63) -

4.

The computed values of \(D_i\)’s, i.e., the relative degrees of closeness are:

$$\begin{aligned} D_1=0.4769, D_2=0.6639, D_3=0.7303, D_4=0.8659, D_5=0.6550. \end{aligned}$$(64) -

5.

Ranking the alternatives according to the values of \(D_i\)’s in descending order, the sequence of alternatives so obtained is \(Z_4\succ Z_3\succ Z_2\succ Z_5\succ Z_1\) and \(Z_4\) is the most desirable alternative.

Comparison with other methods

By applying the method proposed by Xu [43], the preference order of all alternatives is \(Z_4\succ Z_3\succ Z_5\succ Z_2\succ Z_1\) and \(Z_4\) is the best alternative.

If we apply the method suggested by Boran et al. [44] to compute example 6.2, the sequence of alternatives so obtained is \(Z_4\succ Z_5\succ Z_3\succ Z_2\succ Z_1\) and \(Z_4\) is the most desirable alternative.

Using the method proposed by Ye [45], the preference order of alternatives \(Z_4\succ Z_5\succ Z_3\succ Z_2\succ Z_1\) and \(Z_4\) is the most desirable alternative.

On applying Li’s method [46] to example 6.2, the sequence of alternatives so obtained is \(Z_4\succ Z_5\succ Z_3\succ Z_2\succ Z_1\) and \(Z_4\) is the most suitable option.

If we use the MADM method based on intuitionistic fuzzy weighted geometric averaging (IFWGA) operator proposed by Chen and Chang [47] to compute the Example 6.2, the sequence of preferences so obtained is \(Z_4\succ Z_3\succ Z_5\succ Z_2\succ Z_1\) with \(Z_4\) as the best alternative.

All the methods used for comparison choose \(Z_4\) as the best option. Xu’s method [43] is effective only if all attributes have equal weights which is not possible only in some specific applications, for example risk assessment, medical diagnosis [12] etc. Boran et al. [44] uses the definition of IVIFS to calculate the attribute weights in decision making problems under interval-valued intuitionistic fuzzy (IVIF) environment, which do not consider the decision matrix for decision making. Li’s method [46] is only effective in solving MADM problem in attribute weights as well as alternatives on attributes are denoted by interval-valued intuitionistic fuzzy sets (IVIFSs). In our proposed MADM method, we measure the relative degrees of closeness of different alternatives from best possible solution and worst possible solution whereas Ye’s method [45] is based on correlation coefficients with the best solution only. The entropy based attributes weight method introduced in this communication is not only simple and objective method but also considers all the alternatives on attributes.

Case 2 When weights of attributes are partially known

Example 6.3 This example is adapted from Li [48]. In this, we consider an washing machine selection problem. Suppose there are three washing machines: \(Z_i (i=1, 2, 3)\) are to be selected. Evaluation attributes are \(e_1\) (Economical), \(e_2\) (Function) and \(e_3\) (Operationality). The membership degrees (satisfactory degrees) \(p_{ij}\) and non-membership degrees (non-satisfactory degrees) \(q_{ij}\) for the alternatives \(Z_i\)’s \((i=1, 2, \ldots , m)\) satisfying the attributes \(e_j\)’s \((j=1, 2, \ldots , n)\) respectively, may be obtained using statistical method proposed by Liu and Wang [40].

The intuitionistic fuzzy decision matrix (Calculated same as in above example) provided by decision makers is shown in Table 7.

Let the weights of attributes satisfy the following set

The specific calculation steps are as under:

-

1.

Using Eq. (53), following programming model can be established:

$$\begin{aligned} \min E=0.3926 u_1 +0.4326 u_2+0.1748 u_3, \end{aligned}$$(65)$$\begin{aligned} s.t.\quad {\left\{ \begin{array}{ll} 0.25\le u_1\le 0.75 \\ 0.35\le u_2\le 0.60\\ 0.30\le u_3\le 0.35\\ u_1+u_2+u_3=1. \end{array}\right. } \end{aligned}$$(66)Solving the above programming model by using MATLAB software, we get the weight vector as follows:

$$\begin{aligned} u=(0.30, 0.35, 0.35)^T. \end{aligned}$$(67) -

2.

The Best Solution \((Z^+)\) and Worst Solution \((Z^-)\) are respectively given as:

$$\begin{aligned} Z^+= & {} ((\alpha _1^+, \beta _1^+), (\alpha _2^+, \beta _2^+), (\alpha _3^+, \beta _3^+))=((1, 0), (1, 0), (1, 0));\\ Z^-= & {} ((\alpha _1^-, \beta _1^-), (\alpha _2^-, \beta _2^-), (\alpha _3^-, \beta _3^-))=((0, 1), (0, 1), (0, 1)). \end{aligned}$$ -

3.

The calculated distances of \(Z_i\)’s \((i=1, \ldots , 4)\) from \(Z^+\) and \(Z^-\) are as:

$$\begin{aligned} s (Z_1, Z^+)= & {} 0.2850, s (Z_2, Z^+)=0.3645, s (Z_3, Z^+)=0.4075;\\ s (Z_1, Z^-)= & {} 0.8125, s (Z_2, Z^-)=0.7100, s (Z_3, Z^-)=0.7425. \end{aligned}$$ -

4.

The calculated values of \(D_i\)’s, the relative degrees of closeness, are as follows:

$$\begin{aligned} D_1=0.7403, D_2=0.6608, D_3=0.6457. \end{aligned}$$

Arranging the alternatives in descending order according to the values of \(D_i\)’s, we get the following sequence of alternatives \(Z_1\succ Z_2\succ Z_3\) and \(Z_1\) is the best alternative.

If we apply intuitionistic fuzzy weighted geometric averaging (IFWGA) operator method introduced by Chen and Chang [47], the preferential sequence of alternatives so obtained is \(Z_1\succ Z_2\succ Z_3\) which coincides with proposed method. This shows that the performance of proposed information measure and MADM method based on it is considerably good. Also, the best alternative agrees with that of Li [48].

On analyzing the output obtained from above three examples, we may observe that the proposed method not only give an optimal alternative, but also provide the decision makers with useful information for the choice of alternatives. The fuzzy decision making method with the entropy weights is more practical and effictive for dealing with the partially known and unknown information about criteria weights.

The above method can also be used to solve the following types of MADM problems

-

1.

Suppose a person wants to buy a car. Suppose five types of cars are available in the market. Suppose he makes the four attributes (1) price (2) comfort (3) design (4) safety, a base to purchase the car.

-

2.

Suppose a person wants insure himself with some insurance company. Suppose he has five options available and company considers four attributes to check the suitability of the customer namely (1) age (2) adequate weight (3) cholesterol level (4) blood pressure.

-

3.

Suppose a doctor wants to diagnose the patients on the basis of some symptoms of disease. Let the five possible diseases be (1) \(D_1\) (2) \(D_2\) (3) \(D_3\) (4) \(D_4\) having closely related symptoms. Let the doctor consider the four symptoms to decide the possibility of a particular disease: (1) \(A_1\) (2) \(A_2\) (3) \(A_3\) (4) \(A_4\).

-

4.

Suppose a person wants to choose a school for his children. He as five schools as possible alternatives. He considers the following four attributes to decide : (1). Transport facility (2). Academic profile of the teachers (3). Previous year’s results of the school (4). Discipline.

Concluding Remarks

Intuitionistic fuzzy sets play an important role in solving MADM problems. In this communication, we have proposed a new parametric intuitionistic fuzzy entropy and presented a MADM model based on the proposed entropy in which intuitionistic fuzzy sets represents characteristics of alternatives. We have discussed two cases to calculate the weights of the attributes. One is for unknown attribute weights and other is for partially known attribute weights. Using minimum entropy principle, the optimal criteria weights are obtained by the proposed entropy based model. The problems based on multiple attributes like evaluation of project management risks which depends on many factors, site selection and credit evaluation etc. can also be solved by using proposed MADM method. The techniques proposed in this paper can efficiently help the decision maker. In future, this idea of intuitionistic fuzzy sets will be extended to interval valued intuitionistic fuzzy sets for determining the weights of experts in MADM problems under intuitionistic fuzzy environment and will be reported elsewhere.

References

Zadeh, L.A.: Fuzzy sets. Inf. Comput. 8, 338–353 (1965)

Atanassov, K.T.: Intuitionistic fuzzy sets. Fuzzy Sets Syst. 20, 87–96 (1986)

Yager, A.: On measures of fuzziness and fuzzy complements. Int. J. Gen. Syst. 8(3), 169–180 (1982)

Szmidt, E., Kacprzyk, J.: Entropy for intuitionistic fuzzy sets. Fuzzy Sets Syst. 118(3), 467–477 (2001)

Kaufmann, A.: Introduction to Theory of Fuzzy Subsets. Academic, New York (1975)

Burillo, P., Bustince, H.: Entropy on intuitionistic fuzzy sets and on interval-valued fuzzy sets. Fuzzy Sets Syst. 2001(118), 305–316 (2001)

Zhang, H., Zhang, W., Mei, C.: Entropy on interval-valued fuzzy sets based on distance and its relationship with similarity measure. Knowl Based Syst. 22(6), 449–454 (2009)

Hung, W.: A note on entropy of intuitionistic fuzzy sets. Int. J. Uncertain. Fuzziness Knowl. Based Syst. 11(5), 627–633 (2003)

Vlachos, I.K., Sergiadis, G.D.: Intuitionistic fuzzy information—applications to pattern recognition. Pattern Recognit. Lett. 28, 197–206 (2007)

De Luca, A., Termini, S.: A definition of non-probabilistic entropy in the setting of fuzzy set theory. Inf. Control 20, 301–312 (1972)

Zeng, W., Yu, F., Yu, X., Chen, H., Wu, S.: Entropy on intuitionistic fuzzy set based on similarity measure. Int. J. Innov. Comput. Inf. Control 5(12), 4737–4744 (2009)

Chen, T., Li, C.: Determining objective weights with intuitionistic fuzzy entropy measures: a comparative analysis. Inf. Sci. 180, 4207–4222 (2010)

Pedrycz, W.: Fuzzy sets in pattern recognition: accomplishments and challenges. Fuzzy Sets Syst. 90(2), 171–176 (1997)

Yager, R.: Fuzzy modeling for intelligent decision making under uncertainty. IEEE Trans. Syst. Man Cybern. Part B Cybern. 30(1), 60–70 (2000)

Zadeh, L.: Is there a need for fuzzy logic? Inf. Sci. 178(13), 2751–2779 (2008)

Cornelis, C., Deschrijver, G., Kerre, E.: Implication in intuitionistic fuzzy and interval-valued fuzzy set theory: construction, classification, application. Int. J. Approx. Reason. 35(1), 55–95 (2004)

Saaty, T.L.: The Analytic Hierarchy Process. McGraw hill, New York (1980)

Chu, A.T.W., Kalaba, R.E., Spingarn, K.: A comparison of two methods for determining the weights of belonging to fuzzy sets. J. Optim. Theory Appl. 27, 531–538 (1979)

Hwang, C.L., Lin, M.J.: Group Decision Making Under Multiple Criteria: Methods and Applications. Springer, Berlin (1987)

Fan, Z.P.: Complicated multiple attribute decision making: theory and applications. Ph.D. Dissertation, Northeastern university, Shenyang, China (1996)

Choo, E.U., Wedley, W.C.: Optimal criterion weights in repetitive multicriteria decision making. J. Oper. Res. Soc. 36, 983–992 (1985)

Bhandari, D., Pal, N.R.: Some new information measures for fuzzy sets. Inf. Sci. 67, 209–228 (1993)

Renyi, A.: On measures of entropy and information. In: Proceedings of 4th Barkley Symposium on Mathematical Statistics and Probability, vol. 1, pp. 547. University of California Press (1961)

Ejegwa, P.A., Akowe, S.O., Otene, P.M., Ikyule, J.M.: An overview on intuitionistic fuzzy sets. Int. J. Sci. Technol. Res. 3(3), 142–145 (2014)

Szmidt, E., Kacprzyk, J.: Using intuitionistic fuzzy sets in group decision-making. Control Cybern. 31, 1037–1054 (2002)

Joshi, R., Kumar, S.: A new approach in multiple attribute decision making using \(R\)-norm entropy and Hamming distance measure. Int. J. Inf. Manag. Sci. 27, 253–268 (2016)

Sharma, B.D., Taneja, I.J.: Entropy of type \((\alpha, \beta )\) and other generalized measures in information theory. Metrika 22, 205–215 (1975)

Joshi, R., Kumar, S.: A new intuitionistic fuzzy entropy of order-\(\alpha \) with applications in multiple attribute decision making. In: Proceedings of 6th International Conference on Soft Computing for Problem Solving. Advances in Intelligent Systems and Computing, vol. 546. Springer (2017). https://doi.org/10.1007/978-981-10-3322-319

Hooda, D.S.: On generalized measures of fuzzy entropy. Mathematica Slovaca 54, 315–325 (2004)

De, S.K., Biswas, R., Roy, A.R.: Some operations on intuitionistic fuzzy sets. Fuzzy Sets Syst. 114, 477–484 (2000)

Zeng, W., Li, H.: Relationship between similarity measure and entropy of interval valued fuzzy sets. Fuzzy Sets Syst. 118(3), 467–477 (2001)

Zhang, Q., Jiang, S.: A note on information entropy measure for vague sets. Inf. Sci. 178, 4184–4191 (2008)

Hung, W.L., Yang, M.S.: Fuzzy entropy on intuitionistic fuzzy sets. Int. J. Intell. Syst. 21(4), 443–451 (2006)

Ye, J.: Two effective measures of intuitionistic fuzzy entropy. Computing 87, 55–62 (2010)

Wei, C., Gao, Z., Guo, T.: An intuitionistic fuzzy entropy measure based on trigonometric function. Control Decis. 27, 571–574 (2012)

Verma, R., Sharma, B.D.: Exponential entropy on intuitionistic fuzzy sets. Kybernetika 49, 114–127 (2013)

Wang, J., Wang, P.: Intuitionistic linguistic fuzzy multi-criteria decision-making method based on intuitionistic fuzzy entropy. Control Decis. 27, 1694–1698 (2012)

Liu, M., Ren, H.: A new intuitionistic fuzzy entropy and application in multi-attribute decision-making. Information 5, 587–601 (2014)

Hwang, C.M., Yang, M.S.: On entropy of fuzzy sets. Int. J. Uncertain. Fuzziness Knowl. Based Syst. 16(4), 519–527 (2008)

Liu, H., Wang, G.: Multi-criteria decision-making methods based on intuitionistic fuzzy sets. Eur. J. Oper. Res. 179, 220–223 (2007)

Herrera, F., Herrera-Viedma, E.: Linguistic decision analysis: steps for solving decision problems under linguistic information. Fuzzy Sets Syst. 115, 67–82 (2000)

Ye, J.: Fuzzy decision-making method based on the weighted correlation coefficient under intutionistic fuzzy enviornment. Eur. J. Oper. Res. 205, 202–204 (2010)

Xu, Z.: Intuitionistic Fuzzy Information Aggregation Theory and Application. Science press, BeiJing (2008)

Boran, F., Gene, S., Kurt, M., Akay, D.: A multi-criteria intuitionistic fuzzy group decision making for supplier selection with TOPSIS method. Experts Syst. Appl. 36(8), 11363–11368 (2009)

Ye, J.: Multicriteria fuzzy decision making method using entropy weights based correlation coefficients of interval valued intuitionistic fuzzy sets. Applied Math. Model. 34(12), 3864–3870 (2010)

Li, D.: TOPSIS-based nonlinear-programming methodology for multiattribute decision making with interval-valued intuitionistic fuzzy sets. IEEE Trans. Fuzzy Syst. 18(2), 299–311 (2010)

Chen, S.M., Chang, C.H.: A new method for multiple attribute decision making based on intuitionistic fuzzy geometric averaging operators. In: Proceedings of 2015 International Conference on Machine Learning and Cybernatics, Guangzhou, pp. 426–432 (2015)

Li, D.: Multi-attribute decision-making models and methods using intuitionistic fuzzy sets. J. Comput. Syst. Sci. 70, 73–85 (2005)

Joshi, R., Kumar, S.: \((R, S)\)-norm information measure and a relation between coding and questionnaire theory. Open Syst. Inf. Dyn. 23(3), 1–12 (2016)

Joshi, R., Kumar, S.: An \((R, S)\)-norm fuzzy information measure with its applications in multiple attribute decision making. Comput. Appl. Math (2017). https://doi.org/10.1007/s40314-017-0491-4

Acknowledgements

The authors are thankful to the anonymous reviewers for their constructive suggestions to improve this manuscript.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Joshi, R., Kumar, S. A New Parametric Intuitionistic Fuzzy Entropy and its Applications in Multiple Attribute Decision Making. Int. J. Appl. Comput. Math 4, 52 (2018). https://doi.org/10.1007/s40819-018-0486-x

Published:

DOI: https://doi.org/10.1007/s40819-018-0486-x