Abstract

It is well known that small, regular, spherically symmetric characteristic initial data to the Einstein-scalar-field system which decay towards (future null) infinity give rise to solutions that are forward-in-time global (in the sense of future causal geodesic completeness). We construct a class of spherically symmetric solutions which are global even though their initial norms are consistent with initial data that do not decay towards infinity. This has the following consequences:

1. We prove that there exist forward-in-time global solutions with arbitrarily large (and in fact infinite) initial bounded variation (BV) norms and initial Bondi masses.

2. While general solutions with non-decaying data do not approach Minkowski spacetime, we show using the results of Luk and Oh (Anal PDE 8(7):1603–1674, 2014. arXiv:1402.2984) that if a sufficiently strong asymptotic flatness condition is imposed on the initial data, then the solutions we construct (with large BV norms) approach Minkowski spacetime with a sharp inverse polynomial rate.

3. Our construction can be easily extended so that data are posed at past null infinity and we obtain solutions with large BV norms which are causally geodesically complete both to the past and to the future.

Finally, we discuss applications of our method to construct global solutions for other nonlinear wave equations with infinite critical norms.

Introduction

We study the Einstein-scalar-field system for a Lorentzian manifold \((\mathcal M, g)\) and a real-valued function \(\phi :\mathcal M\rightarrow \mathbb R\):

$$\begin{aligned} \mathrm {Ric}_{\mu \nu } - \frac{1}{2} g_{\mu \nu } R = 2 \Big ( \partial _{\mu } \phi \, \partial _{\nu } \phi - \frac{1}{2} g_{\mu \nu } (g^{-1})^{\alpha \beta } \partial _{\alpha } \phi \, \partial _{\beta } \phi \Big ), \quad \Box _{g} \phi = 0 \end{aligned}$$(1.1)

in \((3+1)\) dimensions with spherically symmetric data. It is known [2, 4] that small, regular and sufficiently decaying initial data give rise to forward-in-time global solutions in the sense that they are future causally geodesically complete. In this paper, we show that the decay condition can be removed and replaced by the requirement that the growth of the integral of the data is suitably mild at infinity (see Theorem 1.1). As a particular consequence, we construct global solutions with arbitrarily large (and in fact infinite) BV norms and Bondi masses (Footnote 1).

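To further discuss our results, we recall the reduction of (1.1) in spherical symmetry. It is well known that in spherical symmetry we can introduce null coordinates (u, v) such that the metric g takes the form

$$\begin{aligned} g = - \Omega ^{2} \, \mathrm {d}u \cdot \mathrm {d}v + r^{2} \mathrm {d}\sigma _{\mathbb S^2}, \end{aligned}$$

where \(\Omega > 0\), \(\mathrm {d}\sigma _{\mathbb S^2}\) is the standard metric on the unit round sphere and r is the area-radius of the orbit of the symmetry group SO(3) (see Section 2.1). We normalize the coordinates so that \(u = v\) on the axis of symmetry \(\Gamma = \{r = 0\}\). Defining the Hawking mass m by the relation

$$\begin{aligned} 1 - \frac{2m}{r} = g^{-1}(\mathrm {d}r, \mathrm {d}r) = - 4 \Omega ^{-2} \partial _{u} r \, \partial _{v} r, \end{aligned}$$

the Einstein-scalar-field system reduces to the following system of equations for \((r,\phi , m)\) in \((1+1)\) dimensions, which we will also call the spherically symmetric Einstein-scalar-field (SSESF) system:

$$\begin{aligned} \partial _{u} \partial _{v} r =&\frac{2m \, \partial _{u} r \, \partial _{v} r}{(1-\frac{2m}{r}) r^{2}}, \\ \partial _{u} \partial _{v} \phi =&- \frac{\partial _{u} r \, \partial _{v} \phi + \partial _{v} r \, \partial _{u} \phi }{r}, \\ 2 (\partial _{v} r) \, \partial _{v} m =&\Big (1-\frac{2m}{r}\Big ) r^{2} (\partial _{v} \phi )^{2}, \\ 2 (\partial _{u} r) \, \partial _{u} m =&\Big (1-\frac{2m}{r}\Big ) r^{2} (\partial _{u} \phi )^{2}. \end{aligned}$$(SSESF)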

We will consider solutions to (SSESF) via studying the characteristic initial value problem for which initial data are posed on a constant u curve \(C_{u_0}:=\{(u,v):u=u_0, \, v\ge u_0\}\). On \(C_{u_0}\), after imposing the gauge condition \((\partial _v r)(u_0,v)=\frac{1}{2}\) and the boundary conditions \(r(u_0,u_0)=m(u_0,u_0)=0\), the initial value for \(\Phi (v):=2(\partial _v(r\phi ))(u_0,v)\) can be freely prescribed. It is easy to show that if \(\Phi (v)\) is \(C^1\), then there exists a unique local solution to (SSESF). We refer the readers to Section 2.1 for further discussions on the characteristic initial value problem.

As mentioned earlier, (SSESF) is known to have global solutions for small, regular and decaying initial data. More precisely, Christodoulou [2] showed that there exists a universal constant \(\delta _0>0\) such that if

then the solution is forward-in-time global. An analogous small data result in fact holds without assuming spherical symmetry as long as the higher derivatives of the scalar field and appropriate geometric quantities are also small and decaying. In the vacuum case, this was first proved by Christodoulou-Klainerman [6]. An alternative proof was later given by Lindblad-Rodnianski [8], who also treated the case of the Einstein-scalar-field system.

Returning to the special case of spherical symmetry, in fact a much stronger result is known: Christodoulou showed in [4] that only the bounded variation (BV) norm of the initial data \(\Phi \) is required to be small (Footnote 2), i.e., there exists a universal constant \(\delta _1>0\) such that if (Footnote 3)

then the solution is global toward the future.

On the other hand, in the large data regime, Christodoulou showed in [3] that not all initial data give rise to future causally geodesically complete solutions. In particular, for some class of initial data, the future Cauchy development contains a black hole region and is future causally geodesically incomplete.

The purpose of this paper is to construct a class of solutions which, on the one hand, are global (in the sense of future causal geodesic completeness), but whose initial data, on the other hand, are non-decaying and therefore large when measured using an integrated norm (Footnote 4). One way to measure the size of the initial data is by the BV norm

which is a scaling-invariant quantity and, as mentioned above, the smallness of the BV norm guarantees that the solution is global. We will also quantify the largeness of the initial data by the initial Bondi mass, which is defined as the limit of the Hawking mass as \(v\rightarrow \infty \) on the initial curve, i.e.,

$$\begin{aligned} \lim _{v\rightarrow \infty } m(u_{0},v). \end{aligned}$$(1.6)

In fact, our construction allows both the initial BV norm and Bondi mass to be infinite. More precisely, the following is the main result of this paper:

Theorem 1.1

Consider the characteristic initial value problem from an outgoing curve \(C_{u_0}\) with \(v\ge u_0\), \(\partial _v r \upharpoonright _{C_{u_0}} = \frac{1}{2}\) and \(r(u_0,u_0)=m(u_0,u_0)=0\). Suppose the data on the initial curve \(C_{u_0}\) are given by

$$\begin{aligned} 2 \partial _{v} (r \phi )(u_{0}, v) = \Phi (v), \quad v \ge u_{0}, \end{aligned}$$

where \(\Phi :[u_0,\infty )\rightarrow \mathbb R\) is a smooth function satisfying the following conditions for some \(\gamma >0\):

Then there exists \(\epsilon >0\) depending only on \(\gamma \) such that the unique solution to (SSESF) arising from the given data is future causally geodesically complete. Moreover, the solution satisfies the following uniform a priori estimates:

and

for some constant \(C>0\) depending only on \(\gamma \).

Remark 1.2

We note explicitly that the constants \(\epsilon \) and C in the above theorem are independent of \(u_0\).

Remark 1.3

In addition, if the second derivative of the data \(\Phi \) is bounded (i.e., \(\vert \Phi ''(v)\vert \le C\)), we will show that the solution obeys corresponding higher regularity bounds which are uniform with respect to \(u_{0}\); see Proposition 3.18.

The proof of this theorem will occupy most of this paper. Global existence of a unique solution in an appropriate coordinate system will be established in Section 3. As a brief comment on the proof, we note that even for spherically symmetric solutions to the linear wave equation \(\Box _{\mathbb R^{1+3}}\phi =0\), if the initial data on an outgoing null cone \(C_{u_0}\) are only required to satisfy (1.7), then the solution \(\phi \) and its first derivatives in general do not decay in time. In fact, \(\phi \) only satisfies decay estimates in r. In our setting, since we can use the method of characteristics in spherical symmetry, we only need to integrate the error terms along null curves, and the r decay is therefore already sufficient to close the nonlinear argument.
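The lack of time decay can already be seen explicitly in the linear case. The sketch below assumes Minkowski space in the normalization \(r = (v-u)/2\), where a spherically symmetric solution of \(\Box _{\mathbb R^{1+3}}\phi =0\) satisfies \(\partial _u\partial _v(r\phi )=0\). Then \(\partial _v(r\phi )(u,v)=\frac{1}{2}\Phi (v)\) for every u and, since \(r\phi \) vanishes on the axis, \(\phi (u,v)=(v-u)^{-1}\int _u^v\Phi (v')\,\mathrm {d}v'\). For the illustrative (hypothetical) choice \(\Phi (v)=\epsilon \sin v\) this gives \(\phi =\epsilon (\cos u-\cos v)/(v-u)\). Along a curve of fixed r it keeps oscillating with amplitude \(\epsilon \vert \sin r\vert /r\) for all time, while along each outgoing cone it obeys \(\vert \phi \vert \le \epsilon /r\).

```python
import math

EPS = 0.01  # hypothetical amplitude of the data Phi(v) = EPS * sin(v)


def phi_linear(u, v, eps=EPS):
    """Spherically symmetric solution of Box phi = 0 in Minkowski space, with r = (v - u)/2.

    d_v(r*phi) = Phi(v)/2 is independent of u and r*phi = 0 on the axis u = v, so
    phi(u, v) = (v - u)^(-1) * int_u^v eps*sin(v') dv' = eps*(cos u - cos v)/(v - u).
    """
    return eps * (math.cos(u) - math.cos(v)) / (v - u)


def max_along_fixed_r(r, u_start, u_end, samples=10_000):
    """Largest |phi| sampled along the timelike curve {v - u = 2r} for u in [u_start, u_end]."""
    step = (u_end - u_start) / samples
    return max(
        abs(phi_linear(u_start + i * step, u_start + i * step + 2 * r))
        for i in range(samples + 1)
    )


# Along {r = 1} the amplitude stays close to EPS*sin(1): there is no decay in time.
for u_start in (0.0, 1e3, 1e6):
    print(u_start, max_along_fixed_r(1.0, u_start, u_start + 2 * math.pi))

# Along the outgoing cone u = 0 the solution decays in r: |phi| <= EPS / r.
for v in (10.0, 100.0, 1000.0):
    print(v / 2, abs(phi_linear(0.0, v)), EPS / (v / 2))
```

The formula does not involve \(u_0\) at all, which is loosely analogous to the uniformity in \(u_0\) noted in Remark 1.2.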

Since our solution does not decay in time, even after establishing global existence of the solution in an appropriate coordinate system, it does not immediately follow that the solution is future causally geodesically complete. For this we need an additional geometric argument, which will be carried out in Section 6.

An immediate corollary of Theorem 1.1 is the following result, which follows simply from the observation that there exists \(\Phi \) satisfying the assumptions of Theorem 1.1 such that (1.5) and (1.6) are both infinite. The proof of this corollary will be carried out in Section 5.

Corollary 1.4

There exist solutions to (1.1) with spherically symmetric data such that the data have arbitrarily large (in fact infinite) BV norm and initial Bondi mass, while the development is future causally geodesically complete.
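A model computation shows how a profile can have infinite variation while its integrals stay bounded. Since \(\Phi = 2\partial _v(r\phi )\) on \(C_{u_0}\), the BV norm of the data is, up to a constant factor, the total variation of \(\Phi \). The sketch below takes the hypothetical profile \(\Phi (v)=\epsilon \sin v\) with \(u_0=0\) and approximates, on \([0,V]\), two quantities. The first is the total variation \(\int _0^V\vert \Phi '\vert \,\mathrm {d}v\), which grows like \(4\epsilon V/(2\pi )\). The second is \(\sup _{v\le V}\vert \int _0^v\Phi \vert \), which stays equal to \(2\epsilon \). The sketch also checks that the variation is unchanged under the rescaling \(\Phi (v)\mapsto \Phi (v/a)\), in line with the scaling invariance of the BV norm. Whether this particular profile satisfies every condition in (1.7) is not checked here.

```python
import math

EPS = 0.01  # hypothetical amplitude; u0 = 0


def phi_data(v):
    """Illustrative oscillating profile Phi(v) = EPS * sin(v)."""
    return EPS * math.sin(v)


def total_variation(f, a, b, n=100_000):
    """Total variation of f on [a, b], approximated on a uniform partition."""
    h = (b - a) / n
    prev, tv = f(a), 0.0
    for i in range(1, n + 1):
        cur = f(a + i * h)
        tv += abs(cur - prev)
        prev = cur
    return tv


def sup_running_integral(f, a, b, n=100_000):
    """sup over v in [a, b] of |int_a^v f|, approximated with the trapezoid rule."""
    h = (b - a) / n
    prev, acc, best = f(a), 0.0, 0.0
    for i in range(1, n + 1):
        cur = f(a + i * h)
        acc += 0.5 * h * (prev + cur)
        best = max(best, abs(acc))
        prev = cur
    return best


for periods in (10, 20, 40):
    V = 2 * math.pi * periods
    # The variation grows like 4*EPS*periods; the running integral stays at 2*EPS.
    print(periods, total_variation(phi_data, 0.0, V), sup_running_integral(phi_data, 0.0, V))

# Rescaling Phi(v) -> Phi(v / a) on [0, a V] leaves the total variation unchanged.
a, V = 3.0, 2 * math.pi * 10
print(total_variation(lambda v: phi_data(v / a), 0.0, a * V), total_variation(phi_data, 0.0, V))
```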

Remark 1.5

We remark that to achieve the arbitrarily large initial Bondi mass in Corollary 1.4, we need to arrange the data to be “suitably spread out”. To illustrate this, let us compare our result with the black hole formation theorem of Christodoulou in [3]. The result of [3] implies (Footnote 5) that for initial data given on \(C_{u_0}\) with \(v\ge u_0\), \(\partial _v r \upharpoonright _{C_{u_0}} = \frac{1}{2}\) and \(r(u_0,u_0)=m(u_0,u_0)=0\), for every \(0<v_1<v_2<\infty \), there exists \(c>0\) depending on \(v_1\) and \(v_2\) such that if \(m(u_0,v_2)-m(u_0,v_1)>c\), then the development of the data is future causally geodesically incomplete. Notice that for \(\epsilon \) sufficiently small, the conditions in Theorem 1.1 in particular guarantee that the mass difference on any finite v-interval is suitably small, so that the data do not verify the black hole formation criterion in [3]. On the other hand, Corollary 1.4 holds since the conditions of Theorem 1.1 do not require the data to decay, and one can therefore appropriately “spread out” the initial data. As a consequence, one can arrange the initial data to satisfy the conditions of Theorem 1.1 (so that the mass difference on any finite v-interval is small), while at the same time the total mass is arbitrarily large.
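The spreading mechanism can be illustrated on the initial curve alone. With \(u_0=0\), the gauge \(\partial _v r=\frac{1}{2}\) gives \(r=v/2\) on \(C_{u_0}\). For the hypothetical profile \(\Phi (v)=\epsilon \sin v\) one has \(r\phi =\int _0^v\frac{1}{2}\Phi =\frac{\epsilon }{2}(1-\cos v)\), so \(\partial _v\phi =\epsilon \big [\frac{\sin v}{v}-\frac{1-\cos v}{v^2}\big ]\). The mass equation \(2(\partial _v r)\partial _v m=(1-\frac{2m}{r})r^2(\partial _v\phi )^2\) of (SSESF) then becomes a linear ODE for \(m(u_0,\cdot )\), which the sketch integrates with a classical fourth-order Runge-Kutta scheme. The amplitude, step size and evaluation points are illustrative assumptions. The output suggests that \(m(u_0,v)\approx \epsilon ^2 v/8\). In other words, the mass gained on any interval of fixed length is \(O(\epsilon ^2)\), while the total mass grows without bound.

```python
import math

EPS = 0.1  # hypothetical amplitude: Phi(v) = EPS * sin(v) on C_{u0}, with u0 = 0


def dphi_dv(v):
    """d_v phi on C_{u0}, from r = v/2 and r*phi = (EPS/2)(1 - cos v)."""
    if v < 1e-6:
        return EPS / 2  # limit of the formula below as v -> 0 (the axis)
    return EPS * (math.sin(v) / v - (1.0 - math.cos(v)) / v**2)


def mass_rhs(v, m):
    """d_v m = (1 - 2m/r) r^2 (d_v phi)^2 / (2 d_v r), with d_v r = 1/2 and r = v/2."""
    if v < 1e-12:
        return 0.0  # r = 0 on the axis, where m = 0 as well
    r = v / 2
    return (1.0 - 2.0 * m / r) * r**2 * dphi_dv(v) ** 2


def hawking_mass(v_end, h=0.02):
    """Integrate the mass equation from m = 0 on the axis (v = 0) to v = v_end with RK4."""
    n = max(1, round(v_end / h))
    h = v_end / n
    m = 0.0
    for i in range(n):
        v = i * h
        k1 = mass_rhs(v, m)
        k2 = mass_rhs(v + h / 2, m + h * k1 / 2)
        k3 = mass_rhs(v + h / 2, m + h * k2 / 2)
        k4 = mass_rhs(v + h, m + h * k3)
        m += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
    return m


for v_end in (100 * math.pi, 200 * math.pi, 400 * math.pi):
    m = hawking_mass(v_end)
    print(f"v = {v_end:8.1f}   m = {m:.4f}   m / v = {m / v_end:.6f}")
```

In this model computation every stretch of the data looks the same, so each interval of fixed length contributes roughly the same small amount of mass. This is the sense in which the data are “spread out”.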

We now briefly describe some generalizations and consequences of Theorem 1.1, but we refer the reader to Sections 1.1 and 1.2 for more details. First, while Theorem 1.1 itself does not show that the solution “decays” while approaching timelike infinity (Footnote 6), if we assume in addition a sufficiently strong asymptotic flatness condition, then we can apply the results in [9] to show that the solution satisfies a pointwise inverse polynomial decay rate. In fact, Theorem 1.1 also gives the first examples of solutions with large initial BV norms which satisfy the assumptions of the conditional decay result in [9]. As a consequence of the pointwise decay, (a subclass of) these solutions are also stable with respect to small but not necessarily spherically symmetric perturbations [10]. See further discussion in Section 1.1.

Second, a consequence of the proof of Theorem 1.1 is the construction of a large data spacetime solution which is causally geodesically complete both to the future and to the past. This is achieved by making use of the uniformity of the estimates of Theorem 1.1 in \(u_0\) and taking the limit \(u_0\rightarrow -\infty \). We refer the reader to Theorem 1.8 in Section 1.2 for a precise statement.

Before turning to further discussions of our results, we note that the problem of constructing large data global solutions to supercritical nonlinear wave equations has attracted much recent attention. We refer the reader to [1, 7, 11, 12] and the references therein for some recent results. The ideas in the work [11], in particular, are inspired by the monumental work of Christodoulou [5] in general relativity on the formation of trapped surfaces, which is itself a semi-global (Footnote 7) large data result. Our result appears to be the first in which the large data solutions are global both to the future and to the past. As an example of the robustness of our methods, we also consider the much simpler supercritical semilinear equation

$$\begin{aligned} \Box _{\mathbb R^{1+3}}\phi =\pm \phi ^7. \end{aligned}$$(1.10)

We show that (1.10) admits solutions which have infinite \(\dot{H}^{\frac{7}{6}}\times \dot{H}^{\frac{1}{6}}\) norms for all time and are global both to the future and to the past (see Theorem A.1), thus extending (Footnote 8) the results of Krieger-Schlag [7] and Beceanu-Soffer [1].
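The exponents \(\frac{7}{6}\) and \(\frac{1}{6}\) are dictated by scaling. If \(\phi \) solves \(\Box _{\mathbb R^{1+d}}\phi =\pm \phi ^p\), then so does \(\phi _a(t,x)=a^{2/(p-1)}\phi (at,ax)\). The \(\dot{H}^s\) norm of \(\phi _a(0,\cdot )\) equals \(a^{2/(p-1)+s-d/2}\) times that of \(\phi (0,\cdot )\), and the same power appears for \(\partial _t\phi _a(0,\cdot )\) in \(\dot{H}^{s-1}\). The invariant (critical) choice is therefore \(s_c=d/2-2/(p-1)\). The following small exact-arithmetic sketch records this bookkeeping; the helper names are ours.

```python
from fractions import Fraction


def critical_regularity(d, p):
    """s_c = d/2 - 2/(p - 1): the Sobolev exponent invariant under the scaling of Box phi = +-phi^p on R^{1+d}."""
    return Fraction(d, 2) - Fraction(2, p - 1)


def scaling_power(d, p, s):
    """Power k with ||phi_a(0)||_{H^s-dot} = a^k ||phi(0)||_{H^s-dot} for phi_a(t, x) = a^(2/(p-1)) phi(a t, a x)."""
    return Fraction(2, p - 1) + s - Fraction(d, 2)


s_c = critical_regularity(3, 7)
print(s_c, s_c - 1)  # prints: 7/6 1/6, i.e. data in H^(7/6)-dot x H^(1/6)-dot
print(scaling_power(3, 7, s_c))  # prints: 0
```

Since \(s_c=\frac{7}{6}>1\), the equation is energy-supercritical, whereas the quintic case \(p=5\) in three dimensions gives \(s_c=1\).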

Quantitative decay rates and nonlinear stability

In general, the solutions that are constructed in Theorem 1.1 may not exhibit uniform decay in v. Nevertheless, in this section we show that if one imposes the following strong asymptotic flatness condition on the \(C^1\) initial data:

for \(\omega '>1\), then in fact the solution decays (Footnote 9) in v.

To see this, we apply the result in [9] by the first two authors. In [9], the long time asymptotics of spherically symmetric, causally geodesically complete solutions to the Einstein-scalar-field system were studied. It was shown (see Theorems 3.1 and 3.2 and Remark 3.9 in [9]) that sharp pointwise inverse polynomial decay bounds hold for the solution even for large initial data, as long as the solution is assumed to satisfy the bound (Footnote 10)

for some \(p>1\), \(R>0\) and \(C>0\). Here, and below, we use the convention that \(C_u\) denotes a constant u curve.

In [9], it was further proved, using the work of Christodoulou [4], that the pointwise decay results hold if the initial data obey (1.11) and have small BV norm. On the other hand, the work [9] leaves open the question of whether there exist any solutions with large BV norm that satisfy both (1.11) and (1.12). Our present work provides a construction of such spacetimes. More precisely, we have

Theorem 1.6

Assume, in addition to the assumptions of Theorem 1.1, that \(\Phi \) obeys the following bounds for some \(A_0>0\) and \(\omega '>1\):

Then the following decay estimates hold for \(\omega :=\min \{\omega ', 3\}\) and for some \(A_1>0\):

Proof

By Theorems 3.1 and 3.2 and Remark 3.9 in [9], the desired decay rates hold if for some \(p>1\), \(R>0\) and \(C>0\), we have

This latter bound indeed holds (for any \(p>1\) and any \(R>0\)) in view of the estimates (1.8) and (1.9) in Theorem 1.1. \(\square \)

Remark 1.7

As already noted in [9], the decay rates that are obtained in Theorem 1.6 are sharp.

Given these decay rates, it seems natural to ask whether the solutions constructed in Theorem 1.6 are stable with respect to small perturbations, even outside spherical symmetry. This question will be addressed in a forthcoming paper [10], in which we answer it in the affirmative (Footnote 11), thus extending the proof of the nonlinear stability of Minkowski spacetime to a more general class of dispersive spacetimes.

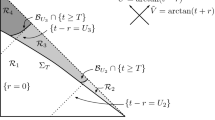

Large data solutions which are both future and past complete

Theorem 1.1 constructs future causally geodesically complete solutions to the future of the hypersurface \(C_{u_0}\). On the other hand, the a priori estimates in Theorem 1.1 are independent of \(u_0\). One can therefore (Footnote 12) take \(u_0\rightarrow -\infty \) and obtain solutions to (SSESF) for \((u,v)\in \{(u, v) : -\infty< u< \infty , \ u \le v < \infty \}\). The solutions constructed in this manner are moreover causally geodesically complete both towards the future and the past. More precisely, we have the following theorem:

Theorem 1.8

Let \(\Phi :\mathbb R\rightarrow \mathbb R\) be a smooth function such that (1.7) holds for some \(\gamma >0\), i.e.,

and

Then for every \(\gamma >0\), there exists \(\epsilon >0\) such that there exists a solution to (SSESF) which is both future and past causally geodesically complete and obeys

The proof of global existence in a suitable double null coordinate system will be carried out in Section 4. Future and past causal geodesic completeness of the solution will be proved in Section 6.

We contrast Theorem 1.8 with the works [11, 12] on large solutions to nonlinear wave equations. In [11, 12], the key idea, inspired by [5], is to construct solutions which are large but “sufficiently outgoing”. For instance, on an initial Cauchy hypersurface \(\{t=0\}\), this means that \(\partial _v\phi \) is appropriately small, while \(\partial _u\phi \) is allowed to be large. This approach, while useful to obtain a global solution to the future, does not seem applicable to construct solutions which are global both to the future and to the past as in Theorem 1.8. On the other hand, we also note that the work [11] allows the initial data to be large in a compact region in space, whereas in Theorem 1.8, the “largeness” of the initial data is only achieved by the lack of decay at infinity.

Outline of the paper

We end the introduction with an outline of the remainder of the paper. In Section 2, we will discuss some preliminaries, including the geometric setup and some identities that we will repeatedly use. The main theorem (Theorem 1.1) will then be proved in Section 3, modulo the assertion that the resulting spacetime is future causally geodesically complete. Then using the estimates obtained in Section 3, we will prove Theorem 1.8 in Section 4, again modulo causal geodesic completeness. In Section 5, we will then return to the proof of Corollary 1.4. In Section 6, we finally complete the proof of Theorems 1.1 and 1.8 by establishing the causal geodesic completeness statements. Lastly, in “Appendix A”, we will apply the methods in this paper to study the equation \(\Box _{\mathbb R^{1+3}}\phi =\pm \phi ^7\), and show that there exist solutions, global to the future and the past, which have infinite critical \(\dot{H}^{\frac{7}{6}} \times \dot{H}^{\frac{1}{6}}\) norm and infinite critical Strichartz norm.

Preliminaries

In this section, we further explain the geometric setup of the problem and introduce the notation that we will use for the rest of the paper.

Setup

As discussed in the introduction, (SSESF) arises as a reduction of the (3+1)-dimensional Einstein-scalar-field equation under spherical symmetry, written in a double null coordinate system. Here we describe (SSESF) as a (1+1)-dimensional system, which is the point of view we adopt in our analysis throughout this paper until Section 6.

Consider the \((1+1)\)-dimensional domain

$$\begin{aligned} \mathcal Q := \{ (u, v) \in \mathbb R^{1+1} : u \le v \} \end{aligned}$$

with partial boundary

$$\begin{aligned} \Gamma := \{ (u, v) \in \mathbb R^{1+1} : u = v \}. \end{aligned}$$

We define causality in \(\mathcal Q\) with respect to the ambient metric \(m = - \mathrm {d}u \cdot \mathrm {d}v\) of \(\mathbb R^{1+1}\), and the time orientation in \(\mathcal Q\) so that \(\partial _{u}\) and \(\partial _{v}\) are future pointing. We use the notation \(C_{u}\) and \(\underline{C}_{v}\) for constant u and v curves in \(\mathcal Q\), respectively. We call \(C_{u}\) an outgoing null curve and \(\underline{C}_{v}\) an incoming null curve, in reference to their directions (to the future) relative to \(\Gamma \). Moreover, given \(- \,\infty< u_{0}< u_{1} < \infty \), let

$$\begin{aligned} \mathcal Q_{[u_{0}, u_{1}]} := \mathcal Q \cap \{ (u, v) : u_{0} \le u \le u_{1} \}. \end{aligned}$$

We introduce the notion of a \(C^{k}\) solution to (SSESF) as follows.

Definition 2.1

Let \(- \infty< u_{0}< u_{1} < \infty \). We say that a triple \((r, \phi , m)\) of real-valued functions on \(\mathcal Q_{[u_{0}, u_{1}]}\) is a \(C^{k}\) solution to (SSESF) if it satisfies this system of equations and the following conditions hold:

(1) The following functions are \(C^{k}\) in \(\mathcal Q_{[u_{0}, u_{1}]}\):

$$\begin{aligned} \partial _{u} r, \ \partial _{v} r, \ \phi , \ \partial _{v}(r \phi ), \ \partial _{u}(r \phi ). \end{aligned}$$

(2) For \(\partial _{v} r\) and \(\partial _{u} r\), we have

$$\begin{aligned} \inf _{\mathcal Q_{[u_{0}, u_{1}]}} \partial _{u} r> -\infty , \quad \inf _{\mathcal Q_{[u_{0}, u_{1}]}} \partial _{v} r > 0. \end{aligned}$$

(3) For each point \((a, a) \in \Gamma \cap \mathcal Q_{[u_{0}, u_{1}]}\), the following boundary conditions hold:

$$\begin{aligned} r (a, a) =&0, \end{aligned}$$(2.1)

$$\begin{aligned} m(a, a) =&0. \end{aligned}$$(2.2)

Moreover, if \((r, \phi , m)\) is a \(C^{k}\) solution on \(\mathcal Q_{[u_{0}, u_{1}]}\) for every \(u_{1}\) greater than \(u_{0}\), then we say that it is a global \(C^{k}\) solution on \(\mathcal Q_{[u_{0}, \infty )}\).

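The boundary condition (2.1) can be combined with the regularity assumption to deduce higher order boundary conditions for r and \(r \phi \). More precisely, let \((r, \phi , m)\) be a \(C^{k}\) solution on \(\mathcal Q_{[u_{0}, u_{1}]}\). Since \(u = v\) on \(\Gamma = \{ r = 0\}\), so that \(\partial _{u} + \partial _{v}\) is tangent to \(\Gamma \), we have

$$\begin{aligned} (\partial _{u} + \partial _{v})^{\ell } r (a, a) = 0, \quad (\partial _{u} + \partial _{v})^{\ell } (r \phi ) (a, a) = 0 \end{aligned}$$(2.3)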

for every \(\ell = 0, \ldots , k\) and \((a, a) \in \Gamma \cap \mathcal Q_{[u_{0}, u_{1}]}\).

Consider the characteristic initial value problem for (SSESF) with data

$$\begin{aligned} 2 \partial _{v} (r \phi ) (u_{0}, v) = \Phi (v) \end{aligned}$$(2.4)

and initial gauge condition (Footnote 13)

$$\begin{aligned} \partial _{v} r (u_{0}, v) = \frac{1}{2} \end{aligned}$$(2.5)

on some outgoing null curve \(C_{u_{0}}\). This problem is locally well-posed for \(C^{k}\) data \((k \ge 1)\) in the following sense: given any \(C^{k}\) data \(\Phi \) with \(k \ge 1\), there exists a unique \(C^{k}\) solution to (SSESF) on \(\mathcal Q_{[u_{0}, u_{1}]}\) for some \(u_{1} > u_{0}\), where \(u_{1}\) depends only on \(u_{0}\) and the \(C^{k}\) norm of \(\Phi \). We omit the proof, which is a routine iteration argument using, for instance, the equations stated in Section 2.2 below.

Remark 2.2

The system (SSESF) is invariant under reparametrizations of the form \((u, v) \mapsto ( U(u), V(v) )\); this is the gauge invariance of (SSESF). Note that we have implicitly fixed a gauge in the setup above, by requiring that \(u = v\) on \(\Gamma \) and imposing the initial gauge condition (2.5).

Remark 2.3

As discussed in the introduction, reduction of the Einstein-scalar-field system under spherical symmetry yields the above (1+1)-dimensional setup, where \(\Gamma \) corresponds to the axis of symmetry \(\{r = 0\}\). Furthermore, the boundedness of the function \(\nabla ^{\alpha } r \nabla _{\alpha } r = 1 - \frac{2m}{r}\) on \(\Gamma \) translates to the boundary condition \(m = 0\) on \(\Gamma \). Conversely, any suitably regular solution \((r, \phi , m)\) on \(\mathcal Q_{0} \subseteq \mathcal Q\) gives rise to a spherically symmetric (3+1)-dimensional solution \((g, \phi )\) to the Einstein-scalar-field system on \(\mathcal M= \mathcal Q_{0} \times \mathbb S^{2}\), where g is as in the introduction.

Remark 2.4

Finally, although it is stated slightly differently, it can be checked that the notion of \(C^{1}\) solution in [9] is equivalent to the present definition.

Structure of (SSESF)

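Following [4], we introduce the shorthands

$$\begin{aligned} \lambda := \partial _{v} r, \quad \nu := \partial _{u} r, \quad \mu := \frac{2m}{r}. \end{aligned}$$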

These dimensionless quantities will play an important role in this paper, as they encode key geometric information about the spacetime.

In what follows, we will rewrite (SSESF) using normalized derivatives \(\lambda ^{-1} \partial _{v}\) and \(\nu ^{-1} \partial _{u}\) instead of \(\partial _{v}\) and \(\partial _{u}\). Unlike \(\partial _{v}\) and \(\partial _{u}\), these normalized derivatives are invariant under reparametrizations of v and u. Moreover, it turns out that writing (SSESF) in such a form leads to decoupling of the evolutionary equations under mild assumptions on the quantities \(\lambda \), \(\nu \) and \(\mu \), which is convenient for analysis; see Remark 2.5 below for a more detailed discussion.

The wave equation for \(\phi \), in terms of \(\lambda ^{-1} \partial _{v} \phi \) and \(\nu ^{-1} \partial _{u} \phi \), takes the form

The wave equation for r, in terms of \(\log \lambda \) and \(\log \nu \), takes the form

The equations for the Hawking mass m read

Moreover, the following Raychaudhuri equations can be derived from (SSESF):

By the wave equation for r, we also have the commutator formulae

Remark 2.5

Once we have a suitable control of the underlying geometry, namely upper and lower bounds for \(\lambda , \nu \) and \((1-\mu )\), the evolutionary equations (2.6), (2.7), (2.8) and (2.9) are essentially all decoupled from each other. This observation allows us to close bounds for \(\lambda ^{-1} \partial _{v}\) derivatives of \(r \phi \) first, and then derive bounds for other variables (such as \(\log \lambda \), \(\nu ^{-1} \partial _{u}(r \phi )\) and \(\log \nu \)) afterwards. Moreover, from (2.6), (2.8) and (2.14), it is clear that a key step in propagating the incoming waves \(\lambda ^{-1} \partial _{v} (r \phi )\) and \(\log \lambda \) is to control the factor \(\frac{2 m \nu }{(1-\mu ) r^{2}}\). Similarly, controlling \(\frac{2 m \lambda }{(1-\mu ) r^{2}}\) is important for propagating the outgoing waves \(\nu ^{-1} \partial _{u} (r \phi )\) and \(\log \nu \).

Finally, for a \(C^{k}\) solution \((r, \phi , m)\), note that the boundary conditions in (2.3) imply

for \(\ell = 0, \ldots , k\) on the axis. These equations, along with the wave equations stated above, can be used to compute \((\nu ^{-1} \partial _{u})^{k} (r \phi )\) and \((\nu ^{-1} \partial _{u})^{k-1} \log \nu \) in terms of \((\lambda ^{-1} \partial _{v})^{k} (r \phi )\), \((\lambda ^{-1} \partial _{v})^{k-1} \log \lambda \) and lower order terms.

Averaging operators and commutation with \(\lambda ^{-1} \partial _{v}\)

Observe that a number of quantities in the nonlinearity of (SSESF) are given in terms of averaging formulae. For instance, by the boundary conditions (2.1), (2.2) and the equation (2.10), we have

Motivated by these formulae, we define the s-order averaging operator \(I_{s}\) on outgoing null curves by

By pulling f outside the integral and using the fundamental theorem of calculus, we obtain the basic estimate
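Since the display defining \(I_s\) is not reproduced here, the sketch below assumes the normalization \(I_s[f](u,v)=r^{-s}(u,v)\int _u^v f(u,v')\,\partial _v(r^s)(u,v')\,\mathrm {d}v'\). This is consistent with the averaging formula for \(\phi \): for \(s=1\) and \(f=\lambda ^{-1}\partial _v(r\phi )\) it returns \(\phi \), because \(r\phi \) vanishes on the axis. Because \(\lambda >0\), the weights \(\partial _v(r^s)\) are nonnegative and integrate to \(r^s(u,v)\), so \(I_s[f]\) is a weighted average of f and \(\vert I_s[f](u,v)\vert \le \sup _{v'\in [u,v]}\vert f(u,v')\vert \). The discretization below keeps this structure exactly, since the discrete weights telescope to \(r^s\). The profile of \(\lambda \) and the test function are hypothetical.

```python
import math


def lam(v):
    """Hypothetical lambda = d_v r along one outgoing curve C_u, with the axis at v = u = 0."""
    return 0.4 + 0.1 * math.sin(3.0 * v)


def averaging(f, s, v_end, n=4000):
    """Discrete I_s[f](v_end) = r^{-s} * sum_i f(midpoint_i) * (r_{i+1}^s - r_i^s).

    r is obtained by integrating lam from the axis (where r = 0) with the trapezoid rule.
    The weights r_{i+1}^s - r_i^s are nonnegative (lam > 0) and sum to r(v_end)^s.
    """
    h = v_end / n
    r_prev, total = 0.0, 0.0
    for i in range(n):
        a, b = i * h, (i + 1) * h
        r_next = r_prev + 0.5 * h * (lam(a) + lam(b))
        total += f(0.5 * (a + b)) * (r_next**s - r_prev**s)
        r_prev = r_next
    return total / r_prev**s


def f(v):
    """Arbitrary test function with sup |f| = 1.3."""
    return math.cos(5.0 * v) + 0.3


for s in (1, 2, 3.5):
    # I_s[f] stays within sup |f|; averaging the constant 1 returns 1.
    print(s, averaging(f, s, 7.0), averaging(lambda v: 1.0, s, 7.0))
```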

The averaging operator \(I_{s}\) turns out to obey a nice differentiation formula with respect to \(\lambda ^{-1} \partial _{v}\).

Lemma 2.6

For any real number \(s \ge 1\), the following identity holds.

Proof

In what follows, we will often omit writing u, which is fixed throughout the proof. Making the change of variable \(\rho = r^{s}(u, v)\) so that

we may rewrite \(I_{s}[f]\) and \(\lambda ^{-1} \partial _{v} I_{s}[f]\) as

where we abuse notation and write \(I_{s}[f](\rho ) = I_{s}[f](v(\rho ))\), \(f(\rho ') = f(v(\rho '))\) etc. Note that

The previous identity follows quickly by, say, making a further change of variables \(\sigma ' = \rho ' / \rho \). Plugging this in the expression for \(\lambda ^{-1} \partial _{v} I_{s}[f]\) and changing the variable back to v, we arrive at (2.20). \(\square \)
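As a consistency check, the sketch below tests numerically the identity that the change of variables above yields under the normalization \(I_s[f](u,v)=r^{-s}\int _u^v f\,\partial _v(r^s)\,\mathrm {d}v'\). This normalization is an assumption, since the defining display and (2.20) itself are not reproduced here; under it, the identity reads \(\lambda ^{-1}\partial _v I_s[f]=\frac{s}{s+1}I_{s+1}[\lambda ^{-1}\partial _v f]\). Because \(\lambda ^{-1}\partial _v\) is simply \(\partial _r\) along \(C_u\), the same identity also follows from an integration by parts in r. The profile of \(\lambda \) (with the axis at v = 0) and the test function f are hypothetical, and the left-hand side is approximated by a central difference.

```python
import math


def lam(v):
    """Hypothetical lambda = d_v r > 0 on one outgoing curve with the axis at v = 0."""
    return 0.4 + 0.1 * math.sin(3.0 * v)


def r(v):
    """r = int_0^v lam, so that r = 0 on the axis."""
    return 0.4 * v + (1.0 - math.cos(3.0 * v)) / 30.0


def f(v):
    return math.cos(2.0 * v) + v**2  # arbitrary smooth test function


def lam_inv_dv_f(v):
    return (-2.0 * math.sin(2.0 * v) + 2.0 * v) / lam(v)


def simpson(g, a, b, n=2000):
    """Composite Simpson rule on [a, b] with an even number n of panels."""
    h = (b - a) / n
    total = g(a) + g(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(a + i * h)
    return total * h / 3.0


def averaging(s, g, v):
    """Assumed normalization: I_s[g](v) = r(v)^{-s} * int_0^v g(w) * s r(w)^{s-1} lam(w) dw."""
    return simpson(lambda w: g(w) * s * r(w) ** (s - 1) * lam(w), 0.0, v) / r(v) ** s


def lhs(s, v, h=1e-4):
    """lambda^{-1} d_v I_s[f], by a central difference in v."""
    return (averaging(s, f, v + h) - averaging(s, f, v - h)) / (2.0 * h * lam(v))


def rhs(s, v):
    """(s / (s + 1)) * I_{s+1}[lambda^{-1} d_v f]."""
    return s / (s + 1.0) * averaging(s + 1, lam_inv_dv_f, v)


for s in (1, 2, 3):
    print(s, lhs(s, 1.7), rhs(s, 1.7))
```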

Applying Lemma 2.6 to the formulae (2.17) and (2.18), we obtain

Such differentiated averaging identities are useful near the axis. On the other hand, far away from the axis, it is more effective to simply commute \(\lambda ^{-1} \partial _{v}\) with r, as in the following identities:

An entirely analogous discussion holds with the roles of u and v interchanged. Indeed, with the definition

the following analogue of Lemma 2.6 can be proved.

Lemma 2.7

For any real number \(s \ge 1\), the following identity holds.

Forward-in-time global solution

The main goal of this section is to establish Theorem 1.1 modulo future causal geodesic completeness, which is proved in Section 6. We also formulate and prove uniform estimates for higher derivatives (Proposition 3.18), which will be useful in the proof of Theorem 1.8 in the next section.

This section is structured as follows. In Sections 3.1–3.5, we carry out the main bootstrap argument, which lies at the heart of our proof of Theorem 1.1. The proof of Theorem 1.1 is then completed in Section 3.7. Finally, in Section 3.8, we prove estimates for higher derivatives (Proposition 3.18), which are uniform with respect to the initial curve \(u_{0}\).

Bootstrap assumptions

Suppose that \((r, \phi , m)\) is a \(C^{1}\) solution to (SSESF) on \(\mathcal Q_{[u_{0}, u_{1}]}\). We introduce the following bootstrap assumptions on \(\mathcal Q_{[u_{0}, u_{1}]}\):

(1) Assumptions on the geometry.

$$\begin{aligned} \lambda> \frac{1}{3}, \quad -\frac{1}{6}> \nu> - \frac{2}{3}, \quad 1-\mu > \frac{1}{2}. \end{aligned}$$(3.1)

(2) Assumptions on the inhomogeneous part of \(\lambda ^{-1} \partial _{v} \phi \).

$$\begin{aligned} \int _{u_0}^{u} \vert \frac{2m \nu }{(1-\mu ) r^{2}} \phi (u', v)\vert \, \mathrm {d}u'&\le 2 \epsilon r_+^{-\gamma }, \end{aligned}$$(3.2)

where \(r_{+} := \max \{1, r\}\).

(3) Assumption on \((\lambda ^{-1} \partial _{v})^2 (r \phi )\).

$$\begin{aligned} \vert (\lambda ^{-1} \partial _{v})^{2} (r \phi )\vert \le 3 \epsilon . \end{aligned}$$(3.3)

Henceforth, until Section 3.5, the domain for each bound is \(\mathcal Q_{[u_{0}, u_{1}]}\) unless otherwise specified. We will use the convention that, unless otherwise stated, the constants C depend only on \(\gamma \). Moreover, we write \(A \lesssim B\) if \(A \le C B\) for some constant C depending only on \(\gamma \).

Preliminary estimates

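Recall that r vanishes on the boundary \(\Gamma \) and \(\lambda > 1/3\) by the bootstrap assumption. Moreover, \(m \ge 0\), since \(\partial _{v} m \ge 0\) and m vanishes on \(\Gamma \). Together with the bootstrap assumptions on \(\nu \) and \(1-\mu \) and the equation \(\partial _{u} \lambda = \frac{2m \nu \lambda }{(1-\mu ) r^{2}}\), this gives \(\partial _{u} \lambda \le 0\). Since \(\lambda (u_{0}, v) = \frac{1}{2}\), it follows that

$$\begin{aligned} \frac{1}{3} < \lambda \le \frac{1}{2}. \end{aligned}$$(3.4)

This bound implies that at the point (u, v) the radius r(u, v) is comparable to the difference \(v-u\) up to a constant. Indeed, integrating \(\lambda \) from the axis,

$$\begin{aligned} \frac{1}{3}(v-u) \le r(u, v) = \int _{u}^{v} \lambda (u, v') \, \mathrm {d}v' \le \frac{1}{2}(v-u). \end{aligned}$$(3.5)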

In the proof, we will frequently need estimates for integrals of powers of r. We will collect these estimates in Lemma 3.1. To this end, the notation

$$\begin{aligned} r_{+} := \max \{1, r\}, \end{aligned}$$

introduced above will be convenient.

The following lemma holds also due to (3.4) and the assumption that r vanishes on the boundary \(\Gamma \).

Lemma 3.1

Assume the bootstrap assumption on the geometry (3.1). Then for all \(k> 1\), we have

for some constant C depending only on k.

Proof

For (3.6), the case when \(r(u, v)\le 1\) is easy to verify. The lower bound for \(\lambda \) implies that

When \(r(u, v)>1\), let \(v^*\) be the unique v value such that \(r(u, v^*)=1\). Then we have

The proof for (3.7) is very similar where we make use of the bootstrap assumption on the lower bound of \(-\nu \). More precisely, for \(r(u,v)>1\), we have

On the other hand, if \(r(u,v)\le 1\), we define \(u^*\) to be the unique u value such that \(r(u^*,v)=1\). We then obtain

\(\square \)
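The displays (3.6) and (3.7) are not reproduced here, but the mechanism behind the proof of (3.6) is the change of variables \(\mathrm {d}v'=\lambda ^{-1}\mathrm {d}r\le 3\,\mathrm {d}r\) along \(C_u\), which holds under (3.1). For \(k>1\) it gives \(\int _u^v r_+^{-k}(u,v')\,\mathrm {d}v'\le 3\int _0^\infty \max \{1,\rho \}^{-k}\,\mathrm {d}\rho =\frac{3k}{k-1}\), uniformly in v. The sketch below checks this uniform bound numerically for a hypothetical profile of \(\lambda \) within the bootstrap range; the profile, the values of k and the discretization are our assumptions.

```python
import math


def lam(v):
    """Hypothetical lambda on one outgoing curve, with values in [0.35, 0.49], inside (1/3, 1/2]."""
    return 0.42 + 0.07 * math.sin(v)


def integral_r_plus(k, v_end, n=20_000):
    """Approximate int_0^{v_end} max(1, r)^(-k) dv', where r(v') = int_0^{v'} lam (axis at v' = 0)."""
    h = v_end / n
    r, total = 0.0, 0.0
    for i in range(n):
        a = i * h
        r_next = r + 0.5 * h * (lam(a) + lam(a + h))  # trapezoid update of r
        total += h * max(1.0, 0.5 * (r + r_next)) ** (-k)  # midpoint rule for the integrand
        r = r_next
    return total


for k in (1.5, 2.0, 4.0):
    bound = 3 * k / (k - 1)
    values = [integral_r_plus(k, v_end) for v_end in (10.0, 100.0, 1000.0)]
    print(k, [round(x, 3) for x in values], "bound", round(bound, 3))
```

For \(k=1\) the same integral grows like a multiple of \(\log r\), which is why the lemma is stated for \(k>1\).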

Estimate (3.6) bounds the integral from the axis to the given point (u, v) on the outgoing null hypersurface \(C_u\). It will be used if we want to control some quantity by using the data on the axis, e.g., the mass m. Estimate (3.7) controls the integral from the point \((u_0, v)\) on the initial hypersurface \(C_{u_0}\) to the given point (u, v). We will use it when we want to control the solution from the data given on \(C_{u_0}\).

Estimates for \(\phi \)

The following lemma will be crucial for many estimates to follow.

Lemma 3.2

For any \(u_{1} \le u_{2}\), we have

Hence, under the bootstrap assumptions (3.1)–(3.3), we have

Proof

Equation (3.8) is an immediate consequence of the equation (2.8). Then (3.9) follows from (3.1) and (3.4). \(\square \)

We now derive estimates for the scalar field \(\phi \) and its \(\lambda ^{-1} \partial _{v}\) derivatives.

Proposition 3.3

Under the bootstrap assumptions (3.1)–(3.3), we have the following estimates for the scalar field:

Here the implicit constants depend only on \(\gamma \).

Proof

By (2.6), we have the integral formula

Then using Lemma 3.2 and the bootstrap assumption (3.2), we have

Recalling that \(\lambda ^{-1} \partial _{v} (r \phi )(u_{0}, v) = \Phi (v)\), the desired estimate (3.10) follows.

Once we have estimate (3.10), we can then use the averaging formula (2.17) to control the scalar field \(\phi \):

Here we have used the condition (1.7) and the relation (3.5) to estimate the integral of \(\Phi (v')\). The inequality

follows from the fact that \(r_+=\max \{1,r\}\), \(r\ge 0\), \(0<\gamma <1\).

Estimate (3.10) for \(\partial _v(r\phi )\) and estimate (3.11) together with (2.23) give us the following bound for \(\lambda ^{-1} \partial _{v} \phi \):

Such an estimate is favorable in the region far away from the axis.

On the other hand, using the differentiated averaging formula (2.21) and the bootstrap assumption (3.3), we are able to show that \(\partial _{v}\phi \) is uniformly bounded near the axis, which completes the proof of (3.12):

Finally, (3.13) follows from the commutation formula

as well as the bootstrap assumption (3.3) and estimate (3.12). \(\square \)

Estimates for the Hawking mass

Once we have estimates for \(\partial _v\phi \), we can derive bounds for the mass m.

Proposition 3.4

Under the bootstrap assumptions (3.1)–(3.3), we have

We remark that the gain of the positive power in r is crucial to close the bootstrap assumptions on the nonlinearity near the axis. Indeed, as a quick consequence of (3.16), we have

for all \(0\le k\le 3\), where the constant C depends only on k and \(\gamma \).

Proof

Recall that \(\frac{1}{2} \le 1-\mu \le 1\) by the bootstrap assumption (3.1). From estimates (3.10)–(3.12), we can show that

Here we have used the condition (1.7) to control the integral of \(|\Phi (v')|\). \(\square \)
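The following sketch shows where the positive power of \(r\) near the axis comes from. It rests on two assumptions. First, the mass equation of (SSESF) takes the form \(\partial_v m = \frac{1}{2}(1-\mu)\, r^{2}\lambda^{-1}(\partial_v\phi)^{2}\), which is the equation recalled in Step 3 of the proof of Corollary 1.4 below, with the normalization assumed here. Second, \(m = r = 0\) on the axis. Writing \(\lambda^{-1}(\partial_v\phi)^2 = \lambda\,(\lambda^{-1}\partial_v\phi)^2\) and using \(\lambda = \partial_v r > 0\) and \(0 \le 1-\mu \le 1\), we get:

```latex
\begin{aligned}
0 \le m(u,v) &= \frac{1}{2}\int_{u}^{v} (1-\mu)\, r^{2}\lambda\,
  \big(\lambda^{-1}\partial_{v}\phi\big)^{2}(u,v')\,\mathrm{d}v' \\
&\le \frac{1}{2}\,\sup_{v' \in [u,v]} \big|\lambda^{-1}\partial_{v}\phi\big|^{2}(u,v')
  \int_{u}^{v} r^{2}\,\partial_{v} r\,\mathrm{d}v'
 = \frac{1}{6}\,\sup_{v' \in [u,v]} \big|\lambda^{-1}\partial_{v}\phi\big|^{2}(u,v')\; r(u,v)^{3}.
\end{aligned}
```

Consequently \(2m/r^{2} \le \frac{1}{3}\sup|\lambda^{-1}\partial_v\phi|^{2}\, r\). A uniform bound \(|\lambda^{-1}\partial_v\phi| \lesssim \epsilon\) near the axis, as (3.12) presumably provides, then yields \(2m/r^{2} \lesssim \epsilon^{2} r\).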

We also derive estimates for \(\lambda ^{-1} \partial _{v}\) of 2m and \(2m / r^{2}\), which will be needed for closing the bootstrap assumption for \((\lambda ^{-1} \partial _{v})^{2} (r \phi )\).

Proposition 3.5

Under the bootstrap assumptions (3.1)–(3.3), we have

An important point is that \(\lambda ^{-1} \partial _{v} \Big ( \frac{2m}{r^{2}} \Big )\) is uniformly bounded near the axis; this will follow from the differentiated averaging formula (2.22).

Proof

Estimate (3.19) is a simple consequence of the equation (2.10), as well as the bootstrap assumption (3.1) and estimate (3.12).

To establish (3.20), we begin by showing that

which is acceptable in the region \(\{r \le 1\}\) near the axis. Using the differentiated averaging formula (2.22), we have

To estimate the last line, we expand

Then by (3.12), (3.13), (3.19) and the fact that \(\mu \ge 0\), it follows that the absolute value of the preceding expression is uniformly bounded by \(\lesssim \epsilon ^{2}\). Hence (3.21) is proved.

In order to complete the proof of (3.20), it suffices to prove

which is favorable in the region \(\{r \ge 1\}\) away from the axis. In this case, recall that by (2.24), we have

The desired estimate (3.22) now follows from (3.16) and (3.19). \(\square \)

Closing the bootstrap assumptions

The purpose of this subsection is to improve the bootstrap assumptions (3.1)–(3.3), using the estimates for the scalar field in Proposition 3.3 and the bounds for the mass in Propositions 3.4 and 3.5. Combined with the local well-posedness of (SSESF) for \(C^{1}\) solutions, this yields global existence of the solution.

We begin by improving the bootstrap assumption (3.1) on the geometry. A corollary of Proposition 3.4 is that \(\mu = \frac{2m}{r}\) is small for sufficiently small \(\epsilon \); this improves the bootstrap assumption on \(1-\mu \).

Corollary 3.6

Under the bootstrap assumptions (3.1)–(3.3), we have

for some constant C depending only on \(\gamma \).

To close the bootstrap argument for \(\lambda \), as well as for (3.2) below, a key role is played by the following lemma.

Lemma 3.7

Under the bootstrap assumptions (3.1)–(3.3), we have

for some constant C depending only on \(\gamma \).

Proof

The desired estimate follows from (3.1) and (3.16) in Proposition 3.4. \(\square \)

With Lemma 3.7, we can immediately prove an improved bound for \(\lambda \).

Proposition 3.8

Under the bootstrap assumptions (3.1)–(3.3), we have

for some constant C depending only on \(\gamma \).

Proof

By integrating (2.8) and using (3.24), we can show that

where we have used Lemma 3.1 on the last line. Recalling our initial gauge condition that \(\lambda =\frac{1}{2}\) on the initial hypersurface \(C_{u_0}\), (3.25) follows. \(\square \)

In order to estimate \(\nu \), the following lemma is needed.

Lemma 3.9

Under the assumption (1.7) on the initial data, for all \(u<v^*<v\) we have

for some constant C depending only on \(\gamma \).

Proof

Let

for \(s\ge v^*\). Then \(F'(s)=|\Phi (s)|\). From the assumption (1.7), we have

Therefore we can show that

as desired. \(\square \)
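The mechanism behind Lemma 3.9 is an integration by parts that trades the weight for the primitive \(F\). In the following sketch we assume \(F(v^*) = 0\), so that \(F(s) = \int_{v^*}^{s}|\Phi(s')|\,\mathrm{d}s'\), consistent with \(F' = |\Phi|\). We also let \(w \ge 0\) be a hypothetical \(C^1\), non-increasing weight on \([v^*, v]\). Then

```latex
\int_{v^*}^{v} |\Phi(s)|\, w(s)\,\mathrm{d}s
 = \big[F(s)\,w(s)\big]_{s=v^*}^{s=v} - \int_{v^*}^{v} F(s)\,w'(s)\,\mathrm{d}s
 = F(v)\,w(v) + \int_{v^*}^{v} F(s)\,|w'(s)|\,\mathrm{d}s .
```

Any growth bound on \(F\) coming from (1.7) can therefore be inserted directly on the right-hand side, where it is paired with the decay of \(w\).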

Using the above lemma, we now estimate \(\nu \).

Proposition 3.10

Under the bootstrap assumptions (3.1)–(3.3), we have

for some constant C depending only on \(\gamma \).

Proof

We rely on the Raychaudhuri equation (2.12), from which we obtain the following representation for \(\nu \):

To control the integral on the right-hand side, define \(v^*\) to be the unique v value such that \(r(u, v^*)=1\). We then divide the integral into the regions \([u, v^*]\) and \([v^*, v]\). By (1.7), (3.12) and (3.26), we have

Here we have used estimate (3.7) to bound the integral of \(r_+^{-1-2\gamma }\). Using also the following identities on the axis \(\Gamma \)

we can bound \(\nu \) as follows:

for some constant C depending only on \(\gamma \). Moreover, since the last term in (3.28) is non-negative, we have the trivial bound

Then from Corollary 3.6 and estimate (3.25) in Proposition 3.8, we have

for some constant C depending only on \(\gamma \). Estimate (3.27) in the proposition then follows. \(\square \)

Next, we establish an estimate for the inhomogeneous part of \(\lambda ^{-1} \partial _{v} (r \phi )\), which improves the bootstrap assumption (3.2). The key ingredient is Lemma 3.7.

Proposition 3.11

Under the bootstrap assumptions (3.1)–(3.3), we can show that

for some constant C depending only on \(\gamma \).

Proof

Using Lemma 3.7 and (3.11), we may estimate

where we have used estimate (3.7) to bound the integral. \(\square \)

It remains to close the bootstrap assumption (3.3) for \((\lambda ^{-1} \partial _{v})^{2} (r \phi )\). In analogy with the role played by Lemma 3.7 in the preceding proof, this task requires a good bound on the factor

This is the subject of the following lemma.

Lemma 3.12

Under the bootstrap assumptions (3.1)–(3.3), we have

Proof

Estimate (3.30) is an immediate consequence of the Raychaudhuri equation (2.12), the bootstrap assumption (3.1) and estimate (3.12). Estimate (3.31) then follows from (3.16), (3.20) and the preceding bound. \(\square \)

We are ready to prove an improved estimate for \((\lambda ^{-1} \partial _{v})^{2} (r \phi )\).

Proposition 3.13

Under the bootstrap assumptions (3.1)–(3.3), we have

for some constant C depending only on \(\gamma \).

Proof

Commuting \(\lambda ^{-1} \partial _{v}\) with the equation (2.6) for \((\lambda ^{-1} \partial _{v}) \phi \), we arrive at the equation

Hence we have

By (3.9) and the bootstrap assumption (3.1), the integration factor is bounded by

Combined with the initial condition \(\vert \lambda ^{-1} \partial _{v} \Phi \vert \le \epsilon \), we see that the contribution of the data on \(C_{u_{0}}\) is acceptable. Hence, using (3.34) again, recalling the definition of \(N_{2}(u, v)\) and using Leibniz’s rule, it only remains to establish

uniformly in \(u \in [u_{0}, v]\) and v. This bound is an immediate consequence of the Leibniz rule, (3.10), (3.12), (3.24) and (3.31). \(\square \)
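The integral formula used in this proof, and again in Proposition 3.14, is an instance of the integrating-factor (Duhamel) formula for a linear transport equation in \(u\). In the schematic form below, \(X\) stands for the unknown at fixed \(v\). The symbols \(A\) and \(N\) are hypothetical placeholders for the coefficient and the inhomogeneity, not the paper's notation.

```latex
\begin{aligned}
\partial_{u} X = -A\,X + N
\quad\Longrightarrow\quad
X(u,v) &= e^{-\int_{u_{0}}^{u} A(u'',v)\,\mathrm{d}u''}\, X(u_{0},v)
 + \int_{u_{0}}^{u} e^{-\int_{u'}^{u} A(u'',v)\,\mathrm{d}u''}\, N(u',v)\,\mathrm{d}u', \\
|X(u,v)| &\le e^{\int_{u_{0}}^{u} |A(u'',v)|\,\mathrm{d}u''}
 \Big( |X(u_{0},v)| + \int_{u_{0}}^{u} |N(u',v)|\,\mathrm{d}u' \Big).
\end{aligned}
```

A uniform bound on \(\int |A|\,\mathrm{d}u\), which is presumably the role of (3.9), therefore reduces the estimate to the data on \(C_{u_0}\) and the inhomogeneous term.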

By Corollary 3.6 and Propositions 3.8, 3.10, 3.11 and 3.13, there exists a constant \(0< \epsilon _{1} < 1\) (depending only on \(\gamma \)) such that the bootstrap assumptions (3.1)–(3.3) for \(\mathcal Q_{[u_{0}, u_{1}]}\) are improved if \(\epsilon \le \epsilon _{1}\). Then by a standard continuity argument, the \(C^{1}\) solution \((r, \phi , m)\) exists globally on \(\mathcal Q_{[u_{0}, \infty )}\) and satisfies the bootstrap bounds (3.1)–(3.3) as well as the estimates derived in this section so far.
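The logic of the continuity argument can be illustrated on a scalar toy model. The model is an assumption of this sketch and is not part of (SSESF):

\(y' = y^2/(1+t)^2\), \(y(0) = \epsilon\).

The integrable weight \((1+t)^{-2}\) plays the role of the integrable decay used above. Suppose the bootstrap assumption \(|y| \le 2\epsilon\) holds on \([0,T]\). Integrating then gives

\(|y(t)| \le \epsilon + 4\epsilon^2\int_0^\infty (1+s)^{-2}\,\mathrm{d}s = \epsilon + 4\epsilon^2\).

This is strictly less than \(2\epsilon\) once \(\epsilon < 1/4\), so the assumption is improved and propagates to all times. The code integrates the toy equation with classical RK4 and compares the result with the closed-form solution \(1/y = 1/\epsilon - t/(1+t)\).

```python
def rhs(t: float, y: float) -> float:
    # Toy nonlinearity: quadratic in y, weighted by the integrable factor (1 + t)^(-2)
    return y * y / (1.0 + t) ** 2


def exact(eps: float, t: float) -> float:
    # Closed form: d/dt (1/y) = -(1 + t)^(-2), hence 1/y = 1/eps - t/(1 + t)
    return 1.0 / (1.0 / eps - t / (1.0 + t))


def rk4(eps: float, t_end: float = 200.0, h: float = 0.02):
    # Classical fourth-order Runge-Kutta for y' = rhs(t, y), y(0) = eps.
    # Returns the final time, the final value and the running maximum of |y|.
    t, y, y_max = 0.0, eps, abs(eps)
    for _ in range(int(round(t_end / h))):
        k1 = rhs(t, y)
        k2 = rhs(t + h / 2, y + h * k1 / 2)
        k3 = rhs(t + h / 2, y + h * k2 / 2)
        k4 = rhs(t + h, y + h * k3)
        y += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        t += h
        y_max = max(y_max, abs(y))
    return t, y, y_max


eps = 0.1
bootstrap_bound = 2 * eps          # assumed: |y| <= 2 eps on [0, T]
improved_bound = eps + 4 * eps**2  # implied: strictly below 2 eps when eps < 1/4
t_end, y_end, y_max = rk4(eps)
print(f"y(T) = {y_end:.8f}, exact = {exact(eps, t_end):.8f}, max|y| = {y_max:.5f}")
```

The toy model deliberately has an explicit solution, so the numerical maximum can be checked against the bootstrap bound rather than merely assumed.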

In the remainder of this section, we require that \(\epsilon \le \epsilon _{1}\) and take \((r, \phi , m)\) to be such a global \(C^{1}\) solution obeying (3.1)–(3.3).

Estimate for \(\partial _{v}\) derivatives of r and \(\phi \)

Let \((r, \phi , m)\) be the global \(C^{1}\) solution constructed above obeying (3.1)–(3.3); we assume furthermore that \(\epsilon< \epsilon _{1} < 1\). Here we show that \((r, \phi , m)\) obeys the estimates for \(\partial _{v}^{2} (r \phi )\) and \(\partial _{v}^{2} r\) stated in Theorem 1.1; see Corollary 3.15.

We start by establishing an estimate for \(\lambda ^{-1} \partial _{v} \log \lambda \), which follows from essentially the same estimates used in Proposition 3.13.

Proposition 3.14

For the global solution we have constructed for (SSESF), we have

where the implicit constant depends only on \(\gamma \).

Proof

Taking \(\lambda ^{-1} \partial _{v}\) of the equation for \(\partial _{u} \log \lambda \), we obtain

Note furthermore that \(\partial _{v} \log \lambda (u_{0}, v)= 0\), due to the initial gauge condition \(\lambda = \frac{1}{2}\). Hence

As before, the integration factor can be bounded using (3.9) in Lemma 3.2 and the bound (3.1) on the geometry, i.e.,

Therefore it only remains to prove

uniformly in u, which in turn is a quick consequence of (3.31). \(\square \)

Since \(\lambda ^{-1} \partial _{v} \log \lambda = \lambda ^{-2} \partial _{v} \lambda \), the previous proposition gives an estimate for \(\partial _{v} \lambda \). In turn, this estimate can be used to bound \(\partial _{v}^{2}(r \phi )\); indeed

and we have estimates (3.3) and (3.10) for \((\lambda ^{-1} \partial _{v})^{2} (r \phi )\) and \(\lambda ^{-1} \partial _{v} (r \phi )\), respectively. We record these bounds in the following corollary.

Corollary 3.15

For the global solution we have constructed for (SSESF), we have

where the implicit constant depends only on \(\gamma \).
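The identities behind Corollary 3.15 follow from the chain rule. Below, \(X = \lambda^{-1}\partial_v(r\phi)\) is a shorthand used only here, and we use \(\partial_v\lambda = \lambda^{2}\,\lambda^{-1}\partial_v\log\lambda\):

```latex
\begin{aligned}
\partial_{v}^{2} r &= \partial_{v}\lambda = \lambda^{2}\,\lambda^{-1}\partial_{v}\log\lambda , \\
\partial_{v}^{2}(r\phi) &= \partial_{v}(\lambda X) = (\partial_{v}\lambda)\,X + \lambda^{2}\,\lambda^{-1}\partial_{v}X
 = \lambda^{2}\Big( (\lambda^{-1}\partial_{v})^{2}(r\phi)
 + \big(\lambda^{-1}\partial_{v}\log\lambda\big)\,\lambda^{-1}\partial_{v}(r\phi) \Big).
\end{aligned}
```

Bounds on the following quantities therefore transfer directly to \(\partial_v^2 r\) and \(\partial_v^2(r\phi)\):

- \(\lambda\), from (3.25);
- \(\lambda^{-1}\partial_v\log\lambda\), from Proposition 3.14;
- \(\lambda^{-1}\partial_v(r\phi)\) and \((\lambda^{-1}\partial_v)^2(r\phi)\), from (3.10) and (3.3).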

Estimates for \(\partial _u\) derivatives of r and \(\phi \)

As before, let \(\epsilon< \epsilon _{1} < 1\) and take \((r, \phi , m)\) to be a \(C^{1}\) solution obeying (3.1)–(3.3) on \(u \in [u_{0}, \infty )\). We now derive estimates for the outgoing wave \(\nu ^{-1} \partial _{u}(r \phi )\) and \((\nu ^{-1} \partial _{u})^{2}(r \phi )\), as well as \(\nu ^{-1} \partial _{u} \log \nu \).

Proposition 3.16

For the global solution we have constructed for (SSESF), we have the following estimates for the \(\partial _u\) derivatives of r and \(\phi \):

for some constant C depending only on \(\gamma \).

Proof

We start with (3.36). By the boundary condition \((\lambda ^{-1} \partial _{v} - \nu ^{-1} \partial _{u}) (r \phi ) (u, u) = 0\) and (3.10), it follows that

Similar to the case of \(\lambda ^{-1}\partial _v(r\phi )\), the equation for \(\partial _{v}(\nu ^{-1} \partial _{u} (r \phi ) )\) leads to the following integral formula for \(\nu ^{-1}\partial _u(r\phi )\):

For any \(v_1<v_2\), we have the identity

Then from the bound (3.1), we derive

Here we used estimate (3.16) to control \(\frac{2m}{r^{2}}\), (3.11) for \(\vert \phi \vert \) and (3.6) of Lemma 3.1 to bound the integral.
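The boundary condition on the axis used at the start of this proof follows from \(r = 0\) on \(\Gamma\), assuming that \(\phi\) is \(C^1\) up to \(\Gamma\) and that \(\lambda, \nu \ne 0\) there:

```latex
\partial_{v}(r\phi) = \lambda\,\phi + r\,\partial_{v}\phi = \lambda\,\phi ,
\qquad
\partial_{u}(r\phi) = \nu\,\phi + r\,\partial_{u}\phi = \nu\,\phi
\qquad \text{on } \Gamma .
```

Thus \(\lambda^{-1}\partial_v(r\phi) = \phi = \nu^{-1}\partial_u(r\phi)\) on \(\Gamma\). In particular, the value of \(\nu^{-1}\partial_u(r\phi)\) on the axis is controlled by (3.10).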

Next, we turn to \(\nu ^{-1} \partial _{u} \phi \). Simply by commuting \(\nu ^{-1} \partial _{u}\) with r, we have

Hence by (3.11) and (3.36), we obtain

which is favorable away from the axis.

To obtain the uniform boundedness of \(\nu ^{-1} \partial _u \phi \) near the axis, one option is to use an averaging formula for \(\nu ^{-1} \partial _{u} \phi \) (as in the proof of (3.12) for \(\lambda ^{-1} \partial _{v} \phi \)), which relies on proving a bound for \((\nu ^{-1} \partial _{u})^{2} (r \phi )\). Alternatively, using the bound that we already have for \(\lambda ^{-1}\partial _v\phi \), we can derive the desired uniform bound of \(\nu ^{-1} \partial _u\phi \) near the axis from the previous estimate (3.36). Indeed, commuting r and \(\partial _{u}\) in estimate (3.42), we can show that

Here we have used the equation (SSESF) on the geometry \(\partial _v\nu =\partial _v\partial _u r\), the estimate for the integral of \(mr^{-2}\phi \), which has been carried out in the previous estimate (3.36), and (3.12) for \(\lambda ^{-1} \partial _{v} \phi \). Dividing by r on both sides, it follows that

which proves (3.37).

Finally, we prove the bounds (3.38) and (3.39) for \((\nu ^{-1} \partial _{u})^{2} (r \phi )\) and \(\nu ^{-1} \partial _{u} \log \nu \), respectively. As before, one may proceed in analogy with the cases of \((\lambda ^{-1} \partial _{v})^{2} (r \phi )\) (Proposition 3.13) and \(\lambda ^{-1} \partial _{v} \log \lambda \) (Proposition 3.14), using averaging formulae near the axis and commutation of r and \(\nu ^{-1} \partial _{u}\) far away. However, for the sake of simplicity, we take a more direct route here, exploiting the bound (3.37) that is already closed.

We begin by bounding the data for \((\nu ^{-1} \partial _{u})^{2} (r \phi )\) and \(\nu ^{-1} \partial _{u} \log \nu \) on the axis. By the regularity of \((r, \phi , m)\), it follows that

By the boundary condition (2.1), the wave equations for \(\phi \) and r and the estimates proved so far, we see that the mixed derivative terms involving \((\lambda ^{-1} \partial _{v}) (\nu ^{-1} \partial _{u})\) and \((\nu ^{-1} \partial _{u}) (\lambda ^{-1} \partial _{v})\) vanish. By (3.3) and (3.35), it follows that

Next, a direct computation shows that

These lead to integral formulae for \((\nu ^{-1} \partial _{u})^{2} (r \phi )\) and \(\nu ^{-1} \partial _{u} \log \nu \), with integrating factors that are bounded by (3.41). Using (3.1), (3.16) and (3.37), we may estimate

as well as

This completes the proof of estimates (3.38) and (3.39). \(\square \)

By an argument similar to that for Corollary 3.15, we obtain the following bounds on \(\partial _{u}^{2}(r \phi )\) and \(\partial _{u}^{2} r\) from Proposition 3.16.

Corollary 3.17

For the global solution we have constructed for (SSESF), we have

where the implicit constant depends only on \(\gamma \).

This completes the proof of the second order derivative bounds for \(r \phi \) and r stated in Theorem 1.1.

Estimates for higher derivatives of r and \(\phi \)

Finally, we derive estimates for higher derivatives of r and \(\phi \), which are uniform with respect to the choice of the initial null curve \(C_{u_{0}}\). These estimates require an additional \(C^{2}\) regularity assumption on the initial data \(\Phi \), as well as possibly a smaller choice of \(\epsilon \), depending only on \(\gamma \). They will be crucial for passing to the limit \(u_{0} \rightarrow - \infty \) in the next section.

Given \(u_{0} \in \mathbb R\) and \(\Phi \) satisfying (1.7) with \(\epsilon \le \epsilon _{1}\), let \((r, \phi , m)\) be the global \(C^{1}\) solution to (SSESF) that we have constructed earlier. Suppose furthermore that \(\Phi \) belongs to \(C^{2}\). By a routine persistence of regularity argument, it follows that \(\log \lambda \), \(\log \nu \), \(\lambda ^{-1} \partial _{v}(r \phi )\) and \(\nu ^{-1} \partial _{u} (r \phi )\) are \(C^{2}\) on their domain; in short, we will say that \((r, \phi , m)\) is a global \(C^{2}\) solution to (SSESF). Our goal is to show that, by taking \(\epsilon \) smaller if necessary, the \(C^{2}\) norm of these variables obeys a uniform bound independent of \(u_{0}\). A more precise statement is as follows.

Proposition 3.18

Given \(v_{0} \in [u_{0}, \infty )\), let

where \(\lambda _{0} = \frac{1}{2}\), and let \(\mathcal D(u_{0}, v_{0})\) be the domain of dependence of the curve \(\{u_{0}\} \times [u_{0}, v_{0}]\), i.e.,

There exists a constant \(\epsilon _{2} > 0\), which is independent of \(u_{0}, v_{0}\) and A, such that if \(\epsilon \le \epsilon _{2}\) then we have the uniform bounds

with an implicit constant independent of \(u_{0}\), \(v_{0}\), A and \(\epsilon \).

We begin by establishing (3.44) and (3.45). As in the proofs of Propositions 3.13 and 3.14, the key step is to bound

To achieve this end, we need a few preliminary estimates for \(\lambda ^{-1} \partial _{v}\) derivatives of \(\phi \) and m. The ensuing computation is somewhat tedious, but the principle is simple: We rely on the differentiated averaging formulae (see Lemma 2.6) to derive estimates which are favorable near the axis \(\{r = 0\}\), whereas we simply commute r with \(\lambda ^{-1} \partial _{v}\) in the region \(\{r \gtrsim 1\}\) away from the axis.

Lemma 3.19

For the global \(C^{2}\) solution considered above, the following estimates hold.

Proof

Since it is rather routine, we will only sketch the proof of each estimate, specifying the relevant computation and previous bounds needed.

Estimate (3.49) follows directly from applying \((\lambda ^{-1}\partial _{v})^{2}\) to the averaging formula (2.17) for \(\phi \) via Lemma 2.6 and bounding the resulting terms using (2.19).

For (3.50), we simply commute r with \((\lambda ^{-1} \partial _{v})^{3}\) to arrive at the formula

from which (3.50) follows using (3.49).

For (3.51), we compute

then use (3.12), (3.13) and (3.19) to estimate the right-hand side.

To prove (3.52), we first use Lemma 2.6 to take \((\lambda ^{-1} \partial _{v})^{2}\) of the averaging formula (2.18), which leads to

Expanding the right-hand side, we obtain the formula

Then the desired estimate follows using (3.12), (3.13), (3.19), (3.49), (3.50) and (3.51).

For (3.53), we commute \(r^{2}\) with \(\lambda ^{-1} \partial _{v}\) and obtain

Then the desired estimate follows from (3.16), (3.19) and (3.51).

Finally, for (3.54), we first compute

and then use (3.1), (3.12), (3.13) to estimate the right-hand side. \(\square \)

As an immediate consequence of Lemma 3.19, we have

These can be combined into a single, slightly weaker but more convenient bound as follows.

Corollary 3.20

For the global \(C^{2}\) solution considered above, we have

We are ready to establish (3.44) and (3.45). The proofs are similar to those of Propositions 3.13 and 3.14, respectively.

Proof of (3.44) and (3.45)

We first prove (3.44). Commuting \((\lambda ^{-1} \partial _{v})^{2}\) with the equation (2.6) using (2.14), we obtain

As in the proof of Proposition 3.13, we may derive an integral formula for \((\lambda ^{-1} \partial _{v})^{3} (r \phi )\), where the integration factor is uniformly bounded by (3.9). Then we have

For \((u, v) \in \mathcal D(u_{0}, v_{0})\), we claim that

Once (3.56) is proved, the desired estimate (3.44) follows by taking \(\epsilon > 0\) sufficiently small and absorbing the term \(\epsilon ^{2} \sup _{\mathcal D(u_{0}, v_{0})} \vert (\lambda ^{-1} \partial _{v})^{3} (r \phi )\vert \) into the left-hand side.
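The absorption step is where the restriction to the domain of dependence \(\mathcal D(u_0, v_0)\) matters. This set is presumably the bounded triangle \(\{u_0 \le u \le v \le v_0\}\), and the solution is \(C^2\) up to the axis. The supremum \(S = \sup_{\mathcal D(u_0,v_0)} |(\lambda^{-1}\partial_v)^3(r\phi)|\) is therefore finite a priori.

Suppose, schematically, that (3.56) leads to \(S \le C_0 + C\epsilon^2 S\), with \(C\) independent of \(u_0\) and \(v_0\). Here \(C_0\) and \(C\) are placeholder names used only in this sketch. Then

```latex
C\epsilon^{2} \le \tfrac{1}{2}
\quad\Longrightarrow\quad
S \le \frac{C_{0}}{1 - C\epsilon^{2}} \le 2\,C_{0}.
```

Without the a priori finiteness of \(S\), the inequality \(S \le C_0 + C\epsilon^2 S\) would carry no information.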

To establish (3.56), we first expand \(N_{3}\) as

Then using (3.3), (3.10), (3.11), (3.12), (3.24), (3.31) and (3.55), the desired estimate (3.56) follows.

Next, to establish (3.45), we first commute \((\lambda ^{-1} \partial _{v})^{2}\) with the equation (2.8) using (2.14) to obtain

Since \((\lambda ^{-1} \partial _{v})^{2} \log \lambda (u_{0}, v) = 0\) thanks to the initial gauge condition \(\lambda (u_{0}, v) = \frac{1}{2}\), we have

where we again used (3.9) to bound the integration factor. By (3.31), (3.35) and (3.55), as well as (3.44) that we just proved, we have

which proves (3.45). \(\square \)

It remains to prove (3.46) and (3.47). This can be done by an argument similar to the proofs of (3.44) and (3.45), with the roles of u and v interchanged. To avoid repetition, we only sketch the argument.

Sketch of proof of (3.46) and (3.47)

As in Lemma 3.19, we can prove that

Proceeding as in the proofs of Lemmas 3.7, 3.12 and Corollary 3.20, we also obtain

Furthermore, since \(\partial _{v} r\), \(\partial _{u} r\), \(\partial _{v}(r \phi )\) and \(\partial _{u}(r \phi )\) are \(C^{2}\) up to the axis \(\Gamma = \{u = v\}\), we have

Then by the wave equations for r and \(\phi \), as well as (3.44)–(3.45), we obtain

Commuting \((\nu ^{-1} \partial _{u})^{2}\) with (2.7) and (2.9), estimating the initial data at \(v = u\) by the preceding bounds and estimating the inhomogeneous terms using the earlier bounds, the desired estimates (3.46) and (3.47) follow as in the proofs of (3.44) and (3.45). \(\square \)

Forward- and backward-in-time global solution

The goal of this section is to deduce Theorem 1.8 from Theorem 1.1 and Proposition 3.18. The proofs of the causal geodesic completeness assertions are again postponed to Section 6.

Let \(\Phi \) be a \(C^{2}\) function on \(\mathbb R\) satisfying the hypothesis of Theorem 1.8. Define also (see Footnote 14)

where \(\lambda _{0} = \frac{1}{2}\). Consider a sequence \(u_{n} \in \mathbb R\) tending to \(-\infty \) as \(n \rightarrow \infty \). For each \(n = 1, 2, \ldots \), let \((r^{(n)}, \phi ^{(n)}, m^{(n)})\) be the solution of (SSESF) with \(\lambda ^{(n)} \upharpoonright _{C_{u_{n}}} = \partial _{v} r^{(n)} \upharpoonright _{C_{u_{n}}} = \frac{1}{2}\) and

Let \(\epsilon > 0\) be sufficiently small (depending on \(\gamma > 0\)), so that Theorem 1.1 applies to each solution \((r^{(n)}, \phi ^{(n)}, m^{(n)})\). Our aim now is to show that \((r^{(n)}, \phi ^{(n)}, m^{(n)})\) tends to a solution \((r, \phi , m)\) that obeys the conclusions of Theorem 1.8.

As a consequence of Theorem 1.1, Proposition 3.18 and the estimates (3.12), (3.37), (3.49) and (3.57), for \(\epsilon >0\) sufficiently small we have the uniform bounds

Uniform bounds for a corresponding number of mixed derivatives follow from the wave equations for \(\phi \) and r. Using the equations for m (see also the bounds (3.16), (3.19) and (3.51)), it also follows that the \(C^{2}\) norm of \(m^{(n)}\) is uniformly bounded on any compact subset of \(\mathcal Q_{[u_{n}, \infty )}\). Hence by the Arzela-Ascoli theorem, there exists a limit \((r, \phi , m)\) on \(\mathcal Q\) such that \(r = m = 0\) on \(\Gamma \), and

on every compact subset \(\Omega \subseteq \mathcal Q\). By this convergence, it is clear that \((r, \phi , m)\) solves (SSESF) in the classical sense. Moreover, the a priori bounds we have proved in the finite \(u_{0}\) case (e.g., (1.8) and (1.9)) still hold for the limiting solution \((r, \phi , m)\), as long as they are uniform in \(u_{0}\).

It remains to justify that the limiting solution \((r, \phi , m)\) assumes \(\Phi \) as the data on the past null infinity. More precisely, we claim that

for every \(v \in (-\infty , \infty )\).

Recalling the proof of Proposition 3.8, for any \(u \ge u_{n}\) we have

Taking the limit \(n \rightarrow \infty \) first and then letting \(u \rightarrow -\infty \), we obtain the desired statement for \(\lambda \). Similarly, proceeding as in the proof of Proposition 3.11,

where we recall that \(\lambda ^{(n)}(u_{n}, v) = \frac{1}{2}\). Taking the limits \(n \rightarrow \infty \) and \(u \rightarrow -\infty \) in order as before, we obtain (4.1).

Remark 4.1

As a byproduct of the Arzela-Ascoli theorem, observe that \(\partial _{v}^{2} (r \phi )\), \(\partial _{u}^{2} (r \phi )\), \(\partial _{v}^{2} r\) and \(\partial _{u}^{2} r\) are Lipschitz. Their weak derivatives obey the bounds

Proof of Corollary 1.4

In this section, we prove Corollary 1.4, i.e., we show that the initial data \(\Phi \) can be chosen to satisfy the assumptions of Theorem 1.1 while at the same time having infinite BV norm and infinite Bondi mass.

Proof of Corollary 1.4

Let \(\chi :\mathbb R\rightarrow [0,1]\) be a non-negative smooth bump function such that \(\chi \) is compactly supported in [1, 6] and \(\chi (x)=1\) for \(x\in [3,4]\). For some \(\epsilon '>0\) to be fixed later, let \(\Phi \) be defined by the following sum of translated bump functions:

We will show that \(\Phi \) satisfies the assumptions of Theorem 1.1 and have infinite BV norm and initial Bondi mass.

Step 1: Verifying (1.7). Since \(k\ge 3\), for every v at most one term in the sum (5.1) is non-zero. Therefore, for every \(\epsilon >0\), one can choose \(\epsilon '>0\) sufficiently small such that \(|\Phi (v)|+|\Phi '(v)|\le \epsilon \). This gives the second condition in (1.7).

Fix any \(\gamma >0\). Given u and v such that \(u_0\le u\le v\), we consider two cases. If \(v-u\le 5\), then we just use the bound

If \(v-u>5\), we use the fact that the supports of at most \((\log _2 \lfloor v-u \rfloor )+100\) bumps intersect the interval (u, v). Since \(\int _{-\infty }^{\infty } \chi (x) \, \mathrm {d}x\le 5\), we thus have

for some \(C_{\gamma }>0\) depending only on \(\gamma \) as long as \(\gamma <1\). In both cases, the first condition in (1.7) is satisfied after choosing \(\epsilon '\) to be sufficiently small depending on \(\epsilon \) and \(\gamma \).

Step 2: Infinite BV norm. We now show that \(\Phi \) gives rise to data with infinite BV norm. To this end, one observes that \(\int _{-\infty }^\infty |\chi '(x)| \, \mathrm {d}x\ge c_{\chi }\) for some \(c_{\chi }>0\). Hence

Step 3: Infinite Bondi mass. Finally, we prove that the data have infinite initial Bondi mass, i.e., the limit of the Hawking mass as \(v\rightarrow \infty \) is infinite. We first recall that the Hawking mass obeys the following equation

i.e.,

This implies

To compute the limit as \(v\rightarrow \infty \), we first write \(\partial _v\phi \) in terms of \(\Phi \). We then show that with the choice of \(\Phi \) in (5.1), \(r\frac{(\partial _v\phi )^2}{\lambda }\) is integrable, while \(r^2\frac{(\partial _v\phi )^2}{\lambda }\) is not, thus demonstrating that \(\lim _{v\rightarrow \infty } m(u_0,v)=\infty \).

To compute \(\partial _v\phi \), we note that

In other words, using also the following condition on \(C_{u_0}\)

we get

Therefore, for some \(C>0\) independent of \(\epsilon '\), we have

On the last line, we used the bound \(\int _{u_{0}}^{v'} \Phi (v'') \, \mathrm {d}v'' \lesssim \epsilon ' (1 + \log (v' - u_{0} + 2))\), as well as the fact that \(\Phi (v) = 0\) for \(u_{0} \le v \le u_{0} + 9\) by definition. Moreover, in a similar manner, we have (see Footnote 15)

Therefore, by (5.2), we obtain

for some \(0<c<C\). On the other hand, by an argument similar to the proof that the BV norm is infinite, we get

Combining (5.4) and (5.5) gives

as \(v\rightarrow \infty \), as is to be proved. \(\square \)
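The properties of \(\Phi\) used in Steps 1 to 3 can be checked numerically on a concrete model. The displayed formula (5.1) is not reproduced in this section, so the sketch makes a hypothetical choice:

\(\Phi(v) = \epsilon' \sum_{k\ge 3} \chi(v - u_0 - 2^k)\), truncated at a finite \(k\).

This choice is consistent with the facts used above: at most one non-zero term at each \(v\), \(\Phi = 0\) on \([u_0, u_0+9]\), and logarithmically many bumps in an interval. The bump \(\chi\) is built from the standard smooth function \(e^{-1/t}\). The code checks three things:

- at most one bump is active at each \(v\);
- the number of bumps meeting \((u,v)\) grows like \(\log_2(v-u)\);
- each bump adds \(2\epsilon'\) to the total variation.

By disjointness of the supports, each bump also adds \(\epsilon'^2\int\chi^2\) to \(\int\Phi^2\), which is roughly the quantity driving Step 3.

```python
import math


def _psi(t: float) -> float:
    # exp(-1/t) for t > 0 and 0 otherwise: smooth, vanishing to all orders at t = 0
    return math.exp(-1.0 / t) if t > 0 else 0.0


def _step(t: float) -> float:
    # Smooth non-decreasing transition: 0 for t <= 0 and 1 for t >= 1
    a, b = _psi(t), _psi(1.0 - t)
    return a / (a + b)


def chi(x: float) -> float:
    # Smooth bump with values in [0, 1], supported in [1, 6], equal to 1 on [3, 4]
    return _step((x - 1.0) / 2.0) * _step((6.0 - x) / 2.0)


U0 = 0.0  # hypothetical value of u_0


def Phi(v: float, eps_prime: float = 1e-2, k_max: int = 12) -> float:
    # HYPOTHETICAL model of (5.1): eps' * sum_{k=3}^{k_max} chi(v - u_0 - 2^k)
    return eps_prime * sum(chi(v - U0 - 2**k) for k in range(3, k_max + 1))


def active_bumps(v: float, k_max: int = 12) -> int:
    # Number of translated bumps that are non-zero at v (Step 1 needs at most one)
    return sum(1 for k in range(3, k_max + 1) if chi(v - U0 - 2**k) > 0)


def bumps_meeting(u: float, v: float, k_max: int = 12) -> int:
    # Bump k lives in [u_0 + 2^k + 1, u_0 + 2^k + 6]; count those meeting (u, v)
    return sum(1 for k in range(3, k_max + 1)
               if U0 + 2**k + 1 < v and U0 + 2**k + 6 > u)


def total_variation(f, a: float, b: float, h: float) -> float:
    # Discrete total variation sum |f(x_{i+1}) - f(x_i)| on a uniform grid
    n = int(round((b - a) / h))
    vals = [f(a + i * h) for i in range(n + 1)]
    return sum(abs(vals[i + 1] - vals[i]) for i in range(n))


def riemann_integral(f, a: float, b: float, h: float) -> float:
    # Left Riemann sum; adequate for a smooth, compactly supported integrand
    n = int(round((b - a) / h))
    return h * sum(f(a + i * h) for i in range(n))


def phi_variation(k_max: int, eps_prime: float = 1e-2) -> float:
    # Total variation of the truncated Phi over [u_0, u_0 + 2^k_max + 7]
    return total_variation(lambda v: Phi(v, eps_prime, k_max),
                           U0, U0 + 2**k_max + 7, 1e-2)


tv_chi = total_variation(chi, 0.0, 7.0, 1e-3)                     # one rise plus one fall
l2_chi = riemann_integral(lambda x: chi(x) ** 2, 0.0, 7.0, 1e-3)  # lies in [1, 5]

for k_max in (5, 8):
    # The variation grows linearly in the number of bumps, i.e. without bound
    print(k_max, phi_variation(k_max), 2 * 1e-2 * (k_max - 2))
```

The truncation at `k_max` is only for numerics. The linear growth of `phi_variation` in `k_max` is the divergence of the BV norm, while the logarithmic count in `bumps_meeting` is what keeps the first condition in (1.7) intact.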

Remark 5.1

We note that for the data given by (5.1) in the proof of Corollary 1.4, the global solution that arises from the data (which exists by Theorem 1.1) in fact has infinite BV norm on each \(C_{u}\), as well as infinite Bondi mass everywhere along future null infinity. More precisely, for every \(u\ge u_0\), we have

To establish the infinitude of the BV norm, note first that by (3.33) and (3.34), we have

where

Note furthermore that \(\Phi \) as given by (5.1) satisfies the assumptions of Theorem 1.1 with any \(\gamma \in (0,1)\). Using estimates (3.10), (3.11), (3.12), (3.24) and (3.31), as well as exploiting the explicit form of \(\vert \Phi (v)\vert \) in (5.1), it can be shown that

On the other hand, since \(\lim _{v \rightarrow \infty } \int _{u}^{v} \vert (\lambda ^{-1} \partial _{v}) \Phi (v')\vert \, \mathrm {d}v' = \infty \) as in the proof of Corollary 1.4, the desired conclusion follows.

Next, to see that the Bondi mass is infinite everywhere along future null infinity, we again apply Theorem 1.1, but now with \(\gamma > \frac{1}{2}\). Then according to (2.23), (3.4), (3.11), (3.14) and (3.25), it can be seen, similarly to the proof of Corollary 1.4, that the main contribution to the Bondi mass is given by \(\lim _{v\rightarrow \infty }\int _{u}^v \Phi ^2(u,v') \, \mathrm {d}v'\). Therefore, one can argue as in the proof of Corollary 1.4 to show that the Bondi mass is infinite for every \(u\ge u_0\).

Causal geodesic completeness

In this section, we complete the proof of Theorems 1.1 and 1.8 by establishing causal geodesic completeness of the solutions constructed in Sections 3 and 4. We will first show the future causal geodesic completeness of these spacetimes. Once this is achieved, it is easy to see that the past causal geodesic completeness for solutions constructed in Theorem 1.8 can be proven in an almost identical manner. We will return to this at the end of the section.

Geodesics in \(\mathcal M\)

Let \(\gamma : I \rightarrow \mathcal M\) (where I is an interval) be a future pointing causal geodesic on the spacetime \(\mathcal M\) constructed in Theorem 1.1 or 1.8. Given any function f on \(\mathcal M\), we adopt the convention of denoting by f(s) the value of f at the point \(\gamma (s)\), i.e., \(f(s) = f(\gamma (s))\). We also write \(\dot{f}(s) = \frac{\mathrm {d}}{\mathrm {d}s} f(s)\) and \(\ddot{f}(s) = \frac{\mathrm {d}^{2}}{\mathrm {d}s^{2}} f(s)\).

In order to describe the geodesic \(\gamma \), it is convenient to use the double null coordinates \((u, v, \theta , \varphi )\) whenever possible, since then we can directly use the bounds in Theorem 1.1. Under our convention, we may write

Let us note that these are only defined away from the axis \(\Gamma \). On the other hand, it is easy to verify that in fact v(s) and u(s) can be extended to continuous functions in \(\mathcal M\). (Notice that in contrast, \(\dot{u}(s)\) and \(\dot{v}(s)\) may be discontinuous.)

As \(\gamma \) is future pointing causal, we have

We now discuss conserved quantities of a geodesic. We denote by \(\mathbf{C}^{2}\) the negative of the squared magnitude \(g(\dot{\gamma }(s), \dot{\gamma }(s))\) of the 4-velocity \(\dot{\gamma }(s)\), i.e.,

where we recall the metric

on \(\mathcal M\). The quantity \(\mathbf{C}^{2}\) is conserved (i.e., constant in s). The choice of the sign is so that \(\mathbf{C}^{2} > 0\) when \(\gamma \) is a time-like geodesic. In the double null coordinates, it takes the form

Since the spacetime \((\mathcal M, g)\) is spherically symmetric, conservation of angular momentum holds for geodesics. Let \(\Omega _{x}\), \(\Omega _{y}\), \(\Omega _{z}\) be the standard generators of the rotation group \(\mathrm {SO}(3)\) (i.e., infinitesimal rotations about the x-, y-, and z-axes). Let

It can be easily verified that \(J_{x}\), \(J_{y}\), \(J_{z}\) are conserved. We define the (conserved) total angular momentum squared as

In the double null coordinates, \(\mathbf{J}^{2}\) takes the form

This statement is an immediate consequence of the identity

which concerns only the standard sphere \(\mathbb S^{2}\). This identity, in turn, can be verified by observing that each side defines a contravariant symmetric 2-tensor on \(\mathbb S^{2}\) which is invariant under rotations (i.e., its Lie derivatives with respect to \(\Omega _{x}\), \(\Omega _{y}\), \(\Omega _{z}\) vanish) and yields 1 when tested against \(\mathrm {d}\varphi \otimes \mathrm {d}\varphi \) on the equator \(\theta = \frac{\pi }{2}\); such a tensor is clearly unique.
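The identity can also be checked numerically. The sketch uses the standard coordinate expressions of the rotation generators on \(\mathbb S^2\):

- \(\Omega_x = -\sin\varphi\,\partial_\theta - \cot\theta\cos\varphi\,\partial_\varphi\);
- \(\Omega_y = \cos\varphi\,\partial_\theta - \cot\theta\sin\varphi\,\partial_\varphi\);
- \(\Omega_z = \partial_\varphi\).

It checks that \(\sum_i \Omega_i\otimes\Omega_i\) equals the inverse round metric \(\mathrm{diag}(1, \sin^{-2}\theta)\). Next, assume that \(J_\bullet = g(\Omega_\bullet, \dot\gamma)\) and that the angular part of \(g\) is \(r^2(\mathrm d\theta^2 + \sin^2\theta\,\mathrm d\varphi^2)\). Under these assumptions, which are made here rather than quoted from the displays above, it follows that \(\mathbf J^2 = r^4(\dot\theta^2 + \sin^2\theta\,\dot\varphi^2)\). The code verifies this at random points as well.

```python
import math
import random


def rotation_generators(theta: float, phi: float):
    # (theta, phi)-components of Omega_x = y d_z - z d_y, Omega_y = z d_x - x d_z,
    # Omega_z = x d_y - y d_x on the unit sphere (valid away from the poles)
    cot = math.cos(theta) / math.sin(theta)
    return [
        (-math.sin(phi), -cot * math.cos(phi)),  # Omega_x
        (math.cos(phi), -cot * math.sin(phi)),   # Omega_y
        (0.0, 1.0),                              # Omega_z = d_phi
    ]


def sum_outer(theta: float, phi: float):
    # sum_i Omega_i (x) Omega_i as a 2x2 matrix in the (d_theta, d_phi) basis
    oms = rotation_generators(theta, phi)
    return [[sum(o[a] * o[b] for o in oms) for b in range(2)] for a in range(2)]


def angular_momentum_sq(r, theta, phi, theta_dot, phi_dot):
    # ASSUMPTION: J_i = g(Omega_i, gamma_dot), with angular part of g equal to
    # r^2 (dtheta^2 + sin^2 theta dphi^2); returns J_x^2 + J_y^2 + J_z^2
    g_tt, g_pp = r**2, r**2 * math.sin(theta) ** 2
    return sum((g_tt * om[0] * theta_dot + g_pp * om[1] * phi_dot) ** 2
               for om in rotation_generators(theta, phi))


random.seed(0)
# Random samples (r, theta, phi, theta_dot, phi_dot), kept away from the poles
samples = [(random.uniform(0.1, 5.0), random.uniform(0.2, math.pi - 0.2),
            random.uniform(0.0, 2 * math.pi), random.uniform(-3, 3), random.uniform(-3, 3))
           for _ in range(100)]
```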

By (6.1) and (6.2), we obtain the useful identity

where \(\mathbf{C}^{2}\) and \(\mathbf{J}^{2}\) are conserved.

A basic tool for studying completeness of future pointing causal geodesics is the following lemma.

Lemma 6.1

[Continuation of future pointing causal geodesics] Any future pointing causal geodesic \(\gamma : [0, s_{f}) \rightarrow \mathcal M\) can be continued past \(s_{f}\) if there exists a compact subset \(K \subseteq \mathcal M\) such that \(\{\gamma (s) \in \mathcal M: s \in [0, s_{f})\} \subseteq K\).

Proof

First, observe that it suffices to consider a future causal geodesic \(\gamma \) whose image intersects \(\mathcal M\setminus C_{u_{0}}\). Otherwise, the image of \(\gamma \) is contained in \(C_{u_{0}}\). Since the unique future pointing causal vector tangent to \(C_{u_{0}}\) is its null generator, \(\gamma \) is a radial null geodesic contained in \(C_{u_{0}}\), which is complete thanks to uniform boundedness of \(\vert \log \Omega \vert \) in (1.8).

Without loss of generality, we may assume that \(\gamma (0) \in \mathcal M\setminus C_{u_{0}}\). Since \(\gamma \) is future pointing causal, the closure of its image \(\gamma ([0, s_{f})) = \{\gamma (s) \in \mathcal M: s \in [0, s_{f})\}\) is disjoint from \(C_{u_{0}}\). Then by the compactness assumption, it follows that there exists a sequential limit point \(p \in \mathcal M\setminus C_{u_{0}}\) of \(\gamma ([0, s_{f}))\), i.e., there exists a sequence \(s_{n} \rightarrow s_{f}\) such that \(\gamma (s_{n}) \rightarrow p\).

Recall now the standard result that there exists a geodesically convex neighborhood around any non-boundary point in a smooth Lorentzian manifold. Let \(\mathcal U\) be a geodesically convex neighborhood of \(p \in \mathcal M\setminus C_{u_{0}}\). By the definition of p, there exists \(s' \in [0, s_{f})\) such that \(\gamma (s') \in \mathcal U\); since \(\gamma \) is a future pointing causal geodesic, it follows that \(\gamma ([s', s_{f})) \subseteq \mathcal U\). Then \(\gamma \) can be continued as the unique geodesic in \(\mathcal U\) passing through \(\gamma (s')\) and p. \(\square \)

Preliminary discussions

Our strategy for proving geodesic completeness is to argue by contradiction, i.e., we assume that there is a future pointing causal geodesic \(\gamma \) which is not complete and terminates at some finite time \(s_f\) and derive a contradiction (to Lemma 6.1 or otherwise). The following is the simplest case:

Lemma 6.2

If \(\gamma (s): [0, s_f)\mapsto \mathcal M\) is incomplete, then either \(\mathbf{C}\ne 0\) or \(\mathbf{J}\ne 0\).

Proof

If \(\mathbf{C}=0\) and \(\mathbf{J}=0\), then \(\gamma \) is a radial null geodesic, i.e., a spherically symmetric curve along which u or v is constant. These geodesics are complete since \(\frac{1}{\Omega ^2}\partial _u\) and \(\frac{1}{\Omega ^2}\partial _v\) are geodesic vector fields and \(|\log \Omega |\) is uniformly bounded. \(\square \)
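To make the last step concrete, here is the computation for a curve along which u and the angles are constant, as a sketch assuming the double null form \(g=-\Omega^2\,du\,dv+r^2\,d\sigma_{S^2}\) (a constant rescaling of the \(du\,dv\) term does not change the conclusion). Along such a curve the only Christoffel symbol that enters is \(\Gamma^v_{vv}=2\partial_v\log\Omega\); the components \(\Gamma^u_{vv}\), \(\Gamma^\theta_{vv}\), \(\Gamma^\varphi_{vv}\) vanish because \(g_{vv}=g_{v\theta}=g_{v\varphi}=0\).

```latex
\begin{aligned}
&\ddot v+2\,\partial_v\log\Omega\,\dot v^{2}=0
 \qquad\text{(geodesic equation with } u,\theta,\varphi \text{ constant)},\\
&\dot v=\Omega^{-2}\;\Longrightarrow\;
 \ddot v=-2\,\Omega^{-3}\,\partial_v\Omega\,\dot v=-2\,\partial_v\log\Omega\,\dot v^{2},\\
&s(v_2)-s(v_1)=\int_{v_1}^{v_2}\Omega^{2}\,dv\;\ge\;e^{-2\sup|\log\Omega|}\,(v_2-v_1).
\end{aligned}
```

Thus the affine parameter is comparable to v and tends to infinity as \(v\to\infty\); the constant-v case is the same computation with u and v interchanged.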

Before proceeding to the other cases, we need some preliminary considerations. First, we will see that some difficulties arise because the \((u,v,\theta ,\varphi )\) coordinate system is not regular at the axis. It is therefore useful to have the following:

Lemma 6.3

If \(\gamma (s): [0, s_f)\mapsto \mathcal M\) is incomplete, then the set \(\{s: r(s)=0\}\) is a discrete subset of \([0,s_f)\) (with a possible accumulation point at \(s_f\)).

Proof

By standard existence and uniqueness theory for ODEs, it suffices to show that the axis \(\Gamma \) is a complete geodesic. (Indeed, if \(\{s: r(s)=0\}\) is not a discrete subset of \([0,s_f)\), then the image of \(\gamma \) coincides with \(\Gamma \), which contradicts the incompleteness of \(\gamma \).) To see that \(\Gamma \) is a complete geodesic, we note that \(\lambda \upharpoonright _\Gamma =-\nu \upharpoonright _\Gamma \) and \(\partial _v\lambda \upharpoonright _\Gamma =-\partial _u\nu \upharpoonright _\Gamma \), together with \(\frac{m}{r^2}\upharpoonright _{\Gamma }=0\), imply that \(\partial _v\log \Omega \upharpoonright _\Gamma =\partial _u\log \Omega \upharpoonright _\Gamma \). An explicit calculation then shows that the vector field \(\frac{1}{\Omega }(\partial _v+\partial _u)\upharpoonright _\Gamma \), which is tangent to \(\Gamma \), is a geodesic vector field. Since \(|\log \Omega |\) is uniformly bounded, \(\Gamma \) is a complete geodesic. \(\square \)
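The explicit calculation can be sketched as follows, again under the assumed normalization \(g=-\Omega^2\,du\,dv+r^2\,d\sigma_{S^2}\) and working formally in the \((u,v)\) quotient, since the coordinates degenerate on \(\Gamma\). For a radial curve the geodesic equations reduce to \(\ddot u+2\partial_u\log\Omega\,\dot u^2=0\) and \(\ddot v+2\partial_v\log\Omega\,\dot v^2=0\) (the mixed symbols \(\Gamma^u_{uv}\), \(\Gamma^v_{uv}\), \(\Gamma^u_{vv}\), \(\Gamma^v_{uu}\) vanish, and the angular terms drop out because \(\dot\theta=\dot\varphi=0\)).

```latex
\begin{aligned}
\dot u=\dot v=\Omega^{-1}
\;&\Longrightarrow\;
\ddot u=-\Omega^{-2}\big(\partial_u\Omega\,\dot u+\partial_v\Omega\,\dot v\big)
 =-\big(\partial_u\log\Omega+\partial_v\log\Omega\big)\,\dot u^{2},\\
\ddot u+2\,\partial_u\log\Omega\,\dot u^{2}
&=\big(\partial_u\log\Omega-\partial_v\log\Omega\big)\,\dot u^{2}=0
 \qquad\text{on }\Gamma .
\end{aligned}
```

The \(\ddot v\) equation is symmetric, \(\dot u=\dot v\) keeps the curve on \(\{u=v\}\), and along \(\Gamma\) one has \(ds=\Omega\,du\), so the affine parameter is comparable to u when \(|\log\Omega|\) is bounded.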

Consider the following (smooth) quantity

where T is a smooth vector field on \(\mathcal M\) which is given in the \((u, v, \theta , \varphi )\) coordinate system by

Observe that T is radial, future time-like, and tangent to the constant r hypersurfaces, i.e., \(T r = 0\). In the \((u, v, \theta , \varphi )\) coordinates, E takes the form

In particular, this shows that away from the axis \(\Gamma \), E(s) is non-negative as \(\lambda \), \(\dot{v}\), \(-\nu \), \(\dot{u}\) are non-negative.

It will be useful to have the following slightly stronger statement:

Lemma 6.4

If \(\gamma (s): [0, s_f)\mapsto \mathcal M\) is a future causal geodesic with either \(\mathbf{J}\ne 0\) or \(\mathbf{C}\ne 0\), then \(E(s)>0\) for all \(s\in [0, s_f)\).

Proof

Case 1. \(\mathbf{J}\ne 0\). In this case, \(r(s)>0\) for \(s\in [0, s_f)\) and hence we can use the \((u,v,\theta ,\varphi )\) coordinate system. If \(E(s)=0\), then by (6.5), \(\dot{v}(s)=\dot{u}(s)=0\). By (6.3), \(0=\Omega ^2(\dot{u}\dot{v})(s)\ge r^{-2}(s)\mathbf{J}^2>0\), which is a contradiction.

Case 2. \(\mathbf{C}\ne 0\). If \(r(s)>0\), the proof proceeds in the same way as in Case 1. Suppose instead that \(r(s)=0\) and \(E(s)=0\). By Lemma 6.3 and the continuity of E, there exists a sequence \(\{s_i\}\) with \(s_i\rightarrow s\) such that \(r(s_i)>0\), \(r(s_i)\rightarrow 0\) and \(E(s_i)\rightarrow 0\). On the other hand, by (6.5), (6.3) and the boundedness of \(|\log \Omega |\), \(E(s_i)\gtrsim \sqrt{\dot{v}\dot{u}}(s_i)\gtrsim \mathbf{C}\), so \(E(s_i)\) is bounded below by a positive constant independent of i. This is again a contradiction. \(\square \)
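The lower bound \(E(s_i)\gtrsim\sqrt{\dot v\dot u}(s_i)\gtrsim\mathbf{C}\) is an instance of the arithmetic-geometric mean inequality. As a sketch, suppose that near \(s_i\) the quantity E dominates a combination \(a\dot v+b\dot u\) with coefficients \(a,b\ge c_0>0\) (the actual coefficients come from (6.5) and the bounds on \(\lambda\), \(\nu\) and \(\log\Omega\), and are not reproduced here), and that (6.3) yields \(\Omega^2\dot u\dot v\ge\mathbf{C}^2\), as its use above suggests. Then, since \(\dot u,\dot v\ge 0\),

```latex
a\,\dot v+b\,\dot u
\;\ge\; 2\sqrt{ab}\,\sqrt{\dot u\,\dot v}
\;\ge\; 2c_0\,\Omega^{-1}\,|\mathbf{C}|
\;\ge\; 2c_0\,e^{-\sup|\log\Omega|}\,|\mathbf{C}| ,
```

which is a bound independent of i and is what contradicts \(E(s_i)\to 0\).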

Recall that

Our analysis heavily relies on the evolution equations for E(s), \(\dot{r}(s)\) and \(\gamma (s)\).

Lemma 6.5

Let \(\gamma \) be a geodesic on \(\mathcal M\). If \(\gamma (s) = (u, v, \theta , \varphi )(s)\) lies outside the axis \(\Gamma \), then

Proof

In a coordinate patch, recall that the geodesic equation reads \(\ddot{\gamma }^{\lambda } = - \Gamma _{\alpha \beta }^{\lambda } \dot{\gamma }^{\alpha } \dot{\gamma }^{\beta }\). Hence in order to find the equation for \(\ddot{v}\), it suffices to compute the Christoffel symbols of the form \(\Gamma _{\alpha \beta }^{v}\). By explicit computation, it can be verified that

while all the other components vanish. Recalling the identity (6.2), the equation for \(\ddot{v}\) follows. The equation for \(\ddot{u}\) is obtained in the same way.
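The explicit computation of the symbols \(\Gamma^v_{\alpha\beta}\) can be sanity-checked numerically. The Python sketch below assumes the double null normalization \(g=-\Omega^2\,du\,dv+r^2(d\theta^2+\sin^2\theta\,d\varphi^2)\), so that \(g_{uv}=-\Omega^2/2\); the profiles `omega` and `radius` are hypothetical sample choices, not taken from the paper. It computes all Christoffel symbols by central finite differences and compares the v-components with the closed forms valid for this normalization: \(\Gamma^v_{vv}=2\partial_v\log\Omega\), \(\Gamma^v_{\theta\theta}=2r\nu/\Omega^2\), \(\Gamma^v_{\varphi\varphi}=2r\nu\sin^2\theta/\Omega^2\), with all other \(\Gamma^v_{\alpha\beta}\) vanishing. If the metric is normalized differently, the angular formulas change by a constant factor.

```python
import math


# Hypothetical sample profiles (not from the paper): any smooth Omega > 0, r > 0.
def omega(u, v):
    return math.exp(0.1 * math.sin(u) + 0.2 * math.cos(v))


def radius(u, v):
    return 1.0 + 0.5 * (v - u) + 0.1 * math.sin(u * v)


def metric(x):
    """Assumed metric g = -Omega^2 du dv + r^2 (dtheta^2 + sin^2(theta) dphi^2)
    in coordinates x = (u, v, theta, phi), returned as a 4x4 nested list."""
    u, v, theta, _ = x
    g = [[0.0] * 4 for _ in range(4)]
    g[0][1] = g[1][0] = -0.5 * omega(u, v) ** 2  # symmetric split of -Omega^2 du dv
    g[2][2] = radius(u, v) ** 2
    g[3][3] = radius(u, v) ** 2 * math.sin(theta) ** 2
    return g


def inverse(m):
    """Gauss-Jordan inversion with partial pivoting (small dense matrices)."""
    n = len(m)
    a = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
         for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda i: abs(a[i][col]))
        a[col], a[pivot] = a[pivot], a[col]
        p = a[col][col]
        a[col] = [x / p for x in a[col]]
        for i in range(n):
            if i != col:
                f = a[i][col]
                a[i] = [xi - f * xc for xi, xc in zip(a[i], a[col])]
    return [row[n:] for row in a]


def metric_derivative(x, k, h=1e-5):
    """Central-difference approximation of the partial derivative d_k g_ij."""
    xp, xm = list(x), list(x)
    xp[k] += h
    xm[k] -= h
    gp, gm = metric(xp), metric(xm)
    return [[(gp[i][j] - gm[i][j]) / (2 * h) for j in range(4)] for i in range(4)]


def christoffel(x):
    """Gamma[lam][a][b] = 1/2 g^{lam m} (d_a g_{mb} + d_b g_{ma} - d_m g_{ab})."""
    g_inv = inverse(metric(x))
    dg = [metric_derivative(x, k) for k in range(4)]  # dg[k][i][j] = d_k g_ij
    return [[[0.5 * sum(g_inv[lam][m] * (dg[a][m][b] + dg[b][m][a] - dg[m][a][b])
                        for m in range(4))
              for b in range(4)] for a in range(4)] for lam in range(4)]


if __name__ == "__main__":
    x0 = (0.3, 1.7, 1.1, 0.4)
    u, v, theta, _ = x0
    om, r = omega(u, v), radius(u, v)
    nu = -0.5 + 0.1 * v * math.cos(u * v)  # nu = d_u r for the sample r
    dv_log_omega = -0.2 * math.sin(v)       # d_v log Omega for the sample Omega
    gam_v = christoffel(x0)[1]              # coordinate index 1 is v
    print(gam_v[1][1], 2 * dv_log_omega)
    print(gam_v[2][2], 2 * r * nu / om ** 2)
    print(gam_v[3][3], 2 * r * nu * math.sin(theta) ** 2 / om ** 2)
```

Each printed pair should agree to many digits; a hand-rolled inverse keeps the sketch free of third-party dependencies.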

Next we use the equations for \(\dot{u}\), \(\dot{v}\) to derive the evolution equations for \(\dot{r}\) and E. By using the Raychaudhuri equations (2.12), (2.13), we can compute that

Here note that \(\partial _u\lambda =\partial _v \nu \). By the definition of E(s) and the equation for \(\dot{r}(s)\), the plus case leads to the equation for \(\dot{r}(s)\) while the minus case gives the equation for E(s). \(\square \)

Basic features of incomplete geodesics in \(\mathcal M\)

Now a very basic feature of an incomplete geodesic is that the quantity E(s) blows up.

Lemma 6.6

If \(\gamma (s): [0, s_f)\mapsto \mathcal M\) is incomplete with \(\mathbf{J}\ne 0\) or \(\mathbf{C}\ne 0\), then

for some constant C depending only on the constants in Theorem 1.1.

Proof

Step 1 We first claim that

\[\limsup_{s\rightarrow s_f} E(s)=\infty .\]

To see this, first note the estimate \(\dot{u}(s)+\dot{v}(s)\le CE(s)\) for some \(C>0\), which is a consequence of (6.5) and holds away from the axis. By Lemma 6.3 and the continuity of u(s) and v(s) (which also holds at the axis), we deduce that if \(\limsup \limits _{s\rightarrow s_f} E(s)\) is finite, then u and v are bounded on \([0,s_f)\). In particular, the geodesic \(\gamma \) lies in a compact subset of \(\mathcal M\). By Lemma 6.1, the geodesic can then be continued beyond \(s_f\), which contradicts the assumption.
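Spelled out, the integration in Step 1 is as follows. If \(\limsup_{s\to s_f}E(s)<\infty\), then, since E is continuous, \(E\le M\) on \([0,s_f)\) for some constant M. For fixed \(s<s_f\), Lemma 6.3 leaves only finitely many axis times in \([0,s]\); integrating \(\dot u+\dot v\le CE\) over the intervals between them and using the continuity of u and v at those times gives

```latex
u(s)+v(s)
\;=\; u(0)+v(0)+\int_0^{s}\big(\dot u+\dot v\big)(s')\,ds'
\;\le\; u(0)+v(0)+C\,M\,s_f ,
\qquad s\in[0,s_f).
```

Since \(\dot u,\dot v\ge 0\) along a future causal curve, u and v are also bounded below by their initial values, so they stay in a bounded range.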

Step 2 We next make use of the evolution equation for E(s) obtained in the previous lemma. The bounds (1.9) on \(\phi \) imply that \( r(\partial _u\phi )^2+r(\partial _v\phi )^2\) is bounded above. Therefore, for any s such that \(r(s)>0\), we have

for some constant \(C_*>0\). We now divide into two cases.

Case 1. There exists \(s_0\in [0, s_f)\) such that \(r(s)>0\) whenever \(s\ge s_0\). Let \(s_0<s_*<s_{**}<s_f\). Integrating (6.9) from \(s_*\) to \(s_{**}\), we get

Notice that this makes sense thanks to Lemma 6.4. Taking \(\liminf _{s_{**}\rightarrow s_f}\) and using Step 1, we thus obtain

for every \(s_* \in (s_0,s_f)\), as desired (see Footnote 16).
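The displays (6.9) and (6.10) are not reproduced in this copy, so the following is only a sketch of the mechanism, under the assumption that (6.9) amounts to a one-sided Riccati bound \(\dot E\le C_*E^2\) for \(s\ge s_0\). Since \(E>0\) by Lemma 6.4, one may divide by \(E^2\), and the claim of Step 1 that \(\limsup_{s\to s_f}E(s)=\infty\) means \(\liminf_{s\to s_f}E(s)^{-1}=0\):

```latex
\begin{aligned}
\frac{d}{ds}\Big(\frac{1}{E}\Big)=-\frac{\dot E}{E^{2}}\;\ge\;-C_*
\;&\Longrightarrow\;
\frac{1}{E(s_*)}\;\le\;\frac{1}{E(s_{**})}+C_*\,(s_{**}-s_*),\\
\liminf_{s_{**}\to s_f}\frac{1}{E(s_{**})}=0
\;&\Longrightarrow\;
E(s_*)\;\ge\;\frac{1}{C_*\,(s_f-s_*)} .
\end{aligned}
```

Under this assumption the blow-up rate \((s_f-s)^{-1}\) is exactly that of the model equation \(\dot E=C_*E^2\), whose blowing-up solutions are \(E(s)=\big(C_*(s_f-s)\big)^{-1}\).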

Case 2. There exists a sequence \(\{s_k\}\) with \(s_k\rightarrow s_f\) such that \(r(s_k)=0\). By Lemma 6.3, we may assume that \(\{s_k\}\) is increasing and that \(r(s)>0\) whenever \(s\notin \{s_k\}\). In this case we need to be slightly more careful, since (6.9) only holds when \(s\ne s_k\).

Let \(s_*, s_{**}\in [0,s_f)\) be such that \(s_{**}> s_{*}\ge s_2\) and let \(k_*=\min \{k : s_k\ge s_*\}\) and \(k_{**}=\max \{k : s_k\le s_{**}\}\). Assume that \(k_{**}>k_*\). We then compute

This again leads to (6.10) and gives the desired conclusion as in Case 1. \(\square \)

Another feature of incomplete future causal geodesics is that they approach the axis (at least along a sequence of times). More precisely, we have the following.

Lemma 6.7

If \(\gamma (s): [0, s_f)\mapsto \mathcal M\) is incomplete, then for any \(r_0>0\) and any \(s_0\in [0,s_f)\), there exists \(s\in [s_0, s_f)\) such that \(r(s)<r_0\).

Proof

Suppose not, i.e., assume that there exist constants \(r_0>0\) and \(s_0\in [0,s_f)\) such that \(r(s) \ge r_{0}\) for all \(s\in [s_0, s_f)\). Consider the geodesic equation (6.7) for \(\ddot{v}\). We can write

Hence, we have \(\ddot{v} (s) = - \dot{R}(s) \dot{v}(s) + F(s)\), where

It follows that

By the bounds in Theorem 1.1 (which also hold for the solutions constructed in Theorem 1.8), the conservation of \(\mathbf{C}^{2}\) and \(\mathbf{J}^{2}\), and the assumption \(r\ge r_0\), the quantities \(\vert \log \Omega \vert \) and \(\vert F\vert \) are uniformly bounded on \([s_0, s_{f})\). It follows that \(\dot{v}\) is uniformly bounded. In particular, since \(s_f<\infty\), v is uniformly bounded. Since \(u\le v\), the geodesic \(\gamma (s)\) lies in a compact subset of \(\mathcal M\), which contradicts Lemma 6.1. \(\square \)
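The step from \(\ddot v=-\dot R\dot v+F\) to the bound on \(\dot v\) is the integrating-factor (Duhamel) formula: multiplying by \(e^{R}\) gives \(\frac{d}{ds}(e^{R}\dot v)=e^{R}F\), hence \(\dot v(s)=e^{R(s_0)-R(s)}\dot v(s_0)+\int_{s_0}^{s}e^{R(s')-R(s)}F(s')\,ds'\) and \(|\dot v(s)|\le e^{2\sup|R|}\big(|\dot v(s_0)|+(s_f-s_0)\sup|F|\big)\). The definition of R is not reproduced in this copy; the sketch only uses that R and F are bounded, which is the role played in the text by the bounds on \(|\log\Omega|\) and \(|F|\). The Python code below checks the formula numerically for hypothetical sample choices of R and F (the variable `w` stands for \(\dot v\)), comparing a classical RK4 solution with the formula evaluated by Simpson's rule.

```python
import math


# Hypothetical sample coefficients; in the text R and F are built from the
# geometry along the geodesic, here they are arbitrary smooth bounded choices.
def R(s):
    return 0.3 * math.sin(2.0 * s)


def R_dot(s):
    return 0.6 * math.cos(2.0 * s)


def F(s):
    return 1.0 + 0.5 * math.cos(3.0 * s)


def rhs(s, w):
    """Right-hand side of w' = -R'(s) w + F(s), where w plays the role of v-dot."""
    return -R_dot(s) * w + F(s)


def rk4(w0, s0, s1, n=2000):
    """Classical fourth-order Runge-Kutta integration from s0 to s1."""
    h = (s1 - s0) / n
    s, w = s0, w0
    for _ in range(n):
        k1 = rhs(s, w)
        k2 = rhs(s + h / 2, w + h * k1 / 2)
        k3 = rhs(s + h / 2, w + h * k2 / 2)
        k4 = rhs(s + h, w + h * k3)
        w += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        s += h
    return w


def duhamel(w0, s0, s1, n=2000):
    """Integrating-factor formula
        w(s1) = e^{R(s0)-R(s1)} w0 + int_{s0}^{s1} e^{R(t)-R(s1)} F(t) dt,
    with the integral evaluated by composite Simpson's rule (n must be even)."""
    h = (s1 - s0) / n

    def integrand(t):
        return math.exp(R(t) - R(s1)) * F(t)

    total = integrand(s0) + integrand(s1)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * integrand(s0 + i * h)
    return math.exp(R(s0) - R(s1)) * w0 + total * h / 3


def a_priori_bound(w0, s0, s1, sup_abs_R, sup_abs_F):
    """|w(s1)| <= e^{2 sup|R|} (|w0| + (s1 - s0) sup|F|)."""
    return math.exp(2 * sup_abs_R) * (abs(w0) + (s1 - s0) * sup_abs_F)


if __name__ == "__main__":
    w_num = rk4(0.7, 0.0, 2.5)
    print(w_num, duhamel(0.7, 0.0, 2.5), a_priori_bound(0.7, 0.0, 2.5, 0.3, 1.5))
```

The point of the bound is that it depends only on \(\sup|R|\), \(\sup|F|\), the initial value and the length of the interval, which is finite for an incomplete geodesic.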

Proof of geodesic completeness

We can now rule out the case when the geodesic is spherically symmetric.

Lemma 6.8

Assume \(\gamma (s): [0, s_f)\mapsto \mathcal M\) is incomplete. Then \(\mathbf{J}\ne 0\).

Proof

Assume, for the sake of contradiction, that \(\mathbf{J}=0\), so that \(\mathbf{C}\ne 0\) by Lemma 6.2. We consider the following cases (by Lemma 6.3, they exhaust all possibilities):

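Case 1. There exists a sequence \(\{s_k\}\) with \(s_k\rightarrow s_f\) such that \(\dot{r}(s_k)=0\) and \(r(s_k)>0\). Since \(\dot r=\nu \dot u+\lambda \dot v\), the condition \(\dot r(s_k)=0\) and the bounds on \(\nu \), \(\lambda \) imply that \(\dot{u}(s_k)\) and \(\dot{v}(s_k)\) are comparable, while \(\mathbf{J}=0\) and (6.3) bound their product. Together, these imply that \(\dot{u}(s_k)\) and \(\dot{v}(s_k)\) are uniformly bounded, so \(E(s_k)\) stays bounded. This contradicts Lemma 6.6.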

Case 2. There exists \(s_0\in [0,s_f)\) such that \(\dot{r}(s)>0\) and \(r(s)>0\) for all \(s\in [s_0,s_f)\) (see Footnote 17). Then \(r(s)\ge r(s_0)>0\) on \([s_0, s_f)\), which contradicts Lemma 6.7.

Case 3. There exists \(s_0\in [0,s_f)\) such that \(\dot{r}(s)<0\) and \(r(s)>0\) for all \(s\in [s_0,s_f)\). Since \(\dot r=\nu \dot u+\lambda \dot v<0\), the bounds on \(\nu \), \(\lambda \) give \(\dot{v}\le 4 \dot{u}\). Combining this with the uniform bound on \(\dot{u}\dot{v}\) (which follows from (6.3) since \(\mathbf{J}=0\)), we obtain \(\dot v^2\le 4\dot u\dot v\lesssim 1\), so \(\dot{v}\) is uniformly bounded. In particular, since \(s_f<\infty\), v is uniformly bounded. Since \(u\le v\), the geodesic \(\gamma (s)\) lies in a compact set, which contradicts Lemma 6.1. \(\square \)

It now remains to rule out the possibility \(\mathbf{J}\ne 0\). It is convenient to note that in this case, we have \(r(s)>0\) for \(s\in [0,s_f)\). As a first step, we observe that if \(\mathbf{J}\ne 0\), then Lemma 6.5 implies that \(\ddot{u}\), \(\ddot{v}\) and \(\ddot{r}\) have a definite sign whenever \(\dot{u}\), \(\dot{v}\) are of size comparable to \(r^{-1}\) and r is sufficiently small.

Lemma 6.9

There exists \(r_0>0\) such that if \(\mathbf{J}\ne 0\) and at some time \(s_0\)

then

Proof

The lemma follows from the equations (6.7) for \(\ddot{u}\), \(\ddot{v}\), \(\ddot{r}\) together with the bounds on the geometry from Theorem 1.1. \(\square \)

Given Lemma 6.9, one sees that an incomplete geodesic with \(\mathbf{J}\ne 0\) cannot stay inside a small cylinder around the axis.

Lemma 6.10

Assume \(\gamma (s): [0, s_f)\mapsto \mathcal M\) is incomplete and \(\mathbf{J}\ne 0\). Then there exists \(r_0>0\) such that for every \(s_0\in [0,s_f)\) the geodesic \(\gamma (s)\) exits the cylinder with radius \(r_0\) at some time to the future of \(s_0\), that is, there exists \(s_1\in (s_0,s_f)\) such that \(r(s_1)> r_0\).

Proof

Since \(\mathbf{J}\ne 0\), the geodesic does not intersect the axis and in particular we can use the \((u,v,\theta ,\varphi )\) coordinate system. Let \(r_0\) be the constant in Lemma 6.9. We argue by contradiction and assume that, for some \(s_0\in [0,s_f)\), the geodesic \(\gamma (s)\) lies in the cylinder of radius \(r_0\) for all \(s\in [s_0, s_f)\).

Step 1 We claim that

\[\dot{r}(s)\le 0\quad \text{for all } s\in [s_0,s_f). \tag{6.12}\]

Otherwise, there exists \(s'\in [s_0,s_f)\) such that \(\dot{r}(s')>0\). By Lemma 6.7, r later drops below \(r(s')\), so there exists \(s''>s'\) such that \(\dot{r}(s'')<0\). Take

\[s^*=\sup \{s\in [s',s'']:\ \dot r(s)\ge 0\}.\]

Then \(\dot{r}(s^*)=0\) and \(\dot{r}(s)<0\) for \(s^*<s\le s''\). Recall that \(\dot{r}(s^*)=\nu \dot{u}(s^*)+\lambda \dot{v}(s^*)\). The identity (6.3) then implies that \(\dot{u}(s^*)\), \(\dot{v}(s^*)\) satisfy the conditions in Lemma 6.9. In particular, \(\ddot{r}(s^*)>0\); together with \(\dot r(s^*)=0\), this forces \(\dot r>0\) immediately after \(s^*\), which contradicts \(\dot{r}(s)<0\) for \(s^*<s\le s''\). Hence (6.12) holds.

Step 2 Next we prove that there exists \(t_0\in (s_0, s_f)\) such that

\[\dot v(t_0)\ge \tfrac{1}{10}\dot u(t_0). \tag{6.13}\]

Otherwise, \(\frac{1}{10}\dot{u}>\dot{v}\) for all \(s \in (s_0,s_f)\), which implies that

Integrating from time \(s_0\) to s, we derive that u is uniformly bounded. From the relation \(\dot{v}< \frac{1}{10}\dot{u}\), we derive that v is also uniformly bounded. Hence \(\gamma \) lies in a compact subset of \(\mathcal M\), which contradicts Lemma 6.1.

Step 3 Given \(t_0\) satisfying (6.13), we now show that

\[\dot v(s)\ge \tfrac{1}{10}\dot u(s)\quad \text{for all } s\in [t_0,s_f). \tag{6.14}\]

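Define

\[s^*=\sup \big\{s\in [t_0,s_f):\ \dot v(s')\ge \tfrac{1}{10}\dot u(s') \text{ for all } s'\in [t_0,s]\big\}.\]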

If \(s^*=s_f\), then (6.14) holds. Otherwise, by continuity, \(\frac{1}{10}\dot{u}(s^*)\le \dot{v}(s^*)\). Since \(\dot{r}(s) \le 0\) (by Step 1), we also have \(\dot{v}(s^*)\le 10 \dot{u}(s^*)\). The identity (6.3) then shows that \(\dot{u}(s^*)\), \(\dot{v}(s^*)\) satisfy the conditions in Lemma 6.9. In particular, \(\ddot{v}(s^*)>0\) and \(\ddot{u}(s^*)<0\), so \(\dot v-\frac{1}{10}\dot u\) is nonnegative at \(s^*\) and strictly increasing near \(s^*\). Therefore there exists \(t_1 >s^*\) such that

\[\dot v(s)\ge \tfrac{1}{10}\dot u(s)\quad \text{for all } s\in [s^*,t_1].\]

This contradicts the definition of \(s^*\). Hence the inequality (6.14) holds.

Step 4 The argument above using Lemma 6.9 also implies that \(\ddot{u}(s)<0\) and \(\dot{v}(s)\le 10 \dot{u}(s)\) for all \(s\in [t_0, s_f)\). Hence \(\dot u(s)\le \dot u(t_0)\) and \(\dot v(s)\le 10\dot u(t_0)\) there, so both \(\dot{u}\) and \(\dot{v}\) are uniformly bounded; since \(s_f<\infty\), u and v are bounded as well. Hence the geodesic \(\gamma (s)\) lies in a compact set, which contradicts Lemma 6.1 and concludes the proof of the lemma. \(\square \)

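Lemmas 6.7 and 6.10 together show that, as \(s\rightarrow s_f\), the geodesic must enter and leave the cylinder \(\{r=r_0\}\) infinitely many times. In particular, r attains an interior local minimum on infinitely many disjoint time intervals accumulating at \(s_f\), so there is a sequence \(s_n\rightarrow s_f\) with \(\dot r(s_n)=0\). However, this will be ruled out with the help of the following lemma: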

Lemma 6.11

Assume \(\gamma (s): [0, s_f)\mapsto \mathcal M\) is incomplete and \(r(s)>0\) for all \(s\in [0,s_f)\). Suppose there exists a sequence \(\{s_n\}\) with \(s_n\rightarrow s_f\) such that \(\dot{r}(s_n)=0\). Then \(\lim \limits _{n\rightarrow \infty }(\dot{u}\dot{v})(s_n)=\infty \).

Proof