Abstract

The acquisition of decision rules in multi-intuitionistic fuzzy decision information systems is both challenging and important. To address this issue, it is necessary to combine decision rules from various systems to obtain a more reliable decision rule. Additionally, the use of three-way decisions can help determine the optimal decision value. In this research article, we explore the decision problems of multi-intuitionistic fuzzy decision information systems by utilizing the D-S evidence theory and three-way decisions. We start by providing an overview of the belief structure of intuitionistic fuzzy sets. Then, we propose a fused mass function of decision rules that assists in obtaining satisfactory or optimal decision value sets through three-way decisions. To facilitate the fusion of decision value sets, we present an algorithm that effectively integrates them. Furthermore, we provide examples to illustrate the algorithm and demonstrate the effectiveness and efficiency of our proposed approach.

1 Introduction

Atanassov’s intuitionistic fuzzy set (IFS) [1] is a valuable tool for managing uncertainty and imprecision in information and knowledge. It surpasses traditional fuzzy sets by incorporating both membership and non-membership degrees, offering a more expressive framework. Numerous researchers have investigated the properties and operations of IFSs, leading to the development of various approaches for approximating IFSs using rough sets [2,3,4,5]. Despite these advancements, some previously proposed models for IFS approximation have limitations in maintaining the essential properties of IFSs, making them imperfect. Consequently, idealized approximation models preserving the properties of IFSs have been introduced in the literature [6,7,8]. Moreover, various models have gained popularity for different scenarios, such as handling arbitrary intuitionistic fuzzy (IF) binary relations, infinite universes of discourse [9, 10], and variable precision [11]. These models have been extensively quantified to measure degrees of uncertainty [12] and to discuss system reduction and decision-making processes [13] in intuitionistic fuzzy approximation spaces. In this paper, we concentrate on the development and application of IF rough sets, which have demonstrated significant potential in various fields.

Decision rules (DRs) play a pivotal role in decision-making and can be derived from various theories, such as multiple attribute decision (MAD) [14,15,16] and rough set theory [17]. While MAD focuses on identifying the optimal decision maker, rough set theory considers objects with similarity relations as a single class to determine the best decision values. Our research proposes that determining the optimal decision value for an object is the key factor in decision-making. Objects with similarity relations exhibit consistent values and performance for the same attribute, making them suitable for constructing information granules to enhance decision-making accuracy. Therefore, rough set methods are our preferred approach for addressing decision problems. However, traditional rough set theory only captures DRs from individual IF information systems (IFISs), while in practice, we often encounter situations where we need to extract underlying information and knowledge from multiple IFISs (MIFISs). Moreover, decision values for each object in different IF decision information systems (IFDISs) may vary, necessitating the fusion of DRs from multiple IFDISs (MIFDISs) to obtain an integrated decision value. In the process of decision synthesis, we need to tackle two primary challenges: Firstly, determining the uncertainty measure of each object with respect to different decision values in the fused IFDIS. Secondly, selecting suitable decision values (or value sets) for each object using the uncertainty measure. Our study aims to tackle these challenges and offer an efficient approach for decision-making using rough set-based DRs for MIFDISs.

Various techniques exist to handle uncertainty in DRs across multiple information systems. One approach is the employment of the MAD technique, which has been investigated by Liang, Mu and Xu [14, 18, 19]. Another method involves the use of the D-S evidence theory, as introduced by Dempster and Shafer [20, 21]. This theory enables the treatment of both uncertainty and conflicting evidence [22, 23], a capability absent in previous fusion operators utilized with fuzzy sets and MADs. The D-S evidence theory is grounded in concepts such as focal element sets, mass functions, belief functions, and plausibility functions, which structure the basic belief system [24,25,26]. By integrating rough set theory, the belief and plausibility functions can function as probabilistic models for lower and upper approximations [27,28,29,30,31]. The D-S evidence theory offers a comprehensive framework for combining different types and sources of knowledge, as well as amalgamating cumulative evidence with prior opinions [32,33,34,35]. Its applications extend across information fusion, knowledge discovery, and decision-making domains [36,37,38,39,40,41]. An important aspect of fusing information in multiple integrated decision information systems is the establishment of all focal elements [42,43,44]. Through the utilization of an information fusion technique based on the focal element set, it becomes feasible to streamline fuzzy information systems and IFISs [9, 45,46,47,48]. This strategy can lead to a more accurate fused mass function of decision rules in MIFDISs compared to utilizing a single system. As a result, a new mass function fusion rule is suggested to effectively manage conflicting evidence and derive a fused mass function of decision rules in MIFDISs.

Currently, decision-making methods often face challenges when dealing with MIFDISs that have different attribute sets. However, the combination of fused mass functions and the three-way decision theory can effectively address this problem. The three-way decision theory, introduced by Yao in 2010 [49], divides objects into three categories: acceptance, rejection, and non-commitment, allowing for the identification of optimal strategies from multiple decision values [50, 51]. These three categories align with Pawlak’s rough sets [30, 31], and three-way decisions have been widely studied in different decision-theoretic rough set models [52,53,54], particularly in IF environments [15, 18, 55,56,57]. In MIFDISs, the construction of a suitable fused uncertainty measure can determine the fused decision value sets and facilitate information extraction. By establishing rules for acceptance, rejection, and non-commitment, valuable information can be mined from MIFDISs. This paper proposes the use of the fused mass function of DRs as a replacement for the conditional probability function of each object in three-way decisions, because the probability function of DRs cannot be obtained directly or calculated easily. Consequently, this study focuses on DR fusion and the selection of optimal fused decision values in MIFDISs.

This paper focuses on decision-making in MIFDISs. Section 2 reviews IF \(({\mathcal {I}},{\mathcal {T}})\) approximation spaces, belief and plausibility functions. Section 3 introduces a mass function proposed for all DRs within the same IFDIS. Section 4 examines fused mass functions of DRs by employing a suitable inclusion degree of two IFSs. Section 5 develops two types of three-way decision models suitable for fused DRs. Subsequently, the algorithm for determining the optimal fused decision value set of each object of MIFDISs is proposed by combining the fused mass functions of DRs and the three-way decisions. The feasibility of the method is demonstrated through examples. Finally, Sect. 6 summarizes the key findings of this paper.

2 Basic Concepts

2.1 The IF Approximation Space

Firstly, we review intuitionistic fuzzy (IF) \(({\mathcal {I}},{\mathcal {T}})\) approximation operators defined in [10].

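Denote \(L^{*}=\{(x_{1},x_{2})\in [0,1]\times [0,1]:x_{1}+x_{2}\le 1\}\). An order relation \(\le _{L^{*}}\) on \(L^{*}\) is defined as follows: \(\forall (x_{1},x_{2}),(y_{1},y_{2})\in L^{*}\),

\[(x_{1},x_{2})\le _{L^{*}}(y_{1},y_{2})\Longleftrightarrow x_{1}\le y_{1}\ \text{and}\ x_{2}\ge y_{2}.\]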

The pair \((L^{*},\le _{L^{*}})\) is a complete lattice with the greatest element \(1_{L^{*}}=(1,0)\) and the smallest element \(0_{L^{*}}=(0,1)\). And for all \((x_{1},x_{2})\in L^{*}\), we define \(\mu _{(x_{1},x_{2})}=x_{1}\), \(\gamma _{(x_{1},x_{2})}=x_{2}\).
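The lattice structure of \(L^{*}\) can be illustrated with a minimal Python sketch. It assumes the usual componentwise order on \(L^{*}\) (first coordinates compared by \(\le\), second coordinates by \(\ge\)), which agrees with \(1_{L^{*}}=(1,0)\) and \(0_{L^{*}}=(0,1)\) above. The function names are illustrative and do not come from the paper.

```python
from typing import Tuple

IFNumber = Tuple[float, float]  # (membership, non-membership)


def in_l_star(p: IFNumber, tol: float = 1e-12) -> bool:
    """Check that p = (x1, x2) lies in L*, i.e. x1, x2 in [0, 1] and x1 + x2 <= 1."""
    x1, x2 = p
    return 0.0 <= x1 <= 1.0 and 0.0 <= x2 <= 1.0 and x1 + x2 <= 1.0 + tol


def leq_l_star(p: IFNumber, q: IFNumber) -> bool:
    """Assumed order on L*: p <= q iff p1 <= q1 and p2 >= q2."""
    return p[0] <= q[0] and p[1] >= q[1]


def comparable(p: IFNumber, q: IFNumber) -> bool:
    """The order is only partial, so some pairs cannot be compared."""
    return leq_l_star(p, q) or leq_l_star(q, p)


TOP: IFNumber = (1.0, 0.0)     # greatest element 1_{L*}
BOTTOM: IFNumber = (0.0, 1.0)  # smallest element 0_{L*}
```

For instance, \((0.3,0.5)\) and \((0.4,0.6)\) are both in \(L^{*}\) but are not comparable under this order, which is why Sect. 5.1 speaks of a partial order.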

Suppose U is a nonempty and finite object set, called the universe of discourse.

Definition 2.1

[1] An intuitionistic fuzzy set (IFS) A on U is defined as

\[A=\{\langle x,\mu _{A}(x),\gamma _{A}(x)\rangle :x\in U\},\]

where the map \(\mu _{A}:U\rightarrow [0,1]\) is called the membership degree of x to A (namely \(\mu _{A}(x)\)), and \(\gamma _{A}:U\rightarrow [0,1]\) is called the non-membership degree of x to A (namely \(\gamma _{A}(x)\)), respectively, and \((\mu _{A}(x),\gamma _{A}(x))\in L^{*}\) for each \(x\in U\). The family of all IFSs of U is denoted by \(\mathcal{I}\mathcal{F}(U)\).

A fuzzy set \(A=\{\langle x,\mu _{A}(x)\rangle :x\in U\}\) can be identified by the IFS \(\{\langle x, \mu _{A}(x),1-\mu _{A}(x)\rangle : x\in U\}\). The basic operations of IFSs on \(\mathcal{I}\mathcal{F}(U)\) are listed in [1].

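We introduce two special IFSs. (IF singleton set) For \(y\in U\), \(1_{\{y\}}=\{\langle x,\mu _{1_{\{y\}}}(x),\gamma _{1_{\{y\}}}(x)\rangle :x\in U\}\) is defined as follows:

\[\mu _{1_{\{y\}}}(x)=\left\{ \begin{array}{ll} 1, &{} x=y \\ 0, &{} x\ne y \end{array} \right. ,\qquad \gamma _{1_{\{y\}}}(x)=\left\{ \begin{array}{ll} 0, &{} x=y \\ 1, &{} x\ne y \end{array} \right. .\]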

(IF constant set) If \((a,b)\in L^*\), then \((\widehat{a,b})\) is an IF constant set, and \((\widehat{a,b})(x)=(a,b)\), \(\forall x\in U\). In the following, the properties of an IF approximation space (IFAS) will be reviewed.

As stated in [9], an IF relation (IFR) R on U is an IFS of \(U\times U\), namely, R is given by

\[R=\{\langle (x,y),\mu _{R}(x,y),\gamma _{R}(x,y)\rangle :(x,y)\in U\times U\},\]

where \(\mu _{R}:U\times U\rightarrow [0,1]\) and \(\gamma _{R}:U\times U\rightarrow [0,1]\) satisfy \((\mu _{R}(x,y),\gamma _{R}(x,y))\in L^{*}\), \(\forall (x,y)\in U\times U\). We denote the set of all IFRs on U by \(IFR(U\times U)\). Then \(\forall x\in U\), R(x) is an IF class generated by x, where \(R(x)(y)=(\mu _{R}(x,y), \gamma _{R}(x,y))\), \(\forall y\in U\).

(Special types of IFRs) Let \(R\in IFR( U\times U)\) and T be an IF t-norm on \(L^{*}\), S be an IF t-conorm on \(L^{*}\). We say R is

1. Serial if \(\vee _{y\in U}R(x,y)=1_{L^{*}},~ \forall x \in U.\)

2. Reflexive if \(R(x,x)=1_{L^{*}},~ \forall x\in U.\)

3. Symmetric if \(R(x, y)=R(y, x),~ \forall (x, y)\in U \times U.\)

4. \({\mathcal {T}}\)-transitive if \(\vee _{y\in U}{\mathcal {T}}(R(x,y),R(y, z))\le _{L^{*}}R(x, z),~ \forall (x, z)\in U \times U\).

5. \({\mathcal {T}}\)-equivalent if R is a reflexive, symmetric, and \({\mathcal {T}}\)-transitive IFR.
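The first three properties can be checked mechanically on a finite universe. The following Python sketch is illustrative only: the helper names are hypothetical, an IFR is stored as a nested dictionary `R[x][y] = (mu, gamma)`, and the supremum in \(L^{*}\) is taken as (largest membership, smallest non-membership). The sample data is the relation \(R_{1}\) that appears later in Example 4.1.

```python
TOP = (1.0, 0.0)  # greatest element of L*


def is_serial(R, U):
    """Serial: for every x, the supremum over y of R(x, y) equals (1, 0)."""
    return all(
        (max(R[x][y][0] for y in U), min(R[x][y][1] for y in U)) == TOP
        for x in U
    )


def is_reflexive(R, U):
    """Reflexive: R(x, x) = (1, 0) for every x."""
    return all(R[x][x] == TOP for x in U)


def is_symmetric(R, U):
    """Symmetric: R(x, y) = R(y, x) for every pair."""
    return all(R[x][y] == R[y][x] for x in U for y in U)


U = ["a", "b", "c"]
# Relation R_1 of Example 4.1, written row by row as R_1(x)(y).
R1 = {
    "a": {"a": (1.0, 0.0), "b": (0.8, 0.1), "c": (0.2, 0.6)},
    "b": {"a": (1.0, 0.0), "b": (1.0, 0.0), "c": (0.0, 1.0)},
    "c": {"a": (0.2, 0.8), "b": (0.4, 0.4), "c": (1.0, 0.0)},
}
```

With this data, `is_reflexive(R1, U)` and `is_serial(R1, U)` hold while `is_symmetric(R1, U)` does not, because \(R_{1}(a,b)=(0.8,0.1)\) and \(R_{1}(b,a)=(1,0)\). This matches Sect. 4, which only requires the fused relations to be reflexive.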

Definition 2.2

Suppose U is a nonempty object set and R is an IFR on U, then the pair (U, R) is referred to as an IFAS.

Definition 2.3

[9] Suppose (U, R) is an IFAS and \({\mathcal {T}}\) (\({\mathcal {I}}\)) is a continuous IF t-norm (IF implicator, respectively) on \(L^{*}\). For \(A\in \mathcal{I}\mathcal{F}(U)\), the \({\mathcal {T}}\)-upper and \({\mathcal {I}}\)-lower approximations of A w.r.t. (U, R), denoted by \({\overline{R}}^{{\mathcal {T}}}(A)\) and \({\underline{R}}_{{\mathcal {I}}}(A)\), respectively, are two IFSs of U defined as follows: \(\forall x\in U\),

\[{\overline{R}}^{{\mathcal {T}}}(A)(x)=\bigvee _{y\in U}{\mathcal {T}}(R(x,y),A(y)), \qquad (1)\]

\[{\underline{R}}_{{\mathcal {I}}}(A)(x)=\bigwedge _{y\in U}{\mathcal {I}}(R(x,y),A(y)). \qquad (2)\]

The operators \({\overline{R}}^{{\mathcal {T}}}\) and \({\underline{R}}_{{\mathcal {I}}}: {\mathcal{I}\mathcal{F}}(U)\rightarrow {\mathcal{I}\mathcal{F}}(U)\) are, respectively, referred to as the \({\mathcal {T}}\)-upper and \({\mathcal {I}}\)-lower IF approximation operators of (U, R). The pair \(({\underline{R}}_{{\mathcal {I}}}(A),{\overline{R}}^{{\mathcal {T}}}(A))\) is called the \(({\mathcal {I}},{\mathcal {T}})\)-IF rough set of A w.r.t. (U, R).
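A small Python sketch may help to see how the two operators act on a finite universe. It rests on several assumptions that are not fixed by the text: the upper approximation is taken as the supremum over y of \({\mathcal {T}}(R(x,y),A(y))\) and the lower approximation as the infimum over y of \({\mathcal {I}}(R(x,y),A(y))\); \({\mathcal {T}}\) is the min-based IF t-norm; \({\mathcal {I}}\) is the S-implicator built from the max-based IF t-conorm and the standard negator \((x_{1},x_{2})\mapsto (x_{2},x_{1})\). The relation is \(R_{1}\) from Example 4.1 and the set A is hypothetical.

```python
def t_min(p, q):
    """Min-based IF t-norm (an assumed choice of T)."""
    return (min(p[0], q[0]), max(p[1], q[1]))


def s_max(p, q):
    """Max-based IF t-conorm, dual to t_min under the standard negator."""
    return (max(p[0], q[0]), min(p[1], q[1]))


def negate(p):
    """Standard IF negator: swap membership and non-membership."""
    return (p[1], p[0])


def implicator(p, q):
    """S-implicator I(p, q) = S(N(p), q) (an assumed choice of I)."""
    return s_max(negate(p), q)


def leq(p, q):
    """Order on L*."""
    return p[0] <= q[0] and p[1] >= q[1]


def upper_approx(R, A, U):
    """T-upper approximation: supremum over y of T(R(x, y), A(y))."""
    out = {}
    for x in U:
        vals = [t_min(R[x][y], A[y]) for y in U]
        out[x] = (max(v[0] for v in vals), min(v[1] for v in vals))
    return out


def lower_approx(R, A, U):
    """I-lower approximation: infimum over y of I(R(x, y), A(y))."""
    out = {}
    for x in U:
        vals = [implicator(R[x][y], A[y]) for y in U]
        out[x] = (min(v[0] for v in vals), max(v[1] for v in vals))
    return out


U = ["a", "b", "c"]
R1 = {
    "a": {"a": (1.0, 0.0), "b": (0.8, 0.1), "c": (0.2, 0.6)},
    "b": {"a": (1.0, 0.0), "b": (1.0, 0.0), "c": (0.0, 1.0)},
    "c": {"a": (0.2, 0.8), "b": (0.4, 0.4), "c": (1.0, 0.0)},
}
A = {"a": (0.7, 0.2), "b": (0.3, 0.5), "c": (0.9, 0.0)}  # hypothetical IFS

lower = lower_approx(R1, A, U)
upper = upper_approx(R1, A, U)
```

Because \(R_{1}\) is reflexive, the sketch yields `lower[x]` \(\le _{L^{*}}\) `A[x]` \(\le _{L^{*}}\) `upper[x]` for every x, and under these particular choices of \({\mathcal {T}}\) and \({\mathcal {I}}\) the lower approximation coincides with the negation of the upper approximation of the negated set.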

Theorem 2.1

[58] Suppose (U, R) is an IFAS, \({\mathcal {T}}\) and \({\mathcal {I}}\) are IF t-norm and IF S-implicator based on an IF t-conorm \({\mathcal {S}}\), respectively, and \(\sim _{{\mathcal {N}}}\) is a standard IF negator. If \({\mathcal {T}}\) and \({\mathcal {S}}\) are dual w.r.t. \(\sim _{{\mathcal {N}}}\), then

1. \({\underline{R}}_{{\mathcal {I}}}(A)= \sim _{{\mathcal {N}}}{\overline{R}}^{{\mathcal {T}}}(\sim _{{\mathcal {N}}}A)\), \(\forall A\in \mathcal{I}\mathcal{F}(U)\);

2. \({\overline{R}}^{{\mathcal {T}}}(A)= \sim _{{\mathcal {N}}}{\underline{R}}_{{\mathcal {I}}}(\sim _{{\mathcal {N}}}A)\), \(\forall A\in \mathcal{I}\mathcal{F}(U)\).

In [10], the properties of \({\mathcal {T}}-\)upper and \({\mathcal {I}}-\)lower IF rough approximation operators, defined by Eq.(1) and Eq. (2), and the properties of IF binary relations are listed.

2.2 The IF Belief Structure of an IFS

Because the IF probability (IFP) of an IFS serves as the basis for the mass function, we firstly review the definition of IFP of an IFS as defined in [43], and then introduce the belief structure of an IFS.

Let \((U, \Omega )\) be a measurable space, P be a normal probability measure on \((U, \Omega )\), i.e. \(P(x) > 0\), \(\forall x\in U\), then \((U, \Omega , P)\) is a normal probability space [43].

Definition 2.4

[43] Let U be a nonempty and finite set. If P is the probability function of crisp sets of U, then an IFP \(P^{*}\) is defined as: \(\forall A\in \mathcal{I}\mathcal{F}(U)\),

Definition 2.5

[43] Let U be a nonempty and finite set, an IF set function \(m: \mathcal{I}\mathcal{F}(U)\rightarrow [0,1]\) is referred to as a basic probability assignment function (also called mass function) if it satisfies axioms (M1) and (M2):

(M1) \(m(\emptyset )=0\);

(M2) \(\sum \limits _{A\in \mathcal{I}\mathcal{F}(U)}m(A)=1\).
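Axioms (M1) and (M2) are easy to verify numerically when only finitely many IFSs carry positive mass. The sketch below is illustrative: the function name, the labelling of IFSs by strings, and the `empty_key` parameter are assumptions, and every IFS that is not listed is taken to have mass 0.

```python
import math


def is_mass_function(m, empty_key=None, tol=1e-9):
    """Check (M1) and (M2) for a finitely supported assignment.

    m maps a hashable label of an IFS to its mass; unlisted IFSs have mass 0.
    empty_key is the label used for the empty IFS, if it appears in m.
    """
    if any(value < 0.0 or value > 1.0 for value in m.values()):
        return False
    # (M1): the empty set carries no mass.
    if empty_key is not None and m.get(empty_key, 0.0) != 0.0:
        return False
    # (M2): the masses sum to 1.
    return math.isclose(sum(m.values()), 1.0, abs_tol=tol)


# Values reported for decision value 1 in Example 3.1.
m_example = {"R(x1)": 0.9, "R(x3)": 0.1}
```

Here `is_mass_function(m_example)` returns `True`, whereas an assignment whose masses sum to 0.9, or one that gives the empty set a positive mass, is rejected.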

Suppose R is an IF reflexive binary relation on U, (U, R) is an IFAS, \(M=\{R(x):x\in U\}\).

Theorem 2.2

[43] Let \(U=\{x_{1},x_{2},\ldots ,x_{n}\}\) be a nonempty and finite universe of discourse, R be an IF reflexive binary relation on U. \(\forall A\in \mathcal{I}\mathcal{F}(U)\), define

Then \(m_{R}\) is a mass function. M is the focal element set.

Definition 2.6

[43] Assume (U, R) is a reflexive IFAS, P is a probability function on U. Then \(\forall X\in \mathcal{I}\mathcal{F}(U)\),

\(Be_{R}^{{\mathcal {I}}}\) is a belief function, and \(Pla_{R}^{{\mathcal {T}}}\) is a plausibility function.

Theorem 2.3

[43] Suppose (U, R) is a reflexive IFAS, P is a probability function on U. Then we have: \(\forall A\in \mathcal{I}\mathcal{F}(U)\),

where \({\mathcal {I}}(F\subseteq A)=\bigwedge _{y\in U}{\mathcal {I}}(F(y),A(y))\) and \({\mathcal {T}}(F\cap A)=\bigvee _{y\in U}{\mathcal {T}}(F(y),A(y))\).

Let \((x_{1},x_{2})\in L^{*}\) and

then

Definition 2.7

Assume \(A,B\in \mathcal{I}\mathcal{F}(U)\), denote

where \(||A||=\frac{\sum _{x\in U}(\mu _{A}(x)^{2}+(1-\gamma _{A}(x))^{2})}{2|U|}\), and |U| is the cardinal number of U, \(\forall A\in \mathcal{I}\mathcal{F}(U)\). Then \(\sigma (A,B)\) is called the inclusion degree of A and B on \(\mathcal{I}\mathcal{F}(U)\).

By this definition, the following results can be easily obtained: \(0\le ||A||\le 1\), \(\forall A\in \mathcal{I}\mathcal{F}(U)\), \(||\widehat{(1,0)}||=1\), and \(||\widehat{(0,1)}||=0\), where \(\widehat{(1,0)}(x)=(1,0)\) and \(\widehat{(0,1)}(x)=(0,1)\), \(\forall x\in U\). Moreover, \(\forall A,B,C\in \mathcal{I}\mathcal{F}(U)\): (1) if \(B\subseteq A\), then \(\sigma (A,B)=1\); (2) if \(A\subseteq B\subseteq C\), then \(\sigma (A,C)\le \sigma (A,B)\); (3) if \(A\subseteq B\), then \(\sigma (A,C)\le \sigma (B,C)\). Thus \(\sigma (A,B)\) satisfies the concept of the inclusion degree introduced in [59]. In particular, \(\sigma (A,\widehat{(1,0)})\) represents the degree of inclusion of A with respect to \(\widehat{(1,0)}\).
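The norm and the inclusion degree can be sketched in Python as follows. The norm follows the formula stated in Definition 2.7. The form \(\sigma (A,B)=||A\cap B||/||B||\) is an assumption: it satisfies properties (1) to (3) above and reproduces the value \(\sigma (A_{1},\{R_{1}(a),R_{2}(b)\})=0.94\) reported in Example 4.1, but it is not quoted from the paper. The intersection is taken pointwise as (minimum membership, maximum non-membership), and the function names are illustrative.

```python
def norm(A):
    """||A|| = sum over x of (mu(x)^2 + (1 - gamma(x))^2), divided by 2|U|."""
    return sum(mu ** 2 + (1.0 - gamma) ** 2 for mu, gamma in A.values()) / (2 * len(A))


def intersect(A, B):
    """Pointwise IF intersection: minimum membership, maximum non-membership."""
    return {x: (min(A[x][0], B[x][0]), max(A[x][1], B[x][1])) for x in A}


def sigma(A, B):
    """Assumed inclusion degree: ||A ∩ B|| / ||B|| (requires ||B|| > 0)."""
    return norm(intersect(A, B)) / norm(B)


# Data from Example 4.1.
A1 = {"a": (1.0, 0.0), "b": (0.0, 1.0), "c": (0.2, 0.6)}
R1_a = {"a": (1.0, 0.0), "b": (0.8, 0.1), "c": (0.2, 0.6)}
R2_b = {"a": (0.7, 0.1), "b": (1.0, 0.0), "c": (0.7, 0.2)}

# sigma(A1, {R1(a), R2(b)}) is the sum of the two individual degrees.
total = sigma(A1, R1_a) + sigma(A1, R2_b)
```

Under this assumption, `round(total, 2)` equals 0.94, in agreement with Example 4.1.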

Note: The fused quasi-probability function \(f_{{\mathcal {R}}}\) is also applicable to a reflexive IFR R, that is, if the belief function is \(Be_{R}^{{\mathcal {I}}}\) and the plausibility function is \(Pla_{R}^{{\mathcal {T}}}\), we can define an \(f_{R}\), \(\forall A\in \mathcal{I}\mathcal{F}(U)\),

\(f_{R}\) is a quasi-probability function with respect to an IFR R.

Definition 2.8

Assume R is a reflexive IFR on U. \(\forall A,B\in \mathcal{I}\mathcal{F}(U)\), if \(f_{R}(B)\ne 0\), then

\[f_{R}(A|B)=\frac{f_{R}(A\cap B)}{f_{R}(B)}.\]

\(f_{R}(A|B)\) is a conditional uncertainty measure of A given B; we call it a conditional quasi-probability function of A given B.

Example 2.1

Let \(U=\{x_{1},x_{2},x_{3}\}\), \(A=\frac{(0.7,0.2)}{x_{1}}+\frac{(0.3,0.5)}{x_{2}}+\frac{(0.9,0)}{x_{3}}\), \(Be_{R}(A)=\frac{1}{3}\), \(Pla(A)=\frac{2}{3}\), \(B=\frac{(0.3,0.7)}{x_{1}}+\frac{(0.2,0.6)}{x_{2}}+\frac{(0.9,0)}{x_{3}}\), \(Be_{R}(B)=\frac{1}{6}\), \(Pla(B)=\frac{1}{3}\), then

And \(f_{R}(B|A)=\frac{f_{R}(A\cap B)}{f_{R}(A)}=\frac{\frac{1}{6}+\frac{0.09+0.09+0.04+0.16+0.81+1}{6}\times \frac{1}{6}}{0.52}=0.44\).

Proposition 2.4

Assume R is a reflexive IFR on U. \(f_{R}\) satisfies the following properties: \(\forall A, B\in \mathcal{I}\mathcal{F}(U)\),

1. \(f_{R}(\emptyset )=0\),

2. \(f_{R}(U)=1\),

3. \(0\le f_{R}(A)\le 1\),

4. If \(A\subseteq B\), then \(f_{R}(A)\le f_{R}(B)\),

5. If \(A_{1}^{0}\ne \emptyset\) and \(Be_{R}(A)\ne Pla_{R}(A)\), then \(f_{R}(A)\ne 0\),

6. If \(f_{R}(B)\ne 0\), then \(f_{R}(\emptyset |B)=0\), \(f_{R}(U|B)=1\),

7. If \(f_{R}(B)\ne 0\), then \(0\le f_{R}(A|B)\le 1\),

8. If \(f_{R}(B)\ne 0\), \(B\subseteq A\), then \(f_{R}(A|B)=1\).

Proof

These proofs are quite straightforward. \(\square\)

3 The Mass Function of Decision Rules in IF Decision Information Systems

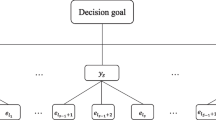

Let \(I=(U,At,d)\) be an IF decision information system (IFDIS, in short) built by a professor, where \(At=\{a_{j}:j=1,\ldots ,|At|\}\) represents a set of conditional attributes, R, induced by At, denotes a reflexive and symmetric IFR (known as a similarity IFR) on U, and d represents a decision attribute with value range \(V_{d}\). Hence, we can denote a possible decision rule (DR, in short) for an element x as \(g_{v}(x)=\bigwedge \nolimits _{j=1}^{|At|}(a_{j},a_{j}(x))\rightarrow (d,v)\), where the antecedent \(At(x)=\bigwedge \nolimits _{j=1}^{|At|}(a_{j},a_{j}(x))\) is the conjunction of the conditional attribute values of x over all \(a_{j}\in At\), and (d, v) is the consequent.

Every decision rule is derived from an object (or a class of objects) in U, so the probability assignment for every DR should not be 0. To guarantee this, the IF quasi-probability function of each object, considered as a weakened probability function, is utilized to establish a mass function for the antecedent At(x) of each decision rule; this measure does not depend on the decision value v. In addition, each DR with a different decision value is associated with its own confidence level. The confidence level of each possible DR can be defined by utilizing the IF similarity classes generated by R as follows.

Definition 3.1

Let (U, At, d) be a nonempty and finite IFDIS, \(P^{*}\) the probability on U. For given \(A,B\in IF(U)\), we define the inclusion degree of A with respect to B,

where \(||(x_{1},x_{2})||=\frac{x_{1}^{2}+(1-x_{2})^{2}}{2}\) (\((x_{1},x_{2})\) is an IF number).

Proposition 3.1

Suppose (U, At, d) is a nonempty and finite IFDIS, and \(P^{*}\) is a probability on U. For given \(A,B\in IF(U)\), then

1. \(0\le D(A/B)\le 1\);

2. If \(A\subseteq B\), then \(D(A/B)=1\);

3. If \(A\subseteq C\), then \(D(B/A)\le D(B/C)\).

Proof

These proofs are quite straightforward. \(\square\)

Definition 3.2

Let (U, At, d) be a nonempty and finite IFDIS, \(P^{*}\) a probability on U, R a similarity IFR on U induced by At. For given \(x\in U\), \(\forall v\in V_{d}\), we define

Then \(c_{v}(R(x))\) reflects the confidence level of the DR \(g_{v}(x)\), where \(d_v(y)=\left\{ \begin{array}{ll} (1,0), &{} d(y)=v \\ (0,1), &{} d(y)\ne v \end{array} \right.\) and \(R(x)(y)=R(x,y)\), \(\forall x,y\in U\).

\(D(d_v/R(x))\) is also regarded as a conditional probability of R(x) for given decision value v.

We can also define the confidence level \(c_{\lnot v}(R(x))\) of the DR of x for decision value \(\lnot v\) as

In the following, we construct the belief structure of an IFDIS by the quasi-probability function and the confidence level. From the DR view, for given \(v\in V_{d}\), \(\forall x\in U\), R(x) is a focal element.

Definition 3.3

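Assume (U, At, d) is a nonempty and finite IFDIS, and R is a similarity IFR on U induced by At. Given \(v\in V_{d}\), let \(M=\{R(x):x\in U\}\) be the set of focal elements. The mass function m for the DRs is defined as follows: \(\forall A\in \mathcal{I}\mathcal{F}(U)\),

\[m(A,v)=\left\{ \begin{array}{ll} \frac{ \sum \limits _{\{F_{j}\in M:~F_{j}=A\}}f(F_{j})c_{v}(F_{j})}{\sum \limits _{F_{j}\in M}f(F_{j})c_{v}(F_{j})}, &{} A\in M \\ 0, &{} A\notin M \end{array} \right.\]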

Similarly, for decision value \(\lnot v\), suppose \(DR(x,\lnot v)=At(x)\rightarrow (d,\lnot v)\), then

Since R is a similarity IFR on U, \(\emptyset \notin M\), so \(m(\emptyset , v)=0\) and \(m(\emptyset , \lnot v)=0\). Moreover, \(\sum \limits _{A\in IF(U)}m(A,v)=\sum \limits _{A\in M}\frac{ \sum \limits _{\{F_{j}\in M:~F_{j}=A\}}f(F_{j})c_{v}(F_{j})}{\sum \limits _{F_{j}\in M}f(F_{j})c_{v}(F_{j})}=1\) and \(\sum \limits _{A\in IF(U)}m(A,\lnot v)=1\). Thus we can construct two mass functions for the DRs \(At(x)\rightarrow (d,v)\) and \(At(x)\rightarrow (d,\lnot v)\) for each \(v\in V_{d}\).

Example 3.1

Suppose \(U=\{x_{1},x_{2},x_{3}\}\), \(R(x_{1})=R(x_{2})=\frac{(1,0)}{x_{1}}+\frac{(1,0)}{x_{2}}+\frac{(0.3,0.5)}{x_{3}}\), \(R(x_{3})=\frac{(0.3,0.7)}{x_{1}}+\frac{(0.3,0.6)}{x_{2}}+\frac{(1,0)}{x_{3}}\), \(d(x_{1})=1\), \(d(x_{2})=2\), \(d(x_{3})=2\), \(P^*(x_{1})=P^*(x_{2})=P^*(x_{3})=\frac{1}{3}\).

Then \(f(R(x_{1}))=\frac{2}{3}\), \(f(R(x_{3}))=\frac{1}{3}\). For different decision values, \(c_{1}(R(x_{1}))=\frac{1}{1.585}=0.63\), \(c_{2}(R(x_{1}))=\frac{0.585}{1.585}=0.37\), \(c_{1}(R(x_{3}))=\frac{0.09}{0.653}=0.138\), and \(c_{2}(R(x_{3}))=\frac{0.563}{0.653}=0.862\).

Thus, \(M=\{R(x_{1}),R(x_{3})\}\), \(m(R(x_{1}),1)=\frac{\frac{2}{3}\times 0.63}{\frac{2}{3}\times 0.63+\frac{1}{3}\times 0.138}=0.9\) and \(m(R(x_{3}),1)=\frac{\frac{1}{3}\times 0.138}{\frac{2}{3}\times 0.63+\frac{1}{3}\times 0.138}=0.1\). \(m(R(x_{1}),\lnot 1)=\frac{\frac{2}{3}\times 0.37}{\frac{2}{3}\times 0.37+\frac{1}{3}\times 0.862}=0.462\) and \(m(R(x_{3}),\lnot 1)=0.538\).
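The arithmetic of Example 3.1 can be retraced with a few lines of Python. The sketch only implements the normalisation \(m(F,v)=f(F)c_{v}(F)/\sum _{F_{j}\in M}f(F_{j})c_{v}(F_{j})\) that appears in the verification following Definition 3.3. The values of f and of the confidence levels are copied from the example rather than recomputed, and the function and variable names are illustrative.

```python
def mass_from_confidence(f, c):
    """Normalise f(F) * c(F) over the focal elements F."""
    weights = {name: f[name] * c[name] for name in f}
    total = sum(weights.values())
    return {name: weight / total for name, weight in weights.items()}


# Quasi-probabilities and confidence levels reported in Example 3.1.
f = {"R(x1)": 2 / 3, "R(x3)": 1 / 3}
c_1 = {"R(x1)": 0.63, "R(x3)": 0.138}      # decision value 1
c_not_1 = {"R(x1)": 0.37, "R(x3)": 0.862}  # decision value "not 1"

m_1 = mass_from_confidence(f, c_1)
m_not_1 = mass_from_confidence(f, c_not_1)
```

Rounded to the precision used in the example, `m_1` gives 0.9 and 0.1, and `m_not_1` gives 0.462 and 0.538.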

4 The Fused Mass Function of Decision Rules

For the same set of objects, different professors may offer different evaluations, leading to distinct information systems and decision rules for each object. This means that the multi-information system contains the same set of objects. Therefore, it is crucial to address the fusion method of these information systems before integrating decision rules. In this section, we introduce a fuzzy information fusion approach for IF information systems. Suppose U is a finite set of objects, \(R_{i}\) is a reflexive IFR induced by the information system given by the i-th professor.

Definition 4.1

Assume \(U=\{x_{1},x_{2},\ldots ,x_{n}\}\), \({\mathcal {R}}=\{R_{1},R_{2},\ldots ,R_{n}\}\) is a set of reflexive IFRs on U. We call \((U,{\mathcal {R}})\) a MIFAS.

According to Sect. 2, we can acquire the IF information granules constructed by \(R_{1}, \ldots ,R_{n}\) and an IF mass function \(m_{R_{i}}\) for every IFDIS. Now our goal is to obtain a new fused mass function using \(\{m_{R_1},\ldots , m_{R_n}\}\), that can generate a new IFDIS based on the set of all focal elements in the fused information system.

Let \(M_{i}\) be the set of all focal elements of \(m_{R_{i}}\) w.r.t. \(R_{i}\). We denote an element of \(M_{i}\) as \(F_{ij}\), the \(j\)-th element of the \(i\)-th set of focal elements; when one element is selected from each \(M_{i}\), its index is written \(j_{i}\). The set \(\{\bigcap _{i=1}^{n}\{F_{ij_{i}}\in M_{i}\}:(\bigcap _{i=1}^{n}\{F_{ij_{i}}\in M_{i}\})_{1}^{0}=\emptyset \}\) represents the conflicting evidence set, where \(A_{1}^{0}=\{x\in U:A(x)=(1,0)\}\). Conflicting evidence affects each focal element of the fused mass function \(m^{*}\) differently. To quantify this influence, we introduce a novel inclusion degree of two IFSs, which reflects the impact of conflicting evidence on each focal element of \(m^{*}\).

The inclusion degree can be utilized to adjust the fused IF mass function, allowing us to allocate and manage conflicting evidence effectively. Denote \({\mathcal {M}}=\{\bigcap _{i}\{F_{ij_{i}}\in M_{i}\}:(\bigcap _{i}\{F_{ij_{i}}\in M_{i}\})_{1}^{0}\ne \emptyset \}\), which is the fused focal element set.

Definition 4.2

Assume \((U, R_{i})\) is an IF decision system. If \(\{F_{ij_{i}}\in M_{i}:i=1,\ldots ,n\}\) (\(\{F_{ij_{i}}\in M_{i}\}\), in short) satisfies \((\bigcap _{i=1}^{n}\{F_{ij_{i}}\in M_{i}\})_{1}^{0}=\emptyset\), then for each \(A\in {\mathcal {M}}\), the significance degree of A to \(\{F_{ij_{i}}\in M_{i}\}\) is defined as

where \(\sigma (A,\{F_{ij_{i}}\in M_{i}\})=\sum \limits _{i=1}^{n}\sigma (A,F_{ij_{i}})\).

Example 4.1

Let \(U=\{a,b,c\}\), \({\mathcal {R}}=\{R_{1},R_{2}\}\), \(M_{1}=\{R_{1}(a)=\{\langle a,1,0\rangle ,\langle b,0.8,0.1\rangle ,\langle c,0.2,0.6\rangle \},\)

\(R_{1}(b)=\{\langle a,1,0\rangle ,\langle b,1,0\rangle ,\langle c,0,1\rangle \},R_{1}(c)=\{\langle a,0.2,0.8\rangle ,\langle b,0.4,0.4\rangle ,\langle c,1,0\rangle \}\}\), \(M_{2}=\{R_{2}(a)=\{\langle a,1,0\rangle ,\langle b,0,1\rangle ,\langle c,0.2,0.6\rangle \}, R_{2}(b)=\{\langle a,0.7,0.1\rangle ,\langle b,1,0\rangle ,\langle c,0.7,0.2\rangle \},\)

\(R_{2}(c)=\{\langle a,0,0.8\rangle ,\langle b,0.7,0.1\rangle ,\langle c,1,0\rangle \}\}\).

\({\mathcal {M}}=\{A_{1}=\{\langle a,1,0\rangle ,\langle b,0,1\rangle ,\langle c,0.2,0.6\rangle \}, A_{2}=\{\langle a,0.7,0.1\rangle ,\langle b,1,0\rangle ,\langle c,0,1\rangle \}, A_{3}=\{\langle a,0,0.8\rangle ,\langle b,0.4,0.4\rangle ,\langle c,1,0\rangle \},\)

\(A_{4}=\{\langle a,1,0\rangle ,\langle b,0,1\rangle ,\langle c,0,1\rangle \}\}\). \((R_{1}(a)\cap R_{2}(b))_{1}^{0}=\emptyset\), \((R_{1}(a)\cap R_{2}(c))_{1}^{0}=\emptyset\), \((R_{1}(b)\cap R_{2}(c))_{1}^{0}=\emptyset\), \((R_{1}(c)\cap R_{2}(a))_{1}^{0}=\emptyset\) and \((R_{1}(c)\cap R_{2}(b))_{1}^{0}=\emptyset\).
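The split of Example 4.1 into fused focal elements and conflicting combinations can be reproduced with the Python sketch below. It enumerates every choice of one focal element per system, intersects them pointwise (minimum membership, maximum non-membership), and keeps a combination as a fused focal element exactly when its kernel \(A_{1}^{0}=\{x:A(x)=(1,0)\}\) is nonempty. The function names are illustrative.

```python
from itertools import product


def intersect(A, B):
    """Pointwise IF intersection: minimum membership, maximum non-membership."""
    return {x: (min(A[x][0], B[x][0]), max(A[x][1], B[x][1])) for x in A}


def kernel(A):
    """A_1^0: the objects whose value is exactly (1, 0)."""
    return {x for x, value in A.items() if value == (1.0, 0.0)}


def fuse_focal_elements(*families):
    """Return (fused, conflicts), each keyed by the tuple of chosen focal elements."""
    fused, conflicts = {}, {}
    for combo in product(*(family.items() for family in families)):
        names = tuple(name for name, _ in combo)
        result = combo[0][1]
        for _, focal in combo[1:]:
            result = intersect(result, focal)
        target = fused if kernel(result) else conflicts
        target[names] = result
    return fused, conflicts


# Focal element sets M_1 and M_2 of Example 4.1.
M1 = {
    "R1(a)": {"a": (1.0, 0.0), "b": (0.8, 0.1), "c": (0.2, 0.6)},
    "R1(b)": {"a": (1.0, 0.0), "b": (1.0, 0.0), "c": (0.0, 1.0)},
    "R1(c)": {"a": (0.2, 0.8), "b": (0.4, 0.4), "c": (1.0, 0.0)},
}
M2 = {
    "R2(a)": {"a": (1.0, 0.0), "b": (0.0, 1.0), "c": (0.2, 0.6)},
    "R2(b)": {"a": (0.7, 0.1), "b": (1.0, 0.0), "c": (0.7, 0.2)},
    "R2(c)": {"a": (0.0, 0.8), "b": (0.7, 0.1), "c": (1.0, 0.0)},
}

fused, conflicts = fuse_focal_elements(M1, M2)
```

On this data the sketch yields four fused focal elements, arising from \(R_{1}(a)\cap R_{2}(a)=A_{1}\), \(R_{1}(b)\cap R_{2}(b)=A_{2}\), \(R_{1}(c)\cap R_{2}(c)=A_{3}\) and \(R_{1}(b)\cap R_{2}(a)=A_{4}\), together with the five conflicting pairs listed in the example.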

For decision value 1, if \(m_{D}^1(R_{1}(a),1)=\frac{1}{6}\), \(m_{D}^1(R_{1}(b),1)=\frac{1}{3}\), \(m_{D}^1(R_{1}(c),1)=\frac{1}{2}\). \(m_{D}^2(R_{2}(a),1)=\frac{1}{5}\), \(m_{D}^2(R_{2}(b),1)=\frac{1}{5}\), \(m_{D}^2(R_{2}(c),1)=\frac{3}{5}\). We have \(\sigma (A_{1},\{R_{1}(a), R_{2}(b)\})=\sigma (A_{1},R_{1}(a))+ \sigma (A_{1},R_{2}(b))=0.94\). Thus,

\(\begin{array}{ll} \sigma (A_{1},\{R_{1}(a), R_{2}(b)\})=0.94,&{} \sigma (A_{2},\{R_{1}(a), R_{2}(b)\})=1.5,\\ \sigma (A_{3},\{R_{1}(a), R_{2}(b)\})=0.59, &{}\sigma (A_{4},\{R_{1}(a), R_{2}(b)\})= 0.84.\\ \sigma (A_{1},\{R_{1}(a), R_{2}(c)\})=0.67,&{} \sigma (A_{2},\{R_{1}(a), R_{2}(c)\})=1.15,\\ \sigma (A_{3},\{R_{1}(a), R_{2}(c)\})=0.97,&{}\sigma (A_{4},\{R_{1}(a), R_{2}(c)\})=0.56.\\ \sigma (A_{1},\{R_{1}(b), R_{2}(c)\})=0.57,&{}\sigma (A_{2},\{R_{1}(b), R_{2}(c)\})=1.23,\\ \sigma (A_{3},\{R_{1}(b), R_{2}(c)\})=0.91, &{}\sigma (A_{4},\{R_{1}(b), R_{2}(c)\})=0.51.\\ \sigma (A_{1},\{R_{1}(c), R_{2}(a)\})=1.11,&{}\sigma (A_{2},\{R_{1}(c), R_{2}(a)\})=0.82,\\ \sigma (A_{3},\{R_{1}(c), R_{2}(a)\})=1.09,&{}\sigma (A_{4},\{R_{1}(c), R_{2}(a)\})=0.94.\\ \sigma (A_{1},\{R_{1}(c), R_{2}(b)\})=0.45,&{}\sigma (A_{2},\{R_{1}(c), R_{2}(b)\})=0.98,\\ \sigma (A_{3},\{R_{1}(c), R_{2}(b)\})= 1.38,&{}\sigma (A_{4},\{R_{1}(c), R_{2}(b)\})= 0.32.\\ S(A_{1},\{R_{1}(a), R_{2}(b)\})=0.24, &{}S(A_{2},\{R_{1}(a), R_{2}(b)\})=0.39,\\ S(A_{3},\{R_{1}(a), R_{2}(b)\})=0.15,&{} S(A_{4},\{R_{1}(a), R_{2}(b)\})=0.22.\\ S(A_{1},\{R_{1}(a), R_{2}(c)\})=0.2,&{}S(A_{2},\{R_{1}(a), R_{2}(c)\})=0.34,\\ S(A_{3},\{R_{1}(a), R_{2}(c)\})=0.29, &{}S(A_{4},\{R_{1}(a), R_{2}(c)\})=0.17.\\ S(A_{1},\{R_{1}(b), R_{2}(c)\})=0.18,&{}S(A_{2},\{R_{1}(b), R_{2}(c)\})=0.38,\\ S(A_{3},\{R_{1}(b), R_{2}(c)\})=0.28,&{}S(A_{4},\{R_{1}(b), R_{2}(c)\})= 0.16.\\ S(A_{1},\{R_{1}(c), R_{2}(a)\})=0.28,&{} S(A_{2},\{R_{1}(c), R_{2}(a)\})=0.21, \\ S(A_{3},\{R_{1}(c), R_{2}(a)\})=0.27,&{}S(A_{4},\{R_{1}(c), R_{2}(a)\})= 0.24.\\ S(A_{1},\{R_{1}(c), R_{2}(b)\})=0.145,&{}S(A_{2},\{R_{1}(c), R_{2}(b)\})=0.315,\\ S(A_{3},\{R_{1}(c), R_{2}(b)\})=0.44,&{}S(A_{4},\{R_{1}(c), R_{2}(b)\})= 0.10.\\ \end{array}\)
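The tabulated values suggest that the significance degree S is the inclusion degree \(\sigma\) normalised over the fused focal elements, i.e. \(S(A,\cdot )=\sigma (A,\cdot )/\sum _{A'\in {\mathcal {M}}}\sigma (A',\cdot )\). This reading is an assumption inferred from the numbers above rather than a quotation of Definition 4.2. The Python sketch below applies it to the \(\sigma\) values listed for the conflicting pair \(\{R_{1}(a),R_{2}(b)\}\); the function name is illustrative.

```python
def significance(sigmas):
    """Assumed form of S: each sigma divided by the sum over all fused focal elements."""
    total = sum(sigmas.values())
    return {name: value / total for name, value in sigmas.items()}


# sigma(A_k, {R1(a), R2(b)}) as listed in Example 4.1.
sigma_values = {"A1": 0.94, "A2": 1.5, "A3": 0.59, "A4": 0.84}
S = significance(sigma_values)
```

Rounded to two decimals, `S` gives 0.24, 0.39, 0.15 and 0.22, matching the values of \(S(A_{k},\{R_{1}(a),R_{2}(b)\})\) in the table. By construction the four degrees sum to 1, so the mass of a conflicting combination is fully redistributed among the fused focal elements.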

When several DRs concern the same object, they must be synthesized into a single final DR. Therefore, we aim to fuse all DRs regarding the same object using the Dempster-Shafer (D-S) evidence theory. Initially, let \(At_{i}=\{a_{ij}: j=1,\ldots ,|At_{i}|\}\) denote a set of conditional attributes, \(R_{i}\) be the relation induced by \(At_{i}\), d indicate the decision attribute, and \(V_{d}\) describe the value range of the decision attribute d. We assume that the value range of the decision attribute is the same for every system being considered. \(\forall v\in V_{d}\), all possible fused DRs w.r.t. v can be expressed as \({\mathcal {G}}_{v}=\{g_{v}(\bigwedge x_{i_{k}})=\bigwedge \limits _{i}(\bigwedge \limits _{a_{ij}\in At_{i}}(a_{ij},a_{ij}(x_{i_{k}})))\rightarrow (d,v): (\bigcap _{i=1}^{n}R_{i}(x_{i_{k}}))_{1}^{0}\ne \emptyset , x_{i_{k}}\in U\}\), which can be regarded as a targeted combination of all DRs in every IFDIS. Similarly, we can obtain all possible fused DRs w.r.t. \(\lnot v\), expressed as \(\lnot {\mathcal {G}}_{v}=\{g_{\lnot v}(\bigwedge x_{i_{k}})=\bigwedge \limits _{i}(\bigwedge \limits _{a_{ij}\in At_{i}}(a_{ij},a_{ij}(x_{i_{k}})))\rightarrow (d,\lnot v): (\bigcap _{i=1}^{n}R_{i}(x_{i_{k}}))_{1}^{0}\ne \emptyset , x_{i_{k}}\in U\}\). In particular, the common antecedent of the fused DRs \(g_{v}(\bigwedge x_{i_{k}})\) and \(g_{\lnot v}(\bigwedge x_{i_{k}})\) is denoted by \(g(\bigwedge x_{i_{k}})\). If \(x_{1_{k}}=\ldots =x_{n_{k}}=x\), \(x\in U\), then \(g_{v}(\bigwedge x_{i_{k}})\) can be abbreviated as \(g_{v}(x)\), and \(g_{\lnot v}(\bigwedge x_{i_{k}})\) as \(g_{\lnot v}(x)\).

In the following, we improve the method to compute the fused mass function of the DR.

Every DR can be induced by an object in an IFDIS, thus \(M_{i}=\{R_{i}(x):x\in U\}\), and \({\mathcal {M}}=\{\bigcap \limits _{i=1 }^{n}R_{i}(x_{i_{k}}):(\bigcap \limits _{i=1 }^{n}R_{i}(x_{i_{k}}))_{1}^{0}\ne \emptyset , x_{i_{k}}\in U\}\). \(\forall A\in \mathcal{I}\mathcal{F}(U)\), if \(A\in {\mathcal {M}}\), which is related to the fused DR \(g_{v}(\bigwedge x_{i_{k}})\) for every decision value \(v\in V_{d}\), thus \(m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),v)=m^{*}(A,v)\), then define

For \(\lnot v\), \(\forall A\in \mathcal{I}\mathcal{F}(U)\), we have

For given \(v\in V_{d}\), \(m^{*}\) is a fused mass function of DRs, which can be proven easily.

Example 4.2

(Following Example 4.1) For \(A_{1}\in {\mathcal {M}}_{{\mathcal {R}}}\), we compute

In the same way, we have \(m^{*}(A_{2},1)=0.24\), \(m^{*}(A_{3},1)=0.46\), \(m^{*}(A_{4},1)=0.16\).

Thus, we can give the basic probability function for every possible DR.

5 The Decision-Making of MIFDISs

In this section, we employ three-way decisions to discuss decision value selection problems. For \(v\in V_{d}\), the state set of decision value v is \(\Omega _{v}=\{v,\lnot v\}\). The set of actions is given by \(\{P,B,N\}\), where, P represents an action of accepting the decision value v for object x, resulting in the decision \(x\in POS(v)\), B represents an action of further investigating the decision value of x, classifying \(x\in BND(v)\), and N represents an action of rejecting the decision value v for the object x, leading to the decision \(x\in NEG(v)\).

\(\lambda _{PP}\), \(\lambda _{BP}\) and \(\lambda _{NP}\) denote IF loss degrees with IF numbers incurred by taking actions of P, B and N respectively, when the decision value is v. Similarly, \(\lambda _{PN}\), \(\lambda _{BN}\) and \(\lambda _{NN}\) denote IF loss degrees with IF numbers incurred by taking actions of P, B and N respectively, when the decision value is \(\lnot v\), where \(\lambda _{\cdot \cdot }=(\mu _{\lambda _{\cdot \cdot }},\gamma _{\lambda _{\cdot \cdot }})\) (\(\cdot =P,B,N\)). If \(\bigcap R_{i}(x_{i_{k}})\in {\mathcal {M}}\), then we can use every \(\bigwedge x_{i_{k}}\) and all \(v\in V_{d}\) to construct all possible fused DRs. We can not directly obtain the conditional probability of possible fused DRs. So in three-way decisions, we replace the conditional probability by the fused mass function \(m^{*}(\bigcap \nolimits _{i=1}^{n}R_{i}(x_{i_{k}}),v)\) or \(m^{*}(\bigcap \nolimits _{i=1}^{n}R_{i}(x_{i_{k}}),\lnot v)\) of DRs.

For each possible fused DR \(g_{v}(\bigwedge x_{i_{k}})\), \(R(\tau |g_{v}(\bigwedge x_{i_{k}}))\) (\(\tau =P,B,N\)) is the expected loss function of the DR.

The expected loss \(R(\cdot |g_{v}(\bigwedge x_{i_{k}}))\) (\(\cdot =P,B,N\)) associated with taking each individual action is expressed as

\(R(P|g_{v}(\bigwedge x_{i_{k}}))=m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),v)\lambda _{PP}{\dot{\oplus }} m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),\lnot v)\lambda _{PN}\),

\(R(B|g_{v}(\bigwedge x_{i_{k}}))=m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),v)\lambda _{BP}{\dot{\oplus }} m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),\lnot v)\lambda _{BN}\),

\(R(N|g_{v}(\bigwedge x_{i_{k}}))=m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),v)\lambda _{NP}{\dot{\oplus }} m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),\lnot v)\lambda _{NN}\),

where \(r\times (x,y)=(x,y)\times r=(1-(1-x)^{r},y^{r})\), \(r\in {\mathbb {R}}\) and \(r\ge 0\), and \((x_{1}, y_{1}){\dot{\oplus }}(x_{2},y_{2})=(x_{1}+x_{2}-x_{1}x_{2}, y_{1}y_{2})\), \((x_{1},y_{1}), (x_{2},y_{2})\in L^{*}\) [18].
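The two IF operations and the three expected losses above can be sketched in Python as follows. This is a minimal illustration rather than the authors' implementation: the names `scale`, `oplus` and `expected_loss` are hypothetical, and an IF number is represented as a plain `(mu, gamma)` tuple. The usage lines borrow the loss values of Example 5.4 and the fused masses of object \(x_{1}\) for decision value 2 from Example 5.3.

```python
from typing import Tuple

IFN = Tuple[float, float]  # an IF number (membership, non-membership)


def scale(r: float, a: IFN) -> IFN:
    """Scalar multiple r x (x, y) = (1 - (1 - x)^r, y^r), for r >= 0."""
    if r < 0:
        raise ValueError("r must be non-negative")
    x, y = a
    return (1.0 - (1.0 - x) ** r, y ** r)


def oplus(a: IFN, b: IFN) -> IFN:
    """IF sum (x1, y1) (+) (x2, y2) = (x1 + x2 - x1*x2, y1*y2)."""
    return (a[0] + b[0] - a[0] * b[0], a[1] * b[1])


def expected_loss(m_v: float, m_not_v: float, loss_v: IFN, loss_not_v: IFN) -> IFN:
    """Expected loss of one action: m_v x loss_v (+) m_not_v x loss_not_v."""
    return oplus(scale(m_v, loss_v), scale(m_not_v, loss_not_v))


# Loss values as given in Example 5.4.
lam = {"PP": (0.1, 0.9), "BP": (0.65, 0.3), "NP": (0.85, 0.1),
       "PN": (0.8, 0.15), "BN": (0.5, 0.4), "NN": (0.0, 1.0)}
# Fused masses of x_1 for decision value 2 and its negation (Example 5.3).
m_v, m_not_v = 0.204, 0.134

loss_P = expected_loss(m_v, m_not_v, lam["PP"], lam["PN"])
loss_B = expected_loss(m_v, m_not_v, lam["BP"], lam["BN"])
loss_N = expected_loss(m_v, m_not_v, lam["NP"], lam["NN"])
print(loss_P, loss_B, loss_N)
```

Each result is again an IF number, since both operations keep the pair inside \(L^{*}\) for the inputs used here.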

5.1 Three-Way Decisions of MIFDISs Based on the Intuitionistic Fuzzy Relation

We aim to minimize the value of the total loss function. As the IF relation \(\le _{L^{*}}\) only meets the conditions of a partial order, we can only provide a satisfactory, albeit possibly suboptimal, decision value using the following approach.

By considering a reasonable class of loss functions satisfying condition (C0):

we have

Thus, the expected losses \(R(P|g_{v}(\bigwedge x_{i_{k}}))=(\mu _{P},\gamma _{P})\), \(R(B|g_{v}(\bigwedge x_{i_{k}}))=(\mu _{B},\gamma _{B})\) and \(R(N|g_{v}(\bigwedge x_{i_{k}}))=(\mu _{N},\gamma _{N})\) are IF numbers.

If \(\bigcap R_{i}(x_{i_{k}})\in {\mathcal {M}}\), under condition (C0), we define the following decision value rules, which can give a satisfactory decision value.

(P1) If \(\mu _{P}\le \mu _{B}\), \(\gamma _{P}\ge \gamma _{B}\) and \(\mu _{P}\le \mu _{N}\), \(\gamma _{P}\ge \gamma _{N}\), decide \(g(\bigwedge x_{i_{k}})\in POS(v)\);

(N1) If \(\mu _{N}\le \mu _{P}\), \(\gamma _{N}\ge \gamma _{P}\) and \(\mu _{N}\le \mu _{B}\), \(\gamma _{N}\ge \gamma _{B}\), decide \(g(\bigwedge x_{i_{k}})\in NEG(v)\);

(B1) If \(g(\bigwedge x_{i_{k}})\not \in POS(v)\) and \(g(\bigwedge x_{i_{k}})\not \in NEG(v)\), then we decide \(g(\bigwedge x_{i_{k}})\in BND(v)\).
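Rules (P1), (N1) and (B1) amount to a comparison of the three expected losses under the partial order \(\le _{L^{*}}\). The sketch below shows one way to code this comparison. The function names are hypothetical, the three losses are passed in as `(mu, gamma)` pairs, and resolving the case where (P1) and (N1) hold simultaneously in favour of POS is an assumption of this sketch, not something stated by the rules.

```python
from typing import Tuple

IFN = Tuple[float, float]  # an IF number (membership, non-membership)


def leq_L(a: IFN, b: IFN) -> bool:
    """Partial order on L*: (x1, y1) <= (x2, y2) iff x1 <= x2 and y1 >= y2."""
    return a[0] <= b[0] and a[1] >= b[1]


def decide_partial_order(loss_P: IFN, loss_B: IFN, loss_N: IFN) -> str:
    """Return 'POS', 'NEG' or 'BND' following rules (P1), (N1), (B1)."""
    if leq_L(loss_P, loss_B) and leq_L(loss_P, loss_N):   # rule (P1)
        return "POS"
    if leq_L(loss_N, loss_P) and leq_L(loss_N, loss_B):   # rule (N1)
        return "NEG"
    return "BND"                                          # rule (B1)


# Illustrative (made-up) expected losses.
print(decide_partial_order((0.2, 0.7), (0.4, 0.5), (0.6, 0.3)))
print(decide_partial_order((0.2, 0.3), (0.3, 0.5), (0.1, 0.2)))
```

The second call uses losses that are pairwise incomparable under \(\le _{L^{*}}\), which is exactly the situation in which the object falls into the boundary region.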

With the convention \(\frac{1}{\infty }=0\) and under condition (C0), the decision value rules (P1)-(N1) can be simplified. For (P1), the first condition,

where \(\log r\) is an abbreviation of \(\log _{10}r\), \(r\in R\).
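For reference, the membership part of the first condition of (P1) can be unfolded as follows. This is a sketch derived only from the definitions of the scalar product and \({\dot{\oplus }}\) given above. The shorthand \(m_{v}\) and \(m_{\lnot v}\) is introduced here for readability and is not the paper's notation. The derivation assumes \(m_{\lnot v}\ne 0\), all membership degrees strictly below 1 so that the logarithms exist, and a strictly positive denominator in the last step.

```latex
% Shorthand (assumption of this sketch):
%   m_v        = m^{*}(\bigcap_{i=1}^{n} R_i(x_{i_k}), v)
%   m_{\lnot v} = m^{*}(\bigcap_{i=1}^{n} R_i(x_{i_k}), \lnot v)
\begin{align*}
\mu_{P}\le \mu_{B}
&\iff 1-(1-\mu_{\lambda_{PP}})^{m_v}(1-\mu_{\lambda_{PN}})^{m_{\lnot v}}
      \le 1-(1-\mu_{\lambda_{BP}})^{m_v}(1-\mu_{\lambda_{BN}})^{m_{\lnot v}} \\
&\iff m_v\log(1-\mu_{\lambda_{PP}})+m_{\lnot v}\log(1-\mu_{\lambda_{PN}})
      \ge m_v\log(1-\mu_{\lambda_{BP}})+m_{\lnot v}\log(1-\mu_{\lambda_{BN}}) \\
&\iff m_v\bigl(\log(1-\mu_{\lambda_{PP}})-\log(1-\mu_{\lambda_{BP}})\bigr)
      \ge m_{\lnot v}\bigl(\log(1-\mu_{\lambda_{BN}})-\log(1-\mu_{\lambda_{PN}})\bigr) \\
&\iff \frac{m_v}{m_{\lnot v}}
      \ge \frac{\log(1-\mu_{\lambda_{BN}})-\log(1-\mu_{\lambda_{PN}})}
               {\log(1-\mu_{\lambda_{PP}})-\log(1-\mu_{\lambda_{BP}})}.
\end{align*}
```

The right-hand side of the last line is the quantity that appears as the first entry of the maximum in rule (P2); the non-membership condition \(\gamma _{P}\ge \gamma _{B}\) unfolds in the same way with \(\gamma _{\lambda _{\cdot \cdot }}\) in place of \(1-\mu _{\lambda _{\cdot \cdot }}\).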

When \(m^{*}(\bigcap \nolimits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)=0\), the inequalities \(m^{*}(\bigcap \nolimits _{i=1}^{n} R_{i}(x_{i_{k}}),v)(\log (1-\mu _{\lambda _{PP}})-\log (1-\mu _{\lambda _{BP}}))\ge 0\) and \(m^{*}(\bigcap \nolimits _{i=1}^{n} R_{i}(x_{i_{k}}),v)(\log \gamma _{\lambda _{PP}}-\log \gamma _{\lambda _{BP}})\ge 0\) always hold under condition (C0), so in this case \(\mu _{P}\le \mu _{B}\) and \(\gamma _{P}\ge \gamma _{B}\). Similarly, we also have \(\mu _{P}\le \mu _{N}\) and \(\gamma _{P}\ge \gamma _{N}\). Hence, when \(m^{*}(\bigcap \nolimits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)=0\), \(g(\bigwedge x_{i_{k}})\in POS(v)\).

When \(m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)\ne 0\),

For (N1), we conclude: \(m^{*}(\bigcap R_{i}(x_{i_{k}}),\lnot v)\ne 0\),

Thus, if \(\bigcap R_{i}(x_{i_{k}})\in {\mathcal {M}}\), then under condition (C0) the decision value rules (P1)-(N1) can be re-expressed as:

If \(m^{*}(\bigcap \nolimits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)=0\), then \(g(\bigwedge x_{i_{k}})\in POS(v)\), else

(P2) If \(\frac{m^{*}(\bigcap \nolimits _{i=1}^{n} R_{i}(x_{i_{k}}),v)}{m^{*}(\bigcap \nolimits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)}\ge \max \{\frac{\log (1-\mu _{\lambda _{BN}})-\log (1-\mu _{\lambda _{PN}})}{\log (1-\mu _{\lambda _{PP}})-\log (1-\mu _{\lambda _{BP}})},~\frac{\log \gamma _{\lambda _{BN}}-\log \gamma _{\lambda _{PN}}}{\log \gamma _{\lambda _{PP}}-\log \gamma _{\lambda _{BP}}}\}\)

and \(\frac{m^{*}(\bigcap \nolimits _{i=1}^{n} R_{i}(x_{i_{k}}),v)}{m^{*}(\bigcap \nolimits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)}\ge \max \{\frac{\log (1-\mu _{\lambda _{NN}})-\log (1-\mu _{\lambda _{PN}})}{\log (1-\mu _{\lambda _{PP}})-\log (1-\mu _{\lambda _{NP}})},~\frac{\log \gamma _{\lambda _{NN}}-\log \gamma _{\lambda _{PN}}}{\log \gamma _{\lambda _{PP}}-\log \gamma _{\lambda _{NP}}}\}\),

then \(g(\bigwedge x_{i_{k}})\in POS(v)\);

(N2) If \(\frac{m^{*}(\bigcap \nolimits _{i=1}^{n} R_{i}(x_{i_{k}}),v)}{m^{*}(\bigcap \nolimits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)}\le \min \{\frac{\log (1-\mu _{\lambda _{NN}})-\log (1-\mu _{\lambda _{PN}})}{\log (1-\mu _{\lambda _{PP}})-\log (1-\mu _{\lambda _{NP}})},~\frac{\log \gamma _{\lambda _{NN}}-\log \gamma _{\lambda _{PN}}}{\log \gamma _{\lambda _{PP}}-\log \gamma _{\lambda _{NP}}}\}\)

and \(\frac{m^{*}(\bigcap \nolimits _{i=1}^{n} R_{i}(x_{i_{k}}),v)}{m^{*}(\bigcap \nolimits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)}\le \min \{\frac{\log (1-\mu _{\lambda _{NN}})-\log (1-\mu _{\lambda _{BN}})}{\log (1-\mu _{\lambda _{BP}})-\log (1-\mu _{\lambda _{NP}})},~\frac{\log \gamma _{\lambda _{NN}}-\log \gamma _{\lambda _{BN}}}{\log \gamma _{\lambda _{BP}}-\log \gamma _{\lambda _{NP}}}\}\),

then \(g(\bigwedge x_{i_{k}})\in NEG(v)\).

(B2) If \(g(\bigwedge x_{i_{k}})\not \in POS(v)\) and \(g(\bigwedge x_{i_{k}})\not \in NEG(v)\), then we decide \(g(\bigwedge x_{i_{k}})\in BND(v)\).

Let

\(\alpha _{1}=\max \{a_{PB}=\frac{\log (1-\mu _{\lambda _{BN}})-\log (1-\mu _{\lambda _{PN}})}{\log (1-\mu _{\lambda _{PP}})-\log (1-\mu _{\lambda _{BP}})},~b_{PB}=\frac{\log \gamma _{\lambda _{BN}}-\log \gamma _{\lambda _{PN}}}{\log \gamma _{\lambda _{PP}}-\log \gamma _{\lambda _{BP}}}\}\),

\(\beta _{1}=\max \{a_{PN}=\frac{\log (1-\mu _{\lambda _{NN}})-\log (1-\mu _{\lambda _{PN}})}{\log (1-\mu _{\lambda _{PP}})-\log (1-\mu _{\lambda _{NP}})},~b_{PN}=\frac{\log \gamma _{\lambda _{NN}}-\log \gamma _{\lambda _{PN}}}{\log \gamma _{\lambda _{PP}}-\log \gamma _{\lambda _{NP}}}\}\),

\(\alpha _{2}=\min \{a_{PN}=\frac{\log (1-\mu _{\lambda _{NN}})-\log (1-\mu _{\lambda _{PN}})}{\log (1-\mu _{\lambda _{PP}})-\log (1-\mu _{\lambda _{NP}})},~b_{PN}=\frac{\log \gamma _{\lambda _{NN}}-\log \gamma _{\lambda _{PN}}}{\log \gamma _{\lambda _{PP}}-\log \gamma _{\lambda _{NP}}}\}\),

\(\beta _{2}=\min \{a_{NB}=\frac{\log (1-\mu _{\lambda _{NN}})-\log (1-\mu _{\lambda _{BN}})}{\log (1-\mu _{\lambda _{BP}})-\log (1-\mu _{\lambda _{NP}})},~b_{NB}=\frac{\log \gamma _{\lambda _{NN}}-\log \gamma _{\lambda _{BN}}}{\log \gamma _{\lambda _{BP}}-\log \gamma _{\lambda _{NP}}}\}\).

If \(\max \{\alpha _{1}, \beta _{1}\}>\min \{\alpha _{2}, \beta _{2}\}\), the decision value rules (P2)-(N2) can concisely be re-expressed as follows:

(P2) If \(m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)=0\) or \(\frac{m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),v)}{m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)}> \max \{\alpha _{1},\beta _{1}\}\), then \(g(\bigwedge x_{i_{k}})\in POS(v)\);

(B2) If \(\frac{m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),v)}{m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)}\le \max \{\alpha _{1},\beta _{1}\}\), and \(\frac{m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),v)}{m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)}\ge \min \{\alpha _{2},\beta _{2}\}\), then \(g(\bigwedge x_{i_{k}})\in BND(v)\);

(N2) If \(\frac{m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),v)}{m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)}< \min \{\alpha _{2},\beta _{2}\}\), then \(g(\bigwedge x_{i_{k}})\in NEG(v)\).
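The concise rules (P2), (B2) and (N2) reduce to comparing the ratio of the two fused masses with an upper and a lower threshold. A minimal sketch, assuming the thresholds have already been computed and satisfy upper > lower; the function name `classify_by_ratio` and the numeric values in the usage lines are illustrative only.

```python
def classify_by_ratio(m_v: float, m_not_v: float, upper: float, lower: float) -> str:
    """Three-way region of a fused DR from its fused masses.

    upper stands for max{alpha_1, beta_1} and lower for min{alpha_2, beta_2}.
    """
    if m_not_v == 0:            # first branch of (P2)
        return "POS"
    ratio = m_v / m_not_v
    if ratio > upper:           # second branch of (P2)
        return "POS"
    if ratio < lower:           # rule (N2)
        return "NEG"
    return "BND"                # rule (B2): lower <= ratio <= upper


# Made-up masses and thresholds, for illustration only.
print(classify_by_ratio(0.30, 0.00, upper=0.97, lower=0.82))
print(classify_by_ratio(0.50, 0.25, upper=0.97, lower=0.82))
print(classify_by_ratio(0.90, 1.00, upper=0.97, lower=0.82))
print(classify_by_ratio(0.20, 0.40, upper=0.97, lower=0.82))
```

Checking the zero-mass case first avoids a division by zero and mirrors the order in which (P2) is stated.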

If \(\bigcap R_{i}(x_{i_{k}})\in {\mathcal {M}}\) and \(\max \{\alpha _{1}, \beta _{1}\}=\min \{\alpha _{2}, \beta _{2}\}\), the decision value rules (P2)-(N2) can be concisely re-expressed as:

(P2) If \(m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)=0\) or \(\frac{m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),v)}{m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)}>\max \{\alpha _{1},\beta _{1}\}\), then \(g(\bigwedge x_{i_{k}})\in POS(v)\);

(B2) If \(\frac{m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),v)}{m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)}=\max \{\alpha _{1},\beta _{1}\}\), then \(g(\bigwedge x_{i_{k}})\in BND(v)\);

(N2) If \(\frac{m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),v)}{m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)}< \min \{\alpha _{2},\beta _{2}\}\), then \(g(\bigwedge x_{i_{k}})\in NEG(v)\).

In this case, for \(v\in V_d\), we can give one satisfactory decision value by using the above decision value rules. In the following, we want to improve decision value rules by improving the order method.

5.2 Three-Way Decisions Based on the Compromise Rule

The estimation of the loss function involves both the membership degree and the non-membership degree. As \(\le _{L^{*}}\) is a partial order relation, some loss function values may not be directly comparable. However, we can use a compromise rule to transform the partially ordered set into a totally ordered set and thereby facilitate three-way decisions. To achieve this, we define a compromise function E as follows: for \((x,y)\in L^{*}\),

\(E(x,y)=\rho x+(1-\rho )(1-y)\), \(\rho \in [0,1]\).

\(E(R(P|g_{v}(\bigwedge x_{i_{k}})))=\rho (1-(1-\mu _{\lambda _{PP}})^{m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),v)}(1-\mu _{\lambda _{PN}})^{m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)})+(1-\rho )(1- (\gamma _{\lambda _{PP}})^{m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),v)}(\gamma _{\lambda _{PN}})^{m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)})\),

\(E(R(B|g_{v}(\bigwedge x_{i_{k}})))=\rho (1-(1-\mu _{\lambda _{BP}})^{m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),v)}(1-\mu _{\lambda _{BN}})^{m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)})+(1-\rho )(1- (\gamma _{\lambda _{BP}})^{m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),v)}(\gamma _{\lambda _{BN}})^{m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)})\),

\(E(R(N|g_{v}(\bigwedge x_{i_{k}})))=\rho (1-(1-\mu _{\lambda _{NP}})^{m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),v)}(1-\mu _{\lambda _{NN}})^{m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)})+(1-\rho )(1- (\gamma _{\lambda _{NP}})^{m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),v)}(\gamma _{\lambda _{NN}})^{m^{*}(\bigcap \limits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)})\).

Note that \(E(R(P|g_{v}(\bigwedge x_{i_{k}})))\), \(E(R(B|g_{v}(\bigwedge x_{i_{k}})))\) and \(E(R(N|g_{v}(\bigwedge x_{i_{k}})))\) are real numbers, so in this case the total order \(\le\) can be used to compare them.

If \(\bigcap R_{i}(x_{i_{k}})\in {\mathcal {M}}\), under condition (C0), we define the following decision value rules:

\((P')\) If \(E(R(P|g_{v}(\bigwedge x_{i_{k}})))\le E(R(B|g_{v}(\bigwedge x_{i_{k}})))\) and \(E(R(P|g_{v}(\bigwedge x_{i_{k}})))\le E(R(N|g_{v}(\bigwedge x_{i_{k}})))\), decide \(g(\bigwedge x_{i_{k}})\in POS(v)\);

\((B')\) If \(E(R(B|g_{v}(\bigwedge x_{i_{k}})))< E(R(P|g_{v}(\bigwedge x_{i_{k}})))\) and \(E(R(B|g_{v}(\bigwedge x_{i_{k}})))< E(R(N|g_{v}(\bigwedge x_{i_{k}})))\), decide \(g(\bigwedge x_{i_{k}})\in BND(v)\);

\((N')\) If \(E(R(N|g_{v}(\bigwedge x_{i_{k}})))\le E(R(P|g_{v}(\bigwedge x_{i_{k}})))\) and \(E(R(N|g_{v}(\bigwedge x_{i_{k}})))\le E(R(B|g_{v}(\bigwedge x_{i_{k}})))\), decide \(g(\bigwedge x_{i_{k}})\in NEG(v)\).
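The compromise function and the rules \((P')\), \((B')\), \((N')\) can be sketched as below. The function names are hypothetical and the expected losses are passed in as `(mu, gamma)` pairs with made-up values. When \((P')\) and \((N')\) hold at the same time (equal scores for P and N), this sketch returns POS; that tie-break is an assumption and is not specified by the rules.

```python
from typing import Tuple

IFN = Tuple[float, float]  # an IF number (membership, non-membership)


def compromise(a: IFN, rho: float) -> float:
    """E(x, y) = rho * x + (1 - rho) * (1 - y), with rho in [0, 1]."""
    if not 0.0 <= rho <= 1.0:
        raise ValueError("rho must lie in [0, 1]")
    x, y = a
    return rho * x + (1.0 - rho) * (1.0 - y)


def decide_compromise(loss_P: IFN, loss_B: IFN, loss_N: IFN, rho: float) -> str:
    """Return 'POS', 'BND' or 'NEG' following rules (P'), (B'), (N')."""
    e_p, e_b, e_n = (compromise(t, rho) for t in (loss_P, loss_B, loss_N))
    if e_p <= e_b and e_p <= e_n:    # rule (P')
        return "POS"
    if e_b < e_p and e_b < e_n:      # rule (B')
        return "BND"
    return "NEG"                     # rule (N') is all that remains


# Illustrative (made-up) expected losses, with rho = 0.5.
print(decide_compromise((0.2, 0.7), (0.4, 0.5), (0.6, 0.3), rho=0.5))
print(decide_compromise((0.6, 0.3), (0.2, 0.7), (0.6, 0.3), rho=0.5))
```

Because the scores are real numbers, exactly one of the three branches fires for every input, which is the practical benefit of the compromise rule over the partial order \(\le _{L^{*}}\). Setting `rho=1` or `rho=0` gives the two special cases discussed next.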

Special case 1: When \(\rho =1\), we only need to consider the membership degree. With the convention \(\frac{1}{\infty }=0\) and under condition (C0), the decision value rules \((P')-(N')\) can be simplified. For the rule \((P')\), the first condition is described as:

Since \((\frac{1-\mu _{\lambda _{PP}}}{1-\mu _{\lambda _{BP}}})^{ m^{*}(\bigcap \nolimits _{i=1}^{n} R_{i}(x_{i_{k}}),v)}\ge 1\) and \((\frac{1-\mu _{\lambda _{PP}}}{1-\mu _{\lambda _{NP}}})^{ m^{*}(\bigcap \nolimits _{i=1}^{n} R_{i}(x_{i_{k}}),v)}\ge 1\) always hold, when \(m^{*}(\bigcap \nolimits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)=0\) we have \(g(\bigwedge x_{i_{k}})\in POS(v)\).

When \(m^{*}(\bigcap \nolimits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)\ne 0\), for \((N')\),

For \((B')\),

Therefore, if \(a_{PB}>a_{NB}\), the decision value rules can be concisely re-expressed as:

\((P1')\) If \(m^{*}(\bigcap \nolimits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)=0\) or \(\frac{m^{*}(\bigcap \nolimits _{i=1}^{n} R_{i}(x_{i_{k}}),v)}{m^{*}(\bigcap \nolimits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)}> \max \{a_{PB},a_{PN}\}\), then \(g(\bigwedge x_{i_{k}})\in POS(v)\);

\((B1')\) If \(\min \{a_{NB},a_{PN}\}\le \frac{m^{*}(\bigcap \nolimits _{i=1}^{n} R_{i}(x_{i_{k}}),v)}{m^{*}(\bigcap \nolimits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)}\le \max \{a_{PB},a_{PN}\}\), then \(g(\bigwedge x_{i_{k}})\in BND(v)\);

\((N1')\) If \(\frac{m^{*}(\bigcap \nolimits _{i=1}^{n} R_{i}(x_{i_{k}}),v)}{m^{*}(\bigcap \nolimits _{i=1}^{n} R_{i}(x_{i_{k}}),\lnot v)}< \min \{a_{NB},a_{PN}\}\), then \(g(\bigwedge x_{i_{k}})\in NEG(v)\).

Special case 2: When \(\rho =0\), we just need to take the non-membership degree into account. Under condition (C0), for the rule \((P')\), we describe the first condition as follows:

\(\begin{array}{ll} &{} E(R(P|g_{v}(\bigwedge x_{i_{k}})))\le E(R(B|g_{v}(\bigwedge x_{i_{k}})))\\ \Leftrightarrow &{} 1-\gamma _{\lambda _{PP}}^{m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),v)}\gamma _{\lambda _{PN}}^{m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),\lnot v)}\le 1-\gamma _{\lambda _{BP}}^{m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),v)}\gamma _{\lambda _{BN}}^{m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),\lnot v)} \\ \Leftrightarrow &{} \gamma _{\lambda _{PP}}^{m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),v)}\gamma _{\lambda _{PN}}^{m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),\lnot v)}\ge \gamma _{\lambda _{BP}}^{m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),v)}\gamma _{\lambda _{BN}}^{m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),\lnot v)}\\ \Leftrightarrow &{} \gamma _{P}\ge \gamma _{B}\\ \Leftrightarrow &{} \left\{ \begin{array}{ll} \frac{m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),v)}{m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),\lnot v)}\ge b_{PB}, &{} m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),\lnot v)\ne 0; \\ (\frac{\gamma _{\lambda _{PP}}}{\gamma _{\lambda _{BP}}})^{ m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),v)}\ge 1, &{} m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),\lnot v)=0. \end{array} \right. \end{array}\)

\(\begin{array}{ll} &{} E(R(P|g_{v}(\bigwedge x_{i_{k}})))\le E(R(N|g_{v}(\bigwedge x_{i_{k}})))\\ \Leftrightarrow &{} \gamma _{P}\ge \gamma _{N}\\ \Leftrightarrow &{} \left\{ \begin{array}{ll} \frac{m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),v)}{m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),\lnot v)}\ge b_{PN}, &{} m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),\lnot v)\ne 0; \\ (\frac{\gamma _{\lambda _{PP}}}{\gamma _{\lambda _{NP}}})^{ m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),v)}\ge 1, &{} m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),\lnot v)=0. \end{array} \right. \end{array}\)

Since \((\frac{\gamma _{\lambda _{PP}}}{\gamma _{\lambda _{BP}}})^{ m^{*}(\bigcap \nolimits _{i=1}^{n}R_{i}(x_{i_{k}}),v)}\ge 1\) and \((\frac{\gamma _{\lambda _{PP}}}{\gamma _{\lambda _{NP}}})^{ m^{*}(\bigcap \nolimits _{i=1}^{n}R_{i}(x_{i_{k}}),v)}\ge 1\) always hold, when \(m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),\lnot v)=0\) we have \(g(\bigwedge x_{i_{k}})\in POS(v)\).

When \(m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),\lnot v)\ne 0\), for \((N')\),

For \((B')\),

Therefore, if \(b_{PB}>b_{NB}\), the decision value rules can be concisely re-expressed as:

\((P2')\) If \(m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),\lnot v)=0\) or \(\frac{m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),v)}{m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),\lnot v)}> \max \{b_{PB},b_{PN}\}\), then \(g(\bigwedge x_{i_{k}})\in POS(v)\);

\((B2')\) If \(\min \{b_{NB},b_{PN}\}\le \frac{m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),v)}{m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),\lnot v)}\le \max \{b_{PB},b_{PN}\}\), then \(g(\bigwedge x_{i_{k}})\in BND(v)\);

\((N2')\) If \(\frac{m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),v)}{m^{*}(\bigcap \limits _{i=1}^{n}R_{i}(x_{i_{k}}),\lnot v)}< \min \{b_{NB},b_{PN}\}\), then \(g(\bigwedge x_{i_{k}})\in NEG(v)\).

From the above analyses, we find that these two special cases are degenerate forms of the three-way decisions of MIFDISs based on the IF relation.

5.3 Acquisition Algorithm of the Fused Decision Value Set for DRs

The fused decision value set can be calculated from the satisfactory decision values produced by the three-way decision method of MIFDISs proposed in Subsection 5.1, as demonstrated in the following algorithm (the algorithm for the fused decision value set with respect to Subsection 5.2 can be given similarly).

These methods are applicable to complete IF decision information systems that share the same set of objects, even if their attribute sets differ. These attribute sets can generate different reflexive binary relations, and the probability of each object is assumed to be nonzero. An IFDIS may be inconsistent, and the fusion of multiple inconsistent IFDISs can still be inconsistent, which leads to reasonable inconsistent DRs. The satisfactory fused decision value set relies on the IF numbers \(\lambda _{\cdot \cdot }\) (\(\cdot =P,B,N\)). In some cases, certain objects may not belong to POS(v) for any \(v\in V_{d}\), which means that we cannot obtain satisfactory fused decision value sets for these objects. In these situations, we can adjust the IF numbers \(\lambda _{\cdot \cdot }\) so that each object belongs to the acceptance region of at least one decision value. In Algorithm 1, if \(x_{1_{k}}=\ldots =x_{n_{k}}=x\), then \(D(\bigwedge x_{i_{k}})\) is denoted by D(x), \(\forall x\in U\).

Of course, for different forms of three-way decisions of MIFDISs, there are different algorithms. In these algorithms, only Step 3, Step 5 and Step 6 are different. For the case of the compromise rule, in Step 3, we need to compute thresholds \(a_{PB},a_{PN},a_{NB},b_{PB},b_{PN},b_{NB}\). Step 5 and Step 6 are the operations for decision value rules, so we only need to adjust specific operations to the cases of \((P')\), \((B')\) and \((N')\).

5.4 Example Analyses

We use the following examples to demonstrate this algorithm. First, Examples 5.1 and 5.2 demonstrate the calculation of the various quantities in Step 1.

Example 5.1

Suppose \(U=\{x_{1},x_{2},\ldots ,x_{6}\}\) is a universe of discourse, \(At^{1}=\{a_{1},a_{2},a_{3}\}\) is an attribute set, and \(d^{1}\) is a multi-valued decision attribute. The decision table is as follows (Table 1).

Let \(P(x_{i})=\frac{1}{6}\), \(\forall x_{i}\in U\), and

\(\mu _{R}(x_{i},x_{j})=\frac{1}{3}\sum _{k=1}^3(1-\sqrt{\frac{(\mu _{a_k}(x_{i})-\mu _{a_{k}}(x_{j}))^{2}+ (\gamma _{a_k}(x_{i})-\gamma _{a_{k}}(x_{j}))^{2}}{2}})\);

\(\gamma _{R}(x_{i},x_{j})=\frac{1}{3}\sum _{k=1}^3(1-\sqrt{\frac{(1-|\mu _{a_k}(x_{i})-\mu _{a_{k}}(x_{j})|)^{2}+ (1-|\gamma _{a_k}(x_{i})-\gamma _{a_{k}}(x_{j})|)^{2}}{2}})\).

For this decision information system, we can compute an IFR \(R_{1}\) as follows (Table 2).

Thus we can compute the confidence level of every DR as follows: for object \(x_{1}\), we have

\(c_{1}^{1'}(R_{1}(x_{1}))=\frac{1+\frac{0.65^{2}+(1-0.35)^{2}}{2}}{2}=0.71\), \(c_{2}^{1'}(R_{1}(x_{1}))=\frac{\frac{0.76^{2}+(1-0.23)^{2}}{2}+\frac{0.55^{2}+(1-0.42)^{2}}{2}}{2}=0.45\),

\(c_{3}^{1'}(R_{1}(x_{1}))=\frac{\frac{0.53^{2}+(1-0.47)^{2}}{2}+\frac{0.4^{2}+(1-0.57)^{2}}{2}}{2}=0.229\), then \(c_{1}^{1}(R_{1}(x_{1}))=\frac{0.71}{0.71+0.45+0.229}=0.51\),

\(c_{2}^{1}(R_{1}(x_{1}))=0.32\), \(c_{3}^{1}(R_{1}(x_{1}))=0.17\).

Thus for \(\lnot 1\), we have

Similarly, for object \(x_{2}\), we have

\(\begin{array}{lll} c_{1}^{ 1}(R_{1}(x_{2}))=0.33, &{} c_{2}^{ 1}(R_{1}(x_{2}))=0.51, &{} c_{3}^{ 1}(R_{1}(x_{2}))=0.16; \\ c_{\lnot 1}^{ 1}(R_{1}(x_{2}))=0.67, &{} c_{\lnot 2}^{ 1}(R_{1}(x_{2}))=0.49, &{} c_{\lnot 3}^{ 1}(R_{1}(x_{2}))=0.84. \end{array}\)

For object \(x_{3}\), we have

\(\begin{array}{lll} c_{1}^{ 1}(R_{1}(x_{3}))=0.27, &{} c_{2}^{ 1}(R_{1}(x_{3}))=0.21, &{} c_{3}^{ 1}(R_{1}(x_{3}))=0.52; \\ c_{\lnot 1}^{ 1}(R_{1}(x_{3}))=0.73, &{} c_{\lnot 2}^{ 1}(R_{1}(x_{3}))=0.79, &{} c_{\lnot 3}^{ 1}(R_{1}(x_{3}))=0.48. \end{array}\)

For object \(x_{4}\), we have

\(\begin{array}{lll} c_{1}^{1}(R_{1}(x_{4}))=0.23, &{} c_{2}^{1}(R_{1}(x_{4}))=0.53, &{} c_{3}^{1}(R_{1}(x_{4}))=0.24; \\ c_{\lnot 1}^{1}(R_{1}(x_{4}))=0.77, &{} c_{\lnot 2}^{1}(R_{1}(x_{4}))=0.47, &{} c_{\lnot 3}^{1}(R_{1}(x_{4}))=0.76. \end{array}\)

For object \(x_{5}\), we have

\(\begin{array}{lll} c_{1}^{1}(R_{1}(x_{5}))=0.22,&{} c_{2}^{1}(R_{1}(x_{5}))=0.14,&{}c_{3}^{1}(R_{1}(x_{5}))=0.64;\\ c_{\lnot 1}^{1}(R_{1}(x_{5}))=0.78,&{} c_{\lnot 2}^{1}(R_{1}(x_{5}))=0.86, &{}c_{\lnot 3}^{1}(R_{1}(x_{5}))=0.36. \end{array}\)

For object \(x_{6}\), we have

\(\begin{array}{lll} c_{1}^{1}(R_{1}(x_{6}))=0.43,&{} c_{2}^{1}(R_{1}(x_{6}))=0.26, &{}c_{3}^{1}(R_{1}(x_{6}))=0.31;\\ c_{\lnot 1}^{1}(R_{1}(x_{6}))=0.57, &{}c_{\lnot 2}^{1}(R_{1}(x_{6}))=0.74, &{}c_{\lnot 3}^{1}(R_{1}(x_{6}))=0.69. \end{array}\)

We can also obtain the IF belief function and the IF plausibility function defined in Definition 2.6, using \(T((a_1,b_1),(a_2,b_2))=(a_1\wedge a_2, b_1\vee b_2)\) and \(I((a_1,b_1),(a_2,b_2))=(b_1\vee a_2,a_1\wedge b_2 )\). Thus, \(\forall x_{i}\in U\), we can further calculate \(f_{R_{1}}(R_{1}(x_{i}))\).

For object \(x_{1}\), by \(Be(R_{1}(x_{1}))=0.22\), \(Pla(R_{1}(x_{1}))=0.58\), we have

\(f_{R_{1}}(R_{1}(x_{1}))=Be(R_{1}(x_{1}))+\sigma (R_{1}(x_{1}),(\widehat{1,0}))(Pla(R_{1}(x_{1}))-Be(R_{1}(x_{1})))=0.39\).

Similarly, we have \(f_{R_{1}}(R_{1}(x_{2}))=0.44\), \(f_{R_{1}}(R_{1}(x_{3}))=0.46\), \(f_{R_{1}}(R_{1}(x_{4}))=0.46\), \(f_{R_{1}}(R_{1}(x_{5}))=0.4\), \(f_{R_{1}}(R_{1}(x_{6}))=0.51\).

Using the quasi-probability function and the confidence level of DRs, the probability of every DR based on the D-S evidence theory can be obtained, that is, the mass function of DRs can be obtained. Thus for decision value 1,

\(m^{1}(R_{1}(x_{1}),1)=\frac{0.41\times 0.51}{0.41\times 0.51+0.44\times 0.33+0.47\times 0.27+0.46\times 0.23 +0.4\times 0.22+0.51\times 0.43}=0.23.\)

Similarly, we have \(m^{1}(R_{1}(x_{2}),1)=0.16\), \(m^{1}(R_{1}(x_{3}),1)=0.14\), \(m^{1}(R_{1}(x_{4}),1)=0.12\), \(m^{1}(R_{1}(x_{5}),1)=0.1\), \(m^{1}(R_{1}(x_{6}),1)=0.25\).
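The displayed computation of \(m^{1}(R_{1}(x_{1}),1)\) suggests that the mass of a DR is the product of the quasi-probability and the confidence level, normalised over all objects. The sketch below reproduces the numbers for decision value 1 under that reading. The function name `dr_mass` is hypothetical, and the quasi-probability values are copied exactly as they appear in the displayed fraction.

```python
from typing import List, Sequence


def dr_mass(quasi_prob: Sequence[float], confidence: Sequence[float]) -> List[float]:
    """Normalise the products f(R(x_k)) * c_v(R(x_k)) so that they sum to 1."""
    weights = [f * c for f, c in zip(quasi_prob, confidence)]
    total = sum(weights)
    if total == 0:
        raise ValueError("all weights are zero; the mass is undefined")
    return [w / total for w in weights]


# Values for x_1, ..., x_6 as they appear in the fraction for m^1(R_1(x_1), 1).
f_values = [0.41, 0.44, 0.47, 0.46, 0.40, 0.51]
c_values = [0.51, 0.33, 0.27, 0.23, 0.22, 0.43]

masses = dr_mass(f_values, c_values)
print([round(m, 2) for m in masses])  # [0.23, 0.16, 0.14, 0.12, 0.1, 0.25]
```

The rounded output agrees with the six values of \(m^{1}(R_{1}(x_{k}),1)\) listed above, and the unrounded masses sum to 1 by construction.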

For decision value \(\lnot 1\), we have

\(\begin{array}{lll} m^{1}(R_{1}(x_{1}),\lnot 1)=0.1, &{} m^{1}(R_{1}(x_{2}),\lnot 1)=0.17, &{} m^{1}(R_{1}(x_{3}),\lnot 1)=0.19,\\ m^{1}(R_{1}(x_{4}),\lnot 1)=0.2,&{} m^{1}(R_{1}(x_{5}),\lnot 1)=0.18,&{} m^{1}(R_{1}(x_{6}),\lnot 1)=0.16. \end{array}\)

For decision value 2, we have

\(\begin{array}{lll} m^{1}(R_{1}(x_{1}),2)=0.14,&{}m^{1}(R_{1}(x_{2}),2)=0.25,&{} m^{1}(R_{1}(x_{3}),2)=0.11,\\ m^{1}(R_{1}(x_{4}),2)=0.27,&{}m^{1}(R_{1}(x_{5}),2)=0.09,&{} m^{1}(R_{1}(x_{6}),2)=0.14. \end{array}\)

For decision value \(\lnot 2\), we have

\(\begin{array}{lll} m^{1}(R_{1}(x_{1}),\lnot 2)=0.15,&{}m^{1}(R_{1}(x_{2}),\lnot 2)=0.12,&{} m^{1}(R_{1}(x_{3}),\lnot 2)=0.21,\\ m^{1}(R_{1}(x_{4}),\lnot 2)=0.12,&{}m^{1}(R_{1}(x_{5}),\lnot 2)=0.18, &{}m^{1}(R_{1}(x_{6}),\lnot 2)=0.22. \end{array}\)

For decision value 3, we have

\(\begin{array}{lll} m^{1}(R_{1}(x_{1}),3)=0.07,&{}m^{1}(R_{1}(x_{2}),3)=0.08,&{}m^{1}(R_{1}(x_{3}),3)=0.27,\\ m^{1}(R_{1}(x_{4}),3)=0.13,&{}m^{1}(R_{1}(x_{5}),3)=0.27,&{}m^{1}(R_{1}(x_{6}),3)=0.18. \end{array}\)

For decision value \(\lnot 3\), we have

\(\begin{array}{lll} m^{1}(R_{1}(x_{1}),\lnot 3)=0.18,&{} m^{1}(R_{1}(x_{2}),\lnot 3)=0.21,&{} m^{1}(R_{1}(x_{3}),\lnot 3)=0.12,\\ m^{1}(R_{1}(x_{4}),\lnot 3)=0.2,&{} m^{1}(R_{1}(x_{5}),\lnot 3)=0.09,&{} m^{1}(R_{1}(x_{6}),\lnot 3)=0.2. \end{array}\)

Example 5.2

(Following Example 4.1) There is another IFDIS \((U, At^{2}, d^{2})\), where \(At^{2}=\{a_{4},a_{5},a_{6}\}\) is an attribute set and \(d^{2}\) is a multi-valued decision attribute. The second decision table is as follows (Table 3):

Let \(P(x_{i})=\frac{1}{6}\), \(\forall x_{i}\in U\).

For this information system, we can compute the IFR \(R_{2}\) as follows (Table 4):

Thus we can compute the confidence level of every DR as follows: for object \(x_{1}\), we have

\(\begin{array}{lll} c_{1}^{2}(R_{2}(x_{1}))=0.33,&{}c_{2}^{2}(R_{2}(x_{1}))=0.56,&{}c_{3}^{2}(R_{2}(x_{1}))=0.11;\\ c_{\lnot 1}^{2}(R_{2}(x_{1}))=0.67,&{}c_{\lnot 2}^{2}(R_{2}(x_{1}))=0.44,&{} c_{\lnot 3}^{2}(R_{2}(x_{1}))=0.89. \end{array}\)

Similarly, for object \(x_{2}\), we have

\(\begin{array}{lll} c_{1}^{2}(R_{2}(x_{2}))=0.32,&{} c_{2}^{2}(R_{2}(x_{2}))=0.57,&{} c_{3}^{2}(R_{2}(x_{2}))=0.11;\\ c_{\lnot 1}^{2}(R_{2}(x_{2}))=0.68, &{}c_{\lnot 2}^{2}(R_{2}(x_{2}))=0.43,&{} c_{\lnot 3}^{2}(R_{2}(x_{2}))=0.89. \end{array}\)

For object \(x_{3}\), we have

\(\begin{array}{lll} c_{1}^{2}(R_{2}(x_{3}))=0.22,&{} c_{2}^{2}(R_{2}(x_{3}))=0.1,&{} c_{3}^{2}(R_{2}(x_{3}))=0.68;\\ c_{\lnot 1}^{2}(R_{2}(x_{3}))=0.78,&{} c_{\lnot 2}^{2}(R_{2}(x_{3}))=0.9,&{} c_{\lnot 3}^{2}(R_{2}(x_{3}))=0.32. \end{array}\)

For object \(x_{4}\), we have

\(\begin{array}{lll} c_{1}^{2}(R_{2}(x_{4}))=0.22,&{} c_{2}^{2}(R_{2}(x_{4}))=0.1,&{}c_{3}^{2}(R_{2}(x_{4}))=0.68;\\ c_{\lnot 1}^{2}(R_{2}(x_{4}))=0.78,&{} c_{\lnot 2}^{2}(R_{2}(x_{4}))=0.9,&{} c_{\lnot 3}^{2}(R_{2}(x_{4}))=0.32. \end{array}\)

For object \(x_{5}\), we have

\(\begin{array}{lll} c_{1}^{2}(R_{2}(x_{5}))=0.35,&{} c_{2}^{2}(R_{2}(x_{5}))=0.32, &{}c_{3}^{2}(R_{2}(x_{5}))=0.33;\\ c_{\lnot 1}^{2}(R_{2}(x_{5}))=0.65,&{} c_{\lnot 2}^{2}(R_{2}(x_{5}))=0.68,&{} c_{\lnot 3}^{2}(R_{2}(x_{5}))=0.67. \end{array}\)

For object \(x_{6}\), we have

\(\begin{array}{lll} c_{1}^{2}(R_{2}(x_{6}))=0.52,&{} c_{2}^{2}(R_{2}(x_{6}))=0.14,&{} c_{3}^{2}(R_{2}(x_{6}))=0.34;\\ c_{\lnot 1}^{2}(R_{2}(x_{6}))=0.48,&{} c_{\lnot 2}^{2}(R_{2}(x_{6}))=0.86,&{} c_{\lnot 3}^{2}(R_{2}(x_{6}))=0.66. \end{array}\)

And, we have the focal element set \(\{R_{2}(x_{1}),R_{2}(x_{2}),R_{2}(x_{3})=R_{2}(x_{4}),R_{2}(x_{5}),R_{2}(x_{6})\}\), then

\(f_{R_{2}}(R_{2}(x_{1}))=0.35\), \(f_{R_{2}}(R_{2}(x_{2}))=0.35\), \(f_{R_{2}}(R_{2}(x_{3}))=f_{R_{2}}(R_{2}(x_{4}))=0.32\), \(f_{R_{2}}(R_{2}(x_{5}))=0.34\), \(f_{R_{2}}(R_{2}(x_{6}))=0.35\).

Then, the possible fused DR set is \(\{DR^{2}(x_{1},v),DR^{2}(x_{2},v),DR^{2}(x_{3},v)=DR^{2}(x_{4},v),DR^{2}(x_{5},v),DR^{2}( x_{6},v),\forall v\in V_{d_{2}}\}\), and the DR IF mass functions are as follows. For decision value 1, we have

\(\begin{array}{lll} m^{2}(R_{2}(x_{1}),1)=0.17, &{} m^{2}(R_{2}(x_{2}),1)=0.17,&{} m^{2}(R_{2}(x_{3}),1)=0.21,\\ m^{2}(R_{2}(x_{5}),1)=0.18,&{} m^{2}(R_{2}(x_{6}),1)=0.27.&{} \end{array}\)

For decision value \(\lnot 1\), we have

\(\begin{array}{lll} m^{2}(R_{2}(x_{1}),\lnot 1)=0.17,&{}m^{2}(R_{2}(x_{2}),\lnot 1)=0.18,&{}m^{2}(R_{2}(x_{3}),\lnot 1)=0.37,\\ m^{2}(R_{2}(x_{5}),\lnot 1)=0.16,&{} m^{2}(R_{2}(x_{6}),\lnot 1)=0.12.&{} \end{array}\)

For decision value 2, we have

\(\begin{array}{lll} m^{2}(R_{2}(x_{1}),2)=0.31, &{}m^{2}(R_{2}(x_{2}),2)=0.32,&{} m^{2}(R_{2}(x_{3}),2)=0.11,\\ m^{2}(R_{2}(x_{5}),2)=0.18,&{}m^{2}(R_{2}(x_{6}),2)=0.08.&{} \end{array}\)

For decision value \(\lnot 2\), we have

\(\begin{array}{lll} m^{2}(R_{2}(x_{1}),\lnot 2)=0.11,&{}m^{2}(R_{2}(x_{2}),\lnot 2)=0.11,&{}m^{2}(R_{2}(x_{3}),\lnot 2)=0.41,\\ m^{2}(R_{2}(x_{5}),\lnot 2)=0.16,&{}m^{2}(R_{2}(x_{6}),\lnot 2)=0.21.&{} \end{array}\)

For decision value 3, we have

\(\begin{array}{lll} m^{2}(R_{2}(x_{1}),3)=0.05,&{} m^{2}(R_{2}(x_{2}),3)=0.05,&{} m^{2}(R_{2}(x_{3}),3)=0.59,\\ m^{2}(R_{2}(x_{5}),3)=0.15,&{}m^{2}(R_{2}(x_{6}),3)=0.16. &{} \end{array}\)

For decision value \(\lnot 3\), we have

\(\begin{array}{lll} m^{2}(R_{2}(x_{1}),\lnot 3)=0.24,&{}m^{2}(R_{2}(x_{2}),\lnot 3)=0.24,&{}m^{2}(R_{2}(x_{3}),\lnot 3)=0.16,\\ m^{2}(R_{2}(x_{5}),\lnot 3)=0.18,&{}m^{2}(R_{2}(x_{6}),\lnot 3)=0.18.&{} \end{array}\)

Furthermore, we consider the fused mass function of DRs and the selection of optimal decision values using three-way decisions.

Example 5.3

(Following Examples 5.1 and 5.2) Step 2: We have \({\mathcal {M}}=\{R_{1}(x_{1})\cap R_{2}(x_{1}), R_{1}(x_{2})\cap R_{2}(x_{2}),R_{1}(x_{3})\cap R_{2}(x_{3})=R_{1}(x_{3})\cap R_{2}(x_{4}),R_{1}(x_{4})\cap R_{2}(x_{3})=R_{1}(x_{4})\cap R_{2}(x_{4}),R_{1}(x_{5})\cap R_{2}(x_{5}),R_{1}(x_{6})\cap R_{2}(x_{6})\}\), and we then obtain the fused mass function of DRs:

Similarly, we have \(m^*(R_{1}(x_{2})\cap R_{2}(x_{2}),1)=0.16\).

Since \(DR(x_{3},1)=DR(x_{3}\bigwedge x_{4},1)\), we only need to compute \(m^*(R_{1}(x_{3})\cap R_{2}(x_{3}),1)\), which gives \(m^*(R_{1}(x_{3})\cap R_{2}(x_{3}),1)=0.152\). Likewise, since \(DR(x_{4},1)=DR(x_{4}\bigwedge x_{3},1)\), we only need to compute \(m^*(R_{1}(x_{4})\cap R_{2}(x_{4}),1)\), which gives \(m^*(R_{1}(x_{4})\cap R_{2}(x_{4}),1)=0.146\). Moreover, \(m^*(R_{1}(x_{5})\cap R_{2}(x_{5}),1)=0.142\) and \(m^*(R_{1}(x_{6})\cap R_{2}(x_{6}),1)=0.226\).

Similarly, for decision value \(\lnot 1\), we have

\({ \begin{array}{lll} m^*(R_{1}(x_{1})\cap R_{2}(x_{1}),\lnot 1)=0.143,&{}m^*(R_{1}(x_{2})\cap R_{2}(x_{2}),\lnot 1)=0.159,&{}m^*(R_{1}(x_{3})\cap R_{2}(x_{3}),\lnot 1)=0.192,\\ m^*(R_{1}(x_{4})\cap R_{2}(x_{4}),\lnot 1)=0.193,&{}m^*(R_{1}(x_{5})\cap R_{2}(x_{5}),\lnot 1)=0.148,&{}m^*(R_{1}(x_{6})\cap R_{2}(x_{6}),\lnot 1)=0.165. \end{array}}\)

For decision value 2, we have

\(\begin{array}{lll} m^*(R_{1}(x_{1})\cap R_{2}(x_{1}),2)=0.204,&{}m^*(R_{1}(x_{2})\cap R_{2}(x_{2}),2)=0.24,&{} m^*(R_{1}(x_{3})\cap R_{2}(x_{3}),2)=0.12,\\ m^*(R_{1}(x_{4})\cap R_{2}(x_{4}),2)=0.141,&{}m^*(R_{1}(x_{5})\cap R_{2}(x_{5}),2)=0.136,&{}m^*(R_{1}(x_{6})\cap R_{2}(x_{6}),2)=0.159. \end{array}\)

For decision value \(\lnot 2\), we have

\(\begin{array}{lll} m^*(R_{1}(x_{1})\cap R_{2}(x_{1}),\lnot 2)=0.134,&{} m^*(R_{1}(x_{2})\cap R_{2}(x_{2}),\lnot 2)=0.131,&{} m^*(R_{1}(x_{3})\cap R_{2}(x_{3}),\lnot 2)=0.215,\\ m^*(R_{1}(x_{4})\cap R_{2}(x_{4}),\lnot 2)=0.173,&{}m^*(R_{1}(x_{5})\cap R_{2}(x_{5}),\lnot 2)=0.15,&{} m^*(R_{1}(x_{6})\cap R_{2}(x_{6}),\lnot 2)=0.197. \end{array}\)

For decision value 3, we have

\(\begin{array}{lll} m^*(R_{1}(x_{1})\cap R_{2}(x_{1}),3)=0.099,&{} m^*(R_{1}(x_{2})\cap R_{2}(x_{2}),3)=0.1,&{} m^*(R_{1}(x_{3})\cap R_{2}(x_{3}),3)=0.288,\\ m^*(R_{1}(x_{4})\cap R_{2}(x_{4}),3)=0.192,&{}m^*(R_{1}(x_{5})\cap R_{2}(x_{5}),3)=0.153,&{} m^*(R_{1}(x_{6})\cap R_{2}(x_{6}),3)=0.168. \end{array}\)

For decision value \(\lnot 3\), we have

\(\begin{array}{lll} m^*(R_{1}(x_{1})\cap R_{2}(x_{1}),\lnot 3)=0.191,&{}m^*(R_{1}(x_{2})\cap R_{2}(x_{2}),\lnot 3)=0.198,&{} m^*(R_{1}(x_{3})\cap R_{2}(x_{3}),\lnot 3)=0.134,\\ m^*(R_{1}(x_{4})\cap R_{2}(x_{4}),\lnot 3)=0.149,&{} m^*(R_{1}(x_{5})\cap R_{2}(x_{5}),\lnot 3)=0.139,&{} m^*(R_{1}(x_{6})\cap R_{2}(x_{6}),\lnot 3)=0.189. \end{array}\)

In Example 5.3, we used Step 2 of Algorithm 1 to obtain the fused IF mass functions of DRs listed above. In the following, we discuss how to obtain the optimal decision value set according to Steps 3 to 7 of Algorithm 1.

Example 5.4

(Following Example 5.3) Step 3: Let \(\lambda _{PP}=(0.1,0.9)\), \(\lambda _{BP}=(0.65,0.3)\), \(\lambda _{NP}=(0.85,0.1)\), \(\lambda _{NN}=(0,1)\), \(\lambda _{BN}=(0.5,0.4)\), \(\lambda _{PN}=(0.8,0.15)\).

Then we have \(\alpha _{1}=\max \{a_{PB}=0.97,b_{PB}=0.89\}=0.97\), \(\beta _{1}=\max \{a_{PN}=0.9,b_{PN}=0.86\}=0.9\), \(\alpha _{2}=\min \{a_{PN}=0.9,b_{PN}=0.86\}=0.86\), \(\beta _{2}=\min \{a_{NB}=0.82,b_{NB}=0.83\}=0.82\). Thus \(\max \{\alpha _1,\beta _1\}>\min \{\alpha _2,\beta _2\}\).
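The six thresholds of Step 3 can be recomputed directly from the loss values with base-10 logarithms, following the definitions of \(a_{PB}\), \(b_{PB}\), \(a_{PN}\), \(b_{PN}\), \(a_{NB}\) and \(b_{NB}\) in Subsection 5.1. The sketch below is only a numerical check; the helper names `log_ratio` and `thresholds` and the dictionary layout are hypothetical.

```python
import math
from typing import Dict, Tuple

IFN = Tuple[float, float]  # an IF number (membership, non-membership)


def log_ratio(n1: float, n2: float, d1: float, d2: float) -> float:
    """(log n1 - log n2) / (log d1 - log d2), with base-10 logarithms."""
    return (math.log10(n1) - math.log10(n2)) / (math.log10(d1) - math.log10(d2))


def thresholds(loss: Dict[str, IFN]) -> Dict[str, float]:
    """Compute a_PB, a_PN, a_NB (from 1 - mu) and b_PB, b_PN, b_NB (from gamma)."""
    one_minus_mu = {k: 1.0 - v[0] for k, v in loss.items()}
    gamma = {k: v[1] for k, v in loss.items()}
    out: Dict[str, float] = {}
    for prefix, val in (("a", one_minus_mu), ("b", gamma)):
        out[prefix + "_PB"] = log_ratio(val["BN"], val["PN"], val["PP"], val["BP"])
        out[prefix + "_PN"] = log_ratio(val["NN"], val["PN"], val["PP"], val["NP"])
        out[prefix + "_NB"] = log_ratio(val["NN"], val["BN"], val["BP"], val["NP"])
    return out


# Loss values of Step 3.
loss = {"PP": (0.1, 0.9), "BP": (0.65, 0.3), "NP": (0.85, 0.1),
        "NN": (0.0, 1.0), "BN": (0.5, 0.4), "PN": (0.8, 0.15)}

t = thresholds(loss)
alpha1 = max(t["a_PB"], t["b_PB"])
beta1 = max(t["a_PN"], t["b_PN"])
alpha2 = min(t["a_PN"], t["b_PN"])
beta2 = min(t["a_NB"], t["b_NB"])
print({k: round(v, 2) for k, v in t.items()})
print(round(max(alpha1, beta1), 2), round(min(alpha2, beta2), 2))  # 0.97 0.82
```

Note that the formulas require every \(1-\mu\) and every \(\gamma\) involved to be strictly positive; the loss values of this example satisfy that requirement.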

Since \(m^*(\bigcap \limits _{i=1}^{2}R_{i}(x_{i_{k}}),\lnot v)\ne 0\), we turn to Step 5. According to Step 5, we can give the optimal decision value of every object.

For decision value 1, we have

Since \(\frac{m^*(R_{1}(x_{1})\cap R_{2}(x_{1}),1)}{m^*(R_{1}(x_{1})\cap R_{2}(x_{1}),\lnot 1)}>\max \{\alpha _1,\beta _1\}\), we have \(dv(x_{1})=POS(1)\). Thus, we conclude \(dv(x_{1})=POS(1)\), \(dv(x_{2})=POS(1)\), \(dv(x_{3})=NEG(1)\), \(dv(x_{4})=NEG(1)\), \(dv(x_{5})=BND(1)\), \(dv(x_{6})=POS(1)\).

For decision value 2, we have

\(\frac{m^*(R_{1}(x_{1})\cap R_{2}(x_{1}),2)}{m^*(R_{1}(x_{1})\cap R_{2}(x_{1}),\lnot 2)}=\frac{0.204}{0.134}=1.524\), \(\frac{m^*(R_{1}(x_{2})\cap R_{2}(x_{2}),2)}{m^*(R_{1}(x_{2})\cap R_{2}(x_{2}),\lnot 2)}=\frac{0.24}{0.131}=1.83\), \(\frac{m^*(R_{1}(x_{3})\cap R_{2}(x_{3}),2)}{m^*(R_{1}(x_{3})\cap R_{2}(x_{3}),\lnot 2)}=\frac{0.12}{0.215}=0.555\),

\(\frac{m^*(R_{1}(x_{4})\cap R_{2}(x_{4}),2)}{m^*(R_{1}(x_{4})\cap R_{2}(x_{4}),\lnot 2)}=\frac{0.142}{0.173}=0.82\), \(\frac{m^*(R_{1}(x_{5})\cap R_{2}(x_{5}),2)}{m^*(R_{1}(x_{5})\cap R_{2}(x_{5}),\lnot 2)}=\frac{0.136}{0.15}=0.905\), \(\frac{m^*(R_{1}(x_{6})\cap R_{2}(x_{6}),2)}{m^*(R_{1}(x_{6})\cap R_{2}(x_{6}),\lnot 2)}=0.806\),

thus we conclude \(dv(x_{1})= \{POS(1),POS(2)\}, ~dv(x_{2})= \{POS(1),POS(2)\}\), \(dv(x_{3})=\{NEG(1),NEG(2)\}\), \(dv(x_{4})=\{NEG(1),NEG(2)\}\), \(dv(x_{5})=\{BND(1), BND(2)\}\),

\(dv(x_{6})=\{POS(1),NEG(2)\}\).

For decision value 3, we have

\(\frac{m^*(R_{1}(x_{1})\cap R_{2}(x_{1}),3)}{m^*(R_{1}(x_{1})\cap R_{2}(x_{1}),\lnot 3)}=\frac{0.099}{0.191}=0.52\), \(\frac{m^*(R_{1}(x_{2})\cap R_{2}(x_{2}),3)}{m^*(R_{1}(x_{2})\cap R_{2}(x_{2}),\lnot 3)}=\frac{0.1}{0.198}=0.5\), \(\frac{m^*(R_{1}(x_{3})\cap R_{2}(x_{3}),3)}{m^*(R_{1}(x_{3})\cap R_{2}(x_{3}),\lnot 3)}=\frac{0.288}{0.134}=2.15\),

\(\frac{m^*(R_{1}(x_{4})\cap R_{2}(x_{4}),3)}{m^*(R_{1}(x_{4})\cap R_{2}(x_{4}),\lnot 3)}=\frac{0.192}{0.149}=1.3\), \(\frac{m^*(R_{1}(x_{5})\cap R_{2}(x_{5}),3)}{m^*(R_{1}(x_{5})\cap R_{2}(x_{5}),\lnot 3)}=\frac{0.153}{0.139}=1.1\), \(\frac{m^*(R_{1}(x_{6})\cap R_{2}(x_{6}),3)}{m^*(R_{1}(x_{6})\cap R_{2}(x_{6}),\lnot 3)}=\frac{0.168}{0.189}=0.889\),

thus we conclude that

\(dv(x_{1})=\{POS(1),POS(2),NEG(3)\}\), \(dv(x_{2})=\{POS(1),POS(2),NEG(3)\}\),

\(dv(x_{3})=\{NEG(1),NEG(2),POS(3)\}\), \(dv(x_{4})=\{NEG(1),NEG(2),POS(3)\}\),

\(dv(x_{5})=\{BND(1), BND(2),POS(3)\}\), \(dv(x_{6})= \{POS(1),NEG(2),BND(3)\}\).

Thus, considering the two IFDISs together, the satisfactory fused decision value sets generated by the six objects are \(D(x_{1})=\{1,2\}\), \(D(x_{2})=\{1,2\}\), \(D(x_{3})=\{3\}\), \(D(x_{4})=\{3\}\), \(D(x_{5})=\{3\}\), and \(D(x_{6})=\{1\}\).
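The classification for decision value 3 can be checked with a short sketch. It takes the fused masses listed in Example 5.3 and the thresholds from Step 3, and it assumes that Step 5 assigns POS when the ratio strictly exceeds \(\max \{\alpha _1,\beta _1\}\), NEG when it is strictly below \(\min \{\alpha _2,\beta _2\}\), and BND otherwise. The function name `classify` and the variable names are illustrative and do not come from Algorithm 1.

```python
# Fused masses for decision value 3 and its complement, copied from Example 5.3
# (objects x1..x6, in order).
m_v = [0.099, 0.1, 0.288, 0.192, 0.153, 0.168]
m_not_v = [0.191, 0.198, 0.134, 0.149, 0.139, 0.189]

# Thresholds from Step 3 of Example 5.4.
alpha1, beta1 = 0.97, 0.9
alpha2, beta2 = 0.86, 0.82
upper = max(alpha1, beta1)  # 0.97
lower = min(alpha2, beta2)  # 0.82


def classify(ratio, upper, lower):
    """Assumed reading of Step 5: strict inequalities, BND for everything else."""
    if ratio > upper:
        return "POS"
    if ratio < lower:
        return "NEG"
    return "BND"


# Ratio of the mass of v to the mass of not-v for each object.
ratios = [a / b for a, b in zip(m_v, m_not_v)]
regions = [classify(r, upper, lower) for r in ratios]

for i, (r, region) in enumerate(zip(ratios, regions), start=1):
    print(f"x{i}: ratio={r:.3f} -> {region}(3)")
```

Under these assumptions the sketch places \(x_{1}\) and \(x_{2}\) in the negative region, \(x_{3}\), \(x_{4}\) and \(x_{5}\) in the positive region, and \(x_{6}\) in the boundary region for decision value 3, in agreement with the sets \(dv(x_{i})\) given above.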

From this example, we find that there are two satisfactory DRs generated by using object \(x_{1}\), that is, \((a_{1}, (0.6,0.3))\wedge (a_{2}, (1,0))\wedge (a_{3}, (0,1))\wedge (a_{4},(1,0)) \wedge (a_{5},(0.1,0.7))\wedge (a_{6},(0.3,0.4) )\rightarrow (d,1)\) and \((a_{1}, (0.6,0.3))\wedge (a_{2}, (1,0))\wedge (a_{3}, (0,1))\wedge (a_{4},(1,0)) \wedge (a_{5},(0.1,0.7))\wedge (a_{6},(0.3,0.4) )\rightarrow (d,2)\).

The satisfactory decision value sets (SDVSs) of all objects of IFDIS 1 in Example 5.1 and IFDIS 2 in Example 5.2 are computed from their respective mass functions and compared with those of the MIFDIS, as shown in Table 5.

For three-way decisions based on the compromise rule, when \(\rho =1\) we have \(\max \{a_{PB},a_{PN}\}=a_{PB}=0.97\) and \(\min \{a_{NB},a_{PN}\}=a_{NB}=0.82\), so the fused decision value set of every object is the same as the satisfactory fused decision value set in Example 5.4. However, when \(\rho =0\), we have \(\max \{b_{PB},b_{PN}\}=0.89\) and \(\min \{b_{NB},b_{PN}\}=0.83\), thus

\(dv(x_{1})=\{POS(1),POS(2),NEG(3)\}\), \(dv(x_{2})=\{POS(1),POS(2),NEG(3)\}\),

\(dv(x_{3})=\{NEG(1),NEG(2),POS(3)\}\), \(dv(x_{4})=\{NEG(1),NEG(2),POS(3)\}\),

\(dv(x_{5})=\{POS(1), POS(2),POS(3)\}\), \(dv(x_{6})= \{POS(1),NEG(2),BND(3)\}\).

Thus the fused decision value set of \(x_{5}\) is \(D(x_{5})=\{1,2,3\}\), which is different from the satisfactory fused decision value set of \(x_{5}\) in Example 5.4.
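The shift for \(x_{5}\) can be illustrated with the ratios reported in Example 5.4. The sketch below uses only the two threshold pairs quoted in the text for \(\rho =1\) and \(\rho =0\); it does not model how the compromise rule behaves for intermediate values of \(\rho\). It again assumes strict inequalities for POS and NEG, and the names `classify`, `x5_ratios`, and `thresholds` are illustrative. The ratio of \(x_{5}\) for decision value 1 is not restated here, so only decision values 2 and 3 are covered.

```python
def classify(ratio, upper, lower):
    """Assumed rule: POS above the upper threshold, NEG below the lower one."""
    if ratio > upper:
        return "POS"
    if ratio < lower:
        return "NEG"
    return "BND"


# Ratios reported for x5 in Example 5.4, keyed by decision value.
x5_ratios = {2: 0.905, 3: 1.1}

# (upper, lower) threshold pairs quoted in the text for rho = 1 and rho = 0.
thresholds = {1: (0.97, 0.82), 0: (0.89, 0.83)}

results = {
    rho: {v: classify(r, upper, lower) for v, r in x5_ratios.items()}
    for rho, (upper, lower) in thresholds.items()
}

for rho, regions in results.items():
    print(f"rho={rho}: " + ", ".join(f"{reg}({v})" for v, reg in regions.items()))
```

With \(\rho =1\) the ratio 0.905 lies between the two thresholds, so decision value 2 stays in the boundary region; with \(\rho =0\) the upper threshold drops to 0.89 and the same ratio moves into the positive region.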

To provide a clearer representation of the satisfactory decision sets for all objects, the ratios of mass functions of different objects are depicted in blue, red, and gray lines under IFDIS 1, IFDIS 2, and MIFDIS, as shown in Figs. 1, 2 and 3. In Figs. 1, 2 and 3, the horizontal axis represents the 6 objects, while the vertical axis represents the ratio \({\overline{m}}\) of the mass function corresponding to a given decision value 1, 2, or 3. These figures also allow us to determine the satisfactory decision sets of each object in the three IFDISs with varying loss functions.

Comparative analysis reveals that when the values of \({\overline{m}}\) of both the first and second IFDISs are small, the values of \({\overline{m}}\) in MIFDIS are also small; when the values of \({\overline{m}}\) in both systems are large, the values of \({\overline{m}}\) in MIFDIS tend to be large as well; when one system has a large value and the other has a small value, the fused value of \({\overline{m}}\) typically falls in between.

In some cases, the number of possible fusion decision rules generated can exceed the number of elements, as demonstrated in the following example.

Example 5.5

Let \(U=\{x_{1},x_{2},\ldots ,x_{10}\}\), let \(R^{1}\) and \(R^{2}\) be two IFRs on U, and let \(d=\{1,2,3\}\) and \(P(\{x_{i}\})=\frac{1}{10}\). Then \((U,R^{1},d^{1})\) and \((U,R^{2},d^{2})\) are two IFDISs, as shown in Tables 6, 7 and 8.

When \(\lambda _{PP}=(0.1,0.9)\), \(\lambda _{BP}=(0.65,0.3)\), \(\lambda _{NP}=(0.85,0.1)\), \(\lambda _{NN}=(0,1)\), \(\lambda _{BN}=(0.5,0.4)\), and \(\lambda _{PN}=(0.8,0.15)\), the satisfactory decision sets of each object in the three IFDISs are as listed in Table 9.

To provide a clearer representation of the satisfactory decision sets for all objects, the ratios of mass functions of different objects are depicted in blue, red, and gray lines under IFDIS 1, IFDIS 2, and MIFDIS, as shown in Figs. 4, 5 and 6. In Figs. 4, 5 and 6, the horizontal axis represents the 10 objects, while the vertical axis represents the ratio \({\overline{m}}\) of the mass function corresponding to a given decision value 1, 2, or 3. These figures also allow us to determine the satisfactory decision sets of each object in the three IFDISs with varying loss functions.

From these three figures, we can draw the following conclusions.

(1) As \(\mu _{\lambda _{PP}}\) and \(\mu _{\lambda _{PN}}\) increase, and \(\mu _{\lambda _{BN}}\) and \(\mu _{\lambda _{BP}}\) decrease, \(a_{PB}\) is monotonically non-decreasing. In this case, \(\alpha _{1}\) does not necessarily decrease, but the positive region does not increase.

(2) As \(\mu _{\lambda _{PP}}\) and \(\mu _{\lambda _{PN}}\) increase, and \(\mu _{\lambda _{NN}}\) and \(\mu _{\lambda _{NP}}\) decrease, \(a_{PN}\) is monotonically non-decreasing. In this case, \(\beta _{1}\) and \(\alpha _{2}\) do not necessarily decrease, thus the positive region does not increase, and the negative region does not decrease.

(3) As \(\mu _{\lambda _{BP}}\) and \(\mu _{\lambda _{BN}}\) increase, and \(\mu _{\lambda _{NN}}\) and \(\mu _{\lambda _{NP}}\) decrease, \(a_{NB}\) is monotonically non-decreasing. In this case, \(\beta _{2}\) does not necessarily decrease, but the negative region does not decrease.

(4) As \(\gamma _{\lambda _{PP}}\) and \(\gamma _{\lambda _{PN}}\) increase, and \(\gamma _{\lambda _{BN}}\) and \(\gamma _{\lambda _{BP}}\) decrease, \(b_{PB}\) is monotonically non-increasing. In this case, \(\alpha _{1}\) does not necessarily increase, but the positive region does not decrease.

(5) As \(\gamma _{\lambda _{PP}}\) and \(\gamma _{\lambda _{PN}}\) increase, and \(\gamma _{\lambda _{NN}}\) and \(\gamma _{\lambda _{NP}}\) decrease, \(b_{PN}\) is monotonically non-increasing. In this case, \(\alpha _{2}\) and \(\beta _{1}\) do not necessarily increase, thus the positive region does not decrease, and the negative region does not increase.

(6) As \(\gamma _{\lambda _{BP}}\) and \(\gamma _{\lambda _{BN}}\) increase, and \(\gamma _{\lambda _{NN}}\) and \(\gamma _{\lambda _{NP}}\) decrease, \(b_{NB}\) is monotonically non-increasing. In this case, \(\beta _{2}\) does not necessarily increase, but the negative region does not increase.

Thus, for different values of loss functions, we can get the fused satisfactory decision sets of each object shown in Table 10.

Table 10 shows that the satisfactory decision sets selected for the fused decision values agree with the preceding analysis of how changes in the loss function values affect the positive region of each decision value.

In the following, we compare the satisfactory decision sets of each object based on the IF relation and the compromise rule, as shown in Table 11.

The analysis above demonstrates that for some loss functions, the satisfactory decision sets of each object from the three different partial orders are identical, indicating insensitivity of the loss function to these partial orders. However, in certain cases, the satisfactory decision sets of each object corresponding to the three partial orders can vary, suggesting sensitivity of the loss function to these partial orders. Therefore, by utilizing the monotonicity of loss functions, we can adjust the values of the six loss functions to better align with specific requirements. Moreover, in this example, it is observed that the elements in the positive region of the IF relation are included in the positive region of the CO when \(\rho = 0\). Similarly, the elements in the positive region of the CO when \(\rho = 1\) are encompassed within the positive region of the IF relation.

5.5 Data Analyses

We apply our proposed method based on the IF relation to analyze the satisfactory decision sets for each object in the Computer Hardware dataset from the UCI repository. This dataset consists of 209 objects with 10 attributes. Initially, we conduct data preprocessing by identifying and removing data with significantly deviated values considered as noise. Subsequently, we select the initial 200 objects and divide them into two groups to establish two information systems, with objects sorted from 1 to 100 within each system. As the first two attributes are deemed unsuitable for IFS construction, we exclude these attributes and focus on the remaining eight for our analysis. The values of the remaining eight attributes are normalized to ascertain their membership degrees. Next, we use random methods within Excel software to calculate the non-membership degrees of IF numbers. It is ensured that the sum of membership and non-membership degrees is greater than or equal to 0.5. Finally, decision values ranging from 1 to 5 are randomly assigned to each object.
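The preprocessing steps described above can be sketched as follows. This is a minimal illustration that does not reproduce the Excel workflow: it assumes min-max normalization (the text only says the attributes are normalized), draws each non-membership degree uniformly from the interval that keeps the sum of the two degrees between 0.5 and 1 (the upper bound is the usual IFS constraint), and splits the 200 objects into the first and second hundred. Synthetic integers stand in for the eight retained attributes of the dataset, and all function names are hypothetical.

```python
import random


def min_max_normalize(column):
    """Scale a column to [0, 1]; the result is used as the membership degree."""
    lo, hi = min(column), max(column)
    if hi == lo:
        return [0.0 for _ in column]
    return [(x - lo) / (hi - lo) for x in column]


def random_non_membership(mu, rng, min_sum=0.5):
    """Draw nu so that min_sum <= mu + nu <= 1 (assumed sampling scheme)."""
    low = max(0.0, min_sum - mu)
    high = 1.0 - mu
    return rng.uniform(low, high)


rng = random.Random(0)
n_objects, n_attrs = 200, 8

# Synthetic stand-in for the eight retained attributes (not the real data).
raw = [[rng.randint(1, 1000) for _ in range(n_attrs)] for _ in range(n_objects)]

# Normalize column by column to obtain membership degrees.
mu_columns = [min_max_normalize(col) for col in zip(*raw)]

# Build one list of (membership, non-membership) pairs per object.
if_rows = [
    [(mu_columns[j][i], random_non_membership(mu_columns[j][i], rng))
     for j in range(n_attrs)]
    for i in range(n_objects)
]

# Assumed split: first hundred objects and second hundred objects.
system1, system2 = if_rows[:100], if_rows[100:]

# Decision values 1..5 assigned at random, as in the text.
decisions = [rng.randint(1, 5) for _ in range(n_objects)]

print(len(system1), len(system2), system1[0][0])
```

Sampling the non-membership degree from a constrained interval, rather than rejecting invalid draws, keeps every generated pair a valid IF number by construction.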

First, let the probability of every object be \(\frac{1}{100}\), and let \(\lambda _{PP}=(0.1, 0.8)\), \(\lambda _{BP}=(0.65, 0.3)\), \(\lambda _{NP}=(0.85, 0.1)\), \(\lambda _{NN}=(0.1, 0.9)\), \(\lambda _{BN}=(0.5, 0.4)\), \(\lambda _{PN}= (0.8, 0.15)\). The resulting trends in the values of \({\overline{m}}\) for IFDIS 1, IFDIS 2, and MIFDIS are illustrated in Figs. 7, 8, 9, 10 and 11.

The horizontal axis in each figure represents the 100 objects, while the vertical axis represents the ratio \({\overline{m}}\) of the mass functions corresponding to decision values 1 to 5. The blue line shows the values of \({\overline{m}}\) in the first IFDIS, the orange line shows the values of \({\overline{m}}\) in the second IFDIS, and the gray line shows the values of \({\overline{m}}\) in the MIFDIS. Because the non-membership degrees of conditional attributes and the decision values in these systems are randomly generated, objects with completely identical values are seldom encountered, and the values of \({\overline{m}}\) in each information system oscillate around 1. Comparing the IFDISs over the 100 objects shows that the values of \({\overline{m}}\) in MIFDIS also tend to fluctuate around 1. Furthermore, the trends observed in the values of \({\overline{m}}\) in MIFDIS generally align with those in Example 5.4 and Example 5.5. The figures also show that the use of conflict evidence fusion rules may lead to situations in MIFDIS where some values of \({\overline{m}}\) exceed or fall below the values of \({\overline{m}}\) in both original IFDISs. This does not hinder the identification of satisfactory decision sets for each object using the three-way decision method. Therefore, we can use Fig. 12 to illustrate the satisfactory decision sets for IFDIS 1, IFDIS 2, and MIFDIS.