Abstract

Improving predicting methods for streamflow series is an important task for the water resource planning, management, and agriculture process. This study demonstrates the development and effectiveness of a new hybrid model for streamflow predicting. In the present study, artificial neural networks (ANNs) coupled with wavelet transform, namely Additive Wavelet Transform (AWT), are proposed. Comparative analyses of Discrete wavelet transform (DWT) based ANN and conventional ANN techniques with the proposed method were presented. The analysis of these models was performed with monthly streamflow series for four stations on the Çoruh Basin, which is located in northeastern Turkey. The Bayesian regularization backpropagation training algorithm was employed for the optimization of the ANN network. The predicted results of the models were analyzed by the root mean square error (RMSE), Akaike information criterion (AIC), and coefficient of determination (R2). The obtained revealed that the proposed hybrid model represents significant accuracy compared to other models, and thus it can be a useful alternative approach for predicting studies.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Short- and long-term reliable prediction of river flows are vital for the management, planning and design of water resources. It is also important for various issues related to water resources such as flood control, hydropower generation in drought periods, land use, agriculture and transport planning in rivers. A number of streamflow prediction methods have been proposed and employed in previous studies. They generally fall under statistical/stochastical based and conceptual/physically based methods. Statistical- or stochastic-based techniques include simple and multiple linear and nonlinear regression, autoregressive moving average (ARMA) models, ARMA with exogenous variables (ARMAX), and transfer function techniques (Salas et al. 2000). These techniques are generally called as black-box type of models. On the other hand, conceptual or physically based techniques that are usually employed for streamflow prediction depend on mathematical descriptions of the physical processes that take place in a watershed.

During the last decades, an alternative approach to streamflow prediction has been developed based on Artificial Neural Networks (ANNs) and it has been successfully applied in various areas of hydrological predicting. For instance, Hsu et al. (1995) compared the ANN model with linear ARMAX in rainfall-runoff modeling. They emphasized that the ANN model performed better than ARMAX. Cigizoglu (2003) investigated the performance of the ANN model in predicting of the streamflow data. Unes et al. (2015) employed ANN models to forecast daily reservoir levels for Miller Ferry Dam in USA. They found that the capabilities and accuracy of ANN was better than those of the conventional models. Rajendra et al. (2019) used ANN models for the prediction of hourly meteorological data and compared its results with those of the Multiple linear regression (MLR). They underlined that ANN models achieved higher satisfactory results than the MLR.

Although ANN has been widely adopted in the fields of hydrology and water resource, there are criticisms about it, too. It does not explain as to the structure of the physical process of the data analyzed during the modeling phase (Partal and Cigizoglu 2008), and also the accuracy of the model mostly depends on the expert's capability and knowledge (Cigizoglu 2004). At this point, recently, wavelet and ANN conjunction models have been developed to improve the performance of ANN. The wavelet analysis, which provides information on the behavior and structure of the structure of the series observed in the time–frequency domain, has been successfully applied in the prediction of the hydrological series (Danandeh Mehr et al. 2013; Sithara et al. 2020; Abda et al. 2021).

In these methods, the time series analysis is decomposed into many different resolution components using wavelet techniques. Then, the resulting sub-series are used as the input of ANN. In the last years, several researches relevant to hybrid discrete wavelet transform and ANN (DWT–ANN) have been done in the field of hydrology and water resources. For example, Wang and Ding (2003) suggested a wavelet network model to increase the predict accuracy of the ANN technique. They applied the proposed method for the estimation of the short- and long-term periods of the monthly groundwater level and daily streamflow data. The results indicated that the new method could extend the estimated time and increase the success of the prediction in the hydrological time series. Nourani et al. (2009) utilized ANN in conjunction with the wavelet transform for 1-day-ahead runoff discharge forecasting. This multivariable model has significantly improved the prediction accuracy of the ANN in the rainfall–runoff forecasting models. Mehr et al. (2014) employed wavelet—ANN model and investigated the effect of discrete wavelet transform on neural networks in the estimation of monthly flows. They used the sub-series of the main series as inputs for ANN. Sun et al. (2019) assessed the ability of the wavelet-based ANN model for streamflow forecasting and compared it with a single ANN model. They suggest that the coupled wavelet neural network model is better than the single model. Siddiqi et al. (2021) utilized wavelet pre-processing to improve the accuracy of the ANN model for monthly mean streamflow prediction in Indus River Basin, Pakistan. The results indicate that the integrated models were found to perform better in monthly streamflow prediction compared to other single models.

In this study, we propose a neural network model combined with additive wavelet transform (AWT) for the prediction of hydrology and water resource time series. AWT has been used in the field of image processing and also to define the trends of the time series in hydrology (Nunez et al. 1999; Otazu et al. 2005; Tosunoglu and Kaplan 2018).

In this study, the main reason for using AWT in addition to other classical wavelet transformations is that it is easier to implement, more practical and its calculation cost is lower, especially for large data. AWT basically decomposes the analyzed time series to approximate and wavelet subcomponents. The approximation component (low frequency component) is obtained by a bicubic spline filter (Yilmaz et al. 2020). The detailed component of the time series is obtained from the difference between the approximate component subbands. To achieve a higher level of decomposition, the same process is applied to the approximation component. The time series decomposition process with AWT is much easier in comparison with the other conventional wavelet transform techniques. To our knowledge, there is not published work using AWT for the prediction of hydrometerological variables. This present application is the first study for predicting streamflow using additive wavelet transform and artificial neural network (AWT–ANN) in the literature. In this paper, a new method is suggested for streamflow modelling from previous flow data using Additive Wavelet Transform-based neural network methods. The research methodology of the paper is as follows. First, DWT and AWT techniques are utilized to decompose the analyzed series into detailed and approximate components, which give information about the periodic structure of the data set. Secondly, proper wavelet components are used as inputs to the ANN to predict the monthly streamflow data from four stations in Çoruh Basin, one of Turkey's most important watersheds in terms of water potential. Finally, to evaluate predictive ability of the models, the results obtained from AWT–ANN are compared with those of the DWT–ANN and single ANN and prediction accuracy of the AWT–ANN model is evaluated and discussed.

Methods

Artifical neural networks (ANN)

Artificial neural networks (ANN) can be defined as a data-driven statistical approach that can quickly solve non-linear relationships between input and output data. ANN is a black box that generates outputs for given inputs (Kohonen 1988). An advantage of ANN is the ability to provide models in which the relationship between input and output variables is not fully understood in complex nonlinear problems (Belayneh et al. 2014) ANN is used as a powerful tool for estimation in the different areas of hydrology and water resources (Kisi 2005; Athar and Ayaz 2021). In this study, feed forward back propagation (FFBP) trained with the Bayesian regularization backpropagation algorithm is used as ANN model. Nourani et al. (2008) has proved that FFBP model with the three-layer provides satisfactory results for prediction. For this reason, the FFBP network model used in this study consists of three layers network, namely, an input layer, a hidden layer, and an output layer. In Fig. 1, the structure of the feed—forward network consisting of neurons connected by the connections is given. The connection between the first and the last layer is provided by hidden neurons. The back propagation algorithm with two phases is the most popular learning method for the network in the training process.

First, each node receives the weighted input, which is the output of each node in the previous layer and transmits it to the nodes of the next layer by means of links for proper output after processing it with an activation function. Second, according to the error function calculated by difference between the signal obtained in the output layer and the original signal, the connection weights are updated backwards (Partal 2009; Adamowski and Sun 2010). The values of the weights can be updated during the network training until the calculated error in Eq. (1) is minimized.

where N is length of the training data. k indicates the output neuron number. \({T}_{ij}\) is the observed values. \({O}_{ij}\) is the network output value at the end of the training.

Wavelet transform

Wavelet transform is a multi-resolution analysis that can provide information about the behavior of a signal in the time–frequency domain (Saraiva et al. 2021). One advantage of the wavelet transform compared to the Fourier transform is that it performs with different window sizes analysis instead of a single window technique to decompose the signal. The main problem in using a single window is that the temporal information can be lost at high-frequency while the window is sliding through the analyzed signal (Torrence and Compo 1998). The wavelet transform uses the narrow and wide window analysis to decompose the signal into low and high resolution components, respectively. The second advantage is that since the hydro-meteorological time series are mostly non-stationary, the wavelet transform is considered as a more successful tool than Fourier analysis in these series (Partal and Kisi 2007). It has a wide area of usage for predicting of hydrological variables and for defining the trends of time series in the fields of hydrology and water resources. In this study, two different wavelet forms which are explained in detail in the following sections are used for ANN-based prediction models.

Additive wavelet transform

The conventional AWT technique is widely employed for image analysis (Nunez et al. 1999; Otazu et al. 2005). Firstly, the image is decomposed with a bicubic spline filter to acquire the first approximation compound (Nunez et al. 1999). Since the time series analyzed in this study are 1D, the 1D AWT decomposition approach explained by Tosunoglu and Kaplan (2018) is used to obtain components that give information about the structure of the time series.

The following 1D filter is used for the first decomposition level.

The original data are decomposed by convolving the series with the filter in Eq. (2) and at the end of the process the first approach compound is obtained as:

where, \(A_{{\text{o}}}\) represents the extracted state of the original data and \(*\) is the convolution operator. \(A_{1}\) indicates the first approximation compound. The first detail component is the difference between \(A_{{\text{o}}}\) and \(A_{1}\).

Filtering is continued as follows until the determined decomposition level.

where, \(A_{l}\) shows \(l\;{\text{th}}\) approximation component. The detailed component in the \(l\;{\text{th}}\) level is defined as:

The reconstruction of the signal decomposed for L level is as seen in Eq. (7):

The flowchart for the two levels decomposition of the AWT, which separates the data into the sub-series, is presented in Fig. 2.

Flow chart for 2 levels of decomposition and reconstruction via AWT (Tosunoglu and Kaplan 2018)

Discrete wavelet transform (DWT)

The DWT technique is widely used for prediction and trend analysis, as the hydrological and water resources time series are generally measured at discrete intervals (Nalley et al. 2012; Mehr et al. 2014; Joshi et al. 2016). An advantage of DWT compared to other classic transformation types such as Continuous wavelet transform is that the process of decomposition is easier and it provides an accurate and effective analysis instead of redundant information (Partal and Kucuk 2006). In addition, thanks to the orthogonal feature of the DWT, the decomposed signal can be easily reconstruction (Torrence and Compo 1998). The DWT has the form as

where \(\Psi\) represents the mother wavelet function; a and b are integers that symbolize the wavelet scale and the translation parameter, respectively. \(s_{{\text{o}}}\) is a constant dilation step and its value is greater than 1. \(\tau_{{\text{o}}}\) represent location variable and its value should be higher than zero. The values of \(s_{{\text{o}}}\) and \(\tau_{{\text{o}}}\) are determined as 2 and 1, respectively (Mallat 1989). The coefficients for DWT at scale \(s = 2^{a}\) and location \(\tau = 2^{a} b\) can be defined as:

The DWT operates to obtain approximate and detailed components at any level with low pass filter and a high pass filter, respectively. The approximate components provide information on the low frequency of the signal, while the detailed components contain information on the frequencies of the signal at different levels from high to low (Freire et al. 2019).

Study area and data

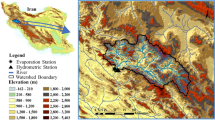

Çoruh river basin situated in north-east of Turkey was chosen as the study area in this study. The Çoruh river originates from Bayburt and discharges to the Black Sea in Batum City and its drainage area is approximately 19.748 km2. The mean annual flow for this river is about 200 m3/s inside Turkey's borders (Danandeh Mehr et al. 2013). Recently, with water structures such as dam and hydroelectric power plants built in the basin has gained significant contribution to Turkey's economy. The Çoruh River has a total of 37 water structures, 10 of which are on the main line and 27 are on the side branches. The annual energy potential is about 16 billion kWh which constitutes 6% of the total energy produced in Turkey (Sume et al. 2017).

Monthly streamflow prediction plays a critical role in the field of water resource management and hydrologic analysis to solve problems of water resource dispatching plans, supply, irrigation, and reservoir operation. (Partal 2009; Honorato et al. 2018). In this study, monthly average flows belonging to four gauging stations in the Çoruh basin were used. These stations were chosen since the observation periods are sufficient and the observations are not influenced by human intervention. The missing flow data of the stations 2323 and 2320 in the period between 2003–2004 and 1990–1992, respectively, were estimated by means of an artificial neural network (Can et al. 2012). Figure 3 gives the map of the study area and the location of the selected gauging stations. The basic statistical characteristics of monthly average flows and some information about gauging stations are presented in Table 1. The symbols SD, Cs and Cv in the table represent the standard deviation, skewness coefficient and coefficient of variation, respectively. It can be seen from the Table 1 that the monthly mean streamflow varies about between 14 and 70 m3/s (stations 2321 and 2305, respectively). The SD for monthly mean streamflow varies approximately between 13 and 75 m3/s and its maximum value is determined at station 2305. The skewness coefficient indicates the degree of asymmetry in a probability distribution function around the point corresponding to the mean. The skewness value for the monthly streamflow varies from 1.34 (station 2321) to 2.56 (station 2323). It can be said that the data has a high positive skewness. In other words, monthly average flows show a scattered distribution. The Cv is the ratio of standard deviation to the average of the observed data series. Cv is a dimensionless value that is used to compare parameter sizes of different variables. The Cv range from 0.94 to 1.13 for monthly values.

Efficiency criteria

The performance of the models used in this study is evaluated by means of several statistical criteria which identified the errors relevant to the model. The coefficient of determination (R2), the root mean squared error (RMSE) and the Akaike Information Criterion (AIC) are employed to evaluate the accuracy of the agreement between the observed and predicted values in the ANN, DWT–ANN and AWT–ANN model.

The RMSE is the square root of the sum of the squares of errors that known as difference between output and target values. The smaller is the value of RMSE, the higher is the accuracy of the model. The R2 evaluates the strength of the correlation between the observed and predicted values. This coefficient ranges from 0 to 1. A higher value of the coefficient indicates that the performance of the model has a high efficiency. The RMSE and R2 are defined as:

where n denotes the number of data points used; \(Q_{i}^{{{\text{obs}}}}\), \(\overline{Q}\) and \(Q_{i}^{{{\text{pre}}}}\) represent observed values, average of the observed values and predicted data, respectively.

The most important problem in ANN modeling is the excess of parameters to be predicted. Because, this situation can reduce the predicted performance of the models and lead to uncertainty (Kisi 2009). The information criteria is suggested by Anders and Korn (1999) for selecting the best ANN model. The main reason for the use of information criteria is the ability to penalize the model to avoid the use of excessive parameters by taking into account the estimation of the model parameters (Kumar et al. 2005). The akaike information criterion (AIC) recommended by Akaike (1981) is one of the commonly used criteria. Based on Salas et al. (1980), the AIC is defined as:

where \(n\) is the length of the data used as input; \(p\) representes the number of parameters used in the model; \(\sigma_{\varepsilon }^{2}\) denotes the variance of the residual values. The low AIC value indicates high performance of the model.

Model development

ANN models

In this part of the study, ANN models for monthly mean streamflow prediction are developed through the commercial software MATLAB R2016 program. We used three-layer feed-forward back propagation (FFBP) networks consisting of an input layer, an output layer and one hidden layer. Tangent sigmoid and linear transfer functions are utilized in the hidden layer and output layer, respectively. The Bayesian regularization backpropagation algorithm was employed in the network training process. Initially, ANN models with different input combinations are trained and tested. In this study, since the antecedent flows are used to predict flow at current time, the number of neurons in the input layer is chosen based on the partial auto-correlation function (PCF) of the monthly mean flows (Shiri and Kisi 2010). The PCF for monthly mean data is given in Fig. 4. This figure clearly shows that the lags that are up to three previous months have a significant impact on the current time. Thus, different combinations were generated for each station using three antecedent lags and these combinations are used as inputs for ANN models. Different combinations can be written mathematically as follows:

where \(Q_{t}\) represents monthly mean streamflow at time t; \(Q_{t - 1}\), \(Q_{t - 2}\) and \(Q_{t - 3}\) denote one month previous flow, two months previous flow and three months previous flow, respectively. One of the most important tasks in ANN modeling is to detect the optimal number of neurons in the hidden layer for an efficient network. As mentioned in the previous sections, excessive number of parameters can cause overfitting problem in the network's training phase.

Conversely, if too few neurons are used in the network, the network may not be able to fully understand the incoming signal from data and this may cause loss of information. According to the researchers, increasing the number of neurons in the hidden layer does not increase the efficiency of the model (Cannas et al. 2006; Wu et al. 2009). For this reason, the number of hidden neurons was define using the trial-and-error technique proposed by the Kisi (2008) and the highest number of neurons we considered was 10. The optimal number of hidden nodes was define according to the AIC value calculated in the test process at each trial. In the literature, there is no definite information about the period of data used in the training and testing steps of the network. However, in general, the length of the data used for training in the studies varies between 70 and 90% of the entire data length (Partal and Cigizoglu 2008). In this study, the first 70 percent of the observations at all stations are selected for the training of the ANN models and the remaining 30 percent are used as test data.

Wavelet-based models

The wavelet-neural network conjunction models are ANN model that uses, as input, sub-series (detail and approximation components) obtained by AWT and DWT. The structure of the wavelet-neural network model developed in this study is given in Fig. 5. Each sub-series component contains important information about the structure of the series. To improve the prediction performance of ANN, ANN models are constructed with the decomposed series and an original series is obtained as output. However, there are two important tasks that need to be done before decomposing the series. The first is the determination of the proper mother wavelet for the DWT. The other is the choice of appropriate decomposition level (Sharie et al. 2020). There are different methods proposed regarding mother wavelet selection in the literature (Nalley et al. 2012; Nourani et al. 2014). For the trend analysis and prediction of the hydrology and water resources time series, The Daubechies wavelet, one of the wavelet families, has been commonly used (Adamowski et al. 2012; Nalley et al. 2013; Araghi et al. 2015). Due to the Daubechies wavelet’ s ease of use, compact support and orthogonal feature (Vonesch et al. 2007), it was determined as the mother wavelet in this study. These features provide the wavelets to be adjusted to include the high and low frequencies of the signal (Nalley et al. 2013). There are different types of Daubechies (db) wavelet family from db1 to db10. In this study, these db types are examined for each data set. To find the type of suitable db wavelet, we have considered the method suggested by Nourani et al. (2009). They compared the effects of different wavelet types on the performance of the wavelet–ANN models and used the RMSE and R2 as criterion to compare the results. According to this procedure, we used the DWT with db6 mother to obtain different resolution sub-components. Our results suggest that db6 can successfully capture the characteristics of the data analyzed in the study.

For the minimum level of decomposition, the following formula recommended by Wang and Ding (2003) is used.

where n is the number of observations; \({\text{INT}}\) represents integer number. In this study, the record lengths vary from 480 to 588 months. Based on Eq. (16), the minimum decomposition level is 2. However, it is selected five to analyze the effect of higher scales on wavelet–ANN models. For instance, the all sub-time series for Station 2323 at the Çoruh watershed has been illustrated in Fig. 6.

The structure consists of two phases. In the pre-processing phase, the series is decomposed into approximation and detail components using DWT and AWT.

The decomposition process is repeated up to the selected decomposition level, so that the series is split into many low resolution components.

In the second phase, namely simulation phase, at first, for the DWT–ANN and AWT–ANN models, the developed ANN networks consists of input layer, hidden layer and output layer consisting of one node denoting the monthly streamflow. The input nodes are determined through following the procedure below. Based on the correlation coefficient between each sub-time series (DW) and the original data, the DW components are selected as input for ANN models. Each of these components represents the different characteristics of the data. Finally, the selected DW components (and approximation series) are summed up to increase the efficiency of the ANN models, and the summed new series is used as input to the ANN model (Kisi 2009; Adamowski and Chan 2011). As in single ANNs, each model analyzed by trial-and-error method to specify the optimum number of neurons in the hidden layer, depending on the number of neurons in the model’s hidden layer. Then, the first 70 percent of the data series at all stations were selected for the training of the AWT–ANN and DWT–ANN models and the remaining 30 percent were used for testing of the methods.

Results

Tables 2, 3, 4 and 5 present the ANN, DWT–ANN, AWT–ANN model performance statistics results( AIC, RMSE and R2) and their model structures for stations 2305, 2320, 2321 and 2323, respectively. The model structure column given in the tables indicates the number of input, hidden and output neurons for each station, respectively. For each ANN, DWT–ANN and AWT–ANN models, the optimal number of hidden neurons that gives the minimum AIC value was selected.

For Station 2305, three input combinations according to monthly mean streamflow of antecedent periods are evaluated to predict current streamflow value. Table 2 indicates that the AWT–ANN model is found to provide more accurate prediction results than the DWT–ANN models and regular ANN models in the prediction of monthly average flows of Station 2305. The best AWT–ANN is a function of the monthly mean streamflow from one, two and three months ago. The best AWT–ANN model has one hidden layer with five neurons. The best AWT–ANN model with a coefficient of determination (R2) of 0.9237, root mean square error (RMSE) of 21.4153 and Akaike information criterion (AIC) of 1136 for the test period showed higher performance than the best DWT–ANN model (R2 = 0.8678, RMSE = 28.1826 and AIC = 1233) and ANN model (R2 = 0.6985, RMSE = 42.5594 and AIC = 1370). Figure 7 compares the predicted values obtained from the best ANN, AWT–ANN and DWT–ANN models and the observed flow values for the test period. It can be seen from the Fig. 5 that The AWT model is able to capture the structure of the observed flows values better than the ANN and DWT–ANN models, especially in high flows. Figure 7 also shows scatterplots comparing the observed and estimated streamflow values derived from these models. The scatterplots denote that the AWT–ANN model has more concentrated estimates around the identity line.

The RMSE, R2 and AIC statistics of the ANN, DWT–ANN and AWT–ANN models for the Station 2320 are given in Table 3. The best AWT–ANN model clearly has higher values R2 (0.9389) and lower RMSE (8.4813) and AIC (681.2857) than those of the best DWT–ANN (R2 = 0.7957, RMSE = 15.5103 and AIC = 855.55) and ANN (R2 = 0.6886, RMSE = 19.14 and AIC = 902.787) models. Table 3 indicates that the AWT–ANN model has a better performance than the ANN and DWT–ANN models in terms of RMSE, R2 and AIC. In the best AWT–ANN model, the input consists of the streamflow of three previous months. The optimum number of hidden neurons of the ANN, DWT–ANN, AWT–ANN models are found to vary between 2 and 5. For the best ANN, DWT–ANN and AWT–ANN models, the number of hidden neurons are 4, 5 and 5, respectively. The prediction performances of the best ANN and DWT–ANN and AWT–ANN models are compared in Fig. 8. It is understood from the figure that AWT–ANN model estimations show a higher harmony with original data than those of the ANN and DWT–ANN models. For the best performing ANN, DWT–ANN and AWT–ANN models, a scatterplot is also presented in Fig. 8. The scatterplots show that the estimates of the AWT–ANN model are much closer to the original time series than those of the ANN, DWT–ANN model.

For the Station 2321, the performance statistics of ANN, DWT–ANN and AWT–ANN models in test period are shown in Table 4. It can be seen from the table the best AWT–ANN model, which has R2 of 0.9015, RMSE of 4.2733 and AIC of 480.5707 shows the highest performance than the best DWT–ANN model (R2 = 0.8069, RMSE = 5.9816 and AIC = 574.1946) and ANN model (R2 = 0.6494, RMSE = 8.0603 and AIC = 635.5891) in predicting monthly mean flows. The best AWT–ANN model whose inputs consist of the flows of the previous three months has the highest accuracy to predict the monthly average flows of the Stations 2321. In all models, the optimal neurons number in the hidden layer varies between 2 and 6. The best AWT–ANN, DWT–ANN and ANN models have 6, 6 and 4 neurons, respectively, in the hidden layer. To graphically compare the performance of the best AWT–ANN, DWT–ANN and ANN models, Fig. 9 presents the scatterplots and plots for predicted and observed data in the test period. It is obvious from the figure that the AWT–ANN model has a high ability to predict the monthly average flows of Station 2321. The AWT–ANN model estimations have better harmony with the observed data compared to the ANN and DWT–ANN models.

Table 5 gives the performance statistics results statistics of ANN, DWT–ANN and AWT–ANN models for station 2323. From Table 5, RMSE and AIC values (14.133 and 922.4664, respectively) and high R2 (0.8218) value for the best AWT–ANN models were found when compared to the best ANN(R2 = 0.5646, RMSE = 22.0821 and AIC = 1080) and DWT–ANN (R2 = 0.8178, RMSE = 14.2903 and AIC = 971.8352) models.It is observed from the table that the accuracy of the AWT–ANN model having two antecedent values of the data series in monthly streamflow prediction is better than ANN and DWT–ANN models. For the ANN, DWT–ANN, AWT–ANN models, the optimal number of hidden nodes in the hidden layer varies between 2 and 9 and the best AWT–ANN, DWT–ANN and ANN models have 4, 7 and 4 neurons, respectively, in this layer. Figure 10 shows the hydrograph of observed and predicted values for the best AWT–ANN, DWT–ANN and ANN models over the test period. This illustration clearly shows that the predicted values obtained from AWT–ANN model are slightly better matched with the observed values compared with those of the ANN and DWT–ANN models. Figure 10 also presents the scatterplot between the predicted and observed streamflow for station 2323. It can be seen that the AWT–ANN estimates are slightly closer to the regression line than those of the DWT–ANN and ANN.

Overall, it can be concluded for monthly streamflow predicting the AWT–ANN models provided more accurate predicting results than the DWT–ANN and single ANN models.

As aforementioned, overfitting is an important problem in ANN modeling because it can reduce the prediction ability of the model and lead to consequent uncertainty. As it was mentioned previously, in this study, the trial-and-error method recommended by the Kisi (2008) is used to avoid overparameterization. For choosing the optimal nodes number in hidden layer, the Akaike information criterion value is used for test period in each trial as it penalizes the models having more parameters. For example, It can be seen from Table 5 that in single ANN modeling the RMSE value of Model 3 is smaller than that of Model 2 and the value of R2 is greater. In other words, the Model 3 is better than model 2 in terms of RMSE and R2. However, 12 (2 × 4 + 4 × 1) and 36 (3 × 9 + 9 × 1) weights are used for model2 and model3, respectively. In addition, the AIC value of Model 2 is smaller than that of the Model 3. Thus, to avoid overfitting issue, the best single ANN model is determined as Model 2.

Discussion

The raw signal is decomposed into various resolution intervals using AWT and DWT technique. Thus, the behavior of periodic structures of original time series can be seen more clearly through sub-series. The ANN model is reconstructed with the effective wavelet components to belong to various resolution levels. The accuracy of the model predictions shows better performance than those obtained directly by original time series. In other words, since the subseries belonging to the different wavelet resolution levels are used as inputs in the network, the combined neural-wavelet model increases the ANN predicting performance. Table 6 gives the best results for each model at all stations. It is clear from the table that wavelet-ANN models outperform regular ANN models for predicting monthly mean streamflow because the wavelet technique provides low and high resolution decompositions of the series and wavelet decomposed data sets improve the prediction performance of ANN by capturing useful information at different resolution levels. Different studies have been carried out to improve the performance of ANN in streamflow prediction. Dalkiliç and Hashimi (2020) used hybrid models by coupling wavelet with ANN to predict monthly streamflow series. The results indicate that wavelet-based ANN models demonstrate more accurate results compared to traditional ANN model. Güneş et al. (2021) developed discrete wavelet-based artificial neural models to predict streamflow at three stations. They indicated that the hybrid model performed better than single ANN models for discharge prediction at all stations.

This study also presented a comprehensive comparative analysis of the AWT–ANN, DWT–ANN and single ANN approaches. As can be seen in Table 6, the AWT–ANN model is more adequate than the other models for predicting monthly mean streamflow.

To illustrate detailed comparison of the performance of the best AWT–ANN, DWT–ANN and ANN models, the observed and predicted hydrographs at test period for Station 2320 is presented in Fig. 11. The figure shows that all three models have great ability in predicting monthly streamflow. However, the AWT–ANN predictions show better match with observed data than those of DWT–ANN and ANN. In addition, the AWT–ANN model has captured the extremes (i.e. minima and maxima) better than other models in the observed monthly mean flows. For further analysis of model performances, the spatial pattern of predicted and observed values of monthly mean flows by the best AWT–ANN, DWT–ANN and ANN models were evaluated using Taylor diagram (Fig. 12). The Taylor diagram (Taylor 2001) provides a comprehensive statistical information for evaluating the performance of different models based on observations. The Taylor diagram exhibits three statistics (i.e., correlation of coefficient (CC), normalized standard deviation, and RMSE). From Fig. 12 it is evident that AWT–ANN shows high spatial CC in all the four Stations. Considering the aspects of standard deviation and RMSE it is understood that the AWT–ANN posseses low values of RMSE with normalized SD close to unity indicating better skill exhibited by the model compared to other models. In summary, the AWT–ANN conjunction model proposed in this study is obtained from the combination of AWT and ANN. As seen in this study, AWT technique has many beneficial points compared to other conventional wavelet types such as DWT:

1) Before decomposing the hydrological time series with DWT, a suitable mother wavelet should be selected and there are many methods proposed for this selection process. As it was mentioned previously, in this study, the approach proposed by Nourani et al. (2009) was tried for each of different types of the Daubechies wavelets. The wavelet type, which gives the most optimum result according to RMSE and values among the db wavelets in DWT–ANN modelling, was chosen as the mother wavelet. However, in AWT technique, the hydrological series is more easily decomposed into its sub-components compared to DWT. The reason behind this is that there is no need to select mother wavelet since there is no mother wavelet used in AWT method. The decomposition process of time series is done simply by a bicubic spline filter. Especially for large data, AWT is more useful than DWT.

2) As mentioned, DWT process uses two different filterbanks to obtain the detail and approximation components, therefore some of the information within the series cannot be transferred to the components. In AWT method, the detail component is obtained by a simple subtraction between the series and approximation compound, so all of the information within the series is transferred into compounds. Moreover, the computational complexity of AWT is three times lower than DWT as given in Table 7. In the table, N is the length of the series and M is the number of vanishing moments of the mother wavelets. Since, the amount of information kept in the components is higher in AWT, it is expected to provide better results.

3) Our results showed that the AWT–ANN model provided more accurate predicting results than the DWT–ANN model. The reason for this is that the sub-series obtained from AWT is better to capture the falling and rising limbs of the original time series than those of DWT. Thus, when these components are used as inputs in ANN modeling, the accuracy of the predictions is found to be more successful than that of DWT–ANN model. In addition, AWT–ANN model provided accurate estimations for four different stations, which means that the method is both stable and reliable.

In summary, for the aforementioned reasons, the new hybrid model obtained by combining the Additive Wavelet Transform and Artificial Neural Networks performed better than the ANN and DWT–ANN methods in predicting monthly mean streamflow. The suggested AWT–ANN predicting method for monthly streamflow could be useful in the management and planning of water resources systems. This method showed good predicting results. In light of this, the proposed method can be used for the prediction in different fields of hydrological and water resources and should be studied further.

Conclusion

A new hybrid method based on coupling Additive Wavelet Transform (AWT) and artificial neural networks (ANN) was presented for monthly streamflow predicting of four stations in the Çoruh basin which is situated in the northeast of Turkey. To totally shown the effectiveness of the suggested model, the AWT–ANN models were compared to single ANN models and DWT–ANN models. Using the Additive Wavelet Transform and discrete wavelet transform, original series were decomposed into subcomponents containing important information about the original data at different resolution levels, which were then used for predicting in ANN modelling. Both the AWT–ANN and the DWT–ANN model improved the predictive performance of ANN. However, since the subcomponents obtained from AWT are better matched to the original series than those of DWT, the AWT–ANN model demonstrates a higher performance than DWT–ANN model in monthly mean streamflow prediction. The accurate prediction results for all stations in the Çoruh basin indicate that the AWT–ANN method is a very useful new method for predicting the monthly flow. We recommend that future studies should use the proposed method to predict other hydrologic and water source variables (e.g., temperature, evapotranspiration and groundwater level) and also to model the rainfall-runoff process of the basins in different geographical regions.

Data availability statement

All data used in this study are available from the web page (http://www.dsi.gov.tr/) of the General Directorate of State Hydraulic Works, Turkey (gauge numbers provided in the manuscript).

References

Abda Z, Chettih M, Zerouali B (2021) Assessment of neuro-fuzzy approach based different wavelet families for daily flow rates forecasting. Model Earth Syst Environ 7:1523–1538. https://doi.org/10.1007/s40808-020-00855-1

Adamowski J, Chan HF (2011) A wavelet neural network conjunction model for groundwater level forecasting. J Hydrol 407(1–4):28–40

Adamowski J, Sun KR (2010) Development of a coupled wavelet transform and neural network method for flow forecasting of non-perennial rivers in semi-arid watersheds. J Hydrol 390(1–2):85–91

Adamowski J, Chan HF, Prasher SO, Ozga-Zielinski B, Sliusarieva A (2012) Comparison of multiple linear and nonlinear regression, autoregressive integrated moving average, artificial neural network, and wavelet artificial neural network methods for urban water demand forecasting in Montreal, Canada. Water Resourc Res. https://doi.org/10.1029/2010WR009945

Akaike H (1981) Citation classic—a new look at the statistical-model identification. Curr Contents/eng Technol Appl Sci 51:22–22

Anders U, Korn O (1999) Model selection in neural networks. Neural Netw 12(2):309–323

Araghi A, Baygi MM, Adamowski J, Malard J, Nalley D, Hasheminia SM (2015) Using wavelet transforms to estimate surface temperature trends and dominant periodicities in Iran based on gridded reanalysis data. Atmos Res 155:52–72

Athar M, Ayaz M (2021) Application of ANN model to predict the sediment removal efficiency of silt extractor. Model Earth Syst Environ. https://doi.org/10.1007/s40808-021-01273-7

Belayneh A, Adamowski J, Khalil B, Ozga-Zielinski B (2014) Long-term SPI drought forecasting in the Awash River Basin in Ethiopia using wavelet neural network and wavelet support vector regression models. J Hydrol 508:418–429

Can I, Tosunoglu F, Kahya E (2012) Daily streamflow modelling using autoregressive moving average and artificial neural networks models: case study of Coruh basin, Turkey. Water Env J 26(4):567–576

Cannas B, Fanni A, See L, Sias G (2006) Data preprocessing for river flow forecasting using neural networks: wavelet transforms and data partitioning. Phys Chem Earth 31(18):1164–1171

Cigizoglu HK (2003) Estimation, forecasting and extrapolation of river flows by artificial neural networks. Hydrol Sci J 48(3):349–361

Cigizoglu HK (2004) Estimation and forecasting of daily suspended sediment data by multi-layer perceptrons. Adv Water Resour 27(2):185–195

Dalkiliç HY, Hashimi SA (2020) Prediction of daily streamflow using artificial neural networks (ANNs), wavelet neural networks (WNNs), and adaptive neuro-fuzzy inference system (ANFIS) models. Water Supply 20:1396–1408

Danandeh Mehr A, Kahya E, Olyaie E (2013) Streamflow prediction using linear genetic programming in comparison with a neuro-wavelet technique. J Hydrol 505:240–249

Freire PKDMM, Santos CAG, Silva GBLD (2019) Analysis of the use of discrete wavelet transforms coupled with ANN for short-term streamflow forecasting. Appl Soft Comput J 80:494–505

Güneş MŞ, Parim C, Yıldız D, Büyüklü AH (2021) Predicting monthly streamflow using a hybrid wavelet neural network: case study of the Çoruh River Basin. Pol J Environ Stud 30(4):3065–3075

Honorato AGDM, Da Silva GBL, CaG S (2018) Monthly streamflow forecasting using neuro-wavelet techniques and input analysis. Hydrol Sci J 63(15–16):2060–2075

Hsu KL, Gupta HV, Sorooshian S (1995) Artificial neural-network modeling of the rainfall-runoff process. Water Resour Res 31(10):2517–2530

Joshi N, Gupta D, Suryavanshi S, Adamowski J, Madramootoo CA (2016) Analysis of trends and dominant periodicities in drought variables in India: a wavelet transform based approach. Atmos Res 182:200–220

Kisi O (2005) Suspended sediment estimation using neuro-fuzzy and neural network approaches. Hydrol Sci J 50(4):683–696

Kisi O (2008) Stream flow forecasting using neuro-wavelet techniqu. Hydrol Process 22(20):4142–4152

Kisi O (2009) Neural networks and wavelet conjunction model for intermittent streamflow forecasting. J Hydrol Eng 14(8):773–782

Kohonen T (1988) An introduction to neural computing. Neural Netw 1(1):3–16

Kumar ARS, Sudheer KP, Jain SK, Agarwal PK (2005) Rainfall-runoff modelling using artificial neural networks: comparison of network types. Hydrol Process 19(6):1277–1291

Mallat SG (1989) A theory for multiresolution signal decomposition—the wavelet representation. IEEE Trans Pattern Anal Mach Intell 11(7):674–693

Mehr AD, Kahya E, Bagheri F, Deliktas E (2014) Successive-station monthly streamflow prediction using neuro-wavelet technique. Earth Sci Inf 7(4):217–229

Nalley D, Adamowski J, Khalil B (2012) Using discrete wavelet transforms to analyze trends in streamflow and precipitation in Quebec and Ontario (1954–2008). J Hydrol 475:204–228

Nalley D, Adamowski J, Khalil B, Ozga-Zielinski B (2013) Trend detection in surface air temperature in Ontario and Quebec, Canada during 1967–2006 using the discrete wavelet transform". Atmos Res 132:375–398

Nourani V, Mogaddam AA, Nadiri AO (2008) An ANN-based model for spatiotemporal groundwater level forecasting. Hydrol Process 22(26):5054–5066

Nourani V, Komasi M, Mano A (2009) A Multivariate ANN-wavelet approach for rainfall-runoff modeling. Water Resour Manage 23(14):2877–2894

Nourani V, Baghanam AH, Adamowski J, Kisi O (2014) Applications of hybrid wavelet-Artificial Intelligence models in hydrology: a review. J Hydrol 517:1189–1189

Nunez J et al (1999) Multiresolution-based image fusion with additive wavelet decomposition. IEEE Trans Geosci Remote Sens 37(3):1204–1211

Otazu X, Gonzalez-Audicana M, Fors O, Nunez J (2005) Introduction of sensor spectral response into image fusion methods application to wavelet-based methods. IEEE Trans Geosci Rem Sens 43(10):2376–2385

Partal T (2009) Modelling evapotranspiration using discrete wavelet transform and neural networks. Hydrol Process 23(25):3545–3555

Partal T, Cigizoglu HK (2008) Estimation and forecasting of daily suspended sediment data using wavelet-neural networks. J Hydrol 358(3–4):317–331

Partal T, Kisi O (2007) Wavelet and neuro-fuzzy conjunction model for precipitation forecasting. J Hydrol 342(1–2):199–212

Partal T, Kucuk M (2006) Long-term trend analysis using discrete wavelet components of annual precipitations measurements in Marmara region (Turkey). Phys Chem Earth 31(18):1189–1200

Rajendra P, Murthy KVN, Subbarao A, Boadh R (2019) Use of ANN models in the prediction of meteorological data. Model Earth Syst Environ 5:1051–1058

Salas JD, Delleur JW, Yevjevich V, Lane WL (1980) Applied modeling of hydrologic time serie. Water resource. Publ Littleton, Colorado

Salas JD, Markus M, Tokar AS (2000) Streamflow forecasting based on artificial neural networks. In: Govindaraju RS, Rao AR (eds) Artificial neural networks in hydrology. Springer, Dordrecht

Saraiva SV, Carvalho FDO, Santos CAG, Barreto LC, Freire PKDMM (2021) Daily streamflow forecasting in Sobradinho Reservoir using machine learning models coupled with wavelet transform and bootstrapping. Appl Soft Comput J 102:1568–4946. https://doi.org/10.1016/j.asoc.2021.107081

Sharie M, Mosavi MR, Rahemi N (2020) Determination of an appropriate mother wavelet for de-noising of weak GPS correlation signals based on similarity measurements. Eng Sci Technol Int J 23:281–288

Shiri J, Kisi O (2010) Short-term and long-term streamflow forecasting using a wavelet and nero-fuzzy conjunction model. J Hydrol 394:486–493

Siddiqi TA, Ashraf S, Khan SA, Iqbal MJ (2021) Estimation of data-driven streamflow predicting models using machine learning methods. Arab J Geosci 14:1058

Sithara S, Pramada SK, Thampi SG (2020) Sea level prediction using climatic variables: a comparative study of SVM and hybrid wavelet SVM approaches. Acta Geophys 68:1779–1790

Sume V, Mete B, Ozener AY (2017) Energy potential and environmental interaction of water structures in Upper Coruh Basin. Turk J Hydraul 1(1):7–12

Sun Y, Niu J, Sivakumar B (2019) A comparative study of models for short-term streamflow forecasting with emphasis on wavelet-based approach. Stoch Env Res Risk Assess 33:1875–1891

Taylor KE (2001) Summarizing multiple aspects of model performance in a single diagram. J Geophys Res Atmos 106(7):7183–7192

Torrence C, Compo GP (1998) A practical guide to wavelet analysis. Bull Am Meteor Soc 79(1):61–78

Tosunoglu F, Kaplan NH (2018) Determination of trends and dominant modes in 7-day annual minimum flows: additive wavelet transform-based approach. J Hydrol Eng 23:12

Unes F, Demirci M, Kisi O (2015) Prediction of millers ferry dam reservoir level in USA using artificial neural network. Period Polytechn Civ Eng 59(3):309–318

Vonesch C, Blu T, Unser M (2007) Generalized daubechies wavelet families. IEEE Trans Signal Process 55(9):4415–4429

Wang W, Ding J (2003) Wavelet network model and its application to the prediction of hydrology. Wavelet Netw Model Appl Predict Hydrol 1:1

Wu CL, Chau KW, Li YS (2009) Predicting monthly streamflow using data-driven models coupled with data-preprocessing techniques. Water Resourc Res 2009:45

Yilmaz M, Tosunoglu F, Kaplan NH (2020) Evaluation of trends and dominant modes in maximum flows in turkey using discrete and additive wavelet transforms. J Hydrol Eng 25:11

Acknowledgements

The authors sincerely thank the General Directorate of State Hydraulic Works, Turkey for the providing the streamflow data used in the study.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Yilmaz, M., Tosunoğlu, F., Kaplan, N.H. et al. Predicting monthly streamflow using artificial neural networks and wavelet neural networks models. Model. Earth Syst. Environ. 8, 5547–5563 (2022). https://doi.org/10.1007/s40808-022-01403-9

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40808-022-01403-9