Abstract

Many regions and urban areas are becoming more engaged in selecting the optimum future clean energy technology mix to best fit their local power requirements. At the feasibility stage, such analysis is difficult to perform quantitatively due to the substantial uncertainties associated with many of the key influencing criteria. Moreover, as local climates progressively change, the renewable energy mix best suited to many regions is also changing. A protocol is proposed and evaluated for conducting qualitative multi-criteria decision analysis (MCDA) of multiple clean energy alternatives, suitable for specific regional conditions, using the TOPSIS method. This begins with linguistic assessments of a large number of pertinent criteria (50 or more), taking into account the diverse preferences of the many stakeholders involved. The linguistic assessments are converted to integer number, fuzzy and intuitionistic fuzzy scoring (IFS) systems. The IFS method is shown to integrate uncertainty in a more flexible way. The fuzzy and IFS TOPSIS methods adjust their impact matrices with three weight factors: (1) objective weights derived from calculated entropy for each criterion; (2) subjective weights associated with preferences expressed by individual representative stakeholders; and (3) subjective weights applied to balance the preferences among stakeholder groups. The three methods are applied using regionally specific case studies to illustrate and compare the clean energy rankings they select for the conditions associated with the specific region evaluated. Fuzzy and IFS scoring systems generate slightly different rankings as they capture uncertainty in different ways.

Introduction

Multicriteria decision-making analysis (MCDA) offers a meaningful approach to integrate the multiple dimensions involved in sustainable renewable energy technology selection on various scales, and the policy making required to achieve that objective. It can consider the diverse positions of multiple stakeholders and balance their influences on selections, taking into account technical, socio-economic, infrastructure and environmental factors (Stein 2013). There are particular benefits in applying this approach at the feasibility or exploratory stage of technology selection before conducting detailed engineering and cost analysis (Baumann et al. 2019a).

MCDA facilitates decision making in the face of multiple alternatives, criteria and stakeholders with conflicting preferences enabling selections and rankings to be made more transparently and systematically (Diakoulaki and Karangelis 2007). MCDA techniques have been widely used for renewable energy planning and selection for some time (Pohekar and Ramachandran 2004) or focused specifically on individual renewable technologies (Cucchiella and D’Adamo 2015). It is well suited for addressing complex problems shrouded in uncertainty, with conflicting views on what is, and what is not, sustainable. It can also handle data and information available in a range of different qualitative and quantitative formats (Wang et al. 2009). MCDA can be configured to meaningfully evaluate potential future energy mixes (Hong et al. 2013; Robeiro et al. 2013), suitable combinations of renewable energy technologies for providing sustainable energy diversity for specific regions (Shmelev and van den Bergh 2016), and for urban energy system planning (Brown et al. 2010; Cajot et al. 2017).

MCDA techniques have evolved over the past 40 years (Figueira et al. 2005; Greco et al. 2016) with some methods integrating fuzzy logic to address uncertainty in their sustainable energy assessments (Awasthi et al. 2011). Developing generic and hypothetical case studies is a useful approach for identifying which criteria should be considered in the early stages of renewable energy selection (Kurka and Blackwood 2013). MCDA techniques are now applied routinely for sustainable renewable energy development assessments (Kumar et al. 2017). However, a systematic protocol for applying MCDA in feasibility-stage assessments of potential future, clean and sustainable energy mixes with only qualitative data available has not been previously proposed.

There are several MCDA techniques and assessment scoring systems that are widely applied. These include:

- Simple additive weighting (SAW) applying scoring with compensation (Chen 2012);
- Analytic hierarchy process (AHP) applying pair-wise scoring with compensation (Junior et al. 2014);
- Simple Multi-Attribute Rating Technique (SMART) applying utility function scoring with compensation (Velasquez and Hester 2013);
- Measuring Attractiveness by a Categorical Based Evaluation Technique (MACBETH) applying scoring with compensation (Guarini et al. 2018);
- Complex Proportional Assessment (COPRAS) applying scoring with compensation (Yucenur et al. 2020);
- Elimination and choice expressing reality (ELECTRE) applying pair-wise preference outweighing without compensation (Saracoglu 2015);
- Preference ranking organization method for enrichment evaluations (PROMETHEE) applying pair-wise preference outweighing without compensation (Kumar et al. 2017); and,
- The order of preference by similarity to an ideal solution (TOPSIS) applying scoring and goal seeking with compensation (Taylan et al. 2014).

All of these MCDA systems are used to assess multi-dimensional complex scenarios, subject to uncertain values for their discriminating criteria. The MCDA approach makes it possible to consider the perspectives of different stakeholders in a structured and flexible manner, taking uncertainty, constraints and biases into account and commencing with linguistic scoring systems (Wood 2016).

Many energy-related MCDA studies consider a relatively limited set of criteria and stakeholder groups that lack diversity (Baumann et al. 2019b). Indeed, MCDA methods typically applied do not consider more than 15 criteria (Riley 2020). However, the complexity of sustainability issues for energy selection at the local and regional level is considered sufficient to justify considering a large number of criteria. Doing so facilitates more comprehensive comparisons of the clean energies available, which should lead to better considered energy mix selections that take into account a wider range of pertinent issues.

The need for energy mix evaluations and selections is not a one-off exercise for any region. In a world experiencing rapidly changing climatic conditions that are impacting many regions, precipitation levels are oscillating (Berhail et al. 2021), sunlight hours fluctuating (Mega and Medjerab 2021), wind conditions changing (Puri and Kumar 2021), incidents of wildfires increasing (Wood 2021a), and ecosystem impacts accelerating (Wood 2021b) year to year. Consequently, appropriate energy mixes now have to be frequently re-evaluated in most regions. In addition, urban expansions associated with many cities and towns, particularly in the developing world (Elhamdouni et al. 2021), are continuing, alongside changes in the local weather conditions, in a highly non-linear manner (Karimuzzaman and Moyazzem Hossain 2020). This means that many regions have to scale up energy supply to meet growing and changing seasonal energy demands, and their prevailing energy mixes cease to be fit for purpose, forcing them to consider new alternatives.

This study is novel in that it develops a fuzzy MCDA tool considering 50 criteria across seven distinct categories in its assessments, taking into account the preferences of 15 diverse stakeholders to evaluate 16 renewable energy alternatives. The protocol used to assess, weight and compare the alternatives is also novel, developed as part of this study, as it focuses specifically on the highly uncertain feasibility stage of evaluating potential future sustainable energy mixes.

Methods

The method proposed involves a 15-step protocol, devised by the author, for effective and rigorous feasibility assessment of clean energy technologies (“Feasibility assessment of clean energy technologies” and “MCDA protocol applied and recommended for provisional clean energy technology selections”). It applies multi-criteria decision analysis (MCDA) to assess multiple technologies (“Multi-criteria decision analysis (MCDA) methods”) in terms of a large number of criteria and the disparate views of a substantial number of stakeholders, with different importance weightings applied to individual stakeholders or groups of stakeholders by the decision makers. To incorporate uncertainty in a transparent way and to facilitate calculations in standard spreadsheet software, the TOPSIS MCDA method is employed, configured to apply fuzzy and intuitionistic fuzzy analysis based on linguistic scoring assessments with objective and subjective weight adjustments (“TOPSIS configurations applied”).

Feasibility assessment of clean energy technologies

Feasibility stage assessment and comparison of the attributes of clean energy technology alternatives is necessary for several purposes. The term “clean” is used to refer to power generation technologies with low or zero greenhouse gas emissions. As the performance of these technologies varies between different regions, what technology works well in one area may not be viable in another area.

To prioritize industry assessments and refine long-term energy policy and plans, it is necessary for policy makers to understand these differences. By doing so, they are able to target incentives at specific technologies in specific geographic areas. Industry also requires such assessments to determine what kind of equipment and support services are likely to be in demand related to the energy technologies deployed based on a range of technical and non-technical criteria. This needs to be conducted on a feasibility basis before engaging in expensive and time-consuming front-end engineering and design (FEED) or detailed design for specific technologies that may or may not form part of long-term energy strategy of a region.

Feasibility assessment of clean energy technology is a multi-dimensional challenge. It requires the integrated consideration of a range of criteria by diverse stakeholder groups for the significant number of technology alternatives available, and some combinations of those alternatives (Fig. 1), while taking substantial uncertainty into account. This benefits from the application of multi-criteria decision-making methods, particularly those that are transparent and relatively easy to calculate and interpret.

In addition to the multi-dimensional considerations summarized in Fig. 1, policy-maker/decision-maker preferences, perhaps partly imposed by political and/or commercial considerations, also influence such assessments. Moreover, taking into account the varying degrees of uncertainty in such assessments makes it essential to apply mathematical techniques, such as fuzzy scoring systems, that can be meaningfully applied to simple linguistic (qualitative) assessments of each criterion relating to each alternative. Figure 2 illustrates conceptually these additional assessment needs.

MCDA protocol applied and recommended for provisional clean energy technology selections

The protocol developed and recommended for such feasibility-stage analysis is developed for generic application and to exploit a linguistic (qualitative) assessment scoring system. Prior to the front-end engineering and design (FEED) and/or detailed engineering design and costing of specific energy supply development projects the uncertainties regarding costs and attainable efficiency are considered too great to accommodate quantitative comparisons. The following 15 steps of the adopted protocol incorporate fuzzy MCDA.

1. Specify geographic, technology and energy market constraints, goals and objectives;
2. Identify the clean/low-emission energy supply technologies as alternatives to include;
3. Define the pros and cons of each alternative with multi-discipline expert inputs;
4. Identify the criteria to evaluate all areas of potential impact relating to technology uptake;
5. Have an independent multi-disciplinary team appraise the alternatives against the relevant criteria in an impact matrix based on linguistic scores;
6. Determine the stakeholder groups impacted by the energy supply alternatives;
7. Invite stakeholders to indicate their preferences (in the form of subjective weights) with respect to each criterion considered (high weights assign more significance to certain criteria);
8. Conduct preliminary qualitative MCDA using integer number and fuzzy scoring to translate linguistic assessments, using transparent calculations run by easy-to-implement software;
9. Apply various weightings to the MCDA assessments in sequence: (A) objective (entropy-derived) weights for some methods; (B) subjective stakeholder weights; and (C) subjective decision-maker (or assessment facilitator/policy maker) weights assigning relative importance adjustments to each group of stakeholders;
10. Restrict the application of maximum threshold values and/or vetoes for specific criteria to the decision makers, preventing individual stakeholders from manipulating these;
11. Establish a provisional ranking order of suitability for those alternatives from the decision-makers’ perspectives. This is likely to be useful for policy makers and potential investors for planning purposes. It is also likely to cull the low-ranking technologies from further consideration in the medium term.
12. At the FEED/detailed design phase, capture more quantitative data on multiple criteria and refine the MCDA model to integrate both quantitative and qualitative scoring. Even in this phase, it is likely that several criteria impacts cannot be quantitatively assessed.
13. Certain quantitative thresholds and vetoes may be introduced for a more focused list of criteria by the decision makers from the FEED stage forward, perhaps driven by the prevailing regulatory guidelines and limits.
14. Establish a pre-FID (final investment decision/regulatory approval) ranking order of suitability for those short-listed alternatives from the decision-makers’ perspectives. Such analysis can be used to justify decisions, allocation of incentives, investments and regulatory approvals.
15. Revisit the feasibility stages of the analysis (steps 1–11) as and when substantial technological breakthroughs, availability and cost reductions materialize for certain technologies.

The analysis presented in this study focuses on steps 1–11 of the protocol described. The point of including steps 12–15 is to emphasize that the feasibility-stage analysis can provide a useful benchmark from which to progress into the uptake and development of specific technologies. Those latter steps introduce more quantitative information, captured from Pre-FEED, FEED and detailed design for certain criteria assessed, thereby leading to a revised ranking of the alternatives.

Multi-criteria decision analysis (MCDA) methods

MCDA methods are widely applied to problems using qualitative inputs (linguistic and/or non-monetary) with and without some quantitative (monetary and/or numerical) inputs for some criteria depending on the levels of uncertainty involved and the maturity of the available knowledge.

MCDA technique selected

Some MCDA methods (specifically ELECTRE and PROMETHEE) establish an outranking order amongst the alternatives that avoids compensation and offsetting (trade-offs) among criteria scores; low scores in certain criteria do not directly offset high scores for other criteria. Such non-compensatory methods can integrate several distinct natural scoring/assessment scales without the need to normalize scores for each criterion. This provides an advantage when quantitative information for development projects of different scales (i.e., MW scales in the context of energy supply) has to be integrated within one decision framework (Figueira et al. 2013). Most compensatory methods can be configured to integrate qualitative and quantitative scoring applied to the various criteria under consideration (Figueira et al. 2016). The compensatory methods generally achieve this by working with customized scoring systems and normalizing the scored ranges applied to each criterion considered.

The ELECTRE III and PROMETHEE methods can be configured to allow stakeholders to suggest and apply thresholds and vetoes to their criteria preferences, i.e., to move the right specified in step 10 of the protocol upstream to step 7 (“MCDA protocol applied and recommended for provisional clean energy technology selections”). This is not recommended, as such power is almost always used inappropriately by certain stakeholders to exclude alternatives that they are strongly opposed to. The compensatory MCDA methods that involve criteria trade-offs are difficult to configure to give stakeholders, at an intermediate stage of the assessment, the ability to suggest and apply variable thresholds and/or vetoes to specific criteria. For these reasons, in the recommended protocol the authority to apply thresholds and vetoes to specific criteria is restricted to the decision maker/policy maker and is introduced in the final stages of the assessment (i.e., steps 10 and 13). This means that the proposed protocol is suitable for both compensatory and non-compensatory MCDA methods.

Whatever MCDA method is applied, typically a set of n alternative scenarios (different development schemes) is assessed in terms of performance using a set of m criteria, each weighted objectively and/or subjectively. Sometimes, it is necessary to exclude certain criteria from the analysis when provisional assessment suggests that they have limited impact or relevance based on the opinion of all stakeholders. This enables the MCDA methods to focus on the most relevant criteria. Another approach is to retain all criteria irrespective of their perceived significance and to prioritize the criteria by applying objective adjustments to all criteria in the form of entropy weights. Entropy weighting avoids discarding criteria prematurely from the decision-making process and balances their respective contributions independently from the subjective weights applied by the stakeholders and decision makers.

Several preference ranking schemes have been proposed and applied to integrate subjective stakeholder preferences with respect to each criterion into MCDA analysis. These include:

1. Direct allocation of numerical weights, with the total value of all criteria weights assigned by an individual stakeholder summing to 1 or, in some cases, 100;
2. Pairwise comparison of criteria, which is also widely applied and forms a key aspect of the AHP method (Saaty and Vargas 2012);
3. Swing weighting (Dieter and Schmidt 2009; Lopes and Almeida 2013), which assumes that the alternative with the lowest criteria score is assigned a utility of 0 and the highest criteria score a utility of 1, and those weights are then applied in an additive multi-attribute utility function;
4. Calculating the hypothetical criteria weightings that would preferentially select each alternative considered (Miettinen and Salminen 1999); and,
5. The Simos and Revised Simos procedures (Simos and Maystre 1989; Figueira and Roy 2002), whereby each criterion is presented to each stakeholder as one card in a set of cards (one card for each criterion). The stakeholders then arrange or rank the set of cards in order of their perceived (subjective) importance.

The swing and hypothetical weighting methods are criticized by some (Troffaes and Sahlin 2017) for generating, in many cases, extreme and unrealistic criteria weights. Such weighting approaches are unlikely to meaningfully discriminate between alternatives on a basis that reflects reasonable due consideration of each criterion. On the other hand, hypothetical cases and swing weights can sometimes provide insightful sensitivity cases, identifying what degree of preference is needed for certain criteria for certain alternatives to become high-ranking contenders for selection. Stakeholder ranking scheme #1, from the above list, is used in the MCDA case analysis presented in this study.
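Ranking scheme #1 (direct allocation of weights summing to 1 or 100) can be illustrated with a minimal Python sketch. The function name `normalize_weights` and the four criteria with their point allocations are hypothetical, chosen only for illustration; they are not taken from the study.

```python
def normalize_weights(raw_weights):
    """Rescale one stakeholder's raw criteria weights so that they sum to 1."""
    total = sum(raw_weights.values())
    if total <= 0:
        raise ValueError("raw weights must have a positive sum")
    return {criterion: w / total for criterion, w in raw_weights.items()}


# Hypothetical stakeholder allocating 100 points across four criteria.
raw = {"capital cost": 40, "emissions": 30, "land use": 20, "noise": 10}
weights = normalize_weights(raw)

for criterion, w in weights.items():
    print(f"{criterion}: {w:.2f}")
```

Rescaling in this way lets stakeholders who allocate points out of 100 be compared directly with those who allocate fractions of 1.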

TOPSIS (the order of preference by similarity to an ideal solution)

TOPSIS (Hwang and Yoon 1981) is one of the most diversely applied MCDA methods (Huang et al. 2011). It offers the following advantages:

- Multiple criteria (m), alternatives (n) and stakeholders (l) can be considered;
- Useful results can be generated with limited subjective inputs, if necessary;
- It is easily coded and readily applied in spreadsheets, with or without visual basic for applications (VBA) macros, to provide rapid assessments;
- It is adaptable to work with combinations of linguistic, semi-quantitative and quantitative scoring systems;
- Consistent and transparent alternative ranking schemes are generated (Khosravanian and Wood 2016; Taylan et al. 2014);
- It is readily adapted to provide fuzzy assessments and flexibly incorporate uncertainty with or without objective entropy weighting (Wood 2016); and
- It is suitable for rapid sensitivity testing by varying the subjective weighting assumptions.

The TOPSIS algorithm conducts distance-based uncertainty calculations. To do so it identifies the alternative with the shortest geometric distance from a “positive ideal” solution and/or with the longest geometric distance from a “negative ideal” solution. Flexibility is introduced by the ability to apply objective weights and more than one level of subjective weights (Hyde et al. 2005). The method can also integrate assessments by multiple decision makers (Shih et al. 2007), if necessary, which is a common requirement in large complex projects or those owned and operated as joint ventures. Thor et al. (2013) suggest TOPSIS outperforms AHP, ELECTRE and SAW because of its consistent structure, rapid/easy calculation and suitability for handling datasets with many attributes. Junior et al. (2014) identified similar advantages for TOPSIS over AHP. It is for these recognized advantages that the TOPSIS method is applied to assess the clean energy technology alternatives.

The TOPSIS method is readily programmable (e.g., in Python, Yadav et al. 2019) and is also easy to run transparently in Excel revealing useful intermediate calculation steps. For large numbers of criteria, alternatives and stakeholders, Excel driven by VBA macros (Hyde and Maier 2006; Wood 2016) executes the technique rapidly, and that is the approach employed in this study as it facilitates the assessment of multiple sensitivity cases.

TOPSIS determines differences between the scored assessments for each alternative. It identifies and incorporates best and worst scores for each criterion considered. Those differences are normalized, typically using a linear “square-root-sum-of-squares” formula (Eq. 1), although various non-linear vector formulas could be applied (Huang et al. 2011):

\[{r}_{ij}=\frac{{x}_{ij}}{\sqrt{\sum_{j'=1}^{n}{x}_{ij'}^{2}}},\quad i=1,\dots ,m;\; j=1,\dots ,n \tag{1}\]

where \({x}_{ij}\) is the score value assessed for the ith criterion for the jth alternative. This yields a normalized (dimensionless) but as yet unweighted m by n impact matrix R (Eq. 2):

\[R={\left({r}_{ij}\right)}_{m\times n} \tag{2}\]

The unweighted impact matrix can then be adjusted by weights or priorities applied to each criterion. This generates a weighted-normalized impact matrix. Subjective weights are commonly applied in one or more steps, for example, one step adjusting for stakeholder preferences and a second step adjusting for decision makers’ perception of the relative importance of each stakeholder’s assessment. Such adjustments are expressed in the following equation:

\[{p}_{jk}=\sum_{i=1}^{m}{r}_{ij}\,{Wc}_{jk}\,{Wg}_{k} \tag{3}\]

where \({p}_{jk}\) represents the summed weighted criteria scores for one alternative under consideration, \({Wc}_{jk}\) is the ith criterion weight for alternative j applied by stakeholder k, and \({Wg}_{k}\) is the stakeholder k importance weight applied by the policy maker.

In a real-number summation system, the total of the weighted criteria (m) scores for each of the alternatives (n) could be summed for all the l stakeholders (Eq. 4) to enable the overall scores of each alternative to be compared and ranked:

\[\stackrel{\sim }{{P}_{j}}=\sum_{k=1}^{l}{p}_{jk} \tag{4}\]

where \(\stackrel{\sim }{{P}_{j}}\) is the weight-adjusted cumulative score of alternative j incorporating the preferences of all l stakeholders.

Rather than use Eq. 4, TOPSIS establishes the best case (Eq. 5) and the worst case (Eq. 6) values among the n alternatives for each of the m criteria:

\[{A}^{+}=\left\{{p}_{1}^{+},{p}_{2}^{+},\dots ,{p}_{m}^{+}\right\} \tag{5}\]

\[{A}^{-}=\left\{{p}_{1}^{-},{p}_{2}^{-},\dots ,{p}_{m}^{-}\right\} \tag{6}\]

where the \({A}^{+}\) vector represents the positive ideal and the \({A}^{-}\) vector represents the negative ideal. \({p}_{i}^{+}\) is the maximum \({p}_{ij}\) value (the weighted-normalized score of alternative j for criterion i) among the n alternatives for the ith criterion, and \({p}_{i}^{-}\) is the minimum \({p}_{ij}\) value among the n alternatives for the ith criterion.

Two geometric distance arrays are thereby generated with Euclidean distances for each normalized criterion element (i) from their respective positive-ideal and negative-ideal solutions. These values are then summed to provide an assessment for each alternative (j).

A geometric distance separating each alternative from the best case for each criterion is established with the following equation:

\[{d}_{j}^{+}=\sqrt{\sum_{i=1}^{m}{\left({p}_{i}^{+}-{p}_{ij}\right)}^{2}} \tag{7}\]

where \({d}_{j}^{+}\) represents that distance for alternative j from its positive-ideal solution \({A}^{+}.\)

Equation 8 calculates a similar geometric distance between each alternative and the worst case for each criterion:

\[{d}_{j}^{-}=\sqrt{\sum_{i=1}^{m}{\left({p}_{ij}-{p}_{i}^{-}\right)}^{2}} \tag{8}\]

where \({d}_{j}^{-}\) represents that distance from its negative-ideal solution \({A}^{-}.\)

These two “square-root-sum-of-squares” vectors are designed to avoid generating negative numbers and at the same time incorporate all criteria for each alternative (Eqs. 7, 8). These vectors are then meaningfully combined to generate a similarity scale from 0 to 1. That similarity scale is usefully expressed as a ratio between the distance (separation) from the least attractive (worst cases) and the sum of the distances from the best and worst cases (Eq. 9). That relationship is referred to as the relative closeness index (\({\mathrm{RC}}_{j}\)):

\[{\mathrm{RC}}_{j}=\frac{{d}_{j}^{-}}{{d}_{j}^{+}+{d}_{j}^{-}} \tag{9}\]

Equation 9 enables the n alternatives to be ranked according to their relative \({\mathrm{RC}}_{j}\) magnitudes. The rank #1 alternative has the highest \({\mathrm{RC}}_{g}\) value, because it is located most distant from the negative-ideal solution \({A}^{-}\).
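The sequence of normalization, weighting, ideal-solution identification, distance calculation and relative closeness described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the VBA implementation used in the study: all criteria are treated as benefit criteria (higher scores are better), a single weight per criterion is applied, and the function name `topsis` and the small 3 by 3 score matrix are hypothetical.

```python
import math


def topsis(scores, weights):
    """Crisp TOPSIS sketch.

    scores[i][j]: score of criterion i for alternative j (higher is better).
    weights[i]:   weight applied to criterion i.
    Returns the relative closeness of each alternative (0 to 1).
    """
    m, n = len(scores), len(scores[0])

    # Vector ("square-root-sum-of-squares") normalization, then weighting.
    p = []
    for i in range(m):
        norm = math.sqrt(sum(x * x for x in scores[i]))
        p.append([weights[i] * x / norm if norm else 0.0 for x in scores[i]])

    # Positive-ideal and negative-ideal values for each criterion.
    best = [max(row) for row in p]
    worst = [min(row) for row in p]

    closeness = []
    for j in range(n):
        d_plus = math.sqrt(sum((best[i] - p[i][j]) ** 2 for i in range(m)))
        d_minus = math.sqrt(sum((p[i][j] - worst[i]) ** 2 for i in range(m)))
        total = d_plus + d_minus
        # If all alternatives are identical the index is undefined; return 0.
        closeness.append(d_minus / total if total else 0.0)
    return closeness


# Hypothetical integer scores: 3 criteria (rows) by 3 alternatives (columns).
scores = [[5, 3, 1],
          [4, 4, 2],
          [2, 5, 3]]
weights = [0.5, 0.3, 0.2]

closeness = topsis(scores, weights)
# Rank alternatives from highest to lowest relative closeness.
ranking = sorted(range(len(closeness)), key=lambda j: closeness[j], reverse=True)
print(closeness, ranking)
```

Cost-type criteria, for which lower scores are preferable, would need the maximum and minimum swapped when the ideals are identified; that case is omitted here for brevity.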

Step-by-step TOPSIS computations to establish \({\mathrm{RC}}_{g}\) are transparent and well documented (Krohling and Campanharo 2011; Ghazanfari et al. 2014). Sometimes it is instructive to calculate two distinct relative closeness indices. The first incorporates just the adjustments for the stakeholder criteria weights \({\mathrm{Wc}}_{jk}\): Eq. 3 is adjusted to exclude the stakeholder importance weights \({\mathrm{Wg}}_{k}\) provided by the decision or policy maker, or equal-value \({\mathrm{Wg}}_{k}\) weights are applied to each stakeholder. This generates the \({\mathrm{RC}}_{j}\) relative closeness index. The second, \({\mathrm{RC}}_{g}\), includes the stakeholder importance adjustments. Two alternative ranking orders can then be established: one based on \({\mathrm{RC}}_{j}\) (excluding stakeholder importance adjustments); the other on \({\mathrm{RC}}_{g}\) (including stakeholder importance adjustments). \({\mathrm{RC}}_{g}\) is the same as \({\mathrm{RC}}_{j}\) if the weights \({\mathrm{Wg}}_{k}\) are set equal for all stakeholders.
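The effect of the two levels of subjective weights can be illustrated with a short Python sketch. It assumes, purely for illustration, that each stakeholder supplies one weight per criterion and that the stakeholder importance weights are used to form a weighted average of those criteria weights; the study's own aggregation may differ. The function name `aggregate_weights` and all numbers are hypothetical.

```python
def aggregate_weights(criteria_weights, importance):
    """Combine per-stakeholder criteria weights into one weight per criterion.

    criteria_weights[k][i]: weight of criterion i given by stakeholder k.
    importance[k]:          importance of stakeholder k set by the decision maker.
    """
    total = sum(importance)
    if total <= 0:
        raise ValueError("importance weights must have a positive sum")
    shares = [g / total for g in importance]  # rescale importance to sum to 1
    m = len(criteria_weights[0])
    return [
        sum(shares[k] * criteria_weights[k][i] for k in range(len(shares)))
        for i in range(m)
    ]


# Two hypothetical stakeholders weighting three criteria.
wc = [[0.5, 0.3, 0.2],
      [0.2, 0.2, 0.6]]

equal = aggregate_weights(wc, [1, 1])         # equal importance for all stakeholders
skewed = aggregate_weights(wc, [0.8, 0.2])    # decision maker favours stakeholder 0
print(equal, skewed)
```

Feeding `equal` and `skewed` into the same TOPSIS calculation would give two rankings that can be compared to see how sensitive the outcome is to the decision maker's view of stakeholder importance.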

There is a clear distinction between the TOPSIS relative closeness indices (Eq. 9) and simple summation scoring (Eq. 4) for each alternative. It is useful to compare the results (ranking) obtained from integer number-scoring TOPSIS analysis with those derived from simple stakeholder-weighted linear and non-linear summation scoring and ranking schemes, also involving integer numbers from simple deterministic numerical scoring scales. In the integer number analysis, each element of the \({x}_{ij}\) matrix in Eq. 1 is a single integer number, not a fuzzy number. Analyses using integer number-scoring systems do not capture any uncertainties in criteria assessments. They, therefore, typically require extensive sensitivity analysis to evaluate the potential impacts of a range of possible criteria assessments associated with specific alternatives. Applying linear and/or non-linear integer number-scoring systems to qualitative linguistic assessments does not on its own capture the uncertainty that is inherent or implied in typical linguistic assessments. Involving fuzzy number-scoring systems and objective weights broadens TOPSIS analysis to better account for inherent biases and assessment uncertainties.

Integer, fuzzy and intuitionistic fuzzy numerical scoring with TOPSIS

There are three distinct mathematical approaches typically applied for integrating uncertainty with MCDA methodologies: (1) applying sensitivity analysis to integer number assessments; (2) dealing with criteria scores as fuzzy sets (Hsu and Chen 1996); and (3) treating criteria distributions as intuitionistic fuzzy sets (IFS) (Atanassov 1986, 1999). Simple deterministic integer number scoring for converting initial linguistic assessments typically fails to take into account real-world uncertainties. Moreover, human judgments often tend to be subjective, vague and to an extent systematically biased. This, in itself, introduces preferential prejudices and an additional degree of uncertainty to the MCDA. Such circumstances can be improved by involving “fuzziness” concepts.

TOPSIS is easily adapted to incorporate fuzzy logic to better account for uncertainty (Majd et al. 2014). Fuzzy logic is computed by applying the rules of fuzzy set theory (Zadeh 1965, 1971) and applying the principles of fuzzy arithmetic (Keufmann and Gupta 1991; Zimmermann 1991). This approach can improve the representation of different degrees of uncertainty associated with TOPSIS criteria assessments (Deng 1999). Fuzzy TOPSIS (Shapiro and Koissi 2013) is particularly useful for the feasibility-stage analysis of clean energy technologies when implementation capital costs, plant operating costs and MWh generation capabilities of certain technologies deployed in specific locations at various scales are highly uncertain.
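One common way of applying fuzzy arithmetic in TOPSIS is to map each linguistic term to a triangular fuzzy number and to measure distances with the vertex method. The Python sketch below illustrates that idea only; the text above does not state which fuzzy number shape or distance measure the study uses, so the five-term scale, its numerical values, and the function names are all hypothetical assumptions.

```python
import math

# Hypothetical linguistic scale mapped to triangular fuzzy numbers (low, mode, high).
SCALE = {
    "very low": (0.0, 0.0, 0.25),
    "low": (0.0, 0.25, 0.5),
    "medium": (0.25, 0.5, 0.75),
    "high": (0.5, 0.75, 1.0),
    "very high": (0.75, 1.0, 1.0),
}

POSITIVE_IDEAL = (1.0, 1.0, 1.0)  # assumed fuzzy best case
NEGATIVE_IDEAL = (0.0, 0.0, 0.0)  # assumed fuzzy worst case


def vertex_distance(a, b):
    """Vertex-method distance between two triangular fuzzy numbers."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / 3.0)


def fuzzy_closeness(terms):
    """Relative closeness of one alternative from its linguistic scores."""
    d_plus = sum(vertex_distance(SCALE[t], POSITIVE_IDEAL) for t in terms)
    d_minus = sum(vertex_distance(SCALE[t], NEGATIVE_IDEAL) for t in terms)
    return d_minus / (d_plus + d_minus)


# Hypothetical assessments of one alternative against three criteria.
print(fuzzy_closeness(["high", "medium", "very high"]))
```

Because each term spans a range rather than a single value, neighbouring terms overlap, which is how this representation carries the vagueness of the original linguistic judgment into the distance calculation.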

As an alternative to applying fuzzy set theory to better reflect uncertainty in criteria assessments, intuitionistic fuzzy set (IFS) concepts can also be integrated with TOPSIS analysis to consider uncertainty in a different manner. IFS approaches fuzziness (uncertainty) by considering degrees of indeterminacy and vagueness rather than defining specific overlapping fuzzy distributions.
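The distinction can be made concrete with a small Python sketch of an intuitionistic fuzzy value, which records a degree of membership, a degree of non-membership and the remaining hesitation (indeterminacy). The class name `IFValue`, the three-term scale and its numerical values are hypothetical, and the normalized Hamming distance shown is one commonly used IFS distance rather than necessarily the one applied in the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IFValue:
    mu: float  # degree of membership
    nu: float  # degree of non-membership

    def __post_init__(self):
        valid = 0.0 <= self.mu <= 1.0 and 0.0 <= self.nu <= 1.0
        if not valid or self.mu + self.nu > 1.0 + 1e-12:
            raise ValueError("require 0 <= mu, nu <= 1 and mu + nu <= 1")

    @property
    def pi(self):
        """Hesitation (indeterminacy): what is left after mu and nu."""
        return 1.0 - self.mu - self.nu


def hamming_distance(a, b):
    """Normalized Hamming distance between two intuitionistic fuzzy values."""
    return 0.5 * (abs(a.mu - b.mu) + abs(a.nu - b.nu) + abs(a.pi - b.pi))


# Hypothetical linguistic scale expressed as intuitionistic fuzzy values.
IFS_SCALE = {
    "low": IFValue(0.25, 0.60),
    "medium": IFValue(0.50, 0.40),
    "high": IFValue(0.75, 0.15),
}

print(IFS_SCALE["high"].pi, hamming_distance(IFS_SCALE["low"], IFS_SCALE["high"]))
```

A larger hesitation value signals that the assessor is less sure about a score, so uncertainty is stated explicitly for each assessment instead of being implied by the width of an overlapping fuzzy distribution.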

Fuzzy and IFS methods derive their impact matrix input numerical values and calculate associated objective criteria weights differently. Criteria weights can be subjective, reflecting the preferences (biased judgments) of stakeholders, or objective, calculated from the distributions of impact matrix numerical input values for each criterion across all alternatives. The most meaningful analysis is typically generated by involving a combination of both objective and subjective weight adjustments to the impact matrix. It is often instructive to conduct fuzzy and IFS TOPSIS with and without objective weights and to compare the resulting rankings for the alternatives considered.

Objective weights can be derived by exploiting “entropy” calculations (Chen and Li 2010), which can be determined using various methods. Entropy provides insight into the magnitude of vagueness (Shannon 1948) displayed by the criteria scores for different clean energy technology alternatives input to the impact matrix. “Fuzziness” expresses a situation where imperfect (not fully known) assessments exist within the impact matrix, because it is not possible to know with certainty whether individual elements of the matrix belong or not to a particular value or outcome. An entropy measure helps to express how far a set of fuzzy numbers is from a deterministic real value (Collan et al. 2015).

De Luca and Termini (1972) adapted probabilistic entropy calculations based on Shannon’s (1948) concept to suit fuzzy set calculations. Szmidt and Kacprzyk (2001) established an intuitionistic fuzzy entropy measure for calculating IFS entropy-based weights, which has since been further developed (Vlachos and Sergiadis 2007). Such entropy-based weights (Parkash et al. 2008) are used in this analysis to determine objective weight adjustments to the TOPSIS impact matrix using IFS or fuzzy inputs (Ye 2010; Wood 2016). The entropy measure, in whatever way it is calculated, should distinguish between those criteria for which the alternative scores show a wide dispersion or uncertainty (high entropy) and those criteria for which the alternative scores show a lower dispersion or uncertainty (low entropy). Each time a criteria assessment score is changed the distribution of entropy values for the impact matrix will also change.

An inverse relationship is applied between the calculated entropy value and the entropy-based weight value used to adjust the impact matrix elements. Criteria with relatively low calculated entropy are, therefore, assigned high-value entropy weights; criteria with relatively high calculated entropy are assigned low-value entropy weights. Applying a low-valued entropy weight to adjust a decision matrix element reduces the relative contribution that criterion will make towards alternative rankings, and vice versa (Wang et al. 2007; Wang and Lee 2009). Applying objective entropy weights to the criteria values of an impact matrix tends to bring the relative contributions made by each criterion to the alternative ranking into balance. Of course, the calculated entropy and the derived entropy weights applied will change each time the assessment (numerical score) assigned to any criterion/alternative pair changes.

Applying entropy weights to the TOPSIS method often results in different alternative rankings compared to integer number-scoring or fuzzy TOPSIS analysis that does not apply entropy weighting. That is particularly the case where there is a highly variable dispersion of scores for the different criteria considered. It is insightful to compare alternative rankings based on simple linear and non-linear assessments with integer number-scoring TOPSIS, fuzzy TOPSIS and IFS TOPSIS methodologies, with and without entropy weightings applied to the latter three methods. Objective entropy weight adjustments to the impact matrix are typically complemented by adjustments related to the two subjective weights, which are optionally applied, as they are in integer number-scoring TOPSIS analysis. These are the subjective criteria weights (Wc) applied by the stakeholders and the stakeholders’ importance weights (Wg) applied by the decision/policy makers.

Numerical scoring systems applied to invert linguistic assessments

Linguistic criteria assessments need to be inverted into numerical scales, suitable for integer number, fuzzy or IFS TOPSIS analysis. The values selected for those scales are themselves subjective. Hence, the values used can ultimately influence the derived TOPSIS alternative ranking outcomes and require sensitivity testing. Table 1 presents the base case linguistic scoring system (left side) and its numerical translation into integer number, fuzzy and IFS numerical scales.

Fuzzy TOPSIS calculations

Linguistic scores are translated into sets of triangular fuzzy numbers (Table 1; Fig. 3) for this study. Trapezoidal sets (defined by four input numbers) could be used, but triangular sets are deemed adequate to illustrate the analysis. A triangular set (defined by three input numbers) offers a simple way of expressing uncertainty in fuzzy form (Kahraman et al. 2009). The linguistic assessments in the impact matrix (an element for each of m criteria and n alternatives) are replaced by triplet fuzzy numbers, each consisting of a low, a central and a high value (Table 1). The individual component values of each fuzzy triplet are real numbers belonging to the fuzzy set ã [0,1]. Each linguistic assessment is equivalent to one triangular component of a set of overlapping triangles as illustrated in the right side of Fig. 3. Defined in this way the membership function \({\mu }_{\stackrel{\sim }{a}}\)(x) of that triangular function is easy to calculate (Fig. 3; left side).

Fig. 3 Triplet fuzzy number ã comprised of three values (a, b, c) and its membership function \({\mu }_{\stackrel{\sim }{a}}\)(x). Seven overlapping triplets constitute fuzzy set Ã, each representing a distinct linguistic term as defined in Table 1

A similar TOPSIS methodology to that described for integer number-scoring systems (Eqs. 1–9) is applied using fuzzy set scores. Fuzzy TOPSIS Euclidean distances from the positive-ideal and negative-ideal solutions can be derived by applying a vertex method (Eqs. 10 and 11) to replace Eqs. 7 and 8 used for integer number-scoring TOPSIS. Equations 10 and 11 establish these distances by calculating squared differences between the three values of the triplet fuzzy numbers entered into the impact matrix and the maximum and minimum triplet values for each criteria across all alternatives:

where \({d}_{j}^{+}\) and \({d}_{j}^{-}\) are the Euclidean distances from the positive-ideal solution \({A}^{+}\) and the negative-ideal solution \({A}^{-}\), respectively. The other symbols are equivalent to those used in Eqs. 7 and 8 except that a, b, c represent the three values comprising triplet \(\stackrel{\sim }{a}\). Equations 10 and 11 are calculated for each of the n technology alternatives under consideration.
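The vertex-method distances can be illustrated with a minimal Python sketch. It assumes the commonly used vertex form, in which the distance between two triplets is the square root of one third of the summed squared differences of their three values, and it assumes that the per-criterion distances are summed for each alternative and that every criterion is scored so that higher is better. The function names and the input layout are hypothetical and are not taken from the study.

```python
import math

def vertex_distance(t1, t2):
    """Vertex-method distance between two triangular fuzzy numbers (a, b, c)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(t1, t2)) / 3.0)

def fuzzy_distances(matrix):
    """matrix[i][j] is the fuzzy triplet for criterion i and alternative j.

    Returns (d_plus, d_minus), each holding one distance per alternative.
    Assumption: the ideal solutions are the component-wise maximum and
    minimum triplet values for each criterion across all alternatives.
    """
    n_alt = len(matrix[0])
    d_plus = [0.0] * n_alt
    d_minus = [0.0] * n_alt
    for row in matrix:
        best = tuple(max(t[k] for t in row) for k in range(3))
        worst = tuple(min(t[k] for t in row) for k in range(3))
        for j, triplet in enumerate(row):
            d_plus[j] += vertex_distance(triplet, best)
            d_minus[j] += vertex_distance(triplet, worst)
    return d_plus, d_minus
```

An alternative that holds the best triplet on every criterion obtains a distance of zero from the positive-ideal solution under these assumptions.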

Fuzzy TOPSIS analysis is also usefully calculated in two steps applying separately two distinct subjective weights: \({\mathrm{Wc}}_{jk}\) the ith criteria weight for alternative j applied by stakeholder k; and \({\mathrm{Wg}}_{k}\) the stakeholder k importance weight applied by the decision maker. This also leads to the calculation of two distinct closeness ratios: RCj applying just stakeholder preference weights; and RCg applying both stakeholder preference weights and stakeholder importance weights.

Fuzzy TOPSIS analysis applying objective entropy weights

The fuzzy-triangular sets (triplets) of each element in the fuzzy impact matrix are optionally adjusted by entropy weights prior to applying the subjective weights (\({\mathrm{Wc}}_{jk}\) and \({\mathrm{Wg}}_{k}\)). The “fuzzy-TOPSIS-with-entropy” analysis applied represents an adaptation of that described by Wang et al. (2007). It calculates the entropy values by extracting crisp numbers from the fuzzy triplets representing each element in the unweighted fuzzy impact matrix (Eq. 12):

The crisp set is normalized for each criterion by applying the following equation:

Criterion entropy (e) is then calculated by applying the following equation:

where the constant \(k= {\left(\mathrm{ln}\left(n\right)\right)}^{-1}\), and n refers to the number of alternatives evaluated.

This establishes a set of m criteria entropy values E(Ci), which are then used to derive a set of entropy weights (We). To achieve this, an inverse criteria entropy relationship is expressed as one minus entropy and termed “degree of difference” (\({d}_{i}\)) derived using (Eq. 15):

where \({d}_{i}\) is inversely correlated to \({e}_{i}\) so it measures the inherent (1 – entropy) variations individually for each criterion across the range of alternatives evaluated (Wang et al. 2007). High values of \({d}_{i}\) generate high entropy weights, which balance out the relative criteria contributions to the impact matrix. The entropy weight \({w}_{i}\) for criterion i (Eq. 16) is one of a set of entropy weights (We), each with a real value between 0 and 1:

That set of criteria entropy weights is expressed as \({W}_{e}= \left({w}_{1}, {w}_{2},\dots {w}_{i} ,{w}_{m}\right),\) where \({W}_{e}\) is subject to the constraints: \({w}_{i} \ge 0, \sum_{i=1}^{m}{w}_{i}=1\).
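The entropy-weight steps described above (crisp extraction, normalization, entropy, degree of difference and weight) can be sketched in Python as follows. This is an illustrative sketch only. It assumes that crisp values are extracted as the mean of the three triplet values, which may differ from the extraction used in Eq. 12, and that crisp scores are positive. The function names are hypothetical.

```python
import math

def defuzzify(triplet):
    """Crisp value of a triangular fuzzy number (assumed here: mean of a, b, c)."""
    a, b, c = triplet
    return (a + b + c) / 3.0

def entropy_weights(crisp):
    """crisp[i][j]: positive crisp score for criterion i and alternative j.

    Returns (entropies, weights) with one value per criterion.
    """
    n = len(crisp[0])               # number of alternatives
    k = 1.0 / math.log(n)           # constant k = (ln n)^-1
    entropies = []
    for row in crisp:
        total = sum(row)
        p = [x / total for x in row]                     # normalize per criterion
        e = -k * sum(pj * math.log(pj) for pj in p if pj > 0)
        entropies.append(e)
    d = [1.0 - e for e in entropies]                     # degree of difference
    d_sum = sum(d)
    if d_sum == 0:
        # Every criterion has maximum entropy, so fall back to equal weights.
        return entropies, [1.0 / len(crisp)] * len(crisp)
    return entropies, [di / d_sum for di in d]
```

In this sketch a criterion whose scores are identical across all alternatives has an entropy of 1 and receives a weight of zero, while criteria with more dispersed scores receive larger weights, and the weights sum to 1.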

The fuzzy triplets constituting the fuzzy TOPSIS unweighted impact matrix are multiplied by the entropy weights. These objectively weighted values are subsequently adjusted by the two subjective weights (\({Wc}_{jk}\) and \({Wg}_{k}\)).

Intuitionistic fuzzy TOPSIS (IFT) method

The intuitionistic fuzzy sets (IFS) use three metrics (Table 1) to define the fuzziness of each element in the fuzzy impact matrix. These are: (1) degree of membership (μ); (2) degree of non-membership (ν); and, (3) an intuitionistic index, derived from a defined relationship between (1) and (2) and also referred to as “hesitancy” (π). IFS elements expressed in this way characterize the vagueness/uncertainty related to each linguistic assessment. They achieve this without the need to approximate fuzzy uncertainty distributions (e.g., fuzzy triangles or trapezoids).

Atanassov (1999) expressed IFS variable A existing within universe of discourse X using the following equation:

where \({\mu }_{A}:X \to \left[\mathrm{0,1}\right]\mathrm{ and }{v}_{A}:X \to \left[\mathrm{0,1}\right]\) with the additional constraint \(0 \le\) \({\mu }_{A}\left(x\right)+ {v}_{A}\left(x\right)\le 1, \forall x \in X\).

A represents a crisp-number set when μA or νA are binary (0 or 1), in which case π = 0. Hung and Chen (2009) describe IFS relationships in more detail. As μA + νA for uncertain assessments is less than 1 (πA > 0), the intuitionistic index (πA or hesitancy) of x in A, represents the key uncertainty indicator. That index is calculated by the following equation:

where πA is proportional to uncertainty for each criterion assessment; it relates to the degree of membership of x in IFS A (which is uncertain). The μ, ν and π values assigned to specific linguistic assessments can be varied to suit the overall uncertainty levels of specific projects/criterion. Higher uncertainty is reflected in higher π values. On the other hand, if an outcome is known with certainty then it should be reflected by a crisp assessment value with π = 0.
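The relationship between the three IFS metrics can be illustrated with a short Python sketch that applies the standard definition π = 1 − μ − ν. The function name and the example values are hypothetical and are not the Table 1 scores.

```python
def hesitancy(mu, nu):
    """Intuitionistic index (hesitancy) pi = 1 - mu - nu for one IFS element."""
    if not (0.0 <= mu <= 1.0 and 0.0 <= nu <= 1.0 and mu + nu <= 1.0 + 1e-12):
        raise ValueError("mu and nu must lie in [0, 1] with mu + nu <= 1")
    # Clamp at zero to absorb floating-point rounding when mu + nu equals 1.
    return max(0.0, 1.0 - mu - nu)

# Hypothetical uncertain assessment: mu = 0.6, nu = 0.3 leaves hesitancy near 0.1.
uncertain = hesitancy(0.6, 0.3)

# Crisp assessment: mu = 1, nu = 0 leaves no hesitancy.
crisp = hesitancy(1.0, 0.0)
```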

Calculating and applying objective entropy weights is also possible in IFS TOPSIS analysis. The IFS entropy calculation applied in this study is that adapted and made more flexible by Wood (2016) from a method suggested by Szmidt and Kacprzyk (2001) using the following equation:

The \(E\left({C}_{i}\right)\) set of IFS-calculated entropy values (Eq. 19) are then normalized, with the flexible adjustment factor S (Wood 2016) included in that normalization step, by applying the following equation:

The criterion normalized entropy (\({h}_{i}\)) in the IFS impact matrix will vary slightly depending on the value of S applied to either dampen or accentuate, as necessary, the normalized entropy range. It is useful to be able to vary the value of S as a sensitivity test. The value used for S is typically varied between about 0.01 and 0.5. If S is set to zero, then the criterion calculated with maximum unnormalized entropy value has a normalized entropy value of 1. S adjustment factors > 0 ensure that the highest entropy value is less than 1 meaning that criterion will not be assigned a zero or close to zero entropy weight.
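A minimal Python sketch of an IFS entropy calculation with an adjustable S factor is given below. It assumes the ratio form of the Szmidt and Kacprzyk (2001) measure, in which each element contributes (min(μ, ν) + π)/(max(μ, ν) + π) and the contributions are averaged across alternatives. It also assumes that normalization divides each criterion entropy by the maximum entropy plus S, which reproduces the behaviour described for S = 0 and S > 0 but may not match the exact form of Eq. 20. The function names are hypothetical.

```python
def ifs_entropy(row):
    """row: list of (mu, nu) pairs for one criterion across all alternatives."""
    total = 0.0
    for mu, nu in row:
        pi = 1.0 - mu - nu                       # hesitancy
        total += (min(mu, nu) + pi) / (max(mu, nu) + pi)
    return total / len(row)

def normalized_entropy(entropies, s=0.2):
    """Assumed normalization: h_i = E_i / (max(E) + S)."""
    top = max(entropies) + s
    return [e / top for e in entropies]
```

Under these assumptions an element with μ = ν has the maximum ratio of 1, whereas a crisp element (μ = 1, ν = 0) contributes zero entropy. Setting S = 0 gives the highest-entropy criterion a normalized value of 1, and any S > 0 keeps that value below 1.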

Alternative IFT entropy calculations have been suggested and applied (Ye 2010; Hung and Chen 2009). Equation 20 is preferred because it is easy to calculate and flexibly vary by adjusting its S factor.

The degree of difference (di) is established as 1 − hi (i.e., Eq. 15 applied with E(Ci) replaced by hi). The IFS entropy weight for each of the m criteria is then calculated with Eq. 16 in the same manner described for fuzzy TOPSIS. Matrix Z adjusts the impact matrix D with the entropy weight vector We, as expressed by the following equations:

with

Equation 22 applies the entropy weights as exponents to the IFS elements as suggested by Atanassov (1999). This is distinct from the application of entropy weights in fuzzy TOPSIS, in which the impact matrix triplet values are multiplied by \({W}_{e}\).

The distances from the ideal solutions are then derived for each criterion. Equation 23 calculates the geometric distance between each alternative and the best case for each criterion:

where \({d}_{j}^{+}\) is equivalent to the TOPSIS value calculated in Eq. 7. μ and v are from the IFS impact matrix, where symbol p means the values are entropy weighted.

\({p\mu }_{i}^{+}\) and \({pv}_{i}^{+}\) are the maximum μ and v values for criterion i.

Equation 24 calculates the geometric distance between each alternative and the worst case for each criterion:

where \({d}_{j}^{-}\) is equivalent to the TOPSIS value calculated in Eq. 8. \({p\mu }_{i}^{-}\) and \({pv}_{i}^{-}\) are the minimum μ and v values for criterion i.

The entropy-weighted impact matrix is then ready for adjustment by the subjective weights (\({Wc}_{jk}\) and \({Wg}_{k}\)) to yield RCj and RCg closeness ratios, respectively, using Eq. 9 as applied for integer number-scoring TOPSIS analysis.
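The final ranking step can be sketched in Python using the standard TOPSIS relative closeness, d⁻/(d⁺ + d⁻), which is assumed here to correspond to Eq. 9. The sketch omits the subjective weights, and the function names are hypothetical.

```python
def closeness(d_plus, d_minus):
    """Standard TOPSIS relative closeness to the positive-ideal solution."""
    total = d_plus + d_minus
    return d_minus / total if total > 0 else 0.0

def rank_alternatives(rc):
    """Return ranks (1 = best) for a list of closeness ratios, one per alternative."""
    order = sorted(range(len(rc)), key=lambda j: rc[j], reverse=True)
    ranks = [0] * len(rc)
    for position, j in enumerate(order, start=1):
        ranks[j] = position
    return ranks
```

An alternative lying far from the negative-ideal solution and close to the positive-ideal solution has a closeness ratio near 1 and is ranked highest.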

TOPSIS configurations applied

The TOPSIS MCDA analysis applied in this study is configured to specifically:

1. Assess uncertainty more implicitly than relying on deterministic analysis plus sensitivity cases;
2. Convert the impact matrix into wholly linguistic (qualitative) assessments, on the basis that the information currently available (feasibility stage) for all criteria considered is too uncertain to be meaningfully assessed quantitatively;
3. Inhibit the ability of individual stakeholders to specify criteria thresholds or vetoes. This is done to avoid the likelihood of stakeholders manipulating that power to subvert the ability of MCDA to provide meaningful integrated analysis;
4. Add the ability of decision makers to apply importance weights to the stakeholders’ contributions, i.e., a second/higher level of subjective weighting. Ultimately, decision makers would also be granted the power to apply overriding thresholds and vetoes; however, that is not considered appropriate for the qualitative stage of analysis considered here. It would become appropriate at a later stage of analysis (pre-FEED to detailed design stage) when sufficient information is available on at least some economic and environmental criteria to conduct a meaningful semi-quantitative assessment;
5. Evaluate the impact of applying objective criteria weights using calculated entropy to improve the balance of the relative contributions to the alternative ranking.

Results

In this section, the model proposed in “Method” is applied to an assessment for a mid-latitude marine area conducted by the author. It is multi-dimensional in that it considers 16 clean energy alternative technologies in terms of 50 criteria, grouped into 7 distinct categories, from the perspective of 15 different stakeholders, with 5 cases assigning different importance weightings to those stakeholders (“Assessing alternatives in terms of criteria and stakeholder preferences”). Distinct integer number, fuzzy and IFS scoring systems are applied to the TOPSIS analysis. The clean energy alternatives are then ranked according to each MCDA method applied (“Integer number-scoring, fuzzy and IFS TOPSIS evaluations of the case study clean energy impact matrix”). The entropy (objective) weights calculated for the fuzzy and IFS methods are presented and compared in “Integer number-scoring, fuzzy and IFS TOPSIS evaluations of the case study clean energy impact matrix”.

Assessing alternatives in terms of criteria and stakeholder preferences

The base MCDA case evaluated to demonstrate the proposed method’s capabilities is set in a representative, but unspecified, mid-latitude maritime region based on an assessment conducted by the author. Sixteen clean energy alternatives are assessed using 50 criteria with preferences concerning the relative importance of those criteria indicated by 15 individual stakeholders. Various sensitivity cases making adjustments to the base case are evaluated, together with assessments for other geographically distinct regions. Each clean energy alternative is assessed linguistically (Tables 2a and 2b), using the scoring systems of Table 1. In practice, it is more objective for such assessments to be provided by a multi-disciplined advisory team that is independent of the stakeholders providing their preferences.

The individual alternatives, criteria and stakeholders are drawn from the categories identified in Fig. 1. Specifically, the 16 clean energies evaluated are:

1. Solar photovoltaic (PV) parks
2. Solar thermal plants
3. Offshore wind
4. Onshore wind
5. Hybrid PV plus wind facility plus utility-scale energy storage battery
6. Biomass
7. Run-of-river hydro
8. Large-scale hydro with integrated pumped storage facility
9. High-temperature geothermal
10. Small-scale modular nuclear reactor
11. Large-scale nuclear reactor
12. Renewable (“green”) hydrogen energy plant
13. Natural gas combined-cycle gas turbine (CCGT) with CCS
14. Hybrid solar PV plus natural gas CCGT plus CCS
15. Hybrid wind plus natural gas CCGT plus CCS
16. Tidal/wave energy

The 15 stakeholder preferences (Tables 3a and 3b) taken into consideration are:

Environment category

1. Environmental regulator
2. Environmental non-governmental organization (NGO)

Community category

3. General community representative
4. Local community representative
5. Indigenous community representative

Academia category

6. Academic: social science expert
7. Academic: science/energy expert
8. Academic: engineering expert

Government category

9. Central government
10. Regional/local government

Judiciary category

11. Legislature/judiciary

Regulator category

12. Power system regulator

Industry category

13. Power grid operator
14. Power generator (utility)
15. Technical service provider to the power industry

Fifty criteria were selected by which to evaluate each clean energy alternative with the 7-point linguistic assessment defined in Table 1. These criteria belong to the seven categories distinguished in Fig. 1. Specifically, they are:

Resource category

1. Resource availability.
2. Intermittency issues and the need for backup power supply.
3. Overall security and contribution to electricity supply.
4. Compatibility with small-scale grids.
5. Scope for technology improvements.
6. Life expectancy of equipment.
7. Vulnerability to global commodity markets.
8. Dependency on complex and/or mainly imported technologies.
9. Carbon capture and sequestration required to achieve low GHG emissions.
10. Diverse ownership and competition opportunities.
11. Oligopoly (utility) domination risks.

Infrastructure category

12. New power transmission and conversion needs.
13. Distance to market (power and equipment supply chain lengths).
14. Pressurized containment needs.
15. Power or energy storage infrastructure needs.

Operations category

16. Flexibility (ability to stop/start quickly at any time).
17. Logistics and operational complexity.
18. Maintenance and refurbishment requirements.
19. Cooling and/or cleaning requirements.
20. Energy generation and supply efficiency.

Economic category

21. Power unit cost of supply.
22. Ability to secure equity and debt finance for development.
23. Profitable viability of development projects.
24. Wholesale delivered electricity price.
25. Scope for substantial cost of supply reductions.
26. Vulnerability to consumable costs (including fuels).
27. Tax revenue generated.
28. Tax incentives or subsidies required.
29. Exposure to high decommissioning costs.

Social category

30. Employment created and sustained.
31. Potential negative impacts on public health.
32. Safety: likelihood and/or severity of accidents.
33. Security: vulnerability to attack.
34. Proximity of power supply to residential areas.
35. Need for local suppliers and/or support industries.
36. Impacts on valued sites and landscapes.
37. Noise creation.
38. Traffic movements.
39. Local lifestyle impacts.

Legal category

40. Specialist legislation required.
41. Compatible with small-scale power purchase agreements.
42. Carbon capture and sequestration liability legislation needed.

Environmental category

43. Associated life-cycle GHG emissions.
44. Associated other pollution risks and waste production.
45. Water usage.
46. Land usage.
47. Waste heat emissions.
48. Consuming rare metals and/or using toxic materials.
49. Negative ecosystem/ecology impacts.
50. Negative aesthetic/visual impacts.

Tables 3a and 3b reveal that the different stakeholder groups hold quite different views on the criteria preferences, as would be the case in all real-world examples. In the base case example, government and industry stakeholders have high preferences for the resource, infrastructure, operations and economic criteria, whereas environmental and community stakeholders have high preferences for the environmental and social criteria. Heatmap displays of Tables 3a and 3b (not shown due to the large number of criteria considered) are readily able to visually highlight these differences.

Policy/decision makers initially assign equal stakeholder importance weightings to each of the independent stakeholders in the base case evaluation. However, four additional cases were evaluated in which the stakeholder importance weights (Table 4) were varied, either to balance the contributions between the stakeholder categories (Fig. 1), or to favor one or more stakeholder categories preferentially. This is highlighted by the heat-map shading in Table 4.

Integer number-scoring, fuzzy and IFS TOPSIS evaluations of the case study clean energy impact matrix

The base case assumptions convert the linguistic scoring (Tables 2a, 2b) into numerical scores according to the scoring scales used for the eight distinct MCDA methods applied, as specified in Table 1. The 15 stakeholder weights derived from their preferences expressed in Tables 3a and 3b are then applied. In the base case, it is assumed that the decision maker’s importance weights are applied equally to each stakeholder (i.e., 1/15 = 0.06667 weight). The base case analysis results using the eight different MCDA methods described in “Method” are shown in Table 5 in terms of the ranking order derived for the 16 alternative clean energies considered (rank “1” is best; rank “16” is worst).

For all eight MCDA methods, run-of-river hydro (Alt7) is ranked top (#1) and large-scale nuclear (Alt11) is ranked bottom (#16) for the base case. For all MCDA methods, most of the higher-ranking alternatives are selected from Alt1 to Alt8 (except for Alt2, solar thermal). On the other hand, most of the lower-ranking alternatives are selected from Alt9 to Alt16. However, the detailed ranking order of alternatives varies from method to method. The two methods that include fuzzy scoring and objective weighting are considered to provide the most reliable rankings. Fuzzy TOPSIS with entropy weights selects the top-five alternatives as Alt7 > Alt4 > Alt5 > Alt1 > Alt6. This compares to the IFS TOPSIS with entropy selection of the top-five alternatives as Alt7 > Alt5 > Alt3 > Alt4 > Alt6. On the other hand, the bottom-five alternatives are Alt11 < Alt9 < Alt14 < Alt15 < Alt13 for fuzzy TOPSIS with entropy and Alt11 < Alt9 < Alt16 < Alt14 < Alt15 for IFS TOPSIS with entropy.

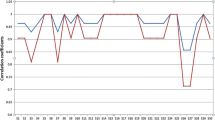

The entropy and entropy weight values calculated for the fuzzy TOPSIS and IFS TOPSIS methods are listed in Table 6 for the base case assumptions and compared in Fig. 4. The entropy weights applied by both methods are quite distinct for base case assumptions due to their different scoring systems (Table 1) and allocation of uncertainty. The fuzzy TOPSIS entropy weights cover a range of 0.088 compared to a range of just 0.028 for IFS TOPSIS with S = 0.2 (Table 6).

It is apparent from Fig. 4 that the fuzzy TOPSIS method calculates particularly high entropy weights (low entropy) for two criteria: water use (Criteria #45) and oligopoly domination risks (Criteria #11). The other 48 criteria cover a weight range similar to that calculated by the IFS TOPSIS method (Fig. 4). The allocation of higher significance to these two criteria by the objective weights applied by the fuzzy method contributes to its different order of ranking compared to the IFS method. For the IFS method, it is carbon capture required (Criteria #9) and carbon capture legislation required (Criteria #42) that are assigned the highest entropy weight of 0.0343. Applying an integer number score for assessments AA and ZZ rather than fuzzy scores does not change the entropy weights significantly (Table 6).

The rebalancing of the influence of the criteria by applying the entropy weights tends to redress the balance of the unweighted scores by increasing the influence of those criteria assigned the highest entropy weights at the expense of those criteria assigned the lowest entropy weights. Two points need to be borne in mind: (1) the objectively weighted scores are subsequently adjusted, firstly by the subjective weightings applied by the stakeholders, and then by the stakeholder importance weights applied by decision makers, if required; and, (2) as the linguistic assessments applied to any criterion for any alternative change, the entropy for that criterion is also likely to change, thereby influencing the entropy weights applied, and potentially the relative rankings of the alternatives.

The balance of uncertainty is relatively evenly distributed across the base case fuzzy distribution-scoring system applied (Table 1). On the other hand, for the IFS scoring system (Table 1) uncertainty is greatest towards the middle of the scoring scale (score “M”) and decreases to the extremes of the scale (scores “AA” and “ZZ”). This is so because score “M” has the highest minimum to maximum ratio [(Mu + hesitancy)/(Nu + hesitancy) = 1] for M scores in which Mu = Nu (Table 1). On the other hand, “AA” and “ZZ” have the lowest hesitancy and minimum to maximum ratio compared to the other linguistic assessments in the IFS scoring scheme applied (Table 1). It is this minimum to maximum ratio that has the greatest influence on the IFS entropy calculation. The criteria that have the least number of assessments close to the center of the scoring range tend to be associated with the lowest calculated IFS entropy values and vice versa.

Discussion: sensitivity analysis of MCDA for clean energy alternatives

Whereas the results presented in “Results” focus on the application of the proposed model to a specific mid-latitude region with a certain set of assumptions, it is important to test the impact of the assumptions on the clean energy alternative rankings derived using the different MCDA techniques applied. Sensitivity cases are presented that assess the impacts on the base case results of applying: (1) different scoring systems (“Impacts of applying different numerical inversions to linguistic assessments”); (2) different stakeholder importance weightings (“Impacts of applying different stakeholder importance weightings”); (3) different entropy weightings (“Impacts of changing the S value in the IFS entropy-weighting method”). In addition to the base case mid-latitude maritime region (Table 5), MCDA analyses of clean energy alternatives for four other distinct geographic regions are assessed by the author and their summary results are compared (“Assessment of generic regional conditions distinct from the base case”; Tables 8, 9, 10, 11).

Impacts of applying different numerical inversions to linguistic assessments

A range of different numerical scoring systems were tested for each MCDA method. These involved changing the range of the non-linear scale, changing the fractional values and spreads of the fuzzy scoring systems, and for the IFS method changing the degree of hesitancy, while maintaining all other base case assumptions. Reassuringly, these alternative numerical inversions had minimal impacts on the ranking order of alternatives for the base case linguistic assessments. The most significant impact was changing assessments AA and ZZ from fuzzy to integer numbers, 1 and 0, respectively. That change slightly altered the order of the top-five ranked alternatives selected by the IFS TOPSIS with entropy method to Alt4 > Alt5 > Alt1 > Alt7 > Alt3 (i.e., onshore wind ranked first). On the other hand, that alternative numerical inversion scheme left the top-five ranking for the fuzzy TOPSIS method with entropy unchanged at Alt7 > Alt4 > Alt5 > Alt1 > Alt6.

Impacts of applying different stakeholder importance weightings

A set of five sensitivity cases, described in Table 4, were evaluated to assess the impacts of applying different stakeholder importance weightings (\({\mathrm{Wg}}_{k}\)) with all other base case assumptions retained. These alternative stakeholder importance allocations had very minor effects on the ranking orders selected by the MCDA methods evaluated. For the IFS with entropy method, Case 3 and Case 4 (Table 4; increased community emphasis) caused ranks #4 and #5 to reverse, with Alt5 moving up to rank #4 and Alt8 moving down to rank #5. In that method, the positions of ranks #13, #14 and #15 also fluctuated from case to case. The other scoring methods showed one or two middle to lower ranking alternatives switching rankings, except for the fuzzy with and without entropy methods, which remained completely unaffected by these stakeholder importance modifications. These findings suggest that the IFS methods are more sensitive to stakeholder importance weight variations than fuzzy MCDA methods.

Impacts of changing the S value in the IFS entropy-weighting method

One of the advantages of the IFS entropy method used is that it enables the S factor in Eq. 20 to be varied to test the sensitivity of the method to different scales of entropy weights. Table 7 lists the evaluations of the base case applying the IFS entropy method with 20 different S values varying from 0.01 to 5.0.

Although Alt#7 remains as rank #1 and Alt#11 remains as rank #16 for all 20 S-value cases, the ranked positions of other alternatives change progressively, some moving up the rankings (e.g., Alt#13) and some moving down (e.g., Alt#2), as the S value increases and the range of entropy weights applied narrows. However, the overall ranking order is not dramatically changed by minor changes in S value, which suggests that a base case value of S = 0.2 provides a representative assessment.

Assessment of generic regional conditions distinct from the base case

In total, five distinct regional scenarios have been assessed and compared by the author. These are:

Scenario #1 (base case; with detailed results presented in “Results”). Marine mid-latitude conditions (Tables 2a and 2b);

Scenario #2 Arid low-latitude region (Table 8);

Scenario #3 Landlocked mountainous low- to mid-latitude region (Table 9);

Scenario #4 Sub-tropical low-lying region (Table 10); and,

Scenario #5 High-latitude harsh frozen winter conditions with spring melt waters (Table 11)

Impact matrix assessments similar to Tables 2a and 2b (Scenario#1; base case) are compiled for each of the scenarios #2 to #5. In the interest of space, these are not displayed as tables but the changes in linguistic assessments made to specific criteria from the base case assessments for each clean energy alternative are described in the following paragraphs. These changes are indicative for the generic regions described and only minimal changes have been made to the base case assumptions to illustrate the impacts of such changes on the alternative rankings. In practice, each country and/or specific regions within countries would have distinct assessments applied to the multiple criteria for each technology alternative.

Scenario #2 linguistic assessment changes applied to the base case are:

Alt#1 Criterion (C) #1 is VG, C#3 is G;

Alt#2 C#1 is VG, C#3 is G;

Alt#5 C#1 is VG, C#3 is VG;

Alt#6 C#1 is P, C#2 is P, C#3 is P, C#10 is P;

Alt#7 C#1 is VP, C#2 is ZZ, C#3 is VP, C#4 is P, C#6 is M, C#10 is VP, C#16 is M, C#17 is M, C#18 is M, C#20 is M; and,

Alt#8 C#1 is VP, C#2 is ZZ, C#3 is VP, C#6 is M, C#10 is P, C#16 is M, C#17 is M, C#18 is M, C#20 is M.

Scenario #3 linguistic assessment changes applied to the base case are:

Alt#3 C#1 is VP, C#2 is VP, C#3 is ZZ, C#4 is VP, C#5 is M, C#10 is P, C#16 is VP, C#17 is VP, C#18 is VP, C#20 is VP; and,

Alt#16 C#1 is VP, C#2 is VP, C#3 is ZZ, C#4 is VP, C#10 is VP, C#16 is VP, C#17 is VP, C#18 is VP, C#20 is VP.

Scenario #4 linguistic assessment changes applied to the base case are:

Alt#1 C#1 is VG;

Alt#2 C#1 is VG;

Alt#6 C#1 is VG, C#2 is VG, C#3 is VG;

Alt#7 C#1 is G, C#2 is G, C#3 is M; and

Alt#8 C#1 is VP, C#2 is P, C#3 is VP, C#10 is VP.

Scenario #5 linguistic assessment changes applied to the base case are:

Alt#1 C#1 is VP, C#2 is VP, C#3 is VP, C#4 is P, C#5 is M, C#6 is P, C#10 is VP, C#16 is VP, C#17 is VP, C#18 is VP, C#20 is P;

Alt#2 C#1 is VP, C#2 is VP, C#3 is VP, C#4 is VP, C#5 is M, C#6 is P, C#10 is ZZ, C#16 is VP, C#17 is VP, C#18 is VP, C#20 is P;

Alt#5 C#1 is P, C#2 is P, C#3 is P, C#4 is M, C#5 is M, C#6 is P, C#16 is P;

Alt#7 C#2 is VP, C#3 is VP, C#6 is M, C#16 is M, C#17 is M, C#18 is M, C#20 is M;

Alt#8 C#2 is VP, C#3 is P, C#6 is M, C#16 is M, C#17 is M, C#18 is M, C#20 is M; and,

Alt#16 C#2 is VP, C#16 is VP, C#17 is VP, C#18 is VP.

The ranked assessment results for Scenarios #2 to #5 are illustrated in Tables 8, 9, 10, and 11 and those results are summarized and assessed in the following paragraphs.
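Because each scenario is defined as a short list of changes to the base case, it can be represented as a set of overrides applied to a copy of the base-case linguistic impact matrix. The Python sketch below illustrates that idea using the Scenario #4 changes listed above. The base-case matrix here is only a placeholder filled with "M" (the actual assessments are in Tables 2a and 2b), and the function and variable names are hypothetical.

```python
import copy

# Linguistic terms that appear in the scenario change lists.
VALID_TERMS = {"VG", "G", "M", "P", "VP", "ZZ"}

# Scenario #4 changes from the text: {alternative: {criterion: term}}.
SCENARIO_4 = {
    1: {1: "VG"},
    2: {1: "VG"},
    6: {1: "VG", 2: "VG", 3: "VG"},
    7: {1: "G", 2: "G", 3: "M"},
    8: {1: "VP", 2: "P", 3: "VP", 10: "VP"},
}


def apply_overrides(base, overrides):
    """Return a new impact matrix with the scenario changes applied.

    The base matrix is left untouched so several scenarios can share it.
    """
    scenario = copy.deepcopy(base)
    for alt, changes in overrides.items():
        for crit, term in changes.items():
            if term not in VALID_TERMS:
                raise ValueError(f"unknown linguistic term: {term}")
            if alt not in scenario or crit not in scenario[alt]:
                raise KeyError(f"no cell for Alt#{alt}, C#{crit}")
            scenario[alt][crit] = term
    return scenario


# Placeholder base case (assumption): 16 alternatives x 50 criteria, all "M".
base_case = {alt: {crit: "M" for crit in range(1, 51)} for alt in range(1, 17)}

scenario_4 = apply_overrides(base_case, SCENARIO_4)
print(scenario_4[8][10], base_case[8][10])
```

Keeping the scenarios as overrides rather than full matrices makes the differences from the base case explicit and easy to audit.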

Scenario 2 (generic arid low-latitude region; Table 8) assessments lead to all MCDA methods selecting Alt#5, Alt#4, Alt#3 and Alt#1 (wind plus solar technologies) above Alt#7 (small-scale hydro) at the top of their rankings. On the other hand, Alt#11, Alt#9, Alt#8, Alt#14 and Alt#16 are consistently in the bottom-five ranked technologies in this setting. IFS TOPSIS with entropy differs from the other methods in placing onshore wind (Alt#4) as the rank#1 selection, and it ranks biomass (Alt#6) and tide/wave (Alt#16) much more highly than the other methods. The other methods rank Alt#5 (PV + Wind + Battery) as rank#1.

Scenario 3 (generic landlocked mountainous region; Table 9) assessments lead to all MCDA methods selecting Alt#7 (small-scale hydro) at the top of their rankings with Alt#5, Alt#4, Alt#1 and Alt#6 (wind/solar/biomass technologies) in the remaining top-five rankings. On the other hand, Alt#11, Alt#16, Alt#9, and Alt#14 are consistently in the bottom-five ranked technologies in this setting. IFS TOPSIS with entropy differs slightly from the other methods in placing offshore wind (Alt#3) and wave/tide (Alt#16) at higher middle-ranked positions than the other methods. It is likely that when transmission costs are quantified in subsequent pre-FEED studies, Alt#3 and Alt#16 would move towards the bottom of the rankings, as they do for the other methods.

Scenario 4 (generic sub-tropical landlocked region; Table 10) yields rankings very similar to those of Scenario 3, with Alt#7 ranked #1 by all MCDA methods.

Scenario 5 (generic high-latitude harsh winter region; Table 11) places Alt#7 (small-scale hydro) at the top of the rankings for all but one MCDA method. Alt#3, Alt#4, Alt#10 (small modular reactor) and Alt#6 (biomass) consistently make up the other top-five rankings, whereas solar technologies move down the rankings. The fuzzy TOPSIS with entropy method differs from the other techniques by placing onshore wind (Alt#4) as rank#1.

Consideration of these different generic geographic/climatic scenarios illustrates the benefit of comparing the rankings selected by the different methods. Bearing in mind that just a few changes to the linguistic assessments for some of the resource and operational criteria categories are made to the base case to create these scenarios, it is reiterated that in the real world there would most likely also be some additional differences in the economic, social, judicial and environmental criteria assessments in moving from one of these scenarios to another. In the interests of simplicity, such variations have not been included.

Overall, the fuzzy TOPSIS with entropy and IFS TOPSIS with entropy methods are considered to be the most meaningful. This is due to their numerical inversion approaches taking into account uncertainty, albeit in distinctive ways, and applying both objective and subjective weights. Moreover, these two methods are both conducive to conducting simulation analysis by varying the membership function for each fuzzy set or the hesitancy value for the IFS method. A study is underway to illustrate how such simulations can further discriminate between the pros and cons of technology alternatives in specific regional settings. The feasibility assessment protocol described in this study (“MCDA protocol applied and recommended for provisional clean energy technology selections”) can also be readily adapted to pre-FEED and FEED analysis, at which point some of the economic, operational and resource criteria could be assessed quantitatively, albeit with high uncertainty margins. The analysis would then involve a mixture of linguistic assessments for some criteria and quantitative values for other criteria. Also, at that stage, the alternatives considered would probably be narrowed down to about five or ten, with the lower ranking alternatives from the initial feasibility analysis described here eliminated from more detailed analysis at the pre-FEED stage.

Conclusions

Feasibility-stage assessments of the best future mix of clean energy technologies for specific regions to adopt are hampered by a lack of quantitative information with which to compare alternatives. There is typically high uncertainty with respect to many economic, environmental and social impacts, and infrastructure requirements, particularly capital cost requirements and life-cycle emissions along the required supply chains. This makes it almost impossible to conduct quantitative comparisons at the feasibility stage. However, useful and transparent comparisons can be achieved with the qualitative data that is available for clean and sustainable energy alternatives. Such assessments can provide provisional guidance with respect to potential future energy mix configurations for specific local and regional settings. This makes it possible to establish comparative rankings of suitability, which are likely to change over time as local conditions evolve, particularly due to climate change and urban growth.

A 15-step protocol (“MCDA protocol applied and recommended for provisional clean energy technology selections”) for such a qualitative clean energy feasibility assessment is proposed. It applies the TOPSIS method of multi-criteria decision analysis (MCDA) based on integer number, fuzzy and intuitionistic fuzzy (IFS) scoring systems. These deterministic and fuzzy methods (described in detail in “Method”) begin with linguistic assessments of a large number of criteria, which is appropriate for the high level of uncertainty associated with such feasibility-stage analysis. This protocol is designed for policy makers, regulators and investors as it is simple, flexible and transparent to implement.

A case is made for applying objective and subjective weights to fuzzy TOPSIS methods in a specific order. The impacts of two sets of subjective weights should be considered: the first pertaining to diverse stakeholder preferences; the second pertaining to the relative importance of the stakeholder weights relevant to policy makers. The latter subjective weight makes it possible for the policy maker to transparently redistribute emphasis among stakeholders. For instance, if too many of the individual stakeholder assessments available come from specific interest groups, that can be compensated for by the latter subjective weight. It is recommended that rights of veto over specific alternatives be reserved for policy/decision makers. It is considered more appropriate to focus upon potential vetoes as more quantitative data becomes available and/or regionally specific regulatory limits (e.g., pertaining to emissions) are applied. Granting veto rights to individual stakeholders potentially encourages them to manipulate their use and introduces unnecessary bias into the MCDA assessments.

All the MCDA methods are computed transparently using Excel workbooks driven by VBA coding that readily facilitate multiple sensitivity and scenario analyses. These models can provide step-by-step intermediate calculations before and after each set of objective and subjective weights is applied. The ultimate MCDA output is a suitability ranking order of the clean energy technologies evaluated for a specific area.
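The sequence of objective entropy weights, subjective weights and final ranking can be sketched for the simplest integer-scoring case. The following is a minimal Python illustration, not the Excel/VBA workbooks used in the study. It assumes the standard Shannon entropy weighting and classical crisp TOPSIS rather than the fuzzy or IFS formulations, treats every criterion as a benefit criterion, and uses an invented score matrix and invented stakeholder weights.

```python
import math


def entropy_weights(matrix):
    """Objective weights from Shannon entropy of each criterion column."""
    n, m = len(matrix), len(matrix[0])
    k = 1.0 / math.log(n)
    divergences = []
    for j in range(m):
        column = [row[j] for row in matrix]
        total = sum(column)
        # Zero scores contribute nothing (0 * ln 0 is taken as 0).
        entropy = -k * sum((x / total) * math.log(x / total) for x in column if x > 0)
        divergences.append(1.0 - entropy)
    total_div = sum(divergences)
    return [d / total_div for d in divergences]


def combine_weights(objective, subjective):
    """Multiply objective by subjective weights and renormalise to sum to one."""
    products = [o * s for o, s in zip(objective, subjective)]
    total = sum(products)
    return [p / total for p in products]


def topsis(matrix, weights):
    """Closeness of each alternative to the positive ideal solution (0 to 1)."""
    m = len(weights)
    norms = [math.sqrt(sum(row[j] ** 2 for row in matrix)) for j in range(m)]
    weighted = [[weights[j] * row[j] / norms[j] for j in range(m)] for row in matrix]
    ideal = [max(row[j] for row in weighted) for j in range(m)]
    anti_ideal = [min(row[j] for row in weighted) for j in range(m)]
    scores = []
    for row in weighted:
        d_plus = math.dist(row, ideal)        # Euclidean distance to ideal
        d_minus = math.dist(row, anti_ideal)  # Euclidean distance to anti-ideal
        scores.append(d_minus / (d_plus + d_minus))
    return scores


# Invented integer scores: 4 alternatives (rows) x 3 criteria (columns).
matrix = [[5, 4, 3], [3, 5, 2], [2, 2, 5], [1, 1, 1]]
stakeholder_weights = [0.5, 0.3, 0.2]  # hypothetical subjective weights

objective = entropy_weights(matrix)
combined = combine_weights(objective, stakeholder_weights)
scores = topsis(matrix, combined)

# Alternatives numbered from 1, best first.
ranking = sorted(range(1, len(matrix) + 1), key=lambda a: scores[a - 1], reverse=True)
print(ranking)
```

A second layer of subjective weights, such as the stakeholder-importance weights set by the policy maker, could be folded in with a further call to `combine_weights` in the same way. Printing `objective`, `combined` and `scores` separately gives the kind of intermediate values that allow the effect of each weighting step to be inspected.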

Model results and analysis are presented in detail for an unspecified mid-latitude maritime region case study (“Results”). The combined analysis presented compares the results of integer number-scoring and fuzzy TOPSIS methods to provide ranking orders of the suitability of 16 clean energy technologies, based on qualitative assessments of 50 criteria and the preferences of 15 diverse stakeholders. Analysis of the case study results (“Integer number-scoring, fuzzy and IFS TOPSIS evaluations of the case study clean energy impact matrix”) reveals that for this mid-latitude maritime region the MCDA methods all select run-of-the-river hydro as the highest ranking energy alternative and large-scale nuclear and high-temperature geothermal as the lowest ranking energy alternatives. However, the order of ranking of other energy alternatives varies according to the MCDA method used. The fuzzy and IFS TOPSIS methods best capture the uncertainties involved but do so with different emphasis. The top-five energy alternatives (listed in descending order) selected by the fuzzy TOPSIS method are Alt7 > Alt4 > Alt5 > Alt1 > Alt6 (Table 5), whereas those selected by the IFS TOPSIS method are Alt7 > Alt5 > Alt3 > Alt4 > Alt6 (Table 5). These results suggest that it is worthwhile calculating both the fuzzy and intuitionistic fuzzy TOPSIS methods and comparing their results, because they integrate uncertainty in slightly different ways, typically resulting in slightly different rankings of the clean energy alternatives assessed.
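The two top-five lists quoted from Table 5 can be compared mechanically. The short Python sketch below shows one simple way to do so, reporting which alternatives both methods select, which appear in only one list, and which hold the same rank position in both. The variable names are illustrative only.

```python
# Top-five alternatives from Table 5, best first (as quoted in the text).
fuzzy_top5 = [7, 4, 5, 1, 6]
ifs_top5 = [7, 5, 3, 4, 6]

# Alternatives selected by both methods, in fuzzy TOPSIS order.
shared = [alt for alt in fuzzy_top5 if alt in ifs_top5]

# Alternatives appearing in only one of the two lists.
only_fuzzy = [alt for alt in fuzzy_top5 if alt not in ifs_top5]
only_ifs = [alt for alt in ifs_top5 if alt not in fuzzy_top5]

# Alternatives holding the same rank position in both lists.
same_position = [a for a, b in zip(fuzzy_top5, ifs_top5) if a == b]

print(shared)         # [7, 4, 5, 6]
print(only_fuzzy)     # [1]
print(only_ifs)       # [3]
print(same_position)  # [7, 6]
```

Four of the five alternatives are common to both lists, and only the first and fifth positions coincide, which is consistent with the two methods agreeing on the leading technologies while ordering them differently.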

Sensitivity analysis is run for the mid-latitude maritime case study region to test different scoring systems (“Impacts of applying different numerical inversions to linguistic assessments”), stakeholder importance weights (“Impacts of applying different stakeholder importance weightings”), and the benefits of flexible entropy weighting (“Impacts of changing the S value in the IFS entropy-weighting method”). Four additional scenarios were evaluated considering different geographic regions (“Assessment of generic regional conditions distinct from the base case”) with assessments conducted by the author. For each scenario, the energy alternatives are scored linguistically and region-specific MCDA analysis conducted. For the arid low-latitude region, the MCDA methods selected solar and wind as the highest ranking energy alternatives (Table 8). For the landlocked mountainous region (Table 9) and the landlocked sub-tropical region (Table 10), the MCDA analyses select small-scale hydro, wind, solar and biomass as the highest ranking energy alternatives based on the assumptions made. For the high-latitude harsh winter region (Table 11), small-scale hydro, wind (onshore and offshore), biomass and small modular nuclear reactors are selected as the highest ranking energy alternatives.

The results obtained for these scenarios depend heavily on the scoring assessments and on the stakeholder and decision-maker assumptions. However, their analysis confirms that rigorous and transparent feasibility-stage assessments of clean energy technology mixes can be achieved by applying the detailed 15-step protocol, which utilizes fuzzy and intuitionistic fuzzy TOPSIS analysis incorporating flexible entropy weights. The protocol can effectively assimilate the perspectives of stakeholders with widely differing views, evaluating a large number of different clean energy technologies, based on 50 or more multi-faceted criteria.

Abbreviations

- \(A\) : Set of n alternative bidders

- \(A_j\) : Sum of unweighted criteria scores x for each of n alternatives

- \(\stackrel{\sim }{A}^+\) : Set of positive ideal solutions for each of n alternatives

- \(\stackrel{\sim }{A}^{-}\) : Set of negative ideal solutions for each of n alternatives

- \(C\) : Set of m criteria with which to assess alternatives

- \(d_j^-\) : Euclidean distance from negative ideal solution for each of n alternatives

- \(d_j^+\) : Euclidean distance from positive ideal solution for each of n alternatives

- d i :

-