Abstract

Despite the prevalence of undergraduate drop-in mathematics tutoring, little is known about the behaviors of this specific group of tutors. This study serves as a starting place for identifying their behaviors by addressing the research question: what observable behaviors do undergraduate drop-in mathematics tutors exhibit as they interact with students? We analyzed 31 transcripts of tutoring sessions using inductive coding, finding 83 observable behaviors. We discovered that tutors used behaviors aimed at engaging students, while primarily retaining control of the decision making and problem-solving process. Although tutors asked students to contribute to the mathematics, they often asked a less demanding question before the student had a chance to respond to the initial question. Our findings reveal the existence of opportunities for student learning in tutoring sessions as well as potential areas of growth for tutors. We present questions for future research that arose from analysis of the data and discuss how our results may be used in tutor training.

Introduction

Academic support for undergraduate mathematics students in the form of tutoring is widely available in the U.S.A. (Bressoud et al., 2015). We define tutoring as a form of academic support outside of class, such as homework help and exam preparation, provided by someone who is not the instructor (Mills et al., 2020). A common expectation from faculty is that students should spend more time doing homework and studying than they spend in class. Thus, out-of-class learning environments may be where students engage more with the course materials. It’s possible that some students spend more one-on-one time with tutors than with their instructors. There is potential for student learning in the tutoring environment because it offers a more individualized, immediate, and possibly interactive experience than students encounter in the classroom (Lepper & Woolverton, 2002).

Tutoring contexts in the U.S.A. vary widely. In drop-in tutoring, students go to a center to work on homework and study while tutors circulate to offer help to those who ask. In appointment tutoring, students schedule meetings with tutors that often last 30–60 min. Tutors may be peers with minimal training or professionals with years of classroom and tutoring experience. For a more detailed description of the common types of mathematics tutoring in the U.S.A., see Mills et al. (2020). In this paper, we will focus on behaviors of tutors who are undergraduate students, not instructors or other content experts, and not trained professional tutors. This choice was made because survey data suggests that the majority of universities in the U.S.A. offer mathematics tutoring by undergraduates. Johnson & Hansen (2015) found that 89.5% of 115 universities surveyed offered tutoring by undergraduates, and Mills et al. (2020) found that 96% of the 75 universities surveyed offered tutoring by undergraduates. While we note that some of these centers also offer support from graduate students and faculty members, we argue that the prevalence of undergraduate mathematics tutors warrants the study of their behaviors. We also note that although undergraduate peer tutors cannot be expected to enact the same practices as experienced instructors, quantitative studies show that students’ use of undergraduate peer tutoring is correlated with improved student grades (Byerley et al., 2018; Rickard & Mills, 2018).

Prior research has examined tutoring interactions to identify certain tutor behaviors (e.g., Graesser & Person 1994; Graesser et al., 1997; Lepper & Woolverton 2002; Schoenfeld et al., 1992). However, these models were created for appointment tutoring, often with K-12 students, and in a variety of content areas, and would not necessarily transfer to the drop-in mathematics tutoring context (Johns, 2019). For example, in contrast to appointment tutors, drop-in tutors have limited time per tutoring interaction (Byerley et al., 2019, 2020). Moreover, these models were developed by studying tutors or teachers with extensive experience and a background in education. In contrast, undergraduate drop-in tutors in this study work part time for 1–3 years (Byerley et al., 2019, 2020). Thus, undergraduate drop-in tutors have fewer years to develop expertise and less time per tutoring interaction than many of the tutors in the models described in the literature. Because we are focusing on tutoring within the content area of mathematics, we also see a need to leverage mathematics education research to analyze mathematics tutoring.

The overarching goal of our research program is to document tutoring practices and identify ways to train tutors to enact more effective practices. When we first looked for tutors’ enactment of productive teaching behaviors such as decentering, which is when the teacher tries to understand a student’s thinking and take on their perspective (Ader & Carlson, 2021; Teuscher et al., 2016), and professional noticing of mathematical thinking (Jacobs et al., 2010), we found some evidence that tutors engaged in these behaviors (Mills et al., 2019), but it was not the norm. However, we did see important differences among tutor behaviors (e.g., asking students no questions versus asking students to perform calculations). Based on this and our desire not to take a deficit perspective, we saw a need for characterizing the observable behaviors tutors do enact. Tutor training could then aim to move tutors towards increasing student engagement and understanding students’ mathematical thinking. This study serves as a first step to this end by identifying and characterizing observable behaviors of tutors.

We seek to answer the following research question: What observable behaviors do undergraduate drop-in mathematics tutors exhibit as they interact with students? We address this question by examining 31 tutor session transcripts from distinct tutors, collected during Fall 2018. We open coded each tutor talking turn to identify observable behaviors (subsequently referred to as behaviors) and then grouped these behaviors into categories. In the results, we describe each category, including example behaviors. We then discuss what our results indicate about students’ opportunities for engagement with the mathematics.

Theoretical Framework

To categorize tutor behaviors, we drew on Bloom and colleagues’ (1956) taxonomic key for learning objectives which was later expanded into a taxonomic matrix (Anderson et al., 2001). The taxonomic matrix, which we describe below, was developed from an assertion that “objectives indicate what we want students to learn” and “objectives are especially important in teaching because teaching is an intentional and reasoned act.” (Anderson et al., 2001, p.3). Similarly, tutoring is intentional in that tutors tutor for a purpose, generally to help students learn and/or help them solve problems. It is also a reasoned act in that what tutors do or communicate with a student is deemed by the tutor to be worthwhile. We argue tutor behaviors likely reflect both how they help students learn and what they want the student to learn.

Anderson and colleagues’ (2001) taxonomic matrix for learning objectives is comprised of a knowledge dimension (Factual, Procedural, Conceptual, Metacognitive) and a cognitive process dimension (Remember, Apply, Understand, Analyze, Evaluate, Create), forming a 24-cell matrix. The cognitive processes are arranged in order of those requiring the least amount of cognitive engagement to those requiring the most. A learning objective falls into one of those 24 cells, having both a knowledge type and cognitive process. For example, the learning objective “Recall the definition of limit” would have a Factual knowledge type and a cognitive process of Remember.
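As a minimal illustration (ours, not part of Anderson and colleagues’ framework), the matrix can be sketched as the Cartesian product of the two dimensions. The names `KNOWLEDGE_TYPES`, `COGNITIVE_PROCESSES`, `MATRIX_CELLS`, and `classify` are hypothetical, and the order of the cognitive processes simply follows the listing in the text above.

```python
from itertools import product

# Dimension labels as listed in the text; the variable names are illustrative.
KNOWLEDGE_TYPES = ["Factual", "Procedural", "Conceptual", "Metacognitive"]
COGNITIVE_PROCESSES = ["Remember", "Apply", "Understand", "Analyze", "Evaluate", "Create"]

# Each cell pairs one cognitive process with one knowledge type.
MATRIX_CELLS = list(product(COGNITIVE_PROCESSES, KNOWLEDGE_TYPES))


def classify(process: str, knowledge: str) -> tuple[str, str]:
    """Return the matrix cell for a learning objective, validating both labels."""
    cell = (process, knowledge)
    if cell not in MATRIX_CELLS:
        raise ValueError(f"{cell} is not a cell of the matrix")
    return cell


# Example from the text: "Recall the definition of limit".
limit_objective = classify("Remember", "Factual")
print(len(MATRIX_CELLS))  # 24
print(limit_objective)    # ('Remember', 'Factual')
```

Representing a cell as a (process, knowledge) pair mirrors the claim that every learning objective has exactly one cognitive process and one knowledge type.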

Building on Anderson and colleagues’ (2001) matrix and Tallman and Carlson’s (2016) work adapting the matrix to classify the cognitive demand of Calculus I exam questions, White and Mesa (2014) further adapted the matrix to code the cognitive orientation of homework, quiz, and exam tasks used in Calculus I courses (Fig. 1). White and Mesa (2014) used the term “cognitive orientation” in their work rather than “cognitive demand” because by examining tasks alone, they could not determine what students actually experienced, only the cognitive processes and knowledge types students could potentially use. Similarly, we are coding the potential cognitive processes that students may use to respond to the tutor’s prompt.

White and Mesa (2014) created new codes within Anderson et al.’s (2001) matrix. In Fig. 1, Anderson et al.’s knowledge types are columns and the cognitive processes are rows. White and Mesa’s codes (in grey) span multiple knowledge types and/or cognitive processes and are specific to mathematics. Note, their data did not contain any codes within Metacognitive knowledge. To describe our theoretical framework in detail, we first describe Factual, Procedural, and Conceptual knowledge types, drawing primarily on White and Mesa’s (2014) framework. For Metacognitive knowledge, we draw on Anderson et al.’s description of Metacognitive knowledge as well as research in self-regulated learning (Pintrich, 2000) and problem-solving (Schoenfeld, 1985).

Factual, Procedural, and Conceptual Knowledge

Factual knowledge is defined as “the basic elements students must know to be acquainted with a discipline or solve problems in it” (Anderson et al., 2001, p.46). It consists of isolated pieces of information such as terminology or discrete facts. White and Mesa’s (2014) Remembering code (Fig. 1) is used for tasks that required Factual knowledge and the cognitive process of Remembering, recalling memorized information. For example, tutors may have students recall from memory the quadratic formula.

Hiebert & Lefevre (1986) define procedural knowledge in mathematics as knowledge of rules and algorithms with linear sequences of steps to be followed. This includes procedures for acting on objects that are symbols, such as how to manipulate symbols to solve an algebraic equation. Similarly, Rittle-Johnson & Alibali (1999) define procedural knowledge as “action sequences for solving problems” (p.175). This aligns with White and Mesa’s (2014) Recall and Apply Procedure code (Fig. 1) which utilizes Procedural knowledge and the cognitive processes of Remember and Apply. For example, factoring an expression requires remembering the procedure for factoring and then using it to factor the expression.

Conceptual knowledge is defined as “knowledge that is rich in relationships…a connected web of knowledge…by definition, it is part of a conceptual knowledge only if the holder recognizes its relationship to other pieces of information” (Hiebert & Lefevre, 1986, p. 3–4). Hiebert and Lefevre argue that procedures can be linked with conceptual knowledge when learned with meaning, but when procedures are merely memorized, conceptual links are absent. When using procedures connected to conceptual knowledge, one can reason about what the symbols represent, and can consider if the procedures make sense rather than just trying to manipulate symbols to follow a set of steps.

White and Mesa describe the distinguishing feature between Recall and Apply Procedure and Recognize and Apply a Procedure (Fig. 1) as the potential [emphasis ours] that the task would “elicit students making connections and applying conceptual understanding, while acknowledging that, depending on instruction and on student, the tasks also had the potential to be proceduralized and worked without conceptual understanding or connections” (p.679). For example, consider a tutor asking a student to find the x-intercepts of a quadratic function. The student may have a procedure for finding x-intercepts memorized or they may recognize that they need to set the quadratic function equal to zero. There is the potential for the student to use the relationship between zeroes and x-intercepts to determine the necessary procedure. White and Mesa’s (2014) Understand code (Fig. 1) requires Conceptual knowledge and the cognitive process of Understand. White and Mesa note that “in Understand tasks, information is extracted from a situation, as opposed to a Procedure, which is enacted” (p.680). For example, White and Mesa classify “asking students to extract features from graphs” (p.680) as Understand. White and Mesa include the processes of interpreting, exemplifying, classifying, summarizing, inferring, comparing, and explaining in this code. For example, tutors may ask a student to interpret an answer within the context of a word problem.

White and Mesa’s (2014) framework in Fig. 1 includes four additional codes: Apply Understanding, Analyze, Evaluate, and Create. These codes did not appear in our data, and in White and Mesa’s study they appeared, combined, less than 0.1% of the time.

Metacognitive Knowledge

Metacognitive knowledge is knowledge about cognition or one’s own thinking. To examine Metacognitive knowledge, we began with Anderson et al.’s (2001) three subtypes, Knowledge of Cognitive Tasks, Self-Knowledge, and Strategic Knowledge. As an example of Knowledge of Cognitive Tasks, tutors may know the types of problems that might appear on a particular instructor’s exams. Self-knowledge is knowledge of one’s own broad strengths and weaknesses such as knowledge that one tends to make arithmetic mistakes.

We developed subcategories of Strategic Knowledge, drawing upon both self-regulated learning (Pintrich, 2000) and problem-solving (Schoenfeld, 1985) literature. We used both perspectives because tutors may have a goal for the student to learn the mathematics, to solve a particular problem, or both. Strategic Knowledge entails the strategies one uses during the learning process, such as goal-setting, monitoring learning, and rehearsal learning techniques. We describe Strategic Knowledge as organized by Pintrich’s (2000) four cognitive self-regulation phases: Forethought and Planning, Monitoring, Control and Regulation, and Cognitive Reaction and Reflection. Within the context of problem-solving, Schoenfeld’s (1985) knowledge category of Control encompassed many of these same ideas; however, Schoenfeld discussed heuristics, or general strategies for solving problems, separately. Given the importance of heuristics in problem-solving, we also adopted Heuristics as a separate category.

Forethought and Planning includes explicit and intentional activation of prior knowledge, target goal setting, and activation of metacognitive knowledge (Pintrich, 2000). For example, at the beginning of a session, a tutor may ask a student what they know about quadratic functions to activate prior knowledge. For Schoenfeld (1985), the planning phase of problem-solving involves orienting oneself to the problem statement, such as rephrasing the problem statement.

Monitoring entails being purposefully aware of one’s thinking to determine comprehension and progress toward the goal (Pintrich, 2000). For example, does the student understand the explanation the tutor has just provided? Monitoring in problem-solving involves reflection on whether the current solution path is productive or a step is correct (Schoenfeld, 1985).

Control and Regulation involves intentionally adapting and changing one’s cognition and is dependent on monitoring for any “discrepancy between goal and current progress” (Pintrich, 2000, p.460). In problem-solving this includes determining when to abandon, revise, or continue one’s path. For example, either a tutor or student could decide to abandon an integration technique that does not appear to be a productive path. It is after deciding to take control and change one’s approach that one must adopt a new tactic or heuristic. Heuristics include many additional problem-solving strategies which would be employed as an initial plan or used after engaging in monitoring and control to determine that a new plan is needed. Schoenfeld defines heuristics to be “strategies and techniques for making progress on unfamiliar or nonstandard problems” (Schoenfeld, 1985, p.15). Examples of heuristics include making a sub-goal, working a simpler problem, and using a contradiction or contrapositive (Schoenfeld, 1979). Tutors may help students break apart a difficult task by asking the student to solve a small piece of the problem that contributes to the whole.

Cognitive Reaction and Reflection contains two facets: cognitive judgements and attributions. Cognitive judgements are reflections about the cognitive process (forethought, monitoring, and control) and whether the process led to meeting the target goal (Pintrich, 2000). This can include considering whether one sufficiently monitored and controlled one’s process so as not to waste time. Cognitive attributions are the factors to which a student attributes their success or failure (Zimmerman, 1998) and may include attributing success or failure to strategy use or to being “smart” or “dumb” (Zimmerman, 1998). Schoenfeld (1985) includes checking results and reasoning within reflection of problem-solving.

Summary

In categorizing tutor behaviors, we drew upon the underlying assumptions of both Anderson et al. (2001) and White and Mesa (2014); namely, we argue tutor behaviors are indicative of what tutors want students to learn and how tutors seek to help students learn. To categorize tutor behaviors, we drew upon White and Mesa’s cognitive orientation framework for the knowledge types Factual, Procedural, and Conceptual. To categorize Metacognitive knowledge, we added to the Metacognitive knowledge category discussed in Anderson et al. (2001) by leveraging the self-regulated learning (Pintrich, 2000) and problem-solving literature (Schoenfeld, 1985).

Literature Review

Research on the nature of tutoring has been conducted across a variety of tutoring environments with different characteristics such as tutor and tutee age, amount and type of tutor training and experience, duration of session, and curriculum or content. These studies have identified common tutor strategies and behaviors that are likely impacted by the differences mentioned above. Many of the studies of tutor behaviors were conducted to design intelligent tutoring systems that mimic human tutors, or to improve these programs (e.g., Chi et al., 2001; Fox, 1991; Lepper et al., 1997; Lepper & Woolverton, 2002; McArthur et al., 1990; Merrill et al., 1992; VanLehn et al., 2003). To this end, they often placed experienced teachers or professional tutors in tutoring environments to study their behaviors (Lepper & Woolverton, 2002; McArthur et al., 1990). Across these studies we did not see a consistent definition of “expert tutors,” nor did we see direct links between these behaviors and student learning outcomes. Additionally, they recognized that “most tutors in school systems are peers of the students, slightly older students, paraprofessionals, or adult volunteers. Skilled tutors are the exception, not the rule” (Graesser et al., 1995, p. 496). Although these results can inform our understanding of desirable tutoring practices, they do not always translate directly to the undergraduate mathematics drop-in tutoring context. We are motivated to find the natural behaviors of tutors and learn how we can build upon their inclinations to support them in providing student-centered tutoring.

Student Engagement

Chi et al. (2001) hypothesized that tutoring is effective because students have more opportunities to be actively engaged and ask questions in a tutoring session than they do in the classroom, and this engagement on the part of the student is what contributes to their learning. Within the classroom context, research suggests that active learning increases performance, decreases failure rates, and decreases the achievement gap for marginalized students (Freeman et al., 2014; Theobald et al., 2020). Graesser & Person (1994) found that students ask 240 times more questions in appointment tutoring settings than in classroom settings, thus giving students more opportunities to direct their own learning.

Variation exists both within and across studies in how much and in what ways tutors engage their students. Studying professional tutors, Cade et al. (2008) identified eight modes of tutoring, and the most common modes for their eight participants were lecture and scaffolding. Hume et al. (1996) describe tutors using a “directed line of reasoning,” which is a carefully sequenced series of questions, often getting easier when students are not able to successfully answer the previous ones. Fox (1991) and Lepper and Woolverton (2002) found that tutors try first to ask questions or give hints and suggestions, but if students are not able to answer, they follow up fairly quickly with support in the form of more detailed hints or questions or providing answers.

Many studies on tutoring in mathematics describe how tutors tend to prioritize solving a particular problem rather than increasing student learning or understanding (Fox, 1991; Graesser et al., 1995; McArthur et al., 1990). Even when tutors in these studies sequence multiple problems for students, they do not often discuss the purpose of the problems, have students generalize from those problems, or explicitly make connections between problems and concepts. In addition, even experienced mathematics tutors often decompose a problem into a series of closed-ended questions, prompting only for key words or the execution of simple steps (McArthur et al., 1990; Fox, 1991; Graesser et al., 1995). However, there is evidence that tutors can be trained to focus more on student understanding and to make connections between procedures and concepts (Bentz & Fuchs, 1996; Fuchs et al., 1997; Topping et al., 2003). Further, Lepper and colleagues (1997, 2002) found expert tutors often encouraged student articulation and reflection, including justifying their choices and explaining their reasoning. These tutors also asked students to make connections between the current problems and others they had seen or worked and to make generalizations.

Monitoring

Researchers have examined the ways in which tutors monitor and provide feedback in terms of student utterances and written work. Putnam (1987) expected to see tutors use what he termed a “diagnostic/remedial” approach to tutoring, where tutors attempt to learn about student thinking, identify misconceptions or gaps in knowledge and adjust their response appropriately to address what they’ve learned. However, he found tutors rarely attempted this diagnostic behavior, even in the face of repeated errors. This trend was confirmed by Graesser et al. (1995) and McArthur et al. (1990). Although Putnam’s subjects did not explore student misconceptions, they did respond to student errors in a variety of ways, including signaling an error directly or asking a question to signal an error, explaining a procedure or rule, making a comparison with another problem to highlight the error, and allowing the student room to discover the error themselves.

Control and Heuristics

In addition to providing feedback, tutors are also commonly engaged in devising and selecting strategies for students to employ. McArthur et al. (1990) describe tutors breaking tasks into subgoals. Tutors then involve students in the computational aspects of problem-solving, but deciding what to try next appears to be the domain of the tutor. One common strategy employed is attending to salient information. Cade et al. (2008) found tutors often begin by drawing attention to what the problem asks for as well as given information. Similarly, Hume et al. (1996) explain that a common form of hinting for tutors is “point[ing] to,” meaning that the tutor directs the student’s attention to some feature of the problem or to a step in the solution thus far.

Other strategies identified include referencing a similar problem and identifying or setting goals. Referencing similar problems can be used for hinting, comparison, or pointing out errors (McArthur et al., 1990; Hume et al., 1996), but the consistent feature is that the choice to make a comparison is almost always the choice of the tutor. Similarly, tutors are typically the ones to identify appropriate goals, or at least suggest identifying a goal (VanLehn et al., 2003; McArthur et al., 1990). The exception to this pattern appears with more expert tutors; Lepper and Woolverton (2002) describe the tutors in their study “comply[ing] with [student] requests” when possible and often giving students choices.

Comparison of Tutoring Environments

The distinction between expert and novice tutors is potentially significant, as are variables such as the power dynamic or familiarity between tutor and student, the amount of time spent in one session, and amount of tutor preparation for a session. Given the distinctions in drop-in tutoring, which is rarely discussed in the literature, one would expect drop-in tutors to exhibit different behaviors or a different frequency of similar behaviors. Moreover, much of the tutoring literature has not attended to the role of the content area (e.g., mathematics) on tutor behaviors, instead treating behaviors as if they apply regardless of content. We aim to fill these gaps in the literature by identifying tutor behaviors specifically within the context of undergraduate peer mathematics drop-in tutoring.

Methods

We seek to answer the following research question: What observable behaviors do undergraduate drop-in mathematics tutors enact as they interact with students? We address this question by examining 31 tutoring session transcripts from distinct tutors, collected during Fall 2018. We open coded each tutor talking turn to classify behaviors. We then organized these behaviors into categories, using existing literature to inform our organization. Finally, we calculated estimates of the occurrence of each code and category to gain insight into which behaviors and categories were most common.

Data Collection

Participants were undergraduate students employed as drop-in tutors at the math tutoring center at a large university in the midwestern region of the U.S.A. The math tutoring center was open for 64 h per week and offered free optional out-of-class support for students in mathematics courses from college algebra through differential equations. All of the tutors at this center were undergraduate peer tutors who were successful in their mathematics courses. Students would most often come into the center to work on their homework, and when they encountered difficulty, they called one of the tutors over to help. The tutors typically worked with students in several different courses, so it was not feasible to train them on every topic for every course. As a result, the tutors often helped with problems they had never seen, though they could encounter the same problem multiple times while working with different students. In the Fall 2018 semester, the center had 30,776 visits and 6048 total tutor work hours, giving a rough average of 12 min of tutor work time per student visit.
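The rough average follows directly from the two reported totals. The short check below reproduces it; the variable names are ours.

```python
# Totals reported for the Fall 2018 semester.
total_visits = 30_776
total_tutor_hours = 6_048

# Convert tutor work hours to minutes and divide by the number of visits.
minutes_per_visit = total_tutor_hours * 60 / total_visits
print(round(minutes_per_visit, 1))  # 11.8, i.e., roughly 12 min per visit
```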

The undergraduate tutors had zero to four semesters of tutoring experience and had six hours of training per semester, focusing on both mathematics content and tutor pedagogy. For example, at one biweekly staff meeting tutors worked together on a few problems from one of the courses they tutored, read a tutor transcript and discussed in groups what the tutor could have done differently, and learned about different questioning strategies. In the Fall 2018 semester, all 37 tutors employed at the tutoring center were required, as part of their training and as part of a larger study, to record a 5–7-minute-long tutoring session with a Livescribe pen, transcribe it, and write a reflection for further evaluation and discussion with their supervisor. The Livescribe pen simultaneously captured the audio along with the written work. Tutors were asked to transcribe their session for several reasons: to shorten the amount of time between the session and the meeting with their supervisor, to facilitate deeper reflection on their practices, and so that they could include details that were not captured in the audio file. Some examples from our data of details that tutors included are: “student pointed to the partial derivative with respect to y” or “student finds the formula in their notes.” The tutors asked the students for verbal consent to record before proceeding, as approved by the researchers’ institutional review board. For the purposes of this paper, we define a session as an interaction with a single student. All 37 tutors were invited and gave consent to participate in the study. However, some were missing parts of their data, thus only 31 tutors were included in the study. A few of the tutors recorded sessions that were longer than 5–7 min, and in these cases the researchers finished transcribing the remaining audio for analysis.

Analysis

Within each session, we analyzed tutor turns, that is, an utterance of a tutor bookended by the utterances of the student. As we analyzed the data from tutoring interactions, we recognized that it was impossible to determine precisely what a tutor’s intentions were when they performed a certain move. Thus, in line with previous researchers (e.g., Ader & Carlson 2021), we focus our analysis on the observable behaviors of the tutors. We considered an observable behavior to be a tutor utterance that had the potential to impact the tutoring interaction. Within each tutor turn, it was possible to code multiple behaviors, because the turns could serve several simultaneous functions (Hume et al., 1996). For example, in the excerpt below, lines 7 and 8 were a tutor turn and we coded the turn with the behaviors asked student to graph/sketch something by hand, and gave next step.

7 Tutor: So, the first thing we should do is graph it. Could you try to graph the function for

8 me on this paper so.

9 Student: [student labels \(x\)-axis] that’s as far as I can go

In our analysis, we relied primarily on the written transcript. However, Livescribe files were referenced to fill in obvious omissions in the transcript, help to clarify a tutor behavior through attending to tone or length of pauses, and to view corresponding written work.

Our analysis began with coding each tutor turn to identify specific tutor behaviors. We did not begin with a list of existing behaviors. We developed the codes for tutor behaviors by attending to the apparent function of the tutor’s words in that turn in a way that may generalize to other turns and other tutors. At times this required referencing student dialogue since much of the tutor dialogue was prompted by or in response to student dialogue. When a tutor behavior encouraged a student action, we coded based on the potential cognitive processes needed for the student to respond to the tutor’s prompt, not the student response. For example, if a tutor asked the student to identify the next step in solving a problem, but the student instead asked the tutor a question and never identified the next step, we still coded asked student to give next step.

Each of the three authors independently coded one transcript at a time, building a list of behaviors as we went through the transcripts. We discussed and compared our behaviors after we individually coded each transcript, consistent with the constant comparative method (Glaser & Strauss, 1967). Previous behaviors were modified or new behaviors created when previous behaviors could not adequately or accurately describe the tutor’s behavior in that turn. Early in this process, we realized many behaviors either captured the tutor asking the student to do something or the tutor doing something and mirrored each other. For example, we created the similar codes asked student to give next step and gave next step. Although we did not seek to create mirroring behaviors, at times, when creating or modifying codes for tutor behaviors, we considered the mirror code to aid in refining our language. In addition, for these behaviors, we found ourselves influenced by White and Mesa’s (2014) categorization of mathematics tasks in order to find the language to describe nuances in behaviors, such as distinguishing between tasks that required students to “recall and apply a procedure” versus “recognize and apply a procedure” (pp. 678–679). Our coding process produced 83 observable behaviors (presented in Appendix A).

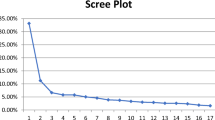

We then used axial coding (Strauss & Corbin, 1990) to identify larger categories of behaviors (Table 1). Initially, we examined several possible existing frameworks for categorizing tutor or teaching behaviors (e.g., Lepper & Woolverton, 2002; National Council of Teachers of Mathematics, 2014). Ultimately, for many of the behaviors, we determined the best fit was categorizing by Anderson and colleagues’ taxonomy matrix (2001), in particular relying primarily on White and Mesa’s (2014) adaptation (Fig. 1). Each author independently sorted the behaviors based on their perceived intended cognitive orientation, setting aside behaviors not fitting into Factual, Procedural, or Conceptual knowledge. These remaining codes were then determined to align with the Metacognitive knowledge type from Anderson et al. (2001). For codes within Metacognitive knowledge, each author independently sorted the behaviors by the type of metacognitive knowledge or work being done. The researchers then discussed the categories to reach agreement. Since we were not categorizing tasks or objectives, but rather behaviors, and we were observing tutors rather than instructors, we did not use the frameworks in White and Mesa (2014) or Anderson et al. (2001) exclusively as an a priori codebook when creating categories, but we returned to the frameworks frequently to help us differentiate between and classify tutor behaviors. After organizing the behaviors into categories, we tallied the total number of times each code was used within the corpus of tutoring sessions and then totaled the number of times each category was used. The purpose of producing these counts was to create a rough idea of which behaviors and categories were common (Table 2), not to produce detailed quantitative results.
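The tallying step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the procedure the authors actually used: the function name `tally`, the lookup `CATEGORY_OF`, the code label confirmed correct response, and the example turns are all hypothetical, and the three-entry lookup stands in for the full 83-code list in Appendix A.

```python
from collections import Counter

# Hypothetical code-to-category lookup (assumption); the study's full
# codebook of 83 behaviors is presented in Appendix A.
CATEGORY_OF = {
    "gave next step": "Determined What-to-do",
    "asked student to give next step": "Asked Student to Determine What-to-do",
    "confirmed correct response": "Monitoring",
}

def tally(coded_turns):
    """Return (code_counts, category_counts) for a list of tutor turns.

    Each turn is a list of behavior codes, because a single tutor turn
    may carry more than one code.
    """
    code_counts = Counter()
    for codes in coded_turns:
        code_counts.update(codes)
    # Roll code counts up into category counts.
    category_counts = Counter()
    for code, n in code_counts.items():
        category_counts[CATEGORY_OF[code]] += n
    return code_counts, category_counts

# Made-up example: three tutor turns, the second carrying two codes.
example_turns = [
    ["gave next step"],
    ["confirmed correct response", "gave next step"],
    ["asked student to give next step"],
]
code_counts, category_counts = tally(example_turns)
```

Counting per code first and then rolling up to categories mirrors the order described in the text, and it keeps turns with multiple codes from being undercounted.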

Results

We first present categories requiring Factual, Procedural, or Conceptual knowledge and then discuss categories involving Metacognitive knowledge. We visually present our framework in Fig. 2. The figure shows the four knowledge types from Anderson et al. (2001) in the second row and the six cognitive processes in the leftmost column. The knowledge types Factual, Procedural, and Conceptual align with the cognitive processes of Remember, Apply, Understand, Analyze, Evaluate, and Create of Anderson et al. In drawing primarily on White and Mesa’s (2014) matrix (Fig. 1), we considered their codes, which were embedded within Anderson et al.’s. As we will describe in detail later, our codes Supplied Facts, Applied a Procedure, Determined What-to-do, and Drew upon Understanding, falling under Factual, Procedural, and Conceptual knowledge, are similar to codes from White and Mesa. On the right side of the table, Metacognitive knowledge, from Anderson et al.’s framework, is pulled out of the table to show our categories’ alignment with Anderson et al.’s three types of metacognitive knowledge. Our additional layers of Strategic Metacognitive knowledge draw upon Schoenfeld’s (1979, 1985) problem-solving framework and Pintrich’s (2000) phases of self-regulated learning. Our framework (Fig. 2) can be viewed from the perspective of the “tutor does something” or the perspective of “tutor asks student to do something.” Throughout our results, we describe each category presented in Table 1 and provide examples of behaviors, including excerpts from the transcripts to illustrate the category being described.

(adapted from White & Mesa, 2014)

Fig. 2 Framework for categorization of tutor behaviors.

Factual, Procedural, and Conceptual Knowledge

Table 1 shows the five categories that fell under Factual, Procedural, and Conceptual knowledge. For most categories, behaviors captured either the tutor asking the student to do something or the tutor doing something. Table 2 shows the counts of the behaviors for each category broken down by this distinction.

Supplied Facts

The key features of Supplied Facts are retrieval of information stored in long-term memory and knowing isolated pieces of information (Anderson et al., 2001). This is similar to White and Mesa’s (2014) Remembering. Supplying facts often meant providing a definition or theorem. For example, a tutor said, “So the amplitude is going to be the distance between the midline and the maximum or minimum point.” This is the definition of amplitude as memorized by the tutor and does not involve application or interpretation. We also saw tutors either introducing or explaining mathematical conventions, like notation. Tutors also asked students to supply facts. In our data, this took the form of asking for a memorized definition or formula. For example, when a tutor said, “\(f\left(x\right)={e}^{x}\)…What’s its derivative?” we categorized this as Asked Student to Supply Facts because it is a derivative that students memorize.

Applied a Procedure

The category of Applied a Procedure was used when the tutor used or asked students to use algorithms without explicit connections to concepts, with the goal of applying steps in a certain order to produce correct answers, similar to White and Mesa’s (2014) Recall and Apply a Procedure. Tutors often carried out next step/provided answer, such as “now we can plug these into our formula. So, we know \(g\left(t+h\right)=4\left(t+h\right)+5\) and we know \(g\left(t\right)\) and we’re subtracting off \(g\left(t\right)\) and it’s \(4t+5\) and all over \(h\).” In other cases, tutors asked students to complete procedural work. In fact, having students apply a procedure was one of the most common ways tutors engaged students. It was common for tutors to ask the student to execute the steps of a procedure, such as “what is the integral of \(3x\)?” In other cases, tutors explicitly asked students to perform a specific algebraic or arithmetic calculation or to produce a graph with a computational tool. We distinguish these behaviors from those in the categories that follow in that they do not require the student to make decisions or demonstrate conceptual understanding.
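For readers less familiar with the mathematics, the procedure the tutor narrates in the first excerpt can be written out in full. This is our own worked version, assuming (as the quoted turn implies) that \(g\left(t\right)=4t+5\); the simplification past the substitution step does not appear in the excerpt.

\[
\frac{g\left(t+h\right)-g\left(t\right)}{h}=\frac{\left(4\left(t+h\right)+5\right)-\left(4t+5\right)}{h}=\frac{4h}{h}=4,\qquad h\ne 0.
\]

Each step here is a fixed substitution or simplification with no choice of method involved, which is what places the turn in Applied a Procedure.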

Determined What-to-do

Behaviors classified as Determined What-to-do involved providing the next step in the problem-solving process or completing procedures that could not be memorized and required decisions along the way, similar to White and Mesa’s (2014) Recognize and Apply a Procedure. However, our Determined What-to-do category emphasized the role of decision making and allowed us to separate the decision-making behavior from the behavior of enacting a procedure. We most commonly saw tutors giving the next step. For example, “we know this [pointing to \(\frac{g\left(t+h\right)-g\left(t\right)}{h}\)] looks an awful lot like the slope \({y}_{2}-{y}_{1}\). So now we just need to find \(g\left(t\right)\). So, \(g\left(t\right)\) is pretty simple because we’re plugging \(t\) in for \(t\), right?” Here the tutor gave the student the next step and also connected the procedure to the concept of slope. Tutors also asked students to Determine What-to-do on limited occasions. This could mean asking them to identify an appropriate procedure, give the next step, or provide an alternative method. One tutor asked, “what can I do with my derivative to find [the critical] points?” We classified this behavior as asking the student to Determine What-to-do because the student needed to connect the definition of critical points with the concept of derivative to determine the next step. However, in our data, we saw that tutors far more often Determined What-to-do themselves instead of asking students to Determine What-to-do. We contrast this with the results from the previous categories. Tutors often engaged students in the problem-solving process, but were more likely to do this by asking students to recall information or execute procedures than by asking them to make decisions.

Drew upon Understanding

Our category Drew upon Understanding was similar to White and Mesa’s (2014) Understanding code. Examples of Drew upon Understanding behaviors from our data included mathematized contextual information, supplied example, summarized/rephrased the problem statement, and moved between representations. The most common behavior categorized as Drew upon Understanding was explain how or why procedure works. For example, a tutor explained, “that’s the whole idea of completing the square. We’re turning this term here into something squared. So that’s the importance of why we had to take that negative one out, because if we were to leave the negative one in here, it would mess things up.”

When tutors asked students to Draw upon Understanding, they most commonly asked the student to identify/recognize. For example, a tutor asked a student to identify the function type of \(y=2x+5\), which required the student to extract information from the function to classify it. Other common behaviors in this category involved asking the student to extract information from a graph or to interpret mathematics in context. While the total number of instances of tutors Drawing upon Understanding is comparable to the number of times they prompted it from students, we note that the more frequent specific behaviors differ in an important way. Tutors were more likely to elaborate on responses and provide lengthy explanations, while students were asked pointed questions whose answers were likely to be evaluated by the tutor rather than used to probe student understanding.

Immediately Reduced Cognitive Orientation

The category Immediately Reduced Cognitive Orientation was applied to tutor turns in which the tutor made a move that lowered the cognitive engagement for the student before the student had an opportunity to respond to the initial prompt. The Immediately Reduced Cognitive Orientation category contains behaviors which transformed questions into lower cognitive engagement questions or into questions which could be answered with little to no mathematical knowledge. For example,

Tutor: Not quite, so let’s just look at the denominator, in order to subtract five from \(\frac{1}{t}\), how would we do that? We can’t just go ahead and subtract it. So, we would have to find a common [pause]

Student: Denominator.

Here the tutor initially asked how to perform the procedure but then changed it to a fill-in-the-blank question. The student was required to supply only the name of the procedure. These behaviors were always double-coded with the behavior that addressed the cognitive orientation level of the original question. The most common behavior in this category was asked yes/no, either/or, multiple-choice type question without asking for reasoning. Asking a fill-in-the-blank question with no computation required was also common. In our data, these types of questions were always asked after a prompt with a higher level of cognitive orientation, without an opportunity for the student to respond to the initial question.

Metacognitive Knowledge

While categorizing codes, we drew on Anderson et al.’s (2001) framework for metacognitive knowledge, which is related to self-regulated learning (Pintrich, 2000), as well as Schoenfeld’s (1985) metacognitive work in problem-solving. Most of the Metacognitive knowledge behaviors entail the tutor drawing on Strategic Knowledge to select and use strategies throughout tutoring sessions. However, at times, students were asked to carry out the metacognitive strategies which the tutor had selected. In addition, after monitoring and determining the direction of the solution path, some tutor control behaviors offered the student greater opportunities to be involved in the problem-solving decision making. Control differs from Determine What-to-do as Strategic Knowledge entails generic strategies used for problem-solving. Determining What-to-do can occur alongside the use of Strategic Knowledge, but is drawing on knowledge of specific mathematical content.

Planning

Planning behaviors were aimed at activating prior knowledge to bring relevant knowledge to the forefront of thinking. We did not see these behaviors frequently in our data. Tutors in our study used these behaviors to determine what knowledge would be relevant when working with students. These behaviors also had the potential to help students learn the importance of activating prior knowledge. The most common behavior was asking the student what they’d already done or tried. For example, one tutor, looking at a student’s work, asked, “So what did you start with here?” This type of question allowed tutors to familiarize themselves with the problem and to assess student progress and understanding. Another planning behavior we saw was asking the student what course they were in. This can be essential in drop-in tutoring since tutors are working with students from multiple courses and soon become aware that problems from different courses may have similar surface features and contexts yet still involve very different underlying concepts.

Monitoring

Monitoring behaviors indicated the tutor was evaluating the student’s understanding and/or their problem-solving process, and often conveyed the correctness of student utterances. Many times, the tutors assessed students’ work or claims themselves. Providing confirmation of correct student responses was one of the most common tutoring behaviors we observed. In other cases, the tutor monitored correctness but prompted the student to self-correct. For example, in the following scenario, a tutor asked a non-rhetorical question to point out the student’s error. The student was taking the partial derivative with respect to y of \({e}^{{x}^{2}+y}\), and stated “we keep it the same up top…and then times y”. Rather than directly correcting the student, the tutor replied “well what’s the deriva… [sic] if I just had, if it was just \({e}^{y}\), what’s the derivative of y?” The tutor engaged in monitoring the student’s work; however, the tutor asked the student a question to perturb their thinking, giving the student the opportunity to monitor their own thinking. More often, we saw tutors using check-in behaviors, such as asking “make sense?” or “okay?” While these questions did not require and often did not elicit any verbal response from the student, they did invite the student, albeit minimally, to participate in the monitoring process if they were so inclined.

Control

The category Control was used when the tutor decided to continue on the current learning/problem-solving path or course correct. Since the decision making involved in control behaviors first requires an assessment of current progress or understanding, control behaviors typically follow monitoring behaviors. Typically tutors engaged in Control when they addressed the correctness of student responses with more information beyond right/wrong. A common behavior was directly explained how or why student answer was incorrect. For example, when factoring a trinomial, a student factored out an expression that was not in all three of the terms. The tutor responded, “I can’t pull out an x because it’s not in all three of my terms, right? I have three terms since they’re all separated by a negative or plus sign.” The tutor indicated the student was incorrect and then explained why, making a decision that the student should be given additional information to aid them in learning.

We did not see behaviors where tutors asked students to engage in control strategies. However, some of their behaviors provided direction while still increasing student engagement. Within these behaviors, it was most common for tutors to ask a follow-up question to continue on student path or verbally prompt the student to continue on student’s path. When the tutor asked a follow-up question, it was often to ask the student to carry out the step the student had proposed. For example, when working on finding the partial derivative with respect to x of \({e}^{{x}^{2}+1}\) the student offered “For this one, I’m guessing just look at x as a constant. Right? Or take x and look at everything else as constant.” The tutor then offered confirmation and asked the student to continue, “Exactly. So, what might we get in that case?” In another instance, the prompt was more subtle. A student stated, “Yeah. I don’t know. I thought maybe I could multiply both sides by \(7y-6\) but then that wasn’t really helpful.” The tutor replied, “Okay. So then why don’t we still go ahead and try that.” In both cases the tutor monitored the solution path, made a decision to continue on that path, and then asked the student to provide the subsequent steps.

Heuristics

Schoenfeld (1985) defines heuristics as “general suggestions that help an individual to understand a problem better or to make progress toward its solution” (p. 23). We categorized behaviors as Heuristics when they entailed using a general problem-solving technique. For example, one tutor asked, “We have a function in terms of a, so what are we trying to solve for?” and we coded this as discuss goal or subgoal of task. Schoenfeld (1979) lists “try to establish a subgoal” as a heuristic along with “considering a problem with fewer variables” (p. 178). When tutors asked an easier question, they broke the question they had asked down further into a simpler question. For example, a tutor was helping a student find the amplitude of a periodic function based on the graph. The tutor first asked “so if it starts at zero, then goes to the midline of seven, then doubles that then back to seven, then back to zero, and the amplitude is the distance between the midline and then lowest point, then?” When the student did not answer the original question, the tutor removed some of the difficulty from the question by asking “what’s the distance between zero and seven?” (Note that this differs from Immediately Reducing Cognitive Orientation because the tutor gave the student the opportunity to answer the original question.) Similarly, when the student was unsure of how to proceed with the problem, tutors would draw attention to information from the problem or previous step. For example, when working on the problem, \({\int }_{1}^{\infty }\frac{\ln\left(x\right)}{x}dx\) the tutor commented, “so right off the bat I notice we have a one to infinity.” The tutor highlighted an aspect of the problem statement which narrowed the student’s attention to the limits of the integral, thereby drawing the student’s attention to the integral being improper. We did see one behavior, asked student to find specific information in textbook/notes, in which the tutor selected the heuristic but asked the student to carry it out.

Contextual Metacognition

The key feature of Contextual Metacognition was a reference to “how it’s done” or “what it’s like” in a specific course or with a specific instructor. An example of the behavior, provided knowledge of specific course/instructor is, “so then you get your \(e-1\) equals \({e}^{xy}-\frac{x}{y}\). And you could set that equal to zero, but I doubt that you have to. I bet your professor will take it like that.” Here the tutor gave the student information about the norms for presenting an answer in a given course.

Discussion and Implications

Summary of Findings

In this study we sought to answer the following question: What observable behaviors do undergraduate drop-in mathematics tutors exhibit as they interact with students? We discovered 83 behaviors that we grouped into categories we developed, utilizing the organization of the matrix from Anderson et al. (2001) and the Cognitive Orientation Framework from White and Mesa (2014). To further analyze the Metacognitive behaviors that we found, we utilized Pintrich (2000) and Schoenfeld (1979, 1985). Four of our categories (Supplied Facts, Applied a Procedure, Determined What-to-do, and Drew upon Understanding) included both behaviors where the tutor did the mathematical work and behaviors where the tutor asked the student to do the work. One of the most common ways that tutors engaged students was by asking them to carry out procedures, such as calculations or applying memorized algorithms. However, deciding what to do was largely the role of the tutor. A common sequence in the problem-solving process involved the tutor identifying the next step and then asking the student to execute it. Also common were behaviors where tutors asked students to draw upon understanding, which often took the form of prompting students to identify or recognize, after which tutors would evaluate students’ answers. Elaboration and explanations were primarily provided by the tutors. A fifth category, Immediately Reduced Cognitive Orientation, captured behaviors where tutors reworded a question or prompt without giving students a chance to respond, often leaving them with a yes or no or fill-in-the-blank question that could be answered with little or no mathematical knowledge.

We also identified behaviors that we grouped into five categories requiring Metacognitive Knowledge. Tutors almost always used their own metacognitive knowledge, as opposed to providing opportunities for students to use or develop theirs, though there were limited exceptions. Some behaviors classified as planning, for example, showed the tutors asking the student for specific prior knowledge that the tutor believed needed to be activated. The most common monitoring behaviors were check-in behaviors when the tutor asked “make sense?” or “okay?” Tutors also monitored by evaluating students’ claims or work, and in some cases, tutors prompted students to self-assess. Control behaviors often followed monitoring behaviors, where the tutor either maintained or changed the direction of the session. These included elaborating on student answers, prompting the student to continue on their path, and asking follow-up questions. Tutors also frequently recommended a general problem-solving technique; these behaviors were classified as Heuristics. These behaviors, such as prompting discussion of the goal of a problem or drawing attention to a previous step, positioned tutors as the drivers of the session, but we did see tutors engage students through certain heuristic behaviors, such as asking an easier question or asking them to find information in their resources. Tutors sometimes displayed contextual metacognitive knowledge by sharing their experience of the expectations of a particular instructor or course.

Contributions and Connections to Prior Work

This study identifies and classifies behaviors that are specific to undergraduate mathematics peer tutors in a drop-in tutoring environment, some of which are similar to and others different from behaviors of tutors in prior research that studied different tutoring environments. First, there are behaviors that are inherently necessary in the drop-in context. For example, drop-in tutors often work with students in multiple courses and therefore have to ask questions that orient themselves to the specific course and the problems students bring. Second, we saw a particular group of behaviors that tutors used to immediately reduce the cognitive demand on students, without giving them an opportunity to respond to the first prompt or question; this often took the form of changing an open-ended question or prompt into a yes/no or multiple-choice question. Perhaps the tutors intentionally engaged in these behaviors because they believed it was what would help the student in the moment. Alternatively, they may have been constrained by time or concerned they would make the student uncomfortable if they waited for an answer to the initial question. We cannot say with certainty why this happened so often.

We also have evidence, such as tutors providing detailed explanations, that tutors are concerned not just that students get the correct answer or learn how to execute a procedure correctly, but that they understand how that procedure works and why it is appropriate in a particular situation. Moreover, at times tutors provide opportunities for students to do this type of mathematical work themselves. We contrast this with prior studies that claim that tutors are focused on solving specific problems rather than making connections between problems and concepts (Fox, 1991; Graesser et al., 1995; McArthur et al., 1990). It is important to note that the tutors in this study have received training and we cannot make a claim at this point about how much that training has influenced this tendency.

Asking students to draw upon their understanding was one of the most common ways we saw tutors engage students. Previous research has argued that tutoring is effective because students have opportunities to be actively engaged (Chi et al., 2001; Graesser & Person, 1994; Lepper & Woolverton, 2002). Our results provide a lens through which we can examine whether the tutor or student is doing the mathematical work and what type of work is being done. While we found a higher number of instances of tutors doing the mathematical work, we still saw tutors frequently prompting students to engage in multiple ways (see Table 2). This does not mean students necessarily chose to engage, but the tutors were not predominantly choosing to lecture or solve problems for students without requesting any student input.

Tutor and student interactions exist within a context of a hierarchy; even in situations where tutors and students are peers, they both often perceive a power differential and an expectation that the tutor be the expert and the decision maker (Carino, 2003). Consistent with prior research (Chi et al., 2001; Graesser & Person, 1994), we found undergraduate drop-in mathematics tutors were the drivers of the session and asked the majority of the questions. The tutors often engaged students by asking them to execute specific procedures once the tutors had made decisions about how to proceed with a problem, similar to the findings of McArthur et al. (1990). Tutors also tended to take on the regulatory processes within the learning and problem-solving activities. For example, tutors often began the problem-solving process by rewording a problem statement for the student, a strategy consistent with findings by Cade et al. (2008). They also assumed most of the responsibility for evaluating student answers and work, which is consistent with prior results for novice tutors (Fox, 1991; Graesser et al., 1995; McArthur et al., 1990).

Implications

The behaviors identified in this study can help us design tutor training that moves tutors toward student-centered learning. Our results reveal inclinations of tutors that we can build upon to better serve students in undergraduate mathematics drop-in tutoring, and here we present suggestions for how to address existing behaviors in training. For example, we see a desire of tutors to deepen students’ understanding of mathematical concepts and make connections between those concepts and the procedures necessary for solving specific problems. These behaviors can be highlighted and encouraged by tutor training programs. We also recommend training tutors to convert some of their conceptually oriented explanations into opportunities for students to explain their conceptual understanding.

Increasing student independence is one of several areas for potential growth and a focus for tutor training programs. One way to increase the activeness of students and encourage them to do more of the substantive mathematical work is by reducing cases of Immediately Reducing the Cognitive Orientation. This is an area that may be easily influenced through training. Tutors are already asking good questions; they simply need to allow students the opportunity to answer before immediately rewording their questions.

One way to encourage the student to engage in self-regulatory skills is for the tutor to ask the student to rephrase or break down the problem statement to determine how to proceed. Alternatively, the tutor can explicitly state why and when they might choose a certain heuristic such as rewording the problem statement. With all metacognitive and problem-solving strategies, tutors can be trained to explicitly model their own metacognitive processes or to ask students to select and carry out their own strategies. As with the other types of knowledge, a goal is to engage the student in the “when” and “why” to form deep understanding that could be applied to other problems and contexts.

The monitoring behaviors we discovered likely demonstrate a concern for student learning or a desire for confirmation that their explanations were helpful. We can build on tutor concern for student learning in training, encouraging tutors to ask questions requiring the student to reason about the tutor’s explanation, which would not only aid tutors in gauging the productiveness of their explanation, but could also help the student solidify their understandings by verbalizing them.

We see limited evidence of tutors asking students to monitor their own mathematical work (such as asking a question to point out error), but the specific behaviors we observed did not necessarily help students learn to monitor in the absence of the tutor. Training tutors to ask a question back to the student in response to an incorrect or incomplete student response would allow students to develop the self-assessment skills they need to be successful in mathematics. At the same time, it would allow the tutor to get a clearer picture of what the student does and does not understand.

Limitations and Future Research

This study was completed at a single institution and the sessions were selected by the participants; therefore, our sample of tutoring sessions may not be representative of all undergraduate drop-in mathematics tutoring. In addition, tutors selected sessions that they were comfortable discussing with their supervisor and that were within a 5–7-minute length, so the sessions may not be representative of each tutor’s own tutoring. Tutor behaviors also may have been limited by the type of homework problems students brought to the tutor (see Appendix B), which were often exercises rather than true problems (Schoenfeld, 1985, 1992). The content of the exercises likely impacted the tutors’ questions as well as opportunities to explore possible solution paths. We also note that the tutors transcribed their own sessions, and their transcriptions were used as data for this study.

Data collection using Livescribe pens likely impacted the interaction dynamic. Because tutors had to ask the student for consent before recording and may have stopped recording before they finished working with the student, certain affective behaviors such as initial greetings and session closures may not have been captured in our data. It is also possible that some tutors did more writing themselves because of this recording device. In addition, we chose to use a tutor turn as our unit of analysis, without differentiating between short and long turns or the number of turns that it took to resolve a particular issue. Thus, some longer tutor turns contained multiple codes.

Previous work has noted the importance of considering three perspectives in tutoring: the tutor, the student, and the interaction between the two (Chi et al., 2001). Our study classifies only the tutor’s behaviors. Future studies may compare how certain tutor behaviors impact student performance and how students respond to certain tutor behaviors. Future work can explore the response/follow-up pattern of tutors. Specifically, what do tutors do with unexpected student responses, and how persistent are they with their intended paths? Or one might study, for example, to what extent the tutors’ moves have the desired impact. We are also interested in the degree to which tutors’ patterns of behaviors are malleable and how these might best be influenced. We suspect that tutors progress through a learning trajectory, with some behaviors more innate than others. Experience and training may impact tutor behaviors, which indicates a need for more long-term studies, following tutors over multiple semesters. We speculate that as tutors are trained to use more student-centered approaches, we would see more behaviors such as Asked Student to Determine What-to-do or Asked Student to Draw upon Understanding in their sessions.

We believe the categories themselves may have utility in training. For example, we envision a training exercise in which tutors could view their own and others’ recordings, comment on different behaviors, and consider how they could adapt their behaviors towards student-centered methods. This type of training for in-service teachers has been shown to promote noticing and self-reflection and to result in changes in teacher behavior (van Es & Sherin, 2002, 2008). The categories could also be used to track growth over time by coding a tutor’s transcripts at different points in time.
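As one illustration of how coded transcripts might be used to track growth, the following minimal sketch compares the share of tutor turns carrying each code across two periods. It is not part of the study's analysis. The data, the period labels, and the code label "Explained" are hypothetical; only the two "Asked Student to ..." labels are taken from the categories named above. Each turn is a list of codes because a single tutor turn may carry more than one code.

```python
from collections import Counter

# Hypothetical coded transcripts: one list of codes per tutor turn.
# These turns are made up for illustration and are not study data.
coded_turns = {
    "semester_1": [
        ["Explained"],  # "Explained" is a hypothetical code label
        ["Explained", "Asked Student to Determine What-to-do"],
        ["Explained"],
        ["Explained"],
    ],
    "semester_2": [
        ["Asked Student to Determine What-to-do"],
        ["Explained", "Asked Student to Draw Upon Understanding"],
        ["Asked Student to Determine What-to-do"],
        ["Explained"],
    ],
}


def code_proportions(turns):
    """Return, for each code, the share of turns in which it appears."""
    # set(turn) ensures a code is counted at most once per turn.
    counts = Counter(code for turn in turns for code in set(turn))
    return {code: n / len(turns) for code, n in counts.items()}


def change_between(coded, earlier, later):
    """Return the change in each code's share from one period to another."""
    before = code_proportions(coded[earlier])
    after = code_proportions(coded[later])
    all_codes = set(before) | set(after)
    return {c: after.get(c, 0.0) - before.get(c, 0.0) for c in all_codes}


if __name__ == "__main__":
    change = change_between(coded_turns, "semester_1", "semester_2")
    for code, delta in sorted(change.items()):
        print(f"{code}: {delta:+.2f}")
```

Proportions per turn are used rather than raw counts so that periods with different numbers of recorded turns remain comparable. Because the unit of analysis is the turn regardless of its length, such a comparison would inherit the same limitation noted above.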

Based on the results of this study, we suggest that tutor training emphasize the following: (1) Ask students to identify a solution path as well as the steps needed to carry out the problem. (2) Allow the student to answer higher-order questions without turning them into yes/no or multiple-choice questions. (3) After providing an explanation, ask the student a question that requires the student to reason about the explanation provided rather than asking “right?” (4) Explicitly model their own metacognitive processes and use questions to engage students in selecting and using metacognitive strategies. (5) When a student’s utterance is incomplete or incorrect, ask the student a question to help them make their own mathematical realizations. Making these adjustments to tutoring behaviors through training can increase students’ opportunities to learn in tutoring sessions. Future research could develop training modules to address these issues and evaluate the impact of the training on tutor behaviors.

References

Ader, S., & Carlson, M. (2021). Decentering framework: A characterization of graduate student instructors’ actions to understand and act on student thinking. Mathematical Thinking and Learning, 24(2), 99–122. https://doi.org/10.1080/10986065.2020.1844608

Anderson, L. W., Krathwohl, D. R., Airasian, P. W., Cruikshank, K. A., Mayer, R. E., Pintrich, P. R., & Wittrock, M. C. (2001). Taxonomy for learning, teaching, and assessing: A revision of Bloom’s taxonomy of educational objectives. New York: Longman

Bentz, J. L., & Fuchs, L. S. (1996). Improving peers’ helping behavior to students with learning disabilities during mathematics peer tutoring. Learning Disability Quarterly, 19(4), 202–215

Bloom, B. S., Engelhart, M. D., Furst, E. J., Hill, W. H., & Krathwohl, D. R. (1956). The taxonomy of educational objectives, the classification of educational goals, handbook I: Cognitive domain. New York: David McKay Company, Inc

Bressoud, D., Mesa, V., & Rasmussen, C. (2015). Insights and Recommendations from the MAA National Study of College Calculus. MAA Press

Byerley, C., Campbell, T., & Rickard, B. (2018). Evaluation of impact of Calculus Center on student achievement. In Weinberg, Rasmussen, Rabin, Wawro, & Brown (Eds.), Proceedings of the Twenty-First Annual Conference on Research in Undergraduate Mathematics Education. San Diego, CA

Byerley, C., Moore-Russo, D., James, C., Johns, C., Rickard, B., & Mills, M. (2019). Defining the varied structures of tutoring centers: Laying a foundation for future research. Proceedings of the Twenty-second Annual Conference on Research in Undergraduate Mathematics Education, Oklahoma City, OK

Byerley, C., James, C., Moore-Russo, D., Rickard, B., Mills, M., Heasom, W., & Moritz, D. (2020). Characteristics and evaluation of ten tutoring centers. Proceedings of the Twenty-third Annual Conference on Research in Undergraduate Mathematics Education. Boston, MA

Cade, W. L., Copeland, J. L., Person, N. K., & D’Mello, S. K. (2008). Dialogue modes in expert tutoring. In International conference on intelligent tutoring systems (pp. 470–479). Springer, Berlin, Heidelberg

Carino, P. (2003). Power and authority in peer tutoring. In M. Pemberton, & J. Kinkead (Eds.), The Center Will Hold: Critical Perspectives on Writing Center Scholarship (pp. 96–113). Utah State University Press

Chi, M. T., Siler, S. A., Jeong, H., Yamauchi, T., & Hausmann, R. G. (2001). Learning from human tutoring. Cognitive Science, 25, 471–533

Fox, B. A. (1991). Cognitive and interactional aspects of correction in tutoring. In P. Goodyear (Ed.), Teaching knowledge and intelligent tutoring (pp. 149–172). Norwood, NJ: Ablex

Freeman, S., Eddy, S. L., McDonough, M., Smith, M. K., Okoroafor, N., Jordt, H., & Wenderoth, M. P. (2014). Active learning increases student performance in science, engineering, and mathematics. Proceedings of the National Academy of Sciences, 111(23), 8410–8415

Fuchs, L. S., Fuchs, D., Hamlett, C. L., Phillips, N. B., Karns, K., & Dutka, S. (1997). Enhancing students’ helping behavior during peer-mediated instruction with conceptual mathematical explanations. The Elementary School Journal, 97(3), 223–249

Glaser, B. G., & Strauss, A. L. (1967). The discovery of grounded theory: Strategies for qualitative research. Chicago: Aldine

Graesser, A. C., & Person, N. K. (1994). Question asking during tutoring. American Educational Research Journal, 31(1), 104–137

Graesser, A. C., Person, N. K., & Magliano, J. P. (1995). Collaborative dialogue patterns in naturalistic one-on-one tutoring. Applied Cognitive Psychology, 9, 495–522

Hiebert, J., & Lefevre, P. (1986). Conceptual and procedural knowledge in mathematics: An introductory analysis. In J. Hiebert (Ed.), Conceptual and Procedural Knowledge: The Case of Mathematics (pp. 1–28). Routledge

Hume, G., Michael, J., Rovick, A., & Evens, M. (1996). Hinting as a tactic in one-on-one tutoring. The Journal of the Learning Sciences, 5(1), 23–47

Jacobs, V. R., Lamb, L. L., & Philipp, R. A. (2010). Professional noticing of children’s mathematical thinking. Journal for Research in Mathematics Education, 41(2), 169–202

Johns, C. (2019). Tutor behaviors in undergraduate mathematics drop-in tutoring. [Doctoral dissertation, The Ohio State University]. ProQuest Dissertations Publishing

Johnson, E., & Hansen, K. (2015). Academic and social supports. In D. Bressoud, V. Mesa, & C. Rasmussen (Eds.), Insights and Recommendations from the MAA National Study of College Calculus (pp. 69–82). MAA Press

Lepper, M. R., Drake, M. F., & O’Donnell-Johnson, T. (1997). Scaffolding techniques of expert human tutors. In K. Hogan, & G. M. Pressley (Eds.), Scaffolding student learning: Instructional approaches and issues (pp. 108–144). Brookline Books

Lepper, M. R., & Woolverton, M. (2002). The wisdom of practice: Lessons learned from the study of highly effective tutors. In J. Aronson (Ed.), Improving academic achievement: Impact of psychological factors on education (pp. 135–158). San Diego, CA: Academic Press

McArthur, D., Stasz, C., & Zmuidzinas, M. (1990). Tutoring techniques in algebra. Cognition and Instruction, 7(3), 197–244

Merrill, D. C., Reiser, B. J., Ranney, M., & Trafton, J. G. (1992). Effective tutoring techniques: A comparison of human tutors and intelligent tutoring systems. The Journal of the Learning Sciences, 2(3), 277–305

Mills, M., Johns, C., & Ryals, M. (2019). Peer tutors attending to student mathematical thinking. Proceedings of the 22nd Annual Conference on Research in Undergraduate Mathematics Education, (pp. 250–257). Oklahoma City, OK

Mills, M., Rickard, B., & Guest, B. (2020). Survey of mathematics tutoring centers in the USA. International Journal of Mathematical Education in Science and Technology, 1–21

National Council of Teachers of Mathematics (NCTM). (2014). Principles to Action. Reston, VA: NCTM

Pintrich, P. R. (2000). The role of goal orientation in self-regulated learning. In M. Boekaerts, P. R. Pintrich, & M. Zeidner (Eds.), Handbook of self-regulation (pp. 451–502). Academic Press

Putnam, R. T. (1987). Structuring and adjusting content for students: A study of live and simulated tutoring of addition. American Educational Research Journal, 24(1), 13–48

Rickard, B., & Mills, M. (2018). The effect of attending tutoring on course grades in Calculus I. International Journal of Mathematical Education in Science and Technology, 49(3), 341–354

Rittle-Johnson, B., & Alibali, M. W. (1999). Conceptual and procedural knowledge of mathematics: Does one lead to the other? Journal of Educational Psychology, 91(1), 175–189

Schoenfeld, A. H. (1979). Explicit heuristic training as a variable in problem-solving performance. Journal for Research in Mathematics Education, 10(3), 173

Schoenfeld, A. H. (1985). Mathematical problem solving. Orlando, FL: Academic Press

Schoenfeld, A. H. (1992). Learning to think mathematically: Problem solving, metacognition, and sense making in mathematics. In D. Grouws (Ed.), Handbook for Research on Mathematics Teaching and Learning (pp. 334–370). New York, NY: Macmillan

Schoenfeld, A. H., Gamoran, M., Kessel, C., & Leonard, M. (1992). Toward a comprehensive model of human tutoring in complex subject matter domains. The Journal of Mathematical Behavior, 11(4), 293–319

Strauss, A., & Corbin, J. (1990). Basics of qualitative research. Sage Publications

Tallman, M., & Carlson, M. (2016). A characterization of calculus I final exams in U.S. colleges and universities. International Journal of Research in Undergraduate Mathematics Education, 2, 105–133

Teuscher, D., Moore, K. C., & Carlson, M. P. (2016). Decentering: A construct to analyze and explain teacher actions as they relate to student thinking. Journal of Mathematics Teacher Education, 19, 433–456

Theobald, E. J., Hill, M. J., Tran, E., Agrawal, S., Arroyo, E. N., Behling, S., & Freeman, S. (2020). Active learning narrows achievement gaps for underrepresented students in undergraduate science, technology, engineering, and math. Proceedings of the National Academy of Sciences, 117(12), 6476–6483

Topping, K., Campbell, J., Douglas, W., & Smith, A. (2003). Cross-age peer tutoring in mathematics with seven-and 11-year-olds: influence on mathematical vocabulary, strategic dialogue and self-concept. Educational Research, 45(3), 287–308

Van Es, E. A., & Sherin, M. G. (2002). Learning to notice: Scaffolding new teachers’ interpretations of classroom interactions. Journal of Technology and Teacher Education, 10(4), 571–596

Van Es, E. A., & Sherin, M. G. (2008). Mathematics teachers’ “learning to notice” in the context of a video club. Teaching and Teacher Education, 24, 244–276

VanLehn, K., Siler, S., Murray, C., Yamauchi, T., & Baggett, W. S. (2003). Why do only some events cause learning during human tutoring? Cognition and Instruction, 21(3), 209–249

White, N., & Mesa, V. (2014). Describing cognitive orientation of Calculus I tasks across different types of coursework. ZDM, 46(4), 675–690

Zimmerman, B. J. (1998). Developing self-fulfilling cycles of academic regulation: An analysis of exemplary instructional models. In D. H. Schunk, & B. J. Zimmerman (Eds.), Self-regulated learning: From teaching to self-reflective practice (pp. 1–19). New York, NY: The Guilford Press

Ethics declarations

Conflict of Interest Statement:

The authors certify that they have NO affiliations with or involvement in any organization or entity with any financial interest or non-financial interest in the subject matter or materials discussed in this manuscript.

About this article

Cite this article

Johns, C., Mills, M. & Ryals, M. An Analysis of the Observable Behaviors of Undergraduate drop-in Mathematics Tutors. Int. J. Res. Undergrad. Math. Ed. 9, 350–374 (2023). https://doi.org/10.1007/s40753-022-00197-6

DOI: https://doi.org/10.1007/s40753-022-00197-6