Abstract

This paper is concerned with the estimation problem using maximum likelihood method of estimation for the unknown parameters of exponetiated gumbel distribution based on neoteric ranked set sampling (NRSS) as a new modification of the usual ranked set sampling (RSS) technique. Numerical study is conducted to compare NRSS as a dependent ranked set sampling technique, with RSS, and median ranked set sampling as independent sampling techniques, and then the performance of RSS and its modifications will be compared with simple random sampling based on their mean square errors and efficiencies.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In 1952, McIntyre introduced a new sampling technique which he called RSS. Takahasi and Wakimoto [18] derived a very essential statistical basis for the theory of RSS introduced by McIntyre [9]. Dell and Clutter [4] pointed out the role of ranking errors in RSS which cause loss in efficiency for estimating the population mean. Stokes and Sager [17] used RSS to estimate distribution functions, they showed that the empirical distribution function of a RSS is an unbiased estimator of the distribution function and has a smaller variance than that from a simple random sampling (SRS). For some real applications of RSS, See Patil [12], Yu and Lam [20], Al-Saleh and Al-Shrafat [3], Al-Saleh and Al-Hadrami [1], Al-Saleh and Al-Omari [2], Husby et al. [6], Wang et al. [19], Samawi [15] and references therein.

Muttlak [10] proposed median ranked set sampling (MRSS) as a modification for the RSS technique, he demonstrated that MRSS has the potential to reduce errors in ranking and gives efficient estimate than RSS estimate in case of symmetric distribution. Sinha and Purkayastha [16] used the median ranked set sampling to modify the RSS estimators of the population mean if the underling distribution known to be normal or exponential.

RSS method as suggested by McIntyre [9] may be modified to come up with new sampling methods that can be made more efficient than the usual RSS method. A recently developed extension of RSS, Zamanzade and Al-Omari [21] was proposed a new neoteric ranked set sampling (NRSS).They proved that the NRSS estimators perform much better than their counterparts using RSS and SRS techniques whatever the ranking perfect or imperfect. Under this setup, Sabry and Shaaban [13] derived the likelihood function for NRSS and double neoteric ranked set sampling (DNRSS), they compared maximum likelihood estimators (MLEs) based on RSS, NRSS, and DNRSS schemes with the MLEs based on the SRS technique for inverse Weibull distribution.

Exponetiated gumbel (EG) distribution was introduced by Nadarajah [11] in the same way Gupta et al. [5] generalized the standard exponential distribution. Applications of gumbel distribution in various areas including accelerated life testing, earthquakes, floods, horse racing, rainfall, sea currents, wind speeds and track race records can be seen in Kotz and Nadarajah [8]. The probability density function (PDF) and, the cumulative distribution function (CDF) of the EG distribution are, respectively, given by

and

where \(\alpha > 0 , - \infty < x < \infty ,\lambda > 0\), α and λ are the shape and scale parameters, respectively.

In this paper, an attempt has been made to compare the performance of NRSS, MRSS, and RSS schemes using maximum likelihood (ML) method of estimation with the usual SRS technique for EG distribution parameters. The remaining part of this paper is organized as follows: Sect. 2 introduced some sampling techniques. In Sect. 3, MLHs are derived for the shape and scale parameters of EG distribution using different sampling schemes. Section 4 is devoted to extensive numerical study to compare the performance of NRSS, MRSS with unknown estimators based on RSS and SRS techniques. Conclusions are derived in Sect. 5.

2 Some Ranked Set Sampling Techniques

In this section, various sampling procedures for selection of units in the sample will be considered; brief descriptions of RSS, MRSS and NRSS schemes will be introduced.

2.1 Ranked Set Sampling

In (1952) McIntyre introduced RSS technique as a useful procedure, when quantification of all sampling units is costly but a small set of units can be easily ranked, according to the characteristics under investigation, without actual quantification. This ordering criterion may be based, for example, on values of a concomitant variable or personal judgment. Several studies have proved the higher efficiency of RSS, relative to SRS, for the estimation of a large number of population parameters. The RSS scheme can be described as follows:

-

Step 1 Randomly select m2 sample units from the population.

-

Step 2 Allocate the m2 selected units as randomly as possible into m sets, each of size m.

-

Step 3 Choose a sample for actual quantification by including the smallest ranked unit in the first set, the second smallest ranked unit in the second set, the process is continues in this way until the largest ranked unit is selected from the last set.

-

Step 4 Repeat steps 1 through 4 for r cycles to obtain a sample of size \(mr\) (Fig. 1).

Fig. 1 RSS design [14]

Let \(\{ X_{{\left( {ii} \right)s}} ,\,i = 1,2, \ldots ,m;\,\,\, j = 1,2, \ldots ,r\}\) be a ranked set sample where \(m\) is the set size and \(r\) is the number of cycles. Then the probability density function (PDF) of \(X_{{\left( {ii} \right)j}}\) is given by

where \(C_{1 } = \frac{m!}{{\left( {i - 1} \right)!\left( {m - 1} \right)!}}\) using Eq. (3) the likelihood function corresponding to RSS scheme is given by:

2.2 Median Ranked Set Sampling

Muttlak [10] proposed median ranked set sampling (MRSS) as a modification of the RSS to reduce loss of efficiency in RSS due to errors in ranking and an improvement upon the efficiency of the estimator of the population mean. MRSS procedure can be summarized as follows:

-

Step 1 Select \(m^{2}\) random samples of size \(m\) units from the target population.

-

Step 2 Rank the units within each sample with respect to a variable of interest.

-

Step 3 If the sample size \(m\) is odd, from each sample select for measurement the \(\left( {\frac{m + 1}{2}} \right)\)th smallest ranked unit, i.e., the median of the sample (see Fig. 2).

Fig. 2 MRSS design in case of odd sample size [14]

From Fig. 2, let the measured MRSS units in case of odd set size is \(\left\{ {x_{{\left( {1 \,g} \right)j}} ,x_{{\left( {2 \, g} \right)j}} , \ldots ,x_{{\left( {g\,g} \right)j}} , \ldots ,x_{{\left( {m \,g} \right)j}} } \right\}\) where \(g = \left( {m + 1} \right)/2.\) The PDF of \(\left( g \right)\)th order statistics can be obtained as follows:

Then, using Eq. (5) the likelihood function corresponding to MRSS scheme for odd set sizes and with \(r\) cycles is given as follows:

-

Step 4 If the sample size \(m\) is even, select for the measurement from the first \(\frac{m}{2}\) samples the \(\left( {\frac{m}{2}} \right)\)th smallest ranked unit and from the second \(\frac{m}{2}\) samples the \(\left( {\frac{m}{2} + 1} \right)\)th smallest ranked unit (see Fig. 3).

MRSS design in case of even sample size [14]

-

Step 5 The cycle can be repeated \(r\) times if needed to get a sample of size \(n = mr\) units from MRSS data.

From Fig. 3, let the measured MRSS units in case of even set size will be as follows: \(x_{{\left( {1 \,u} \right)j}} ,x_{{\left( {2\, u} \right)j}} , \ldots ,x_{{\left( {u \,u} \right)j}} ,x_{{\left( {u + 1\,\, u} \right)j}} , \ldots ,x_{{\left( {n \,u} \right)j}} ,\) then the PDFs of \(\left( u \right)\)th and \(\left( {u + 1} \right)\)th order statistics when \(m\). is even are given as follows:

and

where \(u = m/2\)., and \(C_{2} = \frac{m!}{{\left( {u - 1} \right)!\left( u \right)!}}\), using Eqs. (7) and (8), the likelihood function corresponding to MRSS scheme for even set sizes and with \(r\). cycles given as follows:

2.3 Neoteric Ranked Set Sampling

Zamanzade and Al-Omari [21] have defined a NRSS. NRSS technique differs from the original RSS scheme by the composition of a single set of m2 units, instead of m sets of size m. this strategy has been shown to be effective, producing more efficient estimators for the population mean and variance.

The following steps describe the NRSS sampling design:

-

Step 1 Select a simple random sample of size \(m^{2}\) units from the target finite population.

-

Step 2 Ranked the \(m^{2}\) selected units in an increasing magnitude based on a visual inspection or any other cost free method with respect to a variable of interest.

-

Step 3 If m is an odd, then select the \(\left[ { g + \left( {i - 1} \right)m} \right]\)th ranked unit. If m is an even, then select the \(\left[ {u + \left( {i - 1} \right)m} \right]\)th ranked unit, if i is an even and \(\left[ {\left( {u + 1} \right) + \left( {i - 1} \right)m} \right]\)th if \(i\) is an odd where \(\left( {u = \frac{m}{2},g = \frac{m + 1}{2},\,and\, i = 1, 2, . . . , m} \right)\).

-

Step 4 Repeat steps 1 through 3 r cycles if needed to obtain a NRSS osize \(n = rm\)

The NRSS scheme can be described as follows (Fig. 4):

NRSS design in case of odd sample size [13]

Using NRSS method, we have to choose the units with the rank 2, 5, 8 for actual quantification, then the measured NRSS units are \(\left\{ {\boxed{X_{\left( 2 \right)1} }, \boxed{X_{\left( 5 \right)1} }, \boxed{X_{\left( 8 \right)1} }} \right\}\) for one cycle.

Let \(\{ X_{\left( i \right)j} ,i = 1,2, \ldots ,m;j = 1,2, \ldots ,r\}\) be a neoteric ranked set sample where \(m\) is the set size and \(r\) is the number of cycles. Then the likelihood function corresponding to NRSS scheme that proposed by Sabry and Shaaban [13], is given by

where \(m\) is the set size, \(r\) is the number of cycles, \(w = m^{2} !\), and \(k\left( i \right)\) is chosen as

where \(k\left( 0 \right) = 0,k\left( {m + 1} \right) = w + 1\) and \(x_{{\left( {k\left( 0 \right)} \right)}} = - \infty , x_{{\left( {k\left( {m + 1} \right)} \right)}} = \infty .\)

3 Estimation of the Exponentiated Gumbel Distribution Pameters

In this Section MLEs for the unknown parameters of EG distribution based on SRS and RSS will be reviewed, moreover we will derive MLHs for EG distribution based on MRSS and NRSS.

3.1 Estimation Based on SRS

Jabbari and Ravandeh [7] introduced MLHs for EG distribution parameters based on SRS. In this Subsection, MLHs based on SRS will be reviewed. Let \(\varvec{x}_{1} ,\varvec{ x}_{2} , \ldots ,\varvec{ x}_{\varvec{n}}\) be a random sample of size \(\varvec{n}\) from \(\varvec{EG}\left( {\alpha , \lambda } \right)\), then the likelihood function can be written as follows

The first derivatives of the log-likelihood function denoted by \(l_{SRS}\) with respect to \(\lambda\) and \(\alpha\) respectively are as follows

and

From Eq. (13), Jabbari and Ravandeh [7] showed that MLE of \(\alpha\) say \(\hat{\alpha }\left( \lambda \right)\) can be obtained as follows

by substituting \(\hat{\alpha }\left( \lambda \right)\) in Eq. (11), the profile log-likelihood can be obtained of \(\lambda\) as follows

Therefore the MLEs of \(\lambda ,\) say \(\hat{\lambda }_{MLE}\), can be obtained by maximizing Eq. (15) with respect to \(\lambda\). It can be shown that the maximum likelihood of Eq. (15) can be obtained as a fixed point solution

3.2 Estimation Based on RSS

In this subsection, MLHs of EG distribution obtained by Jabbari and Ravandeh [7] will be considered, Suppose \(\left\{ {X_{{\left( {11} \right)j}} ,X_{{\left( {22} \right)j, \ldots ,X_{{\left( {mm} \right)j}} }} ;j = 1,2, \ldots ,r} \right\}\). denotes the ranked set sample of size \(n = mr\) from EG \(\left( {\alpha ,\lambda } \right),\) where m is the set size and \(r\) is the number of cycles. By substituting Eqs. (1) and (2) into Eq. (4), then the likelihood function based on RSS data is given by:

The log likelihood function denoted by \(l_{RSS}\) can be derived directly as follows

and the first derivatives of \(l_{RSS}\) with respect to \(\lambda \, and\, \alpha\) respectively are given by

and

It is clear, it is not easy to obtain a closed form of the non linear Eqs. (16) and (17), so an iterative technique can be used to obtain MLEs of \(\lambda\) and \(\alpha\).

3.3 Estimation Based on MRSS

In the following subsection MLEs based on MRSS for unknown parameters of EG distribution will be obtained. Let \(\{ X_{{\left( {1g} \right)j}} ,X_{{\left( {2g} \right)j, \ldots ,X_{{\left( {mg} \right)j}} }} ;\,\,\, j = 1,2, \ldots ,r, g = \frac{m + 1}{2}\}\) is a MRSS in case of odd set size from EG \(\left( {\alpha ,\lambda } \right)\) with sample size \(n = mr\), where \(m\) is the set size, \(r\) is the number of cycles. By substituting Eqs. (1) and (2) into Eq. (6), then the likelihood function of the MRSS in case of odd set size is given by:

The log likelihood function denoted by \(l_{OMRSS}\) is given as:

The first derivatives of \(l_{MRSS}\) with respect to \(\lambda\) and \(\alpha\) respectively, are given by

and

MLEs of \(\lambda\) and \(\alpha\) in case of odd set size cannot be obtained in a closed form, so an iterative technique will be used to solve Eqs. (18) and (19) numerically.

To obtain MLEs in case of even set size based on MRSS scheme, maximum likelihood function can be obtained by substituting Eqs. (1) and (2) into Eq. (9), as follows:

The log likelihood function denoted by \(l_{EMRSS}\) is given as:

The first derivatives of \(l_{EMRSS}\) with respect to \(\lambda\) and \(\alpha\) respectively, are given by

and

MLEs of \(\lambda\) and \(\alpha\) in case of even set size cannot be obtained in a closed form, so an iterative technique will be used to solve Eqs. (20) and (21) numerically.

3.4 Estimation Based on NRSS

In this subsection, we will derive MLEs for EG (\(\lambda ,\alpha )\) based on NRSS technique by substituting Eqs. (1) and (2) in Eq. (10). Let \(\left\{ {X_{\left( i \right)j} ,i = 1,2, \ldots ,m;j = 1,2, \ldots ,r} \right\}\) be a neoteric ranked set sample where \(m\) is the set size and \(r\) is the number of cycles, then the likelihood function corresponding to NRSS scheme is given by

where \(h = \frac{w!}{{\mathop \prod \nolimits_{i = 1}^{m + 1} \left( {k\left( i \right) - k\left( {i - 1} \right) - 1} \right)! }}, w = m^{2} .\)

The associated log-likelihood function denoted by \(l_{NRSS}\) is as follows

and the first derivatives of the \(l_{NRSS}\) are given by

MLHs of EG distribution parameters based on NRSS can be obtained by solving Eqs. (22) and (23) using iterative technique.

`

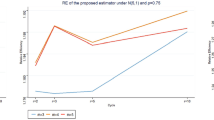

4 Simulation Study

In this section, a simulation study is conducted to compare the maximum likelihood estimators of the shape and scale parameters of EG distribution based on different sampling schemes. The simulation is applied for 10,000 replications and different sample sizes, \(m = \left\{ {3,5,9} \right\}\). The simulation is made for different parameters values \({\text{EG}}\left( {\lambda ,\alpha } \right) = \left\{ {{\text{EG}}\left( {0.25,0.5} \right),{\text{EG}}\left( {0.5,1.5} \right),{\text{EG}}\left( {2,1} \right)} \right\}\). Comparison between the proposed estimators for \(\lambda and \alpha\) using SRS, RSS, MRSS, and NRSS are carried out using mean square errors (MSEs) and efficiencies criteria. The efficiency between all estimators with respect to the MLE based on SRS are calculated. The efficiency of the estimator is defined as

if \(eff\left( {\hat{\theta }_{1} ,\hat{\theta }_{2} } \right) > 1\), then \(\hat{\theta }_{2}\) is better than \(\hat{\theta }_{1} .\)

The results of Biases and MSEs for the different estimators are listed in Tables 1 and 2, and the results of the efficiencies are reported in Table 3, Figs. 5, 6, 7, 8 and 9 are represented to clarify the simulation results. The following conclusions can be observed From Tables 1 and 2:

-

1.

In almost all cases, the biases are small.

-

2.

In all cases, MSEs of the estimators for \(\left( {\lambda ,\alpha } \right)\) based on SRS data are greater than MSEs of the estimators based on RSS, MRSS, and NRSS data (see Fig. 5).

-

3.

In almost all cases, MSEs of all estimators based on SRS, RSS, MRSS, and NRSS decrease as the set sizes increase (see Fig. 6).

-

4.

In almost all cases, MSEs of all estimators based on SRS, RSS, MRSS, and NRSS increase as the value of λ increases.

-

5.

In almost all cases, MSEs of all estimators based on SRS, RSS, MRSS, and NRSS decrease as the value of α increases.

-

6.

MSEs of the estimators for \(\left( \lambda \right)\) based on NRSS have the smallest MSEs in all cases comparing with the other estimators and MSEs of the estimators for \(\left( \alpha \right)\) based on NRSS have the smallest MSEs in all cases comparing with the other estimators except in the case of EG \(\left( {2,1} \right)\) when \(\left\{ {{\text{m}} = 3 , 5} \right\}\), (see Fig. 7).

-

7.

In almost all cases, MSEs of the estimators for \(\left( {\lambda and \propto } \right)\) based on MRSS are smaller than MSEs of the estimators based on RSS (see Fig. 7).

From Table 3, it can be observed that:

-

8.

In almost all cases, efficiencies of all estimators based on RSS, MRSS, and NRSS increase as the set size increase (see Fig. 8).

-

9.

Efficiencies of the estimators for \(\lambda\) based on NRSS have the largest efficiencies in all cases, except when \(\lambda = 2 and m = 3.\) (see Fig. 8).

-

10.

Efficiencies of the estimators for \(\propto\) based on NRSS have the largest efficiencies in all cases except in the case of EG (2,1) when \(\left\{ {{\text{m}} = 3 , 5} \right\}\) (see Fig. 9).

-

11.

In almost all cases, efficiencies of the estimators for \(\left( {\lambda and \propto } \right)\) on MRSS are greater than the efficiencies based on RSS.

5 Conclusions

On the basis of numerical results, it can be concluded that, MSEs based SRS data has the largest MSEs comparing to RSS and its modifications schemes. It can be noted that, in almost all cases, MSEs decrease as the set sizes increase and the efficiencies increase as the set sizes increase. This study revealed that MRSS is better than RSS. Also, NRSS technique has the superior over the rest of other sampling schemes. In almost all cases, NRSS has the smallest MSEs and largest efficiencies. Generally the estimators based NRSS, MRSS, and RSS based on RSS techniques are more efficient than the estimators based on SRS technique.

References

Al-Saleh MF, Al-Hadrami SA (2003) Parametric estimation for the location parameter for symmetric distributions using moving extremes ranked set samplingwith application to trees data. Environmetrics 14:651–664

Al-Saleh MF, Al-Omari AI (2002) Multistage ranked set sampling. J Stat Plan Inference 102:273–286

Al-Saleh MF, Al-Shrafat K (2001) Estimation of average milk yield using ranked set sampling. Environmetrics 44:367–382

Dell TR, Clutter JL (1972) Ranked set sampling theory with order statistics background. Biometrics 28:545–555

Gupta RC, Gupta PL, Gupta RD (1998) Modeling failure time data by Lehman alternatives. Commun Stat Theory Methods 27:887–904

Husby CE, Stasny EA, Wolfe DA (2005) An application of ranked set sampling for mean and median estimation using USDA crop production data. J Agric Biol Environ Stat 10(3):354–373

Jabbari HK, Ravandeh SM (2016) Comparison of parameter estimation in the exponentiated gumbel distribution based on ranked set sampling and simple random sampling. J Math Stat Sci, 490–497

Kotz S, Nadarajah S (2000) Extreme value distributions: theory and applications. Imperial College Press, London

McIntyre GA (1952) A method for unbiased selective sampling, using ranked sets. Aust J Agric Res 3:385–390

Muttlak HA (1997) Median ranked set sampling. J Appl Stat Sci 6:245–255

Nadarajah S (2006) The exponentiated gumbel distribution with climate application. Environmetrics 17(1):13–23

Patil GP (1995) Editorial: ranked set sampling. Environ Ecol Stat 2:271–285

Sabry MA, Shaaban M (2020) A dependent ranked set sampling designs for estimation of distribution parameters with applications. Ann Data Sci. https://doi.org/10.1007/s40745-020-00247-3

Sabry MA, Muhammed HZ, Nabih A, Shaaban M (2019) Parameter estimation for the power generalized Weibull distribution based on one- and two-stage ranked set sampling designs. J Stat Appl Probab 8(2019):113–128

Samawi HM (2011) Varied set size ranked set sampling with applications to mean and ratio estimation. Int J Model Simul 31:6–13

Sinha BK, Purkayastha S (1996) On some aspects of ranked set sampling for estimation of normal and exponential parameters. Stat Decis 14:223–240

Stokes SL, Sager TW (1988) Characterization of a RSS with application to estimating distribution functions. J Am Stat Assoc 83:374–381

Takahasi K, Wakimoto K (1968) On unbiased estimates of the population mean based on the sample stratified by means of ordering. Ann Inst Stat Math 20:1–31

Wang Y-G, Ye Y, Milton DA (2009) Efficient designs for sampling and sub sampling in fisheries research based on ranked sets. J Mar Sci 66:928–934

Yu PLH, Lam K (1997) Regression estimator in ranked set sampling. Biometrics 53:1070–1080

Zamanzade E, Al-Omari AI (2016) New ranked set sampling for estimating the population mean and variance. J Math Stat 45(6):1891–1905

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Shaaban, M., Yahya, M. Comparison Between Dependent and Independent Ranked Set Sampling Designs for Parametric Estimation with Applications. Ann. Data. Sci. 10, 167–182 (2023). https://doi.org/10.1007/s40745-020-00302-z

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40745-020-00302-z