Abstract

In this paper, we derive the likelihood function of the neoteric ranked set sampling (NRSS) as dependent in sampling method and double neoteric ranked set sampling (DNRSS) designs as combine between independent sampling method in the first stage and dependent sampling method in the second stage and they compared for the estimation of the parameters of the inverse Weibull (IW) distribution. An intensive simulation has been made to compare the one and the two stages designs. The results showed that likelihood estimation based on ranked set sampling (RSS) as independent sampling method, NRSS and DNRSS designs provide more efficient estimators than the usual simple random sampling design. Moreover, the DNRSS is slightly more efficient than the NRSS and RSS designs in the case of estimating the IW distribution parameters.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

McIntyre [1] first introduced the ranked set sampling (RSS) in the estimation of the mean of pasture yields as a method of improving precision of estimates by a method related to two-phase sampling. He proposed a method of sampling to estimate mean pasture yields with greater efficiency than simple random sampling (SRS). RSS is a useful alternative to SRS when measurements for the variable of interest are expensive or difficult to obtain, the method is shown to be at least as efficient as SRS with the same number of quantification. The RSS has wide applications in many scientific problems, especially in environmental and ecological studies where the main focus is on economical and efficient sampling strategies. A recently developed extension of RSS, Zamanzade and Al-Omari [2] was proposed a new neoteric ranked set sampling (NRSS). NRSS differs from the original RSS scheme by the composition of a single set of \(m^{2}\) units, instead of m sets of size m, this strategy has been shown to be effective, producing more efficient estimators for the population mean and variance.

The inverse Weibull (IW) distribution can be readily applied to modeling processes in reliability, ecology, medicine, branching processes and biological studies. The properties and applications of IW distribution in several areas can be seen in the literature Keller et al. [3], Calabria and Pulcini [4], Johnson et al. [5].

A random variable \(X\) has an IW distribution if the probability density function (PDF) is given by

If \(\beta\) = 1, the IW pdf becomes inverse exponential pdf, and when \(\beta\) = 2; the IW PDF is referred to as the inverse Raleigh pdf. The IW cumulative distribution function (CDF) is given by

where \(x > 0\), \(\lambda > 0\), \(\beta > 0\) and \(0 < u < 1\). \(\beta\) and \(\lambda\) are the shape and scale parameters, respectively.

2 Some Ranked Set Sampling

In this section, we give brief descriptions of RSS, NRSS, and double neoteric ranked set sampling (DNRSS) schemes.

Some notation frequently used in this section and in the rest of the paper are given as follows.

2.1 Ranked Set Sampling

RSS is an alternative design of SRS, The RSS design has some advantages according SRS. For example, by this design, efficient estimates can be obtained using less data in the sample.

This ordering criterion may be based, for example, on values of a concomitant variable or personal judgment. Several studies have proved the higher efficiency of RSS, relative to SRS, for the estimation of a large number of population parameters.

The RSS scheme can be described as follows:

Step 1 Randomly select m2 sample units from the population.

Step 2 Allocate the m2 selected units as randomly as possible into m sets, each of size m.

Step 3 Choose a sample for actual quantification by including the smallest ranked unit in the first set, the second smallest ranked unit in the second set, the process is continues in this way until the largest ranked unit is selected from the last set.

Step 4 Repeat steps 1 through 3 for r cycles to obtain a sample of size \(mr\) (Fig. 1).

Fig. 1 RSS design [6]

Let \(\left\{ {X_{\left( i \right)j} ,i = 1,2, \ldots ,m; j = 1,2, \ldots ,r} \right\}\) be a RSS where \(m\) is the set size, \(r\) is the number of cycle, Then the CDF and the PDF of \(X_{{\left( {ii} \right)j}}\) is given by

and

The Likelihood function corresponding to RSS scheme is as follows:

where \(C_{i} = \frac{m!}{{\left( {i - 1} \right)!\left( {m - i} \right)!}}\).

2.2 Noetric Ranked Set Sampling

Zamanzade and Al-Omari [2] have defined a new NRSS. A recently developed extension of RSS. NRSS differs from the original RSS scheme by the composition of a single set of \(m^{2}\) units, instead of m sets of size m. this strategy has been shown to be effective, producing more efficient estimators for the population mean and variance.

In this section, a steps for applying a NRSS scheme will be showed.

The following steps describe the NRSS sampling design:

Step 1 Select a simple random sample of size \(m^{2}\) units from the target finite population.

Step 2 Ranked the \(m^{2}\) selected units in an increasing magnitude based on a visual inspection or any other cost free method with respect to a variable of interest.

Step 3 If m is an odd, then select the \(\left[ { \frac{m + 1 }{2} + \left( {i - 1} \right)m} \right]\) th ranked unit for \(\left( {i = 1, 2, \ldots ,m} \right) .\)

If m is an even, then select the \(\left[ {l + \left( {i - 1} \right)m} \right]\)th ranked unit, where \(\left[ {l = m /2} \right]\) if i is an even and \(\left[ {l = \frac{m + 2}{2} } \right]\) if i is an odd for \(\left( {i = 1, 2, . . . , m} \right)\).

Step 4 Repeat steps 1 through 3 r cycles if needed to obtain a NRSS of size \(n = rm\).

The NRSS scheme can be described as follows: (Fig. 2)

NRSS design in case of odd sample size and one cycle [6]

Where \(m = 3\) and \(r = 1\).

Using NRSS method, we have to choose the units with the rank 2, 5, 8 for actual quantification, then the measured NRSS units are \(\left\{ {\boxed{X_{\left( 2 \right)1} }, \boxed{X_{\left( 5 \right)1} }, \boxed{X_{\left( 8 \right)1} }} \right\}\) for one cycle.

2.3 Double Neoteric Ranked Set Sampling

DNRSS is defined by Taconeli and Cabral [7] which defined to be a two-stage design in which the first stage is defined by as RSS scheme, while the NRSS procedure should be applied in the second stage. To draw a DRSS sample of size n, the following steps must be implemented:

Step 1 Identify m3 elements from the target population and divide them, randomly, into m blocks with m sets of size m.

Step 2 Apply the RSS method to each block to obtain m RSS samples of size n.

Step 3 Employ the NRSS procedure to the m2 elements selected in step 2 to obtain a DNRSS sample of size m. Only these sample units must be measured for the variable of interest.

Step 4 Steps 1–3 can be repeated r times to draw a sample of size mr.

In Fig. 3, we show how to select a sample of size m = 3 and r = 1, then we have to select m3= 27 units as.

3 Maximum Likelihood Function Based on NRSS and DNRSS

In this section, Maximum likelihood function based on NRSS and DNRSS will be derived.

3.1 Maximum Likelihood Function Based on NRSS

In this section, we will define the likelihood function for NRSS scheme using the order statistics theory through Proposition 1 and depend on Lemma 1.

Lemma 1

Let \(X_{1} , X_{2} , \ldots , X_{n}\) be a random sample of size n from a continuous population and \(x_{{r_{1} }} < X_{{r_{1} :n}} \le x_{{r_{1} }} + \delta x_{{r_{1} }} ,x_{{r_{2} }} < X_{{r_{2} :n}} \le x_{{r_{2} }} + \delta x_{{r_{2} }} , \ldots , x_{{r_{k} }} < X_{{r_{k} :n}} \le x_{{r_{k} }} + \delta x_{k}\) denote the corresponding order statistics, Then the joint probability density function (PDF) of \(X_{{r_{i} :n}}\) is given by

where \(r_{0} = 0,r_{k - 1} = n + 1 \;and \;i = 1,2, \ldots ,k.\)

To proof the joint probability density function (PDF) of\(X_{{r_{i} :n}}\)by the multinomial method [8], we could derive the joint PDF of\(X_{{r_{i} :n}}\)for\((1 \le r_{1} < r_{2} < \cdots < r_{k} \le n)\)as

Proposition 1

Depending on the previous lemma, to find the maximum likelihood for NRSS. Let \(\left\{ {X_{{\left( {k\left( i \right)} \right)j}} ,i = 1,2, \ldots ,m; j = 1,2, \ldots ,r} \right\}\) and \(w = m^{2}\) be a NRSS where \(m^{2}\) is the set size, \(r\) is the number of cycles and \(k\left( i \right)\) is chosen as

Then, the joint probability density function (PDF) of \(X_{{\left( {k\left( i \right)} \right)j}}\) is given by

where \(k\left( 0 \right) = 0,k\left( {m + 1} \right) = w + 1\;and\;x_{{\left( {k\left( 0 \right)} \right)}} = - \infty , x_{{\left( {k\left( {i + 1} \right)} \right)}} = \infty .\)

3.2 Maximum Likelihood Function Based on DNRSS

The likelihood function corresponding to DNRSS scheme will be derived in the same DRSS scheme based on the joint of order statistics but two stage.

Proposition 2

Let \(\left\{ {Y_{\left( i \right)j} ,i = 1,2,..,m^{2} ; j = 1,2,..,r} \right\}\) and \(w = m^{2}\) be a neoteric ranked set sample where \(m^{2}\) is the set size and \(r\) is the number of cycles in the second stage, where in the first stage select \(m^{3}\) elements from the target population and divide these elements randomly into \(m\) sets (of size \(m^{2}\) ). Then the PDF of \(X_{{\left( {k\left( i \right)} \right)j}}\) is given by:

4 Estimation of the Inverse Weibull Distribution Parameters

This section is devoted to the MLE for the unknown parameters of IW distribution based on RSS, NRSS and DNRSS designs.

4.1 Estimation Based on SRS

Let \(X_{1} ,X_{2} , \ldots ,X_{n}\) be independent and identically distributed random variables from IW distribution with pdf given in Eq. (1). The likelihood function of \(\lambda\) and \(\beta\) is given by

and the log likelihood function is then derived as

Let

and

4.2 Estimation Based on RSS

According to the Eq. (3) the Likelihood function for set sizes \(m\) and with \(r\) cycles based on RSS is given by

The log likelihood function can be derived directly as follows

and the first derivatives of the \(\ell \left( {\lambda ,\beta } \right)\) are given by

and

These two nonlinear equations can’t be solved analytically and will be solved numerically.

4.3 Estimation Based on NRSS

By substitution in Eq. (4) based on IW distribution the Likelihood function for set sizes \(m\) and with \(r\) cycles based on NRSS is given by

where \(h = \frac{w!}{{ \mathop \prod \nolimits_{i = 1}^{m + 1} \left( {k\left( i \right) - k\left( {i - 1} \right) - 1} \right)! }} .\)

The associated log-likelihood function is as follows

and the first derivatives of the \(\ell \ell \left( {\lambda ,\beta } \right)\) are given by

and

4.4 Estimation Based on DNRSS

By substitution in Eq. (5) based on IW distribution the Likelihood function for set sizes \(m\) and with \(r\) cycles based on DNRSS is given by

The associated log-likelihood function is as follows

and the first derivatives of the \(\ell (\uptheta)\) are given by

and

where \(Q = \log \left[ {\mathop \sum \limits_{t = i}^{m} \left( {\begin{array}{*{20}c} m \\ t \\ \end{array} } \right)\left[ {e^{{ - \lambda x_{\left( i \right)j}^{ - \beta } }} } \right]^{t} \left[ {1 - e^{{ - \lambda x_{\left( i \right)j}^{ - \beta } }} } \right]^{m - t} - \mathop \sum \limits_{t = i - 1}^{m} \left( {\begin{array}{*{20}c} m \\ t \\ \end{array} } \right)\left[ {e^{{ - \lambda x_{\left( i \right)j}^{ - \beta } }} } \right]^{t} \left[ {1 - e^{{ - \lambda x_{\left( i \right)j}^{ - \beta } }} } \right]^{m - t} } \right]\)

5 Simulation Study

Sample units generated by the proposed sampling designs only become order statistics when the ranking process is done without any error (perfect ranking). Because of this, the RSS-based designs will produce sample units that are neither independent nor identically distributed which makes it difficult to analytically derive some of the properties of their respective estimators (see [7]). Therefore, an extensive simulation study was conducted to evaluate the derived MLEs performance and compare their performance with other RSS-based designs estimators’ performance. The Monte Carlo simulation is made for the IW distribution with different parameter values to ensure a wide range of shapes of the IW distribution, namely IW(0.5,0.5), IW(0.5, 1.5), IW(1.5, 1.5) and IW(1, 4). Figure 4 shows the density function for the IW distribution for the initial parameter values used in the simulation. The simulation is made for samples of sizes 3, 4, 5 and 6 and 10,000 replications. Let \(\hat{\theta }_{k}\)·be the kth sample estimator generated by a particular RSS based sampling design \(k = 1, 2, . . ., 10, 000\). The comparison were made using two criteria’s, the relative bias (RB) and mean square errors (MSE), which are calculated as follows:

The relative efficiency (RE) to SRS estimators was calculated for each RSS-based design, by

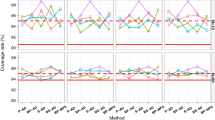

All simulations were performed using routines developed by the authors in the R environment for statistical computing. Simulation results are shown in Tables 1 and 2. Also Fig. 5 shows the performance of the different RSS designs for different parameters.

From figures and tables it can be noticed that:

- 1.

DNRSS presents a slightly better performance than NRSS and RSS.

- 2.

As the sample size increases the relative bias decreases for β for all scheme.

- 3.

As λ and α decreases and the sample sizes increase, the performance of the estimators of λ, and β for different designs become higher.

- 4.

DNRSS design provide more efficient estimator than NRSS and RSS estimator for all the distribution parameters.

- 5.

The relative efficiency from NRSS to the design with best performance more than RSS.

- 6.

The efficiency of both RSS and NRSS for some sample sizes are nearly close but the overall performance of DNRSS is higher than the NRSS design.

- 7.

Regarding the distribution shape, as the distribution becomes almost symmetric the RE is always higher than the RE for the other shapes of the distribution.

6 Conclusions

In this paper, we have derived the likelihood function for the DNRSS design and compare it with the RSS and DNRSS designs. Moreover, the MLE for IW distribution based on SRS, RSS, NRSS and DNRSS has been done. An intensive numerical comparison between the SRS and different RSS deigns is done and showed that the DNRSS is more efficient for all values for the scale parameter and the two shape parameters of the IW distribution. we found that the maximum likelihood estimation based on DNRSS proposed by Taconeli and Cabral [7] provides slightly more efficient estimators than the likelihood estimation based on the NRSS designs proposed by Zamanzade and Al-Omari [2] in case of inverted Weibull distribution.

References

McIntyre GA (1952) A method for unbiased selective sampling, using ranked sets. Aust J Agric Res 3:385–390

Zamanzade E, Al-Omari AI (2016) New ranked set sampling for estimating the population mean and variance. Hacet J Math Stat 45(6):1891–1905

Keller AZ, Giblin MT, Farnworth NR (1985) Reliability analysis of commercial vehicle engines. Reliab Eng 10(15–25):89–102

Calabria R, Pulcini G (1990) On the maximum likelihood and least squares estimation in inverse Weibull distribution. Stat Appl 2:53–66

Johnson NL, Kotz S, Balakrishnan N (1984) Continuous univariate distributions, vol 1, 2nd edn. Wiley, Hoboken

Sabry MA, Muhammed HZ, Nabih A, Shaaban M (2019) Parameter estimation for the power generalized Weibull distribution based on one- and two-stage ranked set sampling designs. J Stat Appl Probab 8(2019):113–128

Taconeli CA, Cabral AS (2018) New two-stage sampling designs based on neoteric ranked set sampling. J Stat Comput Simul 89:232–248

Balakrishnan N, Cohen AC (1991) Order statistics and inference: estimation methods. Academic Press, Boston, MA

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Sabry, M.A., Shaaban, M. Dependent Ranked Set Sampling Designs for Parametric Estimation with Applications. Ann. Data. Sci. 7, 357–371 (2020). https://doi.org/10.1007/s40745-020-00247-3

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40745-020-00247-3