Abstract

In this work, we study the interior transmission eigenvalues for elastic scattering in an inhomogeneous medium containing an obstacle. This problem is related to two applications: reconstructing the support of the inhomogeneity from far-field data without knowledge of the embedded obstacle, and cloaking an obstacle. Our goal is to provide an efficient numerical algorithm that computes as many positive interior transmission eigenvalues as possible. We consider two cases of medium jumps: Case 1, where \(\mathbf{C }_0=\mathbf{C }_1\), \(\rho _0\ne \rho _1\), and Case 2, where \(\mathbf{C }_0\ne \mathbf{C }_1\), \(\rho _0=\rho _1\). Each case is combined with either a Dirichlet or a Neumann boundary condition on the boundary of the embedded obstacle. The finite element method reduces the partial differential equation problem to a generalized eigenvalue problem (GEP) for matrices, which we solve with the Jacobi–Davidson (JD) algorithm. Case 1 requires special attention because the GEP has a large number of zero eigenvalues, and this number grows with the discretization size. To compute the positive eigenvalues effectively, these zero eigenvalues must be deflated to infinity at the beginning of the algorithm.



1 Introduction

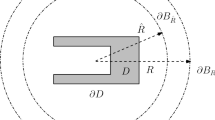

In this paper, we study the interior transmission eigenvalue problem (ITEP) for elastic waves propagating outside of an obstacle. Our aim is to design an efficient numerical algorithm that computes as many transmission eigenvalues as possible for time-harmonic elastic waves. Let D and \(\Omega \) be open bounded domains in \({{\mathbb {R}}}^2\) with smooth boundaries \(\partial D\) and \(\partial \Omega \), respectively, and assume that \(\overline{D}\subset \Omega \). Let \({\mathbf {u}}(x)=[u_1(x),u_2(x)]^{\top }\) denote the two-dimensional displacement vector, and let its infinitesimal strain tensor be given by \(\varepsilon ({\mathbf {u}})=((\nabla {\mathbf {u}})^T+\nabla {\mathbf {u}})/2\). We consider linear elasticity. That is, the stress tensor \(\sigma _{{\mathbf {C}}}({\mathbf {u}})\) is determined by \(\varepsilon ({\mathbf {u}})\) via Hooke's law:

\[
\sigma _{{\mathbf {C}}}({\mathbf {u}}) = \mathbf{C }\,\varepsilon ({\mathbf {u}}), \qquad \big (\sigma _{{\mathbf {C}}}({\mathbf {u}})\big )_{ij} = \sum _{k,l=1}^{2} C_{ijkl}\,\varepsilon _{kl}({\mathbf {u}}),
\]

where \(\mathbf{C }\) is the elasticity tensor. The elasticity tensor \(\mathbf{C }=(C_{ijkl})\), \(1\le i,j,k,l\le 2\), is a fourth-rank tensor satisfying two symmetry properties:

\[
C_{ijkl}=C_{klij} \quad \text{(major symmetry)}, \qquad C_{ijkl}=C_{jikl} \quad \text{(minor symmetry)}.
\]

We require that \(\mathbf{C }\) satisfies the strong convexity condition: there exists a \(\kappa >0\) such that for any symmetric matrix A

\[
\mathbf{C }A : A \ge \kappa |A|^2. \tag{2}
\]

In the following, for any two matrices A, B, we denote \(A:B=\sum _{ij}a_{ij}b_{ij}\) and \(|A|^2=A:A\). The elastic body is called isotropic if

\[
C_{ijkl}=\lambda \,\delta _{ij}\delta _{kl}+\mu \,(\delta _{ik}\delta _{jl}+\delta _{il}\delta _{jk}),
\]

where \(\delta _{ij}\) is the Kronecker delta and \(\mu \) and \(\lambda \) are called Lamé coefficients. In other words, for an isotropic elastic body, the stress–strain relation is given by

\[
\sigma _{{\mathbf {C}}}({\mathbf {u}}) = \lambda \,(\nabla \cdot {\mathbf {u}})\, I + 2\mu \,\varepsilon ({\mathbf {u}}),
\]

where I stands for the identity matrix. A direct computation shows that, in two dimensions, the convexity condition (2) is equivalent to

\[
\mu >0, \qquad \lambda +\mu >0.
\]

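The core of the ITEP is to find \(\omega ^2\in {{\mathbb {C}}}\) such that there exists a nontrivial solution \(({\mathbf {u}},{\mathbf {v}})\in [H^1(\Omega )]^2\times [H^1(\Omega {\setminus }\overline{D})]^2\) of

\[
\begin{aligned}
&\nabla \cdot \sigma _{{{\mathbf {C}}}_0}({\mathbf {u}})+\omega ^2\rho _0{\mathbf {u}}=0 && \text{in } \Omega , && \text{(4a)}\\
&\nabla \cdot \sigma _{{{\mathbf {C}}}_1}({\mathbf {v}})+\omega ^2\rho _1{\mathbf {v}}=0 && \text{in } \Omega {\setminus }\overline{D}, && \text{(4b)}\\
&\mathbf{B }{\mathbf {v}}=0 && \text{on } \partial D, && \text{(4c)}\\
&{\mathbf {u}}={\mathbf {v}} && \text{on } \partial \Omega , && \text{(4d)}\\
&\sigma _{{{\mathbf {C}}}_0}({\mathbf {u}})\nu =\sigma _{{{\mathbf {C}}}_1}({\mathbf {v}})\nu && \text{on } \partial \Omega , && \text{(4e)}
\end{aligned}
\]

where \({{\mathbf {C}}}_0\), \({{\mathbf {C}}}_1\) are elasticity tensors, \(\rho _0, \rho _1\) are density functions, and \(\nu \) is the outer normal of \(\partial \Omega \). Here, we define the boundary operator \(\mathbf{B }\) on \(\partial D\) by

\[
\mathbf{B }{\mathbf {v}}={\mathbf {v}} \ \text{(Dirichlet condition)} \qquad \text{or} \qquad \mathbf{B }{\mathbf {v}}=\sigma _{{{\mathbf {C}}}_1}({\mathbf {v}})\nu \ \text{(Neumann condition)},
\]

where, by abuse of notation, \(\nu \) denotes the unit normal on \(\partial D\) pointing into the interior of D. Recall that \(\sigma _{{{\mathbf {C}}}_1}({\mathbf {v}})\nu \) is the traction acting on \(\partial D\) or \(\partial \Omega \).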

The investigation of the ITEP (4) is motivated by the following practical problem. Assume that \(\rho _0\) is a positive constant, that \(\mathrm{supp}({{\mathbf {C}}}_0-{{\mathbf {C}}}_1)\subset \Omega {\setminus }\overline{D}\), and that \(\mathrm{supp}(\rho _1-\rho _0)\subset \Omega {\setminus }\overline{D}\). We can regard \(({{\mathbf {C}}}_1-{{\mathbf {C}}}_0, \rho _1-\rho _0)\) as a “coated” material near \(\partial D\). We now take any solution \({\hat{{\mathbf {u}}}}\) of

\[
\nabla \cdot \sigma _{{{\mathbf {C}}}_0}({\hat{{\mathbf {u}}}})+\omega ^2\rho _0{\hat{{\mathbf {u}}}}=0 \quad \text{in } {{\mathbb {R}}}^2
\]

as an incident field and consider the scattering of \({\hat{{\mathbf {u}}}}\) by the obstacle D and the inhomogeneous material \(({{\mathbf {C}}}_1,\rho _1)\). In particular, suppose \(\rho _0=1\) and \({{\mathbf {C}}}_0\) is isotropic with constant Lamé coefficients \(\lambda \) and \(\mu \). Then the typical incident fields \({\hat{{\mathbf {u}}}}=:{\hat{{\mathbf {u}}}}_e^{\text{ in }}(x)\), with \(e=p\) or s, are the plane waves

\[
{\hat{{\mathbf {u}}}}_p^{\text{ in }}(x)=\xi \, e^{\mathrm{i}k_p x\cdot \xi }, \qquad {\hat{{\mathbf {u}}}}_s^{\text{ in }}(x)=\xi ^{\perp }e^{\mathrm{i}k_s x\cdot \xi }.
\]

Here \(\xi \in {{\mathbb {S}}}:=\{\xi \in {{\mathbb {R}}}^2: \Vert \xi \Vert _2=1\}\) is the direction of incidence and \(\xi ^{\perp }\) is \(\xi \) rotated by \(\pi /2\). The compressional and shear wave numbers are \(k_p:=\omega /\sqrt{\lambda +2\mu }\) and \(k_s:=\omega /\sqrt{\mu }\), respectively. Let \({\mathbf {v}}\) be the total field satisfying

Then, if \(\omega ^2\) is an interior transmission eigenvalue of (4) and \({\mathbf {u}}|_{\Omega }={\hat{{\mathbf {u}}}}|_{\Omega }\), the obstacle together with the inhomogeneity \(({{\mathbf {C}}}_1-{{\mathbf {C}}}_0, \rho _1-\rho _0)\) would be non-scattering at \(\omega ^2\) for the incident field \({\hat{{\mathbf {u}}}}\). Consequently, if our aim is to detect D and the inhomogeneity \(({{\mathbf {C}}}_1-{{\mathbf {C}}}_0, \rho _1-\rho _0)\) from scattering data, we have to avoid the interior transmission eigenvalues.
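To make the incident fields concrete, the Python sketch below assumes the standard plane waves \(\xi e^{\mathrm{i}k_p x\cdot \xi }\) and \(\xi ^{\perp }e^{\mathrm{i}k_s x\cdot \xi }\). It checks with central finite differences that they satisfy the background equation \(\mu \Delta {\hat{{\mathbf {u}}}}+(\lambda +\mu )\nabla (\nabla \cdot {\hat{{\mathbf {u}}}})+\omega ^2{\hat{{\mathbf {u}}}}=0\). This is the constant-coefficient isotropic form of \(\nabla \cdot \sigma _{{{\mathbf {C}}}_0}({\hat{{\mathbf {u}}}})+\omega ^2\rho _0{\hat{{\mathbf {u}}}}=0\) with \(\rho _0=1\), and the check succeeds only with the matching wave numbers \(k_p\) and \(k_s\). The values \(\lambda =\mu =1\), \(\omega =3\), the incidence angle, the evaluation point, and the step size are arbitrary choices made for illustration.

```python
import numpy as np

# Illustrative (assumed) values: rho_0 = 1, isotropic C_0 with constant Lame coefficients
lam, mu, omega = 1.0, 1.0, 3.0
kp, ks = omega / np.sqrt(lam + 2 * mu), omega / np.sqrt(mu)
xi = np.array([np.cos(0.7), np.sin(0.7)])   # direction of incidence, |xi| = 1
xi_perp = np.array([-xi[1], xi[0]])         # xi rotated by pi/2

def plane_wave(d, k):
    """Return x -> d * exp(i k x.xi), a vector-valued plane wave."""
    return lambda x: d * np.exp(1j * k * (x @ xi))

def navier_residual(u, x, h=1e-3):
    """mu*Lap(u) + (lam+mu)*grad(div u) + omega^2*u at x, via central differences."""
    e = np.eye(2) * h
    def d2(i, j):  # approximates d^2 u / dx_i dx_j for both components of u
        return (u(x + e[i] + e[j]) - u(x + e[i] - e[j])
                - u(x - e[i] + e[j]) + u(x - e[i] - e[j])) / (4 * h * h)
    lap = d2(0, 0) + d2(1, 1)
    grad_div = np.array([d2(0, 0)[0] + d2(0, 1)[1],
                         d2(0, 1)[0] + d2(1, 1)[1]])
    return mu * lap + (lam + mu) * grad_div + omega**2 * u(x)

x0 = np.array([0.3, -0.2])
res_p = np.linalg.norm(navier_residual(plane_wave(xi, kp), x0))          # p-wave
res_s = np.linalg.norm(navier_residual(plane_wave(xi_perp, ks), x0))     # s-wave
res_wrong = np.linalg.norm(navier_residual(plane_wave(xi, ks), x0))      # p-polarization, wrong k
print(res_p, res_s, res_wrong)
```

The first two residuals are at the level of the finite-difference error. The mismatched wave has residual \(|\omega ^2-(\lambda +2\mu )k_s^2| = 18\) for these values.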

On the other hand, a more interesting implication of this investigation is to “cloak” the domain D from the elastic waves with suitable coated materials. Now, we regard the incident field \({\hat{{\mathbf {u}}}}\) as a source of seismic waves, i.e., p-waves or s-waves. If \(\omega ^2\) is an interior transmission eigenvalue of (4), then D with coated material \({{\mathbf {C}}}_1, \rho _1\) will be “invisible” from the seismic waves propagating at frequency \(\omega \).

The study of the ITEP originates from the justification of qualitative approaches to inverse scattering problems in inhomogeneous media, such as the linear sampling method [12] and the factorization method [18]. In recent years, the ITEP has attracted much attention in the study of direct and inverse scattering problems for acoustic and electromagnetic waves in inhomogeneous media [3,4,5,6, 13,14,15, 19, 23]. For the ITEP for elastic waves in the case \(D=\emptyset \), a few theoretical results are available [1, 2, 9,10,11]. Recently, the discreteness and the existence of interior transmission eigenvalues of (4) were proven in [7].

The purpose of this paper is to develop a numerical method to compute the transmission eigenvalues of elastic waves (4). To put this work in perspective, we mention only results related to the ITEP for elastic waves. To the best of our knowledge, two works have studied the computation of the ITEP for elastic waves in the case \(D=\emptyset \), namely [8] and [16].

In [16], a numerical method was presented to compute a few of the smallest positive transmission eigenvalues of (4). There, the ITEP was reformulated as locating the roots of a nonlinear function whose values are generalized eigenvalues of a series of self-adjoint fourth-order problems. The fourth-order eigenvalue problems were discretized by \(H^2\)-conforming finite elements, and a secant-type method was then used to compute the roots of the nonlinear function.

In [8], a numerical method based on the ideas in [21, 22] was proposed to compute many interior transmission eigenvalues for elastic waves. That work considered both the case of different densities and the case of different elasticity tensors. The strategy used in [8, 21, 22] is as follows. One first discretizes (4) by the finite element method (FEM), which transforms the ITEP into a generalized eigenvalue problem (GEP). By some ingenious observations, this GEP can be reduced to a quadratic eigenvalue problem (QEP), and at the same time the unwanted eigenvalues (0 or \(\infty \)) are removed. One then applies a quadratic Jacobi–Davidson (JD) method with nonequivalence deflation to compute the eigenvalues of the resulting QEP. In contrast to [16], the method implemented in [8] is able to locate a large number of positive transmission eigenvalues of (4).

The paper is organized as follows. In Sect. 2, we describe the discretization of the ITEP using the FEM. The discretization reduces the PDE problem to a GEP. We will apply the JD method to locate positive eigenvalues of the GEP. However, the existence of zero eigenvalues of the GEP will hinder our task. Thus, in Sect. 3, we discuss a nonequivalence deflation technique to remove the zero eigenvalues. In Sect. 4, we describe our numerical algorithm based on the JD method in detail. Section 5 contains all numerical simulations and remarks. We conclude the paper with a summary in Sect. 6.

2 Discretization and the GEP

We first review the discretization of the ITEP (4) based on the standard piecewise linear FEM (see [14] for details). Let

where DOF stands for degrees of freedom. Let \(\{\psi _i\}_{i=1}^{n_D}\), \(\{\phi _i\}_{i=1}^{n_I}\), \(\{\theta _i\}_{i=1}^{m_\Sigma }\), and \(\{\xi _i\}_{i=1}^{m_B}\) denote standard nodal bases for the finite element spaces \(S_h^D\), \(S_h^{I}\), \(S_h^\Sigma \), and \(S_h^B\), respectively. We then set

and

Here we take into account the boundary condition \({\mathbf {u}}= {\mathbf {v}}\) on \(\partial \Omega \) and set \({\mathbf {u}}_h^B = {\mathbf {v}}_h^B\). Expressed in the nodal bases, the coefficient vectors of \({\mathbf {u}}\) and \({\mathbf {v}}\) have different dimensions. We discuss the FEM for the Dirichlet and Neumann conditions separately.

2.1 Dirichlet condition: \({{\mathbf {v}}}=0\) on \(\partial D\)

For the zero Dirichlet condition, we take \(v_j^{\Sigma } = 0\). Applying the standard piecewise linear FEM and integrating by parts, we obtain

where \(\lambda =\omega ^2\). Likewise for \({\mathbf {v}}\) but with \(v_j^\Sigma = 0\), we have

and

where \(\nu \) is the unit outer normal of \(\partial \Omega \) and \(D^c=\Omega {\setminus }{\bar{D}}\). Finally, we take into account the boundary conditions (4d) and (4e) and apply the linear FEM to the difference equation between \({\mathbf {u}}\) and \({\mathbf {v}}\) in \(D^c\). Integrating by parts again and using (6)–(8), we derive, for \(i=1,\ldots ,m_B\),

For clarity, we define the stiffness matrices and mass matrices as in Table 1.

Additionally, we set \({\mathbf {u}}^I = \begin{bmatrix} u^I_1, \ldots , u^I_{2n_I} \end{bmatrix}^{\top }\), \({\mathbf {u}}^D = \begin{bmatrix} u^D_1, \ldots , u^D_{2n_D}\end{bmatrix}^{\top }\), \({\mathbf {w}}= \begin{bmatrix} w_1,\ldots ,w_{2m_B} \end{bmatrix}^{\top }\), \({\mathbf {u}}^\Sigma = \begin{bmatrix} u_1^\Sigma ,\ldots ,u_{2m_\Sigma }^\Sigma \end{bmatrix}^{\top }\), and \({\mathbf {v}}^I = \begin{bmatrix} v^I_1, \ldots , v^I_{2n_I}\end{bmatrix}^{\top }\). Then, the discretization gives rise to a generalized eigenvalue problem (GEP)

2.2 Neumann condition: \(\sigma _{{{\mathbf {C}}}_1}({{\mathbf {v}}})\nu =0\) on \(\partial D\)

We now consider the homogeneous Neumann condition. The equations for \({\mathbf {u}}\) remain the same. For \({\mathbf {v}}\), integrating by parts and using the boundary condition \(\sigma _{{{\mathbf {C}}}_1}({\mathbf {v}})\nu =0\) on \(\partial D\), we obtain

which replaces Eq. (7). In addition, we have

Similarly, we take into account the boundary conditions on \(\partial \Omega \), apply the linear FEM to the difference equation between \({\mathbf {u}}\) and \({\mathbf {v}}\), and integrate by parts, which yields Eq. (9). With \({\mathbf {v}}^\Sigma = \begin{bmatrix} v_1^\Sigma ,\ldots ,v_{2m_\Sigma }^\Sigma \end{bmatrix}^{\top }\), writing the system in matrix form gives the following GEP

3 Solving GEPs

In this section, we discuss how to solve the GEPs (10) and (13). For each boundary condition on \(\partial D\), we consider two situations: (i) \({{\mathbf {C}}}_0={{\mathbf {C}}}_1\) and \(\rho _0\ne \rho _1\) (Case 1), and (ii) \({{\mathbf {C}}}_0\ne {{\mathbf {C}}}_1\) and \(\rho _0=\rho _1\) (Case 2). We treat the two cases separately.

3.1 Case 1 with Dirichlet condition

In this case, \(K_0^B=K_1^B\), \(K^I_0=K_1^I\), \(K_0^{IB}=K_1^{IB}\), and the stiffness matrix \({{\mathcal {K}}}\) of (10) becomes

We would like to use the Jacobi–Davidson (JD) method to solve (10). For the method to be effective, it is important to identify as much of the null space of \({{\mathcal {K}}}\) as possible, since this null space corresponds to the (unphysical) zero eigenvalues of (10). To this end, we consider the linear system:

The second equation of (15) reads

By (16) and the first equation of (15), we have

Since, in general, \(n_I\gg m_\Sigma \), it is reasonable to assume that \(K_0^{I\Sigma }\) has full column rank, and thus \({\mathbf {u}}_4=0\). Using the third and fourth equations of (15), we immediately obtain

Combining this and (16) gives

Note that \({{\mathcal {A}}}\) is of size \((2n_I +2m_\Sigma )\times (2n_I+2m_B)\). By the rank-nullity theorem, the nullity of \({{\mathcal {A}}}\) is therefore at least \(2(m_B-m_\Sigma )\). Let \([{\mathbf {u}}_1^\top ,{\mathbf {u}}_5^\top ]\ne 0\) satisfy (17); then

is a null vector of \({{\mathcal {K}}}\), i.e., a solution of (15).
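The counting argument can be illustrated with a dense random matrix of the same shape as \({{\mathcal {A}}}\). This is only a stand-in, because the block matrix in (17) is not reproduced here, and the sizes below are hypothetical. A generic matrix with more columns than rows has nullity exactly \(2(m_B-m_\Sigma )\), and any rank deficiency makes the nullity larger. The last part shows that a tall generic block shaped like \(K_0^{I\Sigma }\) has a trivial null space.

```python
import numpy as np
from scipy.linalg import null_space

rng = np.random.default_rng(0)
# Hypothetical small sizes with n_I >> m_Sigma and m_B > m_Sigma
n_I, m_Sigma, m_B = 40, 6, 10
rows, cols = 2 * n_I + 2 * m_Sigma, 2 * n_I + 2 * m_B

# Generic dense stand-in with the shape of the matrix A in (17), not the FEM matrix
A = rng.standard_normal((rows, cols))
nullity = null_space(A).shape[1]

# Rank deficiency only enlarges the null space: duplicate one row
A_def = A.copy()
A_def[-1] = A_def[0]
nullity_def = null_space(A_def).shape[1]

# A tall block shaped like K_0^{I Sigma} (2n_I x 2m_Sigma) generically has full column rank
K_IS = rng.standard_normal((2 * n_I, 2 * m_Sigma))
print(nullity, nullity_def, 2 * (m_B - m_Sigma), null_space(K_IS).shape[1])
```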

Next, we describe a deflation technique that moves already computed eigenvalues (including the zero eigenvalues) to \(\infty \). Suppose that we have computed

that is, \((X_0,\Lambda _0)\) is an eigenpair of \(({{\mathcal {K}}},{{\mathcal {M}}})\). Let \(Y_0\) be chosen so that \(Y_0^\top {{\mathcal {M}}}X_0=I_r\). We then define

where \(\alpha \not \in \text{ Spec }(\Lambda _0)\). Then we can show that

Theorem 1

where \(\text{ Spec }(\widetilde{{\mathcal {K}}},\widetilde{{\mathcal {M}}})\) denotes the set of eigenvalues of the linear pencil \(\widetilde{{\mathcal {K}}}-\lambda \widetilde{{\mathcal {M}}}\).

Proof

It suffices to compute

Recall that \({{\mathcal {K}}}X_0={{\mathcal {M}}}X_0\Lambda _0\). We obtain \(({{\mathcal {K}}}-\lambda {{\mathcal {M}}})X_0={{\mathcal {M}}}X_0(\Lambda _0-\lambda I_r)\) and thus

Substituting this relation into (19) leads to

where we have used the identity \(\hbox {det}(I_s+AB)=\hbox {det}(I_r+BA)\), where \(A\in {{\mathbb {R}}}^{s\times r}\), \(B\in {{\mathbb {R}}}^{r\times s}\), in the second equality above. The theorem follows easily from (20). \(\square \)
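The deflation can be checked on small dense matrices. The definition of \(\widetilde{{\mathcal {K}}}, \widetilde{{\mathcal {M}}}\) is not displayed above, so the Python sketch below assumes the rank-\(r\) form \(\widetilde{{\mathcal {K}}}={{\mathcal {K}}}-\alpha {{\mathcal {M}}}X_0Y_0^\top {{\mathcal {M}}}\), \(\widetilde{{\mathcal {M}}}={{\mathcal {M}}}-{{\mathcal {M}}}X_0Y_0^\top {{\mathcal {M}}}\). This is our reconstruction, chosen because it is the form for which the vector \({\mathbf {q}}\) in Theorem 2 works out; the authors' definition may be presented differently.

For this form, the determinant identity used in the proof gives \(\det (\widetilde{{\mathcal {K}}}-\lambda \widetilde{{\mathcal {M}}})=\det ({{\mathcal {K}}}-\lambda {{\mathcal {M}}})\prod _{i=1}^r(\lambda _i-\alpha )/(\lambda _i-\lambda )\), where the \(\lambda _i\) are the diagonal entries of \(\Lambda _0\). Hence the \(r\) computed eigenvalues move to \(\infty \) and all others are unchanged. In the sketch, random symmetric \({{\mathcal {K}}}\) and symmetric positive-definite \({{\mathcal {M}}}\) stand in for the FEM matrices. Eigenvalues are requested in homogeneous form, so infinite eigenvalues appear with a vanishing second coordinate.

```python
import numpy as np
from scipy.linalg import eig, eigh

rng = np.random.default_rng(0)
n, r, alpha = 8, 3, -7.0            # alpha: shift chosen outside Spec(Lambda_0)

A = rng.standard_normal((n, n))
K = A + A.T                          # symmetric stand-in for the stiffness matrix
B = rng.standard_normal((n, n))
M = B @ B.T + n * np.eye(n)          # symmetric positive-definite stand-in for the mass matrix

lam, X = eigh(K, M)                  # K X = M X diag(lam), X^T M X = I
X0 = X[:, :r]                        # the r "already computed" eigenvectors, Lambda_0 = diag(lam[:r])
Y0 = X0                              # here Y0^T M X0 = I_r holds automatically

# Assumed rank-r deflation (our reconstruction of the deflated pair)
MX0, YtM = M @ X0, Y0.T @ M
Kt = K - alpha * MX0 @ YtM
Mt = M - MX0 @ YtM

# Homogeneous eigenvalues (num, den): infinite eigenvalues have den ~ 0
num, den = eig(Kt, Mt, right=False, homogeneous_eigvals=True)
finite = np.abs(den) > 1e-10 * np.abs(num)
print("finite eigenvalues:", finite.sum(), "of", n)
print(np.sort((num[finite] / den[finite]).real))
print(lam[r:])                       # the non-deflated eigenvalues of (K, M)
```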

In our numerical algorithm, we first use Theorem 1 to deflate the zero eigenvalues to \(\infty \). We then compute a number of positive eigenvalues (starting from the smallest) of the deflated matrix pair \((\widetilde{{\mathcal {K}}},\widetilde{{\mathcal {M}}})\). Because the zero eigenvalues have been removed, these small positive eigenvalues can be computed quite effectively. In principle, we could continue deflating each newly computed eigenvalue to \(\infty \). We do not do so, because each deflation adds a low-rank but generally dense term and thus destroys the sparsity of \({{\mathcal {K}}}\) and \({{\mathcal {M}}}\). To keep the deflated matrices \(\widetilde{{\mathcal {K}}}, \widetilde{{\mathcal {M}}}\) as sparse as possible, we proceed as follows after computing a batch of positive eigenvalues. We first restore the eigenvalues that were previously deflated to \(\infty \), and then we deflate only the positive eigenvalues that were just computed.
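The loss of sparsity can be seen on a toy example. The sketch assumes the same rank-\(r\) form \(\widetilde{{\mathcal {K}}}={{\mathcal {K}}}-\alpha {{\mathcal {M}}}X_0Y_0^\top {{\mathcal {M}}}\) as our reconstruction above (the authors' definition is not displayed). It uses a 1D finite-difference Laplacian as a sparse stand-in for \({{\mathcal {K}}}\), with \({{\mathcal {M}}}=I\), so that \(X_0\) and \(\Lambda _0\) are known in closed form. Forming \(\widetilde{{\mathcal {K}}}\) explicitly fills essentially all \(n^2\) entries.

The second part shows an alternative that the paper does not claim to use. Krylov-type solvers only need products with \(\widetilde{{\mathcal {K}}}\), and these can be applied as a sparse product plus an \(r\)-term correction without ever forming the dense matrix.

```python
import numpy as np
import scipy.sparse as sp
from scipy.sparse.linalg import LinearOperator

n, r, alpha = 200, 2, -1.0
# Sparse stand-ins: 1D finite-difference Laplacian (K) and identity mass matrix (M)
K = sp.diags([-np.ones(n - 1), 2 * np.ones(n), -np.ones(n - 1)], [-1, 0, 1], format="csr")
M = sp.identity(n, format="csr")

# Known eigenpairs of K (with M = I): orthonormal sine vectors
j, k = np.arange(1, n + 1), np.arange(1, r + 1)
X0 = np.sqrt(2 / (n + 1)) * np.sin(np.outer(j, k) * np.pi / (n + 1))
lam0 = 2 - 2 * np.cos(k * np.pi / (n + 1))
Y0 = X0                                    # Y0^T M X0 = I_r since M = I

MX0 = M @ X0                               # n x r, dense
YtM = (M.T @ Y0).T                         # r x n, dense

# Explicit deflation: sparse K plus a dense rank-r term gives a dense matrix
Kt_dense = K.toarray() - alpha * MX0 @ YtM
print(K.nnz, np.count_nonzero(Kt_dense), n * n)

# Implicit alternative: keep K sparse and apply the rank-r term on the fly
def kt_matvec(x):
    x = np.ravel(x)
    return K @ x - alpha * (MX0 @ (YtM @ x))

Kt_op = LinearOperator((n, n), matvec=kt_matvec, dtype=float)
# Deflated eigenvectors satisfy Kt X0 = M X0 (Lambda_0 - alpha I)
print(np.allclose(Kt_op.matmat(X0), MX0 * (lam0 - alpha)))
```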

3.2 Case 1 with Neumann condition

Here, the stiffness matrix \({{\mathcal {K}}}\) of (13) becomes

We point out that \({\widetilde{K}}_1^{\Sigma }\) here is evaluated with the elasticity tensor \({{\mathbf {C}}}_0\), since \({{\mathbf {C}}}_1={{\mathbf {C}}}_0\). As before, we want to identify as much of the null space of \({{\mathcal {K}}}\) as possible. For this purpose, we consider the linear system

By the first and second equations of (22), we have

Reasoning as above, we can assume that \(K_0^{I\Sigma }\) has full column rank, and hence \({\mathbf {u}}_4-{\mathbf {u}}_6=0\), i.e., \({\mathbf {u}}_4={\mathbf {u}}_6\). It follows from the last equation of (22) that

Substituting (23) into the fourth equation of (22) and combining the result with the third equation gives

The matrix in (24) is symmetric and its diagonal blocks are positive-definite, so even if it is singular, its nullity is most likely small. Therefore, we can take \({\mathbf {u}}_3={\mathbf {u}}_4=0\), and (23) leads to \((K_0^{I\Sigma })^\top {\mathbf {u}}_1=0\). Using the first or the second equation of (22) again, we conclude that

Hence if \([{\mathbf {u}}_1^\top ,{\mathbf {u}}_5^\top ]\ne 0\) satisfies (25), then

is a null vector of \({{\mathcal {K}}}\) in (21). Having found most of the null vectors of \({{\mathcal {K}}}\), we then solve the GEP (13) by the JD method with the deflation technique described above.

3.3 Case 2 with Dirichlet condition

In this case, we have \(M_1^I=M_0^I\), \(M_1^{IB}=M_0^{IB}\), \(M_1^B=M_0^B\) and the mass matrix \({{\mathcal {M}}}\) is reduced to

This reduced mass matrix has exactly the same form as \({{\mathcal {K}}}\) in (11). Arguing as above, we could find the null space of \({{\mathcal {M}}}\), which corresponds to the \(\infty \) eigenvalues of (10). Since we are interested in locating the first 500 positive eigenvalues rather than the \(\infty \) eigenvalues, we do not need to carry out this step in the JD method.

3.4 Case 2 with Neumann condition

Similar to the case above, we have \(M_1^I=M_0^I\), \(M_1^{IB}=M_0^{IB}\), \(M_1^B=M_0^B\), \(M_1^{I\Sigma }=M_0^{I\Sigma }\), and \({\widetilde{M}}_1^\Sigma ={\widetilde{M}}_0^\Sigma \). Thus, the mass matrix becomes

while the stiffness matrix \({{\mathcal {K}}}\) is given by (14). This case can be treated in the same way as in Sect. 3.3.

4 Numerical strategies

We apply the JD method and the deflation technique to solve the GEP \({\mathcal {L}}(\lambda ){\mathbf {z}}:= ({{\mathcal {K}}}-\lambda {{\mathcal {M}}}){\mathbf {z}}=0\). Roughly speaking, we apply the JD method to the deflated pencil \(\widetilde{{\mathcal {L}}}(\lambda ):=\widetilde{{\mathcal {K}}}-\lambda \widetilde{{\mathcal {M}}}\). In Case 1, we first deflate the zero eigenvalues to infinity and then apply the JD method to the deflated system to locate a small group of positive eigenvalues (15–20 of them). In Case 2, we apply the JD method directly to \({{\mathcal {L}}}(\lambda )\) and locate a small group of positive eigenvalues. Before continuing with the JD method, we deflate these eigenvalues to infinity. However, we do not keep deflating every computed eigenvalue to infinity, because that would destroy the sparsity of the system. Instead, before each further deflation, we restore the previously deflated eigenvalues (including the zero eigenvalues in Case 1). In this way, \({{\mathcal {L}}}(\lambda )\) is always perturbed only by matrices of low rank.

To make the paper self-contained, we outline the JD method here (see also [24]). Let \(V_k = [{\mathbf {v}}_1,\ldots ,{\mathbf {v}}_k]\) be a given matrix with orthonormal columns, and let \((\theta _k,{\mathbf {u}}_k)\), \(\theta _k\ne 0\), be a Ritz pair (an approximate eigenpair) of \(\widetilde{{\mathcal {L}}}(\lambda )\). That is, \({\mathbf {u}}_k = V_k{\mathbf {s}}_k\) and \((\theta _k,{\mathbf {s}}_k)\) is an eigenpair of \(V_k^T\widetilde{{\mathcal {L}}}(\lambda )V_k\), namely,

with \(\Vert {\mathbf {s}}_k\Vert =1\). Since \(V_k^T\widetilde{{\mathcal {L}}}(\theta _k)V_k\) is only of size \(k\times k\), (26) can be solved by a standard dense eigenvalue solver.

Starting from the Ritz pair \((\theta _k,{\mathbf {u}}_k)\), we aim to find a correction direction \({\mathbf {t}}\bot {\mathbf {u}}_k\) such that

Let

be the residual vector of \(\widetilde{{\mathcal {L}}}(\lambda )\) corresponding to the Ritz pair \((\theta _k,{\mathbf {u}}_k)\). To solve (27) for \({\mathbf {t}}\), we rewrite and expand (27) as

Using the fact that

we multiply both sides of (29) by \(\left( I - \dfrac{\widetilde{{\mathcal {M}}}{\mathbf {u}}_k{\mathbf {u}}_k^{\top }}{{\mathbf {u}}_k^{\top }\widetilde{{\mathcal {M}}}{\mathbf {u}}_k} \right) \) to eliminate the term containing \((\lambda -\theta _k )\) and obtain

Next, applying the orthogonal projection \((I - {\mathbf {u}}_k{\mathbf {u}}_k^{\top })\) and approximating \(\widetilde{{\mathcal {L}}}(\lambda )\) by \(\widetilde{{\mathcal {L}}}(\theta _k)\), we then have the following correction equation:

For efficiency, the stopping criterion of the JD method checks the residual \({{\mathcal {L}}}(\theta _k){\mathbf {w}}_k\), where \((\theta _k,{\mathbf {w}}_k)\) is the Ritz pair of \({{\mathcal {L}}}(\lambda )\) associated with \((\theta _k,{\mathbf {u}}_k)\). Moreover, the deflation process (see Theorem 1) requires eigenpairs of the original pencil \({{\mathcal {L}}}(\lambda )\). Therefore, the Ritz pair \((\theta _k,{\mathbf {u}}_k)\) of \(\widetilde{{\mathcal {L}}}(\lambda )\) has to be transformed into a Ritz pair \((\theta _k,{\mathbf {w}}_k)\) of \({{\mathcal {L}}}(\lambda )\). Recall that \(\widetilde{{\mathcal {L}}}(\lambda )=\widetilde{{\mathcal {K}}}-\lambda \widetilde{{\mathcal {M}}}\), where

where

\(Y_0\) satisfies \(Y_0^\top {{\mathcal {M}}}X_0=I_r\), and \(\alpha \not \in \text{ Spec }(\Lambda _0)\).

Theorem 2

Let \((\theta ,{\mathbf {u}})\), \(\theta \ne 0\) and \(\theta \not \in \text{ Spec }(\Lambda _0)\cup \{\alpha \}\), be an eigenpair of \(\widetilde{{\mathcal {L}}}(\lambda )\), i.e., \(\widetilde{{\mathcal {L}}}(\theta ){\mathbf {u}}=0\). Then \((\theta ,{\mathbf {w}})\) is an eigenpair of \({{\mathcal {L}}}(\lambda )\), where

and \({\mathbf {q}}=(\alpha -\theta )(\Lambda _0-\theta I_r)^{-1}Y_0^\top {{\mathcal {M}}}{\mathbf {u}}\).

Proof

Assume that \((\theta ,{\mathbf {u}})\) satisfies

Consider \({\mathbf {w}}={\mathbf {u}}-X_0{\mathbf {q}}\), where \({\mathbf {q}}\in {{\mathbb {R}}}^{r\times 1}\) will be determined later. We now write (33) as

In view of (34), \({{\mathcal {K}}}{\mathbf {w}}=\theta {{\mathcal {M}}}{\mathbf {w}}\) if and only if

i.e.,

Multiplying both sides of (35) by \(Y_0^\top \) and using \(Y_0^\top {{\mathcal {M}}}X_0=I_r\), we obtain

\(\square \)
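Theorem 2 can also be checked numerically. The sketch below restates, in the code, the same assumed deflated form as our reconstruction used above. It picks \(Y_0={{\mathcal {M}}}X_0\big (({{\mathcal {M}}}X_0)^\top {{\mathcal {M}}}X_0\big )^{-1}\), which is one admissible choice satisfying \(Y_0^\top {{\mathcal {M}}}X_0=I_r\) but not necessarily the authors' choice. The code takes a finite eigenpair \((\theta ,{\mathbf {u}})\) of the deflated pencil and maps it back with \({\mathbf {w}}={\mathbf {u}}-X_0{\mathbf {q}}\), \({\mathbf {q}}=(\alpha -\theta )(\Lambda _0-\theta I_r)^{-1}Y_0^\top {{\mathcal {M}}}{\mathbf {u}}\). The helper name `recover` is hypothetical.

```python
import numpy as np
from scipy.linalg import eig, eigh

rng = np.random.default_rng(2)
n, r, alpha = 10, 3, -5.0
A = rng.standard_normal((n, n)); K = A + A.T                  # stand-in stiffness matrix
B = rng.standard_normal((n, n)); M = B @ B.T + n * np.eye(n)  # stand-in SPD mass matrix

lam, X = eigh(K, M)
X0 = X[:, :r] * rng.uniform(0.5, 2.0, r)    # computed eigenvectors, arbitrary scaling
Lam0 = np.diag(lam[:r])
MX0 = M @ X0
Y0 = MX0 @ np.linalg.inv(MX0.T @ MX0)       # one choice with Y0^T M X0 = I_r

# Assumed rank-r deflated pencil (our reconstruction)
Kt = K - alpha * MX0 @ (Y0.T @ M)
Mt = M - MX0 @ (Y0.T @ M)

def recover(theta, u):
    """Theorem 2: w = u - X0 q with q = (alpha - theta)(Lam0 - theta I)^{-1} Y0^T M u."""
    q = (alpha - theta) * np.linalg.solve(Lam0 - theta * np.eye(r), Y0.T @ (M @ u))
    return u - X0 @ q

(num, den), U = eig(Kt, Mt, homogeneous_eigvals=True)
finite = np.flatnonzero(np.abs(den) > 1e-10 * np.abs(num))
i = finite[np.argmin(np.abs(num[finite] / den[finite] - lam[r]))]  # a non-deflated eigenvalue
theta, u = num[i] / den[i], U[:, i]
w = recover(theta, u)
# Residual of u in the original pencil vs. residual of the recovered w
print(np.linalg.norm(K @ u - theta * (M @ u)), np.linalg.norm(K @ w - theta * (M @ w)))
```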

By Theorem 2, once we have found a Ritz pair \((\theta _k,{\mathbf {u}}_k)\) of \(\widetilde{{\mathcal {L}}}(\lambda )\) in the JD algorithm, we transform \({\mathbf {u}}_k\) into \({\mathbf {w}}_k\) using (32). We then check the stopping criterion to determine whether \((\theta _k,{\mathbf {w}}_k)\) is a good approximation of an eigenpair of \({{\mathcal {L}}}(\lambda )\). We summarize our method in the following two algorithms: Algorithm 1 performs the deflation, and Algorithm 2 describes the JD method (with partial deflation).
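The JD outline above can be sketched, under simplifying assumptions, as the dense Python routine below. It is a generic JD iteration for a pencil \(({{\mathcal {K}}},{{\mathcal {M}}})\), not the authors' Algorithm 2, and the function name `jacobi_davidson` is hypothetical. The sketch makes the following simplifications:

- it uses small dense test matrices;
- it selects the Ritz value nearest a target;
- it solves the correction equation exactly through an equivalent bordered linear system;
- it omits restarts, the transformation of Theorem 2, and deflation.

The correction equation used is the projected form \(\big (I-{{\mathcal {M}}}{\mathbf {u}}{\mathbf {u}}^\top /({\mathbf {u}}^\top {{\mathcal {M}}}{\mathbf {u}})\big ){{\mathcal {L}}}(\theta )(I-{\mathbf {u}}{\mathbf {u}}^\top ){\mathbf {t}}=-{\mathbf {r}}\), \({\mathbf {t}}\perp {\mathbf {u}}\). This is our reading of the derivation above, written with conjugate transposes so that complex arithmetic is handled.

```python
import numpy as np
from scipy.linalg import eig, eigh, solve

def jacobi_davidson(K, M, target, tol=1e-10, max_iter=60, seed=0):
    """Minimal dense JD sketch for K z = lam M z. Each step selects the Ritz value
    closest to `target`; no restarts, no deflation, exact correction solves."""
    n = K.shape[0]
    rng = np.random.default_rng(seed)
    V = np.linalg.qr(rng.standard_normal((n, 1)))[0]  # orthonormal search basis
    for _ in range(max_iter):
        # Rayleigh-Ritz: eigenpairs of the small projected pencil (V^H K V, V^H M V)
        vals, S = eig(V.conj().T @ K @ V, V.conj().T @ M @ V)
        i = np.argmin(np.abs(vals - target))
        theta = vals[i]
        u = V @ S[:, i]
        u = u / np.linalg.norm(u)
        r = K @ u - theta * (M @ u)                   # residual of the Ritz pair
        if np.linalg.norm(r) < tol:
            return theta, u
        # Correction equation (I - M u u^H/(u^H M u)) L(theta) (I - u u^H) t = -r, t ⊥ u,
        # solved exactly via the equivalent bordered system
        #   [L(theta)  M u] [t  ]   [-r]
        #   [u^H       0  ] [eta] = [ 0]
        Mu = M @ u
        border = np.block([[K - theta * M, Mu[:, None]],
                           [u.conj()[None, :], np.zeros((1, 1))]])
        t = solve(border, np.concatenate([-r, [0.0]]))[:n]
        # Expand the basis with the correction, orthogonalized twice (Gram-Schmidt)
        t = t - V @ (V.conj().T @ t)
        t = t - V @ (V.conj().T @ t)
        V = np.hstack([V, (t / np.linalg.norm(t))[:, None]])
    raise RuntimeError("JD did not converge")

# Usage on a random symmetric/SPD test pencil (stand-ins for the FEM matrices)
rng = np.random.default_rng(1)
n = 30
A = rng.standard_normal((n, n)); K = A + A.T
B = rng.standard_normal((n, n)); M = B @ B.T + n * np.eye(n)
theta, u = jacobi_davidson(K, M, target=0.1)
exact = eigh(K, M, eigvals_only=True)
print(theta.real, exact[np.argmin(np.abs(exact - theta.real))])
```

For large sparse pencils, the correction equation is usually solved only approximately, for example by a few steps of a preconditioned Krylov method. The exact dense solve here only keeps the sketch short.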

Before presenting our numerical results in the next section, we validate the GEP derived from the FEM, that is, we check that the eigenvalues of the GEP indeed approximate the interior transmission eigenvalues. To this end, we compare the eigenvalues of the GEP with the theoretical values for radially symmetric transmission eigenfunctions obtained in [7]. For simplicity, we only consider Case 1 with the zero Dirichlet boundary condition. Following [7], \(\Omega \) is a disk of radius 1 and the embedded obstacle D is a disk of radius 0.5. The parameters are set to \(\mu = \lambda = 1\), \(\rho _0 = 1\), and \(\rho _1 = 0.5\). Here, radial symmetry refers to the magnitudes \(|{\mathbf {u}}|^2= u_1^2 + u_2^2\) and \(|{\mathbf {v}}|^2= v_1^2 + v_2^2\). The comparison is given in Table 2. Figures 1 and 2 show that the eigenfunctions computed from the GEP are indeed radially symmetric.

Eigenfunctions \({\mathbf {u}}\) (left subfigure) and \({\mathbf {v}}\) (right subfigure) corresponding to \(\omega = 9.8792\). Here \({\mathbf {u}}\) and \({\mathbf {v}}\) are radially symmetric, i.e., \({\mathbf {u}}=u(r)e_r\) and \({\mathbf {v}}=v(r)e_r\), where \(e_r\) is the unit vector in the radial direction

5 Numerical results

In our numerical simulations, we consider an isotropic elasticity system. We test our method on four different embedded obstacles inside the disk of radius 6 centered at the origin, i.e., \(\Omega =\{(x,y): x^2 + y^2 < 6^2 \}\). The dimensions of the four obstacles are shown in Table 4. Standard triangular meshes with a uniform mesh size of approximately 0.04 are generated for the FEM, and the number of nodes in each part is shown in Table 3. Each node in the plane carries two displacement components, so the dimensions of the stiffness and mass matrices are twice the corresponding numbers in Table 3. Each matrix is approximately of size \(300{,}000 \times 300{,}000\).

The parameters for Case 1 are \(\mu _0 = \lambda _0 = 1\), \(\mu _1 = \lambda _1 = 1\), \(\rho _0 = 5\), and \(\rho _1 = 1\), while those for Case 2 are \(\mu _0 = \lambda _0 = 2\), \(\mu _1 = \lambda _1 = 1\), and \(\rho _0 = \rho _1 = 1\). All computations were carried out in MATLAB R2020a on two servers. One server is equipped with Intel 32-Core Xeon E5-2650 2.60 GHz CPUs and 125.72 GB of memory, and the other with Intel Octa-Core Xeon E5520 2.27 GHz CPUs and 70.79 GB of memory.

The key step in our earlier work [8] is to transform the GEP into a QEP in which the zero eigenvalues are deflated to infinity. Unfortunately, this approach does not carry over to the GEP considered here, so we must solve the GEP directly. To explain why the JD method has difficulty finding positive eigenvalues of the GEP, we show the eigenvalue distribution of a toy model in Figs. 3 (Case 1) and 4 (Case 2), where the matrices are of size \(1850\times 1850\). In both cases, the positive eigenvalues are surrounded by complex eigenvalues, which must also be deflated before the desired eigenvalues can be found. In addition, in Case 1 (Fig. 3), there is a large number of zero eigenvalues, and the larger the matrix, the more zeros there are. Therefore, to compute the positive eigenvalues, we must first deflate the zero eigenvalues to infinity (Algorithm 1) and then apply the JD method to the deflated matrices.

5.1 Distribution of positive eigenvalues

The simulation results for the Dirichlet and the Neumann boundary conditions on \(\partial D\) are described in more detail below. Recall that in Case 1, we choose \(\mu _0 = \lambda _0 = 1\), \(\mu _1 = \lambda _1 = 1\), \(\rho _0 = 5\), \(\rho _1 = 1\), and in Case 2, we take \(\mu _0 = \lambda _0 = 2\), \(\mu _1 = \lambda _1 = 1\), and \(\rho _0 = \rho _1 = 1\). In Fig. 5, we plot the first 50 positive eigenvalues for Case 1 with either the Dirichlet or the Neumann boundary condition on the obstacles. Figure 6 is the plot of the first 50 positive eigenvalues for Case 2 with either the Dirichlet or the Neumann boundary condition. We also compare the first positive eigenvalues for all the scenarios discussed here; see Table 5.

5.2 Interior transmission eigenvalues and near invisibility

As we mentioned in the Introduction, the study of interior transmission eigenvalues is closely related to the development of some reconstruction methods. Interior transmission eigenvalues are also connected to invisibility cloaking. Such connections were studied extensively for acoustic and electromagnetic waves in [17] and [20]. Roughly speaking, one hopes to make the obstacle invisible by applying layers of isotropic media outside of it. More precisely, the goal is that the obstacle does not perturb the field governed by the background equation. Similar results for elastic scattering have yet to be investigated. Here, we present some numerical observations that, we hope, can provide some insight into this problem for future study.

We plot the eigenfunctions corresponding to the first positive eigenvalues for all cases. We first show the results for Case 1. The eigenfunctions \(({\mathbf {u}},{\mathbf {v}})\) corresponding to the first positive eigenvalue for four different obstacles with Dirichlet boundary conditions are given in Fig. 7. Figure 8 shows the corresponding results for the Neumann condition.

The eigenfunctions associated with the first positive eigenvalues for four obstacles with the Dirichlet condition (Case 1). Each subfigure is a \(2\times 2\) group of plots for one obstacle shape. In each group, the upper left, upper right, lower left, and lower right plots show \(u_1\), \(u_2\), \(v_1\), and \(v_2\), respectively

The eigenfunctions associated with the first positive eigenvalues for four obstacles with the Neumann condition (Case 1). Each subfigure is a \(2\times 2\) group of plots for one obstacle shape. In each group, the upper left, upper right, lower left, and lower right plots show \(u_1\), \(u_2\), \(v_1\), and \(v_2\), respectively

Similar plots for Case 2 with either Dirichlet or Neumann conditions are given in Figs. 9 and 10, respectively.

The eigenfunctions associated with the first positive eigenvalues for four obstacles with the Dirichlet condition (Case 2). Each subfigure is a \(2\times 2\) group of plots for one obstacle shape. In each group, the upper left, upper right, lower left, and lower right plots show \(u_1\), \(u_2\), \(v_1\), and \(v_2\), respectively

The eigenfunctions associated with the first positive eigenvalues for four obstacles with the Neumann condition (Case 2). Each subfigure is a \(2\times 2\) group of plots for one obstacle shape. In each group, the upper left, upper right, lower left, and lower right plots show \(u_1\), \(u_2\), \(v_1\), and \(v_2\), respectively

To interpret Figs. 7, 8, 9, and 10, let \({\mathbf {u}}=[u_1,u_2]^\top \) be a solution of the elasticity system in \(\Omega \) without the obstacle D. Ideally, to achieve invisibility, the presence of the obstacle should not perturb the field \({\mathbf {u}}\) outside of D. Consider first the case where only the density outside of D is changed, i.e., \({{\mathbf {C}}}_0={{\mathbf {C}}}_1\) and \(\rho _0\ne \rho _1\). Then the field \({\mathbf {v}}=[v_1,v_2]^\top \) in \(\Omega {\setminus }{\bar{D}}\) clearly differs from the background field \({\mathbf {u}}\) (Figs. 7, 8). These numerical simulations strongly suggest that changing only the density in \(\Omega {\setminus }{\bar{D}}\) is unlikely to make an obstacle invisible.

The situation changes dramatically if we use a different elasticity tensor outside of D, as in Case 2. Figures 9 and 10 clearly indicate that the presence of the obstacle does not perturb the background field in \(\Omega {\setminus }{\bar{D}}\). We observe this phenomenon regardless of the shape of the obstacle and of the boundary condition on \(\partial D\). Although we show numerical results only for the first positive eigenvalues, we believe that the same phenomenon holds for other positive eigenvalues. Suppose an incident field, e.g., a plane wave or a Herglotz wave, can be approximated arbitrarily closely by the linear span of the eigenfunctions corresponding to the positive eigenvalues of the background equation. Then the embedded obstacle will produce only a small scattered field; that is, the obstacle will be nearly invisible. Our simulation results therefore provide strong evidence that near invisibility of the obstacle D can be achieved by applying an appropriate elasticity tensor outside of D. Ideally, of course, one would like to find a universal elasticity tensor that makes such an obstacle invisible at all frequencies. This remains a challenging open problem.

6 Conclusions

In this work, we study the computation of interior transmission eigenvalues for elastic waves in media containing an obstacle. This problem is motivated by two applications: the inverse problem of determining the support of the inhomogeneity, and the invisibility cloaking of an obstacle. Using the FEM, we transform the continuous ITEP into a GEP for matrices. We then develop numerical strategies based on the JD method to locate the first 50 positive eigenvalues of the GEP. Notably, in the case of different density functions, the zero eigenvalues must first be deflated to infinity so that the positive eigenvalues can be found effectively.

The numerical results for Case 2 reveal an interesting phenomenon concerning the transmission eigenfunction \(({\mathbf {u}},{\mathbf {v}})\). Figures 9 and 10 show that the presence of the obstacle does not perturb the field \({\mathbf {u}}\) in \(\Omega {\setminus }{\bar{D}}\). We hope that this observation can pave the way for the study of invisibility cloaking of an obstacle for elastic waves.

References

Bellis, C., Cakoni, F., Guzina, B.B.: Nature of the transmission eigenvalue spectrum for elastic bodies. IMA J. Appl. Math. 78, 895–923 (2013)

Bellis, C., Guzina, B.B.: On the existence and uniqueness of a solution to the interior transmission problem for piecewise homogeneous solids. J. Elast. 101, 29–57 (2010)

Cakoni, F., Colton, D., Monk, P., Sun, J.: The inverse electromagnetic scattering problem for anisotropic media. Inverse Prob. 26(7), 074004 (2010)

Cakoni, F., Gintides, D., Haddar, H.: The existence of an infinite discrete set of transmission eigenvalues. SIAM J. Math. Anal. 42(1), 237–255 (2010)

Cakoni, F., Haddar, H.: On the existence of transmission eigenvalues in an inhomogeneous medium. Appl. Anal. 88(4), 475–493 (2009)

Cakoni, F., Haddar, H.: Transmission eigenvalues in inverse scattering theory. In: Uhlmann, G. (ed.) Inverse Problems and Applications: Inside Out II, Volume 60 of Mathematical Sciences Research Institute Publications, pp. 527–578. Cambridge University Press, Cambridge (2012)

Cakoni, F., Kow, P.-Z., Wang, J.-N.: The interior transmission eigenvalue problem for elastic waves in media with obstacles. Preprint (2020)

Chang, W.-C., Lin, W.-W., Wang, J.-N.: Efficient methods of computing interior transmission eigenvalues for the elastic waves. J. Comput. Phys. 407, 109227 (2020)

Charalambopoulos, A.: On the interior transmission problem in nondissipative, inhomogeneous, anisotropic elasticity. J. Elast. 67, 149–170 (2002)

Charalambopoulos, A., Anagnostopoulos, K.A.: On the spectrum of the interior transmission problem in isotropic elasticity. J. Elast. 90, 295–313 (2008)

Charalambopoulos, A., Gintides, D., Kiriaki, K.: The linear sampling method for the transmission problem in three-dimensional linear elasticity. Inverse Prob. 18, 547–558 (2002)

Colton, D., Kirsch, A.: A simple method for solving inverse scattering problems in the resonance region. Inverse Prob. 12(4), 383 (1996)

Colton, D., Kress, R.: Inverse Acoustic and Electromagnetic Scattering Theory. Applied Mathematical Sciences, vol. 93, 3rd edn. Springer, New York (2013)

Colton, D., Monk, P., Sun, J.: Analytical and computational methods for transmission eigenvalues. Inverse Prob. 26, 045011 (2010)

Colton, D., Päivärinta, L., Sylvester, J.: The interior transmission problem. Inverse Probl. Imaging 1(1), 13–28 (2007)

Ji, X., Li, P., Sun, J.: Computation of transmission eigenvalues for elastic waves. arXiv:1802.03687v1 [math.NA] (2018)

Ji, X., Liu, H.: On isotropic cloaking and interior transmission eigenvalue problems. Eur. J. Appl. Math. 29(2), 253–280 (2018)

Kirsch, A.: Factorization of the far field operator for the inhomogeneous medium case and an application in inverse scattering theory. Inverse Prob. 15, 413–429 (1999)

Kirsch, A.: On the existence of transmission eigenvalues. Inverse Probl. Imaging 3(2), 155–172 (2009)

Li, J., Li, X., Liu, H., Wang, Y.: Electromagnetic interior transmission eigenvalue problem for inhomogeneous media containing obstacles and its applications to near cloaking. IMA J. Appl. Math. 82, 1034–1042 (2017)

Li, T., Huang, T.-M., Lin, W.-W., Wang, J.-N.: An efficient numerical algorithm for computing densely distributed positive interior transmission eigenvalues. Inverse Prob. 33, 035009 (2017)

Li, T., Huang, T.-M., Lin, W.-W., Wang, J.-N.: On the transmission eigenvalue problem for the acoustic equation with a negative index of refraction and a practical numerical reconstruction method. Inverse Probl. Imaging 12(4), 1033–1054 (2018)

Päivärinta, L., Sylvester, J.: Transmission eigenvalues. SIAM J. Math. Anal. 40(2), 738–753 (2008)

Sleijpen, G.L.G., van der Vorst, H.A.: A Jacobi–Davidson iteration method for linear eigenvalue problems. SIAM J. Matrix Anal. Appl. 17, 401–425 (1996)

Acknowledgements

Chen and Wang were supported in part by MOST 108-2115-M-002-002-MY3, Taiwan. Li was partially supported by the NSFC grant 11971105, China and the Nanjing Center of Applied Math (NCAM). Lin was partially supported by the MOST 106-2628-M-009-004, Taiwan, the ST Yau Center at NCTU and the NCAM at Nanjing.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Cite this article

Chang, WC., Li, T., Lin, WW. et al. Computation of the interior transmission eigenvalues for elastic scattering in an inhomogeneous medium containing an obstacle. Res Math Sci 8, 49 (2021). https://doi.org/10.1007/s40687-021-00276-1

DOI: https://doi.org/10.1007/s40687-021-00276-1