Abstract

Given a desired signal \( y^d= (y^{d}_{i})_{i \in \{0,\ldots ,N \}}\), we investigate the optimal control which, applied to the nonlinear discrete distributed system \( x_{i+1} = Ax_i + Ex_{i} + Bu_i\), yields the desired output \( y^d \). Techniques based on fixed point theorems for solving this problem are presented. An example and a numerical simulation are also given.


1 Introduction

Research on controllability was initiated in the 1960s by Kalman and concerned linear dynamical systems. Since most practical dynamical systems are nonlinear, various controllability problems for different types of nonlinear or semilinear dynamical systems have been considered in recent years [1,2,3,4,5,6,7,8,9].

There are many notions of controllability, such as complete controllability, small controllability, local controllability, regional controllability, near controllability, null controllability and output controllability [4,5,6, 8,9,10,11,12,13,14].

In the present paper we investigate the output controllability of a class of nonlinear infinite-dimensional discrete systems. More precisely, we consider the nonlinear system whose state is described by the following difference equation

\[ x_{i+1} = Ax_i + Ex_i + Bu_i, \quad i = 0,1,\ldots ,N-1, \quad x_0 \in X, \]

and the corresponding output signal is

\[ y_i = Cx_i, \quad i = 1,\ldots ,N. \]

The operator \(A : X \longrightarrow X\) is supposed to be bounded on the Hilbert space X (the state space), \(E : X \longrightarrow X\) is a nonlinear operator, \(B \in \mathcal{L}(U,X)\) and \(C \in \mathcal{L}(X,Y)\) where the Hilbert space U is the input space and the Hilbert space Y is the output one.
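To make the setting concrete, the following Python sketch simulates a finite-dimensional stand-in for this system, assuming X = ℝ^n, U = ℝ^m and Y = ℝ^p, with A, B, C given as NumPy matrices and the nonlinear operator E passed as a function. The function name `simulate` and the toy data are hypothetical and only illustrate the recursion; the paper itself works in general Hilbert spaces.

```python
import numpy as np

def simulate(A, E, B, C, x0, u):
    """Iterate x_{i+1} = A x_i + E(x_i) + B u_i for i = 0..N-1.

    Returns the states (x_0, ..., x_N) and the outputs (y_1, ..., y_N),
    with y_i = C x_i.
    """
    xs = [np.asarray(x0, dtype=float)]
    for ui in u:                                   # u = (u_0, ..., u_{N-1})
        x = xs[-1]
        xs.append(A @ x + E(x) + B @ np.atleast_1d(ui))
    ys = [C @ x for x in xs[1:]]                   # outputs on i = 1..N
    return np.array(xs), np.array(ys)

# Illustrative data (assumed, not taken from the paper): n = 2, m = p = 1.
A = np.array([[0.9, 0.1], [0.0, 0.8]])
B = np.array([[0.0], [1.0]])
C = np.array([[1.0, 0.0]])

def E(x):
    return 0.05 * np.sin(x)                        # a Lipschitz nonlinearity

xs, ys = simulate(A, E, B, C, x0=[1.0, 0.0], u=[[0.5], [0.0], [-0.5]])
```

The nonlinearity is kept as a plain callable so that any E (for instance a discretized version of the map M used in Section 6) can be plugged in without changing the recursion.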

Given a desired output \( y^d = (y^{d}_{i})_{i \in \{1,\ldots ,N \} } \), we investigate the optimal control \( u= (u_{i})_{i \in \{0,1,\ldots ,N-1 \}} \) which minimizes the cost functional

\[ J(u) = \sum_{i=0}^{N-1} \parallel u_i \parallel_U^{2} \]

over all controls satisfying

\[ Cx_i = y_i^d, \quad i = 1,\ldots ,N. \]

To solve this problem, and inspired by what was done in [15, 16], we first use a state space technique to show that the problem of input retrieval can be seen as an optimal control problem with constraints on the final state [17]. We then use a technique based on fixed point theorems (see [2, 3, 7, 18,19,20,21]) and establish that the set of admissible controls is completely characterized by the pseudo-inverse corresponding to the linear part of the system and the fixed points of an appropriate mapping. Finally, a numerical example is given to illustrate the obtained results.

Remark 1

The assumption that A is a bounded operator is not very restrictive, even for distributed parameter systems. For example, the discrete system obtained from the evolution equation considered in [22] satisfies this condition.

2 Statement of the problem

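We consider the discrete system described by

\[ (S)\quad x_{i+1} = Ax_i + Ex_i + Bu_i, \quad i = 0,1,\ldots ,N-1, \quad x_0 \in X, \]

with the corresponding output

\[ y_i = Cx_i, \quad i = 1,\ldots ,N, \]

where \( x_i \in X \) is the state of system \((S)\), \(u_i \in U \) is the control variable, \(y_i \in Y\) is the output, \(A \in \mathcal{L}(X)\), \(E : X \longrightarrow X\) is a nonlinear operator, \(B \in \mathcal{L}(U,X)\) and \(C \in \mathcal{L}(X,Y)\). Consider the following control problem. Given a desired trajectory \( y^d = (y_1^d,\ldots , y_N^d)\), we seek the optimal control \(u = (u_0,u_1,\ldots , u_{N-1})\) which minimizes the cost functional

\[ J(u) = \sum_{i=0}^{N-1} \parallel u_i \parallel_U^{2} \]

over all controls satisfying

\[ Cx_i = y_i^d, \quad i = 1,\ldots ,N. \]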

2.1 An adequate state space approach

In this subsection, we give some technical results which will be used in the sequel. For a finite subset \(\sigma _{r}^{s}=\{r,r+1,\ldots ,s\}\) of \(\mathbb{Z}\), with \(s \ge r\), let \(l^{2}(\sigma ^s_{r},X)\) be the space of all sequences \((z_i)_{i \in \sigma _{r}^{s}}\), \(z_i \in X\).

Remark 2

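\(l^{2}(\sigma ^{s}_{r},X)\) is a Hilbert space with the usual addition and scalar multiplication and with the inner product defined by

\[ \langle (z_i)_{i \in \sigma_r^s}, (w_i)_{i \in \sigma_r^s} \rangle = \sum_{i=r}^{s} \langle z_i, w_i \rangle_X . \]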

Let \(L_{1}\;\text{ and }\;F\) be the operators given by

and define the variables \(z^i \in l^2(\sigma _{-N}^{-1};X)\) by

where \((x_i)_i\) is the solution of system (S). Then the sequence \((z^i)_i\) is the unique solution of the following difference equation

\[ z^{i+1} = F x_i + L_{1} z^i, \quad i \in \sigma_0^{N-1}. \]

Let \(e_i \in X \times l^2(\sigma _{-N}^{-1};X)\) be the signals defined by \(e_{i} = \left( \begin{array}{l} x_i \\ z^i \end{array} \right) \), then we easily establish the following result.

Proposition 1

\( (e_i)_{i \in \sigma _{0}^{N}} \) is the unique solution of the difference equation described by

\[ (S_1)\quad e_{i+1} = \varPsi e_i + \varPhi e_i + \bar{B} u_i, \quad i \in \sigma_0^{N-1}, \]

where \( \varPsi \) = \( \left( \begin{array}{cc} A &{} 0 \\ F &{} L_{1} \end{array} \right) \), \( \varPhi \) = \( \left( \begin{array}{cc} E &{} 0 \\ 0 &{} 0 \end{array} \right) \;\) and \(\; \bar{B} \) = \( \left( \begin{array}{c} B \\ 0 \end{array} \right) \).

Remark 3

The equality

\[ e_N = \left( \begin{array}{l} x_N \\ (x_0, x_1, \ldots , x_{N-1}) \end{array} \right) \]

allows us to identify the trajectory \((x_0,\ldots ,x_{N-1},x_N)\) of system (S) with the final state \(e_N\) of \((S_1)\). This implies that our problem of input retrieval is equivalent to an optimal control problem with constraints on the final state \(e_N\).

2.2 The optimal control expression

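Let us consider the operator \(\varGamma : X \times l^{2}(\sigma _{-N}^{-1};X) \longrightarrow l^{2}(\sigma _{1}^{N};Y)\) defined by

\[ \varGamma \left( \begin{array}{l} x \\ \xi \end{array} \right) = (t_1,\ldots ,t_N), \quad x \in X,\ \xi = (\xi_{-N},\ldots ,\xi_{-1}) \in l^{2}(\sigma _{-N}^{-1};X), \]

with \( t_i=C\xi _{i-N}\) for all \(i \in \sigma _{1}^{N-1}\) and \(t_N=Cx \).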
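A finite-dimensional sketch of the augmented state \(e_i = (x_i, z^i)\) and of \(\varGamma\) may help to see why the final state carries the whole output trajectory. The displayed definitions of \(L_1\) and F are not reproduced here, so the code assumes, as is usual in this state space approach, that \(z^i\) stores the N most recent past states (row k corresponding to index k - N), that \(L_1\) shifts these rows by one position, that \(F x\) writes x into the slot of index -1, and that \(z^0 = 0\). Under this assumption \(z^N = (x_0,\ldots ,x_{N-1})\) and \(\varGamma e_N = (Cx_1,\ldots ,Cx_N)\). The function names are hypothetical.

```python
import numpy as np

def augmented_final_state(A, E, B, x0, u):
    """Propagate e_i = (x_i, z^i), i = 0..N, and return e_N = (x_N, z^N).

    Assumption (L_1 and F are not displayed in the text): z^i is an (N, n)
    array whose row k holds the entry of index k - N; L_1 shifts the rows up
    by one and F x writes x into the last row (index -1); z^0 = 0.
    """
    x = np.asarray(x0, dtype=float)
    N = len(u)
    z = np.zeros((N, x.size))
    for ui in u:
        x_next = A @ x + E(x) + B @ np.atleast_1d(ui)
        z = np.vstack([z[1:], x[None, :]])   # z^{i+1} = F x_i + L_1 z^i
        x = x_next
    return x, z

def gamma(C, x, xi):
    """Gamma(x, xi) = (t_1, ..., t_N), t_i = C xi_{i-N} (i < N), t_N = C x."""
    N = xi.shape[0]
    # row i of xi holds xi_{i-N}, so rows 1..N-1 give t_1..t_{N-1}
    return np.array([C @ xi[k] for k in range(1, N)] + [C @ x])

# Scalar check (assumed data): x_{i+1} = x_i + u_i with u_i = 1 and x_0 = 0.
xN, zN = augmented_final_state(np.eye(1), lambda x: 0 * x, np.eye(1), [0.0], [[1.0]] * 3)
print(zN.ravel(), gamma(np.eye(1), xN, zN).ravel())
```

Here \(z^N\) holds \(x_0, x_1, x_2\) and \(\varGamma e_N\) returns the outputs \(Cx_1, Cx_2, Cx_3\), matching the identification described in Remark 3.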

Definition 1

(a) The system (S) is said to be exactly output controllable on \(\sigma _{1}^{N}\) if \( \forall x_0 \in X, \;\; \forall y \in l^{2}(\sigma _1^{N};Y),\;\;\exists u \in l^{2}(\sigma _0^{N-1};U)\;\;\text{ such } \text{ that }\;\; Cx_{i} = y_{i},\;\;\;i \in \sigma _{1}^{N}.\)

(b) The system (S) is said to be weakly output controllable on \(\sigma _{1}^{N}\) if \(\forall \epsilon > 0, \;\;\forall x_0 \in X, \;\; \forall y \in l^{2}( \sigma _1^N;Y),\;\exists u \;\;\text{ such } \text{ that }\;\; \parallel (Cx_{i})_{i \in \sigma _1^N} - y \parallel _{l^{2}(\sigma _1^N;Y)}\; \le \;\epsilon . \)

Definition 2

(a) The system \((S_1)\) is said to be \(\varGamma \)-controllable on \(\sigma _{1}^{N}\) if \( \forall e_0 \in \mathcal{X}, \;\; \forall y^{d} \in l^{2}(\sigma _1^{N};Y), \;\;\exists u \in l^{2}(\sigma _0^{N-1};U)\) such that \(\varGamma e_N = y^{d}\).

(b) The system \((S_1)\) is said to be \(\varGamma \)-weakly controllable on \(\sigma _{1}^{N}\) if \(\forall \epsilon > 0, \;\;\forall e_0 \in \mathcal{X}, \;\; \forall y^{d} \in l^{2}(\sigma _1^N;Y),\;\exists u\) such that \( \parallel \varGamma e_N - y^{d} \parallel _{l^{2} (\sigma _1^N;Y)} \;\le \;\epsilon \).

Remark 4

From the above definitions, the following equivalences are easily established:

(i) (S) is exactly output controllable on \(\sigma _{1}^{N}\) \(\Longleftrightarrow \) \((S_1)\) is \(\varGamma \)-controllable on \(\sigma _{1}^{N}\).

(ii) (S) is weakly output controllable on \(\sigma _{1}^{N}\) \(\Longleftrightarrow \) \((S_1)\) is \(\varGamma \)-weakly controllable on \(\sigma _{1}^{N}\).

Proposition 2

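Given a desired output \( y^d = (y_1^d,\ldots , y_N^d)\) in \(l^2(\sigma _1^N;Y)\), the problems \((\mathcal{P}_{1})\) and \((\mathcal{P}_{2}) \) defined as

\[ (\mathcal{P}_1)\ \left\{ \begin{array}{l} \min J(u) \\ Cx_i = y_i^d, \quad i \in \sigma_1^N, \end{array} \right. \qquad (\mathcal{P}_2)\ \left\{ \begin{array}{l} \min J(u) \\ \varGamma e_N = y^d, \end{array} \right. \]

have the same solution \( u^* \).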

By Proposition 2, solving \((\mathcal{P}_{1})\) (equivalently \((\mathcal{P}_{2})\)) amounts to finding the control \(u^{*}\) which ensures the \(\varGamma \)-controllability of system \((S_1)\) with minimal cost.

3 Statement of the new problem

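We consider the discrete system described by

\[ (S_1)\quad e_{i+1} = \varPsi e_i + \varPhi e_i + \bar{B} u_i, \quad i \in \sigma_0^{N-1}, \quad e_0 \in \mathcal{X}, \]

where \( e_i \in \mathcal{X}= X \times l^{2}(\sigma _{-N}^{-1};X) \) is the state of system \((S_1)\), \(u_i \in U\) is the control variable, \(\varPsi \in \mathcal{L}(\mathcal{X})\) and \(\bar{B} \in \mathcal{L}(U,\mathcal{X})\). Consider the following control problem. Given a desired trajectory \( y^d = (y_1^d,\ldots , y_N^d)\), we seek the control \(u^{*}\) which minimizes the cost functional

\[ J(u) = \sum_{i=0}^{N-1} \parallel u_i \parallel_U^{2} \]

over all controls satisfying

\[ \varGamma e_N = y^d, \]

where \(e_{N}\) is the final state of system \((S_1)\) at instant N and \(\varGamma \) is given by (4). We shall call \(u^*\) the desired control. The solution of system \((S_1)\) is

\[ e_i = \varPsi^{i} e_0 + \sum_{j=0}^{i-1} \varPsi^{i-1-j}\left( \varPhi e_j + \bar{B} u_j \right), \quad i \in \sigma_1^N. \]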

Let L denote the linear operator defined on \(T=l^{2}\left( \sigma _1^{N};\mathcal{X}\right) \) by

where

and let H denote the linear operator defined on \(\mathcal{U}\) by

where

So, Eq. (7) can be rewritten as

where

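The operator H is not invertible in general. We therefore introduce its restriction

\[ \tilde{H} = H_{|(\ker H)^{\perp}} : (\ker H)^{\perp} \longrightarrow Range(H); \]

this operator is invertible, and its inverse, which is defined on Range(H), can be extended to \(Range(H)\bigoplus Range(H)^{\perp }\) as follows:

\[ H^{\dag}(y + y') = \tilde{H}^{-1} y, \quad y \in Range(H),\ y' \in Range(H)^{\perp}. \]

The operator \(H^{\dag }\) is known as the pseudo-inverse of H. If Range(H) is closed, then \(T= Range(H) \bigoplus Range(H)^{\perp }\) and \(H^{\dag }\) is defined on the whole space T. In particular, \(Range(H^{\dag}) = (\ker H)^{\perp}\) and the mapping \(H^{\dag }\) satisfies

\[ HH^{\dag} y = y \quad \forall y \in Range(H), \qquad H^{\dag}H u = u - P_{\ker H}\, u \quad \forall u \in \mathcal{U}, \]

where \(P_{\ker H}\) denotes the orthogonal projection onto \(\ker H\).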

4 Fixed point technique

4.1 Characterization of the set of admissible controls

Let \( y^d = (y_1^d,\ldots , y_N^d)\) be a predefined output. The aim of this section is to characterize the set of all admissible controls by means of the fixed points of a suitably chosen mapping; that is, we characterize the set \(\mathcal{U}_{ad}\) of all controls which ensure the \(\varGamma \)-controllability:

\[ \mathcal{U}_{ad} = \{ u \in \mathcal{U} \;:\; \varGamma e_N = y^d \}, \]

where \((e_{0},\dots ,e_{N})\) is the trajectory of \((S_1)\) starting from the initial state \(e_0\) and corresponding to the control u. We suppose that Range(H) is closed, so that \(T= Range(H)\bigoplus Range(H)^{\perp }\) and \(H^{\dag } \) is defined on the whole space T. Let \(p: T \longrightarrow Range(H)\) be any projection onto Range(H) and let \(\bar{e} \ne 0\) be any fixed element of Range(H); we define

and let

and we consider the mapping

Then, we have the following proposition.

Proposition 3

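Let \( P_{g}= \{e \in T : g(e) = e \}\) denote the set of all fixed points of g. Then

\[ \mathcal{U}_{ad} = \left\{ H^{\dag }\xi (e) + \alpha \;:\; e \in P_{g},\ \alpha \in \ker (H) \right\}. \]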

Proof

Let \(e^{*} \in P_{g}\); then we have

then

which implies that

that means

and \( f_{\bar{e}}(e^{*})=0\), which implies that \( \varGamma e_{N}^{*}= y^{d}\).

Consequently, Eq. (11) becomes

Let \( u^{*} = H^{\dag }\xi (e^{*}) + \alpha ^{*}\), with \(\alpha ^{*} \in \ker (H)\) and \(e^{*} \in P_{g} \); then

and from (12), we have

which implies that

thus

Consequently, for every \(e \in P_{g}\) we have \(H^{\dag }\xi (e) + \ker (H) \subset \mathcal{U}_{ad}\), and hence

\[ \left\{ H^{\dag }\xi (e) + \alpha \;:\; e \in P_{g},\ \alpha \in \ker (H) \right\} \subset \mathcal{U}_{ad}. \]

Now, we show the reverse inclusion. Let \(u^{*} \in \mathcal{U}_{ad}\) and let \((e_1^{u^{*}},\ldots ,e_{N}^{u^{*}})\) be the trajectory of system \((S_1)\) corresponding to the control \(u^{*}\); then we have

and

Consequently

and

Then \(e^{u^{*}}\) is a fixed point of g; moreover, we can write

which implies that

consequently

and finally we have

\(\square \)

Remark 5

The fixed points of g are independent of the choice of the projection p and of the element \(\bar{e}\). Indeed, let \(p_1\) and \(p_2\) be two projections onto \(Im \; H\), and let \(\bar{e}_1\) and \(\bar{e}_2\) be two nonzero elements of \(Im \; H\). Consider the mappings

Let e be a fixed point of \(g_1\). By the proof of Proposition 3, we have \(\varGamma e_N=y^d\) and \(\xi (e)\in Im\;H\); it follows that \(p_2\xi (e)=\xi (e)\) and \(f_{\bar{e}_1}(e)=0\), so that

This shows that e is a fixed point of \(g_2\). By symmetry, the fixed points of \(g_2\) are also fixed points of \(g_1\).

4.2 Problem of minimization

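Proposition 3 characterizes the set \(\mathcal{U}_{ad}\) of admissible controls. Among these controls, we seek the one with minimal norm, i.e., we solve the following problem:

\[ \min _{u \in \mathcal{U}_{ad}} J(u) = \parallel u \parallel ^{2}. \]

If we suppose that \(P_{g}\) is finite, i.e., \(P_{g}= \{e^{1},\ldots ,e^{q} \}\), we have

\[ \mathcal{U}_{ad} = \bigcup _{i=1}^{q} \mathcal{U}_{ad}^{i}, \]

where

\[ \mathcal{U}_{ad}^{i} = H^{\dag }\xi (e^{i}) + \ker (H), \quad i = 1,\ldots ,q; \]

then, we obtain

\[ \min _{u \in \mathcal{U}_{ad}} J(u) = \min _{1 \le i \le q} \; \min _{u \in \mathcal{U}_{ad}^{i}} J(u). \]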

Remark 6

Let \(u \in \mathcal{U}_{ad}^{i}\); then \(u = H^{\dagger }\xi (e^{i}) + v\) with \(v \in \ker (H)\). Thus

finally we have

with

Lemma 1

The following two problems are equivalent:

(a) \(\left\{ \begin{array}{l} {\displaystyle \min _{u \in \mathcal{U}_{ad}^{i}}}J(u) =\,\parallel u^{*} \parallel ^{2} \\ \text{ with }\; u^{*}= H^{\dagger }\xi (e^{i}) + v^{*} \end{array} \right. \)

(b)

Proof

(b) \(\Longrightarrow \) (a) Let \(w \in \mathcal{U}_{ad}^{i}\), which implies that \(w = H^{\dagger }\xi (e^{i}) + \bar{w}\) with \(\bar{w} \in \ker (H)\); then we have

thus

consequently

So, \(\forall w \in \mathcal{U}_{ad}^{i} \), we have \( J(w) \ge J(u^{*})\) and

(a) \(\Longrightarrow \) (b) Let \(u^{*}\) be such that \(\parallel u^{*} \parallel ^{2}\,= {\displaystyle \min _{u \in \mathcal{U}_{ad}^{i}}}(J(u))\). Since \(\mathcal{U}_{ad}^{i}\) is closed, we have \(u^{*} \in \mathcal{U}_{ad}^{i}\), and there exists \(v^{*} \in \ker (H)\) such that \(u^{*}= H^{\dag } \xi (e^{i}) + v^{*}\).

Let \(w \in \ker (H)\) and consider \(u=H^{\dag }\xi (e^{i}) + w\); then we have

\({\parallel } H^{\dag }\xi (e^{i}){\parallel }^{2} {+}\,2{<}H^{\dag }\xi (e^{i}), w{>} + {\parallel } w {\parallel }^{2} \ge {\parallel } H^{\dag }\xi (e^{i}){\parallel }^{2}+\,2<H^{\dag }\xi (e^{i}), v^{*}> + \parallel v^{*}\parallel ^{2}\)

thus, we have

which implies that

consequently

\(\square \)

Theorem 1

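Suppose that the set \(P_g = \{e^1,\ldots ,e^q\}\) is finite. Then the optimal control ensuring the \(\varGamma \)-controllability of \((S_1)\) (and hence the exact output controllability of system (S)) is given by

\[ u^{*} = H^{\dag }\xi (e^{i_{0}}), \]

where \( e^{i_{0}}\) is a fixed point of the mapping g given by (10) which satisfies

\[ \parallel H^{\dag }\xi (e^{i_{0}}) \parallel = \min _{1 \le i \le q} \parallel H^{\dag }\xi (e^{i}) \parallel . \]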

Proof

Let \(P_g=\{e^1,\ldots ,e^q\}\); then, by Lemma 1, we have

where \(v^{*}\) is an element of \(\ker (H)\) that achieves the minimum of the functional J given by (14). Since \(\ker (H)\) is a closed subspace and \(H^{\dag }\xi (e^{i}) \in (\ker H)^{\perp }\), the minimum of J is reached for \(v^{*}=0\), and therefore we have

Using the equivalence (13), we deduce that

where \(e^{i_0}\in \{e^{1},\ldots ,e^{q}\}\). \(\square \)
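In finite dimensions, the selection made in Theorem 1 can be sketched with NumPy, assuming H is a matrix and that the vectors \(\xi (e^1),\ldots ,\xi (e^q)\) associated with the finitely many fixed points of g are already available (computing these fixed points is not shown). `np.linalg.pinv` returns the Moore–Penrose pseudo-inverse, whose range is \((\ker H)^{\perp }\); hence for \(v \in \ker H\) we have \(\parallel H^{\dag }\xi + v\parallel ^2 = \parallel H^{\dag }\xi \parallel ^2 + \parallel v\parallel ^2\), the minimum over each \(\mathcal{U}_{ad}^{i}\) is reached at v = 0, and it only remains to pick the index \(i_0\) with the smallest norm. The helper names and the toy matrix are hypothetical.

```python
import numpy as np

def kernel_basis(H, tol=1e-10):
    """Orthonormal basis of ker H (as columns), read off from the SVD."""
    _, s, vt = np.linalg.svd(H)
    rank = int(np.sum(s > tol))
    return vt[rank:].T

def optimal_control(H, xis):
    """Hypothetical helper: given xi(e^1), ..., xi(e^q) (assumed to lie in
    Range(H)), return (i0, u*) where u* = H^dagger xi(e^{i0}) has minimal norm."""
    H_dag = np.linalg.pinv(H)                  # Moore-Penrose pseudo-inverse
    candidates = [H_dag @ xi for xi in xis]    # minimal-norm element of each U_ad^i
    norms = [np.linalg.norm(c) for c in candidates]
    i0 = int(np.argmin(norms))
    return i0, candidates[i0]

# Toy data (assumed): H has a one-dimensional kernel spanned by (0, 0, 1).
H = np.array([[1.0, 0.0, 0.0], [0.0, 2.0, 0.0]])
i0, u_star = optimal_control(H, [np.array([1.0, 2.0]), np.array([3.0, 0.0])])
v = kernel_basis(H)[:, 0]                     # a unit element of ker H
# H(u* + v) = H u*, but ||u* + v||^2 = ||u*||^2 + ||v||^2 > ||u*||^2
print(i0, u_star, np.linalg.norm(u_star + v) ** 2, np.linalg.norm(u_star) ** 2)
```

Adding any kernel element leaves \(H u\) unchanged (so admissibility is preserved) but strictly increases the cost, which is the orthogonality argument used in the proof above.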

5 Another mapping characterizing the set of admissible controls

In this section, we give a necessary and sufficient condition for a control to be admissible, based on the set of fixed points of another suitably chosen mapping. Let us consider the operators L, H, \(\varPsi \) and \(\varGamma \) defined in the preceding section, and define the Hilbert space \(\mathcal{M} = T \times \mathcal{Y}\), with \(\mathcal{Y}=l^2(\sigma _1^N;Y)\), and the operators \(\mathcal{S}\), \(\mathcal{L}\), \(\mathcal{H}\) and \(\tilde{\xi }\) by

We recall that the solution of system (5) can be written in the form

which gives

We suppose that \(Im\;\mathcal{H}\) is closed; then the pseudo-inverse \(\mathcal{H}^{\dagger }\) of \(\mathcal{H}\) is defined on the whole space \(\mathcal{M}\). Let \(y^d\) be a fixed element of \(\mathcal{Y}\); we define the following mapping

with \( p: \mathcal{M} \longrightarrow Im \; \mathcal{H}\) any projection onto \(Im \; \mathcal{H}\). Then we have the following result.

Lemma 2

If \(\left( \begin{array}{l} x \\ z \end{array} \right) \in \mathcal{M}\) is a fixed point of \(\mathcal{G}\), then \(\tilde{\xi }\left( \begin{array}{l} x \\ y^d \end{array} \right) \in Im\;\mathcal{H}\).

Proof

If \(\left( \begin{array}{l} x \\ z \end{array} \right) \) is a fixed point of \(\mathcal{G}\), then we have

which implies

which shows that \(\tilde{\xi }\left( \begin{array}{l} x \\ y^d \end{array} \right) \in Im\;\mathcal{H}\). \(\square \)

Theorem 2

Let \(y^d\) be a desired output. The control \(u^{*}= \mathcal{H}^{\dagger }\tilde{\xi }\left( \begin{array}{l} x^{*} \\ y^d \end{array} \right) + v^{*}\), where \(x^{*} \in T\) and \(v^{*}\in Ker \; \mathcal{H}\), ensures the \(\varGamma \)-controllability of system (5) if and only if \((x^{*}, y^d)\) is a fixed point of the mapping \(\mathcal{G}\) given by (16).

Proof

Let \(x^{*} \in T\) and \(v^{*}\in Ker \; \mathcal{H}\), and suppose that \(u^{*}= \mathcal{H}^{\dagger }\tilde{\xi }\left( \begin{array}{l} x^{*} \\ y^d \end{array} \right) + v^{*}\in \mathcal{U}\) ensures the \(\varGamma \)-controllability of system (\(S_1\)), i.e., \(\varGamma e_N^{u^{*}}=y^d\), where \(e=(e_1^{*},\ldots ,e_N^{*})\) is the trajectory of system (5) corresponding to the control \(u^{*}\). Then, by Eq. (15), we have

which implies that \(\tilde{\xi }\left( \begin{array}{l} x^{*} \\ y^d \end{array} \right) \in Im\;\mathcal{H}\), consequently

So, we have

which shows that \((x^{*},y^d)^{\top }\) is a fixed point of \(\mathcal{G}\).

Now, suppose that \((x^{*},y^d)^{\top }\) is a fixed point of \(\mathcal{G}\). Then, by Lemma 2, we have \(\tilde{\xi }\left( \begin{array}{l} x^{*} \\ y^d \end{array} \right) \in Im\;\mathcal{H}\). On the other hand, we have

Let \(v^{*}\in Ker \; \mathcal{H}\), then if we consider the control \(u^{*}= \mathcal{H}^{\dagger }\tilde{\xi }\left( \begin{array}{l} x^{*} \\ y^d \end{array} \right) + v^{*}\), we have \(\mathcal{H}u^{*}= \tilde{\xi }\left( \begin{array}{l} x^{*} \\ y^d \end{array} \right) \) and

If we replace \(\mathcal{S}\), \(\mathcal{L}\) and \(\mathcal{H}\) by their expression, we obtain

So, \(x^{*} = \tilde{\varPhi }e_0 + Lx^{*} + Hu^{*}\) implies that \(x^{*}\) is the trajectory of system (5) corresponding to control \(u^{*}\) and

Consequently, the control \(u^{*}= \mathcal{H}^{\dagger }\tilde{\xi }\left( \begin{array}{l} x^{*} \\ y^d \end{array} \right) + v^{*}\) ensures the \(\varGamma \)-controllability of system (5), which ends the proof. \(\square \)

6 Example and numerical simulation

Consider the following system

the output function is given by

where \(x(t)\in X=L^2(0,1;\mathbb{R})\), \(u(t)\in U=\mathbb{R}\), and D and M are, respectively, the linear and the nonlinear maps defined by

and

where \(\phi _n=\sqrt{2}\sin {(n\pi .)}, \; n\ge 1\). The Laplacian \(\varDelta \) is the infinitesimal generator of the strongly continuous semigroup \((S(t))_{t\ge 0}\) defined by

\[ S(t)x = \sum _{n \ge 1} e^{-n^2\pi ^2 t} <x,\phi _n> \phi _n, \quad x \in X,\ t \ge 0. \]

The operator M satisfies the Lipschitz condition. Indeed, for all \(x,y \in X\), we have

where \(x_1=<x,\phi _1>\) and \(y_1=<y,\phi _1>\).

Consequently, the system (17) has a unique mild solution in \(L^2(0,1;X)\) (see Balakrishnan [23]) given by

Let \(N\in \mathbb{N}\), \(\delta =\frac{1}{N}\) the sampling period, \(t_i=i\delta \) for all \(i \in \mathbb{N}\), \(A=S(\delta )\), \(x_i=x(t_i)\), \(u_i=u(t_i)\) and \(y_i=y(t_i)\); the discrete version of system (17), (18) is the following

where E, B, and C are given by

\(E={\displaystyle \int _{0}^{\delta }}S(r)M dr\), \(\quad B={\displaystyle \int _{0}^{\delta }}S(r)D dr\) and

\(Cx=<x,\phi _1>\), \(\quad \forall x \in X\).
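Because the \(\phi _n\) are eigenfunctions of the Laplacian on (0, 1) with Dirichlet boundary conditions (an assumption consistent with the choice \(\phi _n=\sqrt{2}\sin (n\pi .)\)), the semigroup acts diagonally, \(S(t)\phi _n = e^{-n^2\pi ^2 t}\phi _n\). The sketch below represents x by its first few sine coefficients, applies \(A = S(\delta )\) mode by mode and evaluates \(Cx = <x,\phi _1>\). The truncation to finitely many modes, the trapezoidal quadrature and the function names are assumptions for illustration; the operators D and M of the example are not reproduced.

```python
import numpy as np

def sine_coefficients(f, n_modes, n_grid=2001):
    """Approximate <f, phi_n>, n = 1..n_modes, with phi_n(s) = sqrt(2) sin(n pi s),
    using the trapezoidal rule on a uniform grid of [0, 1]."""
    s = np.linspace(0.0, 1.0, n_grid)
    h = s[1] - s[0]
    weights = np.full(n_grid, h)
    weights[[0, -1]] = h / 2.0                   # trapezoidal end-point weights
    n = np.arange(1, n_modes + 1)[:, None]
    phi = np.sqrt(2.0) * np.sin(n * np.pi * s)   # shape (n_modes, n_grid)
    return phi @ (weights * f(s))

def apply_A(coeffs, delta):
    """A = S(delta): multiply the n-th sine coefficient by exp(-n^2 pi^2 delta)."""
    n = np.arange(1, coeffs.size + 1)
    return np.exp(-(n * np.pi) ** 2 * delta) * coeffs

def output(coeffs):
    """C x = <x, phi_1>, i.e. the first sine coefficient."""
    return coeffs[0]

# Example: x = phi_1 decays like exp(-pi^2 t) under the semigroup.
c = sine_coefficients(lambda s: np.sqrt(2.0) * np.sin(np.pi * s), n_modes=3)
print(output(apply_A(c, 0.1)))
```

Working in the modal basis makes the semigroup property \(S(\delta )S(\delta ) = S(2\delta )\) exact, and it explains why only the factor \(e^{-\pi ^2\delta }\) of the first mode appears in the expressions that follow, since C observes only \(\phi _1\).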

By a direct calculation, one can verify that the operators H, L and \(\xi \), and the sets \(Ker \;H\), \((Ker \; H)^{\perp }\) and \(Im \;H\), are given by

where \(\bar{u}_i={\displaystyle \sum \nolimits _{j=0}^{i}}e^{-j\pi ^2 \delta }u_{i-j}\phi _1\) and \(i\in \sigma _{1}^{N}\),

where \(\bar{x}_i={\displaystyle \sum \nolimits _{j=0}^{i}}e^{-j\pi ^2 \delta }\sin {(<x_{i-j},\phi _1>)}\phi _1\) and \(x_i=Ge_i, \;\;\; i\in \sigma _{1}^{N}\) with

and

Let \(\tilde{H}\) be the operator defined by

where

Let \(\bar{e}=(b_1,0,\ldots ,0)^{\top }\), where \(b_1=\left( \begin{array}{c} \phi _1\\ (0,\ldots ,0) \end{array} \right) \), and let P be the projection

with \(\bar{z}_i=<z_{i}^1,\phi _1>\phi _1\) and \(z_i^1=Gz_i, \;\;i\in \sigma _{1}^{N} \).

The map g is given by \(g : e\in l^2(\sigma _{1}^{N},\mathcal{X}) \longrightarrow g(e)\in l^2(\sigma _{1}^{N},\mathcal{X})\), where, for every \(i\in \sigma _{1}^{N}\), we have

where \(\bar{x}_i=<x_{i},\phi _1>\phi _1\).

Let e be a fixed point of g; we have

where \(x_i=Ge_i\). Since \((f_{\bar{e}}(e))_i = 0\) for \(i\in \sigma _{2}^{N}\), it remains to show that \((f_{\bar{e}}(e))_1=0\). Indeed, if we suppose that \((f_{\bar{e}}(e))_1 \not = 0\), then \((f_{\bar{e}}(e))_1=\bar{e}_1=b_1=\left( \begin{array}{c} \phi _1\\ (0,\ldots ,0) \end{array} \right) \); thus

which implies that

which is absurd; hence \(f_{\bar{e}}(e)=0\) and

Consequently

where \(\bar{y}_{i}^d={\displaystyle \sum \nolimits _{k=0}^{i}}e^{-k\pi ^2 \delta }y_{i-k}^d\phi _1\) and \(\forall i\in \sigma _{1}^{N}\).

Consequently

Numerical simulation For

we obtain the numerical results described in Table 1.

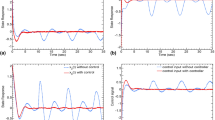

The corresponding optimal cost is \(J(u^{*})=0.34819\). Some control trajectories for different values of N are depicted in Fig. 1.

7 Conclusion

In this paper, we have investigated the output controllability problem for a class of nonlinear discrete distributed systems with an energy constraint. Using a technique based on fixed point theorems, we established that the set of admissible controls can be characterized by the set of fixed points of an appropriate mapping. A numerical example is given to illustrate the obtained results.

References

Chalishajar DN, George RK, Nandakumaran AK, Acharya FS (2010) Trajectory controllability of nonlinear integro-differential system. J Frankl Inst 347:1065–1075

De Souza JAMF (1986) Control, state estimation and parameter identification of nonlinear distributed parameter systems using fixed point techniques: a survey. In: Proceedings of the 4th IFAC symposium on control of distributed parameter systems, Los Angeles, California, USA, July 1986, pp 377–382

De Souza JAMF (1988) Some techniques for control of nonlinear distributed parameter systems. In: Anales (Proceedings) of the III Congreso Latino-Americano de Control Automático (III CLCA), vol 2. Viña del Mar, Chile, pp 641–644

Klamka J (2009) Constrained controllability of semilinear systems with delays. Nonlinear Dyn 56(1–2):169–177

Klamka J (2013) Controllability of dynamical systems. A survey. Bull Pol Acad Sci Tech Sci 61(2):335–342

Klamka J, Czornik A, Niezabitowski M, Babiarz A (2015) Trajectory controllability of semilinear systems with delay. In: Nguyen N, Trawinski B, Kosala R (eds) Intelligent information and database systems, ACIIDS 2015. Lecture notes in computer science, vol 9011. Springer, Cham, pp 313–323

Tan XL, Li Y (2016) The null controllability of nonlinear discrete control systems with degeneracy. IMA J Math Control Inf 32:1–12

Tie L (2014) On the small-controllability and controllability of a class of nonlinear systems. Int J Control 87:2167–2175

Tie L, Cai K (2011) On near-controllability of nonlinear control systems. Control Conf (CCC) 30th Chin 1416(1):131–136

Babiarz A, Czornik A, Niezabitowski M (2016) Output controllability of discrete-time linear switched systems. Nonlinear Anal Hybrid Syst 21:1–10

Chapman A, Mesbahi M (2015) State Controllability, output controllability and stabilizability of networks: a symmetry perspective. In: IEEE 54th annual conference on decision and control (CDC) Osaka Japan, pp 4776–4781

Germani A, Monaco S (1983) Functional output \(\epsilon \)-controllability for linear systems on Hilbert spaces. Syst Control Lett 2:313–320

Kawano Y, Ohtsuka T (2016) Commutative algebraic methods for controllability of discrete-time polynomial systems. Int J Control 89:343–351

Zerrik E, Larhrissi R, Bourray H (2007) An output controllability problem for semilinear distributed hyperbolic systems. Int J Appl Math Comput Sci 17(4):437–448

Karrakchou J, Bouyaghroumni J, Abdelhak A, Rachik M (2002) Invertibility of discrete distributed systems: a state space approach. SAMS 42(6):879–894

Karrakchou J, Rabah R, Rachik M (1998) Optimal control of discrete distributed systems with delays in state and in the control: state space theory and HUM approaches. SAMS 30:225–245

Delfour MC, Karrakchou J (1987) State space theory of linear time invariant with delays in state, control and observation variable. J Math Anal Appl 25 partI: 361–399 partII: 400–450

Carmichael N, Prichard AJ, Quin MD (1989) State and parameter estimation problems for nonlinear systems. Control Theory Center Report University of Warwick Coventry England

Coron JM (2007) Control and nonlinearity. Mathematical surveys and monographs, vol 136. American Mathematical Society, Providence

Magnusson K, Prichard AJ, Quin MD (1985) The application of fixed point theorems to global nonlinear controllability problems. In: Proceedings of the Semester on Control Theory Banach International Mathematical Center Warsaw Poland

Prichard AJ (1980) The application of fixed point theorem to nonlinear systems theory. In: Proceedings of the third IMA conference on control theory, University of Sheffield, Sheffield, pp 775–792

Karrakchou J, Rachik M (1995) Optimal control of distributed systems with delays in the control: the finite horizon case. Arch Control Sci 4:37–53

Balakrishnan AV (1981) Applied functional analysis. Springer, New York

Acknowledgements

The authors would like to thank all the members of the Editorial Board who were responsible for this paper, and the anonymous referees for their valuable comments and suggestions to improve the content of this paper. This work is supported by the Morocco Systems Theory Network.


About this article

Cite this article

Lhous, M., Rachik, M., Bouyaghroumni, J. et al. On the output controllability of a class of discrete nonlinear distributed systems: a fixed point theorem approach. Int. J. Dynam. Control 6, 768–777 (2018). https://doi.org/10.1007/s40435-017-0315-9


DOI: https://doi.org/10.1007/s40435-017-0315-9