Abstract

In this work, a nominal-plus-neighboring-optimal control approach is proposed for the treatment of cancer using adoptive cellular immunotherapy. The main goal of this therapy is to minimize both tumor concentration and treatment costs while restoring natural defense mechanisms and activating the immune response. In the presence of an additional initial concentration of cancer cells, the biological effects of introducing a neighboring-optimal treatment in addition to the existing nominal therapy are investigated. The optimal control problem is formulated by defining appropriate objective functions. Pontryagin’s maximum principle and the Pontryagin procedure are used to obtain optimal solutions that provide the nominal and neighboring-optimal control configurations, respectively. The optimal systems are derived and solved numerically using an adapted iterative method with a fourth-order Runge–Kutta scheme.

1 Introduction

Cancer is a pathology characterized by abnormally high and uncontrolled growth of cells within an organ or body tissue. Cells proliferate indefinitely and form masses called tumors. Without treatment, these abnormal cell clusters destroy the organ and migrate to other areas; this is the stage of generalization of cancer through metastasis, which corresponds to the transfer of malignant cells to a part of the body remote from the primary site of the tumor. The most common treatments are surgical ablation [1], chemotherapy [2–5], radiotherapy [6] and immunotherapy [7–11].

The choice of an optimal therapeutic strategy depends mainly on an understanding of the evolution of the disease, so that treatments can be tailored to each clinical case. In this context, mathematical modeling proves to be a very effective tool for making the best use of the large amount of biological and pharmacological information available. Many mathematical models have addressed the dynamics [12], evolution [13] and treatment [14, 15] of certain types of cancer, such as brain cancer [16], bladder cancer [17–19] and prostate cancer [20].

Recent scientific studies [21–23] have proposed methods of finite duration optimal therapies to treat cancer and limit the development of tumor cells using theoretical and numerical approaches based on the state-dependent Riccati equation (SDRE)-based optimal control method [24–26]. The results of these studies have also shown the essential interest of changing the dynamics of the cancer model to have finite duration treatment.

The effector cells (B-cells, T-cells, interleukin) belong to the immune system, and are involved in the fight against anomalies while defending the organism against external infections, and abnormal proliferation of some cells of the human body. Interleukin-2 (IL-2) [27, 28] is an immune system cytokine which enables stimulation of the lymphocyte proliferation and the cell differentiation between foreign and personal cells.

Cancer immunotherapy is an increasingly used treatment. It is based on the mechanisms normally employed by the immune system of the human body. Immunotherapy seeks to mobilize the immune system against tumors, using the body’s natural defenses to kill cancer cells.

Adoptive immunotherapy [29–32] involves injecting T-cells that have been activated in vitro into the patient’s body. An adoptive cellular immunotherapy strategy [9, 33, 34] involves the withdrawal of a number of cells which have infiltrated the tumor (tumor infiltrating lymphocytes, TIL) [35] and that have further been activated for tumor cell recognition. Starting from a fresh biopsy of the patient’s tumor, tumor-specific T-cells are progressively selected in vitro, using different types of cell cultures as well as interleukin-2 doses.

In this work, a nominal-plus-neighboring-optimal control approach [36] is proposed with the objective of treating cancer using adoptive cellular immunotherapy. An optimal control problem is formulated via suitable objective functions summarizing all biological objectives of the adopted treatment strategy. First, in order to highlight the importance of this treatment strategy, an optimal nominal treatment approach [33] is presented and applied for an initial concentration of cancer cells. Then, the initial tumor load is increased until the nominal strategy is no longer optimal. Subsequently, a neighboring treatment is introduced in addition to the existing nominal treatment, to counteract the progression of cancer and to minimize the concentration of tumor cells. Finally, the biological results for this new nominal-plus-neighboring-optimal therapy are discussed. The nominal–optimal control is characterized using Pontryagin’s maximum principle [37, 38], whereas the Pontryagin procedure [26] is followed to obtain the optimal configuration of the neighboring control in the form of a linear feedback control law [39]. For the numerical resolution of the optimal systems, an adapted iterative method based on the forward backward sweep method (FBSM) [40–42] is developed using a fourth-order Runge–Kutta scheme.

Indeed, the nominal therapy adopted in this study is designed to maximize the efficiency of the treatment while minimizing its side effects and overall cost, thereby reducing the tumor cell concentration, stimulating the immune cells and limiting tumor progression, which significantly improves the quality of life of cancer patients. Pontryagin’s maximum principle [37, 38], derived from optimal control theory, characterizes the nominal optimal control that defines the optimal treatment schedule. It is observed that, for a small increase in the initial estimate of the tumor cell concentration, the combination of the nominal therapeutic process and the natural immune response easily succeeds in eliminating the cancer cells. However, if the increase in the initial estimate is large, the adopted nominal therapy is no longer optimal and a new treatment approach becomes necessary to restore the optimality of the system. The tumor grows abnormally, causing a deterioration of the patient’s general condition. Thus, the treatment process must be adjusted to the change in the initial state of the tumor. In this paper, it is shown that the linear feedback control [39], added to the nominal control, provides a complete and effective treatment that satisfies the optimality of the studied system with the new initial tumor state. The Pontryagin procedure [26] makes it possible to prove the existence of an optimal trajectory associated with the neighboring control, which relies on solving a specific state-dependent Riccati equation [25, 26, 36] in the optimal control problem. The steepest-descent method is used to generate successive approximations of the optimal control \(u^{*}(t)\). Typically, 10–20 iterations are needed to obtain an optimal solution for the control u(t) [36], which minimizes the objective function J(u) subject to a system of ordinary differential equations. Hence the interest of implementing the iterative forward backward sweep method [40–42] with a fourth-order Runge–Kutta scheme [43] in order to solve the optimal system in a minimum number of iterations.

This paper is organized as follows: Sect. 2 describes the basic mathematical model of cancer treatment using ACI immunotherapy. The analysis of both the nominal and neighboring-optimal control problems is also presented in the same section. In Sect. 3, the adapted iterative method is introduced and the numerical simulations are discussed. Finally, the results of this therapeutic approach are explored in the conclusion in Sect. 4.

2 Mathematical model

2.1 Presentation of the treatment model

In this section, an optimal control approach is presented for the cancer treatment model of an existing work [9], which describes the tumor–immune interaction. This basic model, which studies the dynamics and evolution of immune cells in the presence of tumor cells, is an important reference in the field of biomathematics relating specifically to cancer modeling, and it has attracted the interest of specialists in analysis (Kirschner et al. [44], Starkov et al. [45], Banerjee et al. [46]) and optimal control (Hamdache et al. [33], Burden et al. [34]). The prospects for applying optimal control theory to this global dynamics model are far from exhausted, hence the importance of this work, which presents a nominal-plus-neighboring-optimal control approach to treat cancer. Three compartments characterizing the different populations are defined as follows: x(t), the activated immune cells (effector cells); y(t), the tumor cells; and z(t), the concentration of IL-2 cells in the single tumor-site compartment. The equations that describe the interactions of these three state variables are given in [9]:

where the control function u(t) represents the adopted treatment using adoptive cellular immunotherapy (ACI) with Tumor infiltrating lymphocytes (TIL).

The system given by (1) is non-dimensionalized using an appropriate scaling [9] which is indispensable [46] to numerically solve the state equations (1). Thus, it is assumed that the normalized initial data [9] for state system (1) are measured in colony-forming unit (c.f.u) and satisfy at \(t=0\):

where the parameter units are \(day^{-1}\), except for \(g_{1}\), \(g_{2}\), \(g_{3}\) and b, which are in volumes. The descriptions of the parameters of model (1) are listed in Table 1.

The terms \(\displaystyle \frac{p_{1}xz}{g_{1}+z}\), \(\displaystyle \frac{axy}{g_{2}+y}\) and \(\displaystyle \frac{p_{2}xy}{g_{3}+y}\) are expressed in Michaelis–Menten form and model, respectively, the saturated effects of the immune response, the loss of tumor cells and the IL-2 source produced by effector cells. The logistic term \(r_{2}y(1-by)\) represents the rate of change of tumor cells. Notice that the model parameters a, \(p_{1}\) and \(p_{2}\) and the volumes b, \(g_{1}\), \(g_{2}\) and \(g_{3}\) are derived from scientific experiments [9, 46]. The control function u(t) represents an external source of active immune cells (effector cells) supplied by adoptive cellular immunotherapy (ACI) [9]. Lymphocytes are isolated from the tumor site after their activation. Then, these tumor infiltrating lymphocytes (TIL) are substantially amplified before being injected back intravenously into the patient’s tumor site [9]. The control function u(t), representing the drug amount, takes values between 0 and \(\lambda =1000\) units per day during the 350-day treatment period [34]. Note that the works [18] and [33] have proposed therapeutic approaches that introduce an isoperimetric constraint on the control, representing the total dose of immunotherapy that can be administered continuously to the cancer patient during a given treatment period. In addition, the study [19] examines the biological and clinical effects observed in the patient during the administration of immunotherapeutic agents following a pulse vaccination process in order to treat bladder cancer.
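As a minimal sketch of how the right-hand side of system (1) might be coded, the block below assumes the Kirschner–Panetta form of [9] built from the terms described above, with the treatment u(t) entering the effector equation additively. The decay-rate names `mu2` and `mu3`, the names `Params` and `rhs`, and every numerical value except \(c=0.015\) are assumptions for illustration only; they are not the values of Table 1 or Table 2.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Params:
    # Hypothetical placeholder values, chosen only so the example runs.
    c: float = 0.015    # value quoted in the text (day^-1)
    mu2: float = 0.03   # assumed effector-cell decay rate
    p1: float = 0.1     # assumed effector proliferation rate
    g1: float = 2.0     # assumed half-saturation constant
    r2: float = 0.2     # assumed tumor growth rate
    b: float = 0.001    # assumed inverse carrying capacity
    a: float = 1.0      # assumed tumor clearance rate
    g2: float = 1.0     # assumed half-saturation constant
    p2: float = 5.0     # assumed IL-2 production rate
    g3: float = 1.0     # assumed half-saturation constant
    mu3: float = 10.0   # assumed IL-2 decay rate


def rhs(x, y, z, u, p=Params()):
    """Return (dx/dt, dy/dt, dz/dt) for effector cells x, tumor cells y, IL-2 z."""
    # Effector cells: recruitment by tumor, decay, IL-2 stimulation, treatment.
    dx = p.c * y - p.mu2 * x + p.p1 * x * z / (p.g1 + z) + u
    # Tumor cells: logistic growth minus saturated killing by effector cells.
    dy = p.r2 * y * (1.0 - p.b * y) - p.a * x * y / (p.g2 + y)
    # IL-2: saturated production by effector cells minus decay.
    dz = p.p2 * x * y / (p.g3 + y) - p.mu3 * z
    return dx, dy, dz
```

Calling `rhs(1.0, 1.0, 1.0, 0.0)` evaluates the untreated dynamics at the normalized state, and a tumor-free state (`y = 0`) gives a zero tumor growth rate, as expected from the logistic and Michaelis–Menten terms.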

2.1.1 The nominal optimal control problem

The main objective of the proposed therapeutic approach is to minimize both the tumor concentration and the treatment costs of ACI immunotherapy, which involves a logical activation of the immune response cells. Thus, the problem reduces to minimizing the following objective function:

where the positive parameter W balances the size of the terms and represents a weight factor characterizing a patient’s level of acceptance of the treatment [34]. Note that the objective function (3) is defined so as to keep the same power \((k=2)\) for all terms within the integrand, which makes the following optimal control problem more consistent. The elements of the objective function are squared to amplify the effects of large variations and to reduce the contributions of small variations [36]. Mathematically, an optimal control \(u^{*}\in U\) is sought such that:

$$\begin{aligned} J(u^{*})=\min _{u\in U}J(u), \end{aligned}$$(4)

where U is the control set defined by

The control system (1) is rewritten in compact form as follows:

$$\begin{aligned} \dot{X}(t)=F(X(t),u(t)), \end{aligned}$$(5)

where \(X(t)=\left( \begin{array}{c} x(t) \\ y(t) \\ z(t) \\ \end{array} \right) \) is the state vector and u(t) is the control function. Thus, the objective function (3) relating to control u(t) takes the general form:

where \(R=W\) and the matrix \(Q=\left( \begin{array}{ccc} 0 &{} 0 &{} 0 \\ 0 &{} 1 &{} 0 \\ 0 &{} 0 &{} 0 \\ \end{array} \right) \)
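As a quick check of this notation, and assuming the integrand of the general form is built from the two quadratic terms \(X^{T}QX\) and \(uRu\) (any constant prefactor aside), the weights above single out the tumor population and the treatment effort:

$$\begin{aligned} X^{T}QX=\left( \begin{array}{ccc} x&y&z \end{array}\right) \left( \begin{array}{ccc} 0&0&0\\ 0&1&0\\ 0&0&0 \end{array}\right) \left( \begin{array}{c} x\\ y\\ z \end{array}\right) =y^{2},\qquad uRu=Wu^{2}. \end{aligned}$$

The effector cells and IL-2 therefore do not enter the cost directly; they influence it only through their effect on y(t).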

Pontryagin’s maximum principle [37, 38] provides necessary conditions for the optimal control problem (4). This principle converts the problem of finding a control which minimizes the objective function J (3) subject to the state system (1) with initial conditions (2) into the problem of minimizing the Hamiltonian \(\mathcal {H}\):

Thus, in order to characterize the optimal control \(u^{*}\), it is sufficient to differentiate the Hamiltonian \(\mathcal {H}\) instead of the objective function J. In general form, the Hamiltonian \(\mathcal {H}\) is defined from the formulation of the objective function (3) as follows:

where \(\psi (t)=\left( \begin{array}{c} \psi _{1}(t) \\ \psi _{2}(t) \\ \psi _{3}(t) \\ \end{array} \right) \) is the adjoint variable vector.

Explicitly, we have,

The \(\psi _{i}\) where \(i=1,\ 2,\ 3\) are adjoint variables.

Note that the Hamiltonian is convex with respect to the control u, as required for a minimization problem, in view of the following second-order condition [26]:

The existence of a control solution is verified using a classical existence result [37]. Thus, the following properties must be checked:

1. The class of all initial conditions with a control u in the admissible control set U, along with each state equation (1) being satisfied, is not empty;

2. The control set U is convex and closed;

3. The right-hand side of the state system (1) is continuous, is bounded above by a sum of the bounded control and the state, and can be written as a linear function of u with coefficients depending on time and the state;

4. The integrand G(t, X(t), u(t)) of the objective functional J(u) is convex on U;

5. There exist constants \(b_{1}\), \(b_{2}>0\) and \(\alpha >1\) such that the integrand G(t, X(t), u(t)) is bounded below by \(b_{2}-b_{1}(|u|^{2})^{\frac{\alpha }{2}}\) as follows:

$$\begin{aligned} G(t,X(t),u(t)) \ge b_{2}-b_{1}(|u|^{2})^{\frac{\alpha }{2}}. \end{aligned}$$(10)

Proof

Since the system (1) has bounded coefficients and all solutions are bounded on the finite time interval [0, T] [34], a result from [47] is used to obtain the existence of a solution of the state system (1). The admissible control set U is convex and closed by definition. The state system (1) is bilinear in the control, and each right-hand side of this state system is continuous, since each term has a nonzero denominator, and can be written as a linear function of u with coefficients depending on time and state. Furthermore, the fact that all variables x, y, z and u are bounded on [0, T] implies the rest of the third condition. Finally, in order to verify that the integrand G(t, X, u) is convex on U, the following property must be checked:

where \(u,v \ge 0\) and \(\alpha \in [0,1]\). \(\square \)
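The convexity property can be checked directly. Assuming the integrand has the form \(G(t,X,u)=y^{2}+Wu^{2}\) suggested by \(Q\) and \(R=W\) (an assumption, since only the control-dependent part matters here), the convexity gap for \(\alpha \in [0,1]\) is

$$\begin{aligned} (1-\alpha )G(t,X,u)+\alpha G(t,X,v)-G(t,X,(1-\alpha )u+\alpha v)&=W\left[ (1-\alpha )u^{2}+\alpha v^{2}-\left( (1-\alpha )u+\alpha v\right) ^{2}\right] \\&=W\alpha (1-\alpha )(u-v)^{2}\ \ge \ 0, \end{aligned}$$

which is nonnegative because \(W>0\), so G is convex in the control.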

In addition, there exist a constant \(\alpha >1\) and positive numbers \(b_{1}\) and \(b_{2}\) satisfying:

where \(b_{2}\) depends on the upper bounds on y and by analogy we set \(b_{1}=W\) and \(\alpha =2\).

Using Pontryagin’s maximum principle [38] and the optimal control existence result [37], the following theorem is obtained:

Theorem 1

Given an optimal control \(u^{*}\) and the solutions x, y and z of the corresponding state system (1), there exist adjoint variables \(\psi _{1}\), \(\psi _{2}\) and \(\psi _{3}\) satisfying the following equations:

with transversality conditions

Using the penalty multipliers \(\omega _{1}(t),\omega _{2}(t)\ge 0\) [34] satisfying:

and

The optimal control \(u^{*}\) is represented by:

Proof

Due to the convexity of integrand G(t, X(t), u(t)) of the objective function J (3), and to the existence of an optimal couple \((X^{*},u^{*})\) which minimizes the objective function J (3) relating to the state system (1), the adjoint equations (13) can be obtained using Pontryagin’s maximum principle such that:

The optimal control \(u^{*}\) can be solved from the following optimality condition:

Using the bounds on the admissible control set U and the penalty multipliers [34], it is easy to obtain \(u^{*}\) in the form of (14). Moreover, a uniqueness theorem [34, 48] is used to prove that the solution of the optimal system is unique for T sufficiently small. \(\square \)
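The bounded characterization of \(u^{*}\) can be illustrated by a short sketch. It assumes that the integrand is \(y^{2}+Wu^{2}\) with no constant prefactor and that the control enters the Hamiltonian only through \(Wu^{2}+\psi _{1}u\), so that the stationary point is \(-\psi _{1}/(2W)\); with a different normalization of the cost, the factor 2 would change. The function name `optimal_control` is hypothetical.

```python
def optimal_control(psi1, W=1000.0, lam=1000.0):
    """Project the stationary point of the Hamiltonian in u onto [0, lam].

    Assumes dH/du = 2*W*u + psi1, i.e. an integrand y**2 + W*u**2 and a
    control that enters the effector equation additively.
    """
    u_unconstrained = -psi1 / (2.0 * W)
    return min(max(0.0, u_unconstrained), lam)
```

For instance, `optimal_control(-2000.0)` returns 1.0, while a positive adjoint value switches the treatment off and a very negative one saturates the dose at \(\lambda \).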

2.1.2 The neighboring-optimal control problem

The nominal therapy can be strengthened, in the presence of an additional initial amount of cancer cells, by introducing a neighboring-optimal therapy. The actual state and control histories X(t) and u(t) can be expressed as sums of the optimal histories \(X^{*}(t)\) and \(u^{*}(t)\) derived from the nominal optimal control problem (4), and deviations \(\varDelta X(t)\) and \(\varDelta u(t)\) from those histories as follows:

$$\begin{aligned} X(t)=X^{*}(t)+\varDelta X(t),\qquad u(t)=u^{*}(t)+\varDelta u(t). \end{aligned}$$(17)

The state system (5) can be expanded as:

Thus, using the formulation (5), the perturbation dynamics can be approximated by the following linear equation:

$$\begin{aligned} \varDelta \dot{X}(t)=A(t)\varDelta X(t)+B(t)\varDelta u(t), \end{aligned}$$(19)

where A(t) and B(t) are the Jacobian matrices evaluated along the nominal optimal history and calculated using respectively \(\displaystyle \frac{\partial F}{\partial X}\) and \(\displaystyle \frac{\partial F}{\partial u}\).

The matrix A(t) is

The vector B(t) is defined such that: \(B(t)=\left( \begin{array}{c} 1 \\ 0 \\ 0 \\ \end{array} \right) \)
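To make the linearization concrete, the sketch below writes out the Jacobian \(\partial F/\partial X\) analytically and checks it against central finite differences. It assumes the Kirschner–Panetta form of system (1) with additive control; the names `mu2`, `mu3`, `F`, `jacobian_A` and `fd_jacobian`, as well as all parameter values, are hypothetical placeholders rather than the paper's Table 2 values.

```python
import numpy as np

# Hypothetical placeholder parameters (not the paper's Table 2 values).
P = dict(c=0.015, mu2=0.03, p1=0.1, g1=2.0, r2=0.2, b=0.001,
         a=1.0, g2=1.0, p2=5.0, g3=1.0, mu3=10.0)


def F(X, u, p=P):
    """Assumed right-hand side of the state system, X = (x, y, z)."""
    x, y, z = X
    return np.array([
        p["c"] * y - p["mu2"] * x + p["p1"] * x * z / (p["g1"] + z) + u,
        p["r2"] * y * (1 - p["b"] * y) - p["a"] * x * y / (p["g2"] + y),
        p["p2"] * x * y / (p["g3"] + y) - p["mu3"] * z,
    ])


def jacobian_A(X, p=P):
    """Analytic dF/dX, to be evaluated along the nominal history X*(t)."""
    x, y, z = X
    return np.array([
        [-p["mu2"] + p["p1"] * z / (p["g1"] + z),
         p["c"],
         p["p1"] * p["g1"] * x / (p["g1"] + z) ** 2],
        [-p["a"] * y / (p["g2"] + y),
         p["r2"] * (1 - 2 * p["b"] * y) - p["a"] * p["g2"] * x / (p["g2"] + y) ** 2,
         0.0],
        [p["p2"] * y / (p["g3"] + y),
         p["p2"] * p["g3"] * x / (p["g3"] + y) ** 2,
         -p["mu3"]],
    ])


def fd_jacobian(X, u, eps=1e-6):
    """Central finite-difference approximation of dF/dX, used as a check."""
    X = np.asarray(X, dtype=float)
    J = np.zeros((3, 3))
    for j in range(3):
        d = np.zeros(3)
        d[j] = eps
        J[:, j] = (F(X + d, u) - F(X - d, u)) / (2 * eps)
    return J
```

Because the control enters only the first equation with unit coefficient, \(\partial F/\partial u\) is the constant vector B given above, independent of the nominal history.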

The objective function relating to the neighboring-optimal control problem is the second variation of the nominal cost function (6) and it is redefined as a function of the perturbation variables \(\varDelta X(t)\) and \(\varDelta u(t)\):

The new cost function (20) can be expressed as follows:

Using (8) and (21), the Hamiltonian relating to the neighboring-optimal control problem is formulated as:

where \(\varDelta \psi \) is the third-order costate vector for the linearized system (19).

Thus,

The second partial derivative of \({\mathcal {H}}\) with respect to the control, which is R(t), is positive definite; this condition is sufficient to ensure that the problem is a minimization problem.

Linear quadratic problem:

An initial point \(\varDelta X(0)\in \mathbb {R}^{3}\) being fixed, the objective of this problem is to determine the trajectories that start from \(\varDelta X(0)\) and minimize the cost function \(\varDelta ^{2}J(\varDelta X(t),\varDelta u(t))\).

Note that:

so that,

Let us introduce the following assumption:

\(\exists \alpha >0 \ \mid \ \forall \varDelta u(t)\in L^{2}([0,T],\mathbb {R}^{3})\) such that:

Theorem 2

Under the assumption (26), there exists a unique minimizing trajectory for the LQ problem.

Proof

Consider a minimizing sequence \((\varDelta u_{n})_{n\in \mathbb {N}}\) of neighboring controls, which is bounded in \(L^{2}([0,T],\mathbb {R}^{3})\) [49]. Consequently, up to a subsequence, it converges weakly to a neighboring control \(\varDelta u\) in \(L^{2}\) [49]. Let \(\varDelta X_{n}\) (resp. \(\varDelta X\)) denote the trajectory associated with the neighboring control \(\varDelta u_{n}\) (resp. \(\varDelta u\)) over [0, T]. Using the method of variation of constants [49], \(\forall t\in [0,T]\),

where M(t) is the matrix solution [49] of:

Note that the sequence \((\varDelta X_{n})\) converges pointwise to the map \(\varDelta X\) over [0, T]. Thus, passing to the limit in formula (27) yields, \(\forall t\in [0,T]\),

Therefore, \(\varDelta X\) is a solution of the system associated with the neighboring control \(\varDelta u\). Notice that, since \(\varDelta u_{n}\) converges weakly to \(\varDelta u\) in \(L^{2}\), the following inequality is satisfied [49]:

which implies that:

which shows the existence of an optimal trajectory [49], since \((\varDelta u_{n})_{n\in \mathbb {N}}\) is a minimizing sequence. Finally, the strict convexity of the function \(\varDelta ^{2} J\) ensures the uniqueness of the optimal trajectory [49], which completes the proof of the theorem. \(\square \)

Theorem 3

Under the assumption (26), for every initial state \(\varDelta X(0)\in \mathbb {R}^{3}\) there exists a unique optimal trajectory \(\varDelta X(t)\) associated with the neighboring control \(\varDelta u(t)\) for the problem (19), (20). The neighboring-optimal control is characterized as a linear feedback control law [39]:

where C(t) is the feedback gain vector, \(\varDelta \psi (t)\) is the corresponding adjoint vector for the linearized system (19) and P(t) is the solution of the following matrix Riccati equation [49] over a finite interval [0, T]:

where P(T) \(=\) 0.

The optimal control u(t) characterizing the total therapy applied at time t can be expressed in the form:

Proof

To connect the perturbed costate \(\varDelta \psi (t)\) with the perturbed state \(\varDelta X(t)\) over the finite interval [0, T], the Riccati transformation [50, 51] is introduced:

$$\begin{aligned} \varDelta \psi (t)=P(t)\varDelta X(t), \end{aligned}$$(35)

where P(t) is the Riccati coefficient matrix. \(\square \)

The optimality conditions (15) and (16) remain valid [36] and can subsequently be applied to the variational system (19), (20) and (23). Using (16), the neighboring-optimal control \(\varDelta u^{*}(t)\) is formulated as:

The Riccati transformation (35) provides a new formulation of the neighboring-optimal control \(\varDelta u^{*}(t)\):

The adjoint (costate) equation is obtained according to the optimality condition (15):

Substitute the neighboring-optimal control relation (36) in the perturbed state equation (19) to obtain the following canonical system of equations [26]:

where \(E(t)=B(t)R^{-1}(t)B^{T}(t)\)

Differentiating the Riccati transformation (35) with respect to time t,

Using the Riccati transformation (35) in the perturbed state and costate systems (19) and (38), respectively, the following system of equations is obtained:

Now, substituting perturbed state and costate relations (41) in (40), the following equality is satisfied:

Note that the relation (42) holds for all \(t \in [0,T]\) and for any initial state \(\varDelta X(0)\) [26]. Since the function P(t) does not depend on the initial state, it satisfies the following matrix differential equation:

This matrix equation (43) is generally known as the matrix Riccati equation [26, 49] and can be expressed in the form:

where \(E(t)=B(t)R^{-1}(t)B^{T}(t)\)
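For readers following the derivation, the passage from the Riccati transformation to the matrix Riccati equation can be sketched as follows. This is a hedged reconstruction that assumes the standard linear-quadratic forms of the perturbed state and costate equations, \(\varDelta \dot{X}=A\varDelta X-E\varDelta \psi \) and \(\varDelta \dot{\psi }=-Q\varDelta X-A^{T}\varDelta \psi \), with time arguments omitted; the weighting constants in the original displays may differ.

$$\begin{aligned} \varDelta \psi&=P\varDelta X\ \Rightarrow \ \varDelta \dot{\psi }=\dot{P}\varDelta X+P\varDelta \dot{X},\\ \varDelta \dot{X}&=(A-EP)\varDelta X,\qquad \varDelta \dot{\psi }=-(Q+A^{T}P)\varDelta X,\\ 0&=\left( \dot{P}+PA+A^{T}P-PEP+Q\right) \varDelta X,\\ -\dot{P}&=Q+A^{T}P+PA-PEP. \end{aligned}$$

Since the third line must hold for every \(\varDelta X(0)\), the bracketed matrix itself vanishes, which gives the last line.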

Finally, using the Riccati transformation (35) and the transversality conditions (13) on \(\varDelta \psi \), the final condition on P(t) satisfies the following relationship:

Thus,

Using (17) and (37), the optimal control u(t) characterizing the total therapy applied at time t can be expressed in terms of \(u^{*}(t)\), X(t) and \(X^{*}(t)\) as follows:

where \(C(t)=R^{-1}B^{T}(t)P(t)\) is the feedback gain vector, which is calculated along the nominal-optimal therapeutic history [36].
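A minimal sketch of how the Riccati coefficient matrix, the feedback gain and the total control might be computed is given below. It assumes the standard linear-quadratic convention \(-\dot{P}=Q+A^{T}P+PA-PBR^{-1}B^{T}P\) with \(P(T)=0\), and a classical fourth-order Runge–Kutta scheme stepped backward in time. The function names and the callable `A_of_t`, which is meant to return the Jacobian along a stored nominal history, are hypothetical.

```python
import numpy as np


def riccati_rhs(P, A, B, Q, Rinv):
    """dP/dt obtained from -dP/dt = Q + A^T P + P A - P B R^{-1} B^T P."""
    return -(Q + A.T @ P + P @ A - P @ B @ Rinv @ B.T @ P)


def solve_riccati_backward(A_of_t, B, Q, R, T, N):
    """Integrate the Riccati equation from P(T) = 0 back to t = 0 with RK4.

    Returns an array of shape (N + 1, n, n) holding P on the uniform grid.
    """
    n = Q.shape[0]
    h = T / N
    Rinv = np.linalg.inv(R)

    def f(s, M):
        return riccati_rhs(M, A_of_t(s), B, Q, Rinv)

    P = np.zeros((N + 1, n, n))          # P[N] = P(T) = 0
    for k in range(N, 0, -1):
        t = k * h
        k1 = f(t, P[k])
        k2 = f(t - h / 2, P[k] - h / 2 * k1)
        k3 = f(t - h / 2, P[k] - h / 2 * k2)
        k4 = f(t - h, P[k] - h * k3)
        P[k - 1] = P[k] - h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
    return P


def feedback_gain(P_t, B, R):
    """Feedback gain C(t) = R^{-1} B^T P(t)."""
    return np.linalg.inv(R) @ B.T @ P_t


def total_control(u_star, C_t, X, X_star):
    """Total therapy u = u* - C (X - X*), with Delta X = X - X*."""
    return u_star - C_t @ (X - X_star)
```

As a sanity check of the scheme, the scalar case \(A=0\), \(B=Q=R=1\) has the closed-form solution \(P(t)=\tanh (T-t)\), which the backward sweep reproduces to high accuracy. For the cancer model, B would be the column vector \((1,0,0)^{T}\), Q the matrix given earlier and R the weight W.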

3 Numerical simulation

3.1 Summary of parameters and values used

In various similar works, a fairly wide range of values is proposed for modeling the treatment of cancer [7, 8, 10, 17, 19]. In general, it is difficult to assign a single set of parameter values to patients with various types of cancer and different clinical cases. One of the main objectives of the study by Kirschner [9] was to find a specific set of values for the model parameters which ensures the complete control [9] of the disease and eventually allows the introduction of treatment strategies for cancer. However, since the main purpose of this study is to use optimal control theory to find nominal–optimal and neighboring-optimal therapeutic strategies based on adoptive cellular immunotherapy (ACI), the parameter values found in [9, 33, 34] are kept, and the stability properties [9] of the state system (1) are preserved for these parameters, which are listed in Table 2. Finally, note that the experimental time required for obtaining satisfactory therapeutic results is \(T=350\,\hbox {days}\) [9].

3.2 Numerical method

Different numerical techniques [52] can be employed to solve the optimal system corresponding to the nominal–optimal control problem (4) in order to find the control \(u^{*}\) that minimizes the cost function J(u) (3). The equations of the optimal systems (1) and (13) characterizing the studied model describe a two-point boundary value problem [53]. This non-linear system is solved iteratively, since the state system is solved with the initial conditions (2) and the adjoint system is solved with the final conditions (13). In this work, the forward backward sweep method (FBSM) [40–42] is implemented to compute the nominal–optimal numerical solutions. For the neighboring–optimal control problem, whose aim is to minimize the objective function \(\varDelta ^{2}J(\varDelta X,\varDelta u)\) (20), many numerical approaches have been proposed to solve the matrix differential Riccati equation [26, 49, 54, 55]. However, applying these methods to the state-dependent Riccati equation (33) [25, 26, 36] is difficult, because a direct solution is hard to obtain when the matrix A(t) depends on the state variables (1). For this reason, other numerical techniques are considered [40, 41]. An adapted iterative forward backward sweep method is developed using a fourth-order Runge–Kutta scheme [43] with a view to finding numerical optimal solutions for the nominal control \(u^{*}\) and the neighboring control \(\varDelta u^{*}\). This development overcomes many of the difficulties of the existing numerical methodologies and provides a computational algorithm that has been very effective for the numerical simulations in this work. Here, \(\mathbf {X}=(X^{1},\ldots ,X^{N+1})\), \(\mathbf {\psi }=(\psi ^{1},\ldots ,\psi ^{N+1})\) and \(\mathbf {\Delta X}=(\varDelta X^{1},\ldots ,\varDelta X^{N+1})\) denote the vector approximations of the state, the costate and the perturbed state, respectively.

Algorithm

Step 0:

- Make an initial guess for the nominal control \(\mathbf {u}\) and the neighboring control \(\mathbf {\Delta u}\) over the finite interval [0, T].

Step 1:

- Use the initial condition on \(\mathbf {X}: X^{1}=X(0)\) and the stored values for \(\mathbf {u}\).
- Solve \(\mathbf {X}\) forward in time according to its state differential system (1).

Step 2:

- Use the transversality conditions on \(\mathbf {\psi }: \psi ^{N+1}=\psi (T)\) and the stored values for \(\mathbf {u}\) and \(\mathbf {X}\).
- Solve \(\mathbf {\psi }\) backward in time according to its adjoint differential system (13).

Step 3:

- Use the initial condition on \(\mathbf {\Delta X}: \varDelta X^{1}=\varDelta X(0)\) and the stored values for \(\mathbf {u}\), \(\mathbf {\Delta u}\), \(\mathbf {X}\) and \(\mathbf {\psi }\).
- Solve \(\mathbf {\Delta X}\) forward in time according to its perturbed state linear time-varying equation (19).

Step 4:

- Use the final conditions on \(\mathbf {P}\): \(P^{N+1}=P(T)\) and the stored values for \(\mathbf {u}\), \(\mathbf {\Delta u}\), \(\mathbf {X}\), \(\mathbf {\psi }\) and \(\mathbf {\Delta X}\).
- Solve \(\mathbf {P}\) backward in time according to its Riccati coefficient system, solution of the state-dependent Riccati equation (33).

Step 5:

- Update both the nominal control \(\mathbf {u}\) and the neighboring control \(\mathbf {\Delta u}\) by inserting the new \(\mathbf {X}\), \(\mathbf {\psi }\), \(\mathbf {\Delta X}\) and \(\mathbf {P}\) values into the characterizations of the optimal controls \(\mathbf {u}\) and \(\mathbf {\Delta u}\).

Step 6:

- Check convergence: if the difference between the values of these variables in the current and the previous iteration is below a prescribed tolerance, output the current values as the solution; otherwise, return to Step 1.
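The structure of the sweep can be illustrated on a deliberately simple problem. The sketch below is not the cancer model: it applies Steps 0, 1, 2, 5 and 6 to the toy problem of minimizing \(\frac{1}{2}\int _{0}^{T}(x^{2}+u^{2})\,dt\) subject to \(\dot{x}=u\), \(x(0)=x_{0}\), for which the adjoint satisfies \(\dot{\psi }=-x\), \(\psi (T)=0\) and the optimality condition gives \(u=-\psi \). Steps 3 and 4 (perturbed state and Riccati sweeps) are omitted. The function name, the relaxation factor 0.5 and the tolerance are assumptions chosen for illustration.

```python
import math


def fbsm_toy(T=1.0, N=1000, x0=1.0, tol=1e-8, max_iter=200):
    """Forward backward sweep on a toy LQ problem (not the cancer model)."""
    h = T / N
    u = [0.0] * (N + 1)          # Step 0: initial guess for the control
    x = [0.0] * (N + 1)
    psi = [0.0] * (N + 1)
    for iteration in range(1, max_iter + 1):
        u_old = u[:]
        # Step 1: forward RK4 sweep for x' = u (midpoint control by averaging).
        x[0] = x0
        for i in range(N):
            u_mid = 0.5 * (u[i] + u[i + 1])
            k1, k2, k3, k4 = u[i], u_mid, u_mid, u[i + 1]
            x[i + 1] = x[i] + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
        # Step 2: backward RK4 sweep for psi' = -x with psi(T) = 0.
        psi[N] = 0.0
        for j in range(N, 0, -1):
            x_mid = 0.5 * (x[j] + x[j - 1])
            k1, k2, k3, k4 = -x[j], -x_mid, -x_mid, -x[j - 1]
            psi[j - 1] = psi[j] - h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
        # Step 5: update the control from u = -psi, relaxed with the old value.
        u = [0.5 * (uo - p) for uo, p in zip(u_old, psi)]
        # Step 6: stop when successive controls agree to within tol.
        if max(abs(a - b) for a, b in zip(u, u_old)) < tol:
            return x, psi, u, iteration
    raise RuntimeError("FBSM did not converge within max_iter iterations")
```

For this toy problem the exact solution is \(x(t)=\cosh (T-t)/\cosh (T)\) and \(u(0)=-\tanh (T)\), which gives a convenient check of the sweep. Averaging the new and old controls is a common way to stabilize the iteration; other relaxation weights are possible.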

3.3 Numerical results

Since its introduction in the 1980s, adoptive cellular immunotherapy has been the subject of many studies and experiments [56–60] that investigate the dynamics of the immune system and the development of tumor cells in the presence of immunotherapeutic agents derived from this treatment approach. This immunotherapeutic strategy is an innovative and promising therapeutic approach [57] for the treatment of various cancers [35, 59–62]. Adoptive cellular immunotherapy stimulates the patient’s immune system to prevent cancer recurrence and to treat metastatic cancer. This treatment approach consists of removing lymphocytes from the cancer patient, placing them in cell culture and finally re-injecting them into the tumor site in order to limit the development of cancer cells. Such an approach has brought the clinical response rate to over 50 % for metastatic patients, making it one of the most effective treatment approaches [61]. As in studies that have focused on the state-dependent Riccati equation and its applications in optimal control theory [21–23], this work shows the interest of solving the state-dependent Riccati equation [25, 26, 36] to obtain an optimal solution for the feedback neighboring control [39]. Moreover, implementing the iterative forward backward sweep method [40–42] with a fourth-order Runge–Kutta scheme [43] to solve the optimal systems numerically has led to an algorithm that provides a direct numerical solution of the state-dependent Riccati equation in a minimum number of iterations and eventually yields optimal characterizations of the controls.

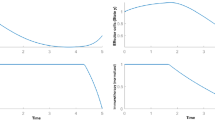

In this work, the main objective of the nominal therapy relating to the nominal–optimal control problem (4) is to minimize the concentration of cancer cells by stimulating the different effector cells of the immune system. In this sense, the adopted nominal therapeutic strategy provides encouraging biological results. Indeed, the concentration of tumor cells decreases gradually from the 76th day, shortly after the introduction of the treatment, leading to a total eradication of the tumor from the 150th day of treatment (Fig. 2). However, the treatment continues its therapeutic action to deal with any reappearance of tumor cells (Fig. 4). The immune response reacts to the increasing level of cancer cells by generating significant growth in the concentration of immune cells (effector cells and IL-2 cells) (Figs. 1, 3). Taking into account the values attributed to the parameters c (\(c=0.015\,\hbox {day}^{-1}\)) and W (\(W = 1000\) [33]) and the evolution of the nominal–optimal control \(u^{*}(t)\) (Fig. 4), the stability analysis of the model in the presence of an appropriate treatment shows that the problem is located in a region where the equilibrium \(E_{0}\) is stable [9] and where the state variables x(t), y(t) and z(t) converge to their respective equilibrium points, which implies the total eradication of tumor cells [9] and confirms the effectiveness of the nominal–optimal treatment strategy.

In the same biological context, increasing the initial estimated concentration of cancer cells by an additional initial quantity \(\varDelta y_{0}\) generates considerable changes in the evolution of the state variables and in the population dynamics. Indeed, the nominal therapy is no longer optimal, and the concentration of tumor cells increases markedly, reaching \(7.7\times 10^{5}\) cell units on the 80th day of treatment (Fig. 6). Despite the decrease in the level of cancer cells observed at the 80th and the 230th day of treatment, the tumor reappears and grows again (Fig. 6), causing the failure of the nominal therapy. However, note that the active immune response reacts positively to any tumor growth by stimulating more effector cells in the human body and more IL-2 cells in the tumor site (Figs. 5, 7).

The state x(t) (Effector cells) during the nominal–optimal therapy with an additional \(\varDelta y_{0}\) where (\(x(0)=1\) c.f.u, \(y(0)=1\) c.f.u, \(z(0)=1\) c.f.u, \(\varDelta x(0)=0\) c.f.u, \(\varDelta y(0)=1\) c.f.u, \(\varDelta z(0)=0\) c.f.u, \(c=0.015\,\hbox {day}^{-1}\), \(W=1000\) and \(T=350\,\hbox {days}\))

The state y(t) (Cancer cells) during the nominal–optimal therapy with an additional \(\varDelta y_{0}\) where (\(x(0)=1\) c.f.u, \(y(0)=1\) c.f.u, \(z(0)=1\) c.f.u, \(\varDelta x(0)=0\) c.f.u, \(\varDelta y(0)=1\) c.f.u, \(\varDelta z(0)=0\) c.f.u, \(c=0.015\,\hbox {day}^{-1}\), \(W=1000\) and \(T=350\,\hbox {days}\))

The state z(t) (Interleukin-2 cells) during the nominal–optimal therapy with an additional \(\varDelta y_{0}\) where (\(x(0)=1\) c.f.u, \(y(0)=1\) c.f.u, \(z(0)=1\) c.f.u, \(\varDelta x(0)=0\) c.f.u, \(\varDelta y(0)=1\) c.f.u, \(\varDelta z(0)=0\) c.f.u, \(c=0.015\,\hbox {day}^{-1}\), \(W=1000\) and \(T=350\,\hbox {days}\))

The state x(t) (Effector cells) during the nominal-plus-neighboring-optimal therapy with an additional \(\varDelta y_{0}\) where (\(x(0)=1\) c.f.u, \(y(0)=1\) c.f.u, \(z(0)=1\) c.f.u, \(\varDelta x(0)=0\) c.f.u, \(\varDelta y(0)=1\) c.f.u, \(\varDelta z(0)=0\) c.f.u, \(c=0.015\,\hbox {day}^{-1}\), \(W=1000\) and \(T=350\,\hbox {days}\))

The state y(t) (Cancer cells) during the nominal-plus-neighboring-optimal therapy with an additional \(\varDelta y_{0}\) where (\(x(0)=1\) c.f.u, \(y(0)=1\) c.f.u, \(z(0)=1\) c.f.u, \(\varDelta x(0)=0\) c.f.u, \(\varDelta y(0)=1\) c.f.u, \(\varDelta z(0)=0\) c.f.u, \(c=0.015\,\hbox {day}^{-1}\), \(W=1000\) and \(T=350\,\hbox {days}\))

The state z(t) (Interleukin-2 cells) during the nominal-plus-neighboring-optimal therapy with an additional \(\varDelta y_{0}\) where (\(x(0)=1\) c.f.u, \(y(0)=1\) c.f.u, \(z(0)=1\) c.f.u, \(\varDelta x(0)=0\) c.f.u, \(\varDelta y(0)=1\) c.f.u, \(\varDelta z(0)=0\) c.f.u, \(c=0.015\,\hbox {day}^{-1}\), \(W=1000\) and \(T=350\,\hbox {days}\))

Faced with this situation, a nominal-plus-neighboring therapeutic strategy is proposed to treat the cancer in the presence of a larger initial quantity of tumor cells. This strategy introduces a neighboring therapy (Fig. 12) in addition to the existing nominal therapy. Although the concentration of cancer cells reaches a very high level (\(5.2\times 10^{5}\) cell units) on the 88th day of treatment, the treatment administered to the patient eliminates the tumor completely from the 128th day of treatment. At the tumor site, the IL-2 cells grow considerably (Fig. 10) and further stimulate lymphocyte proliferation, which increases the concentration of effector cells (Fig. 8) that act directly in the immune response against the development of tumor cells. The therapeutic process is characterized by the action of the neighboring therapy during the first 52 days of treatment (Fig. 11), joined in parallel by the nominal therapy from the 53rd day (Fig. 12), which leads to complete eradication of the tumor (Fig. 9). The treatment then enters a prevention phase from the 130th day in order to eliminate any risk of cancer recurrence (Fig. 12). Note that the growth of the feedback gains associated with the immune cells (Figs. 13, 15) and the observed decrease of the feedback gain associated with the tumor cells (Fig. 14) confirm the effectiveness of the adopted treatment strategy and support the numerical simulations obtained.
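As a reading aid, the way the two therapies combine can be written in the standard neighboring-optimal feedback form. This is only a sketch: the gain symbols \(C_{x}\), \(C_{y}\), \(C_{z}\) are notation assumed here for the three feedback gains plotted in Figs. 13–15, the perturbations \(\varDelta x\), \(\varDelta y\), \(\varDelta z\) are taken as deviations from the nominal trajectory, and the sign convention is the common textbook one, which may differ from the formulation used earlier in the paper.

```latex
\begin{aligned}
u(t) &= u^{*}(t) + \varDelta u(t), \\
\varDelta u(t) &= -\bigl[\, C_{x}(t)\,\varDelta x(t) + C_{y}(t)\,\varDelta y(t) + C_{z}(t)\,\varDelta z(t) \,\bigr],
\qquad \varDelta y(0) = \varDelta y_{0}.
\end{aligned}
```

Under this reading, the neighboring correction \(\varDelta u\) vanishes when the initial tumor load matches the nominal one (\(\varDelta y_{0}=0\)), so the combined therapy reduces to the nominal control \(u^{*}(t)\).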

4 Conclusion

In this work, a nominal-plus-neighboring-optimal control approach was proposed for the treatment of cancer using adoptive cellular immunotherapy (ACI). To illustrate the effects of this treatment strategy on tumor evolution, a nominal–optimal therapy was first adopted for a given initial concentration of tumor cells. It was then observed that an increase in the initial quantity of cancer cells caused the nominal treatment to fail. Introducing a neighboring treatment strategy in addition to the existing nominal therapy was effective in completely eradicating the tumor cells by restoring the active immune response and by stimulating more effector cells and IL-2 cells, especially at the tumor site. Pontryagin’s maximum principle was used to characterize both the nominal and the neighboring-optimal controls. An adapted forward-backward sweep method was implemented to solve the optimal systems numerically. Numerical simulations were presented for the different therapies to highlight the biological changes undergone by the studied populations and their consequences for the dynamics and evolution of the state variables.
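To make the numerical procedure mentioned above more concrete, the following is a minimal sketch of a forward-backward sweep with a fourth-order Runge–Kutta scheme. It does not reproduce the immunotherapy model of this paper: it uses a hypothetical scalar toy problem (minimize \(\tfrac{1}{2}\int_{0}^{T}(x^{2}+u^{2})\,dt\) subject to \(x'=-x+u\)), and all function names, the relaxation factor 0.5 and the tolerances are assumptions chosen for illustration.

```python
import numpy as np

# Toy problem (NOT the paper's model): minimise 0.5 * int_0^T (x^2 + u^2) dt
# subject to x' = -x + u, x(0) = x0. With H = 0.5*(x^2 + u^2) + lam*(-x + u):
#   adjoint:    lam' = -dH/dx = lam - x,  lam(T) = 0
#   optimality: dH/du = 0  =>  u = -lam

def state_rhs(x, u):
    return -x + u

def adjoint_rhs(lam, x):
    return lam - x

def rk4_step(rhs, y, h, a0, am, a1):
    """One RK4 step for y' = rhs(y, a); a is sampled at start, midpoint, end."""
    k1 = rhs(y, a0)
    k2 = rhs(y + 0.5 * h * k1, am)
    k3 = rhs(y + 0.5 * h * k2, am)
    k4 = rhs(y + h * k3, a1)
    return y + h / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)

def simulate_state(u, x0, h):
    """Forward sweep: integrate the state from t = 0 to T for a given control."""
    x = np.empty_like(u)
    x[0] = x0
    for i in range(len(u) - 1):
        um = 0.5 * (u[i] + u[i + 1])  # control at the midpoint (linear interpolation)
        x[i + 1] = rk4_step(state_rhs, x[i], h, u[i], um, u[i + 1])
    return x

def simulate_adjoint(x, h):
    """Backward sweep: integrate the adjoint from t = T down to 0 (negative step)."""
    lam = np.empty_like(x)
    lam[-1] = 0.0  # transversality condition lam(T) = 0
    for i in range(len(x) - 1, 0, -1):
        xm = 0.5 * (x[i] + x[i - 1])
        lam[i - 1] = rk4_step(adjoint_rhs, lam[i], -h, x[i], xm, x[i - 1])
    return lam

def cost(x, u, h):
    """Trapezoidal approximation of 0.5 * int (x^2 + u^2) dt."""
    v = 0.5 * (x ** 2 + u ** 2)
    return h * (np.sum(v) - 0.5 * (v[0] + v[-1]))

def forward_backward_sweep(x0=1.0, T=1.0, n=1000, tol=1e-6, max_iter=200):
    t = np.linspace(0.0, T, n + 1)
    h = T / n
    u = np.zeros(n + 1)  # initial guess for the control
    for _ in range(max_iter):
        u_old = u
        x = simulate_state(u_old, x0, h)
        lam = simulate_adjoint(x, h)
        u = 0.5 * (u_old - lam)  # relaxed update toward u = -lam
        if np.max(np.abs(u - u_old)) < tol:
            break
    return t, x, lam, u

if __name__ == "__main__":
    t, x, lam, u = forward_backward_sweep()
    print("u(0) =", u[0], " cost =", cost(x, u, t[1] - t[0]))
```

Adapting the sketch to a system such as the one studied here would mean replacing `state_rhs`, `adjoint_rhs` and the control update with the vector-valued state equations, adjoint equations and control characterization derived from Pontryagin’s maximum principle, including any bounds on the control.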

Finally, the proposed therapeutic strategy achieved all the objectives of the optimal control problem and produced outcomes consistent with the stability analysis of the model. In the presence of the adopted treatment, the immune system managed to eliminate the tumor cells completely while minimizing the overall cost of the therapy, thereby allowing a significant improvement in the quality of life of cancer patients.

References

Chelur DS, Chalfie M (2007) Targeted cell killing by reconstituted caspases. Proc Natl Acad Sci 104(7):2283–2288

Martin RB (1992) Optimal control drug scheduling of cancer chemotherapy. Automatica 28(6):1113–1123

Swan GW (1990) Role of optimal control theory in cancer chemotherapy. Math Biosci 101(2):237–284

Engelhart M, Lebiedz D, Sager S (2011) Optimal control for selected cancer chemotherapy ODE models: a view on the potential of optimal schedules and choice of objective function. Math Biosci 229(1):123–134

Zouhri S, Saadi S, Elmouki I, Hamdache A, Rachik M (2013) Mixed immunotherapy and chemotherapy of tumors: optimal control approach. Int J Comput Sci Issues 10(4):1

Bomford CK, Kunkler IH (1993) Walter and Miller’s textbook of radiotherapy: radiation physics, therapy, and oncology. Churchill Livingstone, London

Castiglione F, Piccoli B (2007) Cancer immunotherapy, mathematical modeling and optimal control. J Theor Biol 247(4):723–732

De Pillis LG, Gu W, Radunskaya AE (2006) Mixed immunotherapy and chemotherapy of tumors: modeling, applications and biological interpretations. J Theor Biol 238(4):841–862

Kirschner D, Panetta JC (1998) Modeling immunotherapy of the tumor-immune interaction. J Math Biol 37(3):235–252

Chan C, George AJ, Stark J (2003) T cell sensitivity and specificity-kinetic proofreading revisited. Discrete Contin Dyn Syst Ser B 3(3):343–360

Blattman JN, Greenberg PD (2004) Cancer immunotherapy: a treatment for the masses. Science 305(5681):200–205

Starkov KE, Krishchenko AP (2014) On the global dynamics of one cancer tumour growth model. Commun Nonlinear Sci Numer Simul 19(5):1486–1495

Beerenwinkel N, Schwarz RF, Gerstung M, Markowetz F (2015) Cancer evolution: mathematical models and computational inference. Syst Biol 64(1):e1–e25

Babaei N, Salamci MU (2014) State dependent Riccati equation based model reference adaptive stabilization of nonlinear systems with application to cancer treatment. In: Proceedings of the 19th IFAC World Congress, Cape Town, South Africa

Babaei N, Salamci MU (2015) Personalized drug administration for cancer treatment using model reference adaptive control. J Theor Biol 371:24–44

Swanson KR, Bridge C, Murray JD, Alvord EC (2003) Virtual and real brain tumors: using mathematical modeling to quantify glioma growth and invasion. J Neurol Sci 216(1):1–10

Bunimovich-Mendrazitsky S, Shochat E, Stone L (2007) Mathematical model of BCG immunotherapy in superficial bladder cancer. Bull Math Biol 69(6):1847–1870

Elmouki I, Saadi S (2014) BCG immunotherapy optimization on an isoperimetric optimal control problem for the treatment of superficial bladder cancer. Int J Dyn Control 1–7. doi:10.1007/s40435-014-0106-5

Saadi S, Elmouki I, Hamdache A (2015) Impulsive control dosing BCG immunotherapy for non-muscle invasive bladder cancer. Int J Dyn Control 3(3):313–323

Higano CS, Schellhammer PF, Small EJ, Burch PA, Nemunaitis J, Yuh L, Frohlich MW (2009) Integrated data from 2 randomized, double-blind, placebo-controlled, phase 3 trials of active cellular immunotherapy with sipuleucel-T in advanced prostate cancer. Cancer 115(16):3670–3679

Nazari M, Ghaffari A (2015) The effect of finite duration inputs on the dynamics of a system: proposing a new approach for cancer treatment. Int J Biomath 8(03):1550036

Nazari M, Ghaffari A, Arab F (2015) Finite duration treatment of cancer by using vaccine therapy and optimal chemotherapy: state-dependent Riccati equation control and extended Kalman filter. J Biol Syst 23(01):1–29

Ghaffari A, Nazari M, Arab F (2015) Suboptimal mixed vaccine and chemotherapy in finite duration cancer treatment: state-dependent Riccati equation control. J Braz Soc Mech Sci Eng 37(1):45–56

Sahami F, Salamci MU (2015) Decentralized model reference adaptive control design for nonlinear systems; state dependent Riccati equation approach. In: 2015 16th international Carpathian control conference (ICCC), IEEE, pp 437–442

Cimen T (2008) State-dependent Riccati equation (SDRE) control: a survey. In: Proceedings of the 17th World Congress of the international federation of automatic control (IFAC). Seoul, Korea, July, pp 6–11

Naidu DS (2002) Optimal control systems, vol 2. CRC Press, Boca Raton

Dutcher J (2002) Current status of interleukin-2 therapy for metastatic renal cell carcinoma and metastatic melanoma. Oncology (Williston Park) 16(11 Suppl 13):4–10

Hamdache A, Saadi S, Elmouki I, Zouhri S (2013) Two therapeutic approaches for the treatment of HIV infection in AIDS stage. Appl Math Sci 7(105):5243–5257

Rosenberg SA (2008) Overcoming obstacles to the effective immunotherapy of human cancer. Proc Natl Acad Sci 105(35):12643–12644

Rosenberg SA, Restifo NP, Yang JC, Morgan RA, Dudley ME (2008) Adoptive cell transfer: a clinical path to effective cancer immunotherapy. Nat Rev Cancer 8(4):299–308

Rosenberg SA, Yang JC, Restifo NP (2004) Cancer immunotherapy: moving beyond current vaccines. Nat Med 10(9):909–915

Zitvogel L, Kroemer G (2008) Introduction: the immune response against dying cells. Curr Opin Immunol 20(5):501–503

Hamdache A, Elmouki I, Saadi S (2014) Optimal control with an isoperimetric constraint applied to cancer immunotherapy. Int J Comput Appl 94(15):31–37

Burden TN, Ernstberger J, Fister KR (2004) Optimal control applied to immunotherapy. Discrete Contin Dyn Syst Ser B 4(1):135–146

Ben-Ami E, Schachter J (2015) Adoptive transfer of tumor-infiltrating lymphocytes for melanoma: new players, old game. Immunotherapy 7(5):477–479

Stengel RF, Ghigliazza RM, Kulkarni NV (2002) Optimal enhancement of immune response. Bioinformatics 18(9):1227–1235

Fleming W, Rishel R (1975) Deterministic and stochastic optimal control. Springer, New York

Pontryagin LS (1987) Mathematical theory of optimal processes. CRC Press, Boca Raton

Stengel RF (2012) Optimal control and estimation. Courier Corporation, North Chelmsford

Lenhart S, Workman JT (2007) Optimal control applied to biological models. CRC Press, Boca Raton

McAsey M, Mou L, Han W (2012) Convergence of the forward-backward sweep method in optimal control. Comput Optim Appl 53(1):207–226

Graves RN (2010) A method to accomplish the optimal control of continuous dynamical systems with impulse controls via discrete optimal control and utilizing optimal control theory to explore the emergence of synchrony

Brugnano L, Iavernaro F, Trigiante D (2015) Analysis of Hamiltonian boundary value methods (HBVMs): a class of energy-preserving Runge–Kutta methods for the numerical solution of polynomial Hamiltonian systems. Commun Nonlinear Sci Numer Simul 20(3):650–667

Kirschner D, Tsygvintsev A (2009) On the global dynamics of a model for tumor immunotherapy. Math Biosci Eng 6(3):573–583

Starkov KE, Coria LN (2013) Global dynamics of the Kirschner–Panetta model for the tumor immunotherapy. Nonlinear Anal Real World Appl 14(3):1425–1433

Banerjee S (2008) Immunotherapy with interleukin-2: a study based on mathematical modeling. Int J Appl Math Comput Sci 18(3):389–398

Lukes DL (1982) Differential equations. Elsevier, Amsterdam

Elmouki I, Saadi S (2015) Quadratic and linear controls developing an optimal treatment for the use of BCG immunotherapy in superficial bladder cancer. Optim Control Appl Methods. doi:10.1002/oca.2161

Trelat E (2005) Contrôle optimal: théorie et applications. Vuibert, Paris

Meyer GH (1973) Initial value methods for boundary value problems. Academic Press, New York

Ramirez WF (1994) Process control and identification. Academic Press, New York

Cheney E, Kincaid D (2012) Numerical mathematics and computing. Cengage Learning, Boston

Zill D, Wright W (2012) Differential equations with boundary-value problems. Cengage Learning, Boston

Grewal MS, Andrews AP (2011) Kalman filtering: theory and practice using MATLAB. Wiley, New York

Xue D, Chen Y (2008) Solving applied mathematical problems with MATLAB. CRC Press, Boca Raton

Siddiqui I, Mantovani A, Allavena P (2015) Adoptive T-cell therapy: optimizing chemokine receptor-mediated homing of T cells in cancer immunotherapy. In: Rezaei N (ed) Cancer immunology. Bench to bedside immunotherapy of cancers. Springer, Berlin, pp 263–282

Darcy PK, Neeson PJ (2015) Adoptive immunotherapy: a new era for the treatment of cancer. Immunotherapy 7(5):469–471

Rosenberg SA, Restifo NP (2015) Adoptive cell transfer as personalized immunotherapy for human cancer. Science 348(6230):62–68

Stefanovic S, Schuetz F, Sohn C, Beckhove P, Domschke C (2014) Adoptive immunotherapy of metastatic breast cancer: present and future. Cancer Metastasis Rev 33(1):309–320

Shindo Y, Hazama S, Maeda Y, Matsui H, Iida M, Suzuki N, Oka M (2014) Adoptive immunotherapy with MUC1-mRNA transfected dendritic cells and cytotoxic lymphocytes plus gemcitabine for unresectable pancreatic cancer. J Transl Med 12:175

Dudley ME, Wunderlich JR, Yang JC, Sherry RM, Topalian SL, Restifo NP, Rosenberg SA (2005) Adoptive cell transfer therapy following non-myeloablative but lymphodepleting chemotherapy for the treatment of patients with refractory metastatic melanoma. J Clin Oncol 23(10):2346–2357

Shaffer DR, Cruz CRY, Rooney CM (2013) Adoptive T cell transfer. In: Curiel TJ (ed) Cancer immunotherapy. Paradigms, practice and promise. Springer, New York, pp 47–70

Acknowledgments

The authors would like to thank the Editor in Chief and all anonymous referees for their valuable comments.

Cite this article

Hamdache, A., Saadi, S. & Elmouki, I. Nominal and neighboring-optimal control approaches to the adoptive immunotherapy for cancer. Int. J. Dynam. Control 4, 346–361 (2016). https://doi.org/10.1007/s40435-015-0205-y

DOI: https://doi.org/10.1007/s40435-015-0205-y

Keywords

- Adoptive cellular immunotherapy

- Pontryagin’s maximum principle

- State-dependent Riccati equation

- Neighboring-optimal control problem

- Forward backward sweep method