Abstract

The rapid growth of data collection and virtual technology has led to advancements in digital twin (DT) technology, which has become one of the primary research areas and offers enormous possibilities for various industry fields. Based on ultra-fidelity models, DT is an efficient method for merging physical and virtual environments. Some scholars have investigated the associated theories and modeling techniques and the critical technologies for realizing continuous links and communication between physical and virtual entities, while others have concentrated on providing frameworks for the practical application of DT. This article summarizes common industrial cases to determine the present status of DT research. It discusses the concept of DT technology, the properties that define it, and the DT framework used in industry. It also reviews DT applications and the findings of associated studies. Present challenges in DT development and potential DT prospects are discussed. Finally, we use a 6-degrees-of-freedom parallel robot as a case study to illustrate a future application of DT.

1 Introduction

Compared with traditional computer-aided design or computer-aided manufacturing (CAD/CAM), digital twin technology offers more room for development and greater potential, and its significance is enormous. In recent years, scholars have continued to explore and deepen the concept of digital twinning and to deploy new models and frameworks that bring DT technology to new fields.

The digital twinning concept was put forward in 2003 by Professor Grieves of the University of Michigan, USA [1]. In 2010, the term "Digital Twin" was coined in a National Aeronautics and Space Administration (NASA) technical report, and DT was formally proposed and defined as “a system that integrates multiple physical quantities, scales, and probabilities on aircraft simulation processes.” NASA was the first to use the word "Twin" when applying the concept of digital twinning to practical scenarios: it applied digital twinning technology to spacecraft to obtain flight data from the physical aircraft. The first paper on DT appeared in 2011 [2], and in recent years the scope of DT research projects has grown quickly and extended into new fields. The term "digital twin" has gained popularity and is now often used to describe technology that creates digital copies of actual entities and connects those entities to their virtual versions.

In 2012, the US Air Force and NASA collaborated on an article on DT technology, stating that DT is one of the core technologies that will facilitate future airplane design [3]. Simulations of digital twins running on the ground can predict possible future situations, giving staff a robust basis for making correct decisions [4]. Thus, great importance has been attached to digital twinning technology in the aerospace field.

Due to the vigorous advancement of information and communication technologies, the DT has been applied in different fields and scenes, such as agriculture, smart cities, electric power, production, and manufacturing [5,6,7,8,9]. In manufacturing, digital twin production is generally defined as an effective means to achieve the virtual-real integration of the information space and the actual manufacturing scene [4].

Over the lifecycle of a product, several electronic tools and computer programs may be used, and a great deal of information in various forms is generated. These data are voluminous, but they are scattered and disconnected, leading to inefficiency and underutilization. Simulation using theoretical and static models has been widely used as an effective analytical tool for validating and optimizing systems in their early planning phases. However, little thought is given to applying simulation during system run-time. New information and digitalization technologies have made it possible to collect more data; the next step is to determine ways to thoroughly utilize this plethora of new data. Because of this, the DT notion has generated much interest and is advancing swiftly. Increases in computing power and network bandwidth have been a boon to the industrial sector. Additionally, computer-aided technologies such as CAD, CAM, computer-aided engineering (CAE), product data management (PDM), and finite element analysis (FEA) are advancing quickly and playing an increasingly important and common role in industry. Every industry benefits significantly from the rapid development of technologies like artificial intelligence, big data, the Internet of Things, cloud technology, edge computing, 5G cellular technology, and wireless sensor networks. Although these technologies offer a wide range of opportunities for merging the digital and real worlds, not all of the strategic potential of this integration is being used. Progress has recently been made in digital twins, but there is still a long way to go in the implementation phase.

The advent of digitization has also enabled the use of simulations in product design and production processes. To enable real-time planning, these massive volumes of information are analyzed, examined, and assessed with the use of simulated environments and optimization software [10,11,12]. Among many simulation-based planning and optimization approaches, DT continues to show enormous promise in a wide range of commercial contexts [13]. By incorporating field-level sensor data into a time-synchronized system, a DT can replicate that system for a variety of purposes. It can choose connections between activities to orchestrate and carry out the full manufacturing chain as efficiently as possible. This increases production efficiency and precision and brings financial gains [11, 14]. DTs are expected to predict the state progression of the physical object using the shared data. Information can be exchanged between the physical and virtual domains while adhering to time-sensitive requirements. DTs can also make information about the system's current state and performance available to aid in the creation of novel business models. Additionally, they can provide situational awareness and support more accurate forecasts.

Incorporating DT into the management of physical items brings several benefits. For instance, using machine learning or other modeling approaches, it is feasible to forecast future system traits and adjust them to increase process productivity. For this reason, DTs are frequently used to avoid service interruptions during repair operations. A DT can be used for continuous monitoring by acquiring data in real time. This makes it possible for the DT to regulate the physical system and offer information for improved business decisions. DTs can offer a platform for testing several scenarios to select the most effective one, enhancing the system’s performance. Because they can identify harmful activity on a system, DTs are frequently used to enhance security and resilience. Additionally, they enable improved risk analysis by comparing and contrasting potential outcomes that might affect the actual items.

Over the past several years, enthusiasm for DT has grown at both academic and industrial levels because of its vast advantages, long-term potential, and remarkable scope of implementation. Simply put, DT is one of the foundational technologies of Industry 4.0 [15]. DT has also been listed as one of the most anticipated upcoming technological developments [16].

Existing review articles [2, 8, 9, 11, 17,18,19,20,21,22] have contributed greatly to digital twin technology. While these seminal works have enriched the discourse on DT, this review extends beyond the enumeration of enabling technologies and challenges. The paper explores the fundamental concepts underpinning DTs, offering readers a solid foundation to grasp the topic. It critically evaluates various definitions of DT in academia and proposes a consolidated definition that can be used in any industry, despite the various ways DT is defined across different fields. Moreover, it provides an in-depth examination of DT applications across various domains, categorized under three subsections, in which the associated studies are critically evaluated and summarized. The study not only draws upon prior research but also offers a synthesis that highlights evolving trends in DT utilization. A practical case study on a six-degrees-of-freedom (6-DOF) parallel robot illustrates one of the future application modes of DT technology by reflecting critically on the function of the digital twin in the robot's control. In this manner, this review provides a holistic and nuanced perspective on DT technology. Ultimately, its contribution lies in its depth and breadth of coverage, its critical examination of the existing literature, and its commitment to illuminating the path toward the successful integration of digital twin technology across various facets of industry and academia. An overview of this study is presented in Fig. 1.

The rest of this paper is organized as follows: Section 2 presents the methodology adopted, followed by subsections on the definition and characterization of the technology. Section 2.2 presents the DT concept and an analysis of its definition. Section 2.3 presents the key enabling technologies of the DT platform. Section 2.4 lays out the characteristics of DT. Section 2.5 details the various constituents of the framework. Section 3 presents applications in different areas. Section 4 presents challenges and perspectives. Section 5 presents a case study on the DT-driven real-time visual control method for 6-DOF parallel robots. Finally, Section 6 draws conclusions.

2 Methodology revision of published literature

2.1 Scope of the paper

Our analysis provides a thorough overview of the idea of DT technology, its defining characteristics, and the industry-standard DT framework. It also goes over the implementation of DT and the results of related investigations. All references were retrieved from mainstream sci-tech journal databases. We examined a number of studies on DT technology, first going over the methodology and then defining and characterizing the technology. The main enabling technologies of the DT platform were identified from literature that discussed the concept and analyzed the definition. Additionally, we describe the characteristics that correspond to the different components of the framework. Our search of the WoS database using the keywords "digital twin," "virtual environment," "digital system," and "computer-aided technologies" found 3371 published documents. A search for the keyword “digital twin application” returned 1224 documents from the last ten years. Of these, 81 of the most recent documents, published in the past 5 years, were categorized and reviewed under digital twin applications in Sect. 3. The main focus of our review was the most recent and thorough work on DT technology: the papers considered were those that most thoroughly covered concepts, characteristics, framework components, applications, challenges, and opportunities. We present information and key findings from 185 references published in the last 10 years, the majority from the last 5 years.

As illustrated in Fig. 2, a search of the Web of Science (WoS) database shows the publication history of digital twin technology. A total of 3371 documents on digital twin technology have been published since 2013, 3222 of them in the previous five years, so almost all papers (95.6%) were published over the last five years. Across WoS, the Chinese Science Citation Database℠ (CSCD), the Derwent Innovations Index (DII), the KCI-Korean Journal Database (KCI-KJD), and the SciELO Citation Index combined, 3893 manuscripts have been added since 2013. Of these, 3812 were published over the past five years, accounting for 97.9% of the literature on the subject. These data show a spike in research on the topic and demonstrate a strong interest in digital twin technology, which has facilitated advancements in recent years.

Fig. 2 Digital twin technology publication history and development: The Web of Science database (blue) contains 3371 published documents since 2013, with 95.6% of these published within the last five years. All databases (orange) contain 3893 manuscripts since 2013, with 3812 published in the preceding five years, accounting for 97.9% of the subject's literature

A search of the Web of Science (WoS) database also shows the publication history for the application of digital twin technology (see Fig. 3). A total of 1224 documents on the application of digital twin technology have been published since 2013, 1195 of them over the past five years. Nearly all of the manuscripts (97.2%) have been released over the previous five years. Since 2013, a total of 1616 articles have been indexed across WoS, the Chinese Science Citation Database℠ (CSCD), the Derwent Innovations Index (DII), the KCI-Korean Journal Database (KCI-KJD), and the SciELO Citation Index. Of these, 1585 studies have been published in the last five years, accounting for 98.1% of the total. These data show a spike in research on the topic and demonstrate a strong interest in the application of digital twin technology in various fields, which has facilitated advancements in recent years. This paper discusses current trends by reviewing literature published in the past decade.

Fig. 3 Application of digital twin technology publication history and development: The Web of Science database (orange) lists 1224 published digital twin technology application manuscripts since 2013, with 97.2% released within the past five years. 1616 articles have been indexed in all databases, with 1585 studies published in the last five years, indicating a significant increase in research on the topic
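The five-year shares quoted in this subsection follow from the document counts. As a minimal sketch in Python (the function name and dictionary labels are illustrative and not part of the original study), the shares can be recomputed from the counts given in the text. With the counts as quoted, three of the four shares match the stated values (95.6%, 97.9%, and 98.1%); the WoS application share evaluates to about 97.6%, slightly above the 97.2% stated, and the counts alone do not indicate which figure is the intended one.

```python
def share_percent(recent: int, total: int) -> float:
    """Percentage of `total` documents published in the recent window."""
    return round(100.0 * recent / total, 1)


# (documents in the last five years, documents since 2013), as quoted in the text.
counts = {
    "DT, WoS": (3222, 3371),
    "DT, all databases": (3812, 3893),
    "DT application, WoS": (1195, 1224),
    "DT application, all databases": (1585, 1616),
}

shares = {label: share_percent(recent, total)
          for label, (recent, total) in counts.items()}

for label, pct in shares.items():
    print(f"{label}: {pct}%")
```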

2.2 Digital twin concept

The use of "twins" is a long-standing concept with roots in NASA's Apollo program. In that program, two identical spacecraft were constructed so that the conditions experienced by the spacecraft in flight could be mirrored. The one remaining on Earth was the twin. During the lead-up to the mission, the twin was heavily used for training. During the mission, it was used on Earth to simulate potential outcomes, aiding the astronauts in orbit in difficult circumstances. The in-flight events were simulated accurately using the available flight data. In this respect, any prototype that mimics its actual counterpart in terms of operational conditions and behavior in real time is known as a twin [12].

Several scholars have, over the years, analyzed the DT notion and its definition. Zhang et al. [23] explored both the narrow and broad senses of the term "DT," along with its notion and attributes. In the narrow sense, they explained DT as an accumulation of virtual data that completely characterizes a hypothetical or existing physical manufacturing process from the microscopic resolution to the contextual geometrical level [23]. DT can be used to acquire any piece of data that could be obtained by examining a physically fabricated product. They also stated that DT is primarily an embedded system with the ability to simulate, supervise, evaluate, and control the state of the system and procedure, with the stipulation that DT is constructed by collecting data and digital production techniques centered on the control, information processing, and data transmission units. The recommended application structure consisted of physical space, virtual space, and an information processing layer. The DT is capable of achieving complete system mapping, dynamic modeling across the whole lifetime, and real-time optimization within the context of an application [23].

From the viewpoint of the production process on the shop floor, Bao et al. [47] identified three categories of DT models: product DTs, process DTs, and operation DTs. According to Schluse and Rossmann [48], a DT is a digital depiction of a real object, including its communication capabilities, that may operate as an intelligent node in the Internet of Things and Services. According to Shafto et al. [49], it is a unified multi-scale, stochastic framework of a system that utilizes the most up-to-date real product models, sensor-acquired data, and fleet history. A DT is the digital depiction of a tangible item, as defined by Fotland et al. [50]. Rather than monitoring the asset itself, the DT gathers and processes real-time data to determine metrics that cannot be observed at the hardware level. Several scholarly works, including [51,52,53,54], provide further definitions of DT.

The concept of DT has evolved, with various scholars and experts offering diverse interpretations and definitions. The historical reference to NASA's Apollo program, where identical spacecraft were used as twins for training, simulations, and in-flight event monitoring, serves as a valuable starting point. However, as the concept has matured and found implementation across multiple industries, it is evident that a more consolidated and universally applicable definition is needed. While several interpretations of DT exist, there is no agreement on the features that define digital twins. The definition and connotation of the digital twin concept have been widely discussed in academic works across a variety of sectors, as summarized in Table 1.

The subject is presented from several perspectives, all of which are valid. However, it is not possible to prove that any one of these theories is better than the others. The diverse interpretations highlight the multifaceted nature of the concept, making it challenging to arrive at a single, definitive definition. These interpretations range from emphasizing the accumulation of virtual data characterizing physical processes to highlighting DT as an embedded system for simulation, supervision, evaluation, and control. There are also distinctions between product DTs, process DTs, and operation DTs, underscoring the versatility of DT within manufacturing contexts. Additional definitions refer to DT as a digital depiction with communication capabilities and a unified multi-scale framework utilizing real product models, sensor data, and historical records. The challenge lies in synthesizing these various perspectives into a consolidated definition that can be applied universally, irrespective of industry. A proposed consolidated definition of DT could be as follows:

Digital Twin (DT): A DT is a digital representation of a physical entity, process, or system that operates in real time. It encompasses a broad spectrum of digital data, including geometrical, behavioral, and operational information. A DT serves as a dynamic and interconnected model that can simulate, monitor, evaluate, and control the state and behavior of its physical counterpart while facilitating data-driven decision-making. It comprises a comprehensive mapping of the entire system, supports dynamic modeling throughout its lifecycle, and enables real-time optimization within the context of its application.

This consolidated definition recognizes the fundamental attributes of a DT (as illustrated in Fig. 4), such as its real-time nature, the ability to mimic and control the physical entity, and its specific operational utility. It provides a framework that can be employed across various industries and contexts, emphasizing the versatility and practicality of the DT concept. While the interpretations presented by various scholars remain valid, a consolidated definition serves to unify and clarify the core characteristics of DT, making it a valuable reference point for widespread utilization.
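The consolidated definition can be made concrete with a small data structure. The following Python sketch is illustrative only: the class name, its fields, and the toy behaviour model are assumptions introduced here, not an implementation taken from the reviewed literature. It shows one way a twin might hold geometrical, behavioural, and operational data and expose the monitor, simulate, evaluate, and control roles named in the definition.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class DigitalTwin:
    """Hypothetical minimal twin holding geometry, behaviour, and operational data."""
    geometry: Dict[str, float]                   # geometrical information
    behaviour: Callable[[float, float], float]   # (state, command) -> next state
    state: float = 0.0                           # latest mirrored state
    history: List[float] = field(default_factory=list)  # operational records

    def monitor(self, measurement: float) -> None:
        """Mirror a sensor reading from the physical counterpart."""
        self.state = measurement
        self.history.append(measurement)

    def simulate(self, command: float, steps: int) -> List[float]:
        """Roll the behaviour model forward without touching the real asset."""
        s, trajectory = self.state, []
        for _ in range(steps):
            s = self.behaviour(s, command)
            trajectory.append(s)
        return trajectory

    def evaluate(self, trajectory: List[float], limit: float) -> bool:
        """Return True when every predicted state stays within the limit."""
        return all(x <= limit for x in trajectory)

    def control(self, candidates: List[float], steps: int, limit: float) -> float:
        """Pick the largest candidate command whose prediction stays within the limit."""
        safe = [c for c in candidates
                if self.evaluate(self.simulate(c, steps), limit)]
        if not safe:
            raise ValueError("no candidate command keeps the prediction within the limit")
        return max(safe)


# Toy usage: an assumed first-order model s' = 0.5 * s + u.
twin = DigitalTwin(geometry={"length_m": 1.2},
                   behaviour=lambda s, u: 0.5 * s + u)
twin.monitor(20.0)
best = twin.control(candidates=[5.0, 10.0, 30.0], steps=3, limit=40.0)
print(best)  # 10.0
```

In this toy run, the command 30.0 is rejected because its predicted trajectory exceeds the limit on the second step, so the twin selects 10.0 before anything is sent to the physical asset.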

2.3 Key enabling technologies of the DT platform

Digital twin technology promotes the development of intelligent processes. Traditional computer-aided technologies (CAx) simulation environments are not internet-connected, so data access is a key problem for digital twin technology. Some scholars have used Web frameworks to solve this problem. Schroeder et al. [55] proposed a Cyber-Physical System (CPS) solution in which digital twins of sensors, PLCs, and other industrial equipment were constructed, together with a Web service-based method of data access and management for exchanging data between digital twins and external systems. Building on this, a data exchange model based on AutomationML (Automation Markup Language) was proposed. Similarly, Liu et al. [29] proposed a Web-based system framework that integrates CPS, digital twin modeling technology, event-driven distributed cooperation mechanisms, and Web technology to guide the rapid configuration and operation of a digital twin-based Cyber-Physical Production System (CPPS). Moreover, Urbina Coronado et al. [56] described a Manufacturing Execution System (MES) that was powered by cloud computing tools and Android devices and integrated MTConnect data with production data collection, and applied it to track a production run of titanium parts. Visualization of digital twins poses another challenge, which Zhang et al. [57] tackled by developing a simulation platform with 3D graphics for production line optimization.

Some scholars use Web 3D technology to realize remote online visualization of the DT. Eamnapha et al. [58] proposed and implemented a graphics and physics engine for the rapid development of 3D Web systems. Khrueangsakun et al. [59] applied these results to digital twinning technology and realized a Web front end for the digital twin of a manipulator, providing 3D display, monitoring, and control. Han et al. [60] proposed a lightweight, Web-based visual display method for the 3D twin model and verified it on a Web-based, digital twin-driven 3D visual monitoring system for continuous casting machines.

Yu et al. [61] proposed using an ontology expression language to build a product DT model and store product configuration information in it, providing ideas for controlling product configuration in a fully three-dimensional mode. In the realm of manufacturing, Zou et al. [62] proposed DT models tailored for machined parts, incorporating key feature information to address modeling challenges. Additionally, Söderberg et al. [63] presented a digital twinning modeling method for real-time mapping of product geometric information during the design and processing stages, ensuring geometric size accuracy. Wärmefjord et al. [53] devised an inspection strategy utilizing DT models with precise geometric assurance directions and integrating geometric shape data for product inspection. Tool state variations (wear, temperature, etc.) are often unpredictable in traditional simulation and analysis environments. Botkina et al. [64] established a tool DT model oriented to the design and manufacturing processes to analyze the state changes of tools over their life cycle. Also, Sun et al. [65] discussed the definition, composition, and construction method of a tool DT model oriented to the cutting process, explored tool health management, tool life prediction, tool selection, and tool change methods driven by the fusion of the digital twin model and actual tool sensor data, and verified the proposed methods through machine tool processing. Based on a comprehensive assessment of the literature on digital twin technology, the essential technologies included in the majority of DT platforms are depicted in Fig. 5.

Fig. 5 Key technologies involved in the DT platform: These include Extended Reality (XR) for digital modeling and visualization of physical entities, cloud computing for data storage and easy data access, the Internet of Things (IoT) for constant data transmission between the physical and virtual entities, and Artificial Intelligence (AI) for automatic analysis of the obtained data to provide insight and make predictions

The DT is commonly regarded as a single technology. However, it can be more precisely conceptualized as a system that integrates multiple enabling technologies to create an intelligent virtual model of a physical object. This model facilitates a continuous and reciprocal exchange of information between the twin entities. The DT comprises various modules, including physical entities, virtual models, data, connections, and innovative services. The different modules require different enabling technologies to support their functions and fully realize the DT.

Since DT necessitates expertise in a wide range of fields, including but not limited to dynamics, structural mechanics, acoustics, thermals, electromagnetism, materials science, hydro-mechatronics, and control theory, a thorough grounding in the physical world is an absolute necessity. Physical things and processes are mapped to the digital world to bring the models closer to reality by utilizing knowledge, sensing, and measuring technology.

Various modeling technologies are necessary to create a virtual model, and utilizing visualization technologies is crucial to track physical entities and processes in real time. The precision of digital representations has a direct impact on the efficacy of the DT. Hence, it is imperative to subject the models to verification, validation, and accreditation (VV&A) technologies and optimize them using optimization algorithms. In addition, using simulation and retrospective technologies can facilitate the prompt identification of quality issues and validation of viability. Given the need for virtual models to adapt to ongoing changes in the physical world, it is paramount to employ model evolution technologies to facilitate model updates.
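The validation and model-evolution steps described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a method from the reviewed literature: the virtual model is assumed to be a one-parameter linear model y = gain · u, the root-mean-square error (RMSE) serves as the validation metric, and "evolution" is a least-squares refit of the gain whenever the error exceeds a tolerance. All function names are hypothetical.

```python
import math
from typing import Sequence, Tuple


def rmse(predicted: Sequence[float], measured: Sequence[float]) -> float:
    """Root-mean-square error between model predictions and sensor data."""
    return math.sqrt(sum((p - m) ** 2 for p, m in zip(predicted, measured))
                     / len(measured))


def refit_gain(inputs: Sequence[float], measured: Sequence[float]) -> float:
    """Least-squares gain for the assumed model y = gain * u (line through the origin)."""
    return (sum(u * y for u, y in zip(inputs, measured))
            / sum(u * u for u in inputs))


def validate_and_evolve(gain: float, inputs: Sequence[float],
                        measured: Sequence[float],
                        tolerance: float) -> Tuple[float, float]:
    """Keep the model if it validates; otherwise update it from the latest data."""
    error = rmse([gain * u for u in inputs], measured)
    if error <= tolerance:
        return gain, error
    new_gain = refit_gain(inputs, measured)
    return new_gain, rmse([new_gain * u for u in inputs], measured)


# The physical system has drifted from gain 2.0 to roughly 2.2.
inputs = [1.0, 2.0, 3.0]
measured = [2.2, 4.4, 6.6]
new_gain, new_error = validate_and_evolve(2.0, inputs, measured, tolerance=0.1)
print(round(new_gain, 3), round(new_error, 6))
```

The stale model fails validation (its RMSE is about 0.43, above the tolerance of 0.1), so the gain is re-estimated from the measurements and the updated model tracks the drifted system.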

Furthermore, the operation of DT generates a substantial amount of data. Data fusion and advanced data analytics technologies are essential for extracting valuable insights from unprocessed data. DT encompasses a range of services, such as operational, resource, knowledge, and platform services. The provision of these services necessitates software solutions, platform architecture technology, service-oriented architecture (SOA) technologies, and knowledge technologies. The various components of DT, namely the physical entity, virtual model, data, and service, are interconnected to facilitate communication and information sharing. These connections rely on Internet, cyber-security, interface, communication protocol, and interaction technologies.

Also, the enabling technologies themselves can take many forms depending on the DT’s use case. For example, although it is known that a communication medium is needed between the real and digital twins, the choice of the specific communication protocol depends entirely on the communication requirements of the DT’s implementation. Table 2 presents a summary of the key enabling technologies required for the various DT modules.

2.3.1 Extended reality technologies

Extended Reality (XR) technologies encompass the collective environment comprising both physical and virtual elements, where humans interact with machines. This is anticipated to bring about significant changes in the respective deployment domain. XR technologies, which include Virtual Reality (VR), Augmented Reality (AR), and Mixed Reality (MR), greatly extend the possibilities of digital twins by offering immersive and interactive experiences [66, 67]. The XR framework has been explored by researchers. Gong et al. [68] developed a framework for integrating XR systems in manufacturing, focusing on product development methodology and enhancing usability and user acceptance. Pereira et al. [69] proposed a framework for developing collaborative XR systems, focusing on object manipulation in VR. User tests validated their framework's effectiveness in enabling real-time interaction in mixed-reality environments. Catalano et al. [70] presented a DT-based framework for extended reality applications, demonstrating its effectiveness in a brake disk production company, and suggested future research using 5G networks.

Within the domain of VR, the fundamental idea is the creation of a completely computer-generated environment. VR utilizes advanced graphics rendering methods to create realistic 3D environments and incorporates algorithms for rendering, shading, and spatial interaction. Typically, motion-tracking algorithms are incorporated to observe user motions and make appropriate adjustments to the virtual world. VR content makers produce and convert 3D models, 2D images, and spatial sounds into formats that can be processed by machines, taking into account the intended purpose, device, and level of engagement. VR experiences can be categorized as either static, which involves the immersive viewing of 360° spherical images or films, or dynamic, which allows users to freely move and interact inside the VR environment. With its advanced capabilities, the technology has been studied in various DT application works. Kaarlela et al. [71] studied digital twins and virtual reality environments for safety training, focusing on practical use cases for local small- and medium-sized enterprises and highlighting the current maturity of virtual reality technology. Pérez et al. [72] introduced a novel process automation methodology using a virtual reality interface that enhances implementation and real-time monitoring, and validated it in an assembly manufacturing process. Kwok et al. [73] found that perceived usefulness, ease of use, behavioral control, self-efficacy, and attitude influence users' acceptance of a system, explaining 60% of behavioral intention variance. Burghardt et al. [74] developed a method for programming robots using virtual reality and digital twins. Their virtual environment was a digital twin of a robotic station, which allowed the robot to replicate human movements in complex robotization situations. An example was presented for cleaning ceramic casting molds.

Augmented Reality (AR) superimposes digital data onto the user's physical environment, commonly through devices such as smartphones, tablets, or AR glasses. The fundamentals of AR encompass computer vision algorithms that facilitate object recognition and tracking. These algorithms include markerless tracking, simultaneous localization and mapping (SLAM), and image recognition algorithms. These technologies augment the user's experience by seamlessly integrating digital content with their physical environment. He et al. [75] proposed a mobile augmented reality remote monitoring system to assist operators with low knowledge and experience levels in understanding digital twin data and interacting with devices. Their system analyzed historic and real-time data, enriched 2D data with 3D models, and used a cloud-based machine learning algorithm to transform knowledge into live presentations on a mobile device. They conducted a scaled-down case study that showed consistent measurable improvements in human–device interaction for novice users. In another study, Zhu et al. [76] presented an AR system using Microsoft HoloLens to visualize DT data of a CNC milling machine in a manufacturing environment. Their system allowed operators to monitor and control the machine tool simultaneously, providing an intuitive interface for efficient machining. This laid the groundwork for future intelligent control processes using AR devices. Bogosian and colleagues showcased a prototype mobile AR system for robotic automation training for construction industry workers, allowing users to interact with a virtual robot manipulator [77].

Mixed Reality (MR) integrates components from both VR and AR, enabling the simultaneous existence and interaction of digital and physical objects in real time. MR enables users to control virtual elements in a way consistent with real-world interactions, with the digital information responding and reacting accordingly. To fully engage with this experience, it is necessary to utilize spatial mapping algorithms to comprehend and engage with the physical surroundings. Gesture detection and hand-tracking algorithms enable users to interact with virtual items, seamlessly integrating the real and virtual worlds. The study led by Osorto Carrasco found that MR-based design review can effectively communicate 85% of information to clients, outperforming traditional 2D methods by 70% [78]. It also enhanced client comprehension of material aesthetics, allowing for the replacement of physical samples or mockups during the construction finishing stage. Tu et al. [79] created a human–machine interface (HMI) for digital twin-driven services utilizing an industrial crane platform. A Microsoft HoloLens 1 device enabled crane operators to oversee and manipulate movement through interactive holograms and bidirectional data transfer in an MR system. The control accuracy of the prototype was assessed with 20 measurements, which revealed minimal disparities between the desired and actual positions. Choi et al. [80] proposed an MR system designed for safety-conscious human–robot collaboration (HRC). Their system incorporated deep learning techniques and the creation of digital twins. It calculated the minimum safe distance in real time and provided task guidance to human operators. In addition, the system employed a pair of RGB-D sensors to reconstruct and monitor the operational surroundings, while utilizing deep learning-based instance segmentation to enhance alignment between the physical robot and its virtual counterpart. The 3D offset-based safety distance calculation approach enabled real-time implementation while maintaining accuracy.
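As a rough illustration of how a minimum separation between a robot and a human operator might be computed, the sketch below takes the closest pair of 3D points from two point sets and subtracts a radius offset for each side. This is not the method of Choi et al. [80]; the function names, the offsets, and the sample coordinates are all assumptions made for the example.

```python
import math

def min_separation(robot_pts, human_pts, robot_offset=0.0, human_offset=0.0):
    """Smallest gap between two point sets, each point padded by an offset radius."""
    closest = min(math.dist(r, h) for r in robot_pts for h in human_pts)
    # Each point stands for a sphere of the given radius, so subtract both radii.
    return closest - robot_offset - human_offset

def is_safe(robot_pts, human_pts, threshold, **offsets):
    """True if the padded separation is at least the required threshold."""
    return min_separation(robot_pts, human_pts, **offsets) >= threshold

# Hypothetical sample points in metres.
robot = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0)]
human = [(2.0, 0.0, 0.0), (3.0, 0.0, 0.0)]
gap = min_separation(robot, human, robot_offset=0.1, human_offset=0.2)
```

The brute-force pairwise search is quadratic in the number of points; a real-time system would likely need a spatial index or a coarser representation.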

Real-time data synchronization is crucial when integrating XR technology with digital twins. Algorithms that handle data flow and synchronization and that ensure consistency between digital and physical representations are essential. Additionally, XR used in digital twins frequently requires compatibility with several data sources and formats, which necessitates algorithms for integrating and transforming data, as well as established communication protocols to ensure smooth connectivity.
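One simple way to keep a virtual representation consistent when messages arrive late or out of order is to timestamp every update and ignore any update older than the one already applied. The sketch below illustrates this idea only; the `TwinState` class and the signal name are hypothetical and do not come from any of the cited systems.

```python
from dataclasses import dataclass, field

@dataclass
class TwinState:
    values: dict = field(default_factory=dict)  # signal name -> latest value
    stamps: dict = field(default_factory=dict)  # signal name -> timestamp of that value

    def apply(self, name, value, timestamp):
        """Apply an update unless a newer (or equal) one was already applied."""
        if timestamp <= self.stamps.get(name, float("-inf")):
            return False  # stale or duplicate message, ignore it
        self.values[name] = value
        self.stamps[name] = timestamp
        return True

twin = TwinState()
twin.apply("spindle_temp", 41.0, timestamp=10.0)
twin.apply("spindle_temp", 43.5, timestamp=12.0)
late = twin.apply("spindle_temp", 40.0, timestamp=11.0)  # arrives out of order
```

This last-write-wins rule assumes that the timestamps come from clocks that are themselves synchronized.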

Advanced human–computer interaction (HCI) principles are essential for user engagement in XR and digital twins. These interfaces encompass gesture recognition and voice commands, typically implemented using machine learning algorithms. Furthermore, ensuring optimal performance is of utmost importance for XR systems, which necessitates techniques such as level-of-detail rendering and real-time adaptive graphics modifications. These approaches are employed to maintain a seamless and responsive user experience.
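Level-of-detail rendering can be pictured as choosing a coarser model for objects that are farther from the viewer. The following minimal sketch selects a detail level from viewer distance; the thresholds and level names are assumptions chosen for illustration.

```python
import bisect

# Hypothetical distance thresholds (metres) separating the detail levels.
LOD_THRESHOLDS = [5.0, 20.0, 60.0]
LOD_NAMES = ["high", "medium", "low", "billboard"]

def select_lod(distance_m):
    """Return the detail level for an object at the given viewer distance."""
    return LOD_NAMES[bisect.bisect_right(LOD_THRESHOLDS, distance_m)]
```

For instance, `select_lod(2.0)` picks the most detailed model, while an object 100 m away falls back to the cheapest representation.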

Extended Reality technologies greatly enhance the value and efficacy of DT solutions in various industries. This leads to increased effectiveness, cost reduction, and improved decision-making. These technologies provide a more authentic and engaging visualization experience, enabling users to navigate and engage with DT in a virtual world, hence boosting their comprehension of physical systems [81]. VR enables remote collaboration and communication, enabling individuals to concurrently access and engage with DT from distant locations [82]. Furthermore, these technologies facilitate iterative design processes by enabling designers to electronically evaluate and simulate their concepts, hence minimizing the need for actual prototypes and the accompanying expenses [83]. In addition, the integration of XR technologies with DT can improve maintenance and support tasks by offering real-time repair instructions and instructing remote professionals with augmented information. This reduces downtime and enhances efficiency [84].

Comprehending and applying these technical elements of XR in the framework of digital twins helps in developing more advanced and efficient immersive experiences.

2.3.2 Cloud computing technology

Cloud computing is a foundational technology in the implementation of digital twins, providing scalable resources, storage, and processing capabilities. The underlying principles of cloud computing include resource virtualization, abstracting physical resources into virtualized components, and enabling on-demand access to computing infrastructure without managing the underlying hardware. Scalability is a key feature that allows digital twin systems to dynamically scale resources based on demand, crucial for handling the computational requirements of complex simulations and real-time data processing. Additionally, cloud computing operates on various service models such as Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS), offering a range of solutions from virtual machines to ready-to-use software.

Algorithms and methodologies within cloud computing for digital twins encompass distributed computing algorithms, such as MapReduce, for efficient processing of large datasets across multiple servers. Load-balancing algorithms distribute incoming requests to optimize resource utilization and enhance overall efficiency. Data partitioning, replication, and containerization utilizing technologies like Docker and Kubernetes are key to managing data and orchestrating application components in cloud environments. Integration and interoperability are facilitated through APIs, microservices architecture, and message queue systems like RabbitMQ or Apache Kafka, ensuring decoupled communication and flexibility.
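The MapReduce pattern mentioned above can be sketched on a single machine: a map step emits key-value pairs, a shuffle step groups them by key, and a reduce step combines each group. The example below computes a mean per sensor; the sensor identifiers and readings are invented, and a real deployment would distribute the phases across servers with a framework rather than run plain Python functions.

```python
from collections import defaultdict

def map_phase(record):
    """Emit (key, value) pairs; here one (sum, count) pair per sensor reading."""
    sensor_id, value = record
    yield sensor_id, (value, 1)

def shuffle(pairs):
    """Group emitted values by key, as a framework would do between phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(key, values):
    """Combine partial (sum, count) pairs into a mean per sensor."""
    total = sum(v for v, _ in values)
    count = sum(c for _, c in values)
    return key, total / count

# Hypothetical readings: (sensor id, measured value).
readings = [("s1", 20.0), ("s2", 30.0), ("s1", 22.0), ("s2", 34.0)]
pairs = [kv for record in readings for kv in map_phase(record)]
means = dict(reduce_phase(k, vs) for k, vs in shuffle(pairs).items())
```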

Virtualizing composite multimodal equipment or services necessitates substantial computational capabilities. The requirement for distributed and parallel computing arises from the demand for real-time reactivity, which is a property of the DT and involves the synchronization of real and virtual elements. Cloud computing technology therefore appears frequently in DT-related studies.

The cloud system serves as a data storage facility with powerful processing capabilities. It also facilitates the seamless connection and hosting of virtual counterparts of diverse subsystems comprising a complex DT. Meanwhile, the DT focuses on synchronizing physical and virtual assets [27, 46]. The purpose of cloud computing technology in DT varies depending on the specific deployment area. For instance, within the manufacturing sector, it can function as a shared platform for companies to exchange information about the failure mechanisms and maintenance requirements of comparable equipment, to facilitate the implementation of DT-enabled solutions such as predictive maintenance, while in the healthcare industry, it involves utilizing the cloud as a collaborative information platform for medical service providers and patients [85, 86].

The implementation of cloud computing in DT has been explored by researchers. In view of this, Xu et al. [27] developed a system called digital twin-based industrial cloud robotics (DTICR) to control industrial robots. The study categorized the DTICR into four distinct components: physical industrial robots, digital industrial robots, robotic control services, and digital twin data. Furthermore, the ability to operate robots was consolidated into a service called Robot Control as-a-Service (RCaaS), which was built on manufacturing functionalities. Following the simulation of the manufacturing process, RCaaSs were assigned to practical robots for robotic control. Their DTICR successfully integrated and combined digital and physical industrial robots, allowing precise sensing control. The study by Liu et al. [85] proposed a cloud-based healthcare system, which utilizes digital twin healthcare technology to oversee, diagnose, and forecast health conditions using wearable medical equipment. They established a framework for reference, investigated essential technologies, and showcased the practicality in real-time monitoring scenarios. Also, the study by Wang et al. [36] developed a DT paradigm employing advanced driving assistance systems (ADAS) for linked automobiles. In their study, the data were uploaded to a server through a vehicle-to-cloud connection, where it was processed and subsequently delivered back to the vehicles. Their cooperative ramp merging case study showcased the advantages of utilizing a vehicle-to-cloud ADAS for transportation systems, such as enhanced mobility and environmental sustainability, while experiencing low communication delays and packet losses.

Security and privacy considerations in cloud-based digital twins involve Identity and Access Management (IAM) for controlling resource access, data encryption mechanisms like TLS and AES to protect sensitive information, and compliance auditing tools to monitor activities and adhere to data protection regulations. Cloud computing's role in digital twins extends to providing standardized data interchange formats such as JavaScript Object Notation (JSON) or eXtensible Markup Language (XML) for seamless information exchange between different components. In essence, the comprehensive use of cloud computing principles, algorithms, and methodologies supports the robust development and operation of digital twins by addressing scalability, data management, security, and interoperability challenges.

2.3.3 The Internet of Things

The Internet of Things (IoT) plays a pivotal role in the development and functionality of digital twins, enhancing their capabilities through the integration of sensor data, connectivity, and real-time monitoring. At its core, IoT involves connecting physical devices and objects to the internet, allowing them to collect and exchange data. This connectivity enables a continuous flow of information between the physical entity and its digital representation. The IoT technology enables the DT. However, the DT can also support the IoT by providing a self-adaptive and self-integrating digital representation of IoT devices. This helps make the IoT framework resilient to dynamic changes. Additionally, the DT can allow virtual simulations of large sensor networks [87, 88].

Underlying the principles of IoT in digital twins is the concept of sensor integration [89, 90]. Various sensors embedded in the physical system continuously collect data related to its state, performance, and surrounding environment. These sensors can include temperature sensors, accelerometers, cameras, and other types, depending on the nature of the system being modeled. The collected data are then transmitted to the digital twin, providing a real-time reflection of the physical entity. This was demonstrated by Yasin et al. [91], who explored the feasibility of implementing DT for small- and medium-sized enterprises (SMEs) by developing a low-cost framework and providing guidance to overcome technological barriers. They presented an experimental scenario involving a resistance temperature detector sensor connected to a programmable logic controller for data storage and analysis, predictive simulation, and modeling. Their results showed that sensor data can be integrated with IoT devices, enabling DT technologies and allowing real-time data and key performance indicators to be displayed in a 3D model. Algorithms in IoT-based digital twins focus on data processing, analysis, and communication [92]. Machine learning algorithms are one such approach; they are employed for predictive analytics, allowing the digital twin to anticipate future states based on historical data trends [89]. Hofmann et al. [93] utilized DT to aid truck dispatching operators by employing simulation-based performance predictions and an IoT platform to determine the most effective strategies. This system was implemented as a cloud-based service to ensure scalability.

Integrating IoT with digital twins often involves the use of standardized communication protocols such as Message Queuing Telemetry Transport (MQTT), MTConnect, Constrained Application Protocol (CoAP), and OPC UA [94, 95]. Some of these are lightweight and efficient protocols designed for IoT communication, while others offer a platform capable of interpreting and converting data from various protocols. Together they facilitate the transmission of data between physical devices and their digital twins in a standardized and interoperable manner.
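To make the publish/subscribe model of MQTT more concrete, the sketch below checks whether a topic name matches a subscription filter, using the two MQTT wildcards: `+` for exactly one topic level and `#` for all remaining levels. It is a simplified illustration rather than a client implementation; it performs no network communication, and it ignores the special handling of topics that begin with `$`. The topic names are hypothetical.

```python
def topic_matches(topic_filter, topic):
    """Return True if an MQTT topic name matches a subscription filter."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            # Multi-level wildcard: valid only as the last level of the filter;
            # it matches the parent level and everything below it.
            return i == len(filter_levels) - 1
        if i >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[i]:
            return False
    return len(filter_levels) == len(topic_levels)
```

A twin that subscribes to `plant/+/temp` would receive temperature messages from every production line, whereas `plant/#` would deliver every message published under `plant`.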

2.3.4 Artificial intelligence technology

Artificial Intelligence (AI) technology profoundly transforms the capabilities of digital twins, imbuing them with the ability to simulate, analyze, and make informed decisions based on intricate data. DT enhances awareness of physical assets by the utilization of specialized intelligence capable of processing numerical data and generating domain-specific conclusions at a faster rate than a human expert [96]. It can deduce significant and practical information from its physical counterpart and surroundings. At the core of this advantage is the integration of principles rooted in various machine learning (ML) algorithms such as classification ML, deep learning, traditional ML, supervised ML, unsupervised ML, regression ML, and reinforcement learning [96,97,98,99,100,101,102]. These machine learning principles lay the foundation for predictive analytics, employing methodologies such as time-series analysis for forecasting and anomaly detection to identify deviations from normal behavior.
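A very simple form of anomaly detection on a time series compares each new value with the mean and standard deviation of a preceding window and flags values that deviate strongly. The sketch below shows this rolling z-score idea; the window length, the threshold, and the vibration readings are assumptions for illustration, and the approach is far simpler than the learning-based methods cited above.

```python
from statistics import mean, stdev

def detect_anomalies(series, window=5, threshold=3.0):
    """Return indices whose value deviates strongly from the preceding window."""
    anomalies = []
    for i in range(window, len(series)):
        history = series[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # Skip flat windows, where the z-score is undefined.
        if sigma > 0 and abs(series[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies

# Hypothetical vibration amplitudes with one spike at index 6.
vibration = [1.0, 1.1, 0.9, 1.0, 1.1, 1.0, 5.0, 1.0, 0.9, 1.1]
flagged = detect_anomalies(vibration)
```

Note that a spike inflates the standard deviation of the windows that follow it, so values immediately after an anomaly are harder to flag with this scheme.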

However, the selection of a specific machine learning model/algorithm is dependent upon the application scenario and the services of the DT. Studies have been undertaken to incorporate physics-based and data-driven models into the DT, such as in anomaly detection and fault diagnosis. This integration aims to provide transparency regarding the factors influencing the predictions. Chakraborty et al. [103] conducted a study that integrated both methodologies to create a digital twin framework. This framework facilitates a prediction service that can forecast the future parameters of linear single-degree-of-freedom structural dynamic systems as they evolve in two operational time scales. The study utilized a two-part approach. Firstly, a physics-based nominal model was employed to process the data and make predictions about the responses. Secondly, a data-driven machine learning model called Mixture of Experts and Gaussian Processes (ME-GP) was used to combine the extracted information and forecast future behaviors of each parameter in the time series. Their digital twin was robust and precise, capable of offering reliable forecasts for future time intervals. Previous research employed machine learning in DT systems that involve tasks requiring remote-control support, such as space station maintenance and remote surgery [104, 105]. ML was utilized in certain instances to address optimization concerns, where data-driven models were employed to minimize or maximize a process parameter [31, 33, 35]. Artificial neural networks (ANNs) have been employed in DTs to forecast the future behavior of physical assets [106]. In addition to machine learning, natural language processing (NLP), knowledge representation, and reasoning mechanisms facilitate human–machine interaction within digital twins further enriching the capabilities of digital twins.

The integration of AI with digital twins involves a holistic approach, combining diverse principles and methodologies such as machine learning, NLP, computer vision, and knowledge representation. This fusion enables digital twins to not only replicate physical systems but also to analyze data, predict future states, and make informed decisions, contributing to their effectiveness in dynamic and evolving environments.

Across the reviewed literature, it can be established that there exists a symbiotic relationship among the enabling technologies. A digital twin is a system that integrates Extended Reality (XR), cloud computing, the Internet of Things (IoT), and Artificial Intelligence (AI). IoT devices collect real-time information from physical entities, which is then transmitted to cloud computing platforms for storage and processing. AI is then used to analyze and interpret the vast datasets generated by IoT devices, enhancing the predictive capabilities of the digital twin. AI models, facilitated by cloud-based environments, ensure that digital twins adapt and improve over time, remaining effective in dynamic scenarios.

Extended Reality technologies provide immersive visualizations for digital twins, while cloud computing supports remote collaboration and communication. AI models process incoming IoT data to identify patterns, anomalies, and critical events, enabling digital twins to respond dynamically to changing conditions. AI-driven chatbots or voice assistants integrated with XR technologies enhance human interactions with digital twins. This symbiotic relationship allows digital twins to mirror physical systems, simulate diverse scenarios, and offer valuable insights for improved decision-making across various domains. Table 3 presents a high-level overview of how these technologies contribute to digital twins, considering their definitions, roles, integration points, key algorithms or methods, data management, security or privacy, and some use cases from the literature.

2.4 Digital twin characteristics

The DT consists of a physical space with a physical entity and a virtual space with a virtual entity, as well as a connection that allows data to flow from the real space to the virtual space and information to flow from the virtual space to the real space. A DT can therefore be thought of as a digitization that represents an actual thing and its workings. The activity of the physical item is translated into the behavior of the virtual object. All elements are highly synchronized with one another. DT models the physical product’s mechanical, electrical, software, and other qualities based on the most up-to-date, synchronized data from sensors to optimize the product’s performance [107].

A DT may incorporate several physical rules, scales, or probabilities that reflect the physical object’s fundamental condition. This may be based on the physical model’s historical data [2, 108]. Different physics models, such as those for aerodynamics, fluid dynamics, electromagnetics, and stresses, collaborate with various system descriptions. Additionally, the usage of several scales allows the simulation to adjust to the needed level in terms of time limitations. For example, operators may visit various areas of each component while navigating the Digital Twin. The connectivity and real-time data exchange capabilities of DTs enable keeping the virtual item and the real one in sync with each other at regular intervals [109]. In this manner, information primarily flows from the real world into the digital world, and the virtual object may communicate data and information to the real object [110]. In addition to the dynamic data observed in real time and gathered from various data sources characterizing the situation of the physical entity and its environment, the virtual object also analyzes previous data from the physical object, including maintenance and operating histories. To repair some faults, restart a device after an interruption, or coordinate the actions of many robots, the virtual object delivers information to improve system maintenance, and it could generate more forecasts [109].

Digital Twins are dynamic representations that bridge the physical and virtual aspects of an entity, allowing for real-time mirroring and translation of activity into the behavior of the virtual object. They include mechanical, electrical, and software qualities, allowing for a holistic view derived from synchronized information. DTs incorporate diverse physics models, demonstrating their comprehensive nature. The real-time data exchange capabilities highlight the practical utility of DTs, with the virtual object's role expanding beyond real-time observations to include analysis of historical data, fault repair, device restarts, and robotic actions. Digital Twins are not just digitized replicas but intelligent, adaptable systems deeply connected to their physical counterparts.

2.5 The framework of digital twin

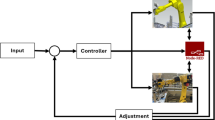

As illustrated in Fig. 6, three components constitute the DT utilization framework: the physical space, the information processing layer, and the virtual space. While being used, DT technology is capable of producing a complete physical system map, dynamic modeling of the whole life span, and ultimately an optimized procedure in real-time. The two-way connecting and mapping of real and virtual worlds are made possible through means of sharing information. Iterative optimization and the interaction of two spaces' regulatory systems enable intelligent decision-making.

2.5.1 The physical space

The physical space comprises people, equipment, materials, laws, and the environment, and it reflects the intricate, diversified, and dynamic nature of the manufacturing space. All types of items associated with product development and manufacture are included in the resources layer, including production resources (equipment for production lines), high-performance computer clusters, product data, and software resources. These items are scattered across different locations and need to be linked via IoT technologies. The data from the physical world are then gathered, combined, and used for optimization. For instance, intelligent sensors and wireless networking equipment are utilized in production to gather and send information from a variety of sources with varying characteristics, such as information on the properties, state, functioning, defects, and disruptions of the system. Digital devices gather these data and send them across the network. Connected physical devices also respond in a timely, intelligent manner in accordance with the guidance received from the virtual space. Data and device attributes can be mapped (both directly and indirectly) to connect the virtual and real worlds.

Data gathering and transmission protocols are crucial in DT technology, enabling efficient and accurate data collection from various sources within the physical space and transfer to the information processing layer. Key protocols and interfaces include Open Platform Communications (OPC) and its Unified Architecture (OPC UA), Representational State Transfer (REST), Message Queuing Telemetry Transport (MQTT), Constrained Application Protocol (CoAP), the Modbus serial communication protocol, HTTP, and HTTPS. They enable real-time monitoring, analysis, and control by collecting data from sensors, devices, and systems within the physical space. The appropriate protocol is selected according to the specific requirements of the DT application and the nature of the data sources, considering factors such as data volume, latency requirements, device compatibility, and security.

Security protocols like Transport Layer Security/Secure Sockets Layer (TLS/SSL) and Open Authorization (OAuth) secure data transmission between the physical space and its digital counterpart. These protocols protect data from eavesdropping and tampering and manage authorization and access rights. By combining these protocols, the digital twin can connect with physical components, gather accurate data, and transmit it securely.

2.5.2 The information processing layer

The information processing layer serves as the connection between the real and the virtual space: the information exchanged at this level allows the physical and digital worlds to be mapped to each other and to interact in both directions. There are three major operational components at this level: data processing, data storage, and data mapping. Data processing consists of four steps: data collection, data preprocessing, data analysis and mining, and data fusion. The data sources are the various databases of Management Information Systems (MISs), Programmable Logic Controllers (PLCs), and production systems.

DT relies on specific protocols for data collection, including MQTT for real-time data transmission from sensors and IoT devices, HTTP/HTTPS for Web-based data collection from APIs and cloud services, Simple Network Management Protocol (SNMP) for network device monitoring, OPC Unified Architecture (OPC UA) for industrial and manufacturing applications, and Constrained Application Protocol (CoAP) for real-time streaming and data collection in resource-constrained devices for IoT services [111,112,113]. These protocols ensure efficiency and accuracy in data collection and transmission. Secure communication protocols and authentication mechanisms are implemented to prevent unauthorized access, data interception, and data tampering, thereby preserving data integrity. Preprocessing of the raw data set consists of rule-based data cleansing, data structuring, and primary clustering [23]. Data preprocessing is a crucial step in the DT workflow, involving various protocols to clean, transform, and prepare data for analysis. These protocols depend on the data’s nature and application requirements. Common interfaces, formats, and operations include Open Database Connectivity (ODBC), Extensible Markup Language (XML), JavaScript Object Notation (JSON), custom data cleaning, data normalization, aggregation, compression, and encryption [114]. Custom preprocessing pipelines are often tailored to specific applications, ensuring high-quality, reliable data. Integrating these protocols enhances security, protects sensitive data, and mitigates cyber threats. Data analysis and mining are crucial for digital twins to provide insights and predictions. Advanced machine learning techniques like regression, clustering, and deep learning are used for predictive maintenance, anomaly detection, and optimization. Data analysis security involves anonymizing or encrypting sensitive information, particularly when sharing results or collaborating with external parties, to prevent data leakage during the analysis process. Digital twin technology uses data mining algorithms like association rule mining, decision trees, and clustering to discover patterns and trends. Security measures protect intellectual property by safeguarding sensitive data, while privacy-preserving protocols like secure multi-party computation allow valuable knowledge to be extracted without exposing the underlying data.
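The preprocessing steps named above (cleaning, normalization, and aggregation) can be sketched as three small functions applied in sequence. The plausible value range, the block size, and the raw readings below are assumptions for illustration; real pipelines are tailored to the application, as noted in the text.

```python
def clean(readings):
    """Drop missing values and readings outside an assumed plausible range."""
    return [r for r in readings if r is not None and -50.0 <= r <= 150.0]

def normalize(readings):
    """Min-max scale readings to the interval [0, 1]."""
    lo, hi = min(readings), max(readings)
    if hi == lo:
        return [0.0 for _ in readings]
    return [(r - lo) / (hi - lo) for r in readings]

def aggregate(readings, size):
    """Average consecutive, non-overlapping blocks of the given size."""
    blocks = [readings[i:i + size] for i in range(0, len(readings), size)]
    return [sum(block) / len(block) for block in blocks]

# Hypothetical raw temperatures with a missing value and a sensor glitch.
raw = [20.0, None, 22.0, 999.0, 24.0, 26.0]
cleaned = clean(raw)
scaled = normalize(cleaned)
averaged = aggregate(cleaned, 2)
```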

Information or data requiring storage at this level comes from both the real world and the virtual realm. Physical environment data typically includes information on manufacturing, apparatus, materials, labor, services, and the workshop setting. Information gleaned from cyberspace primarily consists of data from simulations, data from evaluations and predictions, and data used in making decisions.

Protocols for data storage in the digital twin are essential to ensure the efficient and secure handling of data associated with physical assets or systems. DTs rely on extensive data storage and management to create and maintain a virtual representation of the physical world. As a result of the growing amount and diversity of data from multiple sources in the field of DT technology, there is a growing interest in big data storage technologies such as distributed file storage (DFS), Not Only SQL (NoSQL) databases, NewSQL databases, and cloud storage [115]. DFS enables concurrent access to shared files and directories by several hosts. NoSQL databases are designed to handle large amounts of data by scaling horizontally. NewSQL databases are high-performance databases that support the ACID properties and SQL functionality of traditional databases. They also incorporate replication and failback mechanisms using redundant computers.
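Horizontal scaling is commonly achieved by partitioning records across several nodes. A minimal sketch of hash-based partitioning is shown below: each record key is hashed and mapped to one of a fixed number of shards. The asset identifiers and the shard count are hypothetical, and the example does not reflect how any particular database implements partitioning.

```python
import hashlib

def shard_for(key, num_shards):
    """Map a record key to a shard index using a stable hash."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards

# Distribute hypothetical asset records over three shards.
shards = {i: [] for i in range(3)}
for asset_id in ["pump-01", "pump-02", "crane-07", "robot-12"]:
    shards[shard_for(asset_id, 3)].append(asset_id)
```

With plain modulo hashing, changing the number of shards relocates most keys, which is why consistent hashing is a common alternative in practice.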

In addition, synchronization, correlation, and time-sequence analysis of data constitute the three components of data mapping. Data mapping facilitates the simultaneous connection between the functionality of the real object and that of the digital object by utilizing the information from the storage module and the data processing module. Synchronization protocols, such as Network Time Protocol (NTP) and Precision Time Protocol (PTP), keep clocks aligned so that the physical and digital representations of an object remain consistent in real time. For correlation, structured data formats such as XML or JSON help identify and associate related information from various sources for contextual analysis. Semantic Web technologies like RDF and OWL enable semantic data integration, creating a unified view of data for better decision-making. Time-sequence analysis relies on data stores such as InfluxDB and Apache Cassandra to track historical data, from which future events can be predicted based on observed data points. Security protocols, like TLS and SSL, protect sensitive information during transmission. Access control and authentication protocols like OAuth and OpenID Connect ensure that only authorized users can access and modify data within the Digital Twin. Proper implementation of these protocols is crucial for the successful deployment of Digital Twins across various industries.
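Once clocks are synchronized, correlating data from different sources often comes down to matching records by timestamp. The sketch below finds the sample closest in time to a given event and rejects it if the gap exceeds a tolerance. The function name, the tolerance, and the sample data are assumptions, and the timestamps are assumed to be sorted in ascending order.

```python
import bisect

def nearest_sample(timestamps, values, t, tolerance):
    """Return the value whose timestamp is closest to t, or None if too far away."""
    i = bisect.bisect_left(timestamps, t)
    # Only the neighbours on either side of the insertion point can be closest.
    candidates = [j for j in (i - 1, i) if 0 <= j < len(timestamps)]
    if not candidates:
        return None
    best = min(candidates, key=lambda j: abs(timestamps[j] - t))
    return values[best] if abs(timestamps[best] - t) <= tolerance else None

# Hypothetical sensor stream: timestamps in seconds and measured values.
sensor_t = [0.0, 1.0, 2.0, 3.0]
sensor_v = [10.0, 11.0, 12.0, 13.0]
```

Here an event at t = 1.2 s would be associated with the sample taken at 1.0 s, whereas an event at t = 5.0 s has no sample within a 0.5 s tolerance.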

Digital twin technology security is crucial and involves implementing protocols and measures to protect data, systems, and communication channels. Key protocols include SSL and TLS, which encrypt data during transmission to prevent unauthorized access [116, 117]. Authentication and authorization protocols like OAuth 2.0 and OpenID Connect verify user identity, while role-based access control (RBAC) ensures data access is limited to relevant roles. Strong encryption algorithms like the Advanced Encryption Standard (AES) protect sensitive information [118]. Intrusion Detection and Prevention Systems (IDPS) monitor networks and systems for unusual activities, while Security Information and Event Management (SIEM) systems gather and analyze security data to detect security incidents [119, 120]. Public Key Infrastructure (PKI) protocols manage digital certificates and keys, while secure APIs provide authorization and access control mechanisms [121]. Blockchain technology is used to ensure data integrity and transparency [122,123,124]. Regular security audits and penetration testing identify vulnerabilities, while compliance with data privacy regulations like the General Data Protection Regulation (GDPR) ensures that data are managed appropriately [125, 126]. A secure Software Development Lifecycle (SDLC) is essential for developing and maintaining secure digital twin systems [127, 128]. By incorporating these protocols and security measures, organizations can protect sensitive data, mitigate cyber threats, and ensure the safety and reliability of digital twin systems.
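Role-based access control can be illustrated with a small permission table: each role carries a set of permitted actions, and a request is granted if any of the user's roles includes the requested action. The role names and actions below are hypothetical examples, not a recommended policy.

```python
# Hypothetical mapping from role to the actions it may perform on the twin.
ROLE_PERMISSIONS = {
    "viewer": {"read"},
    "operator": {"read", "command"},
    "admin": {"read", "command", "configure"},
}

def is_allowed(user_roles, action):
    """Grant an action if any of the user's roles carries that permission."""
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in user_roles)
```

Unknown roles map to an empty permission set, so the check denies by default.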

2.5.3 Virtual space

The virtual world framework and the digital twin application subsystem constitute the virtual space. The virtual world framework is completely digital. Three-dimensional representations of applications are established by the virtual world framework, which also offers a working environment for a variety of algorithms. The virtual environment platform offers a variety of virtual models, such as poly-physical models, workflow models, and simulation models. As part of the activity, DT compiles a variety of models, techniques, and historical data into a virtual environment platform. By collecting the features of the virtual model obtained from the computer system's database and utilizing the associated interfaces, it is possible to model actual entities in a virtual space. Feedback from 3D models is then recorded in the database. The DT system uses information from the past and the present from 3D virtual models and actual products to power the synchronized working of the digital twin. Real-world mapping of physical objects enables the simulation of complicated systems in addition to product visualization. Virtual models may be used to test or forecast disputes and disruptions before they happen in the actual world and then feed that knowledge back into the real world.

Depending on the requirements and intended use, the DT application subsystems include capabilities such as data fusion, database administration, algorithmic refinement, state assessment, forecasting, planning, and instruction release. They can support product testing, ergonomics analysis, efficiency forecasting, functionality assessment, production line layout planning, device status forecasting, product failure prediction, the study of plant operations to enhance product quality, and assessment of an execution process.

The digitization of physical objects is crucial in DT as it enables the processing, analysis, and management of data by computers. This allows for the use of information representation techniques in various areas such as quality assessment, analysis, computer numerical control machining, production management, and product design. DT-related modeling technologies encompass behavioral modeling, geometric modeling, rule modeling, and physical modeling.

Physical models necessitate a thorough comprehension of the physical characteristics and their interplay. Hence, the use of multi-physics modeling is crucial for achieving an accurate and detailed representation of a digital twin. Modelica is a widely used multi-physics modeling language. Sun et al. [129] combined a theoretical model with a physical model to validate the assembly of high-precision products. The theoretical model was derived using Model-Based Definition (MBD) approaches, while the physical model was constructed through point cloud scanning.

Geometric models represent a physical object by its geometric shape, structure, and visual characteristics, without including specific details or limitations of the object. These models are created using data structures including surface, wireframe, and solid modeling. Wireframe modeling uses simple lines to delineate the prominent edges of the object, creating a three-dimensional framework. On the other hand, surface modeling involves defining each surface of the object and then combining them to create a complete and unified model. Solid modeling refers to the representation of the internal composition of a three-dimensional object, encompassing details such as vertices, edges, surfaces, and bodies.
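The three representations can be illustrated on a unit cube. The sketch below is only an illustration of the data structures involved, not a CAD kernel; the variable names are chosen for this example. It derives the wireframe edges and the surface faces from the vertex list, and uses Euler's polyhedron formula V - E + F = 2 as a simple consistency check.

```python
from itertools import combinations

# Unit cube: 8 vertices as (x, y, z) tuples.
vertices = [(x, y, z) for x in (0, 1) for y in (0, 1) for z in (0, 1)]

# Wireframe model: edges join vertices that differ in exactly one coordinate.
edges = [
    (a, b)
    for a, b in combinations(vertices, 2)
    if sum(p != q for p, q in zip(a, b)) == 1
]

# Surface model: each face is the set of vertices sharing one fixed coordinate.
faces = [
    [v for v in vertices if v[axis] == value]
    for axis in range(3)
    for value in (0, 1)
]

# Solid model: the body bounded by the faces; here it only records its volume.
solid = {"vertices": vertices, "edges": edges, "faces": faces, "volume": 1.0}

# Euler's formula for a closed convex polyhedron: V - E + F = 2.
euler_characteristic = len(vertices) - len(edges) + len(faces)
```

The wireframe alone (vertices and edges) cannot say which side of a face is inside the object; the solid record adds that information, which is why solid modeling is the richest of the three.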

Behavioral models describe the diverse behaviors exhibited by a physical entity to accomplish tasks, adapt to changes, interact with other entities, regulate internal processes, and maintain its health. The simulation of physical behaviors is an intricate procedure that encompasses various models, including problem models, state models, dynamics models, assessment models, and so forth. These models can be constructed using finite-state machines, Markov chains, and ontology-based modeling techniques. Negri et al. [43] proposed incorporating black-box modules into the main simulation model. In their study, behavior models of the digital twin were selectively engaged as required, and the modules communicated with the main simulation model via a standardized interface. Ghosh et al. [130] explored the development of DTs using hidden Markov models. The twins comprised a model component and a simulation component, which together created a Markov chain to capture the dynamics of manufacturing phenomena. The models represented the dynamics of the phenomena by employing discrete states and their corresponding transition probabilities.
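The idea of discrete states with transition probabilities can be sketched as follows. This is a plain (fully observed) Markov chain, not the hidden Markov model of Ghosh et al. [130], and the machine states and probabilities are invented for illustration.

```python
import random

# Hypothetical machine states and transition probabilities (each row sums to 1).
STATES = ["idle", "running", "fault"]
P = {
    "idle":    {"idle": 0.6, "running": 0.4, "fault": 0.0},
    "running": {"idle": 0.1, "running": 0.8, "fault": 0.1},
    "fault":   {"idle": 0.5, "running": 0.0, "fault": 0.5},
}


def simulate(start, steps, seed=0):
    """Sample a state trajectory of the given length from the chain."""
    rng = random.Random(seed)
    path = [start]
    for _ in range(steps):
        row = P[path[-1]]
        weights = [row[s] for s in STATES]
        path.append(rng.choices(STATES, weights=weights)[0])
    return path


def stationary(iterations=200):
    """Approximate the long-run state distribution by repeated propagation."""
    dist = {s: 1.0 / len(STATES) for s in STATES}
    for _ in range(iterations):
        dist = {t: sum(dist[s] * P[s][t] for s in STATES) for t in STATES}
    return dist
```

Calling `simulate("idle", 50)` yields one possible behavior trace of the virtual machine, while `stationary()` estimates the fraction of time spent in each state over the long run, for example the expected share of time in the fault state.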

Rule models encompass the rules that are derived from past data, expert knowledge, and predetermined logic. They endow the virtual model with the capacity to engage in reasoning, judgment, evaluation, optimization, and prediction. Rule modeling encompasses the processes of extracting, describing, associating, and evolving rules. Important modeling technologies encompass solid modeling for the geometric representation, texture technologies for enhancing realism, finite element analysis for the physical model, finite-state machines for the behavioral model, and XML-based and ontology representations for the rule model. In the World Avatar project, Akroyd et al. [131] showcased a digital twin that utilized a versatile dynamic knowledge network. They made use of ontology-based information representation to create a unified interface for querying data. Banerjee et al. [132] exploited the reasoning power of a knowledge graph and developed a query language to extract and infer knowledge from large-scale production line data to support manufacturing process management in digital twins.
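A very small rule model can be sketched as a list of named conditions evaluated against the current state of the twin. The field names and thresholds below are hypothetical placeholders for rules that would, in practice, be extracted from historical data or expert knowledge; ontology-based and knowledge-graph approaches such as those in [131, 132] are considerably richer than this.

```python
# Each rule pairs a conclusion with a condition on the twin's state.
# Field names and thresholds are hypothetical.
RULES = [
    ("overheating", lambda s: s["temperature_c"] > 80.0),
    ("excess_vibration", lambda s: s["vibration_mm_s"] > 7.1),
    ("maintenance_due", lambda s: s["hours_since_service"] >= 500),
]


def evaluate(state):
    """Return the conclusions of every rule whose condition holds."""
    return [name for name, condition in RULES if condition(state)]
```

For a state with a temperature of 85 °C, low vibration, and 500 hours since service, `evaluate` would report `overheating` and `maintenance_due`. Keeping the rules as data rather than hard-coded branches makes it easier to add or evolve rules over time.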

The Model Verification, Validation, and Accreditation (VV&A) process is incorporated to enhance model accuracy and simulation confidence by assessing the extent to which the correctness, tolerance, availability, and running results align with the specified requirements. Static methods assess the static elements of modeling and simulation, while dynamic methods verify the dynamic elements of modeling and simulation.
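One simple form of dynamic validation is to compare the simulated output of the virtual model with measurements from the physical entity and to check the error against a specified tolerance. The sketch below uses the root-mean-square error as the metric; both the choice of metric and the function names are assumptions made for illustration, not a procedure prescribed by the VV&A literature.

```python
import math


def rmse(simulated, measured):
    """Root-mean-square error between two equally long series."""
    if len(simulated) != len(measured) or not simulated:
        raise ValueError("series must be non-empty and of equal length")
    squared = sum((s - m) ** 2 for s, m in zip(simulated, measured))
    return math.sqrt(squared / len(simulated))


def within_tolerance(simulated, measured, tolerance):
    """Dynamic check: accept the model if its error stays within tolerance."""
    return rmse(simulated, measured) <= tolerance
```

A static check, by contrast, would inspect the model itself (units, parameter ranges, interface definitions) without running it.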

Existing modeling methods predominantly prioritize geometric and physical models, neglecting the incorporation of multi-spatial scale and multi-time scale models that can accurately depict behaviors and characteristics across various spatial and temporal dimensions. The integration of systems is hindered by the inability of current virtual models to adequately represent physical phenomena. Future modeling technologies should prioritize the development of synthesis methods that integrate several disciplines and functions. The process of DT modeling should be approached as an interdisciplinary synthesis process. Optimizing multi-objective and full-performance modeling is essential for accurately replicating both static and dynamic characteristics with high precision and dependability.

The comprehensive framework of DTs offers a structured and multifaceted approach to the utilization of DT technology. Its three key components, namely the physical space, the information processing layer, and the virtual space, form a holistic framework in which the physical and digital realms interact. The physical space involves the integration of IoT technologies, such as OPC, MQTT, and CoAP, to link scattered elements encompassing people, equipment, materials, laws, and the environment. The information processing layer focuses on data processing, storage, and mapping, with protocols like MQTT, HTTP/HTTPS, SNMP, OPC UA, and CoAP. Security protocols like TLS/SSL and OAuth protect data in transit and control access. Data preprocessing relies on interfaces and formats like ODBC, XML, and JSON together with custom cleaning, while machine learning techniques like regression, clustering, and deep learning provide insights. The virtual space focuses on the digital representation of physical objects, with DT applications synchronizing with them to create a digital environment. Future modeling technologies should prioritize synthesis methods that integrate various disciplines and functions.

3 Applications of digital twin

After evaluating and filtering the papers from Sect. 2 that concentrate on DT application, 81 studies from the past 5 years with a developed DT application were identified, relating to aerospace, building and construction, energy, education, information technology (IT), medical and healthcare, and manufacturing. As shown in Fig. 7, manufacturing is by far the most prominent DT research field, accounting for more than 52% of all applications. Most of these studies focus on improving manufacturing planning, modeling, monitoring, and prediction of tools and machines with regard to sustainable production. DT was initially launched as a tool for production and product lifecycle management, which explains why manufacturing accounts for more than half of all DT application research. It is, nevertheless, expanding to other sectors. In energy, prominent topics are performance monitoring and fault detection, power plant efficiency improvement, and energy grid growth and development. DT application research on building and smart cities includes monitoring the health of structures, building control and administration, project planning optimization, and maintenance prediction. Next, DT research in IT, covering computer systems, communication security, and cloud services, accounts for 5%. Automotive, aerospace, and education DT application research mostly addresses product state monitoring and prediction, testing and simulation, and online teaching optimization. Finally, medical-surgical simulation has increased DT applications in healthcare. Based on the ideas, reviews, and case studies offered in the various publications, the different applications of DT may be categorized as manufacturing, design, and services, as summarized in Table 4. Figure 8 presents some industrial applications of DT.

3.1 Application in design

The goal of the DT is to perceive, diagnose, and predict the status of a physical object in real time using testing, simulation, and data analysis based on the physical and digital models, and to correct that status where needed. Digital models are also capable of completing their own iterative optimization via reciprocal learning between them, thereby expediting the design process and cutting down on redesign expenses [178]. DT’s abilities have been demonstrated in task description, design principles, and virtual verification [179].

Tao et al. [179] stated that using a DT provided designers with a comprehensive grasp of a product’s digital footprint throughout its construction. It served as an “engine,” transforming vast amounts of data into useful information. Designers may utilize the knowledge right away to assist their decisions during multiple design phases. In their work, a detailed digital twin framework for product design, focused on linking the physical and virtual products, was explained and analyzed. They discussed Pahl and Beitz's structural design approach, which partitions the design process into four phases, namely task clarification, conceptual design, embodiment design, and detail design. They affirmed that a commonly used combination-based design theory and methodology allows designers to accomplish various design tasks. In addition, the study presented a DT-based bicycle design, which showed that DT-based product design is particularly beneficial for the iterative redesign of a preexisting product as opposed to the design of an entirely new one.

3.1.1 Iterative optimization

The ultimate objective of excellent design is to constantly enhance the product requirements from an idea to a detailed design. According to Liu et al. [178], the usefulness of DT can be extended to predict how products or systems will function and to identify potential faults that may need fixing. Also, iterative optimization is possible by monitoring design improvements and historical tendencies via a digital twin for the product in question.

Furthermore, using the DT technique, it is feasible to achieve static configuration and dynamic execution as well as iterative design optimization [180]. The authors of [180] demonstrated this by developing a bi-level iterative coordination mechanism that achieved optimal design performance for the required functionalities of an automated flow-shop manufacturing system (AFMS), and they proposed a DT-driven technique for rapid personalized design of the AFMS. The DT integrated physics-based system modeling with semi-physical simulation, providing analytical capabilities for engineering solutions and a reliable digital design. Their work also fed back decision-support information acquired from the intelligent multi-objective optimization of the dynamic execution, which evidenced the usefulness of the DT vision in AFMS design. Their digital twin prototype was successfully applied to a sheet-material automated flow-shop production system, showing that it provides a design with an intelligent simulation and optimization engine.

The application of DT was extended to enhance the precision and effectiveness of green material optimal selection (GMOS) in product development [181]. Xiang and colleagues suggested a new technique, the 5D Evolvement Digital Twin Model (5D-EDTM), powered by a digital twin, in which all of the data were combined into a complete evolution model. The technique enabled material selection through simulation and assessment based on the model and fused data. As shown in Fig. 9a, a laptop case was used to demonstrate the effectiveness of the digital twin-driven 5D-EDTM as well as how the technique has evolved.

Fig. 9 Some proposed DT applications in design. a Selecting the most eco-friendly material for a laptop case using 5D-EDTM: the technique improved the precision and effectiveness of green material optimal selection (GMOS) in product development [181]. (Reproduced with permission, license number: 5615211433981). b Product development processes based on DT: the ultrahigh-fidelity virtual manufacturing system facilitated the virtual production of trial versions of products, product verification, and design improvement, and accelerated the construction of the new product's digital twin. Adapted from [184]. c DT-based virtual factory, a model combining (1) virtual reality training simulations and (2) linear simulations: multi-user virtual reality learning or training scenarios aided industrial system modeling, simulation, and assessment [185]. (Reproduced with permission, license number: 5615231256666). d Traditional production process development compared with the DT-based product development process [182]. (Reproduced with permission, license number: 5615350034527). e Concurrent engineering approach in digital production process development: it prevented production line errors by addressing design faults, saved money on fixing mistakes, and expedited product market entry through virtual cost-free design modifications [182]. (Reproduced with permission, license number: 5615350034527)

3.1.2 Provide data integrity