Abstract

A robust Kalman filter based on Chi square test with sequential measurement update is proposed. This approach can not only handle outliers in part or even individual measurement channel, but can also further improve the accuracy especially when a novel ordering strategy in processing the measurement elements is adopted. The accuracy improvement can be attributed to the higher statistical efficiency, i.e., an increased probability of correctly resisting the outlying measurement elements and retaining the good ones. The accuracy improvement of the proposed method is illustrated by a simulating example.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Kalman filter (KF) is widely used in processing kinematic geodetic measurements (Bogatin and Kogoj 2008). For linear systems with Gaussian noises, KF is optimal in almost every conceivable sense (Simon 2006). Gaussianity is often adopted due to its tractability and its good asymptotic performances, but exact Gaussianity seems to be an idealistic assumption in many practical cases. In fact Gaussianity is only approximate for cases with small sample data, especially when outliers/biases are not negligible. As a 2-norm-of-error minimizer, KF is rather sensible to deviations from the assumed Gaussianity which is well known as the lack-of-robustness or lack-of-reliability. While robustness/reliability can be rather general concepts, only the robustness against uncertainties in noise probability distribution is considered, i.e., the distributional robustness to be more specific (Huber and Ronchetti 2009).

In the geodetic literature, there are two kinds of approaches to address the non-Gaussianity and/or outliers/biases (Lehmann 2013a), one is the test-based outlier detection methods and the other is robust statistics based method.

For the test-based outlier detection method, in his pioneer work, (Baarda 1968) proposed to use hypothesis test to detect outliers in geodetic measurement. While the a priori variance is used in (Baarda 1968), the test using estimated variance is introduced in (Pope 1976). Trying to extend the the theory of Baarda (1968) and Pope (1976), Teunissen developed the recursive detection, identification, and adaptation (DIA) theory (Teunissen 1990a, b; Teunissen and Salzmann 1989). In DIA, the detection process aims to decide by using a overall test whether some kind of bias or outlier is present; the identification process serves to decide in which channel of the measurement and/or process vector and at which epoch the bias/outlier occurred; in the adaptation process, the detected bias/outlier are corrected or discarded. The DIA methods have found widely applications in the geodetic community, e.g., to detect GNSS pseudorange outliers, phase cycle slips or ionospheric disturbances (Teunissen 1998; Teunissen and De Bakker 2013), station coordinate discontinuities (Perfetti 2006), etc. Also, by inversing the power function of the test, a concept called minimal detectable bias/outlier, some kind of reliability measure, can be derived which can be used to evaluate the strength of the measurement model (De Jong 2000; Koch 2015; Teunissen 1998), and hence further to conduct the design of the measurement model (Salzmann 1991). Some possible difficulties in using DIA include the following. First, appropriate alternative hypotheses should be carefully chosen, this may be the most non-trivial task in DIA (Teunissen 1990a). The alternative hypotheses construction in the identification process depends heavily on the specific problem to be solved and determines directly how to adapt the detected unusual measurement in the adaptation process (Lehmann 2013b). Second, the critical values of the test statistics in the detection and/or identification process are often hard to determine because the complex distribution pattern of the test statistics, sometimes, some kind of numerical method, e.g., the Monte Carlo method, can be used instead of the analytical methods (Lehmann 2012). Third, the application of DIA in the multiple-outlier case needs further investigation.

For the robust statistics based method, the well-developed discipline called robust statistics, aiming to robustify the conventional statistical inference methods such as estimation (Huber and Ronchetti 2009), has been successfully used in geodesy (Guo 2013; Hekimoğlu et al. 2011; Třasák and Štroner 2014). The starting point, which make it different from the test-based method, is to only make the method insensitive to outliers or other statistical uncertainties. In other words, it does not try to detect/identify/correct the uncertainties but only to resist them. It seems also natural to borrow concepts and methods from robust statistics to analyze and improve the robustness of KF. It is indeed so, e.g., the Bayesian estimator, of which KF can be seen a special case, was robustified using the celebrated M-estimator in (Yang 1991). Robust KF for rank-deficient measurement models was studied in (Koch and Yang 1998) focusing on getting the initial estimates at the start of the filtering. Rank-deficient model together with process model uncertainties was addressed recently in (Chang and Liu 2015) focusing on getting more suitable initial estimate in iteratively solving the M-estimation problem, also in (Chang and Liu 2015), the influence function of KF is introduced and derived to evaluate the robustness of a KF-based approach. An adaptively robust KF is proposed in (Yang et al. 2001) to address uncertainties in both process and measurement models. M-estimator based robust KF is also developed in the framework of nonlinear KF, e.g., the unscented KF (Karlgaard 2015).

Note that there are some kind of overlapping of these two categories. First of all, both aim to robustify the methods, though through different approaches. In the robust statistics method, e.g., the M-estimator, some kind of detection and adaptation (down weighting) can be safely considered existing (Lehmann 2013a). Some kinds of combinations of the two are also possible, e.g., in (Lehmann 2013a), it is stated that “Robust estimation procedures can also be considered as preparatory tools for improved outlier testing”.

A robust KF using Chi square test to detect outliers in the measurement is studied in (Chang 2014), in this approach, the Mahalanobis distance of the measurement under assumed Gaussian distribution is constructed as the test statistic. This approach bears some features of both the above two methods. Outliers, raised due to statistical uncertainties, is detected using a Chi square test, this is the same to the detection process of the DIA in its local test form. However, for the detected outlier, the corresponding measurement is down weighted to make the estimate insensitive to it, this follows the lines of robust statistics, of course one can also consider it is following one kind of the adaptation of DIA. In (Chang 2014) Only one total Mahalanobis distance of all measurement elements is calculated and only one scaling factor is introduced to inflate the overall covariance matrix of the innovation vector, so in its original form, the approach cannot efficiently address uncertainties in only part of the measurement channels (Chang 2014). It was mentioned in (Chang 2014) that this problem can be fixed through implementing sequential measurement update, i.e., processing the vectorial measurement element by element. This idea is detailed and further explored in the current work. In addition to addressing uncertainties in part or even individual measurement channel, there are some by-products of this idea, e.g., superior numerical stability can be expected because no matrix inversion is involved. More importantly, accuracy can be further improved especially through elaborately choosing the order of processing the elements of the measurement vector. We attribute this improvement to the higher statistical efficiency gained. More specifically, after part of the elements are processed, better estimation (better than the prediction) can be obtained which serves as better reference to detect outliers in processing the remaining part of the elements. Better reference will increase the probability of correctly resisting the outlying ones and retaining the good ones in the measurement elements, which means a higher statistical efficiency.

The remaining part of the paper is organized as follows. The method is presented in Sect. 2. Accuracy improvement is illustrated with a simulating example in Sect. 3. Some concluding remarks is given in Sect. 4.

2 Method

In the first subsection, after presenting the basic formulae of the KF, the approach of detecting and resisting outliers in the measurement is introduced. In the second subsection, sequentially implementing the measurement update with the previously introduced outlier handling method is derived emphasizing a novel ordering strategy in processing the elements of the measurement vector.

2.1 Kalman filter and the resistance of outliers

The problem studied is represented as the following discrete-time state space model,

where \( \varvec{x}_{k} \) and \( \varvec{y}_{k} \) are n- and m-dimensional state and measurement vectors at the kth epoch, F and H are transition and design matrices with appropriate dimensions, \( \varvec{w}_{k} \) and \( \varvec{v}_{k} \) are process and measurement noises which are assumed zero-mean Gaussianly distributed with nominal covariance matrix Q and R respectively. Note that in this study the real distribution of \( \varvec{v}_{k} \) can deviate from this assumption. Assume the initial estimate at 0 epoch is \( \widehat{\varvec{x}}_{0\left| 0 \right.} \) with associate covariance estimate being \( \varvec{P}_{{\widehat{\varvec{x}}_{0\left| 0 \right.} ,\widehat{\varvec{x}}_{0\left| 0 \right.} }} \). At any epoch, say k, we have the following KF formulae.

where \( \widehat{\varvec{x}}_{i\left| j \right.} \) represents an estimate of the state vector at the ith epoch using measurements up to the jth epoch, specifically, \( \widehat{\varvec{x}}_{{k\left| {k - 1} \right.}} \) and \( \widehat{\varvec{x}}_{k\left| k \right.} \) are also called the a priori and the a posteriori estimates; \( \varvec{P}_{{\varvec{a},\varvec{b}}} \) represents the (cross) covariance matrix between a and b; \( \tilde{\varvec{y}}_{k} \), a non-random constant, is the actual measurement, or a realization of \( \varvec{y}_{k} \); \( \varvec{K}_{k} \) is gain matrix which combines \( \widehat{\varvec{x}}_{{k\left| {k - 1} \right.}} \) and \( \tilde{\varvec{y}}_{k} - \widehat{\varvec{y}}_{{k\left| {k - 1} \right.}} \) linearly to get \( \widehat{\varvec{x}}_{k\left| k \right.} \). Note that \( \varvec{e}_{k} \text{ = }\tilde{\varvec{y}}_{k} - \widehat{\varvec{y}}_{{k\left| {k - 1} \right.}} \) is often called the innovation vector (Kailath et al. 2000) whose covariance is equal to \( \varvec{P}_{{\widehat{\varvec{y}}_{{k\left| {k - 1} \right.}} ,\widehat{\varvec{y}}_{{k\left| {k - 1} \right.}} }} \) because \( \tilde{\varvec{y}}_{k} \) is non-random.

Under the Gaussian assumption, \( \varvec{y}_{k} \) should be Gaussian with mean \( \widehat{\varvec{y}}_{{k\left| {k - 1} \right.}} \) and covariance \( \varvec{P}_{{\widehat{\varvec{y}}_{{k\left| {k - 1} \right.}} ,\widehat{\varvec{y}}_{{k\left| {k - 1} \right.}} }} \), and the squared Mahalanobis distance of \( \varvec{y}_{k} \) should be Chi square distributed with m freedoms, i.e.,

So we can do a Chi square test to judge whether an actual measurement is a realization of \( \varvec{y}_{k} \) under the Gaussian assumption. Let the null hypothesis be that \( \varvec{v}_{k} \) is Gaussianly distributed. For a given significance level, say 1 − α, and the corresponding upper α-quantile \( \chi_{m,\alpha }^{2} \), we have

if the null hypothesis holds, where Pr[·] denotes the probability of an event. Given α a rather small value, if \( \gamma \left( {\tilde{\varvec{y}}_{k} } \right) > \chi_{m,\alpha }^{2} \), we can say with a rather high probability, i.e., 1 − α, that the null hypothesis should be rejected, and in this case, \( \tilde{\varvec{y}}_{k} \) is deemed to be outlier. This shows how the outliers are detected. For a detected outlier, its contribution in the measurement update should be decreased, and this is achieved by inflating the covariance of the innovation vector. The inflating factor can be calculated as

This shows how the detected outlier is resisted.

As in Eq. (10), only one statistic is calculated to judge whether the overall measurement vector is an outlier, and as in Eq. (12), only one scaling factor is introduced to inflate the whole covariance matrix. If only part or even a single element of the measurement is outlying, the overall measurement vector may be deemed as being outlying. This means that some good measurement elements may be mistaken as outliers. Also an outlying element may fail to be detected because it may be masked by other good elements. This means that some outlying measurement elements may be mistaken as good ones. To summarize, either mistaking good measurement elements as outlying ones or mistaking outlying ones as good ones will result in loss of statistical efficiency and hence in lower accuracy of the estimates.

2.2 Implementing sequential measurement update in robust Kalman filter

As mentioned in (Chang 2014), outliers in individual measurement channel can be efficiently addressed by implementing sequential measurement update of the KF, i.e., only a single measurement element is processed once in a measurement update and hence there will be m measurement updates in one recursion at any epoch. In every measurement update, the previous outlier detection and resistance will be performed.

If the measurement elements are cross correlated, we first de-correlate them through the Cholesky decomposition. One reviewer insisted on putting douts on this decorrelation approach, as it would spread outliers over different observations. Unfortunately, the authors have no sounder solutions for now and will work in depth on one this issue in the future. Let

From Eq. (2), we have

Let \( \bar{\varvec{y}}_{k} = \varvec{L}^{ - 1} \varvec{y}_{k} \), \( \bar{\varvec{H}} = \varvec{L}^{ - 1} \varvec{H} \), and \( \bar{\varvec{v}}_{k} = \varvec{L}^{ - 1} \varvec{v}_{k} \), we have de-correlated measurement equation

Note that for this measurement equation, the actual measurement should be \( \tilde{\bar{\varvec{y}}}_{k} = \varvec{L}^{ - 1} \tilde{\varvec{y}}_{k} \) accordingly.

Let

In the jth measurement update at the kth epoch, we have

where \( \hat{\varvec{x}}_{k,j - 1} \) is the estimate after processing the (j − 1)th measurement element and \( \hat{\varvec{x}}_{k,0} = \hat{\varvec{x}}_{{k\left| {k - 1} \right.}} \), \( \hat{\bar{y}}_{k,j} \) is the prediction of the jth element of \( \bar{\varvec{y}}_{k} \). One-freedom Chi square test, is carried out to judge whether \( \tilde{{\overline{y} }}_{{k,j}} \) is an outlier. The statistic is calculated as

For a given significance level, say also 1 − α, and the corresponding upper α-quantile \( \chi_{1,\alpha }^{2} \), we check if

If Eq. (21) holds, \( \tilde{\bar{y}}_{k,j} \) is deemed as an outlier, then the following scaling factor is calculated to inflate the variance in Eq. (19), i.e.,

And then update the estimate as

It is well known that \( \hat{\varvec{x}}_{k,j} \) is a more accurate estimate than \( \hat{\varvec{x}}_{k,j - 1} \), because \( \varvec{P}_{{\hat{\varvec{x}}_{k,j} ,\hat{\varvec{x}}_{k,j} }} \le \varvec{P}_{{\hat{\varvec{x}}_{k,j - 1} ,\hat{\varvec{x}}_{k,j - 1} }} \), i.e., \( \varvec{P}_{{\hat{\varvec{x}}_{k,j - 1} ,\hat{\varvec{x}}_{k,j - 1} }} - \varvec{P}_{{\hat{\varvec{x}}_{k,j} ,\hat{\varvec{x}}_{k,j} }} \) is positive semi-definite, which is explicitly shown in Eq. (26). So in checking the (j + 1)th measurement elements, the reference information used, i.e., \( \hat{\varvec{x}}_{k,j} \), is better than \( \hat{\varvec{x}}_{k,j - 1} \), of course even better than \( \hat{\varvec{x}}_{{k\left| {k - 1} \right.}} \). Better reference information implies an increased probability of detecting outliers, so it is more probable to correctly resist the outlying elements and to retain the good ones.

In implementing the above robust sequential measurement update, it should be apparent that the more reliable a measurement element is, the more accurate the estimate will become. So the more reliable elements should be firstly processed in order to get a even better reference information to check the remaining dubious elements. The Mahalanobis distances or their squares of individual elements can represent to some extent the relative qualities of these elements, i.e., the smaller one’s Mahalanobis distance is, the more reliable the elements should be. So for given reference information, Mahalanobis distances of all the remaining elements are calculated, and the element with the smallest Mahalanobis distance is processed in the next measurement update. Of course this sorting process will increase the computation, but the accuracy improvement may reward in some cases.

The algorithm is depicted in Table 1 in the form of pseudo code.

3 An illustrating example

Assume an object is moving forward without side slip in the horizon, the forward distance and velocity are of interest and the north and east position is measured.

A constant velocity model is assumed, so we have

where, p and v are the forward position and velocity which should be estimated, a is the forward acceleration which is assumed white Gaussian noise with variance \( \sigma_{a}^{2} \). Given the integration interval τ, Eq. (27) is discretized to get the process equation

with

The measurement equation is

where θ is the heading angle. The measurement noises \( \varepsilon_{k} \) and \( \xi_{k} \) are assumed to be distributed as gross error model, which is proposed in (Huber 1964) to formulate the full kind of the “neighborhood” of an assumed parametric model, the probability density function is

where f, f 0, and f c represent the real, the nominal, and the contaminating distribution respectively, μ is called the contaminating ratio. When μ = 0, the nominal distribution represent the real distribution exactly. In this study both f 0 and f c are assumed to be zero-mean Gaussian but with different variance, in this case, the gross error model in Eq. (31) is also called a Gauss mix model.

The following three cases are studied.

Case 1

NoUn: uncertainty exists in neither of the two channels, i.e., the nominal distributions of both \( \varepsilon_{k} \) and \( \xi_{k} \) represent their real distributions;

Case 2

UnOn: uncertainty exists in only one channel, say the north channel;

Case 3

UnBo: uncertainties exist in both channels.

Let f 0 ~ N(0,1) and f c ~ N(0,10), if uncertainty exists, let μ = 0.1, otherwise μ = 0.

Three approaches are checked in all three cases,

-

Approach 1 KF, the standard KF;

-

Approach 2 RKF, the robust KF proposed in (Chang 2014), also introduced in Sect. 2.1. In this approach let α = 0.05, so \( \chi_{{2,{\kern 1pt} {\kern 1pt} {\kern 1pt} 0.05}}^{2} = 5.99 \).

-

Approach 3 RKFs, the robust KF with sequential measurement update proposed in this work, illustrated in Table 1. In this approach let α = 0.05, so \( \chi_{{1,{\kern 1pt} {\kern 1pt} {\kern 1pt} 0.05}}^{2} = 3.84 \).

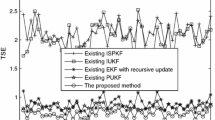

Monte Carlo experiments with 10,000 independent runs are conducted. The mean squared (over different Monte Carlo runs) errors of the estimates at any epoch by all three approaches in all three cases are calculated and depicted in the figures. The overall mean squared errors (over both different Monte Carlo runs and different epochs) of the estimates by three approaches in three cases are also calculated and summarized in Table 2.

In Figs. 1 and 2, it is found that all three approaches perform well in the first case. As the KF is optimal in this case, the comparative performance of the two robust approaches validates their statistical efficiency in this case. More specifically, there exists inevitably probability that the good measurement may be mistaken as outliers, but this probability is rather low. The high efficiency is achieved by deliberately selecting a rather high significance level, i.e., 1 − α.

From Figs. 3 and 4, we see that the performance of the KF degrades significantly which clearly shows its lack of robustness. However, two robust approaches can still provide relatively good estimates in this case. The higher accuracy of the two robust approaches compared to that of the KF is due to the robustness of two. Note that in spite of the robustness, the root mean squared errors of the two robust approaches still increase compared to the first case, this has nothing to do with robustness, rather this is because the equivalent accuracy of the measurement decrease compared to the first case. In the first case, the standard deviation of the measurement noise (north channel) is 1 m, while the equivalent standard deviation (north channel) in the second case is

From Figs. 3 and 4, we can also find that the RKFs approach performs slightly better than the RKF approach. The superiority of RKFs can be clearly seen in Table 2. As explained previously, this is due to the higher statistical efficiency of RKFs than RKF.

From Figs. 5 and 6, we observe that again in this case, the two robust approaches outperform the standard KF which is due to the robustness. Again in this case, the performances of the two robust approaches decrease compared to the second case which is caused by the increased standard deviation of measurement noise in east channel. The RKFs performs slightly better than RKF as is clearly demonstrated in Table 2, again, this is due to the relatively higher statistical efficiency of the former compared to the latter.

4 Concluding remarks

The distributional uncertainty in the measurement noise, or more specifically the non-Gaussianity of the measurement noise’s distribution, is addressed through employing a robust KF based on Chi square test to detect outliers and innovation vector covariance inflation to resist the detected outliers. Through implementing the so called sequential measurement update of the robust KF, we achieved manifold merits: the ability to address outliers in part of or even individual measurement channel; higher numerical stability because matrix inverse is no longer needed; and most importantly higher accuracy because of a higher statistical efficiency in detecting outliers. The higher statistical efficiency is brought about by a higher probability of correctly detecting the outlying measurement elements and retaining the good ones.

It must be admitted that there is inevitably computation increase in the proposed method, mainly because hypothesis test should be done for every measurement element and a sorting process should be carried out to select the measurement element for the next processing, but we believe that in some case, the higher accuracy gained may reward the increased computation paid.

References

Baarda W (1968) A testing procedure for use in geodetic networks. Publ Geod New Ser 2(5):1–97

Bogatin S, Kogoj D (2008) Processing kinematic geodetic measurements using Kalman filtering. Acta Geodaetica et Geophysica Hungarica 43(1):53–74

Chang G (2014) Robust Kalman filtering based on Mahalanobis distance as outlier judging criterion. J Geod 88(4):391–401

Chang G, Liu M (2015) M-estimator based robust Kalman filter for systems with process modeling errors and rank deficient measurement models. Nonlin Dyn 80(3):1431–1449

De Jong K (2000) Minimal detectable biases of cross-correlated GPS observations. GPS Solut 3(3):12–18

Guo J (2013) The case-deletion and mean-shift outlier models: equivalence and beyond. Acta Geodaetica et Geophysica 48(2):191–197

Hekimoğlu S, Erdogan B, Erenoglu R, Hosbas R (2011) Increasing the efficacy of the tests for outliers for geodetic networks. Acta Geodaetica et Geophysica Hungarica 46(3):291–308

Huber PJ (1964) Robust estimation of a location parameter. Ann Math Stat 35(1):73–101

Huber PJ, Ronchetti EM (2009) Robust statistics. Wiley, New Jersey

Kailath T, Sayed AH, Hassibi B (2000) Linear estimation. Prentice Hall, New Jersey

Karlgaard CD (2015) Nonlinear regression huber-kalman filtering and fixed-interval smoothing. J Guid Control Dyn 38(2):322–330

Koch KR (2015) Minimal detectable outliers as measures of reliability. J Geod 89(5):483–490

Koch KR, Yang Y (1998) Robust Kalman filter for rank deficient observation models. J Geod 72(7):436–441

Lehmann R (2012) Improved critical values for extreme normalized and studentized residuals in Gauss–Markov models. J Geod 86:1137–1146

Lehmann R (2013a) 3σ-Rule for outlier detection from the viewpoint of geodetic adjustment. J Surv Eng 139(4):157–165

Lehmann R (2013b) On the formulation of the alternative hypothesis for geodetic outlier detection. J Geod 87:373–386

Perfetti N (2006) Detection of station coordinate discontinuities within the Italian GPS fiducial network. J Geod 80(7):381–396

Pope A. (1976). The statistics of residuals and the detection of outliers. NOAA Technical Report, NOS 65(NGS 1): 1-133

Salzmann M (1991) MDB: a design tool for integrated navigation systems. Bull Géod 65(2):109–115

Simon D (2006) Optimal state estimation: Kalman, H∞, and nonlinear approaches. Wiley, New Jersey

Teunissen PJG. (1990a). An integrity and quality control procedure for use in multi sensor integration. In: Proceedings ION GPS, pp 19–21

Teunissen PJG (1990b) Quality control in integrated navigation systems. IEEE Aerosp Electron Syst Mag 5(7):35–41

Teunissen PJG (1998) Minimal detectable biases of GPS data. J Geod 72(4):236–244

Teunissen PJG, De Bakker PF (2013) Single-receiver single-channel multi-frequency GNSS integrity: outliers, slips, and ionospheric disturbances. J Geod 87(2):161–177

Teunissen PJG, Salzmann MA (1989) A recursive slippage test for use in state-space filtering. Manuscripta geodaetica 14(6):383–390

Třasák P, Štroner M (2014) Outlier detection efficiency in the high precision geodetic network adjustment. Acta Geodaetica et Geophysica 49(2):161–175

Yang Y (1991) Robust bayesian estimation. Bull Géod 65(3):145–150

Yang Y, He H, Xu G (2001) Adaptively robust filtering for kinematic geodetic positioning. J Geod 75(2):109–116

Acknowledgments

The authors are grateful to two anonymous reviewers whose valuable comments improved the paper significantly. This work was supported by the National Natural Science Foundation of China (No. 41404001).

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Chang, G., Wang, Y. & Wang, Q. Accuracy improvement by implementing sequential measurement update in robust Kalman filter. Acta Geod Geophys 51, 421–433 (2016). https://doi.org/10.1007/s40328-015-0134-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40328-015-0134-4