Abstract

The article deals with the possibilities of the automatic detection of outliers during the processing of accurate surveying measurements which are assessed by the least squares adjustment. These are classical terrestrial measurements obtained during the survey of high precision spatial geodetic networks. The authors use robust M-estimators for the automatic detection of outlying measurements. Robust adjustment is combined with the method of the assessment adjusted measurements’ residuals. In the article, the authors focus on the description of the detection method and its testing. The testing concept consists in the determination of the efficiency of the detection of outliers of experimental measurements in a model spatial geodetic network. By means of a computer pseudorandom number generator, a model of ideally created measurements is generated in the first phase of experimental testing. This model is repeatedly contaminated with varying numbers of differently outlying measurements, and the automatic detection method is applied during their subsequent processing. The resulting detected measurements are compared against contaminated input outliers and the actual efficiency of the detection method is identified.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The effect of measurement errors is an inevitable natural part of the process of measuring data acquisition. The measuring process is continuously affected by numerous disturbing factors resulting in the appearance of measurement inaccuracies. This natural effect of errors cannot be eliminated from the process in any way, but only, more or less, suppressed, e.g. by the selection of appropriate surveying instruments, experienced qualified operators or sophisticated technological procedures. The effects of measurement errors in general must be predicted, and the measurement methods and successive processing techniques must be set up so that they are maximally resistant to the effect of these errors and are able to suppress their appearance as much as possible, or to successfully detect the already existing errors and eliminate them from the computational process. To ensure the highest quality of results, therefore, the computational processing apparatus must be equipped with a tool allowing the detection and elimination of present erroneous measurements. During classical processing of geodetic measurements, it is assumed that geodetic data are a random sample from a normal probability distribution.

Based on the assumption of the validity of this requirement, the least squares method is exclusively used in practical problems of the adjustment of geodetic measurements. The assumption of the normality, however, is hardly fulfilled in the real world. The quality of surveying measurements is continuously threatened by the effect of errors caused by the measuring technology and operators of geodetic instruments. Due to the effects of these errors, data are contaminated by outlying observations, and the condition of the origin of measurements from a normal probability distribution is violated. The application of the least squares method, in this case, is rather problematic as its sensitivity to the violation of the normality condition of processed data is quite high. If this method is used during the processing of measurements contaminated by outliers, the adjustment computation is significantly affected and erroneous results are obtained. In the case of the input data contamination with outliers, therefore, prior to the adjustment itself, it is necessary to clean the data (by outlier detection and elimination) or process (adjust) the data by using methods less sensitive to the effect of outliers.

The principal objective of the presented article is the description of an original method of the detection of outliers within a set of measured values. The designed method is applied for the adjustment of high precision geodetic networks commonly used in engineering surveying in which large sets of repeatedly measured values are presumed, i.e. large volumes of redundant measurement are considered.

The issues of outlier detection may be solved by using different techniques; apart from the methods applied here, the principles of fuzzy logic (Sisman et al. 2012), the Random Sample Consensus method (Fischler and Bolles 1981), the MINIMAX method (Gašincová and Gašinec 2010) or e.g. the Quantum-Inspired Evolutionary Algorithm (Akter and Khan 2010) may be used. Outlier detection may be based purely on least squares theory too (Baselga 2011). Efficiency testing of outlier detection for geodetic adjustment has been published also in Erenoglu and Hekimoglu (2010), where author’s compared some detection methods based on different principles. Other articles describing this issue are Benning (1995), Hekimoglu and Koch (1999) or Hekimoglu (2005).

2 Design of the outlier detection method

The method is designed and tested in two versions. Both versions are based on the classical least squares adjustment of geodetic measurements. They combine the robust statistical methods and the methods assessing outliers by the magnitude of residuals of individual measurements identified from the adjustment. The detection procedure of outlying values is briefly described below for both selected versions.

Similar applications of robust estimations as a tool for detecting outliers in geodetic networks are presented e.g. in Harvey (1993), Berné and Baselga (2005) focusing on the testing of the detection of gross measurement errors, or Gökalp et al. (2008) comparing different methods of outlier detection in the GPS network.

2.1 One-step detection method

In the initial phase of the measured data processing, the robust M-estimator method (Huber 1964) serves for the estimation of sought unknown parameters, and, subsequently, for the robust estimation of adjusted measurements. The robust M-estimator is based on the modification of the commonly used adjustment principle of measurements by the least squares method. Unlike the least squares method, the used robust M-estimator method is far less sensitive to the fulfilment of the normality condition of processed data, hence it resists, to a certain extent, the effect of outliers. The resulting robust estimation is not significantly affected by outliers.

In the second phase of data processing outlying observations are eliminated. This elimination is based on the assessment of the outlyingness of individual measurements from the computed robust estimation. The measurement is declared as outlying if its residual (identified from the robust adjustment) exceeds an identified limit value. The limit values for residuals are constructed in keeping with the formula prescribed by the data-snooping method (Baarda 1968).

2.2 Two-step detection method

Unlike one-step detection, this method assumes not only the effect of outlying observations, but also gross measurement errors, which are detected separately.

In the first phase of the computation, the robust M-estimator method is used for the estimation of adjusted measurements. Considering the assumed effect of gross errors, it is admitted that despite of its robustness the computation of the M-estimator may partially fail, and due to the effect of gross errors the obtained estimation of adjusted measurements is inaccurate (the obtained estimation is distorted).

By testing the outlyingness of individual values of measurements (by assessing their residuals identified from the robust adjustment) in the next phase of processing, the values affected by gross errors are eliminated from the measured data set. Like in the one-step detection, the elimination principle is also based on the data-snooping method. The criterion of outlyingness is set up in such a way to eliminate only far outlying values, i.e. to eliminate measurements with gross errors.

Now, having eliminated the effect of gross measurement errors, a more accurate estimation of adjusted measurements, which is sufficiently independent of the effect of outliers, is obtained through the recalculation of the robust M-estimator. By repeated testing of the outlyingness (by assessing measurement residuals), the remaining erroneous measurements (outliers) are eliminated from the processed data set. The parameters of the modified data-snooping method in this case correspond to the parameters of testing the outlyingness by means of the one-step detection method.

According to Hekimoglu (2005), if the observations include gross errors, it should be use firstly a robust method with high breakdown point such as least median of squares (LMS), least trimmed squares (LTS) or the robust method which is based on the median presented in Youcai (1995), rather than an M-estimator. The two-step method is presented to be an alternative way of gross error detection. It doesn’t dispose the breakdown point as high as above methods, but during detection processing the different methods don’t require to be combined. Thus, processing implementation of the two-step method is much easier.

3 Robust M-estimator of measurements in a geodetic network

The principle of the robust M-estimator application is based on an iterative least squares adjustment respecting the condition of a gradual change in the weights of individual measurements. A change in the weight is directly proportional to the development of the magnitude of its residual determined by adjustment. The more outlying a measurement from its adjusted value is (i.e. it has a greater residual), the lower its weight, and, hence, the lower its effect on adjustment. In this way, outliers are gradually eliminated and a robust estimation independent of the effect of outliers is obtained.

In each iterative step, depending on the standardised residual of a measurement \(\hat{v}\), a robust change in the measurement weight is calculated

In our general case of the spatial geodetic network adjustment there is the total of \(n\) unknown parameters (Cartesian coordinates of network points \(X,Y,Z\)) grouped into the vector \(\mathbf {x}(n,1)\) and \(m\) measurements (horizontal directions \(\varphi \), zenith angles \(z\) and slope distances \(d\)) grouped into the vector \(\mathbf {l}(m,1)\). The matrix of robust changes in the weights of measurements \(\mathbf {W}(m,m)\) may be expressed in the form

In the initial (zero) iterative step, the robust weights of all measurements are set up as \(w_{i}^{(0)}=1\), the robust weights are not introduced and the estimation of measured variables is computed using the non-robust least squares method, for more detail see e.g. Koch (1996) or Koch (1999). The solution of a set of linearized equations of measurements in a spatial geodetic network in a general \(j\)th iterative step may be expressed as

where \(\overline{\mathbf {x}}(n,1)\) is the vector of the estimation of unknown parameters \(\mathbf {x}\), \(\mathbf {x}_{0}(n,1)\) the vector of approximate values of unknown parameters, \(\mathbf {dx}(n,1)\) the vector of corrections to approximate values of unknown variables, \(\mathbf {A}(m,n)\) the matrix of linearized relations between measurements and unknown parameters (Jacobian matrix), \(\mathbf {P}(m,m)\) the matrix of measurement weights and \(\mathbf {l}'(m,1)\) the vector of reduced measurements. The case of non-correlated measurements is considered in the article, and the weights matrix is diagonal

Correlated measurements are solved e.g. in Rangelova et al. (2009), Xu (1989) or Yang (1994). With the help of the estimation of unknown parameters, standardised residuals of individual values of a measurement \(\hat{v}_{i}^{\left( j\right) }\) (in \(j\)th step) are calculated

where \(\mathbf {v}(m,1)\) is the vector of residuals of measurements \(\mathbf {l}\), \(p_{i}\) the weight of a general measurement \(l_{i}\), \(\sigma _{0}\) the a priori unit standard deviation and \(\sigma _{i}\) the a priori standard deviation of a measurement (\(\sigma _{\varphi },\sigma _{z},\sigma _{d}\)).

In the next iterative step, a set of normal equations is further refined

Depending on the magnitude of a standardised measurement residual, a new robust change in the weight of measurement is calculated according to the weight function of the robust M-estimator

After the iterative adjustment has sufficiently stabilized, the computation process is stopped and the final robust estimation is determined.

4 Robust M-estimators used

The shape of the weight function, i.e. the formula for the calculation of a robust change in the weight of a measurement, expressed in relation to the magnitude of the standardised residual (described in Eq. 1), depends on the type of robust M-estimators. The basic M-estimator is the Huber’s estimator (Huber 1964) whose formula and the weight function shape are displayed in Fig. 1.

The mentioned boundary of the start of the fall in the robust change of the weight of a measurement according to Koch (1999) is usually selected as \(c=1.5 \div 2.0\). While testing the detection method described here, twelve different formulae for the robust weight calculation were used. According to Erenoglu and Hekimoglu (2010), Hekimoglu (2005), Hekimoglu and Koch (1999) the different efficiency of these M-estimators is required. In addition to the above mentioned Huber’s estimator, it was a modified Huber’s estimator, Hampel’s estimator, the Talwar estimator, the Cauchy distribution estimator, Tukey’s Biweight estimator, the Geman–McClure estimator, Andrews’s estimator, the Welsch estimator, the Fair estimator, the L1-norm estimator and the hybrid L1/L2-norm. A detailed description of all these estimators with their prescribed functions may be found e.g. in Jäger et al. (2005). To get a better idea of the design of the robust weight of a measurement, Figs. 2 and 3 display examples of selected weight functions of robust estimations.

5 Detecting outliers of measured variables

The principle of detecting outliers in a set of measurements is based on the assessment of the magnitude of residuals of individual measurements obtained from the robust adjustment. By using the robust M-estimator during the adjustment of geodetic networks, the effect of outlying observations is limited and thus an estimation of measured variables independent of outliers is obtained. It is assumed that the obtained estimation of a measured variable \(\overline{\mathbf {l}}\) approximates its actual (true) value \(L\), and that the set of values (a random sample) of a measured variable comes from a normal probability distribution \(N(L,\sigma ^{2})\).

After the iterative adjustment is stabilised and the robust estimation is determined, individual measurements are subjected to testing for outliers. The comparison

is made, where \(v_{i}\) is the residual of a general measurement \(l_{i}\) and \(v_{Mi}\) is its limit value expressed according to Xu (1993) by the formula

where \(u_{\left( 1-\alpha /2\right) }\) is the critical value calculated for a entered significance level \(\alpha \) as a quantile of the standardised normal probability distribution \(N\left( 0,1\right) \) and \(\sigma _{v_{i}}\) is the standard deviation of the residual of a measurement \(l_{i}\) which is expressed by the formula

where \(\sigma _{0}\) is the a priori unit standard deviation and \(Q_{v_{ii}}\) is \(i\)th diagonal element of the matrix of weight coefficients of residuals \(\mathbf {Q}_{v}\) which is define as

where \(\mathbf {Q}_{l}\) is the matrix of weight coefficients of a measurements and \(\mathbf {Q}_{\overline{l}}\) the matrix of weight coefficients of adjusted measurements. If the limit value \(v_{Mi}\) is exceeded (Fig. 4), the measurement \(l_{i}\) (at the chosen significance level \(\alpha \)) is declared as outlying and eliminated from the set.

In the case of testing the efficiency of the one-step detection method, the value was selected as

In using the two-step detection method, only far outlying values, i.e. presumed gross errors, are eliminated during the first detection at the significance level \(\alpha _{1}\). Then, the second, more accurate robust estimation is made and the second detection of outliers, now at the significance level \(\alpha _{2}\), which corresponds to the significance level \(\alpha \) used for the one-step detection method. To test the efficiency of this method the value was subjectively selected as

This limit value was empirically set with respect to the increasing of residuals of non-outlying measurements which was caused by influence of inserted gross errors.

The described outlier detection method is based on the data-snooping method (Baarda 1968), except for the difference that the outlyingness of the measurements is assessed in relation to their robust M-estimator and not to the least squares method estimation. In this case, the detected outliers need not be gradually eliminated during the gradual readjustment of a measurement (estimation refining), but all measurements are tested and potentially eliminated at once, i.e. in only one computational step. The application of the classical procedure of a gradual elimination of outlying measurements in geodetic networks is described e.g. in Axelson (1996), Gullu and Yilmaz (2010). In Sisman (2010), the comparison of the results of the geodetic network adjustment using the data-snooping method and a simple robust adjustment using the M-estimator method may also be found. A similar comparison including more detection methods applied to the area of GPS measurements processing is also presented in Knight and Wang (2009).

6 Testing the outlier detection method

The usability of the designed detection method of outlying observations is tested by an experiment based on the simulation of the measurements of a model geodetic network. In the first phase of the experiment, a set of measurements is artificially modelled following predefined accuracy parameters. This set is gradually contaminated with varying numbers of differently outlying measurements, and the detection method is repeatedly applied. The efficiency of the detection method is identified by comparing the numbers of inserted and successively detected outliers.

6.1 Simulation of measurements

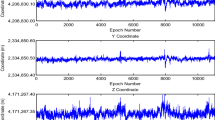

The model simulates a classical output from a high precision terrestrial geodetic measurement made with a total station during the observation of a free geodetic network. Model data contains repeatedly measured horizontal directions \(\varphi \), zenith angles \(z\) and slope distances \(d\) between individual network points. These measurements were simulated as measured in sets. Repeated sets were not averaged. In case of averaging only one outlier would degrade the result (the average value). The model network is designed as a spatial free network shaped like an irregular pentagon with the total number of six station points (see Fig. 5). The model is a copy of real case of setting-out network. The maximum horizontal length between the points is \(100{,}574\) m, the maximum height difference equals \(6.728\) m.

The set of experimental measurements was simulated using a pseudorandom number generator. Model data were generated as pseudorandom samples with a normal probability distribution \(N(X_{0},\sigma ^{2})\). The true values of measured variables \(X_{0}\) (\(\varphi _{0},z_{0},d_{0}\)) are determinated by the input coordinates of the points in the model network. The standard deviations of measured variables \(\sigma \) were selected as \(\sigma _{\varphi }=\sigma _{z}=0.3\) mgon and \(\sigma _{d}=1.4\) mm. The model contains the total of 241 measurements (\(n_{\varphi }=n_{z}=85, n_{d}=71\)) and 26 unknown parameters (18 coordinates of points, 8 orientation corrections (2 standpoints were observed two times with different orientation corrections)). Because the number of degrees of freedom of the selected spatial free network is 4, the model contains 219 redundant measurements, i.e. relatively expressed as 995 % (nearly ten times than it is necessary).

6.2 Data contamination by outliers

Outliers are inserted into the model quite randomly regardless of the type of measurement (\(\varphi ,z,d\)). The number of inserted outliers is expressed in relation to the total number of measurements in the model network. The set of experimental measurements was gradually contaminated with 1–32 % of differently outlying values. The outlyingness rate of a general measured value \(l_{i}\) is defined by the coefficient \(h\) of the standard deviation of a measurement \(\sigma _{i}\) (\(\sigma _{\varphi },\sigma _{z},\sigma _{d}\)). The outlying measurement is defined by the formula

Differently outlying measurements are inserted into the model, starting from slightly outlying measurements (\(h=2\)) up to far outlying measurements affected by a gross error (\(h=500\)). The similar classification of outliers into two categories (outliers with small magnitudes and outliers with large magnitudes called as gross errors) has been previously investigated by Hekimoglu (2005). Extremely high measurement errors (gross errors) are inserted into the experiment for the reason of assessing the theoretic resistance of the detection method.

Outliers are inserted in two ways. In the first case model data are always contaminated by varying numbers of equally outlying values. The results of this simplified test should provide a basic preliminary view of the designed method characteristics. Only the one-step detection method was used for the evaluation of this test. In the second case, more objective testing is carried out. Varying numbers of outliers with a variable outlyingness rate are inserted into the model. Inserted outliers are grouped into individual classes by outlyingness rate expressed coefficient \(h\) (from 4 to 15 classes were used). Both the one-step and two-step detection method were simultaneously applied during the evaluation of the second test. The results from both methods were compared.

7 Evaluation of the experiment

The task of the experiments is to determine the efficiency of outlier detection. This efficiency is described in tables below by two variables. The variable \(\mathbb {A}\) represents the number of correctly detected outliers (the number of values of measured variables which are correctly considered as outlying by the method; expressed as the percentage of the number of outliers introduced into the set) and the variable \(\mathbb {B}\) is the number of wrongly detected non-outliers (the number of values of measured variables which are incorrectly considered as outlying by the method; expressed as the percentage of the total number of values in the set).

To increase the credibility of the results, the whole experiment (each test) was repeated ten times, and the final results were determined as the arithmetic mean of the results of all individual repetitions.

In each test, all 12 selected robust M-estimates (different weight functions) were used. As the reference outlier detection method was chosen the method using the Huber’s robust M-estimator. Because of a large number of outputs, in addition to the results of the basic Huber’s estimator, only the results of the most and the least effective robust estimations are presented here.

7.1 Test at constant outlyingness rate of measurements

The results of testing at a constant outlyingness rate of measured data are shown in Tables 1, 2 and 3. Model data were always contaminated by varying numbers of equally outlying values. The number of inserted outliers was separately 1, 2, 5, 10, 15, 20, 25 and 30 %. The magnitude of outlyingness (expressed by the coefficient \(h\)) was also separately used 2, 2.5, 3, 3.5, 4, 5, 6, 8, 10, 20, 40, 60, 100, 200 and 500. In order to reduce the number of results, the achieved efficiency values presented in the tables are classified into interpretation areas, and only average efficiency values for these areas are presented.

The results of this test imply that the efficiency of all twelve tested robust M-estimators used in the one-step detection method is comparable in the case of a model network with very high number of redundant measurements. Therefore, in the tables there are presented results for Huber’s, Hampel’s and Andrews’s estimator only. The detection of outliers, in this case, is quite successful. Problems arise if the network is contaminated with extreme gross errors, the negative effect of the incorrect detection of non-outliers reveals. The value \(\mathbb {B}=47.2\) % of wrongly detected measurements for Andrews’s robust estimator displayed in Table 3 testifies to the collapse of the whole method.

Based on the testing, the following conclusions may be formulated. The efficiency of the correct identification of outliers \(\mathbb {A}\) is directly proportional to the magnitude of outlyingness (expressed by the coefficient \(h\)). Furthermore, it is evident that the number of wrongly identified non-outliers \(\mathbb {B}\) grows with this magnitude too. In the case of processing geodetic data with a considerable number of redundant measurements and in the absence of the effect of gross errors, the designed detection method appears highly efficient.

7.2 Test at variable outlyingness rate of measurements without gross errors

In this test, the contamination of model measurements with only slightly outlying values, i.e. without gross errors and mistakes present in processed data, is considered. Model data were always contaminated by varying numbers of different outlying values. The inserted outliers were always divided in 5 classes (characterized by \(h = 2.5, 3, 3.5, 4, 5\)). The number of outliers in each class was equal. The test was repeated three times, where number of outliers in each class was 1, 3 and 6 %, i.e. the total number of outliers was 5, 15 and 30 %.

The results describe testing using only the one-step detection method. The two-step detection method is designed for cases where the effect of gross errors is apparent; in their absence, the results are identical to the one-step method. If only less outlying values are present, the detection method is stable and efficient for all twelve tested robust M-estimators. Even if measurements are contaminated with up to 30 % of outliers, the method is able to detect 85–90 % of these (the value A). Using different robust M-estimators the result is virtually identical. Comparing individual robust estimators there is only one difference evident that the number of wrongly identified non-outliers (the value B) grows in some less stable estimations. The number of measurements wrongly considered as outlying by the more stable estimators (demonstrated by Huber’s estimator in Table 4) ranges around 10 % of the total number of outliers present. The number of measurements wrongly considered as outlying by the less stable estimators reaches up to 30–40 % of the total number of outliers present (see Andrews’s estimator in Table 5). This growth was mainly observed in the case of data contamination with 5 % of outliers, while the differences between individual M-estimators fall with the growing number of outliers in a model. Besides shown Andrews’s estimator the less stable estimators are, in this case, the Cauchy distribution estimator, the Geman–McClure estimator and the L1-norm. These conclusions only apply to a network with a high number of redundant measurements.

7.3 Test at variable outlyingness rate of measurements with gross errors

The testing described below focuses on the situation when processed measurements are contaminated not only by slightly outlying values, but also by gross errors. Outliers are detected by the one-step and the two-step detection method. Two cases of the contamination of measurements are described here. Besides the slightly outlying values (represented by 5 classes with \(h\) from 2.5 to 5) there are also gross errors with different magnitudes (three classes with \(h = 10, 20, 100\)) in the first case (Table 6). The total number of outliers is 16 % (2 % in each class). In second case (Table 7) the gross errors are represented by one class with same magnitude (\(h = 100\)). The total number of outliers is 18 % (3 % in each class). Comparing individual robust M-estimators, their precise qualitative order cannot be made on the base of achieved results. Instead, it is more expedient to classify these methods into two categories, estimators with a lower and higher stability. The two categories are represented by the Huber’s estimator and the Andrews’s estimator.

The results imply that the detection method is able to respond to the presence of a certain number of gross errors present in the processed data and detect outliers as well as gross errors. The one-step detection method is able to detect about 90 % of these outliers (the value \(\mathbb {A}\)). The number of measurements wrongly considered as outlying by the method ranges around 20 % (for more stable estimators) and up to 50 % (for less stable estimators) of the total number of outliers present, depending on the type of robust estimation used. All tested robust M-estimators reach virtually the same efficiency in the correct detection of outliers. The difference in the estimators (or their categories) is in the computational stability in the presence of the effect of gross errors. This stability is manifested by the number of wrongly detected non-outliers. In case of the gross error is present, an unstable estimator detects outliers wrongly, i.e. its estimation is not close to the true values. The subsequent detection results in the wrong marking of non-outlying measurements as outliers. Based on the results of testing, the Huber’s estimator, Hampel’s estimator and the Fair estimator may be ranked among the most stable estimators. Lower stability was manifested by the L1-norm estimator, the Talwar estimator, Tukey’s (Biweight) estimator, the Geman–McClure estimator and Andrews’s (sine) estimator. The differences in the stability (i.e. and efficiency) of individual robust M-estimators reveals only in the case when a greater number of more outlying measurements is present. It may lead up to the collapse of the detection method, as is displayed for Talwar estimator in Table 8. In this case, data were contaminated by significant number (30 %) of extremely (\(h = 500\)) outlying measurements.

Comparing the one-step and two-step detection, both methods are practically identical in terms of the assessment of the number of correctly detected outliers. The two-step method gains in importance in the case of the effect of gross errors when it ensures a higher stability of detection. Its application results in the reduction of the effect of gross errors and, successively, in the reduction of the number of measurements that are wrongly considered as outlying. The value of the significance level of the first elimination of outliers \(\alpha _{1}\) (i.e. the limit value of the measurement residual described in Eq. 11) affects the rate of the reduction of the number of wrongly detected outliers. It is expedient to select the value of the significance level \(\alpha _{1}\) on the basis of the presumed magnitude of gross errors. The more probable the occurrence of larger gross errors and mistakes in processed data, the more suitable the selection of a lower significance levels \(\alpha _{1}\) (i.e. a greater limit value of the measurement residual should be selected). Two-step detection of outliers contributes to enhanced stability. If, however, the data contamination exceeds a certain limit, the robust estimation fails like in the one-step method, and the whole detection process collapses (see Table 8). The robust estimation is then so distant from true that the majority of correct non-outlying measurements are eliminated in the process of detection. By the elimination of these values, the effect of non-eliminated outlying measurements further grows and a new estimation is becoming even more distant from true. However, it must be pointed out that these cases of high contamination with outliers and gross errors are practically non-existent in high precision engineering surveying. In this branch, only partial data contamination with outliers with single gross errors is common. In these cases, the detection method shows high stability and allows successful detection of outliers. Therefore, in our opinion, the detection method makes a significant contribution to practice.

The conclusions formulated on the basis of the testing only apply to cases of data with high number of redundant measurements.

8 Conclusion

The experiment resulted in the identification of the usability of the designed outliers detection method based on the application of robust M-estimators. The application of robust estimator for the detection of outliers is a highly efficient method whose applicability, however, is conditioned on numerous factors and input conditions. In order to achieve adequate results, a sufficient number of redundant measurements (corresponding to the number of redundant measurements in commonly measured high precise networks used in engineering surveying) must be guaranteed plus the low presence of gross errors in the measurement (which may be removed by checking the measurements before the adjustment).

The achieved results show that the designed method is able to effectively detect not only less outlying measurements, but also gross errors. All this, however, depends on the number of redundant measurements in the network and on the quantity and magnitude of measurement errors. It corresponds to the conclusions published in Erenoglu and Hekimoglu (2010) or Hekimoglu and Koch (1999). At higher concentrations of gross errors, the negative effect of the wrong elimination of correct non-outlying measurements reveals. This effect can be partly reduced by using the two-step detection method. While assessing objectively the detection method it must be emphasized that the testing in which the method showed instability simulates extreme cases that hardly occur in high precision engineering surveying. In real cases, where the proportion of erroneous measurements is much lower, the detection method showed high stability and efficiency, and its contribution to practical applications appears to be considerable.

In presented experiments was used also high number of exceptionally outlying values to assess the behavior of detection method under extreme conditions. These circumstances must be assumed for usage of the method in the automated adjustment process; the detection method was designed and tested especially for this purpose.

Unlike commonly used outlier detection methods (such as e.g. the data snooping method (Baarda 1968) or the Tau-test (Pope 1976) whose principle is based on repetitive rejections of individual measurements with high residuals), the application of the robust estimation is fully automated, and a decision on the rejection of all outliers can be made at once, and not gradually by assessing single individual measurements.

A significant weakness of the described method is its dependence on the classical least squares adjustment procedure, which is computationally unstable in case of non-convergence of iterative adjustment process. If the least squares method computation fails, the whole outlier detection procedure fails, too. This failure, however, should practically not occur in the case of high precision measurements in engineering.

References

Akter S, Khan MHA (2010) Multiple-case outlier detection in multiple linear regression model using quantum-inspired evolutionary algorithm. J Comput 5(12):1779–1788

Axelson P (1996) Outlier detection in relative orientation: removing or adding observations. In: Kraus K, Waldhäusl P (eds) XVIIIth ISPRS congress-technical commission III: theory and algorithms, international archives of photogrammetry and remote sensing, vol XXXI, pp 42–47

Baarda W (1968) A testing procedure for use in geodetic networks, vol 2. Netherlands Geodetic Commission, Delft

Baselga S (2011) Exhaustive search procedure for multiple outlier detection. Acta Geod Geophys Hung 46(4):401–416

Benning W (1995) Vergleich dreier Lp-Schaetzer zur Fehlersuche in hybriden Lagenetzen. Z Vermess 120(12):606–617

Berné JL, Baselga S (2005) Robust estimation in geodetic networks. Física de la Tierra 17:7–22

Erenoglu RC, Hekimoglu S (2010) Efficiency of robust methods and tests for outliers for geodetic adjustment models. Acta Geod Geophys Hung 45(4):426–439

Fischler MA, Bolles RC (1981) Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. Commun ACM 24(6):381–395

Gašincová S, Gašinec J (2010) Adjustment of positional geodetic networks by unconventional estimations. Acta Montan Slov 15(1):71–85

Gökalp E, Güngör O, Boz Y (2008) Evaluation of different outlier detection methods for gps networks. Sensors 8(11):7344–7358

Gullu M, Yilmaz I (2010) Outlier detection for geodetic nets using adaline learning algorithm. Sci Res Essays 5(5):440–447

Harvey BR (1993) Survey network adjustments by the L1 method. Aust J Geod Photogramm Surv 59:39–52

Hekimoglu S (2005) Do robust methods identify outliers more reliably than conventional tests for outliers? Z Vermess 3:174–180

Hekimoglu S, Koch KR (1999) How can reliability of the robust methods be measured?, vol 1. In: Third Turkish-German Joint Geodetic Days, pp 179–196

Huber P (1964) Robust estimation of a location parameter. Ann Math Stat 35(1):73–101

Jäger R, Müller T, Saler H, Schwäble R (2005) Klassische und robuste Ausgleichungsverfahren: Ein Leitfaden für Ausbildung und Praxis von Geodäten und Geoinformatikern. Herbert Wichmann Verlag, Heidelberg

Knight N, Wang J (2009) A comparison of outlier detection procedures and robust estimation methods in gps positioning. J Navig 62:699–709

Koch KR (1996) Robuste parameterschaetzung. Allg Vermess Nachr 103:1–18

Koch KR (1999) Parameter estimation and hypothesis testing in linear models. Springer, Berlin

Pope AJ (1976) The statistics of residuals and the outlier detection of outliers. NOAA Tech Rep 65(1):256

Rangelova E, Fotopoulos G, Sideris MG (2009) On the use of iterative re-weighting least-squares and outlier detection for empirically modelling rates of vertical displacement. J Geod 83(6):523–535

Sisman Y (2010) Outlier measurement analysis with the robust estimation. Sci Res Essays 5(7):668–678

Sisman Y, Dilaver A, Bektas S (2012) Outlier detection in 3D coordinate transformation with fuzzy logic. Acta Montan Slov 17(1):1–8

Xu PL (1989) On robust estimation with correlated observations. Bull Geod 63:237–252

Xu PL (1993) Consequences of constant parameters and confidence intervals of robust estimation. Boll Geod Sci Affin 52(3):231–249

Yang Y (1994) Robust estimation for dependent observations. Manuscr Geod 19:10–17

Youcai H (1995) On the design of estimators with high breakdown points for outlier identification in triangulation networks. Bull Geod 69:292–299

Acknowledgments

The article was written with support from the internal grant of Czech Technical University in Prague: SGS13/059/OHK1/1T/11 “Optimization of acquisition and processing of 3D data for purpose of engineering surveying”.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Třasák, P., Štroner, M. Outlier detection efficiency in the high precision geodetic network adjustment. Acta Geod Geophys 49, 161–175 (2014). https://doi.org/10.1007/s40328-014-0045-9

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40328-014-0045-9