Abstract

Based on a two-step Newton-like iterative scheme of convergence order \(p \ge 3\), we propose a three-step scheme of convergence order \(p+3\). Furthermore, on the basis of this scheme, a generalized \(q+2\)-step scheme with increasing convergence order \(p+3q\,(q \in \mathbb {N})\) is presented. Local convergence, including the radius of convergence and uniqueness results for the methods, is established. The theoretical results are verified through numerical experimentation, and the performance of the methods is demonstrated by applying them to some nonlinear systems of equations. The numerical results, including the elapsed CPU time, confirm the accuracy and efficiency of the proposed techniques.


1 Introduction

The construction of fixed-point iterative methods for solving nonlinear equations or systems of nonlinear equations is an interesting and challenging task in numerical analysis and in many applied scientific branches. The great importance of this subject has led to the development of many numerical methods, most frequently of an iterative nature (see [4,5,6,7, 31, 32]). With the advancement of computer hardware and software, the problem of solving nonlinear equations by numerical methods has gained additional importance. In this paper, we consider the problem of approximating a solution \(x^*\) of the equation \(F(x)=0\), where \(F:\Omega \subseteq B_1\rightarrow B_2\), \(B_1\) and \(B_2\) are Banach spaces and \(\Omega \) is a nonempty open convex subset of \(B_1\), by iterative methods of a high order of convergence. The solution \(x^*\) can be obtained as a fixed point of some function \(\Phi :\Omega \subseteq B_1\rightarrow B_1\) by means of the fixed-point iteration

$$x_{n+1}=\Phi (x_n),\quad n=0,1,2,\ldots $$

There is a wide variety of iterative methods for solving nonlinear equations. A classical one is the quadratically convergent Newton's method [4]

$$x_{n+1}=x_n-F'(x_n)^{-1}F(x_n),\quad n=0,1,2,\ldots $$

where \( {F}'({x})^{-1}\) is the inverse of the first Fréchet derivative \({F}'(x)\) of \(F\). This method converges if the initial approximation \(x_0\) is sufficiently close to the solution \(x^*\) and \( F'({x})^{-1}\) exists in an open neighborhood \(\Omega \) of \(x^*.\) In order to attain a higher order of convergence, a number of modified Newton or Newton-like methods have been proposed in the literature; see, for example, [1,2,3, 6, 8,9,10, 12,13,30] and the references therein.
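As a concrete reference point, Newton's method can be sketched in a few lines of Python for a system in \(\mathbb {R}^m\). The correction \(F'(x_n)^{-1}F(x_n)\) is obtained by solving the linear system \(F'(x_n)s=F(x_n)\) rather than by forming the inverse. The helper names `solve` and `newton`, the stopping tolerance and the \(2\times 2\) test system are illustrative assumptions, not taken from the paper; a small Gaussian-elimination routine keeps the block free of third-party dependencies.

```python
def solve(a, b):
    """Solve the square system a s = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]  # augmented matrix (copied)
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(m[i][k]))  # pivot row
        m[k], m[p] = m[p], m[k]
        for i in range(k + 1, n):
            factor = m[i][k] / m[k][k]
            for j in range(k, n + 1):
                m[i][j] -= factor * m[k][j]
    s = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        s[i] = (m[i][n] - sum(m[i][j] * s[j] for j in range(i + 1, n))) / m[i][i]
    return s


def newton(F, J, x, tol=1e-12, max_iter=50):
    """Newton's method x_{n+1} = x_n - F'(x_n)^{-1} F(x_n), without forming the inverse."""
    for _ in range(max_iter):
        step = solve(J(x), F(x))          # step = F'(x)^{-1} F(x)
        x = [xi - si for xi, si in zip(x, step)]
        if max(abs(si) for si in step) < tol:
            break
    return x


# Illustrative system (not from the paper): x^2 + y^2 = 1 and x = y
F = lambda v: [v[0] ** 2 + v[1] ** 2 - 1, v[0] - v[1]]
J = lambda v: [[2 * v[0], 2 * v[1]], [1.0, -1.0]]
root = newton(F, J, [1.0, 0.5])
print(root)
```

Starting from \((1, 0.5)\), the iterates approach \((1/\sqrt{2}, 1/\sqrt{2})\), and the number of correct digits roughly doubles at each step, as expected for a quadratically convergent method.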

In this paper, we consider a three-step iterative scheme and its multistep version for solving the nonlinear system \(F(x)=0\). The three-step scheme is given by

where \(\varphi ^{(p)}_{\alpha }(x_{n}, y_{n})\) is any iterative scheme of convergence order \(p\ge 3 \), \(\psi (x_n, y_n)=\big (\beta I+\gamma F'(y_n)^{-1}F'(x_n)+\delta F'(x_n)^{-1}F'(y_n)\big )F'(x_n)^{-1}\) and \(\alpha , \beta , \gamma , \delta \in \mathbb {R}\) are parameters.
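In the scalar case (\(m=1\)), the derivatives commute and \(\psi \) reduces to an ordinary weight function; the following direct specialization of the definition above makes the roles of \(\beta \), \(\gamma \) and \(\delta \) explicit:

$$\psi (x_n, y_n)=\frac{1}{F'(x_n)}\bigg (\beta +\gamma \,\frac{F'(x_n)}{F'(y_n)}+\delta \,\frac{F'(y_n)}{F'(x_n)}\bigg )=\frac{\beta }{F'(x_n)}+\frac{\gamma }{F'(y_n)}+\frac{\delta \,F'(y_n)}{F'(x_n)^2}.$$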

The multistep version of (2), consisting of \(q+2\) steps, is expressed as

where \(q \in \mathbb {N}\) and \(z_n^{(0)}=z_n.\)

In Sect. 2, we show that, for a particular set of values of the parameters \(\alpha , \, \beta ,\, \gamma \) and \(\delta \), methods (2) and (3) possess convergence orders \(p+3\) and \(p+3q\), respectively. In Sect. 3, the local convergence analysis of the proposed methods, including the radius of convergence, computable error bounds and uniqueness results, is presented. Some numerical examples verifying the theoretical results are presented in Sect. 4. Finally, in Sect. 5, the methods are applied to solve some systems of nonlinear equations.

2 Convergence-I

We present the convergence analysis of method (2) for the case \( F:\Omega \subset \mathbb {R}^{m}\rightarrow \mathbb {R}^{m}\).

Theorem 1

Suppose that

(i) \(F:\Omega \subset \mathbb {R}^{m}\rightarrow \mathbb {R}^{m}\) is a sufficiently many times differentiable mapping.

(ii) There exists a solution \(x^*\in \Omega \) of equation \(F(x)=0\) such that \(F'(x^*)\) is nonsingular.

Then, the sequence \(\{x_n\}\) generated by method (2) for \(x_0\in \Omega \) sufficiently close to \(x^*\) converges to \(x^*\) with order \(p+3\) for \(p\ge 3\) if and only if

Proof

Let \(e_n=x_n-x^*\). Using Taylor’s theorem and the hypothesis \(F(x^*)=0\), we obtain in turn that

where \(T_{i}=\frac{1}{i!}F'(x^*)^{-1}F^{(i)}(x^*)\) and \((e_n)^{i}=(e_{n}, e_{n},{\mathop {\ldots }\limits ^{i}},e_{n})\), with \(e_{n}\in \mathbb {R}^{m}\) and \({i}\in \mathbb {N}\).
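With this notation, and since \(F(x^*)=0\), the Taylor expansions of \(F(x_n)\) and \(F'(x_n)\) about \(x^*\) take the standard form below (written out for reference):

$$F(x_n)=F'(x^*)\big [e_n+T_2(e_n)^2+T_3(e_n)^3+T_4(e_n)^4+O((e_n)^5)\big ],$$

$$F'(x_n)=F'(x^*)\big [I+2T_2e_n+3T_3(e_n)^2+4T_4(e_n)^3+O((e_n)^4)\big ].$$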

Also

and

For \(\tilde{e}_n=y_n-x^*\), we have that

Using Taylor's theorem again to expand \(F'(y_n)\) about \(x^*\), we get in turn that

so

It then follows from Eqs. (5), (6), (8) and (9) that

and

Consequently, summing up we get in turn that

Then

By hypothesis, \(\{z_n\}\) is of order p, so we set \(\bar{e}_{n}=z_n-x^*=K(e_{n})^{p}+O((e_{n})^{p+1})\) with \(K\ne 0\). Then, we have

Using (10) and (11) in the third substep of method (2), it follows that

Therefore, the convergence to \(x^*\) is of order \(p+3\, \, (p\ge 3)\) if and only if the parameters \(\alpha \), \(\beta \), \(\gamma \) and \(\delta \) satisfy

whose unique solution is given in (4).

Note that we have not shown the coefficient of \((e_n)^3\bar{e}_{n}\) in (12) because the expression is lengthy. However, using the values of the parameters given in (4), we can write the error equation in the simplified form

It follows from Theorem 1 that the method to be used from the family (2) is given by

Next, we show that method (3), with the values of the parameters \(\alpha , \, \beta ,\, \gamma \) and \(\delta \) given in (4), possesses convergence order \(p+3q\). This is the content of the following theorem.

Theorem 2

Under the hypotheses of Theorem 1, the sequence \(\{x_n\}\) generated by method (3) for \(x_0\in \Omega \) converges to \(x^*\) with order \(p+3q\) for \(p\ge 3\) and \(q \in \mathbb {N}\).

Proof

Taylor’s expansion of \(F(z^{(q-1)}_n)\) about \(x^*\) yields

Then, we have that

Since \(z^{(1)}_n-x^*=2KT_2(4T_2^2-T_3)(e_n)^{p+3}+O((e_n)^{p+4})\), it follows from (17) for \(q=2,3\) that

and

Proceeding by induction, we have

This completes the proof of Theorem 2. \(\square \)
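The orders \(p+3\) and \(p+3q\) can be observed numerically. The following Python sketch is a scalar illustration under stated assumptions, not the authors' implementation. It assumes that the first substep is the Newton step \(y_n=x_n-F'(x_n)^{-1}F(x_n)\) (that is, \(\alpha =1\), as in (a5) and Remark (c)), that \(z_n=\varphi _1^{(4)}(x_n,y_n)=y_n-F'(y_n)^{-1}F(y_n)\), and that each further substep has the form \(z\leftarrow z-\psi (x_n,y_n)F(z)\) with the weight frozen at \((x_n,y_n)\). The weights \(\beta =-1\), \(\gamma =3/2\), \(\delta =1/2\) are our own scalar-case derivation, not values quoted from (4): they solve the conditions that make \(\psi (x_n,y_n)F'(x^*)=1+O((e_n)^3)\) for \(\alpha =1\), and with them the leading scalar error term comes out as \(2KT_2(4T_2^2-T_3)(e_n)^{p+3}\), matching the expansion of \(z_n^{(1)}-x^*\) used in the proof above. The test function \(f(x)=x^3+4x^2-10\), the starting point \(x_0=1\) and the 2500-digit precision are illustrative choices; Python's standard decimal module is used because errors far below \(10^{-16}\) cannot be resolved in double precision.

```python
from decimal import Decimal, getcontext

getcontext().prec = 2500  # significant digits; needed to resolve errors far below 1e-16


# Illustrative scalar test problem (not from the paper)
def f(x):
    return x**3 + 4 * x**2 - 10


def df(x):
    return 3 * x**2 + 8 * x


# ASSUMED weights: our scalar derivation for alpha = 1, not values quoted from (4)
BETA, GAMMA, DELTA = Decimal(-1), Decimal(3) / 2, Decimal(1) / 2


def sweep(x, q):
    """One iteration of the sketched (q+2)-step method; q = 1 is the three-step case."""
    dx = df(x)
    y = x - f(x) / dx                     # assumed first substep: Newton step (alpha = 1)
    dy = df(y)
    z = y - f(y) / dy                     # z_n = phi_1^(4)(x_n, y_n), Eq. (41)
    psi = (BETA + GAMMA * dx / dy + DELTA * dy / dx) / dx  # scalar psi(x_n, y_n)
    for _ in range(q):                    # assumed substeps z <- z - psi * f(z), weight frozen
        z = z - psi * f(z)
    return z


def acoc_last(xs):
    """ACOC of formula (40), evaluated on the last four iterates."""
    d = [abs(b - a) for a, b in zip(xs, xs[1:])]
    return (d[-1] / d[-2]).ln() / (d[-2] / d[-3]).ln()


def run(q, x0="1", steps=4):
    xs = [Decimal(x0)]
    for _ in range(steps):
        xs.append(sweep(xs[-1], q))
    return xs


for q in (1, 2):
    print(f"q={q}: expected order {4 + 3 * q}, ACOC ~ {float(acoc_last(run(q))):.3f}")
```

With \(p=4\), one expects the printed ACOC values to be close to 7 for \(q=1\) and to 10 for \(q=2\); only the last four iterates are used because the first step from \(x_0=1\) is not yet in the asymptotic regime.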

3 Convergence-II

In this section, we study the convergence of the new methods in a Banach space setting. Let \(w_0:\mathbb {R}_+\cup \,\{0\}\rightarrow \mathbb {R}_+\cup \,\{0\}\) be a continuous and nondecreasing function with \(w_0(0)=0\). Let also \(\varrho \) be the smallest positive solution of the equation

Consider a function \(w:[0, \varrho )\rightarrow \mathbb {R}_+\cup \,\{0\}\), continuous and nondecreasing with \(w(0)=0\). Define functions \(g_1\) and \(h_1\) on the interval \([0, \varrho )\) by

$$g_1(t)=\frac{\int _0^1w((1-\theta )t)\,d\theta }{1-w_0(t)}$$

and

$$h_1(t)=g_1(t)-1.$$

We have \(h_1(0)=-1<0\) and \( h_1(t)\rightarrow +\infty \) as \(t\rightarrow \varrho ^{-}\). By the intermediate value theorem, the equation \(h_1(t)=0\) has solutions in \((0,\varrho )\); denote by \(\varrho _1\) the smallest such solution. Let \(\lambda \ge 1\) and let \(g_2:[0, \varrho _1)\rightarrow \mathbb {R}_+\cup \,\{0\}\) be a continuous and nondecreasing function. Define the function \(h_2\) on \([0, \varrho _1)\) by

$$h_2(t)=g_2(t)\,t^{\lambda -1}-1.$$

Suppose that \(g_2(t)t^{\lambda -1}-1\rightarrow +\infty \) or a positive number as \(t\rightarrow \varrho _1^{-}\).

Then, we get that \(h_2(0)=-1<0\) and \(h_2(t)\rightarrow +\infty \) or a positive number as \(t\rightarrow \varrho _1^{-}\). Denote by \(\varrho _2\) the smallest solution in \((0, \varrho _1)\) of equation \(h_2(t)=0\). If \(\lambda =\,1\), suppose instead of (20) that

and \(g_2(t)-1\rightarrow +\infty \) or a positive number as \(t\rightarrow \varrho _1^{-}\). Denote again by \(\varrho _2\) the smallest solution of equation \(h_2(t)=0\).

Let \(v:[0, \varrho _1)\rightarrow \mathbb {R}_+\cup \,\{0\}\) be a continuous and nondecreasing function. Define functions \(g_3\) and \(h_3\) on the interval \([0, \varrho _1)\) by

and

We obtain that \(h_3(0)=-1<0\) and \(h_3(t)\rightarrow +\infty \) as \(t\rightarrow \varrho _2^{-}\). Denote by \(\varrho _3\) the smallest solution of the equation \(h_3(t)=0\) in \((0, \varrho _2)\). Then, we have that for each \(t\in [0,\varrho _3)\)

Denote by \(U(\mu ,\varepsilon )=\{x\in B_1: \Vert x-\mu \Vert <\varepsilon \}\) the ball with center \(\mu \in B_1\) and radius \(\varepsilon >0\), and by \(\bar{U}(\mu ,\varepsilon )\) its closure. We present the local convergence analysis of method (14) in a Banach space setting under the following hypotheses (A):

(a1) \(F:\Omega \subseteq B_1\rightarrow B_2\) is a continuously Fréchet-differentiable operator.

(a2) There exists \(x^*\in \Omega \) such that \(F(x^*)=0\) and \(F'(x^*)^{-1}\in \mathfrak {L}(B_2,B_1).\)

(a3) There exists a function \(w_0: {\mathbb {R}_{+}}\cup \{0\}\rightarrow {\mathbb {R}_{+}}\cup \{0\}\), continuous and nondecreasing with \(w_0(0)=0\), such that for each \(x\in \Omega \)

$$\begin{aligned} \Vert F'(x^*)^{-1}(F'(x)-F'(x^*))\Vert \le w_0(\Vert x-x^*\Vert ). \end{aligned}$$

(a4) Let \(\Omega _0=\Omega \cap U(x^*,\varrho )\), where \(\varrho \) was defined previously. There exist functions \(w:[0,\varrho )\rightarrow {\mathbb {R}_{+}}\cup \{0\}\) and \(v:[0,\varrho )\rightarrow {\mathbb {R}_{+}}\cup \{0\}\), continuous and nondecreasing with \(w(0)=0\), such that for each \(x, y \in \Omega _0\)

$$\begin{aligned} \Vert F'(x^*)^{-1}(F'(x)-F'(y))\Vert \le w(\Vert x-y\Vert ) \end{aligned}$$

and

$$\begin{aligned} \Vert F'(x^*)^{-1}F'(x)\Vert \le v(\Vert x-x^*\Vert ). \end{aligned}$$

(a5) There exist a function \(g_2: [0,\varrho _1)\rightarrow {\mathbb {R}_{+}}\cup \,\{0\}\), continuous and nondecreasing, and \(\lambda \ge 1\), satisfying (20) if \(\lambda > 1\) and (21) if \(\lambda = 1\), such that

$$\begin{aligned} \left\| \varphi _\alpha ^{(p)} \big (x, x-F'(x)^{-1}F(x)\big )-x^*\right\| \le g_2(\Vert x-x^*\Vert )\Vert x-x^*\Vert ^{\lambda }. \end{aligned}$$

(a6) \(\bar{U}(x^*,\varrho _3)\subseteq \Omega \).

(a7) There exists \(\varrho ^*\ge \varrho _3\) such that \(\int _0^1w_0(\theta \varrho ^*)d\theta <1\); set \(\Omega _1=\Omega \cap \bar{U}(x^*,\varrho ^*)\).

Theorem 3

Suppose that the hypotheses (A) are satisfied. Then, the sequence \(\{x_n\}\) generated for \(x_0\in U(x^*,\varrho _3)-\{x^*\}\) by method (14) is well defined in \(U(x^*,\varrho _3)\), remains in \(U(x^*,\varrho _3)\) for all \(n=0,1,2,\ldots \) and converges to \(x^*\), so that

and

where the functions \(g_i, \, \, i=1,2,3\), are as defined above. Moreover, \(x^*\) is the only solution of the equation \(F(x)=0\) in \(\Omega _1\).

Proof

We shall prove estimates (23)–(25) by mathematical induction. By hypotheses (a2) and (a3), for \(x\in U(x^*,\varrho _3)\), we have that

By the Banach perturbation Lemma [4, 6] and (26) we get that \(F'(x)^{-1}\in \mathfrak {L}(B_2, B_1)\) and

In particular, (27) holds for \(x=x_0\), since \(x_0\in U(x^*,\varrho _3)-\{x^*\}\), and \(y_0\), \(z_0\) are well defined by the first and second substeps of method (14) for \(n=0\). By the first substep of method (14) and (a2), we can write

Then, using (22) (for \(i=1\)), the first condition in (a4), (27) (for \(x=x_0\)) and (28) we get in turn that

which implies (23) for \(n=0\) and \(y_0\in U(x^*,\varrho _3)\).

Using (a5) and (22) for \(i=2\), we get that

so (24) holds for \(n=0\) and \(z_0\in U(x^*,\varrho _3)\). Notice that since \(y_0, z_0\in U(x^*, \varrho _3)\), we have that

and

Moreover, \(x_1\) is well defined by the third substep of method (14) for \(n=0\), and by this substep we can write

Using (22) (for \(i=3\)), (27), (a3), (29)–(33), and the triangle inequality, we obtain in turn that

which shows (25) for \(k=0\) and \(x_1\in U(x^*,\varrho _3)\). The induction for estimates (23)–(25) is completed by simply replacing \(x_0\), \(y_0\), \(z_0\), \(x_1\) by \(x_k\), \(y_k\), \(z_k\), \(x_{k+1}\) in the preceding estimates. Then, from the estimate

we deduce that \({\lim }_{k\rightarrow \infty } {x_k}=x^*\) and \(x_{k+1}\in U(x^*,\varrho _3)\).

The uniqueness part is shown using (a3) and (a7) as follows:

Define operator Q by \(Q=\int _0^1F'(x^{**}+\theta (x^*-x^{**}))d\theta \) for some \(x^{**}\in \Omega _1\) with \(F(x^{**})=0\). Then, we have that

so \(Q^{-1}\in \mathfrak {L}(B_2, B_1)\). Then, from the identity

we conclude that \(x^*=x^{**}\). \(\square \)
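For reference, the Banach perturbation lemma used in the proof of Theorem 3 (see [4, 6]) is the following standard statement: if \(A\) is a bounded linear operator on a Banach space with \(\Vert I-A\Vert <1\), then \(A^{-1}\) exists and

$$\Vert A^{-1}\Vert \le \frac{1}{1-\Vert I-A\Vert }.$$

Taking \(A=F'(x^*)^{-1}F'(x)\), hypothesis (a3) gives \(\Vert I-A\Vert \le w_0(\Vert x-x^*\Vert )\), so whenever \(w_0(\Vert x-x^*\Vert )<1\) we obtain

$$\Vert F'(x)^{-1}F'(x^*)\Vert \le \frac{1}{1-w_0(\Vert x-x^*\Vert )}.$$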

Next, we present the local convergence analysis of method (3) along the same lines as for method (14). Define the functions \(\bar{g}_2\), \( \lambda \), \(\mu \) and \(h_{\mu }\) on the interval \([0,\varrho _2)\) by

and

We have that \(h_{\mu }(0)<0\). Suppose that

Denote by \(\varrho ^{(q)}\) the smallest zero of function \(h_{\mu }\) on the interval \((0,\varrho _2)\). Define the radius of convergence \(\varrho ^*\) by

Denote by \((A')\) the conditions (A) but with \(\varrho ^*\) replacing \(\varrho \) together with condition (35).

Proposition 1

Suppose that the conditions \((A')\) hold. Then, sequence \(\{x_n\}\) generated for \(x_0\in U(x^*, \varrho ^*)-\{x^*\}\) by method (3) is well defined in \(U(x^*, \varrho ^*)\), remains in \(U(x^*, \varrho ^*)\) and converges to \(x^*\). Moreover, the following estimates hold

and

where the functions \(\lambda \) and \(\mu \) are defined previously. Furthermore, the vector \(x^*\) is the only solution of equation \(F(x)=0\) in \(\Omega _1\).

Proof

We shall only show the new estimates (37) and (38); the first two estimates follow as in the proof of Theorem 3. Then, we can obtain that

Moreover, we have in turn the estimates

Similarly, we get that

That is, we have \(x_k\), \(z_k\), \(z_k^{(i)}\) \(\in U(x^*, \varrho ^*)\), \(i=1,2,\ldots , q \), and

where \(\bar{c}=\mu (\Vert x_0-x^*\Vert )\in [0,1)\), so \(\lim _{k\rightarrow \infty }x_k=x^*\) and \(x_{k+1}\in U(x^*, \varrho ^*)\). \(\square \)

Remark

(a) The results obtained here can be used for operators F satisfying an autonomous differential equation [5] of the form

$$\begin{aligned} F'(x)=T(F(x)), \end{aligned}$$

where T is a known continuous operator. Since \(F'(x^*)=T(F(x^*))=T(0)\), we can apply the results without actually knowing the solution \(x^*\). As an example, let \(F(x)=e^x-1\); then we can choose \(T(x)=x+1\).

(b) It is worth noticing that methods (14) and (3) do not change when we use the conditions of Theorem 3 instead of the stronger conditions used in Theorems 1 and 2. Moreover, we can compute the computational order of convergence (COC) [32], defined by

$$COC=\ln \bigg (\frac{\Vert x_{n+1}-x^*\Vert }{\Vert x_{n}-x^*\Vert }\bigg )\bigg / \ln \bigg (\frac{\Vert x_{n}-x^*\Vert }{\Vert x_{n-1}-x^*\Vert }\bigg ),\quad n=1,2,\ldots \tag{39}$$

or the approximate computational order of convergence (ACOC) [14], given by

$$ACOC=\ln \bigg (\frac{\Vert x_{n+1}-x_n\Vert }{\Vert x_{n}-x_{n-1}\Vert }\bigg ) \bigg /\ln \bigg (\frac{\Vert x_{n}-x_{n-1}\Vert }{\Vert x_{n-1}-x_{n-2}\Vert }\bigg ),\quad n=2,3,\ldots \tag{40}$$

In this way, we obtain the order of convergence in practice.

(c) Numerous choices for the function \(\varphi _\alpha ^{(p)}\) are possible. Let us choose, e.g., \(p=4\), \(\alpha =1\) and

$$\varphi _1^{(4)}(x_n, y_n) = y_n-F'(y_n)^{-1}F(y_n), \tag{41}$$

which is a fourth-order iteration function. Then, as in (29), we obtain

$$\begin{aligned} \Vert z_n-x^*\Vert \le&\ \frac{\int _0^1w((1-\theta )\Vert y_n-x^*\Vert )\,d\theta \,\Vert y_n-x^*\Vert }{1-w_0(\Vert y_n-x^*\Vert )}\\ \le&\ \frac{\int _0^1w((1-\theta )g_1(\Vert x_n-x^*\Vert )\Vert x_n-x^*\Vert )\,d\theta \, g_1 (\Vert x_n-x^*\Vert )\Vert x_n-x^*\Vert }{1-w_0(g_1(\Vert x_n-x^*\Vert )\Vert x_n-x^*\Vert )}. \end{aligned}$$

So, we can choose

$$g_2(t)=\frac{\int _0^1w((1-\theta )g_1(t)t)\,g_1(t)\,d\theta }{1-w_0(g_1(t)t)}\quad \text {and}\quad \lambda =1.$$

Then, the function \(g_3\) is given by Eq. (22).

It is worth noticing that the definition of the function \(g_2\) (and consequently of the function \(g_3\)) is not unique. Indeed, let \(w_0(t)=l_0\,t\) and \(w(t)=l\,t\). Then we get \(g_1(t)=\frac{lt}{2(1-l_0t)}\), \(g_2(t)=\Big (\frac{l}{2}\Big )^3 \frac{1}{(1-l_0t)^2}\) and \(\lambda =4\) (see also Example 2 for \(l_0=15 \) and \(l=30\)).
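Formulas (39) and (40) translate directly into code. The Python sketch below (scalar iterates; the function names are illustrative) evaluates both on Newton's method for \(x^2-2=0\), whose order is 2. Since (40) involves \(x_{n-2}\), ACOC can only be evaluated from \(n=2\) onwards, and both formulas break down once consecutive iterates agree to working precision; in double precision only the first few Newton iterates are usable, which is why the loop stops after four steps.

```python
import math


def coc(xs, root):
    """COC, formula (39): needs the solution x*; returns values for n = 1, 2, ..."""
    e = [abs(x - root) for x in xs]
    return [math.log(e[n + 1] / e[n]) / math.log(e[n] / e[n - 1])
            for n in range(1, len(e) - 1)]


def acoc(xs):
    """ACOC, formula (40): uses the iterates only; returns values for n = 2, 3, ..."""
    d = [abs(b - a) for a, b in zip(xs, xs[1:])]  # d[k] = |x_{k+1} - x_k|
    return [math.log(d[n] / d[n - 1]) / math.log(d[n - 1] / d[n - 2])
            for n in range(2, len(d))]


# Newton iterates for x^2 - 2 = 0 from x0 = 1, stopped before they stagnate in double precision
xs = [1.0]
for _ in range(4):
    x = xs[-1]
    xs.append(x - (x * x - 2) / (2 * x))

print(coc(xs, math.sqrt(2)))
print(acoc(xs))
```

The last COC and ACOC values are both close to 2; values computed farther from the solution may deviate more.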

4 Numerical examples

Here, we demonstrate the theoretical results proved in Sect. 3. For this purpose, we take the methods of family (3) obtained with \(\varphi _1^{(4)}(x_n, y_n)\) and the functions \(g_1\), \({g_2}\), \(g_3\) given in Remark (c). Since \(p=4\), the choices \(q=1\) and \(q=2\) give methods of orders seven and ten, which we denote by \(M_7\) and \(M_{10}\), respectively. We consider the following two numerical examples:

Example 1

Let \(B_1=B_2=C[0,1]\). Consider the equation

where the kernel T is the Green’s function defined on the interval \([0,1]\times [0,1]\) by

Define operator \(F:C[0,1]\rightarrow C[0,1]\) by

then,

Notice that \(x^*(s)=0\) is a solution of (42). Using (43), we obtain

Then, by (43) and (a4), we have that

Hence, we can set \(w_0(t)=w(t)=\frac{1}{32}\big (3t^{\frac{1}{2}}+t \big )\) and \(v(t)=1+w_0(t)\). Numerical results are displayed in Table 1. From the numerical values, we observe that \(\varrho ^*> 1\). However, since we cannot go outside the unit ball, we take \(\varrho ^*\) to be the maximum admissible value, which is 1. Thus, for both methods M\(_7\) and M\(_{10}\), we have \(\varrho ^* = 1\).

Thus, the convergence of the methods \(M_7\) and \(M_{10}\) to \(x^*(s)= 0\) is guaranteed, provided that \(x_0 \in U(x^*,\varrho ^*)\). Notice that, in view of (45), earlier results using hypotheses on the second or higher derivatives cannot be used to solve this problem [3, 4, 26].

Example 2

Let \(B_1=B_2=C[0,1]\), the space of continuous functions defined on the interval [0, 1], equipped with the max norm. Let \(\Omega =\bar{U}(0,1)\). Define the function F on \(\Omega \) by

We have that

Then for \(x^*=0\) we have \(w_0(t)=15t\), \(w(t)=30t\), \(v(t)=2\). The parameters are shown in Table 2.

Thus, the methods \(M_7\) and \(M_{10}\) converge to \(x^*=0\), provided that \(x_0 \in U(x^*,\varrho ^*)\).
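The first two radii of Sect. 3 can be evaluated numerically for the majorant functions of Examples 1 and 2. The Python sketch below rests on assumptions that should be checked against the definitions in Sect. 3: it takes \(\varrho \) to be the smallest positive root of \(w_0(t)=1\) and uses \(g_1(t)=\int _0^1w((1-\theta )t)\,d\theta /(1-w_0(t))\) with \(h_1(t)=g_1(t)-1\), the forms that reduce to \(g_1(t)=lt/(2(1-l_0t))\) of Remark (c) when \(w_0(t)=l_0t\) and \(w(t)=lt\). The helper names, the bisection and the Simpson quadrature are illustrative choices. It does not compute \(\varrho _2\), \(\varrho _3\) or \(\varrho ^*\), which also depend on \(g_2\) and \(g_3\).

```python
import math


def bisect(fun, lo, hi, iters=100):
    """Bisection assuming fun(lo) < 0 <= fun(hi); returns the left end of the final bracket."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if fun(mid) < 0:
            lo = mid
        else:
            hi = mid
    return lo


def simpson01(fun, n=2000):
    """Composite Simpson rule for the integral of fun over [0, 1]; n must be even."""
    h = 1.0 / n
    total = fun(0.0) + fun(1.0)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * fun(k * h)
    return total * h / 3


def radii(w0, w):
    """Return (rho, rho1) under the ASSUMED definitions stated in the lead-in."""
    hi = 1.0
    while w0(hi) < 1:              # bracket the root of w0(t) = 1
        hi *= 2
    rho = bisect(lambda t: w0(t) - 1, 0.0, hi)

    def g1(t):                     # assumed g_1; equals l t / (2 (1 - l0 t)) for linear w, w0
        return simpson01(lambda th: w((1 - th) * t)) / (1 - w0(t))

    # h_1 = g_1 - 1 equals -1 at t = 0 and blows up as t -> rho from below
    rho1 = bisect(lambda t: g1(t) - 1, 0.0, rho * (1 - 1e-9))
    return rho, rho1


def w_ex1(t):                      # Example 1: w_0 = w = (3 t^(1/2) + t) / 32
    return (3 * math.sqrt(t) + t) / 32


print("Example 1:", radii(w_ex1, w_ex1))
print("Example 2:", radii(lambda t: 15 * t, lambda t: 30 * t))
```

Under these assumptions, Example 2 gives \(\varrho =1/15\) and \(\varrho _1=1/30\), which can be checked by hand from \(15t/(1-15t)=1\). For Example 1, both values exceed 1 (about 18.94 and 10.52), which is at least compatible with the observation there that the computed radius exceeds the unit ball.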

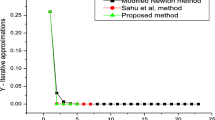

5 Applications

We apply the methods \(M_7\) and \(M_{10}\) of the proposed family (3) to solve systems of nonlinear equations in \(\mathbb {R}^{m}\). The performance of the present methods is also compared with that of existing higher-order methods. Specifically, we choose the fifth-order method proposed by Madhu et al. [23]; the sixth-order methods by Parhi and Gupta [26], Esmaeili and Ahmadi [15], Behl et al. [9] and Grau et al. [17]; and the eighth-order method by Sharma and Arora [28]. These methods are given as follows:

Madhu–Babajee–Jayaraman method (\(MBJ_5\)):

where \(H=2I-t(x_n)+\frac{5}{4}(t(x_n)-I)^2 \) and \(t(x_n)={F}'({x}_{n})^{-1}{F'}({y}_{n}).\)

Behl–Cordero–Motsa–Torregrosa method (\(BCMT_6\))

where \(a=\frac{2}{3}\), \(b=-\frac{1}{6}\), \(c=-1\), \(d=3\), \(g=\frac{1}{2}\), \(e=-\frac{2g+1}{2(g-1)^2}\) and \(h=\frac{3}{2(g-1)^2}\).

Parhi–Gupta method (\(PG_6\)):

Esmaeili–Ahmadi method (\(EA_6\)):

Grau–Grau–Noguera method (\(GGN_6\)):

Sharma–Arora method (\(SA_8\)):

where \(G_n=F'(x_{n})^{-1}{F}'(y_{n})\).

The programs were run on an AMD A8-7410 APU with AMD Radeon R5 Graphics @ 2.20 GHz (64-bit operating system, Microsoft Windows 10 Ultimate 2016) and were implemented in Mathematica using multiple-precision arithmetic. For every method, we record the number of iterations (n) needed to converge to the solution such that the stopping criterion

is satisfied. In order to verify the theoretical order of convergence, we calculate the approximate computational order of convergence (ACOC) using formula (40). In comparing the performance of the methods, we also include the CPU time used in executing the program, which is measured with the Mathematica command “TimeUsed[ ]”.

We consider the nonlinear integral equation \(F(x)=0\) where

wherein \(s\in [0,1]\) and \(x\in \Omega =U(0,2)\subset X\). Here, \(X=C[0,1]\) is the space of continuous functions on [0, 1] with the max-norm,

An integral equation of this kind is called a Chandrasekhar equation (see [11]); it arises in radiative transfer theory, neutron transport problems and the kinetic theory of gases.

Using the trapezoidal rule of integration with step \(h=1/m\) to discretize (46), we obtain the following system of nonlinear equations

where \(s_i=t_i=i/m\) and \(x_i=x(t_i)\) with \(x_0=1/2\). We apply the methods to solve (47) for the sizes \(m= 8, 25, 50, 100\), choosing the initial approximation \(\{x_1,x_2, \ldots , x_m\}^T=\{\frac{1}{10},\frac{1}{10}, \ldots , \frac{1}{10}\}^T\) (all m components equal to \(\frac{1}{10}\)). The corresponding solutions are given by

and

Numerical results are displayed in Table 3, which include:

- The dimension (m) of the system of equations.
- The required number of iterations (n).
- The error \(||x_{n+1}-x_n||\) in approximating the corresponding solution, where \(A(-h)\) denotes \(A \times 10^{-h}\).
- The approximate computational order of convergence (ACOC), calculated by formula (40).
- The elapsed CPU time (CPU-time) in seconds.

It is clear from the numerical results displayed in Table 3 that the new methods, like the existing ones, show stable convergence behavior. Also observe that, at the same iteration, the error of the approximate solution obtained by the higher-order methods is smaller than that obtained by the lower-order methods, which shows the advantage of higher-order methods. The computed ACOC values confirm that the theoretical order of convergence is preserved. The CPU time used in executing the programs shows the efficiency of the proposed methods compared with the other methods. Similar numerical experiments, carried out for a number of problems of different types, confirmed the above conclusions to a large extent.

References

Amat, S., Busquier, S., Plaza, S.: Dynamics of the King and Jarratt iterations. Aequationes Math. 69, 212–223 (2005)

Amat, S., Busquier, S., Plaza, S.: Chaotic dynamics of a third-order Newton-type method. J. Math. Anal. Appl. 366, 24–32 (2010)

Amat, S., Hernández, M.A., Romero, N.: A modified Chebyshev’s iterative method with at least sixth order of convergence. Appl. Math. Comput. 206, 164–174 (2008)

Argyros, I.K.: Convergence and Applications of Newton-type Iterations. Springer, New York (2008)

Argyros, I.K.: Computational Theory of Iterative Methods. In: Chui, C.K., Wuytack, L. (eds.) Studies in Computational Mathematics, vol. 15. Elsevier Publ. Co., New York (2007)

Argyros, I.K., Magreñán, Á.A.: Ball convergence theorems and the convergence planes of an iterative method for nonlinear equations. SeMa 71, 39–55 (2015)

Argyros, I.K., Magreñán, Á.A.: Iterative Methods and Their Dynamics with Applications. CRC Press, New York (2017)

Argyros, I.K., Ren, H.: Improved local analysis for certain class of iterative methods with cubic convergence. Numer. Algorithms 59, 505–521 (2012)

Behl, R., Cordero, A., Motsa, S.S., Torregrosa, J.R.: Stable high-order iterative methods for solving nonlinear models. Appl. Math. Comput. 303, 70–88 (2017)

Candela, V., Marquina, A.: Recurrence relations for rational cubic methods I: the Halley method. Computing 44, 169–184 (1990)

Chandrasekhar, S.: Radiative Transfer. Dover, New York (1960)

Chun, C., Stănică, P., Neta, B.: Third-order family of methods in Banach spaces. Comput. Math. Appl. 61, 1665–1675 (2011)

Cordero, A., Ezquerro, J.A., Hernández-Veron, M.A., Torregrosa, J.R.: On the local convergence of a fifth-order iterative method in Banach spaces. Appl. Math. Comput. 251, 396–403 (2015)

Cordero, A., Torregrosa, J.R.: Variants of Newton’s method using fifth-order quadrature formulas. Appl. Math. Comput. 190, 686–698 (2007)

Esmaeili, H., Ahmadi, M.: An efficient three-step method to solve system of non linear equations. Appl. Math. Comput. 266, 1093–1101 (2015)

Ezquerro, J.A., Hernández, M.A.: New iterations of R-order four with reduced computational cost. BIT Numer. Math. 49, 325–342 (2009)

Grau-Sánchez, M., Grau, Á., Noguera, M.: Ostrowski type methods for solving systems of nonlinear equations. Appl. Math. Comput. 218, 2377–2385 (2011)

Hernández, M.A.: Chebyshev’s approximation algorithms and applications. Comput. Math. Appl. 41, 433–455 (2001)

Hernández, M.A., Martínez, E.: On the semilocal convergence of a three steps Newton-type process under mild convergence conditions. Numer. Algorithms 70, 377–392 (2015)

Homeier, H.H.H.: A modified Newton method with cubic convergence: the multivariate case. J. Comput. Appl. Math. 169, 161–169 (2004)

Kou, J.: A third-order modification of Newton method for system of nonlinear equation. Appl. Math. Comput. 191, 117–121 (2007)

Lukić, T., Ralević, N.M.: Geometric mean Newton’s method for simple and multiple roots. Appl. Math. Lett. 21, 30–36 (2008)

Madhu, K., Babajee, D.K.R., Jayaraman, J.: An improvement to double-step Newton method and its multi-step version for solving system of nonlinear equations and its applications. Numer. Algorithms 74, 593–607 (2017)

Neta, B.: A sixth order family of methods for nonlinear equations. Int. J. Comput. Math. 7, 157–161 (1979)

Parhi, S.K., Gupta, D.K.: Recurrence relations for a Newton-like method in Banach spaces. J. Comput. Appl. Math. 206, 873–887 (2007)

Parhi, S.K., Gupta, D.K.: A sixth order method for nonlinear equations. Appl. Math. Comput. 203, 50–55 (2008)

Ren, H., Wu, Q., Bi, W.: New variants of Jarratt’s method with sixth-order convergence. Numer. Algorithms 52, 585–603 (2009)

Sharma, J.R., Arora, H.: Improved Newton-like methods for solving systems of nonlinear equations. SeMa 74, 147–163 (2017)

Sharma, J.R., Guha, R.K., Sharma, R.: An efficient fourth-order weighted-Newton method for system of nonlinear equations. Numer. Algorithms 62, 307–323 (2013)

Sharma, J.R., Sharma, R., Bahl, A.: An improved Newton–Traub composition for solving systems of nonlinear equations. Appl. Math. Comput. 290, 98–110 (2016)

Traub, J.F.: Iterative Methods for the Solution of Equations. Chelsea Publishing Company, New York (1982)

Weerakoon, S., Fernando, T.G.I.: A variant of Newton’s method with accelerated third order convergence. Appl. Math. Lett. 13, 87–93 (2000)


Cite this article

Sharma, J.R., Argyros, I.K. & Kumar, D. Newton-like methods with increasing order of convergence and their convergence analysis in Banach space. SeMA 75, 545–561 (2018). https://doi.org/10.1007/s40324-018-0150-8


DOI: https://doi.org/10.1007/s40324-018-0150-8