Abstract

This paper presents a numerical method for a class of Fredholm integro-differential equations involving a small parameter, with integral boundary conditions. The solution of the problem has boundary layers at both ends of the domain. A central difference scheme is used to approximate the derivatives and the trapezoidal rule to approximate the integral term, on appropriately adapted meshes, namely Shishkin and Bakhvalov–Shishkin meshes. The proposed method is second-order convergent uniformly with respect to the perturbation parameter (up to a logarithmic factor on the Shishkin mesh). Furthermore, a post-processing technique raises the order of convergence from two to four. Numerical examples confirm the effectiveness of the proposed approach.


1 Introduction

Fredholm integro-differential equations (FIDEs) are fundamental in many scientific domains, including engineering, biology, physics, chemistry, potential theory, electrostatics, finance, the theory of elasticity, fluid dynamics, astronomy, economics and heat and mass transfer (see, e.g., Brunner 2018; Jalilian and Tahernezhad 2020; Saadatmandi and Dehghan 2010). However, exact solutions of these problems are rarely available in closed form. As a result, numerical methods play a significant role in solving them, as in Brunner (2018), Chen et al. (2019), Chen et al. (2020), Jalilian and Tahernezhad (2020) and Saadatmandi and Dehghan (2010). As an example, consider the convection-diffusion parabolic partial integro-differential equation (Fahim and Araghi 2018; Siddiqi and Arshed 2013) given by:

with \(u(s,0)=g(s),~~s\in (a,b)\) and \(u(a,t)=g_1(t),~u(b,t)=g_2(t),~ t>0,\) where the integral term is known as the memory term and \(g(s)\), \(g_1(t)\), \(g_2(t)\) are known functions.

If the highest-order derivative term in a differential equation (DE) is multiplied by a small parameter \(\varepsilon \in (0,1)\), this parameter is called a singular perturbation parameter and the DE is called a singularly perturbed differential equation (SPDE). Because of the small parameter, the solution typically develops thin regions of rapid variation near the boundaries, called boundary layers. SPDEs have many applications in biology, ecology, the physical sciences and other areas. For example, one-dimensional groundwater flow and solute transport is governed by the following equation:

This equation describes how water and solutes flow through the unsaturated zone (De Marsily 1986); here t denotes time and s the horizontal distance, both positive, with s measured to the right of the soil's center.

Furthermore, singularly perturbed integro-differential equations (SPIDEs) arise in polymer rheology, population dynamics and mathematical models of glucose tolerance. For further applications of SPIDEs, see Lodge et al. (1978), De Gaetano and Arino (2000), Brunner and van der Houwen (1986) and Jerri (1999). In particular, singularly perturbed Fredholm integro-differential equations (SPFIDEs) arise in the optimal control problems studied by Nefedov and Nikitin (2007), and asymptotic solutions of such problems are discussed in Lange and Smith (1993) and Nefedov and Nikitin (2000, 2007). As another example, Grimmer and Liu (1994) consider a class of singularly perturbed partial integro-differential equations in viscoelasticity given by

However, the theory and numerical approximation of SPIDEs are still at an early stage. In recent years, various numerical methods have been proposed for first-order SPIDEs without non-local conditions on uniform and non-uniform meshes, as in Amiraliyev et al. (2018), Amiraliyev and Sevgin (2006), Cakır and Gunes (2022), De Bonis et al. (2021), De Bonis et al. (2023), Durmaz et al. (2022), Kudu et al. (2016) and Mennouni (2020). Second-order SPIDEs without non-local boundary conditions on Shishkin meshes are discussed in Cimen and Cakir (2021), Durmaz and Amiraliyev (2021), Durmaz et al. (2022), Durmaz et al. (2022) and Durmaz et al. (2022). Various singularly perturbed delay differential equations with integral boundary conditions are considered in Sekar (2022), Sekar and Tamilselvan (2019), Sekar et al. (2021) and Sekar and Tamilselvan (2019). To our knowledge, numerical methods for first-order SPFIDEs with integral boundary conditions (IBCs) on Shishkin meshes have been discussed only in Durmaz et al. (2022). Motivated by this research, we present an effective numerical approach for second-order SPFIDEs with IBCs on Shishkin-type meshes (Shishkin and Bakhvalov–Shishkin meshes). The main aim of this article is to solve numerically the singularly perturbed Fredholm integro-differential equation with integral boundary conditions given by

where \(L_{1}u= -\varepsilon u''+a(x)u\), \(L_{2} u= -\lambda \displaystyle \int \nolimits _{0}^{1} K(x,s)u(s)ds\), and the kernel \(K(x,s)\in L^{2}([0,1]^2)\) satisfies \(K(x,s)<\gamma \). It is assumed that \( a(x)\ge \beta> \lambda \gamma >0\), where \(\lambda \) is a positive constant, and that \(g_1(x)\), \(g_2(x)\) are non-negative, sufficiently smooth functions with \(\int _0^1g_i(x)dx<1\), \(i=1,2\).

The problem is discretized using a central difference scheme for the second-order derivative and the composite trapezoidal rule for the integral terms, on Shishkin-type meshes. The proposed method attains an optimal second-order rate of convergence \(\left( CN^{-2}\right) \) (up to a logarithmic factor on the Shishkin mesh); then, using Richardson extrapolation, a fourth-order rate of convergence \(\left( CN^{-4}\right) \) is obtained.

The article is organized as follows. In Sect. 2, we present stability results and derivative bounds for the exact solution. Section 3 constructs the Shishkin-type meshes and presents the numerical scheme and its error analysis. Section 4 introduces Richardson extrapolation, its application and the corresponding convergence analysis. Section 5 presents numerical examples, with graphs and tables showing the performance of the proposed method. Finally, Sect. 6 summarizes the conclusions of the article.

Throughout this work, C denotes a generic positive constant independent of \(\varepsilon \) and N. The standard supremum norm, \(||\mathfrak {f}||_{\mathfrak {D}}=\displaystyle \sup _{y\in \mathfrak {D}}|\mathfrak {f}(y)|\), on a given domain \(\mathfrak {D}\), is used throughout the paper. When the domain is clear from the context, we simply write \(||\mathfrak {f}||\).

2 Stability and bounds on the derivatives of the continuous solution

Theorem 2.1

Let \(\Xi (x)\) be any function such that \(\mathcal {K}\Xi (0)\ge 0\), \(\mathcal {K}\Xi (1)\ge 0\) and \(L\Xi (x)\ge 0,~ \forall x\in \Omega \), where \(\mathcal {K}\) and the differential operator L are defined in (1.1). Then, it holds that \(\Xi (x)\ge 0\), \(\forall x\in \overline{\Omega }=[0,1]. \)

Proof

Consider the function \(t(x)=1+x\), which is positive on \(\overline{\Omega }\). Define

Then there exists \(x_0\in \overline{\Omega }\) such that \(\Xi (x_0)+\mu t(x_0)=0\), and \(\Xi (x)+\mu t(x)\ge 0\) for all \(x\in \overline{\Omega }\). As a result, the function \((\Xi +\mu t)\) attains its minimum at \(x=x_0\). We proceed by contradiction: if the conclusion is false, then \(\mu >0\). We consider two cases:

Case (i): \(x_0=0\) or \(x_0=1\);

Note that \(\mathcal {K}(\Xi +\mu t)(x_0)= \mathcal {K}\Xi (x_0)+\mu \mathcal {K}t(x_0) >0\). On the other hand, we have

which is a contradiction.

Case (ii): \(x_0\in \Omega .\)

By hypothesis, \(L\Xi (x_0)\ge 0\). On the other hand, we have

and thus, we arrive at a contradiction.

Theorem 2.2

(Stability Result)

Let u(x) be the solution of problem (1.1). Then, the following bounds of the solution and its derivatives hold:

(i) \(||u|| \le C \max \Big \{|\mathcal {K}u(0)|,|\mathcal {K}u(1)|,\max _{x\in \Omega }|Lu(x)|\Big \};\)

(ii) \(||u^{(k)}|| \le C (1+\varepsilon ^{-k/2}), ~\text{ for }~k=1,2,3,4.\)

Proof

The proof is available in Miller et al. (1996, Chapter 6, p. 46).

Lemma 2.3

Consider the decomposition of the solution u(x) of problem (1.1) in the form \(u=v+w_{L}+w_{R}\), where v is the smooth (regular) component and \(w_{L}\), \(w_{R}\) are the left and right singular (layer) components. Then, for \(k=0,1,2,3,4\), it holds that

(i) \(|v^{(k)}(x)| \le C (1+\varepsilon ^{-(k-2)/2})\);

(ii) \(|w^{(k)}_{L}(x)| \le C \varepsilon ^{-k/2} e^{-x\sqrt{\beta /\varepsilon } }\);

(iii) \(|w^{(k)}_{R}(x)| \le C \varepsilon ^{-k/2} e^{-(1-x)\sqrt{\beta /\varepsilon } }.\)

Proof

(i) The proof is available in Miller et al. (1996, Chapter 6, p. 48).

(ii) Consider \( \psi ^{\pm }(x) =C e^{-x\sqrt{\beta /\varepsilon } }\pm w_{L}(x)\). Then

By Theorem 2.1, \(\psi ^{\pm }(x)\ge 0\), and thus

For the bounds on the derivatives of \(w_{L}\), the proof is the same as in Miller et al. (1996), resulting in

(iii) Similarly to case (ii), it can be proven that

3 Non-uniform meshes and analysis

3.1 Non-uniform meshes

3.2 Shishkin mesh (S-mesh)

The transition parameter \(\sigma \) is defined as

where \(\sigma _0>0\) is a user-chosen parameter, and it is assumed that \(\sqrt{\varepsilon }\le N^{-1}\). More details about the S-mesh can be found in Miller et al. (1996) and Shishkin and Shishkina (2009). The mesh points \(\mathcal {G}_{x}=\{x_0,x_1,\ldots , x_N\} \subset [0, 1]\) are defined by

with \(H_1=\frac{4\sigma }{N}\), \(H_2=\frac{2(1-2\sigma )}{N}\). The step sizes in the space variable are given by \(h_i=x_i-x_{i-1},\) for \(i=1,\ldots ,N\).
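The S-mesh construction can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the authors' code: it assumes \(\sigma =\min \{1/4,\sigma _0\sqrt{\varepsilon /\beta }\ln N\}\) (with \(\sigma _0=2\) this is the value of \(\sigma \) used in the proof of Theorem 3.3) and that N is divisible by 4, so that \([0,\sigma ]\), \([\sigma ,1-\sigma ]\) and \([1-\sigma ,1]\) receive N/4, N/2 and N/4 intervals with steps \(H_1\), \(H_2\), \(H_1\). The function name `shishkin_mesh` is hypothetical.

```python
import numpy as np


def shishkin_mesh(N, eps, beta, sigma0=2.0):
    """Piecewise-uniform Shishkin mesh x_0 < ... < x_N on [0, 1] (hypothetical helper).

    Assumes sigma = min(1/4, sigma0 * sqrt(eps/beta) * ln N) and N divisible by 4.
    Returns the nodes and the transition parameter sigma.
    """
    if N % 4 != 0:
        raise ValueError("N must be divisible by 4")
    sigma = min(0.25, sigma0 * np.sqrt(eps / beta) * np.log(N))
    H1 = 4.0 * sigma / N                 # fine step in [0, sigma] and [1 - sigma, 1]
    H2 = 2.0 * (1.0 - 2.0 * sigma) / N   # coarse step in [sigma, 1 - sigma]
    i = np.arange(N + 1)
    x = np.where(
        i <= N // 4,
        i * H1,
        np.where(i <= 3 * N // 4,
                 sigma + (i - N // 4) * H2,
                 1.0 - sigma + (i - 3 * N // 4) * H1),
    )
    x[-1] = 1.0                          # remove round-off at the right end point
    return x, sigma


# Example: a layer-resolving mesh for eps = 1e-6, beta = 1.
x, sigma = shishkin_mesh(64, 1e-6, 1.0)
```

When \(2\sqrt{\varepsilon /\beta }\ln N\ge 1/4\), the sketch returns \(\sigma =1/4\) and \(H_1=H_2=N^{-1}\), i.e. the uniform mesh of Case (i) in the proof of Theorem 3.3.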

3.3 Bakhvalov–Shishkin mesh (B–S-mesh)

For a detailed construction of the B–S mesh, see Liu and Yang (2022). The mesh points are

where \(\sigma _1=\min \Bigg \{\dfrac{1}{4},\dfrac{\sigma _0\sqrt{\varepsilon }}{\beta }\ln \min \{\varepsilon ^{-1},N\}\Bigg \}\) and \(\vartheta =4\Big (\exp \big (-\beta \sigma _1/(\sigma _0\sqrt{\varepsilon })\big )-1\Big )\). As in the previous case, the step sizes are denoted by \(h_i=x_i-x_{i-1}\), \(i=1,\ldots , N\).
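A possible B–S mesh generator is sketched below. It uses \(\sigma _1\) and \(\vartheta \) exactly as defined above; the graded formulas in the layer regions are an assumption (a standard Bakhvalov–Shishkin form), chosen because they are consistent with \(\vartheta \): they place \(x_{N/4}=\sigma _1\) and \(x_{3N/4}=1-\sigma _1\), with a uniform mesh in between. The helper name `bakhvalov_shishkin_mesh` is hypothetical, and N is assumed divisible by 4.

```python
import numpy as np


def bakhvalov_shishkin_mesh(N, eps, beta, sigma0=2.0):
    """Bakhvalov-Shishkin mesh on [0, 1] (hypothetical helper, N divisible by 4).

    sigma_1 and vartheta follow Sect. 3.3; the graded formulas below are an
    assumed form that places x_{N/4} = sigma_1 and x_{3N/4} = 1 - sigma_1.
    """
    if N % 4 != 0:
        raise ValueError("N must be divisible by 4")
    if np.sqrt(eps) > 1.0 / N:
        raise ValueError("the analysis assumes sqrt(eps) <= 1/N")
    q = sigma0 * np.sqrt(eps) / beta                    # layer length scale
    sigma1 = min(0.25, q * np.log(min(1.0 / eps, N)))
    theta = 4.0 * (np.exp(-sigma1 / q) - 1.0)           # vartheta in the text
    x = np.empty(N + 1)
    for i in range(N + 1):
        if i <= N // 4:                                  # graded, left layer
            x[i] = -q * np.log(1.0 + theta * i / N)
        elif i <= 3 * N // 4:                            # uniform, interior
            x[i] = sigma1 + (i - N // 4) * 2.0 * (1.0 - 2.0 * sigma1) / N
        else:                                            # mirror image, right layer
            x[i] = 1.0 + q * np.log(1.0 + theta * (N - i) / N)
    return x, sigma1
```

Since \(\beta \sigma _1/(\sigma _0\sqrt{\varepsilon })=\sigma _1/q\) in the notation of the sketch, \(1+\vartheta /4=e^{-\sigma _1/q}\), which is what makes the graded and uniform parts meet at \(\sigma _1\).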

3.4 Numerical approach

Given a mesh function \(\phi _i\), the backward, forward and central difference operators are defined by

respectively, and the second-order difference operator is given by

where \(\phi _{i}=\phi (x_i).\)
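For concreteness, a minimal sketch of these operators on a non-uniform mesh follows, assuming the standard definitions \(D^-\phi _i=(\phi _i-\phi _{i-1})/h_i\), \(D^+\phi _i=(\phi _{i+1}-\phi _i)/h_{i+1}\) and \(\delta ^2\phi _i=2(D^+\phi _i-D^-\phi _i)/(h_i+h_{i+1})\). The function name `difference_operators` is hypothetical.

```python
import numpy as np


def difference_operators(x, phi):
    """Return (D^- phi_i, D^+ phi_i, delta^2 phi_i) at the interior nodes i = 1..N-1."""
    x = np.asarray(x, dtype=float)
    phi = np.asarray(phi, dtype=float)
    h = np.diff(x)                                   # h[i-1] = h_i = x_i - x_{i-1}
    d_minus = (phi[1:-1] - phi[:-2]) / h[:-1]        # (phi_i - phi_{i-1}) / h_i
    d_plus = (phi[2:] - phi[1:-1]) / h[1:]           # (phi_{i+1} - phi_i) / h_{i+1}
    delta2 = 2.0 * (d_plus - d_minus) / (h[:-1] + h[1:])
    return d_minus, d_plus, delta2


x = np.array([0.0, 0.1, 0.15, 0.4, 0.7, 1.0])        # an arbitrary non-uniform mesh
_, _, d2 = difference_operators(x, x**2)              # second derivative of x^2 is 2
```

On any mesh, \(\delta ^2\) reproduces the second derivative of a quadratic exactly. In general, however, a Taylor expansion gives \(\delta ^2\phi _i=\phi ''(x_i)+\frac{h_{i+1}-h_i}{3}\phi '''(x_i)+O(h^2)\), so second-order accuracy relies on neighbouring steps being equal or nearly equal, as they are on the piecewise-uniform S-mesh away from the transition points and on the smoothly graded B–S mesh.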

We discretize problem (1.1) using the central difference scheme for the second-order derivative and the composite trapezoidal rule for the integral terms.
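With the weights \(\tau _0=h_1/2\), \(\tau _i=(h_i+h_{i+1})/2\) and \(\tau _N=h_N/2\) (listed in the Remark of Sect. 3.5), the composite trapezoidal rule on a non-uniform mesh is \(\int _0^1\phi (x)dx\approx \sum _{j=0}^N\tau _j\phi (x_j)\). A minimal sketch, with hypothetical function names:

```python
import numpy as np


def trapezoid_weights(x):
    """Composite trapezoidal weights tau_0 = h_1/2, tau_i = (h_i + h_{i+1})/2, tau_N = h_N/2."""
    h = np.diff(np.asarray(x, dtype=float))
    tau = np.zeros(h.size + 1)
    tau[:-1] += 0.5 * h      # each interval gives h/2 to its left node ...
    tau[1:] += 0.5 * h       # ... and h/2 to its right node
    return tau


def trapezoid(x, values):
    """Approximate the integral over [x_0, x_N] by sum_j tau_j * values_j."""
    return float(np.dot(trapezoid_weights(x), values))
```

The same weights serve every row of the discrete problem: at \(x_i\) the Fredholm term becomes \(\lambda \sum _j\tau _jK(x_i,x_j)U_j\), which couples all unknowns.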

The proposed numerical scheme is given as follows:

where

\(U_i=U(x_i)\) is the approximation to the exact solution \(u(x_i)\) of problem (1.1), \(a_i=a(x_i)\), \(f_i=f(x_i)\), \(K_{i,j}=K(x_i,s_j)\), and \(\left\{ x_i \right\} \) are the mesh points.
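A minimal Python sketch of the scheme follows. It assumes that problem (1.1) reads \(-\varepsilon u''+a(x)u-\lambda \int _0^1K(x,s)u(s)ds=f(x)\) on (0, 1) with integral conditions \(u(0)-\int _0^1g_1(x)u(x)dx=A\) and \(u(1)-\int _0^1g_2(x)u(x)dx=B\); the data A, B and the name `solve_fide` are hypothetical. The quadrature nodes \(s_j\) are taken to be the mesh points \(x_j\), and the integral conditions are discretized with the same trapezoidal weights \(\tau _j\) as the Fredholm term.

```python
import numpy as np


def solve_fide(x, eps, lam, a, f, K, g1, g2, A, B):
    """Assemble and solve the (assumed) discrete problem on the mesh x.

    Interior rows: -eps*delta^2 U_i + a(x_i) U_i - lam*sum_j tau_j K(x_i, x_j) U_j = f(x_i)
    Boundary rows: U_0 - sum_j tau_j g1(x_j) U_j = A,  U_N - sum_j tau_j g2(x_j) U_j = B
    K, g1 and g2 must accept a NumPy array as their last argument.
    """
    N = len(x) - 1
    h = np.diff(x)                                   # h[i-1] = x_i - x_{i-1}
    tau = np.zeros(N + 1)                            # composite trapezoidal weights
    tau[:-1] += h / 2.0
    tau[1:] += h / 2.0
    M = np.zeros((N + 1, N + 1))
    rhs = np.zeros(N + 1)
    # Integral boundary conditions, discretized with the trapezoidal rule.
    M[0] = -tau * g1(x)
    M[0, 0] += 1.0
    rhs[0] = A
    M[N] = -tau * g2(x)
    M[N, N] += 1.0
    rhs[N] = B
    for i in range(1, N):
        hl, hr = h[i - 1], h[i]
        M[i] = -lam * tau * K(x[i], x)               # Fredholm term (dense row)
        M[i, i - 1] -= 2.0 * eps / (hl * (hl + hr))  # -eps * delta^2 U_i
        M[i, i + 1] -= 2.0 * eps / (hr * (hl + hr))
        M[i, i] += 2.0 * eps / (hl * hr) + a(x[i])
        rhs[i] = f(x[i])
    return np.linalg.solve(M, rhs)
```

The Fredholm term couples every unknown, so the matrix is dense and a direct solve costs \(O(N^3)\) operations, which is acceptable for moderate N. Any increasing array of nodes can be passed as x, for example a Shishkin or Bakhvalov–Shishkin mesh.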

The following result is a discrete version of Theorem 2.1 and the proof can be obtained similarly.

Theorem 3.1

Let \(\Xi (x_i)\) be any mesh function on \(\left\{ x_i \right\} _{i=0}^N\) such that \(\mathcal {K}^N\Xi (x_0)\ge 0\), \(\mathcal {K}^N\Xi (x_N)\ge 0\) and \(L^N\Xi (x_i)\ge 0\) for \(1\le i\le N-1\), where \(\mathcal {K}^N\) and \(L^N\) are the discrete counterparts of the operators \(\mathcal {K}\) and L of Theorem 2.1. Then \(\Xi (x_i)\ge 0\) for all \(x_i\in \overline{\Omega }^N. \)

Proof

Consider \(t(x_i)=1+x_i\), which is positive on \(\overline{\Omega }^N\). Let

Furthermore, there exists \(x_k\in \overline{\Omega }^N\) such that \(\Xi (x_k)+\mu t(x_k)=0\) and \(\Xi (x_i)+\mu t(x_i)\ge 0\) for all \(x_i\in \overline{\Omega }^N\). As a result, the function \((\Xi +\mu t)\) attains its minimum at \(x=x_k\). We proceed by contradiction: if the theorem is false, then \(\mu >0\).

Case (i): \(x_k=x_0\) or \(x_k=x_N\). Then

Case (ii): \(x_k\in \Omega ^N\). In this case, we have

In either case, we arrive at a contradiction.
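In matrix form, Theorem 3.1 says that if M is the matrix whose first and last rows represent \(\mathcal {K}^N\) and whose interior rows represent \(L^N\), then \(M\Xi \ge 0\) entrywise implies \(\Xi \ge 0\), i.e. \(M^{-1}\ge 0\). A convenient sufficient condition is that M is a Z-matrix (non-positive off-diagonal entries) with positive row sums, since such a matrix is a nonsingular M-matrix. The sketch below checks this numerically under assumptions: the discrete integral conditions are taken as \(U_0-\sum _j\tau _jg_1(x_j)U_j\) and \(U_N-\sum _j\tau _jg_2(x_j)U_j\), and \(K\ge 0\) is assumed (not stated in the text) so that the Fredholm entries are non-positive. The data and helper names are hypothetical.

```python
import numpy as np


def assemble_matrix(x, eps, lam, a, K, g1, g2):
    """Matrix of the assumed scheme: integral-condition rows 0 and N, interior rows 1..N-1."""
    N = len(x) - 1
    h = np.diff(x)
    tau = np.zeros(N + 1)                       # composite trapezoidal weights
    tau[:-1] += h / 2.0
    tau[1:] += h / 2.0
    M = np.zeros((N + 1, N + 1))
    M[0] = -tau * g1(x)
    M[0, 0] += 1.0
    M[N] = -tau * g2(x)
    M[N, N] += 1.0
    for i in range(1, N):
        hl, hr = h[i - 1], h[i]
        M[i] = -lam * tau * K(x[i], x)
        M[i, i - 1] -= 2.0 * eps / (hl * (hl + hr))
        M[i, i + 1] -= 2.0 * eps / (hr * (hl + hr))
        M[i, i] += 2.0 * eps / (hl * hr) + a(x[i])
    return M


def is_nonsingular_m_matrix(M):
    """Sufficient test: Z-matrix with M @ 1 > 0 (hence M^{-1} >= 0 entrywise)."""
    off_diagonal = M - np.diag(np.diag(M))
    return bool(np.all(off_diagonal <= 0.0) and np.all(M.sum(axis=1) > 0.0))


# Illustrative data (hypothetical): a = 2 + x, K = s >= 0, lam = 0.5, g1 = 1/2, g2 = s/2.
x = np.linspace(0.0, 1.0, 33) ** 2               # a non-uniform mesh
M = assemble_matrix(x, 1e-6, 0.5, lambda t: 2.0 + t, lambda t, s: s,
                    lambda s: 0.5 * np.ones_like(s), lambda s: 0.5 * s)
print(is_nonsingular_m_matrix(M))
```

The row sums make the role of the hypotheses visible: the boundary rows sum to \(1-\sum _j\tau _jg_i(x_j)\approx 1-\int _0^1g_i(x)dx>0\), the \(\delta ^2\) part of each interior row sums to zero, and the rest of the row sums to \(a_i-\lambda \sum _j\tau _jK_{i,j}\ge \beta -\lambda \gamma >0\). If \(\lambda \) is too large relative to \(\beta \), the check fails.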

Theorem 3.2

If \(\phi _i\) is any mesh function, then

Proof

One can prove this easily by using Theorem 3.1. \(\square \)

3.5 Error estimate for the difference schemes

Error estimates bound the error of the numerical solution. We measure the error in the discrete maximum norm, which takes the largest absolute error over the mesh points: if u is the exact solution and \(U^N\) is the numerical solution on a mesh with N intervals, then \(\Vert u-U^N\Vert _{\infty }=\max _{0\le i\le N}|u(x_i)-U^N(x_i)|\).

We show that the numerical solution is almost second-order convergent (up to a logarithmic factor) on the Shishkin mesh and second-order convergent on the Bakhvalov–Shishkin mesh, with the errors measured in the discrete maximum norm.

Remark

Using the integral form of the truncation term and the composite trapezoidal rule on the range [0, 1],

with \(~\tau _{0}=\frac{h_{1}}{2}, ~~\tau _{i}=\frac{h_{i}+h_{i+1}}{2}, ~~~~~i=1,2,3,...,N-1,~~\tau _{N}=\frac{h_{N}}{2}\) and

Proceeding as in (3.2), we obtain

where

Theorem 3.3

Let u and \(U^N\) be the solutions of (1.1) and (3.1), respectively. Then the error of scheme (3.1) satisfies the following estimate:

Proof

The discrete solution U can be decomposed into smooth (V) and singular (W) components. The error can be written in the form

By Miller et al. (1996), we have

For the integral operator \(L_{2}\), we have

We now bound the errors in the smooth and singular components.

Smooth components:

At the boundary points \(x_k=x_{0}\) and \(x_k=x_{N}\), we have

Then Theorem 2.2 gives

Singular components:

We first estimate \(W_{L}-w_{L}\), distinguishing two cases: \(\sigma =\frac{1}{4}\) and \(\sigma =2\sqrt{\frac{\varepsilon }{\beta }}\ln N<\frac{1}{4}\).
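In Case (i) below, the relation between \(\varepsilon \) and N that drives the estimate follows directly from the definition of \(\sigma \): \(\sigma =\frac{1}{4}\) means \(\frac{1}{4}\le 2\sqrt{\varepsilon /\beta }\ln N\), and therefore

\[
\varepsilon ^{-1/2}\le \frac{8}{\sqrt{\beta }}\,\ln N
\qquad \text{and}\qquad
\frac{h_i^{2}}{\varepsilon }=\frac{N^{-2}}{\varepsilon }\le \frac{64}{\beta }\,\bigl(N^{-1}\ln N\bigr)^{2}.
\]

So, on the uniform mesh, truncation terms of the form \(Ch_i^2\varepsilon ^{-1}\), which arise for the layer component, are bounded by \(C(N^{-1}\ln N)^2\); this is one source of the logarithmic factor.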

Case(i): \(\sigma =\frac{1}{4}.\)

In this case the mesh is uniform, with \(h_i=x_i-x_{i-1}=N^{-1}\), and \(\frac{1}{4}\le 2\sqrt{\frac{\varepsilon }{\beta }}\ln N\), which implies \(\varepsilon ^{-\frac{1}{2}}\le C\ln N\). By Miller et al. (1996), we get

At \(x=x_0\) or \(x=x_N\), we have

where \(x_{i-1}\le \chi _i\le x_i\). Applying Theorem 3.2 in Miller et al. (1996) to the function \((W_L-w_L)(x_i)\) gives

Case(ii): \(\sigma <\frac{1}{4}.\)

The resulting mesh is piecewise uniform, with mesh spacing \(2(1-2\sigma )/N\) in the subinterval \([\sigma , 1-\sigma ]\) and \(4\sigma /N\) in each of the subintervals \([0, \sigma ]\) and \([1-\sigma , 1].\)
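In this case, the choice \(\sigma =2\sqrt{\varepsilon /\beta }\ln N\) makes the left layer component negligible beyond the transition point and fixes the size of the fine step relative to \(\sqrt{\varepsilon }\). By Lemma 2.3(ii) with k = 0,

\[
|w_{L}(x)|\le C e^{-x\sqrt{\beta /\varepsilon }}\le C e^{-\sigma \sqrt{\beta /\varepsilon }}=C e^{-2\ln N}=C N^{-2}\qquad \text{for } x\ge \sigma ,
\]

while in \([0,\sigma ]\), where \(h_i=4\sigma /N\),

\[
\frac{h_i}{\sqrt{\varepsilon }}=\frac{8\ln N}{\sqrt{\beta }\,N},
\qquad
\frac{h_i^{2}}{\varepsilon }=\frac{64}{\beta }\,\bigl(N^{-1}\ln N\bigr)^{2}.
\]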

By Miller et al. (1996), we have

and

At \(x=x_0\) or \(x= x_N,\) we have

where \(x_{i-1}\le \chi _i\le x_i\). Applying Lemma 4.2 in Miller et al. (1996) to the function \((W_L-w_L)(x_i)\) gives

The estimates for \(W_{R}-w_{R}\) are obtained by a similar procedure. Therefore,

\(\square \)

4 Post-processing technique

We use Richardson extrapolation to improve the accuracy of the proposed scheme. The discrete problem (3.1) is solved on the Shishkin-type mesh \(\mathbb {G}^{N}\) and on the finer mesh \(\mathbb {G}^{2N}\), which is obtained from \(\mathbb {G}^{N}\) by doubling the number of mesh intervals while keeping the same transition parameter, so that every node of \(\mathbb {G}^{N}\) is also a node of \(\mathbb {G}^{2N}\). Consequently, the S-mesh nodes \( \mathbb {G}^{2N}=\{\widetilde{x}_i \in [0, 1]: 0=\widetilde{x}_0<\widetilde{x}_1<\dots<\widetilde{x}_{2N-1}<\widetilde{x}_{2N}=1\} \) are given by

and the grid points in the B–S-mesh are given by

From Theorem 3.3, the error on \(\mathbb {G}^N\) satisfies

for \(x_i\in \mathbb {G}^N\). Let \(\widetilde{U}(\widetilde{x}_i)\) denote the solution of the discrete problem (3.1) on the mesh \(\mathbb {G}^{2N}\). Applying Theorem 3.3 on \(\mathbb {G}^{2N}\) gives

for \(\widetilde{x}_i \in \mathbb {G}^{2N}\). Eliminating the leading \(O(N^{-2})\) term between (4.1) and (4.2) leads to the approximation

We therefore use the extrapolation formula

Theorem 4.1

Let \(U_{exp}\) denote the result of the Richardson extrapolation (4.4), obtained by solving the discrete problem (3.1) on the two meshes \(\mathbb {G}^N\) and \(\mathbb {G}^{2N}\), and let u be the solution of the continuous problem (1.1). Assume that \(\sqrt{\varepsilon } \le N^{-1}\). Then the following error bound holds:

Proof

The error can be written in the form

For the first term, we have (see Natividad and Stynes 2000; Shishkin and Shishkina 2016 for a complete proof of this bound)

To bound the term involving the integral operator \(L_{2}\), we write

We decompose \(\widetilde{U}\) on \(\mathbb {G}^{2N}\) as \(\widetilde{U}=\widetilde{V}+\widetilde{W}\), where \(\widetilde{V}\) is the smooth component and \(\widetilde{W}\) is the layer component.

Smooth component:

From the integral form of the Taylor expansion and the derivative bounds, as in Theorem 3.3, we have

From the stability result, we can write

Similarly, on \(\mathbb {G}^{2N}\) we obtain

From the extrapolation formula (4.4), we can write

Layer components:

From the integral form of the Taylor expansion and the derivative bounds, as in Theorem 3.3, we have

From the stability result, we can write

Similarly, on \(\mathbb {G}^{2N}\) we obtain

From the extrapolation formula (4.4), we can write

Hence, on the S-mesh we obtain the bound

Similarly, on the B–S mesh we obtain the bound

For more details on the B–S mesh, see Mohapatra and Govindarao (2021). \(\square \)
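In practice, the extrapolation step needs only the two discrete solutions at the common nodes \(x_i=\widetilde{x}_{2i}\). If \(U^N(x_i)=u(x_i)+C(x_i)N^{-2}+\dots \) and \(\widetilde{U}(\widetilde{x}_{2i})=u(x_i)+C(x_i)(2N)^{-2}+\dots \), with the same transition parameter on both meshes, then \((4\widetilde{U}-U^N)/3\) cancels the \(N^{-2}\) term. The sketch below assumes that (4.4) is this standard combination; the function names are hypothetical. The helper `bisect_mesh` produces \(\mathbb {G}^{2N}\) only for the piecewise-uniform S-mesh; for the B–S mesh the odd nodes of \(\mathbb {G}^{2N}\) are presumably given by the mesh formula rather than by midpoints, but `richardson` itself relies only on \(x_i=\widetilde{x}_{2i}\).

```python
import numpy as np


def bisect_mesh(x):
    """Insert the midpoint of every interval; for a piecewise-uniform S-mesh with
    fixed sigma this gives G^{2N}, with x~_{2i} = x_i."""
    x = np.asarray(x, dtype=float)
    fine = np.empty(2 * x.size - 1)
    fine[::2] = x
    fine[1::2] = 0.5 * (x[:-1] + x[1:])
    return fine


def richardson(U_coarse, U_fine):
    """U_exp(x_i) = (4 U~(x~_{2i}) - U^N(x_i)) / 3 at the nodes of G^N.

    Assumes the leading error term is C(x) N^{-2} on both meshes (same transition
    parameter), so that this combination cancels it.
    """
    U_coarse = np.asarray(U_coarse, dtype=float)
    U_fine = np.asarray(U_fine, dtype=float)
    if U_fine.size != 2 * U_coarse.size - 1:
        raise ValueError("U_fine must live on a mesh with twice as many intervals")
    return (4.0 * U_fine[::2] - U_coarse) / 3.0
```

Keeping the transition parameter fixed is essential here: if \(\sigma \) were recomputed from 2N, the \(\ln N\) factor in the leading error term would change between the two meshes and the combination would no longer cancel it exactly.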

5 Computational simulations

In this section, the proposed method is applied to two test problems.

Example 5.1

Consider the following problem:

Example 5.2

Consider the following problem:

The exact solutions to the above problems are unknown. We therefore use the double mesh principle to estimate the pointwise errors and to confirm the \(\varepsilon \)-uniform convergence. Let \(\widetilde{U}^N(x_i)\) denote the numerical solution computed on the finer Shishkin-type mesh \({\widetilde{\mathbb {G}}}^{2N}\), constructed with the same (fixed) transition parameter as \(\mathbb {G}^N\), evaluated at the nodes \(x_i\) of \(\mathbb {G}^N\).

Now, for each \(\varepsilon \), we compute the maximum pointwise errors before extrapolation, \( {E}_\varepsilon ^{N}=\max _{x_i\in \mathbb {G}^N} |U^{N}(x_i)-\widetilde{U}^{N}(x_i)|\), and after extrapolation, \( {E}_{\varepsilon ,exp}^{N}=\max _{x_i\in \mathbb {G}^N} |U_{exp}^{N}(x_i)-\widetilde{U}_{exp}^{N}(x_i)|\). The corresponding order of convergence is defined by \({P}_\varepsilon ^{N}=\log _2\Bigg (\frac{{E}_ \varepsilon ^{N}}{{E}_\varepsilon ^{2N}}\Bigg )\), and analogously for \(E_{\varepsilon ,exp}^{N}\). Here, the double mesh principle is also applied to the extrapolated solution, with \(\widetilde{U}_{exp}^{N}(x_i)\) the corresponding extrapolated solution on the finer mesh.
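The double mesh errors and the computed orders can be sketched as follows. The sketch assumes that the fine-mesh values are stored in mesh order, so that the nodes of \(\mathbb {G}^N\) are the even-indexed nodes of \({\widetilde{\mathbb {G}}}^{2N}\); the function names are hypothetical.

```python
import numpy as np


def double_mesh_error(U_coarse, U_fine):
    """E^N = max_i |U^N(x_i) - U~^N(x_i)|, where x_i = x~_{2i} (even fine-mesh nodes)."""
    U_coarse = np.asarray(U_coarse, dtype=float)
    U_fine = np.asarray(U_fine, dtype=float)
    return float(np.max(np.abs(U_coarse - U_fine[::2])))


def orders(errors):
    """P^N = log2(E^N / E^{2N}) for errors computed with N, 2N, 4N, ..."""
    e = np.asarray(errors, dtype=float)
    return np.log2(e[:-1] / e[1:])
```

Applied to \(U_{exp}^{N}\) and \(\widetilde{U}_{exp}^{N}\), the same two functions give the errors and orders after extrapolation.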

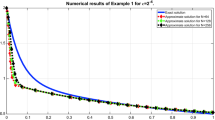

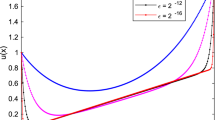

For different values of \(\varepsilon \), the approximate solutions of Example 5.1 on an S-mesh are plotted in Fig. 1, and the numerical solutions of Example 5.2 are plotted in Fig. 2. These figures show that, as \(\varepsilon \) decreases, boundary layers form near \(x = 0\) and \(x=1\). For Example 5.1, the errors before extrapolation are plotted in Fig. 3a (S-mesh) and Fig. 3b (B–S mesh), and the errors after extrapolation in Fig. 4a (S-mesh) and Fig. 4b (B–S mesh). These figures show that the error is smaller on the B–S mesh than on the S-mesh, and that extrapolation reduces the error further.

The maximum pointwise errors and the corresponding rates of convergence for Example 5.1 are given in Table 1 (before extrapolation) and Table 2 (after extrapolation); for Example 5.2, they are given in Table 3 (before extrapolation) and Table 4 (after extrapolation). These tables show that, before extrapolation, the rate of convergence is almost two (up to a logarithmic factor) on the S-mesh and two on the B–S mesh. Similarly, after extrapolation, the rate is almost four (up to a logarithmic factor) on the S-mesh and four on the B–S mesh. In both cases, before and after extrapolation, the B–S mesh gives higher accuracy than the S-mesh. To visualize the numerical order of convergence, the maximum pointwise errors before extrapolation are plotted on a log–log scale in Fig. 5a (S-mesh) and Fig. 5b (B–S mesh); the maximum pointwise errors after extrapolation are plotted in Fig. 6 for the S-mesh and the B–S mesh.
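The phrase "almost two (up to a logarithmic factor)" can be quantified. If the error behaved exactly like \(C(N^{-1}\ln N)^p\), with p = 2 before extrapolation and, we assume, p = 4 after it, the computed order would be \(P^N_\varepsilon =p\,\big (1-\log _2(\ln 2N/\ln N)\big )\), which lies below p and approaches it only slowly as N grows. A small sketch (function name hypothetical):

```python
import math


def shishkin_rate(N, p):
    """Order log2(E^N / E^{2N}) when E^N = C * (N**-1 * ln N)**p exactly."""
    return p * (1.0 - math.log2(math.log(2 * N) / math.log(N)))


for N in (64, 128, 256, 512, 1024):
    print(N, round(shishkin_rate(N, 2), 3), round(shishkin_rate(N, 4), 3))
```

For N = 64, for instance, the formula gives about 1.56 for p = 2 and about 3.11 for p = 4, whereas on the B–S mesh the logarithmic factor is absent and the observed orders are two and four.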

6 Conclusion

In this article, Fredholm integro-differential equations involving a small parameter with integral boundary conditions are solved numerically. The derivative is approximated by the central difference scheme and the integral terms by the trapezoidal rule, on Shishkin-type meshes (Shishkin and Bakhvalov–Shishkin meshes). We prove that the proposed scheme converges uniformly with respect to the small parameter \(\varepsilon \) with second-order accuracy (up to a logarithmic factor on the Shishkin mesh). A post-processing technique based on Richardson extrapolation then raises the convergence rate to fourth order. The scheme has been tested on two examples, and the numerical results confirm the theoretical bounds.

Data availability

Not applicable.

References

Amiraliyev GM, Sevgin S (2006) Uniform difference method for singularly perturbed Volterra integro-differential equations. Appl Math Comput 179:731–741

Amiraliyev GM, Durmaz ME, Kudu M (2018) Uniform convergence results in singularly perturbed Fredholm integro-differential equations. J Math Anal 9(6):55–64

Brunner H (2018) Numerical analysis and computational solution of integro-differential equations. Springer, Cham

Brunner H, van der Houwen PJ (1986) The numerical solution of Volterra equations. CWI Monographs. North-Holland, Amsterdam

Cakır M, Gunes B (2022) A new difference method for the singularly perturbed Volterra-Fredholm integro-differential equations on a Shishkin mesh. Hacet J Math Stat 51(3):787–799

Chen J, He M, Zeng T (2019) A multiscale Galerkin method for second-order boundary value problems of Fredholm integro-differential equation II: Efficient algorithm for the discrete linear system. J Vis Commun Image R 58:112–118

Chen J, He M, Huang Y (2020) A fast multiscale Galerkin method for solving second order linear Fredholm integro-differential equation with Dirichlet boundary conditions. J Comput Appl Math 64:112352

Cimen E, Cakir M (2021) A uniform numerical method for solving singularly perturbed Fredholm integro-differential problem. Comput Appl Math 40(42):1–14

De Bonis MC, Occorsio D, Themistoclakis W (2021) Filtered interpolation for solving Prandtl’s integro-differential equations. Numer Algorithms 88:679–709

De Bonis MC, Mennouni A, Occorsio D (2023) A numerical method for solving systems of hypersingular integro-differential equations. Electron Trans Numer Anal 58:378–393

De Gaetano A, Arino O (2000) Mathematical modeling of the intravenous glucose tolerance test. J Math Biol 40:136–168

De Marsily G (1986) Quantitative hydrogeology: groundwater hydrology for engineers. Academic Press, Orlando, Florida

Durmaz ME, Amiraliyev GM (2021) A robust numerical method for a singularly perturbed Fredholm integro-differential equation. Mediterr J Math 18(24):1–17

Durmaz ME, Cakır M, Amirali I, Amiraliyev GM (2022) Numerical solution of singularly perturbed Fredholm integro-differential equations by homogeneous second-order difference method. J Comput Appl Math 412:114327

Durmaz ME, Cakır M, Amiraliyev G (2022) Parameter uniform second-order numerical approximation for the integro-differential equations involving boundary layers. Commun Fac Sci Univ Ank Ser A1 Math Stat 71(4):954–967

Durmaz ME, Amiraliyev G, Kudu M (2022) Numerical solution of a singularly perturbed Fredholm integro-differential equation with Robin boundary condition. Turk J Math 46(1):207–224

Durmaz ME, Amirali I, Amiraliyev GM (2022) An efficient numerical method for a singularly perturbed Fredholm integro-differential equation with integral boundary condition. J Appl Math Comput 69(1):505–528

Fahim A, Araghi MAF (2018) Numerical solution of convection-diffusion equations with memory term based on sinc method. Comput Methods Differ Equ 6(3):380–395

Grimmer R, Liu JH (1994) Singular perturbations in viscoelasticity. Rocky Mountain J Math 24:61–75

Jalilian R, Tahernezhad T (2020) Exponential spline method for approximation solution of Fredholm integro-differential equation. Int J Comput Math 97(4):791–801

Jerri A (1999) Introduction to integral equations with applications. Wiley, New York

Kudu M, Amirali I, Amiraliyev GM (2016) A finite-difference method for a singularly perturbed delay integro-differential equation. J Comput Appl Math 308:379–390

Lange CG, Smith DR (1993) Singular perturbation analysis of integral equations: part II. Stud Appl Math 90(1):1–74

Liu X, Yang M (2022) Error estimations in the balanced norm of finite element method on Bakhvalov–Shishkin triangular mesh for reaction-diffusion problems. Appl Math Lett 123:107523

Lodge AS, McLeod JB, Nohel JA (1978) A nonlinear singularly perturbed Volterra integro-differential equation occurring in polymer rheology. Proc R Soc Edinb Sect A 80:99–137

Mennouni A (2020) Improvement by projection for integro-differential equations. Math Methods Appl Sci. https://doi.org/10.1002/mma.6318

Miller JJH, O’Riordan E, Shishkin IG (1996) Fitted numerical methods for singular perturbation problems. World Scientific, Singapore

Mohapatra J, Govindarao L (2021) A fourth-order optimal numerical approximation and its convergence for singularly perturbed time delayed parabolic problems. Iran J Numer Anal Optim 12(2):250–276

Natividad MC, Stynes M (2000) An extrapolation technique for a singularly perturbed problem on Shishkin mesh. In: Proceedings of the third asian mathematical conference, vol 2002, pp 383–388

Nefedov NN, Nikitin AG (2000) The asymptotic method of differential inequalities for singularly perturbed integro-differential equations. Differ Equ 36(10):1544–1550

Nefedov NN, Nikitin AG (2007) The Cauchy problem for a singularly perturbed integro-differential Fredholm equation. Comput Math Math Phys 47(4):629–637

Saadatmandi A, Dehghan M (2010) Numerical solution of the higher-order linear Fredholm integro-differential-difference equation with variable coefficients. Comput Math Appl 59:2996–3004

Sekar E (2022) Second order singularly perturbed delay differential equations with non-local boundary condition. J Comput Appl Math 417:114498

Sekar E, Tamilselvan A (2019) Singularly perturbed delay differential equations of convection-diffusion type with integral boundary condition. J Appl Math Comput 59(1):701–722

Sekar E, Tamilselvan A (2019) Parameter uniform method for a singularly perturbed system of delay differential equations of reaction–diffusion type with integral boundary conditions. Int J Appl Math 5(3):1–12

Sekar E, Tamilselvan A, Vadivel R, Gunasekaran N, Zhu H, Cao J, Li X (2021) Finite difference scheme for singularly perturbed reaction diffusion problem of partial delay differential equation with non local boundary condition. Adv Differ Equ 1:1–20

Shishkin GI, Shishkina LP (2009) Difference methods for singular perturbation problems. CRC Press, Boca Raton

Shishkin GI, Shishkina LP (2016) Difference scheme of highest accuracy order for a singularly perturbed reaction–diffusion equation based on the solution decomposition method. Proc Steklov Inst Math 292(1):262–275

Siddiqi SS, Arshed S (2013) Numerical solution of convection-diffusion integro-differential equations with a weakly singular kernel. J Basic Appl Sci Res 3(11):106–120

Acknowledgements

The authors express their gratitude to the referees for their helpful and constructive comments.

Funding

Not applicable.

Author information


Contributions

All authors contributed equally and significantly to writing this article, and all authors read and approved the final manuscript.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest concerning the publication of the manuscript.

Ethical approval

Not applicable.

Additional information

Communicated by Donatella Occorsio.


About this article

Cite this article

Govindarao, L., Ramos, H. & Elango, S. Numerical scheme for singularly perturbed Fredholm integro-differential equations with non-local boundary conditions. Comp. Appl. Math. 43, 126 (2024). https://doi.org/10.1007/s40314-024-02636-3


DOI: https://doi.org/10.1007/s40314-024-02636-3

Keywords

- Singular perturbation

- Integral boundary conditions

- Boundary layer

- Central difference scheme

- Uniform convergence