Abstract

In this paper, an effective iterative technique is presented for the analytical solution of a class of nonlinear singular boundary value problems (SBVPs) that occur in different physical situations. The recursive scheme for the iterative solution components is constructed from a corresponding integral representation of the problem. The approach provides a highly accurate approximate solution within a few iterations, and we also discuss its convergence. Furthermore, we consider several numerical examples from various physical situations, including real-life problems, to demonstrate the efficacy of the technique. The numerical results are compared with existing results in the literature to demonstrate the efficiency and robustness of the proposed iterative procedure; in the presented tests, the new proposal outperforms the traditional iterative methods in the literature. The approach is therefore well suited to various forms of highly nonlinear problems, and, as one numerical example shows, it also works well for SBVPs with nonlinear boundary conditions.

1 Introduction

In the present study, the following class of two-point nonlinear SBVPs is considered

subject to the following boundary conditions

or,

where \(\mu >0\), \( \nu \ge 0\), \(\psi \) and \(\omega \) are finite constants. We assume that f(x, y) is continuous on \([0, 1]\times {\mathbb {R}}\) and that \(\partial f(x, y)/\partial y\) exists and is continuous.

Problem (1) can also be considered with the following more general nonlinear boundary conditions

Problems (1)–(3) frequently occur in different fields of applied science and engineering, including chemical reactor theory, control and optimization, boundary layer theory, astrophysics, the isothermal gas sphere, the thermal behavior of a spherical cloud, nuclear physics, atomic structure, electrohydrodynamics and tumor growth (Verma et al. 2020b; Rufai and Ramos 2020, 2021b, a; Ramos and Vigo-Aguiar 2008; Moaaz et al. 2021; Tomar 2021a; Verma et al. 2020a; Van Gorder and Vajravelu 2008; Singh et al. 2016; Verma et al. 2021b; Tomar 2021b; Pandey and Tomar 2021; Verma et al. 2021a; Zhao et al. 2021). In physical models these problems arise naturally, often due to an impulsive sink or source term, and they have a singularity associated with the independent variable. Such problems also arise when partial differential equations with spherical or cylindrical symmetry are reduced to ordinary differential equations. A few special cases of these problems are listed below.

1. Michaelis–Menten uptake kinetics in steady-state oxygen diffusion (Lin 1976; McElwain 1978), when \(\alpha =2\) and \(f=-\frac{\eta y(x)}{y(x)+\rho }\), \(\eta ,\rho >0\).

2. The equilibrium of an isothermal gas sphere (Keller 1956), when \(\alpha = 2\) and \(f= y(x)^5\).

3. Thermal explosions (Khuri and Sayfy 2010; Chambre 1952), when \(\alpha =1, 2\) and \(f=\rho e^{y(x)}\), where \(\rho \) is a physical parameter.

4. Modeling of the heat source distribution in the human head (Flesch 1975; Duggan and Goodman 1986), when \(\alpha =2\) and \(f=\rho e^{-\eta y(x)}\), \(\eta ,\rho >0\).

For a detailed literature survey, one may refer to Verma et al. (2020b) and the references therein. The existence and uniqueness of solutions to the problem are studied in Ford and Pennline (2009), Pandey (1996), Pandey (1997) and Russell and Shampine (1975). Note that the singular behavior at \(x=0\) is the key difficulty in solving such problems.

Because problem (1) is singular at the initial point \(x=0\), obtaining a closed-form solution is difficult. Therefore, over the past decades, several researchers have developed analytical and numerical methods to obtain numerical and approximate analytical solutions to these problems. Well-known methods in the literature include the Taylor wavelet method (Gümgüm 2020), cubic splines (Kanth and Bhattacharya 2006; Chawla et al. 1988), B-splines (Çağlar et al. 2009), the finite difference method (Chawla and Katti 1985), the variational iteration method (VIM) (Kanth and Aruna 2010), the Adomian decomposition method (ADM) (Inç and Evans 2003) and its modified versions (Kumar et al. 2020; Wazwaz 2011), a combination of the VIM and the homotopy perturbation method (VIMHPM) (Singh and Verma 2016), the optimal homotopy analysis method (OHAM) (Singh 2018), a reproducing kernel method (Niu et al. 2018), a compact finite difference method (Roul et al. 2019), fixed-point iterative schemes (Tomar 2021a; Assadi et al. 2018) and nonstandard finite difference schemes (Verma and Kayenat 2018). The existing analytical methods require many iterations to obtain a reasonably precise solution, which results in very high powers of x and a large number of terms in the successive approximate solutions. Likewise, numerical techniques require a large number of steps to obtain an acceptable numerical solution. In contrast, the proposed methodology needs just a few iterations to obtain a highly accurate approximate solution, which results in low powers of x and fewer terms in the approximate solutions. Unlike perturbation approaches, the suggested iterative approach does not assume any small parameter in the problem. In addition, the ADM requires the evaluation of Adomian polynomials to deal with nonlinear terms, which can be time-consuming in some cases, while the convergence of the OHAM depends on the choice of an optimal parameter. Therefore, a methodology is needed that overcomes these limitations and offers a precise solution to the problems.

The contribution of the present work is two-fold. First, we construct an integral operator and derive the variational iteration method (VIM) formula without using the Lagrange multiplier and restricted variations that are required in the usual construction of the VIM. Second, we develop an effective algorithm based on domain decomposition, dividing the interval [0, 1] into equally spaced subintervals. The approximate solution on each subinterval is obtained in terms of unknown constants, which are then determined by imposing continuity of the solution y(x) and its derivative at the endpoints of the subintervals. These continuity conditions produce a system of nonlinear equations, which is solved by the Newton–Raphson method. A truncated Taylor series expansion is used for computational efficiency and to handle strongly nonlinear terms such as \(e^{y(x)}\). The key advantages of our approach are that it provides a highly precise solution with a few iterates and handles the strong nonlinearity of the problem very efficiently. The proposed method generates approximate analytical solutions as low-degree polynomials. Besides, the suggested approach can easily be applied to large-scale problems as well as to problems that depend on parameters. The convergence of the method is also discussed. We consider several numerical test examples to support the applicability and robustness of the method, and the numerical findings are compared with existing approaches to show the efficacy of the procedure. We also illustrate that the scheme applies to nonlinear singular problems with nonlinear boundary conditions.

The rest of this article is organized as follows. The iterative technique for solving problems (1)–(3) is derived in Sect. 2, and its convergence is analyzed in Sect. 3. Numerical simulations are presented in Sect. 4, where the numerical results are tabulated and compared with those of existing methods to show the performance of our proposal. Finally, conclusions are drawn in Sect. 5.

2 Construction of the method

In this section, we derive an iterative scheme for solving problem (1) effectively with boundary conditions (2) or (3).

For simplicity, problem (1) may be written as follows

Based on (5), let us define \(\Lambda \) as follows

Adding and subtracting \((x^{\alpha }y'(x))'\) in (6) leads to

and we rewrite (7) as follows:

To transform (8) into an associated integral representation, we integrate (8) from 0 to x and obtain

Integrating (9) from 0 to x again, we get

Changing the order of integration in (10), we obtain the following integral form

where K(x, t) is defined as

Using the following identity

after replacing the value of \(\Lambda \) from (6), Eq. (11) can be written in the following operator form

where

Applying Picard’s iteration to (13) gives

or,

Note that (14) can be written as follows:

where

Observe that (15) corresponds to the VIM (Kanth and Aruna 2010; Ramos 2008), derived here without the Lagrange multipliers and constrained variations that are essential in the usual construction of the standard VIM. However, because of strongly nonlinear terms, the successive iterations of (15) lead to complicated integrals that cannot be evaluated efficiently, and a large number of iterates is required to obtain good accuracy.
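
To make the change of integration order concrete, here is a minimal Python sketch under an assumed setting: the operator in (1) is taken to be \((x^{\alpha }y')'\) with \(\alpha >0\), and \(x^{\alpha }y'(x)\rightarrow 0\) as \(x\rightarrow 0\). If \((x^{\alpha }y')'=x^{\alpha }g(x)\), integrating twice and swapping the order gives \(y(x)=y(0)+\int _0^x K(x,t)g(t)\,dt\) with \(K(x,t)=t^{\alpha }\int _t^x s^{-\alpha }ds\), i.e. \(t(1-(t/x)^{\alpha -1})/(\alpha -1)\) for \(\alpha \ne 1\) and \(t\ln (x/t)\) for \(\alpha =1\). This kernel is derived here for illustration and may differ in sign or normalization from the kernel in (12); if (1) is written as \(y''+(\alpha /x)y'+f(x,y)=0\), one takes \(g=-f\). The helper names `kernel`, `simpson` and `represent` are hypothetical.

```python
import math


def kernel(x: float, t: float, alpha: float) -> float:
    """Assumed kernel K(x, t) = t**alpha * integral_t^x s**(-alpha) ds, 0 <= t <= x, alpha > 0."""
    if t == 0.0 or t == x:
        return 0.0  # K vanishes at both ends of [0, x] when alpha > 0
    if abs(alpha - 1.0) < 1e-12:
        return t * math.log(x / t)
    return t * (1.0 - (t / x) ** (alpha - 1.0)) / (alpha - 1.0)


def simpson(g, a: float, b: float, n: int = 400) -> float:
    """Composite Simpson rule on [a, b] with an even number n of panels."""
    h = (b - a) / n
    total = g(a) + g(b)
    for k in range(1, n):
        total += (4.0 if k % 2 else 2.0) * g(a + k * h)
    return total * h / 3.0


def represent(y0: float, g, x: float, alpha: float) -> float:
    """y(0) + integral_0^x K(x, t) g(t) dt, i.e. y(x) when (x**alpha y')' = x**alpha g."""
    return y0 + simpson(lambda t: kernel(x, t, alpha) * g(t), 0.0, x)


# Check with y(x) = exp(x**2), for which g = y'' + (alpha/x) y' = (2 + 2*alpha + 4x^2) exp(x^2).
for alpha in (1.0, 2.0):
    g = lambda t, a=alpha: (2.0 + 2.0 * a + 4.0 * t * t) * math.exp(t * t)
    for x in (0.3, 0.7, 1.0):
        print(alpha, x, abs(represent(1.0, g, x, alpha) - math.exp(x * x)))
```

The single integral reproduces the known solution to quadrature accuracy, which is the content of the order swap: the inner integral over s is done once, in closed form, and absorbed into K.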

To overcome the aforementioned limitations, we introduce an effective algorithm to solve the problem (1) by dividing the interval [0, 1] into N equally spaced subintervals as \(0=x_{0}<x_{1}< \cdots<x_{N-1}<x_{N}=1\), where \(h=1/N\), \(x_{i}=ih,~~0\le i \le N\).

Let \(y(x_{i})=c_{i}\) and \(y'(x_{i})=c'_{i}\) for \( 0\le i \le (N-1)\). Based on (14), we construct the following piecewise scheme on the subintervals \([x_{i}, x_{i+1}]\), where \(y_{i,n}\) denotes the nth-order approximate solution of (14) on \([x_{i}, x_{i+1}]\), \( 0\le i \le (N-1)\).

On the subinterval \([x_{0}, x_{1}]\), the iterative scheme is defined for \(n\ge 0\) as follows:

Starting with the initial value \( y_{0,0}(x) =c_{0}\) for boundary conditions (2), or \( y_{0,0}(x) =\omega +c_{0}x\) for boundary conditions (3), the iterative formula (16) gives the nth-order approximate solution \(y_{0,n}(x) \) on \([x_{0}, x_{1}]\) in terms of the unknown constant \(c_{0}\), which is determined later.

On the subinterval \([x_{i}, x_{i+1}]\), \( 1 \le i \le (N-1)\), the iterative scheme is defined for \(n\ge 0\) as follows:

Starting with the initial guess given by the solution of the corresponding homogeneous equation on the subinterval \([x_{i}, x_{i+1}]\),

we obtain the nth-order approximate solution \(y_{i,n}(x) \) on \([x_{i}, x_{i+1}]\) in terms of the unknown constants \(c_{i}\) and \(c'_{i}\), \( 1\le i \le (N-1)\).
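
The starting function on \([x_{i}, x_{i+1}]\) has a closed form once the operator is fixed. This sketch assumes the homogeneous equation is \((x^{\alpha }y')'=0\), an assumption about the form of (1); then \(x^{\alpha }y'\) is constant and \(y(x)=c_i+c'_i\,x_i^{\alpha }\int _{x_i}^x s^{-\alpha }ds\). The function names are hypothetical, and the paper's own expression for the initial guess may be written differently.

```python
import math


def initial_guess(x: float, xi: float, ci: float, dci: float, alpha: float) -> float:
    """Solution of (x**alpha * y')' = 0 with y(xi) = ci and y'(xi) = dci, for xi > 0.

    x**alpha * y'(x) is constant, so y'(x) = dci * (xi / x)**alpha; integrating from xi gives y.
    """
    if abs(alpha - 1.0) < 1e-12:
        return ci + dci * xi * math.log(x / xi)
    return ci + dci * xi * (1.0 - (xi / x) ** (alpha - 1.0)) / (alpha - 1.0)


def initial_guess_slope(x: float, xi: float, dci: float, alpha: float) -> float:
    """Derivative of initial_guess with respect to x."""
    return dci * (xi / x) ** alpha


# Example: alpha = 2 on [0.4, 0.6] with y(0.4) = 1.2 and y'(0.4) = -0.3.
print(initial_guess(0.6, 0.4, 1.2, -0.3, 2.0))
```

Because both constants enter linearly, every later iterate on the subinterval stays an explicit function of \(c_i\) and \(c'_i\), which is what allows them to be fixed afterwards by the matching conditions.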

We thus obtain the approximate solutions \(y_{i,n}(x) \) on the subintervals \([x_{i}, x_{i+1}]\), \( 0\le i \le N-1\), in terms of the \((2N-1)\) unknown constants \(c_{0}, c_{1}, \ldots ,c_{N-1} \) and \(c'_{1}, c'_{2}, \ldots ,c'_{N-1} \). These approximate solutions are then matched to obtain a continuous solution on the interval [0, 1] by imposing continuity of the solution and its derivative at the endpoints of the subintervals; that is, a continuous solution is obtained if \(y_{i, n}(x)\) and \(y'_{i, n}(x)\) take the same values at the grid points as the approximations on the neighboring subintervals. These \(2(N-1)\) continuity conditions at the interior grid points, together with the boundary condition at \(x=1\), reduce the approximate solution of (1) over [0, 1] to the solution of the following nonlinear system of \((2N-1)\) equations

Solving (18) by the Newton–Raphson method yields the \((2N-1)\) unknown coefficients \(c_{i}\) and \(c'_{i}\). Once the constants \(c_{0}, c_{1}, \ldots ,c_{N-1} \) and \(c'_{1}, c'_{2}, \ldots ,c'_{N-1} \) have been evaluated, the scheme (17) gives an approximate solution to problem (1) on the entire interval [0, 1].

In some cases, however, the scheme (17) leads to very complicated integrals, owing to strongly nonlinear terms, that cannot be evaluated in closed form. To overcome this limitation, we approximate the integrals using a Taylor series expansion around \(t_{i}\) as follows:

where

The accuracy of the solution depends on the number r of Taylor terms retained; as the iteration proceeds, more terms of the Taylor series are needed to achieve the desired accuracy.
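
For a nonlinearity such as \(e^{y}\), a truncated Taylor expansion about \(t_i\) can be generated from the Taylor coefficients of y itself. The sketch below shows one way to do this and is not necessarily how \(T_{i,r}\) is computed in the paper: if \(E=\exp (P)\), then \(E'=P'E\), which gives a recurrence for the coefficients of E, and keeping r of them gives the truncation. The function names and the sample coefficients are illustrative assumptions.

```python
import math


def exp_series(p, r):
    """First r Taylor coefficients of exp(P(s)), where P(s) = sum_k p[k] * s**k and s = t - t_i.

    From E' = P' E one gets e_0 = exp(p_0) and k * e_k = sum_{j=1}^{k} j * p_j * e_{k-j}.
    """
    p = list(p) + [0.0] * max(0, r - len(p))
    e = [math.exp(p[0])]
    for k in range(1, r):
        e.append(sum(j * p[j] * e[k - j] for j in range(1, k + 1)) / k)
    return e


def eval_poly(c, s):
    """Horner evaluation of sum_k c[k] * s**k."""
    acc = 0.0
    for ck in reversed(c):
        acc = acc * s + ck
    return acc


# y(t) = 0.3 + (t - t_i) - 0.5 (t - t_i)^2 near t_i; compare truncations at s = t - t_i = 0.1.
p = [0.3, 1.0, -0.5]
exact = math.exp(eval_poly(p, 0.1))
for r in (2, 4, 6):
    print(r, abs(eval_poly(exp_series(p, r), 0.1) - exact))
```

Keeping more terms reduces the truncation error at a fixed distance from \(t_i\), in line with the remark that r has to grow as the iteration proceeds.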

Algorithm: The basic structure of the proposed algorithm is summarized in the following steps.

1. Discretize the interval [0, 1] into equally spaced subintervals \([x_{i}, x_{i+1}]\), \( 0 \le i \le (N-1)\), with \(x_{0}=0\) and \(x_{N}=1\).

2. On each subinterval, obtain the approximate solution of problem (1) from the proposed iterative formula (19) in terms of the unknown constants \(c_{0}, c_{1}, \ldots ,c_{N-1} \) and \(c'_{1}, c'_{2}, \ldots ,c'_{N-1} \).

3. Match the approximate solutions obtained in Step 2 to form a continuous solution on the interval [0, 1]; this leads to a system of nonlinear equations.

4. Solve the nonlinear system obtained in Step 3 by the Newton–Raphson method; once the unknown constants are computed, an approximate solution to the considered problem follows.
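
The four steps above can be prototyped numerically. The following Python sketch is a purely numerical analogue of the scheme, not the authors' Maple implementation. It assumes that (1) has the form \(y''+(\alpha /x)y'+f(x,y)=0\) with \(\alpha >0\) and that boundary conditions (2) read \(y'(0)=0\), \(\mu y(1)+\nu y'(1)=\psi \); it replaces the symbolic integrals and the Taylor truncation by trapezoidal quadrature on M+1 nodes per subinterval; it uses the kernel \(K(x,t)=t^{\alpha }\int _t^x s^{-\alpha }ds\) derived for this sketch; and it approximates the Jacobian of the matching system by forward differences. All function names and parameter values are hypothetical.

```python
import math


def kernel(x, t, alpha):
    """Assumed kernel K(x, t) = t**alpha * int_t^x s**(-alpha) ds, for alpha > 0."""
    if t == 0.0 or t == x:
        return 0.0
    if abs(alpha - 1.0) < 1e-12:
        return t * math.log(x / t)
    return t * (1.0 - (t / x) ** (alpha - 1.0)) / (alpha - 1.0)


def phi(x, a, alpha):
    """a**alpha * int_a^x s**(-alpha) ds: homogeneous solution with value 0, slope 1 at a > 0."""
    if abs(alpha - 1.0) < 1e-12:
        return a * math.log(x / a)
    return a * (1.0 - (a / x) ** (alpha - 1.0)) / (alpha - 1.0)


def trap(vals, h):
    """Trapezoidal rule for equally spaced samples."""
    return h * (sum(vals) - 0.5 * (vals[0] + vals[-1])) if len(vals) > 1 else 0.0


def sweep(f, alpha, a, b, c, dc, n_iter, M):
    """n_iter iterations of y <- base - int_a^x K(x,t) f(t, y(t)) dt on M+1 nodes of [a, b]."""
    h = (b - a) / M
    xs = [a + k * h for k in range(M + 1)]
    # Starting function: c on the first piece, homogeneous solution (y(a)=c, y'(a)=dc) elsewhere.
    base = [c if a == 0.0 else c + dc * phi(x, a, alpha) for x in xs]
    y, F = base[:], [0.0] * (M + 1)
    for _ in range(n_iter):
        F = [f(x, v) for x, v in zip(xs, y)]
        y = [base[j] - trap([kernel(xs[j], xs[k], alpha) * F[k] for k in range(j + 1)], h)
             for j in range(M + 1)]
    # x**alpha y'(x) = a**alpha dc - int_a^x t**alpha f dt, evaluated at x = b.
    dy_b = (dc * a ** alpha - trap([t ** alpha * v for t, v in zip(xs, F)], h)) / b ** alpha
    return y, dy_b


def residuals(z, f, alpha, N, n_iter, M, mu, nu, psi):
    """Continuity of y and y' at interior nodes plus mu*y(1) + nu*y'(1) = psi (2N-1 equations)."""
    c, dc = z[:N], [0.0] + list(z[N:])  # y'(0) = 0 is built into the first piece
    res = []
    for i in range(N):
        y, dy_b = sweep(f, alpha, i / N, (i + 1) / N, c[i], dc[i], n_iter, M)
        if i < N - 1:
            res += [y[-1] - c[i + 1], dy_b - dc[i + 1]]
        else:
            res.append(mu * y[-1] + nu * dy_b - psi)
    return res


def newton(G, z, tol=1e-10, max_iter=30, eps=1e-7):
    """Newton-Raphson with a forward-difference Jacobian and Gaussian elimination."""
    z, n = list(z), len(z)
    for _ in range(max_iter):
        g = G(z)
        if max(map(abs, g)) < tol:
            return z
        cols = []
        for j in range(n):
            zp = z[:]
            zp[j] += eps
            cols.append([(u - v) / eps for u, v in zip(G(zp), g)])
        A = [[cols[j][i] for j in range(n)] + [-g[i]] for i in range(n)]
        for k in range(n):  # forward elimination with partial pivoting
            p = max(range(k, n), key=lambda r: abs(A[r][k]))
            A[k], A[p] = A[p], A[k]
            for r in range(k + 1, n):
                m = A[r][k] / A[k][k]
                A[r] = [u - m * v for u, v in zip(A[r], A[k])]
        dz = [0.0] * n
        for k in reversed(range(n)):  # back substitution
            dz[k] = (A[k][n] - sum(A[k][j] * dz[j] for j in range(k + 1, n))) / A[k][k]
        z = [u + v for u, v in zip(z, dz)]
    raise RuntimeError("Newton-Raphson did not converge")


# Assumed test problem (form of Example 4): y'' + y'/x + exp(y) = 0, y'(0) = 0, y(1) = 0.
N, M, n_iter = 5, 32, 4
f = lambda x, y: math.exp(y)
G = lambda z: residuals(z, f, 1.0, N, n_iter, M, 1.0, 0.0, 0.0)
z = newton(G, [0.0] * (2 * N - 1))
v = 3.0 - 2.0 * math.sqrt(2.0)
exact = lambda x: 2.0 * math.log((v + 1.0) / (v * x * x + 1.0))
for i in range(N):
    print(i / N, z[i], abs(z[i] - exact(i / N)))
```

In this sketch, z[0..N-1] hold the nodal values \(c_i\) and z[N..2N-2] the slopes \(c'_i\); the test problem's exact solution is the closed form quoted in Example 4. Because quadrature replaces the exact integrals, the attainable accuracy here is limited by M as well as by the number of iterations.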

3 Convergence of the proposed scheme

In this section, we address the convergence of the proposed method with boundary conditions (2)–(3).

To demonstrate that the iterative sequence of approximate solutions \(y_{i,n}(x)\) is convergent, assume that f(x, y(x)) satisfies the following Lipschitz condition

and

Now, consider the partial sum

of the series

3.1 Convergence of the iterative formula (17)

We now show that the partial sum (20) of the series (21) converges to a limit \(y_{i}(x)\) as \(n\rightarrow \infty \), for \(x\in [x_{i}, x_{i+1}]\). Integrating the first term of the integrand in (17) by parts, we have

where K(x, t) is given by (12) and letting

For \(n=0\), (22) implies the following inequality

and, using the Lipschitz condition on f together with (23), we have

and, proceeding in the same way as for (24), we obtain

Using (23), (24) and (25) together with a simple induction, we obtain the following estimate

In view of (26), the series (21) is absolutely convergent on each subinterval \([x_{i}, x_{i+1}]\). Hence, the following infinite series

is uniformly convergent on each subinterval \([x_{i}, x_{i+1}]\). Consequently, the nth partial sum of the infinite series (22) tends to \(y_{i}(x)\) as \(n\rightarrow \infty \), for each \(x\in [x_{i}, x_{i+1}]\). This completes the proof.
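
The geometric decay behind (26), and the growth of polynomial degree that motivates the piecewise approach, can both be seen in a small experiment. Under the kernel \(K(x,t)=t^{\alpha }\int _t^x s^{-\alpha }ds\) (an assumption about the form of (12)), one has \(\int _0^x K(x,t)t^k\,dt = x^{k+2}/((k+2)(k+\alpha +1))\), so Picard iterates for a polynomial nonlinearity remain polynomials and can be formed exactly. The sketch applies this on the single interval [0, 1] to \(y''+(2/x)y'+y^5=0\), \(y'(0)=0\), whose solution with \(y(0)=1\) is the closed form \(\sqrt{3/(3+x^2)}\) quoted in Example 3; for simplicity it fixes \(c_0=1\) instead of solving for it. The function names are hypothetical.

```python
import math


def pmul(p, q):
    """Product of two polynomials stored as coefficient lists (index = power of x)."""
    out = [0.0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out


def apply_K(F, alpha):
    """int_0^x K(x,t) F(t) dt for polynomial F, using t**k -> x**(k+2) / ((k+2)(k+alpha+1))."""
    return [0.0, 0.0] + [a / ((k + 2) * (k + alpha + 1)) for k, a in enumerate(F)]


def peval(p, x):
    """Horner evaluation of a coefficient list at x."""
    acc = 0.0
    for a in reversed(p):
        acc = acc * x + a
    return acc


def picard(c0, alpha, m, n_iter):
    """Iterates y_{n+1} = c0 - int_0^x K(x,t) y_n(t)**m dt for y'' + (alpha/x) y' + y**m = 0."""
    y = [c0]
    iterates = [y]
    for _ in range(n_iter):
        ym = [1.0]
        for _ in range(m):
            ym = pmul(ym, y)
        y = [-a for a in apply_K(ym, alpha)]
        y[0] += c0
        iterates.append(y)
    return iterates


its = picard(1.0, 2.0, 5, 4)
exact = math.sqrt(3.0 / 4.0)  # sqrt(3 / (3 + x**2)) at x = 1
for n in range(1, len(its)):
    step = abs(peval(its[n], 1.0) - peval(its[n - 1], 1.0))
    print(n, len(its[n]) - 1, step, abs(peval(its[n], 1.0) - exact))
```

The successive corrections at x = 1 shrink, while the polynomial degree grows from 2 to 12, 62 and 312, which illustrates why the whole-interval iteration produces very high powers of x.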

3.2 Convergence of the iterative formula (19)

Following the above analysis, we now show that the partial sum (20) of the series (21) converges for the iterative formula (19). Similarly to (22), the iterative formula (19) can be reduced to the following form

where

Let \(r=pn+q\), where p and q are fixed known numbers. Since \(T_{i,r}\) is an approximation of \( f(t, y_{i,n}(t))\), we assume that \( T_{i,r} \le f(t, y_{i,n}(t))\) for \(x_{i}\le x\le x_{i+1}\).

For \(n=0\), (27) gives the following estimate

and for \(n=1\), we have

and in a similar way, (27) yields

Now, in view of (23)–(26) and by following the above analysis, from (28)–(30) we have

and

Consequently, from the above analysis, the series (21) is absolutely convergent.

Next, let \( {\widetilde{y}} \) denote the solution obtained by the iterative formula (19); then

where

Now, from (22) and (31), we have

As \(n\rightarrow \infty \), we have \(r \rightarrow \infty \), and consequently \(T_{i,r}\approx f(t, y_{i,n}(t))\). Hence, \({\widetilde{y}} \approx y \), which completes the proof.

3.3 Error analysis

Using (22), we obtain

From the mean-value theorem, we have

where \(\xi \) lies between \( y_{i,n}\) and \(y_{i,n-1}\). Letting \( |f_{y_{i}}(y_{i}(x))|\le {\widetilde{F}}_{i}\) and combining (33) and (34), we obtain

and hence

where \(\psi =\kappa _{i}{\widetilde{F}}_{i} N^{-1} \). This shows that the convergence is linear provided \(\psi <1\), which holds for sufficiently large N, and a simple induction yields

4 Numerical results

In this section, we consider several numerical test cases to demonstrate the applicability of the proposed methodology, including one example that demonstrates its efficiency for SBVPs with nonlinear boundary conditions. The Maple 18 software package is used to compute the numerical results in this paper.

We define the absolute error

and the maximum absolute error

to compare the method’s accuracy, where \(y_{n}\) denotes the nth approximate iterative solution and y is the closed form solution.
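
The two error measures can be computed on a grid of sample points. In this sketch, the grid of 101 equally spaced points, the function names and the perturbed "approximation" are illustrative assumptions; the text does not state where the maximum is sampled.

```python
def abs_errors(approx, exact, xs):
    """Pointwise absolute errors |y_n(x) - y(x)| at the sample points xs."""
    return [abs(approx(x) - exact(x)) for x in xs]


def max_abs_error(approx, exact, xs):
    """Maximum absolute error over the sample points xs."""
    return max(abs_errors(approx, exact, xs))


xs = [k / 100 for k in range(101)]
exact = lambda x: 1.0 + x * x / 16.0   # exact solution of Example 1
approx = lambda x: 1.0 + 0.06 * x * x  # hypothetical approximation, for illustration only
print(max_abs_error(approx, exact, xs))
```

Here the pointwise error is \(0.0025x^2\), so the maximum, 0.0025 up to rounding, is attained at x = 1.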

Example 1

Consider the linear SBVP Tomar (2021a)

This problem has the exact solution \(y(x)=1+\frac{x^2}{16}\). First, we apply the proposed scheme (17) to obtain the approximate iterative solution of (36); the maximum absolute errors for different iterations are presented in Table 1. Table 1 shows that the absolute error decreases as the number of iterations increases and, for a fixed iteration, as N increases.

Next, we solve (36) using the present method (19) with two terms of Taylor’s series and get the following successive approximation for \(n=0\) and \(N=5\):

On the interval [0, 0.2],

On the interval [0.2, 0.4],

On the interval [0.4, 0.6],

On the interval [0.6, 0.8],

On the interval [0.8, 1.0],

Observe that the approximate solutions \(y _{i,1}(x)\), \(0\le i \le 4\), coincide with the analytical solution \(y(x)=1+0.0625x^2\) of the problem, whereas the maximum absolute error of the VIM of Kanth and Aruna (2010) for \(y_{4}(x)\) is \(2.0 \times 10^{-08}\) with a 10th-degree polynomial. This indicates that the present approach is effective. If instead three terms of the Taylor series are used in (19), the maximum absolute error is \(4.1 \times 10^{-04}\) for \(n=0\) and \(N=5\); however, at the next iteration, i.e. \(n=1\) and \(N=5\), we obtain the analytical solution \(y _{i,2}(x)=1+0.0625x^2\), \(0\le i \le 4\).

Example 2

Consider the following linear problem Tomar (2021a)

The analytical solution of this problem is \(y(x)= \exp (x^{\gamma })\).

We apply the proposed scheme (17) to obtain the approximate iterative solution of (37). The maximum absolute errors for \( \alpha =0.5\) and \(\gamma =4\) obtained by our method and by the existing methods Tomar (2021a); Roul and Warbhe (2016); Chawla and Katti (1982); Kumar and Aziz (2004) are compared in Tables 2 and 3. These tables show that the proposed method, with fewer iterations and mesh points, produces a better solution than the existing methods. Moreover, for \( \alpha =2\) and \(\gamma =5\), the maximum absolute error of the proposed method is \(2.3\times 10^{-04}\) for \(n=1\) and \(N=10\) using 20th-degree polynomials, which is sufficiently accurate. Further, Figs. 1 and 2 plot the absolute errors of (37) obtained with the present method for various values of \( \alpha \) and \(\gamma \) with \(n=3\) and \(N=10\), which confirm the effectiveness of the method. The absolute error decreases rapidly as the number of iterations increases.

Graph of absolute error of Example 2 for \(n=3\) and \(N=10\)

Graph of the absolute errors of Example 2 for \(n=3\) and \(N=10\)

Example 3

Consider the following nonlinear SBVP Gümgüm (2020), which arises in the study of the equilibrium of an isothermal gas sphere

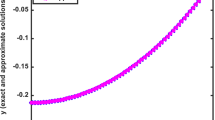

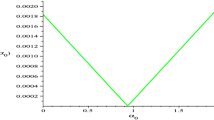

This problem has the analytical solution \(y(x)= \sqrt{\frac{3}{3+x^{2}}}\). We solved problem (38) using the proposed approach (19) with \(n=3\), \(N=8\) and \(r=(n+6)\), with an 11th-degree polynomial. The maximum absolute error of our method is presented in Table 4 along with those of existing methods: an optimised global hybrid block method Ramos and Singh (2021) with \(M=8\); the Taylor wavelet method Gümgüm (2020) with \(M=9\) and an 8th-order polynomial; VIMHPM Singh and Verma (2016) with 14 iterations and a 28th-degree polynomial, and 15th degree polynomial; VIM Kanth and Aruna (2010) with 4 iterations and a 42nd-degree polynomial; and the ADM Kumar et al. (2020) with 16 terms. Table 4 shows that the present approach provides far better results than the existing methods while using only a few iterations. Since the proposed method attains this accuracy with a lower-degree polynomial, it is an effective and highly promising approach. In addition, Fig. 3 shows the absolute error and the obtained solution for \(n=3\) and \(N=10\). Further, the maximum absolute errors and computation run times (in seconds) of the proposed method with \(n=3\) and of other existing methods are tabulated in Table 5. The numerical results in Tables 4 and 5 show the good performance of the proposed scheme.

Graphs of Example 3 for \(n=3\) and \(N=10\)

Example 4

Consider the following nonlinear SBVP Tomar (2021a), which arises in the study of thermal explosions in a cylindrical vessel

Problem (39) has the analytical solution \(y(x)=2\ln \big (\frac{v+1}{vx^{2}+1}\big )\), where \(v=3-2\sqrt{2}\). The maximum absolute error of our scheme for \(n=2\), \(N=10\) and \(r=2n\) is tabulated in Table 6 along with the maximum absolute errors of existing methods, namely the B-spline method Çağlar et al. (2009) with \(N=20\) and VIMHPM Singh and Verma (2016) with 8 iterations; the comparison confirms the accuracy and superiority of the present approach over these methods. The absolute error and the obtained solution are also plotted in Fig. 4.

Graphs of Example 4 for \(n=2\) and \(N=10\)

Example 5

Consider the following nonlinear SBVP Singh and Verma (2016), which describes the thermal distribution profile in the human head

The analytical solution to this problem is unknown. We solve (40) using the proposed method with \(n=3\), \(N=10\) and \(r=4n\) to obtain the approximate iterative solution for various values of \(\mu \) and \(\nu \). The numerical results of our method for \(\mu =0.1, \nu =1\) are tabulated in Table 7 along with those of the compact finite difference method (CFDM) Roul et al. (2019) with mesh size 20 and the ADM Kumar et al. (2020) with 14 terms. Table 7 shows that our results agree with those of the existing methods. Since the closed-form solution of problem (40) is not known, we consider the residual error

to assess the efficiency of the method. In Figs. 5 and 6, we depict the approximate solutions for different values of \(\mu \) and \(\nu \) to exhibit the behavior of the solution. These figures show that, for a fixed \(\nu \), the solution profile decreases as \(\mu \) increases, and, for a fixed \(\mu \), the solution profile increases as \(\nu \) increases. Moreover, the solution profile remains unchanged when \(\mu \) and \(\nu \) are increased or decreased simultaneously. Therefore, the proposed method is well suited to studying the behavior of the problem. It is worth pointing out that the proposed approach solves the problem efficiently for different values of \(\mu \) and \(\nu \), as the maximum absolute residual errors in Table 8 demonstrate, whereas VIMHPM Singh and Verma (2016) fails for \(\mu =0.1\) and \(\nu =1\), with a maximum absolute residual error of \(1.1\times 10^{8}\).
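
When no closed form is available, the residual can be evaluated directly from an approximation. A minimal sketch, assuming (1) reads \(y''+(\alpha /x)y'+f(x,y)=0\) and the approximation is a single polynomial \(y=\sum _k a_kx^k\) with \(a_1=0\): then \(y''+(\alpha /x)y'=\sum _{k\ge 2}k(k-1+\alpha )a_kx^{k-2}\), so no finite differences are needed. The residual used for the piecewise solution in the paper may be defined with a different sign or scaling, and the function names are hypothetical.

```python
def lhs_poly(a, alpha):
    """Coefficients of y'' + (alpha/x) y' for y = sum_k a[k] x**k; entry j multiplies x**j."""
    if len(a) > 1 and a[1] != 0.0:
        raise ValueError("a[1] != 0 makes (alpha/x) y' singular at x = 0")
    return [k * (k - 1 + alpha) * a[k] for k in range(2, len(a))]


def peval(c, x):
    """Horner evaluation of sum_j c[j] x**j (0 for an empty list)."""
    acc = 0.0
    for cj in reversed(c):
        acc = acc * x + cj
    return acc


def residual(a, alpha, f, xs):
    """R(x) = y'' + (alpha/x) y' + f(x, y) for the polynomial y, at the sample points xs."""
    L = lhs_poly(a, alpha)
    return [peval(L, x) + f(x, peval(a, x)) for x in xs]


xs = [k / 10 for k in range(11)]
# y = 1 + x^2/16 with alpha = 2 gives y'' + (2/x) y' = 3/8, so f = -3/8 yields a zero residual.
print(max(abs(r) for r in residual([1.0, 0.0, 1.0 / 16.0], 2.0, lambda x, y: -0.375, xs)))
# Truncated series of sqrt(3/(3+x^2)) with f = y**5: the residual is small near 0, larger at x = 1.
print(max(abs(r) for r in residual([1.0, 0.0, -1.0 / 6.0, 0.0, 1.0 / 24.0], 2.0, lambda x, y: y ** 5, xs)))
```

A small maximum residual indicates that the approximation nearly satisfies the differential equation, which is the sense in which the residual substitutes for the unavailable exact error.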

Graph of the approximate solutions of Example 5 for \(n=3\) and \(N=10\)

Graph of the approximate solutions of Example 5 for \(n=3\) and \(N=10\)

Example 6

Consider the following nonlinear SBVP Singh and Verma (2016), which arises in the study of a rotationally symmetric shallow membrane cap

The true solution to this problem is unknown. To obtain the approximate solution of (41), we implemented the scheme with \(n=3\), \(N=10\) and \(r=3n\). The numerical results of our scheme are tabulated in Table 9 along with those of existing methods, namely VIM Kanth and Aruna (2010) with 3 iterations and VIMHPM Singh and Verma (2016) with 6 iterations. Since the closed-form solution of problem (41) is not known, we consider the residual error

to check the efficiency of the method. The approximate solution for \(n=3\) and \(N=10\) is plotted in Fig. 7; the maximum absolute residual error is \(4.5\times 10^{-16}\), which confirms the effectiveness of the method.

Graph of the approximate solution for Example 6

Example 7

Consider the following singular diffusion problem with a nonlinear boundary condition, given in Garner and Shivaji (1990)

where \(\beta ,k>0\). This problem models oxygen diffusion in a spherical cell with Michaelis–Menten oxygen uptake.

The analytical solution to this problem is unknown. We solve problem (42) using the proposed iterative scheme with \(n=3\), \(N=10\) and \(r=3n\), and with \(\beta = \beta _{0}=\beta _{1}=k= k_{0}=k_{1}=\beta _{2}=2\). Since the closed-form solution of problem (42) is not known, we consider the residual error

The graph of the approximate solution is depicted in Fig. 8 and the obtained maximum absolute residual error is \(7.4\times 10^{-15}\), which confirms the efficiency and effectiveness of the method.

Graph of the approximate solution for Example 7

5 Conclusion

In this work, we introduced an effective technique for solving linear and nonlinear singular boundary value problems, with two types of boundary conditions, that arise in many scientific phenomena. In the proposed algorithm, we first discretize the domain [0, 1] into a finite number of equally spaced subintervals and then apply the introduced iterative formula on each subinterval. As the numerical results demonstrate, the technique produces highly accurate and reliable solutions within a few iterations, including for highly nonlinear problems. Its effectiveness and robustness have been demonstrated on seven numerical examples, and the results have been compared with those of existing methods to show the superiority of the method. The numerical simulations also show that the technique is useful for studying the behavior of problems with parameters, and that the iterative solutions converge rapidly as the iteration proceeds. Therefore, the present method is a highly promising tool for solving different types of singular problems with strong nonlinearity. Further, the proposed technique can solve nonlinear problems with nonlinear boundary conditions, as illustrated by Example 7.

References

Assadi R, Khuri S, Sayfy A (2018) Numerical solution of nonlinear second order singular BVPs based on Green’s functions and fixed-point iterative schemes. Int J Appl Comput Math 4(6):134

Çağlar H, Çağlar N, Özer M (2009) B-spline solution of non-linear singular boundary value problems arising in physiology. Chaos Solitons Fractals 39(3):1232–1237

Chambre P (1952) On the solution of the Poisson–Boltzmann equation with application to the theory of thermal explosions. J Chem Phys 20(11):1795–1797

Chawla M, Katti C (1982) Finite difference methods and their convergence for a class of singular two point boundary value problems. Numerische Mathematik 39(3):341–350

Chawla M, Katti C (1985) A uniform mesh finite difference method for a class of singular two-point boundary value problems. SIAM J Numer Anal 22(3):561–565

Chawla M, Subramanian R, Sathi H (1988) A fourth order method for a singular two-point boundary value problem. BIT Numer Math 28(1):88–97

Duggan R, Goodman A (1986) Pointwise bounds for a nonlinear heat conduction model of the human head. Bull Math Biol 48(2):229–236

Flesch U (1975) The distribution of heat sources in the human head: a theoretical consideration. J Theor Biol 54(2):285–287

Ford WF, Pennline JA (2009) Singular non-linear two-point boundary value problems: existence and uniqueness. Nonlinear Anal Theory Methods Appl 71(3–4):1059–1072

Garner J, Shivaji R (1990) Diffusion problems with a mixed nonlinear boundary condition. J Math Anal Appl 148(2):422–430

Gümgüm S (2020) Taylor wavelet solution of linear and nonlinear Lane–Emden equations. Appl Numer Math 158:44–53

Inç M, Evans DJ (2003) The decomposition method for solving of a class of singular two-point boundary value problems. Int J Comput Math 80(7):869–882

Kanth AR, Aruna K (2010) He’s variational iteration method for treating nonlinear singular boundary value problems. Comput Math Appl 60(3):821–829

Kanth AR, Bhattacharya V (2006) Cubic spline for a class of non-linear singular boundary value problems arising in physiology. Appl Math Comput 174(1):768–774

Keller JB (1956) Electrohydrodynamics I. The equilibrium of a charged gas in a container. J Ration Mech Anal 5(4):715–724

Khuri SA, Sayfy A (2010) A novel approach for the solution of a class of singular boundary value problems arising in physiology. Math Comput Model 52(3–4):626–636

Kumar M, Aziz T (2004) A non-uniform mesh finite difference method and its convergence for a class of singular two-point boundary value problems. Int J Comput Math 81(12):1507–1512

Kumar M et al (2020) Numerical solution of singular boundary value problems using advanced Adomian decomposition method. Eng Comput 37:2853–2863

Lin S (1976) Oxygen diffusion in a spherical cell with nonlinear oxygen uptake kinetics. J Theor Biol 60(2):449–457

McElwain D (1978) A re-examination of oxygen diffusion in a spherical cell with Michaelis–Menten oxygen uptake kinetics. J Theor Biol 71(2):255–263

Moaaz O, Ramos H, Awrejcewicz J (2021) Second-order Emden-Fowler neutral differential equations: a new precise criterion for oscillation. Appl Math Lett 118:107172

Niu J, Xu M, Lin Y, Xue Q (2018) Numerical solution of nonlinear singular boundary value problems. J Comput Appl Math 331:42–51

Pandey RK (1996) On a class of weakly regular singular two-point boundary value problems-II. J Differ Equ 127(1):110–123

Pandey RK (1997) On a class of regular singular two point boundary value problems. J Math Anal Appl 208(2):388–403

Pandey RK, Tomar S (2021) An effective scheme for solving a class of nonlinear doubly singular boundary value problems through quasilinearization approach. J Comput Appl Math 392:113411

Ramos H, Singh G (2021) A high-order efficient optimised global hybrid method for singular two-point boundary value problems. East Asian J Appl Math 11(3):515–539

Ramos H, Vigo-Aguiar J (2008) A new algorithm appropriate for solving singular and singularly perturbed autonomous initial-value problems. Int J Comput Math 85(3–4):603–611

Ramos JI (2008) On the variational iteration method and other iterative techniques for nonlinear differential equations. Appl Math Comput 199(1):39–69

Roul P, Warbhe U (2016) A novel numerical approach and its convergence for numerical solution of nonlinear doubly singular boundary value problems. J Comput Appl Math 296:661–676

Roul P, Goura VP, Agarwal R (2019) A compact finite difference method for a general class of nonlinear singular boundary value problems with Neumann and Robin boundary conditions. Appl Math Comput 350:283–304

Rufai MA, Ramos H (2020) Numerical solution of second-order singular problems arising in astrophysics by combining a pair of one-step hybrid block Nyström methods. Astrophys Space Sci 365:1–13

Rufai MA, Ramos H (2021a) Numerical integration of third-order singular boundary-value problems of Emden-Fowler type using hybrid block techniques. Commun Nonlinear Sci Numer Simul 106069

Rufai MA, Ramos H (2021b) Numerical solution for singular boundary value problems using a pair of hybrid Nyström techniques. Axioms 10(3):202

Russell R, Shampine L (1975) Numerical methods for singular boundary value problems. SIAM J Numer Anal 12(1):13–36

Singh M, Verma AK (2016) An effective computational technique for a class of Lane–Emden equations. J Math Chem 54(1):231–251

Singh R (2018) Optimal homotopy analysis method for the non-isothermal reaction-diffusion model equations in a spherical catalyst. J Math Chem 56(9):2579–2590

Singh R, Wazwaz AM, Kumar J (2016) An efficient semi-numerical technique for solving nonlinear singular boundary value problems arising in various physical models. Int J Comput Math 93(8):1330–1346

Tomar S (2021a) An effective approach for solving a class of nonlinear singular boundary value problems arising in different physical phenomena. Int J Comput Math 98(10):1–18

Tomar S (2021b) A rapid-converging analytical iterative scheme for solving singular initial value problems of Lane–Emden type. Int J Appl Comput Math 7(3):1–17

Van Gorder RA, Vajravelu K (2008) Analytic and numerical solutions to the Lane–Emden equation. Phys Lett A 372(39):6060–6065

Verma AK, Kayenat S (2018) On the convergence of Mickens’ type nonstandard finite difference schemes on Lane–Emden type equations. J Math Chem 56(6):1667–1706

Verma AK, Pandit B, Escudero C (2020a) Numerical solutions for a class of singular boundary value problems arising in the theory of epitaxial growth. Eng Comput 37(7):2539–2560

Verma AK, Pandit B, Verma L, Agarwal RP (2020b) A review on a class of second order nonlinear singular BVPs. Mathematics 8(7):1045

Verma AK, Kumar N, Singh M, Agarwal RP (2021a) A note on variation iteration method with an application on Lane–Emden equations. Eng Comput 38(10):3932–3943

Verma AK, Pandit B, Agarwal RP (2021b) Analysis and computation of solutions for a class of nonlinear SBVPs arising in epitaxial growth. Mathematics 9(7):774

Wazwaz AM (2011) The variational iteration method for solving nonlinear singular boundary value problems arising in various physical models. Commun Nonlinear Sci Numer Simul 16(10):3881–3886

Zhao T, Zhang Z, Wang T (2021) A hybrid asymptotic and augmented compact finite volume method for nonlinear singular two point boundary value problems. Appl Math Comput 392:125745

Acknowledgements

The authors thank the referees for the valuable comments and suggestions to improve the quality of this paper.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Communicated by Jose Alberto Cuminato.

Cite this article

Tomar, S., Verma, A.K. & Vajravelu, K. An effective method for solving singular boundary value problems with some relevant physical applications. Comp. Appl. Math. 41, 17 (2022). https://doi.org/10.1007/s40314-021-01715-z

DOI: https://doi.org/10.1007/s40314-021-01715-z

Keywords

- Singular boundary value problem

- Piecewise iterative technique

- Physiology problem

- Astrophysics problem

- Thermal explosion problem