Abstract

We propose a numerical scheme based upon the Bernstein approximation method for the computational solution of a new class of Volterra integral equations of the third kind (3rdVIEs). We describe the construction of the technique and its applicability to the equations considered. Furthermore, we analyse the convergence of the proposed scheme and bound the conditioning of the resulting linear system. Finally, we present a series of numerical examples demonstrating the effectiveness of this new technique for solving 3rdVIEs.

1 Introduction and preliminaries

In the early 1900s, Vito Volterra developed new types of equations in his work on population growth and called them integral equations. More precisely, an integral equation is an equation in which the unknown function appears under the integral sign. Integral equations arise in chemistry, biology, physics and engineering applications modelled by initial value problems on a finite closed interval (Wazwaz 1997). There are three basic kinds of integral equations; in this study we focus on the third kind because of the characteristic properties that distinguish it from the other two.

The Volterra integral equations of the third kind (3rdVIEs) are expressed in the literature as follows:

\[ x^{\beta } f(x) = g(x) + \lambda \int _{0}^{x} (x-t)^{-\alpha } {\mathcal {K}}(x,t) f(t)\, \mathrm{{d}}t, \quad x\in [0,\tau ], \tag{1.1} \]

where \(\lambda \) is a constant, \( \alpha \in [0,1) \), \( \beta >0 \) and \( \alpha +\beta \ge 1 \). In addition, g(x) is a continuous function on \( [0,\tau ] \) and \({\mathcal {K}}(x,t) \) is continuous on the domain

\[ \Delta := \{ (x,t) : 0\le t\le x\le \tau \} \]

such that

\[ {\mathcal {K}}(x,t) = t^{\alpha +\beta -1} {\mathcal {K}}^{*}(x,t), \]

where \( {\mathcal {K}}^{*}(x,t) \) is a continuous function on \( \Delta \) and \( f(\cdot ) \) is the unknown function to be determined. The coefficient \( x^{\beta } \) of f(x) on the left-hand side of (1.1) is what distinguishes 3rdVIEs from the Volterra integral equations of the first and second kind (denoted by 1stVIEs and 2ndVIEs, respectively) and gives 3rdVIEs their characteristic feature. It also explains why these equations are called third kind in the literature. Over the past century, there has been a dramatic increase in studies of these equations.

Existence and uniqueness theorems and regularity properties of the solutions to the 3rdVIEs, covering both weakly singular kernels (\( 0<\alpha <1 \)) and smooth kernels (\( \alpha =0 \)), have been presented in Allaei et al. (2015) for \( \beta >1 \) and \( \beta \in [0,1) \). In the same study, the authors also gave conditions on g and \( {\mathcal {K}} \) under which (1.1) becomes a cordial Volterra integral equation (cVIE); for detailed information about cVIEs see Vainikko (2009, 2010). This made it possible to apply to Eq. (1.1) some results known for cordial equations. In addition, the case \( \alpha +\beta >1 \) has a special significance with regard to compactness: if \( {\mathcal {K}}^{*}(x,t)>0 \), the integral operator related to the third kind Volterra integral equation (1.1) is not compact. In these circumstances, classical computational techniques cannot guarantee the solvability of third kind Volterra integral equations.

It follows from Allaei et al. (2015) that, under certain conditions on \( {\mathcal {K}}(x,t) \), \( \alpha \) and \( \beta \), the integral operator in (1.1) is compact, and then the algebraic system resulting from the collocation technique is uniquely solvable for all sufficiently small mesh sizes. In the non-compact case, however, the solvability of the algebraic system on a uniform or graded mesh is generally not guaranteed. Computational approaches for the numerical solution of 3rdVIEs therefore occupy an important place in the literature. In Allaei et al. (2017), the authors applied the spline collocation technique in piecewise polynomial spaces directly to 3rdVIEs with a noncompact operator. In the same study, they showed that the operator associated with the equivalent 2ndVIE is compact under specific conditions, so that the resulting system is uniquely solvable for all sufficiently small mesh diameters. Classical and adapted versions of the collocation technique for both linear and non-linear 3rdVIEs are explained, analysed and tested in detail in Shayanfard et al. (2019, 2020) and Song et al. (2019). Moreover, a spectral collocation technique based upon generalized Jacobi wavelets together with the Gauss–Jacobi numerical integration formula has been presented in Nemati et al. (2021). Another approach for solving 3rdVIEs, based on an operational matrix, has been presented in Nemati and Lima (2018). All these studies show that 3rdVIEs are worth studying and that research on this subject continues to grow.

Polynomials are among the most widely used mathematical tools since they are easy to define, can be evaluated quickly on modern computers and express functions in a simple form. They have therefore played a significant role in approximation theory and numerical analysis for many years. Studies in approximation theory began when Weierstrass proved that continuous functions can be approximated by polynomials. In 1912, Bernstein introduced the polynomials now called Bernstein polynomials in his proof of the Weierstrass approximation theorem (Bernstein 1913). In more detail, for each bounded function f on [0, 1], \( n\ge 1 \) and \( x\in [0,1] \), the Bernstein approximations are defined as

\[ {\mathfrak {B}}_n(f(x)) = \sum _{k=0}^{n} f\left( \frac{k}{n}\right) {\mathcal {P}}_{n,k}(x), \tag{1.2} \]

where

\[ {\mathcal {P}}_{n,k}(x) = \binom{n}{k} x^{k}(1-x)^{n-k} \]

and \( \binom{n}{k} \) is the binomial coefficient. A number of different operators have been introduced, and various generalizations have been made, on the basis of the linear positive Bernstein operators generated by the Bernstein polynomials. Such operators are still being studied today; see Altomare and Leonessa (2006), Altomare (2010), Altomare and Rasa (1999) and Usta (2020).
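As a minimal illustration of the definition above, the following Python sketch evaluates the Bernstein approximation of a function at a point of [0, 1]. The names `bernstein_basis` and `bernstein_approx` are chosen here for illustration only and do not come from the paper, whose experiments were run in MATLAB.

```python
import math


def bernstein_basis(n, k, x):
    """Bernstein basis polynomial C(n, k) * x^k * (1 - x)^(n - k)."""
    return math.comb(n, k) * x**k * (1.0 - x) ** (n - k)


def bernstein_approx(f, n, x):
    """Bernstein approximation of f of degree n, evaluated at x in [0, 1]."""
    return sum(f(k / n) * bernstein_basis(n, k, x) for k in range(n + 1))


if __name__ == "__main__":
    # The pointwise error for the continuous function sqrt shrinks as n grows.
    for n in (5, 20, 80):
        print(n, abs(bernstein_approx(math.sqrt, n, 0.3) - math.sqrt(0.3)))
```

Only the n + 1 samples f(k/n) enter the approximation, which is why these values become the unknowns when the same expansion is used for an unknown function.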

Throughout this and the following sections, C[0, 1] denotes the space of all continuous real-valued functions on [0, 1], endowed with the supremum norm \( \Vert \cdot \Vert _{\infty } \) and the natural pointwise ordering. Moreover, for \( m\in {\mathbb {N}} \), the symbol \( C^m[0,1] \) denotes the space of all m-times continuously differentiable functions on [0, 1].

Theorem 1

Let \( f\in C[0,1] \). Then, \( {\mathfrak {B}}_n(f) \) converges to f uniformly on [0, 1] .

Proof

For a detailed proof, see Powell (1981). \(\square \)

Furthermore, the well-known Voronovskaya-type theorem for the classical Bernstein operators \( ({\mathfrak {B}}_n)_{n\ge 1} \) can be stated as follows.

Theorem 2

Let \( f\in C^2[0,1] \), \( n\in {\mathbb {N}} \) and \( 0\le x \le 1 \). Then, the following inequality holds:

where \( {\tilde{\omega }} \) is the least concave majorant of the first order modulus of continuity denoted by \( \omega \), satisfying for \( \epsilon \ge 0 \)

Proof

For a detailed proof, see DeVore and Lorentz (1993). \(\square \)

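In other words, the above theorem can be stated as follows (Bustamante 2017):

\[ \lim _{n\rightarrow \infty } n\left[ {\mathfrak {B}}_n(f(x)) - f(x)\right] = \frac{x(1-x)}{2} f''(x), \]

which means that the rate of convergence of the Bernstein operators is at least \(n^{-1}\) for \( f\in C^2[0,1] \).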
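The \(n^{-1}\) rate can be observed numerically. The sketch below, written in Python purely for illustration, uses the test function \( f(t)=t^3 \) (chosen here, not taken from the paper) and compares the scaled error \( n[{\mathfrak {B}}_n(f(x))-f(x)] \) with the classical Voronovskaya limit \( x(1-x)f''(x)/2 \).

```python
import math


def bernstein_approx(f, n, x):
    """Bernstein approximation of f of degree n at x in [0, 1]."""
    return sum(
        f(k / n) * math.comb(n, k) * x**k * (1.0 - x) ** (n - k)
        for k in range(n + 1)
    )


def scaled_error(f, n, x):
    """n * (B_n f(x) - f(x)); for f in C^2 this tends to x(1-x)/2 * f''(x)."""
    return n * (bernstein_approx(f, n, x) - f(x))


def cube(t):
    """Illustrative test function f(t) = t^3, with f''(t) = 6t."""
    return t**3


x0 = 0.25
limit = x0 * (1.0 - x0) / 2.0 * (6.0 * x0)  # Voronovskaya limit at x0

if __name__ == "__main__":
    for n in (25, 100, 400):
        print(n, scaled_error(cube, n, x0), limit)
```

Because the scaled error approaches a finite limit, the unscaled error itself behaves like a constant times \(n^{-1}\).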

In this direction, Maleknejad et al. (2011) introduced a new method for solving 1stVIEs and 2ndVIEs using the Bernstein approximation method, and presented its convergence analysis using the Voronovskaya-type theorem for the classical Bernstein approximation. Additionally, Usta et al. (2021) extended this work using the Szasz–Mirakyan operators to solve 1stVIEs and 2ndVIEs and presented the convergence properties of the resulting method.

In this study, motivated by the above work, we construct a numerical scheme to solve 3rdVIEs by means of the Bernstein approximation method. We then give a convergence analysis to show that the proposed technique is theoretically sound. Finally, we support our claims with numerical results.

The rest of the manuscript is organised as follows. In Sect. 2, the construction of the technique is presented, along with the Bernstein approximation method. In Sect. 3, the convergence analysis of the introduced method is given with the help of the Voronovskaya-type theorem for Bernstein operators. Numerical examples are presented in Sect. 4, while some concluding remarks and further directions of study are discussed in Sect. 5.

2 Construction of the numerical scheme

The main goal of this section is to construct a numerical scheme for solving 3rdVIEs with the aid of the Bernstein approximation method in a simple one-dimensional setting. To this end, we consider the 3rdVIEs given in (1.1). To solve them computationally, we first approximate the unknown function f(x) as follows,

where \( {\mathcal {P}}_{n,k} \) is given as above. Since the Bernstein approximation is valid for functions defined on [0, 1], let us consider the following third kind Volterra integral equation,

To solve this kind of integral equation computationally, we approximate the unknown function f by (2.1). In other words, by direct substitution of the expansion for f(x) into the 3rdVIE, we deduce that

then we have

which yields,

It is important to emphasise here that x must be replaced by collocation points \( x_i \) before one determines the unknown coefficients \( f\left( k/n \right) \), \( k=0,1, \ldots , n \). To avoid the singularity problem, one can select for \( x_i \), \( i=0,1,2,\ldots ,n \), any distinct values in [0, 1] other than the singular points of the integral equation; here we take \( x_i=i/n+\varepsilon \), \( i=0,1,2,\ldots ,n-1 \), and \( x_n=1-\varepsilon \), where \( \varepsilon \) is an arbitrarily small number. We can then express the fully discretized 3rdVIE in matrix notation as follows,

where

where \( \varvec{\alpha } \) is an \( (n+1) \times (n+1) \) matrix and \( \varvec{\beta } \) and \( \mathbf{X} \) are \( (n+1) \times 1 \) vectors, since there are \( n+1 \) unknown coefficients \( f(k/n) \), \( k=0,1,\ldots ,n \), and \( n+1 \) collocation points. These are the arrays that form the essential part of the Matlab implementation. In order to compute the vector \( \mathbf{X} \), we first need to calculate the matrix \( \varvec{\alpha } \) and the vector \( \varvec{\beta } \) numerically. Solving the linear system then yields the vector \( \mathbf{X} \) with \( n+1 \) components, from which we obtain the approximate solution of the 3rdVIE.
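For orientation, one consistent way to write such a collocation system is sketched below. It assumes that the third kind equation on [0, 1] has the form \( x^{\beta } f(x) = g(x) + \lambda \int _{0}^{x} (x-t)^{-\alpha } {\mathcal {K}}(x,t) f(t)\, \mathrm{{d}}t \) (the form suggested by the quantity \( \nabla _1 \) in Lemma 1) and that rows are indexed by the collocation points \( x_i \); the index conventions are an assumption and may differ from those of (2.3). Substituting the Bernstein expansion of f and evaluating at \( x=x_i \) gives

\[ \sum _{k=0}^{n} f\left( \frac{k}{n}\right) \left[ x_i^{\beta } {\mathcal {P}}_{n,k}(x_i) - \lambda \int _{0}^{x_i} (x_i-t)^{-\alpha } {\mathcal {K}}(x_i,t) {\mathcal {P}}_{n,k}(t)\, \mathrm{{d}}t \right] = g(x_i), \quad i=0,1,\ldots ,n, \]

so that, under these assumptions, the bracketed term plays the role of the entry of \( \varvec{\alpha } \) in row i and column k, \( g(x_i) \) is the i-th entry of \( \varvec{\beta } \), and \( f(k/n) \) is the k-th entry of \( \mathbf{X} \).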

Note that we denote by \( f_n(k/n)\), \( k=0,1,2,\ldots ,n \), the computed values of f(k/n) at the nodes k/n, \( k=0,1,2,\ldots ,n \). Substituting them in (1.2), we obtain \( {\mathfrak {B}}_n(f_n(x)) \), which is the approximate solution of the third kind Volterra integral equation (1.1).
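The construction can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the author's MATLAB implementation: it assumes the equation has the form \( x^{\beta } f(x) = g(x) + \lambda \int _{0}^{x} (x-t)^{-\alpha } t^{\alpha +\beta -1} f(t)\, \mathrm{{d}}t \), i.e. \( {\mathcal {K}}^{*}\equiv 1 \), so that every integral against a Bernstein basis polynomial has a closed form through the Beta function. For a general kernel a quadrature rule suited to weak singularities would be needed instead. All function names and the test problem with exact solution \( f(x)=x \) are hypothetical.

```python
import math


def beta_fn(p, q):
    """Euler Beta function B(p, q) via the Gamma function."""
    return math.gamma(p) * math.gamma(q) / math.gamma(p + q)


def bernstein_basis(n, k, x):
    return math.comb(n, k) * x**k * (1.0 - x) ** (n - k)


def singular_integral(n, k, x, alpha, beta):
    """int_0^x (x-t)^(-alpha) t^(alpha+beta-1) P_{n,k}(t) dt in closed form.

    P_{n,k} is expanded in monomials; each term uses
    int_0^x (x-t)^(-alpha) t^(c-1) dt = x^(c-alpha) * B(c, 1-alpha).
    """
    total = 0.0
    for j in range(n - k + 1):
        c = alpha + beta + k + j
        coeff = math.comb(n, k) * math.comb(n - k, j) * (-1) ** j
        total += coeff * x ** (c - alpha) * beta_fn(c, 1.0 - alpha)
    return total


def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    m = len(b)
    aug = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(m):
        piv = max(range(col, m), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, m):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, m + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * m
    for r in range(m - 1, -1, -1):
        s = sum(aug[r][c] * x[c] for c in range(r + 1, m))
        x[r] = (aug[r][m] - s) / aug[r][r]
    return x


def solve_third_kind(g, lam, alpha, beta, n, eps=0.01):
    """Return the Bernstein approximation of the solution as a callable."""
    # Collocation points x_i = i/n + eps (i < n) and x_n = 1 - eps.
    nodes = [i / n + eps for i in range(n)] + [1.0 - eps]
    matrix = [
        [
            x**beta * bernstein_basis(n, k, x)
            - lam * singular_integral(n, k, x, alpha, beta)
            for k in range(n + 1)
        ]
        for x in nodes
    ]
    coeffs = solve_linear(matrix, [g(x) for x in nodes])  # approximates f(k/n)
    return lambda x: sum(c * bernstein_basis(n, k, x) for k, c in enumerate(coeffs))


# Hypothetical test problem with exact solution f(x) = x.
ALPHA, BETA, LAM = 0.5, 0.5, 0.25


def g_linear(x):
    return x ** (BETA + 1.0) * (1.0 - LAM * beta_fn(ALPHA + BETA + 1.0, 1.0 - ALPHA))


if __name__ == "__main__":
    approx = solve_third_kind(g_linear, LAM, ALPHA, BETA, n=4)
    print(max(abs(approx(x) - x) for x in (0.0, 0.3, 0.7, 1.0)))
```

Since Bernstein approximation reproduces linear functions exactly, this test problem is recovered up to rounding error; it checks the assembly of the system rather than the convergence rate. The monomial expansion involves alternating sums and is only advisable for small n.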

Now we provide an error bound for the scheme presented above in the following theorem.

3 Convergence analysis

Now, we focus on the convergence analysis of the proposed solution technique to validate it theoretically. For this purpose, we need an upper bound for \( \sup _{x_i\in [0,1]}\vert f(x_i)-{\mathfrak {B}}_n(f_n(x_i)) \vert \) that tends to zero as \( n\rightarrow \infty \). The following theorem shows that the numerical solution of the 3rdVIE converges to the exact one as n increases.

Theorem 3

Consider the 3rdVIEs given in (1.1). Assume that \({\mathcal {K}}(x,t) \) is a continuous kernel on the square \( [0,1]^2 \) and that \( \varvec{\alpha } \) is the matrix given in (2.3). Assume further that the numerical solution of the 3rdVIE given in (1.1) belongs to \( (C \cap L^2)([0,1]) \). If the matrix \( \varvec{\alpha } \) is invertible, then the following inequality holds,

where

and f(x) is the exact solution, \( {\mathfrak {B}}_n(f_n(x)) \) is the numerical solution of the introduced technique, \( x_i=i/n \) for \( i=0,1,\ldots , n \).

Proof

By the triangle inequality, we have

So, finding upper bounds for \( {\mathcal {A}}_1 \) and \( {\mathcal {A}}_2 \) will be enough to conclude the proof. Let us begin with \( {\mathcal {A}}_1 \). With the aid of the Voronovskaya-type theorem given in (1.3), we obtain the following upper bound for \( {\mathcal {A}}_1 \).

Then it is enough to find an upper bound for \( {\mathcal {A}}_2 \) to complete the proof of the theorem. If we replace \(f(\cdot ) \) with \({\mathfrak {B}}_n(f(\cdot )) \) in the equation

then the function g(x) on the left-hand side of the above equation is replaced by a new function, which we denote by h(x). So we get

Using (3.3) and (3.4), we deduce the following inequality,

Then using (3.5) and (1.3), we obtain that

On the other hand, we have \( [\varvec{\alpha }][\mathbf{X} ]=[\varvec{\beta }] \) and \( [\varvec{\alpha }][\tilde{\mathbf{X }}]=[\tilde{\varvec{\beta }}] \), where

therefore using (3.6), we have

which yields,

Finally, by substituting (3.7) and (3.2) into (3.1), we get the desired result, which completes the proof. \(\square \)

The drawback of Theorem 3 is that the error bound of the scheme contains the quantity \( \Vert \varvec{\alpha }^{-1} \Vert \). Hence, in the following lemma, under an extra condition, we find a bound for \( \Vert \varvec{\alpha }^{-1} \Vert \) and for the condition number of \( \varvec{\alpha } \).

Lemma 1

Suppose that the hypotheses of the previous theorem hold. Let \( \mathbf{I} \) be the identity matrix of the same order as \( \varvec{\alpha } \) and let \( \Vert \cdot \Vert \) be the maximum row sum norm. Then we have

such that

where \( \nabla _1=\max \nolimits _{i} \vert \lambda \vert \int _{0}^{x_i}\dfrac{1}{(x_i-t)^{\alpha }}\vert {\mathcal {K}}(x_i,t)\vert \mathrm{{d}}t\).

Proof

Let us begin by finding an upper bound for \( \Vert \varvec{\alpha } \Vert \) utilizing (2.3), as follows

Then, we need to find another upper bound for \( \Vert \varvec{\alpha }^{-1} \Vert \). For that, we have

Due to the geometric series theorem, we conclude that

Therefore,

thus the desired result has been obtained. \(\square \)
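The geometric series argument used in the proof can be checked on a small example. The Python sketch below uses a hypothetical \( 2\times 2 \) matrix close to the identity (not the matrix \( \varvec{\alpha } \) of the scheme, whose entries depend on the kernel) and verifies the generic bounds \( \Vert A^{-1} \Vert \le 1/(1-\Vert \mathbf{I}-A \Vert ) \) and \( \Vert A \Vert \Vert A^{-1} \Vert \le (1+\Vert \mathbf{I}-A \Vert )/(1-\Vert \mathbf{I}-A \Vert ) \), valid whenever \( \Vert \mathbf{I}-A \Vert <1 \). The specific constants of Lemma 1 are not reproduced here.

```python
def row_sum_norm(a):
    """Maximum absolute row sum (the matrix norm induced by the max norm)."""
    return max(sum(abs(v) for v in row) for row in a)


def inverse_2x2(a):
    """Exact inverse of a 2 x 2 matrix via the adjugate formula."""
    (p, q), (r, s) = a
    det = p * s - q * r
    return [[s / det, -q / det], [-r / det, p / det]]


# Hypothetical matrix close to the identity (not taken from the paper).
a = [[1.0, 0.2], [-0.1, 0.9]]
identity = [[1.0, 0.0], [0.0, 1.0]]
diff = [[identity[i][j] - a[i][j] for j in range(2)] for i in range(2)]

rho = row_sum_norm(diff)                  # ||I - A||, must be below 1
inv_norm = row_sum_norm(inverse_2x2(a))   # ||A^{-1}||
inv_bound = 1.0 / (1.0 - rho)             # geometric series bound
cond = row_sum_norm(a) * inv_norm         # condition number of A
cond_bound = (1.0 + rho) / (1.0 - rho)    # uses ||A|| <= 1 + ||I - A||

if __name__ == "__main__":
    print(rho, inv_norm, inv_bound, cond, cond_bound)
```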

4 Numerical results

So far this manuscript has focused on the construction of the numerical solution procedure for 3rdVIEs and its convergence analysis. In this section, we present computational experiments to demonstrate the performance of our numerical algorithm for solving 3rdVIEs. Henceforward, Max-Err stands for the maximum modulus error, i.e., \( \Vert f(x)-{\mathfrak {B}}_n(f_n(x))\Vert _{\infty } \), and Rms-Err stands for the standard root mean squared error, i.e.

\[ \text {Rms-Err} = \sqrt{\frac{1}{Neval}\sum _{j=1}^{Neval} \left[ f(x_j)-{\mathfrak {B}}_n(f_n(x_j))\right] ^2}, \]

where f(x) is the exact solution of the 3rdVIE, \( {\mathfrak {B}}_n(f_n(x)) \) is the approximate solution, \( x_j \) are the test points and Neval is the number of test points. Time represents the CPU time consumed in each numerical example. All examples have been run in MATLAB, which is convenient for our algorithm.

4.1 Experiment 1

(Nemati et al. 2021) We first consider the following 3rdVIE,

where B(p, q) is the Beta function defined by

\[ B(p,q) = \int _{0}^{1} t^{p-1}(1-t)^{q-1}\, \mathrm{{d}}t \]

for \( p,q>0 \). The exact solution of the above 3rdVIE is \( f(x)=x^{3/2} \), and we take \( \varepsilon =0.01 \).
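In practice the Beta function is usually evaluated through the Gamma function, using the standard identity \( B(p,q)=\Gamma (p)\Gamma (q)/\Gamma (p+q) \). The short Python sketch below (illustrative names, not from the paper) does this and compares the result with a crude midpoint rule for the integral \( \int _0^1 t^{p-1}(1-t)^{q-1}\, \mathrm{{d}}t \) in a smooth case.

```python
import math


def beta_fn(p, q):
    """Beta function via B(p, q) = Gamma(p) Gamma(q) / Gamma(p + q)."""
    return math.gamma(p) * math.gamma(q) / math.gamma(p + q)


def beta_midpoint(p, q, cells=1000):
    """Midpoint-rule approximation of the Beta integral on [0, 1].

    Only intended for p, q >= 1, where the integrand is bounded.
    """
    h = 1.0 / cells
    return h * sum(
        ((j + 0.5) * h) ** (p - 1.0) * (1.0 - (j + 0.5) * h) ** (q - 1.0)
        for j in range(cells)
    )


if __name__ == "__main__":
    print(beta_fn(2.0, 3.0), beta_midpoint(2.0, 3.0))
```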

In Table 1, we present the maximum error, the root mean square error and the CPU time for the first experiment for different values of n; the CPU time is given in the last column. Additionally, in Fig. 1, we plot the exact and approximate solutions of the 3rdVIE in the same figure. Both the table and the figure show that the proposed method provides a reliable numerical solution of 3rdVIEs.

4.2 Experiment 2

(Nemati et al. 2021) Now, let us consider another example, given below,

The exact solution of the above 3rdVIE is \( f(x)=x^{13/4} \), and we take \( \varepsilon =0.001 \).

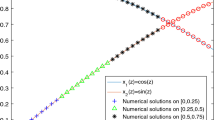

Similarly, in Table 2, we present the maximum error, the root mean square error and the CPU time for the second experiment for different values of n. In addition, in Fig. 2, we plot the exact and approximate solutions of the 3rdVIE in the same figure.

Both the table and the figure show that the proposed method provides a reliable numerical solution of 3rdVIEs.

4.3 Experiment 3

(Shayanfard et al. 2019) Finally, we present the last experiment to show the reliability of the method as follows

The exact solution of the above 3rdVIE is \( f(x)=x \), and we take \( \varepsilon =0.01 \).

Finally, in Table 3, we present the maximum error, the root mean square error and the CPU time for the third experiment for different values of n. Furthermore, in Fig. 3, we plot the exact and approximate solutions of the 3rdVIE in the same figure. We see from the table and the figure that the proposed method provides a reliable numerical solution of 3rdVIEs. Again, the last columns of Tables 2 and 3 report the CPU time of the algorithm.

In this section, a series of numerical experiments has been presented; they show that the proposed method can be applied successfully to 3rdVIEs. In particular, the method gives good results with a small number of iterations.

5 Concluding remarks

In this paper, we proposed and tested a numerical scheme based upon the Bernstein approximation technique for solving a new class of 3rdVIEs. We described the construction of the technique and its applicability to the equations considered. In addition, we analysed the convergence of the proposed scheme and bounded the conditioning of the resulting linear system. Finally, we tested a series of numerical examples demonstrating the effectiveness of this new technique for solving 3rdVIEs.

References

Allaei SS, Yang ZW, Brunner H (2015) Existence, uniqueness and regularity of solutions to a class of third kind Volterra integral equations. J Integral Equ Appl 27:325–342

Allaei SS, Yang ZW, Brunner H (2017) Collocation methods for third kind VIEs. IMA J Numer Anal 37(3):1104–1124

Altomare F (2010) Korovkin-type theorems and approximation by positive linear operators. Surv Approx Theory 5: 92–164. Free available online at http://www.math.techmion.ac.il/sat/papers/13/

Altomare F, Leonessa V (2006) On a sequence of positive linear operators associated with a continuous selection of Borel measures. Mediterr J Math 33(4):363–382

Altomare F, Rasa I (1999) Feller semigroups, Bernstein type operators and generalized convexity associated with positive projections. In: New developments in approximation theory (Dortmund 1998), 9–32, Internat. Ser. Numer. Math., 132. Birkhauser Verlag, Basel

Bernstein SN (1913) Démonstration du théorème de Weierstrass fondée sur le calcul des probabilités. Commun Soc Math 13:1–2

Bustamante J (2017) Bernstein operators and their properties. Chicago

DeVore RA, Lorentz GG (1993) Constructive approximation. Springer, Berlin

Maleknejad K, Hashemizadeh E, Ezzati R (2011) A new approach to the numerical solution of Volterra integral equations by using Bernstein’s approximation. Commun Nonlinear Sci Numer Simul 16:647–655

Nemati S, Lima PM, Torres DFM (2021) Numerical solution of a class of third kind Volterra integral equations using Jacobi wavelets. Numer Algorithms 86:675–691

Nemati S, Lima PM (2018) Numerical solution of a third kind Volterra integral equation using an operational matrix technique, 2018 European Control Conference (ECC), Limassol, pp 3215–3220

Powell MJD (1981) Approximation theory and methods. Cambridge University Press, Cambridge

Shayanfard F, Laeli Dastjerdi H, Maalek Ghaini FM (2019) A numerical method for solving Volterra integral equations of the third kind by multistep collocation method. Comput Appl Math 38:174

Shayanfard F, Laeli Dastjerdi H, Maalek Ghaini FM (2020) Collocation method for approximate solution of Volterra integro-differential equations of the third kind. Appl Numer Math 150:139–148

Song H, Yang Z, Brunner H (2019) Analysis of collocation methods for nonlinear Volterra integral equations of the third kind. Calcolo 56(1):7

Usta F (2020) Approximation of functions by new classes of linear positive operators which fix \(1,\varphi \) and \(1,\varphi ^2 \). An Stiint Univ “Ovidius” Constanta Ser Mat 28(3):255–265

Usta F, İlkhan M, Kara EE (2021) Numerical solution of Volterra integral equations via Szasz–Mirakyan approximation method. Math Methods Appl Sci 44(9):7491–7500

Vainikko G (2009) Cordial Volterra integral equations 1. Numer Funct Anal Optim 30:1145–1172

Vainikko G (2010) Cordial Volterra integral equations 2. Numer Funct Anal Optim 31:191–219

Wazwaz AM (1997) A first course in integral equations. World Scientific, Singapore

Additional information

Communicated by Hui Liang.

Cite this article

Usta, F. Bernstein approximation technique for numerical solution of Volterra integral equations of the third kind. Comp. Appl. Math. 40, 161 (2021). https://doi.org/10.1007/s40314-021-01555-x
