Abstract

We propose a spectral collocation method, based on generalized Jacobi wavelets together with the Gauss–Jacobi quadrature formula, for solving a class of third-kind Volterra integral equations. To this end, the interval of integration is first transformed into [− 1, 1] by a suitable change of variable. Then, by choosing special Jacobi parameters, the integral part is approximated by the Gauss–Jacobi quadrature rule. The unknown function is approximated by a linear combination of Jacobi wavelet functions with unknown coefficients, which must be determined. Substituting this approximation into the equation and collocating the resulting equation at a set of collocation points yields a system of linear algebraic equations. We then suggest a method for determining the number of basis functions needed to attain a prescribed precision. Finally, some examples are included to illustrate the applicability, efficiency, and accuracy of the new scheme.


1 Introduction

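In this paper, we consider the following Volterra integral equation (VIE)

\[ t^{\beta}u(t) = f(t) + \int_{0}^{t}(t-x)^{-\alpha}\,\kappa(t,x)\,u(x)\,dx, \qquad t \in [0,T], \tag{1} \]

where β > 0, α ∈ [0,1), α + β ≥ 1, \(f(t) = t^{\beta}g(t)\) with a continuous function g, and κ is continuous on the domain Δ := {(t, x) : 0 ≤ x ≤ t ≤ T} and is of the form

\[ \kappa(t,x) = x^{\alpha+\beta-1}\,\kappa_1(t,x), \]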

where \(\kappa_1\) is continuous on Δ. The presence of the factor \(t^{\beta}\) on the left-hand side of (1) gives the equation special properties, which distinguish it both from VIEs of the second kind (where the coefficient of u(t) on the left-hand side never vanishes) and from VIEs of the first kind (where the left-hand side is identically zero). For this reason, such equations are often referred to in the literature as VIEs of the third kind. This class of equations has attracted considerable attention in recent years. The existence, uniqueness, and regularity of solutions to (1) were discussed in [1]. In that paper, the authors derived necessary conditions for converting the equation into a cordial VIE, a class of VIEs studied in detail in [2, 3]. This made it possible to apply to (1) some results known for cordial equations. In particular, the case α + β > 1 is of special interest, because in this case, if \(\kappa_1(t, x) > 0\), the integral operator associated with (1) is not compact, and the solvability of the equation by classical numerical methods cannot be guaranteed. In [1], the authors introduced a modified graded mesh and proved that, with such a mesh, the collocation method is applicable and has the same convergence order as for regular equations.

In [4], two of the authors of the present paper applied a different approach, which consists in expanding the solution as a series of adjusted hat functions and approximating the integral operator by an operational matrix. The advantage of that approach is that it reduces the problem to a system of linear equations with a simple structure, which splits into subsystems of three equations, making the method efficient and easy to implement [4]. A limitation of this technique is that the optimal convergence order \(O(h^4)\) is attained only if the solution satisfies \(u \in C^4([0, T])\), which is not the case in many applications.

It is worth remarking that there is a close connection between equations of class (1) and fractional differential equations [5]. Indeed, the kernel of (1) has the same form as the kernels arising in fractional differential equations: in the case κ(t, x) ≡ 1, the integral operator coincides, up to the constant factor Γ(1 − α), with the Riemann–Liouville fractional integral of order 1 − α. It therefore makes sense to apply to this class of equations numerical approaches that have recently been applied successfully to fractional differential equations and related problems [5].
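The connection with the Riemann–Liouville integral can be checked directly: for v(x) = x^p, one has ∫_0^t (t − x)^{−α} v(x) dx = B(1 − α, p + 1) t^{p+1−α}, which is Γ(1 − α) times the Riemann–Liouville integral of order 1 − α of x^p. The Python sketch below assumes SciPy, and the function name `weakly_singular_integral` is illustrative. It evaluates such integrals in the spirit of Section 4, assuming the change of variable x = t(s + 1)/2 and an N-point Gauss–Jacobi rule for the weight (1 − s)^{−α}. The rule is exact for polynomials of degree up to 2N − 1 but only approximate for non-integer p, such as p = 13/4 in Example 1.

```python
import numpy as np
from scipy.special import roots_jacobi, beta


def weakly_singular_integral(v, t, alpha, N=10):
    """Approximate int_0^t (t - x)^(-alpha) v(x) dx.

    The substitution x = t (s + 1) / 2 gives
    (t/2)^(1 - alpha) * int_{-1}^{1} (1 - s)^(-alpha) v(x(s)) ds,
    evaluated with the N-point Gauss-Jacobi rule for the weight (1 - s)^(-alpha) (1 + s)^0.
    """
    s, w = roots_jacobi(N, -alpha, 0.0)
    x = t * (s + 1) / 2
    return (t / 2) ** (1 - alpha) * np.sum(w * v(x))


alpha, t = 0.5, 0.7
for p in [0, 3, 13 / 4]:
    approx = weakly_singular_integral(lambda x: x ** p, t, alpha)
    # closed form = Gamma(1 - alpha) * (Riemann-Liouville integral of order 1 - alpha of x^p)(t)
    exact = beta(1 - alpha, p + 1) * t ** (p + 1 - alpha)
    print(f"p = {p}: relative error = {abs(approx - exact) / exact:.1e}")
```

For the integer exponents the printed error is at rounding level; for p = 13/4 it is small but nonzero, because the integrand is not a polynomial.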

One of these techniques is based on wavelets, a family of functions built by dilation and translation of a single function φ(t), called the mother wavelet. Wavelets have proved to be a very powerful computational tool. The term wavelet was introduced by Jean Morlet around 1975, and the theory of the wavelet transform was developed by him and Alex Grossmann in the 1980s [6, 7]. Further developments concern the multiresolution analysis algorithm based on wavelets [8] and the construction of compactly supported orthonormal wavelet bases [9]. Wavelets form an unconditional (Riesz) basis for \(L^2(\mathbb{R})\), in the sense that any function in \(L^2(\mathbb{R})\) can be decomposed and reconstructed in terms of wavelets [10]. Many authors have constructed and used different types of wavelets, such as B-spline [11], Haar [12], Chebyshev [13], Legendre [14], and Bernoulli [15] wavelets. The advantage of employing wavelets, in comparison with other basis functions, is that the accuracy of the approximation can be improved in two different ways: (i) by increasing the degree of the mother function (assuming that it is a polynomial); (ii) by increasing the level of resolution, that is, by reducing the support of each basis function.

We emphasize that wavelets are especially advantageous for equations with non-smooth solutions, as is the case for (1). In such cases, increasing the degree of the polynomial approximation is not an effective way to improve the accuracy; such an improvement can, however, be obtained by increasing the level of resolution.

In a recent work [16], Legendre wavelets were applied to the numerical solution of fractional delay-type integro-differential equations. In the present paper, we apply a similar technique to approximate the solution of (1).

The paper is organized as follows. Section 2 is devoted to the preliminaries required for presenting the numerical technique. In Section 3, we give some error bounds for the best approximation of a given function by generalized Jacobi wavelets. Section 4 presents a new numerical method for solving equations of type (1). In Section 5, we suggest a criterion for determining the number of basis functions. Numerical examples are considered in Section 6 to confirm the high accuracy and efficiency of the new technique. Finally, concluding remarks are given in Section 7.

2 Preliminaries

In this section, we present some definitions and basic concepts that will be used in the sequel.

2.1 Jacobi wavelets

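The Jacobi polynomials \(\left \{P_{i}^{(\nu ,\gamma )}(t)\right \}_{i=0}^{\infty }\), ν, γ > − 1, t ∈ [− 1, 1], are a set of orthogonal functions with respect to the weight function

\[ w^{(\nu,\gamma)}(t) = (1-t)^{\nu}(1+t)^{\gamma}, \]

with the following orthogonality property:

\[ \int_{-1}^{1} P_{i}^{(\nu,\gamma)}(t)\,P_{j}^{(\nu,\gamma)}(t)\,w^{(\nu,\gamma)}(t)\,dt = h_i\,\delta_{ij}, \]

where \(\delta_{ij}\) is the Kronecker delta and

\[ h_i = \frac{2^{\nu+\gamma+1}\,\Gamma(i+\nu+1)\,\Gamma(i+\gamma+1)}{(2i+\nu+\gamma+1)\,i!\,\Gamma(i+\nu+\gamma+1)}. \]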

The Jacobi polynomials include a variety of orthogonal polynomials as special cases, obtained for different admissible values of the Jacobi parameters ν and γ. The best-known cases are the Legendre polynomials (ν = γ = 0), the Chebyshev polynomials of the first kind (ν = γ = − 0.5), and the Chebyshev polynomials of the second kind (ν = γ = 0.5), the latter two up to normalization.
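The orthogonality relation and the normalization constant \(h_i = 2^{\nu+\gamma+1}\Gamma(i+\nu+1)\Gamma(i+\gamma+1)/\big((2i+\nu+\gamma+1)\,i!\,\Gamma(i+\nu+\gamma+1)\big)\) can be checked numerically. The Python sketch below assumes SciPy. It uses `scipy.special.roots_jacobi`, whose weight convention is (1 − t)^ν(1 + t)^γ, together with `eval_jacobi`, and compares the Gram matrix of P_0, …, P_6 with diag(h_0, …, h_6) for the three parameter pairs listed above. The helper names are illustrative.

```python
import numpy as np
from scipy.special import roots_jacobi, eval_jacobi, gammaln


def h(i, nu, ga):
    """Normalization constant h_i of P_i^{(nu,ga)}, computed in log form for stability."""
    if i == 0:
        # For i = 0 the factor (nu + ga + 1) Gamma(nu + ga + 1) equals Gamma(nu + ga + 2);
        # using the latter avoids 0 * Gamma(0) when nu + ga = -1 (Chebyshev, first kind).
        return np.exp((nu + ga + 1) * np.log(2.0) + gammaln(nu + 1) + gammaln(ga + 1)
                      - gammaln(nu + ga + 2))
    return np.exp((nu + ga + 1) * np.log(2.0) + gammaln(i + nu + 1) + gammaln(i + ga + 1)
                  - gammaln(i + 1) - gammaln(i + nu + ga + 1)) / (2 * i + nu + ga + 1)


def gram_matrix(nu, ga, degree=6, n_quad=20):
    """Weighted inner products of P_0, ..., P_degree via a Gauss-Jacobi rule."""
    x, w = roots_jacobi(n_quad, nu, ga)          # weight (1 - x)^nu (1 + x)^ga
    P = np.array([eval_jacobi(i, nu, ga, x) for i in range(degree + 1)])
    return (P * w) @ P.T                         # exact: integrand degree <= 2*degree < 2*n_quad


for nu, ga in [(0.5, 0.5), (0.0, 0.0), (-0.5, -0.5)]:
    G = gram_matrix(nu, ga)
    H = np.diag([h(i, nu, ga) for i in range(7)])
    print((nu, ga), "max |G - diag(h)| =", np.max(np.abs(G - H)))
```

The printed deviations are at rounding level; for instance h_0 = π in the first-kind Chebyshev case and h_i = 2/(2i + 1) in the Legendre case.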

We define the generalized Jacobi wavelet functions on the interval [0, T) as follows:

where k = 1, 2, 3, … is the level of resolution, n = 1, 2, 3, …, \(2^{k-1}\), m = 0, 1, 2, … is the degree of the Jacobi polynomial, and t is the normalized time. The interested reader can refer to [17, 18] for more details on wavelets. The Jacobi wavelet functions are orthonormal with respect to the weight function

where

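An arbitrary function \(u \in L^2[0, T)\) may be approximated by Jacobi wavelet functions as

\[ u(t) \simeq \sum_{n=1}^{2^{k-1}}\sum_{m=0}^{M} u_{n,m}\,\psi_{n,m}^{(\nu,\gamma)}(t), \]

where

\[ u_{n,m} = \int_{0}^{T} u(t)\,\psi_{n,m}^{(\nu,\gamma)}(t)\,w_k(t)\,dt. \]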

2.2 Gauss–Jacobi quadrature rule

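For a given function u, the Gauss–Jacobi quadrature formula is given by

\[ \int_{-1}^{1}(1-t)^{\nu}(1+t)^{\gamma}\,u(t)\,dt = \sum_{l=1}^{N}\omega_l\,u(t_l) + R_N(u), \]

where \(t_l\), l = 1, …, N, are the roots of \(P_{N}^{(\nu,\gamma)}\), and \(\omega_l\), l = 1, …, N, are the corresponding weights, given by (see [19])

\[ \omega_l = \frac{2^{\nu+\gamma+1}\,\Gamma(N+\nu+1)\,\Gamma(N+\gamma+1)}{N!\,\Gamma(N+\nu+\gamma+1)\,(1-t_l^{2})\left[\partial_t P_N^{(\nu,\gamma)}(t_l)\right]^{2}}, \tag{2} \]

and \(R_N(u)\) is the remainder term, given by

\[ R_N(u) = \frac{2^{2N+\nu+\gamma+1}\,N!\,\Gamma(N+\nu+1)\,\Gamma(N+\gamma+1)\,\Gamma(N+\nu+\gamma+1)}{(2N+\nu+\gamma+1)\left[\Gamma(2N+\nu+\gamma+1)\right]^{2}}\,\frac{u^{(2N)}(\eta)}{(2N)!}, \qquad \eta \in (-1,1). \tag{3} \]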

According to the remainder term (3), the Gauss–Jacobi quadrature rule is exact for all polynomials of degree less than or equal to 2N − 1. The rule is reliable only when u has no singularity in (− 1, 1). The roots and weights of the Gauss–Jacobi quadrature rule can be computed by numerical algorithms (see, e.g., [20]).
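Formulas (2) and (3) can be checked numerically. The Python sketch below assumes SciPy and uses the standard closed forms of the weights and of the remainder constant (written out in the docstrings), together with the derivative identity d/dt P_N^{(ν,γ)} = (N + ν + γ + 1)/2 · P_{N−1}^{(ν+1,γ+1)}. It compares the weights with those returned by `scipy.special.roots_jacobi`, confirms exactness up to degree 2N − 1, and checks that for u(t) = t^{2N} the quadrature error equals the remainder constant, since then u^{(2N)}/(2N)! = 1. The parameters ν = −0.5, γ = 0, N = 10 mirror the choice ν = −α, γ = 0 of Section 4 with α = 0.5; the helper names are illustrative.

```python
import numpy as np
from scipy.special import roots_jacobi, eval_jacobi, gammaln


def jacobi_derivative(N, nu, ga, x):
    """d/dx P_N^{(nu,ga)}(x) = (N + nu + ga + 1) / 2 * P_{N-1}^{(nu+1,ga+1)}(x)."""
    return 0.5 * (N + nu + ga + 1) * eval_jacobi(N - 1, nu + 1, ga + 1, x)


def gauss_jacobi_weights(N, nu, ga):
    """Nodes t_l (zeros of P_N^{(nu,ga)}) and weights from the closed form
    2^(nu+ga+1) Gamma(N+nu+1) Gamma(N+ga+1) / (N! Gamma(N+nu+ga+1) (1 - t_l^2) P_N'(t_l)^2)."""
    t, _ = roots_jacobi(N, nu, ga)
    log_c = ((nu + ga + 1) * np.log(2.0) + gammaln(N + nu + 1) + gammaln(N + ga + 1)
             - gammaln(N + 1) - gammaln(N + nu + ga + 1))
    return t, np.exp(log_c) / ((1 - t ** 2) * jacobi_derivative(N, nu, ga, t) ** 2)


def remainder_constant(N, nu, ga):
    """c_N such that R_N(u) = c_N * u^(2N)(eta) / (2N)!  (standard Gauss-Jacobi error term)."""
    log_c = ((2 * N + nu + ga + 1) * np.log(2.0) + gammaln(N + 1) + gammaln(N + nu + 1)
             + gammaln(N + ga + 1) + gammaln(N + nu + ga + 1)
             - np.log(2 * N + nu + ga + 1) - 2 * gammaln(2 * N + nu + ga + 1))
    return np.exp(log_c)


N, nu, ga = 10, -0.5, 0.0                       # nu = -alpha, gamma = 0, alpha = 0.5
t, w = gauss_jacobi_weights(N, nu, ga)
t_ref, w_ref = roots_jacobi(N + 5, nu, ga)      # reference rule, exact up to degree 2N + 9
print("max weight mismatch vs SciPy:", np.max(np.abs(w - roots_jacobi(N, nu, ga)[1])))
for d in (2 * N - 1, 2 * N):
    err = np.sum(w_ref * t_ref ** d) - np.sum(w * t ** d)
    print(f"degree {d}: exact - quadrature = {err:.3e}")
print("remainder constant c_N:", remainder_constant(N, nu, ga))
```

The degree-(2N − 1) error is at rounding level, while the degree-2N error coincides with c_N, which is what the remainder formula predicts for a monomial of degree 2N.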

3 Best approximation errors

The aim of this section is to give some estimates for the error of the Jacobi wavelet approximation of a function u in terms of Sobolev norms and seminorms. To this end, we extend to Jacobi wavelets some results obtained in [21] for the best approximation error by Jacobi polynomials in Sobolev spaces. The main result of this section is Theorem 1, which relates the regularity of a given function to the convergence rate of its approximation by Jacobi wavelets.

We first introduce some notation that will be used in this paper. Suppose that \(L^{2}_{w^{*}}(a,b)\) is the space of measurable functions whose square is Lebesgue integrable in (a, b) relative to the weight function w∗. The inner product and norm of \(L^{2}_{w^{*}}(a,b)\) are, respectively, defined by

\[ (u,v)_{w^{*}} = \int_{a}^{b} u(x)\,v(x)\,w^{*}(x)\,dx \]

and

\[ \|u\|_{L^{2}_{w^{*}}(a,b)} = (u,u)_{w^{*}}^{1/2}. \]

The Sobolev norm of integer order r ≥ 0 in the interval (a, b) is given by

\[ \|u\|_{H^{r}_{w^{*}}(a,b)} = \left(\sum_{j=0}^{r}\left\|u^{(j)}\right\|_{L^{2}_{w^{*}}(a,b)}^{2}\right)^{1/2}, \]

where \(u^{(j)}\) denotes the jth derivative of u and \(H_{w^{*}}^{r}(a,b)\) is a weighted Sobolev space relative to the weight function w∗.

For convenience, for some fixed values of − 1 < ν, γ < 1, we set

To begin the error analysis, we recall the following lemma from [21].

Lemma 1 (see [21])

Assume that \(u\in H_{w}^{\mu }(-1,1)\) with μ ≥ 0 and \(L_{M}(u) \in \mathbb {P}_{M}\) denotes the truncated Jacobi series of u, where \(\mathbb {P}_{M}\) is the space of all polynomials of degree less than or equal to M. Then,

where

and C is a positive constant independent of the function u and integer M. Also, for 1 ≤ r ≤ μ, one has

Suppose that \(\pi_M(I_{k,n})\) denotes the set of all functions whose restrictions to each subinterval \(I_{k,n}=\left (\frac {n-1}{2^{k-1}}T, \frac {n}{2^{k-1}}T\right )\), n = 1, 2, …, \(2^{k-1}\), are polynomials of degree at most M. Then the following lemma holds.

Lemma 2

Let \(u_{n} : I_{k,n} \rightarrow \mathbb {R}\), n = 1, 2, …, \(2^{k-1}\), be functions in \(H_{w_{n,k}}^{\mu }(I_{k,n})\) with μ ≥ 0. Consider the function \(\bar{u}_{n}: (-1,1) \rightarrow \mathbb {R}\) defined by \(\bar {u}_{n}(t)=u_{n}\left (\frac {T}{2^{k}}(t+2n-1)\right )\) for all t ∈ (− 1, 1). Then, for 0 ≤ j ≤ μ, we have

Proof

Using the definition of the \(L^2\)-norm and the change of variable \(t^{\prime }=\frac {T}{2^{k}}(t+2n-1)\), we have

which proves the lemma. □
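The change of variable \(t^{\prime }=\frac {T}{2^{k}}(t+2n-1)\) used in Lemma 2 is also what is needed to build Jacobi wavelets and compute their coefficients in practice. The Python sketch below assumes SciPy and a common normalization: ψ_{n,m}(t) = sqrt(2^k/(T h_m)) P_m^{(ν,γ)}(2^k t/T − 2n + 1) on the half-open subinterval I_{k,n}, zero elsewhere, with weight w(2^k t/T − 2n + 1) on I_{k,n}. This normalization and the helper names (`psi`, `wavelet_coefficients`, `evaluate`) are illustrative assumptions and may differ by constant factors from the definition in Section 2.1. The coefficients are weighted inner products computed by mapped Gauss–Jacobi quadrature.

```python
import numpy as np
from scipy.special import roots_jacobi, eval_jacobi, gammaln


def h(m, nu, ga):
    """Squared weighted norm of P_m^{(nu,ga)} on [-1, 1] (standard closed form)."""
    if m == 0:
        return np.exp((nu + ga + 1) * np.log(2.0) + gammaln(nu + 1) + gammaln(ga + 1)
                      - gammaln(nu + ga + 2))
    return np.exp((nu + ga + 1) * np.log(2.0) + gammaln(m + nu + 1) + gammaln(m + ga + 1)
                  - gammaln(m + 1) - gammaln(m + nu + ga + 1)) / (2 * m + nu + ga + 1)


def psi(n, m, t, k, T=1.0, nu=0.5, ga=0.5):
    """Assumed Jacobi wavelet: sqrt(2^k / (T h_m)) P_m(2^k t / T - 2n + 1) on I_{k,n}, else 0."""
    t = np.asarray(t, dtype=float)
    a, b = (n - 1) * T / 2 ** (k - 1), n * T / 2 ** (k - 1)
    s = 2 ** k * t / T - 2 * n + 1                      # maps I_{k,n} onto [-1, 1)
    scale = np.sqrt(2 ** k / (T * h(m, nu, ga)))
    return np.where((t >= a) & (t < b), scale * eval_jacobi(m, nu, ga, s), 0.0)


def wavelet_coefficients(u, k, M, T=1.0, nu=0.5, ga=0.5, n_quad=40):
    """u_{n,m} = weighted inner product of u with psi_{n,m}, via mapped Gauss-Jacobi quadrature."""
    s, w = roots_jacobi(n_quad, nu, ga)
    C = np.zeros((2 ** (k - 1), M + 1))
    for n in range(1, 2 ** (k - 1) + 1):
        t = T * (s + 2 * n - 1) / 2 ** k                # quadrature nodes inside I_{k,n}
        for m in range(M + 1):
            # dt = (T / 2^k) ds, and the weight on I_{k,n} becomes w(s) after the mapping
            C[n - 1, m] = (T / 2 ** k) * np.sum(w * u(t) * psi(n, m, t, k, T, nu, ga))
    return C


def evaluate(C, t, k, T=1.0, nu=0.5, ga=0.5):
    """Truncated expansion sum_{n,m} u_{n,m} psi_{n,m}(t)."""
    return sum(C[n - 1, m] * psi(n, m, t, k, T, nu, ga)
               for n in range(1, C.shape[0] + 1) for m in range(C.shape[1]))


# Example: approximate u(t) = t^(13/4) on [0, 1) with k = 4, M = 6 (second-kind Chebyshev).
C = wavelet_coefficients(lambda t: t ** 3.25, k=4, M=6)
x = np.linspace(0.0, 0.999, 500)
print("max pointwise error:", np.max(np.abs(evaluate(C, x, k=4) - x ** 3.25)))
```

Two quick sanity checks follow from orthonormality: the coefficients of a single wavelet form a unit vector, and a function that is a polynomial of degree at most M on each subinterval is reproduced up to rounding.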

To proceed, we introduce the following seminorm for \(u \in H_{w_{k}}^{\mu }(0,T)\), 0 ≤ r ≤ μ, M ≥ 0 and k ≥ 1, which replaces the seminorm (6) in the case of a wavelet approximation:

If we choose M such that M ≥ μ − 1, it can be easily seen that

The next theorem provides an estimate of the best approximation error, when Jacobi wavelets are used, in terms of the seminorm defined by (8).

Theorem 1

Suppose that \(u \in H_{w_{k}}^{\mu }(0,T)\) with μ ≥ 0 and

is the best approximation of u based on the Jacobi wavelets. Then,

and, for 1 ≤ r ≤ μ,

where, in (10) and (11), C denotes a positive constant that is independent of M and k but depends on the length T.

Proof

Consider the function \(u_{n}:I_{k,n}\rightarrow \mathbb {R}\) such that \(u_n(t) = u(t)\) for all \(t \in I_{k,n}\). Then, from (4) and Lemma 2, for 0 ≤ r ≤ μ, we have

By setting r = 0 in (12), we obtain

where we have used (5) and Lemma 2. This completes the proof of (10). Furthermore, using (12) for 1 ≤ r ≤ μ and k ≥ 1, we get:

where we have used (4), (7), and Lemma 2. Therefore, we have proved (11). □
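Theorem 1 predicts that refining the resolution k reduces the best-approximation error even when the function has limited Sobolev regularity. The Python sketch below is an empirical illustration, not a proof. It assumes SciPy and computes the weighted \(L^2\) error of the best approximation of u(t) = t^{9/5} (the exact solution of Example 3) with M = 3 fixed and k = 1, …, 6. On each I_{k,n} the best approximation is the truncated Jacobi series in the local variable, so the wavelet normalization cancels and raw Jacobi polynomials suffice. The 200-node quadrature is an arbitrary choice made to resolve the behaviour of u near t = 0, and the function name `projection_error` is illustrative.

```python
import numpy as np
from scipy.special import roots_jacobi, eval_jacobi


def projection_error(u, k, M, T=1.0, nu=0.5, ga=0.5, n_quad=200):
    """Weighted L2 error of the best piecewise Jacobi (wavelet) approximation of u on [0, T).

    On each subinterval I_{k,n} the best approximation is the truncated Jacobi series in
    the local variable s in [-1, 1]; the wavelet normalization cancels, so raw P_m are used.
    """
    s, w = roots_jacobi(n_quad, nu, ga)
    P = np.array([eval_jacobi(m, nu, ga, s) for m in range(M + 1)])   # shape (M+1, n_quad)
    h = (P ** 2) @ w                                                    # h_m = ||P_m||^2
    err2 = 0.0
    for n in range(1, 2 ** (k - 1) + 1):
        vals = u(T * (s + 2 * n - 1) / 2 ** k)       # samples of u on I_{k,n}
        c = (P @ (w * vals)) / h                     # local Jacobi coefficients
        r = vals - c @ P                             # residual at the quadrature nodes
        err2 += (T / 2 ** k) * np.sum(w * r ** 2)    # contribution of I_{k,n}
    return np.sqrt(err2)


u = lambda t: t ** 1.8                               # exact solution of Example 3
errs = [projection_error(u, k, M=3) for k in range(1, 7)]
for k, (e1, e2) in enumerate(zip(errs, errs[1:]), start=1):
    print(f"k={k}: e(k)={e1:.3e}, e(k)/e(k+1)={e1 / e2:.2f}")
```

A scaling argument (u(t/2) = 2^{−9/5}u(t)) suggests that e(k)/e(k+1) should approach 2^{9/5+1/2} ≈ 4.9 for this particular function; this is a heuristic expectation, not a result stated in the paper.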

Remark 1

We can also obtain estimates for the Jacobi wavelet approximation in terms of the \(L^2\)-norm. With M ≥ μ − 1, if we combine (9) with (10), we get

4 Method of solution

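In this section, we propose a method for solving the VIE (1). To this end, we use a suitable change of variable to transform the interval of integration into [− 1, 1]. Suppose that

\[ x = \frac{t}{2}(s+1), \qquad s \in [-1,1]. \]

Therefore, (1) is transformed into the following integral equation:

\[ t^{\beta}u(t) = f(t) + \left(\frac{t}{2}\right)^{1-\alpha}\int_{-1}^{1}(1-s)^{-\alpha}\,\kappa\!\left(t,\frac{t}{2}(s+1)\right)u\!\left(\frac{t}{2}(s+1)\right)ds. \tag{13} \]

In order to compute the integral part of (13), we set ν = −α and γ = 0 as the Jacobi parameters and use the Gauss–Jacobi quadrature rule. Then, we have

\[ t^{\beta}u(t) \simeq f(t) + \left(\frac{t}{2}\right)^{1-\alpha}\sum_{l=1}^{N} w_l\,\kappa\!\left(t,\frac{t}{2}(s_l+1)\right)u\!\left(\frac{t}{2}(s_l+1)\right), \tag{14} \]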

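where \(s_l\) are the zeros of \(P_{N}^{(-\alpha ,0)}\) and the weights \(w_l\) are obtained from (2) as

\[ w_l = \frac{2^{1-\alpha}}{(1-s_l^{2})\left[\partial_s P_N^{(-\alpha,0)}(s_l)\right]^{2}}, \qquad l = 1,\dots,N. \]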

We approximate the solution of (1) in terms of the Jacobi wavelet functions as follows:

\[ u(t) \simeq U_{k,M}(t) = \sum_{i=1}^{2^{k-1}}\sum_{j=0}^{M} u_{i,j}\,\psi_{i,j}^{(\nu,\gamma)}(t), \tag{15} \]

where the Jacobi wavelet coefficients \(u_{i,j}\) are unknown. To determine these unknown coefficients, we substitute (15) into (14) and obtain

In this step, we define the following collocation points:

\[ t_{n,m} = \frac{T}{2^{k}}\left(\tau_m + 2n - 1\right), \qquad n = 1,2,\dots,2^{k-1}, \quad m = 0,1,\dots,M, \]

where \(\tau_m\), m = 0, 1, …, M, are the zeros of \(P_{M+1}^{(\nu ,\gamma )}\). Therefore, \(t_{n,m}\) are the shifted Gauss–Jacobi points in the interval \(\left (\frac {n-1}{2^{k-1}}T,\frac {n}{2^{k-1}}T\right )\), corresponding to the Jacobi parameters ν and γ. By collocating (16) at the points \(t_{n,m}\), we obtain

By taking n = 1, 2, …, \(2^{k-1}\) and m = 0, 1, …, M in the above equation, we obtain a system of linear algebraic equations that can be written in the following matrix form:

where

and the entries of the rows of the matrix A are the expressions in brackets in (17), which vary with i and j, i.e., the coefficients of \(u_{i,j}\), i = 1, 2, …, \(2^{k-1}\), j = 0, 1, …, M, at \(t_{n,m}\). Since each \(\psi_{i,j}^{(\nu,\gamma)}\) vanishes outside \(I_{k,i}\) and the integral in (1) involves only values of u on [0, t], the entries with i > n are zero, so A is block lower triangular. Moreover, since the functions \(\psi _{i,j}^{(\nu ,\gamma )}\) are orthonormal and the nodes \(t_{n,m}\) are pairwise distinct, the matrix A is nonsingular. Therefore, (18) is uniquely solvable. Solving this system by a direct method yields the unknown coefficients \(u_{i,j}\). Finally, an approximation of the solution of (1) is given by (15).
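To make the steps of this section concrete, here is a compact Python sketch of the collocation scheme. It is not the authors' code. It assumes SciPy; it builds the wavelets without their normalization constant, which only rescales the \(u_{i,j}\) and leaves \(U_{k,M}\) unchanged; it uses the change of variable x = t(s + 1)/2 with an N-point Gauss–Jacobi rule for the weight (1 − s)^{−α}; and it collocates at t_{n,m} = T(τ_m + 2n − 1)/2^k, where τ_m are the zeros of P_{M+1}^{(ν,γ)}. The test problem (α = β = 1/2, κ(t, x) = 0.25 x^{α+β−1}, u(t) = t^2) and the names `basis` and `solve_third_kind_vie` are hypothetical, chosen so that f has a closed form via the Beta function.

```python
import numpy as np
from scipy.special import roots_jacobi, eval_jacobi, beta as beta_fn


def basis(n, m, t, k, T, nu, ga):
    """Jacobi wavelet psi_{n,m} without its normalization constant (it only rescales u_{n,m})."""
    t = np.asarray(t, dtype=float)
    a, b = (n - 1) * T / 2 ** (k - 1), n * T / 2 ** (k - 1)
    s = 2 ** k * t / T - 2 * n + 1                      # local variable on I_{k,n}
    return np.where((t >= a) & (t < b), eval_jacobi(m, nu, ga, s), 0.0)


def solve_third_kind_vie(f, kappa, alpha, beta, k, M, T=1.0, nu=0.5, ga=0.5, N=10):
    """Collocation sketch for t^beta u(t) = f(t) + int_0^t (t - x)^(-alpha) kappa(t, x) u(x) dx."""
    idx = [(n, m) for n in range(1, 2 ** (k - 1) + 1) for m in range(M + 1)]
    tau, _ = roots_jacobi(M + 1, nu, ga)                # zeros of P_{M+1}^{(nu,ga)}
    s, w = roots_jacobi(N, -alpha, 0.0)                 # Gauss-Jacobi rule, weight (1 - s)^(-alpha)
    A = np.zeros((len(idx), len(idx)))
    F = np.zeros(len(idx))
    row = 0
    for n in range(1, 2 ** (k - 1) + 1):
        for tc in T * (tau + 2 * n - 1) / 2 ** k:       # collocation points t_{n,m}
            x = tc * (s + 1) / 2                        # x = t (s + 1) / 2 maps [-1, 1] to [0, t]
            kx = kappa(tc, x)
            for col, (i, j) in enumerate(idx):
                quad = np.sum(w * kx * basis(i, j, x, k, T, nu, ga))
                A[row, col] = (tc ** beta * basis(i, j, tc, k, T, nu, ga)
                               - (tc / 2) ** (1 - alpha) * quad)
            F[row] = f(tc)
            row += 1
    coef = np.linalg.solve(A, F)                        # dense direct solve of A U = F

    def U(t):
        return sum(c * basis(i, j, t, k, T, nu, ga) for c, (i, j) in zip(coef, idx))
    return U


# Hypothetical test problem: alpha = beta = 1/2, kappa(t, x) = lam * x^(alpha + beta - 1),
# exact solution u(t) = t^2, hence f(t) = (1 - lam * B(1 - alpha, alpha + beta + 2)) t^(beta + 2).
alpha, beta, lam = 0.5, 0.5, 0.25
f = lambda t: (1 - lam * beta_fn(1 - alpha, alpha + beta + 2)) * t ** (beta + 2)
kappa = lambda t, x: lam * x ** (alpha + beta - 1)
U = solve_third_kind_vie(f, kappa, alpha, beta, k=3, M=3)
tt = np.linspace(0.0, 0.999, 200)
print("max error:", np.max(np.abs(U(tt) - tt ** 2)))
```

Because u(t) = t^2 lies in the approximation space for M ≥ 2 and the quadrature is exact for it, the exact coefficients satisfy the discrete system, so the printed error should be at rounding level. For non-polynomial solutions such as those of Section 6, the error is governed by k, M, and N instead.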

5 A criterion for choosing the number of wavelets

Now we discuss the choice of adequate values of k and M (i.e., of the number of basis functions). To do this, we suppose that \(u(\cdot) \in C^{2N}([0, T])\) and \(\kappa(\cdot,\cdot) \in C^{2N}([0, T] \times [0, T])\). Using the error of the Gauss–Jacobi quadrature rule given by (3), with ν = −α and γ = 0, we find that the exact solution of (1) satisfies the equation

where

for η ∈ (0, T). Therefore, we have

where

Suppose that t ≠ 0. Then we obtain

Let \(U_{k,M}(t)=\sum \limits _{i=1}^{2^{k-1}}\sum \limits _{j=0}^{M}u_{i,j} \psi _{i,j}^{(\nu ,\gamma )}(t)\) be the numerical solution of (1) obtained by the method proposed in Section 4. From the definition of the Jacobi wavelets, for the collocation points \(t_{n,m}\), n = 1, …, \(2^{k-1}\), m = 0, 1, …, M, we have

By definition, the restriction of the functions \(\psi _{n,j}^{(\nu ,\gamma )}(t)\), n = 1, …, \(2^{k-1}\), to the subinterval \(I_{k,n}\), which we denote here by \(\rho _{n,j}^{(\nu ,\gamma )}(t)\), is smooth. Therefore, we can define

For a given ε > 0, since all the collocation points \(t_{n,m}\), n = 1, …, \(2^{k-1}\), m = 0, 1, …, M, are positive, we can use (19) to choose k and M such that, for all \(t_{n,m}\), the following criterion holds:

6 Numerical examples

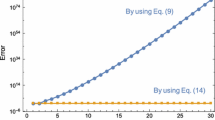

In this section, we consider three examples of VIEs of the third kind and apply the proposed method to them. The weighted \(L^2\)-norm of the error is used to measure the accuracy of the method. In all the examples, we have used N = 10 in the Gauss–Jacobi quadrature formula, and the following notation is used to show the convergence of the method:

where e(k) is the \(L^2\)-error obtained with resolution k.

Example 1

As the first example, we consider the following third-kind VIE, which is an equation of Abel type [1, 4]:

where

The exact solution of this equation is \(u(t)=t^{\frac {13}{4}}\), which belongs to the space \(H^3([0,1])\). We have applied the method to this example with different values of M, k, ν, and γ, and reported the results in Tables 1 and 2 and Fig. 1. Table 1 displays the weighted \(L^2\)-norm of the error for three choices of the Jacobi parameters, namely ν = γ = 0.5 (second-kind Chebyshev wavelets), ν = γ = 0 (Legendre wavelets), and ν = γ = − 0.5 (first-kind Chebyshev wavelets), with different values of M and k. The error ratio, as a function of k, is also given in this table. It can be seen from Table 1 that the method converges fastest with the second-kind Chebyshev wavelets. In Table 2, we compare the maximum absolute error at the collocation points obtained by our method with the error of the collocation method introduced in [1] and of the operational matrix method based on adjusted hat functions [4]. This table shows that our method gives more accurate results with fewer collocation points (192) than the method of [1] (N = 256), and higher accuracy with fewer basis functions (192) than the method of [4] (193). In Fig. 1, we show the error function obtained by the method based on the second-kind Chebyshev wavelets with M = 6, k = 4 (left) and M = 6, k = 6 (right).

Example 2

Consider the following third-kind VIE, which is used in the modelling of some heat conduction problems with mixed-type boundary conditions [1, 4]:

This equation has the exact solution \(u(t)=t^{\frac {5}{2}}\) (\(u \in H^2([0,1])\)). The numerical results are given in Tables 3 and 4 and Fig. 2. The \(L^2\)-norms and error ratios given in Table 3 confirm the superiority of the second-kind Chebyshev wavelets over the Legendre wavelets and first-kind Chebyshev wavelets. The method converges more slowly in this example than in the previous one, as expected from the lower regularity of the solution. A comparison between the maximum absolute error at the collocation points of the present method and of the methods given in [1] and [4] is presented in Table 4. Moreover, the error functions for the second-kind Chebyshev wavelets with M = 6, k = 4 and M = 6, k = 6 are shown in Fig. 2.

Example 3

Consider the following VIE of the third kind:

where

This equation has the exact solution \(u(t)=t^{\frac {9}{5}}\) (\(u \in H^1([0,1])\)). The numerical results for this example are displayed in Table 5 and Fig. 3; they confirm the higher accuracy of the second-kind Chebyshev wavelet method compared with the Legendre and first-kind Chebyshev wavelet methods. Since the exact solution in this case is less smooth than in the previous examples, the method converges more slowly.

7 Concluding remarks

In this work, a numerical method based on Jacobi wavelets has been introduced for solving a class of Volterra integral equations of the third kind. First, the Jacobi wavelet functions have been introduced, and some error bounds have been presented for the best approximation of a given function by Jacobi wavelets. A numerical method based on Jacobi wavelets, together with the Gauss–Jacobi quadrature formula, has then been proposed for solving Volterra integral equations of the third kind. A criterion has been introduced for choosing the number of basis functions needed to reach a specified accuracy. Numerical results have been included to show the applicability and high accuracy of the new technique. In the examples considered, the new method is more accurate than the methods of [1] and [4] for this class of equations.

References

[1] Allaei, S.S., Yang, Z.-W., Brunner, H.: Collocation methods for third-kind VIEs. IMA J. Numer. Anal. 37(3), 1104–1124 (2017)

[2] Vainikko, G.: Cordial Volterra integral equations I. Numer. Funct. Anal. Optim. 30(9-10), 1145–1172 (2009)

[3] Vainikko, G.: Cordial Volterra integral equations 2. Numer. Funct. Anal. Optim. 31(1-3), 191–219 (2010)

[4] Nemati, S., Lima, P.M.: Numerical solution of a third-kind Volterra integral equation using an operational matrix technique. In: 2018 European Control Conference (ECC), Limassol, pp. 3215–3220 (2018)

[5] Sidi Ammi, M.R., Torres, D.F.M.: Analysis of fractional integro-differential equations of thermistor type. In: Kochubei, A., Luchko, Y. (eds.) Handbook of Fractional Calculus with Applications, Vol. 1, pp. 327–346. De Gruyter, Berlin, Boston (2019)

[6] Grossmann, A., Morlet, J.: Decomposition of Hardy functions into square integrable wavelets of constant shape. SIAM J. Math. Anal. 15(4), 723–736 (1984)

[7] Grossmann, A., Morlet, J., Paul, T.: Transforms associated to square integrable group representations. J. Math. Phys. 26, 2473–2479 (1985)

[8] Daubechies, I., Lagarias, J.C.: Two-scale difference equations. II. Local regularity, infinite products of matrices and fractals. SIAM J. Math. Anal. 23(4), 1031–1079 (1992)

[9] Mallat, S.: A theory of multiresolution signal decomposition: the wavelet representation. IEEE Trans. Pattern Anal. Mach. Intell. 11(7), 674–693 (1989)

[10] Daubechies, I.: Orthonormal bases of compactly supported wavelets. Comm. Pure Appl. Math. 41(7), 909–996 (1988)

[11] Li, X.: Numerical solution of fractional differential equations using cubic B-spline wavelet collocation method. Commun. Nonlinear Sci. Numer. Simul. 17(10), 3934–3946 (2012)

[12] Chen, Y.M., Yi, M.X., Yu, C.X.: Error analysis for numerical solution of fractional differential equation by Haar wavelets method. J. Comput. Sci. 3(5), 367–373 (2012)

[13] Li, Y.: Solving a nonlinear fractional differential equation using Chebyshev wavelets. Commun. Nonlinear Sci. Numer. Simul. 15(9), 2284–2292 (2010)

[14] Jafari, H., Yousefi, S.A., Firoozjaee, M.A., Momani, S., Khalique, C.M.: Application of Legendre wavelets for solving fractional differential equations. Comput. Math. Appl. 62(3), 1038–1045 (2011)

[15] Rahimkhani, P., Ordokhani, Y., Babolian, E.: Numerical solution of fractional pantograph differential equations by using generalized fractional-order Bernoulli wavelet. J. Comput. Appl. Math. 309, 493–510 (2017)

[16] Nemati, S., Lima, P.M., Sedaghat, S.: Legendre wavelet collocation method combined with the Gauss–Jacobi quadrature for solving fractional delay-type integro-differential equations. Appl. Numer. Math. 149, 99–112 (2020)

[17] Boggess, A., Narcowich, F.J.: A First Course in Wavelets with Fourier Analysis, 2nd edn. John Wiley & Sons, Hoboken, NJ (2009)

[18] Walnut, D.F.: An Introduction to Wavelet Analysis. Applied and Numerical Harmonic Analysis. Birkhäuser, Boston, MA (2002)

[19] Shen, J., Tang, T., Wang, L.-L.: Spectral Methods, Springer Series in Computational Mathematics, vol. 41. Springer, Heidelberg (2011)

[20] Pang, G., Chen, W., Sze, K.Y.: Gauss–Jacobi-type quadrature rules for fractional directional integrals. Comput. Math. Appl. 66(5), 597–607 (2013)

[21] Canuto, C., Hussaini, M.Y., Quarteroni, A., Zang, T.A.: Spectral Methods, Scientific Computation. Springer-Verlag, Berlin (2006)

Acknowledgment

Pedro M. Lima acknowledges support from Fundação para a Ciência e a Tecnologia (FCT, the Portuguese Foundation for Science and Technology) through the grant UIDB/04621/2020 (CEMAT); Delfim F. M. Torres was supported by FCT within project UIDB/04106/2020 (CIDMA).


Cite this article

Nemati, S., Lima, P.M. & Torres, D.F.M. Numerical solution of a class of third-kind Volterra integral equations using Jacobi wavelets. Numer Algor 86, 675–691 (2021). https://doi.org/10.1007/s11075-020-00906-9
