Abstract

In this paper, we introduce a modified Halpern algorithm for approximating a common solution of split equality convex minimization problem and split-equality fixed-point problem for Bregman quasi-nonexpansive mappings in p-uniformly convex and uniformly smooth Banach spaces. We introduce a generalized step size such that the algorithm does not require a prior knowledge of the operator norms and prove a strong convergence theorem for the sequence generated by our algorithm. We give some applications and numerical examples to show the consistency and accuracy of our algorithm. Our results complement and extend many other recent results in this direction in literature.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Let \(E_1\) and \(E_2\) be Banach spaces and let C and Q be nonempty closed convex subsets of \(E_1\) and \(E_2\), respectively. We denote the dual of \(E_1\) and \(E_2\) by \(E_1^*\) and \(E_2^*\), respectively. Let \(A{:}\,E_1\rightarrow E_2\) ba a bounded linear operator. The split feasibility problem (SFP) can be formulated as:

The notion of SFP was first introduced by Censor and Elfving (1994) in the framework of Hilbert spaces for modeling inverse problems which arise from phase retrievals and medical image reconstruction. The SFP has attracted much attention due to its applications in modeling real-world problems such as inverse problem in signal processing, radiation therapy, data denoising and data compression (see Ansari and Rehan 2014; Bryne 2002; Censor et al. 2005, 2006; Mewomo and Ogbuisi 2018; Shehu and Mewomo 2016 for details). A very popular algorithm constructed to solve the SFP in real Hilbert spaces was the following CQ-algorithm proposed by Bryne (2002). Let \(x_1\in C\) and compute

where \(A^*\) is the adjoint of A, \(P_C\) and \(P_Q\) are the metric projections of C and Q, respectively, \(\mu \in (0, \frac{2}{\lambda })\) with \(\lambda \) being the spectral radius of the operator \(A^*A\). The sequence generated by (1.2) was shown to converge weakly to a solution of the SFP (1.1).

Schöpfer et al. (2008) studied the problem (1.1) in p-uniformly convex real Banach spaces which are also uniformly smooth and proposed the following algorithm: for \(x_1\in E_1\), set

where \(\Pi _C\) denotes the Bregman projection from \(E_1\) onto C and \(J^E\) is the duality mapping. The algorithm (1.3) generalizes the CQ-algorithm proposed by Bryne (2002). For several extensions of the CQ-algorithm and work on the SFP, please see Bryne (2004), Qu and Xiu (2005) and Yang (2004) and the references therein.

Moudafi and Thakur (2014) studied the proximal split minimization problem (PSMP) as generalization of SFP in real Hilbert spaces. Moudafi and Thakur (2014) considered finding a solution \(x^*\in H_1\) of the problem

where \(f{:}\,H_1\rightarrow {\mathbb {R}}\cup \{+\infty \}\), \(g{:}\,H_2\rightarrow {\mathbb {R}}\cup \{+\infty \}\) are two proper, convex, lower-semicontinuous functions and \(g_\mu (x) =\min _{u\in H_2}\{g(u)+\frac{1}{2\mu }\Vert u-x\Vert ^2\}\) stands for the Moreau–Yosida approximate of the function g with respect to the parameter \(\mu \).

By the differentiability of Yosida-approximate \(g_\mu \), (1.4) can be formulated as: find \(x^*\in H_1\) such that

where \(\mathrm{prox}_{\mu }g(x) = \arg \min \{g(u)+\frac{1}{2\mu }\Vert u -x\Vert ^2\}\) is the proximal mapping of g and \(\partial f(x)\) is the subdifferential of f at x defined as

The inclusion (1.5) in turn yields the following equivalent fixed-point formulation

Thus, (1.6) suggests to consider the following split proximal algorithm.

Now, let \(T{:}\,H \rightarrow H\) be a nonlinear operator, a point \(x \in H\) is called a fixed point of T if \(Tx = x\). The set of all fixed points of T is denoted by F(T). Let \(H_1, H_2\) be real Hilbert spaces, \(T_1{:}\,H_1 \rightarrow H_1\), \(T_2{:}\,H_2 \rightarrow H_2\) be two nonlinear operators such that \(F(T_1)\) and \(F(T_2)\) are nonempty. The split common fixed point problem (SCFPP) is defined as:

where \(A{:}\,H_1 \rightarrow H_2\) is a bounded linear operator. The SCFPP was first studied by Censor and Segal (2009) in the setting of Hilbert spaces for the case where \(T_1\) and \(T_2\) are nonexpansive mappings. They proposed the following algorithm and proved its weak convergence to a solution of (1.7) under some suitable conditions.

where \(\gamma \in (0, \frac{2}{\lambda })\) with \(\lambda \) being the spectral radius of the operator \(A^*A\). Moudafi (2011) studied the SCFPP in infinite-dimensional Hilbert spaces. Moudafi (2011) proposed the following algorithm (1.8) and obtained a weak convergence theorem for finding solution of (1.7) for quasi-nonexpansive mappings.

where \(\gamma \in (0, \frac{1}{\lambda \beta })\) for \(\beta \in (0, 1)\) and \(\lambda \) being the spectral radius of the operator \(A^*A\).

Let \(H_1\), \(H_2\) and \(H_3\) be real Hilbert spaces and let C, Q be nonempty, closed and convex subsets of \(H_1\) and \(H_2\), respectively. Let \(A{:}\,H_1\rightarrow H_3\), \(B{:}\,H_2\rightarrow H_3\) be bounded linear operators, the split equality fixed point problem (SEFPP) is defined as

where \(T_1{:}\,H_1\rightarrow H_1\) and \(T_2{:}\,H_2\rightarrow H_2\) are nonlinear mappings on \(H_1\) and \(H_2\), respectively. The SEFPP allows asymmetric and partial relations between the variables x and y. It has applications in phase retrievals, decision sciences, medical image reconstruction and intensity-modulated radiation therapy. If in (1.9), \(H_2 = H_3\) and \(B =I\), the identity mapping, then SEFPP (1.9) reduces to the SCFPP (1.7). The SEFPP was introduced by Moudafi (2012) in the framework of Hilbert spaces for firmly nonexpansive operators. To solve this problem, Moudafi (2012) proposed the following alternating algorithm:

where \(\{\gamma _n\}\) is a positive non-decreasing sequence such that \(\gamma _n\in \big (\epsilon , \min (\frac{1}{\lambda _A}, \frac{1}{\lambda _B})-\epsilon )\), \(\lambda _A\), \(\lambda _B\) stand for the spectral radii of \(A^*A\) and \(B^*B\), respectively, \(I - T_1\) and \(I - T_2\) are demiclosed at 0. It was established that the iterative scheme (1.10) converges weakly to a solution of (1.9) in Hilbert spaces.

Motivated by the work of Moudafi (2012), Moudafi and Al-Shemas (2013) studied the SEFPP (1.9) in the setting of Hilbert spaces and proposed the following simultaneous algorithm:

where \(\{\gamma _n\}\subset (\epsilon , \frac{2}{\lambda _A+\lambda _B}-\epsilon )\). They proved that the iterative scheme (1.11) converges weakly to a solution of problem (1.9).

Ma et al. (2013) also proposed the following algorithm for solving the SEFPP (1.9) in Hilbert spaces:

where \(\{\gamma _n\}\subset (\epsilon , \frac{2}{\lambda _A+\lambda _B}-\epsilon )\), \(\{\alpha _n\}\subset [ \alpha , 1]\) for some \(\alpha > 0\) and \(U{:}\,H_1\rightarrow H_1\) and \(T{:}\,H_2\rightarrow H_2\) T are firmly quasi-nonexpansive mappings. They proved strong and weak convergence theorems for the iterative scheme under some mild conditions.

Note that algorithms (1.10)–(1.12) depend on a prior knowledge of the operator norms for their implementation. To overcome this difficulty, Zhao (2015) introduced a new algorithm with a way of selecting the stepsize such that its implementation does not require prior knowledge of the operator norms. In particular, Zhao (2015) proposed the following iterative method: choose initial guess \(x_0\in H_1, y_0\in H_2\),

where \(\alpha _k\in [0, 1], \beta _k\in [0, 1]\), and

\(n\in \Omega \) otherwise \(\gamma _n = \gamma ~(\gamma ~ \text {being any nonnegative value})\), where the set of indexes \(\Omega = \{n{:}\,Ax_n - By_n\ne 0\}\).

We note that while there are many literature on solving the SEFPP in Hilbert spaces there are only few literature on SEFPP in Banach spaces. Our aim in this paper is to study the SEFPP in the setting of other Banach spaces higher than the Hilbert spaces.

Let \(E_1,\) \(E_2\) and \(E_3\) be p-uniformly convex and uniformly smooth Banach spaces and let \(f{:}\,E_1\rightarrow {\mathbb {R}}\cup \{+\,\infty \}\) and \(g{:}\,E_2\rightarrow {\mathbb {R}}\cup \{+\,\infty \}\) be proper, convex and lower semicontinuous functions. Let \(T_1{:}\,E_1\rightarrow E_1\) and \(T_2{:}\,E_2\rightarrow E_2 \) be Bregman quasi-nonexpansive mappings. In this paper, we consider the following split equality convex minimization problem (SECMP) and fixed point problem:

where \(A{:}\,E_1\rightarrow E_3\) and \(B{:}\,E_2\rightarrow E_3\) are bounded linear operators.

It is important to consider the problem of finding a common solution of SECMP and SEFPP due to its possible applications to mathematical models whose constraints can be expressed as SECMP and SEFPP. This happens, in particular, in the practical problems such as in signal processing, network resource allocation, image recovery, see for instance Iiduka (2012, 2015) and Maingé (2008b).

We denote the set of solutions of problem (1.14) by \(\Omega \). We introduce a modified Halpern iterative algorithm with a generalized stepsize so that the implementation of our algorithm does not require prior knowledge of the operator norms. We prove a strong convergence result and give some applications of our result to other nonlinear problems. Finally, we present a numerical example to show the efficiency and accuracy of our algorithm. This result also extends the result of Mewomo et al. (2018) from Hilbert to Banach spaces settings.

2 Preliminaries

In this section, we give some preliminaries, definitions and results which will be needed in the sequel. We denote by ‘\(x_n \rightharpoonup x\)’ and ‘\(x_n \rightarrow x\)’, the weak and the strong convergence of \(\{x_n\}\) to a point x, respectively.

Let E be a real Banach space with the norm \(\Vert \cdot \Vert \) and \(E^*\) be the dual with the norm \(\Vert \cdot \Vert _*\). We denote the value of the functional \(j\in E^*\) at \(x\in E\) by \(\langle x, j\rangle \). Let \(1<q\le 2\le p\) with \(\frac{1}{p}+\frac{1}{q} = 1\). The modulus of convexity of E is the function \(\delta _E(\epsilon ){:}\,(0, 2]\rightarrow [0, 1]\) defined by

E is said to be uniformly convex if \(\delta _{E}(\epsilon )>0\) and p-uniformly convex if there exists a \(C_p > 0\) such that \(\delta _{E}(\epsilon )\ge C_p\epsilon ^p\), for any \(\epsilon \in (0, 2]\). The \(L_p\) spaces, \(1<p<\infty \) are uniformly convex. A uniformly convex Banach space is strictly convex and reflexive. Also, the modulus of smoothness of E is the function \(\rho _{E}{:}\,[0, \infty )\rightarrow [0, \infty )\) defined by

E is called uniformly smooth if \(\lim _{\tau \rightarrow 0^+}\frac{\rho _{E}(\tau )}{\tau }=0\) and q-uniformly smooth if there exists \(C_q>0\) such that \(\rho _{E}(\tau )\le C_q\tau ^q\). Every uniformly smooth space Banach is smooth and reflexive.

A continuous strictly increasing function \(\phi {:}\,{\mathbb {R}}^+\rightarrow {\mathbb {R}}^+\) such that \(\phi (0)=0\) and \(\lim _{t\rightarrow \infty }\phi (t) = \infty \) is called a gauge function. Given a gauge function \(\phi \), the mapping \(J^E_{\phi }{:}\,E\rightarrow 2^{E^*}\) defined by

is called the duality mapping with gauge function \(\phi \). It is known (see Chidume 2009) that \(J^E_{\phi }(x)\) is nonempty for any \(x\in E\). In the particular case where \(\phi (t) = t\), the duality map \(J = J_{\phi }\) is called the normalized duality map. If \(\phi (t) = t^{p-1}\) where \(p>1\), the duality mapping \(J^E_{\phi } = J^E_p\) is called the generalized duality mapping from E to \(2^{E^*}\). Let \(\phi \) be a gauge function and \(f(t) = \int ^{t}_{0}\phi (s)\mathrm{d}s\), then f is a convex function on \({\mathbb {R}}^+\).

It is known that when E is uniformly smooth, then \(J^E_p\) is norm to norm uniformly continuous on bounded subsets of E and E is smooth if and only if \(J^E_p\) is single valued (see Chidume 2009).

If E is p-uniformly convex and uniformly smooth, then \(E^*\) is q-uniformly smooth and uniformly convex. This then implies that the duality mapping \(J^{E}_p\) is one-to-one, single valued and satisfies \(J^{E}_p = (J^{E^*}_q)^{-1}\) (see, e.g. Chidume 2009; Cioranescu 1990).

Xu and Roach (1991) proved the following inequality for q-uniformly smooth Banach spaces.

Lemma 2.1

Let \(x, y\in E\). If E is a q-uniformly smooth Banach space, then there exists a \(C_q>0\) such that

Definition 2.2

(Bauschke et al. 2003; Bauschke and Combettes 2011) A function \(f{:}\,E\rightarrow {\mathbb {R}}\cup \{+\infty \}\) is said to be

-

(1)

proper if its effective domain \(D(f) = \{x\in E{:}\,f(x)<+\infty \}\) is nonempty,

-

(2)

convex if \(f(\lambda x+(1-\lambda )y)\le \lambda f(x)+(1-\lambda )f(y)\) for every \(\lambda \in (0, 1)\), \(x, y \in D(f),\)

-

(3)

lower semicontinuous at \(x_0\in D(f)\) if \(f(x_0)\le \lim _{x\rightarrow x_0}\inf f(x)\).

Let \(x\in \mathrm{int}(\mathrm{dom}\,f)\), for any \(y\in E\), the directional derivative of f at x denoted by \(f^0(x,y)\) is defined by

If the limit at \(t\rightarrow 0^+\) in (2.1) exists for each y, then the function f is said to be directionally differentiable at x.

Let \(f{:}\,E\rightarrow {\mathbb {R}}\cup \{+\infty \}\) be a proper, convex and lower semicontinuous function and \(x\in \mathrm{int dom}(f)\). The subdifferential of f at x is the convex set defined by

and the Fenchel conjugate of f is the function \(f^*{:}\,E^*\rightarrow {\mathbb {R}}\cup \{+\infty \}\) defined by

Given a directionally differentiable function f, the bifunction \(\Delta _f{:}\,\text {dom}\,f \times \text {int dom}\,f\rightarrow [0, +\,\infty )\) defined by

where \(\langle \nabla f(x), y \rangle = f^0(x,y)\) is called the Bregman distance with respect to f. Note that \(\Delta _f(y, x)\ge 0\) (see Bauschke et al. 2003). In general, the Bregman distance is not a metric due to the fact that it is not symmetric. However, it possesses some distance-like properties. From (2.2), one can show that the following equality called three-point identity is satisfied:

In addition, if \(f(x) = \frac{1}{p}\Vert x\Vert ^p\), where \(\frac{1}{p}+\frac{1}{q}=1\), then we have

The Bregman projection

is the unique minimizer of the Bregman distance (see Schöpfer 2007). It can be characterized by the variational inequality:

We associate with \(f(x) = \frac{1}{p}\Vert x\Vert ^p\), the function \(V_p{:}\,E\times E^*\rightarrow [0, +\infty )\) defined by

\(V_p(x, {\bar{x}})\ge 0\) follows from Young’s inequality and the following properties are satisfied (see Kohsaka and Takahashi 2005; Phelps 1993):

Also, \(V_p\) is convex in the second variable. Thus, for all \(z\in E\)

A point \(x^*\in C\) is called an asymptotic fixed points of T if C contains a sequence \(\{x_n\}\) which converges weakly to \(x^*\) such that \(\lim _{n\rightarrow \infty }\Vert x_n - Tx_n\Vert =0\). The set of asymptotic fixed points of T will be denoted by \({\hat{F}}(T)\).

Definition 2.3

(Reich and Sabach 2010b, 2011) A mapping \(T{:}\,C\rightarrow E\) is said to be

-

(1)

nonexpansive if \(\Vert Tx - Ty\Vert \le \Vert x - y\Vert \) for each \(x, y \in C\),

-

(2)

quasi-nonexpansive if \(F(T)\ne \emptyset \) and \(\Vert Tx - Ty^*\Vert \le \Vert x - y^*\Vert \) for each \(x\in C\) and \(y^*\in F(T)\),

-

(3)

Bregman nonexpansive if

$$\begin{aligned} \Delta _p(Tx, Ty)\le \Delta _p(x, y)\quad \forall x, y\in C, \end{aligned}$$ -

(4)

Bregman quasi-nonexpansive if \(F(T)\ne \emptyset \) and

$$\begin{aligned} \Delta _p(Tx, y^*)\le \Delta _p(x, y^*)\quad \forall x \in C, y^*\in F(T), \end{aligned}$$ -

(5)

Bregman relative nonexpansive if \(F(T)\ne \emptyset \), \({\hat{F}}(T)=F(T)\) and

$$\begin{aligned} \Delta _p(Tx, y^*)\le \Delta _p(x, y^*)\quad \forall x \in C, y^*\in F(T), \end{aligned}$$ -

(6)

Bregman firmly nonexpansive if for all \(x, y\in C,\)

$$\begin{aligned} \Delta _p(Tx, Ty)+\Delta _p(Ty, Tx)+\Delta _p(Tx, x)+\Delta _p(Ty, y)\le \Delta _p(Tx, y)+\Delta _p(Ty, x). \end{aligned}$$

From these definitions, it is evident that the class of Bregman quasi-nonexpansive contains the class of Bregman relative nonexpansive, the class of Bregman firmly nonexpansive and the class of Bregman nonexpansive mapping with \(F(T)\ne \emptyset \).

Let E be a p-uniformly convex and uniformly smooth real Banach space and \(f{:}\,E\rightarrow {\mathbb {R}}\cup \{+\,\infty \}\) be a proper, convex and lower semicontinuous function. The proximal mapping associated with f with respect to the Bregman distance is defined as

Bauschke et al. (2003) explore some important properties of the operator \(\mathrm {prox}_{\gamma f}\). We note from Bauschke et al. (2003) that \(\text {dom prox}_{\gamma f}\subset \text {int dom}\phi \) and \(\text {ran prox}_{\gamma f}\subset \mathrm {dom}\phi \cap \mathrm {dom}\,f\), where \(\phi (x) = \frac{1}{p}\Vert x\Vert ^p\) and it is convex and Gâteaux differentiable. Furthermore, if \(\text {ran prox}_{\gamma f}\subset \text {int dom}\phi \), then \(\mathrm{prox}_{\gamma f}\) is Bregman firmly nonexpansive and single-valued on its domain if \(\text {int dom}\phi \) is strictly convex. The set of fixed points of \(\mathrm{prox}_{\gamma f}\) is indeed the set of minimizers of f (see Bauschke et al. 2003 for more details). Throughout this paper, we shall assume that \(\text {ran prox}_{\gamma f}\subset \text {int dom}\phi \).

The following result can be obtained from Jolaoso et al. (2017, Lemma 2.18).

Lemma 2.4

(Jolaoso et al. 2017) Let E be a p-uniformly convex Banach space which is uniformly smooth. Let \(f{:}\,E \rightarrow {\mathbb {R}}\cup \{+\infty \}\) be a proper, convex and lower semicontinuous function and let \(\mathrm{prox}_{\gamma f}{:}\,E \rightarrow E\) be the proximal operator associated with f for \(\gamma >0\), then the following inequality holds: for all \(x \in E\) and \( z \in F(\mathrm{prox}_{\gamma f})\), we have

The following results will be needed to establish our main theorem.

Lemma 2.5

(Naraghirad and Yao 2013) Let E be a smooth and uniformly convex real Banach space. Let \(\{x_n\}\) and \(\{y_n\}\) be two sequences in E. Then \(\lim _{n\rightarrow \infty }\Delta _p(x_n, y_n) = 0\) if and only if \(\lim _{n\rightarrow \infty }\Vert x_n - y_n\Vert = 0\).

Lemma 2.6

(Xu 2002) Let \(\{a_n\}\) be a sequence of nonnegative real numbers satisfying the following relation:

where \(\{\alpha _n\}\subset (0, 1) \) and \(\{\delta _n\}\subset {\mathbb {R}}\) such that \(\sum ^{\infty }_{n=1}\alpha _n = \infty \), and \(\limsup _{n\rightarrow \infty }\delta _n\le 0\). Then \(\lim _{n\rightarrow \infty }a_n=0\).

Lemma 2.7

(Maingé 2008a) Let \(\{a_n\}\) be sequence of real numbers such that there exists a subsequence \(\{n_i\}\) of \(\{n\}\) with \(a_{n_i}< a_{n_{i}+1}\) for all \(i\in {\mathbb {N}}\). Then, there exists an increasing sequence \(\{m_k\}\subset {\mathbb {N}}\) such that \(m_k\rightarrow \infty \) and the following properties are satisfied by all (sufficiently large) numbers \(k\in {\mathbb {N}}\):

In fact, \(m_k\) is the largest number n in the set \(\{1, 2, \ldots k\}\) such that the condition \(a_n\le a_{n+1}\) holds.

Lemma 2.8

(Xu and Roach 1991) Let \(q\ge 1\) and \(r>0\) be two fixed real numbers. Then, a Banach space E is uniformly convex if and only if there exists a continuous, strictly increasing and convex function \(g{:}\,{\mathbb {R}}^+\rightarrow {\mathbb {R}}^+\), \(g(0)=0\) such that for all \(x, y\in B_r\) and \(0\le \lambda \le 1\),

where \(W_q(\lambda ):=\lambda ^q(1-\lambda )+\lambda (1-\lambda )^q\) and \(B_r:=\{x\in E{:}\,\Vert x\Vert \le r\}.\)

3 Main results

In this section, we present a modified Halpern algorithm for solving (1.14) where \(T_1\) and \(T_2\) are Bregman quasi-nonexpansive mappings.

Theorem 3.1

Let \(E_1, E_2\) and \(E_3\) be p-uniformly convex real Banach spaces which are also uniformly smooth. Let C and Q be nonempty closed, convex subsets of \(E_1\) and \(E_2\), respectively, \(A{:}\,E_1\rightarrow E_3\) and \(B{:}\,E_2\rightarrow E_3\) be bounded linear operators. Let \(f{:}\,E_1\rightarrow {\mathbb {R}}\cup \{+\infty \}\) and \(g{:}\,E_2\rightarrow {\mathbb {R}}\cup \{+\infty \}\) be proper, convex and lower semicontinuous functions, \(T_1{:}\,E_1\rightarrow E_1\) and \(T_2{:}\,E_2\rightarrow E_2 \) be Bregman quasi-nonexpansive mappings such that \(\Omega \ne \emptyset \). For fixed \(u \in E_1\) and \(v \in E_2\), choose an initial guess \((x_1,y_1) \in E_1 \times E_2\) and let \(\{\alpha _n\} \subset [0,1]\). Assume that the nth iterate \((x_n,y_n) \subset E_1 \times E_2\) has been constructed; then we compute the \((n+1)th\) iterate \((x_{n+1},y_{n+1})\) via the iteration :

for \(n \ge 1\), \(\{\beta _n\}, ~ \{\delta _n\} \subset (0,1)\), where \(A^*\) is the adjoint operator of A. Further, we choose the stepsize \(\gamma _n\) such that if \(n \in \Gamma := \{n{:}\,Ax_n - B y_n \ne 0 \}\), then

where \(C_q\) and \(D_q\) are constants of smoothness of \(E_1\) and \(E_2\), respectively. Otherwise, \(\gamma _{n} = \gamma \) \((\gamma \) being any nonnegative value). Then \(\{x_n\}\) and \(\{y_n\}\) are bounded.

Proof

Let \((x,y) \in \Omega \), using Lemma 2.1, (2.3) and the Bregman firmly nonexpansivity of prox operators, we have

Following similar to the argument as in (3.3), we have

Adding (3.3) and (3.4) and noting that \(Ax = By\), we obtain

From the choice of \(\gamma _n\) (3.2), we have that

Thus from (3.1) and (3.6), we get

Thus, the last inequality implies that \(\{x_n\}\) and \(\{y_n\}\) are bounded. Consequently, \(\{u_n\}\), \(\{v_n\}\), \(\{T_1u_n\}\) and \(\{T_2v_n\}\) are bounded. \(\square \)

Theorem 3.2

Let \(E_1, E_2\) and \(E_3\) be p-uniformly convex real Banach spaces which are also uniformly smooth. Let C and Q be nonempty closed, convex subsets of \(E_1\) and \(E_2\), respectively, \(A{:}\,E_1\rightarrow E_3\) and \(B{:}\,E_2\rightarrow E_3\) be bounded linear operators. Let \(f{:}\,E_1\rightarrow {\mathbb {R}}\cup \{+\infty \}\) and \(g{:}\,E_2\rightarrow {\mathbb {R}}\cup \{+\infty \}\) be proper, convex and lower semicontinuous functions, \(T_1{:}\,E_1\rightarrow E_1\) and \(T_2{:}\,E_2\rightarrow E_2 \) be Bregman quasi-nonexpansive mappings such that \(F(T_i) = {\hat{F}}(T_i)\), \(i=1, 2\) and \(\Omega \ne \emptyset \). For fixed \(u \in E_1\) and \(v \in E_2\), choose an initial guess \((x_1,y_1) \in E_1 \times E_2\) and let \(\{\alpha _n\} \subset [0,1]\). Suppose \((\{x_n\},\{y_n\})\) is generated by algorithm (3.1) and the following conditions are satisfied:

-

(i)

\(\lim _{n\rightarrow \infty }\alpha _n = 0,\) and \(\sum _{n =0}^\infty \alpha _n = \infty \),

-

(ii)

\(0< a \le \liminf _{n\rightarrow \infty }\beta _n \le \limsup _{n\rightarrow \infty }\beta _n <1,\)

-

(iii)

\(0< b \le \liminf _{n\rightarrow \infty }\delta _n \le \limsup _{n\rightarrow \infty }\delta _n <1.\)

Then the sequence \(\{(x_n,y_n)\}\) converges strongly to \((x^*,y^*) = (\Pi _\Omega u, \Pi _\Omega v).\)

Proof

Let \((x,y)\in \Omega \). Then from (2.4) and (2.5), we have

Similarly for \(\Delta _p(y, y_{n+1})\), we obtain

Thus, from (3.5), (3.7) and (3.8), we obtain that

To this end, let \(\Gamma _n = \Delta _p(x, x_n)+\Delta _p(y, y_n)\) and \(\tau _n = \alpha _n\langle J_p^{E_1}u - J_p^{E_1}x, x_{n+1}-x\rangle + \alpha _n\langle J_p^{E_2}v - J_p^{E_2}y, y_{n+1} - y\rangle \). We consider the following cases:

Case 1 Suppose \(\exists \) \(n_0\in {\mathbf {N}}\) such that \(\{\Gamma _n\}\) is monotonically non-increasing for all \(n\ge n_0\). Since \(\Gamma _n\) is bounded it implies that \(\{\Gamma _n\}\) converges and

Set

it follows from (3.10) that

as \(n \rightarrow \infty \). By the choice of the stepsize (3.2), there exists a very small \(\epsilon >0\) such that

which means that

and hence

Hence

This implies that

Also from (3.11), we have

Let \(w_n = J_q^{E_1^*}(\beta _nJ_p^{E_1}u_n +(1-\beta _n)J_p^{E_1}T_1u_n)\) and \(z_n = J_q^{E_2^*}(\delta _nJ_p^{E_2}v_n +(1-\delta _n)J_p^{E_2}T_2v_n)\). Using Lemma 2.8, we have

Similarly, we have

By adding (3.14) and (3.15), we have

Observe that

therefore, from (3.16), we get

This implies that

Hence

Thus, we obtain

By the continuity of g, we have

Also, since \(J_q^{E_1}\) and \(J_q^{E_2}\) are uniformly continuous on bounded subsets of \(E_1\) and \(E_2\), respectively, then

Furthermore,

and

This implies that

Also

therefore, by Lemma 2.5, we have

Similarly

and

Now, let \(s_n = J_q^{E_1^*}(J_p^{E_1}x_n-\gamma _nA^*J_p^{E_3}(Ax_n - By_n))\) and \(t_n = J_q^{E_2^*}(J_p^{E_2}y_n + \gamma _n B^*J_p^{E_3}(Ax_n - By_n))\). Note that from (3.3) and (3.4), we have

Using Lemma 2.4, we obtain

Hence from (3.10), we have

Hence

Thus by Lemma 2.5, we get

Since \(E_1\) and \(E_2\) are uniformly smooth, then \(J_p^{E_1}\) and \(J_p^{E_2}\) are uniformly continuous on bounded subsets of \(E_1\) and \(E_2\), respectively. Therefore

It follows from the definition of \(s_n\) that

Hence

Similarly, we can show that

It follows therefore from (3.22) that

and

Hence, by combining (3.20), (3.21) and (3.24), we get

Similarly, we obtain

Since \(E_1\), \(E_2\) are uniformly convex and uniformly smooth and \(\{(x_n, y_n)\}\) is bounded, there exists a subsequence \(\{(x_{n_i}, y_{n_i})\}\) of \(\{(x_n,y_n)\}\) such that \((x_{n_i}, y_{n_i})\,\rightharpoonup \,({\bar{x}}, {\bar{y}}) \in E_1 \times E_2\). Also from (3.24) and (3.25), we obtain that \(\{(u_{n_i},v_{n_i})\}\,\rightharpoonup \,({\bar{x}},{\bar{y}})\). Since \({\hat{F}}(T_i) = F(T_i),\) for \(i=1,2\), it follows from (3.19) that \({\bar{x}} \in F(T_1)\) and \({\bar{y}} \in F(T_2)\). Furthermore, we show that \({\bar{x}} \in \mathrm{Argmin}(f)\) and \({\bar{y}} \in \mathrm{Argmin}(g)\). Since \(s_{n_i} - x_{n_i} \rightarrow 0\), as \(i \rightarrow \infty \), it follows from (3.22) that \({\bar{x}} = \mathrm{prox}_{\gamma _{n_i}f}({\bar{x}})\), hence \({\bar{x}}\) is a fixed point of the proximal operator of f, or equivalently, \(0 \in \partial f({\bar{x}})\). Thus, \({\bar{x}} \in \mathrm{Argmin}(f)\). Similarly, we obtain that \({\bar{y}} \in \mathrm{Argmin}(g)\).

Now, since \(A{:}\,E_1 \rightarrow E_3\) and \(B{:}\,E_2 \rightarrow E_3\) are bounded linear operators, we have \(Ax_{n_i} \rightharpoonup A{\bar{x}}\) and \(By_{n_i} \rightharpoonup B {\bar{y}}\). By the weak lower semicontinuity of the norm and (3.13), we have

Hence, \(A{\bar{x}} = B{\bar{y}}\). This implies that \(({\bar{x}},{\bar{y}}) \in \Omega \).

Next, we show that \(\{(x_n,y_n)\}\) converges strongly to \((x^*,y^*) = (\Pi _\Omega u, \Pi _\Omega v).\) From (3.10), we have

Choose subsequences \(\{x_{n_i}\}\) of \(\{x_n\}\) and \(\{y_{n_i}\}\) of \(\{y_n\}\) such that

and

Since \((x_{n_i},y_{n_i}) \rightharpoonup ({\bar{x}},{\bar{y}})\) and from (3.26) and (3.27), we get

and

Hence, from (3.28), (3.29), (3.30) and using Lemma 2.6, we get that

This therefore implies that \((x_n,y_n) \rightarrow (x^*,y^*) = (\Pi _\Omega u, \Pi _\Omega v).\)

Case 2

Suppose \(\Gamma _n\) is not eventually monotonically decreasing. Then by Lemma 2.7, there exists a nondecreasing sequence \(\{m_k\}\subset {\mathbb {N}}\) such that \(m_k\rightarrow \infty \) and

Following similar argument as in case 1, we obtain \(\Vert x_{m_k} - u_{m_k}\Vert \rightarrow 0\), \(\Vert y_{m_k} - v_{m_k}\Vert \rightarrow 0\), \(\Vert T_1u_{m_k} - u_{m_k}\Vert \rightarrow 0\) and \(\Vert T_2v_{m_k} - v_{m_k}\Vert \rightarrow 0\) as \(k\rightarrow \infty \).

Also

and

From (3.10), we obtain

Since \(0\le \Gamma _{m_k}\le \Gamma _{{m_k}+1}\), then from (3.33), we have

Hence

Therefore from (3.31) and (3.32), we obtain

This implies that

Hence \(\{(x_n, y_n)\}\) converges strongly to \((x^*, y^*) = (\Pi _\Omega u, \Pi _\Omega v).\) In both cases, we obtain that \((x_n,y_n) \rightarrow (x^*,y^*)\). This completes the proof. \(\square \)

We now give the following direct consequences of our main result.

-

(i)

Taking \(T_1\) and \(T_2\) to be Bregman firmly nonexpansive mappings on \(E_1\) and \(E_2\), respectively. Note that the class of Bregman firmly nonexpansive mappings satisfies the property \({\hat{F}}(T)= F(T)\) (see Lemma 15.6 in Reich and Sabach 2011, page 308). Then, we have the following results:

Corollary 3.3

Let \(E_1, E_2\) and \(E_3\) be p-uniformly convex real Banach spaces which are also uniformly smooth. Let C and Q be nonempty closed, convex subsets of \(E_1\) and \(E_2\), respectively, \(A{:}\,E_1\rightarrow E_3\) and \(B{:}\,E_2\rightarrow E_3\) be bounded linear operators. Let \(f{:}\,E_1\rightarrow {\mathbb {R}}\cup \{+\infty \}\) and \(g{:}\,E_2\rightarrow {\mathbb {R}}\cup \{+\infty \}\) be proper, convex and lower semicontinuous functions, \(T_1{:}\,E_1\rightarrow E_1\) and \(T_2{:}\,E_2\rightarrow E_2 \) be Bregman firmly nonexpansive mappings such that \(\Omega \ne \emptyset \). For fixed \(u \in E_1\) and \(v \in E_2\), choose an initial guess \((x_1,y_1) \in E_1 \times E_2\) and let \(\{\alpha _n\} \subset [0,1]\). Suppose \((\{x_n\},\{y_n\})\) is generated by the following algorithm: for a fixed \((u,v) \in E_1 \times E_2\),

for \(n \ge 1\), \(\{\beta _n\}, ~ \{\delta _n\} \subset (0,1)\), where \(A^*\) is the adjoint operator of A. Further, we choose the stepsize \(\gamma _n\) such that if \(n \in \Gamma := \{n {:}\,Ax_n - B y_n \ne 0 \}\), then

Otherwise, \(\gamma _{n} = \gamma \) \((\gamma \) being any nonnegative value). Assume that the following conditions are satisfied:

-

(i)

\(\lim _{n\rightarrow \infty }\alpha _n = 0,\) and \(\sum _{n =0}^\infty \alpha _n = \infty \),

-

(ii)

\(0< a \le \liminf _{n\rightarrow \infty }\beta _n \le \limsup _{n\rightarrow \infty }\beta _n <1,\)

-

(iii)

\(0< b \le \liminf _{n\rightarrow \infty }\delta _n \le \limsup _{n\rightarrow \infty }\delta _n <1.\)

Then the sequence \(\{(x_n,y_n)\}\) converges strongly to \((x^*,y^*) = (\Pi _\Omega u, \Pi _\Omega v).\)

-

(ii)

Taking \(f = i_C\) and \(g= i_Q\), i.e., the indicator functions on C and Q, respectively. The the proximal operators \(\mathrm{prox}_{\gamma f} = \Pi _C\) and \(\mathrm{prox}_{\gamma g} =\Pi _Q\) (see Bauschke et al. 2003). Hence, we have the following result.

Corollary 3.4

Let \(E_1, E_2\) and \(E_3\) be p-uniformly convex real Banach spaces which are also uniformly smooth. Let C and Q be nonempty closed, convex subsets of \(E_1\) and \(E_2\), respectively, \(A{:}\,E_1\rightarrow E_3\) and \(B{:}\,E_2\rightarrow E_3\) be bounded linear operators. Let \(T_1{:}\,E_1\rightarrow E_1\) and \(T_2{:}\,E_2\rightarrow E_2 \) be Bregman quasi-nonexpansive mappings such that \(F(T_1) \ne \emptyset \) and \(F(T_2) \ne \emptyset \). For fixed \(u \in E_1\) and \(v \in E_2\), choose an initial guess \((x_1,y_1) \in E_1 \times E_2\) and let \(\{\alpha _n\} \subset [0,1]\). Suppose \((\{x_n\},\{y_n\})\) is generated by the following algorithm: for a fixed \((u,v) \in E_1 \times E_2\),

for \(n \ge 1\), \(\{\beta _n\}, ~ \{\delta _n\} \subset (0,1)\), where \(A^*\) is the adjoint operator of A. Further, we choose the stepsize \(\gamma _n\) such that if \(n \in \Gamma := \{n {:}\,Ax_n - B y_n \ne 0 \}\), then

Otherwise, \(\gamma _{n} = \gamma \) (\(\gamma \) being any nonnegative value). Assume that the following conditions are satisfied:

-

(i)

\(\lim _{n\rightarrow \infty }\alpha _n = 0,\) and \(\sum _{n =0}^\infty \alpha _n = \infty \),

-

(ii)

\(0< a \le \liminf _{n\rightarrow \infty }\beta _n \le \limsup _{n\rightarrow \infty }\beta _n <1,\)

-

(iii)

\(0< b \le \liminf _{n\rightarrow \infty }\delta _n \le \limsup _{n\rightarrow \infty }\delta _n <1.\)

Then the sequence \(\{(x_n,y_n)\}\) converges strongly to \((x^*,y^*) = (\Pi _{F(T_1)} u, \Pi _{F(T_2)} v).\)

-

(iii).

Finally, if we let \(E_1, E_2\) and \(E_3\) to be real Hilbert spaces. Then we have the following corollary from our main result.

Corollary 3.5

Let \(H_1, H_2\) and \(H_3\) be real Hilbert spaces, C and Q be nonempty closed, convex subsets of \(H_1\) and \(H_2\), respectively, \(A{:}\,H_1\rightarrow H_3\) and \(B{:}\,H_2\rightarrow H_3\) be bounded linear operators. Let \(f{:}\,H_1\rightarrow {\mathbb {R}}\cup \{+\infty \}\) and \(g{:}\,H_2\rightarrow {\mathbb {R}}\cup \{+\infty \}\) be proper, convex and lower semicontinuous functions, \(T_1{:}\,H_1\rightarrow H_1\) and \(T_2{:}\,H_2\rightarrow H_2 \) be quasi-nonexpansive mappings such that \(\Omega \ne \emptyset \). For fixed \(u \in H_1\) and \(v \in H_2\), choose an initial guess \((x_1,y_1) \in H_1 \times H_2\) and let \(\{\alpha _n\} \subset [0,1]\). For arbitrary \(x_0, u\in H_1\) and \(y_0, v\in H_2\) define an iterative algorithm by

for \(n \ge 1\), \(\{\beta _n\}, ~ \{\delta _n\} \subset (0,1)\), where \(A^*\) is the adjoint operator of A. Further, we choose the stepsize \(\gamma _n\) such that if \(n \in \Gamma := \{n {:}\,Ax_n - B y_n \ne 0 \}\), then

Otherwise, \(\gamma _{n} = \gamma \) (\(\gamma \) being any nonnegative value). Assume that the following conditions are satisfied:

-

(i)

\(\lim _{n\rightarrow \infty }\alpha _n = 0,\) and \(\sum _{n =0}^\infty \alpha _n = \infty \),

-

(ii)

\(0< a \le \liminf _{n\rightarrow \infty }\beta _n \le \limsup _{n\rightarrow \infty }\beta _n <1,\)

-

(iii)

\(0< b \le \liminf _{n\rightarrow \infty }\delta _n \le \limsup _{n\rightarrow \infty }\delta _n <1.\)

Then (3.5) converges strongly to \((x^*, y^*)= (P_\Omega u, P_\Omega v).\)

4 Applications

4.1 Split equality convex minimization and equilibrium problems

Let C be a nonempty, closed and convex subset of a real Banach space E and \(G{:}\,C \times C \rightarrow {\mathbb {R}}\) be a nonlinear bifunction. The Equilibrium Problem (EP) introduced by Blum and Oettli (1994) as a form of generalization of variational inequality problem is given as

We shall denote the set of solutions of the EP with respect to the bifunction G by EP(G). Several algorithms have been introduced for finding the solution of EP in Banach spaces. For solving EP, it is customary to assume that the bifunction G satisfies the following conditions:

-

(A1)

\(G(x, x)=0\), for all \(x\in C\),

-

(A2)

G is monotone, i.e., \(G(x, y)+G(y, x)\le 0\), for all \(x, y\in C\),

-

(A3)

for all \(x, y, z\in C\), \(\limsup _{t\rightarrow 0^{+}}G(tz+(1-t)x, y)\le G(x, y)\),

-

(A4)

for each \(x\in C\), \(G(x,\cdot )\) is convex and lower semi-continuous.

The resolvent operator of the bifunction G with respect to the Bregman distance \(\Delta _p\) is given as

It was proved in Reich and Sabach (2010a) that \(\mathrm{Res}^p_G\) satisfies the following properties:

-

i.

\(\mathrm{Res}^p_G\) is single-valued;

-

ii.

\(\mathrm{Res}^p_G\) is a Bregman firmly nonexpansive mapping;

-

iii.

\(F(\mathrm{Res}^p_G) = \mathrm{EP}(G)\);

-

iv.

EP(G) is a closed and convex subset of C;

-

v.

for all \(x\in E\) and \(q\in F(\mathrm{Res}^p_G)\)

$$\begin{aligned} \Delta _p(q, \mathrm{Res}^p_G(x))+\Delta _p(\mathrm{Res}^p_G(x), x)\le \Delta _p(q, x). \end{aligned}$$

We now consider the following Split Equality Convex Minimization and Equilibrium Problems:

Let \(E_1\), \(E_2\) and \(E_3\) be real Banach spaces, C and Q be nonempty, closed and convex subsets of \(E_1\) and \(E_2\), respectively. Let \(G_1{:}\,C \times C \rightarrow {\mathbb {R}}\) and \(G_2{:}\,Q \times Q \rightarrow {\mathbb {R}}\) be bifunctions, \(f{:}\,E_1\rightarrow {\mathbb {R}}\cup \{+\infty \}\) and \(g{:}\,E_2\rightarrow {\mathbb {R}}\cup \{+\infty \}\) be proper, lower-semicontinous convex functions, \(A{:}\,E_1 \rightarrow E_3\) and \(B{:}\,E_2 \rightarrow E_3\) be bounded linear operators.

We denote the solution set of the Problem (4.1) by \(\Gamma .\) Finding the common solutions of convex minimization problem, equilibrium problem and fixed point problem (4.1) has been studied recently by many authors in the setting of real Hilbert spaces (see for instance Abass et al. 2018; Jolaoso et al. 2018; Ogbuisi and Mewomo 2017; Okeke and Mewomo 2017; Tian and Liu 2012; Yazdi 2019). However, there are very few results on the split equality convex minimization problem and split equality equilibrium problems in higher Banach spaces.

Setting \(T_1 = \mathrm{Res}_{G_1}^p\) and \(T_2 = \mathrm{Res}_{G_2}^p\) in our Theorem 3.2, we obtain the following result for approximating solution of Problem (4.1) in uniformly convex Banach spaces.

Theorem 4.1

Let \(E_1, E_2\) and \(E_3\) be p-uniformly convex real Banach spaces which are also uniformly smooth. Let C and Q be nonempty closed, convex subsets of \(E_1\) and \(E_2\), respectively, \(A{:}\,E_1\rightarrow E_3\) and \(B{:}\,E_2\rightarrow E_3\) be bounded linear operators. Let \(f{:}\,E_1\rightarrow {\mathbb {R}}\cup \{+\infty \}\) and \(g{:}\,E_2\rightarrow {\mathbb {R}}\cup \{+\infty \}\) be proper, lower-semicontinous convex functions, \(G_1{:}\,C \times C \rightarrow {\mathbb {R}}\), and \(G_2{:}\,Q \times Q \rightarrow {\mathbb {R}}\) be bifunctions satisfying condition (A1)–(A4) such that \(\Gamma \ne \emptyset \). For fixed \(u \in E_1\) and \(v \in E_2\), choose an initial guess \((x_1,y_1) \in E_1 \times E_2\) and let \(\{\alpha _n\} \subset [0,1]\). Assume that the \(n\mathrm{th}\) iterate \((x_n,y_n) \subset E_1 \times E_2\) has been constructed; then we compute the \((n+1)\mathrm{th}\) iterate \((x_{n+1},y_{n+1})\) via the iteration:

for \(n \ge 1\), \(\{\beta _n\}, ~ \{\delta _n\} \subset (0,1)\), where \(A^*\) is the adjoint operator of A. Further, we choose the stepsize \(\gamma _n\) such that if \(n \in \Gamma := \{n {:}\,Ax_n - B y_n \ne 0 \}\), then

Otherwise, \(\gamma _{n} = \gamma \) (\(\gamma \) being any nonnegative value). In addition, if the following conditions are satisfied:

-

(i)

\(\lim _{n\rightarrow \infty }\alpha _n = 0,\) and \(\sum _{n =0}^\infty \alpha _n = \infty \),

-

(ii)

\(0< a \le \liminf _{n\rightarrow \infty }\beta _n \le \limsup _{n\rightarrow \infty }\beta _n <1,\)

-

(iii)

\(0< b \le \liminf _{n\rightarrow \infty }\delta _n \le \limsup _{n\rightarrow \infty }\delta _n <1.\)

Then the sequence \(\{(x_n,y_n)\}\) converges strongly to \((x^*,y^*) \in \Gamma .\)

4.2 Zeros of maximal monotone operators

Let E be a Banach space with dual \(E^*\). Let \(A{:}\,E\rightarrow 2^{E^*}\) be a multivalued mapping. The graph of A denoted by gr(A) is defined by \(gr(A)=\{(x, u)\in E\times E^*{:}\,u\in Ax\}\). A is called a non-trivial operator if \(gr(A)\ne \emptyset \). A is called a monotone operator if \(\forall \) \((x, u), (y, v)\in gr(A)\), \(\langle x -y, u - v\rangle \ge 0.\)

A is said to be a maximal monotone operator if the graph of A is not a proper subset of the graph of any other monotone operator. The Bregman resolvent operator associated with A is denoted by \(\mathrm{Res}_{A}\) and defined by

It is known that \(\mathrm{Res}_A\) is single-valued and Bregman firmly nonexpansive. Also, \(\forall \) \(x\in E\), \(\lambda \in (0, \infty )\), \(x\in A^{-1}(0)\) if and only if \(x\in F(\mathrm{Res}_{\lambda A})\) (see Bauschke et al. 2003). It is also known (see Reich and Sabach 2010a) that \(D_p(z, \mathrm{Res}_Ax)+D_p(\mathrm{Res}_Ax, x)\le D_p(z, x)\).

Let \(E_1, E_2\) and \(E_3\) be p-uniformly convex real Banach spaces which are also uniformly smooth. Let \(C_1\) and \(C_2\) be nonempty closed and convex subsets of \(E_1\) and \(E_2\), respectively. Let \(f{:}\,E_1\rightarrow {\mathbb {R}}\cup \{+\infty \}\) and \(g{:}\,E_2\rightarrow {\mathbb {R}}\cup \{+\infty \}\) be proper, lower-semicontinous convex functions. Let \(T_1{:}\,E_1\rightarrow 2^{E^*_1}\) and \(T_2{:}\,E_2\rightarrow 2^{E_2^{*}}\) be maximal monotone operators and \(A{:}\,E_1\rightarrow E_3\) and \(B{:}\,E_2\rightarrow E_3\) be bounded linear operators. Consider the following problem:

Since \(\mathrm{Res}_{\lambda A}\) is Bregman firmly nonexpansive and \(F(\mathrm{Res}_{\lambda A}) = A^{-1}(0)\), then we have the following result for approximating solution of (4.3) in uniformly convex real Banach spaces.

Theorem 4.2

Let \(E_1, E_2\) and \(E_3\) be p-uniformly convex real Banach spaces which are also uniformly smooth. Let \(C_1\) and \(C_2\) be nonempty closed and convex subsets of \(E_1\) and \(E_2\), respectively. Let \(f{:}\,E_1\rightarrow {\mathbb {R}}\cup \{+\infty \}\) and \(g{:}\,E_2\rightarrow {\mathbb {R}}\cup \{+\infty \}\) be proper, lower-semicontinous convex functions. Let \(T_1{:}\,E_1\rightarrow 2^{E^*_1}\) and \(T_2{:}\,E_2\rightarrow 2^{E_2^{*}}\) be maximal monotone operators and \(A{:}\,E_1\rightarrow E_3\) and \(B{:}\,E_2\rightarrow E_3\) be bounded linear operators. Assume \(\Omega = \big \{(x, y)\in T^{-1}_1(0)\times T^{-1}_2(0){:}\,x\in \mathrm{Arg} \min {f}, y\in \mathrm{Arg} \min {g}, Ax = By\big \} \ne \emptyset \). For fixed \(u \in E_1\) and \(v \in E_2\), choose an initial guess \((x_1,y_1) \in E_1 \times E_2\) and let \(\{\alpha _n\} \subset [0,1]\). Assume that the \(n\mathrm{th}\) iterate \((x_n,y_n) \subset E_1 \times E_2\) has been constructed; then we compute the \((n+1)\mathrm{th}\) iterate \((x_{n+1},y_{n+1})\) via the iteration:

for \(n \ge 1\), \(\{\beta _n\}, ~ \{\delta _n\} \subset (0,1)\), where \(A^*\) and \(B^*\) are the adjoint operators of A and B, respectively. Further, we choose the stepsize \(\gamma _n\) such that if \(n \in \Gamma := \{n{:}\,Ax_n - B y_n \ne 0 \}\), then

Otherwise, \(\gamma _{n} = \gamma \) (\(\gamma \) being any nonnegative value). In addition, if the following conditions are satisfied:

-

(i)

\(\lim _{n\rightarrow \infty }\alpha _n = 0,\) and \(\sum _{n =0}^\infty \alpha _n = \infty \),

-

(ii)

\(0< a \le \liminf _{n\rightarrow \infty }\beta _n \le \limsup _{n\rightarrow \infty }\beta _n <1,\)

-

(iii)

\(0< b \le \liminf _{n\rightarrow \infty }\delta _n \le \limsup _{n\rightarrow \infty }\delta _n <1.\)

Then the sequence \(\{(x_n,y_n)\}\) converges strongly to \((x^*,y^*) \in (\Pi _{\Omega }u, \Pi _{\Omega }v)\).

5 Numerical example

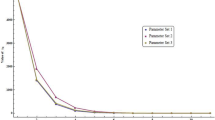

In this section, we present two examples to show the behaviour of the iterative algorithm presented in this paper.

Example 5.1

Let \(E_1 = E_2 = E_3 = {\mathbb {R}}^3\) and let A and B be \(3 \times 3\) randomly generated matrices. Let \(f(x) = ||x||_2\) for all \(x \in {\mathbb {R}}^{3}\), the proximal operator with respect to f is defined as

Also, define \(g(x) = \max \Big \{1- |x|, 0\Big \}\) for \(x \in {\mathbb {R}}^{3}\), then the proximal operator of g is given by

Take \(C = \{ x \in {\mathbb {R}}^{3}{:}\,\langle a,x \rangle \ge b \}\), where \(a = (1,-\,5,4)\) and \(b =1\). Then

Also, let \(Q = \{x \in {\mathbb {R}}^{3}{:}\,\langle c,x \rangle = d \},\) where \(c = (1,2,3)\) and \(d = 4\). Then, we have that

Suppose \(T_1 = P_C\) and \(T_2 = P_Q\), let \(u = \mathrm{rand}(3,1)\) and \(v = 0.5*\mathrm{rand}(3,1)\). We let \(\alpha _n = \frac{1}{n+1}\), \(\beta _n = \frac{2n}{3(n+1)}\) and \(\delta _n = \frac{3n+5}{7n+9}\). Then our algorithm (3.1) becomes

for \(n\ge 1\). If \(Ax_n - By_n\ne 0\), then we choose \(\gamma _n \in (0, \frac{2\Vert Ax_n - By_n\Vert ^2}{\Vert A^T(Ax_n - By_n)\Vert ^2 + \Vert B^T(Ax_n - By_n)\Vert ^2})\). Else, \(\gamma _n = \gamma \) (\(\gamma \) being any positive real number). We choose various values of the initial points \(x_1\) and \(y_1\) as follows:

-

Case 1

-

(a)

\(x_1 = 1 * \mathrm{rand}(3,1)\), \(y_1 = 2* \mathrm{rand}(3,1),\)

-

(b)

\(x_1 = -5 * \mathrm{rand}(3,1),\) \(y_1 = -10*\mathrm{rand}(3,1)\),

-

(a)

-

Case 2

-

(a)

\(x_1 = -0.1*\mathrm{rand}(3,1)\), \(y_1 = 0.2*\mathrm{rand}(3,1),\)

-

(b)

\(x_1 = 0.5*\mathrm{rand}(3,1),\) \(y_1 = -1*\mathrm{rand}(3,1).\)

-

(a)

Using \(\frac{\max \{||x_{n+1}-x_{n}||^2,||y_{n+1}-y_{n}||^2\}}{\max \{||x_2-x_1||^2,||y_2 - y_1||^2\}}<10^{-3}\) as the stopping criterion, we plot the graphs of \(||x_{n+1} -x_{n}||^2\) and \(||y_{n+1} -y_{n}||^2\) against the number of iterations in each cases. We note that the change in the initial values does not have any significant effect on the number of iterations nor the cpu time. The numerical results can be found in Figs. 1 and 2.

Example 5.1, left: Case 1 (a), time: 0.0321 s; right: Case 1 (b), time: 0.0481 s

Example 5.1, left: Case 2 (a), time: 0.0211 s; right: Case 2 (b), time: 0.0427 s

Example 5.2

In this second example, we consider the infinite-dimensional space and compare our algorithm (3.1) with algorithm (1.13) of Zhao (2015). Let \(E_1=E_2=E_3= L^2([0,2\pi ])\) with norm \(||x||^2 = \int _{0}^{2\pi }|x(t)|\mathrm{d}t\) and inner product \(\langle x,y \rangle = \int _{0}^{2\pi }x(t)y(t)\mathrm{d}t,\) \(x,y \in E.\) Suppose \(C := \{x \in L^2([0,2\pi ]){:}\,\int _{0}^{2\pi }(t^2+1)x(t)\mathrm{d}t \le 1\}\) and \(Q:= \{x \in L^2([0,2\pi ]){:}\,\int _{0}^{2\pi }|x(t)-\sin (t)|^2 \le 16\}\) are subsets of \(E_1\) and \(E_2\), respectively. Define \(A{:}\,L^2([0,2\pi ]) \rightarrow L^2([0,2\pi ])\) by \(A(x)(t) = \int _{0}^{2\pi }\exp ^{-st}x(t)\mathrm{d}t\) for all \(x \in L^2([0,2\pi ])\) and \(By(t) = \int _{0}^{2\pi }\frac{1}{10}(x(t))\mathrm{d}t.\) It is easy to verify that A and B are bounded linear operators.

Example 5.2, left: Case 1 (a); right: Case 1 (b)

Now, let \(f= i_C\) and \(g=i_Q\), the indicator functions on C and Q, respectively, then \(\mathrm{prox}_{\gamma f} =\Pi _C\) and \(\mathrm{prox}_{\gamma g} = \Pi _Q\). Also, let \(T_1x(t) = \int _{0}^{2\pi } x(t)\mathrm{d}t\) and \(T_2y(t) = \int _{0}^{2\pi } \frac{1}{4}y(t)\mathrm{d}t\), choose \(u= \cos (3t),\) \(v = \exp ^{-2t}\), \(\alpha _n = \frac{5}{10(n+1)}\), \(\beta _n = \frac{5n}{8n+7}\) and \(\delta _n = \frac{3n-2}{5n+5}.\) Then our algorithm (3.1) becomes:

for \(n\ge 1\). If \(Ax_n - By_n\ne 0\), then we choose \(\gamma _n \in (0, \frac{2\Vert Ax_n - By_n\Vert ^2}{\Vert A^*(Ax_n - By_n)\Vert ^2 + \Vert B^*(Ax_n - By_n)\Vert ^2})\). Else, \(\gamma _n = \gamma \) (\(\gamma \) being any positive real number). We choose various values of the initial points \(x_1\) and \(y_1\) as follows:

-

Case 1

-

(a)

\(x_1 = 2t^3\exp ^{5t}\), \(y_1 = t^3+2t-5,\)

-

(b)

\(x_1 = 2t\sin (3\pi t),\) \(y_1 = t^2\cos (2\pi t)\),

-

(a)

-

Case 2

-

(a)

\(x_1 = 3\exp ^{-5t}\), \(y_1 = 2t\sin (3t),\)

-

(b)

\(x_1 = \exp ^{2t},\) \(y_1 = \frac{3}{10}\exp ^{2t}.\)

-

(a)

Using \(\frac{||x_{n+1}-x_{n}||^2+||y_{n+1}-y_{n}||^2}{||x_2-x_1||^2+||y_2 - y_1||^2}<10^{-5}\) as the stopping criterion, we plot the graphs of \(||x_{n+1} -x_{n}||^2+||y_{n+1} -y_{n}||^2\) against the number of iterations in each cases and also compare the performance of our algorithm (5.3) with algorithm (1.13). The numerical results are reported in Table 1 and Figs. 3 and 4.

Example 5.2, left: Case 2 (a); right: Case 2 (b)

References

Abass HA, Ogbuisi FU, Mewomo OT (2018) Common solution of split equilibrium problem and fixed point problem with no prior knowledge of operator norm. UPB Sci Bull Ser A 80(1):175–190

Ansari QH, Rehan A (2014) Split feasibility and fixed point problems. In: Ansari QH (ed) Nonlinear analysis: approximation theory, optimization and application. Springer, New York, pp 281–322

Bauschke HH, Combettes PL (2011) Convex analysis & monotone operator theory in Hilbert spaces. Springer, Berlin

Bauschke HH, Borwein JM, Combettes PL (2003) Bregman monotone optimization algorithms. SIAM J Control Optim 42(2):596–636

Blum E, Oettli W (1994) From optimization and variational inequalities to equilibrium problems. Math Stud 63(1–4):123–145

Bryne C (2002) Iterative oblique projection onto convex subsets and the split feasibility problem. Inverse Probl 18(2):441–453

Bryne C (2004) A unified treatment of some iterative algorithms in signal processing and image reconstruction. Inverse Probl 20(1):103–120

Censor Y, Elfving T (1994) A multiprojection algorithm using Bregman projections in a product space. Numer Algorithms 8(2):221–239

Censor Y, Segal A (2009) The split common fixed point problem for directed operators. J Convex Anal 16:587–600

Censor Y, Elfving T, Kopf N, Bortfeld T (2005) The multiple-sets split feasibility problem and its applications for inverse problems. Inverse Probl 21:2071–2084

Censor Y, Bortfeld T, Martin B, Trofimov A (2006) A unified approach for inversion problems in intensity-modulated radiation therapy. Phys Med Biol 51:2353–2365

Chidume CE (2009) Geometric properties of Banach spaces and nonlinear iterations, Lecture Notes in Mathematics, vol 1965. Springer, London

Cioranescu I (1990) Geometry of Banach spaces, duality mappings and nonlinear problems. Kluwer, Dordrecht

Iiduka H (2012) Fixed point optimization algorithm and its application to network bandwidth allocation. J Comput Appl Math 236:1733–1742

Iiduka H (2015) Acceleration method for convex optimization over the fixed point set of a nonexpansive mappings. Math Prog Ser A 149:131–165

Jolaoso LO, Ogbuisi FU, Mewomo OT (2017) An iterative method for solving minimization, variational inequality and fixed point problems in reflexive Banach spaces. Adv Pure Appl Math 9(3):167–184

Jolaoso LO, Oyewole KO, Okeke CC, Mewomo OT (2018) A unified algorithm for solving split generalized mixed equilibrium problem and fixed point of nonspreading mapping in Hilbert space. Demonstr Math 51:211–232

Kohsaka F, Takahashi W (2005) Proximal point algorithms with Bregman functions in Banach spaces. J. Nonlinear Convex Anal 6(3):505–523

Maingé PE (2008a) Strong convergence of projected subgradient methods for nonsmooth and nonstrictly Convex minimization. Set-Valued Anal 16(7):899–912

Maingé PE (2008b) A hybrid extragradient viscosity method for monotone operators and fixed point problems. SIAM J Control Optim 49:1499–1515

Mewomo OT, Ogbuisi FU (2018) Convergence analysis of iterative method for multiple set split feasibility problems in certain Banach spaces. Quaest Math 41(1):129–148

Moudafi A (2011) A note on the split common fixed-point problem for quasi-nonexpansive operators. Nonlinear Anal 74(12):4083–4087

Moudafi A (2012) Alternating CQ-algorithms for convex feasibility and fixed points problems. J Nonlinear Convex Anal 15:1–10

Moudafi A, Al-Shemas E (2013) Simultaneous iterative methods for split equality problem. Trans Math Program Appl 1(2):1–11

Moudafi A, Thakur BS (2014) Solving proximal split feasibility problems without prior knowledge of operator norms. Optim Lett 8:2099–2110. https://doi.org/10.1007/s11590-013-0708-4

Ma YF, Wang L, Zi XJ (2013) Strong and weak convergence theorems for a new split feasibility problem. Int Math Forum 8(33):1621–1627

Naraghirad E, Yao JC (2013) Bregman weak relatively non expansive mappings in Banach space. Fixed Point Theory Appl. https://doi.org/10.1186/1687-1812-2013-141

Ogbuisi FU, Mewomo OT (2017) On split generalized mixed equilibrium problems and fixed point problems with no prior knowledge of operator norm. J Fixed Point Theory Appl 19(3):2109–2128

Okeke CC, Mewomo OT (2017) On split equilibrium problem, variational inequality problem and fixed point problem for multi-valued mappings. Ann Acad Rom Sci Ser Math Appl 9(2):255–280

Mewomo OT, Ogbuisi FU, Okeke CC (2018) On split equality minimization and fixed point problems. Novi Sad J Math 48(2):21–39

Phelps RR (1993) Convex functions, Monotone operators and differentiability, Lecture Notes in Mathematics, 2nd edn, vol 1364. Springer, New York

Qu B, Xiu N (2005) A note on th CQ-algorithm for the split feasibility problem. Inverse Probl 21(5):1655–1665

Reich S, Sabach S (2010a) Two strong convergence theorems for Bregman strongly non-expansive operators in reflexive Banach spaces. Nonlinear Anal Theory Methods Appl 73(1):122–135

Reich S, Sabach S (2010b) Two strong convergence theorems for a proximal method in reflexive Banach spaces. Numer Funct Anal Optim 31(1):22–44

Reich S, Sabach S (2011) Existence and approximation of fixed points of Bregman firmly nonexpansive mappings in reflexive Banach spaces. In: Bauschke H, Burachik R, Combettes P, Elser V, Luke D, Wolkowicz H (eds) Fixed-point algorithms for inverse problems in science and engineering. Springer, New York, pp 301–316

Schöpfer F (2007) Iterative regularization method for the solution of the split feasibility problem in Banach spaces. Ph.D. thesis, Saabrücken

Schöpfer F, Schuster T, Louis AK (2008) An iterative regularization method for the solution of the split feasibility problem in Banach spaces. Inverse Probl 24(5):055008

Shehu Y, Mewomo OT (2016) Further investigation into split common fixed point problem for demicontractive operators. Acta Math Sin (Engl Ser) 32(11):1357–1376

Tian M, Liu L (2012) Iterative algorithms based on the viscosity approximation method for equilibrium and constrained convex minimization problem. Fixed Point Theory Appl 2012:201

Xu HK (2002) Another control condition in an iterative method for nonexpansive mappings. Bull Austral Math Soc 65(1):109–113

Xu ZB, Roach GF (1991) Characteristics inequalities of uniformly convex and uniformly smooth Banach spaces. J Math Anal Appl 157(1):189–210

Yang Q (2004) The relaxed CQ-algorithm solving the split feasibility problem. Inverse Probl 20(4):1261–1266

Yazdi M (2019) New iterative methods for equilibrium and constrained minimization problems. Asian-Eur J Math 12(1):12. https://doi.org/10.1142/S1793557119500426

Zhao J (2015) Solving split equality fixed-point problem of quasi-nonexpansive mappings without prior knowledge of operators norms. Optimization 64(12):2619–2630. https://doi.org/10.1080/02331934.2014.883515

Acknowledgements

The authors thank the anonymous referee for valuable and useful suggestions and comments which led to the great improvement of the paper.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Gabriel Haeser.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Taiwo, A., Jolaoso, L.O. & Mewomo, O.T. A modified Halpern algorithm for approximating a common solution of split equality convex minimization problem and fixed point problem in uniformly convex Banach spaces. Comp. Appl. Math. 38, 77 (2019). https://doi.org/10.1007/s40314-019-0841-5

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s40314-019-0841-5

Keywords

- Split feasibility problem

- Minimization problem

- Proximal operator

- Bregman quasi-nonexpansive

- Split equality problem

- Fixed point problem