Abstract

Using chain rule, we propose a modified secant equation to get a more accurate approximation of the second curvature of the objective function. Then, based on this modified secant equation we present a new BFGS method for solving unconstrained optimization problems. The proposed method makes use of both gradient and function values, and utilizes information from two most recent steps, while the usual secant relation uses only the latest step information. Under appropriate conditions, we show that the proposed method is globally convergent without convexity assumption on the objective function. Comparative numerical results show computational efficiency of the proposed method in the sense of the Dolan–Moré performance profiles.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Consider the unconstrained nonlinear optimization problem

where f is twice continuously differentiable. The quasi-Newton methods are popular iterative methods for solving (1), whose iterates are constructed as follows:

where \(\alpha _k\) is a step size and \(d_k\) is a descent direction obtained by solving \(B_k d_k=-g_k,\) where \(g_k =\bigtriangledown f(x_k)\) and \(B_k \) is an approximation of the Hessian matrix of f at \(x_k\) which satisfies the secant equation.

The standard secant equation can be established as follows (see Dennis and Schnabel 1983). We have

where \(s_k=x_{k+1}-x_k.\) Since \(B_{k+1}\) is to approximate \(G(x_{k+1})=\nabla ^2 f(x_{k+1}),\) the secant equation is defined to be

where \(y_k=g_{k+1}-g_k\). The relation (3) is sometimes called the standard secant equation.

A famous family of quasi-Newton methods is Broyden family Broyden (1965) in which the updates are defined by

where \(\mu \) is a scale parameter. The BFGS, DFP and SR1 updates are obtained by setting \(\mu =0\), \(\mu =1\) and \(\mu =1/(1-s^\mathrm{T}_{k}B_k s_k/s^\mathrm{T}_{k}y_k ),\) respectively.

Among quasi-Newton methods, the most efficient method is the BFGS method Broyden (1970).

When f is convex, the global convergence of the BFGS method have been studied by some authors (see Byrd and Nocedal 1989; Byrd et al. 1987; Griewank 1991; Powell 1976; Toint 1986). However, the BFGS method is very efficient as regards numerical performance, but Dai (2003) have constructed an example to show that this method may fail for non-convex functions with inexact Wolfe line searches. In addition, Mascarenhas (2004) showed that the nonconvergence of the standard BFGS method even with exact line search.

Global convergence of the BFGS method for the general functions under Wolfe line search is still an open problem. Recently, Yuan et al. (2017, 2018) provided a positive answer, and proved the global convergence of BFGS method under a modified weak Wolfe–Powell line search technique for general functions.

To obtain better quasi-Newton methods, many modified methods have been presented (see Li and Fukushima 2001a, b; Wei et al. 2006; Yuan and Wei 2009, 2010; Yuan et al. 2017, 2018; Zhang et al. 1999; Zhang and Xu 2001).

Li and Fukushima (2001a, b) made a modification on the standard BFGS method as follows:

with

where C is a positive constant.

They showed this method is globally convergent without a convexity assumption on the objective function f.

The usual secant equation employs only the gradients and the available function values are ignored. To get a higher order accuracy of approximating the Hessian matrix of the objective function, several researchers have modified the usual secant equation (3) to make full use of both the gradient and function values (see Wei et al. 2006; Yuan and Wei 2009, 2010; Yuan et al. 2017, 2018; Zhang et al. 1999; Zhang and Xu 2001).

Wei et al. (2006), using Taylor’s series, modified (3) as follows:

where \(\tilde{y}_k=y_k + \frac{\vartheta _k}{\Vert s_k\Vert ^{2}} s_k\) and \(\vartheta _{k}=2 (f_{k}-f_{k+1})+ (g_{k}+g_{k+1})^\mathrm{T} s_{k}.\)

Recently, Yuan and Wei (2010) considered an extension of the modified secant equation (7) as follows:

Numerical results of Yuan and Wei (2010) showed that the modified BFGS method suggested by Yuan and Wei outperformed the CG methods proposed by Wei et al. (2006) and Li and Fukushima (2001b) and the standard BFGS method Broyden (1970).

Such modified secant equations make use of both the available gradient and function values only at the last two points. Here, we employ chain rule, and introduced a different secant relation utilizing information from three most recent points and using both the available gradient and function values. Then, we make use of the new secant equation in a BFGS updating formula.

This work is organized as follows: In Sect. 2, we first employ chain rule to derive an alternative secant equation and then we outline our proposed algorithm. In Sect. 3, we investigate the global convergence of the proposed method. Finally, in Sect. 4, we report some numerical results.

2 Two-step BFGS method

In this section, we obtain a new secant equation. Next, we use this secant equation and we give the algorithm.

2.1 Proposed modified secant equation

Here, we intend to make use of three iterates \(x_{k-1},\) \(x_{k}\) and \(x_{k+1}\) generated by some quasi-Newton algorithm. Using chain rule to function \(\bigtriangledown ^2 f(X(t)),\) we know

where X(t) is a differentiable curve in \(\mathbb {R}^n.\)

Now, suppose that X(t) is the interpolating curve so that

Of course there are various choices for X(t). Here, motivated by Ford and Saadallah (1987), we define a nonlinear interpolating with a free parameter as follows:

where \(q(t)=a_0 + a_1 t + a_2 t^2\) (\(\{a_i\}_{i=0}^{2}\) are constant vectors) and \(\theta \) is a parameter to be chosen later.

Since \(q(t)=x(t,\theta ) (1+t\theta )\) is a second degree polynomial and hence, may be written in its Lagrangian form

where \(q(t_j)=x(t_j,\theta )(1+t_j \theta )\) and the \(L_j(t)\) are the basic Lagrange polynomials:

After some algebraic manipulations, (12) can be written as

Therefore

Taking the derivative from both sides of (15), we obtain:

On the other hand, using Lagrange interpolation we have

Substituting relation (17) into (9), we obtain:

where \(\frac{\mathrm{d}X(t)}{\mathrm{d}t}\equiv \frac{\mathrm{d}x(t,\theta )}{\mathrm{d}t}\) given by (16).

Now, by considering \(B_{k+1}\) as a new approximation of \(\nabla ^2 f(x_{k+1}),\) (18) leads to

where \(B_{k-1}\) and \(B_{k}\) approximate \(\nabla ^2 f(x_{k-1}) \) and \(\nabla ^2 f(x_{k}),\) respectively.

Equation (19) provides a new modified secant relation as follows:

where \(y_k ^{*}\) and \(s_k ^{*}\) are given by

and

with \(\frac{\mathrm{d}X(t)}{\mathrm{d}t}\equiv \frac{\mathrm{d}x(t,\theta )}{\mathrm{d}t}\) given by (16).

Now, the issue is choosing a strategy to determine a numerical value for \(\theta \). Define

Clearly we have

On the other hand, a reasonable estimate of the integral would be given by

Remark A

In constructing this estimate of the integral, we are using advantage of the fact that \(t=0\) is an interior point of the interval of integration \([-1,1].\)

On the other hand, from Eq. (16) we have

From (24), (25) and (26), we obtain

Obviously (27) is a good estimation of \(\theta \) and it dose not require expensive computations.

Also, it is easy to see that if the denominator, \((1 + t\theta )\) in (11), becomes zero over the interval \([-1,1 ],\) then the interpolating curve \(x(t,\theta )\) is undesirable. To overcome this difficulty, since the denominator \((1 + t\theta )\) is positive at \(t=0\), we impose the two conditions as follows:

that is,

In implementing the new algorithm, if the condition (28) dose not hold, then we set \(\theta =0.\)

2.2 Proposed BFGS algorithm

Here, we apply the modified secant equation given in the previous subsection then we propose new modified BFGS method such that \(B_{k+1}\) update by

where \(y_k ^{*}\) and \(s_k ^{*}\) are given by

and

with \(\frac{\mathrm{d}X(t)}{\mathrm{d}t}\) and \(\theta \) are given by (16) and (27) respectively.

We note that this new modified BFGS contains information from the three most recent points where the usual BFGS method and modified BFGS method introduced by Li and Fukushima (2001b), Wei et al. (2006) and Yuan and Wei (2010), make use of the information merely at the two latest points. In addition, both the available gradient and function values are being utilized.

We know, \({s^{*}_{k}}^\mathrm{T}{y^{*}_{k}}>0,\) is sufficient to ensure \(B_{k+1}\) to be positive definite (see Nocedal and Wright 2006) and consequently, the generated search directions are descent directions. However, for a general function f, \({s^{*}_{k}}^\mathrm{T}y^{*}_{k} \) may not be positive for all \(k\ge 0,\) and consequently \(B_{k+1}\) may not be positive definite.

For preserving positive definiteness of the updates, we set

where \(\delta \) is a positive constant.

Remark B

From (32), it is easy to see that \({s^{*}_{k}}^\mathrm{T}y^{*}_{k}>0\) therefore the matrix \(B_{k+1}\) generated by (32), is symmetric and positive definite for all k.

We can now give a new BFGS algorithm using new secant relation (20), as Algorithm 1.

Algorithm 1: The new modified BFGS method.

Step 1: Give \( \varepsilon \) as a tolerance for convergence, \(\sigma _1 \in (0,1),\) \(\sigma _2\in (\sigma _1,1),\) a starting point \(x_0 \in \mathbb {R} ^n,\) and a positive definite matrix \(B_0.\) Set \(k = 0\).

Step 2: If \(\Vert g_k \Vert <\varepsilon \) then stop.

Step 3: Compute a search direction \( d_k\): Solve \(B_k d_k =- g_k.\)

Step 4: Compute the step length \(\alpha _k\) satisfying the following Wolfe conditions:

Step 5: Set \(x_{k+1} = x_k + \alpha _k d_k\). Compute \(s_{k}^{*}\) and \(y_{k}^{*}\) by (30) and (31) respectively, with \(\theta \) given by (27). If \({s_{k}^{*}}^\mathrm{T} y_{k}^{*}< 10^{-4} \Vert s_{k}^{*}\Vert \Vert y_{k}^{*}\Vert \) then set \( s_{k}^{*}=s_k\) and \(y_{k}^{*}=y_k.\)

Step 6: Update \(B_{k+1}\) by (32).

Step 7: Set \(k = k + 1\) and go to Step 2.

1pc

Next, we will investigate the global convergence of Algorithm 1.

3 Convergence analysis

To establish the global convergence of the Algorithm 1, we need some commonly used assumptions.

Assumption A

(i) The level set \(D = \{x \mid f(x) \le f(x_0)\}\) is bounded, where \(x_0\) is the starting point of Algorithm 1.

(ii) The function f is twice continuously differentiable and there is constant \(L> 0,\) such that

It is clear that Assumption A implies

where m is a positive constant

Since \(B_k\) is a approximate G(x) at \(x_k,\) similar to Yuan and Wei (2009) and Zhu (2005) we give the following assumption.

Assumption B

Assume that \(B_k\) is a good approximation to G(x) at \(x_k.\), i.e.,

where \( \varepsilon _k \in (0, 1)\) are suitable quantities.

On the other hand, we have

Hence, we can give

where \(\gamma = \varepsilon _k+m.\)

Using Assumption A and the Wolfe conditions, \(\lbrace f(x_k)\rbrace \) is a nonincreasing sequence, which ensures \(\lbrace x_k \rbrace \subset D\) and the existence of \(x^{*}\) such that

To establish the global convergence of Algorithm 1, we present the following useful Lemmas.

Lemma 3.1

Let f satisfies assumptions A and B, and \(\{x_k\}\) be generated by Algorithm 1 and there exist constants \(a_1\) and \(a_2\) such that

for infinitely many k. Then, we have

Proof

Since \(s_{k}= \alpha _k d_k,\) it is clear that (39) holds true if \(s_{k}\) is replaced by \(d_k\). From (39) and the relation \(g_{k} = - B_{k}d_k,\) we have

Let \(\Lambda \) be the set of indices k for which (39) hold. Using (34) and Assumption asB, we have

This implies that, for any \(k \in \Lambda ,\)

Moreover, by (38), we obtain

which yields

Using (33), we get

which ensures

This together with (43) lead to

which a long with (41), yields (40). \(\square \)

Now, we prove the global convergence of Algorithm 1.

Theorem 3.1

Let f satisfy the assumptions A and B and \(\lbrace x_k\rbrace \) be generated by Algorithm 1. Then, we have

Proof

Using Lemma 3.1, it is sufficient to show relation (39) holds for infinitely many k. Using (37), we have

Since \(B_{k},\) in Algorithm 1 is symmetric and positive definite then there exists \(a_2\) such that

Then, Lemma 3.1 completes the proof. \(\square \)

4 Numerical results

We compare the performance of the following four methods on some unconstrained optimization problems:

MBFGS: proposed method (Algorithm 1).

BFGS: the usual BFGS method using (3) [2].

BFGS\(A_k(2)\): the modified BFGS method of Wei et al. using (5) Wei et al. (2006).

MBFGS\(A_k(2)\): the modified BFGS of Yuan and Wei using (7) Yuan and Wei (2010).

1pc We have tested all the considered algorithms on 120 test problems from CUTEr library Gould et al. (2003). A summary of these problems are given in Table 1. All the codes were written in Matlab 7.14.0.739 (2012a) and run on PC with CPU Intel(R) Core(TM) i5-4200 3.6 GHz, 4 GB of RAM memory and Centos 6.2 server Linux operating system. In the four algorithms, the initial matrix is set to be the identity matrix and \(\varepsilon = 106\). In Algorithm 1 we set \(\sigma _1 =0.01,\) and \(\sigma _2 =0.9 \) and \(\delta =10^{-6}\).

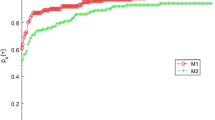

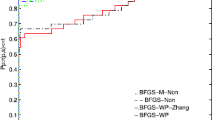

We used the performance profiles of Dolan and More (2002) to evaluate performance of these four algorithms with respect to CPU time, the number of iterations and the total number of function and gradient evaluations computed as \(N_f + nN_g\) where \(N_f\) and \(N_g\), respectively, denote the number of function and gradient evaluations (note that to account for the higher cost of \(N_g\), as compared to \(N_f\) the former is multiplied by n).

Figures 1, 2 and 3 demonstrate the results of the comparisons of the four methods. From these figures, it is clear that Algorithm 1 (MBFGS) is the most efficient in solving these 120 test problems.

5 Conclusion

We introduced a modified BFGS (MBFGS) method using a new secant equation. An interesting feature of the proposed method was taking both the gradient and function values into account. Another important property of the MBFGS method was the utilization of information from the two most recent steps instead of the last step alone. Under suitable assumptions, we established the global convergence of the proposed method. Numerical results on the collection of problems from the CUTEr library showed the proposed method to be more efficient as compared to several proposed BFGS methods in the literature.

References

Broyden CG (1965) A class of methods for solving nonlinear simultaneous equations. Math Comput 19:577–593

Broyden CG (1970) The convergence of a class of double rank minimization algorithms-Part 2: the new algorithm. J Inst Math Appl 6:222–231

Byrd R, Nocedal J (1989) A tool for the analysis of quasi-Newton methods with application to unconstrained minimization. SIAM J Numer Anal 26:727–739

Byrd R, Nocedal J, Yuan Y (1987) Global convergence of a class of quasi-Newton methods on convex problems. SIAM J Numer Anal 24:1171–1189

Dai Y (2003) Convergence properties of the BFGS algorithm. SIAM J Optim 13:693–701

Dennis JE, More JJ (1974) A characterization of superlinear convergence and its application to quasi-Newton methods. Math Comput 28:549–560

Dennis JE, Schnabel RB (1983) Numerical methods for unconstrained optimization and nonlinear equations. Prentice-Hall, Englewood Cliffs

Dolan E, More JJ (2002) Benchmarking optimization software with performance profiles. Math Program 91:201–213

Fletcher R (1970) A new approach to variable metric algorithms. Comput J 13:317–322

Ford JA, Saadallah AF (1987) On the construction of minimisation methods of quasi-Newton type. J Comput Appl Math 20:239–246

Goldfarb D (1970) A family of variable metric methods derived by variational means. Math Comput 24:23–26

Gould NIM, Orban D, Toint PhL (2003) CUTEr, a constrained and unconstrained testing environment, revisited. ACM Trans Math Softw 29:373–394

Griewank A (1991) The global convergence of partitioned BFGS on problems with convex decompositions and Lipschitzian gradients. Math Program 50:141–175

Li DH, Fukushima M (2001) On the global convergence of BFGS method for nonconvex unconstrained optimization problems. SIAM J Optim 11:1054–1064

Li DH, Fukushima M (2001) A modified BFGS method and its global convergence in nonconvex minimization. J Comput Appl Math 129:15–35

Mascarenhas WF (2004) The BFGS method with exact line searches fails for non-convex objective functions. Math Program 99:49–61

Nocedal J, Wright SJ (2006) Numerical optimization. Springer, New York

Pearson JD (1969) Variable metric methods of minimization. Comput J 12:171–178

Powell MJD (1976) Some global convergence properties of a variable metric algorithm for minimization without exact line searches. In: Cottle RW, Lemke CE (eds) Nonlinear programming. SIAMAMS proceedings, vol IX. SIAM, Philadelphia

Shanno DF (1970) Conditioning of quasi-Newton methods for function minimization. Math Comput 24:647–656

Toint PL (1986) Global convergence of the partioned BFGS algorithm for convex partially separable opertimization. Math Program 36:290–306

Wei Z, Li G, Qi L (2006) New quasi-Newton methods for unconstrained optimization problems. Appl Math Comput 175:1156–1188

Yuan G, Wei Z (2010) Convergence analysis of a modified BFGS method on convex minimizations. Comput Optim Appl 47:237–255

Yuan G, Sheng Z, Wang B, Hu W, Li C (2018) The global convergence of a modified BFGS method for nonconvex functions. J Comput Appl Math 327:274–294

Yuan G, Wei Z (2009) BFGS trust-region method for symmetric nonlinear equations. J Comput Appl Math 230:44–58

Yuan G, Wei Z, Lu X (2017) Global convergence of BFGS and PRP methods under a modified weak Wolfe–Powell line search. Appl Math Model 47:811–825

Zhang JZ, Deng NY, Chen LH (1999) New quasi-Newton equation and related methods for unconstrained optimization. J Optim Theory Appl 102:147–167

Zhang JZ, Xu CX (2001) Properties and numerical performance of quasi-Newton methods with modified quasi-Newton equation. J Comput Appl Math 137:269–278

Zhu DT (2005) Nonmonotone backtracking inexact quasi-Newton algorithms for solving smooth nonlinear equations. Appl Math Comput 161:875–895

Acknowledgements

We would like to thank the referees and the associate editor, whose very helpful suggestions led to much improvement of this paper.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Joerg Fliege.

Rights and permissions

About this article

Cite this article

Dehghani, R., Bidabadi, N. & Hosseini, M.M. A new modified BFGS method for unconstrained optimization problems. Comp. Appl. Math. 37, 5113–5125 (2018). https://doi.org/10.1007/s40314-018-0620-8

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40314-018-0620-8