Abstract

We study the numerical approximation of a homogeneous Fredholm integral equation of second kind associated with the Karhunen–Loève expansion of Gaussian random fields. We develop, validate, and discuss an algorithm based on the Galerkin method with two-dimensional Haar wavelets as basis functions. The shape functions are constructed from the orthogonal decomposition of tensor-product spaces of one-dimensional Haar functions, and a recursive algorithm is employed to compute the matrix of the discrete eigenvalue system without the explicit calculation of integrals, allowing the implementation of a fast and efficient algorithm that provides considerable reduction in CPU time, when compared with classical Galerkin methods. Numerical experiments confirm the convergence rate of the method and assess the approximation error and the sparsity of the eigenvalue system when the wavelet expansion is truncated. We illustrate the numerical solution of a diffusion problem with random input data with the present method. In this problem, accuracy was retained after dropping the coefficients below a threshold value that was numerically determined. A similar method with scaling functions rather than wavelet functions does not need a discrete wavelet transform and leads to eigenvalue systems with better conditioning but lower sparsity.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

A large class of random processes, stationary or non-stationary, can be expressed in terms of deterministic orthogonal functions and uncorrelated random variables through Karhunen–Loève (K–L) expansion (Karhunen 1946; Loève 1955). This representation is obtained from the eigenvalues and eigenfunctions of a homogeneous Fredholm integral equation of the second kind whose kernel is given by the covariance function of the random process. Once these eigenpairs are found, the K–L expansion is constructed, roughly speaking, from a linear combination of the eigenfunctions whose weights are independent and identically distributed random variables. This approach has been pioneered by Ghanem and Spanos (1991) and is widely used for modeling uncertainty in problems of elasticity, heat and mass transfer, fluid mechanics, and acoustics (Azevedo et al. 2012; Motamed et al. 2013; Zhang and Lu 2004).

The numerical solution of the Fredholm integral equation becomes more involving in two-dimensional domains. Several methods have been proposed in literature in this case, such as Nyström/collocation methods (Liang and Lin 2010; Xie and Lin 2009), finite element methods (Frauenfelder et al. 2005; Oliveira and Azevedo 2014), and wavelet Galerkin methods (Babolian et al. 2011; Derili et al. 2012).

The practical use of K–L expansion depends of an accurate and efficient computation of eigenpairs. In this sense, one promising technique that has been explored in the one-dimensional case is the Galerkin method with Haar wavelets using the pyramid algorithm (Mallat 1989) to compute the entries of the discrete eigenvalue problem. This method has been shown to be superior to Galerkin methods with polynomial and trigonometric basis functions (Phoon et al. 2002). In this study, we extend the work carried out in Phoon et al. (2002), Stefanou and Papadrakakis (2007) by constructing a 2D Haar wavelet basis for the computation of the eigenpairs of covariance functions with a two-dimensional domain. Rather than the tensor-product basis employed in Babolian et al. (2011), Derili et al. (2012), we consider the non-standard form (Beylkin et al. 1991, eq. (2.14)) (see also Proppe 2012), which is constructed from the orthogonal decomposition of the tensor-product space. Such a basis is suitable to the pyramid algorithm. Moreover, the non-standard form increases the sparsity of the matrix representation of the kernel (Beylkin et al. 1991, Figs. 2–3 and 9–10). In addition to the detailed extension from 1D to 2D, we compare the Galerkin methods with Haar wavelets and Haar scaling functions (the latter is equivalent to the piecewise-constant finite element method Azevedo and Oliveira 2012; Frauenfelder et al. 2005) in terms of stability and efficiency.

The paper is organized in the following way. In Sect. 2, we introduce the K–L expansion and review the one-dimensional Haar functions and the Galerkin method. In Sect. 3, we construct the two-dimensional Haar wavelet basis and the discrete wavelet transform of two-dimensional covariance kernels. In Sect. 4, we carry out several numerical tests to probe the accuracy and the efficiency of the method: we evaluate the approximation error of the eigenvalues as well as the eigenfunctions (by means of the ensemble variance of K–L expansion Stefanou and Papadrakakis 2007); we investigate the effect of neglecting matrix coefficients below a prescribed threshold value (Stefanou and Papadrakakis 2007) in the approximation error and sparsity of the eigenvalue system; we consider the five-spot problem on stationary and heterogeneous media, in which the approximate eigenpairs are employed in a truncated K–L expansion of the log hydraulic conductivity field; and we contrast the computational cost and numerical conditioning of the bases with Haar wavelets and Haar scaling functions.

The following notations will be employed throughout this work. The index i is used for eigenvalues and eigenfunctions, as in (1); the indices \(k\) and \(l\) are used for matrices and vectors, as in (10); the index \(j\) is used for wavelet scaling and the indices \(n\) and \(m\) are used for wavelet translations, as in (12); and the index \(s\) is used to identify elements of a direct sum, as in (15).

2 Preliminary concepts

Let us first describe the Fredholm eigenvalue problem in a general setting. Let D be a compact subset of \(\mathbb {R}^d\), \(d=1,2,3\). We consider the Hilbert space \(L^2(D)\) of real-valued functions equipped with the usual inner product

Let \(C:D\times D\rightarrow \mathbb {R}\) be a symmetric and nonnegative-definite covariance kernel, i.e., for any finite subset \(\tilde{D}\subset D\) and for any function \(u:\tilde{D}\rightarrow \mathbb {R}\),

We have that C admits the spectral decomposition

where the nonnegative, monotonically decreasing eigenvalues \(\lambda _{i}\) and the orthonormal eigenfunctions \(u_{i}\) (\(i\ge 1\)) are the solutions of the homogeneous Fredholm integral equation

Furthermore, let \(Y:D \times \Omega \rightarrow \mathbb {R}\) be a second-order random field with expectation

and two-point covariance

where \(\Omega \) represents the set of outcomes and \(\mu \) is a probability measure. If the covariance kernel \(C(\varvec{x},\varvec{y})\) satisfies the above assumptions, then Y can be written as

in that \(\xi _{i}(\omega )\) are independent and identically distributed random variables (Loève 1978). This representation is known as the Karhunen–Loève (K–L) expansion. On the other hand, the truncated K–L expansion is given as

where \(M\) is known as the stochastic dimension of the truncated field. Choosing a large value for M demands a higher computational effort to compute and store the eigenfunctions, whereas choosing a small value for M may lead to an inaccurate representation of the random field.

2.1 1D Haar functions

The Haar system of orthonormal functions is built from the scaling function \(\phi (t)\) and the mother wavelet \(\psi (t)\) defined as

These functions satisfy the refinement equations

From \(\phi \) and \(\psi \) we define the functions \(\phi _{j,n}(x)=2^{j/2}\phi (2^{j}x-n)\) and \(\psi _{j,n}(x)=2^{j/2}\psi (2^{j}x-n)\), where the integers \(j\) and \(n\) denote the scaling and translation parameters, respectively. Using the refinement equations (4), we obtain

The vector spaces \(V_j\) spanned by \(\{\phi _{j,n}(t)\}_{n\in \mathbb {Z}}\) satisfy

constituting a multi-resolution analysis, whereas the vector spaces \(W_j\) spanned by \(\{\psi _{j,n}(t)\}_{n\in \mathbb {Z}}\) serve as orthogonal complements between consecutive levels \(j\) and \(j+1\), i.e.,

In particular, the following orthogonality relations hold:

2.2 Variational formulation and Galerkin approximation

Let us consider the variational formulation of the Fredholm integral equation (2): find \(\lambda _{i}\in \mathbb {R}\) and \(u_{i}(\varvec{x}) \in L^2(D)\) \((i= 1,2,\ldots )\) such that

Let \(\mathcal {W}_h\) be a finite-dimensional subspace of \(L^2(D)\) with \(\text{ dim }\mathcal {W}_h=N\). The Galerkin approximation to (8) in \(\mathcal {W}_h\) consists of finding \(\lambda _{i}^h\in \mathbb {R}\) and \(u_{i}^h \in \mathcal {W}_h\) \((1\le i\le N)\) such that

Given a basis \(\{v_1,\ldots , v_{N}\}\subset L^2(D)\) for \(\mathcal {W}_h\), let us write the approximate eigenfunction \(u^h_{i}\) as follows:

It follows from formulation (9) that \(\varvec{u}_{i}=[u_{1,i},\ldots ,u_{N,i}]^T\) satisfies the generalized eigenvalue problem \(\varvec{C}\varvec{u}_{i} = \lambda _{i}^h\varvec{W}\varvec{u}_{i}\), where the matrices \(\varvec{C}\) and \(\varvec{W}\) are defined by the coefficients

If the basis functions \(v_1,\ldots , v_{N}\) are orthonormal, the problem reduces to the standard eigenvalue problem \(\varvec{C}\varvec{u}_{i} = \lambda _{i}^h\varvec{u}_{i}\).

Given \(J>0\), we have from (6) and (7) the relation \(V_{J} = V_0\oplus W_0\oplus W_1 \oplus \cdots \oplus W_{J-2} \oplus W_{J-1}\). If we restrict to a bounded domain, then spaces \(V_j\) and \(W_j\) become finite dimensional. In particular, for \(D=[0,1]\) we have

This motivates the choices \(N=2^{J}\) and \(\mathcal {W}_h=V_{J} = \text{ span }\{ v_1, v_2, \ldots , v_{N}\}\), where \(v_1(x)=\phi _0(x)\) and \(v_{k}(x) = \psi _{j, n}(x)\) for \(k>1\), where \(k= 2^{j} + n\), with \(n= 0, 1, \ldots , 2^{j} - 1\) and \(j= 0, 1, \ldots , J-1\). Taking into account that the truncated K–L expansion (3) requires at least M eigenpairs, we select \(N\ge M\).

3 Two-dimensional Haar basis

Following Mallat (1989), we consider the 2D multi-resolution analysis \(\cdots \subset V_{-1}\subset V_0 \subset V_{1} \subset \cdots \) formed by the tensor-product spaces

and the orthogonal decompositions of the spaces \(V_{j+1}^x\) and \(V_{j+1}^y\) given by (7):

where \(W_j\) is defined as

Taking into account that

we find

Consequently, we obtain

where

By successively using (11), we find \(V_{J} = V_0\oplus W_0\oplus W_1 \oplus \cdots \oplus W_{J-2} \oplus W_{J-1}\). Note that \(V_0 = \text{ span }\{\psi ^{(0)}_{0,n,m}(x,y)\}_{n,m\in \mathbb {Z}}\), with

As in the 1D case, when we restrict ourselves to the domain \(D=[0,1]\times [0,1]\), the space \(V_{J}\) becomes finite dimensional. We choose

and

where the indices \(0\le j\le J-1\), \(0\le n,m\le 2^{j}-1\), and \(s=1,2,3\) are related to the global index \(k\) as follows:

Figure 1 illustrates the basis functions \(v_{k}(x,y)\) for \(2\le k\le 4\) and \(5\le k\le 7\). These indices correspond to \(j=0\) and \(j=1\), respectively, when \(n=m=0\) and \(1\le s\le 3\). Note that the basis functions are ordered in such a way that the functions which share the same support are grouped together.

From (5), (12), and (13), we find the following refinement equations for the 2D functions:

3.1 Constructing the discrete eigenvalue system

To compute the entries \(C_{k,l}\) defined in (10) of the discrete eigenvalue system, let us recast these entries in tensor form:

These coefficients are related to \(C_{k,l}\) as follows:

The first step is to compute \(C^{0,J,n_{k},m_{k}}_{0,J,n_{l},m_{l}}\) for \(0\le n_{k},m_{k},n_{l},m_{l}<2^{J}\):

where \(D_{p}=[n_{p}2^{-J},(n_{p}+1)2^{-J}]\times [m_{p}2^{-J},(m_{p}+1)2^{-J}]\), \(p=k,l\). We approximate the integral above by the rectangle rule:

Recalling that \(V_0 = \text{ span }\{\psi ^{(0)}_{0,n,m}(x,y)\}_{n,m\in \mathbb {Z}}\), we have that the entries in (23) define the discrete eigenvalue system \(\varvec{C}\varvec{u}_{i} = \lambda _{i}^h\varvec{u}_{i}\) corresponding to the Galerkin method with the space \(\mathcal {W}_h = V_0\). In the following we describe a pyramid algorithm to recover the remaining coefficients without further numerical integration.

We start using the refinement equations (17)–(20) and the bilinearity of \(a(\cdot ,\cdot )\) to compute, for each \(0\le n_{l},m_{l}<2^{J}\), the coefficients \(C^{s_{k},j_{k},n_{k},m_{k}}_{0,J,n_{l},m_{l}}\) for \(0\le n_{k},m_{k}<2^{j_{k}}\), \(0\le s_{k}\le 3\), and \(j_{k}=J-1,\ldots ,0\). For instance, we have from (17 18 19) that

Afterwards, we let \(j_{l}\) vary from \(J-1\) to 0 following the same procedure. Altogether, we have the following algorithm:

As pointed out in Beylkin et al. (1991), the complexity of this algorithm is \(\mathcal {O}(N^2)\), \(N=2^{2J}\). Once we obtained the coefficients \(C_{k,l}\) in (22), we determine the eigenvalues \(\lambda _{i}^h\) and eigenvectors \(c_l^{(i)}\) in (9).

Remark 1

For implementation purposes, the coefficients in (21) can be written as matrices with the aid of global indices similarly to (16):

Remark 2

Let us compare the computational effort of the above algorithm with the standard finite element method of degree p for (8). If we employed a mesh with \(h\times h\) square elements \(\Omega _e\), \(h=2^{-J}\), we would have a total of \(N=2^{2J}\) elements. Let us denote the i-th global shape function as \(N_i(\varvec{x})\). The components of the system matrix \(\varvec{C}\) in (10) may be computed as follows:

where \(J_e(\varvec{\xi })\) is the Jacobian determinant of the transformation from element \(\Omega ^e\) to the reference element \(\hat{\Omega }=[-1,1]\times [-1,1]\), \(n_\mathrm{int}\) is the number of integration points in each spatial direction, and \(\{\varvec{\xi }_j,w_j\}\) are the integration points and weights of the \(n_\mathrm{int}\times n_\mathrm{int}\) product Gaussian quadrature in \(\hat{\Omega }\).

In the assembly step of a standard finite element algorithm, we compute, for \(k,l=1,\ldots ,(p+1)^2\), the elemental contributions

where \(\hat{N}_{i}(\varvec{\xi })\) is the i-th local shape function in the reference element \(\hat{\Omega }\). Note that the number of terms in the form \(C_{kl}^{e,e'}(j,j')\) is \(n_\mathrm{int}^4(p+1)^4N^2\). Although this procedure is also \(\mathcal {O}(N^2)\), the dependence on \(n_\mathrm{int}\) and on p renders its computational cost prohibitive, as illustrated in Table 1.

4 Numerical experiments

Let us proceed with the numerical validation of the proposed algorithm. The numerical experiments were carried out in a dual-core notebook with 8 Gb RAM and a 2.30 GHz Intel Core i5 processor. We consider the domain \(D = [0,1]\times [0,1]\) and the exponential covariance function

where the parameters \(\eta \) and \(\sigma ^2\) are the correlation scale and variance, respectively. The eigenvalues and eigenfunctions of this covariance function are, respectively, \(\lambda _{i} = \lambda _{i_1}^{1\mathrm{D}}\lambda _{i_2}^{1\mathrm{D}}\) and \(u_{i}(x_1,x_2) = u_{i_1}^{1\mathrm{D}}(x)u_{i_2}^{1\mathrm{D}}(x_2)\), where

Eigenvalues of the exponential covariance function (24) with variance \(\sigma ^2=1\) and correlation length \(\eta = 0.1\), 0.4 and 1

Index \(i=i(i_1,i_2)\) is set to arrange the eigenvalues \(\lambda _{i}\) in decreasing order. Moreover, parameters \(\gamma _1,\gamma _2,\ldots \) satisfy \((\eta ^2\gamma ^2 - 1)\sin (\gamma ) = 2\eta \gamma \cos (\gamma )\). Since these eigenpairs are well known (see, e.g., Ghanem and Spanos 1991), the exponential kernel (24) is a common benchmark for Fredholm integral eigenvalue problems (Schwab and Todor 2006; Zhang and Lu 2004), although it is not suitable for representing stationary processes due to its non-differentiability at the origin (Spanos et al. 2007). As in Zhang and Lu (2004) we choose \(\sigma =1\) and three different values of the correlation length (\(\eta = 0.1,\ 0.4\) and 1). Figure 2 shows the eigenvalues \(\lambda _n\), whose decay is faster as the correlation length \(\eta \) increases.

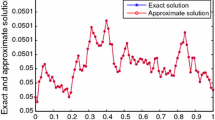

The eigenvalue problem (2) with covariance kernel (24) is discretized with the Galerkin scheme (9) with refinement level \(J\), i.e., with the finite-dimensional space (14), (15). Eigenvalues \(\lambda ^{h}_{i}(J)\) are compared with exact solutions \(\lambda _{i}\) in terms of relative error (see Fig. 3),

for each \(\lambda _i\) (\(i= 1,\) and 10). The error curves have a decay of order \(\mathcal {O}(h^2)\), \(h = 2^{-J}\), coinciding with the estimates obtained from Oliveira and Azevedo (2014, p. 50) for two-dimensional, piecewise-constant finite elements with reduced integration. A similar order of convergence has also been observed in the one-dimensional case by Phoon et al. (2002).

It is worth noting that the required computational effort dramatically increases in the two-dimensional case. At the refinement level J, the eigenvalue system has dimensions \(N\times N\) (\(N=2^{2J}\)) and demands \(\mathcal {O}(N^2)\) operations to be constructed. As J increases, memory soon becomes an issue, and strategies to render the system matrix sparse (as discussed in Sect. 4.2) become important.

Relative errors of the 2D Haar approximation of the a first and b tenth eigenvalues of the exponential covariance function (24) with variance \(\sigma ^2=1\) and correlation length \(\eta = 0.1\), 0.4 and 1

Let us study the eigenfunction error as well. For simplicity, we consider the relative error of the first eigenfunction only (which is associated with an eigenvalue of multiplicity one). Following Spence (1978, 1979), we consider the infinity norm. Figure 4 shows a monotone decreasing of the error for all correlation lengths. The convergence rate was again \(\mathcal {O}(h^2)\), \(h = 2^{-J}\). Such a result is consistent with the experiments reported in Spence (1978, 1979) for Nyström method with the trapezoidal rule.

Relative error of the 2D Haar approximation of the first eigenfunction of the exponential covariance function (24) with variance \(\sigma ^2=1\) and correlation length \(\eta = 0.1\), 0.4 and 1

4.1 Ensemble variance of K–L expansion

In our next experiment, similarly to the one presented by Stefanou and Papadrakakis (2007), we evaluate the Haar approximation of the ensemble variance of the truncated process \(Y_{M}\),

with \(M\le N,\) and admitting the variance target \(\sigma ^2=1\). In Figs. 5, 6 and 7 we employ the refinement level \(J= 5\) and display the ensemble variance profiles along the diagonal and the horizontal directions. The profiles along the vertical direction were identical to the horizontal profiles and are not shown here. As shown in Figs. 6 and 7, the variance of \(Y^{h}_{M}\) converges faster to the reference variance in strongly correlated random fields, i.e., the number of terms needed in the K–L expansion to provide the correct variance decreases with the correlation length, consistently with the experiments in the one-dimensional case presented in Stefanou and Papadrakakis (2007). In fact, since eigenvalues decrease faster as the correlation length increases (Fig. 2), the final terms \(\lambda _{i}^h(u_{i}^h(\varvec{x}))^2\) in sum (26) become less significant.

In analogy with Huang et al. (2001, Fig. 8), Fig. 8 compares the numerical ensemble variance \(Y^{h}_{M}\) at the origin with the analytical ensemble variance \(Y_{M}\), i.e.,

It is interesting to note that \(\mathrm{Var}(Y^{h}_{M}(\varvec{0}))\) approaches the target variance more rapidly than \(\mathrm{Var}(Y_{M}(\varvec{0}))\). We also noted that the eigenvalues obtained by Haar basis are larger than those generated by analytical solution for each stochastic dimension \(M\), as also reported in Phoon et al. (2002) for the one-dimensional case.

4.2 Sparsity of the matrix

The advantage of using a basis of wavelet functions rather than scaling functions (i.e., a basis derived from \(V_0\otimes W_0\otimes \cdots \otimes W_{J-1}\) instead of \(V_{J}\)) is the flexibility to reduce the number of parameters in the representation of the covariance without necessarily loosing the fine scale details. Specifically, we can render the matrix \(\varvec{C}\) sparse by dropping matrix coefficients below a threshold value \(\epsilon \) (Beylkin et al. 1991; Stefanou and Papadrakakis 2007), leading to a truncated matrix \(\varvec{C}_{\epsilon }\). We employ \(J= 5\), as in the previous experiments and select \(M= 2^{10}\).

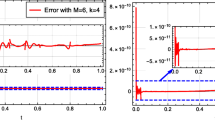

Let us first investigate how the accuracy of the eigenfunctions is affected by truncation. Figure 9 shows the relative change \(r_{\epsilon } = |\lambda _{i}^{h}(J) -\lambda _{i}^{h}(J,\epsilon )|/|\lambda ^{h}_{i}(J)|\) (\(J=5\)) of the first and tenth eigenvalues of the truncated matrix \(\varvec{C}_{\epsilon }\) with respect to the eigenvalues of the full matrix \(\varvec{C}\). In particular, the threshold value \(\epsilon = 10^{-6}\) provides comparatively good approximations for these eigenvalues. For instance, the relative change of the tenth eigenvalue for \(\eta =1\), namely \(1.03\times 10^{-4}\), is 0.7% of the relative error \(1.4\times 10^{-2}\) shown in Fig. 3b.

Figure 10 presents the dependence of the ensemble variance (26) on the threshold value \(\epsilon \) when \(M= N/2\) \((N=2^{10})\) and \(\eta = 0.1\). Again the threshold value \(\epsilon =10^{-6}\) provides sufficient accuracy. Similarly to Stefanou and Papadrakakis (2007, Fig. 1), the higher value \(\epsilon = 10^{-4}\) leads to numerical instability. Indeed, we observed that the matrix ceases to be positive definite for this threshold value.

Now we consider the sparsity of the truncated matrix \(\varvec{C}_{\epsilon }\). Figure 11 shows the number of nonzero elements in \(\varvec{C}_{\epsilon }\) for bases with scaling functions and wavelets. The sensitivity of the matrix \(\varvec{C}\) to the threshold value \(\epsilon \) is higher in the wavelet basis, and the matrix of the scaling function basis becomes sparse only from \(\epsilon = 10^{-4}\) onwards.

Figure 12 shows the sparsity pattern for both bases when \(\epsilon = 10^{-6}\) and \(\eta = 0.1\). In this case, the wavelet basis requires about half the storage of the basis of scaling functions. One can also notice the typical finger pattern for the wavelet basis (Beylkin et al. 1991).

4.3 Example: quarter five-spot problem

We now present a steady-state diffusion problem in a randomly heterogeneous medium with the classical quarter of a five-spot arrangement. The boundary value problem governing this model is stated as:

The log hydraulic conductivity \(Y(\varvec{x}; \omega ) = \log (\kappa (\varvec{x}; \omega ))\) is a Gaussian field with mean zero and covariance function of exponential type (24) with variance \(\sigma ^2=1\) and correlation length \(\eta = 0.1,\ 0.4, 1 [L]\), where [L] is a consistent length unit (Zhang and Lu 2004). The domain \(D=[0,L_1]\times [0, L_2]\), with \(L_1=L_2=1 [L]\), has no-flow boundaries at the four sides. The source term consists of one injection well at the lower left corner and one pumping of source at the upper right corner, with strength \(1 [L^3/T]\) (herein [T] is a consistent time unit) (Fig. 13).

The reference solution was computed by the Monte Carlo method with \(N_r = 20{,}000\) samples generated from a Gaussian sequential simulation (for details see Zhang 2002, p. 190). For each realization, we employed bilinear finite elements in a mesh of \(2^{J}\times 2^{J}\) elements, with \(J=5\). The statistical moments of reference solution are computed from

Afterwards, we compute the samples \(p_{M}(\varvec{x};\varvec{\xi }^{(i)})\), \(1\le i\le 5000\), in the case where the log-conductivity is given by the truncated K–L expansion \(Y_{M}(\varvec{x};\varvec{\xi }^{(i)})\) in (3), and the eigenpairs of (2) are approximated with the Galerkin method with 2D Haar wavelet basis functions. We evaluate the mean \(\mu _{p}(\varvec{x})\) and variance \(\sigma _{p}^{2}(\varvec{x})\) of these samples as in (28). It is important to note that the Haar basis allows other methods for quantification of uncertainty, such as the quasi-Monte Carlo and sparse grid collocation methods (Azevedo and Oliveira 2012), but this is not the focus of study in this paper. Once these statistical moments are obtained, we graphically illustrate the quality of the approximation by plotting profiles along the diagonal line considering \(M=2^{-5}N,2^{-1}N,\; N=2^{2J}\).

Figures 14, 15 and 16 show the mean and variance of the approximate hydraulic head with correlation lengths \(\eta = 0.1,\ 0.4\), and 1[L] respectively. In all cases, the mean exhibits minimum and maximum values at the injection and production well, respectively, and the variance shows a singular peak in vicinity of the plateau away from them. In Fig. 14 we observed a slight discrepancy between stochastic dimensions, specially for \(M=2^{-5}N\). This can be due to the correlation length which induces loss of regularity and accuracy of the numerical solution, because of the high variability of the media. However, the mean and variance exhibit good agreement with the reference solution when the correlation length increases (see Figs. 15, 16), except near the wells where strong gradients are observed.

The last experiment in this section consists of repeating the simulation in the case of shortest correlation length (\(\eta =0.1 [L]\)), but computing the truncated K–L expansion from the sparse covariance matrix associated to the threshold value \(\epsilon =10^{-6}\). As expected, the statistical moments of this solution (Fig. 17) are qualitatively similar to the moments that were found with the full covariance matrix (Fig. 14).

4.4 CPU time and condition number

We have seen in Sect. 3.1 that the \(N\times N\) system matrix for the wavelet basis has an additional cost of \(\mathcal {O}(N^2)\) with respect to the system matrix for the basis of scaling functions. On the other hand, Sect. 4.2 pointed out that the wavelet basis provides a sparser system, which not only requires less computer memory, but also is more rapidly processed in iterative solvers (see Beylkin et al. 1991). In this section we evaluate the trade-off between these aspects, taking into account total CPU time and conditioning.

Motivated by the results from the previous sections, we employ the threshold value \(\epsilon = 10^{-6}\), which is also recommended elsewhere (Beylkin et al. 1991). In addition to the exponential covariance (24) with correlation length \(\eta = 0.1\) and \(\eta = 1\), we consider the sinc covariance defined in \([0,1]\times [0,1]\) by

with \(c=10\) and \(c=30\).

Table 2 shows the CPU times (in seconds) \(T_{\psi }\) and \(T_{\phi }\) for building the matrices and finding the \(M=2^{-1}N\) largest eigenpairs with the bases of wavelets and scaling functions, respectively. We employed Matlab’s built-in iterative eigenvalue solver eigs(). The efficiency of the wavelet basis becomes apparent at \(J=7\), for which the total CPU time can reduce to nearly half the time spent with the basis of scaling functions. Similar results (not shown herein) were found with the exponential kernel and with the sinc kernel with \(c = 30\).

In Table 3, we compute the condition numbers relative to the wavelet basis (\(\kappa (\varvec{C}_{\psi })\)) and scale basis (\(\kappa (\varvec{C}_{\phi })\)). As noted in Stefanou et al. (2005) for both separable and Gaussian exponential covariances, matrix truncation deteriorates the conditioning of the eigensystem. An increase in the threshold value \(\epsilon \) makes the conditioning worse, as seen in Sect. 4.2. We further notice that the basis with scaling functions is generally robust under truncation.

5 Conclusions

In this paper, we studied a Galerkin method with bidimensional Haar wavelets to obtain approximate solutions to the truncated K–L expansion.

Many aspects related to the wavelet Galerkin approximation in the one-dimensional case (Stefanou and Papadrakakis 2007; Phoon et al. 2002) have also been observed in two dimensions, specially the strong dependence of the errors on the correlation length. However, the variation of the ensemble variance of the truncated K–L expansion cannot be completely described only in one space dimension (see Fig. 10).

From our numerical experiments with the truncated covariance matrix, we noticed that dropping the coefficients below the threshold value \(\epsilon =10^{-6}\) preserved the accuracy of the method, indicating that this threshold value is sufficient to obtain statistical moments with sufficient accuracy.

Our experiments on CPU time and conditioning suggest that the basis with Haar wavelets becomes more efficient, but less stable than the basis with Haar scaling functions for higher refinement levels (\(J\ge 6\)). Taking into account that bases with scaling functions are also more flexible to handle general meshes and non-rectangular domains (Azevedo and Oliveira 2012; Frauenfelder et al. 2005), scaling functions seem to be a more appropriate choice for multi-dimensional integral equations than wavelet functions.

References

Azevedo JS, Murad MA, Borges MR, Oliveira SP (2012) A space-time multiscale method for computing statistical moments in strongly heterogeneous poroelastic media of evolving scales. Int J Numer Methods Eng 90(6):671–706

Azevedo JS, Oliveira SP (2012) A numerical comparison between quasi-Monte Carlo and sparse grid stochastic collocation methods. Commun Comput Phys 12:1051–1069

Babolian E, Bazm S, Lima P (2011) Numerical solution of nonlinear two-dimensional integral equations using rationalized Haar functions. Commun Nonlinear Sci Numer Simul 16(3):1164–1175

Beylkin G, Coifman R, Rokhlin V (1991) Fast wavelet transforms and numerical algorithms. I. Commun Pure Appl Math 44(2):141–183

Derili HA, Sohrabi S, Arzhang A (2012) Two-dimensional wavelets for numerical solution of integral equations. Math Sci 6:4

Frauenfelder P, Schwab C, Todor R (2005) Finite elements for elliptic problems with stochastic coefficients. Comput Methods Appl Mech Eng 194:205–228

Ghanem R, Spanos P (1991) Stochastic finite element: a spectral aproach. Springer, New York

Huang H, Quek S, Phoon K (2001) Convergence study of the truncated Karhunen–Loeve expansion for simulation of stochastic processes. Int J Numer Methods Eng 52(9):1029–1043

Karhunen K (1946) Zur spektraltheorie stochastischer prozesse. Ann Acad Sci Fennicae 34:1–7

Liang F, Lin FR (2010) A fast numerical solution method for two dimensional Fredholm integral equations of the second kind based on piecewise polynomial interpolation. Appl Math Comput 216(10):3073–3088

Loève M (1955) Probability theory. Van Nostrand, Princeton

Loève M (1978) Probability theory II, 4th edn. Springer, New York

Mallat SG (1989) A theory for multiresolution signal decomposition: the wavelet representation. IEEE Trans Pattern Anal Mach Intell 11(7):674–693

Motamed M, Nobile F, Tempone R (2013) A stochastic collocation method for the second order wave equation with a discontinuous random speed. Numer Math 123(3):493–536

Oliveira SP, Azevedo JS (2014) Spectral element approximation of Fredholm integral eigenvalue problems. J Comput Appl Math 257:46–56

Phoon KK, Huang SP, Quek ST (2002) Implementation of Karhunen–Loève expansion for simulation using a wavelet-Galerkin scheme. Probab Eng Mech 17(3):293–303

Proppe C (2012) Multiresolution analysis for stochastic finite element problems with wavelet-based Karhunen–Loève expansion. Math Probl Eng 2012:1–15

Schwab C, Todor R (2006) Karhunen–Loève approximation of random fields by generalized fast multipole methods. J Comput Phys 217(1):100–122

Spanos P, Beer M, Red-Horse J (2007) Karhunen–Loève expansion of stochastic processes with a modified exponential covariance kernel. J Eng Mech 133(6):773–779

Spence A (1978) Error bounds and estimates for eigenvalues of integral equations. Numer Math 29(2):133–147

Spence A (1979) Error bounds and estimates for eigenvalues of integral equations. ii. Numer Math 32(2):139–146

Stefanou G, Kallimanis A, Papadrakakis M (2005) On the efficiency of the Karhunen–Loève expansion methods for the simulation of Gaussian stochastic fields. In: Proceedings of 9th international conference on structural safety & reliability (ICOSSAR 2005), pp 2503–2508. Rome

Stefanou G, Papadrakakis M (2007) Assessment of spectral representation and Karhunen–Loève expansion methods for the simulation of Gaussian stochastic fields. Comput Methods Appl Mech Eng 196(21–24):2465–2477

Xie WJ, Lin FR (2009) A fast numerical solution method for two dimensional Fredholm integral equations of the second kind. Appl Numer Math 59(7):1709–1719

Zhang D (2002) Stochastic methods for flow in porous media: coping with uncertainties. Academic, San Diego

Zhang D, Lu Z (2004) An efficient, high-order pertubation approach for flow in random porous media via Karhunen–Loève and polynomial expansions. J Comput Phys 194(2):773–794

Acknowledgements

This work was supported by research networks CENPES-PETROBRAS and INCT-GP, and by Brazilian agencies Fundação Araucária (Project number 39.591) and CNPq (Grants 306083/2014-0 and 441489/2014-1).

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Abimael Loula.

Rights and permissions

About this article

Cite this article

Azevedo, J.S., Wisniewski, F. & Oliveira, S.P. A Galerkin method with two-dimensional Haar basis functions for the computation of the Karhunen–Loève expansion. Comp. Appl. Math. 37, 1825–1846 (2018). https://doi.org/10.1007/s40314-017-0422-4

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40314-017-0422-4