Abstract

In this paper, we investigate the numerical treatment of a class of fractional-order delay differential equations. In delay differential equations, the evolution of the state depends on its past values, which increases the complexity of the model. The fractional derivative is defined in the Caputo sense, and the unknown solution is approximated by a truncated series of orthogonal Chelyshkov functions. The resulting algebraic system for the unknown coefficients is then solved, yielding highly accurate solutions. A detailed error analysis is carried out to give some insight into the error bound of the proposed technique. The method is tested on several examples and compared with other related techniques; the results show that it provides accurate solutions at a low computational cost. Thus, the method is a promising and efficient candidate for simulating such problems arising in applications in science.


Introduction

Fractional calculus is a rapidly growing branch of science that has attracted increasing interest in recent years, with applications ranging from physics and engineering to natural phenomena and finance. A steadily growing number of fractional models appear in disciplines such as viscoplasticity, the vibration of earthquake motions, circuits in electrical engineering, and so on. Fractional operators have been used to simulate these phenomena in the form of differential equations, especially with a delayed part, which may represent memory terms. When describing a physical process, one may assume that the process depends only on the present state, which holds for a large class of dynamical processes. This assumption does not hold for all physical processes, however, and imposing it may degrade the analysis of the system. In this situation, it is better to assume that the behavior of the system also depends on information from previous states, which may provide a better simulation but increases the complexity of the model. These modified systems are called delay problems and have many applications in different areas of science and engineering. For example, Ockendon et al. [1] described the behavior and dynamics of a current collection system for an electric locomotive through some delayed systems. Also, Ajello et al. [2] introduced a delay model describing stage-structured population growth with a density-dependent time delay. In addition, some other applications of delayed models in science [3] and biology can be found in [4, 5].

In recent years, there have been several attempts to solve integer and non-integer real-life models of different types. For example, Sedaghat et al. [6] adapted a collocation method based on Chebyshev polynomials for solving delay pantograph differential equations. Also, Bernoulli polynomials have been used to solve pantograph delay differential equations as in [7]. In addition, Adel et al. [8] adapted a collocation method based on Bernoulli polynomials for solving pantograph-type equations of Lane–Emden type with applications in astrophysics. Also, in [9] a delayed version of the famous Ambartsumian model has been solved using Bernoulli functions. Yüzbaşı et al. have used various basis functions to solve generalized and multi-pantograph differential equations through collocation approaches in [10,11,12,13]. Izadi et al. in [14,15,16,17,18,19,20,21] employed some novel techniques for solving different types of equations using various polynomials, with good results. Other methods and models include the neural network method for solving single delay differential equations [22], the Zhou method [23], fractional-order Boubaker polynomials [24], the Taylor wavelet method [25], spectral collocation methods [26, 27], the variational iteration method [28], the one-leg θ-method [29], a hybrid of block-pulse functions and Taylor series [30], the Hermite wavelet method [31], and the Legendre pseudospectral method [32].

Orthogonal functions play an important role in finding numerical solutions to differential equations. These functions convert the solution of the model into the solution of an algebraic system of equations. For example, Saadatmandi et al. [33] adapted the Legendre operational matrix of the fractional derivative defined in the Caputo sense for solving a class of fractional-order differential equations. Also, Maleknejad et al. [34] provided a novel wavelet approach based on Müntz–Legendre polynomials for solving distributed-order fractional differential equations in the time domain. Chebyshev polynomials have also been used to solve differential equations of different types. Li et al. [35] employed the Chebyshev wavelet method for solving nonlinear fractional differential equations. In addition, the second-kind Chebyshev wavelet has been used by Rashidinia et al. [36] to solve a general form of distributed-order fractional differential equations. The (shifted) Chebyshev polynomials have also been utilized by Bhrawy et al. [37] for solving linear fractional differential equations. In addition, the (shifted) Jacobi polynomials have been used to solve fractional differential equations by Behroozifar et al. [38]. Block-pulse functions have been used several times for solving different problems of the fractional type, including by Li et al. [39] for solving fractional differential equations and by Maleknejad et al. [40] for solving nonlinear two-dimensional fractional integro-differential equations.

Additionally, among these functions are the orthogonal Chelyshkov polynomials, which were first presented by Chelyshkov in [41] and have been used for solving differential equations. Using these polynomials, numerical solutions have been found for several models, including linear functional integro-differential equations with variable coefficients [42], weakly singular integral equations [43], Volterra–Fredholm integral equations [44], and two-variable distributed-order fractional differential equations [45]. Moreover, the operational matrix of fractional derivatives based on Chelyshkov polynomials has been considered by Talaei et al. [46] to solve multi-order fractional differential equations. The operational matrix of fractional integration has been proposed by Meng et al. [47] for solving a class of nonlinear fractional differential equations, and Al-Sharif et al. [48] introduced the integral operational matrix of fractional order.

The main aim of this work is to solve the fractional delay differential equations (FDDEs) numerically with an approximation algorithm based on the Chelyshkov functions. Some novel aspects of the designed algorithm are as follows:

- A wide class of delay fractional differential equations is considered, with the fractional derivative defined in the Caputo sense.

- A new method based on the Chelyshkov functions and their differentiation matrix is used to solve this type of equation, dealing with different complexities of the problem.

- The results of the proposed technique are compared with those of other methods to check their correctness, showing the advantage of the proposed technique even for examples whose exact solution depends on the fractional order.

- The error bound for the proposed technique is examined in detail, ensuring that the technique provides convergent solutions.

It is worth mentioning that to the best of our knowledge, this is the first time the proposed technique relying on Chelyshkov functions is being considered for solving this wide class of fractional delay problems. In this paper, we consider the following FDDEs

where \(\gamma \ge \mu >0\) and \(\nu >0\) are known constants denoting the orders of the fractional derivatives, interpreted in the Caputo sense. Also, \(\omega\) and \(\tau (t)\) are the constant and variable delay parameters, \((\omega \,t-\tau (t))\) is the delay argument of the model, and G is a given function. The FDDEs in (1) are supplemented with the initial conditions

Some researchers have studied the existence and uniqueness of solutions of delay fractional differential equations and fractional pantograph equations. We refer the readers to [49,50,51].

This study is organized as follows. In Sect. 2, some preliminary facts regarding the fractional operators are first illustrated. Then, some definitions for the Chelyshkov functions are given from a different perspective. In Sect. 3, the steps of the proposed technique are illustrated in detail to obtain the approximate solution of (1). The error bounds in the weighted \(L^2\) and \(L_{\infty }\) norms are obtained for the present method in Sect. 4. The numerical examples are solved by the suggested method in Sect. 5. In Sect. 6, we give a conclusion for the study.

Some preliminary facts

A review of fractional calculus

In this part, some fundamental information related to fractional calculus will be given. The fractional derivative of f(t) in the Caputo sense is defined as follows [52]:

where \(m-1<\alpha <m\), \(m\in \mathbb {N}\), \(t>0\), \(f\in C_{-1}^{m}\), and \(D=\frac{{\text {d}}}{{\text {d}}t}\). When c is a constant, we have

Moreover, if \(f(t)=t^{\beta }\), then the Caputo fractional derivative of f is given as follows [53]:

A review of Chelyshkov functions

In 2006, Chelyshkov defined a new family of polynomials via the Jacobi polynomials \(P_m^{\alpha ,\beta }(t)\) in [41], which are called Chelyshkov polynomials. These polynomials are defined by

It is also shown in [41] that \(y(z)=z\,\rho _{\ell ,L}(z)\) satisfies the following second-order differential equation

This implies that these polynomials are the solutions of the following differential equation in the Sturm-Liouville form

Chelyshkov polynomials were expressed more explicitly in [41, 42] as follows:

The set of Chelyshkov polynomials \(\{\rho _{\ell ,L}(z)\}_{\ell =0}^L\) for \(L=0,1,\ldots\) forms an orthogonal system on [0, 1] with respect to the weight function \(w(z) = 1\), namely
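The orthogonality property can be checked exactly with rational arithmetic. The sketch below is only illustrative: it assumes the explicit form commonly given for Chelyshkov polynomials, \(\rho _{\ell ,L}(z)=\sum _{j=\ell }^{L}(-1)^{j-\ell }\left( {\begin{array}{c}L-\ell \\ j-\ell \end{array}}\right) \left( {\begin{array}{c}L+j+1\\ L-\ell \end{array}}\right) z^{j}\), and the function names are hypothetical, not taken from the paper. Under this assumption, the Gram matrix on [0, 1] should be diagonal with entries \(1/(2\ell +1)\).

```python
from fractions import Fraction
from math import comb


def chelyshkov_coeffs(ell, L):
    """Integer coefficients c[j] of z**j in rho_{ell,L}(z), j = 0..L.

    Assumed explicit form (see the lead-in); coefficients vanish for j < ell.
    """
    c = [0] * (L + 1)
    for j in range(ell, L + 1):
        c[j] = (-1) ** (j - ell) * comb(L - ell, j - ell) * comb(L + j + 1, L - ell)
    return c


def inner_product(p, q):
    """Exact integral over [0, 1] of p(z) * q(z) with unit weight.

    Uses the closed form: integral of z**(i + j) over [0, 1] is 1 / (i + j + 1).
    """
    return sum(Fraction(a * b, i + j + 1)
               for i, a in enumerate(p)
               for j, b in enumerate(q))


L = 4
basis = [chelyshkov_coeffs(ell, L) for ell in range(L + 1)]
gram = [[inner_product(p, q) for q in basis] for p in basis]

for ell, row in enumerate(gram):
    print(ell, [str(entry) for entry in row])
```

Working with `Fraction` avoids rounding, so off-diagonal entries are exactly zero rather than merely small.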

We now use the substitution \(z=t^{\alpha }\) with \(\alpha >0\) in (5) to get the fractional-order Chelyshkov differential equation. In the singular Sturm–Liouville form, it can be written as follows

where \(\rho ^{\alpha }_{\ell ,L}(t):=\rho _{\ell ,L}(t^{\alpha })\). Note that by setting \(\alpha =1\) in (7) we will recover (5). Thus, after some manipulations in (6), the explicit expression of the solution of (7) becomes as follows

Here, the functions \(\{\rho ^{\alpha }_{\ell ,L}(t)\}_{\ell =0}^L\) for \(L=0,1,\ldots\) are orthogonal with respect to \(w_{\alpha }(t)\equiv t^{\alpha -1}\) for \(0\le t\le 1\) in the sense that

In order to devise the Chelyshkov matrix approach, we constitute the vector of Chelyshkov bases as

Here, \(\pmb {M}^{\alpha }_{L}(t)=\left[ 1\quad t^{\alpha }\quad t^{2\alpha }\quad \ldots \quad t^{L\alpha }\right]\) denotes the monomial basis and \(\pmb {E}_L\) is a lower triangular matrix of size \((L+1)\times (L+1)\). For odd and even values of L, respectively, the matrix \(\pmb {E}_L\) takes the following forms:

and

Since \(\pmb {E}_L\) is triangular, its determinant equals the product of its diagonal elements. These elements are nonzero in both cases, so \(\pmb {E}_L\) is invertible.
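The structure of \(\pmb {E}_L\) can be illustrated numerically. The following sketch assumes the convention \(\pmb {\Psi }^{\alpha }_{L}(t)=\pmb {M}^{\alpha }_{L}(t)\,\pmb {E}_L\) with \(\pmb {M}^{\alpha }_{L}(t)\) a row vector, so that column \(\ell\) of \(\pmb {E}_L\) stores the monomial coefficients of \(\rho _{\ell ,L}\); the coefficient formula and the function names are assumptions made for illustration.

```python
import numpy as np
from math import comb


def chelyshkov_matrix(L):
    """E_L: column ell holds the monomial coefficients of rho_{ell,L}.

    Entries above the diagonal are zero, so the matrix is lower triangular.
    """
    E = np.zeros((L + 1, L + 1))
    for ell in range(L + 1):
        for j in range(ell, L + 1):
            E[j, ell] = ((-1) ** (j - ell)
                         * comb(L - ell, j - ell)
                         * comb(L + j + 1, L - ell))
    return E


def chelyshkov_basis(t, L, alpha=1.0):
    """Row vector Psi(t) = M(t) E_L with M(t) = [1, t^alpha, ..., t^(L alpha)]."""
    M = np.asarray(t, dtype=float) ** (alpha * np.arange(L + 1))
    return M @ chelyshkov_matrix(L)


E2 = chelyshkov_matrix(2)
print(E2)
print("diagonal:", np.diag(E2), "determinant:", np.linalg.det(E2))
print("Psi(1):", chelyshkov_basis(1.0, 2))
```

The diagonal entries are binomial coefficients \(\left( {\begin{array}{c}L+\ell +1\\ L-\ell \end{array}}\right)\) under the assumed formula, which is why none of them can vanish.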

By utilizing our method, we will aim to obtain the approximate solutions of (1) in terms of Chelyshkov functions with fractional powers in the form

Thus, we use the Chelyshkov matrix technique to determine \((L+1)\) unknown coefficients \(a_{\ell }\). In this respect, let us define the vector \(\pmb {A}_L=[a_0\quad a_1\quad \ldots \quad a_L]^t\). With the aid of (9), we are able to rewrite \({\chi }_{L,\alpha }(t)\) in (10)

The Chelyshkov Matrix Technique

Firstly, we constitute the matrix representations of all unknown functions in (1) by utilizing the solution form (11). By using (9), let us rewrite the solution form as

To our approximation algorithm, we associate a set of \((L+1)\) collocation points \(\{t_r\}_{r=0}^L\) on [0, 1]. To this end, we employ

Evaluating the relation (12) at the collocation points (13) gives the following matrix expression for the solution \(\chi (t)\):

Next, our goal is to obtain the matrix representations for \({}^C {D}^{(s)}_{t}{\chi }_{L,\alpha }(t)\) for \(s=\gamma\), \(\mu\). According to (12), we need to compute the s-order fractional derivatives denoted by \(\pmb {M}^{(s)}_{\alpha }(t)\) for \(s=\gamma ,\mu\). In these cases, we get

Mathematically, \(\pmb {M}^{(s)}_{\alpha }(t)\) is computed using properties (3)–(4). In practice, we utilize Algorithm 3.1 to compute it efficiently. One can easily see that the complexity of this algorithm is not more than \(\mathcal {O}(L+1)\) [14, 54].
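The computation of \(\pmb {M}^{(s)}_{\alpha }(t)\) can be sketched in Python. This is not a reproduction of Algorithm 3.1, whose listing is not shown here; it is a minimal version that assumes the standard Caputo power rule, \({}^C D^{(s)}_t t^{\beta }=\frac{\Gamma (\beta +1)}{\Gamma (\beta +1-s)}t^{\beta -s}\) when \(\beta\) is an integer with \(\beta \ge \lceil s\rceil\) or a non-integer with \(\beta >\lfloor s\rfloor\), and zero when \(\beta\) is an integer below \(\lceil s\rceil\). All function names are hypothetical.

```python
import numpy as np
from math import gamma, ceil, floor


def caputo_power(beta, s):
    """Coefficient c with D^s t**beta = c * t**(beta - s) (Caputo sense)."""
    is_int = float(beta).is_integer()
    if is_int and beta < ceil(s):
        return 0.0                      # low-degree integer powers are annihilated
    if (is_int and beta >= ceil(s)) or (not is_int and beta > floor(s)):
        return gamma(beta + 1) / gamma(beta + 1 - s)
    # Remaining case: non-integer beta <= floor(s), not covered by the rule.
    raise ValueError("power rule not applicable for this beta and s")


def monomial_derivative_matrix(points, L, alpha, s):
    """Rows: points t_r; columns: D^s t^(k alpha) for k = 0..L.

    One gamma-ratio per basis function, i.e. O(L + 1) coefficient evaluations.
    Note: entries can be singular at t = 0 whenever k * alpha < s.
    """
    points = np.asarray(points, dtype=float)
    out = np.zeros((points.size, L + 1))
    for k in range(L + 1):
        c = caputo_power(k * alpha, s)
        if c != 0.0:
            out[:, k] = c * points ** (k * alpha - s)
    return out


# Example: alpha = 1, s = 1/2, points 0, 1/2, 1 (assumed uniform grid r / L).
D_half = monomial_derivative_matrix([0.0, 0.5, 1.0], L=2, alpha=1.0, s=0.5)
print(D_half)
```

Multiplying this matrix by \(\pmb {E}_L\) and the coefficient vector would then give the fractional derivative of the approximate solution at the chosen points, under the same assumptions.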

After evaluating Eq. (15) at the collocation points, we obtain the following result.

Theorem 3.1

The matrix forms of \({}^C {D}^{(s)}_{t}{\chi }_{L,\alpha }(t)\), \(s=\gamma ,\mu\), evaluated at the collocation points (13) are represented by

where

To express \(\chi (\omega \,t-\tau (t))\) in a matrix form, the next theorem will be established.

Theorem 3.2

For any function \(\tau (t)\) and constant \(\omega\), the matrix form of \({\chi }_{L,\alpha }(\omega \,t-\tau (t))\) evaluated at the collocation points (13) has the matrix representation

Here, the matrices \(\widehat{\pmb {M}},~\widehat{\pmb {F}}_{\omega ,\tau },~\widehat{\pmb {E}}_L\), \(\widehat{\pmb {A}}_L\) are as in (20) and the vector \(\pmb {X}_{\omega ,\tau }\) is given by

Proof

Let us prove the result when \(\alpha =1\). Due to (12), we obtain

Our aim is to write \(\pmb {M}^{1}_L(\omega \,t-\tau (t))\) in terms of \(\pmb {M}^{1}_L(t)\), which is already defined in (9). Using the binomial formula reveals that

Now, we introduce the matrix \(\pmb {F}_{\omega ,\tau ,L}(t)\), which depends on the parameters \(\omega\), \(\tau (t)\), and L, as follows:

This enables us to express the vector \(\pmb {M}^{1}_L(\omega \,t-\tau (t))\) in the form

After inserting the former relation (19) into (18), we have

We then insert the collocation points (13) into the preceding equation and obtain the following block diagonal matrices

By considering the definition of \(\pmb {X}_{\omega ,\tau }\) the proof is complete for \(\alpha =1\). For other values of \(\alpha\), see the following Remark.\(\square\)
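The binomial step in this proof can be verified numerically for \(\alpha =1\). In the sketch below, the matrix is built so that \(\pmb {M}^{1}_L(\omega \,t-\tau )=\pmb {M}^{1}_L(t)\,\pmb {F}\) with \(\pmb {M}^{1}_L\) a row vector; this orientation, the sample values of \(\omega\), t and \(\tau\), and the function names are assumptions chosen for illustration and may differ from the layout used in the paper.

```python
import numpy as np
from math import comb


def delay_matrix(omega, tau_value, L):
    """F such that M(omega * t - tau) = M(t) @ F for M(t) = [1, t, ..., t^L].

    Column k holds the binomial expansion of (omega * t - tau)^k:
    the coefficient of t^j is C(k, j) * omega^j * (-tau)^(k - j), j <= k.
    """
    F = np.zeros((L + 1, L + 1))
    for k in range(L + 1):
        for j in range(k + 1):
            F[j, k] = comb(k, j) * omega ** j * (-tau_value) ** (k - j)
    return F


def monomials(t, L):
    """Row vector [1, t, ..., t^L] at a scalar t."""
    return float(t) ** np.arange(L + 1)


L, omega, t = 4, 0.5, 0.7
tau = 0.01 * np.exp(-t)                 # sample delay value tau(t) at this t

F = delay_matrix(omega, tau, L)
lhs = monomials(omega * t - tau, L)     # direct substitution
rhs = monomials(t, L) @ F               # via the binomial matrix
print(np.max(np.abs(lhs - rhs)))
```

Because \(\tau\) is evaluated at a single point here, one such matrix is needed per collocation point, which matches the block diagonal structure mentioned in the proof.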

Remark 3.3

Let us remark that the complexity of computing \(\pmb {X}_{\omega ,\tau }(\omega \,t-\tau (t))\) via Theorem 3.2 is \(\mathcal {O}((L+1)^3)\). However, in practice, we calculate it using the symbolic toolbox in MATLAB. In this case, the corresponding cost is of order \(\mathcal {O}((L+1)^2)\). In this respect, we first compute the monomial vector \(\pmb {M}^{\alpha }_L(t)\) with the aid of symbolic notations. Once the vector \(\pmb {M}^{\alpha }_L(t)\) is created, we substitute \((\omega \,t-\tau (t))\) into it to get

By placing the collocation points (13) in the last equation, we get the matrix

Thus, the matrix \(\pmb {X}_{\omega ,\tau }\) in Theorem (3.2) with lower efforts can be expressed as

The same conclusion can be drawn for \(\alpha =1\) as well.

Now, let us construct an explicit expression for \({}^C {D}^{(\nu )}_{t}{\chi }_{L,\alpha }(\omega \,t-\tau (t))\) at the collocation points (13). By differentiating (12) with regard to the fractional derivative \(\nu\) and then substituting \((\omega \,t-\tau (t))\) into the resultant equation, we get

Thus, we use the collocation points (13) in (22) to get

where

Now let us collocate the FDDEs model problem (1) at the set of collocation points (13) as follows:

for \(r=0,1,\ldots ,L\). We put the matrix forms (14), (16), (17) or (21), and (23) into (24) and thus get a fundamental nonlinear matrix equation.

In the linear case, we assume that

where \(\theta _j(t)\), \(j=0,1,2,3\) and q(t) are some known functions. By collocating the preceding equation at the collocation points and then utilizing (14)-(23), we have

In (25), we have used the coefficient matrices as well as the vector \(\pmb {Q}\) as

We ultimately obtain the fundamental linear matrix equation for the underlying model problem (1).

Proposition 3.4

Suppose that the solution of (1) can be obtained in the form based on Chelyshkov functions (12). Then, we get

Proof

The proof of the fundamental matrix equation (26) is straightforward just by placing (14), (16), (21), and (23) into (25).\(\square\)

Note that in the case of \(\alpha =1\) and using Theorem 3.1, we get the following modified matrix equation instead of (26) as

It is worth pointing out that the matrix equation (26) or (27) is linear in terms of the unknown coefficient vector \(\pmb {A}_L\) to be calculated. What remains is to implement the initial conditions (2) so that they take part in the fundamental matrix equation (26). This task is the subject of the next part.

Initial conditions in the matrix form

Our main concern is to express the initial conditions (2) in the matrix formats. This enables us to determine the solution of (1) through solving the linear or nonlinear fundamental matrix equation as described above.

Let us first transform \(\chi (0)=\pi _0\) into a matrix format. For this purpose, let us approach \(t\rightarrow 0\) in (12) yielding

For the remaining initial conditions, we need to compute \(\frac{{\text {d}}^k}{{\text {d}}t^k}\pmb {M}^{\alpha }_{L}(t)\) for \(k=1,2,\ldots ,n-1\) due to (12). This task is performed by calling Algorithm 3.1 with the integer values \(s=1,\ldots ,n-1\). Upon defining \(\pmb {M}^{(k)}_{L,\alpha }(t):=\frac{{\text {d}}^k}{{\text {d}}t^k}\pmb {M}^{\alpha }_{L}(t)\) and letting \(t\rightarrow 0\), we arrive at the following matrix relations

for \(k=1,2,\ldots ,n-1\).

Remark 3.5

For \(\alpha =1\), an alternative approach has been used in the literature; see, e.g., [14]. In this approach, one finds a relationship between \(\pmb {M}^{1}_{L}(t)\) and its k-th order derivatives \(\frac{{\text {d}}^k}{{\text {d}}t^k}\pmb {M}^{1}_{L}(t)\) through a differentiation matrix \(\pmb {D}\) for \(k\ge 1\) as

Therefore, after differentiating (12) k times with respect to t, we find

It now suffices to let \(t\rightarrow 0\) to get

for \(k=1,2,\ldots ,n-1\).

We finally combine the fundamental matrix equation (26) or (27) with the matrix forms of the initial conditions (2). To do so, the first n rows of the matrix \([\pmb {Y}; \pmb {Q}]\) are replaced by the row matrices \([\widetilde{\pmb {Y}}_k; \pi _k]\) for \(k=0,1,\ldots ,n-1\). Let us denote by \([{\widetilde{\pmb {Y}}}; \widetilde{\pmb {Q}}]\) the modified fundamental matrix equation. Once this linear or nonlinear algebraic matrix equation is solved, the unknown coefficients \(a_{\ell }\), \(\ell =0,1,\ldots ,L\) are determined and thus the desired approximation \({\chi }_{L,\alpha }(t)\) of FDDEs (1) is computed.
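To make the overall procedure concrete, the following Python sketch assembles and solves a small linear collocation system with one row replaced by the initial condition. Everything specific in it is an assumption for illustration: the toy problem \({}^C D^{(1/2)}_t\chi (t)+\chi (t)-\chi (t/2)=q(t)\), \(\chi (0)=0\), with exact solution \(t^2\), is hypothetical and not one of the test cases; the collocation points are taken as \(t_r=r/L\); and the Chelyshkov coefficients follow the commonly stated explicit formula.

```python
import numpy as np
from math import comb, gamma


def chelyshkov_matrix(L):
    """E_L with column ell = monomial coefficients of rho_{ell,L} (assumed form)."""
    E = np.zeros((L + 1, L + 1))
    for ell in range(L + 1):
        for j in range(ell, L + 1):
            E[j, ell] = ((-1) ** (j - ell)
                         * comb(L - ell, j - ell)
                         * comb(L + j + 1, L - ell))
    return E


def monomials(t, L):
    """Matrix with rows [1, t_r, ..., t_r^L] (alpha = 1)."""
    return np.asarray(t, dtype=float)[:, None] ** np.arange(L + 1)


def half_derivative_monomials(t, L):
    """Caputo derivative of order 1/2 of [1, t, ..., t^L] at the points t."""
    t = np.asarray(t, dtype=float)
    D = np.zeros((t.size, L + 1))
    for k in range(1, L + 1):           # the derivative of the constant is zero
        D[:, k] = gamma(k + 1) / gamma(k + 0.5) * t ** (k - 0.5)
    return D


L = 2
t = np.arange(L + 1) / L                # assumed collocation points r / L
E = chelyshkov_matrix(L)

# Right-hand side of the hypothetical toy problem with exact solution t^2.
q = gamma(3) / gamma(2.5) * t ** 1.5 + 0.75 * t ** 2

# Collocated operator: D^{1/2} chi(t) + chi(t) - chi(t / 2), acting on A.
Y = (half_derivative_monomials(t, L) + monomials(t, L) - monomials(t / 2, L)) @ E
Q = q.copy()

# Replace the first row by the initial condition chi(0) = M(0) E A = 0.
Y[0] = monomials([0.0], L)[0] @ E
Q[0] = 0.0

A = np.linalg.solve(Y, Q)               # Chelyshkov coefficients a_0, ..., a_L
c = E @ A                               # monomial coefficients of the approximation
print("monomial coefficients:", c)
```

Since the exact solution of this toy problem lies in the span of the basis, the recovered monomial coefficients should agree with those of \(t^2\) up to rounding. For a nonlinear problem the final linear solve would be replaced by a nonlinear solver.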

Approximation results for Chelyshkov functions

An error bound in the \(L^2_{w}\) norm

Let us consider the convergence properties of the Chelyshkov functions in the weighted \(L^2_w[0,1]\) norm. We recall that the norm of an arbitrary function h(t) with respect to the weight function w(t) is given by

By defining the space spanned by the Chelyshkov basis functions

we have the following theorem.

Theorem 4.1

Suppose that for \(s=0,1,\ldots , L\) we have \({}^C {D}^{(s\alpha )}_{t}\chi (t)\in C(0,1]\). Let us denote by \(\chi _{L-1,\alpha }(t)=\pmb {\Psi }^{\alpha }_{L-1}(t)\,\pmb {A}_{L-1}\) the best approximation out of \(\mathcal {S}_{L-1}^{\alpha }\) to \(\chi (t)\). Then, we get the following error bound:

where \(|{}^C {D}^{(L\alpha )}_{t}\chi (t)|\le M^{\infty }_{\alpha }\), for \(t\in (0,1]\).

Proof

For a similar proof, we refer the readers to [55, Theorem 2]. \(\square\)

An error bound in the \(L_{\infty }\) norm

We now aim to obtain an error bound for the suggested method in the \(L_{\infty }\) norm. In the following theorem, we construct an upper bound for the errors.

Theorem 4.2

(Upper Boundary of Errors) Let us assume that \(\chi (t)\) and \(\chi _{L,\alpha }(t)=\pmb {\Psi }^{\alpha }_{L}(t)\,\pmb {A}_{L}\) are the exact solution and the Chelyshkov series solution of degree \(\alpha L\) of Eq. (1) on the interval \(0\le t\le 1\). Let \(\chi _{L,\alpha }^{Mac}(t)=\pmb {M}^{\alpha }_{L}(t)\,\widetilde{\pmb {A}_L}\) be the generalized Maclaurin expansion [56] of degree \(\alpha L\) of \(\chi (t)\). Then, the errors of the Chelyshkov series solution \(\chi _{L,\alpha }(t)\) are bounded as follows:

for some \(c_t\in (0,1)\). Here, \(\pmb {M}^{\alpha }_{L}(t)\), \(\pmb {\Psi }^{\alpha }_{L}(t)\), and the matrix \(\pmb {E}_L\) are defined in Sect. 2, relation (9).

Proof

Firstly, by utilizing the triangle inequality and the generalized Maclaurin expansion \(\chi _{L,\alpha }^{Mac}(t)\) of degree \(\alpha L\), we can arrange the error \(\Vert \chi (t)-\chi _{L,\alpha }(t)\Vert _{\infty }\) as follows:

According to (12), the Chelyshkov series solution \(\chi _{L,\alpha }(t)=\pmb {\Psi }^{\alpha }_{L}(t)\pmb {A}_{L}\) can be written in the matrix form \(\chi _{L,\alpha }(t)=\pmb {M}^{\alpha }_{L}(t)\pmb {E}_L \pmb {A}_{L}\). Also, we know that the generalized Maclaurin expansion of degree \(\alpha L\) of \(\chi (t)\) is \(\chi _{L,\alpha }^{Mac}(t)=\pmb {M}^{\alpha }_{L}(t)\,\widetilde{\pmb {A}_L}\). Hence, by using this information, we can write the following inequality

The norm \(\Vert \pmb {M}_L^\alpha (t)\Vert\) in [0, 1] is bounded as \(\Vert \pmb {M}_L^\alpha (t)\Vert _{\infty }\le 1\). Then, Eq. (31) can be arranged as follows:

On the other hand, the remainder term of the generalized Maclaurin series [56] \(\chi _{L,\alpha }^{Mac}(t)\) of degree \(\alpha L\) is

where \(c_t\) is some constant in (0, 1). Therefore, we get the following error bound

Lastly, by combining Eqs. (30), (32) and (33), we have proved the desired result.\(\square\)

Simulation examples

In this part, applications of the novel Chelyshkov matrix algorithm to the considered delay model (1) are demonstrated through numerical simulations. We validate our numerical results on diverse test examples and compare them with available computational and experimental results. For implementation and visualization, we utilize MATLAB version 2017a. In order to evaluate the accuracy as well as the convergence of the proposed Chelyshkov matrix technique, we define

However, the exact solutions are usually not at hand for some examples or at various values of the fractional orders \(\gamma ,\mu ,\nu\). In these cases, we define the residual error function to assess the accuracy of the presented approach

for \(t\in [0,1]\).

Test case 5.1 We firstly consider the FDDEs model problem of the form [57,58,59,60]

where \(\gamma \in (0,1]\) and the initial condition is \(\chi (0)=0\). A straightforward calculation shows that the exact solution is given by \(\chi (t)=t^2-t\) for all values of \(\gamma \in (0,1]\).

Let’s begin computations by setting \(\tau (t)=ce^{-t}\), \(c=0.01\) and using \(L=2\). Taking this value of L would be sufficient to get an accurate solution. Using \(\gamma\) and \(\alpha\) both equal to unity, the approximate solution is

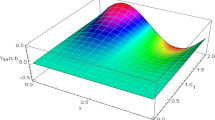

which is exactly the true solution. The aforesaid approximation along with the related absolute error is shown in Fig. 1.

Note that using diverse values of \(0<\gamma <1\) also leads to the same exact solution as for \(\gamma =1\). In the next experiments, the absolute errors for \(\gamma =0.25,0.5,0.75\) are reported. Table 1 tabulates the results of \(\mathcal {E}_{L,\alpha }(t)\), which are calculated at the points \(t_i=2i/10\) for \(i=0,1,\ldots ,5\). A comparison with the outcomes of operational-based methods with constant \(\tau =0.01\) is also presented in Table 1 to validate our numerical results. These methods are the Bernoulli wavelet method (BWM) [57], the Chebyshev spectral collocation method (CSCM) [59], and the Chebyshev functions of the third kind (CFTK) [60]. Table 1 reveals that our numerical results are more accurate than the other existing computational results.

Next, we investigate the influence of diverse delay functions \(\tau (t)\) on the computed solutions. We take \(\tau (t)=e^t,e^{-t},\sin (t),\sqrt{t}\), and \(\tau (t)=t^{10}\). In all cases, the approximate solutions obtained by the Chelyshkov matrix approach are the same and coincide with the exact solution. In Fig. 2, we depict the related absolute errors, which are obtained using \(\gamma =0.5\), \(\alpha =1\), and \(L=2\).

Test case 5.2 As the second test problem, let us consider the FDDEs of the form

with initial condition \(\chi (0)=1\). It is not a difficult task to show that the exact solution of (5.2) for \(\gamma =1\) is \(\chi (t)=e^t\).

Firstly, \(L=3,5\) are used for this example. Considering \(\gamma ,\alpha =1\), the resulting approximate solutions via the Chelyshkov matrix technique for \(t\in [0,1]\) are as follows:

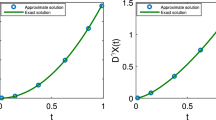

The obtained approximations along with the exact solution are visualized in Fig. 3. In addition to \(L=3,5\), the resulting absolute errors for \(L=7,9\) are also depicted in this figure, in the right plot. Clearly, the exponential convergence of the proposed algorithm is visible as the number of basis functions increases.

Moreover, for \(\gamma =1\), the results of absolute errors \({\mathcal {E}}_{{L},{1}}(t)\) utilizing diverse values of \(L=5,7,9\), and \(L=11\) are reported in Table 2. Comparisons are further made in this table between the outcomes of the present technique and the other existing methods, i.e., the Chebyshev wavelet method (CWM) [61] and the CFTK [60].

Let us consider the behavior of solutions when the fractional order \(0<\gamma <1\) is used. In this respect, we take \(\gamma =0.5,0.75\). Additionally, we utilize \(\gamma ,\alpha =1\). Fig. 4 displays the solutions \(\chi _{10,\alpha }(t)\), where both \(\alpha =1\) and \(\alpha =\gamma\) are used for comparisons. In the right graph, the residual errors \({\mathcal {R}}_{{10},{\alpha }}(t)\) for \(t\in [0,1]\) are also depicted. It can be readily observed that a smaller magnitude of error is achieved when the degrees of local basis functions \(\alpha\) are taken as the fractional order of model \(\gamma\).

Test case 5.3 The third test case is devoted to the following nonlinear FDDEs defined on \(0\le t\le 1\) as

The initial conditions are \(\chi (0)=1\), \(\chi '(0)=0\). For \(\gamma =2\), an easy calculation shows that \(\chi (t)=\cos t\) is the exact solution of this model problem.

First, we set \(\gamma =2,\alpha =1\). Utilizing \(L=5,10\) in the Chelyshkov matrix procedure, we get the following polynomial forms for the approximate solutions for \(t\in [0,1]\) as follows:

and

Let us consider the series form of the exact solution, i.e., \(\cos t\approx 1-\frac{t^2}{2!}+\frac{t^4}{4!}-\cdots +\frac{t^{10}}{10!}\). A comparison between the achieved approximations and the exact one indicates the good alignment between them, especially when L is increased. Moreover, the absolute errors \({\mathcal {E}}_{{L},{1}}(t)\) utilizing diverse values of \(L=5,8,10,15\) in the approximate solutions are presented in Table 3. The outcomes of the BWM [57] with \(k=2\) and various \(M=7,9\) as well as the modified Laguerre wavelet method MLWM [62] with \(k=1\) and different \(M=10,20\) are also reported in Table 3. We observe that the proposed method using a lower number of bases produces more accurate results than the BWM method does.

Figure 5 displays the numerical solutions corresponding to diverse fractional orders \(\gamma =1.5,1.6,1.7,1.8\), and 1.9. Besides, Fig. 5 shows the results related to \(\gamma =2\) at which the exact solution is available. In all plots, we use \(\alpha =1\) and \(L=10\) is taken. On the right panel, the behavior of residual errors \({\mathcal {R}}_{{10},{1}}(t)\), \(t\in [0,1]\) at different values of \(\gamma\) are visualized.

More precisely, Table 4 shows the computed values of the numerical solutions \(\chi _{10,1}(t)\) for various \(\gamma =1.5,1.6,\ldots ,1.9\) at some points \(t\in [0,1]\) for Test case 5.3.

Test case 5.4 We consider the following FDDEs

The given initial conditions are \(\chi (0)=1\), \(\chi '(0)=-1\), and \(\chi ''(0)=1\). If \(\gamma =3\), one can show that \(\chi (t)=e^{-t}\) is the exact solution of (5.4).

First, we take \(\gamma =3\) and \(\alpha =1\). We get the following polynomial solutions when using \(L=5,10\) in the Chelyshkov matrix approach on [0, 1] as follows:

Furthermore, the numerical evaluations of the latter approximate solution are presented in Table 5. The resulting absolute errors at some points \(t\in [0,1]\) are also shown. The numerical results of two existing schemes, namely the MLWM [62] with \(k=1,M=20\) and the BWM [57] utilizing \(k=2,M=7\), are reported in this table for comparison.

In the next study, we examine the impact of using non-integer order values on the obtained numerical solutions. Let us consider \(L=10\) and \(\alpha =1\). The numerical evaluations at \(\gamma =2.5,2.7,2.9\) are seen in Table 6. The associated residual errors are further presented in Fig. 6. This figure also shows the errors for other values of \(\gamma =2.6,2.8,3\).

Our further goal is to examine the benefits of utilizing fractional-order Chelyshkov functions in the presented matrix technique.

Test case 5.5 Let us consider the nonlinear FDDEs of the form

where \(g(t)=\frac{3\sqrt{\pi }}{\Gamma (\frac{5}{2}-\gamma )}t^{\frac{3}{2}-\gamma }-t^{\frac{3}{2}}-t\) and with the initial condition \(\chi (0)=0\). It can be seen that the exact solution is given by \(\chi (t)=t\sqrt{t}\).

Let us first consider \(\gamma =\frac{1}{2}\) and \(L=5\). To highlight the discrepancy between integer and non-integer orders of the basis functions, we set \(\alpha =1\) and \(\alpha =\frac{1}{2}\) in the experiments. For \(\alpha =1\), the following approximate solution via the Chelyshkov matrix technique is obtained on [0, 1]

which is obviously far from the exact solution. Even using a larger value of L does not lead to a significant change in the accuracy of the approximate solutions, due to the appearance of the fractional power in the exact solution. One remedy is to utilize a set of fractional bases so that the exact solution can be written in terms of them. In this case, we set \(\alpha =\frac{1}{2}\). Thus, we get \(\pmb {M}_{5}^{\frac{1}{2}}=[ 1\quad t^{1/2}\quad t\quad t^{3/2}\quad t^2\quad t^{5/2}]\). The corresponding approximation is

which is in excellent agreement with the exact solution. Note that one also obtains the same accurate solution using a smaller number of basis functions. For instance, taking \(L=3\) with \(\alpha =\frac{1}{2}\) gives us

The same approximation result is obtained with \(L=2\) and \(\alpha =\frac{3}{2}\) as

All the above approximations together with related absolute errors are depicted in Fig. 7.

We further utilize diverse \(\gamma =\frac{1}{4},\frac{1}{2}\), and \(\gamma =\frac{3}{4}\) in the computations. Correspondingly, the values of \(\alpha =\frac{1}{2},\frac{3}{4}\) are used. The numerical results are presented in Table 7 with \(L=3\). By looking at the numerical results we can see that employing the fractional-order Chelyshkov functions gives rise to a considerable achievement of accuracy in the approximate solutions and even with a small number of bases.
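The benefit of a fractional basis for this test case can be illustrated independently of the collocation scheme. The sketch below is not the Chelyshkov matrix method: it simply fits the exact solution \(t^{3/2}\) by least squares in the monomial bases \(\pmb {M}^{\alpha }_{L}(t)\) for \(\alpha =1\) and \(\alpha =\frac{1}{2}\) on a uniform sample of [0, 1]. The sample size and the function name are arbitrary choices made for illustration.

```python
import numpy as np


def fit_max_error(alpha, L, n_samples=201):
    """Least-squares fit of t^(3/2) in span{t^(k alpha)}, k = 0..L.

    Returns the maximum absolute error of the fit on the sample points.
    """
    t = np.linspace(0.0, 1.0, n_samples)
    M = t[:, None] ** (alpha * np.arange(L + 1))    # sampled monomial basis
    target = t ** 1.5
    coeffs, *_ = np.linalg.lstsq(M, target, rcond=None)
    return np.max(np.abs(M @ coeffs - target))


err_integer = fit_max_error(alpha=1.0, L=5)         # basis 1, t, ..., t^5
err_fractional = fit_max_error(alpha=0.5, L=3)      # basis 1, t^(1/2), t, t^(3/2)

print("alpha = 1,   L = 5:", err_integer)
print("alpha = 1/2, L = 3:", err_fractional)
```

With \(\alpha =\frac{1}{2}\) the target belongs to the span of the basis, so the fit is limited only by rounding, whereas no polynomial of finite degree reproduces \(t^{3/2}\) exactly.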

Test case 5.6 We consider the following FDDE

where \(g(t)=e^{-t}+(0.32t-0.5)e^{-0.8t}\) and with the initial condition \(\chi (0)=0\). It can be seen that for \(\gamma =1\), the exact analytical solution is \(\chi (t)=te^{-t}\).

As in the previous examples, we first consider \(\gamma =\alpha =1\) with \(L=5\) as well as \(L=10\). Employing the Chelyshkov matrix technique, the following polynomial solutions are obtained on [0, 1]

Comparing with the series expansion of \(te^{-t}\), we find that the obtained solutions are in good agreement, especially as L gets larger. The exponential convergence of the proposed technique is presented in Table 8, in which we have used \(L=5,7,9,11\), and \(L=15\). A comparison with the scheme CFTK [60] is also presented in Table 8.
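For reference, the series expansion in question is the Maclaurin series of \(te^{-t}\), whose leading coefficients can be compared term by term with those of the computed polynomial solutions:

\[
te^{-t}=\sum_{k=0}^{\infty }\frac{(-1)^{k}}{k!}\,t^{k+1}=t-t^{2}+\frac{t^{3}}{2}-\frac{t^{4}}{6}+\frac{t^{5}}{24}-\cdots .
\]

Since a collocation polynomial is not a truncated Taylor series, its coefficients are only expected to be close to, not identical with, the values above.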

Finally, we take \(L=10\) and examine the effect of the fractional order of the differential equation as well as of the basis functions. The numerical solutions \(\chi _{10,\alpha }(x)\) for \(\gamma =0.25,0.5,0.75\) are depicted in Fig. 8. To show the difference between integer and non-integer basis functions, the solutions obtained with \(\alpha =1\) and with \(\alpha =\gamma\) are both shown in Fig. 8. As observed in the former examples and in our earlier experience with other polynomial bases [55], using \(\alpha =\gamma\) leads to more accurate results. Based on this fact, we report numerical results for \(\gamma =0.25,0.5,0.75\) in Table 9 with \(\alpha =\gamma\). The related residual errors are shown in Fig. 9.

Test case 5.7 Lastly, we consider the following nonlinear FDDEs

whose exact solution is \(\chi _{\gamma }(t)=t^{\gamma +\frac{3}{2}}-t\), which depends on the parameter \(\gamma\), and with the initial condition \(\chi (0)=0\). This model with \(\gamma =\frac{1}{2}\) was considered in [63].

We take \(\gamma =1/2\) and \(\alpha =1\). We then utilize \(L=2\) and the collocation points \(\{0,\frac{1}{2},1\}\). After solving the nonlinear fundamental matrix equation \([{\widetilde{\pmb {Y}}}; \widetilde{\pmb {Q}}]\) described in Section 4, we get the coefficient matrix \(\pmb {A}_2\) as

Therefore, the resulting approximate solution obtained via our algorithm is

which coincides with the exact solution \(\chi _{1/2}(t)=t^{2}-t\). Similarly, using \(L=3\), we get

To validate our results and to make a comparison with the outcomes obtained via the Jacobi spectral Galerkin method (JSGM) [63], we calculate the \(L_2\) and \(L_{\infty }\) error norms. In this respect, we compute

The results are tabulated in Table 10. Our results, obtained with fewer basis functions and less computational effort, are more accurate than those reported for the JSGM.
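A minimal sketch of how such error norms can be evaluated numerically is given below. It assumes the standard continuous \(L_2\) and \(L_{\infty }\) norms on [0, 1], approximated on a uniform grid with the composite trapezoidal rule; the function name `error_norms` and the perturbed approximation are hypothetical and are not results of the paper.

```python
import numpy as np

def error_norms(approx, exact, a=0.0, b=1.0, n_pts=2001):
    """Return (L2 norm, L-infinity norm) of approx - exact on [a, b], evaluated on a uniform grid."""
    t = np.linspace(a, b, n_pts)
    err = np.abs(approx(t) - exact(t))
    l_inf = float(err.max())
    # Composite trapezoidal rule for the integral of err**2 over [a, b].
    h = (b - a) / (n_pts - 1)
    integral = h * (np.sum(err ** 2) - 0.5 * (err[0] ** 2 + err[-1] ** 2))
    return float(np.sqrt(integral)), l_inf

gamma = 0.5
exact = lambda t: t ** (gamma + 1.5) - t       # exact solution of this test case
approx = lambda t: exact(t) + 1e-3 * t         # hypothetical perturbed approximation
l2, linf = error_norms(approx, exact)
print(f"L2 error = {l2:.3e}, Linf error = {linf:.3e}")
```

In practice, `approx` would be replaced by the computed solution \(\chi _{L,\alpha }(t)\).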

Finally, we examine the behavior of approximate solutions when the fractional order \(\gamma\) is varied on (0, 1]. To this end, we consider \(\gamma =0.1,0.25,0.5,0.75\) and \(\gamma =1\) while the values of \(\alpha\) are chosen appropriately as \(\alpha =\frac{1}{5},\frac{1}{4},1,\frac{1}{2}\) accordingly. Moreover, we take \(L=8,7,2,10,5\), respectively, for each \(\gamma\). Graphical representations of the aforesaid approximations together with achieved absolute errors are shown in Fig. 10.

Conclusions

In this research, a class of linear and nonlinear fractional delay differential equations has been investigated. The solution to such models is found through a novel collocation method, the Chelyshkov collocation approach, which is based on Chelyshkov functions. The fractional derivative is defined in the Caputo sense, and the fractional differentiation matrices for the Chelyshkov functions are derived. These matrices, along with the collocation points, are then used to convert the models into a system of algebraic equations, which is solved to find the unknown coefficients that represent the required solution. A detailed error analysis is performed for the presented technique, showing that the proposed approach converges. The method is tested on several examples of different types, including some whose exact solution depends on the fractional order. The absolute errors obtained in these examples indicate that the technique compares favorably with other related methods in terms of absolute error and computational cost. Based on these observations, one can conclude that the technique is accurate and fast. Future work may apply this technique to more complex models arising in applications.
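The pipeline summarized above (expand the unknown in a basis, collocate, replace one equation by the initial condition, and solve for the coefficients) can be sketched in a few lines. This is only an illustration under stated assumptions: it uses a plain monomial basis instead of Chelyshkov functions, integer order \(\gamma =1\), equispaced collocation points, and a hypothetical linear pantograph equation \(\chi '(t)=-\chi (t)+\frac{1}{2}\chi (t/2)+g(t)\), \(\chi (0)=0\), with \(g(t)=e^{-t}-\frac{1}{4}te^{-t/2}\) chosen so that \(\chi (t)=te^{-t}\) is the exact solution. None of these choices is taken from the test cases of this paper.

```python
import numpy as np

def collocation_solve(L: int) -> np.ndarray:
    """Coefficients a_0..a_L of chi_L(t) = sum_k a_k t**k for the hypothetical model
    chi'(t) = -chi(t) + 0.5*chi(t/2) + g(t), chi(0) = 0, on [0, 1]."""
    k = np.arange(L + 1)
    t = np.linspace(0.0, 1.0, L + 1)           # equispaced collocation points (assumption)
    V = t[:, None] ** k                        # V[i, j] = t_i**j
    dV = np.zeros_like(V)
    dV[:, 1:] = V[:, :-1] * k[1:]              # d/dt t**j = j * t**(j-1)
    Vd = (0.5 * t)[:, None] ** k               # basis at the delayed argument t/2
    A = dV + V - 0.5 * Vd                      # chi' + chi - 0.5*chi(t/2) at each point
    b = np.exp(-t) - 0.25 * t * np.exp(-0.5 * t)
    A[0, :] = V[0, :]                          # replace first row by the initial condition
    b[0] = 0.0
    return np.linalg.solve(A, b)

def max_error(L: int) -> float:
    """Max deviation of the collocation polynomial from t*exp(-t) on a fine grid."""
    a = collocation_solve(L)
    tt = np.linspace(0.0, 1.0, 401)
    approx = (tt[:, None] ** np.arange(L + 1)) @ a
    return float(np.max(np.abs(approx - tt * np.exp(-tt))))

for L in (4, 6, 8):
    print(f"L={L}: max error = {max_error(L):.2e}")
```

The monomial basis is chosen here only for brevity; it becomes ill-conditioned as L grows, which is one motivation for working with orthogonal bases.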

References

Ockendon, J., Tayler, A.: The dynamics of a current collection system for an electric locomotive. Proc. R. Soc. Lond. Ser. A Math. Phys. Eng. Sci. 322, 447–468 (1971)

Ajello, W., Freedman, H.I., Wu, J.: A model of stage structured population growth with density depended time delay. SIAM J. Appl. Math. 52, 855–869 (1992)

Smith, H.L.: An Introduction to Delay Differential Equations with Applications to the Life Sciences. Springer, New York (2011)

Lakshmikantham, V., Leela, S.: Differential and Integral Inequalities. Academic Press, New York (1969)

Gourley, S.A., Kuang, Y.: A stage structured predator-prey model and its dependence on maturation delay and death rate. J. Math. Biol. 49, 188–200 (2004)

Sedaghat, S., Ordokhani, Y., Dehghan, M.: Numerical solution of the delay differential equations of pantograph type via Chebyshev polynomials. Commun. Nonlinear Sci. Numer. Simul. 17, 4815–4830 (2012)

Tohidi, E., Bhrawy, A., Erfani, K.: A collocation method based on Bernoulli operational matrix for numerical solution of generalized pantograph equation. Appl. Math. Model. 37, 4283–4294 (2013)

Adel, W., Sabir, Z.: Solving a new design of nonlinear second-order Lane-Emden pantograph delay differential model via Bernoulli collocation method. Eur. Phys. J. Plus 135(6), 427 (2020)

Adel, W., Rezazadeh, H., Eslami, M., Mirzazadeh, M.: A numerical treatment of the delayed Ambartsumian equation over large interval. J. Interdisc. Math. 23(6), 1077–1091 (2020)

Yüzbaşı, Ş: An efficient algorithm for solving multi-pantograph equation systems. Comput. Math. with Appl. 64, 589–603 (2012)

Yüzbaşı, Ş, Ismailov, N.: A Taylor operation method for solutions of generalized pantograph type delay differential equations. Turk. J. Math. 42(2), 395–406 (2018)

Yüzbaşı, Ş, Gök, E., Sezer, M.: Residual correction of the Hermite polynomial solutions of the generalized pantograph equations. Trends Math. Sci 3(2), 118–125 (2015)

Yüzbaşı, Ş, Gök, E., Sezer, M.: Laguerre matrix method with the residual error estimation for solutions of a class of delay differential equations. Math. Method Appl. Sci. 37(4), 453–463 (2017)

Izadi, M., Srivastava, H.M.: An efficient approximation technique applied to a non-linear Lane-Emden pantograph delay differential model. Appl. Math. Comput. 401, 126123 (2021)

Izadi, M.: A discontinuous finite element approximation to singular Lane-Emden type equations. Appl. Math. Comput. 401, 126115 (2021)

Izadi, M.: Numerical approximation of Hunter-Saxton equation by an efficient accurate approach on long time domains. U.P.B. Sci. Bull. Ser. A 83(1), 291–300 (2021)

Zaeri, S., Saeedi, H., Izadi, M.: Fractional integration operator for numerical solution of the integro-partial time fractional diffusion heat equation with weakly singular kernel. Asian-Eur. J. Math. 10(4), 1750071 (2017)

Izadi, M., Negar, M.R.: Local discontinuous Galerkin approximations to fractional Bagley-Torvik equation. Math. Methods Appl. Sci. 43(7), 4813–4978 (2020)

Izadi, M., Yüzbaşı, Ş, Adel, W.: Two novel Bessel matrix techniques to solve the squeezing flow problem between infinite parallel plates. Comput. Math. Math. Phys. 61(12), 2034–2053 (2021)

Izadi, M., Afshar, M.: Solving the Basset equation via Chebyshev collocation and LDG methods. J. Math. Model. 9(1), 61–79 (2021)

Izadi, M., Srivastava, H.M.: A discretization approach for the nonlinear fractional logistic equation. Entropy 22(11), 1328 (2020)

Fang, J., Liu, C., Simos, T.E., Famelis, I.T.: Neural network solution of single-delay differential equations. Mediterr. J. Math. 17(1), 1–15 (2020)

Edeki, S.O., Akinlabi, G.O., Hinov, N.: Zhou method for the solutions of system of proportional delay differential equations. In: MATEC Web of Conferences, vol. 125, p. 02001. EDP Sciences (2017)

Rabiei, K., Ordokhani, Y.: Solving fractional pantograph delay differential equations via fractional-order Boubaker polynomials. Eng. Comput. 35(4), 1431–1441 (2019)

Toan, P., Thieu, N., Razzaghi, M.: Taylor wavelet method for fractional delay differential equations. Eng. Comput. 37, 231–240 (2021)

Dabiri, A., Butcher, E.A.: Numerical solution of multi-order fractional differential equations with multiple delays via spectral collocation methods. Appl. Math. Model. 56, 424–448 (2018)

Izadi, M., Srivastava, H.M.: A novel matrix technique for multi-order pantograph differential equations of fractional order. Proc. R. Soc. Lond. Ser. A Math. Phys. Eng. Sci. 477(2253), 2021031 (2021)

Yu, Z.: Variational iteration method for solving the multi-pantograph delay equation. Phys. Lett. A. 372(43), 6475–6479 (2008)

Wang, W., Li, S.-F.: On the one-leg θ-methods for solving nonlinear neutral functional differential equations. Appl. Math. Comput. 193(1), 285–301 (2007)

Marzban, H., Razzaghi, M.: Solution of multi-delay systems using hybrid of block-pulse functions and Taylor series. J. Sound Vib. 292, 954–963 (2006)

Saeed, U.: Hermite wavelet method for fractional delay differential equations. Differ. Equ. 2014, 359093 (2014). https://doi.org/10.1155/2014/359093

Khader, M., Hendy, A.S.: The approximate and exact solutions of the fractional-order delay differential equations using Legendre pseudospectral method. Int. J. Pure Appl. Math. 74(3), 287–297 (2012)

Saadatmandi, A., Dehghan, M.: A new operational matrix for solving fractional-order differential equations. Comput. Math. Appl. 59, 1326–1336 (2010)

Maleknejad, K., Rashidinia, J., Eftekhari, T.: Numerical solutions of distributed order fractional differential equations in the time domain using the Müntz-Legendre wavelets approach. Numer. Methods Partial Differ. Equ. 37(1), 707–731 (2021)

Li, Y.: Solving a nonlinear fractional differential equation using Chebyshev wavelets. Commun. Nonlinear Sci. Numer. Simul. 15, 2284–2292 (2010)

Rashidinia, J., Eftekhari, T., Maleknejad, K.: A novel operational vector for solving the general form of distributed order fractional differential equations in the time domain based on the second kind Chebyshev wavelets. Numer. Algor. 88, 1617–1639 (2021)

Bhrawy, A.H., Alofi, A.S.: The operational matrix of fractional integration for shifted Chebyshev polynomials. Appl. Math. Lett. 26, 25–31 (2013)

Behroozifar, M., Ahmadpour, F.: Comparative study on solving fractional differential equations via shifted Jacobi collocation method. Bull. Iranian Math. Soc. 43, 535–560 (2017)

Li, Y., Sun, N.: Numerical solution of fractional differential equations using the generalized block pulse operational matrix. Comput. Math. Appl. 62, 1046–1054 (2011)

Maleknejad, Kh., Rashidinia, J., Eftekhari, T.: Operational matrices based on hybrid functions for solving general nonlinear two-dimensional fractional integro-differential equations. Comput. Appl. Math. 39, 1–34 (2020)

Chelyshkov, V.S.: Alternative orthogonal polynomials and quadratures. Electron. Trans. Numer. Anal. 25, 17–26 (2006)

Oğuz, C., Sezer, M.: Chelyshkov collocation method for a class of mixed functional integro-differential equations. Appl. Math. Comput. 259, 943–954 (2015)

Rasty, M., Hadizadeh, M.: A product integration approach on new orthogonal polynomials for nonlinear weakly singular integral equations. Acta Appl. Math. 109, 861–873 (2010)

Shali, J.A., Darania, P., Akbarfam, A.A.: Collocation method for nonlinear Volterra-Fredholm integral equations. J. Appl. Sci. 2, 115–121 (2012)

Rahimkhani, P., Ordokhani, Y., Lima, P.M.: An improved composite collocation method for distributed-order fractional differential equations based on fractional Chelyshkov wavelets. Appl. Numer. Math. 145, 1–27 (2019)

Talaei, Y., Asgari, M.: An operational matrix based on Chelyshkov polynomials for solving multi-order fractional differential equations. Neural Comput. Appl. 30, 1369–1379 (2018)

Meng, Z., Yi, M., Huang, J., Song, L.: Numerical solutions of nonlinear fractional differential equations by alternative Legendre polynomials. Appl. Math. Comput. 336, 454–464 (2018)

Al-Sharif, M.S., Ahmed, A.I., Salim, M.S.: An integral operational matrix of fractional-order Chelyshkov functions and its applications. Symmetry 12(11), 1755 (2020)

Balachandran, K., Kiruthika, S., Trujillo, J.J.: Existence of solutions of nonlinear fractional pantograph equations. Acta Math. Sci. 33, 712–720 (2013)

Ghasemi, M., Jalilian, Y., Trujillo, J.J.: Existence and numerical simulation of solutions for nonlinear fractional pantograph equations. Int. J. Comput. Math. 94, 2041–2062 (2017)

Huseynov, I.T., Mahmudov, N.I.: Delayed analogue of three-parameter Mittag-Leffler functions and their applications to Caputo-type fractional time delay differential equations. Math. Methods Appl. Sci. (2020). https://doi.org/10.1002/mma.6761

Kilbas, A.A., Srivastava, H.M., Trujillo, J.J.: Theory and Applications of Fractional Differential Equations. In: North-Holland Mathematics Studies, vol. 204. Elsevier, Amsterdam (2006)

Podlubny, I.: Fractional Differential Equations. Academic Press, New York (1999)

Izadi, M., Srivastava, H.M.: Numerical approximations to the nonlinear fractional-order Logistic population model with fractional-order Bessel and Legendre bases. Chaos Solitons Fract. 145, 110779 (2021)

Izadi, M.: A comparative study of two Legendre-collocation schemes applied to fractional logistic equation. Int. J. Appl. Comput. Math. 6(3), 71 (2020)

Odibat, Z., Shawagfeh, N.T.: Generalized Taylor's formula. Appl. Math. Comput. 186(1), 286–293 (2007)

Rahimkhani, P., Ordokhani, Y., Babolian, E.: A new operational matrix based on Bernoulli wavelets for solving fractional delay differential equations. Numer. Algor. 74, 223–245 (2017)

Rakhshan, S.A., Effati, S.: A generalized Legendre-Gauss collocation method for solving nonlinear fractional differential equations with time varying delays. Appl. Numer. Math. 146, 342–360 (2019)

Ali, K.K., Abd El Salam, M.A., Mohamed, E.M.: Chebyshev operational matrix for solving fractional order delay differential equations using spectral collocation method. Arab. J. Basic Appl. Sci. 26(1), 342–353 (2019)

Singh, H.: Numerical simulation for fractional delay differential equations. Int. J. Dyn. Control 9(2), 463–474 (2020)

Iqbal, M.A., Ali, A., Mohyud-Din, S.T.: Chebyshev wavelets method for fractional delay differential equations. Int. J. Mod. Appl. Phys. 4(1), 49–61 (2013)

Iqbal, M.A., Saeed, U., Mohyud-Din, S.T.: Modified Laguerre Wavelets Method for delay differential equations of fractional-order. Egyptian J. Basic Appl. Sci. 2(1), 50–54 (2015)

Yang, Ch., Lv, X.: Generalized Jacobi spectral Galerkin method for fractional pantograph differential equation. Math. Methods Appl. Sci. 44(1), 153–165 (2021)

Cite this article

Izadi, M., Yüzbaşı, Ş. & Adel, W. A new Chelyshkov matrix method to solve linear and nonlinear fractional delay differential equations with error analysis. Math Sci 17, 267–284 (2023). https://doi.org/10.1007/s40096-022-00468-y


DOI: https://doi.org/10.1007/s40096-022-00468-y