Abstract

In this survey, we discuss the state of the art of robust combinatorial optimization under uncertain cost functions. We summarize complexity results presented in the literature for various underlying problems, with the aim of pointing out the connections between the different results and approaches, and with a special emphasis on the role of the chosen uncertainty sets. Moreover, we give an overview over exact solution methods for NP-hard cases. While mostly concentrating on the classical concept of strict robustness, we also cover more recent two-stage optimization paradigms.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Combinatorial optimization problems arise in many real-world applications, e.g., in the fields of economy, industry, or transport logistics. For many such problems, theoretically (or practically) fast algorithms have been developed under the assumption that all problem data is known precisely. However, the situation becomes more complex when considering uncertainty in the problem parameters. For example, the travel times for the shortest path problem or the vehicle routing problem can be subject to uncertainty, since we cannot predict the exact traffic situation in the future. One successful approach to tackle uncertainty in the input data is robust optimization: for a given set U containing all relevant scenarios, i.e., all sufficiently likely realizations of the uncertain parameters, a solution is sought that is feasible for every scenario in U and that is worst-case optimal under this constraint. This idea was first introduced by Soyster in Soyster (1973). The approach received increasing attention in the late 1990s. Kouvelis and Yu studied finite uncertainty sets U for several combinatorial optimization problems in Kouvelis and Yu (1996). Almost at the same time, Ben-Tal and Nemirovski (1998, 1999) studied robust convex problems with conic or ellipsoidal uncertainty sets. Furthermore, El Ghaoui et al. applied the idea to semi-definite problems and least squares problems (Ghaoui et al. 1998; Ghaoui and Lebret 1997). Later, Bertsimas and Sim introduced budgeted uncertainty sets to reduce what they call the Price of Robustness (Bertsimas and Sim 2004a). A survey over robust optimization approaches for discrete and interval uncertainty can be found in Aissi et al. (2009). The different uncertainty sets and their robust counterparts are intensively studied in Li et al. (2011).

Subsequently, new robust optimization paradigms were presented and studied in the literature, with the main objective of making the approach better applicable to practical problems. Besides various two-stage approaches (Ben-Tal et al. 2004; Liebchen et al. 2009; Adjiashvili et al. 2015), which we will discuss in detail in Sect. 4, several other paradigms have been investigated, e.g., min–max regret robustness (Averbakh and Lebedev 2005; Inuiguchi and Sakawa 1995; Chassein and Goerigk 2016; Kouvelis and Yu 1996; Averbakh and Lebedev 2004; Aissi et al. 2005a, b, c) or the light robustness approach (Fischetti et al. 2009; Fischetti and Monaci 2009; Schöbel 2014). Surveys studying several of the different approaches can be found in Aissi et al. (2009), Bertsimas et al. (2011), Gabrel et al. (2014), Kasperski and Zieliński (2016), Ben-Tal and Nemirovski (2002), Gorissen et al. (2015) and Beyer and Sendhoff (2007); they also cover distributional robustness, which forms a connection between robust and stochastic optimization.

In the present survey, we consider general combinatorial optimization problems of the form

where \(X\subseteq \{ 0,1 \}^{n}\) describes the certain set of feasible solutions and where only the cost vector \(c\in \mathbb {R}^n\) is subject to uncertainty. In particular, we assume that an uncertainty set \(U\subseteq \mathbb {R}^n\) is given which contains all possible cost vectors c. The classical robust counterpart of Problem (P) is then given by Problem

In contrast to other surveys on this topic, we aim at pointing out the differences between several common classes of uncertainty sets, with a focus on ellipsoidal uncertainty; see Sect. 2. In Sect. 3, we will sort and structure the complexity results for Problem (RP) achieved in the literature for several underlying combinatorial problems, again with a focus on the role of the chosen class of uncertainty set. Typical complexity results for Problem (RP) are illustrated for the most elementary case \(X=\{0,1\}^n\), including sketches of the main proofs. Furthermore, we will discuss exact methods to solve Problem (RP) for the NP-hard cases, covering IP-based methods as well as oracle-based algorithms, which can be applied to every combinatorial problem (P) given by an optimization oracle. Finally, in Sect. 4, we will give an overview over various robust two-stage approaches presented in the literature and point out the connections between them.

2 Common uncertainty sets

The choice of the uncertainty set U is crucial in the design of the robust counterpart (RP). On the one hand, this choice should reflect the situation given in the application and lead to a realistic model of the given uncertainty, including the user’s attitude toward risk. On the other hand, the choice of U influences the tractability of the resulting problem (RP). For this reason, many different types of uncertainty sets have been investigated in the literature and are still being proposed. Roughly, most of these uncertainty sets can be classified as discrete, polyhedral, or ellipsoidal sets. For a study on the geometric relationship between the common uncertainty classes see Li et al. (2011).

2.1 Discrete uncertainty

Discrete (or scenario-based) uncertainty sets are finite sets \(U=\{c_1,\dots ,c_m\}\). They form the most intuitive case of uncertainty sets. In practice, uncertain problem data is often given as a finite list of scenarios observed in the past, e.g., the prices of stocks in portfolio optimization or the shipping volumes in the design of a transport network.

Unfortunately, in spite of their conceptual simplicity, assuming discrete uncertainty nearly always leads to intractable robust counterparts; see Sect. 3.1.1. In fact, for many well-studied underlying combinatorial problems, such as the shortest path problem or the spanning tree problem, the robust counterpart (RP) turns out to be weakly NP-hard if the number m of scenarios is fixed and strongly NP-hard if m is part of the input.

2.2 Polyhedral uncertainty

Even if it seems natural in practice to define uncertain costs by a finite list of possible scenarios, in particular when only finitely many observations from the past are available, there is no reason to exclude convex combinations of these scenarios: if two scenarios are likely enough to appear, then why should the convex combination of them not be a likely scenario? This leads to the concept of polyhedral (or polytopal) uncertainty, as polytopes are exactly the convex hulls of finite sets.

For most models of robust optimization, including the robust counterpart defined in (RP), it is easy to prove that changing from U to its convex hull does not change the problem, as the worst case in the inner maximization problem will be attained in an extreme point anyway. This seems to suggest that discrete and polytopal uncertainty sets are equivalent. However, this is not true for all robust two-stage optimization paradigms; see Sect. 4. Moreover, even if the equivalence holds from an abstract point of view, it does not hold from an algorithmic or complexity-theoretic point of view: the convex hull of m points can have exponentially many facets in m, and, vice versa, the number of vertices of a polytope can be exponential in the number of its facets. In particular, complexity results do not necessarily carry over from the discrete to the polyhedral case or vice versa.

In fact, the number of vertices is exponential for one of the most widely used polyhedral uncertainty set, namely interval uncertainty. Here, every objective function coefficient can vary independently in an interval \([l_i,u_i]\), so that U is an axis-parallel box

Note that the number of vertices is \(2^n\) here, so that a reduction to the discrete case is not efficient. However, using interval uncertainty, the classical robust counterpart is as easy to solve as the underlying problem, since we can just replace every uncertain coefficient by \(u_i\).

On the other hand, interval uncertainty leads to very conservative solutions, as it takes into account the possibility that every cost coefficient attains its worst case value independently. In an effort to mitigate this effect, the concept of budget uncertainty (also called Gamma uncertainty) has been introduced (Bertsimas and Sim 2004a). Building on the interval uncertainty set, the idea is to allow only a fixed number \(\varGamma \) of coefficients to deviate from their mean values. This leads to the uncertainty set

Dealing with a minimization problem and since \(X\subseteq \{ 0,1 \}^{n}\), we only need to consider positive deviations in the coefficients. For the classical robust counterpart (RP), we can equivalently consider the uncertainty set

as it was first introduced in Bertsimas and Sim (2004a), where \(c_0=\tfrac{1}{2} (u+l)\) is the center of the box [l, u] and \(d=\tfrac{1}{2}(u-l)\) are the maximum deviations from the center, and \(e_i\) denotes the i-th unit vector. Note that this set U is not a polytope in general, but when replacing it by its convex hull

assuming \(\varGamma \in \mathbb {N}\), the problem becomes a special case of polyhedral uncertainty. Since the original set U is finite here, budget uncertainty could also be considered a special case of discrete uncertainty; see Sect. 2.1 above. However, if \(\varGamma \) is not fixed, the number of scenarios is exponential in n, so that this viewpoint may be problematic in terms of complexity. Note that all three versions of the budgeted uncertainty set are equivalent for the classical robust counterpart (RP) while in general all sets differ from each other and can lead to different solutions, e.g., in the case of two-stage robustness; see Sect. 4.

An alternative approach is to bound the absolute deviation of c from the mean \(c_0\) by \(\varGamma \); see Sect. 4.1. All these models have in common that, on the one hand, they cut off the extreme scenarios in the interval uncertainty set and thus lead to less conservative solutions, and on the other hand, they usually yield tractable robust counterparts, assuming that the underlying problem is tractable; see Sect. 3.2.1.

Several extensions of budgeted and general polyhedral uncertainty sets have been devised in the literature. In multi-band uncertainty sets, the single deviation band is partitioned into multiple sub-bands for which the concept of budgeted uncertainty is applied independently (Büsing and D’Andreagiovanni 2012, 2013; Claßen et al. 2015). The concept of decision-dependent uncertainty sets was studied in Nohadani and Sharma (2016) and Lappas and Gounaris (2018). The authors consider uncertainty sets U(x) which depend on the decision x. This concept was also applied to budgeted uncertainty sets, assuming that the parameter \(\varGamma \) is not fixed but a function of the solution x (Poss 2013; Sim 2004).

In general, for arbitrary polytopes U, the robust counterpart (RP) turns out to be strongly NP-hard, no matter whether U is given by an inner or by an outer description; see Sect. 3.1.2.

2.3 Ellipsoidal uncertainty

Both discrete and polytopal uncertainty sets often depend on a collection of observed scenarios. These finitely many scenarios are only an approximation of the real distribution of the cost coefficients. In particular, when making the reasonable assumption that the cost coefficients are normally distributed, the confidence sets turn out to be ellipsoids of the form

where \(c_0\in \mathbb {R}^n\) is the expected value of the uncertain objective vector c and \(\varSigma \) is the covariance matrix of the entries of c—for sake of simplicity, we assume \(\varSigma \succ 0\) here. The parameter r describes the level of confidence, i.e., the risk the user is willing to take—a larger r leads to more conservative solutions. Given the set (3), it is easy to see, e.g., using conic duality, that (RP), can be rewritten as a nonlinear optimization problem

The existence of a smooth closed form expression of the objective function distinguishes ellipsoidal uncertainty from the uncertainty sets discussed above; it forms the basis of many solution approaches.

Problems of the form (4) are also known as mean-risk optimization problems in the literature, as their objective function is a weighted sum of the mean \(c_0^\top x\) and the risk \(\sqrt{x^\top \varSigma x}\) of the chosen solution x. Often, the risk part is modeled as \(x^\top \varSigma x\), which may lead to different optimal solutions. Mean-risk optimization is particularly popular in portfolio optimization, where the concept was introduced already in the 1950s by Markowitz (1952). For a comprehensive overview over mathematical methods of portfolio optimization, see Cornuejols and Tütüncü (2006).

Another natural way to derive ellipsoidal uncertainty sets is by considering the so-called Value-at-Risk model. The objective (in the minimization case) is to find a feasible solution \(x\in X\) and a value \(z\in \mathbb {R}\) such that the probability of x having an objective value worse than z is at most a given \(\varepsilon \in (0,1)\). Under this condition, the aim is to minimize z. The resulting problem thus reads

Assuming again that the entries of c are normally distributed, i.e., \(c\sim \mathcal{N}(c_0,\varSigma )\), one can show that the constraint \(\text {Pr}(c^\top x\ge z)\le \varepsilon \) is equivalent to

where \(\varPhi \) is the cumulative distribution function of the standard normal distribution. In summary, the above problem can be recast as (4) with \(r:=\varPhi ^{-1}(1-\varepsilon )\).

Usually, full information about the given distribution of c is not available in practice. However, one may approximate the mean \(c_0\) and the covariance matrix \(\varSigma \) by means of a finite set of observations. This is often done in portfolio optimization; see, e.g., Chang et al. (2000). Arguably, the resulting normal distribution yields a more realistic model of the inherent uncertainty than the finite set of observations itself.

In general, ellipsoidal uncertainty sets lead to intractable counterparts (RP) again. However, in the special case of uncorrelated cost coefficients—or when correlations are ignored in the model—the complexity-theoretic situation becomes more interesting. We then have \(\varSigma = Diag (\sigma )\) for some \(\sigma \in \mathbb {R}_+^n\) and, using binarity, we can rewrite Problem (4) as

Surprisingly, up to our knowledge, it is an open problem whether (5) is tractable for all X for which the underlying problem (P) is tractable. In particular, no tractable (certain) combinatorial optimization problem is known for which (5) turns out to be NP-hard. It is known however that an FPTAS exists as soon as the underlying problem admits an FPTAS (Nikolova 2010a). Furthermore (5) can be solved in polynomial time if X is a matroid. We further discuss this in Sect. 3.2.2.

2.4 General norms

Many of the uncertainty sets discussed above can be defined by means of a norm function \(\Vert .\Vert :\mathbb {R}^n\rightarrow \mathbb {R}\). Indeed, it is a natural approach to assume that the cost function c can vary within a certain radius around the expected scenario \(c_0\), with respect to a given norm. The resulting uncertainty set is thus of the form

where r again models the risk-averseness of the user. Defining \(\Vert c\Vert :=\sqrt{c^\top \varSigma ^{-1} c}\), we obtain ellipsoidal uncertainty as a special case, while the \(\infty \)-norm, after an appropriate scaling, gives rise to interval uncertainty. The convex variants of budgeted uncertainty correspond to a combination of the \(\infty \)-norm with the 1-norm; the two latter norms give rise to polytopal uncertainty sets. In general, a closed form expression for the robust counterpart (RP) for the set in (6) is

where \(\Vert .\Vert ^*\) is the dual norm to \(\Vert .\Vert \); see also Bertsimas et al. (2004).

In analogy to ellipsoidal uncertainty sets corresponding to the 2-norm, one can consider uncertainty sets based on the p-norm. Many results for the ellipsoidal case can be easily generalized to p-norms with \(p\in (1,\infty )\), e.g., the tractability of the robust counterpart for uncorrelated costs in the case \(X=\{0,1\}^n\) (Ilyina 2017).

2.5 Unbounded uncertainty sets

Most uncertainty sets considered in the literature are bounded. Essentially, this can be assumed without loss of generality. Indeed, if an unbounded direction a of U exists, it is easy to see that the inner maximization problem yields an implicit linear constraint \(a^\top x\le 0\), since for all \(x\in \mathbb {R}^n\) violating this constraint the inner maximum in (RP) is infinite. Adding this constraint explicitly allows to remove the unbounded direction from U. In other words, allowing unbounded directions in U means to allow to impose linear constraints on X. Note that if U is convex and unbounded, there always exists an unbounded direction. If uncertain constants are taken into account, as discussed in Sect. 2.6 below, it is even possible to model an affine linear constraint of the form \(a^\top x\le b\) by adding an unbounded direction \((a,-b)\) to U.

2.6 Uncertain constants

For a certain problem of the form (P), adding a constant in the objective function does not have any affect on the set of optimal solutions, so that constants are usually not considered explicitly. However, this changes in the uncertain setting, as also the constant may be uncertain. Nevertheless, this is usually not covered in the literature. Clearly, the robust counterpart including a constant,

is at least as hard as Problem (RP) for most classes of uncertainty sets, as the case of a certain constant can be modeled as a special case. It can be shown that also the reverse is true for most classical combinatorial optimization problems, i.e., including the uncertain constant does not increase the complexity of the problem (Kurtz 2016). Besides others, this is true for the shortest path problem, the spanning tree problem, the knapsack problem, and the unconstrained binary problem where \(X=\{0,1\}^n\). On the other hand, allowing an uncertain constant often simplifies NP-hardness proofs, as we will see in the following section.

3 Strictly robust optimization

We consider the strictly robust counterpart (RP) of the underlying problem (P). We are mostly interested in the complexity of (RP), which of course depends both on the feasible set X and the uncertainty set U. We start by reviewing the complexity results for general discrete, polyhedral, and ellipsoidal uncertainty sets in Sect. 3.1. In Sect. 3.2, we will focus on uncertainty sets that often lead to tractable robust counterparts. In Sect. 3.3, we will survey possible solution approaches for NP-hard cases.

3.1 Complexity for general sets

It turns out that the strictly robust counterpart (RP) is often NP-hard for general discrete, polyhedral, and ellipsoidal uncertainty sets, even in cases where the underlying problem (P) is tractable. Some of the main hardness results are collected in the following subsections, where we distinguish between weakly and strongly NP-hard variants of (RP) and also cover the question whether polynomial time approximation schemes exist. For the convenience of the reader, we will present proofs for the most elementary case \(X=\{0,1\}^n\), which is usually not considered in the literature.

3.1.1 Discrete uncertainty

The robust counterpart (RP) of many classical combinatorial optimization problems, including the shortest path and the spanning tree problem, is NP-hard even if U contains only two scenarios (Kouvelis and Yu 1996; Aissi et al. 2005b, c; Baumann et al. 2015). We now illustrate this by giving a short proof of NP-hardness for the most elementary case \(X=\{0,1\}^n\). In particular, this shows that the hardness is not related to any combinatorial structure of X, but only stems from the integrality constraints.

Theorem 1

The robust counterpart (RP) is NP-hard for \(X=\{0,1\}^n\) if the uncertainty set U contains exactly two scenarios.

Proof

By the discussion in Sect. 2.6, it suffices to show the NP-hardness of Problem (RPC) for the case of two scenarios, i.e., \(U=\{(c_1,{\bar{c}}_1),(c_2,{\bar{c}}_2)\}\). We describe a polynomial reduction of the (weakly) NP-hard Subset Sum problem to (RPC). Given integers \(s_1,\dots ,s_n\) and S, we have to decide whether there exists a subset \(I\subseteq \{1,\dots ,n\}\) with \(\sum _{i\in I}s_i=S\). We construct a corresponding instance of (RPC) by setting \((c_1,{\bar{c}}_1)=(s,-S)\) and \((c_2,{\bar{c}}_2)=(-s,S)\). We then have

It follows that there exists a set \(I \subseteq \{1, \cdots , n\}\) with \(\sum _{i \in I} s_i=S\) if and only if the optimal value of the constructed instance of (RPC) is zero. \(\square \)

Nevertheless, for several problems, e.g., the shortest path problem, the spanning tree problem, the knapsack problem and the unconstrained binary problem, pseudopolynomial algorithms have been found under the assumption that the number m of scenarios is fixed (Kouvelis and Yu 1996; Aissi et al. 2005d; Baumann et al. 2015). Most of the latter algorithms are based on dynamic programming. As a simple example, we observe

Theorem 2

For each fixed m, the robust counterpart (RP) admits a pseudopolynomial algorithm as well as a PTAS for \(X=\{0,1\}^n\) if the uncertainty set U contains exactly m scenarios. It admits an FPTAS if \(m=2\).

Proof

The problem can be reduced to the solution of m multidimensional knapsack problems with \(m-1\) constraints each (Baumann et al. 2015): given \(U=\{c_1,\dots ,c_m\}\), the i-th of these problems reads

The latter problems can be solved by pseudopolynomial algorithms and by a PTAS, if m is fixed, and by an FPTAS if \(m=2\); see, e.g., Kellerer et al. (2004). \(\square \)

Note however that there exists no FPTAS for the bidimensional knapsack problem unless \(\text{ P }=\text{ NP }\), so that the above construction does not directly yield an FPTAS for \(m\ge 3\) scenarios. Nevertheless, it has been shown that the min–max versions of the shortest path problem, the spanning tree problem and the knapsack problem all admit an FPTAS (Aissi et al. 2005a) for a fixed number of scenarios.

Aissi et al. prove that a pseudopolynomial algorithm for the min–max problem with a fixed number of scenarios always exists if the underlying search problem can be solved in polynomial time (Aissi et al. 2005d). Here the underlying search problem is the problem of finding, for a given objective value and a given cost vector, a solution which attains the value with respect to the given cost vector, or returns that no such solution exists.

An interesting problem in its robust version is the min-cut problem. While the robust min s–t-cut problem is strongly NP-hard even if the number of scenarios is fixed, the robust min-cut problem can be solved in polynomial time (Armon and Zwick 2006; Aissi et al. 2005c). To the best of our knowledge, it is still an open question whether the robust assignment problem is weakly or strongly NP-hard for a fixed number of scenarios.

When considering an unbounded number of scenarios, all of the mentioned problems become strongly NP-hard in their robust min–max versions (Kouvelis and Yu 1996; Aissi et al. 2005b, c). Again, we include a proof for the unconstrained binary problem, as to the best of our knowledge this result has not been proved in the literature yet.

Theorem 3

The robust counterpart (RP) is strongly NP-hard for \(X=\{0,1\}^n\) if the uncertainty set U is finite but unbounded.

Proof

Again, it suffices to show NP-hardness of Problem (RPC) containing an uncertain constant, for a finite set \(U=\{(c_1,{\bar{c}}_1),\dots ,(c_m,{\bar{c}}_m)\}\). For the reduction, we use the strongly NP-hard Set Cover problem: for \(k\in \mathbb {N}\), a given set of elements \(I=\left\{ 1,\ldots ,m\right\} \), and a set of subsets \(J\subseteq 2^I\), the problem is to decide if there exists a set of at most k subsets contained in J, such that each \(i\in I\) is contained in at least one of the subsets. We define an instance of the robust unconstrained binary problem (with uncertain constant) as follows: we set \(X=\{ 0,1 \}^{|J|}\) and define for each \(i\in I\) a scenario \((c_i,0)\in \mathbb {R}^{|J|+1}\) where \((c_i)_j = 1\) if element i is contained in the j-th subset of J and 0 otherwise. Furthermore, we add another scenario \((-M{\mathbf 1},Mk + 1)\), where \({\mathbf 1}\) is the all-one vector and M is big enough. If U is defined as the set of all constructed scenarios, there exists a solution for the set cover problem if and only if problem

has an optimal value greater or equal to 1. \(\square \)

An overview over the complexity of min–max problems under discrete uncertainty sets can be found in Aissi et al. (2009).

3.1.2 Polyhedral uncertainty

For general polyhedral uncertainty, Problem (RP) is NP-hard for most of the classical combinatorial problems, since we can easily reduce the two-scenario case by choosing U as the convex hull of the two scenarios. Note however that this does not settle the question whether the problem is weakly or strongly NP-hard. Even if the case of discrete uncertainty with unbounded number m of scenarios is strongly NP-hard, this does not imply strong NP-hardness for the general polyhedral uncertainty case, since the number of facets of the convex hull of the given m scenarios might be exponential in m. In other words, we cannot construct an outer description of U in an efficient way. Furthermore, considering polyhedra U with a fixed number of facets is not reasonable in general, since this implies unboundedness of U for \(n\ge m\); see Sect. 2.5. However, for an unbounded number of facets, the problem turns out to be strongly NP-hard for \(X=\{0,1\}^n\). To the best of our knowledge, this result has not been proved in the literature yet.

Theorem 4

The robust counterpart (RP) is strongly NP-hard for \(X=\{0,1\}^n\) and a polytope U (given by an outer description).

Proof

For the reduction, we use the strongly NP-hard Bin Packing problem. Given positive integers \(a_1,\dots ,a_t\) and C, the problem can be formulated as

In short, this problem can be written as

where \(n:=t^2+t\), \(d\in \{0,1\}^{n}\), \(A\in \mathbb {Z}^{m\times n}\), and \(b\in \mathbb {Z}^{m}\) with \(m:=2t\). Now consider the polytope

where \(a_i\) denotes the i-th row of A. One can easily verify that the vectors \((-a_i,b_i)\) are linearly independent. This implies that an outer description of U can be computed efficiently. Moreover, for each fixed \(y\in \{0,1\}^n\), we have

In summary, Problem (7) reduces to

which is of the form (RPC). Finally, the uncertain constant can again be eliminated, as discussed in Sect. 2.6. \(\square \)

In this case, the hardness essentially comes from the fact that we can use the polytope U to model linear constraints on X; see also the discussion in Sect. 2.5. However, the crucial step is to move from an inner to an outer description of U, which in general is not efficient. Note that the set U constructed in the latter proof together with the reduction from the Bin Packing problem could also be used to prove the result of Theorem 3.

On the other hand, if U is originally given by an inner description, i.e., U is the convex hull of a set of vectors \(U={{\mathrm{conv}}}\left( \{c_1,\ldots ,c_m\}\right) \), then we can easily reduce the robust min–max problem with unbounded number of scenarios, again by choosing U as the convex hull of the scenarios. Therefore, also in this case Problem (RP) is strongly NP-hard for most of the classical problems.

3.1.3 Ellipsoidal uncertainty

For general ellipsoidal uncertainty, Problem (RP) is NP-hard as well for most of the classical problems. This can again be proved by reducing the two-scenario problem, by choosing U as the convex hull of the two scenarios (Sim 2004). Note that the resulting ellipsoid is only one-dimensional, but also a reduction from the two-scenario problem to the min–max problem with full-dimensional ellipsoidal uncertainty, as defined in Sect. 2.3, is possible (Ilyina 2017). In Baumann et al. (2015), it has been proved that the unconstrained binary min–max problem with ellipsoidal uncertainty is strongly NP-hard. For convenience of the reader, we sketch the proof here.

Theorem 5

The robust counterpart (RP) is strongly NP-hard for \(X=\{0,1\}^n\) and an ellipsoid U (given by the covariance matrix \(\varSigma \) and the mean vector \(c_0\)).

Proof

We describe a polynomial reduction from Binary Quadratic Programming, which is a strongly NP-hard problem (equivalent to the Maximum-Cut Problem). We are thus given a problem of the form

where \(Q\in \mathbb {Z}^{n\times n}\) is any symmetric matrix and \(L\in \mathbb {Z}^n\). Using the binary of x, we may assume that Q is positive definite, so that also

is positive definite for \(c:=L^\top L+1\in \mathbb {Z}\). Now (8) can be reduced to

For \(M:=\sum _{ij}|A_{ij}|+1\in \mathbb {Z}\), we can in turn rewrite this as

The latter problem is of the form (4). \(\square \)

3.2 Tractable uncertainty sets

As discussed in the previous sections, Problem (RP) is NP-hard for most classical combinatorial optimization problems even if U only contains two scenarios or is a general polytope or ellipsoid. Therefore, in order to obtain positive complexity results, it is generally necessary to restrict oneself to more specific uncertainty sets. In particular, one may expect tractable robust counterparts in the case of interval or budgeted uncertainty, see Sect. 3.2.1, or for uncorrelated ellipsoidal uncertainty, see Sect. 3.2.2.

3.2.1 Interval and budgeted uncertainty

As already mentioned before, using interval uncertainty leads to robust counterparts as easy as the underlying problem: it can be easily verified that Problem (RP) with \(U=[l,u]\) is equivalent to Problem (P) with objective vector u. This approach often leads to very conservative solutions, since all uncertain parameters are allowed to attain their worst case values at the same time. To tackle this problem, Bertsimas and Sim introduced budgeted uncertainty sets (Bertsimas and Sim 2004a). They propose to add a budget constraint to the interval uncertainty set, which limits the number of variables which may differ from their mean value at the same time; see (1). They prove that the corresponding robust counterpart (RP) can be reduced to solving \(n+1\) deterministic problems (Bertsimas and Sim 2003). Therefore, the problem can be solved in polynomial time as soon as the underlying problem can be solved in polynomial time. In a similar way, one can prove that (RP) can be reduced to the solution of only two deterministic problems when considering

i.e., the variant where the absolute deviation is bounded by \(\varGamma \). Furthermore, Bertsimas and Sim prove that, if an \((1+\varepsilon )\)-approximation algorithm for the deterministic problem exists, then we can approximate the robust min–max version with budgeted uncertainty set with the same accuracy by solving \(n+1\) deterministic problems by the approximation algorithm (Bertsimas and Sim 2003). The results in Bertsimas and Sim (2003) were later improved in Álvarez-Miranda et al. (2013) and Park and Lee (2007). In Lee (2014), the authors prove that it is sufficient to solve \(\lceil \frac{n-\varGamma }{2}\rceil +1\) deterministic problems to calculate an optimal solution of (RP) with budgeted uncertainty.

Similar results as in Bertsimas and Sim (2003) hold for variable budgeted uncertainty, introduced in Poss (2013) and Sim (2004). Here, instead of a fixed parameter \(\varGamma \), we are given a function \(\gamma : X\rightarrow \mathbb {N}\) and define the uncertainty set as

which requires to extend the definition of the robust counterpart (RP) due to the dependence of U from x. In Poss (2013), the author proves that the resulting problem can be solved by solving at most \(n(n+1)\) deterministic problems if we assume that \(\gamma \) is an affine function in x. Furthermore, given a dynamic programming algorithm for a combinatorial problem that satisfies certain assumptions, the author derives a dynamic programming algorithm for Problem (RP) with budgeted and variable budgeted uncertainty. Besides other problems, the latter construction is applicable for the shortest path problem, the traveling salesman problem, and the scheduling problem under budgeted uncertainty sets.

An extension to uncertainty sets modeled by multiple knapsack constraints was studied in Poss (2017). Here the author extends the results in Bertsimas and Sim (2003) to derive exact and approximation algorithms for the robust counterpart. Furthermore, besides other results the NP-hardness of the robust counterpart is proved for the case that the number of knapsack constraints is part of the input.

3.2.2 Uncorrelated ellipsoidal uncertainty

Another subclass which may lead to tractable robust counterparts is the class of uncorrelated ellipsoidal uncertainty sets, i.e., we have that U is an axis-parallel ellipsoid. In this case, the correlation matrix \(\varSigma \) in (3) is a diagonal matrix and the corresponding robust counterpart is given by Problem (5). It has been shown that the latter problem can be solved in polynomial time if X is a matroid (Nikolova 2010a, b). In particular, it can be solved efficiently if \(X=\{ 0,1 \}^{n}\) or if X models the feasible set of the spanning tree problem. This essentially follows from the submodularity of the objective function in (5).

The latter result can also be derived by interpreting Problem (5) as a bicriteria problem with objective functions \((c_0^\top x, \sigma ^\top x)\). Nikolova (2010a) proved that any optimal solution of Problem (5) is an extreme efficient solution of the bicriteria problem, i.e., it is an extreme point of the pareto frontier. Therefore, all optimal solutions can be obtained by solving the linear problem

for appropriate \(\lambda \in [0,1]\). For matroids, an optimal solution of the latter problem depends only on the ordering of the coefficients in the objective function, so that we only have to consider the values of \(\lambda \) for which the ordering changes. However, for a given pair of variables the order can change at most once, which shows that the robust problem can be solved by reduction to at most \(n\atopwithdelims ()2\) certain problems.

For most classical combinatorial optimization problems, it is not known whether Problem (5) is tractable or NP-hard. However, again by considering extreme points of the Pareto frontier, Nikolova proves that if the underlying problem admits an FPTAS—which applies in particular if it can be solved in polynomial time—then also Problem (5) admits an FPTAS.

A further oracle-based result is proved by Bertsimas and Sim (2004b). By replacing the concave square-root function by its subgradients and solving the corresponding linear deterministic problem over x for each value in \(\{ \sigma ^\top x \ | \ x\in \{ 0,1 \}^{n}\}\), they obtain an exact algorithm for (5).

In Mokarami and Hashemi (2015), the authors show that the constrained shortest path problem under uncorrelated ellipsoidal uncertainty can be solved in pseudopolynomial time.

3.3 Solution approaches for NP-hard cases

As discussed in Sect. 3.1, the robust counterpart (RP) is NP-hard for many uncertainty sets U even if the underlying problem (P) is tractable. This implies that, unless P \(=\) NP, there is no efficient way of reducing (RP) to (P) in general, i.e., no algorithm for solving (RP) by solving a polynomial number of problems of type (P)—as it was the case for interval or budgeted uncertainty; see Sect. 3.2.1. However, in spite of the NP-hardness, an exact solution of such problems is desirable. Though most of the literature in robust combinatorial optimization concentrates on theoretical complexity issues or the design of new robust optimization paradigms, some papers also discuss exact methods for NP-hard cases. In this section, we describe some approaches that are applicable to general problems of type (RP). These can be divided into two main groups: algorithms relying on an optimization oracle for the underlying problem (RP) and those using integer programming (IP) formulations.

3.3.1 IP-based approaches

Assuming that a compact integer formulation \(X=\{x\in \{0,1\}^n\mid Ax\le b\}\) is given, we obtain a natural relaxation

of Problem (RP). A basic, but important observation is that (10) is a convex optimization problem. In fact, the objective function

is convex, whatever uncertainty set U is considered. This observation gives rise to a straightforward exact solution approach to solve Problem (RP): the solution of the relaxation (10) yields a lower bound for (RP), which can be embedded into a branch-and-bound approach for solving Problem (RP) to optimality.

Even if the convexity of Problem (10) does not depend on the structure of U, different classes of uncertainty sets lead to (practically) more or less efficient algorithms for computing the lower bounds. In case of a discrete set \(U=\{c_1,\dots ,c_m\}\), Problem (10) simply reduces to the linear program (LP)

For polyhedral U (given by an outer description), we can dualize the linear program (11) and again obtain a compact LP formulation for (10). Finally, the ellipsoidal case leads to a second-order cone problem (SOCP) of the form

Both LPs and SOCPs can be solved efficiently in theory, e.g., by interior point methods. Moreover, modern IP solvers such as CPLEX (Corporation 2015) or Gurobi (Gurobi Optimization 2016) can often handle SOCP constraints, so that Problem (RP) can be directly addressed for all mentioned classes of uncertainty sets. These solvers often also allow to include separation algorithms, for cases in which a compact formulation of (P) does not exist, e.g., for the spanning tree problem.

Several authors propose methods to improve the performance of IP-based approaches, either by presenting cutting planes to enforce the model and the resulting dual bounds or by developing alternative formulations. Atamtürk (2006) presents extended formulations for mixed-integer 0–1 programs under generalized budgeted uncertainty. Cutting planes exploiting submodularity in the case of uncorrelated ellipsoidal uncertainty are devised in Atamtürk and Narayanan (2008). The authors in Monaci et al. (2013) develop a dynamic programming algorithm for the robust knapsack problem under budgeted uncertainty and compare it besides others to a compact formulation and the branch-and-cut algorithm developed in Fischetti and Monaci (2012).

A different approach for convex uncertainty sets is based on scenario generation; see, e.g., Mutapcic and Boyd (2009) for the general setting and a convergence analysis. The basic idea is to produce scenarios \(c_1,\dots ,c_m\) iteratively and to solve the LP relaxation (12) in every iteration, yielding an optimal solution \((x^*,z^*)\). Next, a worst case scenario \(c_{m+1}\) for \(x^*\) is computed, which in our situation can be done by maximizing the linear function \((x^*)^\top c\) over \(c\in U\). Now if \(c_{m+1}^\top x^* \le z^*\), then \(x^*\) is an optimal solution for the relaxation (10), otherwise \(c_{m+1}\) is added to the set of scenarios and Problem (12) is solved again. This approach is compared experimentally to the standard reformulation approach in Fischetti and Monaci (2012) for budgeted uncertainty and in Bertsimas and Lubin (2016) for budgeted and ellipsoidal uncertainty. The authors of the latter paper discuss many variants and implementation details and conclude that none of the two approaches dominates the other. In Pessoa and Poss (2015), telecommunication network design problems under two sources of uncertainty with quadratic dependencies are considered. Besides other results, a scenario generation approach is developed and tested for polyhedral and ellipsoidal uncertainty.

Finally, various approaches based on Benders decomposition have been devised for robust mixed-integer programming; see, e.g., Saito and Murota (2007) for the ellipsoidal uncertainty case or Naoum-Sawaya and Buchheim (2016) for a problem-specific approach that however allows to address very general uncertainty sets.

3.3.2 Oracle-based approaches

In many situations, it is preferable to have an algorithm for Problem (RP) that is purely based on an optimization oracle for the underlying problem (P). This may be the case because the underlying problem is well-studied, so that fast solution algorithms exist, or because the underlying problem is so complex that it is not desirable to re-investigate it from a robust optimization point of view. Moreover, there may not be any compact IP formulation at hand.

In the case of interval or budgeted uncertainty, the robust counterpart can be reduced to the solution of at most linearly many instances of the underlying problem, as discussed in Sect. 3.2.1. For uncorrelated ellipsoidal uncertainty sets, the basic idea of the FPTAS mentioned in Sect. 3.2.2 can be extended to obtain an exact oracle-based algorithm for (RP); see Nikolova (2010a, b). The number of oracle calls in the latter approach is exponential in general; however, it is linear in the number of breakpoints of the bicriteria optimization problem \(\min _{x\in X} (c_0^\top x,\sigma ^\top x)\) derived from the reformulated problem (5).

Few approaches have been presented in the literature that can be applied, in principle, to general sets U. An algorithm based on Lagrangian decomposition has been presented in Baumann et al. (2014) for the case of uncorrelated ellipsoidal uncertainty. The approach decouples the nonlinear objective function from the underlying linear problem; it requires optimization oracles for both the underlying linear problem (P) and for the unconstrained nonlinear problem

The Lagrangian bound can be computed by a subgradient method and then be integrated into a branch-and-bound-scheme. In the uncorrelated ellipsoidal uncertainty case, Problem (14) can be solved in \(O(n\log n)\) time (Baumann et al. 2014). However, for most other types of uncertainty, Problem (14) remains NP-hard; see Theorems 1, 3, 4, and 5. Nevertheless, it can be solved in pseudopolynomial time for a fixed finite number of scenarios; see Theorem 2. Moreover, within a branch-and-bound-scheme, it is enough to compute lower bounds for (14). Such bounds can be obtained by relaxing \(\{0,1\}^n\) to \([0,1]^n\), leading to a convex problem again, or by considering underestimators in the ellipsoidal case (Ilyina 2017).

Lower bounds in a branch-and-bound-scheme can also be obtained by solving the relaxed problem

In case no compact description of \({{\mathrm{conv}}}\left( X\right) \) as in (10) is given, several approaches have been studied which make use of a linear optimization oracle for X. One general approach to solve Problem (15), originally proposed to solve min–max–min-robust counterparts (see Sect. 4.4), uses column generation to produce new worst case scenarios iteratively. It can be considered a dual approach to the iterative scenario generation method described above, based on the problem

which is equivalent to (15). More precisely, the algorithm starts with a set of feasible solutions \(X_0\subseteq X\) and then alternates between computing a worst-case scenario \(c^*\) for Problem (15) over \({{\mathrm{conv}}}\left( X_0\right) \) and computing an optimal solution for (P) with objective \(c^*\), to be added to \(X_0\). The algorithm stops when no feasible solution exists that can improve the worst-case solution. In the former step, the problem reduces to a linear optimization problem over U, with additional linear constraints. In the discrete and polyhedral case, one again obtains an LP, while the ellipsoidal case leads to a quadratic problem. In both cases, the subproblem can thus be solved efficiently. In the latter step, one can use the optimization oracle to check whether a new feasible solution has to be added to \(X_0\). For details see Buchheim and Kurtz (2017).

A related approach to Problem (15) using a Frank–Wolfe-type strategy has been devised in Buchheim et al. (2015), where it is applied to general ellipsoidal uncertainty. The algorithm is again of an iterative nature. In each iteration, a set \(X'\) of feasible solutions is considered, as well as a point \(x'\) in their convex hull. Then the gradient of the objective function (11) in \(x'\) is computed and minimized over the set X, using the linear optimization oracle. An exact line search is performed between \(x'\) and the resulting linear minimizer, and the set \(X'\) is updated. A Frank–Wolfe-approach for the case of uncorrelated ellipsoidal uncertainty has been presented in Bertsimas and Sim (2004b).

4 Robust two-stage problems

A general robust two-stage problem can be formulated as

where \(x\in X\) are the first-stage decisions which have to be taken before the scenario is known. After the scenario \(\xi \in U\) materializes, we choose the best possible second-stage decisions \(y\in Y\), such that the pair (x, y) is feasible for the actual scenario, i.e., \((x,y)\in Z_\xi \). As common in robust optimization, we optimize the worst case objective value of \(f_\xi (x,y)\) over all scenarios \(\xi \in U\). As before, we will concentrate on combinatorial optimization under cost uncertainty and thus assume in the following that \(X\subseteq \{ 0,1 \}^{n_1}\) and \(f_\xi (x,y)=\xi ^\top (x,y)\). For the second-stage, we assume the general case \(Y\subseteq \mathbb {R}^{n_2}\) and \(Z_\xi \subseteq \mathbb {R}^{n_1+n_2}\) and study the cases of real and integer recourse separately in the following sections. Moreover, we focus on the case where \(Z_\xi \) does not depend on \(\xi \).

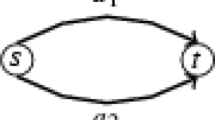

The two-stage approach is tailored for problems for which a subset of the decisions can be implemented after the scenario is known. Applications occur, e.g., in the field of network design problems where in the first stage a capacity on an edge must be bought such that, after the real costs on each edge are known, a minimum cost flow is sent from a source to a sink (Bertsimas and Goyal 2013). Further applications can be problem formulations involving slack variables or, more generally, auxiliary variables depending on the real decision variables and the realized scenario. In a strictly robust setting, such variables must be determined independently of the scenario, which is not possible in general (and not necessary in practice).

Clearly, one can generalize this approach to more than two stages. A multi-stage approach is applicable when the decisions are made in several steps, assuming that the uncertainty vanishes gradually.

4.1 Adjustable robustness

Adjustable robustness was first introduced in Ben-Tal et al. (2004) and can be seen as the beginning of two-stage models in robust optimization. In fact, this approach is often just called Two-Stage Robustness. While the adjustable robust approach was originally introduced for general linear formulations with uncertainty in the constraints, later the approach was applied to combinatorial problems. Considering combinatorial problems with uncertainty only occurring in the cost function, the general linear adjustable robust counterpart is of the form

for feasible sets \(X\subseteq \{ 0,1 \}^{n_1}\), \(Y\subseteq \mathbb {R}^{n_2}\), and \(Z\subseteq \mathbb {R}^{n_1+n_2}\). A recent survey on this topic can be found in Yanıkoğlu et al. (2017).

Formally, by setting \(n_2=0\), the classical robust min–max problem is a special case of (ARP) and therefore all NP-hardness results from Sect. 3 carry over to Problem (ARP). Furthermore for interval uncertainty \(U=[l,u]\), as for the classical min–max problem, it can be easily verified that Problem (ARP) is equivalent to the deterministic problem with objective vector u if \(Y\subseteq \mathbb {R}_+^{n_2}\). However, for a closer investigation of complexity and solution approaches, an important question is whether we assume Y (or Z) to be a discrete set or not, i.e., whether we consider real or integer recourse.

4.1.1 Real recourse

We first discuss the case where no additional integrality constraints occur in the second stage, which has been investigated intensively in the literature.

The adjustable robust counterpart was originally introduced for problems with uncertain constraints and certain objective. In our setting, we can use a straightforward level set transformation to shift the uncertainty from the objective function into the constraints and hence also apply methods designed for problems with uncertain constraints. Even if the feasible sets Y and Z are given by linear uncertain constraints, the adjustable robust counterpart is NP-hard (Minoux 2011). Ben-Tal et al. (2004) propose to approximate the problem by assuming that the optimal values of the wait and see variables y are affine functions of the uncertainty parameters \(\xi \), i.e., \(y=y_0 + W\xi \) for a vector \(y_0\) and a matrix W of appropriate size. The second-stage decisions y are then replaced by the choice of \(y_0\) and W. The authors in Ben-Tal et al. (2004) prove that in the case of fixed recourse, i.e., if the constraint parameters of the second-stage decisions are not uncertain, the problem is equivalent to a classical min–max problem with uncertainty in the constraints and therefore computationally tractable if we have a separation oracle for U. Note that in the case of these so-called affine recourse decisions U can be replaced by its convex hull if Y and Z can be described by inequalities given by quasiconvex functions in \(\xi \). Affine recourse decisions and related and extended ideas have been studied intensively in the literature (Atamtürk and Zhang 2007; Ben-Tal et al. 2005; Calafiore 2008; Georghiou et al. 2015; Bertsimas and Georghiou 2015; Chen and Zhang 2009; Bertsimas and Georghiou 2014; Iancu 2010; Kuhn et al. 2011; Shapiro 2011; Vayanos et al. 2012). Moreover, it has been proved that under certain assumptions affine decision rules are optimal for the adjustable robust counterpart (Bertsimas et al. 2010; Iancu et al. 2013).

However, if we consider the general Problem (ARP) with real recourse for the combinatorial version with cost uncertainty, we obtain a classical robust min–max problem if U and the feasible sets Y and Z are convex. Indeed, applying a classical minimax theorem to the inner problem

we can swap the maximum and the minimum. Therefore in this case (ARP) is equivalent to the classical min–max problem

Note that the latter result even holds without assuming affine decision rules.

For the case of real but not necessarily affine recourse, decomposition methods have been proposed for budget (Billionnet et al. 2014) and polyhedral (Ayoub and Poss 2016) uncertainty.

4.1.2 Integer recourse

We now focus on the case of integer recourse i.e. \(Y\subseteq \mathbb {Z}^{n_2}\), which so far has been investigated much less intensively in the literature. Kasperski and Zieliński (2017) consider combinatorial problems, where a subset of the variables of the solution are determined in the second stage. They prove that Problem (ARP) is NP-hard for the shortest path problem, the minimum s–t-cut problem and the minimum assignment problem, even if U contains only two scenarios and \(n_1,n_2\ge 1\). They prove for the same underlying problems that the adjustable counterpart is even strongly NP-hard if the number of scenarios is unbounded. Furthermore it has been proved that Problem (ARP) is NP-hard for the selection problem even if U only contains two scenarios and that it is strongly NP-hard for the spanning tree problem and the selection problem with unbounded number of scenarios (Kasperski and Zieliński 2011, 2015).

However, the positive complexity results of the min–max problem for budgeted uncertainty are not transferable to the adjustable robust counterpart: considering budgeted uncertainty for adjustable robustness, note that in general the variants of Problem (ARP) with budgeted uncertainty sets (1) and (2) are not equivalent, different from the classical min–max problem.

In Kasperski and Zieliński (2017), the authors prove that Problem (ARP) is NP-hard for the shortest path problem and the spanning tree problem with budgeted uncertainty defined as in (1) if the budget parameter \(\varGamma \) is part of the input. It is an open question whether the problem remains NP-hard for a constant parameter \(\varGamma \). In Chassein et al. (2017), besides other problems, the adjustable robust counterpart of the selection problem is studied under budgeted uncertainty. The authors derive a mixed-integer formulation for the budgeted uncertainty sets (1) and (9) and prove that the problem can be solved in polynomial time for the latter variant.

Approximation algorithms have been developed for the two-stage variant of uncertain network problems and general LP formulations for an exponential number of scenarios (Feige et al. 2007; Khandekar et al. 2008). Furthermore, an exact column-and-constraint generation algorithm has been devised in Zeng and Zhao (2013).

More recently, general approaches for solving Problem (ARP) in the case of integer recourse, at least approximately, have been developed. They use nonlinear decision rules (Bertsimas and Georghiou 2014, 2015) or partitionings of the uncertainty sets (Bertsimas and Dunning 2016; Postek and Hertog 2016; Subramanyam et al. 2017; Hanasusanto et al. 2015).

4.2 Recoverable robustness

Recoverable robust optimization problems have been introduced in Liebchen et al. (2009). The main idea is to calculate a solution which works well in an average scenario and then, after the upcoming scenario is known, can be turned into a feasible solution which is optimal for the given costs in the scenario. As an application example, the approach has been studied for timetabling problems in Liebchen et al. (2009). Here, we aim at computing a good timetable for the case where no disturbances occur. If a disturbance occurs, we want to slightly change the pre-calculated solution to make it feasible and tractable for the actual situation. Formally, the main idea behind this approach is that a set \(\mathcal A\) of recovery algorithms is given such that each of them can be applied to a solution x and a scenario \(\xi \) to construct a feasible solution for the scenario.

When applied to problems with uncertainty only in the costs, the recoverable robust approach can be interpreted as follows: we aim at computing a solution x, such that the best feasible solution we can compute from x by one of our algorithms is worst-case optimal. A special case of this variant is the so-called robust optimization with incremental recourse, introduced in Nasrabadi and Orlin (2013). Here, instead of arbitrary algorithms in the second stage, we allow to change the solution x up to a certain distance. In the combinatorial setting, this leads to the problem

where \(\delta \) is the given distance measure and \(k\in \mathbb {N}\). Typical distance measures investigated in the literature are \(\delta _1(x,y) = |y\setminus x|\) or \(\delta _2(x,y) = |x\setminus y|\) or the symmetric difference.

In the following, we list results for the distance measure \(\delta _1\). An overview about further results for different measures can be found in Kasperski and Zieliński (2016). For discrete uncertainty sets it has been proven that Problem (IRRP) is NP-hard for the minimum spanning tree problem and the selection problem even if U only contains two scenarios and that both problems are strongly NP-hard if the number of scenarios is unbounded (Kasperski and Zieliński 2015; Kasperski et al. 2014). The recoverable robust counterpart is strongly NP-hard for the shortest path problem even for two scenarios. The recoverable knapsack problem is weakly NP-hard for a fixed number of scenarios and strongly NP-hard for an unbounded number of scenarios (Büsing et al. 2011b). For interval uncertainty, the selection problem and the spanning tree problem are solvable in polynomial time (Kasperski and Zieliński 2015; Hradovich et al. 2016). The shortest path problem is strongly NP-hard in this case (Büsing 2012). For budgeted uncertainty, in Büsing et al. (2011a) a linear integer formulation of quadratic size is derived for the recoverable knapsack problem. For the budgeted uncertainty variants (1) and (9) the recoverable shortest path problem is strongly NP-hard (Nasrabadi and Orlin 2013). Further results on recoverable robustness for combinatorial problems can be found in Büsing (2011).

4.3 Bulk robustness

The concept of Bulk Robustness was presented in Adjiashvili et al. (2015) and studied for the shortest path problem and the minimum matroid basis problem. In contrast to the previous models, this approach considers a set of failure scenarios, where each failure scenario is a set of edges which can break down simultaneously. The aim is to calculate a set of edges such that, if we remove the edges of any failure scenario from this set, it still contains the edge set of a feasible solution of the combinatorial problem. In particular, a solution of a bulk-robust counterpart is a superset of a feasible solution, but not a feasible solution itself in general.

As an application, consider a railway system for which a shortest path has to be calculated. Because of possible constructions or accidents it can happen that a section of the railway system is not passable anymore. In this case, we can use the bulk-robust idea to calculate a set of sections which always contain a feasible path no matter which of the failure scenarios occurs.

The bulk-robust approach is studied for several combinatorial optimization problems in Adjiashvili et al. (2015, 2016, 2017). An extension, which is at the same time related to the recoverable robust idea, was presented in Adjiashvili and Zenklusen (2011) for the shortest path problem: in a second stage, after the failure scenario is known, it is allowed to add r edges to the pre-calculated edge set to connect the given nodes s and t. Note that both approaches consider only constraint uncertainty given by the failure scenarios.

A natural way to adapt the bulk-robust concept to problems with only cost uncertainty is to ask for a set of edges such that the best solution contained in the set is worst-case optimal. However, this approach is only reasonable if the vector x is either restricted or penalized by the objective function, as otherwise the optimal solution is always \({\mathbf 1}\). We thus obtain the two problem variants

and

A generalization of the former model was mentioned in Kasperski and Zieliński (2017), but to the best of our knowledge, no complexity results have been devised for this problem. Note however that both variants are at least as hard as the original bulk-robust problem: the failure scenarios can be modeled by large enough costs in the corresponding scenarios. This implies that both variants are NP-hard for the shortest path problem and the assignment problem (Adjiashvili et al. 2015, 2016).

4.4 K-adaptability

As mentioned in Sect. 4.1, Problem (2SRP) in general is NP-hard. Besides using affine decision rules, another way to approximate Problem (2SRP) is to limit the number of second-stage solutions by a given parameter K and calculate these solutions in the first stage. This idea, called K-adaptability, has been introduced in Bertsimas and Caramanis (2010) and has been applied to robust two-stage problems in Hanasusanto et al. (2015). For the case that only the objective function is uncertain, the authors of Hanasusanto et al. (2015) show that this problem is equivalent to the exact problem (2SRP) for large enough K. Furthermore, by dualizing the inner max–min problem, the authors provide a mixed-integer linear programming formulation of polynomial size, which they evaluated for the shortest path problem, besides others. For the general case of constraint uncertainty, the authors prove that even evaluating the objective function is NP-hard.

As a special case of K-adaptability in two-stage robust optimization, in Buchheim and Kurtz (2017) the authors study problems of the form

where again \(X\subseteq \{ 0,1 \}^{n}\) and \(U\subseteq \mathbb {R}^n\). Problem (KAP) is an extension of the classical min–max problem (RP) and yields better solutions in general if \(k>1\). The idea is to calculate k solutions by solving problem (KAP) once and to quickly choose the best of them after the scenario is known.

It is shown in Buchheim and Kurtz (2017) that Problem (KAP) with an additional uncertain constant is NP-hard for \(X=\{0,1\}^n\) and any fixed k even if U is a polyhedron given by an inner description. Furthermore, it is shown that Problem (KAP) is equivalent to

for \(k\ge n+1\), which is a continuous convex problem. By using results on the equivalence of optimization and separation for convex problems proved in Grötschel et al. (1993), the authors show that for general bounded convex uncertainty sets over which a linear function can be optimized efficiently, Problem (KAP) for \(k\ge n+1\) can be solved in polynomial time as soon as the underlying deterministic problem can be solved in polynomial time. Furthermore, the authors provide an exact oracle-based algorithm to solve the problem for \(k\ge n+1\), see Sect. 3.3.2, as well as a heuristic approach for \(k\le n\). For discrete uncertainty sets, the problem is analyzed in Buchheim and Kurtz (2016). The authors show that the complexity of Problem (KAP) coincides with the complexity of the corresponding min–max problem (RP) for many classical combinatorial optimization problems.

5 Conclusion

Considering all classical types of uncertainty sets discussed above, the main dividing line between hard and easy cases seems to be the inclusion of correlations: in the case of interval uncertainty, where all cost coefficients can vary independently, the robust counterpart inherits the complexity of the underlying problem. In the case of uncorrelated ellipsoidal uncertainty, it is not known yet whether the same is true, but positive general results exist. On the other hand, uncertainty sets allowing to model correlations, i.e., general discrete, polyhedral, and ellipsoidal sets, usually lead to NP-hard counterparts. The budgeted uncertainty case is on the borderline, as it takes correlations into account in a rudimentary way, without increasing the complexity.

Apart from many interesting open complexity-theoretic questions, we think that there is a lot of potential for improving exact methods for general classes of uncertainty sets. By a more extensive use of techniques from mathematical programming and nonlinear (robust) optimization, we believe that such methods can become more powerful even in the combinatorial optimization setting. In particular, such nonlinear methods are relevant when dealing with non-finite and non-polyhedral uncertainty sets. Such sets often yield a more realistic description of the given uncertainty, while at the same time not necessarily leading to harder robust counterparts.

References

Adjiashvili D, Zenklusen R (2011) An s–t connection problem with adaptability. Discrete Appl Math 159(8):695–705

Adjiashvili D, Stiller S, Zenklusen R (2015) Bulk-robust combinatorial optimization. Math Program 149(1–2):361–390

Adjiashvili D, Bindewald V, Michaels D (2016) Robust assignments via ear decompositions and randomized rounding. In: Chatzigiannakis I, Mitzenmacher M, Rabani Y, Sangiorgi D (eds) 43rd international colloquium on automata, languages, and programming (ICALP 2016). Leibniz international proceedings in informatics (LIPIcs), vol 55. Schloss Dagstuhl–Leibniz-Zentrum fuer Informatik, Dagstuhl, Germany, pp. 71:1–71:14. https://doi.org/10.4230/LIPIcs.ICALP.2016.71

Adjiashvili D, Bindewald V, Michaels D (2017) Robust assignments with vulnerable nodes. Technical report. http://arxiv.org/abs/1703.06074

Aissi H, Bazgan C, Vanderpooten D (2005a) Approximation complexity of min–max (regret) versions of shortest path, spanning tree, and knapsack. In: Algorithms—ESA 2005. Lecture notes in computer science, vol 3669. Springer, Berlin, pp 862–873

Aissi H, Bazgan C, Vanderpooten D (2005b) Complexity of the min–max and min–max regret assignment problems. Oper Res Lett 33(6):634–640

Aissi H, Bazgan C, Vanderpooten D (2005c) Complexity of the min–max (regret) versions of cut problems. In: Algorithms and computation. Springer, Berlin, pp 789–798

Aissi H, Bazgan C, Vanderpooten D (2005d) Pseudo-polynomial algorithms for min–max and min–max regret problems. In: 5th international symposium on operations research and its applications (ISORA 2005), pp 171–178

Aissi H, Bazgan C, Vanderpooten D (2009) Min–max and min–max regret versions of combinatorial optimization problems: a survey. Eur J Oper Res 197(2):427–438

Álvarez-Miranda E, Ljubić I, Toth P (2013) A note on the Bertsimas & Sim algorithm for robust combinatorial optimization problems. 4OR 11(4):349–360. https://doi.org/10.1007/s10288-013-0231-6

Armon A, Zwick U (2006) Multicriteria global minimum cuts. Algorithmica 46(1):15–26

Atamtürk A (2006) Strong formulations of robust mixed 0–1 programming. Math Program 108:235–250

Atamtürk A, Narayanan V (2008) Polymatroids and mean-risk minimization in discrete optimization. Oper Res Lett 36(5):618–622

Atamtürk A, Zhang M (2007) Two-stage robust network flow and design under demand uncertainty. Oper Res 55(4):662–673

Averbakh I, Lebedev V (2004) Interval data minmax regret network optimization problems. Discrete Appl Math 138(3):289–301

Averbakh I, Lebedev V (2005) On the complexity of minmax regret linear programming. Eur J Oper Res 160(1):227–231. https://doi.org/10.1016/j.ejor.2003.07.007

Ayoub J, Poss M (2016) Decomposition for adjustable robust linear optimization subject to uncertainty polytope. Comput Manag Sci 13(2):219–239

Baumann F, Buchheim C, Ilyina A (2014) Lagrangean decomposition for mean–variance combinatorial optimization. In: Combinatorial optimization—third international symposium, ISCO 2014. lecture notes in computer science, vol 8596. Springer, Berlin, pp 62–74

Baumann F, Buchheim C, Ilyina A (2015) A Lagrangean decomposition approach for robust combinatorial optimization. Technical report, Optimization online

Ben-Tal A, Nemirovski A (1998) Robust convex optimization. Math Oper Res 23(4):769–805

Ben-Tal A, Nemirovski A (1999) Robust solutions of uncertain linear programs. Oper Res Lett 25(1):1–13

Ben-Tal A, Nemirovski A (2002) Robust optimization-methodology and applications. Math Program 92(3):453–480

Ben-Tal A, Goryashko A, Guslitzer E, Nemirovski A (2004) Adjustable robust solutions of uncertain linear programs. Math Program 99(2):351–376

Ben-Tal A, Golany B, Nemirovski A, Vial JP (2005) Retailer–supplier flexible commitments contracts: a robust optimization approach. Manuf Serv Oper Manag 7(3):248–271

Bertsimas D, Caramanis C (2010) Finite adaptability in multistage linear optimization. IEEE Trans Autom Control 55(12):2751–2766

Bertsimas D, Dunning I (2016) Multistage robust mixed-integer optimization with adaptive partitions. Oper Res 64(4):980–998

Bertsimas D, Georghiou A (2014) Binary decision rules for multistage adaptive mixed-integer optimization. Math Program 167:1–39

Bertsimas D, Georghiou A (2015) Design of near optimal decision rules in multistage adaptive mixed-integer optimization. Oper Res 63(3):610–627

Bertsimas D, Goyal V (2013) On the approximability of adjustable robust convex optimization under uncertainty. Math Methods Oper Res 77(3):323–343

Bertsimas D, Lubin IDM (2016) Reformulation versus cutting-planes for robust optimization. Comput Manag Sci 13(2):195–217

Bertsimas D, Sim M (2003) Robust discrete optimization and network flows. Math Program 98(1–3):49–71

Bertsimas D, Sim M (2004a) The price of robustness. Oper Res 52(1):35–53

Bertsimas D, Sim M (2004b) Robust discrete optimization under ellipsoidal uncertainty sets. Citeseer

Bertsimas D, Pachamanova D, Sim M (2004) Robust linear optimization under general norms. Oper Res Lett 32(6):510–516

Bertsimas D, Iancu DA, Parrilo PA (2010) Optimality of affine policies in multistage robust optimization. Math Oper Res 35(2):363–394

Bertsimas D, Brown DB, Caramanis C (2011) Theory and applications of robust optimization. SIAM Rev 53(3):464–501

Beyer HG, Sendhoff B (2007) Robust optimization—a comprehensive survey. Comput Methods Appl Mech Eng 196(33):3190–3218

Billionnet A, Costa MC, Poirion PL (2014) 2-Stage robust MILP with continuous recourse variables. Discrete Appl Math 170:21–32

Buchheim C, Kurtz J (2016) Min–max–min robust combinatorial optimization subject to discrete uncertainty. Optimization online

Buchheim C, Kurtz J (2017) Min–max–min robust combinatorial optimization. Math Program 163(1):1–23

Buchheim C, De Santis M, Rinaldi F, Trieu L (2015) A Frank–Wolfe based branch-and-bound algorithm for mean-risk optimization. Technical report, Optimization online

Büsing C (2011) Recoverable robustness in combinatorial optimization. Ph.D. thesis, Technical University of Berlin

Büsing C (2012) Recoverable robust shortest path problems. Networks 59(1):181–189

Büsing C, D’Andreagiovanni F (2012) New results about multi-band uncertainty in robust optimization. In: International symposium on experimental algorithms. Springer, Berlin, pp 63–74

Büsing C, D’Andreagiovanni F (2013) Robust optimization under multi-band uncertainty—part I: theory. arXiv preprint arXiv:1301.2734

Büsing C, Koster A, Kutschka M (2011a) Recoverable robust knapsacks: \(\gamma \)-scenarios. Network optimization. Springer, Berlin, pp 583–588

Büsing C, Koster AM, Kutschka M (2011b) Recoverable robust knapsacks: the discrete scenario case. Optim Lett 5(3):379–392

Calafiore GC (2008) Multi-period portfolio optimization with linear control policies. Automatica 44(10):2463–2473

Chang TJ, Meade N, Beasley J, Sharaiha Y (2000) Heuristics for cardinality constrained portfolio optimisation. Comput Oper Res 27:1271–1302

Chassein A, Goerigk M (2016) Min–max regret problems with ellipsoidal uncertainty sets. arXiv preprint arXiv:1606.01180

Chassein A, Goerigk M, Kasperski A, Zieliński P (2017) On recoverable and two-stage robust selection problems with budgeted uncertainty. arXiv preprint arXiv:1701.06064

Chen X, Zhang Y (2009) Uncertain linear programs: extended affinely adjustable robust counterparts. Oper Res 57(6):1469–1482

Claßen G, Koster AM, Schmeink A (2015) The multi-band robust knapsack problem—a dynamic programming approach. Discrete Optim 18:123–149

Cornuejols G, Tütüncü R (2006) Optimization methods in finance, vol 5. Cambridge University Press, Cambridge

Corporation I (2015) IBM ILOG CPLEX optimization studio: CPLEX user’s manual. https://www.ibm.com/us-en/marketplace/ibm-ilog-cplex

El Ghaoui L, Lebret H (1997) Robust solutions to least-squares problems with uncertain data. SIAM J Matrix Anal Appl 18(4):1035–1064

El Ghaoui L, Oustry F, Lebret H (1998) Robust solutions to uncertain semidefinite programs. SIAM J Optim 9(1):33–52

Feige U, Jain K, Mahdian M, Mirrokni V (2007) Robust combinatorial optimization with exponential scenarios. In: Integer programming and combinatorial optimization, pp 439–453

Fischetti M, Monaci M (2009) Light robustness. In: Ahuja RK, Möhring RH, Zaroliagis CD (eds) Robust and online large-scale optimization. Springer, Berlin, pp 61–84

Fischetti M, Monaci M (2012) Cutting plane versus compact formulations for uncertain (integer) linear programs. Math Program Comput 4:1–35

Fischetti M, Salvagnin D, Zanette A (2009) Fast approaches to improve the robustness of a railway timetable. Transp Sci 43(3):321–335

Gabrel V, Murat C, Thiele A (2014) Recent advances in robust optimization: an overview. Eur J Oper Res 235(3):471–483

Georghiou A, Wiesemann W, Kuhn D (2015) Generalized decision rule approximations for stochastic programming via liftings. Math Program 152(1–2):301–338

Gorissen BL, Yanıkoğlu İ, den Hertog D (2015) A practical guide to robust optimization. Omega 53:124–137

Grötschel M, Lovász L, Schrijver A (1993) Geometric algorithms and combinatorial optimization. Springer, Berlin

Gurobi Optimization I (2016) Gurobi optimizer reference manual. http://www.gurobi.com. Accessed 3 Sept 2018

Hanasusanto GA, Kuhn D, Wiesemann W (2015) K-Adaptability in two-stage robust binary programming. Oper Res 63(4):877–891

Hradovich M, Kasperski A, Zieliński P (2016) The robust recoverable spanning tree problem with interval costs is polynomially solvable. arXiv preprint arXiv:1602.07422

Iancu DA (2010) Adaptive robust optimization with applications in inventory and revenue management. Ph.D. thesis, Massachusetts Institute of Technology

Iancu DA, Sharma M, Sviridenko M (2013) Supermodularity and affine policies in dynamic robust optimization. Oper Res 61(4):941–956

Ilyina A (2017) Combinatorial optimization under ellipsoidal uncertainty. Ph.D. thesis, TU Dortmund University

Inuiguchi M, Sakawa M (1995) Minimax regret solution to linear programming problems with an interval objective function. Eur J Oper Res 86(3):526–536. https://doi.org/10.1016/0377-2217(94)00092-Q

Kasperski A, Zieliński P (2011) On the approximability of robust spanning tree problems. Theor Comput Sci 412(4–5):365–374

Kasperski A, Zieliński P (2015) Robust recoverable and two-stage selection problems. arXiv preprint arXiv:1505.06893

Kasperski A, Zieliński P (2016) Robust discrete optimization under discrete and interval uncertainty: a survey. In: Doumpos M, Zopounidis C, Grigoroudis E (eds) Robustness analysis in decision aiding, optimization, and analytics. Springer, Berlin, pp 113–143

Kasperski A, Zieliński P (2017) Robust two-stage network problems. In: Operations research proceedings 2015. Springer, Berlin, pp 35–40

Kasperski A, Kurpisz A, Zieliński P (2014) Recoverable robust combinatorial optimization problems. In: Operations research proceedings 2012. Springer, Berlin, pp 147–153

Kellerer H, Pferschy U, Pisinger D (2004) Knapsack problems. Springer, Berlin

Khandekar R, Kortsarz G, Mirrokni V, Salavatipour M (2008) Two-stage robust network design with exponential scenarios. Algorithms ESA 2008:589–600

Kouvelis P, Yu G (1996) Robust discrete optimization and its applications. Springer, Berlin

Kuhn D, Wiesemann W, Georghiou A (2011) Primal and dual linear decision rules in stochastic and robust optimization. Math Program 130(1):177–209

Kurtz J (2016) Min–max–min robust combinatorial optimization. Ph.D. thesis, TU Dortmund University

Lappas NH, Gounaris CE (2018) Robust optimization for decision-making under endogenous uncertainty. Comput Chem Eng 111:252–266

Lee T (2014) A short note on the robust combinatorial optimization problems with cardinality constrained uncertainty. 4OR 12(4):373–378. https://doi.org/10.1007/s10288-014-0270-7

Li Z, Ding R, Floudas CA (2011) A comparative theoretical and computational study on robust counterpart optimization: I. Robust linear optimization and robust mixed integer linear optimization. Ind Eng Chem Res 50(18):10567–10603

Liebchen C, Lübbecke M, Möhring R, Stiller S (2009) The concept of recoverable robustness, linear programming recovery, and railway applications. In: Ahuja R, Möhring R, Zaroliagis C (eds) Robust and online large-scale optimization. Springer, Berlin, pp 1–27

Markowitz H (1952) Portfolio selection. J Finance 7(1):77–91

Minoux M (2011) On 2-stage robust LP with RHS uncertainty: complexity results and applications. J Glob Optim 49(3):521–537

Mokarami S, Hashemi SM (2015) Constrained shortest path with uncertain transit times. J Glob Optim 63(1):149–163. https://doi.org/10.1007/s10898-015-0280-9

Monaci M, Pferschy U, Serafini P (2013) Exact solution of the robust knapsack problem. Comput Oper Res 40(11):2625–2631. https://doi.org/10.1016/j.cor.2013.05.005

Mutapcic A, Boyd S (2009) Cutting-set methods for robust convex optimization with pessimizing oracles. Optim Methods Softw 24(3):381–406

Naoum-Sawaya J, Buchheim C (2016) Robust critical node selection by benders decomposition. INFORMS J Comput 28(1):162–174. https://doi.org/10.1287/ijoc.2015.0671

Nasrabadi E, Orlin JB (2013) Robust optimization with incremental recourse. arXiv preprint arXiv:1312.4075

Nikolova E (2010a) Approximation algorithms for offline risk-averse combinatorial optimization. Technical report

Nikolova E (2010b) Approximation algorithms for reliable stochastic combinatorial optimization. In: Serna M, Shaltiel R, Jansen K, Rolim J (eds) Approximation, randomization, and combinatorial optimization. Algorithms and techniques. Springer, Berlin, pp 338–351

Nohadani O, Sharma K (2016) Optimization under decision-dependent uncertainty. arXiv preprint arXiv:1611.07992

Park KC, Lee KS (2007) A note on robust combinatorial optimization problem. Manag Sci Financ Eng 13(1):115–119

Pessoa AA, Poss M (2015) Robust network design with uncertain outsourcing cost. INFORMS J Comput 27(3):507–524

Poss M (2013) Robust combinatorial optimization with variable budgeted uncertainty. 4OR 11(1):75–92

Poss M (2017) Robust combinatorial optimization with knapsack uncertainty. Discrete Optim 27:88–102

Postek K, den Hertog D (2016) Multistage adjustable robust mixed-integer optimization via iterative splitting of the uncertainty set. INFORMS J Comput 28(3):553–574

Saito H, Murota K (2007) Benders decomposition approach to robust mixed integer programming. Pac J Optim 3:99–112

Schöbel A (2014) Generalized light robustness and the trade-off between robustness and nominal quality. Math Methods Oper Res 80:1–31

Shapiro A (2011) A dynamic programming approach to adjustable robust optimization. Oper Res Lett 39(2):83–87

Sim M (2004) Robust optimization. Ph.D. thesis, Massachusetts Institute of Technology

Soyster AL (1973) Convex programming with set-inclusive constraints and applications to inexact linear programming. Oper Res 21(5):1154–1157