Abstract

Side-channel analysis and fault injection attacks are two typical threats to cryptographic implementations, especially in modern embedded devices. Thus, there is an insistent demand for dual side-channel and fault injection protections. As we know, masking is a kind of provable countermeasure against side-channel attacks. Recently, inner product masking (IPM) was proposed as a promising higher-order masking scheme against side-channel analysis, but not for fault injection attacks. In this paper, we devise a new masking scheme named IPM-FD. It is built on IPM, which enables fault detection. This novel masking scheme has three properties: the security orders in the word-level probing model, bit-level probing model and the number of detected faults. IPM-FD is proven secure both in the word-level and in the bit-level probing models and allows for end-to-end fault detection against fault injection attacks. Furthermore, we illustrate its security order by interpreting IPM-FD as a coding problem and then linking it to one defining parameters of linear code and show its implementation cost by applying IPM-FD to AES-128.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

With the advent of Internet of Things (IoT), more and more cryptographic libraries are implemented in software. Now, IoT objects are, most of the time, not made of secure hardware. Therefore, it is important for the software to protect itself in a sound manner. In this article, we assume that the implementation is free from configuration and coding bugs. Still, in this case, attackers can leverage two techniques to extract information: side-channel and fault injection analyses. Indeed, it is known that a single faulty encryption in AES can fully disclose 128 bits of the secret key [1]. It can be noted that some combined side-channel and fault analyses exist against protected implementations [7, 11].

On the one hand, protections against side-channel analysis aim at reducing the signal-to-noise ratio (see definition in [24, § 4.3.2]) an attacker can get. One option is to balance the leakage, a technique which is used to linearize the control flow. For instance, cache-timing attacks can be alleviated by removing conditional opcodes whose condition is sensitive and sensitive pointer dereferencing. Besides, we assume Meltdown and ZombieLoad attack categories are irrelevant as the code we are interested in is at the baremetal level. Still, there is the possibility of sensitive value leakage, which is properly addressed by randomization (masking [24, Chap. 9]). Indeed, sensitive values leak through a non-injective and noisy channel; thence, single trace attacks are unpractical.

On the other hand, protections against fault injection attacks boil down to detection of errors, using either spatial, temporal or information redundancy. Other techniques rely on invariant checking, such as idempotence of encryption composed by decryption.

In this paper, we present a joint countermeasure to both attacks, which is more efficient than two countermeasures piled one on top of each other.

State of the art. In scientific literature, early countermeasures against both side-channel and fault injection attacks have been designed in hardware. Several gate-level logic styles have been introduced, in particular dual rail with precharge logic, aiming at balancing the leakage. Namely, redundant encodings, where each bit a is represented as a pair of bits \((a_f, a_t)\), such that \(a_f=\lnot a_t=a\) during computation evaluation phase. Owing to this redundancy, the total number of bits set to 1 is unchanged (if in addition, the evaluation phase is interleaved with a precharge phase, the Hamming distance between two states is also constant, irrespective of the sensitive data manipulated). Besides, the redundant encoding \(a_f=\lnot a_t=a\) allows for computation checks, as in evaluation phase, \(a_f=a_t\) (two configurations, namely (0, 0) and (1, 1), are forbidden). Starting from wave dynamic differential logic (WDDL [24, Chap. 7]), other improvements have been successively introduced (MDLP, iMDPL [21], ParTI [33], etc.) Also, some exotic styles have been proposed (asynchronous logic [27], adiabatic logic [26], etc.). All this corpus requires hardware support.

In this paper, we target software-level countermeasures. We build upon the higher-order side-channel countermeasure known as IPM [2] to enrich it to detect faults injected during the computation.

Contributions. We devise an end-to-end fault-detection scheme which operates from within a provable high-order multivariate masking scheme. In practice, we enhance IPM scheme to enable end-to-end side-channel and fault injection detection, while keeping security proofs in the probing security model. Furthermore, we quantify the impact of both side-channel and fault detection on a complete AES-128 to show the advantages of our new scheme.

This work is an extension of the previous eponymous conference paper [8]. We highlight below the new extensions incorporated in this paper:

-

The generalization of IPM and IPM-FD to (O)DSM is presented to emphasize the connections and differences between two schemes. This generalization allows us to optimize the former by using constructions of the latter in a coding theoretic approach. For instance, some optimal codes in (O)DSM would also be applicable in IPM and IPM-FD.

-

We clarify the fault models by showing the essential different assumptions under these models, which determine the fault detection capability of IPM-FD and (O)DSM. We insist that our IPM-FD only considers the last two fault models since we focus on the end-to-end protections.

-

By comparing the IPM-FD and BM-FD (Boolean masking with fault detection), we demonstrate the advantages of the former over the latter. Specifically, IPM-FD needs less shares to achieve the same security order at word level. Furthermore, the bit-level security order of IPM-FD can be much higher than BM-FD given the same number of shares.

-

We insist that the systematic construction of optimal codes for IPM-FD and DSM at both word level and bit level is still an open problem. In this paper, we only provide the metrics and some results with small number of shares by an exhaustive study. Note that another exhaustive study for optimal linear codes for IPM is also available in a related specialized paper [10].

Outline. The rest of this paper is organized as follows: Sect. 2 introduces two typical schemes as the state of the art of countermeasures. Our novel protection is presented in Sect. 3, with security analysis and optimal code selection in Sect. 4. The practical performance evaluation is presented in Sect. 5. Finally, Sect. 6 concludes the paper and opens some perspectives.

2 State of the art on side-channel and fault protection

Side-channel protections considered in this work come in two flavors:

-

Inner product masking (IPM) [2] is a word-oriented (e.g., byte-oriented) masking scheme, equipped with universal operations (namely addition and multiplication). It is optimized to resist attacks at both the word-level and bit-level probing model [30], which is suitable for computing cryptographic algorithms that are subject to high-order side-channel analysis.

-

Direct sum masking (DSM) [5] is a masking scheme which allows for concurrent side-channel and fault injection protection. It expresses the masking as the two encodings of the secret in a code C, and masks in a code D, respectively. This allows us to recover the information by decoding from C and to check the masks by decoding from D.

These two protections are presented, one after the other, in this section.

2.1 Inner product masking

2.1.1 Notations

Computations are carried out in characteristic two finite fields: \({\mathbb {F}}_2\) for bits and \({\mathbb {K}}\) for larger fields. In practice \({\mathbb {K}}\) can be \({\mathbb {F}}_{2^l}\) for some l, e.g., \(l=8\) for AES, and \(l=4\) for PRESENT. The elements from \({\mathbb {K}}\) are termed words, and they are also referred to as bytes when \(l=8\) and to nibbles when \(l=4\). We denote \(+\) the addition in characteristic two fields \({\mathbb {K}}\), which is bitwise XOR. Recall that the subtraction is the same operation as the addition in \({\mathbb {K}}\). Elements of \({\mathbb {F}}_2\) are denoted as \(\{0,1\}\), and elements of \({\mathbb {F}}_{2^l}\) (as words) are represented as polynomials. In this paper, we use \({\mathbb {F}}_{2^4} \cong {\mathbb {F}}_2[\alpha ]/\langle \alpha ^4+\alpha +1\rangle \), and \({\mathbb {F}}_{2^8} \cong {\mathbb {F}}_2[\alpha ]/\langle \alpha ^8+\alpha ^4+\alpha ^3+\alpha +1\rangle \) (that of AES).

We recall that linear codes are space vectors, characterized by their base field \({\mathbb {K}}\), their length n and their dimension k. In addition, linear codes have parameters traditionally denoted as [n, k, d], where d is the minimum distance. The dual of a linear code D is the linear code \(D^\perp \) whose code words are orthogonal to all code words of D. The dual distance \(d^\perp \) of a linear code D happens to be equal to the minimum distance of \(D^\perp \) [23].

Let n be the number of shares in IPM, and the coefficient vector in IPM is \(\mathbf {L}=(L_1, L_2, \ldots , L_n)\) where \(L_1=1\) for performance reason [2, § 1.2].

Definition 1

(IPM data representation) A word of secret information \(X\in {\mathbb {K}}\) is represented in IPM as a tuple of n field elements:

where \(\mathbf {M} = (M_2, M_3, \ldots , M_n)\) is the mask materials, and \({\mathbf {G}}\) and \({\mathbf {H}}\) are generating matrices of linear codes C and D, respectively, as shown below:

The secret information X can be demasked by inner product between two vectors as: \(X = \langle \mathbf {L}, \mathbf {Z} \rangle = \sum _{i=1}^n L_i Z_i . \) Finally, we introduce some handy subset notations. Let \(\mathbf {Z} = (Z_1, \ldots , Z_n) = (Z_i)_{i\in \{1,\ldots ,n\}}\) be a vector. We have:

For instance, \(Z_{\{i\}\cup \{k+1,\ldots ,n\}}\), for \(1\le i\le k\le n\), represents the \((n-k+1)\) vector \((Z_i, Z_{k+1}, Z_{k+2}, \ldots , Z_n)\).

2.1.2 Security order regarding side-channel analysis

The security of IPM is stated in the probing model [17]: The security order is the maximum number of shares which are independent to masked information. We clarify word-level and bit-level security orders as follows:

-

Word-level ( l -bit) security order \({\varvec{d_w}}\): Since many devices perform computation on word-level data, byte-level operations are very common especially on embedded devices. In this paper, we also present instances for 4-bit (nibble) variables for adopting IPM to protect implementation of lightweight cipher like PRESENT, Simon, Speck, etc.

-

Bit-level security order \({\varvec{d_b}}\): In practice, each bit of sensitive variable can be investigated independently and/or several bits can be evaluated jointly. We consider here the number of bits that can be probed by attackers in one time, which is consistent with the bit-level probing model proposed by Poussier et al. [30].

The main advantage of IPM is the higher bit-level security order than Boolean masking, which is called “security order amplification” in [36]. It has been proven in [30] that side-channel resistance is directly connected to the dual distance \(d^\perp _D\) of the code D generated by \({\mathbf {H}}\). Precisely, the security order t of IPM is equal to \(t=d^\perp _D-1\) [30].

The dual distance of linear code D is equal to the minimum distance of the dual code \(D^\perp \) [23]. It is easy to see that the latter has dimension 1 and is generated by a \(1\times n\) matrix:

In order to investigate the bit-level security, the definition of expansion is introduced as follows.

Definition 2

(Code Expansion) By using subfield representation, the elements in \({\mathbb {K}}={\mathbb {F}}_{2^l}\) are decomposed into \({\mathbb {F}}_2\), and we have:

where \(I_l\) is the \(l\times l\) identity matrix in \({\mathbb {F}}_2\) and \({\mathbb {L}}_i\) (\(2\le i\le n\)) are \(l\times l\) matrices.

To derive the matrices, we can use that \({\mathbb {F}}_{2^l}\) is a field extension of \({\mathbb {F}}_2\), and given an irreducible polynomial P over \({\mathbb {F}}_2\) and denoting each element \(a\in {\mathbb {F}}_{2^l}\) as \(\sum _{i=0}^{l-1} a_i \alpha ^i \; [\mod P(\alpha )\; ]\), replace a by \((a_0, \ldots , a_{l-1})\). Under the computer algebra system Magma [35], P is DefiningPolynomial\(({\mathbb {F}}_{2^l})\) and \(D'\) is the representation of D in subfield (SubfieldRepresentationCode(D)). If D has parameters \([n,k,d]_{2^l}\), then \(D'\) has parameters \([n l, k l, d']_2\), where \(d'\ge d\). IPM opportunistically exploits the fact that this inequality can be strict, and attempts to maximize the difference \(d'-d\).

At word level, we notice that the dual distance of D is equal to n as long as \(\forall i, ~L_i\ne 0\). As a result, the word-level security order of IPM is \(d_w=n-1\) which is in consistence with [2]. In addition, security order \(d_b\) at the bit level of IPM is equal to the dual distance of the code expanded by D from \({\mathbb {F}}_{2^l}\) to \({\mathbb {F}}_2\). A typical example of IPM codes matrices \({\mathbf {G}}=(1, 0)\) and \({\mathbf {H}}=(L_2=\alpha ^8, 1)\) in \({\mathbb {F}}_{2^8}\) is given in Fig. 1. The security order at word (byte) level is \(d_w=n-1=1\) and at bit level is \(d_b=3\) because the dual code of \(D={{\,\mathrm{span}\,}}(H)\) is generated by \((1, L_2)\), which, after projection in \({\mathbb {F}}_2\), has parameters \([16,8,4]_2\).

Moreover, addition and multiplication are proven to be \(t=(n-1)\)-order secure at word level in [3] using t-SNI property [4]; thus, the word-level security order is maintained by composition. Still, when a variable is reused, caution must be taken where a refresh algorithm is always adopted to avoid dependence. The refresh operation allows us to decorrelate two copies of a variable that need to be used at two places (to avoid side-channel flaws as put forward in [14]). However, IPM cannot detect faults since no redundancy is inserted to the coding.

2.2 Direct sum masking

Direct sum masking has been originally introduced as orthogonal direct sum masking (ODSM [5]). The secret \(\mathbf {X}\) is represented as a bit vector in \({\mathbb {F}}_2^l\). It is encoded using generating matrix \({\mathbf {G}}\) (of size \(l\times nl\) in \({\mathbb {F}}_2\)) as a word in \({\mathbb {F}}_2^{n l}\). Some random masks \(\mathbf {M}\), drawn uniformly in \({\mathbb {F}}_{2}^{(n-1)l}\), are encoded with matrix \({\mathbf {H}}\) (of size \((n-1)l\times nl\)). After masking the secret with the mask materials, one gets the protected information:

The features of the DSM are the following:

-

Elements are bits;

-

Computation on masked variable \(\mathbf {Z}\) occurs matricially;

-

Side-channel protection is ensured at order \(d^\perp _D-1\);

-

Fault detection allows detecting \(d_C-1\) bitflips.

Orthogonal direct sum masking (ODSM) is a particular case of DSM for which \({\mathbf {G}}{\mathbf {H}}^{\mathsf {T}}=0_{k\times (n-k)}\), or said differently, C and D are mutually dual codes. An illustration of DSM and ODSM is provided in Fig. 2. In this figure, without loss of generality, the matrices \({\mathbf {G}}\) and \({\mathbf {H}}\) are written in systemic form. The conditions for \(C={{\,\mathrm{span}\,}}({\mathbf {G}})\) and \(D={{\,\mathrm{span}\,}}({\mathbf {H}})\) to be complementary are recalled in the following:

Lemma 1

( [28, Proposition 1]) Let \(0\le k\le n\), and

Then, the following three statements are equivalent:

-

1.

\(\begin{pmatrix} {\mathbf {G}} {\mathbf {H}} \end{pmatrix}\in {\mathbb {F}}_2^{n\times n}\) is invertible;

-

2.

\(I_k+{\mathbf {P}}{\mathbf {L}}^{\mathsf {T}}\in {\mathbb {F}}_2^{k\times k}\) is invertible;

-

3.

\(I_{n-k}+{\mathbf {L}}{\mathbf {P}}^{\mathsf {T}}\in {\mathbb {F}}_2^{(n-k)\times (n-k)}\) is invertible.

A detailed comparison between DSM and IPM is proposed in Table 1.

On the contrary to IPM, the matrices \({\mathbf {G}}\) and \({\mathbf {H}}\) do not have specific form (recall IPM matrices are formatted as Eqn. 2 and Eqn. 3). However, there is no general inverse operation of “SubfieldRepresentation” (recall Def. 2) for DSM. Therefore, IPM is a special case of DSM, but some DSM encodings (Eqn. 6) cannot be represented as IPM.

ODSM uses orthogonal codes such that recovering \(\mathbf {M}\) is straightforward knowing \(\mathbf {Z}\): It consists in an orthogonal projection from space vector \({\mathbb {F}}_2^{nl}\) onto D. Actually, the complete commutative diagram involved in DSM is depicted in Fig. 3. The operations are explicited below:

-

Information vector \(\mathbf {X}\) is encoded as \(\mathbf {X} {\mathbf {G}}\) (using linear application \(E_C\)), while decoding of \(\mathbf {X} {\mathbf {G}}\) into \(\mathbf {X}\) is ensured by the decoding application \(D_C\);

-

Similarly, masking random variables \(\mathbf {M}\) are encoded as \(\mathbf {M} {\mathbf {H}}\) (using linear application \(E_D\)). Decoding of \(\mathbf {M} {\mathbf {H}}\) into \(\mathbf {M}\) is ensured by the decoding application \(D_D\);

-

Creating an encoded word \(\mathbf {Z}\) consists of adding one code word \(\mathbf {X} {\mathbf {G}}\) from C to one code word \(\mathbf {M} {\mathbf {H}}\) from D. In reverse, projections of \(\mathbf {Z}\in {\mathbb {F}}_2^{nl}\) to C (resp. D) are obtained by linear projection operation \(\varPi _C\) (resp. \(\varPi _D\)).

When C and D are orthogonal, then \({\mathbf {G}}{\mathbf {H}}^{\mathsf {T}}={\mathbf {0}}\), the all-zero \(l\times (n-1)l\) matrix. As a result, we have \(\varPi _C(\mathbf {Z})=\mathbf {Z} {\mathbf {G}}^{\mathsf {T}} ({\mathbf {G}} {\mathbf {G}}^{\mathsf {T}})^{-1} {\mathbf {G}}\) and \(\varPi _D(\mathbf {Z})=\mathbf {Z} {\mathbf {H}}^{\mathsf {T}} ({\mathbf {H}} {\mathbf {H}}^{\mathsf {T}})^{-1} {\mathbf {H}}\) as in [5].

This allows for the verification that an attacker who injects a fault has not corrupted (useless in terms of exploitation) the masks \(\mathbf {M}\). In practice, the attack (addition of a nonzero bit vector \(\epsilon \in {\mathbb {F}}_2^{nl}\backslash \{0\}\)) is undetected if and only if \(\epsilon \in C\). Indeed, otherwise \(\epsilon \) has a nonzero component in space vector D, and the fault injection is detected. The fault detection capability can be quantified in two models:

-

1.

Assumption 1: The difficulty of the attack is larger if the number of flipped bits is larger. Thus, undetected faults \(\epsilon \in C\backslash \{0\}\) must have Hamming weights \(\ge d_C\), where \(d_C\) is the minimum distance of code C.

-

2.

Assumption 2: The attacker can corrupt Z regardless of the value of \(\epsilon \), but cannot control the value of \(\epsilon \). Said differently, \(\epsilon \) is a random variable uniformly distributed in \({\mathbb {F}}_2^{nl}\backslash \{0\}\). This fault is undetected provided \(\epsilon \in C\backslash \{0\}\). As C has dimension l, the cardinality of \(C\backslash \{0\}\) is \(2^{l}-1\). Therefore, the probability that the fault is not detected equals \(\frac{2^{l}-1}{2^{nl}-1} \approx 2^{-l(n-1)}\). This number is independent from the code C, but depends on code D.

Thus, the probability of undetected faults gets lower as l and n increase. However, this approach has three drawbacks:

-

First of all, the masks used in ODSM remain unchanged during each call of cipher, which allows fault detection. But the “static” masks may pose a vulnerability since masks should be refreshed to avoid unintended dependencies between sensitive variables.

-

Secondly, it allows only to check errors on states \(\mathbf {Z}\), but not during nonlinear computations (which are tabulated, i.e., operations on \(\mathbf {Z}\) consist in lookup table accesses). From a hardware point of view, this means that ODSM allows us to detect faults in sequential logic (e.g., register banks, RAM, etc.), but not in combinational logic (e.g., logic gates or ROM).

-

Thirdly, during verification, that is, the projection of \(\mathbf {Z} + \epsilon \) in space vector D, the state \(\mathbf {Z}\) is manipulated; hence, additional leakage is produced, which must be taken into account in the security evaluation of ODSM representation (Eqn. 6). This is the reason we suggest detecting faults at the very end (end-to-end fault detection), like after encryption or decryption.

The first two points are structural weaknesses and will be fixed in Algorithm 1, starting from Sect. 3. For the third one, some codes suitable for DSM are constructed by Carlet et al. in [6] by duplicating the masks \(\mathbf {M}\), while this solution does not allow an end-to-end scheme.

3 Novel end-to-end fault detection scheme

3.1 Rationale

The core idea in our new scheme is to duplicate (two or more times) the secret X, rather than duplicating masks \(\mathbf {M}\) as in [6], so that it can be checked at the end (when it is no longer sensitive–e.g., a ciphertext is a non-sensitive variable, so as the plaintext).

Our new scheme is a IPM-like masking scheme, called IPM-FD. Since IPM is a promising high-order masking scheme, we extend it with fault detection capability so that it can resist both side-channel analysis and fault injection attacks simultaneously. Specifically, we represent the information as a vector \((X_1, X_2, \ldots , X_k)\in {\mathbb {K}}^k\) where \({\mathbb {K}}={\mathbb {F}}_{2^l}\).

We propose the new encoding as follows. Let us denote:

Definition 3

(IPM-FD data representation) Let \(X_i\in {\mathbb {K}}\) be the k copies of secret information; then, the encoding is represented as a tuple of n elements in \({\mathbb {K}}\):

where

where \(I_k\) is the \(k\times k\) identity matrix in \({\mathbb {K}}\), and L is a matrix of size \((n-k)\times k\), that is,

L has coefficients \((L_{i,j})_{k<i\le n,1\le j\le k}\).

This definition 3 is a generalization of Def. 1. In practice, we will call Eqn. 7 with redundancy to detect faults in the information X, i.e., \((X_1, X_2, \ldots , X_k)=(X, X, \ldots , X)\), as:

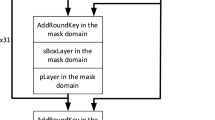

For the sake of convenience, the IPM-FD encoding used in this paper is depicted in Fig. 4. It illustrates a protection using \(n=3\) shares of \(l=8\) bits, with the following security features:

-

\(d_w=1\) (first-order secure at byte level), because dual distance of \({\mathbf {H}}\) in \({\mathbb {F}}_{2^8}\) is 2;

-

\(d_b=3\) (third-order secure at bit level), since the dual distance of the optimal \({\mathbf {H}}\) over \({\mathbb {F}}_{2}\) is 4—the subfield representation (by Def. 2) of the dual code \({\mathbf {H}}^\perp \) spawn by \(\begin{pmatrix} 1 &{} L_2 &{} L_3 \\ \end{pmatrix}\) has parameters \([24,8,4]_2\) where we take \(L_2=\alpha ^8\) and \(L_3=\alpha ^{17}\) as optimal parameters (from an exhaustive search over all possible candidates of \(L_2\) and \(L_3\) over \({\mathbb {F}}_{2^8}\)) in this case (Fig. 4).

Computation can be carried out on such \(\mathbf {Z}\), and when it is over (e.g., the complete AES is finished), the implementation can check whether the k copies of the information are the same. This allows us to detect up to \((k-1)\) errors (there is an error if the k copies are not equal to each other). It is worth noting that this model is stronger than the one in ODSM where only errors \(\epsilon \) with Hamming weight \(w_H(\epsilon )>d_C\) are detected in ODSM.

Repeating X k times may increase the signal captured by the attacker by a factor k; however, it is irrelevant to security order. Indeed, there is more signal, but it is correlated; therefore, it has no impact on the amount of information. Notice that, as a future extension, one might consider an encoding of information X which is more efficient in terms of rate than the simple k-times repetition code \(X\mapsto (X,\ldots ,X)\). However, such representation in Eqn. 8 allows for an end-to-end security protection against fault injection attacks, as illustrated in Algorithm 1.

For fault detection, either Algorithm 1 is started from scratch, or other actions, such as event logging for subsequent analysis (aiming at taking proactive actions to plug this leak), are triggered off. It is obvious that detecting fault in each intermediate phase can be carried out at any place in Algorithm 1, especially during step 5. However, such precaution is superfluous, as an overall check is done at the end, that is, at line 8. In addition, intermediate checks would disclose when the fault occurs (e.g., at which round), which delivers precious feedback to the attacker regarding the accuracy and the reproducibility of the setups.

Therefore, the design of IPM-FD scheme for a specific cryptographic algorithm can be simplified to select good parameters \({\mathbf {G}}\) and \({\mathbf {H}}\), which is corresponding to choose good codes for IPM-FD. We first show how to perform basic operations in the next subsection.

3.2 Computing with representation of IPM-FD

First of all, we present one instance of IPM-FD with \(k=2\) to clarify its encoding. We denote \(\mathbf {X}=(X_1, X_2)\in {\mathbb {K}}^2\), and \(\mathbf {M}=(M_3, \ldots , M_n) \in {\mathbb {K}}^{n-2}\). Thus, we have Eqn. 7 such that,

or said differently, we have \(\mathbf {Z}=(Z_1, \ldots , Z_n)\in {\mathbb {K}}^n\) which is equal to:

Here, we can see that \((Z_1, Z_3, \ldots , Z_n) \in {\mathbb {K}}^{n-1}\) and \((Z_2, Z_3, \ldots , Z_n) \in {\mathbb {K}}^{n-1}\) are two IPM sharings [2]. Therefore, we have \(k=2\) ways to demask:

where as a convention, \(L_{1,1} = L_{2,2} = 1\), \(L_{1,2} = L_{2,1} = 0\) and

It is known that universal computation can be achieved by Lagrange interpolation, which only requires addition and multiplication. Hereafter, we present three basic algorithms, with the most general case (k words of information and scalable with different k) used to build a complete masked cryptographic implementation.

3.2.1 Secure addition of IPM-FD

With Eqn. 8, we denote encoding of X and \(X^\prime \) by \(\mathbf {Z}\) and \(\mathbf {Z}^\prime \), respectively; thus, the addition is linear and can be calculated straightforwardly as in Algorithm 2.

3.2.2 Secure refresh algorithm for IPM-FD

As suggested in [31], we need to apply a refresh algorithm after each squaring operation to keep independence between masks (Algorithm 4 with \(\mathbf {Z} = \mathbf {Z}'\)). The algorithm for the refresh of IPM-FD is given in Algorithm 3. Notice that this algorithm can be computed in place, meaning that the output overwrites the input.

3.2.3 Secure multiplication of IPM-FD

Secure multiplication can be achieved by selecting only one among the k first coordinates, while keeping the \((n-k)\) masks, and multiplying \((n-k+1)\) shares by using the original IPM multiplication. Therefore, multiplication of IPM-FD is implemented in Algorithm 4.

Multiplication is repeated k times on shares in \({\mathbb {K}}^{n-k+1}\), and the resulting \(\mathbf {P}[j]\in {\mathbb {K}}^{n-k+1}\) for \(j\in \{1, \ldots , k\}\) are applied from line 4 to line 6 as in Algorithm 4 to homogenize masks in \((k-1)\) sharings with the same masks as \(\mathbf {P}[1]\).

We refer to line 4 to line 6 of Algorithm 4 as the homogenization algorithm used to merge the results \(\mathbf {P}[j]\) where \(1\le j \le k\). Thus, we have the following lemma, which applies to non-redundant sharings such as that of Eqn. 1.

Lemma 2

(Homogenization of two sharings) Let \(\mathbf {Z} = (Z_1, \ldots , Z_{n})\) and \(\mathbf {Z}' = (Z'_1, \ldots , Z'_{n})\) be two sharings that \(\langle L, \mathbf {Z} \rangle = X\) and \(\langle L', \mathbf {Z}' \rangle = X'\). There exists an equivalent sharing \(\mathbf {Z}''\) and an algorithm to transform \(\mathbf {Z}'\) into \(\mathbf {Z}''\) such that \(\mathbf {Z}\) and \(\mathbf {Z}''\) share all coordinates but the first one.

Proof

We apply a pivot technique to \(\mathbf {Z}''\). Let \(\epsilon \in {\mathbb {K}}\). We notice that the new sharing \(\mathbf {Z}'' = \mathbf {Z}' + (L'_2 \epsilon , \epsilon , 0, \ldots , 0)\) also represents the same unmasked value as \(\mathbf {Z}'\) does. Indeed, \(\langle L', Z' \rangle =X'\), and \(\langle L', (L'_2 \epsilon , \epsilon , 0, \ldots , 0) \rangle = L'_2 \epsilon + L'_2 \epsilon = 0\). By choosing \(\epsilon = \mathbf {Z}'_2 + \mathbf {Z}_2\), we get for \(\mathbf {Z}''\):

Therefore, \(\mathbf {Z}''\) now has the same the second share (coordinate at position 2) with \(\mathbf {Z}\). The complete homogenization is thus the repetition of this process for all the coordinates \(i\in \{2,\ldots ,n\}\). Notice that this algorithm does leak information neither on \(\mathbf {Z}\) nor on \(\mathbf {Z}'\), since it consists only of additions of masks to a sharing from an independent sharing. It is akin to a refresh procedure albeit where the new masks are actually a compensation of \(\mathbf {Z}'\) masks by those of \(\mathbf {Z}\), while keeping the masking invariant of Eqn. 1. Actually, it is a refresh algorithm using the masks of the difference \(\mathbf {Z}\oplus \mathbf {Z}'\). \(\square \)

By using Algorithm 1, one can start with plaintext and key representation as Eqn. 8 and carry addition/multiplication (and refresh if needed) to implement any cryptographic algorithms like AES and end up with a ciphertext still with the form as Eqn. 8. Hence, verification can be done only at the very end. Another advantage of IPM-FD is its scalability, by choosing different values of k and n.

4 Security analysis of IPM-FD and optimal codes selection

The security level of IPM-FD can be characterized by three metrics, namely word-level security order \(d_w\), bit-level security order \(d_b\) and number of detected faults \(d_f\) (for instance, if the number of faulted words is smaller than \(d_f+1\), then the fault will be detected). In this section, we show the security order of IPM-FD and how to choose optimal codes by interpreting IPM-FD as a coding problem.

4.1 Security of fault detection

We assess the security of IPM-FD against fault injection attacks in a coding theoretic approach. Assume a code of parameters \([n,k,d]_q\) over \({\mathbb {F}}_q\); there are three kinds of attackers in the state of the art:

-

An attacker which can corrupt one to \(d-1\) symbols (elements of field \({\mathbb {F}}_q\)). We assume here that faulting two symbols is somehow more difficult than faulting one symbol, etc. It is all the more difficult to fault, for the attacker, as more symbols must be corrupted simultaneously.

-

An attacker which can randomly change a code word to a different one, which may not be a valid code word. We assume that the attacker has no control over the faulted value and if the faulted value is a valid code word, then the fault cannot be detected.

-

An attacker which can choose the error \(\epsilon \) that best suits him. In this scenario, the attacker will choose \(\epsilon \) which maximizes her advantage, on replacing all code words z by \(z+\epsilon \). This model assumes a much stronger attacker, but it does not always represent a realistic use case as the requirements (costs) are quite high. This model has been promoted initially by Mark Karpovsky et al. [18,19,20], who also proposed robust codes and algebraic manipulation detection (AMD) codes.

Accordingly, the probabilities to detect a fault in those three cases are:

-

\(100\%\) for the first case when the number of faulted symbols \(< d\). But this holds only if the verification can be done on each and every code word, which is not the case for us (we check only at the very end). Thus, we cannot claim any security level when chaining operations.

-

\(1-2^{k-n}\) for the second case. This detection rate is also valid end to end (i.e., with verification delayed on the ciphertext). Indeed, there are two cases: either the fault replaces a code word with a valid code word, and this will not be detected, neither by checking right on the targeted code word nor later on. Same reasoning otherwise: If the fault replaces a code word by a non-code word, then the non-code word will keep being a non-code word after all the operations (and we do not consider double faults here). Therefore, detection (in code or not) can be carried out at any point in time after the fault has been injected.

-

\(1-|C \cap (C+\epsilon )|/|C|\) for the third case. Same reasoning as for the second case—this metric will stay unaltered throughout the computation.

In our IPM-FD setup, we support the last two models. Since we use the repetition code in IPM-FD, the minimum distance of the linear code C is \(d_C=k\). Hence, the security in sense of fault injection attack is now assessed with respect to number of detected faults as:

It is obvious that any faults can be detected if the k copies of results are inconsistent.

4.2 Security order of IPM-FD on SCA resistance

The addition and refresh algorithms are secure since there is no degradation on masks; we focus on multiplication algorithm Algorithm 4, and we have the following Theorem 1.

Theorem 1

The multiplication of IPM-FD in Algorithm 4 is \(d^\perp _D-1\)-order secure.

Proof

The k times of \(\mathsf {IPMult}\) multiplications at line 2 are secure at \((n-k)\)th order [2]. After their application, the k shared variables \(\mathbf {P}[j]\), \(1\le j\le k\), are masked by \(N_{i,j}\) (\(k+1\le i\le n, 1\le j\le k\)) that are \((n-k)\times k\) uniformly distributed and i.i.d. random variables.

At step 6, indexed by i (\(k+1\le i\le n\)), the contents of \(P_j\) are:

It is easy to see that any combinations of intermediate variations with mixed variables masked by \(N_{i,j}\) and \(N_{i,j'}\), for \(j\ne j'\), require more intermediate values to be probed than strategies which focus on a given \(N_{i,j}\) (for a given j).

The key-dependent variables which are only in \(P_{i,1}\) (since homogenization process consists in turning \(N_{i,j}\) into \(N_{i,1}\)) are those at:

-

line 2: \(X_1 X^\prime _1 + \sum _{i=k+1}^n L_{i,1} N_{i,1}\), and the \((n-k)\) masks \(N_{i,1}\) (\(k+1\le i\le n\));

-

line 6: for \(i=n\), \(P_j = X_j X^\prime _j + \sum _{i=k+1}^n L_{i,j} N_{i,1}\).

Finally, those shares are combined in an orderly manner as \(\mathbf {P}\) (line 7). Together, they have the shape:

where \(\mathbf {N} = (N_{k+1,1},\ldots ,N_{n,1}) \in {\mathbb {K}}^{n-k}\) is a uniformly distributed tuple of i.i.d random variables. Since \(d_D^\perp -1\) columns of \({\mathbf {H}}\) are independent [22, Theorem 10], which means if the attacker probes up to \(d\le (d_D^\perp -1)\) variables, the secret \(X_j\) encoded as an element of \({\mathbb {F}}_{2^l}^{n-k+1}\) is perfectly masked. The security order of Algorithm 4 is \((d_D^\perp -1)\). \(\square \)

In summary, the security order at word-level \(d_w\) and bit-level \(d_b\) of IPM-FD corresponds to \((d_D^\perp -1)\) at word level and \((d_D^{\perp \prime }-1)\) bit level (by Code Expansion defined in Def. 2), respectively. In particular, the maximum word-level security order \(d_w\) is \((n-k)\), since \(d_D^\perp \le (n-k+1)\) from singleton bound [34], with equal if and only if \(d_D^\perp \) is maximized.

4.3 Choosing optimal codes for IPM-FD

Two security orders \(d_w\) and \(d_b\) are connected to dual distance of D at word level and bit level, by encoding Eqn. 7 and Eqn. 8. Thus, we can search for minimal n satisfying the given requirements on the three parameters \(d_f\), \(d_w\) and \(d_b\). Since the best \(d_b\) is very difficult to obtain, we first search for codes given \(d_f\) and \(d_w\) and then find the best one with respect to optimal \(d_b\). For the first step, Algorithm 5 is adopted for selecting codes with minimal n given \(d_f\) and \(d_w\). In this algorithm, BKLC is short for “best known linear code.”

The second step is to choose the best code with maximal bit-level security order \(d_b\). We propose Algorithm 6 to select optimal codes with maximized \(d_b\). Notice that this algorithm 6 is conceptual, as in line 3, it is not possible in practice to enumerate all codes. This line is to be understood according to either some algebraic code construction (parametric design pattern, greedy algorithm, etc.) or code random choice (using genetic algorithms, random generating matrices, etc.).

We present some examples for codes in \({\mathbb {F}}_{2^8}\) in Table 2 (for \({\mathbb {F}}_{2^4}\) in Table 5, resp) calculated by Magma for small k and n. Interestingly, we compare the original IPM and IPM-FD with n and \(n+1\) shares, respectively, since in IPM-FD redundancy is needed for fault detection. For IPM with \(n=3\), we have optimal parameters \(d_w=2\) and \(d_b=5\), while for IPM-FD with \(n=4,~k=2\), the optimal \(d_w\) and \(d_b\) are \(d_w = 2\) and \(d_b = 4\). Hence, there is a trade-off for fault detection, which sacrifices the bit-level side-channel resistance. For instance, for \(k=2\), we can detect one error.

We recall that the security order of IPM at bit level is given by the minimum distance of the code generated by \({\mathbf {H}}^\perp = (1, L_2, \ldots , L_n)\) (projected from \({\mathbb {K}}={\mathbb {F}}_{2^l}\) to the binary ground field \({\mathbb {F}}_2^l\)). Now, adding fault detection capability, the security order of IPM-FD becomes that of the minimum distance of the code generated by Eqn. 11. However, the minimum distance of this code is less than that generated by either \((1, L_{3,1}, L_{4,1}, \ldots , L_{n,1})\) or \((1, L_{3,2}, L_{4,2}, \ldots , L_{n,2})\).

4.4 Comparison between IPM-FD and Boolean masking with fault detection

We recall that, in the state of the art about masking countermeasures, Boolean masking (BM, [25, §4]) is presented as a particularly convenient masking scheme, since sharing and demasking only involve XOR operations. In contrast, IPM, in addition to field additions (XORs), is furthermore encumbered with field multiplication with constants (the \(L_i\in {\mathbb {K}}\) values). This makes implementations more complex on programming (code size) and less efficient to implement. In practice, BM is thus a particular case of IPM, where all coefficients \(L_i=1\in {\mathbb {K}}\).

Still, one historical advantage of IPM over BM, which initially justified for the scheme, is that, at a given side-channel security order at word level, IPM is more efficient at bit level (e.g., when the leakage model is the Hamming weight or the Hamming distance).

Now, in this paper, we put forward a second advantage of IPM, in the context of fault detection (FD). Table 3 compares IPM-FD with BM-FD in this respect. It clearly appears that fault detection is not straightforward in BM-FD, whereas it is for IPM-FD. As an example, when detecting one single fault (\(d_f=1\)), and targeting a second-order protection in terms of word-level side-channel, IPM-FD manages to reach \(d_w=2\) with only \(n=4\) shares, thanks to:

However in Boolean masking scheme counterpart (i.e., in BM-FD), it is not possible to reach a minimum distance for \(H^\perp \) of value \(=3\) with a code length \(n=4\). Indeed, in systematic form, it would look as:

Now, as the minimum distance is 3, the weight of each line must be 3. Therefore, all \(2+2\) question marks (“?” symbol) must be nonzero, that is, equal to 1 (in the case of BM). Hence, the difference between the two lines is equal to \(\begin{pmatrix} 1&1&0&0 \end{pmatrix}\), which has a weight \(=2\), therefore a contradiction. However, let us notice that the problem can be solved by considering a length extended by one, that is:

where among the three question marks in one line, at least two are nonzero (\(=1\)). Knowing that the constraint is not only to have the number of ones \(\ge 3\) in each line, but also in the sum of the two lines, we can use:

But its length is \(n=5\), i.e., larger by one unit compared to IPM case, where constants can be chosen arbitrarily in the whole \({\mathbb {F}}_{256}\) and not only in \(\{0,1\}\subset {\mathbb {F}}_{256}\).

Summarizing up, as shown in Table 3, the IPM-FD is better than BM-FD in two aspects given the same \(d_f\). Firstly, IPM-FD needs less shares than BM-FD when achieving the same word-level security order (denoted in red bold font in Table 3). Secondly, the bit-level security order in IPM-FD is much higher than in BM-FD given the same \(d_w\) (denoted in black bold font in Table 3). It is worthy noting that the advantages of IPM-FD over BM-FD become much larger when the number of shares increases. However, in order to find the good or even optimal codes for IPM-FD, it is necessary to turn to DSM scheme.

5 Practical implementation and performances

We implement IPM-FD scheme on AES-128 based on (thanks to) open-source implementation of masked AES by Coron et al. [12, 13]. All the computations are made with additions, multiplications and lookups in some pre-computed tables. The random number generator comes from the https://libsodium.gitbook.io/ Sodium library [16]. Each sensitive variable (\(16\times (10+1)\) subkeys from the Key Schedule routine and 16 bytes in state array) is masked into n shares using \(n-k\) random bytes. In particular, regarding nonlinear operations, the S-box of a masked value is computed online instead of the 256-sized table, where its polynomial expression obtained via Lagrange interpolation is:

After demasking a shared variable, we check that the data have no faults injected by comparing the k copies and raising an alarm if any fault is detected. Our implementation works for any \(n\ge k\). Specially, for \(n<5\) and \(k<3\) we choose the best known linear code (BKLC) D obtained with Magma; otherwise, we randomly generate a matrix for masking.

Our implementation of IPM-FD on AES (in C) is publicly available [9]. Furthermore, the optimal linear codes for IPM by an exhaustive study are available [10].

5.1 Performance evaluation

We make a comparison for the same security order at word level, between:

-

No fault detection (classic IPM, \(k=1\))—this is our reference

-

Single fault detection by temporal redundancy (repeat twice the IPM computation)

-

Single fault detection embedded into IPM (so-called IPM-FD for \(k=2\))

Performance-wise, Table 4 shows that two fault detection strategies (temporal repetition and IPM-FD) are at essentially the same cost.

But if we consider the most time-consuming operation—the field multiplication: the number of field multiplications in IPM on n shares (Algorithm 5 of [2]) is \(3 n^2 -n\). However, the number of multiplications in IPM-FD on n shares is:

-

\(k (3(n-k+1)^2-(n-k+1))\) regarding the k IPM multiplications on \(n-k+1\) shares,

-

\((k-1)(n-k)\) regarding the \((k-1)\) homogenizations.

Hence, a total complexity of \(k (3(n-k+1)^2-(n-k+1))+(k-1)(n-k)\) is:

-

\(3n^2-n\) for IPM-FD with \(k=1\),

-

\(6n^2-13n+6\) for IPM-FD with \(k=2\).

Now, we have that \(2 \times (3n^2-n) > 6n^2-13n+6\), which are shown in Fig. 5. Therefore, it is more interesting, complexity-wise, to use IPM-FD for \(k=2\) than repeating a computation twice.

Notice that temporal redundancy is prone to fault injection attacks [29, 32], whereby an attacker would reproduce exactly the same fault on the repeated executions. Therefore, our IPM-FD is intrinsically stronger against fault attacks, at the same cost in terms of execution speed.

6 Conclusion and perspectives

IPM shows an advantageous property—higher security order at bit level \(d_b\) than at word level—as a promising alternative to Boolean masking. In this paper, we propose a novel end-to-end fault detection scheme called IPM-FD, which is a IPM-like scheme to detect faults by redundancy on secrets rather than on masks. The IPM-FD is also a unified scheme to resist side-channel analysis and fault injection attack simultaneously. We also present an example by applying IPM-FD to AES and provide a comparison on performance with different settings.

As a perspective, we notice that the performances of both IPM and IPM-FD can be improved by choosing small (or sparse) values for \(L_{i,j}\in {\mathbb {K}}\) scalars. This strategy is similar to that already employed by Rijndael inventors, for instance when designing the MixColumns operation. This raises the question of finding codes with sparse matrices of high dual distance.

Secondly, we show in Tables 2, 5 and 6 for results by an exhaustive study, which is very time-consuming and even impossible to find the optimal one when the number of shares n gets larger. Hence, a systematic (e.g., algebraic) construction of better codes than mere repetition codes is much more preferable and could be leveraged. However, it is still an open problem to construct optimal or suboptimal codes for IPM-FD. One possible approach is to convert some constructions [6] in DSM to IPM-FD which needs further study.

Besides, we notice that our fault detection paradigm applies also to the case of Boolean masking, i.e., IPM, where all constants \(L_{i,j}\) are equal to 1, which can also enable enhancements of currently deployed software code with respect to detection of perturbations.

Notes

BKLC is the short of the best known linear code in Magma [35].

References

Ali, Subidh, Mukhopadhyay, Debdeep, Tunstall, Michael: Differential fault analysis of AES: towards reaching its limits. J. Cryptogr. Eng. 3(2), 73–97 (2013)

Balasch, J., Faust, S., Gierlichs, B.: Inner product masking revisited. In: Elisabeth, O., Marc F., editors, Advances in Cryptology - EUROCRYPT 2015 - 34th Annual International Conference on the Theory and Applications of Cryptographic Techniques, Sofia, Bulgaria, April 26-30, 2015, Proceedings, Part I, volume 9056 of Lecture Notes in Computer Science, pp 486–510. Springer, (2015)

Balasch, J., Faust, S., Gierlichs, B., Paglialonga, C., Standaert, F.-X.: Consolidating inner product masking. In: Tsuyoshi Takagi and Thomas Peyrin, editors, Advances in Cryptology - ASIACRYPT 2017 - 23rd International Conference on the Theory and Applications of Cryptology and Information Security, Hong Kong, China, December 3-7, 2017, Proceedings, Part I, volume 10624 of Lecture Notes in Computer Science, pp. 724–754. Springer, (2017)

Barthe, Gilles, Belaïd, Sonia, Dupressoir, François, Fouque, Pierre-Alain, Grégoire, Benjamin: Compositional verification of higher-order masking: application to a verifying masking compiler. IACR Cryptol. ePrint Arch. 2015, 506 (2015)

Bringer, J., Carlet, C., Chabanne, H., Guilley, S., Maghrebi, H.: Orthogonal direct sum masking—a smartcard friendly computation paradigm in a code, with builtin protection against side-channel and fault attacks. In: David Naccache and Damien Sauveron, editors, Information Security Theory and Practice. Securing the Internet of Things - 8th IFIP WG 11.2 International Workshop, WISTP 2014, Heraklion, Crete, Greece, June 30 - July 2, 2014. Proceedings, volume 8501 of Lecture Notes in Computer Science, pp. 40–56. Springer, (2014)

Carlet, C., Güneri, C., Mesnager, S., Özbudak, F.: Construction of some codes suitable for both side channel and fault injection attacks. In: Lilya Budaghyan and Francisco Rodríguez-Henríquez, editors, Arithmetic of Finite Fields—7th International Workshop, WAIFI 2018, Bergen, Norway, June 14-16, 2018, Revised Selected Papers, volume 11321 of Lecture Notes in Computer Science, pp. 95–107. Springer, (2018)

Chakraborty, Abhishek, Mazumdar, Bodhisatwa, Mukhopadhyay, Debdeep: A combined power and fault analysis attack on protected grain family of stream ciphers. IEEE Trans. CAD Integr. Circuits Syst. 36(12), 1968–1977 (2017)

Cheng, W., Carlet, C., Goli, K., Danger, J.-L., Guilley, S.: Detecting faults in inner product masking scheme—IPM-FD: IPM with fault detection, August 24 2019. In: 8th International Workshop on Security Proofs for Embedded Systems (PROOFS). Atlanta, GA, USA (2019)

Cheng, W., Carlet, C., Goli, K., Danger, J.-L., Guilley, S.: Detecting faults in inner product masking scheme—IPM-FD: IPM with fault detection, August (2019). https://github.com/Qomo-CHENG/IPM-FD

Cheng, W., Guilley, S., Danger, J.-L., Carlet, C., Mesnager, S.: Optimal Linear Codes for IPM, January (2020). https://github.com/Qomo-CHENG/OC-IPM

Clavier, C., Feix, B., Gagnerot, G., Roussellet, M.: Passive and active combined attacks on AES. In: FDTC, pp. 10–18. IEEE Computer Society, 21 August 2010. Santa Barbara, CA, USA. (2010) https://doi.org/10.1109/FDTC.2010.17

Coron, J.-S.: HTable countermeasure against side-channel attacks—reference implementation for the masking scheme presented in [13]. https://github.com/coron/htable

Coron, J.-S.: Higher order masking of look-up tables. In: Phong Q. Nguyen and Elisabeth Oswald, editors, EUROCRYPT, volume 8441 of Lecture Notes in Computer Science, pp. 441–458. Springer (2014)

Coron, J.-S., Prouff, E., Rivain, M.: Side channel cryptanalysis of a higher order masking scheme. In: Pascal, P., Ingrid, V., editors, CHES, volume 4727 of LNCS, pp. 28–44. Springer (2007)

Danger, Jean-Luc, Guilley, Sylvain, Heuser, Annelie, Legay, Axel, Tang, Ming: Physical security versus masking schemes. In: Koç, Çetin Kaya (ed.) Cyber-Physical Systems Security, pp. 269–284. Springer, Berlin (2018)

Denis, F.: The Sodium cryptography library, Jul (2019)

Ishai, Y., Sahai, A., Wagner, D.: Private circuits: securing hardware against probing attacks. In: CRYPTO, volume 2729 of Lecture Notes in Computer Science, pp. 463–481. Springer, August 17–21 2003. Santa Barbara, California, USA (2003)

Karpovsky, M.G., Kulikowski, K.J., Wang, Z.: Robust error detection in communication and computation channels. In: in Proceedings of International Workshop on Spectral Techniques (2007)

Karpovsky, Mark G., Nagvajara, Prawat: Optimal codes for minimax criterion on error detection. IEEE Trans. Inf. Theory 35(6), 1299–1305 (1989)

Karpovsky, Mark G., Taubin, Alexander: New class of nonlinear systematic error detecting codes. IEEE Trans. Inf. Theory 50(8), 1818–1820 (2004)

Kirschbaum, M., Popp, T.: Evaluation of a DPA-resistant prototype chip. In: ACSAC, pp. 43–50. IEEE Computer Society, 7-11 December 2009. Honolulu, Hawaii (2009)

Jessie MacWilliams, F., Sloane, Neil J .A.: The Theory of Error-Correcting Codes. Elsevier, Amsterdam, North Holland (1977). ISBN: 978-0-444-85193-2

MacWilliams, F.J., Sloane, N.J.A. Neil James A.: The theory of error correcting codes. North-Holland mathematical library. North-Holland Pub. Co. New York, Amsterdam, New York, 1977. Includes index (1977)

Mangard, S., Oswald, E., Popp, T.: Power Analysis Attacks: Revealing the Secrets of Smart Cards. Springer, December 2006. ISBN 0-387-30857-1, http://www.dpabook.org/ (2006)

Messerges, T.S.: Securing the AES finalists against power analysis attacks. In: Bruce Schneier, editor, Fast Software Encryption, 7th International Workshop, FSE 2000, New York, NY, USA, April 10-12, 2000, Proceedings, volume 1978 of Lecture Notes in Computer Science, pp. 150–164. Springer (2000)

Monteiro, C., Takahashi, Y., Sekine, T.: Low power secure AES S-box using adiabatic logic circuit. In: 2013 IEEE Faible Tension Faible Consommation, pp. 1–4, June (2013)

Moore, Simon, Anderson, Ross, Mullins, Robert, Taylor, George, Fournier, Jacques JA: Balanced self-checking asynchronous logic for smart card applications. J. Microprocess. Microsyst. 27(9), 421–430 (2003)

Ngo, X.T., Bhasin, S., Danger, J.-L., Guilley, S., Najm, Z.: Linear complementary dual code improvement to strengthen encoded circuit against hardware Trojan horses. In: IEEE International Symposium on Hardware Oriented Security and Trust, HOST 2015, Washington, DC, USA, 5-7 May, 2015, pp. 82–87. IEEE (2015)

Patranabis, S., Chakraborty, A., Nguyen, P.H., Mukhopadhyay, D.: A biased fault attack on the time redundancy countermeasure for AES. In: Stefan, M., Axel, Y.P., editors, Constructive Side-Channel Analysis and Secure Design - 6th International Workshop, COSADE 2015, Berlin, Germany, April 13-14, 2015. Revised Selected Papers, volume 9064 of Lecture Notes in Computer Science, pp. 189–203. Springer (2015)

Poussier, R., Guo, Q., Standaert, F.-X., Carlet, C., Guilley, S.: Connecting and improving direct sum masking and inner product masking. In: Thomas, E., Yannick, T., editors, Smart Card Research and Advanced Applications—16th International Conference, CARDIS 2017, Lugano, Switzerland, November 13-15, 2017, Revised Selected Papers, volume 10728 of Lecture Notes in Computer Science, pp. 123–141. Springer (2017)

Rivain, M., Prouff, E.: Provably secure higher-order masking of AES. In: Stefan, M., François-Xavier, S., editors, CHES, volume 6225 of LNCS, pp. 413–427. Springer (2010)

Saha, S., Jap, D., Breier, J., Bhasin, S., Mukhopadhyay, D., Dasgupta, P.: Breaking redundancy-based countermeasures with random faults and power side channel. In: 2018 Workshop on Fault Diagnosis and Tolerance in Cryptography, FDTC 2018, Amsterdam, The Netherlands, September 13, 2018, pp. 15–22. IEEE Computer Society (2018)

Schneider, T., Moradi, A., Güneysu, T.: Parti - towards combined hardware countermeasures against side-channel and fault-injection attacks. In: Matthew Robshaw and Jonathan Katz, editors, Advances in Cryptology - CRYPTO 2016 - 36th Annual International Cryptology Conference, Santa Barbara, CA, USA, August 14-18, 2016, Proceedings, Part II, volume 9815 of Lecture Notes in Computer Science, pp. 302–332. Springer (2016)

Singleton, Richard C.: Maximum distance q -nary codes. IEEE Trans. Inf. Theory 10(2), 116–118 (1964)

University of Sydney (Australia). Magma Computational Algebra System. http://magma.maths.usyd.edu.au/magma/, Accessed on Aug 22, 2014

Wang, W., Standaert, F.-X., Yu, Y., Pu, S., Liu, J., Guo, Z., Gu, D.: Inner product masking for bitslice ciphers and security order amplification for linear leakages. In: Kerstin, L.-R., Michael, T., editors, Smart Card Research and Advanced Applications - 15th International Conference, CARDIS 2016, Cannes, France, November 7-9, 2016, Revised Selected Papers, volume 10146 of Lecture Notes in Computer Science, pp. 174–191. Springer (2016)

Acknowledgements

This work has been partly financed via the project TeamPlay (https://teamplay-h2020.eu/), a project from European Union’s Horizon2020 research and innovation program, under grant agreement No. 779882, and also supported by SECODE project (https://secode.telecom-paristech.fr/) under grant No. ANR-15-CHR2-0007 funded by the CHIST-ERA program and coordinated by ANR.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This work is an extension of [8] (PROOFS 2019).

An optimal codes for IPM-FD with \(k=2\)

An optimal codes for IPM-FD with \(k=2\)

By using Magma [35], we present some instances for IPM-FD with \(k=2\), in particular \({\mathbb {K}}= {\mathbb {F}}_{2^4}\) in Table 5 and \({\mathbb {K}}= {\mathbb {F}}_{2}\) in Table 6, respectively. Interestingly, we notice that for \({\mathbb {K}}= {\mathbb {F}}_{2}\) the best minimum distance of \({\mathbf {H}}^\perp \) is equal to BKLC(GF(2), n, 2), where n is the same as in Table 6.

Rights and permissions

About this article

Cite this article

Cheng, W., Carlet, C., Goli, K. et al. Detecting faults in inner product masking scheme. J Cryptogr Eng 11, 119–133 (2021). https://doi.org/10.1007/s13389-020-00227-6

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13389-020-00227-6