Abstract

Direct Sum Masking (DSM) and Inner Product (IP) masking are two types of countermeasures that have been introduced as alternatives to simpler (e.g., additive) masking schemes to protect cryptographic implementations against side-channel analysis. In this paper, we first show that IP masking can be written as a particular case of DSM. We then analyze the improved security properties that these (more complex) encodings can provide over Boolean masking. For this purpose, we introduce a slight variation of the probing model, which allows us to provide a simple explanation to the “security order amplification” for such masking schemes that was put forward at CARDIS 2016. We then use our model to search for new instances of masking schemes that optimize this security order amplification. We finally discuss the relevance of this security order amplification (and its underlying assumption of linear leakages) based on an experimental case study.

Access provided by CONRICYT-eBooks. Download conference paper PDF

Similar content being viewed by others

1 Introduction

Masking is among the most investigated countermeasures against side-channel analysis. It aims at performing cryptographic computations on encoded (aka secret shared) data in order to amplify the impact of the noise in the adversary’s observations [10, 13, 14, 31]. For example, in the context of block ciphers, a lot of attention has been paid to the efficient exploitation of simple encodings such as additive (e.g., Boolean ones in [12, 25, 32]) or multiplicative ones (e.g., [19, 20]). Very summarized, the main advantage of these simple encodings is that they enable efficient implementations [22].

In parallel, an alternative trend has investigated the potential advantages of slightly more complex encodings. Typical examples include polynomial masking (e.g., [18, 21, 33]), Inner Product (IP) masking [1, 2, 17] and code-based masking (e.g., [5,6,7,8,9]). While computing over these encodings is generally more expensive [25], the recent literature has shown that their elaborate algebraic structure also leads to improved security properties. For example, it can decrease the information leakages observed in “low noise conditions” [1, 2, 18, 21, 33]. Also, it can improve the “statistical security order” (or security order in the bounded moment leakage model [3]) in case of linear leakage functions [8, 26, 38]. So while it is an open problem to find out which masking scheme offers the best security vs. efficiency tradeoff for complete implementations in actual devices, the better understanding and connection of simple and complex encoding functions appears as a necessary first step in this direction.

For this purpose, and as a starting point, we note that it has already been shown in [2] that IP masking can be viewed as a generalization of simpler encodings (Boolean, multiplicative, affine and polynomial). So our focus in this paper will be on the connection between IP masking and the Direct Sum Masking (DSM) [5, 9], which is a quite general instance of code-based masking. Our contributions in this respect are as follows:

First, we connect IP masking and DSM by showing that the first one can be seen as a particular case of the latter one. Second, we analyze the security properties of these masking schemes. In particular, we show that the “security order amplification” put forward in previous works can be easily explained thanks to (a variation of) the probing model [27], by considering bit-level security, rather than larger (field-element-level) security. Thanks to this connection, we then express how to best optimize the security order amplification (i.e., the bit-level security) based on the dual distance of a binary code. We further perform an informed search on code instances which allows us to improve the state-of-the-art parameters for IP encodings. We finally propose experiments discussing the interest and limitations of security order amplification in practice (i.e., the relevance of the linear leakage assumption).

Cautionary note. Our focus in this paper is on encodings. Admittedly, an even more important issue is to compute (in particular, multiply) efficiently over encodings. In this respect, while the literature on IP masking provides solutions to this problem [1, 2], it remains an open challenge to describe efficient multiplication algorithms for DSM.

2 Connecting DSM and IP Masking

In this section we first introduce the two masking schemes that we will analyze, namely IP masking and DSM. We then show how these two methods are connected, focusing only on their functional description (security will be investigated in Sect. 3).

2.1 Notations

We use capital letters for random variables and small caps for their realizations. We denote the conditional probability of a random variable A given B with \(\mathrm {P}\left[ A|B\right] \). We use sans serif font for functions (e.g., \(\mathsf {F}\)) and calligraphic fonts for sets (e.g., \(\mathcal {A}\)). Given a field \(\mathbb {K}\), we denote by \(a \cdot b\) the field multiplication between two elements a and b. We denote by \([a]_2\) the binary vector representation of some element \(a \in \mathbb {F}_{2^k}\) for some k. We use capital bold letters for matrices (e.g., \(\mathbf {M}\)) and small bold caps for raw vectors (e.g., \(\mathbf {v}\)). We denote by \(\mathbf {v}(i)\) the i-th element of a vector \(\mathbf {v}\). We denote by \(\mathbf {M}^T\) (resp. \(\mathbf {v}^T\)) the transpose of a matrix M (resp. a vector \(\mathbf {v}\)). The inner product between two vectors \(\mathbf {v}_1\) and \(\mathbf {v}_2\) is noted \(\langle \mathbf {v}_1,\mathbf {v}_2 \rangle \). In the rest of the paper, x denotes a k-bit secret value that we wish to mask and \([x]_2\) its binary vector representation.

2.2 Inner Product Masking

IP masking was introduced in [1, 2, 17] as a generalization of Boolean masking. Instead of simply splitting a secret value as the sum of random shares, it decomposes the secret as the inner product between random values and a public vector. More formally, the first step of the IP encoding is to select a public vector \(\mathbf {l}=(l_0,...,l_{n-1})\in \mathbb {F}_{2^k}^n \backslash \{0\}\) (with \(l_0\) generally set as \(l_0=1\) for performance reasons), where n is the number of shares. A sensitive variable \(x \in \mathbb {F}_{2^k}\) is then encoded as the vector \(\mathbf {s}_{IP}=(s_0,...,s_{n-1})\in \mathbb {F}_{2^k}^n\) such that \(x=\langle \mathbf {l} , \mathbf {s}_{IP} \rangle \). Algorithm 1 describes the masking initialization procedure, where the function \(\mathsf {rand}(\mathbb {F}_{2^k})\) returns a random element uniformly from \(\mathbb {F}_{2^k}\). Boolean masking is the particular case of IP masking where \(l_i=1\) for \(i \in [0,n-1]\).

2.3 Direct Sum Masking

DSM [5, 9] describes masking from an error correcting code viewpoint. As opposed to IP masking, this scheme works on the bit level. That is, a sensitive variable x is viewed as belonging to \(\mathbb {F}_2^k\) instead of \(\mathbb {F}_{2^k}\) and is thus represented as \([x]_2\). It allows adding an arbitrary amount m of bits of randomness to the encoding of \([x]_2\) (i.e., not necessarily a multiple of k as in IP masking). As a result, the final encoding \(\mathbf {s}_{DSM}\) of \([x]_2\) lays in \(\mathbb {F}_2^{k+m}\). As a first step, the vector space \(\mathbb {F}_2^{k+m}\) is decomposed in two subspaces C and D of dimensions k and m:

where C and D respectively represent the spaces where the sensitive variable and the mask lay. That is, the sensitive variables (resp., the mask) are the code words of C (resp., D) of length of \(k+m\). We denote by \(\mathbf {G}\) and \(\mathbf {H}\) the generator matrices of C and D. The encoding of \([x]_2\) is the vector \(\mathbf {s}_{DSM}= [x]_2\mathbf {G} + \mathbf {y}\mathbf {H}\), where \(\mathbf {y} \in \mathbb {F}_2^m\) is a random binary vector. Recovering \([x]_2\) (i.e., decoding) or \(\mathbf {y}\) from \(\mathbf {s}_{DSM}\) can then be achieved thanks to a projection on their respective space. We stress the fact that while this scheme has been designed to thwart both side-channel and fault attacks (if C and D are orthogonal), we only focus on the side-channel part.

2.4 Unifying DSM and IP Masking

From the previous description of IP masking and DSM, we now show how these two schemes are connected. We recall that the IP encoding of a sensitive variable x using n shares is the vector \(\mathbf {s}_{IP}=(s_0 = x+l_1 \cdot s_1 + ... + l_{n-1} \cdot s_{n-1}, s_1, ... , s_{n-1}) \in \mathbb {F}_{2^k}^n\). In order to make the connection with DSM, we first have to move its base field from \(\mathbb {F}_{2}\) to \(\mathbb {F}_{2^k}\). That is, we want the final DSM encoding to belong to \(\mathbb {F}_{2^k}^n\). We next decompose \(\mathbb {F}_{2^k}^n\) using two supplementary subspaces C and D such that \(\mathbb {F}_{2^k}^n = C \oplus D\), where the dimension of C is 1 and the dimension of D is \(n-1\). As in the previous subsection, we denote by \(\mathbf {G}\) and \(\mathbf {H}\) the generator matrices of C and D that we define as follow (where each element belong to \(\mathbb {F}_{2^k}\)):

Equation 3 then shows the encoding vector \(\mathbf {s}_{MIX}\) of a secret \(x \in \mathbb {F}_{2^k}\) using a randomness vector \(\mathbf {y}=(y_1,...,y_{n-1}) \in \mathbb {F}_{2^k}^{n-1}\):

The encoding of an IP masking can thus be written by adapting the DSM scheme base field and choosing the generating matrices accordingly. However, this modification discards one property of the original DSM scheme. Namely, the number of bits of randomness added to the encoding cannot be arbitrarily chosen as it has to be a multiple of k. Besides, we note that the discussions in [5] additionally required the codes C and D to be orthogonal. Yet, this additional property is not required in our discussions that focus only on side-channel security, and where the secret x can be recovered using a projection: \(x = \langle \mathbf {l}, \mathbf {s}_{MIX} \rangle \).

3 Probing Security and Bit Probing Security

In this section, we discuss the side-channel resistance of the IP masking and DSM in the probing model [27]. For each method, we look at the security of the encoding. In the case of IP masking, we assume that the size k of the base field corresponds to the word size of the implementation and the probes allow the adversary to observe such field elements. As the DSM works on the bit level, we additionally introduce the bit-probing model, where each probe can only look at one bit of the encoding. We finally make the link between the (general, ie.g., field-level) probing security and the bit-probing security of the inner product masking. This connection will be used in the next section in order to explain the security order amplification of the IP masking.

3.1 Probing Security

The probing model introduced in [27] formalizes the security improvement obtained with the masking countermeasure. Informally, dth-order probing security ensures that the distribution of any d or less intermediate variables manipulated during the algorithm execution is independent of any secret value. From an attacker point-of-view, it implies that only the combination of at least \(d+1\) intermediate variables can give information on the secret. As a result, the practical security increases exponentially in the number of shares, which is intuitively explained by the fact that the adversary has to estimate higher-order statistical moments, a task of which the sampling complexity grows exponentially in the order [10, 13, 14, 31]. In the case of IP masking with n shares, previous works showed that the encoding has a probing security of order \(d=n-1\) [1, 2].

3.2 Bit-Probing Security

Thanks to the link exhibited in the previous section, we naturally have that DSM is secure in the probing model as well, which also follows the analysis in [5, 9]. However, since DSM works at the bit level, we additionally define the bit-probing security as the security in a tweaked probing model, where each probe can evaluate only one bit of the encoding (even if this encoding is defined for larger fields). In this model, the security order is thus the maximum number \(d'\) such that any combination of \(d'\) bits of \(\mathbf {s}_{DSM}\) is independent of the secret \([x]_2\). More formally, the bit-probing security of the DSM scheme is given by:

Proposition 1

Let C and D two codes of generator matrices \(\mathbf {G}\) and \(\mathbf {H}\) define a DSM encoding. Let k and m respectively be the dimensions of C and D. The bit-probing security of the DSM encoding defined by C and D is equal to the distance of the dual code (called the dual distance) of D minus 1.

Proof

Let \(\mathbf {s}\) be the encoding of some value \([x]_2\). We have \(\mathbf {s}_{DSM} = [x]_2\mathbf {G} + \mathbf {y}\mathbf {H}\), a vector of \(k+m\) bits. The bit-probing security is the number \(d'\) such that at least \(d'+1\) elements of \(\mathbf {s}_{DSM}\) are required to recover at least one bit of \([x]_2\). If we consider the system given by Eq. 4:

where only the right part is known, \(d'+1\) is equal to the smallest number of sub-equations of this system that allows recovering at least one bit of \([x]_2\). We assume that the dual distance of D is equal to \(d+1\), which is the minimum number of columns of \(\mathbf {H}\) that can be linearly dependent. This means that at least \(d+1\) sub-equations of the system in Eq. 4 are required to suppress the influence of \((\mathbf {y}\mathbf {H})^T\), and thus get information on \([x]_2\). As a result, the bit-probing security of the DSM encoding is equal to d. \(\square \)

Note that as will be clear next, the bit-probing security order (which can be higher than the probing security order) does not always guarantee a higher practical security order (i.e., in the bounded moment or noisy leakage models [3, 31]) than predicted by the (field-level) probing security order. Yet, it will be instrumental in explaining the security order amplification for certain types of leakage functions put forward in [38].

3.3 Inner Product and Bit-Probing Security

We now consider the bit-probing security of the IP masking encoding. In Sect. 2.4, we showed how IP masking and DSM are linked by changing the base field of the DSM scheme from \(\mathbb {F}_2\) to \(\mathbb {F}_{2^k}\). In order to assess the bit-probing security of the IP masking by using Proposition 1, we have changed the base field back from \(\mathbb {F}_{2^k}\) to \(\mathbb {F}_2\). As a result, we want a new encoding \(\mathbf {s}_{MIX2}\) that belongs to \(\mathbb {F}_{2}^{kn}\) such that each bit of \(\mathbf {s}_{MIX}\) and \(\mathbf {s}_{MIX2}\) are the same: \([\mathbf {s}_{MIX}(i)]_2 = (\mathbf {s}_{MIX2}(ki),...,\mathbf {s}_{MIX2}(k(i+1)-1)).\)

As a preliminary, we first define by \(\mathbf {L}_i\) (with \(i \in [1,n-1]\)) the \(k \times k\) binary matrices that represent the multiplication by \(l_i\) in \(\mathbb {F}_{2^k}\). That is, given some value \(x \in \mathbb {F}_{2^k}\), we define \(\mathbf {L_i}\) such that \([l_i \cdot x]_2 = (\mathbf {L}_i \times {[x]_2}^T)^T\). The matrix \(\mathbf {L}_i\) can be constructed such that its j-th column is equal to \([\alpha ^j \cdot l_i]_2\), where \(\alpha \) is a root of the polynomial used to create \(\mathbb {F}_{2^k}\).

We now define two codes C and D such that \(\mathbb {F}_{2}^{kn} = C \oplus D\), the dimension of C is k, and the dimension of D is \(k(n-1)\), with their generator matrix \(\mathbf {G}\) and \(\mathbf {H}\) specified as follow:

such that the first k columns of \(\mathbf {G}\) are 1 and the next \(k(n-1)\) are 0. Here, \(\mathbf {1}_k\) denotes the \(k \times k\) identity matrix and \(\mathbf {0}_k\) denotes the \(k \times k\) zero matrix. Equation 6 then shows the encoding vector \(\mathbf {s}_{MIX2}\) of a secret \([x]_2 \in \mathbb {F}_{2}^k\) using a randomness vector \(\mathbf {y}=(y_1,...,y_{k(n-1)}) \in \mathbb {F}_{2}^{k(n-1)}\):

From Proposition 1, we know that the bit-probing security of \(\mathbf {s}_{MIX2}\) corresponds to the dual distance of \(\mathbf {H}\) minus 1 (which depends on the selection of the \(\mathbf {l} = (l_1,...,l_{n-1})\) vector of the IP masking, as already hinted in [38]). As a result, the best bit-probing security using n shares can be achieved by selecting \(\mathbf {l}\) such that the dual distance of \(\mathbf {H}\) is maximized.

4 Security Order Amplification

Under some physical assumption that will be discussed later, it has been observed that the concrete security order of the IP encoding (in the bounded moment or noisy leakage models [3, 31]) can be higher than the one given by its probing security [38]. In this section, we provide a formal explanation of this phenomenon that we so-far denoted as security order amplification. We first introduce the bounded moment model that we will use for this purpose [3]. We then apply this model to the IP masking, and explain its link with security order amplification.

4.1 Bounded Moment Model

The bounded moment leakage model has been introduced in [3], mainly in order to formalize the security of parallel implementations and to connect probing security with current (moment-based) evaluation practices such as [35].

For our following discussions, we will consider a n-share masked implementation with encoding \(\mathbf {s}=(s_0,...,s_{n-1})\) of a secret x that manipulates all the shares within N cycles. As in [3], we denote by \(\mathcal {Y}_c\) the set of shares that are manipulated during the cycle c (\(0 \le c \le N-1\)) and by \(n_c\) the cardinal of \(\mathcal {Y}_c\). We assume that the random variable \(L_c\) that represents the leakage associated to the computation during the cycle c follows a linear model:

where \(\mathsf {L}_c^i\) denotes the deterministic leakage part associated to the manipulation of the share \(\mathcal {Y}_c(i)\) and \(R_c\) a random noise variable. Note that by linear model, we mean that the different \(\mathsf {L}_c\)’s are summed in Eq. 7, which is needed to ensure that the leakages corresponding to different shares are independent (otherwise even the probing security order will not be reflected in the bounded moment or noisy leakage models). By contrast, so far we do not assume that the \(\mathsf {L}_c\)’s are linear (this will be only needed for security order amplification).

A fully serial implementation corresponds to the case \(N=n\) and \(n_c=1, c\in [0,N-1]\). On the opposite, a fully parallel implementation would be \(N=1\) and \(n_0 = n\). In the later case, higher-order probing security can never be achieved as a single variable contains the information on all shares. Hence, the bounded moment model has been introduced to characterize the security of such fully parallel implementations. Basically, having a bounded moment security of order d means that any statistical moment up to the order d of the leakage distribution \(\{\mathsf {L}_c\}_{c=0}^{N-1}\) is independent of the secret. More formally, Definition 1 describes the bounded moment security.

Definition 1

Let \(\{L_c\}_{c=0}^{N-1}\) be the leakages of a N cycles parallel masked implementation that manipulates a secret x. We denote by \(\mathsf {E}\) the expectation operation. This implementation is security at order d if, for all N-tuples \(d_i \in \mathbb {N}^N\) such that \(\sum _{i=0}^{N-1} d_i \le d\), we have that \(\mathsf {E}(\mathsf {L}_0^{d_0} \times ... \times \mathsf {L}_{N-1}^{d_{N-1}})\) is independent of x.

Interestingly, it has been shown in [3] that proving security at order o in the probing model (for a serial n-cycle implementation) implies security at order o in the bounded moment model for the corresponding parallel (1-cycle) implementation. We will use this theorem in the next subsection to prove the security order amplification of the inner product masking.

4.2 Security Order Amplification for IP Masking

We now assume an implementation of the IP masking with n shares on \(\mathbb {F}_2^k\), where one share corresponds to one variable. That is, we consider the encoding \(\mathbf {s}_{MIX}=(s_0,...,s_{n-1})\) such that each \(s_i\) is manipulated independently. We denote by \(L_i\) the random variable that represents the leakage on \(s_i\). We assume that the different \(L_i\)’s are independent and are the sum of a deterministic and random part: \(L_i =\mathsf {L}_i(s_i) + R_i\), where \(\mathsf {L}_i\) denotes the deterministic part of the leakage and \(R_i\) denotes a random noise variable. As stated in Sect. 3.1, this encoding has a probing security of order \(d=n-1\). However, it has been noticed in [38] that the actual security of the encoding can be higher than d if the leakage function is linear in the bits of the variable. That is, in this case, information on the secret can be only obtained by estimating a statistical moment \(d'\) of the leakage distribution, with \(d' \ge d\). This can be intuitively explained by the public vector \(\mathbf {l}\) that mixes the bits of the different shares, as opposed to Boolean masking where knowing the first bit of each share directly reveals the first bit of the secret. More formally, the security amplification property of the inner product masking is given by Proposition 2.

Proposition 2

Let \(\mathbf {s}_{MIX}=(s_0,...,s_{n-1})\) be the n shares of the IP encoding vector defined by the public vector \(\mathbf {l} = (1,l_1,...,l_{n-1})\). If the functions \(\mathsf {L}_i\) manipulating these shares are linear in the bits of \(s_i\), the bounded moment security order \(d'\) of the IP encoding given by \(\mathbf {s}_{MIX}\) is equal to the bit-probing security of its equivalent encoding \(\mathbf {s}_{MIX2}\).

Proof

As we assume that the \(\mathsf {L}_i\)’s are linear in the bits of \(s_i\), the leakage \(L_i\) can be represented as follows:

with \(\mathsf {F}_i^j, j\in [0,k-1]\) a deterministic function in the bit j of \(s_i\) and \((\alpha _i,\alpha _i^0,..., \alpha _i^{k-1}) \in \mathbb {R}^{k+1}\). We can see that the last line of Eq. 8 has the same form as Eq. 7. As we have n different leakages \(L_i\), each one linearly manipulating k bits, the full leakages \(\{L_i\}_{i=0}^{n-1}\) of \(\mathbf {s}_{MIX}\) can be viewed as the leakages of a parallel implementation of \(\mathbf {s}_{MIX2}\) with \(N=n\) cycles, each one manipulating \(n_c=k\) single-bit variables. As a result, the bounded security of a serial implementation (called A) of \(\mathbf {s}_{MIX}\) is the same as a parallel implementation (called B) of \(\mathbf {s}_{MIX2}\) with \(N=n\) and \(N_c=k\). From [3], we know that proving the bounded security of B is equivalent to proving the probing security of its serial implementation. As the probing security of the serial implementation of B corresponds to the case where one probe can only evaluate one bit, it corresponds to the bit-probing security of \(\mathbf {s}_{MIX2}\), which conclude the proof. \(\square \)

Intuitively, this result simply corresponds to the observation that while probing security at order d implies bounded moment security at order d in case the leakages of the shares are independent (with each share a field element), bit-security at order \(d'\) implies bounded moment security at order \(d'\) in the case where not only the leakages of the shares are independent (with each share being a field element), but also the different bits of each field element is manipulated independently (which is ensured by the linear leakage assumption). This result implies that, under the linear leakage assumption, maximizing the bounded moment security of the IP encoding \(\mathbf {s}_{MIX}\) is the same as maximizing the bit-probing security of \(\mathbf {s}_{MIX2}\). The next step is thus to find the best public vectors \(\mathbf {l}\) so that the bit-probing security of \(\mathbf {s}_{MIX2}\) is maximized.

5 Searching for Good Codes

As shown in Proposition 1, the key parameter characterizing the bit-probing security is the dual distance of the linear code \(\mathcal {D}\) with generator matrix:

where \(\mathbf {I}_{k}\) is an identity matrix with dimension k and \(\mathbf {L}_{i}\) is the matrix representation of a finite field element \(l_{i}\). Therefore, we have the following proposition.

Proposition 3

The problem of searching for instantiations of an IP masking scheme with good bit-probing security is equivalent to that of searching for an [nk, k] linear code \(\mathcal {C}_{g}\) over \(\mathbb {F}_{2}\) with large minimal distance and generator matrix:

The best possible linear codes with a small dimension k (e.g., \(k\le 8\)) are well-studied in literature, see [4, 23, 37]. Therefore, the minimal distance of \(\mathcal {C}_{g}\) can be upper-bounded. Moreover, the sub-matrix \(\mathbf {L}_{i}^{T}\) in \(\mathbf {G}_{g}\) is connected to the underlying irreducible polynomial \(g(x) \in \mathbb {F}_{2}[X]\), which defines the field extension from \(\mathbb {F}_{2}\) to \(\mathbb {F}_{2^{k}}\).

We now consider the practically-relevant case study of implementing the AES securely, i.e., when \(k=8\) and we use the AES polynomial \(x^{8} + x^{4} + x^{3} + x + 1\). In the following subsection, we show that one can choose the \(l_{i}\)’s to form an IP masking scheme with bit-probing security that is close to the upper bound defined by the best possible eight dimensional binary linear codes.

5.1 Application: 8-bit Implementation of the AES

The problem of determining the largest possible minimum distance of an eight dimensional binary linear code is settled in [4], i.e., it is equal to or slightly smaller than the distance defined by the Griesmer Bound [24].

The companion matrix (see [28]) of \(g(x) = x^{8} + x^{4} + x^{3} + x + 1\) is defined as:

whose last row is of the form (1 1 0 1 1 0 0 0). Thus, all the possible field elements of \(\mathbb {F}_{2^{8}}\) can be enumerated as:

for all \(\mathbf {a} = (a_{0}, a_{1}, \ldots , a_{7}) \in \mathbb {F}_{2}^{8}\). We next use three approaches for finding good linear codes with generator matrix satisfying the constraint in Eq. 10.

- Exhaustive search: :

-

When n is small, i.e., less than 4, we can do a brute-force search. That is, we enumerate all possible generator matrices \(\mathbf {G}_{g}\) with the same form as that in Eq. (10), and then test its minimum distance.

- Random search: :

-

We choose \(\mathbf {L}_{i}\) at random to construct \(\mathbf {G}_{g}\) and then test its minimum distance.

- Inductive search: :

-

We construct good [8n, 8] linear codes satisfying Eq. (10) from good \([8(n-n_{0}), 8]\) linear codes satisfying Eq. (10), where \(n_{0}\) is a small positive integer (e.g., 1, 2, 3, or 4). That is, we fix the first \(8(n-n_{0})\) columns of \(\mathbf {G}_{g}\) as the found generator matrix of a good code with length \(8(n-n_{0})\), exhaust all possible \(\mathbf {L}_{i}\)’s, for \(i = n-n_{0}, \ldots , n-1\), and then test its minimum distance.

The numerical results by running Magma are shown in Table 1, where the column n is the number of shares, the column \(n\cdot k\) is the code length, the column \(d_{best}^{\text{ IP }}\) is the best minimum distance found from linear codes with a generator matrix satisfying Eq. (10), the column \(d_{best}^{\text{ U }}\) is the upper bound derived from the best achievable minimum distance for any [8n, 8] linear codes, and the last column \(\varDelta \) is the difference between the prior two columns (i.e., the gap between IP masking and DSM). It is clear from this table that IP masking can achieve near-optimal bit-probing security if the number of shares is relatively small. Actually, most of the interesting choices of n in practice are covered in this table (since, due to performance reasons, state-of-the-art implementations of IP masking so far did not go beyond 2 or 3 shares). The constructed good codes also show an approach to instantiate IP masking with good bit-probing security. That is, one can determine the finite field elements \(l_{i}\)’s from the found generator matrix \(\mathbf {G}_{g}\) corresponding to a good linear code. We did exhaustive search for \(n = 2,3,4\), random search for \(n = 5,6\), and inductive search for \(n = 7\). Therefore, we cannot rule out the possibility of finding a linear code to reach the upper bound when \(n\ge 6\) with more computational efforts.Footnote 1

Concretely and as an example, this table shows that when considering IP masking with three shares, the standard probing model guarantees a security order 2. In case the shares only give rise to linear leakages, the bit-level probing model guarantees a security of order 7.

6 Experimental Validation

The previous positive results admittedly (highly) depend on a physical assumption (i.e., linear leakages) that may not be perfectly respected. So in order to validate the theoretical results, we now consider a practical security evaluation of an IP encoding implementation. In this respect, this case study comes with the (usual) cautionary note that the only thing we show next is that there exist implementations for which (essentially) linear leakages are observed for certain samples. This does not imply that the security order amplification can be observed for full implementations (which, as mentioned in the introduction, is left as an important scope for further research). Yet, it shows that this security order amplification can at least be used to reduce the amount of leaky samples in an implementation and/or their informativeness.

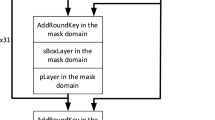

6.1 Target Implementation

Our experiments are performed on a 32 bits ARM Cortex-M4 microcontroller using the Atmel SAM4C-EK evaluation kit running at 100 MHz.Footnote 2 We implemented the IP encoding using two shares. We performed the trace acquisition using a Lecroy WaveRunner HRO 66 ZI oscilloscope running at 500 megasamples per second. We monitored the voltage variation using a 4.7 \(\varOmega \) resistor set in the supply circuit of the chip. For each execution and a given value of \(l_1\), we select a random secret x, a random value \(s_1\) and compute the encoding \(\mathbf {s}=(s_0=x + l_1 \cdot s_1 ,s_1)\). We acquire the leakages by triggering the measurement prior to the successive load of these two shares \(s_0\) and \(s_1\) into the memory.

6.2 Analysing the Leakages

Leakage Detection. Our first experiments aim at analyzing how the device leaks. As a preliminary, we start by identifying the points of interest that corresponds to the manipulation of \(s_0\) and \(s_1\) (in an evaluator-friendly setting where we know these values). Figure 1 shows the result of the correlation between the different time samples with the Hamming weight of \(s_0\) (left) and \(s_1\) (right) using 40,000 traces. Our following analyses only focus on the two samples giving the maximum correlation for both shares. We refer to the time sample corresponding to the manipulation of \(s_0\) (resp. \(s_1\)) as \(t_0\) (resp. \(t_1\)).

Linear Regression. The theoretical results on the security order amplification of Sect. 4 rely on the assumption that the leakage function is linear. As this assumption is hardware-dependent, we first evaluated the linearity of the leakages produced by our target. For a given time sample, linear regression is perfectly suited for this purpose [34]: it allows estimating how the manipulated data is leaked at the bit level. Denoting by \(\mathsf {L}: \mathbb {F}_2^8 \rightarrow \mathbb {R}\) the deterministic part of the actual leakage function, a linear regression of degree q gives the function \(\hat{\mathsf {L}}_q\) that approximate \(\mathsf {L}\) by using bit combinations of degree up to q. As a results, it is a suitable tool to estimate the linearity of the leakages, by just comparing regressions of degree 1 and 2. The description of the resulting \(\hat{\mathsf {L}}_1\) and \(\hat{\mathsf {L}}_2\) approximations are given by Eq. 12. The coefficients \(\alpha , \alpha _i\) and \(\alpha _{i,j}\) belong to \(\mathbb {R}\) and are the results of the linear regression:

Using the same traces as for the points of interest detection, we computed the linear regression at \(t_1\) using both a linear (\(\hat{\mathsf {L}}_1\)) and a quadratic (\(\hat{\mathsf {L}}_2\)) basis (\(t_0\) gave same results and is thus omitted). The left (resp., right) part of Fig. 2 shows the resulting coefficients for the linear (resp., quadratic) basis. The first value indexed by 0 corresponds to the offset \(\alpha \). The next 8 values indexed from 1 to 8 are the linear coefficient \(\alpha _i\). Finally, the quadratic coefficients \(\alpha _{i,j}\) are indexed from 9 to 36 (only in the right figure). We can see that the linear terms are significantly more dominant than the quadratic ones. As a result, it provides some confidence that our target (samples) are good candidates for the linear leakages assumption.

Mutual Information. Evaluating the linearity of the leakage function by only looking at the coefficients of \(\hat{\mathsf {L}}_1\) and \(\hat{\mathsf {L}}_2\) has two drawbacks. First, it is hard to judge if the models have converged. Secondly, we cannot know if the small values given by the quadratic coefficients are significant or come from estimation errors. In order to get rid of these two problems and push the analysis one step further, we compute the perceived information introduced in [16] arising from \(\hat{\mathsf {L}}_1\) and \(\hat{\mathsf {L}}_2\). The latter metric essentially captures the amount of information that can be extracted from a model, possibly biased by estimation and assumption errors. (Because of place constraints, we refer to this previous work for the details).

Figure 3 shows this perceived information for the linear model \(\hat{\mathsf {L}}_1\) and the quadratic model \(\hat{\mathsf {L}}_2\) in function of the number of traces used for the estimation of the model. As expected, the quadratic model needs more samples to converge as it is more complex. Interestingly, we can see that both models converge towards approximately the same value. This now formally confirms that the quadratic model does not bring significantly more information than the linear one in our setting. As a consequence, we deduce that the true leakages of our target are close to linear (and therefore that it is a good candidate to benefit from security order amplification).

6.3 Concrete Security Assessment

We now present additional results of concrete security analyses performed on our implementation. For this purpose, and in order to directly evaluate whether the security order of our IP encoding was amplified, we aim at detecting the lowest statistical moment in the leakages that reveals information on the secret. To do so, we apply the \(\rho \)-test with K-fold cross-validation as described in [15]. Note that in order to limit the (high) data requirements for this last experiment, we used the trick proposed in [36], Sect. 3.2 and performed a preliminary averaging of our traces (assuming mask knowledge) before trying to detect higher-order statistical dependencies. Namely, we used a 60\(\times \) averaging for the second-order detections and 100\(\times \) averaging for the third-order ones.

Correlation-Test. Given a leakage L, the \(\rho \)-test allows detecting a mean dependency between L and the secret x. The first step is to estimate a model from a profiling set \(\mathcal {L}_{prof}\) of \(N_{prof}\) leakage samples on L. This model corresponds to the average leakage on L for each value of the secret x. The next step is to use this model on a test set \(\mathcal {L}_{att}\) of \(N_{test}\) samples. We compute the correlation r between \(\mathcal {L}_{test}\) and our model applied on the secret values used to generate \(\mathcal {L}_{test}\). We then compute the normalized Fisher’s z-transformation on r:

where \(\mathsf {ln}\) denotes the natural logarithm. The obtained value \(r_z\) can be approximately interpreted as following a normal distribution with mean 0 and variance 1. As in [15], we set the confidence threshold of \(r_z\) that detects the presence of a dependency to 5.

K-fold cross validation. We use a 4-fold cross validation in order to reduce the variability of the \(\rho \)-test. That is, we acquire set \(\mathcal {L}\) of N leakages that we partition in 4 independent subsets \(\mathcal {L}_i, i\in [1,4]\) of equal size. We then apply the \(\rho \)-test 4 times by using a different test set each time (more precisely, iteration i uses \(\mathcal {L}_i\) as a test set and \(\cup _{j \ne i} \mathcal {L}_j\) as profiling set).

Evaluation results. In our first (reference) experiment, we used \(l_1\) equal to 1, which is equivalent to a Boolean masking encoding. The corresponding DSM representation is such that the dual distance of D is equal to two. As we expect a second-order dependency, we apply the \(\rho \)-test on the center product \(L=(L_{t_1}-\bar{L}_{t_1})\cdot (L_{t_2}-\bar{L}_{t_2})\), where \(\bar{L}_{t_1}\) (resp. \(\bar{L}_{t_2}\)) denotes the sample mean of \(L_{t_1}\) (resp. \(L_{t_2}\)). Figure 4 shows the result of the \(\rho \)-test with 4-fold cross validation. The x-coordinate shows the number of average traces being used, and the y coordinate shows the confidence value. The black curves is the line \(y=5\) that shows the confidence threshold. Each of the remaining 4 curves represents one of the cross validation tests. As expected, we quickly detect a second order leakage after roughly 5,000 average traces.

As a second experiment, we set \(l_1=3\), which is the hexadecimal representation of the polynomial \(x+1\). The corresponding ODSM representation is such that the dual distance of D is equal to three. That is, we expect the lowest statistical moment that gives information on the secret to be equal to three, thus having a security order amplification. We verify this in two steps. First, we apply the \(\rho \)-test on the center product as in the previous experiment to detect if any second-order dependency can be seen. Secondly, we apply the \(\rho \)-test on a new center product \(L=(L_{t_1}-\bar{L}_{t_1})\cdot (L_{t_2}-\bar{L}_{t_2})\cdot (L_{t_2}-\bar{L}_{t_2})\) to detect the presence of a third-order dependency. The left (resp., right) part of Fig. 5 shows the results of the \(\rho \)-test with 4-fold cross validation for the second-order (resp., third-order) test. Again, the x-coordinate represents the number of average traces, the y coordinates the confidence value and the black curve the confidence threshold. As we can see on the left part of the figure, no second-order leakages are detected with up to 100,000 average traces. However, the right part of the figure shows a third-order dependency around 60,000 average traces. This confirms both the high linearity of the leakages of this chip and the relevance of the theoretical investigations in Sect. 4.

Discussion. To conclude, we emphasize that the results of the \(\rho \)-test experiment for \(l_1=3\) were based on 100\(\times \) averaged traces, leading to a Signal-to-Noise Ratio close to 1 (which is out of the noise levels where masking security proofs apply [14]). So this experiment does not formally prove that no second-order dependency could appear for this noise level (without averaging). In this respect, we recall that this choice was motivated by time constraints (without averaging, we could not detect third-order dependencies either). Besides, and in view of the leakage analysis in Sect. 6.2, we are confident that the security order amplification put forward in this last section does actually correspond to our theoretical expectations with (close enough to) linear leakages.

Notes

- 1.

Note that the inductive search allows us to find good linear codes with relatively large minimum distance rather quickly. For instance, we can easily obtain a desired [72, 8, 30] linear code by the inductive search with the code length 8n increasing by 16 gradually from 24 to 72. The gap \(\varDelta \) here is only \(-2\). This task takes less than 2 min when using the online Magma calculator, while it is almost intractable by the other two approaches even running on a powerful local Magma server.

- 2.

References

Balasch, J., Faust, S., Gierlichs, B.: Inner product masking revisited. In: Oswald, E., Fischlin, M. (eds.) [30], pp. 486–510. Springer, Heidelberg (2015)

Balasch, J., Faust, S., Gierlichs, B., Verbauwhede, I.: Theory and practice of a leakage resilient masking scheme. In: Wang, X., Sako, K. (eds.) ASIACRYPT 2012. LNCS, vol. 7658, pp. 758–775. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-34961-4_45

Barthe, G., Dupressoir, F., Faust, S., Grégoire, B., Standaert, F.-X., Strub, P.-Y.: Parallel implementations of masking schemes and the bounded moment leakage model. In: Coron, J.-S., Nielsen, J.B. (eds.) [11], pp. 535–566. Springer, Cham (2017)

Bouyukliev, I., Jaffe, D.B., Vavrek, V.: The smallest length of eight-dimensional binary linear codes with prescribed minimum distance. IEEE Trans. Inf. Theor. 46(4), 1539–1544 (2000)

Bringer, J., Carlet, C., Chabanne, H., Guilley, S., Maghrebi, H.: Orthogonal direct sum masking - a smartcard friendly computation paradigm in a code, with builtin protection against side-channel and fault attacks. In: Naccache, D., Sauveron, D. (eds.) WISTP 2014. LNCS, vol. 8501, pp. 40–56. Springer, Heidelberg (2014). https://doi.org/10.1007/978-3-662-43826-8_4

Carlet, C., Danger, J.-L., Guilley, S., Maghrebi, H.: Leakage squeezing of order two. In: Galbraith, S.D., Nandi, M. (eds.) INDOCRYPT 2012. LNCS, vol. 7668, pp. 120–139. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-34931-7_8

Carlet, C., Danger, J.-L., Guilley, S., Maghrebi, H.: Leakage squeezing: optimal implementation and security evaluation. J. Math. Cryptol. 8(3), 249–295 (2014)

Carlet, C., Danger, J.-L., Guilley, S., Maghrebi, H., Prouff, E.: Achieving side-channel high-order correlation immunity with leakage squeezing. J. Cryptogr. Eng. 4(2), 107–121 (2014)

Carlet, C., Guilley, S.: Complementary dual codes for counter-measures to side-channel attacks. Adv. Math. Commun. 10(1), 131–150 (2016)

Chari, S., Jutla, C.S., Rao, J.R., Rohatgi, P.: Towards sound approaches to counteract power-analysis attacks. In: Wiener, M.J. (ed.) CRYPTO 1999. LNCS, vol. 1666, pp. 398–412. Springer, Heidelberg (1999). https://doi.org/10.1007/3-540-48405-1_26

Coron, J.-S., Nielsen, J.B. (eds.): EUROCRYPT 2017. LNCS, vol. 10210. Springer, Cham (2017)

Coron, J.-S., Prouff, E., Rivain, M., Roche, T.: Higher-order side channel security and mask refreshing. In: Moriai, S. (ed.) FSE 2013. LNCS, vol. 8424, pp. 410–424. Springer, Heidelberg (2014). https://doi.org/10.1007/978-3-662-43933-3_21

Duc, A., Dziembowski, S., Faust, S.: Unifying leakage models: from probing attacks to noisy leakage. In: Nguyen, P.Q., Oswald, E. (eds.) [29], pp. 423–440. Springer, Heidelberg (2014)

Duc, A., Faust, S., Standaert, F.-X.: Making masking security proofs concrete - or how to evaluate the security of any leaking device. In: Oswald, E., Fischlin, M. (eds.) [30], pp. 401–429. Springer, Heidelberg (2015)

Durvaux, F., Standaert, F.-X.: From improved leakage detection to the detection of points of interests in leakage traces. In: Fischlin, M., Coron, J.-S. (eds.) EUROCRYPT 2016. LNCS, vol. 9665, pp. 240–262. Springer, Heidelberg (2016). https://doi.org/10.1007/978-3-662-49890-3_10

Durvaux, F., Standaert, F.-X., Veyrat-Charvillon, N.: How to certify the leakage of a chip? In: Nguyen, P.Q., Oswald, E. (eds.) [29], pp. 459–476. Springer, Heidelberg (2014)

Dziembowski, S., Faust, S.: Leakage-resilient cryptography from the inner-product extractor. In: Lee, D.H., Wang, X. (eds.) ASIACRYPT 2011. LNCS, vol. 7073, pp. 702–721. Springer, Heidelberg (2011). https://doi.org/10.1007/978-3-642-25385-0_38

Fumaroli, G., Martinelli, A., Prouff, E., Rivain, M.: Affine masking against higher-order side channel analysis. In: Biryukov, A., Gong, G., Stinson, D.R. (eds.) SAC 2010. LNCS, vol. 6544, pp. 262–280. Springer, Heidelberg (2011). https://doi.org/10.1007/978-3-642-19574-7_18

Genelle, L., Prouff, E., Quisquater, M.: Thwarting higher-order side channel analysis with additive and multiplicative maskings. In: Preneel, B., Takagi, T. (eds.) CHES 2011. LNCS, vol. 6917, pp. 240–255. Springer, Heidelberg (2011). https://doi.org/10.1007/978-3-642-23951-9_16

Golić, J.D., Tymen, C.: Multiplicative masking and power analysis of AES. In: Kaliski Jr., B.S., Koç, C.K., Paar, C. (eds.) CHES 2002. LNCS, vol. 2523, pp. 198–212. Springer, Heidelberg (2003). https://doi.org/10.1007/3-540-36400-5_16

Goubin, L., Martinelli, A.: Protecting AES with Shamir’s secret sharing scheme. In: Preneel, B., Takagi, T. (eds.) CHES 2011. LNCS, vol. 6917, pp. 79–94. Springer, Heidelberg (2011). https://doi.org/10.1007/978-3-642-23951-9_6

Goudarzi, D., Rivain, M.: How fast can higher-order masking be in software? In: Coron, J.S., Nielsen, J. (eds.) [11], pp. 567–597. Springer, Cham (2017)

Grassl, M.: Tables of linear codes and quantum codes (2015). http://www.codetables.de/. Accessed 25 Apr 2017

Griesmer, J.H.: A bound for error-correcting codes. IBM J. Res. Dev. 4(5), 532–542 (1960)

Grosso, V., Prouff, E., Standaert, F.-X.: Efficient masked S-boxes processing – a step forward –. In: Pointcheval, D., Vergnaud, D. (eds.) AFRICACRYPT 2014. LNCS, vol. 8469, pp. 251–266. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-06734-6_16

Grosso, V., Standaert, F.-X., Prouff, E.: Low entropy masking schemes, revisited. In: Francillon, A., Rohatgi, P. (eds.) CARDIS 2013. LNCS, vol. 8419, pp. 33–43. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-08302-5_3

Ishai, Y., Sahai, A., Wagner, D.: Private circuits: securing hardware against probing attacks. In: Boneh, D. (ed.) CRYPTO 2003. LNCS, vol. 2729, pp. 463–481. Springer, Heidelberg (2003). https://doi.org/10.1007/978-3-540-45146-4_27

Lidl, R., Niederreiter, H.: Finite Fields. Encyclopedia of Mathematics and its Applications. Advanced Book Program/World Science Division. Addison-Wesley Publishing Company, Boston (1983)

Nguyen, P.Q., Oswald, E. (eds.): EUROCRYPT 2014. LNCS, vol. 8441. Springer, Heidelberg (2014)

Oswald, E., Fischlin, M. (eds.): EUROCRYPT 2015. LNCS, vol. 9056. Springer, Heidelberg (2015)

Prouff, E., Rivain, M.: Masking against side-channel attacks: a formal security proof. In: Johansson, T., Nguyen, P.Q. (eds.) EUROCRYPT 2013. LNCS, vol. 7881, pp. 142–159. Springer, Heidelberg (2013). https://doi.org/10.1007/978-3-642-38348-9_9

Rivain, M., Prouff, E.: Provably secure higher-order masking of AES. In: Mangard, S., Standaert, F.-X. (eds.) CHES 2010. LNCS, vol. 6225, pp. 413–427. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-15031-9_28

Roche, T., Prouff, E.: Higher-order glitch free implementation of the AES using secure multi-party computation protocols - extended version. J. Cryptogr. Eng. 2(2), 111–127 (2012)

Schindler, W., Lemke, K., Paar, C.: A stochastic model for differential side channel cryptanalysis. In: Rao, J.R., Sunar, B. (eds.) CHES 2005. LNCS, vol. 3659, pp. 30–46. Springer, Heidelberg (2005). https://doi.org/10.1007/11545262_3

Schneider, T., Moradi, A.: Leakage assessment methodology - extended version. J. Cryptogr. Eng. 6(2), 85–99 (2016)

Standaert, F.-X.: How (not) to use welch’s t-test in side-channel security evaluations. IACR Cryptology ePrint Archive, 2017, p. 138 (2017)

van Tilborg Henk, C.A.: The smallest length of binary 7-dimensional linear codes with prescribed minimum distance. Discrete Math. 33(2), 197–207 (1981)

Wang, W., Standaert, F.-X., Yu, Y., Pu, S., Liu, J., Guo, Z., Gu, D.: Inner product masking for bitslice ciphers and security order amplification for linear leakages. In: Lemke-Rust, K., Tunstall, M. (eds.) CARDIS 2016. LNCS, vol. 10146, pp. 174–191. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-54669-8_11

Acknowledgements

François-Xavier Standaert is a research associate of the Belgian Fund for Scientific Research. This work has been funded in parts by the European Commission through the H2020 project 731591 (acronym REASSURE), the CHIST-ERA project SECODE and the ERC project 724725 (acronym SWORD).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Poussier, R., Guo, Q., Standaert, FX., Carlet, C., Guilley, S. (2018). Connecting and Improving Direct Sum Masking and Inner Product Masking. In: Eisenbarth, T., Teglia, Y. (eds) Smart Card Research and Advanced Applications. CARDIS 2017. Lecture Notes in Computer Science(), vol 10728. Springer, Cham. https://doi.org/10.1007/978-3-319-75208-2_8

Download citation

DOI: https://doi.org/10.1007/978-3-319-75208-2_8

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-75207-5

Online ISBN: 978-3-319-75208-2

eBook Packages: Computer ScienceComputer Science (R0)