Abstract

In this paper, some iterative methods with third-order convergence for solving nonlinear equations with multiple roots are reviewed and analyzed. The purpose is to identify the best of the iteration schemes formulated thus far. To this end, numerical experiments were performed, and the basins of attraction of the methods are presented graphically. Based on five test functions, the best method was found to be D87, from Dong's family of methods (Int J Comput Math 21:363–367, 1987).


1 Introduction

Iterative methods are widely used for finding roots of nonlinear equations of the form

$$f(x)=0, \tag{1.1}$$
where \(f:D\subset \mathbf {C} \rightarrow \mathbf {C}\) is defined on an open set D. Solving nonlinear equations by iterative methods is a basic and extremely valuable tool in all fields of science, as well as in economics and engineering. Analytical procedures for solving such problems are rarely available, so it is indispensable to compute approximate solutions by numerical methods. The general procedure is to start from an initial approximation to the root and generate a sequence of iterates that converges, in the limit, to the actual solution. The Newton–Raphson iteration

$$x_{k+1}=x_{k}-\frac{f(x_{k})}{f'(x_{k})}, \quad k=0,1,2,\ldots \tag{1.2}$$
is probably the most widely used root-finding algorithm. For a simple root, Newton's method converges quadratically and requires two evaluations per iteration step, one of f and one of \(f'\) [16]. The Newton–Raphson iteration is an example of a one-point iteration, i.e., in each iteration step the evaluations are taken at a single point. Let \( \alpha \) be a multiple root of \( f(x)=0 \) with multiplicity m, i.e., \( f^{(i)}(\alpha )=0, i=0, 1, \ldots , m-1 \) and \( f^{(m)}(\alpha )\ne 0 \). The functions \( f^{(m-1)} \) and \( f^{1/m} \) have only a simple zero at \( \alpha \), so any of the iterative methods for a simple zero may be applied to them [25]. Schröder modified Newton's method to find multiple zeros of a nonlinear equation; the modified iteration

$$x_{k+1}=x_{k}-m\frac{f(x_{k})}{f'(x_{k})} \tag{1.3}$$

converges quadratically [19].
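The contrast between the two iterations is easy to see numerically. The sketch below is a minimal illustration, not taken from the paper: it assumes the hypothetical function f(x) = (x−1)^3(x+2), which has a root of multiplicity m = 3 at x = 1, and starts both iterations from x_0 = 2.

```python
def f(x):
    # Hypothetical test function with a root of multiplicity m = 3 at x = 1.
    return (x - 1.0) ** 3 * (x + 2.0)


def df(x):
    # f'(x) = (x - 1)^2 (4x + 5), kept factored to limit cancellation near the root.
    return (x - 1.0) ** 2 * (4.0 * x + 5.0)


def iterate(x0, m, steps):
    """x_{k+1} = x_k - m f(x_k)/f'(x_k); m = 1 is plain Newton, m = 3 is Schroeder's variant."""
    xs = [x0]
    for _ in range(steps):
        x = xs[-1]
        if f(x) == 0.0:
            break  # landed exactly on the root, where f'(x) is zero as well
        xs.append(x - m * f(x) / df(x))
    return xs


newton = iterate(2.0, m=1, steps=30)
schroeder = iterate(2.0, m=3, steps=6)

# Newton's error shrinks by roughly (m - 1)/m = 2/3 per step (linear convergence),
# while the modified step roughly squares the error (quadratic convergence).
for k in (28, 29, 30):
    print(k, newton[k] - 1.0)
print(schroeder)
```

Algebraically, for this f the Newton error obeys e_{k+1} = e_k(3e_k+6)/(4e_k+9), which tends to a factor 2/3, whereas the modified step gives e_{k+1} = e_k^2/(4e_k+9).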

The important aspects of these methods are their order of convergence and the number of function evaluations per iteration. In recent years, a large number of multi-point methods for finding simple and multiple roots of nonlinear equations have been developed and analyzed to improve the order of convergence of classical methods; see [6, 9, 12–14, 17, 18, 21, 22, 24, 29]. The objective of this paper is to identify the best methods in the literature by comparing their numerical performance and the dynamical behavior of their basins of attraction. We focus on methods for roots of known multiplicity m, and we compare methods with third-order convergence and the same efficiency index. The efficiency index of an iterative method of order p requiring n function evaluations per iteration is defined by \(E(n,p)=\root n \of {p}\), see [16]. Each method reviewed here uses three function evaluations per iteration, so these methods are not optimal in the sense of the Kung–Traub conjecture [11], which states that a multipoint iteration without memory based on n evaluations has optimal order \(2^{n-1}\) (order 4 for n = 3).
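The efficiency indices involved can be compared with a few lines of Python. This is a sketch; the evaluation counts are our reading of the formulas in Section 2.1, where each scheme evaluates three quantities per step (for instance f(x_k), f'(x_k) and f(y_k), or f, f' and f'' for OS and CN).

```python
def efficiency_index(n_evals, order):
    """Ostrowski's efficiency index E(n, p) = p ** (1 / n)."""
    return order ** (1.0 / n_evals)


def kung_traub_order(n_evals):
    """Optimal order 2 ** (n - 1) conjectured by Kung and Traub for methods without memory."""
    return 2 ** (n_evals - 1)


newton = efficiency_index(2, 2)                       # f and f' per step, order 2
third_order = efficiency_index(3, 3)                  # three evaluations per step, order 3
optimal_3 = efficiency_index(3, kung_traub_order(3))  # order 4 with three evaluations

print(f"Newton {newton:.4f}, third-order {third_order:.4f}, optimal {optimal_3:.4f}")
# prints: Newton 1.4142, third-order 1.4422, optimal 1.5874
```

All the reviewed schemes therefore share E(3,3) ≈ 1.4422, slightly above Newton's √2 but below the 4^(1/3) ≈ 1.5874 that an optimal three-evaluation method would reach.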

Some of the existing third-order methods are listed below:

1. Dong's method (a) (1982) [4]
2. Dong's method (b) (1982) [4]
3. Victory and Neta's method [27]
4. Dong's method (1987) [5]
5. Osada's method [15]
6. Chun and Neta's method [3]
7. Homeier's method [10]
8. Heydari et al.'s method [9]
9. Zhou et al.'s method [29]
10. Sharifi et al.'s method [21]
11. Ferrara et al.'s method [6]

This paper is organized as follows: Sect. 2 lists the reviewed methods and the test functions and presents the numerical comparisons. The dynamical behavior of the methods is illustrated in Sect. 3. Finally, conclusions are given in Sect. 4.

2 Numerical examples

2.1 Numerical methods

The reviewed methods are listed below in detail.

1. Dong's method (a) (1982) (D82a) [4]. The iteration is given by

$$\begin{aligned} y_{k}&= x_{k}-\sqrt{m}\frac{f(x_{k})}{f'(x_{k})}, \\ x_{k+1}&= y_{k}-m\left( 1-\frac{1}{\sqrt{m}} \right) ^{1-m}\frac{f(y_{k})}{f'(x_{k})}. \end{aligned} \tag{2.1}$$

2. Dong's method (b) (1982) (D82b) [4]. The iteration is given by

$$\begin{aligned} y_{k}&= x_{k}-\frac{f(x_{k})}{f'(x_{k})},\\ x_{k+1}&=y_{k}+\frac{\frac{f(x_{k})}{f'(x_{k})}f(y_{k})}{f(y_{k})-\left( 1-\frac{1}{m} \right) ^{m-1}f(x_{k})}. \end{aligned} \tag{2.2}$$

3. Victory and Neta's method (VN) [27]. The iteration is given by

$$\begin{aligned} y_{k}&=x_{k}-\frac{f(x_{k})}{f'(x_{k})},\\ x_{k+1}&=y_{k}-\frac{f(y_{k})}{f'(x_{k})}\cdot \frac{f(x_{k})+Af(y_{k})}{f(x_{k})+Bf(y_{k})}, \end{aligned} \tag{2.3}$$

where \(A=\mu ^{2m}-\mu ^{m+1}\), \(B=-\frac{\mu ^{m}(m-2)(m-1)+1}{(m-1)^{2}}\) and \(\mu =\frac{m}{m-1}\).

4. Dong's method (1987) (D87) [5]. The iteration is given by

$$\begin{aligned} y_{k}&= x_{k}-\frac{f(x_{k})}{f'(x_{k})},\\ x_{k+1}&= y_{k}-\frac{f(x_{k})}{\left( \frac{m}{m-1} \right) ^{m+1}f'(y_{k})+\frac{m-m^{2}-1}{(m-1)^{2}}f'(x_{k})}. \end{aligned} \tag{2.4}$$

5. Osada's method (OS) [15]. The iteration is given by

$$x_{k+1}=x_{k}-\frac{1}{2}m(m+1)\frac{f(x_{k})}{f'(x_{k})}+\frac{1}{2}(m-1)^{2}\frac{f'(x_{k})}{f''(x_{k})}. \tag{2.5}$$

6. Chun and Neta's method (CN) [3]. The iteration is given by

$$\begin{aligned} x_{k+1}&=x_{k}-\frac{m\left[ (2 \theta -1)m +3-2 \theta \right] }{2}\frac{f(x_{k})}{f'(x_{k})}\\&\quad +\frac{\theta (m-1)^{2}}{2}\frac{f'(x_{k})}{f''(x_{k})}-\frac{(1-\theta )m^{2}}{2}\frac{f(x_{k})^{2}f''(x_{k})}{f'(x_{k})^{3}}, \end{aligned} \tag{2.6}$$

where \(\theta = \frac{1}{2}\) or \(\theta = -1\); in the computations we used \(\theta = -1\).

7. Homeier's method (HM) [10]. The iteration is given by

$$\begin{aligned} y_{k}&=x_{k}-\frac{m}{m+1}\frac{f(x_{k})}{f'(x_{k})},\\ x_{k+1}&=x_{k}-m^{2}\left( \frac{m}{m+1} \right) ^{m-1}\frac{f(x_{k})}{f'(y_{k})}+m(m-1)\frac{f(x_{k})}{f'(x_{k})}. \end{aligned} \tag{2.7}$$

8. Heydari et al.'s method (HY) [9]. The iteration is given by

$$\begin{aligned} y_{k}&=x_{k}-\theta \frac{f(x_{k})}{f'(x_{k})},\\ x_{k+1}&=x_{k}-\frac{\frac{m(\mu \theta -\theta +\mu )}{\theta (\mu -1)}f(x_{k})-\frac{m\mu ^{1-m}}{\theta (\mu -1)}f(y_{k})}{f'(x_{k})}, \end{aligned} \tag{2.8}$$

where \(\mu = \frac{m-\theta }{m}\) and \( \theta =\frac{2m}{m+2}\).

9. Zhou et al.'s method (ZH) [29]. The iteration is given by

$$\begin{aligned} y_{k}&=x_{k}- \frac{f(x_{k})}{f'(x_{k})},\\ x_{k+1}&=x_{k}+m(m-2)\frac{f(x_{k})}{f'(x_{k})}-m(m-1)\left( \frac{m}{m-1} \right) ^{m}\frac{f(y_{k})}{f'(x_{k})}. \end{aligned} \tag{2.9}$$

10. Sharifi et al.'s method (SH) [21]. The iteration is given by

$$\begin{aligned} y_{k}&=x_{k}- \frac{f(x_{k})}{f'(x_{k})},\\ x_{k+1}&=x_{k}- \frac{\alpha f(x_{k})+f(y_{k})}{\beta f'(x_{k})}, \end{aligned} \tag{2.10}$$

where \(\alpha =(1-\mu )\frac{\mu ^{\mu }}{m^{m}}\), \(\beta = \frac{\mu ^{\mu }}{m^{m+1}}\) and \(\mu =m-1\).

11. Ferrara et al.'s method (FR) [6]. The iteration is given by

$$\begin{aligned} y_{k}&=x_{k}- \frac{f(x_{k})}{f'(x_{k})},\\ x_{k+1}&=x_{k}- \frac{\theta f(x_{k})}{\theta f(x_{k})-f(y_{k})}\frac{f(x_{k})}{ f'(x_{k})}, \end{aligned} \tag{2.11}$$

where \(\theta =\left( \frac{m-1}{m} \right) ^{m-1}\).
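The eleven schemes can be transcribed almost line by line. The following Python sketch is our own transcription, not code from the paper or from its Mathematica implementation; the function names, the driver `solve`, its stopping rule and the test function are illustrative assumptions. It uses θ = −1 for CN, as in the computations above. For SH it takes α = (1−μ)μ^μ/m^m: with this sign a single step is exact for f(x) = (x−a)^m, a consistency property that the other ten schemes also have.

```python
import math

# One step of each reviewed method (2.1)-(2.11), for a root of known multiplicity m >= 2.
# f, df, d2f are callables for f, f', f''; only OS and CN actually use d2f.

def d82a(x, f, df, d2f, m):
    fx, dfx = f(x), df(x)
    y = x - math.sqrt(m) * fx / dfx
    return y - m * (1 - 1 / math.sqrt(m)) ** (1 - m) * f(y) / dfx

def d82b(x, f, df, d2f, m):
    fx, dfx = f(x), df(x)
    y = x - fx / dfx
    fy = f(y)
    return y + (fx / dfx) * fy / (fy - (1 - 1 / m) ** (m - 1) * fx)

def vn(x, f, df, d2f, m):
    mu = m / (m - 1)
    a = mu ** (2 * m) - mu ** (m + 1)
    b = -(mu ** m * (m - 2) * (m - 1) + 1) / (m - 1) ** 2
    fx, dfx = f(x), df(x)
    y = x - fx / dfx
    fy = f(y)
    return y - fy / dfx * (fx + a * fy) / (fx + b * fy)

def d87(x, f, df, d2f, m):
    fx, dfx = f(x), df(x)
    y = x - fx / dfx
    denom = (m / (m - 1)) ** (m + 1) * df(y) + (m - m * m - 1) / (m - 1) ** 2 * dfx
    return y - fx / denom

def osada(x, f, df, d2f, m):
    fx, dfx = f(x), df(x)
    return x - m * (m + 1) / 2 * fx / dfx + (m - 1) ** 2 / 2 * dfx / d2f(x)

def cn(x, f, df, d2f, m, theta=-1.0):
    fx, dfx, d2fx = f(x), df(x), d2f(x)
    return (x - m * ((2 * theta - 1) * m + 3 - 2 * theta) / 2 * fx / dfx
            + theta * (m - 1) ** 2 / 2 * dfx / d2fx
            - (1 - theta) * m ** 2 / 2 * fx ** 2 * d2fx / dfx ** 3)

def hm(x, f, df, d2f, m):
    fx, dfx = f(x), df(x)
    y = x - m / (m + 1) * fx / dfx
    return x - m ** 2 * (m / (m + 1)) ** (m - 1) * fx / df(y) + m * (m - 1) * fx / dfx

def hy(x, f, df, d2f, m):
    theta = 2 * m / (m + 2)
    mu = (m - theta) / m
    fx, dfx = f(x), df(x)
    y = x - theta * fx / dfx
    c_x = m * (mu * theta - theta + mu) / (theta * (mu - 1))
    c_y = m * mu ** (1 - m) / (theta * (mu - 1))
    return x - (c_x * fx - c_y * f(y)) / dfx

def zh(x, f, df, d2f, m):
    fx, dfx = f(x), df(x)
    y = x - fx / dfx
    return x + m * (m - 2) * fx / dfx - m * (m - 1) * (m / (m - 1)) ** m * f(y) / dfx

def sh(x, f, df, d2f, m):
    mu = m - 1
    alpha = (1 - mu) * mu ** mu / m ** m  # sign chosen so one step is exact for (x - a)^m
    beta = mu ** mu / m ** (m + 1)
    fx, dfx = f(x), df(x)
    y = x - fx / dfx
    return x - (alpha * fx + f(y)) / (beta * dfx)

def fr(x, f, df, d2f, m):
    theta = ((m - 1) / m) ** (m - 1)
    fx, dfx = f(x), df(x)
    y = x - fx / dfx
    return x - theta * fx / (theta * fx - f(y)) * fx / dfx

METHODS = {"D82a": d82a, "D82b": d82b, "VN": vn, "D87": d87, "OS": osada, "CN": cn,
           "HM": hm, "HY": hy, "ZH": zh, "SH": sh, "FR": fr}

def solve(step, x0, f, df, d2f, m, tol=1e-12, max_iter=50):
    """Iterate until |x_{k+1} - x_k| < tol or f(x_k) == 0; returns (x, iterations)."""
    x = x0
    for k in range(max_iter):
        if f(x) == 0:
            return x, k
        x_new = step(x, f, df, d2f, m)
        if abs(x_new - x) < tol:
            return x_new, k + 1
        x = x_new
    return x, max_iter

# Hypothetical test problem: f(x) = (x - 1)^3 (x + 2) has a triple root at x = 1.
def f(x): return (x - 1) ** 3 * (x + 2)
def df(x): return (x - 1) ** 2 * (4 * x + 5)
def d2f(x): return 6 * (x - 1) * (2 * x + 1)

results = {name: solve(step, 1.5, f, df, d2f, 3) for name, step in METHODS.items()}
```

In double precision each scheme should reach the triple root from x_0 = 1.5 within a few iterations; finer comparisons of error behaviour need the high-precision arithmetic described in Sect. 2.2.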

2.2 Numerical test functions

We have tested all eleven third-order methods for multiple roots on the test functions listed in Table 1. To obtain the highest possible accuracy and avoid the loss of significant digits, we used multi-precision arithmetic with 200 significant decimal digits in Mathematica 10.3 [8].

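The computational order of convergence (COC) is approximated as [28]

$$\rho \approx \frac{\ln \left| (x_{k+1}-\alpha )/(x_{k}-\alpha )\right| }{\ln \left| (x_{k}-\alpha )/(x_{k-1}-\alpha )\right| }. \tag{2.12}$$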

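The approximated computational order of convergence (ACOC) is calculated by [7]

$$\hat{\rho } \approx \frac{\ln \left| (x_{k+1}-x_{k})/(x_{k}-x_{k-1})\right| }{\ln \left| (x_{k}-x_{k-1})/(x_{k-1}-x_{k-2})\right| }. \tag{2.13}$$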
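Both estimates are straightforward to compute from a stored sequence of iterates. The sketch below is an illustration under our own assumptions: it uses Python's `decimal` module with 200 significant digits to mimic the multi-precision setting described above, and it generates iterates with Osada's method (2.5) on the hypothetical function f(x) = (x−1)^3(x+2), whose root x = 1 has multiplicity 3. COC uses the last three iterates and the known root; ACOC uses the last four iterates only.

```python
from decimal import Decimal, getcontext

getcontext().prec = 200  # 200 significant digits, mirroring the multi-precision setting


def coc(xs, root):
    """COC from the last three iterates x_{k-1}, x_k, x_{k+1} and the known root."""
    e_prev, e_cur, e_next = (abs(Decimal(x) - root) for x in xs[-3:])
    return float((e_next / e_cur).ln() / (e_cur / e_prev).ln())


def acoc(xs):
    """ACOC from the last four iterates x_{k-2}, ..., x_{k+1}; the root is not needed."""
    x_a, x_b, x_c, x_d = (Decimal(x) for x in xs[-4:])
    d_prev, d_cur, d_next = abs(x_b - x_a), abs(x_c - x_b), abs(x_d - x_c)
    return float((d_next / d_cur).ln() / (d_cur / d_prev).ln())


def osada_decimal_step(x, m=3):
    # Osada's method (2.5) for the hypothetical f(x) = (x - 1)^3 (x + 2), in Decimal arithmetic.
    fx = (x - 1) ** 3 * (x + 2)
    dfx = (x - 1) ** 2 * (4 * x + 5)
    d2fx = 6 * (x - 1) * (2 * x + 1)
    return x - Decimal(m * (m + 1)) / 2 * fx / dfx + Decimal((m - 1) ** 2) / 2 * dfx / d2fx


xs = [Decimal("1.5")]
for _ in range(4):  # a fifth step would push the error below the 200-digit resolution
    xs.append(osada_decimal_step(xs[-1]))

print(coc(xs, 1), acoc(xs))
```

For a third-order method both values should come out close to 3. In ordinary double precision the iterates would hit the rounding level after two or three steps, which is why high precision is needed for these estimates.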

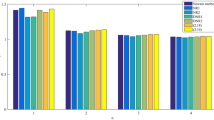

Table 2 shows that method D87 [5] gives smaller errors at each iteration than the other methods.

3 Basin of attraction

In this section we compare the performance of all methods by means of their basins of attraction. The basin of attraction of a root shows from which initial guesses a method converges to that root, and hence how carefully the initial guess must be chosen. We use the basins to investigate the stability regions of the methods. For more information, see [1, 20] and [26].

Let \(G:\mathbf {C} \rightarrow \mathbf {C} \) be a rational map on the complex plane. For \(z\in \mathbf {C} \), we define its orbit as the set \(orb(z)=\{z,\,G(z),\,G^2(z),\dots \}\). A point \(z_0 \in \mathbf {C} \) is called a periodic point with minimal period m if \(G^m(z_0)=z_0\), where m is the smallest positive integer with this property. A periodic point with minimal period 1 is called a fixed point. Moreover, a fixed point \(z_0\) is called attracting if \(|G'(z_0)|<1\), repelling if \(|G^{\prime }(z_0)|>1\), and neutral otherwise. The Julia set of a nonlinear map G(z), denoted by J(G), is the closure of the set of its repelling periodic points. The complement of J(G) is the Fatou set F(G), in which the basins of attraction of the different roots lie [2].
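The fixed-point classification can be checked numerically. The sketch below assumes the derivative G' is supplied analytically and uses a small tolerance for the neutral case; as examples it uses the map obtained by applying Schröder's modified Newton step with m = 2 to f(z) = (z^2−1)^2, which simplifies to G(z) = (z^2+1)/(2z), together with the textbook maps z^2 and z^2 + 1/4. The function name and the examples are our own choices.

```python
def classify_fixed_point(G, dG, z0, tol=1e-12):
    """Return 'attracting', 'repelling' or 'neutral' for a fixed point z0 of G."""
    if abs(G(z0) - z0) > tol:
        raise ValueError("z0 is not a fixed point of G")
    modulus = abs(dG(z0))
    if abs(modulus - 1.0) <= tol:
        return "neutral"  # |G'(z0)| = 1 up to the tolerance
    return "attracting" if modulus < 1.0 else "repelling"


def G(z):
    # Schroeder's step z - 2 f(z)/f'(z) for f(z) = (z^2 - 1)^2, simplified by hand.
    return (z * z + 1) / (2 * z)


def dG(z):
    # Derivative of G: (1 - 1/z^2) / 2, which vanishes at the roots z = 1 and z = -1.
    return (1 - 1 / (z * z)) / 2


print(classify_fixed_point(G, dG, 1 + 0j))                                      # attracting
print(classify_fixed_point(lambda z: z * z, lambda z: 2 * z, 1 + 0j))           # repelling
print(classify_fixed_point(lambda z: z * z + 0.25, lambda z: 2 * z, 0.5 + 0j))  # neutral
```

Since G'(±1) = 0, both roots are attracting fixed points of the iteration map, which is what makes basins of attraction around them possible in the first place.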

We use a \(512\times 512\) grid on the square \(\mathbb {D}=[-\,3,3] \times [-\,3,3] \subset \mathbb {C} \) for the basin of attraction figures. We assign a color to each point \(z_{0} \in \mathbb {D}\) according to the root to which the orbit of the method starting from \(z_{0}\) converges. A point is colored black if its orbit does not converge to any root, that is, if after 100 iterations its distance to every root is still larger than \(10^{-3}\). We then compare the methods through the colors of their basins.
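The coloring rule just described can be sketched in a few lines of Python. This is not the authors' Mathematica code; the helper names `orbit_root` and `basin`, the use of Osada's method (2.5) as the iteration and the test function f(z) = (z^2−1)^2 (double roots at ±1, so m = 2) are assumptions for illustration, and a step that would divide by zero is counted as non-convergent. Each grid cell stores the root index (which would choose the hue) and the iteration count (which would set the brightness).

```python
def orbit_root(step, z0, roots, max_iter=100, tol=1e-3):
    """(index of the root the orbit of z0 reaches, iterations used), or (-1, max_iter)."""
    z = z0
    for k in range(max_iter + 1):
        for i, r in enumerate(roots):
            if abs(z - r) < tol:
                return i, k
        if k == max_iter:
            break
        try:
            z = step(z)
        except (ZeroDivisionError, OverflowError):
            break  # the step is undefined here: treat the point as non-convergent (black)
    return -1, max_iter


def basin(step, roots, n=512, half_width=3.0, max_iter=100, tol=1e-3):
    """n x n grid over [-w, w] x [-w, w]; each cell holds (root index or -1, iterations)."""
    coords = [-half_width + 2 * half_width * j / (n - 1) for j in range(n)]
    return [[orbit_root(step, complex(x, y), roots, max_iter, tol) for x in coords]
            for y in reversed(coords)]  # first row = top edge (largest imaginary part)


def osada_step(z, m=2):
    # Osada's method (2.5) for the hypothetical f(z) = (z^2 - 1)^2 (double roots at +1 and -1).
    w = z * z - 1
    f, df, d2f = w * w, 4 * z * w, 12 * z * z - 4
    return z - 0.5 * m * (m + 1) * f / df + 0.5 * (m - 1) ** 2 * df / d2f


roots = [1 + 0j, -1 + 0j]
grid = basin(osada_step, roots, n=21)  # coarse grid so the demo runs instantly
for row in grid:
    print("".join("AB"[i] if i >= 0 else "." for i, _ in row))
```

The demo prints one letter per root and "." for black points; for figures like those described here one would pass n=512 and map each (index, iterations) pair to a color whose brightness decreases with the iteration count.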

We computed the basins of attraction of the listed methods for the test functions given in Table 3.

In Figs. 1, 2, 3, 4 and 5, different colors are used for distinct roots, and the brighter the color, the fewer iterations were used. Black indicates a lack of convergence to any of the roots. Figures 1, 2, 3, 4 and 5 show that, for all test functions in Table 3, method D87 [5] has fewer black points and larger bright regions than the other methods with the same order of convergence, which means that D87 converges faster and from more starting points.

4 Conclusion

In conclusion, the numerical experiments and the basins of attraction show that D87 performs better than the other methods with the same order of convergence.

References

Amat, S., Busquier, S., Plaza, S.: Review of some iterative root-finding methods from a dynamical point of view. Scientia 10, 3–35 (2004)

Babajee, D.K.R., Cordero, A., Soleymani, F., Torregrosa, J.R.: Improved three-step schemes with high efficiency index and their dynamics. Numer. Algorithms 65, 153–169 (2014)

Chun, C., Neta, B.: A third-order modification of Newton’s method for multiple roots. Appl. Math. Comput. 211, 474–479 (2009)

Dong, C.: A basic theorem of constructing an iterative formula of the higher order for computing multiple roots of an equation. Math. Numer. Sin. 11, 445–450 (1982)

Dong, C.: A family of multipoint iterative functions for finding multiple roots of equations. Int. J. Comput. Math. 21, 363–367 (1987)

Ferrara, M., Sharifi, S., Salimi, M.: Computing multiple zeros by using a parameter in Newton–Secant method. SeMA J. 74, 361–369 (2017)

Grau-Sánchez, M., Noguera, M., Gutiérrez, J.M.: On some computational orders of convergence. Appl. Math. Lett. 23, 472–478 (2010)

Hazrat, R.: Mathematica: A Problem-Centered Approach. Springer, New York (2010)

Heydari, M., Hosseini, S.M., Loghmani, G.B.: Convergence of a family of third-order methods free from second derivatives for finding multiple roots of nonlinear equations. World Appl. Sci. J. 11, 507–512 (2010)

Homeier, H.H.H.: On Newton-type methods for multiple roots with cubic convergence. J. Comput. Appl. Math. 231, 249–254 (2009)

Kung, H.T., Traub, J.F.: Optimal order of one-point and multipoint iteration. J. ACM 21, 643–651 (1974)

Lotfi, T., Sharifi, S., Salimi, M., Siegmund, S.: A new class of three-point methods with optimal convergence order eight and its dynamics. Numer. Algorithms 68, 261–288 (2015)

Matthies, G., Salimi, M., Sharifi, S., Varona, J.L.: An optimal eighth-order iterative method with its dynamics. Jpn. J. Ind. Appl. Math. 33, 751–766 (2016)

Nik Long, N.M.A., Salimi, M., Sharifi, S., Ferrara, M.: Developing a new family of Newton–Secant method with memory based on a weight function. SeMA J. 74, 503–512 (2017)

Osada, N.: An optimal multiple root-finding method of order three. J. Comput. Appl. Math. 51, 131–133 (1994)

Ostrowski, A.M.: Solution of Equations and Systems of Equations, vol. 9. Academic Press, London (2009)

Salimi, M., Lotfi, T., Sharifi, S., Siegmund, S.: Optimal Newton–Secant like methods without memory for solving nonlinear equations with its dynamics. Int. J. Comput. Math. 94, 1759–1777 (2017)

Salimi, M., Nik Long, N.M.A., Sharifi, S., Pansera, B.A.: A multi-point iterative method for solving nonlinear equations with optimal order of convergence. Jpn. J. Ind. Appl. Math. 35, 497–509 (2018)

Schröder, E.: Über unendlich viele Algorithmen zur Auflösung der Gleichungen. Math. Ann. 2, 317–365 (1870)

Scott, M., Neta, B., Chun, C.: Basin attractors for various methods. Appl. Math. Comput. 218, 2584–2599 (2011)

Sharifi, S., Ferrara, M., Nik Long, N.M.A., Salimi, M.: Modified Potra–Pták method to determine the multiple zeros of nonlinear equations. arXiv:1510.00319 (2015)

Sharifi, S., Ferrara, M., Salimi, M., Siegmund, S.: New modification of Maheshwari method with optimal eighth order of convergence for solving nonlinear equations. Open Math. 14, 443–451 (2016)

Sharifi, S., Siegmund, S., Salimi, M.: Solving nonlinear equations by a derivative-free form of the Kings family with memory. Calcolo 53, 201–215 (2016)

Sharifi, S., Salimi, M., Siegmund, S., Lotfi, T.: A new class of optimal four-point methods with convergence order 16 for solving nonlinear equations. Math. Comput. Simul. 119, 69–90 (2016)

Traub, J.F.: Iterative Methods for the Solution of Equations. Prentice Hall, New York (1964)

Varona, J.L.: Graphic and numerical comparison between iterative methods. Math. Intell. 24, 37–46 (2002)

Victory, H.D., Neta, B.: A higher order method for multiple zeros of nonlinear functions. Int. J. Comput. Math. 12, 329–335 (1983)

Weerakoon, S., Fernando, T.G.I.: A variant of Newton’s method with accelerated third-order convergence. Appl. Math. Lett. 13, 87–93 (2000)

Zhou, X., Chen, X., Song, Y.: Families of third and fourth order methods for multiple roots of nonlinear equations. Appl. Math. Comput. 219, 6030–6038 (2013)


Cite this article

Jamaludin, N.A.A., Nik Long, N.M.A., Salimi, M. et al. Review of some iterative methods for solving nonlinear equations with multiple zeros. Afr. Mat. 30, 355–369 (2019). https://doi.org/10.1007/s13370-018-00650-3


DOI: https://doi.org/10.1007/s13370-018-00650-3